Ultra-detailed YOLOv8/11-OBB rotated-box workflow overview: environment setup, data annotation, training, validation/prediction, and ONNX deployment (C++/Python)

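The environment setup, training, and inference commands for YOLO's detection, segmentation, pose, rotated-box, and classification models are very similar, so this article does not repeat the environment setup. For that, see the companion article [Ultra-detailed YOLOv8/11-detect object detection workflow overview: environment setup, data annotation, training, validation/prediction, and ONNX deployment (C++/Python)], linked below. This article concentrates on what differs for rotated boxes: data annotation, format conversion, and model deployment.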

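[YOLOv8/11-detect object detection tutorial] Ultra-detailed YOLOv8/11-detect object detection workflow overview: environment setup, data annotation, training, validation/prediction, and ONNX deployment (C++/Python)

[Environment setup] Setting up a deep learning environment and common libraries on Ubuntu/Debian from scratch, for beginners (GPU driver, CUDA, cuDNN, PyTorch, OpenCV, PCL, CMake …) [continuously maintained]

[Ultralytics YOLO GitHub repository] https://github.com/ultralytics/ultralytics

[YOLO documentation] https://docs.ultralytics.com/zh/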

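Contents

- I. Data preparation (annotation and conversion)

- 1.1 Basic manual annotation with rotated boxes

- 1.2 SAM-assisted annotation

- 1.3 Automatic annotation with a pretrained ONNX model

- 1.4 Format conversion with a Python script and with X-AnyLabeling's built-in converter

- II. Model deployment

- C++ version

- Python version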

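I. Data preparation (annotation and conversion)

X-AnyLabeling is strongly recommended here. On Windows you can download the .exe and run it directly, and on Linux you can run the released executable directly, which is very convenient. It can also load segmentation foundation models such as SAM and SAM2 and run automatic annotation, which makes labeling much faster.

Repository: https://github.com/CVHub520/X-AnyLabeling

Usage guide: https://blog.csdn.net/CVHub/article/details/144595664?spm=1001.2014.3001.5502

1.1 Basic manual annotation with rotated boxes

Select the rotated-box tool and draw the box by hand. The keyboard keys X, C, V, and B rotate the box in large and small steps.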

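1.2 SAM-assisted annotation

You can load the SAM segmentation model, which makes annotation much faster. When the model is loaded, the corresponding ONNX files are downloaded automatically, which may require a proxy to reach the download server. If you select the model in the UI and the download succeeds, nothing else needs to be configured. If the download fails, download the ONNX model manually, write a model configuration .yaml file, and load that file when selecting the model. The main thing to change in the .yaml file is the model path. The SAM ONNX model is provided below.

ONNX model for sam_vit_b_01ec64, including both the encoder and the decoder: [Baidu Netdisk] [free CSDN resource file]

The YAML configuration file: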

type: segment_anything

name: segment_anything_vit_b_quant-r20230520

display_name: Segment Anything (ViT-Base Quant)

# encoder_model_path: https://github.com/CVHub520/X-AnyLabeling/releases/download/v0.2.0/sam_vit_b_01ec64.encoder.quant.onnx

# decoder_model_path: https://github.com/CVHub520/X-AnyLabeling/releases/download/v0.2.0/sam_vit_b_01ec64.decoder.quant.onnx

encoder_model_path: D:\CvHub_YoLo_obb\sam_vit_h_4b8939.encoder.quant.onnx

decoder_model_path: D:\CvHub_YoLo_obb\sam_vit_h_4b8939.decoder.quant.onnx

input_size: 1024

max_width: 1024

max_height: 682

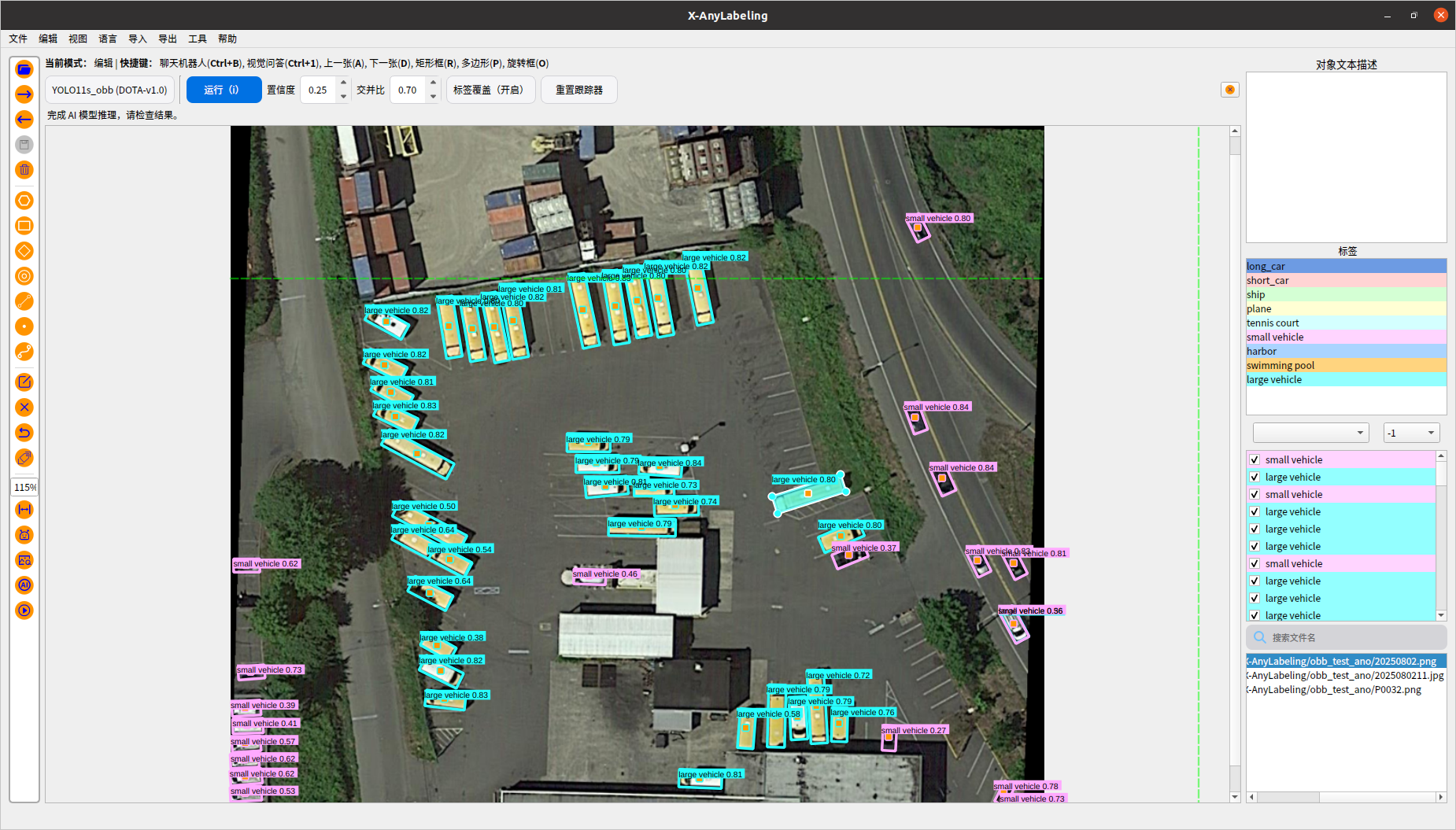

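1.3 Automatic annotation with a pretrained ONNX model

You need to write a YAML configuration file that mainly specifies the ONNX model path, iou, conf, classes, and so on: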

type: yolo11_obb

name: yolo11n_obb-r20240930

provider: Ultralytics

display_name: YOLO11n_obb (DOTA-v1.0)

#model_path: https://github.com/CVHub520/X-AnyLabeling/releases/download/v2.4.4/yolo11s-obb.onnx

model_path: /home/xxx/yolov11/yolo_base_model/yolo11n-obb.onnx

iou_threshold: 0.7

conf_threshold: 0.25

classes: # the label names
  - plane
  - ship
  - storage tank
  - baseball diamond
  - tennis court
  - basketball court
  - ground track field
  - harbor
  - bridge
  - large vehicle
  - small vehicle
  - helicopter
  - roundabout
  - soccer ball field
  - swimming pool

You can adjust the confidence and IoU thresholds to filter out or keep more rotated objects.

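1.4 Format conversion with a Python script and with X-AnyLabeling's built-in converter

DOTAv1 data format

x1, y1, x2, y2, x3, y3, x4, y4, category, difficult

x1, y1, x2, y2, x3, y3, x4, y4 are the x and y coordinates of the four vertices. category is the object class, and difficult is the recognition difficulty (1 = difficult, 0 = easy).

YOLO-OBB data format

class_index x1 y1 x2 y2 x3 y3 x4 y4

# Example

0 0.780811 0.743961 0.782371 0.74686 0.777691 0.752174 0.776131 0.749758

class_index is the index of the class. The other eight values are the coordinates of the four vertices, normalized by the image width (x) and height (y).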
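The relationship between the two formats can be sketched in a few lines of Python. This is a minimal illustration, not part of the original script: the function name `dota_to_yolo_obb` is hypothetical, the image size must be supplied by the caller, and the category is assumed to contain no spaces.

```python
def dota_to_yolo_obb(line, img_w, img_h, class_names):
    """Convert one DOTAv1 label line to one YOLO-OBB label line.

    Hypothetical helper: assumes pixel coordinates, a category without
    spaces, and an optional trailing 'difficult' flag that is ignored.
    """
    parts = line.replace(",", " ").split()
    if len(parts) < 9:
        raise ValueError(f"expected 8 coordinates and a category, got: {line!r}")
    coords = [float(v) for v in parts[:8]]
    class_index = class_names.index(parts[8])
    normalized = []
    for i, value in enumerate(coords):
        # Even positions are x values (divide by width), odd ones are y values
        scale = img_w if i % 2 == 0 else img_h
        normalized.append(value / scale)
    return f"{class_index} " + " ".join(f"{v:.6f}" for v in normalized)


if __name__ == "__main__":
    names = ["plane", "ship"]
    dota_line = "100, 50, 200, 50, 200, 150, 100, 150, ship, 0"
    print(dota_to_yolo_obb(dota_line, img_w=400, img_h=200, class_names=names))
```

The vertex order is kept as-is; only the scale and the class representation change.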

Conversion with a Python script

json2txt_obb.py

import json
import os
import shutil

import cv2
import numpy as np


def visualize_obb_annotations(txt_dir, img_dir, output_dir, class_colors):
    """
    Visualize YOLO OBB annotations.

    :param txt_dir: directory of the TXT label files
    :param img_dir: directory of the corresponding images
    :param output_dir: output directory for the visualizations
    :param class_colors: class color map {class_idx: (B, G, R)}
    """
    os.makedirs(output_dir, exist_ok=True)
    # Supported image formats
    supported_formats = ('.jpg', '.jpeg', '.png', '.bmp')

    for txt_name in os.listdir(txt_dir):
        if not txt_name.endswith('.txt'):
            continue

        # Look for the matching image (several formats are supported)
        img_name_no_ext = os.path.splitext(txt_name)[0]
        img_path = None
        for fmt in supported_formats:
            temp_path = os.path.join(img_dir, img_name_no_ext + fmt)
            if os.path.exists(temp_path):
                img_path = temp_path
                break
        if img_path is None:
            print(f"Warning: No corresponding image found for {txt_name}")
            continue

        img = cv2.imread(img_path)
        if img is None:
            print(f"Warning: Could not read image {img_path}")
            continue

        print(f"Processing {txt_name}...")
        txt_path = os.path.join(txt_dir, txt_name)
        with open(txt_path, 'r') as f:
            lines = f.readlines()

        for line in lines:
            parts = line.strip().split()
            if len(parts) != 9:
                continue
            class_idx = int(parts[0])

            # Denormalize the point coordinates
            points = np.array([float(x) for x in parts[1:]], dtype=np.float32)
            img_h, img_w = img.shape[:2]
            abs_points = points.reshape(4, 2) * np.array([img_w, img_h])
            color = class_colors.get(class_idx, (0, 255, 0))

            # Draw the polygon
            cv2.polylines(img, [abs_points.astype(np.int32)], isClosed=True, color=color, thickness=2)
            # Draw the vertices
            for (x, y) in abs_points:
                cv2.circle(img, (int(x), int(y)), 4, color, -1)
            # Draw the class label at the first vertex
            label_origin = (int(abs_points[0][0]), int(abs_points[0][1]))
            cv2.putText(img, str(class_idx), label_origin,
                        cv2.FONT_HERSHEY_SIMPLEX, 0.7, (255, 255, 255), 2)

        # Save with the original image format
        output_ext = os.path.splitext(img_path)[1]
        output_name = img_name_no_ext + output_ext
        output_path = os.path.join(output_dir, output_name)
        cv2.imwrite(output_path, img)


def convert_json_to_txt(json_dir, output_dir, class_list, copy_dir=None):
    """
    Convert Labelme-style JSON annotation files to YOLO OBB TXT files, and
    optionally copy the TXT files and images to another folder.

    :param json_dir: directory of the JSON files
    :param output_dir: output directory for the TXT files
    :param class_list: list of class names
    :param copy_dir: optional target folder for copies of the TXT files and images
    """
    class_index = {name: idx for idx, name in enumerate(class_list)}
    os.makedirs(output_dir, exist_ok=True)
    if copy_dir:
        os.makedirs(copy_dir, exist_ok=True)

    for filename in os.listdir(json_dir):
        if not filename.endswith('.json'):
            continue
        print(f"Processing {filename}...")
        json_path = os.path.join(json_dir, filename)
        with open(json_path, 'r') as f:
            data = json.load(f)

        img_width = data['imageWidth']
        img_height = data['imageHeight']
        txt_name = os.path.splitext(filename)[0] + '.txt'
        txt_path = os.path.join(output_dir, txt_name)

        with open(txt_path, 'w') as txt_file:
            for shape in data['shapes']:
                if len(shape['points']) != 4:  # only handle 4-point annotations
                    continue
                label = shape['label']
                points = shape['points']
                class_idx = class_index.get(label, -1)
                if class_idx == -1:  # skip labels that are not in class_list
                    continue

                # Normalize the 4 point coordinates
                normalized_points = []
                for x, y in points:
                    nx = x / img_width
                    ny = y / img_height
                    normalized_points.extend([nx, ny])

                # Make sure there are 8 coordinate values (4 points)
                if len(normalized_points) == 8:
                    line = f"{class_idx} " + " ".join([f"{coord:.6f}" for coord in normalized_points])
                    txt_file.write(line + '\n')

        # Copy the TXT file and the image to copy_dir
        if copy_dir:
            shutil.copy(txt_path, os.path.join(copy_dir, txt_name))
            # Copy the image (assumed to sit next to the JSON file)
            img_base = os.path.splitext(filename)[0]
            for ext in ['.jpg', '.png', '.jpeg', '.bmp']:
                img_path = os.path.join(json_dir, img_base + ext)
                if os.path.exists(img_path):
                    shutil.copy(img_path, os.path.join(copy_dir, img_base + ext))
                    break


if __name__ == "__main__":
    # Usage example
    # json_folder = "F:/Data/20250612"  # replace with the JSON directory
    json_folder = "./obb_test_ano"  # replace with the JSON directory
    output_folder = "./obb_test_ano"  # replace with the TXT output directory
    selected_folder = "./obb_test_result"  # replace with the visualization output directory
    classes = ["long_car", "short_car", "plane", "ship", "4"]  # replace with your class list

    # Run the conversion
    print("Converting JSON to TXT...")
    # convert_json_to_txt(json_folder, output_folder, classes)
    convert_json_to_txt(json_folder, output_folder, classes, copy_dir=selected_folder)

    class_colors = {0: (255, 0, 0), 1: (0, 0, 255), 2: (0, 255, 0), 3: (0, 125, 255)}
    # Visualize the annotations.
    # This assumes the TXT output directory is also the image directory.
    visualize_obb_annotations(output_folder, output_folder, selected_folder, class_colors)

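The script also includes a helper function, visualize_obb_annotations, which draws the contents of each TXT file back onto the original image so you can verify that the conversion is correct.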

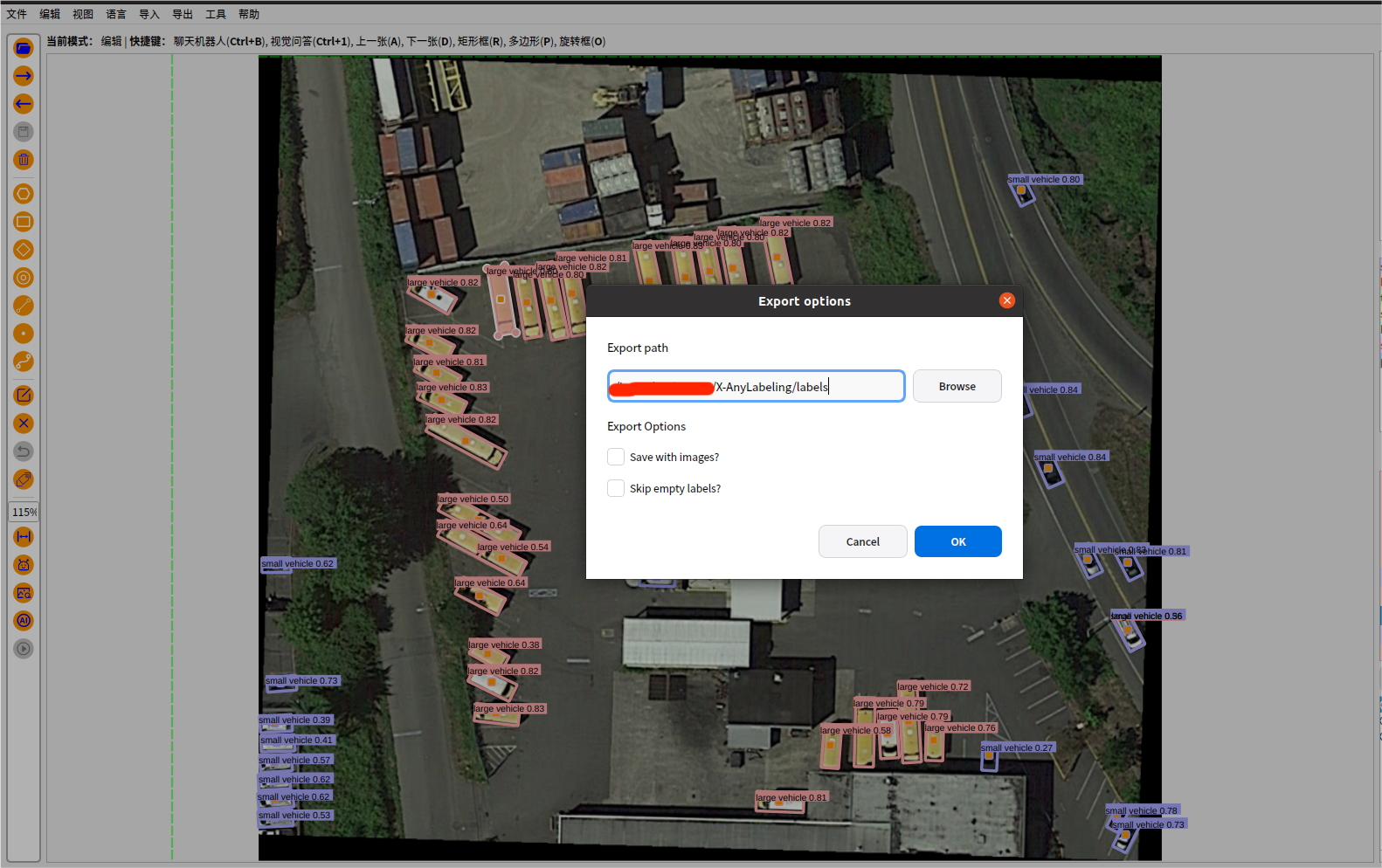
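Besides the visual check, a quick textual check of each label line can catch broken conversions early. The following is a minimal sketch under my own assumptions about what counts as a problem (nine fields, an integer class index, coordinates within [0, 1], a non-degenerate polygon); the function name `check_obb_label_line` is hypothetical. Boxes drawn partly outside the image can legitimately produce coordinates slightly outside [0, 1], so treat that message as a warning rather than an error.

```python
def check_obb_label_line(line):
    """Return a list of problems found in one YOLO-OBB label line (empty if none)."""
    parts = line.split()
    if len(parts) != 9:
        return [f"expected 9 fields, got {len(parts)}"]

    problems = []
    if not parts[0].isdigit():
        problems.append(f"class index is not a non-negative integer: {parts[0]!r}")
    try:
        coords = [float(v) for v in parts[1:]]
    except ValueError:
        return problems + ["coordinates are not all numbers"]

    out_of_range = [v for v in coords if not 0.0 <= v <= 1.0]
    if out_of_range:
        problems.append(f"{len(out_of_range)} coordinate(s) outside [0, 1]")

    # Shoelace formula: zero area means repeated or collinear vertices
    pts = list(zip(coords[0::2], coords[1::2]))
    twice_area = 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        twice_area += x1 * y2 - x2 * y1
    if abs(twice_area) / 2 < 1e-12:
        problems.append("polygon has zero area")
    return problems


if __name__ == "__main__":
    for text in ("0 0.1 0.1 0.5 0.1 0.5 0.4 0.1 0.4", "0 0.1 0.1"):
        print(text, "->", check_obb_label_line(text))
```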

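X-AnyLabeling's built-in converter

X-AnyLabeling also has a built-in export function for format conversion. You need to create a classes.txt file that holds the index information of all labels.

When exporting YOLO-format labels from X-AnyLabeling, you first select a .txt file describing the classes, which lists all classes one per line (for example long_car, short_car, ship, plane). Then choose Export -> YOLO rotated-box labels -> load this classes.txt -> choose the export path for the labels. All labels are exported in one pass.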

YOLO-OBB training, prediction, and export commands

# OBB training, prediction, and ONNX export commands

yolo obb train data=dataset.yaml model=yolo11n-obb.pt epochs=300 imgsz=1920 amp=False batch=2 lr0=0.001 mosaic=0.05 patience=200

# Model prediction (OBB training runs are saved under runs/obb)

yolo obb predict model=runs/obb/train4/weights/best.pt source=/xxx/images/test save=true conf=0.4 iou=0.5

# Model export

yolo export model=/xxx/yolov11/runs/obb/train4/weights/best.pt format=onnx opset=17 simplify=True
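The training command refers to a dataset.yaml that is not shown in this article. As a rough sketch, the snippet below generates one in the layout Ultralytics datasets commonly use (`path`, `train`, `val`, and an index-to-name `names` mapping). The helper name `build_dataset_yaml`, the root path, and the `images/train` and `images/val` folder names are assumptions for illustration; adjust them to your own layout, with the label TXT files in the matching `labels/...` folders.

```python
def build_dataset_yaml(root, class_names, train_dir="images/train", val_dir="images/val"):
    """Build the text of a dataset.yaml (hypothetical helper, assumed folder names)."""
    lines = [
        f"path: {root}",        # dataset root directory
        f"train: {train_dir}",  # training images, relative to path
        f"val: {val_dir}",      # validation images, relative to path
        "names:",
    ]
    # The class order must match the order used when the labels were converted
    lines += [f"  {idx}: {name}" for idx, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_dataset_yaml("/xxx/datasets/obb_demo", ["long_car", "short_car", "plane", "ship"]))
```

Keeping the class list in one place and generating both classes.txt and dataset.yaml from it avoids index mismatches between annotation and training.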

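II. Model deployment

C++ version

This mainly follows the open-source repository https://github.com/UNeedCryDear/yolov8-opencv-onnxruntime-cpp

Deployment is similar to detection. Whether the task is rotated boxes, segmentation, or detection, the two files yolov8_utils.h and yolov8_utils.cpp are always present and are the same as in object detection. You can take them from the repository above or from the following article:

[Ultra-detailed YOLOv8/11-detect object detection workflow overview: environment setup, data annotation, training, validation/prediction, and ONNX deployment (C++/Python)]

Five files are involved: main.cpp, yolov8_utils.h, yolov8_obb_onnx.h, yolov8_utils.cpp, and yolov8_obb_onnx.cpp. yolov8_utils.h and yolov8_utils.cpp are the same as in YOLOv8/11-detect object detection, so their code is not repeated here. The ONNX session is initialized once in the main program, so it is not re-initialized on every detection.

The main things to change are the image and model paths, and the model width and height in yolov8_obb_onnx.h.

In the header file yolov8_obb_onnx.h, change _netWidth and _netHeight to the input size of your own ONNX model.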

#pragma once
#include <iostream>
#include <memory>
#include <numeric>
#include <opencv2/opencv.hpp>
#include "yolov8_utils.h"
#include <onnxruntime_cxx_api.h>

//#include <tensorrt_provider_factory.h>  //if use OrtTensorRTProviderOptionsV2
//#include <onnxruntime_c_api.h>

class Yolov8ObbOnnx {
public:
    Yolov8ObbOnnx() :_OrtMemoryInfo(Ort::MemoryInfo::CreateCpu(OrtAllocatorType::OrtDeviceAllocator, OrtMemType::OrtMemTypeCPUOutput)) {};
    ~Yolov8ObbOnnx() {
        if (_OrtSession != nullptr)
            delete _OrtSession;
    };// delete _OrtMemoryInfo;

public:
    /** \brief Read onnx-model
    * \param[in] modelPath:onnx-model path
    * \param[in] isCuda:if true,use Ort-GPU,else run it on cpu.
    * \param[in] cudaID:if isCuda==true,run Ort-GPU on cudaID.
    * \param[in] warmUp:if isCuda==true,warm up GPU-model.
    */
    bool ReadModel(const std::string& modelPath, bool isCuda = false, int cudaID = 0, bool warmUp = true);

    /** \brief detect.
    * \param[in] srcImg:a 3-channels image.
    * \param[out] output:detection results of input image.
    */
    bool OnnxDetect(cv::Mat& srcImg, std::vector<OutputParams>& output);

    /** \brief detect,batch size= _batchSize
    * \param[in] srcImg:A batch of images.
    * \param[out] output:detection results of input images.
    */
    bool OnnxBatchDetect(std::vector<cv::Mat>& srcImg, std::vector<std::vector<OutputParams>>& output);

private:
    template <typename T>
    T VectorProduct(const std::vector<T>& v)
    {
        return std::accumulate(v.begin(), v.end(), 1, std::multiplies<T>());
    };
    int Preprocessing(const std::vector<cv::Mat>& srcImgs, std::vector<cv::Mat>& outSrcImgs, std::vector<cv::Vec4d>& params);

    const int _netWidth = 1024;   //ONNX-net-input-width
    const int _netHeight = 1024;  //ONNX-net-input-height

    int _batchSize = 1;            //if multi-batch,set this
    bool _isDynamicShape = false;  //onnx support dynamic shape
    float _classThreshold = 0.25;
    float _nmsThreshold = 0.45;
    float _maskThreshold = 0.5;

    //ONNXRUNTIME
    Ort::Env _OrtEnv = Ort::Env(OrtLoggingLevel::ORT_LOGGING_LEVEL_ERROR, "Yolov8");
    Ort::SessionOptions _OrtSessionOptions = Ort::SessionOptions();
    Ort::Session* _OrtSession = nullptr;
    Ort::MemoryInfo _OrtMemoryInfo;
#if ORT_API_VERSION < ORT_OLD_VISON
    char* _inputName, * _output_name0;
#else
    std::shared_ptr<char> _inputName, _output_name0;
#endif

    std::vector<char*> _inputNodeNames;   //input node names
    std::vector<char*> _outputNodeNames;  //output node names

    size_t _inputNodesNum = 0;   //number of input nodes
    size_t _outputNodesNum = 0;  //number of output nodes

    ONNXTensorElementDataType _inputNodeDataType;  //data type
    ONNXTensorElementDataType _outputNodeDataType;
    std::vector<int64_t> _inputTensorShape;  //input tensor shape
    std::vector<int64_t> _outputTensorShape;

public:
    std::vector<std::string> _className = {
        "plane", "ship", "storage tank",
        "baseball diamond", "tennis court", "basketball court",
        "ground track field", "harbor", "bridge",
        "large vehicle", "small vehicle", "helicopter",
        "roundabout", "soccer ball field", "swimming pool"
    };
    std::vector<std::string> bm_Name = {
        "bengdai", "label", "label_color"
    };
};

yolov8_obb_onnx.cpp

#include "yolov8_obb_onnx.h"
#include <chrono>
//using namespace std;
//using namespace cv;
//using namespace cv::dnn;
using namespace Ort;

bool Yolov8ObbOnnx::ReadModel(const std::string& modelPath, bool isCuda, int cudaID, bool warmUp) {
    if (_batchSize < 1) _batchSize = 1;
    try
    {
        if (!CheckModelPath(modelPath))
            return false;
        std::vector<std::string> available_providers = GetAvailableProviders();
        auto cuda_available = std::find(available_providers.begin(), available_providers.end(), "CUDAExecutionProvider");

        if (isCuda && (cuda_available == available_providers.end()))
        {
            std::cout << "Your ORT build without GPU. Change to CPU." << std::endl;
            std::cout << "************* Infer model on CPU! *************" << std::endl;
        }
        else if (isCuda && (cuda_available != available_providers.end()))
        {
            std::cout << "************* Infer model on GPU! *************" << std::endl;
#if ORT_API_VERSION < ORT_OLD_VISON
            OrtCUDAProviderOptions cudaOption;
            cudaOption.device_id = cudaID;
            _OrtSessionOptions.AppendExecutionProvider_CUDA(cudaOption);
#else
            OrtStatus* status = OrtSessionOptionsAppendExecutionProvider_CUDA(_OrtSessionOptions, cudaID);
#endif
        }
        else
        {
            std::cout << "************* Infer model on CPU! *************" << std::endl;
        }
        //_OrtSessionOptions.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_EXTENDED);

#ifdef _WIN32
        std::wstring model_path(modelPath.begin(), modelPath.end());
        _OrtSession = new Ort::Session(_OrtEnv, model_path.c_str(), _OrtSessionOptions);
#else
        _OrtSession = new Ort::Session(_OrtEnv, modelPath.c_str(), _OrtSessionOptions);
#endif

        Ort::AllocatorWithDefaultOptions allocator;
        //init input
        _inputNodesNum = _OrtSession->GetInputCount();
#if ORT_API_VERSION < ORT_OLD_VISON
        _inputName = _OrtSession->GetInputName(0, allocator);
        _inputNodeNames.push_back(_inputName);
#else
        _inputName = std::move(_OrtSession->GetInputNameAllocated(0, allocator));
        _inputNodeNames.push_back(_inputName.get());
#endif
        //std::cout << _inputNodeNames[0] << std::endl;
        Ort::TypeInfo inputTypeInfo = _OrtSession->GetInputTypeInfo(0);
        auto input_tensor_info = inputTypeInfo.GetTensorTypeAndShapeInfo();
        _inputNodeDataType = input_tensor_info.GetElementType();
        _inputTensorShape = input_tensor_info.GetShape();

        if (_inputTensorShape[0] == -1)
        {
            _isDynamicShape = true;
            _inputTensorShape[0] = _batchSize;
        }
        if (_inputTensorShape[2] == -1 || _inputTensorShape[3] == -1) {
            _isDynamicShape = true;
            _inputTensorShape[2] = _netHeight;
            _inputTensorShape[3] = _netWidth;
        }

        //init output
        _outputNodesNum = _OrtSession->GetOutputCount();
#if ORT_API_VERSION < ORT_OLD_VISON
        _output_name0 = _OrtSession->GetOutputName(0, allocator);
        _outputNodeNames.push_back(_output_name0);
#else
        _output_name0 = std::move(_OrtSession->GetOutputNameAllocated(0, allocator));
        _outputNodeNames.push_back(_output_name0.get());
#endif
        Ort::TypeInfo type_info_output0(nullptr);
        type_info_output0 = _OrtSession->GetOutputTypeInfo(0);  //output0

        auto tensor_info_output0 = type_info_output0.GetTensorTypeAndShapeInfo();
        _outputNodeDataType = tensor_info_output0.GetElementType();
        _outputTensorShape = tensor_info_output0.GetShape();

        //_outputMaskNodeDataType = tensor_info_output1.GetElementType(); //the same as output0
        //_outputMaskTensorShape = tensor_info_output1.GetShape();
        //if (_outputTensorShape[0] == -1)
        //{
        //    _outputTensorShape[0] = _batchSize;
        //    _outputMaskTensorShape[0] = _batchSize;
        //}
        //if (_outputMaskTensorShape[2] == -1) {
        //    //size_t ouput_rows = 0;
        //    //for (int i = 0; i < _strideSize; ++i) {
        //    //    ouput_rows += 3 * (_netWidth / _netStride[i]) * _netHeight / _netStride[i];
        //    //}
        //    //_outputTensorShape[1] = ouput_rows;
        //    _outputMaskTensorShape[2] = _segHeight;
        //    _outputMaskTensorShape[3] = _segWidth;
        //}

        //warm up
        if (isCuda && warmUp) {
            //dry run
            std::cout << "Start warming up" << std::endl;
            size_t input_tensor_length = VectorProduct(_inputTensorShape);
            float* temp = new float[input_tensor_length];
            std::vector<Ort::Value> input_tensors;
            std::vector<Ort::Value> output_tensors;
            input_tensors.push_back(Ort::Value::CreateTensor<float>(
                _OrtMemoryInfo, temp, input_tensor_length, _inputTensorShape.data(),
                _inputTensorShape.size()));
            for (int i = 0; i < 3; ++i) {
                output_tensors = _OrtSession->Run(Ort::RunOptions{ nullptr },
                    _inputNodeNames.data(),
                    input_tensors.data(),
                    _inputNodeNames.size(),
                    _outputNodeNames.data(),
                    _outputNodeNames.size());
            }
            delete[] temp;
        }
    }
    catch (const std::exception&) {
        return false;
    }
    return true;
}

int Yolov8ObbOnnx::Preprocessing(const std::vector<cv::Mat>& srcImgs, std::vector<cv::Mat>& outSrcImgs, std::vector<cv::Vec4d>& params) {
    outSrcImgs.clear();
    cv::Size input_size = cv::Size(_netWidth, _netHeight);
    for (int i = 0; i < srcImgs.size(); ++i) {
        cv::Mat temp_img = srcImgs[i];
        cv::Vec4d temp_param = { 1,1,0,0 };
        if (temp_img.size() != input_size) {
            cv::Mat borderImg;
            LetterBox(temp_img, borderImg, temp_param, input_size, false, false, true, 32);
            //std::cout << borderImg.size() << std::endl;
            outSrcImgs.push_back(borderImg);
            params.push_back(temp_param);
        }
        else {
            outSrcImgs.push_back(temp_img);
            params.push_back(temp_param);
        }
    }

    // Pad the batch with black images if fewer than _batchSize images were given
    int lack_num = _batchSize - srcImgs.size();
    if (lack_num > 0) {
        for (int i = 0; i < lack_num; ++i) {
            cv::Mat temp_img = cv::Mat::zeros(input_size, CV_8UC3);
            cv::Vec4d temp_param = { 1,1,0,0 };
            outSrcImgs.push_back(temp_img);
            params.push_back(temp_param);
        }
    }
    return 0;
}

bool Yolov8ObbOnnx::OnnxDetect(cv::Mat& srcImg, std::vector<OutputParams>& output) {
    std::vector<cv::Mat> input_data = { srcImg };
    std::vector<std::vector<OutputParams>> tenp_output;
    if (OnnxBatchDetect(input_data, tenp_output)) {
        output = tenp_output[0];
        return true;
    }
    else return false;
}

bool Yolov8ObbOnnx::OnnxBatchDetect(std::vector<cv::Mat>& srcImgs, std::vector<std::vector<OutputParams>>& output) {
    std::vector<cv::Vec4d> params;
    std::vector<cv::Mat> input_images;
    cv::Size input_size(_netWidth, _netHeight);
    //preprocessing
    Preprocessing(srcImgs, input_images, params);
    // long long startTime = std::chrono::system_clock::now().time_since_epoch().count(); //ns
    // long long timeNow = std::chrono::system_clock::now().time_since_epoch().count();
    // std::cout <<"img preprocess time: " <<(timeNow - startTime) * 0.000001 << "ms\n";
    cv::Mat blob = cv::dnn::blobFromImages(input_images, 1 / 255.0, input_size, cv::Scalar(0, 0, 0), true, false);

    int64_t input_tensor_length = VectorProduct(_inputTensorShape);
    std::vector<Ort::Value> input_tensors;
    std::vector<Ort::Value> output_tensors;
    input_tensors.push_back(Ort::Value::CreateTensor<float>(_OrtMemoryInfo, (float*)blob.data, input_tensor_length, _inputTensorShape.data(), _inputTensorShape.size()));

    long long startTime = std::chrono::system_clock::now().time_since_epoch().count(); //ns
    output_tensors = _OrtSession->Run(Ort::RunOptions{ nullptr },
        _inputNodeNames.data(),
        input_tensors.data(),
        _inputNodeNames.size(),
        _outputNodeNames.data(),
        _outputNodeNames.size());
    long long timeNow = std::chrono::system_clock::now().time_since_epoch().count();
    std::cout << "onnx run time: " << (timeNow - startTime) * 0.000001 << "ms\n";

    //post-process
    float* all_data = output_tensors[0].GetTensorMutableData<float>();
    _outputTensorShape = output_tensors[0].GetTensorTypeAndShapeInfo().GetShape();
    int net_width = _outputTensorShape[1];     // 4 box values + class scores + 1 angle
    int socre_array_length = net_width - 5;    // number of classes
    int angle_index = net_width - 1;           // the angle is the last value of each row
    int64_t one_output_length = VectorProduct(_outputTensorShape) / _outputTensorShape[0];
    for (int img_index = 0; img_index < srcImgs.size(); ++img_index) {
        cv::Mat output0 = cv::Mat(cv::Size((int)_outputTensorShape[2], (int)_outputTensorShape[1]), CV_32F, all_data).t();  //[bs,4+nc+1,N]=>[bs,N,4+nc+1]
        all_data += one_output_length;
        float* pdata = (float*)output0.data;
        int rows = output0.rows;
        std::vector<int> class_ids;          //class id of each candidate
        std::vector<float> confidences;      //confidence of each candidate
        std::vector<cv::RotatedRect> boxes;  //rotated box of each candidate
        for (int r = 0; r < rows; ++r) {  //stride
            cv::Mat scores(1, socre_array_length, CV_32F, pdata + 4);
            cv::Point classIdPoint;
            double max_class_socre;
            minMaxLoc(scores, 0, &max_class_socre, 0, &classIdPoint);
            max_class_socre = (float)max_class_socre;
            if (max_class_socre >= _classThreshold) {
                //rect [x,y,w,h]
                float x = (pdata[0] - params[img_index][2]) / params[img_index][0];  //x
                float y = (pdata[1] - params[img_index][3]) / params[img_index][1];  //y
                float w = pdata[2] / params[img_index][0];  //w
                float h = pdata[3] / params[img_index][1];  //h
                float angle = pdata[angle_index] / CV_PI * 180.0;  //radians to degrees
                class_ids.push_back(classIdPoint.x);
                confidences.push_back(max_class_socre);
                //cv::RotatedRect temp_rotated;
                //BBox2Obb(x, y, w, h, angle, temp_rotated);
                //boxes.push_back(temp_rotated);
                boxes.push_back(cv::RotatedRect(cv::Point2f(x, y), cv::Size(w, h), angle));
            }
            pdata += net_width;  //next row
        }

        std::vector<int> nms_result;
        cv::dnn::NMSBoxes(boxes, confidences, _classThreshold, _nmsThreshold, nms_result);
        std::vector<std::vector<float>> temp_mask_proposals;
        std::vector<OutputParams> temp_output;
        for (int i = 0; i < nms_result.size(); ++i) {
            int idx = nms_result[i];
            OutputParams result;
            result.id = class_ids[idx];
            result.confidence = confidences[idx];
            result.rotatedBox = boxes[idx];
            temp_output.push_back(result);
        }
        output.push_back(temp_output);
    }

    if (output.size())
        return true;
    else
        return false;
}
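The indexing in OnnxBatchDetect (class scores in columns 4 to net_width - 2, angle in the last column) can be checked on a small synthetic array. The following NumPy sketch mirrors only that decoding step under the same layout assumption, (4 + nc + 1, N) for one image; it does not undo the letterbox and does not run NMS, and the function name `decode_obb_output` is hypothetical.

```python
import numpy as np


def decode_obb_output(output, conf_thres=0.25):
    """Decode one image's raw OBB output of shape (4 + nc + 1, N).

    Assumed row layout after transposing: [cx, cy, w, h, cls_0..cls_{nc-1}, angle_rad].
    """
    pred = np.asarray(output, dtype=np.float64).T  # (N, 4 + nc + 1)
    num_classes = pred.shape[1] - 5
    if num_classes < 1:
        raise ValueError("output needs at least 6 values per candidate")

    class_scores = pred[:, 4:4 + num_classes]
    class_ids = class_scores.argmax(axis=1)
    confidences = class_scores.max(axis=1)

    detections = []
    for row, class_id, conf in zip(pred, class_ids, confidences):
        if conf < conf_thres:
            continue
        detections.append({
            "class_id": int(class_id),
            "confidence": float(conf),
            "cx": float(row[0]), "cy": float(row[1]),
            "w": float(row[2]), "h": float(row[3]),
            "angle_deg": float(np.degrees(row[-1])),  # the angle is the last column
        })
    return detections


if __name__ == "__main__":
    # Three candidates, two classes: columns are cx, cy, w, h, cls0, cls1, angle
    rows = np.array([
        [10, 20, 4, 2, 0.1, 0.9, np.pi / 2],
        [1, 1, 1, 1, 0.1, 0.2, 0.0],
        [5, 5, 1, 1, 0.8, 0.2, 0.0],
    ])
    for det in decode_obb_output(rows.T):
        print(det)
```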

main.cpp usage

#include <iostream>
#include <chrono>
#include <opencv2/opencv.hpp>
#include <math.h>
#include "yolov8_obb_onnx.h"
#include <time.h>
//#define VIDEO_OPENCV //if define, use opencv for video.

using namespace std;
using namespace cv;
using namespace dnn;

template<typename _Tp>
std::vector<OutputParams> yolov8_onnx(_Tp& task, cv::Mat& img, std::string& model_path)
{
    // if (task.ReadModel(model_path, false,0,true)) {
    //     std::cout << "read net ok!" << std::endl;
    // }

    // Generate random colors
    std::vector<cv::Scalar> color;
    srand(time(0));
    for (int i = 0; i < 80; i++) {
        int b = rand() % 256;
        int g = rand() % 256;
        int r = rand() % 256;
        color.push_back(cv::Scalar(b, g, r));
    }
    std::vector<OutputParams> result;
    PoseParams pose_detect;
    if (task.OnnxDetect(img, result)) {
        //std::cout<<"111"<<std::endl;
        DrawPred(img, result, task._className, color, false);
        //DrawPredPose(img, result, pose_detect, false);

        // Iterate over all detection results
        // cv::Mat combinedMask = cv::Mat::zeros(img.size(), CV_8UC1);
        // for (const auto& output : result) {
        //     // Get the ROI of the current detection box
        //     cv::Mat roi = combinedMask(output.box);
        //     cv::Mat boxMaskBinary;
        //     output.boxMask.convertTo(boxMaskBinary, CV_8UC1);
        //     // Merge the current mask into the combined mask.
        //     // OR is used here; change it to another merge operation if needed.
        //     cv::bitwise_or(roi, boxMaskBinary, roi);
        // }
        // cv::imwrite("combinedMask.png", combinedMask);
    }
    else {
        std::cout << "Detect Failed!" << std::endl;
    }
    //system("pause");
    return result;
}

int main() {
    std::string img_path = "./P0032.png";
    std::string model_path_detect = "./model/yolo11n-obb.onnx";
    cv::Mat src = imread(img_path);
    cv::Mat img = src.clone();

    // Initialize the ONNX session once, before running detection
    Yolov8ObbOnnx task_obb_ort;
    if (task_obb_ort.ReadModel(model_path_detect, false, 0, true)) {
        std::cout << "read net ok!" << std::endl;
    }

    std::vector<OutputParams> results_detect;
    long long startTime = std::chrono::system_clock::now().time_since_epoch().count(); //ns
    results_detect = yolov8_onnx(task_obb_ort, img, model_path_detect); //yolov8 onnxruntime
    long long timeNow = std::chrono::system_clock::now().time_since_epoch().count();
    double timeuse = (timeNow - startTime) * 0.000001;
    //std::cout<<"end detect"<<endl;
    std::cout << (timeNow - startTime) * 0.000001 << "ms\n";

    // Guard against indexing an empty result list
    if (results_detect.empty()) {
        std::cout << "No detections." << std::endl;
        return 0;
    }
    OutputParams& output = results_detect[0]; // first detection
    std::cout << "---- conf: " << output.confidence << std::endl;
    std::vector<cv::Point2f> getPoints;
    cv::waitKey(0);
    return 0;
}

CMakeLists.txt

CMAKE_MINIMUM_REQUIRED(VERSION 3.0.0)
project(YOLOv8)

SET (ONNXRUNTIME_DIR /home/xxx/onnxruntime-linux-x64-gpu-1.17.1)

find_package(OpenCV REQUIRED)
include_directories(${OpenCV_INCLUDE_DIRS})
# Print OpenCV information
message(STATUS "OpenCV library status:")
message(STATUS " config: ${OpenCV_DIR}")
message(STATUS " version: ${OpenCV_VERSION}")
message(STATUS " libraries: ${OpenCV_LIBS}")
message(STATUS " include path: ${OpenCV_INCLUDE_DIRS}")

# Add the PCL environment
find_package(PCL REQUIRED)
add_definitions(${PCL_DEFINITIONS})
include_directories(${PCL_INCLUDE_DIRS})
link_directories(${PCL_LIBRARY_DIRS})
find_package(VTK REQUIRED)

ADD_EXECUTABLE(YOLOv8 yolov8_utils.h yolov8_obb_onnx.h
    main.cpp yolov8_utils.cpp yolov8_obb_onnx.cpp )

SET(CMAKE_CXX_STANDARD 14)
SET(CMAKE_CXX_STANDARD_REQUIRED ON)

TARGET_INCLUDE_DIRECTORIES(YOLOv8 PRIVATE "${ONNXRUNTIME_DIR}/include")
TARGET_COMPILE_FEATURES(YOLOv8 PRIVATE cxx_std_14)
TARGET_LINK_LIBRARIES(YOLOv8 ${OpenCV_LIBS}
)
# TARGET_LINK_LIBRARIES(YOLOv8 "${ONNXRUNTIME_DIR}/lib/libonnxruntime.so")
# TARGET_LINK_LIBRARIES(YOLOv8 "${ONNXRUNTIME_DIR}/lib/libonnxruntime.so.1.13.1")

if (WIN32)
    TARGET_LINK_LIBRARIES(YOLOv8 "${ONNXRUNTIME_DIR}/lib/onnxruntime.lib")
endif(WIN32)

if (UNIX)
    TARGET_LINK_LIBRARIES(YOLOv8 "${ONNXRUNTIME_DIR}/lib/libonnxruntime.so")
endif(UNIX)

Python version

import cv2
import onnxruntime as ort
from PIL import Image
import numpy as np
import time
import math

# Confidence threshold
confidence_thres = 0.7
# IoU threshold
iou_thres = 0.7
# Classes
CLASSES = ['plane', 'ship', 'storage tank', 'baseball diamond', 'tennis court', 'basketball court',
           'ground track field', 'harbor', 'bridge', 'large vehicle', 'small vehicle', 'helicopter', 'roundabout',
           'soccer ball field', 'swimming pool']
classes = {
    0: 'plane', 1: 'ship', 2: 'storage tank', 3: 'baseball diamond',
    4: 'tennis court', 5: 'basketball court', 6: 'ground track field',
    7: 'harbor', 8: 'bridge', 9: 'large vehicle', 10: 'small vehicle',
    11: 'helicopter', 12: 'roundabout', 13: 'soccer ball field',
    14: 'swimming pool'
}

# Random colors
color_palette = np.random.uniform(100, 255, size=(len(classes), 3))

# Execution providers: try the GPU first, then fall back to the CPU
providers = [
    ('CUDAExecutionProvider', {
        'device_id': 0,
    }),
    'CPUExecutionProvider',
]


def letterbox(im, new_shape=(1024, 1024), color=(114, 114, 114), auto=True, scaleFill=False, scaleup=True, stride=32):
    # Resize and pad image while meeting stride-multiple constraints
    shape = im.shape[:2]  # current shape [height, width]
    if isinstance(new_shape, int):
        new_shape = (new_shape, new_shape)

    # Scale ratio (new / old)
    r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])
    if not scaleup:  # only scale down, do not scale up (for better val mAP)
        r = min(r, 1.0)

    # Compute padding
    ratio = r, r  # width, height ratios
    new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))
    dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1]  # wh padding
    if auto:  # minimum rectangle
        dw, dh = np.mod(dw, stride), np.mod(dh, stride)  # wh padding
    elif scaleFill:  # stretch
        dw, dh = 0.0, 0.0
        new_unpad = (new_shape[1], new_shape[0])
        ratio = new_shape[1] / shape[1], new_shape[0] / shape[0]  # width, height ratios

    dw /= 2  # divide padding into 2 sides
    dh /= 2

    if shape[::-1] != new_unpad:  # resize
        im = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)
    top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
    left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
    im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color)  # add border
    return im, ratio, (dw, dh)


def preprocess_me(img0s, new_shape):
    """
    Preprocesses the input image before performing inference.

    Returns:
        image_data: Preprocessed image data ready for inference.
    """
    # Letterbox the image to the network input size
    img, _, delta_wh = letterbox(img0s, new_shape, auto=True)
    print('++++img_shape', img.shape)
    # Convert
    img = img.transpose((2, 0, 1))[::-1]  # HWC to CHW, BGR to RGB
    img = np.ascontiguousarray(img)
    img = img.astype(dtype=np.float32)
    img /= 255  # 0 - 255 to 0.0 - 1.0
    if len(img.shape) == 3:
        img = img[None]  # expand for batch dim
    # Return the preprocessed image data
    return img, delta_wh

# One way to compute the IoU of rotated boxes (ProbIoU)
class CSXYWHR:
    def __init__(self, classId, score, x, y, w, h, angle):
        self.classId = classId
        self.score = score
        self.x = x
        self.y = y
        self.w = w
        self.h = h
        self.angle = angle


class DetectBox:
    def __init__(self, classId, score, pt1x, pt1y, pt2x, pt2y, pt3x, pt3y, pt4x, pt4y, angle):
        self.classId = classId
        self.score = score
        self.pt1x = pt1x
        self.pt1y = pt1y
        self.pt2x = pt2x
        self.pt2y = pt2y
        self.pt3x = pt3x
        self.pt3y = pt3y
        self.pt4x = pt4x
        self.pt4y = pt4y
        self.angle = angle


def get_covariance_matrix(boxes):
    # ProbIoU models each box as a Gaussian whose variances along the box
    # axes are w^2 / 12 and h^2 / 12 (as in the Ultralytics implementation)
    a, b, c = boxes.w ** 2 / 12, boxes.h ** 2 / 12, boxes.angle
    cos = math.cos(c)
    sin = math.sin(c)
    cos2 = math.pow(cos, 2)
    sin2 = math.pow(sin, 2)
    return a * cos2 + b * sin2, a * sin2 + b * cos2, (a - b) * cos * sin


def probiou(obb1, obb2, eps=1e-7):
    x1, y1 = obb1.x, obb1.y
    x2, y2 = obb2.x, obb2.y
    a1, b1, c1 = get_covariance_matrix(obb1)
    a2, b2, c2 = get_covariance_matrix(obb2)

    t1 = (((a1 + a2) * math.pow((y1 - y2), 2) + (b1 + b2) * math.pow((x1 - x2), 2)) / ((a1 + a2) * (b1 + b2) - math.pow((c1 + c2), 2) + eps)) * 0.25
    t2 = (((c1 + c2) * (x2 - x1) * (y1 - y2)) / ((a1 + a2) * (b1 + b2) - math.pow((c1 + c2), 2) + eps)) * 0.5

    temp1 = (a1 * b1 - math.pow(c1, 2)) if (a1 * b1 - math.pow(c1, 2)) > 0 else 0
    temp2 = (a2 * b2 - math.pow(c2, 2)) if (a2 * b2 - math.pow(c2, 2)) > 0 else 0
    t3 = math.log((((a1 + a2) * (b1 + b2) - math.pow((c1 + c2), 2)) / (4 * math.sqrt((temp1 * temp2)) + eps) + eps)) * 0.5

    # Clamp the Bhattacharyya distance to [eps, 100]
    if (t1 + t2 + t3) > 100:
        bd = 100
    elif (t1 + t2 + t3) < eps:
        bd = eps
    else:
        bd = t1 + t2 + t3
    hd = math.sqrt((1.0 - math.exp(-bd) + eps))
    return 1 - hd


def nms_rotated(boxes, nms_thresh):
    pred_boxes = []
    sort_boxes = sorted(boxes, key=lambda x: x.score, reverse=True)
    for i in range(len(sort_boxes)):
        if sort_boxes[i].classId != -1:
            pred_boxes.append(sort_boxes[i])
            for j in range(i + 1, len(sort_boxes), 1):
                ious = probiou(sort_boxes[i], sort_boxes[j])
                if ious > nms_thresh:
                    sort_boxes[j].classId = -1  # mark as suppressed
    return pred_boxes


def xywhr2xyxyxyxy(x, y, w, h, angle):
    cos_value = math.cos(angle)
    sin_value = math.sin(angle)

    # Half-width and half-height vectors of the rotated box
    vec1x = w / 2 * cos_value
    vec1y = w / 2 * sin_value
    vec2x = -h / 2 * sin_value
    vec2y = h / 2 * cos_value

    pt1x = x + vec1x + vec2x
    pt1y = y + vec1y + vec2y
    pt2x = x + vec1x - vec2x
    pt2y = y + vec1y - vec2y
    pt3x = x - vec1x - vec2x
    pt3y = y - vec1y - vec2y
    pt4x = x - vec1x + vec2x
    pt4y = y - vec1y + vec2y
    return pt1x, pt1y, pt2x, pt2y, pt3x, pt3y, pt4x, pt4y


# Next is another approach

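def calculate_obb_iou(box1, box2):
    """
    Compute the intersection over union (IoU) of two rotated boxes.

    Args:
        box1: the 4 corner points of the first rotated box, as [x1,y1,x2,y2,x3,y3,x4,y4]
        box2: the 4 corner points of the second rotated box, same format
    Returns:
        The IoU of the two rotated boxes, in the range [0, 1]
    """
    # Convert the coordinate lists to the contour format OpenCV expects:
    # each contour is an array of 4 points, each point an (x, y) pair
    contour1 = np.array(box1[:8]).reshape(4, 2).astype(np.float32)  # 4x2 matrix
    contour2 = np.array(box2[:8]).reshape(4, 2).astype(np.float32)  # 4x2 matrix

    # Intersection area of the two rotated rectangles.
    # cv2.intersectConvexConvex returns two values: the intersection area and its contour
    intersection_area, _ = cv2.intersectConvexConvex(contour1, contour2)

    # Area of each rotated rectangle
    area1 = cv2.contourArea(contour1)
    area2 = cv2.contourArea(contour2)

    # Union area = area1 + area2 - intersection area
    union_area = area1 + area2 - intersection_area

    # Guard against division by zero and return the IoU
    return intersection_area / union_area if union_area > 0 else 0


def custom_NMSBoxesRotated(boxes, scores, confidence_threshold, iou_threshold):
    """
    Non-maximum suppression (NMS) for rotated boxes.

    Args:
        boxes: list of all rotated boxes, each as [x1,y1,x2,y2,x3,y3,x4,y4]
        scores: the confidence score of each box
        confidence_threshold: boxes scoring below this value are filtered out
        iou_threshold: boxes whose IoU exceeds this value are treated as overlapping
    Returns:
        List of indices (into the input boxes) of the boxes that are kept
    """
    # Handle empty input
    if len(boxes) == 0:
        return []

    # Convert the inputs to numpy arrays
    scores = np.array(scores)
    boxes = np.array(boxes)

    # Step 1: filter out low-quality boxes by the confidence threshold
    keep_mask = scores > confidence_threshold  # boolean mask
    kept_indices = np.flatnonzero(keep_mask)   # positions in the original input
    filtered_boxes = boxes[keep_mask]          # high-confidence boxes
    filtered_scores = scores[keep_mask]        # their scores

    # If no box passes the threshold, return an empty list
    if len(filtered_boxes) == 0:
        return []

    # Step 2: sort by confidence from high to low
    # (argsort is ascending by default; [::-1] makes it descending)
    sorted_indices = np.argsort(filtered_scores)[::-1]
    selected_indices = []  # indices of the boxes finally kept

    # Step 3: main NMS loop
    while len(sorted_indices) > 0:
        # Take the box with the highest remaining score and keep it,
        # reporting its index in the original input rather than in the filtered array
        current_idx = sorted_indices[0]
        selected_indices.append(int(kept_indices[current_idx]))

        # If this was the last box, stop
        if len(sorted_indices) == 1:
            break

        # Current box and all remaining boxes
        current_box = filtered_boxes[current_idx]
        remaining_boxes = filtered_boxes[sorted_indices[1:]]

        # IoU of the current box with every remaining box
        iou_values = []
        for box in remaining_boxes:
            iou_values.append(calculate_obb_iou(current_box, box))
        iou_values = np.array(iou_values)

        # Boxes whose IoU is at or below the threshold do not overlap
        non_overlap_mask = iou_values <= iou_threshold

        # Update the pending list (drop the current box and the overlapping boxes).
        # remaining_boxes is aligned with sorted_indices[1:], so the mask is applied to that slice
        sorted_indices = sorted_indices[1:][non_overlap_mask]

    return selected_indices


def draw_rotated_detections(img, box, score, class_id):
    """
    Draw a rotated-box detection.
    box: [x1,y1,x2,y2,x3,y3,x4,y4]
    """
    points = np.array(box[:8]).reshape(-1, 2).astype(np.int32)
    color = color_palette[class_id].tolist()

    # Draw the rotated box
    cv2.drawContours(img, [points], 0, color, 2)

    # Build the label
    label = f'{classes[class_id]}: {score:.2f}'
    (label_width, label_height), _ = cv2.getTextSize(label, cv2.FONT_HERSHEY_SIMPLEX, 0.5, 1)

    # Find a suitable label position
    label_x = int(points[0][0])
    origin_y = int(points[0][1])
    label_y = origin_y - 10 if origin_y - 10 > label_height else origin_y + 10

    # Draw the label background
    cv2.rectangle(
        img, (label_x, label_y - label_height),
        (label_x + label_width, label_y + label_height),
        color, cv2.FILLED
    )
    # Draw the label text
    cv2.putText(img, label, (label_x, label_y),
                cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 0), 1, cv2.LINE_AA)


def preprocess(img, input_width, input_height):
    """
    Preprocess the input image before running inference.

    Returns:
        image_data: preprocessed image data ready for inference.
    """
    # Height and width of the input image
    img_height, img_width = img.shape[:2]
    # Convert the color space from BGR to RGB
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    # Resize the image to the network input shape
    img = cv2.resize(img, (input_width, input_height))
    # Normalize the image data by dividing by 255.0
    image_data = np.array(img) / 255.0
    # Transpose so that the channel dimension comes first
    image_data = np.transpose(image_data, (2, 0, 1))
    # Add the batch dimension to match the expected input shape
    image_data = np.expand_dims(image_data, axis=0).astype(np.float32)
    # Return the preprocessed image data
    return image_data, img_height, img_width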

def postprocess(input_image, output, input_width, input_height, img_width, img_height, delta_wh):
    """Post-process the rotated-box output.

    Each prediction row is laid out as [cx, cy, w, h, cls0, ..., clsN-1, angle],
    with the angle in radians. `delta_wh` is the letterbox padding; it is (0, 0)
    here because preprocess() uses a plain resize.
    """
    # (1, 4 + num_classes + 1, num_boxes) -> (num_boxes, 4 + num_classes + 1)
    pred = np.transpose(np.squeeze(output[0]))
    rows = pred.shape[0]

    # Debug output: shape and the first few raw rows.
    print(f"Model output shape: {pred.shape}, candidate boxes: {rows}")
    for i in range(min(20, rows)):
        print(f"Box {i}: center=({pred[i][0]:.2f},{pred[i][1]:.2f}), size=({pred[i][2]:.2f},{pred[i][3]:.2f}), "
              f"angle={pred[i][-1]:.2f}rad, max_class_score={np.max(pred[i][4:-1]):.2f}")

    detect_result = []
    boxes = []
    scores = []
    class_ids = []

    # Scale factors from model input size back to the original image.
    # With letterbox preprocessing these would instead be, for example,
    # img_width / (input_width - 2 * dw) and img_height / (input_height - 2 * dh).
    x_factor = img_width / input_width
    y_factor = img_height / input_height

    for i in range(rows):
        # Class scores sit between the four box values and the trailing angle.
        classes_scores = pred[i][4:-1]
        max_score = np.amax(classes_scores)

        # Keep only rows whose best class score reaches the confidence threshold.
        if max_score >= confidence_thres:
            class_id = np.argmax(classes_scores)

            # The angle is the last column (index 19 for the 15-class DOTA model).
            cx, cy, w, h, angle = pred[i][0], pred[i][1], pred[i][2], pred[i][3], pred[i][-1]

            # Map to original image coordinates. Scaling w and h per axis is
            # exact only when x_factor == y_factor.
            cx = cx * x_factor
            cy = cy * y_factor
            w = w * x_factor
            h = h * y_factor

            # Corner points, only needed by the alternative
            # custom_NMSBoxesRotated path shown in the comments below.
            rect = ((cx, cy), (w, h), -np.degrees(angle))
            box = cv2.boxPoints(rect)
            box = box.reshape(-1).tolist()

            class_ids.append(class_id)
            scores.append(max_score)
            boxes.append(box)

            detect_result.append(CSXYWHR(class_id, max_score, cx, cy, w, h, angle))

    # NMS
    print('before nms num is:', len(detect_result))
    pred_boxes = nms_rotated(detect_result, 0.5)
    print('after nms num is:', len(pred_boxes))

    results = []
    for det in pred_boxes:
        classid = det.classId
        score = det.score
        cx = det.x
        cy = det.y
        cw = det.w
        ch = det.h
        angle = det.angle

        # Regularize: make the first side the longer one and wrap the angle into [0, pi).
        bw_ = cw if cw > ch else ch
        bh_ = ch if cw > ch else cw
        bt = angle % math.pi if cw > ch else (angle + math.pi / 2) % math.pi

        pt1x, pt1y, pt2x, pt2y, pt3x, pt3y, pt4x, pt4y = xywhr2xyxyxyxy(cx, cy, bw_, bh_, bt)
        bbox = DetectBox(classid, score, pt1x, pt1y, pt2x, pt2y, pt3x, pt3y, pt4x, pt4y, angle)
        results.append(bbox)
    return results

    # Alternative: run NMS on the corner points and draw directly.
    # indices = custom_NMSBoxesRotated(boxes, scores, confidence_thres, iou_thres)
    # print("Detections:", len(indices))
    # for i in indices:
    #     draw_rotated_detections(input_image, boxes[i], scores[i], class_ids[i])
    # return input_image


def init_detect_model(model_path):
    session = ort.InferenceSession(model_path, providers=providers)
    model_inputs = session.get_inputs()
    # The input shape is [batch, channels, height, width].
    input_shape = model_inputs[0].shape
    input_height = input_shape[2]
    input_width = input_shape[3]
    return session, model_inputs, input_width, input_height


def detect_object(image, session, model_inputs, input_width, input_height):
    # Work on a BGR numpy copy so both PIL and OpenCV inputs can be drawn on.
    if isinstance(image, Image.Image):
        result_image = cv2.cvtColor(np.array(image.convert("RGB")), cv2.COLOR_RGB2BGR)
    else:
        result_image = image.copy()

    img_data, img_height, img_width = preprocess(result_image, input_width, input_height)
    delta_wh = (0, 0)
    # Letterbox alternative:
    # img_data, delta_wh = preprocess_me(result_image, new_shape=(input_width, input_height))
    print("pre_img_shape:", img_data.shape)

    outputs = session.run(None, {model_inputs[0].name: img_data})
    pred_boxes = postprocess(result_image, outputs, input_width, input_height, img_width, img_height, delta_wh)
    print('obj num is :', len(pred_boxes))

    for det in pred_boxes:
        classId = det.classId
        score = det.score
        pts = [
            (int(det.pt1x), int(det.pt1y)),
            (int(det.pt2x), int(det.pt2y)),
            (int(det.pt3x), int(det.pt3y)),
            (int(det.pt4x), int(det.pt4y)),
        ]

        # Draw the four edges of the rotated box.
        for j in range(4):
            cv2.line(result_image, pts[j], pts[(j + 1) % 4], (0, 255, 0), 2)

        title = CLASSES[classId] + " %.2f" % score
        cv2.putText(result_image, title, pts[0], cv2.FONT_HERSHEY_SIMPLEX, 0.65, (0, 0, 255), 2, cv2.LINE_AA)

    cv2.imwrite('./test_onnx_result11.jpg', result_image)
    return result_image


if __name__ == '__main__':
    # Only the model path and the image path need to be changed.
    model_path = "/home/xxx/yolov11/yolo_base_model/yolo11n-obb.onnx"  # replace with your model path
    session, model_inputs, input_width, input_height = init_detect_model(model_path)
    image_data = cv2.imread("./obb_test_ano/P0032.png")
    result_image = detect_object(image_data, session, model_inputs, input_width, input_height)
    # cv2.imwrite("output.jpg", result_image)
    # cv2.imshow('Result', result_image)
    # cv2.waitKey(0)
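
The post-processing above regularizes each box before converting it to corner points: the longer side becomes the width and the angle is wrapped into [0, π). The sketch below illustrates that step in pure Python. The names `regularize_obb` and `obb_to_corners` are hypothetical and stand in for the script's own logic and its `xywhr2xyxyxyxy` helper; it assumes the angle is in radians and measured in image coordinates, and the article's helper may return the corners in a different order.

```python
import math


def regularize_obb(w, h, angle):
    """Return (long_side, short_side, theta) with theta wrapped into [0, pi)."""
    if w > h:
        return w, h, angle % math.pi
    # Swapping the sides is equivalent to rotating the box by 90 degrees.
    return h, w, (angle + math.pi / 2) % math.pi


def obb_to_corners(cx, cy, w, h, angle):
    """Hypothetical helper: the four corners of a box rotated by `angle` radians."""
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    # Half-extent vectors along the box's width axis and height axis.
    vx, vy = w / 2 * cos_a, w / 2 * sin_a
    ux, uy = -h / 2 * sin_a, h / 2 * cos_a
    return [
        (cx + vx + ux, cy + vy + uy),
        (cx + vx - ux, cy + vy - uy),
        (cx - vx - ux, cy - vy - uy),
        (cx - vx + ux, cy - vy + uy),
    ]
```

Regularizing changes only how the box is described, not the region it covers: a 2x4 box at angle 0 and the regularized 4x2 box at π/2 produce the same set of corners, so the drawing code receives the same quadrilateral either way.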