Deploying a production k8s cluster from binaries with kubeasz

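1.1 Planning the highly available k8s cluster environment

1.1.1 Server inventory

| Type | Server IP address | Notes |
|---|---|---|
| Ansible (2 hosts) | 192.168.121.101/102 | K8s deployment servers; can be shared with other servers |
| K8s Master (3 hosts) | 192.168.121.101/102/103 | K8s control plane, made highly available through a single VIP |
| Harbor (2 hosts) | 192.168.121.104/105 | Highly available image registry |
| Etcd (at least 3 hosts) | 192.168.121.106/107/108 | Servers that store the k8s cluster data |
| HAProxy (2 hosts) | 192.168.121.109/110 | Highly available load balancers that hold the VIP and proxy kube-apiserver |
| Node (2 to N hosts) | 192.168.121.111/112/113 | Servers that actually run the containers; at least two for high availability |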

1.2 Server preparation

| Type | Server IP | Hostname | VIP |
|---|---|---|---|
| master1 | 192.168.121.101 | master1 | 192.168.121.188 |
| master2 | 192.168.121.102 | master2 | 192.168.121.188 |
| master3 | 192.168.121.103 | master3 | 192.168.121.188 |
| Harbor1 | 192.168.121.104 | harbor1 | |
| Harbor2 | 192.168.121.105 | harbor2 | |
| etcd1 | 192.168.121.106 | etcd1 | |
| etcd2 | 192.168.121.107 | etcd2 | |
| etcd3 | 192.168.121.108 | etcd3 | |
| haproxy1 | 192.168.121.109 | haproxy1 | |
| haproxy2 | 192.168.121.110 | haproxy2 | |
| node1 | 192.168.121.111 | node1 | |
| node2 | 192.168.121.112 | node2 | |
| node3 | 192.168.121.113 | node3 | |

1.3 k8s cluster software list

API endpoint (VIP:port): 192.168.121.188:6443

Operating system: Ubuntu Server 22.04.5

k8s version: 1.20.x

calico: 3.4.4

1.4 Basic environment preparation

1.4.1 System configuration

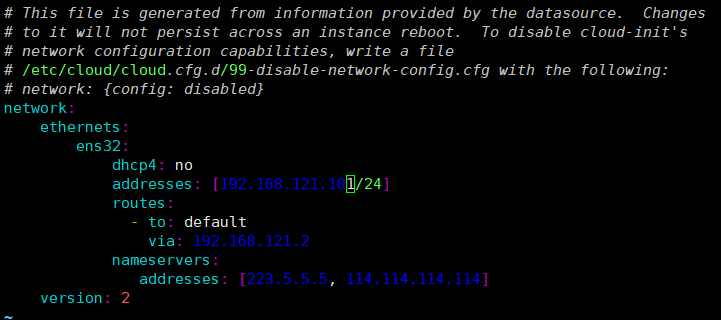

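1.4.1.1 Configure a static IP

Give each virtual machine a static IP address so that the address does not change after a reboot.

# Run on every server, or configure one server and clone it as a template for the rest

root@master1:~# vim /etc/netplan/50-cloud-init.yaml

network:
  ethernets:                                      # Ethernet interface configuration
    eth0:                                         # NIC name (eth0 here; use the name that ip a reports on your server)
      dhcp4: no                                   # disable DHCP for IPv4
      addresses: [192.168.121.101/24]             # static IPv4 address and prefix (/24 is 255.255.255.0)
      routes:                                     # routing configuration
        - to: default                             # default route (all non-local traffic)
          via: 192.168.121.2                      # gateway used to reach external networks
      nameservers:                                # DNS configuration
        addresses: [223.5.5.5, 114.114.114.114]   # Alibaba Cloud DNS and a public DNS in China
  version: 2                                      # netplan v2 syntax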

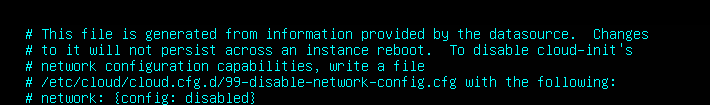

Disable cloud-init's automatic network configuration

vim /etc/cloud/cloud.cfg.d/99-disable-network-config.cfg

network: {config: disabled}

reboot # reboot for the change to take effect
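Because only the address changes from host to host, the netplan file can be generated rather than edited by hand. The following is a minimal sketch under stated assumptions: `render_netplan` is a hypothetical helper name, and the interface name `eth0`, the gateway and the DNS servers are simply the values used in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a netplan v2 document for one host.
# Arguments: interface name, address in CIDR form, default gateway.
render_netplan() {
  local iface="$1" cidr="$2" gateway="$3"
  cat <<EOF
network:
  version: 2
  ethernets:
    ${iface}:
      dhcp4: no
      addresses: [${cidr}]
      routes:
        - to: default
          via: ${gateway}
      nameservers:
        addresses: [223.5.5.5, 114.114.114.114]
EOF
}

# Preview the file for master2; nothing is written to disk here.
render_netplan eth0 192.168.121.102/24 192.168.121.2
```

On a real host the output would be redirected to /etc/netplan/50-cloud-init.yaml; `netplan apply` then activates it without waiting for the reboot.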

1.4.1.2 Configure the hostname

# Change on every server

root@master1:~# hostnamectl set-hostname <hostname>

1.4.1.3 Configure the hosts file

# Run on every server, or configure one server and clone it as a template for the rest

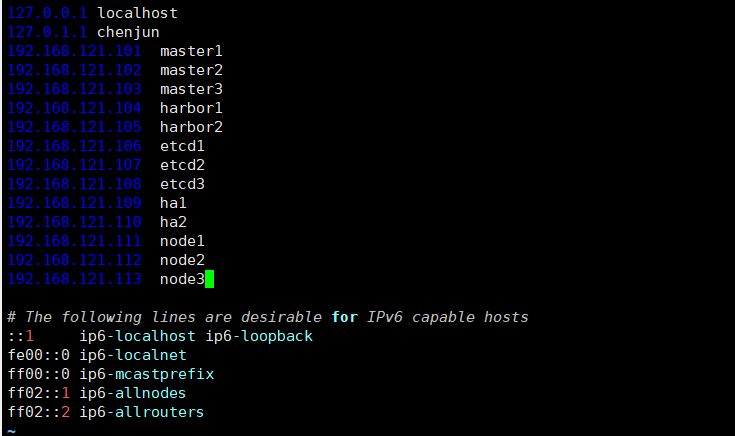

root@master1:~# vim /etc/hosts

192.168.121.101 master1

192.168.121.102 master2

192.168.121.103 master3

192.168.121.104 harbor1

192.168.121.105 harbor2

192.168.121.106 etcd1

192.168.121.107 etcd2

192.168.121.108 etcd3

192.168.121.109 haproxy1

192.168.121.110 haproxy2

192.168.121.111 node1

192.168.121.112 node2

192.168.121.113 node3

192.168.121.188 VIP

1.4.1.4 Disable SELinux

# Run getenforce to check the SELinux mode

# Ubuntu does not install or enable SELinux by default

root@master1:~# getenforce

Command 'getenforce' not found, but can be installed with:

apt install selinux-utils

# The output above means SELinux is not installed and nothing needs to be done

# Enforcing: SELinux is active and blocks actions that violate the policy.

# Permissive: SELinux does not block violations but logs them.

# Disabled: SELinux is completely off.

1.4.1.5 Disable the firewall

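# Check the firewall status

root@master1:~# ufw status

Status: inactive

# The output above means the firewall is not enabled

# ufw enable: turn the firewall on and start it at boot

# ufw disable: turn the firewall off

1.4.1.6 Permanently disable swap

root@master1:~# vim /etc/fstab

# Comment out the swap entry:

#/swap.img none swap sw 0 0

# Takes effect after a reboot

1.4.1.7 Adjust kernel parameters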

# Load the br_netfilter module

modprobe br_netfilter

# Verify that the module is loaded

lsmod | grep br_netfilter

# Set the kernel parameters

cat > /etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

# Apply the kernel parameters just written

sysctl -p /etc/sysctl.d/k8s.conf
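`modprobe` loads br_netfilter only for the current boot, while the `net.bridge.*` keys exist only when the module is loaded. One way to keep both across reboots is a file under /etc/modules-load.d, which systemd reads at boot. The sketch below is an assumption-laden illustration, not part of the original procedure: `persist_k8s_kernel_settings` is a hypothetical helper, and its optional root prefix exists only so the result can be inspected in a scratch directory first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the module list and sysctl file under an
# optional root prefix (empty prefix means the real /etc).
persist_k8s_kernel_settings() {
  local root="${1:-}"
  mkdir -p "${root}/etc/modules-load.d" "${root}/etc/sysctl.d"
  # systemd-modules-load loads every module listed in this directory at boot
  printf 'br_netfilter\n' > "${root}/etc/modules-load.d/k8s.conf"
  cat > "${root}/etc/sysctl.d/k8s.conf" <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# Dry run into a scratch directory; as root, call it with no argument to write to /etc.
scratch="$(mktemp -d)"
persist_k8s_kernel_settings "$scratch"
cat "$scratch/etc/modules-load.d/k8s.conf" "$scratch/etc/sysctl.d/k8s.conf"
```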

1.4.1.8 Configure the Alibaba Cloud apt mirror

# Back up the original sources file

cp /etc/apt/sources.list /etc/apt/sources.list.bak

# Check the Ubuntu release codename

lsb_release -c

# Edit the sources file

vim /etc/apt/sources.list

# Replace the contents with the Alibaba Cloud mirror

deb http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy-security main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy-updates main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy-proposed main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ jammy-backports main restricted universe multiverse
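The ten entries follow one pattern: a `deb` and a `deb-src` line for the release and each of its four pockets. A small sketch of generating them from the codename, assuming the same mirror URL and components as above (`render_aliyun_sources` is a hypothetical name):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the mirror entries for one Ubuntu codename.
render_aliyun_sources() {
  local codename="$1" suite
  for suite in "" -security -updates -proposed -backports; do
    echo "deb http://mirrors.aliyun.com/ubuntu/ ${codename}${suite} main restricted universe multiverse"
    echo "deb-src http://mirrors.aliyun.com/ubuntu/ ${codename}${suite} main restricted universe multiverse"
  done
}

# jammy is the codename of Ubuntu 22.04, as reported by lsb_release -cs
render_aliyun_sources jammy
```

Redirecting the output to /etc/apt/sources.list reproduces the file shown above.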

# Refresh the package cache

apt update

1.4.1.9 Set the time zone

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime # switch to the Shanghai time zone

1.4.1.10 Install common tools

apt-get update

apt-get purge ufw lxd lxd-client lxcfs lxc-common # remove packages that are not needed

apt-get install iproute2 ntpdate tcpdump telnet traceroute nfs-kernel-server nfs-common lrzsz tree openssl libssl-dev libpcre3 libpcre3-dev zlib1g-dev gcc openssh-server iotop unzip zip

1.4.1.11 Install docker

apt-get update

apt-get -y install apt-transport-https ca-certificates curl software-properties-common

curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

apt-get -y update && apt-get -y install docker-ce

docker info

1.4.1.12 Take a snapshot

1.4.2 Highly available load balancing

1.4.2.1 keepalived high availability

Install keepalived on both load balancer servers, haproxy1 and haproxy2

root@haproxy1:/# apt install keepalived -y

root@haproxy2:/# apt install keepalived -y

Copy the configuration template into /etc/keepalived/

root@haproxy1:/etc/keepalived# cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

root@haproxy2:/etc/keepalived# cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

Edit the configuration file on each server

keepalived configuration file on the primary server, haproxy1

# Primary configuration file

root@haproxy1:/etc/keepalived# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
    notification_email {
        acassen
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    state MASTER            # primary
    interface eth0          # NIC to bind to
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.121.188 dev eth0 label eth0:0
        192.168.121.189 dev eth0 label eth0:1
        192.168.121.190 dev eth0 label eth0:2
    }
}
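The primary and backup instances differ only in `state` and `priority`; `virtual_router_id`, the authentication block and the VIPs must match on both nodes. The sketch below makes that explicit by rendering the block from parameters. It is an illustration only: `render_vrrp_instance` is a hypothetical helper and it emits a single VIP for brevity.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a vrrp_instance block.
# Arguments: state (MASTER|BACKUP), priority, interface, VIP.
render_vrrp_instance() {
  local state="$1" priority="$2" iface="$3" vip="$4"
  cat <<EOF
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip} dev ${iface} label ${iface}:0
    }
}
EOF
}

render_vrrp_instance MASTER 100 eth0 192.168.121.188   # primary
render_vrrp_instance BACKUP 80 eth0 192.168.121.188    # backup, lower priority
```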

# Restart keepalived

root@haproxy1:/etc/keepalived# systemctl restart keepalived

# Enable start at boot

root@haproxy1:/etc/keepalived# systemctl enable keepalived

# Check whether the VIPs are bound

root@haproxy1:/etc/keepalived# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 00:0c:29:4b:b4:b6 brd ff:ff:ff:ff:ff:ff
    altname enp2s0
    inet 192.168.121.109/24 brd 192.168.121.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 192.168.121.188/32 scope global eth0:0
       valid_lft forever preferred_lft forever
    inet 192.168.121.189/32 scope global eth0:1
       valid_lft forever preferred_lft forever
    inet 192.168.121.190/32 scope global eth0:2
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe4b:b4b6/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:29:eb:58:5c brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever

keepalived configuration file on the backup server, haproxy2

# Backup configuration file

root@haproxy2:/etc/keepalived# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
    notification_email {
        acassen
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    state BACKUP            # backup
    interface eth0          # NIC to bind to
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority 80             # lower priority than the primary
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.121.188 dev eth0 label eth0:0
        192.168.121.189 dev eth0 label eth0:1
        192.168.121.190 dev eth0 label eth0:2
    }
}

# Restart keepalived

root@haproxy2:/etc/keepalived# systemctl restart keepalived

# 设置开机自启动

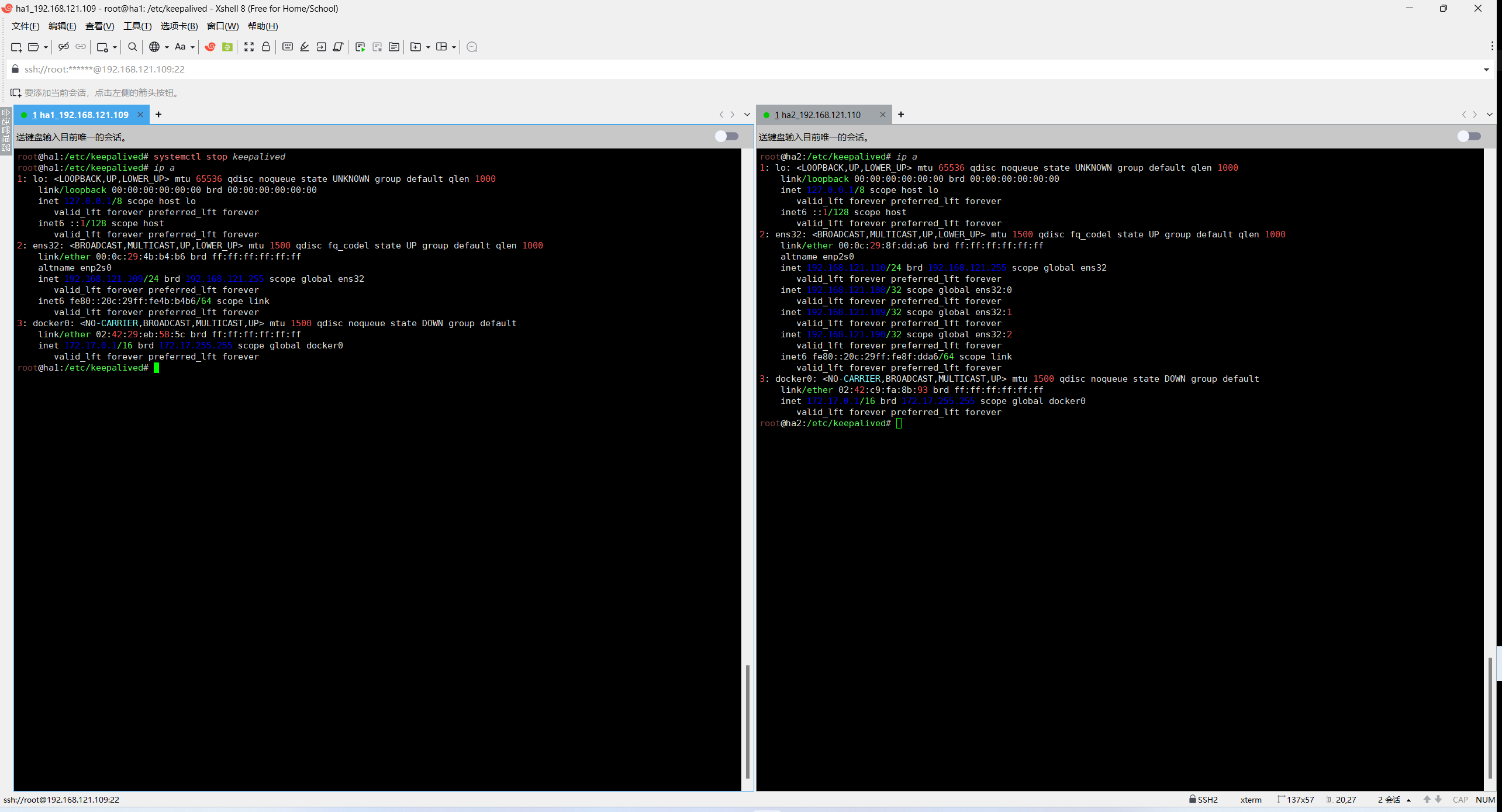

root@haproxy2:/etc/keepalived# systemctl enable keepalived测试vip漂移是否正常

# Stop keepalived on the primary server

root@haproxy1:/etc/keepalived# systemctl stop keepalived

root@haproxy1:/etc/keepalived# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 00:0c:29:4b:b4:b6 brd ff:ff:ff:ff:ff:ff
    altname enp2s0
    inet 192.168.121.109/24 brd 192.168.121.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe4b:b4b6/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:29:eb:58:5c brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever

# Check the state on the backup server

root@haproxy2:/etc/keepalived# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 00:0c:29:8f:dd:a6 brd ff:ff:ff:ff:ff:ff
    altname enp2s0
    inet 192.168.121.110/24 brd 192.168.121.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 192.168.121.188/32 scope global eth0:0
       valid_lft forever preferred_lft forever
    inet 192.168.121.189/32 scope global eth0:1
       valid_lft forever preferred_lft forever
    inet 192.168.121.190/32 scope global eth0:2
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe8f:dda6/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:c9:fa:8b:93 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever

The VIPs fail over correctly; once keepalived is started again on the primary server, they move back automatically.
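Reading the full `ip a` output by eye is error-prone when repeating this failover test. A minimal sketch of an automated check, assuming the one-line-per-address format printed by `ip -4 -o addr show`; `has_vip` is a hypothetical helper that reads that output on stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given VIP appears in `ip -o addr` output on stdin.
has_vip() {
  local vip="$1"
  # Fixed-string match on "inet <vip>/" so that 192.168.121.18 does not match 192.168.121.188
  grep -qF "inet ${vip}/"
}

# Demonstration on a captured line; on a real host pipe in: ip -4 -o addr show
sample='2: eth0    inet 192.168.121.188/32 scope global eth0:0'
if printf '%s\n' "$sample" | has_vip 192.168.121.188; then
  echo "VIP is bound on this host"
else
  echo "VIP is not bound on this host"
fi
```

Running the real check on haproxy1 and haproxy2 before and after `systemctl stop keepalived` should show the VIP on exactly one of them each time.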

1.4.2.2 haproxy load balancer

Install haproxy

root@haproxy1:/etc/keepalived# apt install haproxy -y

root@haproxy2:/etc/keepalived# apt install haproxy -y

Configure the listener

root@haproxy1:/# vim /etc/haproxy/haproxy.cfg

root@haproxy2:/# vim /etc/haproxy/haproxy.cfg

# Append the following to the end of the file

# Define a listen block named k8s-api-6443 that load-balances the Kubernetes API

listen k8s-api-6443
    # Bind to port 6443 on the VIP 192.168.121.188 to accept client requests
    bind 192.168.121.188:6443
    # TCP mode: the API traffic is passed through at layer 4
    mode tcp
    # Backend master1 at 192.168.121.101:6443
    # inter 3s: health check every 3 seconds
    # fall 3: mark the server down after 3 consecutive failed checks
    # rise 1: mark the server up after 1 successful check
    server master1 192.168.121.101:6443 check inter 3s fall 3 rise 1
    # Backend master2, same settings as master1
    server master2 192.168.121.102:6443 check inter 3s fall 3 rise 1
    # Backend master3, same settings as master1
    server master3 192.168.121.103:6443 check inter 3s fall 3 rise 1
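Since every backend line has the same shape, the listen block can be generated from the VIP and the list of master addresses, which helps when a master is added later. This is a sketch under assumptions: `render_k8s_listen` is a hypothetical helper, and the server names `master1`, `master2`, ... are derived from argument order.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the listen block for the API server.
# Arguments: VIP, then one or more master IP addresses.
render_k8s_listen() {
  local vip="$1" ip i=1
  shift
  printf 'listen k8s-api-6443\n'
  printf '    bind %s:6443\n' "$vip"
  printf '    mode tcp\n'
  for ip in "$@"; do
    printf '    server master%d %s:6443 check inter 3s fall 3 rise 1\n' "$i" "$ip"
    i=$((i + 1))
  done
}

render_k8s_listen 192.168.121.188 192.168.121.101 192.168.121.102 192.168.121.103
```

After appending the output to /etc/haproxy/haproxy.cfg, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the syntax before the service is restarted.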

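# Restart and enable start at boot

root@haproxy1:/etc/keepalived# systemctl restart haproxy && systemctl enable haproxy

root@haproxy2:/etc/keepalived# systemctl restart haproxy && systemctl enable haproxy

# It is normal for haproxy to fail to start on the backup server: a process cannot bind to an address the host does not own, and VIP 192.168.121.188 is held by the primary, not the backup. The fix is a setting in /etc/sysctl.conf

root@haproxy1:# vim /etc/sysctl.conf # configure the primary as well; otherwise haproxy on it cannot bind the VIP once the VIP has moved to haproxy2

root@haproxy2:# vim /etc/sysctl.conf

# Set to 1 if the key exists, add the line if it does not

net.ipv4.ip_nonlocal_bind = 1

root@haproxy2:/etc/keepalived# sysctl -p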

net.ipv4.ip_forward = 1

vm.max_map_count = 262144

kernel.pid_max = 4194303

fs.file-max = 1000000

net.ipv4.tcp_max_tw_buckets = 6000

net.netfilter.nf_conntrack_max = 2097152

net.ipv4.ip_nonlocal_bind = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

vm.swappiness = 0
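The "set it to 1 if present, add it if missing" edit to /etc/sysctl.conf can be made repeatable. A minimal sketch, assuming GNU sed as shipped with Ubuntu; `set_sysctl_key` is a hypothetical helper, and the demonstration runs against a scratch file rather than the real /etc/sysctl.conf.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set key = value in a sysctl-style file,
# replacing an existing (possibly commented-out) line or appending a new one.
set_sysctl_key() {
  local file="$1" key="$2" value="$3" key_re
  # Escape dots so the key is matched literally in the regular expressions
  key_re="$(printf '%s' "$key" | sed 's/\./\\./g')"
  if grep -qE "^[#[:space:]]*${key_re}[[:space:]]*=" "$file"; then
    sed -i -E "s|^[#[:space:]]*${key_re}[[:space:]]*=.*|${key} = ${value}|" "$file"
  else
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}

# Demonstration on a scratch copy
conf="$(mktemp)"
printf 'net.ipv4.ip_forward = 1\n#net.ipv4.ip_nonlocal_bind = 0\n' > "$conf"
set_sysctl_key "$conf" net.ipv4.ip_nonlocal_bind 1
cat "$conf"
```

On the load balancers the same call would target /etc/sysctl.conf, followed by `sysctl -p`.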

root@haproxy2:/etc/keepalived# systemctl restart haproxy

root@haproxy2:/etc/keepalived# systemctl status haproxy

● haproxy.service - HAProxy Load Balancer
     Loaded: loaded (/lib/systemd/system/haproxy.service; enabled; vendor preset: enabled)
     Active: active (running) since Sat 2025-09-20 16:04:56 CST; 4s ago
       Docs: man:haproxy(1)
             file:/usr/share/doc/haproxy/configuration.txt.gz
    Process: 3030 ExecStartPre=/usr/sbin/haproxy -Ws -f $CONFIG -c -q $EXTRAOPTS (code=exited, status=0/SUCCESS)
   Main PID: 3032 (haproxy)
      Tasks: 2 (limit: 2177)
     Memory: 71.2M
        CPU: 99ms
     CGroup: /system.slice/haproxy.service
             ├─3032 /usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -S /run/haproxy-master.sock
             └─3034 /usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -S /run/haproxy-master.sock

Sep 20 16:04:56 ha2 systemd[1]: Starting HAProxy Load Balancer...

Sep 20 16:04:56 ha2 haproxy[3032]: [WARNING] (3032) : parsing [/etc/haproxy/haproxy.cfg:23] : 'option httplog' not usable with proxy 'k>

Sep 20 16:04:56 ha2 haproxy[3032]: [NOTICE] (3032) : New worker #1 (3034) forked

Sep 20 16:04:56 ha2 systemd[1]: Started HAProxy Load Balancer.

Sep 20 16:04:56 ha2 haproxy[3034]: [WARNING] (3034) : Server k8s-api-6443/master1 is DOWN, reason: Layer4 connection problem, info: "Co>

Sep 20 16:04:57 ha2 haproxy[3034]: [WARNING] (3034) : Server k8s-api-6443/master2 is DOWN, reason: Layer4 connection problem, info: "Co>

Sep 20 16:04:58 ha2 haproxy[3034]: [WARNING] (3034) : Server k8s-api-6443/master3 is DOWN, reason: Layer4 connection problem, info: "Co>

Sep 20 16:04:58 ha2 haproxy[3034]: [NOTICE] (3034) : haproxy version is 2.4.24-0ubuntu0.22.04.2

Sep 20 16:04:58 ha2 haproxy[3034]: [NOTICE] (3034) : path to executable is /usr/sbin/haproxy

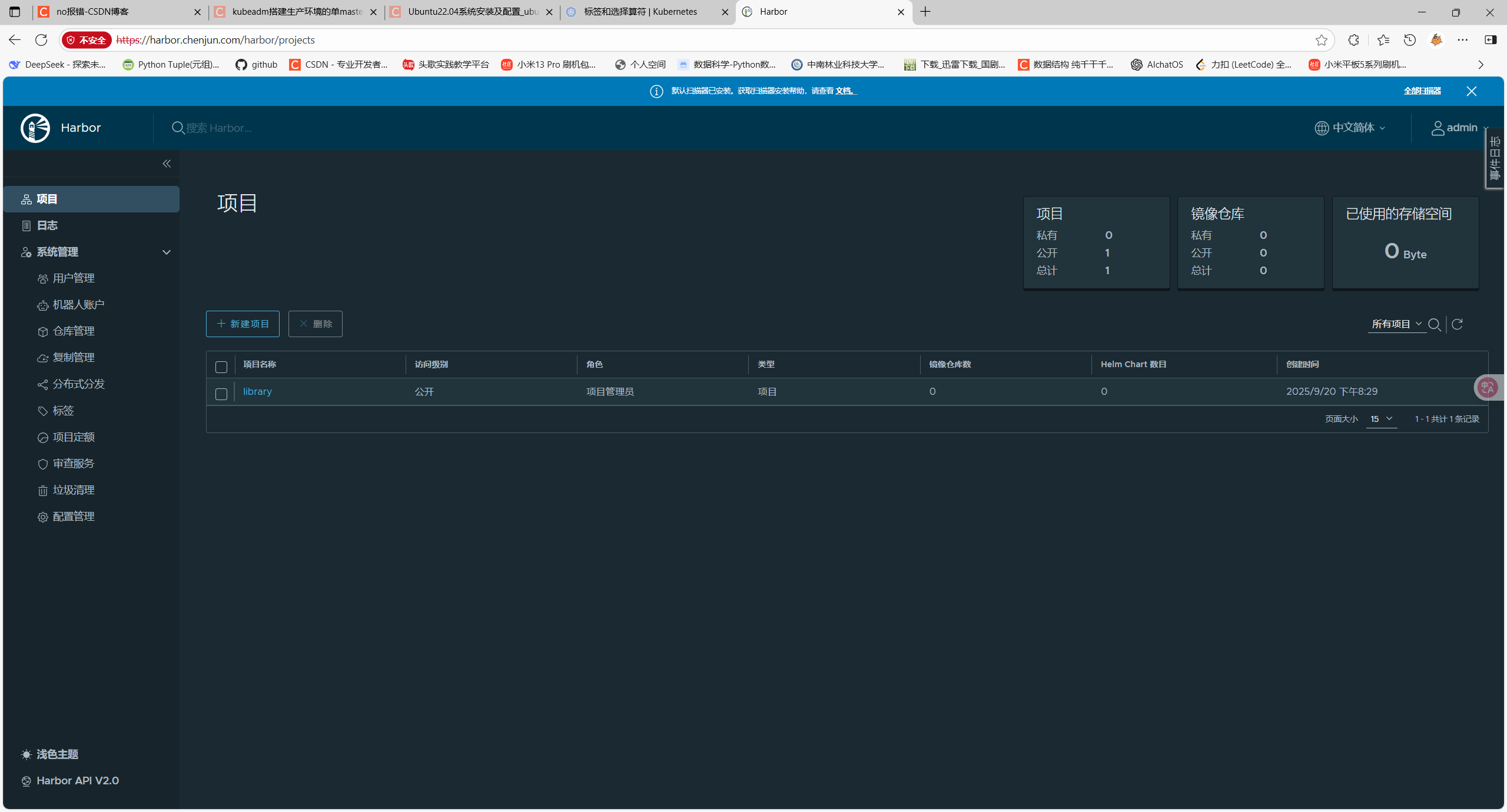

Sep 20 16:04:58 ha2 haproxy[3034]: [ALERT] (3034) : proxy 'k8s-api-6443' has no server available!1.4.3 Harbor安装

Upload the installer package to both harbor1 and harbor2

root@harbor1:/apps# tar -xvf harbor-offline-installer-v2.4.2.tgz

root@harbor1:/apps# cd harbor

root@harbor1:/apps/harbor# cp harbor.yml.tmpl harbor.yml

root@harbor1:/apps/harbor# vim harbor.yml

# Set hostname in the configuration file to this host's domain name (it must match the certificate CN generated below)

hostname: harbor1.chenjun.com

# Generate the SSL certificate

# Generate the private key

root@harbor1:/apps/harbor# mkdir certs

root@harbor1:/apps/harbor# cd certs/

root@harbor1:/apps/harbor/certs# openssl genrsa -out ./harbor-ca.key

root@harbor1:/apps/harbor/certs# openssl req -x509 -new -nodes -key ./harbor-ca.key -subj "/CN=harbor1.chenjun.com" -days 7120 -out ./harbor-ca.crt

root@harbor1:/apps/harbor/certs# ls

harbor-ca.crt harbor-ca.key
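The certificate above identifies the host only through its CN. Clients built with Go 1.15 or later, which includes recent Docker releases, ignore the CN and require a subjectAltName, so a variant with a SAN may be needed if `docker login` reports a certificate error. The following is a sketch under assumptions: `make_harbor_cert` is a hypothetical helper, the 2048-bit key size is chosen here, and `-addext` needs OpenSSL 1.1.1 or newer (Ubuntu 22.04 ships 3.0).

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a key and a self-signed certificate whose
# subjectAltName matches the Harbor hostname.
# Arguments: hostname, output directory.
make_harbor_cert() {
  local host="$1" dir="$2"
  mkdir -p "$dir"
  openssl genrsa -out "$dir/harbor-ca.key" 2048
  openssl req -x509 -new -nodes -key "$dir/harbor-ca.key" \
    -subj "/CN=${host}" \
    -addext "subjectAltName=DNS:${host}" \
    -days 7120 -out "$dir/harbor-ca.crt"
}

# Demonstration in a scratch directory; on harbor1 the directory would be /apps/harbor/certs
out_dir="$(mktemp -d)"
make_harbor_cert harbor1.chenjun.com "$out_dir"
openssl x509 -in "$out_dir/harbor-ca.crt" -noout -subject
```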

root@harbor1:/apps/harbor/certs# cd ..

root@harbor1:/apps/harbor# vim harbor.yml

# Set the certificate and private key paths

https:
  port: 443
  certificate: /apps/harbor/certs/harbor-ca.crt
  private_key: /apps/harbor/certs/harbor-ca.key

# Change the Harbor admin password

harbor_admin_password: 123456

Install Harbor

root@harbor1:/apps/harbor# ./install.sh --with-trivy --with-chartmuseum

Once the installation finishes, the web UI can be opened in a browser at the domain configured earlier, harbor1.chenjun.com

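1.5 k8s cluster deployment

1.5.1 Deploy ansible on master1

root@master1:/# apt install ansible -y

1.5.2 Configure passwordless SSH login

From master1, distribute the SSH public key to the three master nodes, the three etcd nodes and the three worker nodes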
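`ssh-copy-id` needs an existing key pair, so a key has to be generated on master1 first if there is none. The sketch below shows that step plus a loop equivalent to the individual commands that follow. The helper names and the `SSH_COPY_ID` override, which exists only to allow a dry run, are assumptions for illustration.

```shell
#!/usr/bin/env bash
# The nine target nodes from the planning table: masters, etcd, workers.
NODES="192.168.121.101 192.168.121.102 192.168.121.103
192.168.121.106 192.168.121.107 192.168.121.108
192.168.121.111 192.168.121.112 192.168.121.113"

# Hypothetical helper: create an RSA key pair without a passphrase if none exists.
ensure_key() {
  [ -f "$HOME/.ssh/id_rsa" ] || ssh-keygen -t rsa -b 4096 -N '' -f "$HOME/.ssh/id_rsa"
}

# Hypothetical helper: copy the public key to every node.
# SSH_COPY_ID can be overridden (for example with echo) for a dry run.
distribute_keys() {
  local ip
  for ip in $NODES; do
    ${SSH_COPY_ID:-ssh-copy-id} "root@${ip}"
  done
}

# Dry run: print the targets instead of contacting them.
SSH_COPY_ID=echo distribute_keys
```

For the real run on master1, call `ensure_key` and then `distribute_keys` without the override; each node prompts once for the root password.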

root@master1:/# ssh-copy-id 192.168.121.101

root@master1:/# ssh-copy-id 192.168.121.102

root@master1:/# ssh-copy-id 192.168.121.103

root@master1:/# ssh-copy-id 192.168.121.106

root@master1:/# ssh-copy-id 192.168.121.107

root@master1:/# ssh-copy-id 192.168.121.108

root@master1:/# ssh-copy-id 192.168.121.111

root@master1:/# ssh-copy-id 192.168.121.112

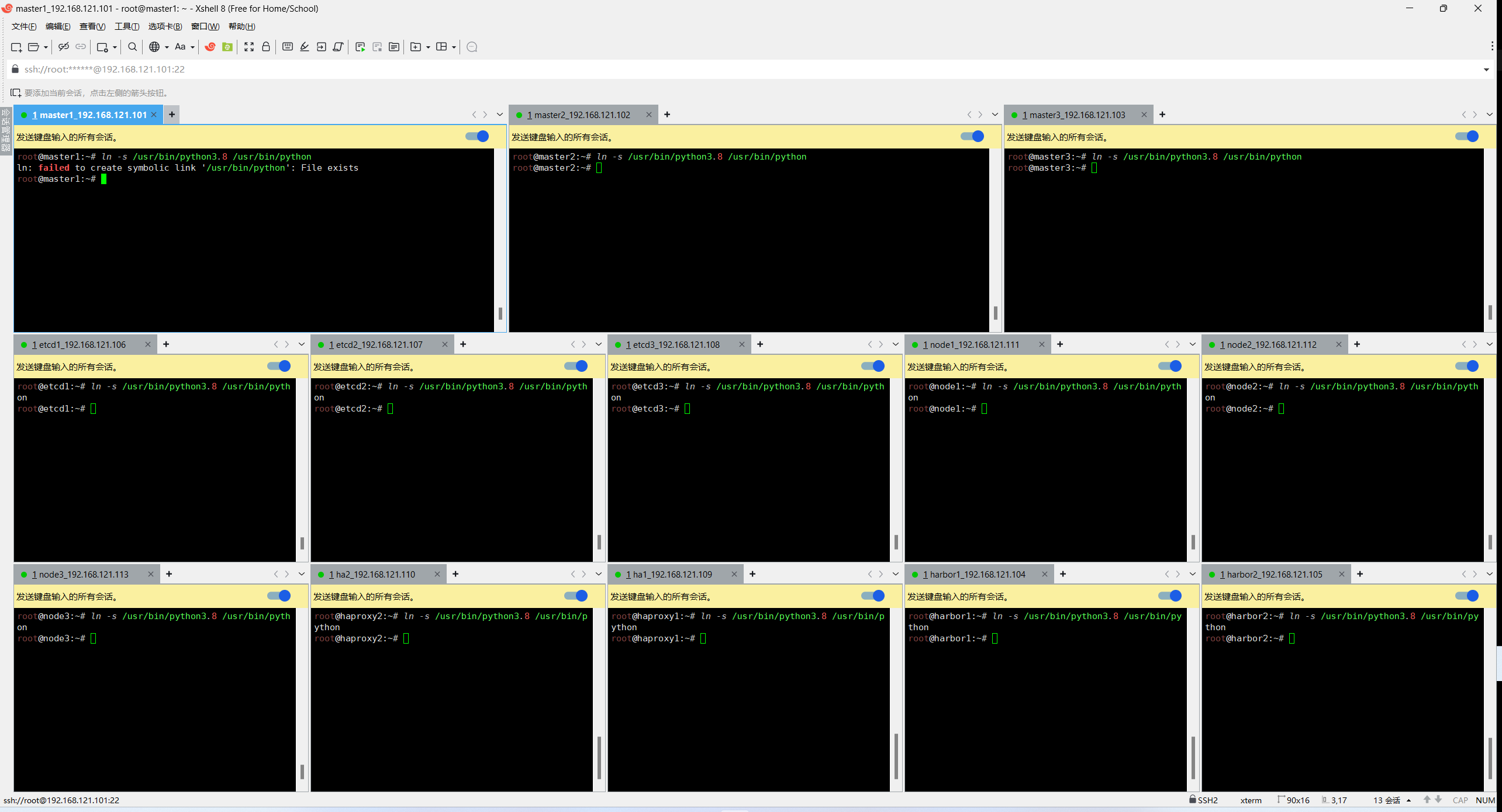

root@master1:/# ssh-copy-id 192.168.121.1131.5.3 配置python3的软连接

# 使用xshell发送命令到所有会话

ln -s /usr/bin/python3.8 /usr/bin/python

1.5.4 在部署节点编排k8s安装

下载项目源码、二进制及离线镜像

root@master1:/# export release=3.2.0

root@master1:/# wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

root@master1:/# chmod +x ./ezdown

root@master1:/# vim ezdown

# 修改docker版本19.03.15

root@master1:/# DOCKER_VER=19.03.15

# 执行(需要解决代理问题,访问docker仓库拉取镜像)

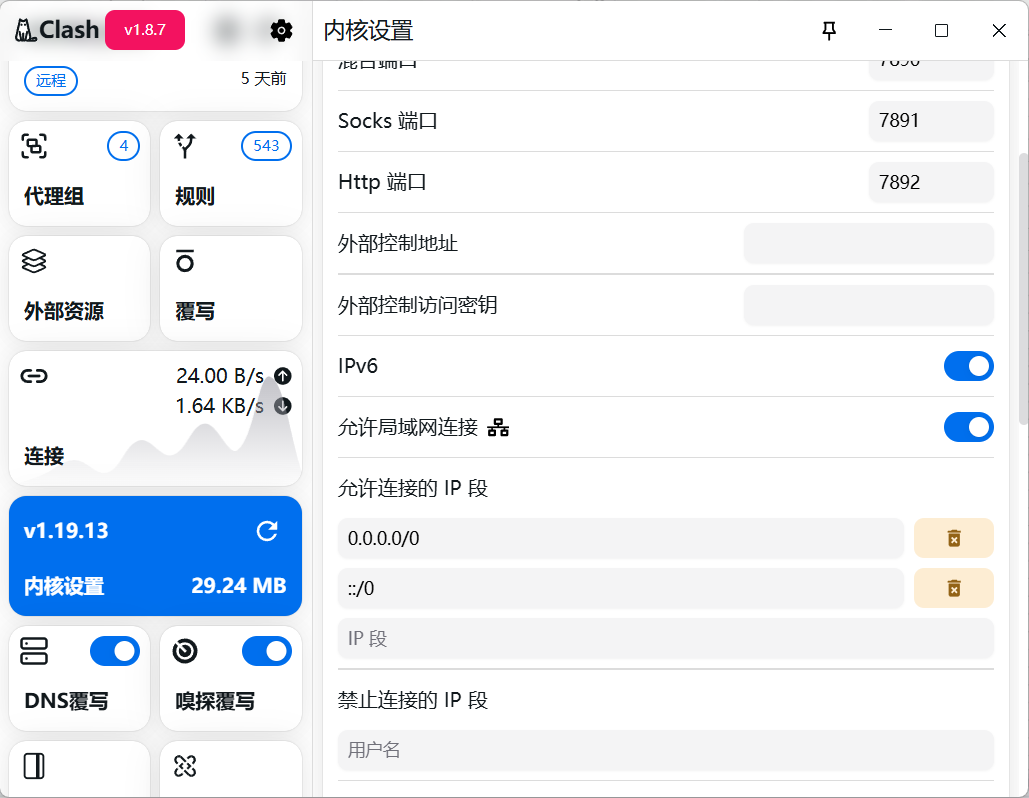

root@master1:/# ./ezdown -D访问不了国外网站,代理解决方案

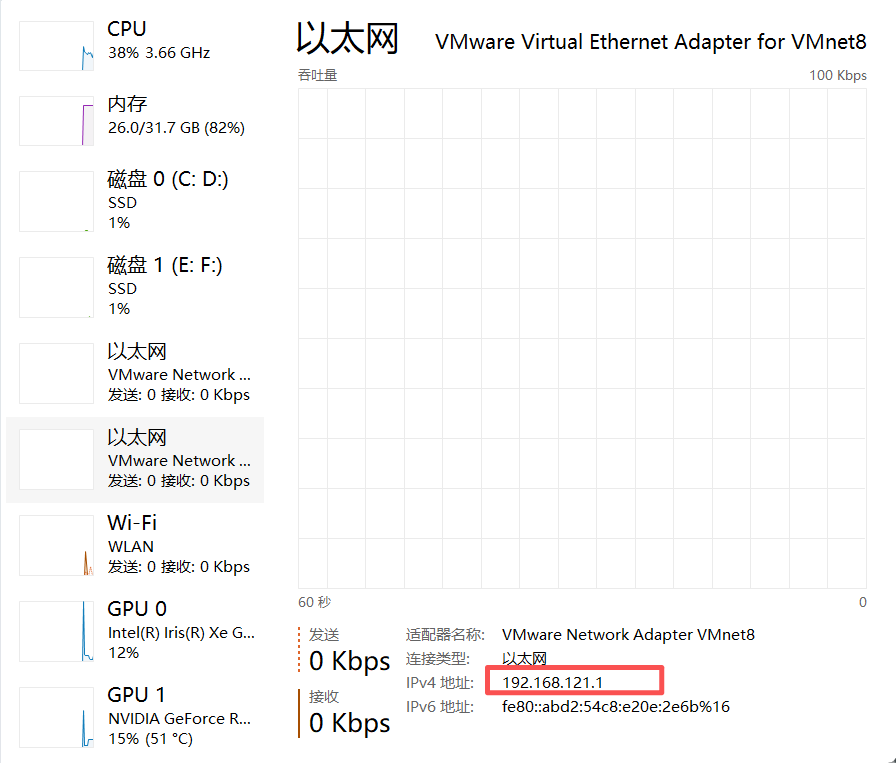

windows主机需要安装代理软件如claxxx,并开启代理,允许局域网可连接

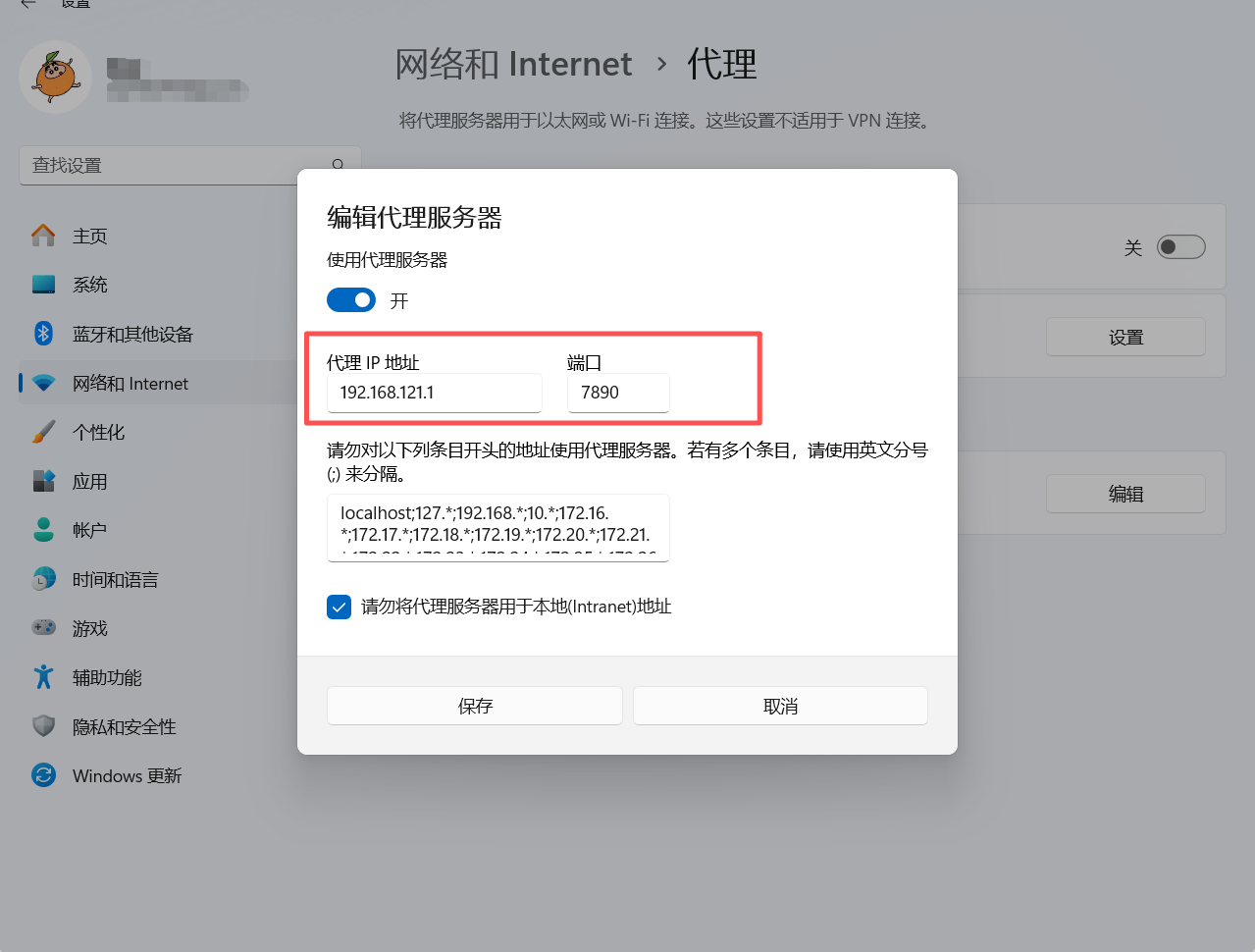

2.windows设置->网络和internet->代理->手动设置代理使用代理服务器->输入代理ip地址和端口

代理ip地址为vmnet8的IP地址可在任务管理器查看,端口则是代理软件内的端口一般是7890

linux写入环境变量

vim /etc/profile

export http_proxy="http://代理服务器IP:端口"

export https_proxy="http://代理服务器IP:端口"

source /etc/profiledocker 使用代理

Newer versions (Docker Engine 23.0 and later), in /etc/docker/daemon.json

{
  "proxies": {
    "http-proxy": "http://127.0.0.1:7890",
    "https-proxy": "http://127.0.0.1:7890"
  }
}

Older versions

# Create the drop-in directory (if it does not exist)

sudo mkdir -p /etc/systemd/system/docker.service.d

# Create the proxy configuration file

sudo vim /etc/systemd/system/docker.service.d/http-proxy.conf

[Service]
Environment="HTTP_PROXY=http://127.0.0.1:7890"
Environment="HTTPS_PROXY=http://127.0.0.1:7890"
# Addresses that bypass the proxy (optional)
Environment="NO_PROXY=192.168.121.104,192.168.121.105,harbor1.chenjun.com,localhost,harbor2.chenjun.com,127.0.0.1"
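The NO_PROXY value is a comma-separated list, and a typo in it is easy to miss. A small sketch that builds the drop-in from a proxy URL and a list of bypass hosts; `render_docker_proxy_dropin` is a hypothetical helper, and the addresses are the ones used in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the [Service] drop-in for the Docker daemon.
# Arguments: proxy URL, then any number of hosts that bypass the proxy.
render_docker_proxy_dropin() {
  local proxy="$1" no_proxy
  shift
  # Join the remaining arguments with commas
  no_proxy="$(IFS=,; echo "$*")"
  cat <<EOF
[Service]
Environment="HTTP_PROXY=${proxy}"
Environment="HTTPS_PROXY=${proxy}"
Environment="NO_PROXY=${no_proxy}"
EOF
}

render_docker_proxy_dropin http://127.0.0.1:7890 \
  localhost 127.0.0.1 192.168.121.104 192.168.121.105 \
  harbor1.chenjun.com harbor2.chenjun.com
```

The output would be written to /etc/systemd/system/docker.service.d/http-proxy.conf; after a daemon reload and restart, `systemctl show --property=Environment docker` displays the values the daemon actually received.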

# Reload the systemd configuration

sudo systemctl daemon-reload

# Restart the Docker service

sudo systemctl restart docker

# Check that it started

sudo systemctl status docker

1.5.5 Create the cluster configuration instance

root@master1:~# cd /etc/kubeasz/

# Create a cluster named k8s-01; this copies two files, example/config.yml and example/hosts.multi-node

root@master1:/etc/kubeasz# ./ezctl new k8s-01

2025-09-21 15:27:49 DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-01

2025-09-21 15:27:49 DEBUG set versions

2025-09-21 15:27:49 DEBUG disable registry mirrors

2025-09-21 15:27:49 DEBUG cluster k8s-01: files successfully created.

2025-09-21 15:27:49 INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-01/hosts'

2025-09-21 15:27:49 INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-01/config.yml'

root@master1:/etc/kubeasz# cd clusters/k8s-01

root@master1:/etc/kubeasz/clusters/k8s-01# ls

config.yml hosts

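# Edit the hosts file and fill in the cluster's real IP addresses

[etcd]

192.168.121.106

192.168.121.107

192.168.121.108

# Two masters for now; the third is added later when scaling out

# master node(s)

[kube_master]

192.168.121.101

192.168.121.102

# Two worker nodes for now; the third is added later when scaling out

# work node(s)

[kube_node]

192.168.121.111

192.168.121.112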

# Enable the external load balancers (the two haproxy servers from the planning table)

[ex_lb]

192.168.121.110 LB_ROLE=backup EX_APISERVER_VIP=192.168.121.188 EX_APISERVER_PORT=6443

192.168.121.109 LB_ROLE=master EX_APISERVER_VIP=192.168.121.188 EX_APISERVER_PORT=6443

# Set the container runtime to docker

# Cluster container-runtime supported: docker, containerd

CONTAINER_RUNTIME="docker"

# Service CIDR: change if needed

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="10.100.0.0/16"

# Pod CIDR: change if needed

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="10.200.0.0/16"

# [docker] trusted HTTP registries: add the IPs of the two Harbor servers

INSECURE_REG: '["127.0.0.1/8","192.168.121.104","192.168.121.105"]'

# Change the NodePort range to 30000-65000

# NodePort Range

NODE_PORT_RANGE="30000-65000"

# The cluster DNS domain may be changed or left as is

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="chenjun.local"

# Binary path for api-server and the other components

bin_dir="/usr/local/bin"

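# Edit the k8s configuration

root@master1:/etc/kubeasz/clusters/k8s-01# vim config.yml

# Change the access entry point

# Certificate configuration for the k8s master nodes; more IPs and domain names can be added (for example a public IP and domain)

MASTER_CERT_HOSTS:
  - "192.168.121.188" # VIP

# [.] Enable the container registry mirror

ENABLE_MIRROR_REGISTRY: true

# Change the maximum number of pods

# Maximum number of pods per node

MAX_PODS: 300

# coredns automatic installation: set to "no", it is installed manually later

dns_install: "no"

# metrics server automatic installation: set to "no", it is installed manually later

metricsserver_install: "no"

# dashboard automatic installation: set to "no", it is installed manually later

dashboard_install: "no"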
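When several clusters are created, the scalar edits to config.yml can be applied with a script instead of an editor. The sketch below handles only top-level `key: value` lines, not lists such as MASTER_CERT_HOSTS, and assumes GNU sed and values without `|` or `&`. `set_config_value` is a hypothetical helper, and the starting values in the scratch file are made up for the demonstration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the value of a top-level "key: value" line in place.
set_config_value() {
  local file="$1" key="$2" value="$3"
  sed -i -E "s|^(${key}:[[:space:]]*).*|\1${value}|" "$file"
}

# Demonstration on a scratch file (the initial values are illustrative)
cfg="$(mktemp)"
printf 'MAX_PODS: 110\ndns_install: "yes"\ndashboard_install: "yes"\n' > "$cfg"
set_config_value "$cfg" MAX_PODS 300
set_config_value "$cfg" dns_install '"no"'
set_config_value "$cfg" dashboard_install '"no"'
cat "$cfg"
```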

# Skip initialization of the load balancers

root@master1:/etc/kubeasz# vim /etc/kubeasz/playbooks/01.prepare.yml

- hosts:
  - kube_master
  - kube_node
  - etcd
  #- ex_lb    # ex_lb commented out
  - chrony
  roles:
  - { role: os-harden, when: "OS_HARDEN|bool" }
  - { role: chrony, when: "groups['chrony']|length > 0" }

# to create CA, kubeconfig, kube-proxy.kubeconfig etc.

- hosts: localhost
  roles:
  - deploy

# prepare tasks for all nodes

- hosts:
  - kube_master
  - kube_node
  - etcd
  roles:
  - prepare

Step 1: initialization

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 01

Step 2: install the etcd cluster

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 02

Step 3: install the docker runtime

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 03

Step 4: deploy the k8s master nodes

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 04

Step 5: install the worker nodes

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 05

Step 6: install the network plugin

root@master1:/etc/kubeasz# ./ezctl setup k8s-01 06

Test pushing an image to Harbor

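# The directory name must match the Harbor hostname

root@master1:/etc/kubeasz# mkdir /etc/docker/certs.d/harbor1.chenjun.com -p

# Copy the Harbor certificate (not the private key) to the master server

root@harbor1:/# scp /apps/harbor/certs/harbor-ca.crt master1:/etc/docker/certs.d/harbor1.chenjun.com/

# Add name resolution for the Harbor server on master1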

root@master1:/etc/kubeasz# vim /etc/hosts

192.168.121.104 harbor1.chenjun.com

root@master1:/etc/kubeasz# docker login harbor1.chenjun.com

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

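One problem came up while logging in to the Harbor registry

root@master1:/etc/kubeasz# curl -k https://harbor1.chenjun.com/v2/

The deployment could not be found on Vercel.

DEPLOYMENT_NOT_FOUND

hkg1::f4qpb-1758451462099-e76ed2a7000b

Requests for harbor1.chenjun.com were being sent to a Vercel server and kept failing with 404 and similar errors. The cause was the proxy: the request left through the proxy instead of reaching the local Harbor host.

Adding the addresses that must bypass the proxy to the docker proxy configuration file solved it

vim /etc/systemd/system/docker.service.d/http-proxy.conf

# Addresses that bypass the proxy
Environment="NO_PROXY=192.168.121.104,192.168.121.105,harbor1.chenjun.com,localhost,harbor2.chenjun.com,127.0.0.1"

Upload an image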

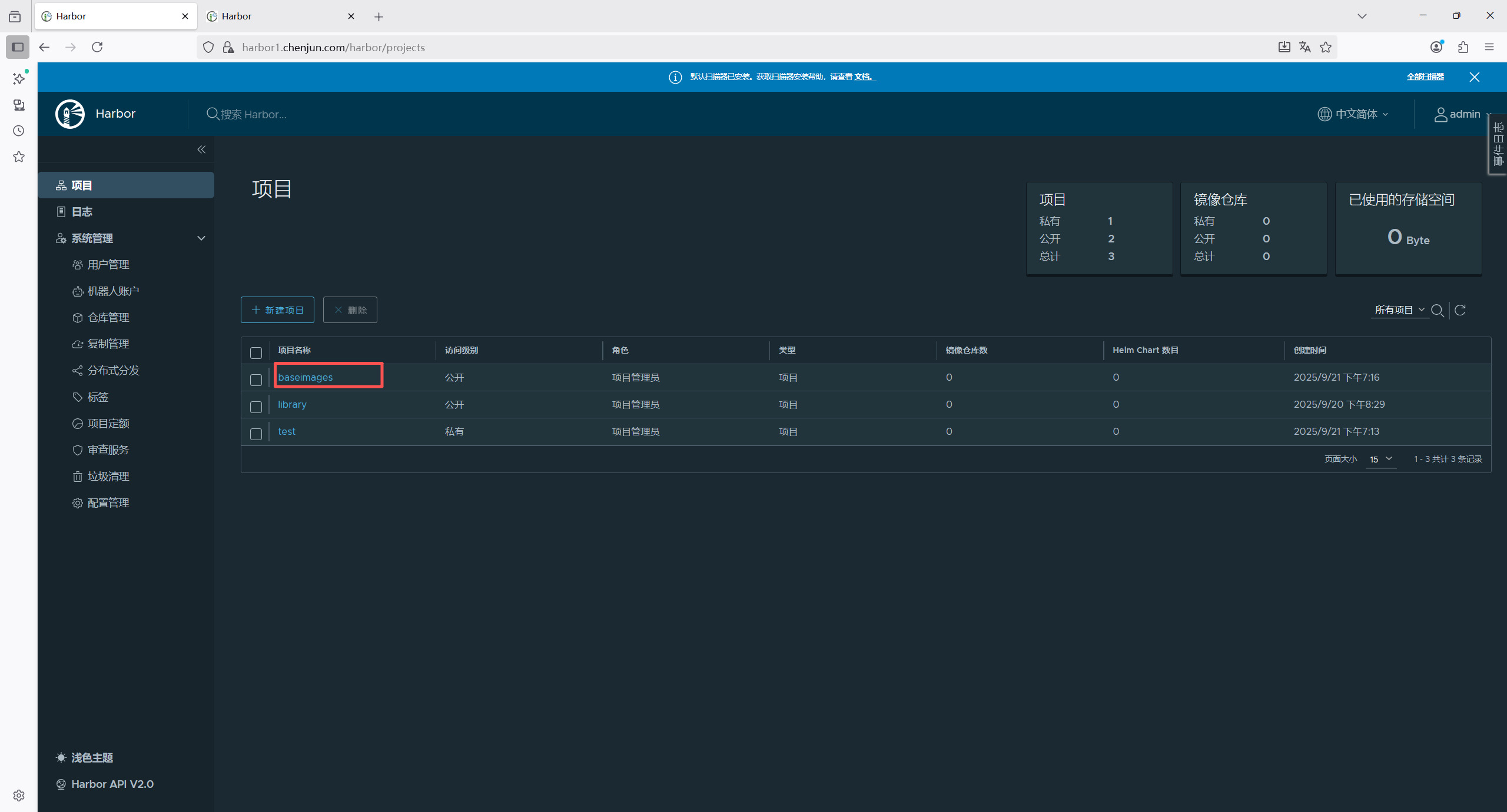

# A project (baseimages in this example) must first be created in the Harbor web UI

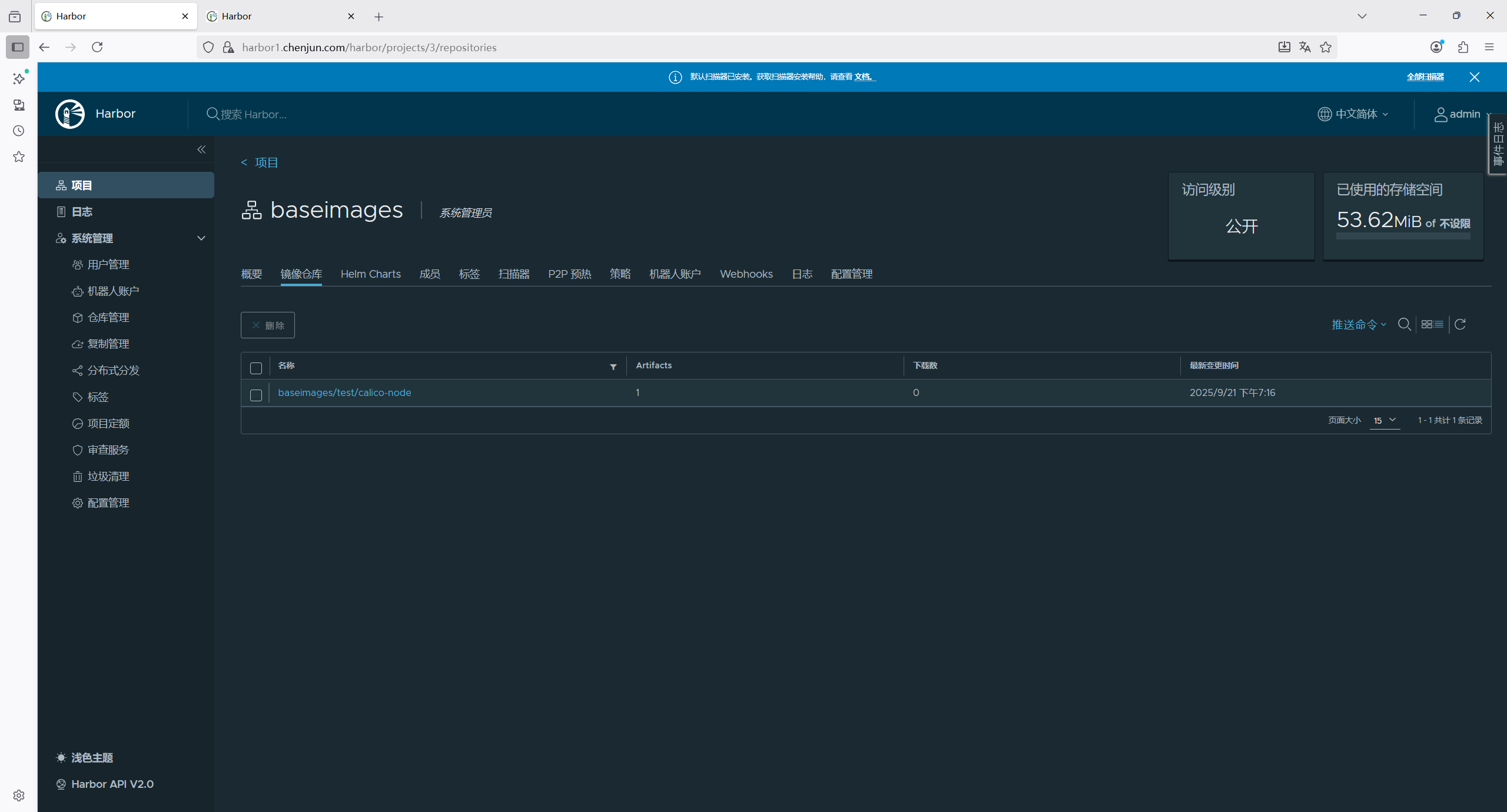

root@master1:/etc/kubeasz# docker tag calico/node:v3.19.3 harbor1.chenjun.com/baseimages/test/calico-node:v3.19.3

root@master1:/etc/kubeasz# docker push harbor1.chenjun.com/baseimages/test/calico-node:v3.19.3

The push refers to repository [harbor1.chenjun.com/baseimages/test/calico-node]

5c8b8d7a47a1: Pushed

803fb24398e2: Pushed

v3.19.3: digest: sha256:d84a6c139c86fabc6414267ee4c87879d42d15c2e0eaf96030c91a59e27e7a6f size: 737
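The tag used above follows a simple mapping: registry, then project path, then the source image name with `/` replaced by `-`. A sketch of that mapping, useful when many images have to be retagged; `harbor_ref` is a hypothetical helper and the naming rule is only the one observed in this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the Harbor reference for a source image.
# Arguments: registry host, project path, source image (name:tag).
harbor_ref() {
  local registry="$1" project="$2" image="$3" flat
  # calico/node:v3.19.3 becomes calico-node:v3.19.3
  flat="$(printf '%s' "$image" | tr '/' '-')"
  printf '%s/%s/%s\n' "$registry" "$project" "$flat"
}

harbor_ref harbor1.chenjun.com baseimages/test calico/node:v3.19.3
# prints harbor1.chenjun.com/baseimages/test/calico-node:v3.19.3
```

The result can be passed to `docker tag` and `docker push` in a loop over the images to mirror.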

1.5.6 Test network connectivity

root@master1:/etc/kubeasz# calicoctl node status

Calico process is running.

IPv4 BGP status

+-----------------+-------------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+-----------------+-------------------+-------+----------+-------------+

| 192.168.121.102 | node-to-node mesh | up | 11:01:48 | Established |

| 192.168.121.111 | node-to-node mesh | up | 11:00:12 | Established |

| 192.168.121.112 | node-to-node mesh | up | 11:00:14 | Established |

+-----------------+-------------------+-------+----------+-------------+

root@master1:/etc/kubeasz# kubectl run net-test1 --image=centos:7.9.2009 sleep 3600000

pod/net-test1 created

root@master1:/etc/kubeasz# kubectl run net-test2 --image=centos:7.9.2009 sleep 3600000

pod/net-test2 created

root@master1:/etc/kubeasz# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test1 1/1 Running 0 3m2s 10.200.166.129 192.168.121.111 <none> <none>

net-test2 1/1 Running 0 54s 10.200.166.130 192.168.121.111 <none> <none>

# Enter the container and ping the pod IPs and an external address

root@master1:/etc/kubeasz# kubectl exec -it net-test1 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

[root@net-test1 /]# ping 10.200.166.129

PING 10.200.166.129 (10.200.166.129) 56(84) bytes of data.

64 bytes from 10.200.166.129: icmp_seq=1 ttl=64 time=0.044 ms

64 bytes from 10.200.166.129: icmp_seq=2 ttl=64 time=0.251 ms

^C

--- 10.200.166.129 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1019ms

rtt min/avg/max/mdev = 0.044/0.147/0.251/0.104 ms

[root@net-test1 /]# ping 10.200.166.130

PING 10.200.166.130 (10.200.166.130) 56(84) bytes of data.

64 bytes from 10.200.166.130: icmp_seq=1 ttl=63 time=6.77 ms

64 bytes from 10.200.166.130: icmp_seq=2 ttl=63 time=0.056 ms

64 bytes from 10.200.166.130: icmp_seq=3 ttl=63 time=0.056 ms

^C

--- 10.200.166.130 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2003ms

rtt min/avg/max/mdev = 0.056/2.296/6.778/3.169 ms

[root@net-test1 /]# ping 223.5.5.5

PING 223.5.5.5 (223.5.5.5) 56(84) bytes of data.

64 bytes from 223.5.5.5: icmp_seq=1 ttl=127 time=32.7 ms

64 bytes from 223.5.5.5: icmp_seq=2 ttl=127 time=26.2 ms

^C

--- 223.5.5.5 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 26.299/29.512/32.725/3.213 ms
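The same checks can be run without an interactive shell, using the `kubectl exec POD -- COMMAND` form that the deprecation warning above recommends. This is a minimal sketch: the helper names and the `KUBECTL` override, which exists only for dry runs, are assumptions; the pod name and addresses are the ones from this test.

```shell
#!/usr/bin/env bash
# Hypothetical helper: ping one target from inside a pod.
ping_from_pod() {
  local pod="$1" target="$2"
  ${KUBECTL:-kubectl} exec "$pod" -- ping -c 2 "$target"
}

# Hypothetical helper: ping several targets from one pod and report each result.
check_pod_network() {
  local pod="$1" target
  shift
  for target in "$@"; do
    if ping_from_pod "$pod" "$target" >/dev/null; then
      echo "ok   ${target}"
    else
      echo "FAIL ${target}"
    fi
  done
}

# On master1 (requires the running cluster):
#   check_pod_network net-test1 10.200.166.130 223.5.5.5
```

Both test pods in the output above landed on node 192.168.121.111, so this run exercises only same-node pod traffic; a pod scheduled on 192.168.121.112 would be needed to confirm cross-node connectivity.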