Linux 系统下 ZONE 区域的划分

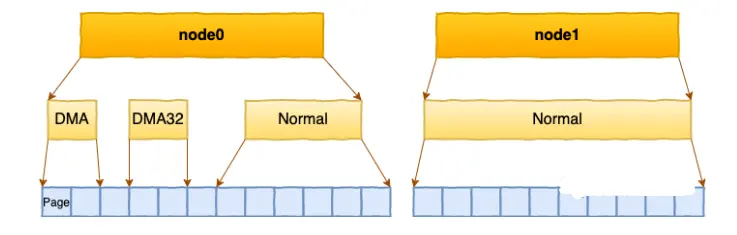

每个 node 又会划分成若干的 zone(区域) 。zone 表示内存中的一块范围

-

ZONE_DMA:地址段最低的一块内存区域,ISA(Industry Standard Architecture)设备DMA访问

-

ZONE_DMA32:该Zone用于支持32-bits地址总线的DMA设备,只在64-bits系统里才有效

-

ZONE_NORMAL:在X86-64架构下,DMA和DMA32之外的内存全部在NORMAL的Zone里管理

在每个zone下,都包含了许许多多个 Page(页面), 在linux下一个Page的大小一般是 4 KB。

在你的机器上,你可以使用通过 zoneinfo 查看到你机器上 zone 的划分,也可以看到每个 zone 下所管理的页面有多少个。

# cat /proc/zoneinfo Node 0, zone DMApages free 3973managed 3973 Node 0, zone DMA32pages free 390390managed 427659 Node 0, zone Normalpages free 15021616managed 15990165 Node 1, zone Normalpages free 16012823managed 16514393

每个页面大小是4K,很容易可以计算出每个 zone 的大小。比如对于上面 Node1 的 Normal, 16514393 * 4K = 66 GB。

基于伙伴系统管理空闲页面

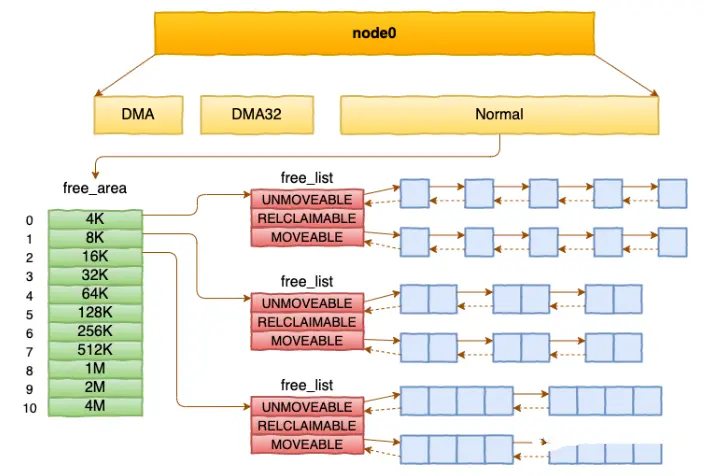

每个 zone 下面都有如此之多的页面,Linux使用伙伴系统对这些页面进行高效的管理。在内核中,表示 zone 的数据结构是 struct zone。其下面的一个数组 free_area 管理了绝大部分可用的空闲页面。这个数组就是伙伴系统实现的重要数据结构。

Zone

struct zone {

834 /* Read-mostly fields */

835

836 /* zone watermarks, access with *_wmark_pages(zone) macros */

837 unsigned long _watermark[NR_WMARK];

838 unsigned long watermark_boost;

839

840 unsigned long nr_reserved_highatomic;

841

842 /*

843 * We don't know if the memory that we're going to allocate will be

844 * freeable or/and it will be released eventually, so to avoid totally

845 * wasting several GB of ram we must reserve some of the lower zone

846 * memory (otherwise we risk to run OOM on the lower zones despite

847 * there being tons of freeable ram on the higher zones). This array is

848 * recalculated at runtime if the sysctl_lowmem_reserve_ratio sysctl

849 * changes.

850 */

851 long lowmem_reserve[MAX_NR_ZONES];

852

853 #ifdef CONFIG_NUMA

854 int node;

855 #endif

856 struct pglist_data *zone_pgdat;

857 struct per_cpu_pages __percpu *per_cpu_pageset;

858 struct per_cpu_zonestat __percpu *per_cpu_zonestats;

859 /*

860 * the high and batch values are copied to individual pagesets for

861 * faster access

862 */

863 int pageset_high;

864 int pageset_batch;

865

866 #ifndef CONFIG_SPARSEMEM

867 /*

868 * Flags for a pageblock_nr_pages block. See pageblock-flags.h.

869 * In SPARSEMEM, this map is stored in struct mem_section

870 */

871 unsigned long *pageblock_flags;

872 #endif /* CONFIG_SPARSEMEM */

873

874 /* zone_start_pfn == zone_start_paddr >> PAGE_SHIFT */

875 unsigned long zone_start_pfn;

876

877 /*

878 * spanned_pages is the total pages spanned by the zone, including

879 * holes, which is calculated as:

880 * spanned_pages = zone_end_pfn - zone_start_pfn;

881 *

882 * present_pages is physical pages existing within the zone, which

883 * is calculated as:

884 * present_pages = spanned_pages - absent_pages(pages in holes);

885 *

886 * present_early_pages is present pages existing within the zone

887 * located on memory available since early boot, excluding hotplugged

888 * memory.

889 *

890 * managed_pages is present pages managed by the buddy system, which

891 * is calculated as (reserved_pages includes pages allocated by the

892 * bootmem allocator):

893 * managed_pages = present_pages - reserved_pages;

894 *

895 * cma pages is present pages that are assigned for CMA use

896 * (MIGRATE_CMA).

897 *

898 * So present_pages may be used by memory hotplug or memory power

899 * management logic to figure out unmanaged pages by checking

900 * (present_pages - managed_pages). And managed_pages should be used

901 * by page allocator and vm scanner to calculate all kinds of watermarks

902 * and thresholds.

903 *

904 * Locking rules:

905 *

906 * zone_start_pfn and spanned_pages are protected by span_seqlock.

907 * It is a seqlock because it has to be read outside of zone->lock,

908 * and it is done in the main allocator path. But, it is written

909 * quite infrequently.

910 *

911 * The span_seq lock is declared along with zone->lock because it is

912 * frequently read in proximity to zone->lock. It's good to

913 * give them a chance of being in the same cacheline.

914 *

915 * Write access to present_pages at runtime should be protected by

916 * mem_hotplug_begin/done(). Any reader who can't tolerant drift of

917 * present_pages should use get_online_mems() to get a stable value.

918 */

919 atomic_long_t managed_pages;

920 unsigned long spanned_pages;

921 unsigned long present_pages;

922 #if defined(CONFIG_MEMORY_HOTPLUG)

923 unsigned long present_early_pages;

924 #endif

925 #ifdef CONFIG_CMA

926 unsigned long cma_pages;

927 #endif

928

929 const char *name;

930

931 #ifdef CONFIG_MEMORY_ISOLATION

932 /*

933 * Number of isolated pageblock. It is used to solve incorrect

934 * freepage counting problem due to racy retrieving migratetype

935 * of pageblock. Protected by zone->lock.

936 */

937 unsigned long nr_isolate_pageblock;

938 #endif

939

940 #ifdef CONFIG_MEMORY_HOTPLUG

941 /* see spanned/present_pages for more description */

942 seqlock_t span_seqlock;

943 #endif

944

945 int order;

946

947 int initialized;

948

949 /* Write-intensive fields used from the page allocator */

950 CACHELINE_PADDING(_pad1_);

951

952 /* free areas of different sizes */

953 struct free_area free_area[NR_PAGE_ORDERS];

954

955 #ifdef CONFIG_UNACCEPTED_MEMORY

956 /* Pages to be accepted. All pages on the list are MAX_ORDER */

957 struct list_head unaccepted_pages;

958 #endif

959

960 /* zone flags, see below */

961 unsigned long flags;

962

963 /* Primarily protects free_area */

964 spinlock_t lock;

965

966 /* Write-intensive fields used by compaction and vmstats. */

967 CACHELINE_PADDING(_pad2_);

968

969 /*

970 * When free pages are below this point, additional steps are taken

971 * when reading the number of free pages to avoid per-cpu counter

972 * drift allowing watermarks to be breached

973 */

974 unsigned long percpu_drift_mark;

975

976 #if defined CONFIG_COMPACTION || defined CONFIG_CMA

977 /* pfn where compaction free scanner should start */

978 unsigned long compact_cached_free_pfn;

979 /* pfn where compaction migration scanner should start */

980 unsigned long compact_cached_migrate_pfn[ASYNC_AND_SYNC];

981 unsigned long compact_init_migrate_pfn;

982 unsigned long compact_init_free_pfn;

983 #endif

984

985 #ifdef CONFIG_COMPACTION

986 /*

987 * On compaction failure, 1<<compact_defer_shift compactions

988 * are skipped before trying again. The number attempted since

989 * last failure is tracked with compact_considered.

990 * compact_order_failed is the minimum compaction failed order.

991 */

992 unsigned int compact_considered;

993 unsigned int compact_defer_shift;

994 int compact_order_failed;

995 #endif

996

997 #if defined CONFIG_COMPACTION || defined CONFIG_CMA

998 /* Set to true when the PG_migrate_skip bits should be cleared */

999 bool compact_blockskip_flush;

1000 #endif

1001

1002 bool contiguous;

1003

1004 CACHELINE_PADDING(_pad3_);

1005 /* Zone statistics */

1006 atomic_long_t vm_stat[NR_VM_ZONE_STAT_ITEMS];

1007 atomic_long_t vm_numa_event[NR_VM_NUMA_EVENT_ITEMS];

1008 } ____cacheline_internodealigned_in_smp;free_area是一个11个元素的数组,在每一个数组分别代表的是空闲可分配连续4K、8K、16K、......、4M内存链表。

#define MAX_ORDER 10#define NR_PAGE_ORDERS (MAX_ORDER + 1)/* free areas of different sizes */ 953 struct free_area free_area[NR_PAGE_ORDERS];struct free_area { 122 struct list_head free_list[MIGRATE_TYPES]; 123 unsigned long nr_free; 124 };

通过cat /proc/pagetypeinfo, 你可以看到当前系统里伙伴系统里各个尺寸的可用连续内存块数量。

内核提供分配器函数alloc_pages到上面的多个链表中寻找可用连续页面。

struct page * alloc_pages(gfp_t gfp_mask, unsigned int order)/** 2268 * alloc_pages - Allocate pages. 2269 * @gfp: GFP flags. 2270 * @order: Power of two of number of pages to allocate. 2271 * 2272 * Allocate 1 << @order contiguous pages. The physical address of the 2273 * first page is naturally aligned (eg an order-3 allocation will be aligned 2274 * to a multiple of 8 * PAGE_SIZE bytes). The NUMA policy of the current 2275 * process is honoured when in process context. 2276 * 2277 * Context: Can be called from any context, providing the appropriate GFP 2278 * flags are used. 2279 * Return: The page on success or NULL if allocation fails. 2280 */ 2281 struct page *alloc_pages(gfp_t gfp, unsigned order) 2282 { 2283 struct mempolicy *pol = &default_policy; 2284 struct page *page; 2285 2286 if (!in_interrupt() && !(gfp & __GFP_THISNODE)) 2287 pol = get_task_policy(current); 2288 2289 /* 2290 * No reference counting needed for current->mempolicy 2291 * nor system default_policy 2292 */ 2293 if (pol->mode == MPOL_INTERLEAVE) 2294 page = alloc_page_interleave(gfp, order, interleave_nodes(pol)); 2295 else if (pol->mode == MPOL_PREFERRED_MANY) 2296 page = alloc_pages_preferred_many(gfp, order, 2297 policy_node(gfp, pol, numa_node_id()), pol); 2298 else 2299 page = __alloc_pages(gfp, order, 2300 policy_node(gfp, pol, numa_node_id()), 2301 policy_nodemask(gfp, pol)); 2302 2303 return page; 2304 } 2305 EXPORT_SYMBOL(alloc_pages);

⚙️ 关键参数解析

-

。常见的标志包括:gfp_t gfp(GFP flags): 这是内存分配的控制标志,它告诉内核在何处以及如何分配内存GFP_KERNEL: 标准的内核内存分配,可能触发直接内存回收,因此只能在进程上下文中使用(不能中断上下文)。GFP_ATOMIC: 用于原子上下文(如中断处理),分配不会休眠,但成功率较低。GFP_DMA/GFP_DMA32: 指定从 ZONE_DMA 或 ZONE_DMA32 分配内存,供需要特定地址范围的DMA设备使用。

-

unsigned int order: 表示请求的连续页数,其值为 2 的幂次方。例如,order为 0 表示请求 1 个页,order为 1 表示请求 2 个连续页,依此类推。内核中MAX_ORDER定义了可分配的最大阶数,如果order超过此值,分配会失败。

🚀 伙伴系统分配流程

__alloc_pages 是伙伴系统的主要入口,其内部通过 快速路径 和 慢速路径 来尝试分配:

- 快速路径:首先尝试

get_page_from_freelist,它直接扫描内存区域(zone)的空闲链表(free_area数组)来寻找足够大小的连续空闲页块。这是最理想的情况,分配速度很快。 - 慢速路径:如果快速路径失败(例如当前zone空闲内存不足),则进入

__alloc_pages_slowpath。慢速路径会采取更复杂的措施来获取内存,可能包括:- 唤醒

kswapd内核线程进行后台内存回收。 - 如果内存压力大,可能触发直接内存回收。

- 尝试内存压缩以对抗碎片。

- 在极端情况下,可能触发 OOM Killer 终止进程以释放内存。

- 唤醒

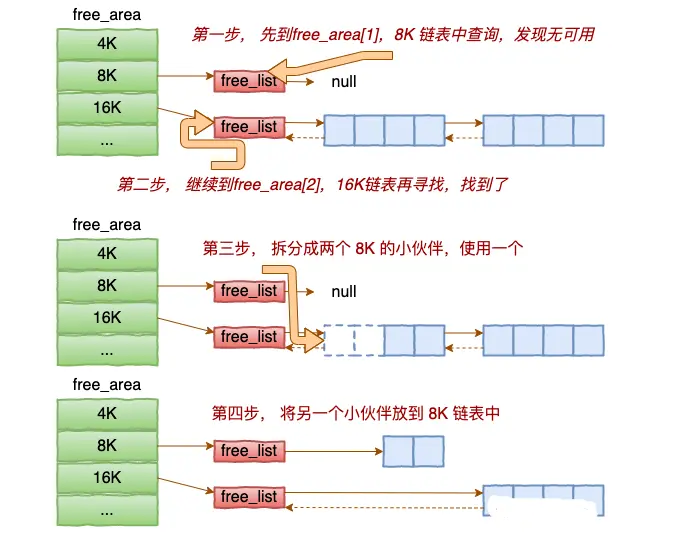

alloc_pages是怎么工作的呢?我们举个简单的小例子。假如要申请8K-连续两个页框的内存。为了描述方便,我们先暂时忽略UNMOVEABLE、RELCLAIMABLE等不同类型

基于伙伴系统的内存分配中,有可能需要将大块内存拆分成两个小伙伴。在释放中,可能会将两个小伙伴合并再次组成更大块的连续内存。

后续完善....