Docker's Underlying Isolation and Resource Control Mechanisms

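- I. Core isolation technology: Namespaces

- II. Resource limiting technology: Cgroups (control groups)

- Manually configuring a CPU limit through the Cgroups v2 interface

- Limiting a process's memory with systemd-run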

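I. Core isolation technology: Namespaces

Purpose: isolating resources.

A namespace is a kernel construct that wraps a global system resource, so that the processes inside it see their own isolated instance of that resource.

The eight namespace types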

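| Namespace type | What it isolates |
|---|---|
| Mount (mnt) | Filesystem mount points |
| UTS (Unix Time-Sharing System) | Hostname and domain name |
| IPC (Inter-Process Communication) | Inter-process communication resources |
| PID (Process ID) | Process ID numbers |
| Network (net) | Network devices, stacks, ports, etc. |
| User (user) | User and group IDs |
| Cgroup (cgroup) | Cgroup root directory |
| Time (time) | System clocks (boot-time and monotonic clock offsets) |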

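A process belongs to one namespace of each type, so it is a member of several namespaces at the same time.

Container isolation is the result of combining multiple namespaces.

Viewing a process's namespaces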

[root@docker proc]# ps aux|grep mysql

systemd+ 1851 0.2 5.2 1200788 193204 ? Ssl 18:40 0:01 mysqld

root 2117 0.0 0.0 6408 2176 pts/0 S+ 18:51 0:00 grep --color=auto mysql

[root@docker proc]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

077fcb497613 mysql:5.7.41 "docker-entrypoint.s…" 2 days ago Up 11 minutes 33060/tcp, 0.0.0.0:33090->3306/tcp, [::]:33090->3306/tcp sc-mysql-1

dfaba1e1f3c2 nginx "/docker-entrypoint.…" 2 days ago Up 11 minutes 0.0.0.0:8080->80/tcp, [::]:8080->80/tcp sc-nginx-1

[root@docker proc]# cd 1851

[root@docker 1851]# ls

arch_status clear_refs cpuset fdinfo limits mountinfo numa_maps patch_state schedstat stack task wchan

attr cmdline cwd gid_map loginuid mounts oom_adj personality sessionid stat timens_offsets

autogroup comm environ io map_files mountstats oom_score projid_map setgroups statm timers

auxv coredump_filter exe ksm_merging_pages maps net oom_score_adj root smaps status timerslack_ns

cgroup cpu_resctrl_groups fd ksm_stat mem ns pagemap sched smaps_rollup syscall uid_map

[root@docker 1851]# cd ns

[root@docker ns]# ls

cgroup ipc mnt net pid pid_for_children time time_for_children user uts

[root@docker ns]# ll

total 0

lrwxrwxrwx. 1 systemd-coredump systemd-coredump 0 Sep 10 18:55 cgroup -> 'cgroup:[4026532740]'

lrwxrwxrwx. 1 systemd-coredump systemd-coredump 0 Sep 10 18:55 ipc -> 'ipc:[4026532738]'

lrwxrwxrwx. 1 systemd-coredump systemd-coredump 0 Sep 10 18:40 mnt -> 'mnt:[4026532736]'

lrwxrwxrwx. 1 systemd-coredump systemd-coredump 0 Sep 10 18:40 net -> 'net:[4026532741]'

lrwxrwxrwx. 1 systemd-coredump systemd-coredump 0 Sep 10 18:55 pid -> 'pid:[4026532739]'

lrwxrwxrwx. 1 systemd-coredump systemd-coredump 0 Sep 10 18:55 pid_for_children -> 'pid:[4026532739]'

lrwxrwxrwx. 1 systemd-coredump systemd-coredump 0 Sep 10 18:55 time -> 'time:[4026531834]'

lrwxrwxrwx. 1 systemd-coredump systemd-coredump 0 Sep 10 18:55 time_for_children -> 'time:[4026531834]'

lrwxrwxrwx. 1 systemd-coredump systemd-coredump 0 Sep 10 18:55 user -> 'user:[4026531837]'

lrwxrwxrwx. 1 systemd-coredump systemd-coredump 0 Sep 10 18:55 uts -> 'uts:[4026532737]'
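The comparison between a containerized process and a host process can be scripted. The following is a minimal sketch, not part of the original walkthrough: the function names `ns_inode` and `compare_ns` are hypothetical, and it assumes a Linux host with `/proc` mounted and enough privileges (typically root) to read another process's `ns` directory.

```shell
#!/usr/bin/env bash

# Extract the numeric ID from a namespace link target such as 'pid:[4026532739]'.
ns_inode() {
    local id=${1#*'['}          # drop everything up to and including '['
    printf '%s\n' "${id%']'}"   # drop the trailing ']'
}

# For each namespace type, report whether two PIDs are in the same namespace.
compare_ns() {
    local pid_a=$1 pid_b=$2 ns a b
    for ns in cgroup ipc mnt net pid time user uts; do
        a=$(ns_inode "$(readlink "/proc/$pid_a/ns/$ns")")
        b=$(ns_inode "$(readlink "/proc/$pid_b/ns/$ns")")
        if [ "$a" = "$b" ]; then
            printf '%-7s shared   (%s)\n' "$ns" "$a"
        else
            printf '%-7s isolated (%s vs %s)\n' "$ns" "$a" "$b"
        fi
    done
}

# Example (run as root): compare the host's PID 1 with the mysqld process.
# compare_ns 1 1851
```

Judging by the IDs in the listing above, `time` and `user` are the entries most likely to be reported as shared with the host, since Docker does not create those two namespaces by default.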

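II. Resource limiting technology: Cgroups (control groups)

Cgroups provide resource control: they limit how much CPU, memory, and other resources a process or group of processes may use.

How can MySQL be restricted to 1 GB of memory on a Linux system?

1. Start MySQL in a Docker container with -m 1000000000 (1 GB, given in bytes). Docker then automatically creates a cgroup that limits the memory available to the container's processes.

2. Run MySQL without a container and use cgroups directly to limit the memory of the mysqld process.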
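Docker's `-m` (`--memory`) option accepts either a plain byte count, as in option 1, or a number with a `b`, `k`, `m`, or `g` suffix, which Docker interprets in binary units. The helper below is a small sketch (the name `to_bytes` is hypothetical) that shows the conversion and makes it clear that `-m 1g` and `-m 1000000000` are not quite the same limit.

```shell
#!/usr/bin/env bash

# Convert a size such as 512m or 1g to bytes, using binary (1024-based) units.
# A value without a suffix is treated as a byte count and returned unchanged.
to_bytes() {
    case $1 in
        *[kK]) echo $(( ${1%?} * 1024 )) ;;
        *[mM]) echo $(( ${1%?} * 1024 * 1024 )) ;;
        *[gG]) echo $(( ${1%?} * 1024 * 1024 * 1024 )) ;;
        *[bB]) echo "${1%?}" ;;
        *)     echo "$1" ;;
    esac
}

# Example: to_bytes 1g   prints 1073741824, about 7% more than 1000000000.
```

On a Cgroups v2 host, the limit Docker applied can usually be read back from `/sys/fs/cgroup/memory.max` inside the container.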

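Cgroups are a feature of the Linux kernel itself, not something introduced by Docker.

Cgroups v2 uses a single unified hierarchy: control groups sit directly under /sys/fs/cgroup/. The earlier Cgroups v1 used a separate directory per subsystem (for example /sys/fs/cgroup/cpu/ and /sys/fs/cgroup/memory/). The unified v2 layout is simpler.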
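Which layout a host uses can be checked from the filesystem type mounted at `/sys/fs/cgroup`: a v2 host reports `cgroup2fs`, while a v1 (or hybrid) host reports `tmpfs`. The sketch below wraps that check; the function names are hypothetical.

```shell
#!/usr/bin/env bash

# Map the filesystem type of /sys/fs/cgroup to a cgroup version label.
cgroup_version_from_fstype() {
    case $1 in
        cgroup2fs) echo v2 ;;
        tmpfs)     echo v1 ;;      # v1 or hybrid: per-controller directories
        *)         echo unknown ;;
    esac
}

# Detect the version on the current host.
detect_cgroup_version() {
    cgroup_version_from_fstype "$(stat -fc %T /sys/fs/cgroup 2>/dev/null)"
}

# Example: detect_cgroup_version
```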

Write a script that consumes the machine's CPU

[root@docker cgroup]# vim test.sh

#!/bin/bash
# Busy loop: print an ever-increasing counter to keep one CPU core busy
i=1
while true
do
    echo "$i"
    ((i++))
done

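Manually configuring a CPU limit through the Cgroups v2 interface

[root@docker cgroup]# cd /sys/fs/cgroup

# Create a control group; the kernel populates the new directory with all of the group's interface files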

[root@docker cgroup]# mkdir sc

[root@docker cgroup]# ls

cgroup.controllers cgroup.subtree_control cpu.stat io.cost.model memory.stat sc system.slice

cgroup.max.depth cgroup.threads cpu.stat.local io.cost.qos memory.zswap.writeback sys-fs-fuse-connections.mount user.slice

cgroup.max.descendants cpuset.cpus.effective dev-hugepages.mount io.stat misc.capacity sys-kernel-config.mount

cgroup.procs cpuset.cpus.isolated dev-mqueue.mount memory.numa_stat misc.current sys-kernel-debug.mount

cgroup.stat cpuset.mems.effective init.scope memory.reclaim misc.peak sys-kernel-tracing.mount

[root@docker cgroup]# cd sc

[root@docker sc]# ls

cgroup.controllers cpu.max.burst hugetlb.1GB.events io.bfq.weight memory.oom.group misc.events

cgroup.events cpuset.cpus hugetlb.1GB.events.local io.latency memory.peak misc.events.local

cgroup.freeze cpuset.cpus.effective hugetlb.1GB.max io.max memory.reclaim misc.max

cgroup.kill cpuset.cpus.exclusive hugetlb.1GB.numa_stat io.stat memory.stat misc.peak

cgroup.max.depth cpuset.cpus.exclusive.effective hugetlb.1GB.rsvd.current io.weight memory.swap.current pids.current

cgroup.max.descendants cpuset.cpus.partition hugetlb.1GB.rsvd.max memory.current memory.swap.events pids.events

cgroup.procs cpuset.mems hugetlb.2MB.current memory.events memory.swap.high pids.events.local

cgroup.stat cpuset.mems.effective hugetlb.2MB.events memory.events.local memory.swap.max pids.max

cgroup.subtree_control cpu.stat hugetlb.2MB.events.local memory.high memory.swap.peak pids.peak

cgroup.threads cpu.stat.local hugetlb.2MB.max memory.low memory.zswap.current rdma.current

cgroup.type cpu.weight hugetlb.2MB.numa_stat memory.max memory.zswap.max rdma.max

cpu.idle cpu.weight.nice hugetlb.2MB.rsvd.current memory.min memory.zswap.writeback

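cpu.max hugetlb.1GB.current hugetlb.2MB.rsvd.max memory.numa_stat misc.current

# Limit the group to 50% of one CPU: 50000 µs of CPU time per period (the period keeps its default of 100000 µs)

[root@docker sc]# echo 50000 > /sys/fs/cgroup/sc/cpu.max

# Write the current shell's PID into the group, so that child processes started from this shell are limited too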

[root@docker sc]# echo $$ > /sys/fs/cgroup/sc/cgroup.procs

[root@docker sc]# cat /sys/fs/cgroup/sc/cgroup.procs

1643

2581
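The value written to `cpu.max` has the form `$MAX $PERIOD`: the group may run for `$MAX` microseconds in every `$PERIOD` microseconds. Writing a single number, as above, changes only `$MAX`. The sketch below (the function name `cpu_max_for_percent` is hypothetical) derives the full line for a given percentage of one CPU.

```shell
#!/usr/bin/env bash

# Print a cpu.max line for a limit expressed as a percentage of one CPU.
# $1: percent (100 = one full CPU, 200 = two CPUs)
# $2: period in microseconds (optional, defaults to 100000)
cpu_max_for_percent() {
    local percent=$1 period=${2:-100000}
    echo "$(( percent * period / 100 )) $period"
}

# Example (as root, with the sc group from this walkthrough):
# cpu_max_for_percent 50 > /sys/fs/cgroup/sc/cpu.max
# To remove the limit again:
# echo max > /sys/fs/cgroup/sc/cpu.max
```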

# Run the script so that its CPU usage can be observed

[root@docker sc]# bash /cgroup/test.sh

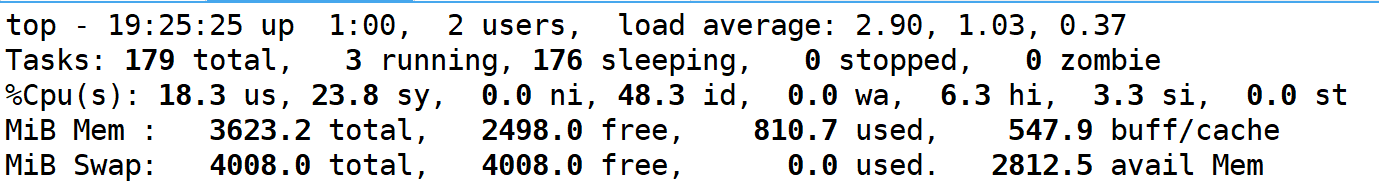

Open another terminal and check the CPU usage with top; the test script should stay at roughly 50% of one CPU.
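Besides `top`, the group's own `cpu.stat` file shows whether the limit is being enforced: `nr_throttled` counts the periods in which the group ran out of quota, and `throttled_usec` is the total time it spent throttled. A small sketch for pulling one field out of that file follows; the function name `cpu_stat_field` is hypothetical.

```shell
#!/usr/bin/env bash

# Read cpu.stat-style "key value" lines on stdin and print the value for key $1.
cpu_stat_field() {
    awk -v key="$1" '$1 == key { print $2 }'
}

# Example: a growing number here means the 50% limit is actively throttling.
# cpu_stat_field nr_throttled < /sys/fs/cgroup/sc/cpu.stat
```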

Limiting a process's memory with systemd-run

[root@docker sc]# sudo systemd-run --unit=mem-limit-demo --scope -p MemoryMax=500M -p MemorySwapMax=0 /usr/bin/tail /dev/zero

Running scope as unit: mem-limit-demo.scope

Killed

[root@docker sc]# tail /dev/zero

Killed

[root@docker sc]# systemctl status mem-limit-demo.scope

× mem-limit-demo.scope - /usr/bin/tail /dev/zero
     Loaded: loaded (/run/systemd/transient/mem-limit-demo.scope; transient)
  Transient: yes
     Active: failed (Result: oom-kill) since Wed 2025-09-10 19:57:49 CST; 1min 43s ago
   Duration: 2.611s
        CPU: 2.602s

Sep 10 19:57:47 docker systemd[1]: Started /usr/bin/tail /dev/zero.

Sep 10 19:57:49 docker systemd[1]: mem-limit-demo.scope: A process of this unit has been killed by the OOM killer.

Sep 10 19:57:49 docker systemd[1]: mem-limit-demo.scope: Failed with result 'oom-kill'.

Sep 10 19:57:49 docker systemd[1]: mem-limit-demo.scope: Consumed 2.602s CPU time.
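The same limit can be applied without systemd by writing to the Cgroups v2 interface files directly, as was done for the CPU limit. The sketch below assumes a group directory such as the `sc` group created earlier, with the memory controller enabled for it (the `memory.*` files in the earlier listing suggest it is). The function names are hypothetical. `MemoryMax=500M` corresponds to 500 MiB, that is 524288000 bytes.

```shell
#!/usr/bin/env bash

# Apply a hard memory limit (in bytes) to a cgroup v2 directory and forbid swap,
# mirroring MemoryMax=... and MemorySwapMax=0.
set_memory_limit() {
    local cg_dir=$1 max_bytes=$2
    echo "$max_bytes" > "$cg_dir/memory.max"
    echo 0 > "$cg_dir/memory.swap.max"
}

# Read memory.events content on stdin and print how many processes were OOM-killed.
oom_kill_count() {
    awk '$1 == "oom_kill" { print $2 }'
}

# Example (as root):
# set_memory_limit /sys/fs/cgroup/sc $((500 * 1024 * 1024))
# echo $$ > /sys/fs/cgroup/sc/cgroup.procs
# tail /dev/zero
# oom_kill_count < /sys/fs/cgroup/sc/memory.events
```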

A command that consumes CPU

[root@docker sc]# yum install stress-ng -y

[root@docker sc]# stress-ng --cpu 2 --timeout 60s

stress-ng: info: [2813] setting to a 1 min run per stressor

stress-ng: info: [2813] dispatching hogs: 2 cpu

stress-ng: info: [2813] skipped: 0

stress-ng: info: [2813] passed: 2: cpu (2)

stress-ng: info: [2813] failed: 0

stress-ng: info: [2813] metrics untrustworthy: 0

stress-ng: info: [2813] successful run completed in 1 min

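In both the systemd-run and the stress-ng demonstrations, the resource control itself is done by Cgroups:

- systemd configures Cgroups automatically from properties such as MemoryMax and CPUQuota, which simplifies setting resource limits;

- stress-testing tools (such as stress-ng, yes, and dd) only simulate resource consumption, and are used to verify that a Cgroups limit actually takes effect.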
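The two pieces can be combined: systemd-run applies the limits, and stress-ng generates the load to test them. The wrapper below is a sketch under stated assumptions: the function name `run_limited` and the `DRY_RUN` variable are hypothetical, while `--scope`, `-p`, `CPUQuota`, `MemoryMax`, and `MemorySwapMax` are the systemd options already used or named in this article.

```shell
#!/usr/bin/env bash

# Run a command in a transient scope with a CPU quota and a memory limit.
# $1: CPU quota (for example 50%), $2: memory limit (for example 500M),
# remaining arguments: the command to run.
# With DRY_RUN set, the systemd-run command line is printed instead of executed.
run_limited() {
    local cpu_quota=$1 memory_max=$2
    shift 2
    set -- systemd-run --scope \
        -p "CPUQuota=$cpu_quota" \
        -p "MemoryMax=$memory_max" \
        -p MemorySwapMax=0 \
        "$@"
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Example (as root): two CPU stressors held to half of one CPU in total.
# run_limited 50% 500M stress-ng --cpu 2 --timeout 60s
```

Watching `top` in another terminal while the example runs should show the two stressors sharing roughly 50% of one CPU between them.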