hadoop-3.3.6 and hbase-2.4.13

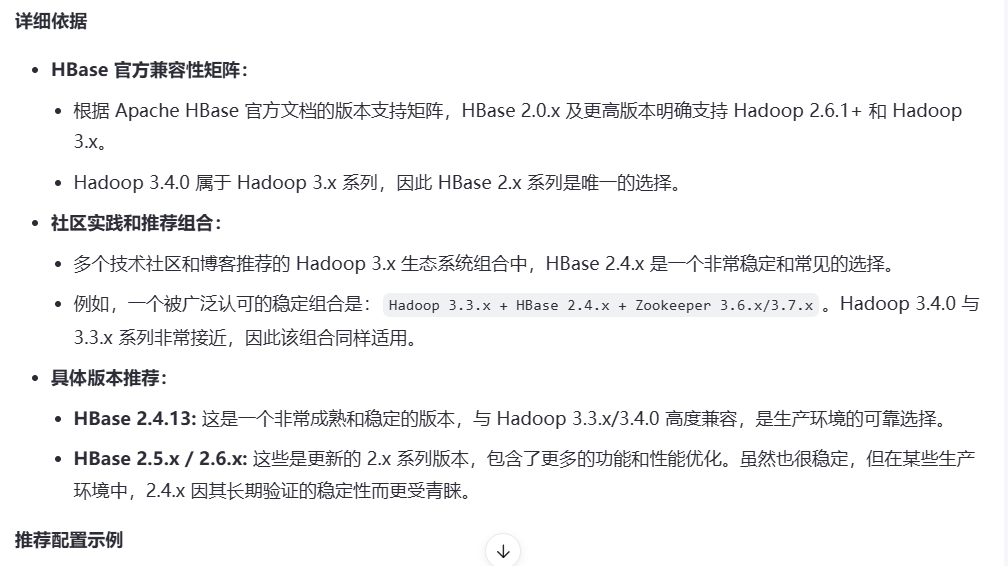

Version selection

First check Hadoop's support matrix, then look at the version combinations other people recommend. Taking good advice never hurts.

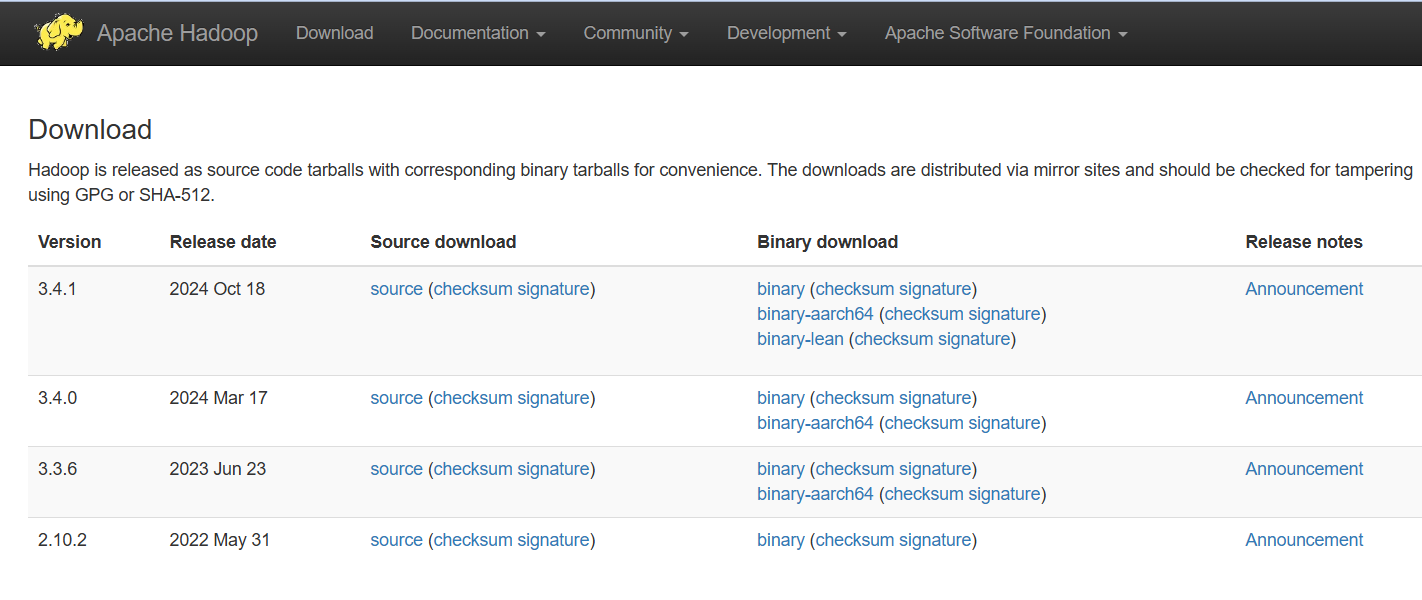

hadoop-3.3.6 download

Apache Hadoop: the official site https://hadoop.apache.org/releases.html lists two newer releases above hadoop-3.3.6, but I took the advice: everyone says this version is the most stable.


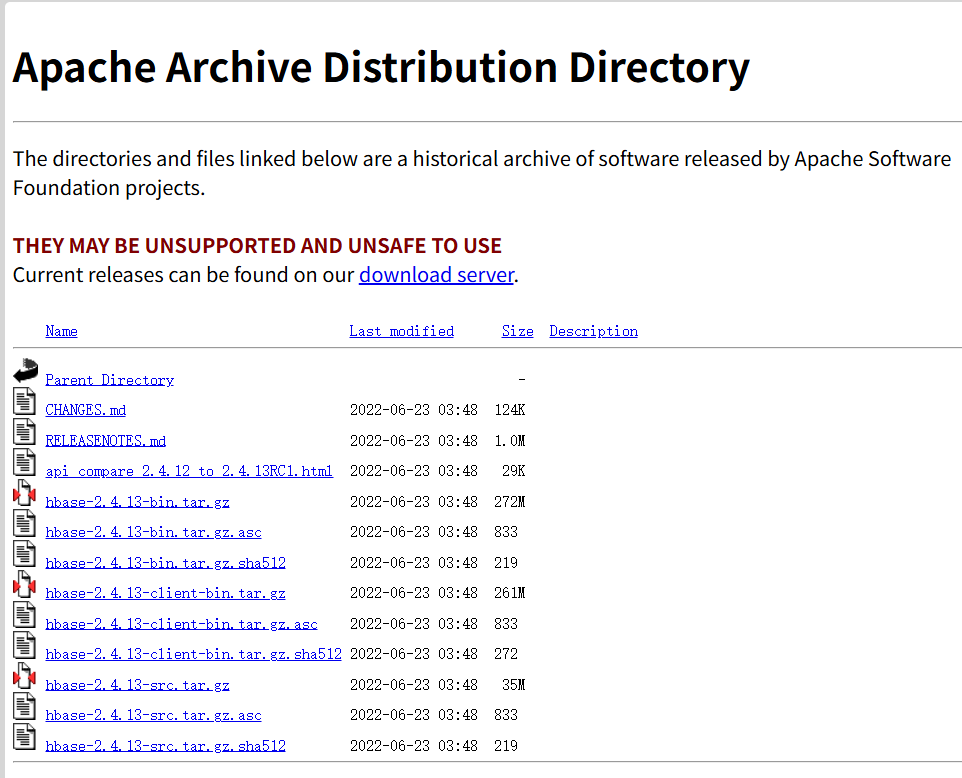

hbase-2.4.13 download

hbase-2.4.13

Hadoop installation

core-site.xml

```xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://10.10.10.10:8020</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
</configuration>
```

hdfs-site.xml
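In the hdfs-site.xml that follows, dfs.blocksize is given in bytes, and dfs.replication is 3 even though this single-node setup runs only one DataNode. HDFS places at most one replica of a block on each DataNode, so with a single DataNode every block stays under-replicated; 1 is the usual value for a single node. A minimal sketch of the block-size arithmetic and of that sanity check (check_replication is a hypothetical helper, not a Hadoop command):

```shell
#!/bin/sh
# dfs.blocksize is expressed in bytes: 256 MB = 256 * 1024 * 1024
block_size=$((256 * 1024 * 1024))
echo "dfs.blocksize = $block_size"

# Hypothetical helper: compare dfs.replication ($1) with the number of DataNodes ($2).
# Prints OK and returns 0 when every replica can land on a distinct DataNode.
check_replication() {
  if [ "$1" -gt "$2" ]; then
    echo "WARN: dfs.replication=$1 but only $2 DataNode(s); blocks stay under-replicated"
    return 1
  fi
  echo "OK"
}

# Example: check_replication 3 1   prints the warning and returns 1
```

On a running cluster, hdfs fsck / reports the number of under-replicated blocks in its summary.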

```xml
<property>
  <name>dfs.namenode.http-address</name>
  <value>10.10.10.10:9870</value>
</property>
<property>
  <name>dfs.namenode.name.dir</name>
  <value>file:///data/hadoop/apps/hadoop-3.3.6/data/hdfs/nn</value>
</property>
<property>
  <name>dfs.namenode.hosts</name>
  <value>10.10.10.10</value>
</property>
<property>
  <name>dfs.blocksize</name>
  <value>268435456</value> <!-- 256MB -->
</property>
<property>
  <name>dfs.namenode.handler.count</name>
  <value>20</value>
</property>
<property>
  <name>dfs.datanode.data.dir</name>
  <value>file:///data/hadoop/apps/hadoop-3.3.6/data/hdfs/dn</value>
</property>
<property>
  <name>dfs.replication</name>
  <value>3</value>
</property>
<property>
  <name>dfs.permissions.enabled</name>
  <value>true</value>
</property>
```

mapred-site.xml

```xml
<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>
<property>
  <name>mapreduce.application.classpath</name>
  <value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>
</property>
```

yarn-site.xml
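In the yarn-site.xml that follows, the NodeManager offers 4096 MB and 4 vcores, and a single container may get between 1024 and 2048 MB. A quick sketch of the resulting capacity, assuming memory is the limiting resource. round_up_request additionally assumes the scheduler rounds each request up to a multiple of yarn.scheduler.minimum-allocation-mb. Both helpers are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: how many containers of $2 MB fit into $1 MB of NodeManager memory
containers_per_node() {
  echo $(( $1 / $2 ))
}

# Hypothetical helper: round a request of $1 MB up to a multiple of the minimum allocation $2 MB
# (assumes the scheduler normalizes requests this way)
round_up_request() {
  echo $(( ($1 + $2 - 1) / $2 * $2 ))
}

echo "min-size containers per node: $(containers_per_node 4096 1024)"
echo "max-size containers per node: $(containers_per_node 4096 2048)"
echo "a 1500 MB request becomes: $(round_up_request 1500 1024) MB"
```

With these values, one node runs at most 4 minimum-size containers or 2 maximum-size ones.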

```xml
<!-- Site specific YARN configuration properties -->
<!-- Enable the MapReduce shuffle service -->
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
<!-- NodeManager environment-variable whitelist (extended) -->
<property>
  <name>yarn.nodemanager.env-whitelist</name>
  <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME,PYTHON_HOME,CONDA_HOME</value>
</property>
<!-- Physical memory available to the NodeManager (MB) -->
<property>
  <name>yarn.nodemanager.resource.memory-mb</name>
  <value>4096</value>
</property>
<!-- Virtual CPU cores available to the NodeManager -->
<property>
  <name>yarn.nodemanager.resource.cpu-vcores</name>
  <value>4</value>
</property>
<!-- Minimum container memory (MB) -->
<property>
  <name>yarn.scheduler.minimum-allocation-mb</name>
  <value>1024</value>
</property>
<!-- Maximum container memory (MB) -->
<property>
  <name>yarn.scheduler.maximum-allocation-mb</name>
  <value>2048</value>
</property>
<!-- Enable log aggregation -->
<property>
  <name>yarn.log-aggregation-enable</name>
  <value>true</value>
</property>
<property>
  <name>yarn.log-aggregation.retain-seconds</name>
  <value>604800</value> <!-- 7 days -->
</property>
<!-- ResourceManager addresses (replace with your RM host) -->
<property>
  <name>yarn.resourcemanager.address</name>
  <value>10.10.10.10:8032</value>
</property>
<property>
  <name>yarn.resourcemanager.scheduler.address</name>
  <value>10.10.10.10:8030</value>
</property>
<property>
  <name>yarn.resourcemanager.webapp.address</name>
  <value>10.10.10.10:8088</value>
</property>
```

hadoop-env.sh
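Hadoop 3 renamed the per-daemon HADOOP_*_OPTS variables. The start-all.sh output further down warns, for example, that HADOOP_NAMENODE_OPTS has been replaced by HDFS_NAMENODE_OPTS and that the old value is used. A sketch of the same JVM options under the Hadoop 3 names, assuming you keep the values used in this post. Replace the old HADOOP_*_OPTS lines rather than adding these next to them: according to that warning, Hadoop uses the old variable's value whenever it is set.

```shell
# hadoop-env.sh fragment: same options as below, under the Hadoop 3 variable names (sketch)
export HDFS_NAMENODE_OPTS="-Xms1024m -Xmx1024m -XX:+UseParallelGC -Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} ${HDFS_NAMENODE_OPTS}"
export HDFS_DATANODE_OPTS="-Xms1024m -Xmx1024m -Dhadoop.security.logger=ERROR,RFAS ${HDFS_DATANODE_OPTS}"
export HDFS_SECONDARYNAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} ${HDFS_SECONDARYNAMENODE_OPTS}"
```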

```shell
# less hadoop-env.sh |grep -v "#" | grep -v "^$"
export JAVA_HOME=/data/hadoop/apps/jdk8
export HADOOP_HOME=/data/hadoop/apps/hadoop-3.3.6
export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop
export HADOOP_NAMENODE_OPTS="-Xms1024m -Xmx1024m -XX:+UseParallelGC -Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_NAMENODE_OPTS"
export HADOOP_DATANODE_OPTS="-Xms1024m -Xmx1024m -Dhadoop.security.logger=ERROR,RFAS $HADOOP_DATANODE_OPTS"
export HADOOP_SECONDARYNAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_SECONDARYNAMENODE_OPTS"
export HADOOP_OS_TYPE=${HADOOP_OS_TYPE:-$(uname -s)}
export HADOOP_SSH_OPTS="-o Port=2222 -o StrictHostKeyChecking=no -o ConnectTimeout=10s"
export HADOOP_LOG_DIR=${HADOOP_HOME}/logs
export HADOOP_PID_DIR=${HADOOP_HOME}/tmp
```

Formatting the NameNode
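Formatting creates a brand-new namespace: the log below allocates a fresh BlockPoolId, and a re-format also generates a new clusterID, after which DataNodes still holding the old ID fail to register. Formatting is therefore a one-time step. A minimal guard, assuming a formatted name directory contains current/VERSION (the log below writes the fsimage into that current/ directory); nn_is_formatted is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: succeed if the NameNode name directory ($1) already looks formatted
nn_is_formatted() {
  [ -f "$1/current/VERSION" ]
}

# Path from dfs.namenode.name.dir in hdfs-site.xml, without the file:// prefix
NN_DIR=/data/hadoop/apps/hadoop-3.3.6/data/hdfs/nn

if nn_is_formatted "$NN_DIR"; then
  echo "NameNode already formatted in $NN_DIR; skipping format"
else
  echo "Not formatted yet; run: hdfs namenode -format"
fi
```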

```text
# hdfs namenode -format
2025-08-21 14:52:09,479 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = host-10-10-10-10/10.10.10.10
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 3.3.6
...
2025-08-21 14:52:12,066 INFO namenode.FSImage: Allocated new BlockPoolId: BP-314140009-10.10.10.10-1755759132057
2025-08-21 14:52:12,128 INFO common.Storage: Storage directory /data/hadoop/apps/hadoop-3.3.6/data/hdfs/nn has been successfully formatted.
2025-08-21 14:52:12,173 INFO namenode.FSImageFormatProtobuf: Saving image file /data/hadoop/apps/hadoop-3.3.6/data/hdfs/nn/current/fsimage.ckpt_0000000000000000000 using no compression
2025-08-21 14:52:12,464 INFO namenode.FSImageFormatProtobuf: Image file /data/hadoop/apps/hadoop-3.3.6/data/hdfs/nn/current/fsimage.ckpt_0000000000000000000 of size 396 bytes saved in 0 seconds .
2025-08-21 14:52:12,479 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
2025-08-21 14:52:12,541 INFO namenode.FSNamesystem: Stopping services started for active state
2025-08-21 14:52:12,541 INFO namenode.FSNamesystem: Stopping services started for standby state
2025-08-21 14:52:12,546 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.
2025-08-21 14:52:12,547 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at host-10-10-10-10/10.10.10.10
************************************************************/
```

Starting everything with one command

```text
# ./start-all.sh
WARNING: Attempting to start all Apache Hadoop daemons as hadoop in 10 seconds.
WARNING: This is not a recommended production deployment configuration.
WARNING: Use CTRL-C to abort.
Starting namenodes on [host-10-10-10-10]
host-10-10-10-10: Warning: Permanently added '[host-10-10-10-10]:2222' (ED25519) to the list of known hosts.
hadoop@host-10-10-10-10's password:
host-10-10-10-10: WARNING: HADOOP_NAMENODE_OPTS has been replaced by HDFS_NAMENODE_OPTS. Using value of HADOOP_NAMENODE_OPTS.
Starting datanodes
10.10.10.10: Warning: Permanently added '[10.10.10.10]:2222' (ED25519) to the list of known hosts.
hadoop@10.10.10.10's password:
10.10.10.10: WARNING: HADOOP_DATANODE_OPTS has been replaced by HDFS_DATANODE_OPTS. Using value of HADOOP_DATANODE_OPTS.
Starting secondary namenodes [host-10-10-10-10]
hadoop@host-10-10-10-10's password:
host-10-10-10-10: WARNING: HADOOP_SECONDARYNAMENODE_OPTS has been replaced by HDFS_SECONDARYNAMENODE_OPTS. Using value of HADOOP_SECONDARYNAMENODE_OPTS.
Starting resourcemanager
Starting nodemanagers
hadoop@10.10.10.10's password:
```

Typing the password over and over is not something to put up with, so of course passwordless SSH has to be configured.
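The start scripts connect over SSH on port 2222 (from HADOOP_SSH_OPTS in hadoop-env.sh), even to the local host. With sshd's default StrictModes setting, keys in authorized_keys are ignored when ~/.ssh or authorized_keys is writable by group or others, which is what the chmod steps below prevent. A small check of those two paths; ssh_perms_ok is a hypothetical helper, and it does not inspect the home directory, which StrictModes also checks:

```shell
#!/bin/sh
# Hypothetical helper: check that an SSH directory ($1, default ~/.ssh) and its
# authorized_keys file exist and are not writable by group or others.
ssh_perms_ok() {
  ssh_dir="${1:-$HOME/.ssh}"
  for f in "$ssh_dir" "$ssh_dir/authorized_keys"; do
    if [ ! -e "$f" ]; then
      echo "missing: $f"
      return 1
    fi
    # find prints the path only if the group-write (020) or other-write (002) bit is set
    if [ -n "$(find "$f" -prune \( -perm -020 -o -perm -002 \) -print)" ]; then
      echo "too open: $f"
      return 1
    fi
  done
  echo "OK"
}
```

After the setup, ssh -p 2222 10.10.10.10 true should return without a password prompt.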

```text
# ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa
Generating public/private rsa key pair.
Your identification has been saved in /data/hadoop/.ssh/id_rsa
Your public key has been saved in /data/hadoop/.ssh/id_rsa.pub
The key fingerprint is:
SHA256:p/G9guDQ5ab/XyyRUf4vQSpuobZPTSrb2UhdBu4A9B4 hadoop@host-10-10-10-10
The key's randomart image is:
+---[RSA 3072]----+
| . . |
| . . o |
| . E o o |
| .o o * . |
| . oS * * + .|
| . o oB @ = ..|
| o +=.B * + .|
| o..O.+ + . |
| .+o*o+ |
+----[SHA256]-----+
[hadoop@host-10-10-10-10 sbin]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
[hadoop@host-10-10-10-10 sbin]$ chmod 700 ~/.ssh/
[hadoop@host-10-10-10-10 sbin]$ chmod 644 ~/.ssh/authorized_keys
```

Checking with jps
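On this single node, jps should list five Hadoop daemons (NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager) plus Jps itself, as in the output below. A sketch that reads jps output and prints whichever of the five is missing; missing_daemons is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: read jps output on stdin and print each expected daemon that is absent
missing_daemons() {
  names=$(awk '{print $2}')   # the second column of jps output is the main class name
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # -x requires a whole-line match, so NameNode does not match SecondaryNameNode
    printf '%s\n' "$names" | grep -qx "$d" || echo "$d"
  done
}

# Usage on the cluster host:  jps | missing_daemons   (no output means all five are up)
```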

# jps

17424 NameNode

17568 DataNode

18721 Jps

15378 ResourceManager

17846 SecondaryNameNode

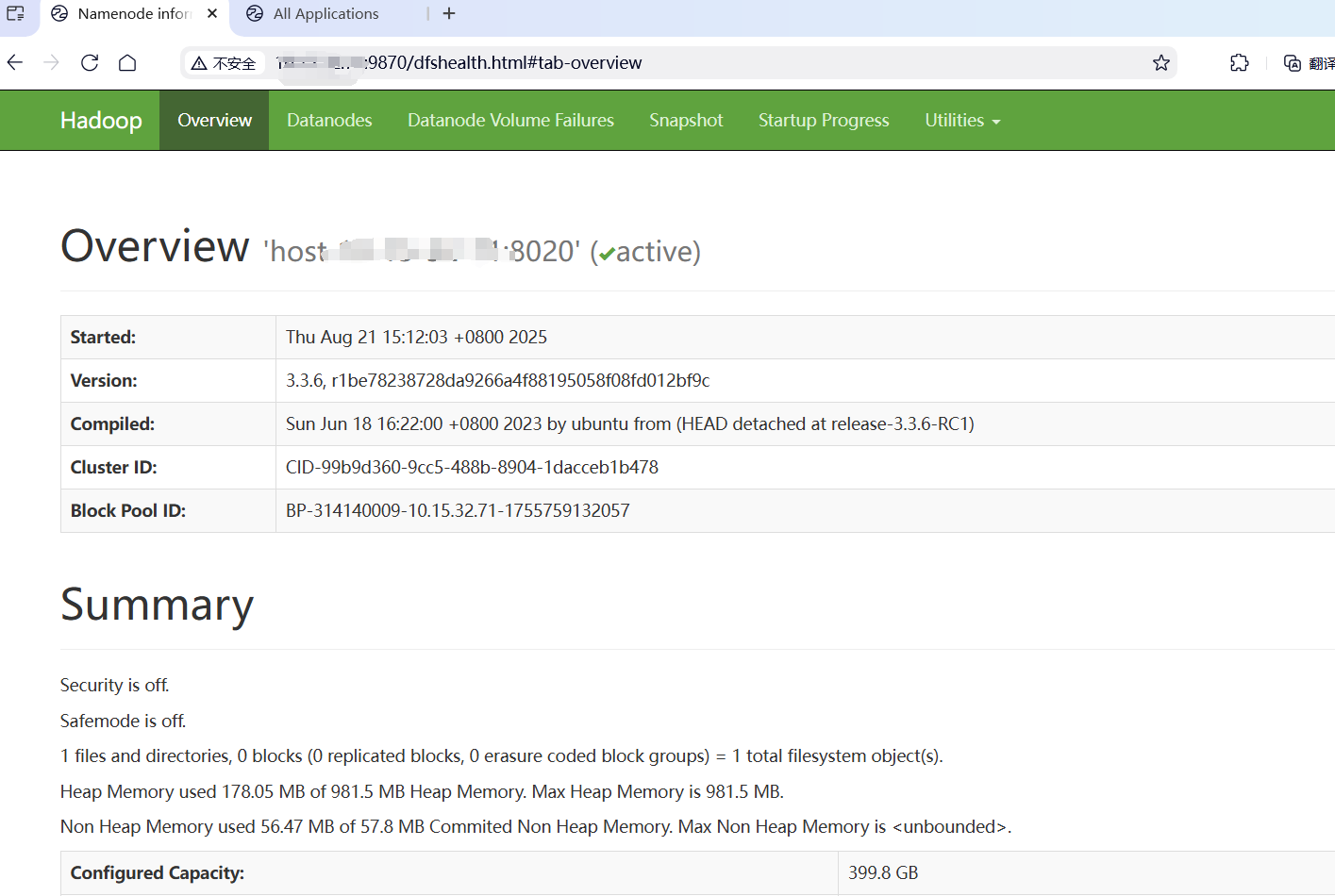

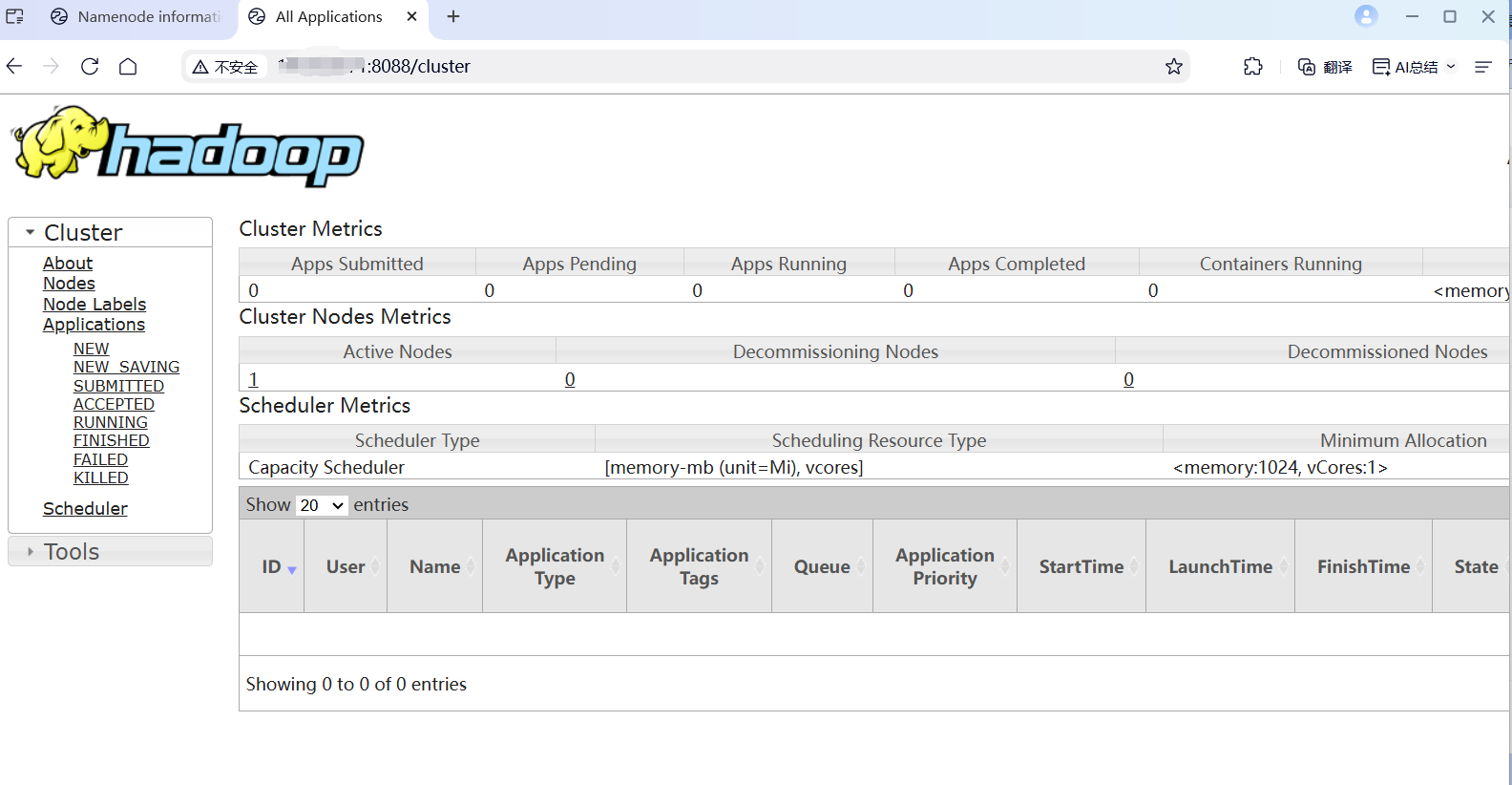

18298 NodeManager页面查看

hdfs-site.xml的dfs.namenode.http-address配置信息

hadoop的页面

yarn-site.xml的yarn.resourcemanager.webapp.address
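Both web UI addresses come straight from the XML configuration. Once Hadoop is on the PATH, hdfs getconf -confKey dfs.namenode.http-address prints the effective value. As a dependency-free alternative, here is a rough sed-based sketch that reads one property from a Hadoop site XML file. get_conf_value is hypothetical and assumes each name element is followed directly by its value element, as in the files above:

```shell
#!/bin/sh
# Hypothetical helper: print the value of property $2 from Hadoop XML config file $1.
# Rough parser: joins the file into one line, assumes <name> is directly followed by <value>,
# then trims surrounding whitespace from the value.
get_conf_value() {
  tr '\n' ' ' < "$1" |
    sed -n "s|.*<name>[[:space:]]*$2[[:space:]]*</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p" |
    sed 's/^[[:space:]]*//; s/[[:space:]]*$//'
}

# Build the web UI URLs, assuming the configuration directory used in this post
conf_dir=${HADOOP_CONF_DIR:-/data/hadoop/apps/hadoop-3.3.6/etc/hadoop}
if [ -f "$conf_dir/hdfs-site.xml" ] && [ -f "$conf_dir/yarn-site.xml" ]; then
  echo "NameNode UI: http://$(get_conf_value "$conf_dir/hdfs-site.xml" dfs.namenode.http-address)"
  echo "YARN UI:     http://$(get_conf_value "$conf_dir/yarn-site.xml" yarn.resourcemanager.webapp.address)"
fi
```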

HBase installation

hbase-site.xml

```xml
<property>
  <name>hbase.rootdir</name>
  <value>hdfs://10.10.10.10:8020/hbase</value>
</property>
<!-- Distributed mode -->
<property>
  <name>hbase.cluster.distributed</name>
  <value>true</value>
</property>
<property>
  <name>hbase.wal.provider</name>
  <value>filesystem</value>
  <!-- Optional: explicitly switches off the async WAL (asyncfs, the HBase 2.x default) -->
  <!-- Note: defaultProvider resolves to asyncfs in HBase 2.x, so it would NOT turn the async WAL off -->
</property>
<property>
  <name>hbase.wal.dir</name>
  <value>hdfs://10.10.10.10:8020/hbase-wals</value>
</property>
<!-- ZooKeeper address (when using an external ZooKeeper) -->
<property>
  <name>hbase.zookeeper.quorum</name>
  <value>10.10.10.10</value>
</property>
<property>
  <name>hbase.zookeeper.property.clientPort</name>
  <value>12181</value>
</property>
<!-- Stream capability enforcement (decide per environment): true keeps the check on,
     which suits HDFS; set false to disable it, e.g. on a local filesystem -->
<property>
  <name>hbase.unsafe.stream.capability.enforce</name>
  <value>true</value>
</property>
```

hbase-env.sh

```shell
# less hbase-env.sh |grep -v "#" | grep -v "^$"
export JAVA_HOME=/data/hadoop/apps/jdk8
export HBASE_HEAPSIZE=2G
export HBASE_HOME=/data/hadoop/apps/hbase
export HADOOP_HOME=/data/hadoop/apps/hadoop-3.3.6
export HBASE_SSH_OPTS="-o Port=2222 -o ConnectTimeout=1 -o SendEnv=HBASE_CONF_DIR"
export HBASE_LOG_DIR=${HBASE_HOME}/logs
export HBASE_MANAGES_ZK=false
```

Adding the Hadoop configuration

```shell
# Assume the Hadoop configuration is in /data/apps/hadoop-3.3.6/etc/hadoop/
# and HBase is installed in /data/apps/hbase_2.4.14/
cd /data/apps/hbase_2.4.14/conf
# Create symlinks
ln -s /data/apps/hadoop-3.3.6/etc/hadoop/core-site.xml core-site.xml
ln -s /data/apps/hadoop-3.3.6/etc/hadoop/hdfs-site.xml hdfs-site.xml
```

Error 1

```text
2025-08-21 16:47:43,112 WARN [RS-EventLoopGroup-1-2] concurrent.DefaultPromise: An exception was thrown by org.apache.hadoop.hbase.io.asyncfs.FanOutOneBlockAsyncDFSOutputHelper$4.operationComplete()
java.lang.IllegalArgumentException: object is not an instance of declaring class
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.hbase.io.asyncfs.ProtobufDecoder.<init>(ProtobufDecoder.java:64)
    at org.apache.hadoop.hbase.io.asyncfs.FanOutOneBlockAsyncDFSOutputHelper.processWriteBlockResponse(FanOutOneBlockAsyncDFSOutputHelper.java:342)
    at org.apache.hadoop.hbase.io.asyncfs.FanOutOneBlockAsyncDFSOutputHelper.access$100(FanOutOneBlockAsyncDFSOutputHelper.java:112)
    at org.apache.hadoop.hbase.io.asyncfs.FanOutOneBlockAsyncDFSOutputHelper$4.operationComplete(FanOutOneBlockAsyncDFSOutputHelper.java:424)
    at org.apache.hbase.thirdparty.io.netty.util.concurrent.DefaultPromise.notifyListener0(DefaultPromise.java:578)
    at org.apache.hbase.thirdparty.io.netty.util.concurrent.DefaultPromise.notifyListenersNow(DefaultPromise.java:552)
    at org.apache.hbase.thirdparty.io.netty.util.concurrent.DefaultPromise.notifyListeners(DefaultPromise.java:491)
    at org.apache.hbase.thirdparty.io.netty.util.concurrent.DefaultPromise.addListener(DefaultPromise.java:184)
    at org.apache.hadoop.hbase.io.asyncfs.FanOutOneBlockAsyncDFSOutputHelper.initialize(FanOutOneBlockAsyncDFSOutputHelper.java:418)
    at org.apache.hadoop.hbase.io.asyncfs.FanOutOneBlockAsyncDFSOutputHelper.access$300(FanOutOneBlockAsyncDFSOutputHelper.java:112)
```

Root-cause analysis

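This exception is raised in HBase's asynchronous WAL writer (AsyncFSWAL), specifically:

- Class: FanOutOneBlockAsyncDFSOutputHelper
- Method: the operationComplete() callback
- Cause: a reflective call failed because the object passed to Method.invoke() is not an instance of the method's declaring class (per the stack trace, the call is made from ProtobufDecoder.<init>).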

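Possible causes:

| Cause | Explanation |
|---|---|
| HBase and Hadoop versions are incompatible | The most common cause! HBase uses Hadoop internal classes (such as DFSOutputStream); with a different Hadoop version the class structure changes and the reflective call fails. |
| HBase uses the async WAL (AsyncFSWAL), but Hadoop does not support it or has a bug | The async WAL depends on Netty and on Hadoop's low-level asynchronous write interfaces, so version mismatches easily cause problems. |
| JAR conflicts | For example, the Hadoop client bundled with HBase has a different version from the cluster. |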

Solutions

✅ Option 1: Check HBase and Hadoop version compatibility (most important!)

HBase has strict requirements on the Hadoop version. Confirm the following:

| Component | Check command |
|---|---|
| HBase | hbase version or hbase --version |
| Hadoop | hadoop version |

✅ Official compatibility reference (HBase 2.4.x):

| HBase version | Recommended Hadoop version |
|---|---|
| HBase 2.4.x | Hadoop 3.1.x - 3.3.x |

🔗 Reference: HBase official documentation, configuring Hadoop
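The range in the table above can be encoded in a small check. This is a minimal sketch: it only knows the 3.1.x to 3.3.x range as stated in this post, so consult the official support matrix for patch-level caveats. Both function names are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: extract the version from `hadoop version` output on stdin
parse_hadoop_version() {
  head -n 1 | cut -d' ' -f2    # the first line looks like "Hadoop 3.3.6"
}

# Hypothetical helper: succeed if a Hadoop version is in the 3.1.x - 3.3.x range
# that the table above lists for HBase 2.4.x
hadoop_ok_for_hbase24() {
  case "$1" in
    3.1.*|3.2.*|3.3.*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage on the cluster host:
#   v=$(hadoop version | parse_hadoop_version)
#   if hadoop_ok_for_hbase24 "$v"; then echo "Hadoop $v: in range"; else echo "Hadoop $v: check the matrix"; fi
```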

After checking:

```text
# hbase version
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/data/hadoop/apps/hbase/lib/client-facing-thirdparty/slf4j-reload4j-1.7.33.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/data/hadoop/apps/hadoop-3.3.6/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Reload4jLoggerFactory]
HBase 2.4.13
Source code repository git://buildbox/home/apurtell/tmp/RM-2.4/hbase revision=90fb1ddc1df9b345f26687d5d24cedfb19621d63
Compiled by apurtell on Wed Jun 22 20:16:39 PDT 2022
From source with checksum 1247916416d2a3239678ac2d5bda617f149d9e25cfce484d6352284cf826fc4515f06ea2f06a613a76d31aa76409bf7512d84c7380b5b4cf7cdcd67ba129f768
```

```text
# hadoop version
Hadoop 3.3.6
Source code repository https://github.com/apache/hadoop.git -r 1be78238728da9266a4f88195058f08fd012bf9c
Compiled by ubuntu on 2023-06-18T08:22Z
Compiled on platform linux-x86_64
Compiled with protoc 3.7.1
From source with checksum 5652179ad55f76cb287d9c633bb53bbd
This command was run using /data/hadoop/apps/hadoop-3.3.6/share/hadoop/common/hadoop-common-3.3.6.jar
```

Both versions fall within the supported range, so this is not a version problem. Keep digging.
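The third row of the causes table, a JAR conflict, can be checked directly: compare the version suffix of the Hadoop jars shipped in HBase's lib directory (hadoop-common and similar) with the hadoop version output above. A sketch, assuming those jars sit directly under $HBASE_HOME/lib and follow the usual name-version.jar naming; bundled_hadoop_versions is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: list the distinct Hadoop versions found in jar file names in directory $1,
# e.g. hadoop-common-X.Y.Z.jar gives X.Y.Z (classifier jars such as ...-tests.jar are skipped)
bundled_hadoop_versions() {
  ls "$1" | sed -n 's/^hadoop-.*-\([0-9][0-9.]*[0-9]\)\.jar$/\1/p' | sort -u
}

# Usage: bundled_hadoop_versions "$HBASE_HOME/lib"
# Output other than the cluster's version (3.3.6 here) means HBase ships
# Hadoop client jars that differ from the cluster.
```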

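✅ Option 2: Temporarily disable the async WAL (recommended for troubleshooting)

In hbase-site.xml, turn off asynchronous WAL writes and fall back to the classic synchronous WAL (FSHLog):

```xml
<property>
  <name>hbase.wal.provider</name>
  <value>filesystem</value>
  <!-- Optional: explicitly switches off the async WAL -->
  <!-- Note: defaultProvider resolves to asyncfs in HBase 2.x, so it would NOT turn the async WAL off -->
</property>
```

The async WAL corresponds to the value asyncfs, which is the default in HBase 2.x; filesystem selects the synchronous WAL (FSHLog).

✅ This bypasses the FanOutOneBlockAsyncDFSOutputHelper problem.

After adding this setting, Option 2 solved the problem.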
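For reference, here is how hbase.wal.provider values map to WAL implementations in HBase 2.x. This is a lookup sketch based on HBase's WALFactory provider list; wal_impl_for is a hypothetical helper. It also shows why leaving the property unset still selects the async WAL:

```shell
#!/bin/sh
# Hypothetical helper: which WAL implementation an hbase.wal.provider value selects in HBase 2.x
wal_impl_for() {
  case "${1:-defaultProvider}" in      # an unset property behaves like defaultProvider
    defaultProvider|asyncfs) echo "AsyncFSWAL (asynchronous)" ;;
    filesystem)              echo "FSHLog (synchronous)" ;;
    multiwal)                echo "multiple WALs per RegionServer (RegionGroupingProvider)" ;;
    *)                       echo "other: interpreted as a custom provider class name" ;;
  esac
}

wal_impl_for filesystem   # the setting used in this post
wal_impl_for              # property not set
```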

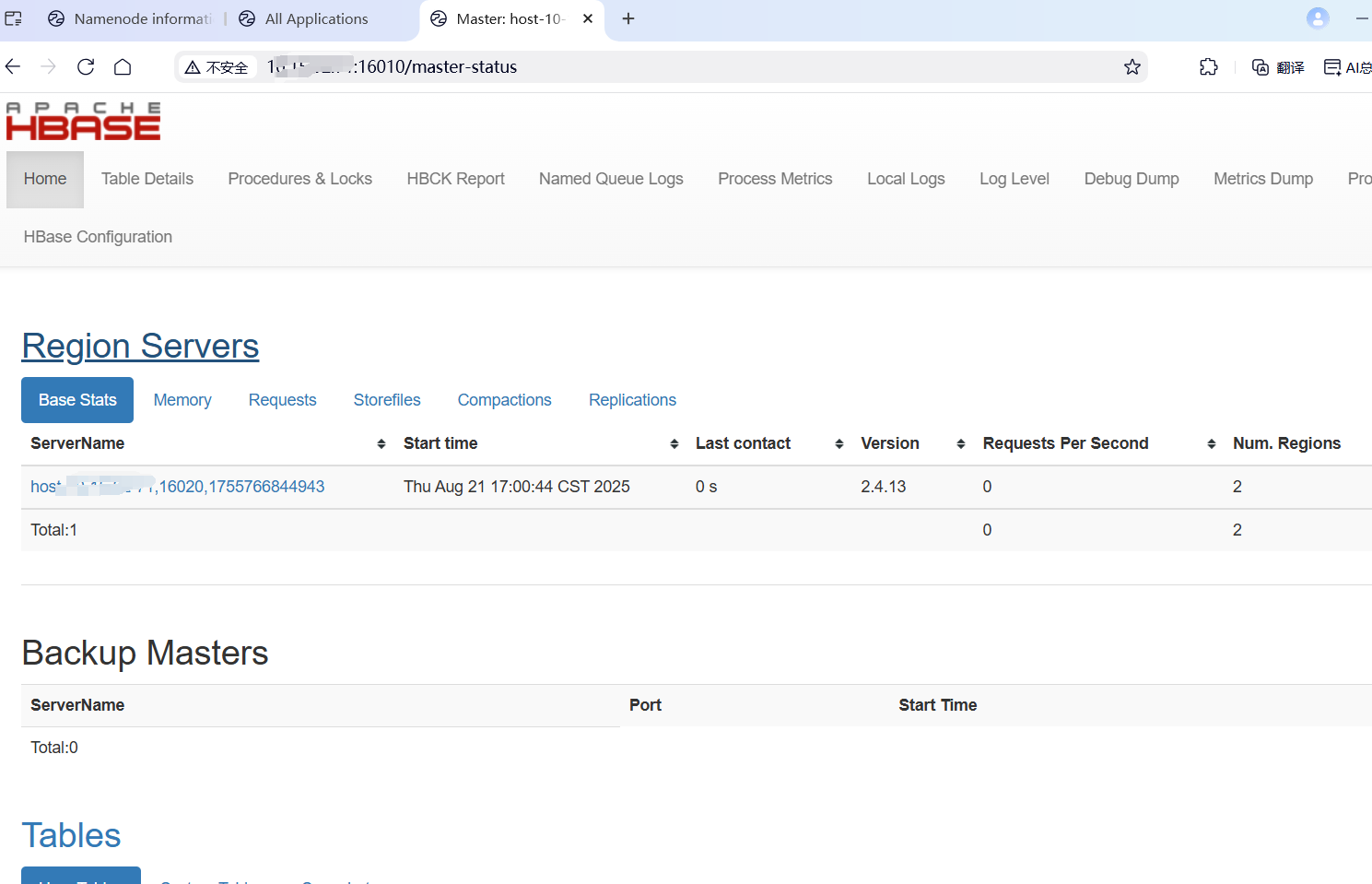

Web UI

Testing

#./hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/data/apps/hbase/lib/client-facing-thirdparty/slf4j-reload4j-1.7.33.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/data/apps/hadoop-3.3.6/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Reload4jLoggerFactory]

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

For Reference, please visit: http://hbase.apache.org/2.0/book.html#shell

Version 2.4.13, r90fb1ddc1df9b345f26687d5d24cedfb19621d63, Wed Jun 22 20:16:39 PDT 2022

Took 0.0020 seconds

hbase:001:0> list

TABLE

0 row(s)

Took 0.5458 seconds

=> []

hbase:002:0> create 'emp','a'

Created table emp

Took 0.7416 seconds

=> Hbase::Table - emp

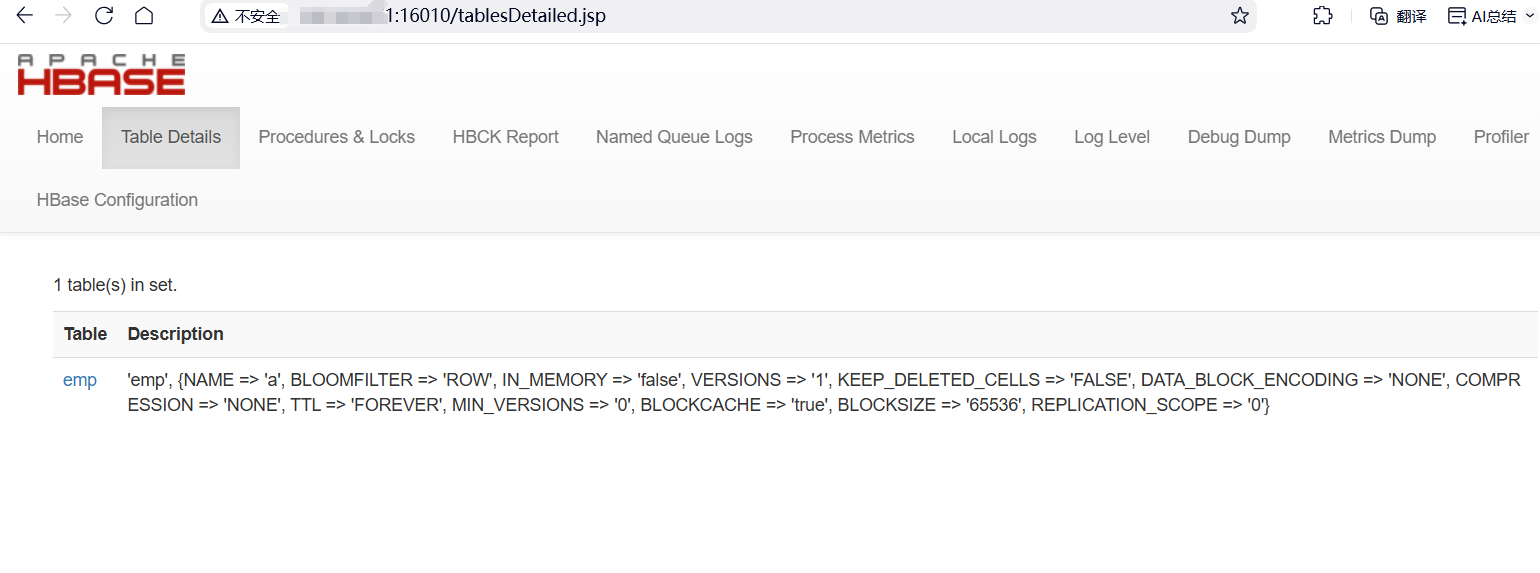

hbase:003:0> describe 'emp'

Table emp is ENABLED

emp

COLUMN FAMILIES DESCRIPTION

{NAME => 'a', BLOOMFILTER => 'ROW', IN_MEMORY => 'false', VERSIONS => '1', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', COMPRESSION => 'NON

E', TTL => 'FOREVER', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'} 1 row(s)

Quota is disabled

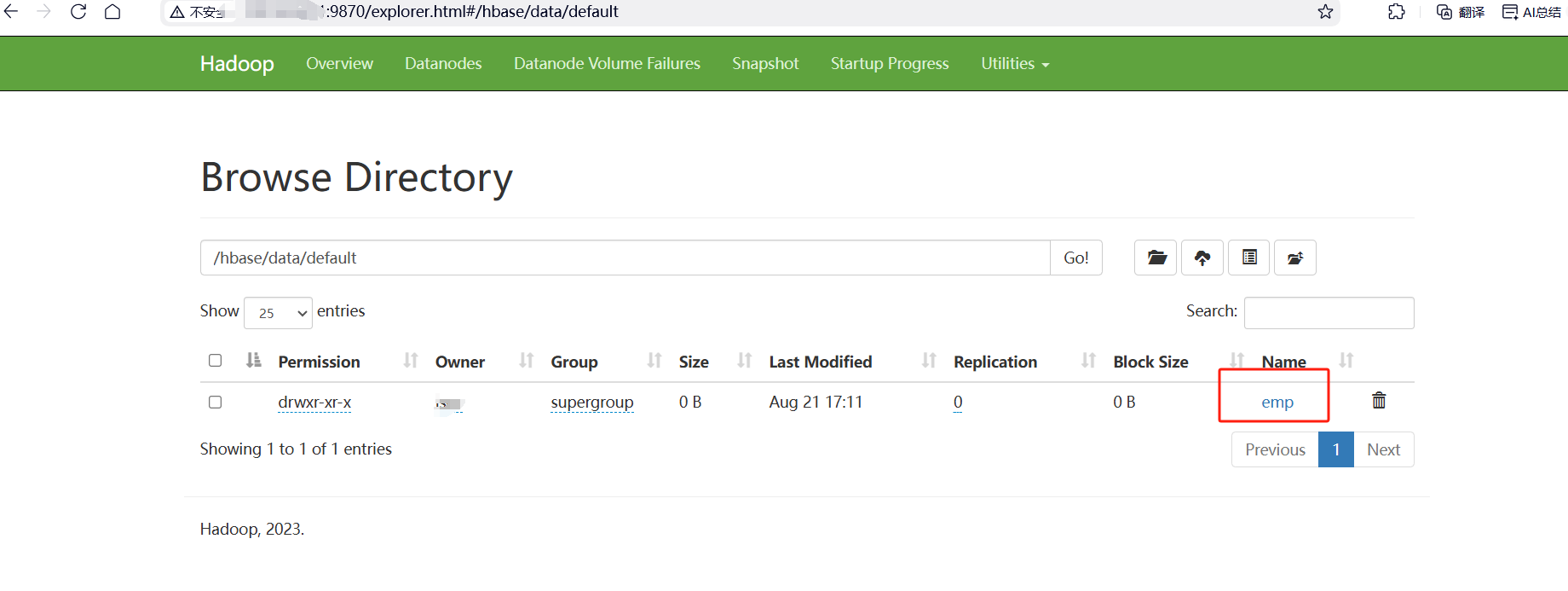

Took 0.2157 seconds 页面查看

Viewing in HDFS

```text
# hdfs dfs -ls /hbase/data/default
Found 1 items
drwxr-xr-x - hadoop supergroup 0 2025-08-21 17:11 /hbase/data/default/emp
```

Common HDFS commands

```shell
# List all tables (default namespace)
hdfs dfs -ls /hbase/data/default/
# List all regions of a table
hdfs dfs -ls /hbase/data/default/<table_name>/
# List the column-family data of a region
hdfs dfs -ls /hbase/data/default/<table_name>/<region_id>/<column_family>/
# Show HFile sizes
hdfs dfs -du -h /hbase/data/default/my_table/
# View the contents of an HFile (requires HBase's HFile tool)
hbase org.apache.hadoop.hbase.io.hfile.HFile -v -f hdfs://10.15.32.71:9000/hbase/data/default/my_table/.../123456789.hfile
# Check whether the HBase root directory exists
hdfs dfs -ls /hbase
```
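The paths in these commands follow HBase's layout under hbase.rootdir: data/NAMESPACE/TABLE, where tables created without a namespace (like emp above) land in the default namespace. A sketch that turns a table name into its HDFS directory, assuming hbase.rootdir points at /hbase as configured earlier (hbase_table_dir and the HBASE_ROOT override are hypothetical):

```shell
#!/bin/sh
# Hypothetical helper: map an HBase table name ("ns:table" or "table") to its HDFS directory
hbase_table_dir() {
  root=${HBASE_ROOT:-/hbase}        # assumed to match hbase.rootdir (hdfs://10.10.10.10:8020/hbase)
  case "$1" in
    *:*) ns=${1%%:*}; tbl=${1#*:} ;;
    *)   ns=default;  tbl=$1 ;;
  esac
  echo "$root/data/$ns/$tbl"
}

hbase_table_dir emp              # /hbase/data/default/emp
# Usage: hdfs dfs -ls "$(hbase_table_dir emp)"
```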