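YOLOv8 Traffic Light Detection

If you are not yet familiar with installing YOLOv8, running inference, or setting up and training on a custom dataset, have a look at the instructor's article and video below.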

https://blog.csdn.net/chajinglong/article/details/149105590?spm=1001.2014.3001.5501

https://www.bilibili.com/video/BV1qtHeeMEnC/?spm_id_from=333.337.search-card.all.click&vd_source=27c8ea1c143ecfe9f586177e5e7027cf

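Many students have asked whether these courses could be written up as documents and shared with more people. Here it is: the material is organized as an outline, and I hope it helps. If you find it useful, you can study it alongside the video and detailed materials linked above.

[YOLOv8 Tutorial Series: From Zero Basics to Hands-On and Advanced Practice]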

https://www.bilibili.com/cheese/play/ep1342527?query_from=0&search_id=6883789517812043464&search_query=yolov8&csource=common_hpsearch_null_null&spm_id_from=333.337.search-card.all.click

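Contents

1 Introduction

1.1 | Object Detection

1.2 | YOLOv8

1.3 | Project Goals

2 | Installing and Importing the Required Libraries

3 | Dataset

3.1 | Displaying the Original Images Before Detection

3.1.1. Displaying Some Images from the Training Set

3.1.2. Getting the Image Shape for Use in the Training Step

4 | Detecting Traffic Signs with Pretrained YOLOv8

5 | A YOLOv8-Based Traffic Sign Detection Model

5.1 | Training the Model on the Custom Traffic Sign Dataset

5.1.1. Training Steps

Run history:

Summary:

6 | Detecting Traffic Signs in Video with Pretrained YOLOv8

7 | Saving the Model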

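1 Introduction

1.1 | Object Detection

Computer vision is a field of artificial intelligence focused on teaching computers to interpret and understand visual information. One popular and powerful technique in computer vision is YOLO, short for "You Only Look Once".

YOLO is designed to identify and localize objects in images or video streams in real time. Unlike traditional approaches that rely on complex pipelines and multiple passes, YOLO treats object detection as a single regression problem. The algorithm divides the input image into a grid and predicts bounding boxes and class probabilities for the objects in each grid cell. Because class labels and their bounding boxes are predicted together in one pass, YOLO is fast and efficient enough to process images and video in real time.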
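To make the grid idea concrete, here is a minimal Python sketch of how a box center can be mapped to the grid cell that is responsible for it. The function name `responsible_cell`, the 7x7 grid, and the sample coordinates are illustrative assumptions, not values from this project. The sketch follows the grid formulation described above; YOLOv8's own anchor-free detection head is organized differently.

```python
def responsible_cell(center_x, center_y, image_width, image_height, grid_size):
    """Return the (row, col) of the grid cell that contains a box center.

    Hypothetical helper for illustration only: coordinates are in pixels and
    the image is split into grid_size x grid_size equal cells.
    """
    col = int(center_x / image_width * grid_size)
    row = int(center_y / image_height * grid_size)
    # A center lying exactly on the right or bottom edge belongs to the last cell.
    return min(row, grid_size - 1), min(col, grid_size - 1)


# A box centered at (208, 100) in a 416x416 image with an assumed 7x7 grid
print(responsible_cell(208, 100, 416, 416, 7))  # (1, 3)
```

Each cell then predicts box coordinates and class probabilities for the objects whose centers fall inside it, which is what lets the whole image be handled in one forward pass.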

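By leveraging deep convolutional neural networks, YOLO learns to recognize a wide range of objects and to localize them accurately in an image. It can detect multiple objects of different classes at once, which makes it well suited to applications that demand both real-time processing and high detection accuracy, such as autonomous driving, video surveillance, and robotics.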

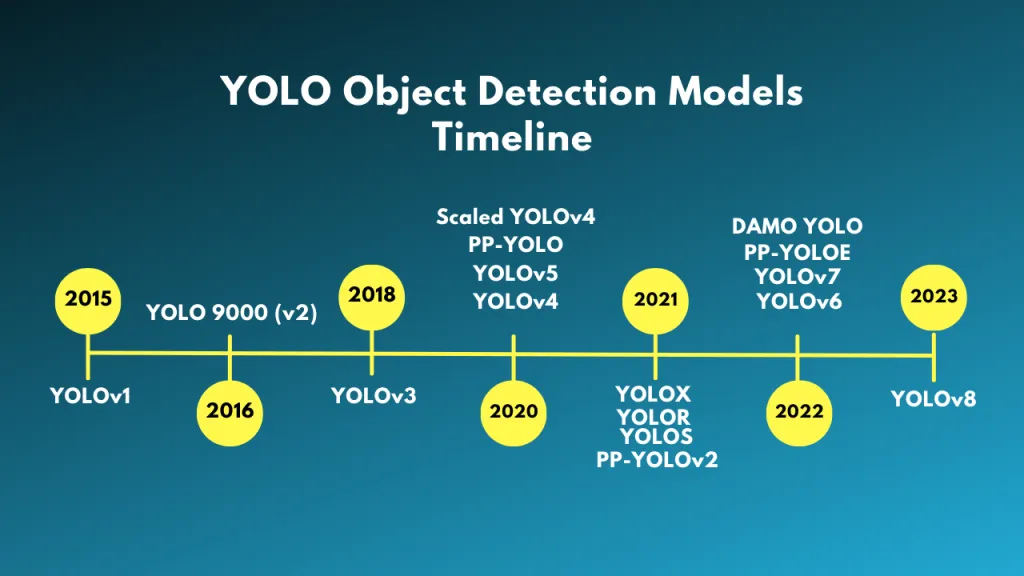

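1.2 | YOLOv8

YOLOv8 is a recent version of the YOLO model family developed by Ultralytics, and it performs well on classification, object detection, and image segmentation tasks. YOLOv8 models are fast, accurate, and easy to use, which makes them a good choice for a wide range of object detection and image segmentation tasks. They can be trained on large datasets and run on a variety of hardware, from CPUs to GPUs. The YOLOv8 detection models carry no suffix and are the default YOLOv8 models (for example, yolov8n.pt); they are pretrained on the COCO dataset. For more details, see the Ultralytics detection documentation.

1.3 | Project Goals

Sign detection with YOLOv8 has considerable potential in a number of real-world applications. For example, it can strengthen traffic management systems by detecting and recognizing a variety of traffic signs efficiently. The technology also contributes to road safety by allowing vehicles to interpret and respond accurately to the information that traffic signs convey. In addition, YOLOv8-based sign detection can support urban planning and infrastructure development by providing data on the presence and condition of signs in different areas.

In this project, my goal is to use YOLOv8 to develop a reliable sign detection solution that improves efficiency and safety in the fields that depend on accurate, efficient sign recognition.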

2 | Installing and Importing the Required Libraries

In [1]:

# Install Essential Libraries

!pip install ultralytics

Collecting ultralytics

Downloading ultralytics-8.2.58-py3-none-any.whl (802 kB)

Requirement already satisfied: numpy<2.0.0,>=1.23.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (1.23.5)

Requirement already satisfied: matplotlib>=3.3.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (3.7.2)

Requirement already satisfied: opencv-python>=4.6.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (4.8.0.76)

Requirement already satisfied: pillow>=7.1.2 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (9.5.0)

Requirement already satisfied: pyyaml>=5.3.1 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (6.0)

Requirement already satisfied: requests>=2.23.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (2.31.0)

Requirement already satisfied: scipy>=1.4.1 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (1.11.2)

Requirement already satisfied: torch>=1.8.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (2.0.0)

Requirement already satisfied: torchvision>=0.9.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (0.15.1)

Requirement already satisfied: tqdm>=4.64.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (4.66.1)

Requirement already satisfied: psutil in /opt/conda/lib/python3.10/site-packages (from ultralytics) (5.9.3)

Requirement already satisfied: py-cpuinfo in /opt/conda/lib/python3.10/site-packages (from ultralytics) (9.0.0)

Requirement already satisfied: pandas>=1.1.4 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (2.0.2)

Requirement already satisfied: seaborn>=0.11.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (0.12.2)

Collecting ultralytics-thop>=2.0.0 (from ultralytics)Downloading ultralytics_thop-2.0.0-py3-none-any.whl (25 kB)

Requirement already satisfied: contourpy>=1.0.1 in /opt/conda/lib/python3.10/site-packages (from matplotlib>=3.3.0->ultralytics) (1.1.0)

Requirement already satisfied: cycler>=0.10 in /opt/conda/lib/python3.10/site-packages (from matplotlib>=3.3.0->ultralytics) (0.11.0)

Requirement already satisfied: fonttools>=4.22.0 in /opt/conda/lib/python3.10/site-packages (from matplotlib>=3.3.0->ultralytics) (4.40.0)

Requirement already satisfied: kiwisolver>=1.0.1 in /opt/conda/lib/python3.10/site-packages (from matplotlib>=3.3.0->ultralytics) (1.4.4)

Requirement already satisfied: packaging>=20.0 in /opt/conda/lib/python3.10/site-packages (from matplotlib>=3.3.0->ultralytics) (21.3)

Requirement already satisfied: pyparsing<3.1,>=2.3.1 in /opt/conda/lib/python3.10/site-packages (from matplotlib>=3.3.0->ultralytics) (3.0.9)

Requirement already satisfied: python-dateutil>=2.7 in /opt/conda/lib/python3.10/site-packages (from matplotlib>=3.3.0->ultralytics) (2.8.2)

Requirement already satisfied: pytz>=2020.1 in /opt/conda/lib/python3.10/site-packages (from pandas>=1.1.4->ultralytics) (2023.3)

Requirement already satisfied: tzdata>=2022.1 in /opt/conda/lib/python3.10/site-packages (from pandas>=1.1.4->ultralytics) (2023.3)

Requirement already satisfied: charset-normalizer<4,>=2 in /opt/conda/lib/python3.10/site-packages (from requests>=2.23.0->ultralytics) (3.1.0)

Requirement already satisfied: idna<4,>=2.5 in /opt/conda/lib/python3.10/site-packages (from requests>=2.23.0->ultralytics) (3.4)

Requirement already satisfied: urllib3<3,>=1.21.1 in /opt/conda/lib/python3.10/site-packages (from requests>=2.23.0->ultralytics) (1.26.15)

Requirement already satisfied: certifi>=2017.4.17 in /opt/conda/lib/python3.10/site-packages (from requests>=2.23.0->ultralytics) (2023.7.22)

Requirement already satisfied: filelock in /opt/conda/lib/python3.10/site-packages (from torch>=1.8.0->ultralytics) (3.12.2)

Requirement already satisfied: typing-extensions in /opt/conda/lib/python3.10/site-packages (from torch>=1.8.0->ultralytics) (4.6.3)

Requirement already satisfied: sympy in /opt/conda/lib/python3.10/site-packages (from torch>=1.8.0->ultralytics) (1.12)

Requirement already satisfied: networkx in /opt/conda/lib/python3.10/site-packages (from torch>=1.8.0->ultralytics) (3.1)

Requirement already satisfied: jinja2 in /opt/conda/lib/python3.10/site-packages (from torch>=1.8.0->ultralytics) (3.1.2)

Requirement already satisfied: six>=1.5 in /opt/conda/lib/python3.10/site-packages (from python-dateutil>=2.7->matplotlib>=3.3.0->ultralytics) (1.16.0)

Requirement already satisfied: MarkupSafe>=2.0 in /opt/conda/lib/python3.10/site-packages (from jinja2->torch>=1.8.0->ultralytics) (2.1.3)

Requirement already satisfied: mpmath>=0.19 in /opt/conda/lib/python3.10/site-packages (from sympy->torch>=1.8.0->ultralytics) (1.3.0)

Installing collected packages: ultralytics-thop, ultralytics

Successfully installed ultralytics-8.2.58 ultralytics-thop-2.0.0

In [2]:

# Import Essential Libraries

import os

import random

import pandas as pd

from PIL import Image

import cv2

from ultralytics import YOLO

from IPython.display import Video

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

sns.set(style='darkgrid')

import pathlib

import glob

from tqdm.notebook import trange, tqdm

import warnings

warnings.filterwarnings('ignore')

In [3]:

# Configure the visual appearance of Seaborn plots

sns.set(rc={'axes.facecolor': '#eae8fa'}, style='darkgrid')3 | 数据集

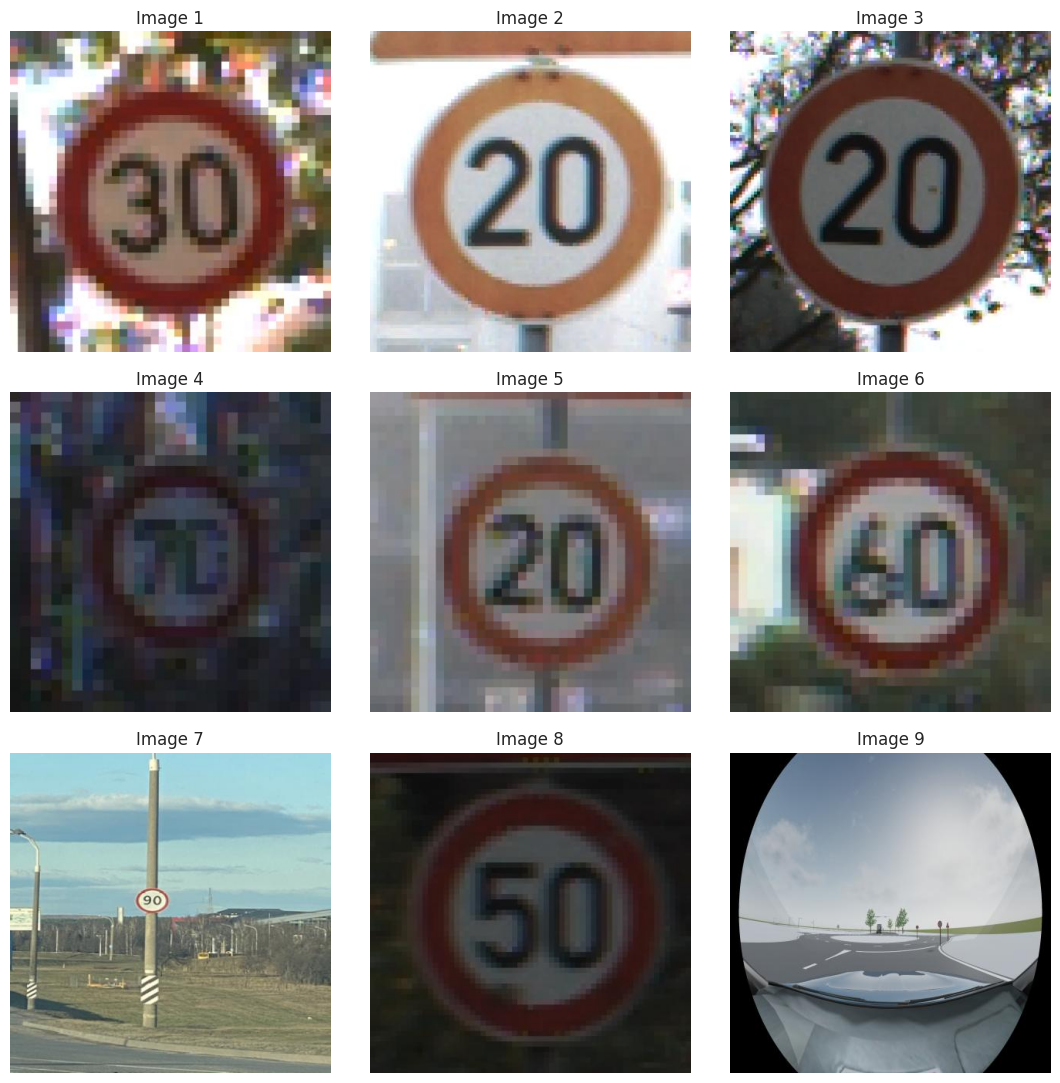

3.1 | 在检测之前显示原始图像

3.1.1. 显示一些训练集中的图像

In [4]:

Image_dir = '/kaggle/input/cardetection/car/train/images'

num_samples = 9

image_files = os.listdir(Image_dir)

# Randomly select num_samples images

rand_images = random.sample(image_files, num_samples)

# Show the selected images in a 3x3 grid

fig, axes = plt.subplots(3, 3, figsize=(11, 11))

for i in range(num_samples):
    image = rand_images[i]
    ax = axes[i // 3, i % 3]
    ax.imshow(plt.imread(os.path.join(Image_dir, image)))
    ax.set_title(f'Image {i+1}')
    ax.axis('off')

plt.tight_layout()

plt.show()

3.1.2. Getting the Image Shape for Use in the Training Step

In [5]:

# Get the size of the image

image = cv2.imread("/kaggle/input/cardetection/car/train/images/00000_00000_00012_png.rf.23f94508dba03ef2f8bd187da2ec9c26.jpg")

h, w, c = image.shape

print(f"The image has dimensions {w}x{h} and {c} channels.")

The image has dimensions 416x416 and 3 channels.

4 | Detecting Traffic Signs with Pretrained YOLOv8

In [6]:

# Use a pretrained YOLOv8n model

model = YOLO("yolov8n.pt")

# Use the model to detect objects

image = "/kaggle/input/cardetection/car/train/images/FisheyeCamera_1_00228_png.rf.e7c43ee9b922f7b2327b8a00ccf46a4c.jpg"

result_predict = model.predict(source=image, imgsz=640)

# Show results

plot = result_predict[0].plot()

plot = cv2.cvtColor(plot, cv2.COLOR_BGR2RGB)

display(Image.fromarray(plot))

Downloading https://github.com/ultralytics/assets/releases/download/v8.2.0/yolov8n.pt to 'yolov8n.pt'...

100%|██████████| 6.25M/6.25M [00:00<00:00, 78.3MB/s]

image 1/1 /kaggle/input/cardetection/car/train/images/FisheyeCamera_1_00228_png.rf.e7c43ee9b922f7b2327b8a00ccf46a4c.jpg: 640x640 (no detections), 7.9ms

Speed: 10.4ms preprocess, 7.9ms inference, 55.1ms postprocess per image at shape (1, 3, 640, 640)

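5 | A YOLOv8-Based Traffic Sign Detection Model

5.1 | Training the Model on the Custom Traffic Sign Dataset

Mean average precision (mAP) is a metric for evaluating how well an object detection algorithm identifies and localizes objects in images. It accounts for both precision and recall across classes: the average precision (AP) is computed for each class, and the mean of those values gives mAP, a single overall measure of the algorithm's performance.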
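As a rough illustration of that definition, the sketch below computes AP for one class from a ranked list of detections and then averages AP over classes. It uses the all-point interpolated area under the precision-recall curve; the function names and the toy detections are assumptions for illustration, and the evaluation code inside Ultralytics differs in details such as the interpolation grid and the IoU thresholds used to decide what counts as a true positive.

```python
def average_precision(is_true_positive, num_ground_truth):
    """AP for one class.

    is_true_positive: detections sorted by descending confidence, each marked
    True (matches a ground-truth box) or False (false positive).
    """
    tp = fp = 0
    recalls, precisions = [], []
    for hit in is_true_positive:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))

    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Area under the stepwise precision-recall curve.
    ap, previous_recall = 0.0, 0.0
    for recall, precision in zip(recalls, precisions):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap


def mean_average_precision(ap_per_class):
    """mAP is the plain mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Toy example with two hypothetical classes
ap_per_class = {
    "class_a": average_precision([True, False, True, True, False], num_ground_truth=4),
    "class_b": average_precision([True, True], num_ground_truth=2),
}
print(ap_per_class)                          # {'class_a': 0.625, 'class_b': 1.0}
print(mean_average_precision(ap_per_class))  # 0.8125
```

In the training log, mAP50 applies this idea with an IoU threshold of 0.5, while mAP50-95 averages it over IoU thresholds from 0.5 to 0.95.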

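If the mAP obtained after the final training epoch is not satisfactory, there are several ways to improve the model:

- Train for longer by increasing the number of epochs: more epochs let the model learn more patterns and may improve performance. Raise the value of the epochs argument when launching training.

- Try different parameter values: adjust the parameters below and check whether the results improve.

  - Batch size: changing the batch size affects the model's convergence and generalization. In Ultralytics YOLOv8 the argument is named batch (not --batch-size, as in earlier YOLO releases); tune it to find a good value.

  - Initial learning rate (lr0): the initial learning rate sets the step size at the start of training. Adjust the lr0 argument to control how fast the model learns.

  - Final learning rate factor (lrf): lrf sets the final learning rate as a fraction of the initial one (final learning rate = lr0 × lrf), so together with lr0 it determines the range the learning rate covers during training. Trying different lrf values can help find a better learning rate schedule.

- Choose a different optimizer: the optimizer updates the model's parameters from the computed gradients, and switching optimizers can sometimes improve convergence and the final result. Ultralytics YOLOv8 supports several optimizers, such as SGD, Adam, and RMSProp. Select one with the optimizer argument.

For more details on resuming interrupted training and other training options, see the Ultralytics YOLOv8 documentation.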
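To show how lr0 and lrf interact, here is a small sketch of a linear learning rate schedule. It assumes the linear decay I understand Ultralytics to use when cos_lr=False, and it ignores the warmup epochs; the function name `linear_lr` is illustrative. The values lr0=0.01 and lrf=0.01 are the defaults printed in the engine/trainer line of the training log. Note that with optimizer='auto' the log states that lr0 is ignored and chosen automatically.

```python
def linear_lr(epoch, epochs, lr0, lrf):
    """Learning rate at a given epoch under a linear decay from lr0 to lr0 * lrf.

    Sketch only: warmup is not modelled, and the formula is an assumption
    about the schedule rather than a copy of library code.
    """
    factor = max(1 - epoch / epochs, 0) * (1.0 - lrf) + lrf
    return lr0 * factor


for epoch in (0, 15, 30):
    print(epoch, round(linear_lr(epoch, epochs=30, lr0=0.01, lrf=0.01), 6))
# 0 0.01
# 15 0.00505
# 30 0.0001
```

A smaller lrf therefore means a steeper decay toward the end of training, while lrf=1.0 would keep the learning rate constant.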

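To optimize the model's performance, I experimented with different parameter values and optimizers. During training I explored combinations of epochs, batch sizes, initial learning rates (lr0), and dropout rates. The values used in the experiments were:

- Epochs: 10, 50, 100

- Batch sizes: 8, 16, 32, 64

- Initial learning rate (lr0): 0.001, 0.0003, 0.0001

- Dropout: 0.15, 0.25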
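The four value lists above can be expanded into concrete configurations with a few lines of Python. This is only a sketch of how such a sweep could be enumerated; the text does not say that every combination was actually trained, and the names `search_space` and `iter_configs` are illustrative. The dictionary keys follow the Ultralytics training argument names (epochs, batch, lr0, dropout), so each configuration could in principle be passed on as keyword arguments to a training call.

```python
from itertools import product

# Values taken from the lists above
search_space = {
    "epochs": [10, 50, 100],
    "batch": [8, 16, 32, 64],
    "lr0": [0.001, 0.0003, 0.0001],
    "dropout": [0.15, 0.25],
}


def iter_configs(space):
    """Yield one dict per combination of the values in the search space."""
    keys = list(space)
    for values in product(*(space[key] for key in keys)):
        yield dict(zip(keys, values))


configs = list(iter_configs(search_space))
print(len(configs))  # 72
print(configs[0])    # {'epochs': 10, 'batch': 8, 'lr0': 0.001, 'dropout': 0.15}
```

With 72 combinations before the optimizer is varied, a full grid is expensive, which is one reason to try a subset of combinations or a shorter schedule first.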

In addition, I tried different optimizers (Adam, SGD, and auto) to assess their effect on convergence and on the overall results.
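A note on the auto option: the training log in section 5.1.1 reports that optimizer=auto ignored lr0 and momentum and selected AdamW(lr=0.000526, momentum=0.9) with a weight decay of about 0.000555. The sketch below is one reconstruction of how such values can arise. The iteration threshold of 10000, the 0.002 * 5 / (4 + nc) learning rate rule, and the weight decay scaling are assumptions based on my reading of one Ultralytics release, so check them against the version you have installed. The inputs (15 classes, 3530 training images, batch size 71, 30 epochs, nbs=64, weight_decay=0.0005) come from the log.

```python
import math


def auto_optimizer(num_classes, dataset_size, batch_size, epochs,
                   nominal_batch_size=64, weight_decay=0.0005):
    """Reconstruct the optimizer name, learning rate, and scaled weight decay.

    Hypothetical helper: the constants are assumptions, not a documented API.
    """
    # Short runs get AdamW with a class-count-dependent learning rate,
    # long runs get SGD with a fixed learning rate.
    iterations = math.ceil(dataset_size / max(batch_size, nominal_batch_size)) * epochs
    if iterations > 10000:
        name, lr = "SGD", 0.01
    else:
        name, lr = "AdamW", round(0.002 * 5 / (4 + num_classes), 6)

    # Weight decay is rescaled relative to the nominal batch size.
    accumulate = max(round(nominal_batch_size / batch_size), 1)
    scaled_decay = weight_decay * batch_size * accumulate / nominal_batch_size
    return name, lr, scaled_decay


name, lr, decay = auto_optimizer(num_classes=15, dataset_size=3530,
                                 batch_size=71, epochs=30)
print(name, lr, round(decay, 10))  # AdamW 0.000526 0.0005546875
```

Under these assumptions the sketch reproduces the logged numbers, which suggests that the learning rate picked by auto depends on the number of classes rather than on lr0.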

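After these experiments and training runs, the results are presented below. The YOLOv8 model's performance is evaluated on several metrics, including mean average precision (mAP).

5.1.1. Training Steps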

In [7]:

!pip install --upgrade ultralytics ray

Requirement already satisfied: ultralytics in /opt/conda/lib/python3.10/site-packages (8.2.58)

Requirement already satisfied: ray in /opt/conda/lib/python3.10/site-packages (2.5.1)

Collecting ray

Downloading ray-2.32.0-cp310-cp310-manylinux2014_x86_64.whl (65.7 MB)

Requirement already satisfied: numpy<2.0.0,>=1.23.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (1.23.5)

Requirement already satisfied: matplotlib>=3.3.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (3.7.2)

Requirement already satisfied: opencv-python>=4.6.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (4.8.0.76)

Requirement already satisfied: pillow>=7.1.2 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (9.5.0)

Requirement already satisfied: pyyaml>=5.3.1 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (6.0)

Requirement already satisfied: requests>=2.23.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (2.31.0)

Requirement already satisfied: scipy>=1.4.1 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (1.11.2)

Requirement already satisfied: torch>=1.8.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (2.0.0)

Requirement already satisfied: torchvision>=0.9.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (0.15.1)

Requirement already satisfied: tqdm>=4.64.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (4.66.1)

Requirement already satisfied: psutil in /opt/conda/lib/python3.10/site-packages (from ultralytics) (5.9.3)

Requirement already satisfied: py-cpuinfo in /opt/conda/lib/python3.10/site-packages (from ultralytics) (9.0.0)

Requirement already satisfied: pandas>=1.1.4 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (2.0.2)

Requirement already satisfied: seaborn>=0.11.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (0.12.2)

Requirement already satisfied: ultralytics-thop>=2.0.0 in /opt/conda/lib/python3.10/site-packages (from ultralytics) (2.0.0)

Requirement already satisfied: click>=7.0 in /opt/conda/lib/python3.10/site-packages (from ray) (8.1.7)

Requirement already satisfied: filelock in /opt/conda/lib/python3.10/site-packages (from ray) (3.12.2)

Requirement already satisfied: jsonschema in /opt/conda/lib/python3.10/site-packages (from ray) (4.17.3)

Requirement already satisfied: msgpack<2.0.0,>=1.0.0 in /opt/conda/lib/python3.10/site-packages (from ray) (1.0.5)

Requirement already satisfied: packaging in /opt/conda/lib/python3.10/site-packages (from ray) (21.3)

Requirement already satisfied: protobuf!=3.19.5,>=3.15.3 in /opt/conda/lib/python3.10/site-packages (from ray) (3.20.3)

Requirement already satisfied: aiosignal in /opt/conda/lib/python3.10/site-packages (from ray) (1.3.1)

Requirement already satisfied: frozenlist in /opt/conda/lib/python3.10/site-packages (from ray) (1.3.3)

Requirement already satisfied: contourpy>=1.0.1 in /opt/conda/lib/python3.10/site-packages (from matplotlib>=3.3.0->ultralytics) (1.1.0)

Requirement already satisfied: cycler>=0.10 in /opt/conda/lib/python3.10/site-packages (from matplotlib>=3.3.0->ultralytics) (0.11.0)

Requirement already satisfied: fonttools>=4.22.0 in /opt/conda/lib/python3.10/site-packages (from matplotlib>=3.3.0->ultralytics) (4.40.0)

Requirement already satisfied: kiwisolver>=1.0.1 in /opt/conda/lib/python3.10/site-packages (from matplotlib>=3.3.0->ultralytics) (1.4.4)

Requirement already satisfied: pyparsing<3.1,>=2.3.1 in /opt/conda/lib/python3.10/site-packages (from matplotlib>=3.3.0->ultralytics) (3.0.9)

Requirement already satisfied: python-dateutil>=2.7 in /opt/conda/lib/python3.10/site-packages (from matplotlib>=3.3.0->ultralytics) (2.8.2)

Requirement already satisfied: pytz>=2020.1 in /opt/conda/lib/python3.10/site-packages (from pandas>=1.1.4->ultralytics) (2023.3)

Requirement already satisfied: tzdata>=2022.1 in /opt/conda/lib/python3.10/site-packages (from pandas>=1.1.4->ultralytics) (2023.3)

Requirement already satisfied: charset-normalizer<4,>=2 in /opt/conda/lib/python3.10/site-packages (from requests>=2.23.0->ultralytics) (3.1.0)

Requirement already satisfied: idna<4,>=2.5 in /opt/conda/lib/python3.10/site-packages (from requests>=2.23.0->ultralytics) (3.4)

Requirement already satisfied: urllib3<3,>=1.21.1 in /opt/conda/lib/python3.10/site-packages (from requests>=2.23.0->ultralytics) (1.26.15)

Requirement already satisfied: certifi>=2017.4.17 in /opt/conda/lib/python3.10/site-packages (from requests>=2.23.0->ultralytics) (2023.7.22)

Requirement already satisfied: typing-extensions in /opt/conda/lib/python3.10/site-packages (from torch>=1.8.0->ultralytics) (4.6.3)

Requirement already satisfied: sympy in /opt/conda/lib/python3.10/site-packages (from torch>=1.8.0->ultralytics) (1.12)

Requirement already satisfied: networkx in /opt/conda/lib/python3.10/site-packages (from torch>=1.8.0->ultralytics) (3.1)

Requirement already satisfied: jinja2 in /opt/conda/lib/python3.10/site-packages (from torch>=1.8.0->ultralytics) (3.1.2)

Requirement already satisfied: attrs>=17.4.0 in /opt/conda/lib/python3.10/site-packages (from jsonschema->ray) (23.1.0)

Requirement already satisfied: pyrsistent!=0.17.0,!=0.17.1,!=0.17.2,>=0.14.0 in /opt/conda/lib/python3.10/site-packages (from jsonschema->ray) (0.19.3)

Requirement already satisfied: six>=1.5 in /opt/conda/lib/python3.10/site-packages (from python-dateutil>=2.7->matplotlib>=3.3.0->ultralytics) (1.16.0)

Requirement already satisfied: MarkupSafe>=2.0 in /opt/conda/lib/python3.10/site-packages (from jinja2->torch>=1.8.0->ultralytics) (2.1.3)

Requirement already satisfied: mpmath>=0.19 in /opt/conda/lib/python3.10/site-packages (from sympy->torch>=1.8.0->ultralytics) (1.3.0)

Installing collected packages: ray

Attempting uninstall: ray

Found existing installation: ray 2.5.1

Uninstalling ray-2.5.1:

Successfully uninstalled ray-2.5.1

Successfully installed ray-2.32.0

In [8]:

# Load the pretrained YOLOv8n weights as the starting point

Final_model = YOLO('yolov8n.pt')

# Train the final model (batch=-1 lets AutoBatch pick the batch size)

Result_Final_model = Final_model.train(data="/kaggle/input/cardetection/car/data.yaml", epochs=30, batch=-1, optimizer='auto')

Ultralytics YOLOv8.2.58 🚀 Python-3.10.12 torch-2.0.0 CUDA:0 (Tesla T4, 15095MiB)

engine/trainer: task=detect, mode=train, model=yolov8n.pt, data=/kaggle/input/cardetection/car/data.yaml, epochs=30, time=None, patience=100, batch=-1, imgsz=640, save=True, save_period=-1, cache=False, device=None, workers=8, project=None, name=train, exist_ok=False, pretrained=True, optimizer=auto, verbose=True, seed=0, deterministic=True, single_cls=False, rect=False, cos_lr=False, close_mosaic=10, resume=False, amp=True, fraction=1.0, profile=False, freeze=None, multi_scale=False, overlap_mask=True, mask_ratio=4, dropout=0.0, val=True, split=val, save_json=False, save_hybrid=False, conf=None, iou=0.7, max_det=300, half=False, dnn=False, plots=True, source=None, vid_stride=1, stream_buffer=False, visualize=False, augment=False, agnostic_nms=False, classes=None, retina_masks=False, embed=None, show=False, save_frames=False, save_txt=False, save_conf=False, save_crop=False, show_labels=True, show_conf=True, show_boxes=True, line_width=None, format=torchscript, keras=False, optimize=False, int8=False, dynamic=False, simplify=False, opset=None, workspace=4, nms=False, lr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=7.5, cls=0.5, dfl=1.5, pose=12.0, kobj=1.0, label_smoothing=0.0, nbs=64, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, bgr=0.0, mosaic=1.0, mixup=0.0, copy_paste=0.0, auto_augment=randaugment, erasing=0.4, crop_fraction=1.0, cfg=None, tracker=botsort.yaml, save_dir=runs/detect/train

Downloading https://ultralytics.com/assets/Arial.ttf to '/root/.config/Ultralytics/Arial.ttf'...

100%|██████████| 755k/755k [00:00<00:00, 14.3MB/s]

2024-07-16 13:49:53,480 INFO util.py:124 -- Outdated packages:ipywidgets==7.7.1 found, needs ipywidgets>=8

Run `pip install -U ipywidgets`, then restart the notebook server for rich notebook output.

2024-07-16 13:49:53,951 INFO util.py:124 -- Outdated packages:ipywidgets==7.7.1 found, needs ipywidgets>=8

Run `pip install -U ipywidgets`, then restart the notebook server for rich notebook output.

Overriding model.yaml nc=80 with nc=15

|  | from | n | params | module | arguments |
|---|---|---|---|---|---|
| 0 | -1 | 1 | 464 | ultralytics.nn.modules.conv.Conv | [3, 16, 3, 2] |
| 1 | -1 | 1 | 4672 | ultralytics.nn.modules.conv.Conv | [16, 32, 3, 2] |
| 2 | -1 | 1 | 7360 | ultralytics.nn.modules.block.C2f | [32, 32, 1, True] |
| 3 | -1 | 1 | 18560 | ultralytics.nn.modules.conv.Conv | [32, 64, 3, 2] |
| 4 | -1 | 2 | 49664 | ultralytics.nn.modules.block.C2f | [64, 64, 2, True] |
| 5 | -1 | 1 | 73984 | ultralytics.nn.modules.conv.Conv | [64, 128, 3, 2] |
| 6 | -1 | 2 | 197632 | ultralytics.nn.modules.block.C2f | [128, 128, 2, True] |
| 7 | -1 | 1 | 295424 | ultralytics.nn.modules.conv.Conv | [128, 256, 3, 2] |
| 8 | -1 | 1 | 460288 | ultralytics.nn.modules.block.C2f | [256, 256, 1, True] |
| 9 | -1 | 1 | 164608 | ultralytics.nn.modules.block.SPPF | [256, 256, 5] |
| 10 | -1 | 1 | 0 | torch.nn.modules.upsampling.Upsample | [None, 2, 'nearest'] |
| 11 | [-1, 6] | 1 | 0 | ultralytics.nn.modules.conv.Concat | [1] |
| 12 | -1 | 1 | 148224 | ultralytics.nn.modules.block.C2f | [384, 128, 1] |
| 13 | -1 | 1 | 0 | torch.nn.modules.upsampling.Upsample | [None, 2, 'nearest'] |
| 14 | [-1, 4] | 1 | 0 | ultralytics.nn.modules.conv.Concat | [1] |
| 15 | -1 | 1 | 37248 | ultralytics.nn.modules.block.C2f | [192, 64, 1] |
| 16 | -1 | 1 | 36992 | ultralytics.nn.modules.conv.Conv | [64, 64, 3, 2] |
| 17 | [-1, 12] | 1 | 0 | ultralytics.nn.modules.conv.Concat | [1] |
| 18 | -1 | 1 | 123648 | ultralytics.nn.modules.block.C2f | [192, 128, 1] |
| 19 | -1 | 1 | 147712 | ultralytics.nn.modules.conv.Conv | [128, 128, 3, 2] |
| 20 | [-1, 9] | 1 | 0 | ultralytics.nn.modules.conv.Concat | [1] |
| 21 | -1 | 1 | 493056 | ultralytics.nn.modules.block.C2f | [384, 256, 1] |
| 22 | [15, 18, 21] | 1 | 754237 | ultralytics.nn.modules.head.Detect | [15, [64, 128, 256]] |

Model summary: 225 layers, 3,013,773 parameters, 3,013,757 gradients, 8.2 GFLOPs

Transferred 319/355 items from pretrained weights

TensorBoard: Start with 'tensorboard --logdir runs/detect/train', view at http://localhost:6006/

wandb: Logging into wandb.ai. (Learn how to deploy a W&B server locally: https://wandb.me/wandb-server)

wandb: You can find your API key in your browser here: https://wandb.ai/authorize

wandb: Paste an API key from your profile and hit enter, or press ctrl+c to quit:

wandb: Appending key for api.wandb.ai to your netrc file: /root/.netrc

wandb version 0.17.4 is available! To upgrade, please run: $ pip install wandb --upgrade

Tracking run with wandb version 0.15.9

Run data is saved locally in /kaggle/working/wandb/run-20240716_135017-1k0krjw8

Syncing run to Weights & Biases (docs)

View project at https://wandb.ai/karimi-parisa1371/YOLOv8

View run at https://wandb.ai/karimi-parisa1371/YOLOv8/runs/1k0krjw8

Freezing layer 'model.22.dfl.conv.weight'

AMP: running Automatic Mixed Precision (AMP) checks with YOLOv8n...

AMP: checks passed ✅

AutoBatch: Computing optimal batch size for imgsz=640 at 60.0% CUDA memory utilization.

AutoBatch: CUDA:0 (Tesla T4) 14.74G total, 0.23G reserved, 0.07G allocated, 14.44G free

| Params | GFLOPs | GPU_mem (GB) | forward (ms) | backward (ms) | input | output |
|---|---|---|---|---|---|---|
| 3013773 | 8.209 | 0.319 | 26.08 | 84.57 | (1, 3, 640, 640) | list |
| 3013773 | 16.42 | 0.308 | 19.02 | 47.82 | (2, 3, 640, 640) | list |
| 3013773 | 32.84 | 0.543 | 17.84 | 52.4 | (4, 3, 640, 640) | list |
| 3013773 | 65.67 | 1.072 | 24.29 | 56.11 | (8, 3, 640, 640) | list |
| 3013773 | 131.3 | 2.032 | 38.84 | 66.61 | (16, 3, 640, 640) | list |

AutoBatch: Using batch-size 71 for CUDA:0 8.87G/14.74G (60%) ✅

train: Scanning /kaggle/input/cardetection/car/train/labels... 3530 images, 3 backgrounds, 0 corrupt: 100%|██████████| 3530/3530 [00:15<00:00, 234.89it/s]

train: WARNING ⚠️ Cache directory /kaggle/input/cardetection/car/train is not writeable, cache not saved.

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

val: Scanning /kaggle/input/cardetection/car/valid/labels... 801 images, 0 backgrounds, 0 corrupt: 100%|██████████| 801/801 [00:03<00:00, 225.86it/s]

val: WARNING ⚠️ Cache directory /kaggle/input/cardetection/car/valid is not writeable, cache not saved.

Plotting labels to runs/detect/train/labels.jpg...

optimizer: 'optimizer=auto' found, ignoring 'lr0=0.01' and 'momentum=0.937' and determining best 'optimizer', 'lr0' and 'momentum' automatically...

optimizer: AdamW(lr=0.000526, momentum=0.9) with parameter groups 57 weight(decay=0.0), 64 weight(decay=0.0005546875000000001), 63 bias(decay=0.0)

TensorBoard: model graph visualization added ✅

Image sizes 640 train, 640 val

Using 2 dataloader workers

Logging results to runs/detect/train

Starting training for 30 epochs...

The validation columns (P, R, mAP50, mAP50-95) are for class all: 801 images, 944 instances.

| Epoch | GPU_mem | box_loss | cls_loss | dfl_loss | Instances | Size | P | R | mAP50 | mAP50-95 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1/30 | 9.53G | 0.9003 | 3.816 | 1.231 | 125 | 640 | 0.0462 | 0.762 | 0.106 | 0.0891 |
| 2/30 | 9.41G | 0.7314 | 2.68 | 1.092 | 106 | 640 | 0.267 | 0.42 | 0.277 | 0.202 |
| 3/30 | 9.43G | 0.7322 | 2.254 | 1.071 | 94 | 640 | 0.456 | 0.496 | 0.4 | 0.324 |
| 4/30 | 9.44G | 0.7114 | 1.914 | 1.067 | 121 | 640 | 0.563 | 0.562 | 0.61 | 0.497 |
| 5/30 | 9.44G | 0.6876 | 1.604 | 1.04 | 109 | 640 | 0.811 | 0.665 | 0.768 | 0.629 |
| 6/30 | 9.41G | 0.6686 | 1.391 | 1.037 | 114 | 640 | 0.803 | 0.69 | 0.787 | 0.667 |
| 7/30 | 9.44G | 0.6538 | 1.275 | 1.022 | 110 | 640 | 0.685 | 0.639 | 0.728 | 0.616 |
| 8/30 | 9.44G | 0.6359 | 1.136 | 1.011 | 113 | 640 | 0.842 | 0.738 | 0.835 | 0.707 |
| 9/30 | 9.44G | 0.6243 | 1.051 | 1.004 | 111 | 640 | 0.884 | 0.769 | 0.874 | 0.733 |
| 10/30 | 9.43G | 0.6375 | 1.008 | 1.009 | 142 | 640 | 0.871 | 0.738 | 0.854 | 0.72 |
| 11/30 | 9.44G | 0.6091 | 0.9358 | 0.9947 | 122 | 640 | 0.886 | 0.839 | 0.9 | 0.766 |
| 12/30 | 9.44G | 0.6083 | 0.9206 | 1.001 | 109 | 640 | 0.924 | 0.791 | 0.893 | 0.753 |
| 13/30 | 9.44G | 0.5964 | 0.8514 | 0.9823 | 115 | 640 | 0.912 | 0.817 | 0.905 | 0.758 |
| 14/30 | 9.41G | 0.5914 | 0.8261 | 0.9857 | 120 | 640 | 0.929 | 0.843 | 0.921 | 0.785 |
| 15/30 | 9.43G | 0.5747 | 0.7861 | 0.9822 | 113 | 640 | 0.933 | 0.842 | 0.927 | 0.795 |
| 16/30 | 9.41G | 0.5731 | 0.7841 | 0.9787 | 116 | 640 | 0.939 | 0.848 | 0.931 | 0.788 |
| 17/30 | 9.41G | 0.5672 | 0.759 | 0.9772 | 109 | 640 | 0.924 | 0.86 | 0.937 | 0.795 |
| 18/30 | 9.44G | 0.5655 | 0.7365 | 0.9752 | 117 | 640 | 0.938 | 0.847 | 0.929 | 0.794 |
| 19/30 | 9.43G | 0.5632 | 0.7142 | 0.9728 | 126 | 640 | 0.96 | 0.834 | 0.938 | 0.8 |
| 20/30 | 9.43G | 0.5526 | 0.6856 | 0.9676 | 108 | 640 | 0.953 | 0.853 | 0.94 | 0.802 |

Closing dataloader mosaic

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

| Epoch | GPU_mem | box_loss | cls_loss | dfl_loss | Instances | Size | P | R | mAP50 | mAP50-95 |
|---|---|---|---|---|---|---|---|---|---|---|
| 21/30 | 9.42G | 0.5574 | 0.5288 | 0.9404 | 62 | 640 | 0.963 | 0.818 | 0.933 | 0.792 |
| 22/30 | 9.42G | 0.5494 | 0.5011 | 0.936 | 69 | 640 | 0.926 | 0.881 | 0.944 | 0.809 |
| 23/30 | 9.42G | 0.5365 | 0.4774 | 0.9326 | 60 | 640 | 0.926 | 0.873 | 0.944 | 0.809 |
| 24/30 | 9.42G | 0.529 | 0.4575 | 0.9216 | 67 | 640 | 0.944 | 0.871 | 0.948 | 0.811 |
| 25/30 | 9.41G | 0.5257 | 0.4379 | 0.9295 | 64 | 640 | 0.946 | 0.885 | 0.953 | 0.821 |
| 26/30 |  |  |  |  |  |  | 0.941 | 0.886 | 0.952 | 0.82 |
| 27/30 | 9.42G | 0.5022 | 0.4093 | 0.9085 | 66 | 640 | 0.945 | 0.891 | 0.956 | 0.826 |
| 28/30 | 9.42G | 0.5079 | 0.3928 | 0.9126 | 67 | 640 | 0.929 | 0.904 | 0.955 | 0.824 |
| 29/30 | 9.42G | 0.4945 | 0.3838 | 0.9014 | 65 | 640 | 0.939 | 0.909 | 0.958 | 0.826 |
| 30/30 | 9.42G | 0.4902 | 0.3806 | 0.9019 | 68 | 640 | 0.943 | 0.905 | 0.957 | 0.83 |

30 epochs completed in 0.358 hours.

Optimizer stripped from runs/detect/train/weights/last.pt, 6.3MB

Optimizer stripped from runs/detect/train/weights/best.pt, 6.3MB

Validating runs/detect/train/weights/best.pt...

Ultralytics YOLOv8.2.58 🚀 Python-3.10.12 torch-2.0.0 CUDA:0 (Tesla T4, 15095MiB)

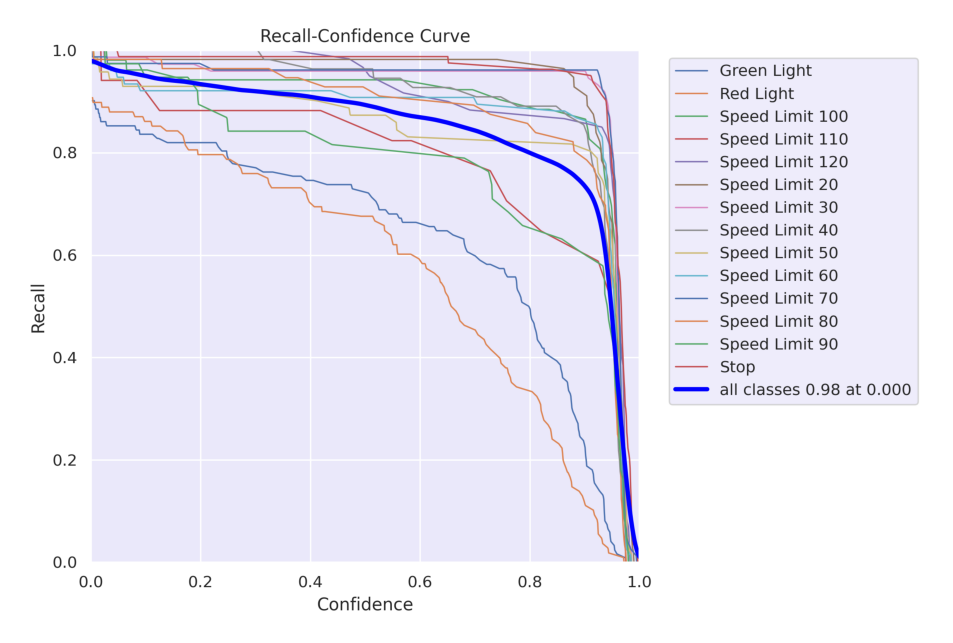

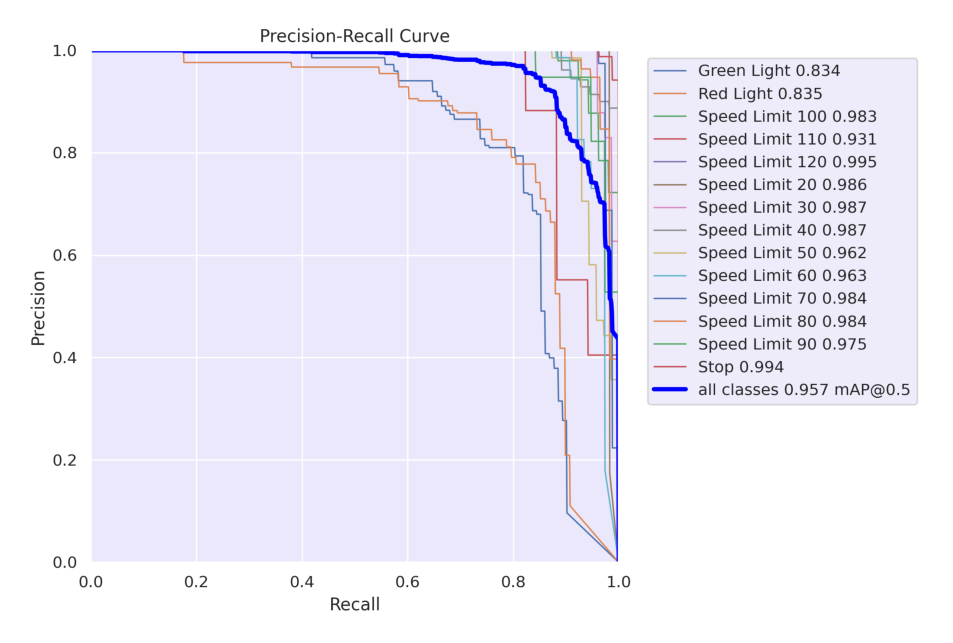

Model summary (fused): 168 layers, 3,008,573 parameters, 0 gradients, 8.1 GFLOPs

| Class | Images | Instances | P | R | mAP50 | mAP50-95 |
| --- | --- | --- | --- | --- | --- | --- |
| all | 801 | 944 | 0.943 | 0.906 | 0.957 | 0.83 |
| Green Light | 87 | 122 | 0.826 | 0.741 | 0.834 | 0.507 |
| Red Light | 74 | 108 | 0.881 | 0.694 | 0.835 | 0.536 |
| Speed Limit 100 | 52 | 52 | 0.92 | 0.942 | 0.983 | 0.891 |
| Speed Limit 110 | 17 | 17 | 0.882 | 0.882 | 0.931 | 0.852 |
| Speed Limit 120 | 60 | 60 | 1 | 0.991 | 0.995 | 0.919 |
| Speed Limit 20 | 56 | 56 | 0.987 | 0.982 | 0.986 | 0.876 |
| Speed Limit 30 | 71 | 74 | 0.951 | 0.959 | 0.987 | 0.914 |
| Speed Limit 40 | 53 | 55 | 0.901 | 0.964 | 0.987 | 0.887 |
| Speed Limit 50 | 68 | 71 | 0.985 | 0.9 | 0.962 | 0.866 |
| Speed Limit 60 | 76 | 76 | 0.945 | 0.921 | 0.963 | 0.883 |
| Speed Limit 70 | 78 | 78 | 0.987 | 0.962 | 0.984 | 0.894 |
| Speed Limit 80 | 56 | 56 | 0.963 | 0.931 | 0.984 | 0.869 |
| Speed Limit 90 | 38 | 38 | 1 | 0.827 | 0.975 | 0.787 |
| Stop | 81 | 81 | 0.973 | 0.988 | 0.994 | 0.933 |

Speed: 1.5ms preprocess, 2.4ms inference, 0.0ms loss, 0.9ms postprocess per image

Results saved to runs/detect/train

Waiting for W&B process to finish... (success).

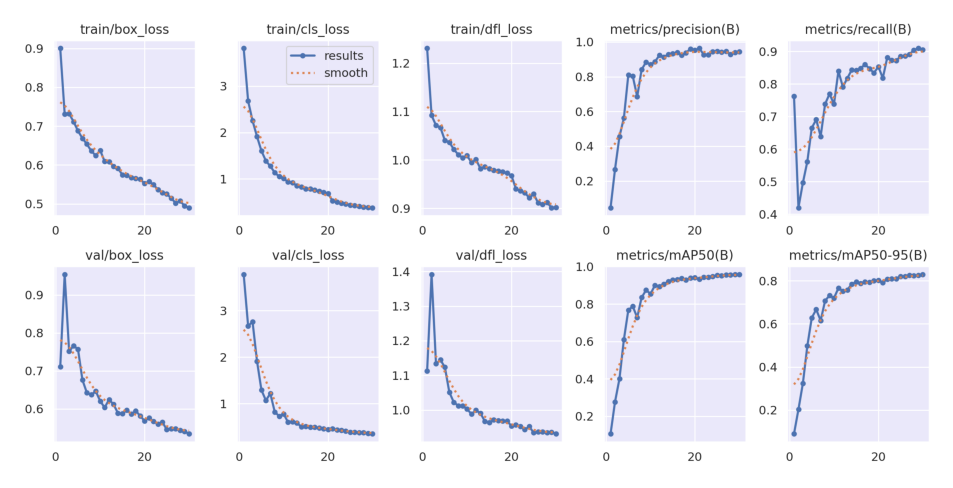
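The per-class validation results above show that the two traffic-light classes have noticeably lower recall than the sign classes. One way to rank classes by a single number is the F1 score, the harmonic mean of precision and recall. The following is a minimal sketch, not part of the original notebook; the precision and recall values are copied from four rows of the table, and the helper name `f1_score` is only illustrative.

```python
# Precision and recall for a few classes, copied from the validation table above.
per_class = {
    "Green Light": (0.826, 0.741),
    "Red Light": (0.881, 0.694),
    "Speed Limit 90": (1.0, 0.827),
    "Stop": (0.973, 0.988),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


f1_by_class = {name: f1_score(p, r) for name, (p, r) in per_class.items()}

# Print the classes from weakest to strongest.
for name, f1 in sorted(f1_by_class.items(), key=lambda item: item[1]):
    print(f"{name:<15} F1 = {f1:.3f}")
```

On these four rows, Red Light and Green Light come out lowest, which suggests that collecting more traffic-light examples is a sensible first step if the model needs improving.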

Run history:

| lr/pg0 | ▃▆███▇▇▇▆▆▆▆▅▅▅▅▄▄▄▄▃▃▃▃▂▂▂▂▁▁ |

| lr/pg1 | ▃▆███▇▇▇▆▆▆▆▅▅▅▅▄▄▄▄▃▃▃▃▂▂▂▂▁▁ |

| lr/pg2 | ▃▆███▇▇▇▆▆▆▆▅▅▅▅▄▄▄▄▃▃▃▃▂▂▂▂▁▁ |

| metrics/mAP50(B) | ▁▂▃▅▆▇▆▇▇▇█▇██████████████████ |

| metrics/mAP50-95(B) | ▁▂▃▅▆▆▆▇▇▇▇▇▇█████████████████ |

| metrics/precision(B) | ▁▃▄▅▇▇▆▇▇▇▇███████████████████ |

| metrics/recall(B) | ▆▁▂▃▅▅▄▆▆▆▇▆▇▇▇▇▇▇▇▇▇█▇▇██████ |

| model/GFLOPs | ▁ |

| model/parameters | ▁ |

| model/speed_PyTorch(ms) | ▁ |

| train/box_loss | █▅▅▅▄▄▄▃▃▄▃▃▃▃▂▂▂▂▂▂▂▂▂▂▂▁▁▁▁▁ |

| train/cls_loss | █▆▅▄▃▃▃▃▂▂▂▂▂▂▂▂▂▂▂▂▁▁▁▁▁▁▁▁▁▁ |

| train/dfl_loss | █▅▅▅▄▄▄▃▃▃▃▃▃▃▃▃▃▃▃▂▂▂▂▁▂▁▁▁▁▁ |

| val/box_loss | ▄█▅▅▅▃▃▃▃▂▂▃▂▂▂▂▂▂▂▂▂▂▁▂▁▁▁▁▁▁ |

| val/cls_loss | █▆▆▄▃▂▃▂▂▂▂▂▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁▁ |

| val/dfl_loss | ▄█▄▄▄▃▂▂▂▂▂▂▂▂▁▂▂▂▂▁▁▁▁▁▁▁▁▁▁▁ |

Run summary:

| lr/pg0 | 2e-05 |

| lr/pg1 | 2e-05 |

| lr/pg2 | 2e-05 |

| metrics/mAP50(B) | 0.95717 |

| metrics/mAP50-95(B) | 0.82952 |

| metrics/precision(B) | 0.94297 |

| metrics/recall(B) | 0.90601 |

| model/GFLOPs | 8.209 |

| model/parameters | 3013773 |

| model/speed_PyTorch(ms) | 2.415 |

| train/box_loss | 0.49017 |

| train/cls_loss | 0.38064 |

| train/dfl_loss | 0.90189 |

| val/box_loss | 0.53427 |

| val/cls_loss | 0.35107 |

| val/dfl_loss | 0.93138 |

View run train at: https://wandb.ai/karimi-parisa1371/YOLOv8/runs/1k0krjw8

Synced 6 W&B file(s), 24 media file(s), 5 artifact file(s) and 0 other file(s)

Find logs at: ./wandb/run-20240716_135017-1k0krjw8/logs
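The `lr/pg*` entries in the run history fall steadily after a short warm-up and end at about 2e-05. The sketch below models this as a linear decay. Note the assumptions: the starting rate `lr0 = 0.000526` and the final fraction `lrf = 0.01` are not stated anywhere in the logs; they are values chosen here because they reproduce the logged learning rates for the later epochs, so treat them as a fit rather than as the confirmed training configuration.

```python
def linear_lr(epoch_index: int, epochs: int = 30,
              lr0: float = 0.000526, lrf: float = 0.01) -> float:
    """Learning rate at a zero-based epoch index under linear decay.

    lr0 and lrf are assumed values that fit the logged rates;
    they are not read from the training configuration.
    """
    decay = max(1 - epoch_index / epochs, 0) * (1.0 - lrf) + lrf
    return lr0 * decay


# Compare with the logged values for epochs 21, 25 and 30.
for epoch_index in (20, 24, 29):
    print(f"epoch {epoch_index + 1}: lr = {linear_lr(epoch_index):.6f}")
```

With these assumed values the sketch gives 0.000179, 0.000109 and 0.000023 for epochs 21, 25 and 30, in line with the rates recorded for this run.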

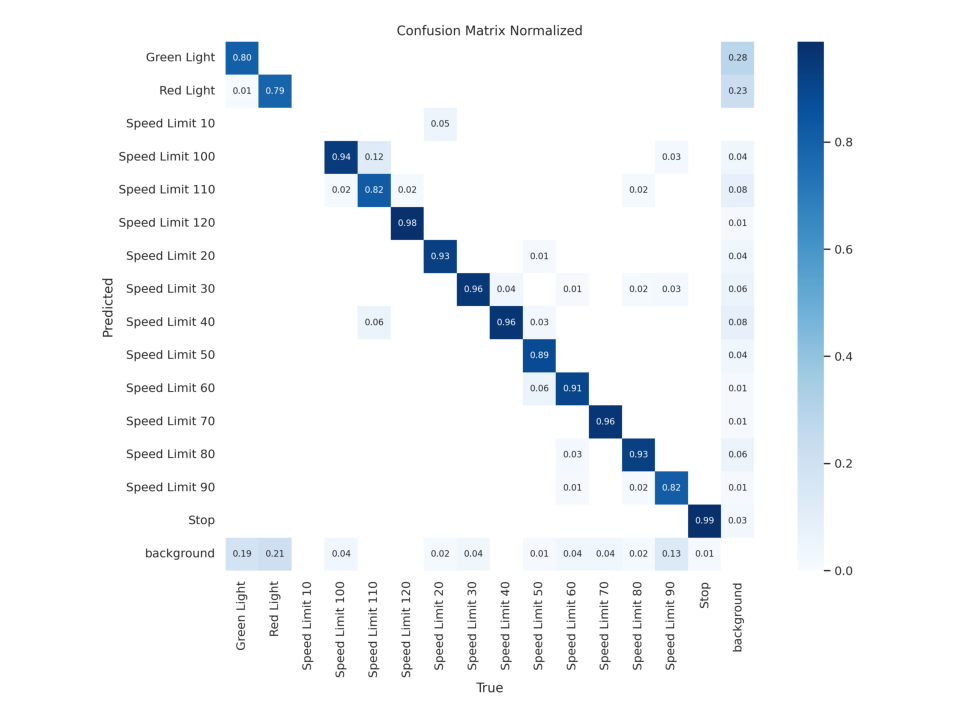

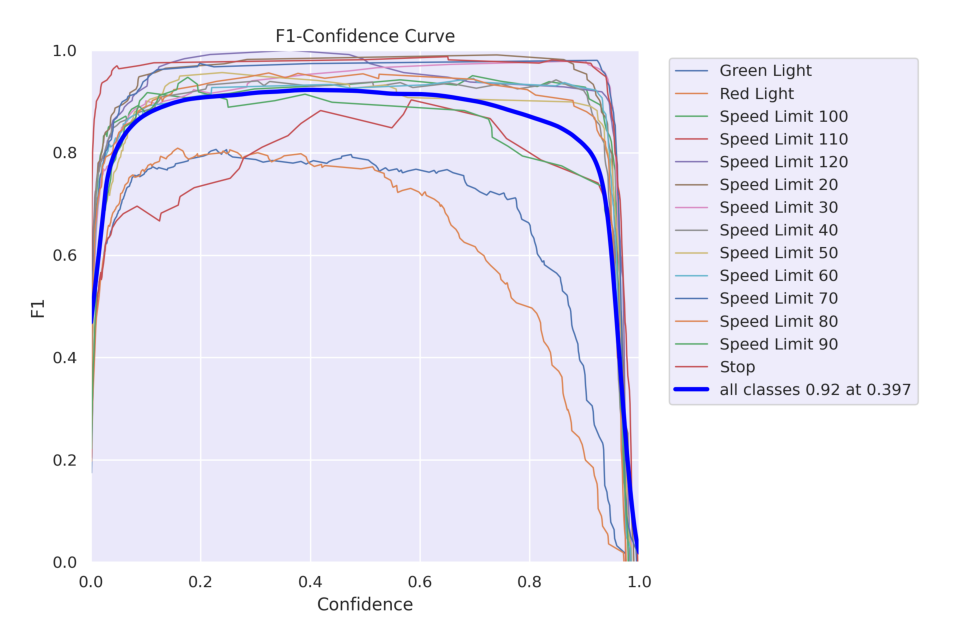

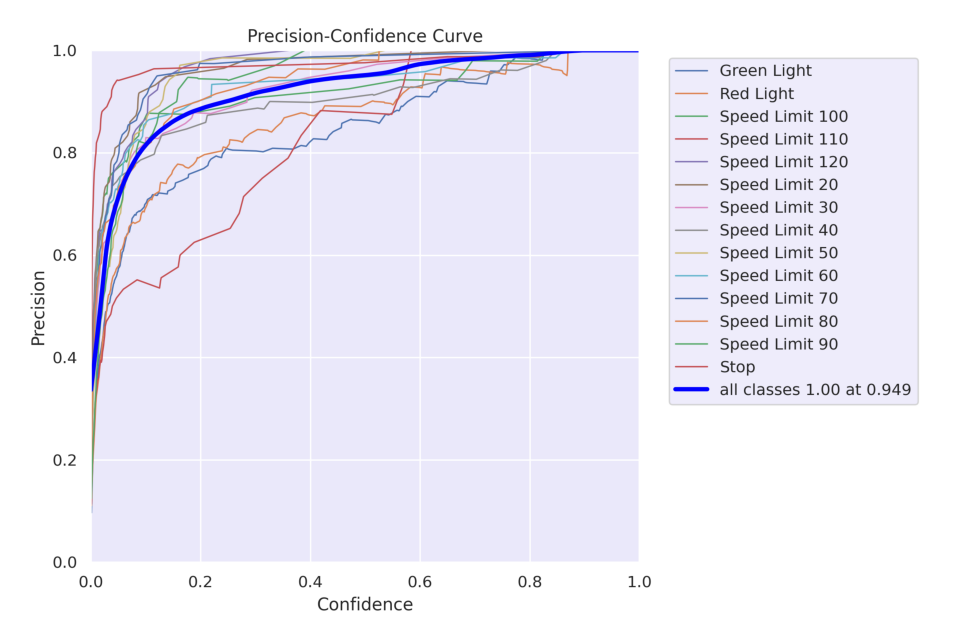

5.1.2. Validation Step

In [9]:

import os
import cv2
import matplotlib.pyplot as plt

def display_images(post_training_files_path, image_files):
    for image_file in image_files:
        image_path = os.path.join(post_training_files_path, image_file)
        img = cv2.imread(image_path)
        img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
        plt.figure(figsize=(10, 10), dpi=120)
        plt.imshow(img)
        plt.axis('off')
        plt.show()

# List of image files to display
image_files = [
    'confusion_matrix_normalized.png',
    'F1_curve.png',
    'P_curve.png',
    'R_curve.png',
    'PR_curve.png',
    'results.png'
]

# Path to the directory containing the images
post_training_files_path = '/kaggle/working/runs/detect/train'

# Display the images
display_images(post_training_files_path, image_files)

In [10]:

Result_Final_model = pd.read_csv('/kaggle/working/runs/detect/train/results.csv')
Result_Final_model.tail(10)

Out[10]:

| | epoch | train/box_loss | train/cls_loss | train/dfl_loss | metrics/precision(B) | metrics/recall(B) | metrics/mAP50(B) | metrics/mAP50-95(B) | val/box_loss | val/cls_loss | val/dfl_loss | lr/pg0 | lr/pg1 | lr/pg2 |

| 20 | 21 | 0.55740 | 0.52881 | 0.94040 | 0.96343 | 0.81798 | 0.93275 | 0.79194 | 0.57663 | 0.45824 | 0.95732 | 0.000179 | 0.000179 | 0.000179 |

| 21 | 22 | 0.54938 | 0.50105 | 0.93599 | 0.92566 | 0.88105 | 0.94360 | 0.80859 | 0.56651 | 0.43104 | 0.95234 | 0.000161 | 0.000161 | 0.000161 |

| 22 | 23 | 0.53653 | 0.47742 | 0.93256 | 0.92601 | 0.87273 | 0.94378 | 0.80903 | 0.56020 | 0.42374 | 0.94359 | 0.000144 | 0.000144 | 0.000144 |

| 23 | 24 | 0.52901 | 0.45752 | 0.92159 | 0.94448 | 0.87110 | 0.94789 | 0.81055 | 0.56475 | 0.40938 | 0.95220 | 0.000127 | 0.000127 | 0.000127 |

| 24 | 25 | 0.52568 | 0.43794 | 0.92954 | 0.94604 | 0.88493 | 0.95295 | 0.82069 | 0.54540 | 0.38604 | 0.93454 | 0.000109 | 0.000109 | 0.000109 |

| 25 | 26 | 0.51426 | 0.42990 | 0.91126 | 0.94099 | 0.88593 | 0.95179 | 0.82002 | 0.54739 | 0.38483 | 0.93668 | 0.000092 | 0.000092 | 0.000092 |

| 26 | 27 | 0.50218 | 0.40930 | 0.90848 | 0.94543 | 0.89077 | 0.95616 | 0.82587 | 0.54801 | 0.37515 | 0.93724 | 0.000075 | 0.000075 | 0.000075 |

| 27 | 28 | 0.50789 | 0.39283 | 0.91260 | 0.92909 | 0.90408 | 0.95452 | 0.82420 | 0.54305 | 0.37637 | 0.93477 | 0.000057 | 0.000057 | 0.000057 |

| 28 | 29 | 0.49446 | 0.38381 | 0.90141 | 0.93868 | 0.90941 | 0.95800 | 0.82593 | 0.54046 | 0.35780 | 0.93587 | 0.000040 | 0.000040 | 0.000040 |

| 29 | 30 | 0.49017 | 0.38064 | 0.90189 | 0.94317 | 0.90490 | 0.95719 | 0.83020 | 0.53427 | 0.35107 | 0.93138 | 0.000023 | 0.000023 | 0.000023 |

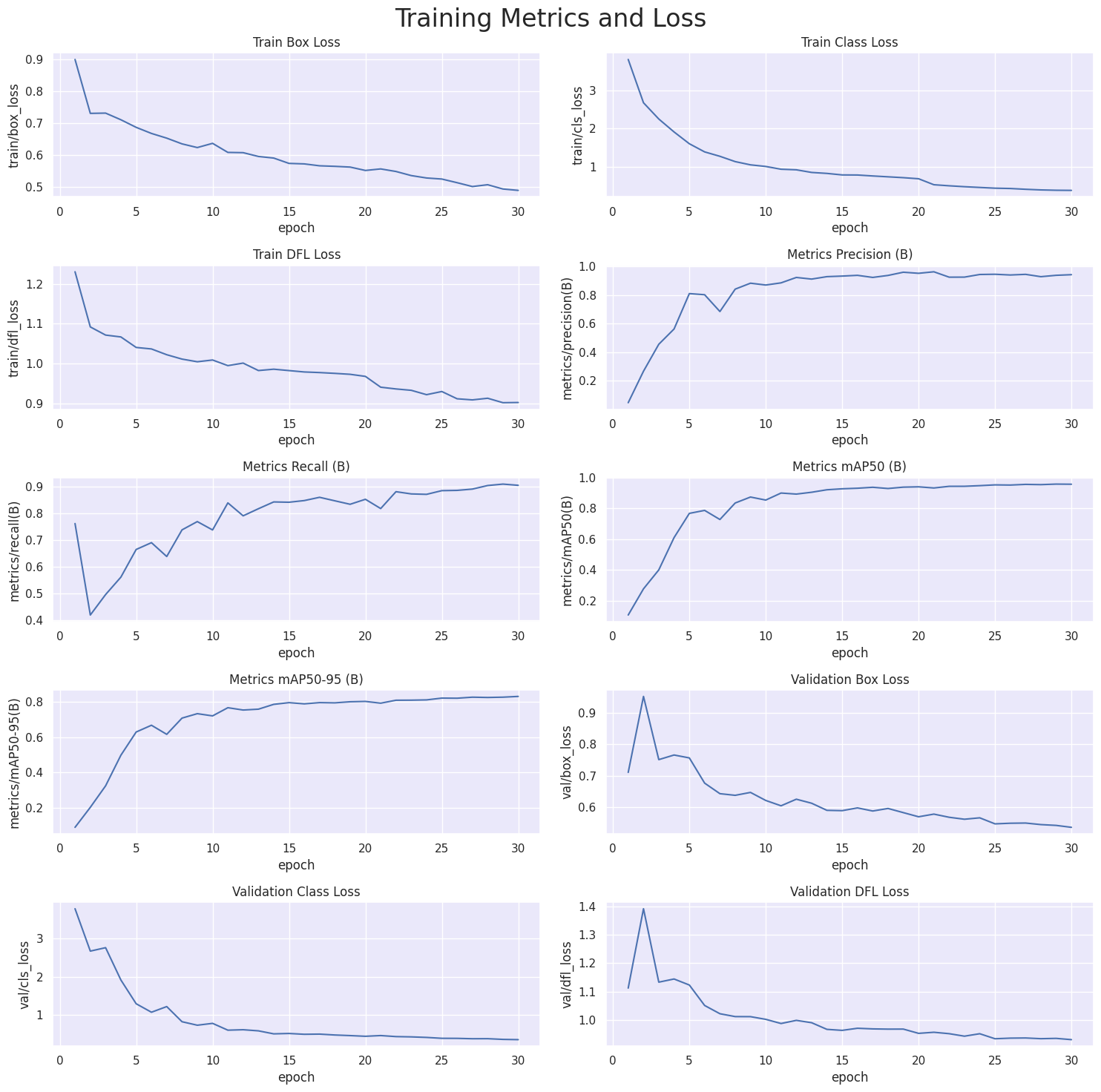
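A common follow-up question for a table like this is which epoch scored best on a given metric. The sketch below answers it with the standard library only, on a few rows copied from the table above. The helper name `best_epoch` is hypothetical, and the sample contains only three columns, whereas the real `results.csv` has one row per epoch and all the columns shown in the table.

```python
import csv
import io

# A few rows copied from the table above (epoch, mAP50, mAP50-95).
sample_csv = """epoch,metrics/mAP50(B),metrics/mAP50-95(B)
27,0.95616,0.82587
28,0.95452,0.82420
29,0.95800,0.82593
30,0.95719,0.83020
"""


def best_epoch(csv_text: str, metric: str = "metrics/mAP50-95(B)") -> tuple[int, float]:
    """Return (epoch, value) for the row with the highest value of `metric`."""
    reader = csv.DictReader(io.StringIO(csv_text))
    # Strip stray whitespace from keys and values before comparing.
    rows = [{key.strip(): value.strip() for key, value in row.items()} for row in reader]
    best = max(rows, key=lambda row: float(row[metric]))
    return int(best["epoch"]), float(best[metric])


print(best_epoch(sample_csv))
print(best_epoch(sample_csv, metric="metrics/mAP50(B)"))
```

On this sample the best mAP50-95 is at epoch 30 while the best mAP50 is at epoch 29, so the "best" epoch depends on which metric you rank by.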

In [13]:

# Strip stray whitespace from the column names read from results.csv
Result_Final_model.columns = Result_Final_model.columns.str.strip()

# Columns to plot, paired with their subplot titles
plots = [
    ('train/box_loss', 'Train Box Loss'),
    ('train/cls_loss', 'Train Class Loss'),
    ('train/dfl_loss', 'Train DFL Loss'),
    ('metrics/precision(B)', 'Metrics Precision (B)'),
    ('metrics/recall(B)', 'Metrics Recall (B)'),
    ('metrics/mAP50(B)', 'Metrics mAP50 (B)'),
    ('metrics/mAP50-95(B)', 'Metrics mAP50-95 (B)'),
    ('val/box_loss', 'Validation Box Loss'),
    ('val/cls_loss', 'Validation Class Loss'),
    ('val/dfl_loss', 'Validation DFL Loss'),
]

# Create a 5x2 grid and draw one seaborn line plot per column
fig, axs = plt.subplots(nrows=5, ncols=2, figsize=(15, 15))
for ax, (column, title) in zip(axs.flatten(), plots):
    sns.lineplot(x='epoch', y=column, data=Result_Final_model, ax=ax)
    ax.set(title=title)

plt.suptitle('Training Metrics and Loss', fontsize=24)

# Leave room at the top so the overall title does not overlap the first row
plt.tight_layout(rect=[0, 0, 1, 0.96])
plt.show()

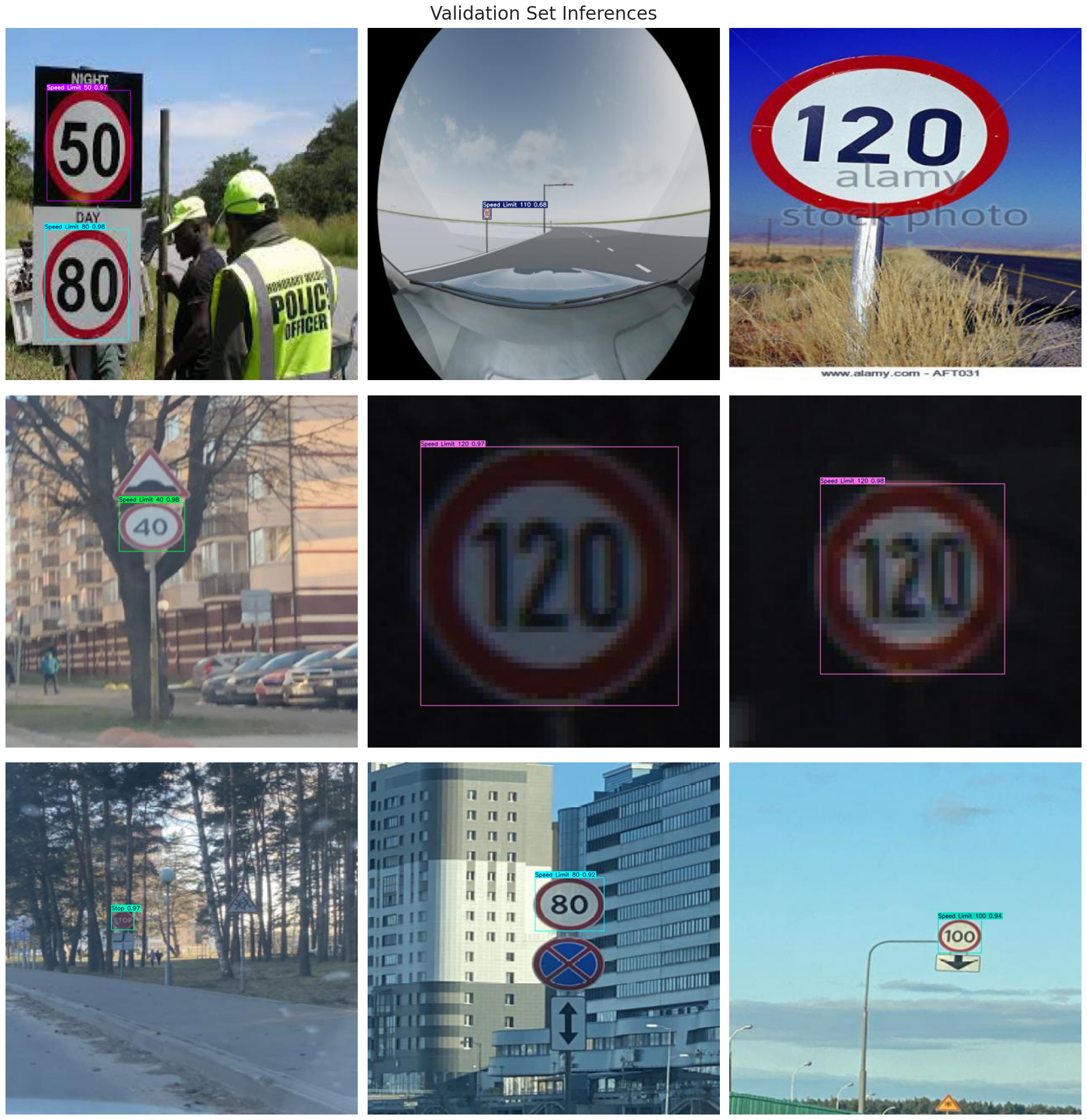
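The mAP50 and mAP50-95 curves plotted above both rest on intersection over union (IoU): mAP50 counts a predicted box as a match when its IoU with a ground-truth box is at least 0.5, while mAP50-95 averages over ten thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below illustrates only this matching criterion, not the full mAP computation. The two boxes are hypothetical values chosen for illustration.

```python
def box_iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    inter_x1, inter_y1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    inter_x2, inter_y2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, inter_x2 - inter_x1) * max(0.0, inter_y2 - inter_y1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical ground-truth and predicted boxes, offset by 10 pixels.
ground_truth = (100, 100, 200, 200)
prediction = (110, 110, 210, 210)

iou = box_iou(ground_truth, prediction)
thresholds = [0.50 + 0.05 * i for i in range(10)]  # 0.50, 0.55, ..., 0.95
passed = [t for t in thresholds if iou >= t]

print(f"IoU = {iou:.3f}")
print(f"Counts as a match at {len(passed)} of {len(thresholds)} thresholds")
```

A box that is only slightly offset still passes the 0.5 threshold but fails the stricter ones, which is why mAP50-95 is always the lower and more demanding of the two numbers.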

5.2 | Validation of the Model on the Validation Set

In [14]:

# Load the best-performing model
Valid_model = YOLO('/kaggle/working/runs/detect/train/weights/best.pt')

# Evaluate the model on the validation set
metrics = Valid_model.val(split='val')

# Final results
print("precision(B): ", metrics.results_dict["metrics/precision(B)"])
print("metrics/recall(B): ", metrics.results_dict["metrics/recall(B)"])
print("metrics/mAP50(B): ", metrics.results_dict["metrics/mAP50(B)"])
print("metrics/mAP50-95(B): ", metrics.results_dict["metrics/mAP50-95(B)"])

Ultralytics YOLOv8.2.58 🚀 Python-3.10.12 torch-2.0.0 CUDA:0 (Tesla T4, 15095MiB)

Model summary (fused): 168 layers, 3,008,573 parameters, 0 gradients, 8.1 GFLOPs

val: Scanning /kaggle/input/cardetection/car/valid/labels... 801 images, 0 backgrounds, 0 corrupt: 100%|██████████| 801/801 [00:00<00:00, 822.02it/s]

val: WARNING ⚠️ Cache directory /kaggle/input/cardetection/car/valid is not writeable, cache not saved.

| Class | Images | Instances | P | R | mAP50 | mAP50-95 |
| --- | --- | --- | --- | --- | --- | --- |
| all | 801 | 944 | 0.942 | 0.906 | 0.957 | 0.83 |
| Green Light | 87 | 122 | 0.819 | 0.741 | 0.833 | 0.512 |
| Red Light | 74 | 108 | 0.881 | 0.694 | 0.835 | 0.541 |
| Speed Limit 100 | 52 | 52 | 0.92 | 0.942 | 0.983 | 0.891 |
| Speed Limit 110 | 17 | 17 | 0.882 | 0.881 | 0.932 | 0.845 |
| Speed Limit 120 | 60 | 60 | 1 | 0.991 | 0.995 | 0.916 |
| Speed Limit 20 | 56 | 56 | 0.987 | 0.982 | 0.986 | 0.875 |
| Speed Limit 30 | 71 | 74 | 0.951 | 0.959 | 0.987 | 0.911 |
| Speed Limit 40 | 53 | 55 | 0.901 | 0.964 | 0.987 | 0.894 |
| Speed Limit 50 | 68 | 71 | 0.985 | 0.901 | 0.962 | 0.862 |
| Speed Limit 60 | 76 | 76 | 0.945 | 0.921 | 0.963 | 0.881 |
| Speed Limit 70 | 78 | 78 | 0.987 | 0.962 | 0.984 | 0.895 |
| Speed Limit 80 | 56 | 56 | 0.963 | 0.931 | 0.984 | 0.865 |
| Speed Limit 90 | 38 | 38 | 1 | 0.828 | 0.976 | 0.792 |
| Stop | 81 | 81 | 0.973 | 0.988 | 0.994 | 0.934 |

Speed: 0.5ms preprocess, 3.9ms inference, 0.0ms loss, 0.7ms postprocess per image

Results saved to runs/detect/val

precision(B): 0.9423951129777693

metrics/recall(B): 0.9060281630136168

metrics/mAP50(B): 0.9571950392546826

metrics/mAP50-95(B): 0.8296650258385937

5.3 | Making Predictions On Test Images

In [15]:

# Normalization function
def normalize_image(image):
    return image / 255.0

# Image resizing function
def resize_image(image, size=(640, 640)):
    return cv2.resize(image, size)

# Path to the test images
dataset_path = '/kaggle/input/cardetection/car'  # Place your dataset path here
test_images_path = os.path.join(dataset_path, 'test', 'images')

# List of all jpg images in the directory
image_files = [file for file in os.listdir(test_images_path) if file.endswith('.jpg')]

# Check if there are images in the directory
if len(image_files) > 0:
    # Select images at equal intervals (only the first 9 are plotted)
    num_images = len(image_files)
    step_size = max(1, num_images // 9)  # Ensure the interval is at least 1
    selected_images = [image_files[i] for i in range(0, num_images, step_size)]

    # Prepare subplots
    fig, axes = plt.subplots(3, 3, figsize=(20, 21))
    fig.suptitle('Test Set Inferences', fontsize=24)

    for i, ax in enumerate(axes.flatten()):
        if i < len(selected_images):
            image_path = os.path.join(test_images_path, selected_images[i])
            # Load image
            image = cv2.imread(image_path)
            # Check if the image is loaded correctly
            if image is not None:
                # Resize image
                resized_image = resize_image(image, size=(640, 640))
                # Normalize image
                normalized_image = normalize_image(resized_image)
                # Convert back to uint8; round first so float error does not truncate pixel values
                normalized_image_uint8 = (normalized_image * 255).round().astype(np.uint8)
                # Predict with the model
                results = Valid_model.predict(source=normalized_image_uint8, imgsz=640, conf=0.5)
                # Plot image with labels
                annotated_image = results[0].plot(line_width=1)
                annotated_image_rgb = cv2.cvtColor(annotated_image, cv2.COLOR_BGR2RGB)
                ax.imshow(annotated_image_rgb)
            else:
                print(f"Failed to load image {image_path}")
        ax.axis('off')

    plt.tight_layout()
    plt.show()

0: 640x640 1 Speed Limit 50, 1 Speed Limit 80, 7.4ms

Speed: 1.5ms preprocess, 7.4ms inference, 1.3ms postprocess per image at shape (1, 3, 640, 640)

0: 640x640 1 Speed Limit 110, 7.4ms

Speed: 1.7ms preprocess, 7.4ms inference, 1.1ms postprocess per image at shape (1, 3, 640, 640)

0: 640x640 (no detections), 7.4ms

Speed: 1.8ms preprocess, 7.4ms inference, 0.5ms postprocess per image at shape (1, 3, 640, 640)

0: 640x640 1 Speed Limit 40, 7.4ms

Speed: 1.7ms preprocess, 7.4ms inference, 1.1ms postprocess per image at shape (1, 3, 640, 640)

0: 640x640 1 Speed Limit 120, 7.4ms

Speed: 1.9ms preprocess, 7.4ms inference, 1.1ms postprocess per image at shape (1, 3, 640, 640)

0: 640x640 1 Speed Limit 120, 7.4ms

Speed: 1.8ms preprocess, 7.4ms inference, 1.1ms postprocess per image at shape (1, 3, 640, 640)

0: 640x640 1 Stop, 7.4ms

Speed: 1.8ms preprocess, 7.4ms inference, 1.1ms postprocess per image at shape (1, 3, 640, 640)

0: 640x640 1 Speed Limit 80, 7.4ms

Speed: 1.8ms preprocess, 7.4ms inference, 1.1ms postprocess per image at shape (1, 3, 640, 640)

0: 640x640 1 Speed Limit 100, 7.4ms

Speed: 1.8ms preprocess, 7.4ms inference, 1.1ms postprocess per image at shape (1, 3, 640, 640)
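The cell above picks images with a fixed stride of `num_images // 9`. Because of integer division this can select more than nine files, and since only nine subplots are drawn, the images near the end of the list are never shown. The sketch below contrasts that stride-based selection with an alternative that always returns exactly nine indices spread over the whole list. Both function names and the file names are hypothetical and not from the original notebook.

```python
def stride_sample(items, k=9):
    """Stride-based selection as in the cell above; may return more than k items."""
    step = max(1, len(items) // k)
    return items[::step]


def evenly_spaced(items, k=9):
    """Alternative: exactly min(k, len(items)) items spread over the whole list."""
    n = len(items)
    k = min(k, n)
    return [items[i * n // k] for i in range(k)]


# Hypothetical directory listing with 50 files.
files = [f"img_{i:02d}.jpg" for i in range(50)]

print(len(stride_sample(files)))   # more than 9 for this list length
print(len(evenly_spaced(files)))   # always at most 9
print(evenly_spaced(files)[-1])
```

With 50 files the stride version returns 10 items, of which the plotting loop would use the first nine, while the alternative returns nine items reaching index 44.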

6 | Detecting Traffic Signs in Video with the Trained YOLOv8 Model

6.1 | Show the Original Video Before Detection

Tip: Because the video generated from the image dataset is very large, this project uses only a truncated segment of the original video.

In [17]:

# Convert to mp4
!ffmpeg -y -loglevel panic -i /kaggle/input/cardetection/video.mp4 output.mp4

# Display the video
Video("output.mp4", width=960)

Out[17]:

In [ ]:

# Use the model to detect signs
Valid_model.predict(source="/kaggle/input/cardetection/video.mp4", show=True, save=True)

In [27]:

# Show the result: convert the format first
!ffmpeg -y -loglevel panic -i /kaggle/working/runs/detect/predict/video.avi result_out.mp4

# Display the video
Video("result_out.mp4", width=960)

Out[27]:

7 | Saving the Model

Tip: The ultimate goal of training a model is to deploy it for real-world applications. Export mode in Ultralytics YOLOv8 offers a versatile range of options for exporting your trained model to different formats, making it deployable across various platforms and devices.

In [16]:

# Export the model
Valid_model.export(format='onnx')

Ultralytics YOLOv8.2.58 🚀 Python-3.10.12 torch-2.0.0 CPU (Intel Xeon 2.00GHz)

PyTorch: starting from '/kaggle/working/runs/detect/train/weights/best.pt' with input shape (1, 3, 640, 640) BCHW and output shape(s) (1, 19, 8400) (6.0 MB)

ONNX: starting export with onnx 1.14.1 opset 17...

================ Diagnostic Run torch.onnx.export version 2.0.0 ================

verbose: False, log level: Level.ERROR

======================= 0 NONE 0 NOTE 0 WARNING 0 ERROR ========================

ONNX: export success ✅ 0.8s, saved as '/kaggle/working/runs/detect/train/weights/best.onnx' (11.7 MB)

Export complete (2.4s)

Results saved to /kaggle/working/runs/detect/train/weights

Predict: yolo predict task=detect model=/kaggle/working/runs/detect/train/weights/best.onnx imgsz=640

Validate: yolo val task=detect model=/kaggle/working/runs/detect/train/weights/best.onnx imgsz=640 data=/kaggle/input/cardetection/car/data.yaml

Visualize: https://netron.app

Out[16]:

'/kaggle/working/runs/detect/train/weights/best.onnx'
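The export log reports an output shape of (1, 19, 8400), which is worth understanding before writing any post-processing for the ONNX file. The sketch below reproduces that shape with simple arithmetic. It assumes the usual YOLOv8 detection layout: 4 box values plus one score per class in the channel dimension, and one candidate per grid cell at strides 8, 16 and 32. The class count of 15 is inferred from 19 - 4 and is not stated in the log, and the function name is hypothetical.

```python
def yolov8_output_shape(num_classes, imgsz=640, strides=(8, 16, 32)):
    """Expected (batch, channels, candidates) for a single-image detection export.

    Assumes 4 box values plus one score per class, and one candidate
    per grid cell at each stride.
    """
    channels = 4 + num_classes
    candidates = sum((imgsz // stride) ** 2 for stride in strides)
    return (1, channels, candidates)


# 15 classes is inferred from the logged channel count (19 - 4).
print(yolov8_output_shape(num_classes=15))
```

For a 640x640 input the three grids are 80x80, 40x40 and 20x20, which sum to 8400 candidates and match the shape in the log.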

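Thank you all for taking the time to study despite your busy schedules. Your recognition is what drives the teacher to keep improving!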