Day 43 Review Day: the Fashion-MNIST Dataset

@浙大疏锦行

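Today's tasks:

- Review the earlier material

- Find an image dataset on Kaggle, train a CNN on it, and visualize the model with Grad-CAM

- Advanced: split the code into multiple files (optional)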

A quick review

Fashion-MNIST

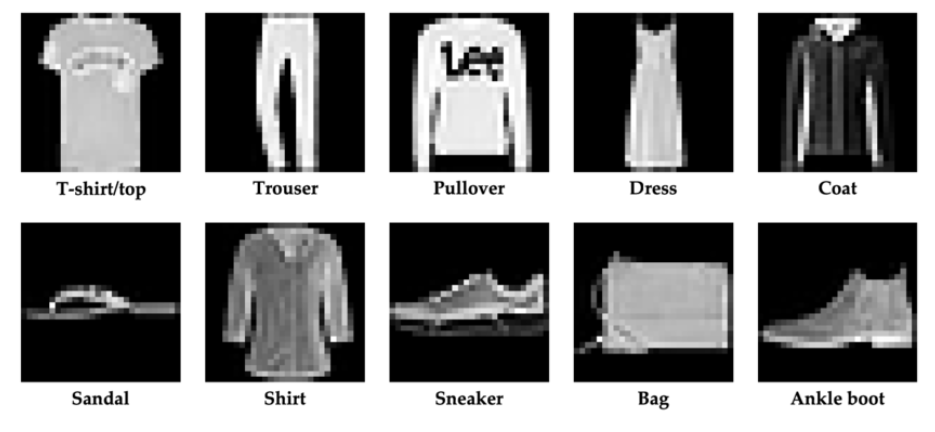

Introduction

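Fashion-MNIST is an MNIST-like dataset of fashion images, consisting of a training set of 60,000 examples and a test set of 10,000 examples. Each example is a 28x28 grayscale image associated with a label from one of 10 classes. It was released by Zalando as a replacement for the classic MNIST dataset.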

The 10 classes are:

| Label | Description |
|---|---|
| 0 | T-shirt/top |
| 1 | Trouser |
| 2 | Pullover |
| 3 | Dress |
| 4 | Coat |
| 5 | Sandal |
| 6 | Shirt |
| 7 | Sneaker |
| 8 | Bag |
| 9 | Ankle boot |
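In code the table is just an index-to-name lookup; torchvision's `FashionMNIST` dataset also exposes these names through its `classes` attribute. A minimal standalone sketch (the dictionary and `label_name` are hypothetical names used only for illustration):

```python
# Hypothetical lookup table mirroring the label table above
FASHION_MNIST_CLASSES = {
    0: "T-shirt/top",
    1: "Trouser",
    2: "Pullover",
    3: "Dress",
    4: "Coat",
    5: "Sandal",
    6: "Shirt",
    7: "Sneaker",
    8: "Bag",
    9: "Ankle boot",
}


def label_name(label: int) -> str:
    """Return the class name for an integer label in the range 0-9."""
    return FASHION_MNIST_CLASSES[int(label)]


print(label_name(5))  # Sandal
```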

Code

1. Compute the mean and standard deviation
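The loop in this step adds up per-image means and per-image standard deviations and divides by the number of images. For the mean this equals the pixel-level mean (every image has the same 784 pixels), but averaging per-image standard deviations ignores the variation between images, so it is generally not the standard deviation over all pixels. If the pixel-level value is wanted, a sketch that accumulates sums and sums of squares could look like this (NumPy; `pixel_mean_std` is a hypothetical helper):

```python
import numpy as np


def pixel_mean_std(batches):
    """Dataset-wide mean and std over all pixels, accumulated batch by batch.

    `batches` is any iterable of arrays shaped (N, C, H, W) or (N, H, W).
    Every pixel counts equally, so the result matches np.mean / np.std
    (population std, ddof=0) of the concatenated data.
    """
    total = 0.0
    total_sq = 0.0
    count = 0
    for batch in batches:
        x = np.asarray(batch, dtype=np.float64)
        total += x.sum()
        total_sq += (x ** 2).sum()
        count += x.size
    mean = total / count
    var = total_sq / count - mean ** 2  # E[x^2] - (E[x])^2
    return mean, np.sqrt(max(var, 0.0))


# Synthetic check with two random batches of 28x28 "images"
rng = np.random.default_rng(0)
batches = [rng.random((4, 1, 28, 28)), rng.random((3, 1, 28, 28))]
mean, std = pixel_mean_std(batches)
all_pixels = np.concatenate([b.ravel() for b in batches])
print(np.isclose(mean, all_pixels.mean()), np.isclose(std, all_pixels.std()))  # True True
```

With the loader from this step, something like `pixel_mean_std(images.numpy() for images, _ in loader)` should work; expect the std to come out somewhat larger than the per-image average of 0.3205.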

# Compute the mean and standard deviation

import torchvision.transforms as transforms

from torchvision.datasets import FashionMNIST

from torch.utils.data import DataLoader

# Load the dataset

dataset = FashionMNIST(root='./data', train=True, download=True, transform=transforms.ToTensor())

loader = DataLoader(dataset, batch_size=1000, shuffle=False)

# Initialize accumulators

mean = 0.0

std = 0.0

total_samples = 0

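# Iterate over the dataset, accumulating per-image means and per-image standard deviations
for images, _ in loader:
    batch_samples = images.size(0)  # number of samples in this batch
    images = images.view(batch_samples, -1)  # flatten each image
    mean += images.mean(1).sum()  # add up the per-image means
    std += images.std(1).sum()  # add up the per-image standard deviations
    total_samples += batch_samples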

mean /= total_samples

std /= total_samples

print(f"FashionMNIST mean: {mean:.4f}")  # 0.2860

print(f"FashionMNIST std (average of per-image stds): {std:.4f}")  # 0.3205

2. Import libraries, preprocess, and load the data
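The training transform below starts with `RandomCrop(28, padding=4)`: it pads the 28x28 image with 4 pixels on every side (36x36, filled with 0 by default) and then cuts out a random 28x28 window, so the garment can shift by up to 4 pixels in each direction. A NumPy sketch of the same idea; `random_crop_with_padding` is a hypothetical helper, not torchvision's implementation:

```python
import numpy as np


def random_crop_with_padding(img, size=28, padding=4, rng=None):
    """Zero-pad a 2-D image on every side, then cut out a random size x size window."""
    rng = np.random.default_rng() if rng is None else rng
    padded = np.pad(img, padding, mode="constant", constant_values=0)
    h, w = padded.shape
    top = int(rng.integers(0, h - size + 1))   # 0..8 for a 36x36 padded image
    left = int(rng.integers(0, w - size + 1))
    return padded[top:top + size, left:left + size]


img = np.ones((28, 28))
crop = random_crop_with_padding(img, rng=np.random.default_rng(0))
print(crop.shape)  # (28, 28)
# The window at offset (4, 4) of the padded image is exactly the original image
print(np.array_equal(np.pad(img, 4)[4:32, 4:32], img))  # True
```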

# Import libraries

import torch

import torch.nn as nn

import torch.optim as optim

import torch.nn.functional as F

from torchvision import datasets, transforms

from torch.utils.data import DataLoader

import matplotlib.pyplot as plt

torch.manual_seed(42)

# Build the transforms

train_transform = transforms.Compose([
    transforms.RandomCrop(28, padding=4),  # pad 4 pixels on each side, then randomly crop back to 28x28
    transforms.RandomHorizontalFlip(),  # random horizontal flip, p=0.5
    transforms.ColorJitter(brightness=0.2, contrast=0.2),  # grayscale image: random brightness and contrast changes
    transforms.RandomRotation(15),  # random rotation of up to 15 degrees
    transforms.ToTensor(),  # convert to a tensor scaled to [0, 1]
    transforms.Normalize((0.2860,), (0.3205,))  # standardize
])

test_transform = transforms.Compose([
    transforms.ToTensor(),  # convert to a tensor scaled to [0, 1]
    transforms.Normalize((0.2860,), (0.3205,))  # standardize
])

# Load the data

train_dataset = datasets.FashionMNIST(root='./data/fashion', train=True, download=True, transform=train_transform)

test_dataset = datasets.FashionMNIST(root='./data/fashion', train=False, download=True, transform=test_transform)

# Inspect the data

classes = ['T-shirt', 'Trouser', 'Pullover', 'Dress', 'Coat', 'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle Boot']

sample_idx = torch.randint(0,len(train_dataset),size=(1,)).item()

image, label = train_dataset[sample_idx]

def imshow(img):
    img = img * 0.3205 + 0.2860  # undo Normalize for display
    plt.imshow(img.numpy()[0], cmap='gray')
    plt.show()

print('Label: {}'.format(classes[label]))

imshow(image)
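`imshow` (and later `tensor_to_np`) undoes `Normalize((0.2860,), (0.3205,))` before plotting. `Normalize` computes `(x - mean) / std` per channel, so the inverse is `x * std + mean`. A NumPy sketch of the round trip; `normalize` and `denormalize` are hypothetical helper names:

```python
import numpy as np

MEAN, STD = 0.2860, 0.3205  # the values used in this post's Normalize transform


def normalize(x, mean=MEAN, std=STD):
    """Per-channel standardization, as transforms.Normalize does: (x - mean) / std."""
    return (x - mean) / std


def denormalize(x, mean=MEAN, std=STD):
    """Inverse for display: x * std + mean, clipped to [0, 1] like tensor_to_np."""
    return np.clip(x * std + mean, 0.0, 1.0)


pixels = np.array([0.0, 0.2860, 1.0])
z = normalize(pixels)
print(z)               # black pixels become negative, the mean maps to 0
print(denormalize(z))  # recovers the original pixel values
```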

3. Create the DataLoaders and define the model

Three convolutional layers plus two fully connected layers
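The `128*4*4` input size of `fc1` follows from the usual output-size formulas for `Conv2d` (kernel 3, padding 1, stride 1 keeps the size unchanged) and `MaxPool2d` (kernel 2, stride 2 roughly halves it). A small sketch that traces the spatial size through the three blocks; `conv_out` and `pool_out` are hypothetical helpers assuming dilation 1 and no pooling padding:

```python
import math


def conv_out(size, kernel=3, stride=1, padding=1):
    """Output length of Conv2d along one spatial dimension (dilation=1)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2, ceil_mode=False):
    """Output length of MaxPool2d along one spatial dimension (padding=0, dilation=1)."""
    rounding = math.ceil if ceil_mode else math.floor
    return int(rounding((size - kernel) / stride)) + 1


s = 28
s = pool_out(conv_out(s))                  # conv1 + pool  -> 14
s = pool_out(conv_out(s))                  # conv2 + pool  -> 7
s = pool_out(conv_out(s), ceil_mode=True)  # conv3 + pool3 -> 4 (rounded up)
print(s, 128 * s * s)  # 4 2048
```

Without `ceil_mode=True` the last pooling step would give 3x3, and `fc1` would need `128*3*3` inputs instead.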

# dataloader

batch_size = 64

train_loader = DataLoader(train_dataset,batch_size,shuffle=True)

test_loader = DataLoader(test_dataset, batch_size, shuffle=False)

# Define the model

class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        # First convolutional layer
        self.conv1 = nn.Conv2d(1, 32, kernel_size=3, padding=1)
        # Second convolutional layer
        self.conv2 = nn.Conv2d(32, 64, kernel_size=3, padding=1)
        # Third convolutional layer
        self.conv3 = nn.Conv2d(64, 128, kernel_size=3, padding=1)
        # Pooling layers
        self.pool = nn.MaxPool2d(kernel_size=2, stride=2)
        self.pool3 = nn.MaxPool2d(kernel_size=2, stride=2, ceil_mode=True)  # round up, since a size cannot be fractional
        # First fully connected layer
        self.flatten = nn.Flatten()
        self.fc1 = nn.Linear(128 * 4 * 4, 256)
        # Second fully connected layer
        self.fc2 = nn.Linear(256, 10)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))  # first conv block, output: 32x14x14
        x = self.pool(F.relu(self.conv2(x)))  # second conv block, output: 64x7x7
        x = self.pool3(F.relu(self.conv3(x)))  # third conv block, output: 128x4x4 (pooling rounds up)
        x = self.flatten(x)  # flatten
        x = F.relu(self.fc1(x))  # first fully connected layer
        x = self.fc2(x)  # second fully connected layer
        return x

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

print('Using device: {}'.format(device))
model = CNN().to(device)

4. Wrap training (with a learning-rate scheduler), testing, and visualization into functions
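The scheduler below is `ReduceLROnPlateau(mode='min', factor=0.5, patience=3)`, stepped once per epoch with the test loss. Roughly, the learning rate is halved once the loss has failed to improve for more than 3 consecutive epochs. The pure-Python simulation here is a simplified sketch of that rule, assuming PyTorch's default relative threshold (1e-4), no cooldown, and no minimum learning rate; `simulate_plateau` is a hypothetical helper, not part of PyTorch:

```python
def simulate_plateau(losses, lr=1e-3, factor=0.5, patience=3, threshold=1e-4):
    """Simplified re-creation of ReduceLROnPlateau(mode='min') decisions.

    Returns the learning rate in effect after each step(loss) call.
    """
    best = float("inf")
    bad_epochs = 0
    history = []
    for loss in losses:
        if loss < best * (1 - threshold):  # relative improvement over the best so far
            best = loss
            bad_epochs = 0
        else:
            bad_epochs += 1
        if bad_epochs > patience:  # more than `patience` epochs without improvement
            lr *= factor
            bad_epochs = 0
        history.append(lr)
    return history


print(simulate_plateau([1.0, 0.9, 0.9, 0.9, 0.9, 0.9]))
# [0.001, 0.001, 0.001, 0.001, 0.001, 0.0005]
```

Note that the post feeds the test loss to the scheduler, so the test set is effectively acting as the validation set here.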

# Training

# Loss function, optimizer, and learning-rate scheduler

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(model.parameters(),lr=0.001)

scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer=optimizer,mode='min',factor=0.5,patience=3)

# Training loop

def train(model, epochs, train_loader, test_loader, device, optimizer, criterion, scheduler):
    all_iter_losses = []
    iteration_indices = []
    train_history_loss = []
    train_history_acc = []
    test_history_loss = []
    test_history_acc = []
    # Epoch loop
    for epoch in range(epochs):
        model.train()  # test() switches the model to eval mode, so switch back at the start of every epoch
        running_loss = 0
        total = 0
        correct = 0
        # Batch loop
        for batch_idx, (data, target) in enumerate(train_loader):
            data, target = data.to(device), target.to(device)
            optimizer.zero_grad()  # clear gradients
            output = model(data)  # forward pass
            loss = criterion(output, target)  # compute the loss
            loss.backward()  # backward pass
            optimizer.step()  # update parameters
            # Record the per-iteration loss
            loss_value = loss.item()
            all_iter_losses.append(loss_value)
            iteration_indices.append(epoch * len(train_loader) + batch_idx + 1)
            # Running statistics
            running_loss += loss_value
            total += target.size(0)
            _, predicted = output.max(1)
            correct += predicted.eq(target).sum().item()
            # Print progress every 100 batches
            if (batch_idx + 1) % 100 == 0:
                print(f'Epoch: {epoch+1}/{epochs} | Batch: {batch_idx+1}/{len(train_loader)} '
                      f'| Batch loss: {loss_value:.4f} | Running average loss: {running_loss/(batch_idx+1):.4f}')
        # Loss and accuracy for this epoch
        epoch_train_loss = running_loss / len(train_loader)
        epoch_train_acc = 100. * correct / total
        epoch_test_loss, epoch_test_acc = test(model, test_loader, device, criterion)
        train_history_loss.append(epoch_train_loss)
        train_history_acc.append(epoch_train_acc)
        test_history_loss.append(epoch_test_loss)
        test_history_acc.append(epoch_test_acc)
        # Scheduler: update the learning rate based on the test loss
        if scheduler:
            scheduler.step(epoch_test_loss)
        # Print the epoch summary
        print(f'Epoch {epoch+1}/{epochs} done | Train accuracy: {epoch_train_acc:.2f}% | Test accuracy: {epoch_test_acc:.2f}%')
    # Visualization
    # plot_iteration_loss(all_iter_losses, iteration_indices)
    plot_epoch_metrics(train_history_acc, test_history_acc, train_history_loss, test_history_loss)
    return epoch_test_acc

# Testing

def test(model, test_loader, device, criterion):
    model.eval()
    test_loss = 0
    test_total = 0
    test_correct = 0
    with torch.no_grad():
        for data, target in test_loader:
            data, target = data.to(device), target.to(device)
            out = model(data)  # forward pass
            t_loss = criterion(out, target)  # compute the loss
            test_loss += t_loss.item()
            test_total += target.size(0)
            _, predicted = out.max(1)
            test_correct += predicted.eq(target).sum().item()
    avg_loss = test_loss / len(test_loader)
    acc = 100. * test_correct / test_total
    return avg_loss, acc

# Visualization

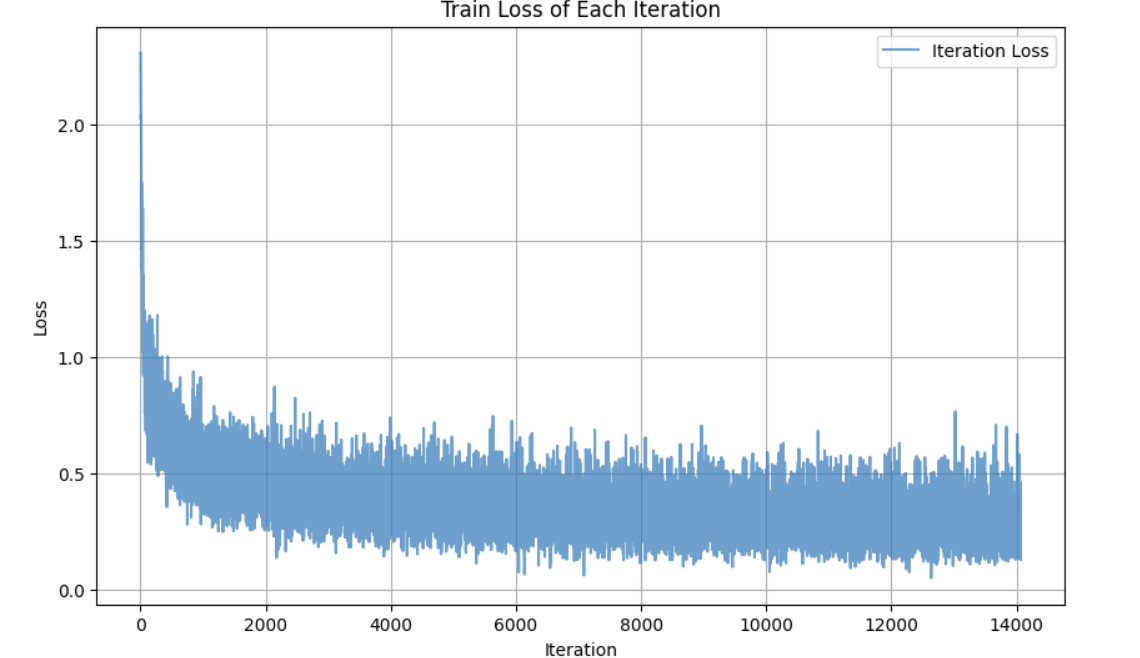

def plot_iteration_loss(losses, indices):
    plt.figure(figsize=(10, 6))
    plt.plot(indices, losses, alpha=0.7, label='Iteration Loss')
    plt.xlabel('Iteration')
    plt.ylabel('Loss')
    plt.title('Train Loss of Each Iteration')
    plt.legend()
    plt.grid(True)
    plt.show()

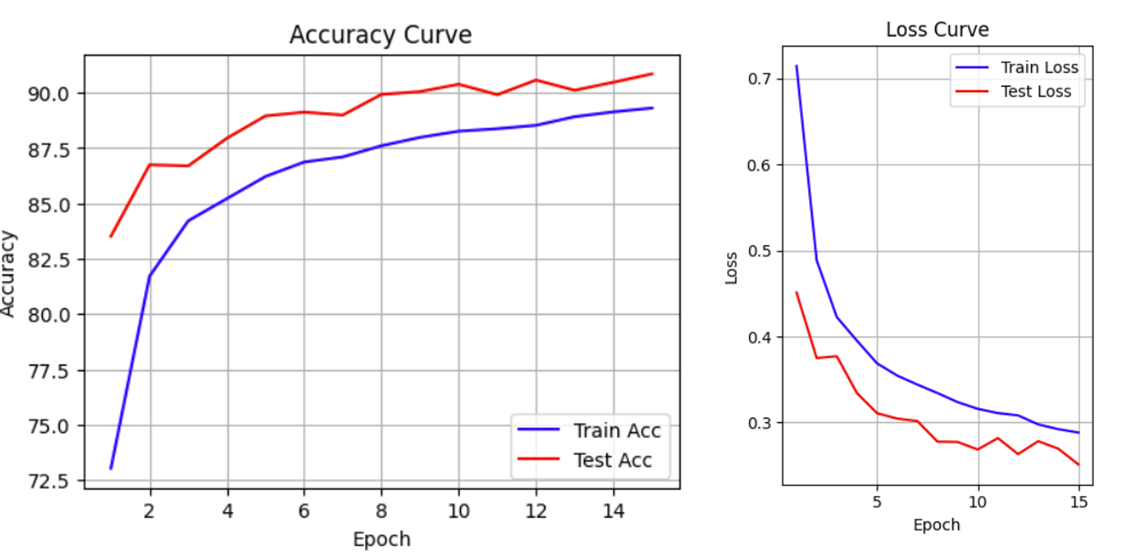

def plot_epoch_metrics(train_acc, test_acc, train_loss, test_loss):
    epochs = range(1, len(train_acc) + 1)
    plt.figure(figsize=(12, 8))
    # Accuracy curves (left panel)
    plt.subplot(1, 2, 1)
    plt.plot(epochs, train_acc, color='blue', label='Train Acc')
    plt.plot(epochs, test_acc, color='red', label='Test Acc')
    plt.xlabel('Epoch')
    plt.ylabel('Accuracy')
    plt.title('Accuracy Curve')
    plt.legend()
    plt.grid(True)
    # Loss curves (right panel of the same figure)
    plt.subplot(1, 2, 2)
    plt.plot(epochs, train_loss, color='blue', label='Train Loss')
    plt.plot(epochs, test_loss, color='red', label='Test Loss')
    plt.xlabel('Epoch')
    plt.ylabel('Loss')
    plt.title('Loss Curve')
    plt.legend()
    plt.grid(True)
    plt.tight_layout()
    plt.show()

5. Run the training

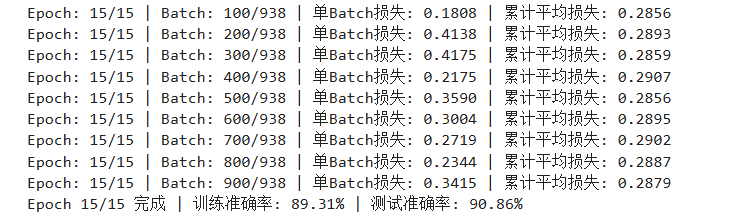

# Run training

epochs = 15

print("Training the CNN...")

final_acc = train(model,epochs,train_loader,test_loader,device,optimizer,criterion,scheduler)

print('Training finished! Final test accuracy: {:.2f}%'.format(final_acc))

The final test accuracy is 90.86%, and the running average loss is 0.2879.

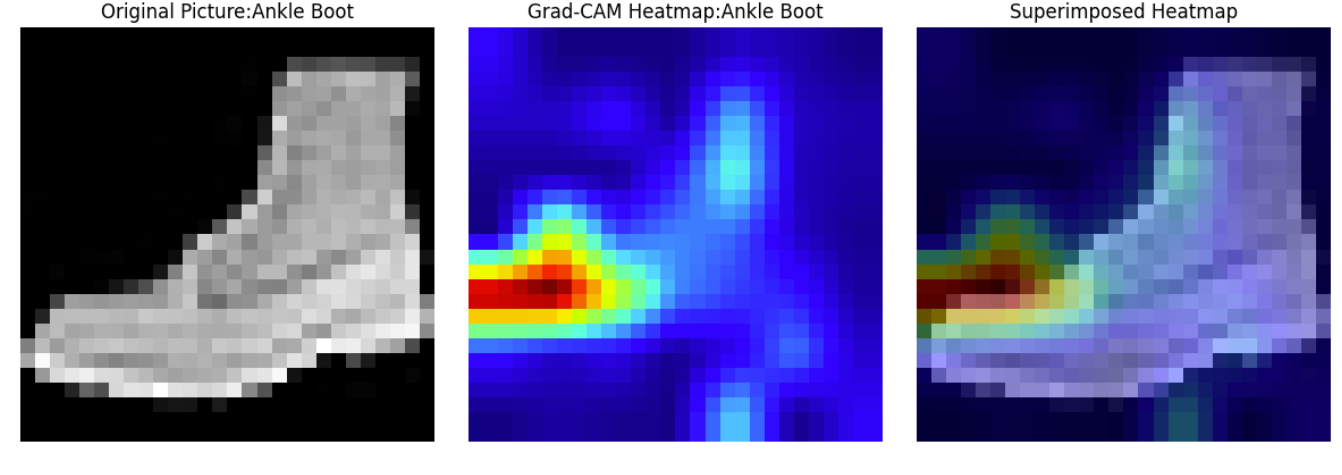
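The accuracy reported above is `epoch_test_acc` from the last epoch: each image's predicted class is the index of its largest logit (`output.max(1)`), and accuracy is 100 × correct / total over the whole test set, whereas the losses are averaged over batches. A tiny NumPy sketch of that bookkeeping with made-up logits (`batch_stats` is a hypothetical name):

```python
import numpy as np


def batch_stats(logits, targets):
    """Count correct predictions the way train()/test() do: predicted class = argmax of the logits."""
    predicted = np.argmax(logits, axis=1)        # plays the role of output.max(1)[1]
    correct = int((predicted == targets).sum())  # plays the role of predicted.eq(target).sum().item()
    return correct, len(targets)


logits = np.array([[2.0, 0.1, -1.0],
                   [0.3, 0.2, 1.5],
                   [0.9, 1.1, 0.0],
                   [3.0, -2.0, 0.5]])
targets = np.array([0, 2, 0, 0])
correct, total = batch_stats(logits, targets)
print(correct, total, 100.0 * correct / total)  # 3 4 75.0
```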

6. Visualizing the model with Grad-CAM
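Grad-CAM combines two quantities captured at the chosen conv layer: for the target class score, each channel k gets a weight equal to the spatial average of the gradient of that score with respect to the channel's feature map, and the heatmap is the ReLU of the weighted sum of the feature maps (activations). A NumPy sketch of just that arithmetic, assuming the activations and gradients are already available as arrays (`grad_cam_map` and the toy arrays are hypothetical):

```python
import numpy as np


def grad_cam_map(activations, gradients):
    """Grad-CAM map for one image from a conv layer's activations and their gradients.

    activations, gradients: arrays of shape (K, H, W) for K channels.
    """
    weights = gradients.mean(axis=(1, 2))             # per-channel weight: global average of the gradient
    cam = np.tensordot(weights, activations, axes=1)  # weighted sum of activation maps -> (H, W)
    cam = np.maximum(cam, 0.0)                        # ReLU: keep only positive evidence
    cam = cam - cam.min()                             # shift so the minimum is 0
    if cam.max() > 0:
        cam = cam / cam.max()                         # scale to [0, 1]
    return cam


# Toy case: channel 0 fires top-left and supports the class; channel 1 fires bottom-right and opposes it
activations = np.array([[[1.0, 0.0], [0.0, 0.0]],
                        [[0.0, 0.0], [0.0, 1.0]]])
gradients = np.array([[[1.0, 1.0], [1.0, 1.0]],
                      [[-1.0, -1.0], [-1.0, -1.0]]])
print(grad_cam_map(activations, gradients))
```

Only the top-left cell lights up. Weighting the gradients by their own averages instead of the activations would give a constant 2 everywhere in this example, i.e. no localization, which is why the activations are the maps being combined.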

# Grad-CAM visualization

model.eval()

# Define the GradCAM class

class GradCAM:
    def __init__(self, model, target_layer):
        self.model = model  # model
        self.target_layer = target_layer  # target layer
        self.gradients = None  # gradients of the target layer's output
        self.activations = None  # feature maps (target layer's output)
        # Register hooks that capture the target layer's forward output and backward gradient
        self.register_hooks()

    def register_hooks(self):
        def forward_hook(module, input, output):  # arguments are passed in automatically
            self.activations = output.detach()

        def backward_hook(module, grad_input, grad_output):
            self.gradients = grad_output[0].detach()  # grad_output = (d_score/d_output,)

        # Register a forward hook and a full backward hook on the target layer
        self.target_layer.register_forward_hook(forward_hook)
        self.target_layer.register_full_backward_hook(backward_hook)

    def generate_cam(self, input_image, target_class=None):
        model_output = self.model(input_image)  # forward pass
        # Target class
        if target_class is None:  # no target class specified
            target_class = torch.argmax(model_output, dim=1).item()  # use the class with the highest score
        self.model.zero_grad()  # clear gradients
        # One-hot vector: gradient 1 for the target class, 0 for all others, then backpropagate
        one_hot = torch.zeros_like(model_output)  # all-zero tensor with the same shape as model_output
        one_hot[0, target_class] = 1
        model_output.backward(gradient=one_hot)  # backpropagate only the target class score
        # Fetch the gradients and activations saved by the hooks
        gradients = self.gradients
        activations = self.activations
        # Channel weights: global average pooling of the gradients measures each channel's importance
        weights = torch.mean(gradients, dim=(2, 3), keepdim=True)
        # Weighted sum of the activation maps gives the raw class activation map
        cam = torch.sum(activations * weights, dim=1, keepdim=True)
        # ReLU: keep only regions that contribute positively to the target class
        cam = F.relu(cam)
        # Resize to the input image size (28x28) and normalize to [0, 1]
        cam = F.interpolate(cam, size=(28, 28), mode='bilinear', align_corners=False)
        cam = cam - cam.min()
        cam = cam / cam.max() if cam.max() > 0 else cam
        # Move to the CPU, drop all size-1 dimensions, and convert to a NumPy array
        return cam.cpu().squeeze().numpy(), target_class

import warnings

warnings.filterwarnings("ignore")

import matplotlib.pyplot as plt

import numpy as np

# Chinese font support (SimHei), only needed if plot labels contain Chinese text

plt.rcParams["font.family"] = ["SimHei"]

plt.rcParams['axes.unicode_minus'] = False  # render minus signs correctly with this font

# Pick a random test image

idx = np.random.randint(len(test_dataset))

classes = ['T-shirt', 'Trouser', 'Pullover', 'Dress', 'Coat', 'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle Boot']

# idx = 102  # select the 103rd test image (indices start at 0)

image, label = test_dataset[idx]

# print(f"Selected image class: {classes[label]}")

# Convert to a NumPy array, since imshow() expects an array as input

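def tensor_to_np(tensor):
    img = tensor.cpu().numpy()[0]  # grayscale image: shape [1, H, W], take the first channel -> [H, W]
    mean = 0.2860  # single-channel mean
    std = 0.3205  # single-channel standard deviation
    img = std * img + mean  # undo Normalize
    img = np.clip(img, 0, 1)
    return img

# Add a batch dimension and move to the device; a single image is [channels, height, width] (a 3-D tensor)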

# unsqueeze(dim) inserts a new dimension of size 1 at position dim

input_tensor = image.unsqueeze(0).to(device)

# Initialize Grad-CAM

grad_cam = GradCAM(model, model.conv3)  # use the last convolutional layer

# Generate the heatmap

heatmap, pred_class = grad_cam.generate_cam(input_tensor)

# Visualization

plt.figure(figsize=(12, 4))

# 1 - original image

plt.subplot(1,3,1)

img_original = tensor_to_np(image)

plt.imshow(img_original, cmap='gray')  # use a grayscale colormap

plt.title(f'Original Picture:{classes[label]}')

plt.axis('off')

# 2 - heatmap

plt.subplot(1,3,2)

plt.imshow(heatmap,cmap='jet')

plt.title(f'Grad-CAM Heatmap:{classes[pred_class]}')

plt.axis('off')

# 3 - overlay

plt.subplot(1,3,3)

# Stack the grayscale image into three channels so it can be blended with the colored heatmap

img_rgb = np.stack([img_original] * 3, axis=-1)  # shape [H, W, 3]

# Colorize the heatmap

heatmap_resized = np.uint8(255 * heatmap)

heatmap_colored = plt.cm.jet(heatmap_resized)[:, :, :3]

# Blend the heatmap with the original image

superimposed_img = heatmap_colored * 0.4 + img_rgb * 0.6

superimposed_img = np.clip(superimposed_img, 0, 1)  # keep values in [0, 1]

plt.imshow(superimposed_img)

plt.title("Superimposed Heatmap")

plt.axis('off')

plt.tight_layout()

#plt.savefig('grad_cam_result.png')

plt.show()