qt调用摄像头进行yolo的实时检测

Qt 调用 YOLO 进行目标检测的核心流程可概括为:先从视频流中获取原始帧,将其转换为 YOLO 模型兼容的图像格式(如 BGR 通道的 OpenCV 矩阵);随后通过 YOLO 模型执行推理,得到目标的位置、类别等检测结果;接着在图像上绘制检测框及标签以可视化结果;再将处理后的图像转换回 Qt 可直接渲染的格式(如 RGBA 的 QImage);最后输出并展示处理后的画面,完成从视频采集到实时检测可视化的闭环。

首先进行摄像头的调用,我借鉴的是这个:

【QT】摄像头调用_qt调用摄像头-CSDN博客

摄像头调用成功之后进行yolo的处理:

准备工作:ultralytics/ultralytics: Ultralytics YOLO 🚀

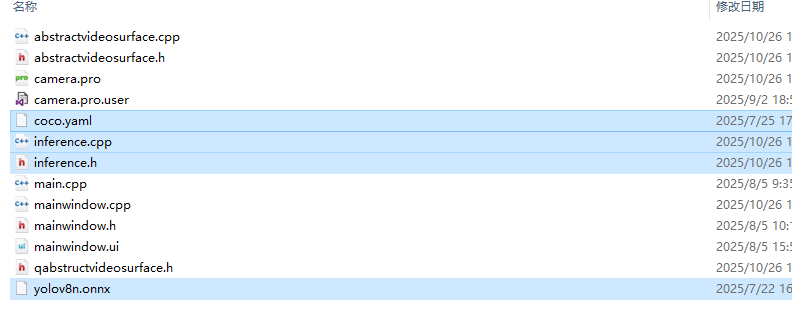

从官网准备yolov8n.onnx,以及coco.yaml,接着从examples/YOLOv8-ONNXRuntime-CPP/复制inference.h和inference.cpp到你的项目中:

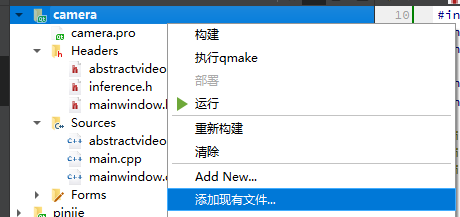

在qt中进行添加现有文件inference.h和inference.cpp:

接着修改abstractvideosurface.h(完整代码):

#ifndef ABSTRACTVIDEOSURFACE_H

#define ABSTRACTVIDEOSURFACE_H#include <QAbstractVideoSurface>

#include <QImage>#include "inference.h"

#include <opencv2/opencv.hpp>

#include <opencv2/dnn.hpp>

#include <fstream>

#include <vector>

#include <string>#include <thread> // 支持多线程

#include <mutex>using namespace cv;

using namespace cv::dnn;

using namespace std;class AbstractVideoSurface : public QAbstractVideoSurface

{Q_OBJECT

public:AbstractVideoSurface(QObject* parent = NULL);virtual bool present(const QVideoFrame &frame);~AbstractVideoSurface(); // 添加析构函数释放 YOLOvirtual QList<QVideoFrame::PixelFormat> supportedPixelFormats(QAbstractVideoBuffer::HandleType type = QAbstractVideoBuffer::NoHandle) const;signals:void sndImage(QImage);private:YOLO_V8* yolo; // YOLOv8 检测器std::vector<std::string> class_list; // COCO 类别const float CONFIDENCE_THRESHOLD = 0.1f; // 置信度阈值const float IOU_THRESHOLD = 0.4f; // NMS 阈值std::mutex yoloMutex; // 新增:互斥锁保护YOLO访问

};#endif // ABSTRACTVIDEOSURFACE_H

abstractvideosurface.cpp(完整代码):

#include "abstractvideosurface.h"

#include <fstream>

#include<QDebug>#include <thread> AbstractVideoSurface::AbstractVideoSurface(QObject* parent):QAbstractVideoSurface(parent)

{// 初始化 YOLOv8yolo = new YOLO_V8();// 加载 COCO 类别std::ifstream file("C:/camera/coco.yaml");if (!file.is_open()) {qDebug() << "Failed to open coco.yaml";return;}std::string line;std::vector<std::string> lines;while (std::getline(file, line)) {lines.push_back(line);}std::size_t start = 0, end = 0;for (std::size_t i = 0; i < lines.size(); i++) {if (lines[i].find("names:") != std::string::npos) {start = i + 1;}else if (start > 0 && lines[i].find(':') == std::string::npos) {end = i;break;}}for (std::size_t i = start; i < end; i++) {std::stringstream ss(lines[i]);std::string name;std::getline(ss, name, ':');std::getline(ss, name);class_list.push_back(name);}yolo->classes = class_list;// 配置 YOLOv8 参数DL_INIT_PARAM params;params.modelPath = "C:/camera/yolov8n.onnx";params.imgSize = {640, 640};params.rectConfidenceThreshold = CONFIDENCE_THRESHOLD;params.iouThreshold = IOU_THRESHOLD;params.modelType = YOLO_DETECT_V8;params.cudaEnable = true;

//改true如果有CUDA和ONNX Runtime CUDA 支持,建议先false在cpu上成功再调试CUDA相关yolo->CreateSession(params);yolo->WarmUpSession(); // 预热模型

}AbstractVideoSurface::~AbstractVideoSurface()

{qDebug() << "Releasing YOLO resources";delete yolo; // 释放 YOLOv8qDebug() << "YOLO resources released";

}bool AbstractVideoSurface::present(const QVideoFrame &frame)

{QVideoFrame fm = frame;fm.map(QAbstractVideoBuffer::ReadOnly);// QVideoFrame → QImageQImage image(fm.bits(), fm.width(), fm.height(), fm.imageFormatFromPixelFormat(fm.pixelFormat()));image = image.copy(); // <--- 加这一行image = image.convertToFormat(QImage::Format_RGBA8888); // 确保RGBAimage = image.mirrored(1);//表示横向镜像,纵向镜像是默认值// QImage → cv::Matcv::Mat input_image(image.height(), image.width(), CV_8UC4, image.bits(), image.bytesPerLine());cv::Mat bgr_image;cv::cvtColor(input_image, bgr_image, cv::COLOR_RGBA2BGR);// qDebug() << "1";// 新增:使用多线程处理YOLO推理和绘制,避免阻塞主线程std::thread detectionThread([this, bgr_image]() mutable { // mutable允许修改捕获的bgr_imagestd::vector<DL_RESULT> res;{std::lock_guard<std::mutex> lock(yoloMutex); // 锁定YOLO访问yolo->RunSession(bgr_image, res);}qDebug() << "2 Detected:" << res.size();// 绘制检测框for (auto& re : res) {cv::Scalar color(0, 255, 0); // 绿色检测框cv::rectangle(bgr_image, re.box, color, 2);std::string label = class_list[re.classId] + " " + std::to_string(re.confidence).substr(0, 4);cv::putText(bgr_image, label, cv::Point(re.box.x, re.box.y - 5),cv::FONT_HERSHEY_SIMPLEX, 0.6, cv::Scalar(0, 0, 255), 2);}// 转回 QImage(BGR → RGB)cv::Mat display_image;cv::cvtColor(bgr_image, display_image, cv::COLOR_BGR2RGB);QImage detected_image(display_image.data, display_image.cols, display_image.rows, display_image.step, QImage::Format_RGB888);// 从线程中发出信号(Qt信号跨线程安全)emit sndImage(detected_image.copy());});detectionThread.detach(); // 分离线程,让其独立运行(或使用join根据需要)fm.unmap();return true;}QList<QVideoFrame::PixelFormat> AbstractVideoSurface::supportedPixelFormats(QAbstractVideoBuffer::HandleType type) const

{Q_UNUSED(type);

// 这个函数在 AbstractVideoSurface构造函数的时候,自动调用,目的是确认AbstractVideoSurface能够支持的像素格式,摄像头拍摄到的图片,他的像素格式是 RGB_3// 所以,我们要把RGB_32这个像素格式,添加到AbstractVideoSurface支持列表里面了

// 当前函数,返回的就是AbstractVideoSurface所支持的像素格式的列表return QList<QVideoFrame::PixelFormat>() << QVideoFrame::Format_RGB32;// 此处的 operoatr<< 相遇 QList::append方法

}接着还需要修改mainwindow.cpp(完整代码):

#include "mainwindow.h"

#include "ui_mainwindow.h"MainWindow::MainWindow(QWidget *parent): QMainWindow(parent), ui(new Ui::MainWindow)

{ui->setupUi(this);sender = new QUdpSocket(this);reciver = new QUdpSocket(this);reciver->bind(12345,QUdpSocket::ReuseAddressHint);QObject::connect(reciver,SIGNAL(readyRead()),this,SLOT(onReadyRead()));/*① 通过QCameraInfo类的defaultCamera获取摄像头信息,以QCameraInfo对象保存② 将包含有摄像头信息的QCameraInfo对象,传入QCamera构造函数,构造一个QCamera对象,该对象就能管理刚才获取到的摄像头了③ QCamera对象,管理的摄像头,拍摄到的图片,必须交给QAbstructVideoSurface对象去处理QAbstructVideoSurface这个类是一个纯虚类,必须继承过后重写里面的纯虚方法

*/QCameraInfo info = QCameraInfo::defaultCamera();camera = new QCamera(info);AbstractVideoSurface* surface = new AbstractVideoSurface(this);//图像处理类camera->setViewfinder(surface);//将camera拍摄到的图片交给AbstractVideoSurface类对象里面的present函数进行处理QObject::connect(surface,SIGNAL(sndImage(QImage)),this,SLOT(rcvImage(QImage)));// 连接“开始”按钮connect(ui->pushButton, &QPushButton::clicked, this, &MainWindow::on_pushButton_clicked);// 连接“停止”按钮connect(ui->pushButton_2, &QPushButton::clicked, this, &MainWindow::on_pushButton_2_clicked);}MainWindow::~MainWindow()

{camera->stop(); // 确保摄像头停止delete camera; // 释放摄像头对象delete sender; // 释放发送端if (reciver->isOpen()) {reciver->close(); // 确保接收端关闭}delete reciver; // 释放接收端delete ui;

}void MainWindow::onReadyRead()

{

// 读取图片的时候,一样的道理,要将QByteArray里面的数据,作为QImage读取

/*有一个类叫做 QImageReader,里面有一个方法叫做readQImageReader的构造函数,支持传入一个QIODevice,能传入QIODeviec,就等于说是传入了一个QByteArray

*/int size = reciver->pendingDatagramSize();//获取图片尺寸QByteArray arr;arr.resize(size);//将arr适应图片大小reciver->readDatagram(arr.data(),size);//QByteArray的data()方法,获取首地址// 读取数据,保存到arr里面QBuffer buf(&arr);QImageReader reader(&buf);QImage image = reader.read();QPixmap pic = QPixmap::fromImage(image);ui->label->setPixmap(pic);}void MainWindow::rcvImage(QImage image)

{QPixmap pic = QPixmap::fromImage(image);pic = pic.scaled(ui->label->size());ui->label->setPixmap(pic);QImage img = pic.toImage();/*writeDatagram里面,数据最多是一个QByteArray,所以,我们现在要想办法,把QImage存入QByteArray(或者转换成QByteArray)

*/

/*整个转换逻辑如下:QImage 里面有一个方法叫做 save,可以将QImage保存到 QIODevice的对象里面去QIODevice有一个派生类叫做 QBuffer,也就是说QIamge::save可以做到将QImage保存到一个QBuffer里面去QBuffer的构造函数,支持传入一个QByteArray,作为QBuffer的缓存区也就是意味着:写入,保存进入QBuffer的数据,实际上是保存到了 QByteArray里面来

*/QByteArray arr;QBuffer buf(&arr);//将arr作为buf缓存区img.save(&buf,"JPG");//将image保存到buf里面去,实际上就是保存到buf的缓存区arr里面去sender->writeDatagram(arr,QHostAddress::Broadcast,12345);if(reciver->isOpen()){reciver->close();}

}void MainWindow::on_pushButton_clicked()

{camera->start();

}void MainWindow::on_pushButton_2_clicked()

{ui->label->clear();camera->stop();

}核心代码就这些了,剩下来给补全:

mainwindow.h:

#ifndef MAINWINDOW_H

#define MAINWINDOW_H#include <QMainWindow>#include <QCamera>

#include <QCameraInfo>

#include "abstractvideosurface.h"

#include <QImage>

#include <QUdpSocket>

#include <QBuffer>

#include <QImageReader>QT_BEGIN_NAMESPACE

namespace Ui { class MainWindow; }

QT_END_NAMESPACEclass MainWindow : public QMainWindow

{Q_OBJECTpublic:MainWindow(QWidget *parent = nullptr);~MainWindow();QCamera* camera;QUdpSocket* sender;QUdpSocket* reciver;public slots:void rcvImage(QImage);void onReadyRead();private:Ui::MainWindow *ui;void on_pushButton_clicked();void on_pushButton_2_clicked();};

#endif // MAINWINDOW_H

main:

#include "mainwindow.h"#include <QApplication>int main(int argc, char *argv[])

{QApplication a(argc, argv);MainWindow w;w.show();return a.exec();

}

inference.h:

// Ultralytics 🚀 AGPL-3.0 License - https://ultralytics.com/license#pragma once#define RET_OK nullptr#ifdef _WIN32

#include <Windows.h>

#include <direct.h>

#include <io.h>

#endif#include <string>

#include <vector>

#include <cstdio>

#include <opencv2/opencv.hpp>

#include "onnxruntime_cxx_api.h"#ifdef USE_CUDA

#include <cuda_fp16.h>

#endifenum MODEL_TYPE

{//FLOAT32 MODELYOLO_DETECT_V8 = 1,YOLO_POSE = 2,YOLO_CLS = 3,//FLOAT16 MODELYOLO_DETECT_V8_HALF = 4,YOLO_POSE_V8_HALF = 5,YOLO_CLS_HALF = 6

};typedef struct _DL_INIT_PARAM

{std::string modelPath;MODEL_TYPE modelType = YOLO_DETECT_V8;std::vector<int> imgSize = { 640, 640 };float rectConfidenceThreshold = 0.6;float iouThreshold = 0.5;int keyPointsNum = 2;//Note:kpt number for posebool cudaEnable = false;int logSeverityLevel = 3;int intraOpNumThreads = 1;

} DL_INIT_PARAM;typedef struct _DL_RESULT

{int classId;float confidence;cv::Rect box;std::vector<cv::Point2f> keyPoints;

} DL_RESULT;class YOLO_V8

{

public:YOLO_V8();~YOLO_V8();public:const char* CreateSession(DL_INIT_PARAM& iParams);const char* RunSession(cv::Mat& iImg, std::vector<DL_RESULT>& oResult);const char* WarmUpSession();template<typename N>const char* TensorProcess(clock_t& starttime_1, cv::Mat& iImg, N& blob, std::vector<int64_t>& inputNodeDims,std::vector<DL_RESULT>& oResult);const char* PreProcess(cv::Mat& iImg, std::vector<int> iImgSize, cv::Mat& oImg);std::vector<std::string> classes{};private:Ort::Env env;Ort::Session* session;bool cudaEnable;Ort::RunOptions options;std::vector<const char*> inputNodeNames;std::vector<const char*> outputNodeNames;MODEL_TYPE modelType;std::vector<int> imgSize;float rectConfidenceThreshold;float iouThreshold;float resizeScales;//letterbox scale

};

inference.cpp:

// Ultralytics 🚀 AGPL-3.0 License - https://ultralytics.com/license#include "inference.h"

#include <regex>#define benchmark

#define min(a,b) (((a) < (b)) ? (a) : (b))

YOLO_V8::YOLO_V8() {}YOLO_V8::~YOLO_V8() {delete session;

}#ifdef USE_CUDA

namespace Ort

{template<>struct TypeToTensorType<half> { static constexpr ONNXTensorElementDataType type = ONNX_TENSOR_ELEMENT_DATA_TYPE_FLOAT16; };

}

#endiftemplate<typename T>

char* BlobFromImage(cv::Mat& iImg, T& iBlob) {int channels = iImg.channels();int imgHeight = iImg.rows;int imgWidth = iImg.cols;for (int c = 0; c < channels; c++){for (int h = 0; h < imgHeight; h++){for (int w = 0; w < imgWidth; w++){iBlob[c * imgWidth * imgHeight + h * imgWidth + w] = typename std::remove_pointer<T>::type((iImg.at<cv::Vec3b>(h, w)[c]) / 255.0f);}}}return RET_OK;

}const char* YOLO_V8::PreProcess(cv::Mat& iImg, std::vector<int> iImgSize, cv::Mat& oImg)

{if (iImg.channels() == 3){oImg = iImg.clone();cv::cvtColor(oImg, oImg, cv::COLOR_BGR2RGB);}else{cv::cvtColor(iImg, oImg, cv::COLOR_GRAY2RGB);}switch (modelType){case YOLO_DETECT_V8:case YOLO_POSE:case YOLO_DETECT_V8_HALF:case YOLO_POSE_V8_HALF://LetterBox{if (iImg.cols >= iImg.rows){resizeScales = iImg.cols / (float)iImgSize.at(0);cv::resize(oImg, oImg, cv::Size(iImgSize.at(0), int(iImg.rows / resizeScales)));}else{resizeScales = iImg.rows / (float)iImgSize.at(0);cv::resize(oImg, oImg, cv::Size(int(iImg.cols / resizeScales), iImgSize.at(1)));}cv::Mat tempImg = cv::Mat::zeros(iImgSize.at(0), iImgSize.at(1), CV_8UC3);oImg.copyTo(tempImg(cv::Rect(0, 0, oImg.cols, oImg.rows)));oImg = tempImg;break;}case YOLO_CLS://CenterCrop{int h = iImg.rows;int w = iImg.cols;int m = min(h, w);int top = (h - m) / 2;int left = (w - m) / 2;cv::resize(oImg(cv::Rect(left, top, m, m)), oImg, cv::Size(iImgSize.at(0), iImgSize.at(1)));break;}}return RET_OK;

}const char* YOLO_V8::CreateSession(DL_INIT_PARAM& iParams) {const char* Ret = nullptr;std::regex pattern("[\u4e00-\u9fa5]");bool result = std::regex_search(iParams.modelPath, pattern);if (result){Ret = "[YOLO_V8]:Your model path is error.Change your model path without chinese characters.";std::cout << Ret << std::endl;return Ret;}try{rectConfidenceThreshold = iParams.rectConfidenceThreshold;iouThreshold = iParams.iouThreshold;imgSize = iParams.imgSize;modelType = iParams.modelType;cudaEnable = iParams.cudaEnable;env = Ort::Env(ORT_LOGGING_LEVEL_WARNING, "Yolo");Ort::SessionOptions sessionOption;if (iParams.cudaEnable){OrtCUDAProviderOptions cudaOption;cudaOption.device_id = 0;sessionOption.AppendExecutionProvider_CUDA(cudaOption);}sessionOption.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_ALL);sessionOption.SetIntraOpNumThreads(iParams.intraOpNumThreads);sessionOption.SetLogSeverityLevel(iParams.logSeverityLevel);#ifdef _WIN32int ModelPathSize = MultiByteToWideChar(CP_UTF8, 0, iParams.modelPath.c_str(), static_cast<int>(iParams.modelPath.length()), nullptr, 0);wchar_t* wide_cstr = new wchar_t[ModelPathSize + 1];MultiByteToWideChar(CP_UTF8, 0, iParams.modelPath.c_str(), static_cast<int>(iParams.modelPath.length()), wide_cstr, ModelPathSize);wide_cstr[ModelPathSize] = L'\0';const wchar_t* modelPath = wide_cstr;

#elseconst char* modelPath = iParams.modelPath.c_str();

#endif // _WIN32session = new Ort::Session(env, modelPath, sessionOption);Ort::AllocatorWithDefaultOptions allocator;size_t inputNodesNum = session->GetInputCount();for (size_t i = 0; i < inputNodesNum; i++){Ort::AllocatedStringPtr input_node_name = session->GetInputNameAllocated(i, allocator);char* temp_buf = new char[50];strcpy(temp_buf, input_node_name.get());inputNodeNames.push_back(temp_buf);}size_t OutputNodesNum = session->GetOutputCount();for (size_t i = 0; i < OutputNodesNum; i++){Ort::AllocatedStringPtr output_node_name = session->GetOutputNameAllocated(i, allocator);char* temp_buf = new char[10];strcpy(temp_buf, output_node_name.get());outputNodeNames.push_back(temp_buf);}options = Ort::RunOptions{ nullptr };WarmUpSession();return RET_OK;}catch (const std::exception& e){const char* str1 = "[YOLO_V8]:";const char* str2 = e.what();std::string result = std::string(str1) + std::string(str2);char* merged = new char[result.length() + 1];std::strcpy(merged, result.c_str());std::cout << merged << std::endl;delete[] merged;return "[YOLO_V8]:Create session failed.";}}const char* YOLO_V8::RunSession(cv::Mat& iImg, std::vector<DL_RESULT>& oResult) {

#ifdef benchmarkclock_t starttime_1 = clock();

#endif // benchmarkchar* Ret = RET_OK;cv::Mat processedImg;PreProcess(iImg, imgSize, processedImg);if (modelType < 4){float* blob = new float[processedImg.total() * 3];BlobFromImage(processedImg, blob);std::vector<int64_t> inputNodeDims = { 1, 3, imgSize.at(0), imgSize.at(1) };TensorProcess(starttime_1, iImg, blob, inputNodeDims, oResult);}else{

#ifdef USE_CUDAhalf* blob = new half[processedImg.total() * 3];BlobFromImage(processedImg, blob);std::vector<int64_t> inputNodeDims = { 1,3,imgSize.at(0),imgSize.at(1) };TensorProcess(starttime_1, iImg, blob, inputNodeDims, oResult);

#endif}return Ret;

}template<typename N>

const char* YOLO_V8::TensorProcess(clock_t& starttime_1, cv::Mat& iImg, N& blob, std::vector<int64_t>& inputNodeDims,std::vector<DL_RESULT>& oResult) {Ort::Value inputTensor = Ort::Value::CreateTensor<typename std::remove_pointer<N>::type>(Ort::MemoryInfo::CreateCpu(OrtDeviceAllocator, OrtMemTypeCPU), blob, 3 * imgSize.at(0) * imgSize.at(1),inputNodeDims.data(), inputNodeDims.size());

#ifdef benchmarkclock_t starttime_2 = clock();

#endif // benchmarkauto outputTensor = session->Run(options, inputNodeNames.data(), &inputTensor, 1, outputNodeNames.data(),outputNodeNames.size());

#ifdef benchmarkclock_t starttime_3 = clock();

#endif // benchmarkOrt::TypeInfo typeInfo = outputTensor.front().GetTypeInfo();auto tensor_info = typeInfo.GetTensorTypeAndShapeInfo();std::vector<int64_t> outputNodeDims = tensor_info.GetShape();auto output = outputTensor.front().GetTensorMutableData<typename std::remove_pointer<N>::type>();delete[] blob;switch (modelType){case YOLO_DETECT_V8:case YOLO_DETECT_V8_HALF:{int signalResultNum = outputNodeDims[1];//84int strideNum = outputNodeDims[2];//8400std::vector<int> class_ids;std::vector<float> confidences;std::vector<cv::Rect> boxes;cv::Mat rawData;if (modelType == YOLO_DETECT_V8){// FP32rawData = cv::Mat(signalResultNum, strideNum, CV_32F, output);}else{// FP16rawData = cv::Mat(signalResultNum, strideNum, CV_16F, output);rawData.convertTo(rawData, CV_32F);}// Note:// ultralytics add transpose operator to the output of yolov8 model.which make yolov8/v5/v7 has same shape// https://github.com/ultralytics/assets/releases/download/v8.3.0/yolov8n.ptrawData = rawData.t();float* data = (float*)rawData.data;for (int i = 0; i < strideNum; ++i){float* classesScores = data + 4;cv::Mat scores(1, this->classes.size(), CV_32FC1, classesScores);cv::Point class_id;double maxClassScore;cv::minMaxLoc(scores, 0, &maxClassScore, 0, &class_id);if (maxClassScore > rectConfidenceThreshold){confidences.push_back(maxClassScore);class_ids.push_back(class_id.x);float x = data[0];float y = data[1];float w = data[2];float h = data[3];int left = int((x - 0.5 * w) * resizeScales);int top = int((y - 0.5 * h) * resizeScales);int width = int(w * resizeScales);int height = int(h * resizeScales);boxes.push_back(cv::Rect(left, top, width, height));}data += signalResultNum;}std::vector<int> nmsResult;cv::dnn::NMSBoxes(boxes, confidences, rectConfidenceThreshold, iouThreshold, nmsResult);for (int i = 0; i < nmsResult.size(); ++i){int idx = nmsResult[i];DL_RESULT result;result.classId = class_ids[idx];result.confidence = confidences[idx];result.box = boxes[idx];oResult.push_back(result);}#ifdef benchmarkclock_t starttime_4 = clock();double pre_process_time = (double)(starttime_2 - starttime_1) / CLOCKS_PER_SEC * 1000;double process_time = (double)(starttime_3 - starttime_2) / CLOCKS_PER_SEC * 1000;double post_process_time = (double)(starttime_4 - starttime_3) / CLOCKS_PER_SEC * 1000;if (cudaEnable){std::cout << "[YOLO_V8(CUDA)]: " << pre_process_time << "ms pre-process, " << process_time << "ms inference, " << post_process_time << "ms post-process." << std::endl;}else{std::cout << "[YOLO_V8(CPU)]: " << pre_process_time << "ms pre-process, " << process_time << "ms inference, " << post_process_time << "ms post-process." << std::endl;}

#endif // benchmarkbreak;}case YOLO_CLS:case YOLO_CLS_HALF:{cv::Mat rawData;if (modelType == YOLO_CLS) {// FP32rawData = cv::Mat(1, this->classes.size(), CV_32F, output);} else {// FP16rawData = cv::Mat(1, this->classes.size(), CV_16F, output);rawData.convertTo(rawData, CV_32F);}float *data = (float *) rawData.data;DL_RESULT result;for (int i = 0; i < this->classes.size(); i++){result.classId = i;result.confidence = data[i];oResult.push_back(result);}break;}default:std::cout << "[YOLO_V8]: " << "Not support model type." << std::endl;}return RET_OK;}const char* YOLO_V8::WarmUpSession() {clock_t starttime_1 = clock();cv::Mat iImg = cv::Mat(cv::Size(imgSize.at(0), imgSize.at(1)), CV_8UC3);cv::Mat processedImg;PreProcess(iImg, imgSize, processedImg);if (modelType < 4){float* blob = new float[iImg.total() * 3];BlobFromImage(processedImg, blob);std::vector<int64_t> YOLO_input_node_dims = { 1, 3, imgSize.at(0), imgSize.at(1) };Ort::Value input_tensor = Ort::Value::CreateTensor<float>(Ort::MemoryInfo::CreateCpu(OrtDeviceAllocator, OrtMemTypeCPU), blob, 3 * imgSize.at(0) * imgSize.at(1),YOLO_input_node_dims.data(), YOLO_input_node_dims.size());auto output_tensors = session->Run(options, inputNodeNames.data(), &input_tensor, 1, outputNodeNames.data(),outputNodeNames.size());delete[] blob;clock_t starttime_4 = clock();double post_process_time = (double)(starttime_4 - starttime_1) / CLOCKS_PER_SEC * 1000;if (cudaEnable){std::cout << "[YOLO_V8(CUDA)]: " << "Cuda warm-up cost " << post_process_time << " ms. " << std::endl;}}else{

#ifdef USE_CUDAhalf* blob = new half[iImg.total() * 3];BlobFromImage(processedImg, blob);std::vector<int64_t> YOLO_input_node_dims = { 1,3,imgSize.at(0),imgSize.at(1) };Ort::Value input_tensor = Ort::Value::CreateTensor<half>(Ort::MemoryInfo::CreateCpu(OrtDeviceAllocator, OrtMemTypeCPU), blob, 3 * imgSize.at(0) * imgSize.at(1), YOLO_input_node_dims.data(), YOLO_input_node_dims.size());auto output_tensors = session->Run(options, inputNodeNames.data(), &input_tensor, 1, outputNodeNames.data(), outputNodeNames.size());delete[] blob;clock_t starttime_4 = clock();double post_process_time = (double)(starttime_4 - starttime_1) / CLOCKS_PER_SEC * 1000;if (cudaEnable){std::cout << "[YOLO_V8(CUDA)]: " << "Cuda warm-up cost " << post_process_time << " ms. " << std::endl;}

#endif}return RET_OK;

}

pro文件:

QT += core gui

QT +=multimedia

QT +=network

greaterThan(QT_MAJOR_VERSION, 4): QT += widgetsCONFIG += c++11# The following define makes your compiler emit warnings if you use

# any Qt feature that has been marked deprecated (the exact warnings

# depend on your compiler). Please consult the documentation of the

# deprecated API in order to know how to port your code away from it.

DEFINES += QT_DEPRECATED_WARNINGS# You can also make your code fail to compile if it uses deprecated APIs.

# In order to do so, uncomment the following line.

# You can also select to disable deprecated APIs only up to a certain version of Qt.

#DEFINES += QT_DISABLE_DEPRECATED_BEFORE=0x060000 # disables all the APIs deprecated before Qt 6.0.0SOURCES += \abstractvideosurface.cpp \inference.cpp \main.cpp \mainwindow.cppHEADERS += \abstractvideosurface.h \inference.h \mainwindow.hFORMS += \mainwindow.ui# 设置 ONNX Runtime 路径

ONNX_RUNTIME_PATH = D:/onnxruntime-win-x64-1.14.1# 添加头文件路径

INCLUDEPATH += $$ONNX_RUNTIME_PATH/include# 添加库文件路径

LIBS += -L$$ONNX_RUNTIME_PATH/lib# 链接 ONNX Runtime 的静态库(根据你实际使用的库名修改)

LIBS += -lonnxruntime# OpenCV 头文件路径

INCLUDEPATH += D:/opencv4.5.1/opencv/build/include# OpenCV 库文件路径(MSVC2017 64位)

LIBS += -LD:/opencv4.5.1/opencv/build/x64/vc15/lib \-lopencv_world451d # Default rules for deployment.

qnx: target.path = /tmp/$${TARGET}/bin

else: unix:!android: target.path = /opt/$${TARGET}/bin

!isEmpty(target.path): INSTALLS += target

其次会遇到一些问题:

1.C:\zyu\camera\inference.cpp:102: error: C2440: “=”: 无法从“const char [86]”转换为“char *”

解决方法:就是在inference.cpp中改为const char* Ret = nullptr,也就是遇到char*加上const即可,inference.h和inference.cpp完整代码中进行了修改

2.打开cuda: params.cudaEnable = true;出现:Could not locate zlibwapi.dll. Please make sure it is in your library path!

解决方法:就是在把zlibwapi.dll放在..\build-camera-Desktop_Qt_5_14_2_MSVC2017_64bit-Debug\debug下

3.卡顿原因:视频卡顿主要源于 YOLOv8 推理耗时高(约140ms/帧,CPU 限制),主线程处理推理和 UI 更新导致阻塞,QCamera 帧率或格式不匹配增加延迟,频繁内存分配(如 cv::Mat/QImage 转换)及 UDP 大数据传输进一步加剧问题。

解决方法:启用 CUDA 加速(需 FP16 模型),将推理移到单独 QThread,跳帧检测(每 2 帧一次),优化 QCamera 分辨率/帧率(640x480,15 FPS),减少 UDP 数据量(传 JSON 框坐标)并确保 fm.unmap() 释放内存。可以自己慢慢打磨。

4.关闭停不下来:懒得找,多按几次好像就能停下来。

5.针对pro文件配置问题,我推荐都将dll文件放入..\build-camera-Desktop_Qt_5_14_2_MSVC2017_64bit-Debug\debug下

6.配置:onnxruntime-win-x64-gpu-1.14.1,opencv4.5.1,qt5.14.2,msvc2017,debug和Release模式记得和opencv进行统一。