OpenShift Virtualization - Automatically assigning IP addresses to VMs on an ovn-k8s-cni-overlay network


Note: The steps in this article were verified in an OpenShift Local 4.19 environment.

Contents

- Create a NetworkAttachmentDefinition of type ovn-k8s-cni-overlay

- Deploy the DHCP service

- Create VMs that use DHCP

- References

The OVN-Kubernetes L2 overlay network is a layer 2 (L2) virtual network model in OpenShift, implemented by the OVN-Kubernetes CNI plugin on top of an overlay network. It gives Pods isolated, high-performance network connectivity across nodes.

Create a NetworkAttachmentDefinition of type ovn-k8s-cni-overlay

- Create a NetworkAttachmentDefinition of type ovn-k8s-cni-overlay named flatl2 in the my-vm namespace.

$ cat <<EOF | oc apply -f -
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: flatl2
  namespace: my-vm
spec:
  config: |
    {
      "cniVersion": "0.3.1",
      "name": "flatl2",
      "netAttachDefName": "my-vm/flatl2",
      "topology": "layer2",
      "type": "ovn-k8s-cni-overlay"
    }
EOF
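One detail worth checking before moving on: as far as I know, OVN-Kubernetes expects the netAttachDefName field to be exactly the namespace and name of the NetworkAttachmentDefinition, joined by a slash. The sketch below is a hypothetical helper (check_nad_ref is not part of oc) that pulls the field out of a config string with sed and compares it with the expected value. It assumes the key and its value sit on the same line.

```shell
# Hypothetical helper, not part of oc: succeed only when the
# netAttachDefName inside a NAD config equals <namespace>/<name>.
check_nad_ref() {
  config=$1
  expected="$2/$3"
  # Extract the quoted value that follows "netAttachDefName":
  ref=$(printf '%s\n' "$config" |
    sed -n 's/.*"netAttachDefName"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p')
  [ "$ref" = "$expected" ]
}

# Against a live cluster the config could be read with:
#   oc get net-attach-def flatl2 -n my-vm -o jsonpath='{.spec.config}'
config='{"cniVersion": "0.3.1","name": "flatl2","netAttachDefName": "my-vm/flatl2","topology": "layer2","type": "ovn-k8s-cni-overlay"}'
if check_nad_ref "$config" my-vm flatl2; then
  echo "netAttachDefName matches my-vm/flatl2"
fi
```

A mismatch here is easy to introduce when copying the definition into another namespace, which is why the check takes the namespace as a separate argument.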

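Deploy the DHCP service

- Grant the anyuid SCC to the ServiceAccount named default in the my-vm namespace.

$ oc adm policy add-scc-to-user anyuid -z default -n my-vm

- Deploy the container image that provides the DHCP service. The Pod running the DHCP server takes the address 192.168.123.1/24, and the server hands out addresses in the range 192.168.123.2 to 192.168.123.254. The resources below carry no namespace, so run the command with my-vm as the current project.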

$ cat <<EOF | oc apply -f -
kind: ConfigMap
apiVersion: v1
metadata:
  name: dhcp-server-conf
data:
  dhcpd.conf: |
    authoritative;
    default-lease-time 86400;
    max-lease-time 86400;
    subnet 192.168.123.0 netmask 255.255.255.0 {
      range 192.168.123.2 192.168.123.254;
      option broadcast-address 192.168.123.255;
    }
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dhcp-db
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dhcp-server
  labels:
    app: dhcp
spec:
  replicas: 1
  selector:
    matchLabels:
      app: dhcp
  template:
    metadata:
      labels:
        app: dhcp
      annotations:
        k8s.v1.cni.cncf.io/networks: '[{"name": "flatl2","ips": ["192.168.123.1/24"]}]'
    spec:
      containers:
        - name: server
          image: ghcr.io/maiqueb/ovn-k-secondary-net-dhcp:main
          args: ["-4", "-f", "-d", "--no-pid", "-cf", "/etc/dhcp/dhcpd.conf"]
          securityContext:
            runAsUser: 1000
            privileged: true
          volumeMounts:
            - name: multus-daemon-config
              mountPath: /etc/dhcp
              readOnly: true
            - name: dhcpdb
              mountPath: "/var/lib/dhcp"
      volumes:
        - name: multus-daemon-config
          configMap:
            name: dhcp-server-conf
            items:
              - key: dhcpd.conf
                path: dhcpd.conf
        - name: dhcpdb
          persistentVolumeClaim:
            claimName: dhcp-db
EOF
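The range statement in dhcpd.conf is inclusive on both ends, and each lease lasts 86400 seconds (24 hours), so the pool size caps how many VMs can hold an address at the same time. The following sketch computes that size with plain shell arithmetic. The function names ip_to_int and range_size are hypothetical helpers written for this illustration.

```shell
# Convert a dotted-quad IPv4 address to its integer value.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Number of addresses in an inclusive range such as dhcpd's "range".
range_size() {
  echo $(( $(ip_to_int "$2") - $(ip_to_int "$1") + 1 ))
}

range_size 192.168.123.2 192.168.123.254   # prints 253
```

With 192.168.123.1 reserved for the DHCP Pod, the 253 remaining host addresses of the /24 are all available for leases.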

- Confirm that the Pod running the DHCP server uses the address 192.168.123.1/24 on its net1 interface.

$ oc exec deploy/dhcp-server -- ip a show net1
3: net1@if921: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1400 qdisc noqueue state UP
    link/ether 0a:58:c0:a8:7b:01 brd ff:ff:ff:ff:ff:ff
    inet 192.168.123.1/24 brd 192.168.123.255 scope global net1
       valid_lft forever preferred_lft forever
    inet6 fe80::858:c0ff:fea8:7b01/64 scope link
       valid_lft forever preferred_lft forever
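The link/ether value in this output follows a pattern: to my knowledge, OVN-Kubernetes derives a Pod interface's MAC address from its IPv4 address by prefixing 0a:58 to the four address bytes written in hex. The sketch below reproduces that mapping so the output can be cross-checked. The function name ip_to_ovn_mac is a hypothetical helper, and the rule should be treated as an observation about this output, not a guarantee for every interface.

```shell
# Hypothetical helper: build the MAC that matches the pattern seen on
# the Pod's net1 interface (0a:58 followed by the IPv4 bytes in hex).
ip_to_ovn_mac() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split the address into its four octets
  IFS=$old_ifs
  printf '0a:58:%02x:%02x:%02x:%02x\n' "$1" "$2" "$3" "$4"
}

ip_to_ovn_mac 192.168.123.1   # prints 0a:58:c0:a8:7b:01
```

The result matches the link/ether field reported for net1, which is a quick way to confirm that the interface really carries the address requested in the Pod annotation.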

Create VMs that use DHCP

- Create two VMs, vm-server and vm-client, from the Fedora template. Both use the login fedora / fedora.

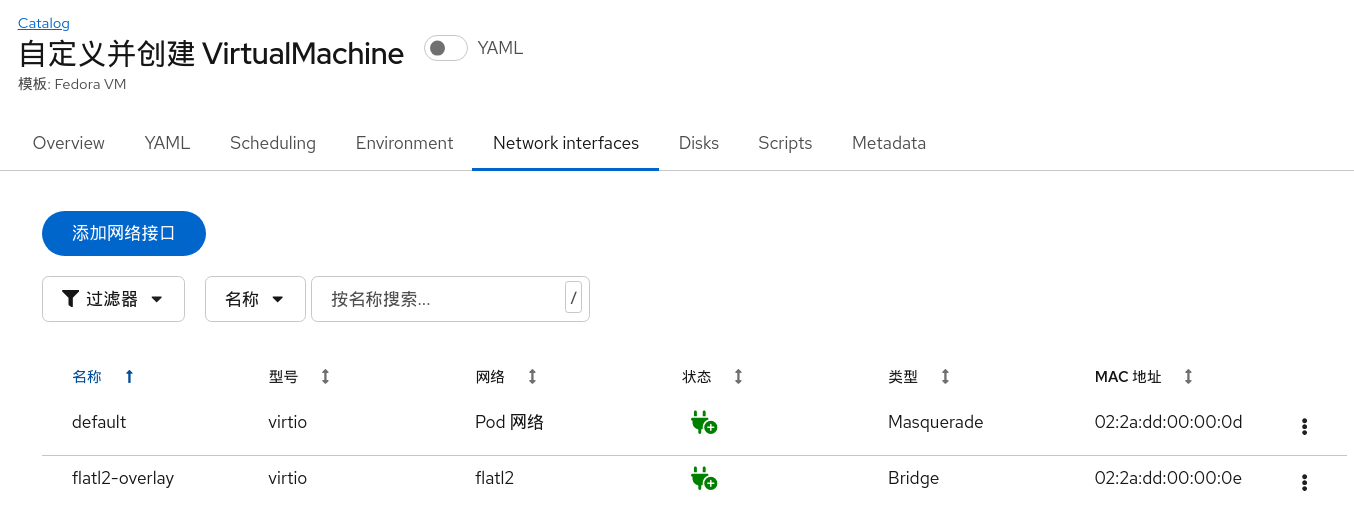

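Note: When creating each VM, add a second network interface attached to the ovn-k8s-cni-overlay network named flatl2 that was created earlier.

- Use the following two YAML snippets as the Cloud-init configuration for vm-server and vm-client respectively.

Cloud-init configuration for vm-server: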

networkData: |
  version: 2
  ethernets:
    eth1:
      dhcp4: true
userData: |-
  #cloud-config
  user: fedora
  password: fedora
  chpasswd: { expire: False }
  ssh_pwauth: true
  packages:
    - nginx
  runcmd:
    - [ "systemctl", "enable", "--now", "nginx" ]

Cloud-init configuration for vm-client:

networkData: |
  version: 2
  ethernets:
    eth1:
      dhcp4: true
userData: |-
  #cloud-config
  user: fedora
  password: fedora
  chpasswd: { expire: False }
  ssh_pwauth: true

- Once both VMs are running, check the address assigned to the eth1 interface of each one and confirm that both are in the 192.168.123.0/24 subnet.

$ oc get vmi vm-server -ojsonpath="{@.status.interfaces}" | jq
[
  {
    "infoSource": "domain, guest-agent",
    "interfaceName": "eth0",
    "ipAddress": "10.217.0.170",
    "ipAddresses": [
      "10.217.0.170"
    ],
    "linkState": "up",
    "mac": "02:2a:dd:00:00:04",
    "name": "default",
    "podInterfaceName": "eth0",
    "queueCount": 1
  },
  {
    "infoSource": "domain, guest-agent, multus-status",
    "interfaceName": "eth1",
    "ipAddress": "192.168.123.2",
    "ipAddresses": [
      "192.168.123.2",
      "fe80::1823:865c:93cd:6798"
    ],
    "linkState": "up",
    "mac": "02:2a:dd:00:00:05",
    "name": "flatl2-overlay",
    "podInterfaceName": "podb5ec4ca89f1",
    "queueCount": 1
  }
]

$ oc get vmi vm-client -ojsonpath="{@.status.interfaces}" | jq
[
  {
    "infoSource": "domain, guest-agent",
    "interfaceName": "eth0",
    "ipAddress": "10.217.0.171",
    "ipAddresses": [
      "10.217.0.171"
    ],
    "linkState": "up",
    "mac": "02:2a:dd:00:00:07",
    "name": "default",
    "podInterfaceName": "eth0",
    "queueCount": 1
  },
  {
    "infoSource": "domain, guest-agent, multus-status",
    "interfaceName": "eth1",
    "ipAddress": "192.168.123.3",
    "ipAddresses": [
      "192.168.123.3",
      "fe80::71c7:9a88:8243:9eb9"
    ],
    "linkState": "up",
    "mac": "02:2a:dd:00:00:08",
    "name": "flatl2-overlay",
    "podInterfaceName": "podb5ec4ca89f1",
    "queueCount": 1
  }
]
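When only the secondary address is of interest, the full interface list can be narrowed with a jsonpath filter instead of reading the whole JSON. The sketch below wraps that in a small function. The name vmi_ip is a hypothetical helper, and flatl2-overlay is the interface name that appears in the output above, so it would differ if the second interface were named otherwise when the VM was created.

```shell
# Hypothetical wrapper: print the IP address of the VMI interface whose
# "name" field in .status.interfaces equals the given network name.
vmi_ip() {
  vmi=$1
  network=$2
  oc get vmi "$vmi" \
    -o jsonpath="{.status.interfaces[?(@.name==\"$network\")].ipAddress}"
}

# Usage against a live cluster (not executed here):
#   vmi_ip vm-server flatl2-overlay
#   vmi_ip vm-client flatl2-overlay
```

Filtering on the name field avoids depending on the position of the interface in the list, which is not something this article establishes as stable.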

- From inside the vm-client VM, confirm that the nginx service on vm-server is reachable through its address in the 192.168.123.0/24 subnet.

[fedora@vm-client ~]$ curl -k 192.168.123.2
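Because nginx is installed by Cloud-init during the first boot of vm-server, the request may fail for a while after the VM reports as running. The following is a minimal retry sketch to run inside vm-client. The function name wait_for_http and the WAIT_INTERVAL variable are hypothetical names introduced for this illustration, and the default of 30 attempts at 10 second intervals is an arbitrary assumption.

```shell
# Hypothetical helper: poll a URL until curl succeeds or attempts run out.
# Arguments: URL, optional number of attempts (default 30).
# WAIT_INTERVAL sets the pause between attempts in seconds (default 10).
wait_for_http() {
  url=$1
  attempts=${2:-30}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # -s: quiet, -f: fail on HTTP errors, --max-time: bound each attempt
    if curl -s -f -o /dev/null --max-time 5 "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep "${WAIT_INTERVAL:-10}"
  done
  return 1
}

# Usage inside vm-client (not executed here):
#   wait_for_http http://192.168.123.2 && echo "nginx is reachable"
```

The address 192.168.123.2 is the lease vm-server received in the output shown earlier; on another run the DHCP server may hand out a different address from the range.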

References

https://www.redhat.com/en/blog/secondary-network-overlays-virtualization-workloads

https://github.com/maiqueb/ovn-k-secondary-net-dhcp/blob/main/README.md