(5) Adding a Factory Class to the .NET AI Console App

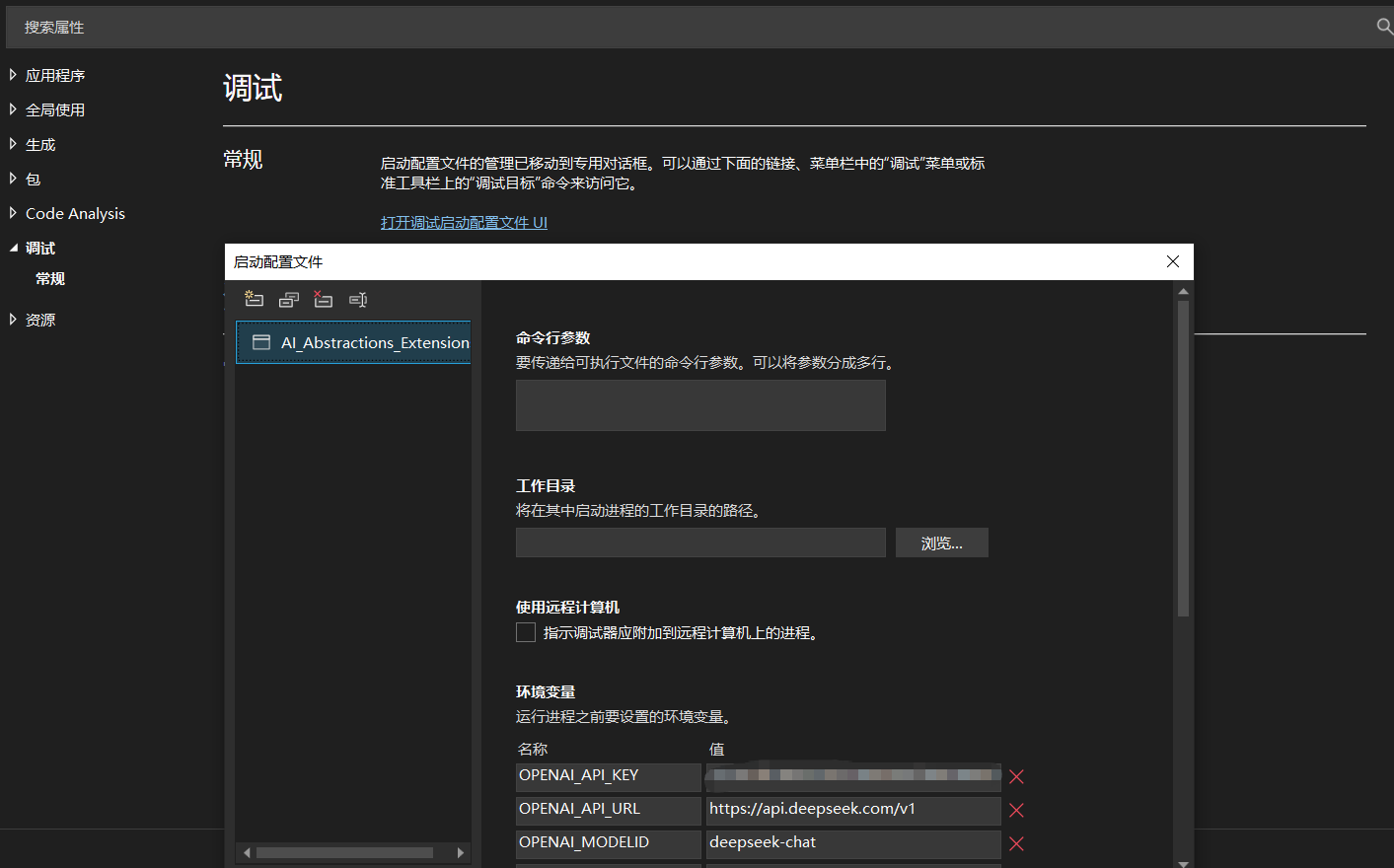

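Environment Variable Configuration

All model settings (API endpoint URL, API_KEY, and model ID) are read from environment variables.

The relevant code is as follows: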

string apikey = Environment.GetEnvironmentVariable("OPENAI_API_KEY"); // API key from an environment variable
string model = Environment.GetEnvironmentVariable("OPENAI_MODELID"); // non-reasoning model
string endpoint = Environment.GetEnvironmentVariable("OPENAI_API_URL"); // OpenAI-compatible API endpoint
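All three values are required, and a missing variable would otherwise only surface later, when the client is constructed. A minimal sketch of failing fast instead; `GetRequiredEnv` is a hypothetical helper, not part of the original code, and the demo sets the variable itself only so that it runs anywhere:

```csharp
using System;

// Hypothetical helper (not from the original code): return the variable's value,
// or throw immediately with a message that names the missing variable.
static string GetRequiredEnv(string name) =>
    Environment.GetEnvironmentVariable(name) is { Length: > 0 } value
        ? value
        : throw new InvalidOperationException($"Environment variable '{name}' is not set.");

// Demo only: set the variable for this process so the lookup succeeds.
Environment.SetEnvironmentVariable("OPENAI_MODELID", "deepseek-chat");

string model = GetRequiredEnv("OPENAI_MODELID");
Console.WriteLine(model); // deepseek-chat
```

Each `Environment.GetEnvironmentVariable(...)` call in the snippet above could be swapped for such a helper.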

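Reading settings from environment variables only works when a single model is loaded; it falls short once multiple models and platforms are involved. The dependency-injection code for the model service has a further problem: the model that the ChatClient instance actually calls is fixed when the instance is created, before it is registered, so passing a ModelId at request time has no effect.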

// model is required
IChatClient chatclient = new OpenAI.Chat.ChatClient(model, new ApiKeyCredential(apikey), new OpenAIClientOptions
{
    Endpoint = new Uri(endpoint) // API endpoint
})
.AsIChatClient();

// (some content omitted)

// Build the Host instance
var host = builder.Build();

// Resolve the IChatClient implementation
IChatClient client = host.Services.GetRequiredService<IChatClient>();

//------------------------- chat options -------------------------
ChatOptions chatOptions = new ChatOptions();
// Temperature 0.1-0.5 (rigorous, reproducible)
chatOptions.Temperature = 0.5f;
// top-p 0.7-0.85
chatOptions.TopP = 0.7f;
// top-k 20-40
chatOptions.TopK = 20;
// Model service ID
chatOptions.ModelId = "deepseek-reasoner";
// Conversation ID
//chatOptions.ConversationId = Guid.NewGuid().ToString();
//------------------------- chat options -------------------------

while (true)
{
    //------------------------- chat request -------------------------
    await foreach (ChatResponseUpdate update in client.GetStreamingResponseAsync(history, chatOptions))
    {
        // Print the streamed response in real time
        Console.Write(update);
        // Store each update of this response
        updates.Add(update);
    }
    //------------------------- chat request -------------------------

    // (some content omitted)
}
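The `await foreach` loop consumes an `IAsyncEnumerable`: each update is printed as soon as it arrives and is also kept, so the full reply can be assembled afterwards. A self-contained sketch of that pattern, assuming a hypothetical `FakeStreamAsync` in place of `GetStreamingResponseAsync` and plain strings in place of `ChatResponseUpdate`:

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Threading.Tasks;

// Hypothetical stand-in for GetStreamingResponseAsync: yields text chunks asynchronously.
static async IAsyncEnumerable<string> FakeStreamAsync(string text, int chunkSize)
{
    for (int i = 0; i < text.Length; i += chunkSize)
    {
        await Task.Yield(); // simulate waiting for the next chunk from the network
        yield return text.Substring(i, Math.Min(chunkSize, text.Length - i));
    }
}

var updates = new List<string>(); // every chunk, in arrival order
var full = new StringBuilder();   // the assembled reply

await foreach (string chunk in FakeStreamAsync("Hello from the model.", 5))
{
    Console.Write(chunk); // print incrementally, as the console loop does
    updates.Add(chunk);   // keep the chunk for later use (e.g. chat history)
    full.Append(chunk);
}
Console.WriteLine();

string reply = full.ToString();
```

The printed text is identical to the final reply; the only difference is that the user sees it while it is still being generated.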

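Although a model ID is passed to GetStreamingResponseAsync() before the request is sent, the library source shows that the request actually uses the model ID (_modelid) supplied when the client instance was constructed; the ModelId option is never used. The relevant excerpts: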

public IAsyncEnumerable<ChatResponseUpdate> GetStreamingResponseAsync(IEnumerable<ChatMessage> messages, ChatOptions? options = null, CancellationToken cancellationToken = default)
{
    // (omitted)
    var openAIChatMessages = ToOpenAIChatMessages(messages, options);
    var openAIOptions = ToOpenAIOptions(options);
    // (omitted)
}

private ChatCompletionOptions ToOpenAIOptions(ChatOptions? options)
{
    // (creation of `result` omitted)
    result.FrequencyPenalty ??= options.FrequencyPenalty;
    result.MaxOutputTokenCount ??= options.MaxOutputTokens;
    result.TopP ??= options.TopP;
    result.PresencePenalty ??= options.PresencePenalty;
    result.Temperature ??= options.Temperature;
    result.Seed ??= options.Seed;

    if (options.StopSequences is { Count: > 0 } stopSequences)
    {
        // (omitted)
    }

    if (options.Tools is { Count: > 0 } tools)
    {
        // (omitted)
    }

    result.ResponseFormat ??= ToOpenAIChatResponseFormat(options.ResponseFormat, options);
    // options.ModelId is never read in this mapping
    return result;
}

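Creating the Configuration Mapping

The configuration structure in appsettings.Development.json is as follows (the generic host loads this file only when the environment is Development, e.g. with DOTNET_ENVIRONMENT=Development):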

{
  "AiPlatforms": [
    {
      "Name": "DeepSeek",
      "Type": "OpenAICompatible",
      "Url": "https://api.deepseek.com/v1",
      "Token": "<access token>",
      "Models": [
        { "Name": "ds-chat", "ModelId": "deepseek-chat" },
        { "Name": "ds-chat-think", "ModelId": "deepseek-reasoner" }
      ]
    },
    {
      "Name": "硅基流动",
      "Type": "OpenAICompatible",
      "Url": "https://api.siliconflow.cn/v1",
      "Token": "<access token>",
      "Models": []
    }
  ]
}
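To see how this nested structure turns into lookup keys, here is a minimal sketch that parses the same shape with System.Text.Json's `JsonDocument` (an assumption for illustration; the article itself binds the section through `IConfiguration`) and builds a model-name to (platform, URL, model ID) table. It rejects duplicate model names, because each `Name` later becomes a registration key and, as far as I know, a duplicate key would silently shadow the earlier registration:

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Same shape as appsettings.Development.json, with placeholder tokens.
const string json = """
{
  "AiPlatforms": [
    { "Name": "DeepSeek", "Type": "OpenAICompatible", "Url": "https://api.deepseek.com/v1", "Token": "<token>",
      "Models": [ { "Name": "ds-chat", "ModelId": "deepseek-chat" },
                  { "Name": "ds-chat-think", "ModelId": "deepseek-reasoner" } ] },
    { "Name": "硅基流动", "Type": "OpenAICompatible", "Url": "https://api.siliconflow.cn/v1", "Token": "<token>",
      "Models": [] }
  ]
}
""";

// Hypothetical lookup: model Name -> (platform name, base URL, model ID sent to the API)
var lookup = new Dictionary<string, (string Platform, string Url, string ModelId)>();

using JsonDocument doc = JsonDocument.Parse(json);
foreach (JsonElement platform in doc.RootElement.GetProperty("AiPlatforms").EnumerateArray())
{
    string platformName = platform.GetProperty("Name").GetString()!;
    string url = platform.GetProperty("Url").GetString()!;
    foreach (JsonElement model in platform.GetProperty("Models").EnumerateArray())
    {
        string name = model.GetProperty("Name").GetString()!;
        string modelId = model.GetProperty("ModelId").GetString()!;
        // TryAdd returns false for a duplicate name instead of overwriting the entry
        if (!lookup.TryAdd(name, (platformName, url, modelId)))
            throw new InvalidOperationException($"Duplicate model name: {name}");
    }
}

foreach (var (key, entry) in lookup)
    Console.WriteLine($"{key} -> {entry.Platform} / {entry.ModelId}");
```

The platform with an empty `Models` array contributes no entries, so only `ds-chat` and `ds-chat-think` can be requested by name.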

Platform configuration class AiPlatformConfig. The top level of the configuration distinguishes between service platforms.

/// <summary>
/// Represents an AI platform (e.g. OpenAI, Azure, a self-hosted gateway)
/// </summary>
public record AiPlatformConfig
{
    // Platform name
    public required string Name { get; init; }
    // Platform service URL
    public required string Url { get; init; }
    // Platform access token
    public required string Token { get; init; }
    // Model service type
    public required ModelServiceType Type { get; init; }
    // Models supported on this platform
    public required List<ModelConfig> Models { get; init; }
    // Optional: extra platform-level settings
    public Dictionary<string, string>? Properties { get; init; }
}

Model configuration class ModelConfig:

/// <summary>
/// Represents a specific model on a platform
/// </summary>
public record ModelConfig
{
    // Key used by the factory to look up the client
    public required string Name { get; init; }
    // Model identifier actually sent to the API
    public required string ModelId { get; init; }
    // Custom properties
    public Dictionary<string, string>? Properties { get; init; }
}

Model service type ModelServiceType:

// Supported access modes
public enum ModelServiceType
{
    OpenAICompatible, // OpenAI-compatible
    ProxyGateway,     // proxy gateway
    CustomClient      // custom client
}

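Factory Interface

The ChatClient instances are registered through the keyed (named) service registration supported by the built-in dependency injection container (available since .NET 8), and an abstract interface, IModelServiceFactory, serves as the injection point.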

/// <summary>
/// Factory that manages all model services and supports multiple access modes.
/// </summary>
public interface IModelServiceFactory
{
    /// <summary>
    /// Gets the IChatClient instance with the specified name.
    /// </summary>
    IChatClient GetClient(string name);
}
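The contract boils down to "name in, client out, created once". A minimal DI-free sketch of that idea, assuming a plain `Dictionary<string, Lazy<T>>` in place of the container's keyed registrations and a string standing in for `IChatClient` (all names hypothetical):

```csharp
using System;
using System.Collections.Generic;

int created = 0; // counts how many "clients" were actually constructed

// Hypothetical registry: model name -> lazily created client (a string stands in for IChatClient)
var registry = new Dictionary<string, Lazy<string>>(StringComparer.Ordinal)
{
    ["ds-chat"] = new Lazy<string>(() => { created++; return "client(deepseek-chat)"; }),
    ["ds-chat-think"] = new Lazy<string>(() => { created++; return "client(deepseek-reasoner)"; }),
};

// Mirrors the GetClient contract: unknown names fail loudly, known names are created on first use
string GetClient(string name) =>
    registry.TryGetValue(name, out var lazy)
        ? lazy.Value
        : throw new InvalidOperationException($"Model service not found: {name}");

string first = GetClient("ds-chat");
string second = GetClient("ds-chat");
Console.WriteLine($"{first}, same instance: {ReferenceEquals(first, second)}, created: {created}");
// client(deepseek-chat), same instance: True, created: 1
```

Wrapping each client in `Lazy<T>` means configured but unused models never open a connection, and repeated lookups return the same instance.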

Factory Implementation (AI-assisted)

Create the ModelServiceFactory class, which implements the IModelServiceFactory interface.

using System.ClientModel;
using Microsoft.Extensions.AI;
using Microsoft.Extensions.Caching.Distributed;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Logging;
using Microsoft.Extensions.Options;
using OpenAI;

public class ModelServiceFactory : IModelServiceFactory
{
    // HttpClient factory
    private readonly IHttpClientFactory _httpClientFactory;
    // Service collection in which the named chat clients are registered
    private readonly IServiceCollection _services;
    // Service provider built from that collection
    private readonly IServiceProvider _serviceProvider;
    // Logger
    private readonly ILogger<ModelServiceFactory> _logger;

    public ModelServiceFactory(IServiceCollection services,
        IHttpClientFactory httpClientFactory,
        IEnumerable<AiPlatformConfig> platforms,
        ILogger<ModelServiceFactory> logger)
    {
        _services = services;
        _httpClientFactory = httpClientFactory;
        _logger = logger;
        // Iterate over the platform configurations
        foreach (var platform in platforms)
        {
            // Build one chat client per model on the platform, keyed by the model's name
            foreach (var model in platform.Models)
            {
                var key = model.Name;
                // Register a keyed service; Lazy defers client creation until first use
                services.AddKeyedSingleton(key, new Lazy<IChatClient>(() => CreateClient(platform, model)));
                _logger.LogInformation("Registered keyed model service: {Key}", key);
            }
        }
        _serviceProvider = _services.BuildServiceProvider();
    }

    public IChatClient GetClient(string name)
    {
        // Look up the client by its model service name
        Lazy<IChatClient>? lazyClient = _serviceProvider.GetKeyedService<Lazy<IChatClient>>(name);
        if (lazyClient == null)
            throw new InvalidOperationException($"Model service not found: {name}");
        _logger.LogInformation("Resolved keyed model service: {Name}", name);
        return lazyClient.Value;
    }

    /// <summary>
    /// Creates a chat client instance from the platform and model configuration.
    /// </summary>
    /// <param name="platform">Platform configuration</param>
    /// <param name="model">Model configuration</param>
    /// <returns>The chat client wrapped in middleware</returns>
    /// <exception cref="NotSupportedException">The platform type is not supported.</exception>
    private IChatClient CreateClient(AiPlatformConfig platform, ModelConfig model)
    {
        IChatClient innerClient = platform.Type switch
        {
            // OpenAI-compatible API service
            ModelServiceType.OpenAICompatible =>
                new OpenAI.Chat.ChatClient(model.ModelId, new ApiKeyCredential(platform.Token), new OpenAIClientOptions
                {
                    Endpoint = new Uri(platform.Url) // API endpoint
                }).AsIChatClient(),
            // (unrelated cases omitted)
            // Unsupported types throw
            _ => throw new NotSupportedException($"Unsupported service type: {platform.Type}")
        };

        // Build the client with its middleware pipeline
        return innerClient.AsBuilder()
            // Cache (in-memory distributed cache)
            .UseDistributedCache(new MemoryDistributedCache(Options.Create(new MemoryDistributedCacheOptions())))
            // Logging
            .UseLogging()
            // Telemetry
            .UseOpenTelemetry()
            // Local function invocation
            .UseFunctionInvocation()
            .Build(_serviceProvider);
    }
}
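The `Use...` calls compose decorators around the inner client. As I understand `ChatClientBuilder`, the first middleware added becomes the outermost layer, so with the cache added first, a cache hit returns before logging, telemetry, and function invocation run. A minimal sketch of that composition order with plain delegates (hypothetical names; this is not the library's API):

```csharp
using System;
using System.Collections.Generic;

var calls = new List<string>(); // records which layer ran, in order

// Innermost "client": pretends to call the model
Func<string, string> inner = prompt => { calls.Add("model"); return $"answer({prompt})"; };

// Middleware in the order it is added (cache first, as in the builder chain above)
var cache = new Dictionary<string, string>();
var middleware = new List<Func<Func<string, string>, Func<string, string>>>
{
    next => prompt =>
    {
        calls.Add("cache");
        if (cache.TryGetValue(prompt, out var hit)) return hit; // short-circuit on a hit
        return cache[prompt] = next(prompt);
    },
    next => prompt => { calls.Add("logging"); return next(prompt); },
};

// Wrap in reverse so the first-added middleware ends up outermost
Func<string, string> client = inner;
for (int i = middleware.Count - 1; i >= 0; i--)
    client = middleware[i](client);

client("hi");
client("hi");
Console.WriteLine(string.Join(" > ", calls)); // cache > logging > model > cache
```

The second call never reaches the logging layer, which is worth keeping in mind when deciding where caching sits relative to logging and telemetry.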

Creating the Extension Class

Create the extension class ModelServiceExtensions to simplify registration.

using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Logging;

public static class ModelServiceExtensions
{
    // Registers the model services from configuration
    public static IServiceCollection AddModelServices(this IServiceCollection services, IConfiguration configuration)
    {
        // Read the AiPlatforms section
        var platforms = configuration.GetSection("AiPlatforms").Get<List<AiPlatformConfig>>()
            ?? throw new InvalidOperationException("AiPlatforms configuration is missing.");
        // Register IHttpClientFactory
        services.AddHttpClient();
        // Register the platform configuration list as a singleton
        services.AddSingleton<IEnumerable<AiPlatformConfig>>(platforms);
        // Build a temporary provider to resolve the factory's dependencies
        // (this is a separate container from the one the host builds later)
        IServiceProvider provider = services.BuildServiceProvider();
        IHttpClientFactory? clientFactory = provider.GetService<IHttpClientFactory>();
        IEnumerable<AiPlatformConfig>? platforms2 = provider.GetService<IEnumerable<AiPlatformConfig>>();
        if (clientFactory == null || platforms2 == null)
        {
            throw new InvalidOperationException("IHttpClientFactory or the AiPlatforms configuration could not be resolved.");
        }
        ILogger<ModelServiceFactory> logger = provider.GetRequiredService<ILogger<ModelServiceFactory>>();
        // Create the factory instance
        IModelServiceFactory modelServiceFactory = new ModelServiceFactory(services, clientFactory, platforms2, logger);
        // Register the factory instance under its interface
        services.AddSingleton<IModelServiceFactory>(modelServiceFactory);
        return services;
    }
}

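Main Program Changes

using Microsoft.Extensions.AI;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Hosting;

internal class Program
{
    static async Task Main(string[] args)
    {
        // Create the default host application builder
        var builder = Host.CreateApplicationBuilder();

        //------------------------- cache middleware -------------------------
        // Add the cache service, with memory as the storage medium
        builder.Services.AddDistributedMemoryCache();
        //------------------------- cache middleware -------------------------

        // Add the model services
        builder.Services.AddModelServices(builder.Configuration);

        // Build the Host instance
        IHost host = builder.Build();

        // Name of the IChatClient instance to use
        string clientname = "ds-chat";

        // Resolve the factory, then get the IChatClient implementation by name
        IModelServiceFactory serviceFactory = host.Services.GetRequiredService<IModelServiceFactory>();
        IChatClient client = serviceFactory.GetClient(clientname);

        // (unchanged content omitted)
    }
}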

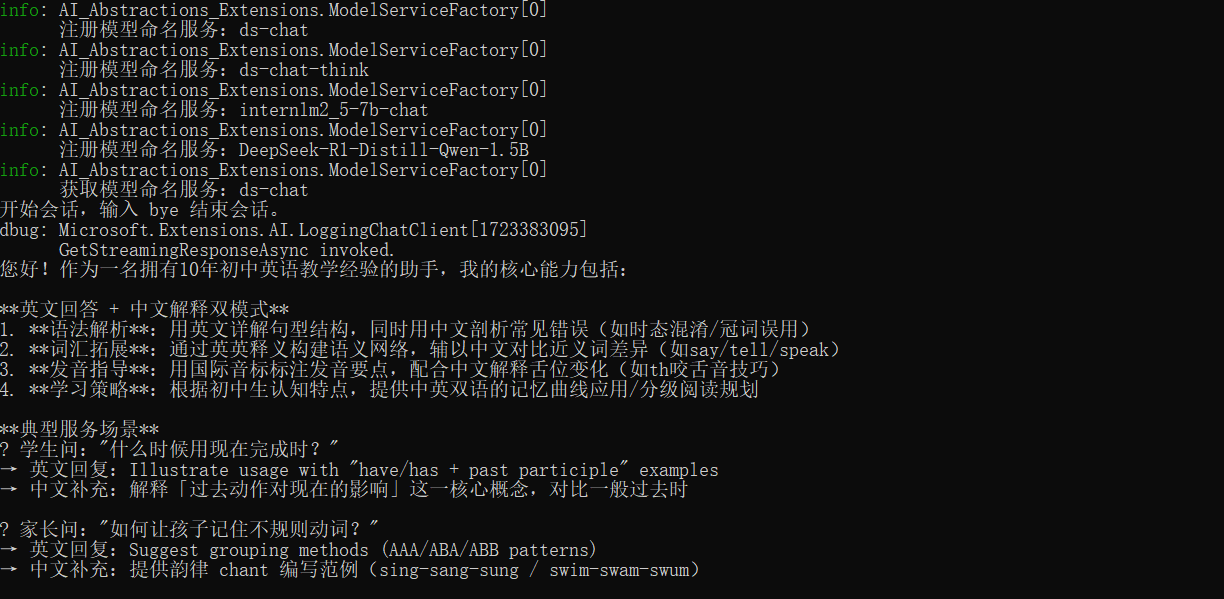

The output is as follows: