Project 1: FFMPEG Streamer Walkthrough (Part 1): Key FFMPEG Structures

I. About This Chapter

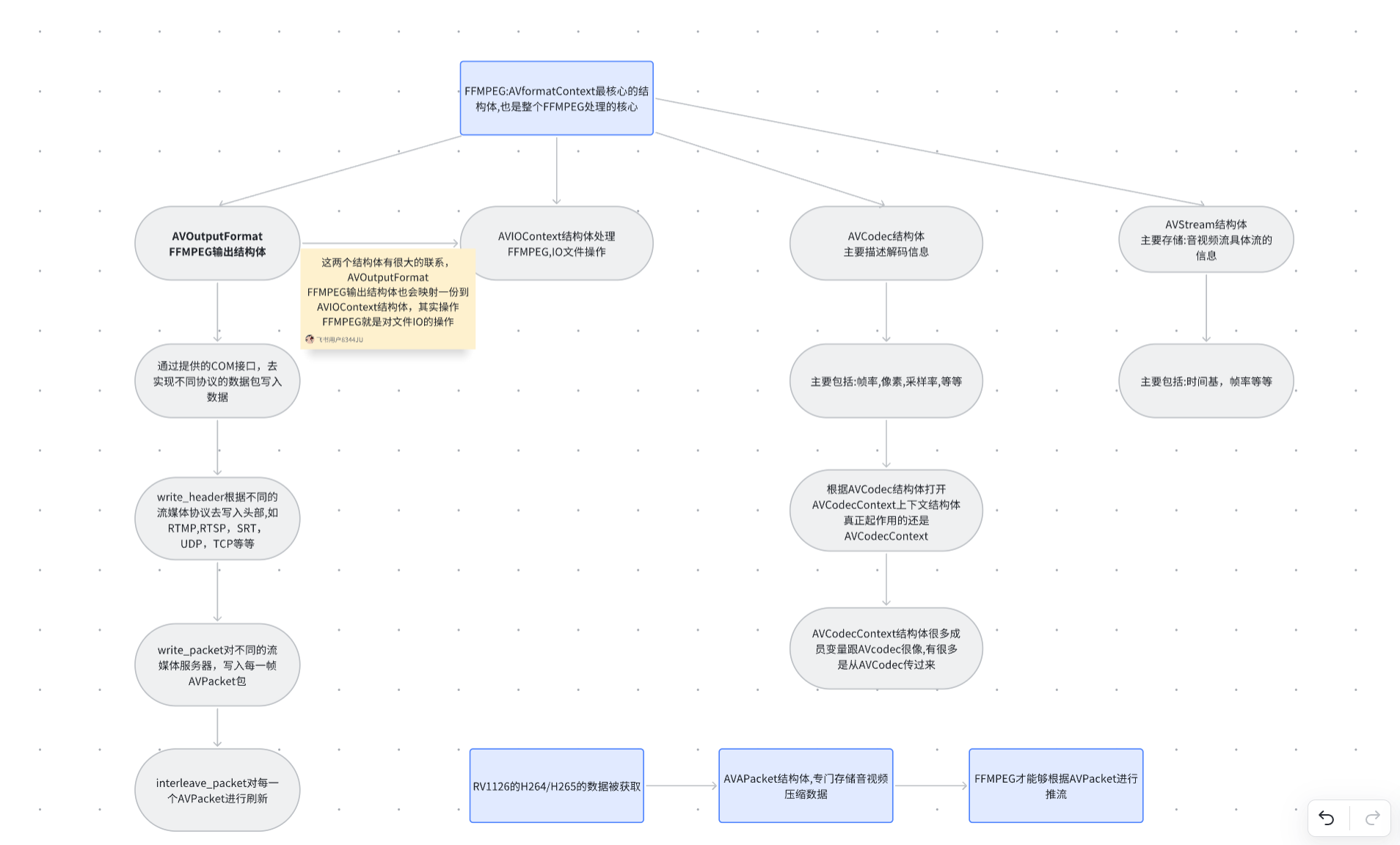

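This chapter introduces FFMPEG's core structures. FFMPEG is the Swiss Army knife of audio and video: it offers a rich set of processing interfaces for encoding, decoding, streaming, filtering, and more. In this project, FFMPEG's main job is push streaming. It takes the video bitstream encoded by the RV1126 and pushes it to a streaming media server through the FFMPEG framework.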

II. Key FFMPEG Structures

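FFMPEG has eight particularly important structures: AVFormatContext, AVOutputFormat, AVStream, AVCodec, AVCodecContext, AVPacket, AVFrame, and AVIOContext. They run through all of FFMPEG's core functionality, and they are also the most important structures in this project. We go through them one by one below.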

2.1. The AVFormatContext structure

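AVFormatContext is the top-level structure that ties everything else together. Its main role is to handle core functions such as muxing (packaging streams into a container) and demuxing (unpacking them). Here are the members of AVFormatContext: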

```c
typedef struct AVFormatContext {
    /**
     * A class for logging and @ref avoptions. Set by avformat_alloc_context().
     * Exports (de)muxer private options if they exist.
     */
    const AVClass *av_class;

    /**
     * The input container format.
     *
     * Demuxing only, set by avformat_open_input().
     */
    ff_const59 struct AVInputFormat *iformat;

    /**
     * The output container format.
     *
     * Muxing only, must be set by the caller before avformat_write_header().
     */
    ff_const59 struct AVOutputFormat *oformat;

    /**
     * Format private data. This is an AVOptions-enabled struct
     * if and only if iformat/oformat.priv_class is not NULL.
     *
     * - muxing: set by avformat_write_header()
     * - demuxing: set by avformat_open_input()
     */
    void *priv_data;

    /**
     * I/O context.
     *
     * - demuxing: either set by the user before avformat_open_input() (then
     *             the user must close it manually) or set by avformat_open_input().
     * - muxing: set by the user before avformat_write_header(). The caller must
     *           take care of closing / freeing the IO context.
     *
     * Do NOT set this field if AVFMT_NOFILE flag is set in
     * iformat/oformat.flags. In such a case, the (de)muxer will handle
     * I/O in some other way and this field will be NULL.
     */
    AVIOContext *pb;

    /* stream info */
    /**
     * Flags signalling stream properties. A combination of AVFMTCTX_*.
     * Set by libavformat.
     */
    int ctx_flags;

    /**
     * Number of elements in AVFormatContext.streams.
     *
     * Set by avformat_new_stream(), must not be modified by any other code.
     */
    unsigned int nb_streams;
    /**
     * A list of all streams in the file. New streams are created with
     * avformat_new_stream().
     *
     * - demuxing: streams are created by libavformat in avformat_open_input().
     *             If AVFMTCTX_NOHEADER is set in ctx_flags, then new streams may also
     *             appear in av_read_frame().
     * - muxing: streams are created by the user before avformat_write_header().
     *
     * Freed by libavformat in avformat_free_context().
     */
    AVStream **streams;

#if FF_API_FORMAT_FILENAME
    /**
     * input or output filename
     *
     * - demuxing: set by avformat_open_input()
     * - muxing: may be set by the caller before avformat_write_header()
     *
     * @deprecated Use url instead.
     */
    attribute_deprecated
    char filename[1024];
#endif

    /**
     * input or output URL. Unlike the old filename field, this field has no
     * length restriction.
     *
     * - demuxing: set by avformat_open_input(), initialized to an empty
     *             string if url parameter was NULL in avformat_open_input().
     * - muxing: may be set by the caller before calling avformat_write_header()
     *           (or avformat_init_output() if that is called first) to a string
     *           which is freeable by av_free(). Set to an empty string if it
     *           was NULL in avformat_init_output().
     *
     * Freed by libavformat in avformat_free_context().
     */
    char *url;

    /**
     * Position of the first frame of the component, in
     * AV_TIME_BASE fractional seconds. NEVER set this value directly:
     * It is deduced from the AVStream values.
     *
     * Demuxing only, set by libavformat.
     */
    int64_t start_time;

    /**
     * Duration of the stream, in AV_TIME_BASE fractional
     * seconds. Only set this value if you know none of the individual stream
     * durations and also do not set any of them. This is deduced from the
     * AVStream values if not set.
     *
     * Demuxing only, set by libavformat.
     */
    int64_t duration;

    /**
     * Total stream bitrate in bit/s, 0 if not
     * available. Never set it directly if the file_size and the
     * duration are known as FFmpeg can compute it automatically.
     */
    int64_t bit_rate;

    unsigned int packet_size;
    int max_delay;

    /**
     * Flags modifying the (de)muxer behaviour. A combination of AVFMT_FLAG_*.
     * Set by the user before avformat_open_input() / avformat_write_header().
     */
    int flags;
#define AVFMT_FLAG_GENPTS       0x0001 ///< Generate missing pts even if it requires parsing future frames.
#define AVFMT_FLAG_IGNIDX       0x0002 ///< Ignore index.
#define AVFMT_FLAG_NONBLOCK     0x0004 ///< Do not block when reading packets from input.
#define AVFMT_FLAG_IGNDTS       0x0008 ///< Ignore DTS on frames that contain both DTS & PTS
#define AVFMT_FLAG_NOFILLIN     0x0010 ///< Do not infer any values from other values, just return what is stored in the container
#define AVFMT_FLAG_NOPARSE      0x0020 ///< Do not use AVParsers, you also must set AVFMT_FLAG_NOFILLIN as the fillin code works on frames and no parsing -> no frames. Also seeking to frames can not work if parsing to find frame boundaries has been disabled
#define AVFMT_FLAG_NOBUFFER     0x0040 ///< Do not buffer frames when possible
#define AVFMT_FLAG_CUSTOM_IO    0x0080 ///< The caller has supplied a custom AVIOContext, don't avio_close() it.
#define AVFMT_FLAG_DISCARD_CORRUPT  0x0100 ///< Discard frames marked corrupted
#define AVFMT_FLAG_FLUSH_PACKETS    0x0200 ///< Flush the AVIOContext every packet.
/**
 * When muxing, try to avoid writing any random/volatile data to the output.
 * This includes any random IDs, real-time timestamps/dates, muxer version, etc.
 *
 * This flag is mainly intended for testing.
 */
#define AVFMT_FLAG_BITEXACT         0x0400
#if FF_API_LAVF_MP4A_LATM
#define AVFMT_FLAG_MP4A_LATM    0x8000 ///< Deprecated, does nothing.
#endif
#define AVFMT_FLAG_SORT_DTS    0x10000 ///< try to interleave outputted packets by dts (using this flag can slow demuxing down)
#define AVFMT_FLAG_PRIV_OPT    0x20000 ///< Enable use of private options by delaying codec open (this could be made default once all code is converted)
#if FF_API_LAVF_KEEPSIDE_FLAG
#define AVFMT_FLAG_KEEP_SIDE_DATA 0x40000 ///< Deprecated, does nothing.
#endif
#define AVFMT_FLAG_FAST_SEEK   0x80000 ///< Enable fast, but inaccurate seeks for some formats
#define AVFMT_FLAG_SHORTEST   0x100000 ///< Stop muxing when the shortest stream stops.
#define AVFMT_FLAG_AUTO_BSF   0x200000 ///< Add bitstream filters as requested by the muxer

    /**
     * Maximum size of the data read from input for determining
     * the input container format.
     * Demuxing only, set by the caller before avformat_open_input().
     */
    int64_t probesize;

    /**
     * Maximum duration (in AV_TIME_BASE units) of the data read
     * from input in avformat_find_stream_info().
     * Demuxing only, set by the caller before avformat_find_stream_info().
     * Can be set to 0 to let avformat choose using a heuristic.
     */
    int64_t max_analyze_duration;

    const uint8_t *key;
    int keylen;

    unsigned int nb_programs;
    AVProgram **programs;

    /**
     * Forced video codec_id.
     * Demuxing: Set by user.
     */
    enum AVCodecID video_codec_id;

    /**
     * Forced audio codec_id.
     * Demuxing: Set by user.
     */
    enum AVCodecID audio_codec_id;

    /**
     * Forced subtitle codec_id.
     * Demuxing: Set by user.
     */
    enum AVCodecID subtitle_codec_id;

    /**
     * Maximum amount of memory in bytes to use for the index of each stream.
     * If the index exceeds this size, entries will be discarded as
     * needed to maintain a smaller size. This can lead to slower or less
     * accurate seeking (depends on demuxer).
     * Demuxers for which a full in-memory index is mandatory will ignore
     * this.
     * - muxing: unused
     * - demuxing: set by user
     */
    unsigned int max_index_size;

    /**
     * Maximum amount of memory in bytes to use for buffering frames
     * obtained from realtime capture devices.
     */
    unsigned int max_picture_buffer;

    /**
     * Number of chapters in AVChapter array.
     * When muxing, chapters are normally written in the file header,
     * so nb_chapters should normally be initialized before write_header
     * is called. Some muxers (e.g. mov and mkv) can also write chapters
     * in the trailer. To write chapters in the trailer, nb_chapters
     * must be zero when write_header is called and non-zero when
     * write_trailer is called.
     * - muxing: set by user
     * - demuxing: set by libavformat
     */
    unsigned int nb_chapters;
    AVChapter **chapters;

    /**
     * Metadata that applies to the whole file.
     *
     * - demuxing: set by libavformat in avformat_open_input()
     * - muxing: may be set by the caller before avformat_write_header()
     *
     * Freed by libavformat in avformat_free_context().
     */
    AVDictionary *metadata;

    /**
     * Start time of the stream in real world time, in microseconds
     * since the Unix epoch (00:00 1st January 1970). That is, pts=0 in the
     * stream was captured at this real world time.
     * - muxing: Set by the caller before avformat_write_header(). If set to
     *           either 0 or AV_NOPTS_VALUE, then the current wall-time will
     *           be used.
     * - demuxing: Set by libavformat. AV_NOPTS_VALUE if unknown. Note that
     *             the value may become known after some number of frames
     *             have been received.
     */
    int64_t start_time_realtime;

    /**
     * The number of frames used for determining the framerate in
     * avformat_find_stream_info().
     * Demuxing only, set by the caller before avformat_find_stream_info().
     */
    int fps_probe_size;

    /**
     * Error recognition; higher values will detect more errors but may
     * misdetect some more or less valid parts as errors.
     * Demuxing only, set by the caller before avformat_open_input().
     */
    int error_recognition;

    /**
     * Custom interrupt callbacks for the I/O layer.
     *
     * demuxing: set by the user before avformat_open_input().
     * muxing: set by the user before avformat_write_header()
     * (mainly useful for AVFMT_NOFILE formats). The callback
     * should also be passed to avio_open2() if it's used to
     * open the file.
     */
    AVIOInterruptCB interrupt_callback;

    /**
     * Flags to enable debugging.
     */
    int debug;
#define FF_FDEBUG_TS        0x0001

    /**
     * Maximum buffering duration for interleaving.
     *
     * To ensure all the streams are interleaved correctly,
     * av_interleaved_write_frame() will wait until it has at least one packet
     * for each stream before actually writing any packets to the output file.
     * When some streams are "sparse" (i.e. there are large gaps between
     * successive packets), this can result in excessive buffering.
     *
     * This field specifies the maximum difference between the timestamps of the
     * first and the last packet in the muxing queue, above which libavformat
     * will output a packet regardless of whether it has queued a packet for all
     * the streams.
     *
     * Muxing only, set by the caller before avformat_write_header().
     */
    int64_t max_interleave_delta;

    /**
     * Allow non-standard and experimental extension
     * @see AVCodecContext.strict_std_compliance
     */
    int strict_std_compliance;

    /**
     * Flags for the user to detect events happening on the file. Flags must
     * be cleared by the user once the event has been handled.
     * A combination of AVFMT_EVENT_FLAG_*.
     */
    int event_flags;
#define AVFMT_EVENT_FLAG_METADATA_UPDATED 0x0001 ///< The call resulted in updated metadata.

    /**
     * Maximum number of packets to read while waiting for the first timestamp.
     * Decoding only.
     */
    int max_ts_probe;

    /**
     * Avoid negative timestamps during muxing.
     * Any value of the AVFMT_AVOID_NEG_TS_* constants.
     * Note, this only works when using av_interleaved_write_frame. (interleave_packet_per_dts is in use)
     * - muxing: Set by user
     * - demuxing: unused
     */
    int avoid_negative_ts;
#define AVFMT_AVOID_NEG_TS_AUTO             -1 ///< Enabled when required by target format
#define AVFMT_AVOID_NEG_TS_MAKE_NON_NEGATIVE 1 ///< Shift timestamps so they are non negative
#define AVFMT_AVOID_NEG_TS_MAKE_ZERO         2 ///< Shift timestamps so that they start at 0

    /**
     * Transport stream id.
     * This will be moved into demuxer private options. Thus no API/ABI compatibility
     */
    int ts_id;

    /**
     * Audio preload in microseconds.
     * Note, not all formats support this and unpredictable things may happen if it is used when not supported.
     * - encoding: Set by user
     * - decoding: unused
     */
    int audio_preload;

    /**
     * Max chunk time in microseconds.
     * Note, not all formats support this and unpredictable things may happen if it is used when not supported.
     * - encoding: Set by user
     * - decoding: unused
     */
    int max_chunk_duration;

    /**
     * Max chunk size in bytes
     * Note, not all formats support this and unpredictable things may happen if it is used when not supported.
     * - encoding: Set by user
     * - decoding: unused
     */
    int max_chunk_size;

    /**
     * forces the use of wallclock timestamps as pts/dts of packets
     * This has undefined results in the presence of B frames.
     * - encoding: unused
     * - decoding: Set by user
     */
    int use_wallclock_as_timestamps;

    /**
     * avio flags, used to force AVIO_FLAG_DIRECT.
     * - encoding: unused
     * - decoding: Set by user
     */
    int avio_flags;

    /**
     * The duration field can be estimated through various ways, and this field can be used
     * to know how the duration was estimated.
     * - encoding: unused
     * - decoding: Read by user
     */
    enum AVDurationEstimationMethod duration_estimation_method;

    /**
     * Skip initial bytes when opening stream
     * - encoding: unused
     * - decoding: Set by user
     */
    int64_t skip_initial_bytes;

    /**
     * Correct single timestamp overflows
     * - encoding: unused
     * - decoding: Set by user
     */
    unsigned int correct_ts_overflow;

    /**
     * Force seeking to any (also non key) frames.
     * - encoding: unused
     * - decoding: Set by user
     */
    int seek2any;

    /**
     * Flush the I/O context after each packet.
     * - encoding: Set by user
     * - decoding: unused
     */
    int flush_packets;

    /**
     * format probing score.
     * The maximal score is AVPROBE_SCORE_MAX, its set when the demuxer probes
     * the format.
     * - encoding: unused
     * - decoding: set by avformat, read by user
     */
    int probe_score;

    /**
     * number of bytes to read maximally to identify format.
     * - encoding: unused
     * - decoding: set by user
     */
    int format_probesize;

    /**
     * ',' separated list of allowed decoders.
     * If NULL then all are allowed
     * - encoding: unused
     * - decoding: set by user
     */
    char *codec_whitelist;

    /**
     * ',' separated list of allowed demuxers.
     * If NULL then all are allowed
     * - encoding: unused
     * - decoding: set by user
     */
    char *format_whitelist;

    /**
     * An opaque field for libavformat internal usage.
     * Must not be accessed in any way by callers.
     */
    AVFormatInternal *internal;

    /**
     * IO repositioned flag.
     * This is set by avformat when the underlaying IO context read pointer
     * is repositioned, for example when doing byte based seeking.
     * Demuxers can use the flag to detect such changes.
     */
    int io_repositioned;

    /**
     * Forced video codec.
     * This allows forcing a specific decoder, even when there are multiple with
     * the same codec_id.
     * Demuxing: Set by user
     */
    AVCodec *video_codec;

    /**
     * Forced audio codec.
     * This allows forcing a specific decoder, even when there are multiple with
     * the same codec_id.
     * Demuxing: Set by user
     */
    AVCodec *audio_codec;

    /**
     * Forced subtitle codec.
     * This allows forcing a specific decoder, even when there are multiple with
     * the same codec_id.
     * Demuxing: Set by user
     */
    AVCodec *subtitle_codec;

    /**
     * Forced data codec.
     * This allows forcing a specific decoder, even when there are multiple with
     * the same codec_id.
     * Demuxing: Set by user
     */
    AVCodec *data_codec;

    /**
     * Number of bytes to be written as padding in a metadata header.
     * Demuxing: Unused.
     * Muxing: Set by user via av_format_set_metadata_header_padding.
     */
    int metadata_header_padding;

    /**
     * User data.
     * This is a place for some private data of the user.
     */
    void *opaque;

    /**
     * Callback used by devices to communicate with application.
     */
    av_format_control_message control_message_cb;

    /**
     * Output timestamp offset, in microseconds.
     * Muxing: set by user
     */
    int64_t output_ts_offset;

    /**
     * dump format separator.
     * can be ", " or "\n " or anything else
     * - muxing: Set by user.
     * - demuxing: Set by user.
     */
    uint8_t *dump_separator;

    /**
     * Forced Data codec_id.
     * Demuxing: Set by user.
     */
    enum AVCodecID data_codec_id;

#if FF_API_OLD_OPEN_CALLBACKS
    /**
     * Called to open further IO contexts when needed for demuxing.
     *
     * This can be set by the user application to perform security checks on
     * the URLs before opening them.
     * The function should behave like avio_open2(), AVFormatContext is provided
     * as contextual information and to reach AVFormatContext.opaque.
     *
     * If NULL then some simple checks are used together with avio_open2().
     *
     * Must not be accessed directly from outside avformat.
     * @See av_format_set_open_cb()
     *
     * Demuxing: Set by user.
     *
     * @deprecated Use io_open and io_close.
     */
    attribute_deprecated
    int (*open_cb)(struct AVFormatContext *s, AVIOContext **p, const char *url, int flags, const AVIOInterruptCB *int_cb, AVDictionary **options);
#endif

    /**
     * ',' separated list of allowed protocols.
     * - encoding: unused
     * - decoding: set by user
     */
    char *protocol_whitelist;

    /**
     * A callback for opening new IO streams.
     *
     * Whenever a muxer or a demuxer needs to open an IO stream (typically from
     * avformat_open_input() for demuxers, but for certain formats can happen at
     * other times as well), it will call this callback to obtain an IO context.
     *
     * @param s the format context
     * @param pb on success, the newly opened IO context should be returned here
     * @param url the url to open
     * @param flags a combination of AVIO_FLAG_*
     * @param options a dictionary of additional options, with the same
     *                semantics as in avio_open2()
     * @return 0 on success, a negative AVERROR code on failure
     *
     * @note Certain muxers and demuxers do nesting, i.e. they open one or more
     * additional internal format contexts. Thus the AVFormatContext pointer
     * passed to this callback may be different from the one facing the caller.
     * It will, however, have the same 'opaque' field.
     */
    int (*io_open)(struct AVFormatContext *s, AVIOContext **pb, const char *url,
                   int flags, AVDictionary **options);

    /**
     * A callback for closing the streams opened with AVFormatContext.io_open().
     */
    void (*io_close)(struct AVFormatContext *s, AVIOContext *pb);

    /**
     * ',' separated list of disallowed protocols.
     * - encoding: unused
     * - decoding: set by user
     */
    char *protocol_blacklist;

    /**
     * The maximum number of streams.
     * - encoding: unused
     * - decoding: set by user
     */
    int max_streams;

    /**
     * Skip duration calcuation in estimate_timings_from_pts.
     * - encoding: unused
     * - decoding: set by user
     */
    int skip_estimate_duration_from_pts;
} AVFormatContext;
```
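A push-streaming program touches `nb_streams` and `streams` more than any other members, and `AVStream.index` ties the two together. The sketch below is a self-contained model, not FFmpeg code. `MiniFormatContext`, `MiniStream`, `mini_new_stream()` and `mini_free_context()` are hypothetical names. The sketch reproduces only the bookkeeping that `avformat_new_stream()` and `avformat_free_context()` perform on these fields. The real functions do much more, such as allocating `codecpar`.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical, heavily simplified stand-ins for AVStream / AVFormatContext.
 * Only the members discussed in this section are modeled. */
typedef struct MiniStream {
    int index;                /* position in ctx->streams, like AVStream.index */
} MiniStream;

typedef struct MiniFormatContext {
    unsigned int nb_streams;  /* like AVFormatContext.nb_streams */
    MiniStream **streams;     /* like AVFormatContext.streams */
} MiniFormatContext;

/* Models the stream-list bookkeeping of avformat_new_stream():
 * grow the array, append the new stream, set its index, bump the count. */
MiniStream *mini_new_stream(MiniFormatContext *ctx)
{
    assert(ctx != NULL);
    MiniStream **grown = realloc(ctx->streams,
                                 (ctx->nb_streams + 1) * sizeof(*grown));
    if (!grown)
        return NULL;
    ctx->streams = grown;      /* keep the grown array even if calloc fails */

    MiniStream *st = calloc(1, sizeof(*st));
    if (!st)
        return NULL;
    st->index = (int)ctx->nb_streams;
    ctx->streams[ctx->nb_streams++] = st;
    return st;
}

/* Models avformat_free_context() freeing the streams it owns. */
void mini_free_context(MiniFormatContext *ctx)
{
    for (unsigned int i = 0; i < ctx->nb_streams; i++)
        free(ctx->streams[i]);
    free(ctx->streams);
    ctx->streams = NULL;
    ctx->nb_streams = 0;
}
```

Suppose the program creates one video stream and then one audio stream. `nb_streams` is then 2, the video stream has index 0, and `streams[st->index]` always gives back `st`. The same invariant is what lets FFmpeg code route a packet to its stream through `AVPacket.stream_index`.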

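2.1.1. AVInputFormat *iformat: the container format of the input. Demuxing only; set by avformat_open_input().

2.1.2. AVOutputFormat *oformat: the container format of the output. Muxing only; it must be set before avformat_write_header() (avformat_alloc_output_context2() fills it in for you).

2.1.3. AVIOContext *pb: the I/O context. When demuxing, it is either set by the user before avformat_open_input(), in which case the user must close it, or set by avformat_open_input() itself. When muxing, the user sets it before avformat_write_header() and is responsible for closing it. Do not set it if the format has the AVFMT_NOFILE flag.

2.1.4. unsigned int nb_streams: the number of streams. With only a video stream, nb_streams = 1; with both a video and an audio stream, nb_streams = 2.

2.1.5. AVStream **streams: the list of all streams in the file, nb_streams entries long.

2.1.6. int64_t start_time: the position of the first frame, in AV_TIME_BASE fractional seconds (demuxing only, set by libavformat).

2.1.7. int64_t duration: the duration of the stream, in AV_TIME_BASE fractional seconds.

2.1.8. int64_t bit_rate: the total stream bitrate in bit/s, or 0 if not available.

2.1.9. int64_t probesize: the maximum amount of data read from the input to determine the container format (demuxing only).

2.1.10. AVDictionary *metadata: metadata that applies to the whole file.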


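2.1.11. AVCodec *video_codec: a forced video decoder, used to pick a specific decoder when several share the same codec_id (demuxing only, set by the user).

2.1.12. AVCodec *audio_codec: a forced audio decoder (demuxing only, set by the user).

2.1.13. AVCodec *subtitle_codec: a forced subtitle decoder (demuxing only, set by the user).

2.1.14. AVCodec *data_codec: a forced data decoder (demuxing only, set by the user).

2.2. The AVOutputFormat structure: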

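This structure works somewhat like a COM interface. It describes an output container format, and most of its members are function pointers that implement the muxer's behavior. Its members are: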

```c
typedef struct AVOutputFormat {
    const char *name;
    /**
     * Descriptive name for the format, meant to be more human-readable
     * than name. You should use the NULL_IF_CONFIG_SMALL() macro
     * to define it.
     */
    const char *long_name;
    const char *mime_type;
    const char *extensions; /**< comma-separated filename extensions */
    /* output support */
    enum AVCodecID audio_codec;    /**< default audio codec */
    enum AVCodecID video_codec;    /**< default video codec */
    enum AVCodecID subtitle_codec; /**< default subtitle codec */
    /**
     * can use flags: AVFMT_NOFILE, AVFMT_NEEDNUMBER,
     * AVFMT_GLOBALHEADER, AVFMT_NOTIMESTAMPS, AVFMT_VARIABLE_FPS,
     * AVFMT_NODIMENSIONS, AVFMT_NOSTREAMS, AVFMT_ALLOW_FLUSH,
     * AVFMT_TS_NONSTRICT, AVFMT_TS_NEGATIVE
     */
    int flags;

    /**
     * List of supported codec_id-codec_tag pairs, ordered by "better
     * choice first". The arrays are all terminated by AV_CODEC_ID_NONE.
     */
    const struct AVCodecTag * const *codec_tag;

    const AVClass *priv_class; ///< AVClass for the private context

    /*****************************************************************
     * No fields below this line are part of the public API. They
     * may not be used outside of libavformat and can be changed and
     * removed at will.
     * New public fields should be added right above.
     *****************************************************************
     */
    /**
     * The ff_const59 define is not part of the public API and will
     * be removed without further warning.
     */
#if FF_API_AVIOFORMAT
#define ff_const59
#else
#define ff_const59 const
#endif
    ff_const59 struct AVOutputFormat *next;
    /**
     * size of private data so that it can be allocated in the wrapper
     */
    int priv_data_size;

    int (*write_header)(struct AVFormatContext *);
    /**
     * Write a packet. If AVFMT_ALLOW_FLUSH is set in flags,
     * pkt can be NULL in order to flush data buffered in the muxer.
     * When flushing, return 0 if there still is more data to flush,
     * or 1 if everything was flushed and there is no more buffered
     * data.
     */
    int (*write_packet)(struct AVFormatContext *, AVPacket *pkt);
    int (*write_trailer)(struct AVFormatContext *);
    /**
     * Currently only used to set pixel format if not YUV420P.
     */
    int (*interleave_packet)(struct AVFormatContext *, AVPacket *out,
                             AVPacket *in, int flush);
    /**
     * Test if the given codec can be stored in this container.
     *
     * @return 1 if the codec is supported, 0 if it is not.
     *         A negative number if unknown.
     *         MKTAG('A', 'P', 'I', 'C') if the codec is only supported as AV_DISPOSITION_ATTACHED_PIC
     */
    int (*query_codec)(enum AVCodecID id, int std_compliance);

    void (*get_output_timestamp)(struct AVFormatContext *s, int stream,
                                 int64_t *dts, int64_t *wall);
    /**
     * Allows sending messages from application to device.
     */
    int (*control_message)(struct AVFormatContext *s, int type,
                           void *data, size_t data_size);

    /**
     * Write an uncoded AVFrame.
     *
     * See av_write_uncoded_frame() for details.
     *
     * The library will free *frame afterwards, but the muxer can prevent it
     * by setting the pointer to NULL.
     */
    int (*write_uncoded_frame)(struct AVFormatContext *, int stream_index,
                               AVFrame **frame, unsigned flags);
    /**
     * Returns device list with it properties.
     * @see avdevice_list_devices() for more details.
     */
    int (*get_device_list)(struct AVFormatContext *s, struct AVDeviceInfoList *device_list);
    /**
     * Initialize device capabilities submodule.
     * @see avdevice_capabilities_create() for more details.
     */
    int (*create_device_capabilities)(struct AVFormatContext *s, struct AVDeviceCapabilitiesQuery *caps);
    /**
     * Free device capabilities submodule.
     * @see avdevice_capabilities_free() for more details.
     */
    int (*free_device_capabilities)(struct AVFormatContext *s, struct AVDeviceCapabilitiesQuery *caps);
    enum AVCodecID data_codec; /**< default data codec */
    /**
     * Initialize format. May allocate data here, and set any AVFormatContext or
     * AVStream parameters that need to be set before packets are sent.
     * This method must not write output.
     *
     * Return 0 if streams were fully configured, 1 if not, negative AVERROR on failure
     *
     * Any allocations made here must be freed in deinit().
     */
    int (*init)(struct AVFormatContext *);
    /**
     * Deinitialize format. If present, this is called whenever the muxer is being
     * destroyed, regardless of whether or not the header has been written.
     *
     * If a trailer is being written, this is called after write_trailer().
     *
     * This is called if init() fails as well.
     */
    void (*deinit)(struct AVFormatContext *);
    /**
     * Set up any necessary bitstream filtering and extract any extra data needed
     * for the global header.
     * Return 0 if more packets from this stream must be checked; 1 if not.
     */
    int (*check_bitstream)(struct AVFormatContext *, const AVPacket *pkt);
} AVOutputFormat;
```

As you can see, AVOutputFormat has many members, and not all of them are essential, so we focus on the important ones below. As the comment in the header states, every member from `next` onward is not part of the public API and is only meant to be used inside libavformat.
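To make the COM-like design concrete before going through the members, here is a self-contained sketch, not taken from FFmpeg, of a generic front end that drives a muxer purely through function pointers. `MiniOutputFormat`, `MiniMuxCtx`, `counting_muxer` and `mini_mux()` are hypothetical names. In real FFmpeg, `avformat_write_header()`, `av_write_frame()`/`av_interleaved_write_frame()` and `av_write_trailer()` end up calling the `write_header`, `write_packet` and `write_trailer` members of the selected AVOutputFormat, with a lot of extra work around those calls.

```c
#include <assert.h>
#include <stddef.h>

typedef struct MiniMuxCtx MiniMuxCtx;

/* Hypothetical, slimmed-down AVOutputFormat: a name plus the three
 * callbacks that every muxer provides. */
typedef struct MiniOutputFormat {
    const char *name;
    int (*write_header)(MiniMuxCtx *s);
    int (*write_packet)(MiniMuxCtx *s, const unsigned char *data, size_t size);
    int (*write_trailer)(MiniMuxCtx *s);
} MiniOutputFormat;

struct MiniMuxCtx {
    const MiniOutputFormat *oformat;  /* like AVFormatContext.oformat */
    int headers, packets, trailers;   /* counters used by the toy muxer */
    size_t bytes;
};

/* A toy "muxer" that only counts calls instead of producing a container. */
static int count_header(MiniMuxCtx *s) { s->headers++; return 0; }
static int count_packet(MiniMuxCtx *s, const unsigned char *data, size_t size)
{
    (void)data;                       /* a real muxer would serialize this */
    s->packets++;
    s->bytes += size;
    return 0;
}
static int count_trailer(MiniMuxCtx *s) { s->trailers++; return 0; }

static const MiniOutputFormat counting_muxer = {
    "counting", count_header, count_packet, count_trailer
};

/* Generic driver: it never knows which container it is producing,
 * it only calls through s->oformat (header, packets, trailer). */
int mini_mux(MiniMuxCtx *s, const unsigned char *const *pkts,
             const size_t *sizes, size_t n)
{
    assert(s != NULL && s->oformat != NULL);
    int ret = s->oformat->write_header(s);
    for (size_t i = 0; ret >= 0 && i < n; i++)
        ret = s->oformat->write_packet(s, pkts[i], sizes[i]);
    if (ret >= 0)
        ret = s->oformat->write_trailer(s);
    return ret;
}
```

To output a different container, you swap the table that `oformat` points to, and the driver stays unchanged. This is why switching an FFmpeg push target between formats mostly comes down to choosing a different AVOutputFormat.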

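2.2.1. const char *name: the short name of the format (for example "flv" or "mp4").

2.2.2. const char *long_name: a descriptive, more human-readable name of the format.

2.2.3. enum AVCodecID audio_codec: the default audio codec.

2.2.4. enum AVCodecID video_codec: the default video codec.

2.2.5. enum AVCodecID subtitle_codec: the default subtitle codec.

2.2.6. struct AVOutputFormat *next: pointer to the next format in the linked list of muxers.

2.2.7. int (*write_header)(struct AVFormatContext *): writes the container header.

2.2.8. int (*write_packet)(struct AVFormatContext *, AVPacket *pkt): writes one packet. If AVFMT_ALLOW_FLUSH is set in flags, pkt may be NULL to flush data buffered in the muxer; when flushing, it returns 0 if there is still more data to flush and 1 once everything has been flushed.

2.2.9. int (*write_trailer)(struct AVFormatContext *): writes the container trailer.

2.2.10. int (*interleave_packet)(struct AVFormatContext *, AVPacket *out, AVPacket *in, int flush): interleaves packets. It queues the input packet `in` and returns in `out` the next packet to write in the correct order; a nonzero `flush` drains the queued packets.

2.2.11. int (*control_message)(struct AVFormatContext *s, int type, void *data, size_t data_size): lets the application send messages to a device.

2.2.12. int (*write_uncoded_frame)(struct AVFormatContext *, int stream_index, AVFrame **frame, unsigned flags): writes an uncoded AVFrame (see av_write_uncoded_frame()).

2.2.13. int (*init)(struct AVFormatContext *): initializes the format. It may allocate data and set any AVFormatContext or AVStream parameters that must be set before packets are sent, but it must not write output. Anything allocated here must be freed in deinit().

2.2.14. void (*deinit)(struct AVFormatContext *): deinitializes the format. It is called whenever the muxer is destroyed, whether or not the header has been written, and also when init() fails.

2.2.15. int (*check_bitstream)(struct AVFormatContext *, const AVPacket *pkt): sets up any necessary bitstream filtering and extracts any extra data needed for the global header.

2.3. The AVStream structure: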

AVStream holds the information for one video or audio stream. It also has many important members; let's look at what they mean.

```c
typedef struct AVStream {
    int index;    /**< stream index in AVFormatContext */
    /**
     * Format-specific stream ID.
     * decoding: set by libavformat
     * encoding: set by the user, replaced by libavformat if left unset
     */
    int id;
#if FF_API_LAVF_AVCTX
    /**
     * @deprecated use the codecpar struct instead
     */
    attribute_deprecated
    AVCodecContext *codec;
#endif
    void *priv_data;

    /**
     * This is the fundamental unit of time (in seconds) in terms
     * of which frame timestamps are represented.
     *
     * decoding: set by libavformat
     * encoding: May be set by the caller before avformat_write_header() to
     *           provide a hint to the muxer about the desired timebase. In
     *           avformat_write_header(), the muxer will overwrite this field
     *           with the timebase that will actually be used for the timestamps
     *           written into the file (which may or may not be related to the
     *           user-provided one, depending on the format).
     */
    AVRational time_base;

    /**
     * Decoding: pts of the first frame of the stream in presentation order, in stream time base.
     * Only set this if you are absolutely 100% sure that the value you set
     * it to really is the pts of the first frame.
     * This may be undefined (AV_NOPTS_VALUE).
     * @note The ASF header does NOT contain a correct start_time the ASF
     * demuxer must NOT set this.
     */
    int64_t start_time;

    /**
     * Decoding: duration of the stream, in stream time base.
     * If a source file does not specify a duration, but does specify
     * a bitrate, this value will be estimated from bitrate and file size.
     *
     * Encoding: May be set by the caller before avformat_write_header() to
     * provide a hint to the muxer about the estimated duration.
     */
    int64_t duration;

    int64_t nb_frames;                 ///< number of frames in this stream if known or 0

    int disposition; /**< AV_DISPOSITION_* bit field */

    enum AVDiscard discard; ///< Selects which packets can be discarded at will and do not need to be demuxed.

    /**
     * sample aspect ratio (0 if unknown)
     * - encoding: Set by user.
     * - decoding: Set by libavformat.
     */
    AVRational sample_aspect_ratio;

    AVDictionary *metadata;

    /**
     * Average framerate
     *
     * - demuxing: May be set by libavformat when creating the stream or in
     *             avformat_find_stream_info().
     * - muxing: May be set by the caller before avformat_write_header().
     */
    AVRational avg_frame_rate;

    /**
     * For streams with AV_DISPOSITION_ATTACHED_PIC disposition, this packet
     * will contain the attached picture.
     *
     * decoding: set by libavformat, must not be modified by the caller.
     * encoding: unused
     */
    AVPacket attached_pic;

    /**
     * An array of side data that applies to the whole stream (i.e. the
     * container does not allow it to change between packets).
     *
     * There may be no overlap between the side data in this array and side data
     * in the packets. I.e. a given side data is either exported by the muxer
     * (demuxing) / set by the caller (muxing) in this array, then it never
     * appears in the packets, or the side data is exported / sent through
     * the packets (always in the first packet where the value becomes known or
     * changes), then it does not appear in this array.
     *
     * - demuxing: Set by libavformat when the stream is created.
     * - muxing: May be set by the caller before avformat_write_header().
     *
     * Freed by libavformat in avformat_free_context().
     *
     * @see av_format_inject_global_side_data()
     */
    AVPacketSideData *side_data;
    /**
     * The number of elements in the AVStream.side_data array.
     */
    int            nb_side_data;

    /**
     * Flags for the user to detect events happening on the stream. Flags must
     * be cleared by the user once the event has been handled.
     * A combination of AVSTREAM_EVENT_FLAG_*.
     */
    int event_flags;
#define AVSTREAM_EVENT_FLAG_METADATA_UPDATED 0x0001 ///< The call resulted in updated metadata.

    /**
     * Real base framerate of the stream.
     * This is the lowest framerate with which all timestamps can be
     * represented accurately (it is the least common multiple of all
     * framerates in the stream). Note, this value is just a guess!
     * For example, if the time base is 1/90000 and all frames have either
     * approximately 3600 or 1800 timer ticks, then r_frame_rate will be 50/1.
     */
    AVRational r_frame_rate;

#if FF_API_LAVF_FFSERVER
    /**
     * String containing pairs of key and values describing recommended encoder configuration.
     * Pairs are separated by ','.
     * Keys are separated from values by '='.
     *
     * @deprecated unused
     */
    attribute_deprecated
    char *recommended_encoder_configuration;
#endif

    /**
     * Codec parameters associated with this stream. Allocated and freed by
     * libavformat in avformat_new_stream() and avformat_free_context()
     * respectively.
     *
     * - demuxing: filled by libavformat on stream creation or in
     *             avformat_find_stream_info()
     * - muxing: filled by the caller before avformat_write_header()
     */
    AVCodecParameters *codecpar;

    /*****************************************************************
     * All fields below this line are not part of the public API. They
     * may not be used outside of libavformat and can be changed and
     * removed at will.
     * Internal note: be aware that physically removing these fields
     * will break ABI. Replace removed fields with dummy fields, and
     * add new fields to AVStreamInternal.
     *****************************************************************
     */

#define MAX_STD_TIMEBASES (30*12+30+3+6)
    /**
     * Stream information used internally by avformat_find_stream_info()
     */
    struct {
        int64_t last_dts;
        int64_t duration_gcd;
        int duration_count;
        int64_t rfps_duration_sum;
        double (*duration_error)[2][MAX_STD_TIMEBASES];
        int64_t codec_info_duration;
        int64_t codec_info_duration_fields;
        int frame_delay_evidence;

        /**
         * 0  -> decoder has not been searched for yet.
         * >0 -> decoder found
         * <0 -> decoder with codec_id == -found_decoder has not been found
         */
        int found_decoder;

        int64_t last_duration;

        /**
         * Those are used for average framerate estimation.
         */
        int64_t fps_first_dts;
        int     fps_first_dts_idx;
        int64_t fps_last_dts;
        int     fps_last_dts_idx;
    } *info;

    int pts_wrap_bits; /**< number of bits in pts (used for wrapping control) */

    // Timestamp generation support:
    /**
     * Timestamp corresponding to the last dts sync point.
     *
     * Initialized when AVCodecParserContext.dts_sync_point >= 0 and
     * a DTS is received from the underlying container. Otherwise set to
     * AV_NOPTS_VALUE by default.
     */
    int64_t first_dts;
    int64_t cur_dts;
    int64_t last_IP_pts;
    int last_IP_duration;

    /**
     * Number of packets to buffer for codec probing
     */
    int probe_packets;

    /**
     * Number of frames that have been demuxed during avformat_find_stream_info()
     */
    int codec_info_nb_frames;

    /* av_read_frame() support */
    enum AVStreamParseType need_parsing;
    struct AVCodecParserContext *parser;

    /**
     * last packet in packet_buffer for this stream when muxing.
     */
    struct AVPacketList *last_in_packet_buffer;
    AVProbeData probe_data;
#define MAX_REORDER_DELAY 16
    int64_t pts_buffer[MAX_REORDER_DELAY+1];

    AVIndexEntry *index_entries; /**< Only used if the format does not
                                    support seeking natively. */
    int nb_index_entries;
    unsigned int index_entries_allocated_size;

    /**
     * Stream Identifier
     * This is the MPEG-TS stream identifier +1
     * 0 means unknown
     */
    int stream_identifier;

    /**
     * Details of the MPEG-TS program which created this stream.
     */
    int program_num;
    int pmt_version;
    int pmt_stream_idx;

    int64_t interleaver_chunk_size;
    int64_t interleaver_chunk_duration;

    /**
     * stream probing state
     * -1   -> probing finished
     *  0   -> no probing requested
     * rest -> perform probing with request_probe being the minimum score to accept.
     * NOT PART OF PUBLIC API
     */
    int request_probe;
    /**
     * Indicates that everything up to the next keyframe
     * should be discarded.
     */
    int skip_to_keyframe;

    /**
     * Number of samples to skip at the start of the frame decoded from the next packet.
     */
    int skip_samples;

    /**
     * If not 0, the number of samples that should be skipped from the start of
     * the stream (the samples are removed from packets with pts==0, which also
     * assumes negative timestamps do not happen).
     * Intended for use with formats such as mp3 with ad-hoc gapless audio
     * support.
     */
    int64_t start_skip_samples;

    /**
     * If not 0, the first audio sample that should be discarded from the stream.
     * This is broken by design (needs global sample count), but can't be
     * avoided for broken by design formats such as mp3 with ad-hoc gapless
     * audio support.
     */
    int64_t first_discard_sample;

    /**
     * The sample after last sample that is intended to be discarded after
     * first_discard_sample. Works on frame boundaries only. Used to prevent
     * early EOF if the gapless info is broken (considered concatenated mp3s).
     */
    int64_t last_discard_sample;

    /**
     * Number of internally decoded frames, used internally in libavformat, do not access
     * its lifetime differs from info which is why it is not in that structure.
     */
    int nb_decoded_frames;

    /**
     * Timestamp offset added to timestamps before muxing
     * NOT PART OF PUBLIC API
     */
    int64_t mux_ts_offset;

    /**
     * Internal data to check for wrapping of the time stamp
     */
    int64_t pts_wrap_reference;

    /**
     * Options for behavior, when a wrap is detected.
     *
     * Defined by AV_PTS_WRAP_ values.
     *
     * If correction is enabled, there are two possibilities:
     * If the first time stamp is near the wrap point, the wrap offset
     * will be subtracted, which will create negative time stamps.
     * Otherwise the offset will be added.
     */
    int pts_wrap_behavior;

    /**
     * Internal data to prevent doing update_initial_durations() twice
     */
    int update_initial_durations_done;

    /**
     * Internal data to generate dts from pts
     */
    int64_t pts_reorder_error[MAX_REORDER_DELAY+1];
    uint8_t pts_reorder_error_count[MAX_REORDER_DELAY+1];

    /**
     * Internal data to analyze DTS and detect faulty mpeg streams
     */
    int64_t last_dts_for_order_check;
    uint8_t dts_ordered;
    uint8_t dts_misordered;

    /**
     * Internal data to inject global side data
     */
    int inject_global_side_data;

    /**
     * display aspect ratio (0 if unknown)
     * - encoding: unused
     * - decoding: Set by libavformat to calculate sample_aspect_ratio internally
     */
    AVRational display_aspect_ratio;

    /**
     * An opaque field for libavformat internal usage.
     * Must not be accessed in any way by callers.
     */
    AVStreamInternal *internal;
} AVStream;
```

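2.3.1. index/id: index is the position of this stream in AVFormatContext.streams (so streams[index] is this stream); it is assigned automatically by avformat_new_stream(). id is a format-specific stream identifier (for example the PID in MPEG-TS).

2.3.2. time_base: the time base of the stream, i.e. the unit (in seconds) in which the PTS and DTS of this stream's packets are expressed. It is a rational number (AVRational, num/den), not a floating-point value. For video it is commonly derived from the frame rate (e.g. 1/25) and for audio from the sample rate (e.g. 1/44100), but when muxing, avformat_write_header() may replace it with the time base the container requires; FLV (used for RTMP), for example, uses 1/1000.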
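To make the time_base arithmetic concrete, here is a minimal, self-contained sketch. It assumes a small Rational struct as a stand-in for AVRational so that it compiles without FFmpeg; ts_to_seconds() and rescale_ts() are hypothetical helpers that follow the same idea as FFmpeg's av_q2d() and av_rescale_q() (round to nearest, halfway cases away from zero), and they assume the intermediate products fit in 64 bits.

```c
#include <assert.h>
#include <stdint.h>

/* Stand-in for AVRational: the value is num/den. */
typedef struct {
    int num; /* numerator   */
    int den; /* denominator */
} Rational;

/* Convert a timestamp expressed in time_base units to seconds,
 * the same idea as ts * av_q2d(time_base). */
double ts_to_seconds(int64_t ts, Rational tb)
{
    assert(tb.den != 0);
    return (double)ts * tb.num / tb.den;
}

/* Rescale timestamp a from time base bq to time base cq:
 * a * bq / cq, rounded to nearest with halfway cases away from zero
 * (like av_rescale_q()). Assumes no 64-bit overflow. */
int64_t rescale_ts(int64_t a, Rational bq, Rational cq)
{
    int64_t num = a * bq.num * (int64_t)cq.den;
    int64_t den = (int64_t)bq.den * cq.num;
    assert(den > 0);
    if (num >= 0)
        return (num + den / 2) / den;
    return -((-num + den / 2) / den);
}
```

In real FFmpeg code the same conversion is done with av_rescale_q(), or for a whole packet with av_packet_rescale_ts(pkt, src_tb, dst_tb), e.g. when moving encoder timestamps in 1/25 units into an FLV stream whose time base is 1/1000.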

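2.3.3. start_time: the start time of the stream in time_base units, usually the PTS of its first frame.

2.3.4. duration: the total duration of the stream in time_base units (AV_NOPTS_VALUE if unknown).

2.3.5. need_parsing: controls whether and how libavformat runs a parser over this stream's data.

2.3.6. nb_frames: the number of frames in the stream, or 0 if unknown.

2.3.7. avg_frame_rate: the average frame rate.

2.3.8. codec: the AVCodecContext of this stream, created by avformat_open_input() when demuxing. This field is deprecated: newer code exchanges stream parameters through AVStream.codecpar (AVCodecParameters) and allocates its own AVCodecContext with avcodec_alloc_context3().

2.3.9. parser: points to the AVCodecParserContext of this stream; libavformat sets it up when parsing is needed, e.g. during avformat_find_stream_info() (called av_find_stream_info() in older versions).

2.4. The AVCodec structure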

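AVCodec describes one codec implementation (an encoder or a decoder) in libavcodec, FFmpeg's audio/video codec library, which provides a wide range of audio and video encoders and decoders. With libavcodec, FFmpeg can compress raw audio and video into encoded packets, and decode such packets back into raw data.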

typedef struct AVCodec {
    /**
     * Name of the codec implementation.
     * The name is globally unique among encoders and among decoders (but an
     * encoder and a decoder can share the same name).
     * This is the primary way to find a codec from the user perspective.
     */
    const char *name;
    /**
     * Descriptive name for the codec, meant to be more human readable than name.
     * You should use the NULL_IF_CONFIG_SMALL() macro to define it.
     */
    const char *long_name;
    enum AVMediaType type;
    enum AVCodecID id;
    /**
     * Codec capabilities.
     * see AV_CODEC_CAP_*
     */
    int capabilities;
    const AVRational *supported_framerates; ///< array of supported framerates, or NULL if any, array is terminated by {0,0}
    const enum AVPixelFormat *pix_fmts;     ///< array of supported pixel formats, or NULL if unknown, array is terminated by -1
    const int *supported_samplerates;       ///< array of supported audio samplerates, or NULL if unknown, array is terminated by 0
    const enum AVSampleFormat *sample_fmts; ///< array of supported sample formats, or NULL if unknown, array is terminated by -1
    const uint64_t *channel_layouts;        ///< array of support channel layouts, or NULL if unknown. array is terminated by 0
    uint8_t max_lowres;                     ///< maximum value for lowres supported by the decoder
    const AVClass *priv_class;              ///< AVClass for the private context
    const AVProfile *profiles;              ///< array of recognized profiles, or NULL if unknown, array is terminated by {FF_PROFILE_UNKNOWN}
    /**
     * Group name of the codec implementation.
     * This is a short symbolic name of the wrapper backing this codec. A
     * wrapper uses some kind of external implementation for the codec, such
     * as an external library, or a codec implementation provided by the OS or
     * the hardware.
     * If this field is NULL, this is a builtin, libavcodec native codec.
     * If non-NULL, this will be the suffix in AVCodec.name in most cases
     * (usually AVCodec.name will be of the form "<codec_name>_<wrapper_name>").
     */
    const char *wrapper_name;
    /*****************************************************************
     * No fields below this line are part of the public API. They
     * may not be used outside of libavcodec and can be changed and
     * removed at will.
     * New public fields should be added right above.
     *****************************************************************
     */
    int priv_data_size;
    struct AVCodec *next;
    /**
     * @name Frame-level threading support functions
     * @{
     */
    /**
     * If defined, called on thread contexts when they are created.
     * If the codec allocates writable tables in init(), re-allocate them here.
     * priv_data will be set to a copy of the original.
     */
    int (*init_thread_copy)(AVCodecContext *);
    /**
     * Copy necessary context variables from a previous thread context to the current one.
     * If not defined, the next thread will start automatically; otherwise, the codec
     * must call ff_thread_finish_setup().
     *
     * dst and src will (rarely) point to the same context, in which case memcpy should be skipped.
     */
    int (*update_thread_context)(AVCodecContext *dst, const AVCodecContext *src);
    /** @} */
    /**
     * Private codec-specific defaults.
     */
    const AVCodecDefault *defaults;
    /**
     * Initialize codec static data, called from avcodec_register().
     *
     * This is not intended for time consuming operations as it is
     * run for every codec regardless of that codec being used.
     */
    void (*init_static_data)(struct AVCodec *codec);
    int (*init)(AVCodecContext *);
    int (*encode_sub)(AVCodecContext *, uint8_t *buf, int buf_size,
                      const struct AVSubtitle *sub);
    /**
     * Encode data to an AVPacket.
     *
     * @param      avctx          codec context
     * @param      avpkt          output AVPacket (may contain a user-provided buffer)
     * @param[in]  frame          AVFrame containing the raw data to be encoded
     * @param[out] got_packet_ptr encoder sets to 0 or 1 to indicate that a
     *                            non-empty packet was returned in avpkt.
     * @return 0 on success, negative error code on failure
     */
    int (*encode2)(AVCodecContext *avctx, AVPacket *avpkt, const AVFrame *frame,
                   int *got_packet_ptr);
    int (*decode)(AVCodecContext *, void *outdata, int *outdata_size, AVPacket *avpkt);
    int (*close)(AVCodecContext *);
    /**
     * Encode API with decoupled packet/frame dataflow. The API is the
     * same as the avcodec_ prefixed APIs (avcodec_send_frame() etc.), except
     * that:
     * - never called if the codec is closed or the wrong type,
     * - if AV_CODEC_CAP_DELAY is not set, drain frames are never sent,
     * - only one drain frame is ever passed down,
     */
    int (*send_frame)(AVCodecContext *avctx, const AVFrame *frame);
    int (*receive_packet)(AVCodecContext *avctx, AVPacket *avpkt);
    /**
     * Decode API with decoupled packet/frame dataflow. This function is called
     * to get one output frame. It should call ff_decode_get_packet() to obtain
     * input data.
     */
    int (*receive_frame)(AVCodecContext *avctx, AVFrame *frame);
    /**
     * Flush buffers.
     * Will be called when seeking
     */
    void (*flush)(AVCodecContext *);
    /**
     * Internal codec capabilities.
     * See FF_CODEC_CAP_* in internal.h
     */
    int caps_internal;
    /**
     * Decoding only, a comma-separated list of bitstream filters to apply to
     * packets before decoding.
     */
    const char *bsfs;
    /**
     * Array of pointers to hardware configurations supported by the codec,
     * or NULL if no hardware supported. The array is terminated by a NULL
     * pointer.
     *
     * The user can only access this field via avcodec_get_hw_config().
     */
    const struct AVCodecHWConfigInternal **hw_configs;
} AVCodec;

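2.4.1. enum AVCodecID id: the ID of the codec. Video codec IDs include AV_CODEC_ID_H264, AV_CODEC_ID_H265 (an alias of AV_CODEC_ID_HEVC) and so on; audio codec IDs include AV_CODEC_ID_AAC, AV_CODEC_ID_MP3 and so on.

2.4.2. enum AVMediaType type: the kind of media the codec handles, e.g. video (AVMEDIA_TYPE_VIDEO), audio (AVMEDIA_TYPE_AUDIO) or subtitles (AVMEDIA_TYPE_SUBTITLE). AVMediaType is defined as: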

enum AVMediaType {
    AVMEDIA_TYPE_UNKNOWN = -1,  ///< Usually treated as AVMEDIA_TYPE_DATA
    AVMEDIA_TYPE_VIDEO,
    AVMEDIA_TYPE_AUDIO,
    AVMEDIA_TYPE_DATA,          ///< Opaque data information usually continuous
    AVMEDIA_TYPE_SUBTITLE,
    AVMEDIA_TYPE_ATTACHMENT,    ///< Opaque data information usually sparse
    AVMEDIA_TYPE_NB
};
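As a small illustration of how this enum is typically consumed, the sketch below copies the enum so it compiles without FFmpeg and maps each value to a short name. media_type_name() and media_type_from_name() are hypothetical helpers; the forward mapping is modeled on libavutil's av_get_media_type_string(), which returns NULL for values it does not know.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Copy of the AVMediaType enum shown above. */
enum AVMediaType {
    AVMEDIA_TYPE_UNKNOWN = -1,
    AVMEDIA_TYPE_VIDEO,
    AVMEDIA_TYPE_AUDIO,
    AVMEDIA_TYPE_DATA,
    AVMEDIA_TYPE_SUBTITLE,
    AVMEDIA_TYPE_ATTACHMENT,
    AVMEDIA_TYPE_NB
};

/* Short name for a media type, or NULL for unknown/out-of-range values. */
const char *media_type_name(enum AVMediaType type)
{
    switch (type) {
    case AVMEDIA_TYPE_VIDEO:      return "video";
    case AVMEDIA_TYPE_AUDIO:      return "audio";
    case AVMEDIA_TYPE_DATA:       return "data";
    case AVMEDIA_TYPE_SUBTITLE:   return "subtitle";
    case AVMEDIA_TYPE_ATTACHMENT: return "attachment";
    default:                      return NULL;
    }
}

/* Reverse lookup: name -> media type, AVMEDIA_TYPE_UNKNOWN if not found. */
enum AVMediaType media_type_from_name(const char *name)
{
    assert(name != NULL);
    for (int t = AVMEDIA_TYPE_VIDEO; t < AVMEDIA_TYPE_NB; t++)
        if (strcmp(name, media_type_name((enum AVMediaType)t)) == 0)
            return (enum AVMediaType)t;
    return AVMEDIA_TYPE_UNKNOWN;
}
```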

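2.4.3. const AVRational *supported_framerates: the frame rates the codec supports (video only); NULL means any frame rate, otherwise the array ends with {0,0}.

2.4.4. const enum AVPixelFormat *pix_fmts: the pixel formats the codec supports (video only); NULL if unknown, otherwise the array ends with -1 (AV_PIX_FMT_NONE).

2.4.5. const int *supported_samplerates: the sample rates the codec supports (audio only); NULL if unknown, otherwise the array ends with 0.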
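These capability lists share one convention: a NULL pointer means "unknown", otherwise the array ends with a terminator value. The sketch below is a hypothetical helper that walks such a list; the PIX_FMT_*_DEMO constants are illustrative stand-ins, not FFmpeg's real AVPixelFormat values (only -1 mirrors AV_PIX_FMT_NONE).

```c
#include <assert.h>
#include <stddef.h>

/* Illustrative pixel-format constants; -1 plays the role of AV_PIX_FMT_NONE. */
enum {
    PIX_FMT_NONE = -1,
    PIX_FMT_YUV420P_DEMO = 0,
    PIX_FMT_NV12_DEMO = 1,
    PIX_FMT_RGB24_DEMO = 2
};

/* Search a terminated capability list such as pix_fmts (terminator -1)
 * or supported_samplerates (terminator 0).
 * Returns 1 if value is listed, 0 if not, -1 if the list is NULL ("unknown"). */
int list_contains(const int *list, int terminator, int value)
{
    assert(value != terminator); /* the terminator is not a real entry */
    if (list == NULL)
        return -1;
    for (const int *p = list; *p != terminator; p++)
        if (*p == value)
            return 1;
    return 0;
}
```

With real FFmpeg types the loop is the same, e.g. iterating `codec->pix_fmts` until AV_PIX_FMT_NONE before choosing the pixel format for an encoder.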

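2.4.6. const enum AVSampleFormat *sample_fmts: the sample formats the codec supports (audio only); NULL if unknown, otherwise the array ends with -1.

2.4.7. const uint64_t *channel_layouts: the channel layouts the codec supports (audio only), given as AV_CH_LAYOUT_* bitmasks rather than plain channel counts; NULL if unknown, otherwise the array ends with 0.

2.4.8. int priv_data_size: the size of the codec's private data. Like all fields after wrapper_name, it is internal to libavcodec and not part of the public API.

2.5. The AVCodecContext structure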

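AVCodecContext is FFmpeg's codec context: it holds the parameters and state of one encoder or decoder instance, and its codec field points to the AVCodec in use. Besides the AVCodec pointer it refers to internal structures such as AVCodecInternal and uses types such as AVRational, AVCodecID, AVMediaType, AVPixelFormat and AVSampleFormat. Its fields include the video resolution (width, height), the frame rate (framerate) and the bit rate (bit_rate).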
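The sketch below shows how the main video fields relate to each other when an encoder context is filled in. VideoEncParams is a hypothetical, trimmed-down mirror that reuses AVCodecContext field names so it compiles without FFmpeg; the 2-second GOP and the absence of B-frames are illustrative choices for low-latency pushing, not requirements. In this project the RV1126 does the actual encoding, so such values would mainly serve to describe the stream to FFmpeg (e.g. copied into the stream's codecpar).

```c
#include <assert.h>
#include <stdint.h>

/* Stand-in for AVRational: the value is num/den. */
typedef struct { int num, den; } Rational;

/* Hypothetical mirror of a few AVCodecContext video fields (not the real struct). */
typedef struct {
    int      width, height; /* resolution in pixels                    */
    Rational framerate;     /* frames per second, e.g. {25, 1}          */
    Rational time_base;     /* unit of pts/dts, here 1/framerate        */
    int64_t  bit_rate;      /* average bit rate in bits per second      */
    int      gop_size;      /* pictures per GOP, i.e. keyframe interval */
    int      max_b_frames;  /* 0 = no B-frames, so dts can equal pts    */
} VideoEncParams;

/* Fill the parameters roughly as one would fill AVCodecContext
 * before avcodec_open2(). */
VideoEncParams make_video_params(int width, int height, int fps, int64_t bit_rate)
{
    assert(width > 0 && height > 0 && fps > 0 && bit_rate > 0);
    VideoEncParams p;
    p.width        = width;
    p.height       = height;
    p.framerate    = (Rational){ fps, 1 };
    p.time_base    = (Rational){ 1, fps }; /* one tick per frame */
    p.bit_rate     = bit_rate;
    p.gop_size     = 2 * fps;              /* one keyframe every 2 s */
    p.max_b_frames = 0;
    return p;
}
```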

Important fields:

2.5.1. enum AVMediaType codec_type: the kind of media being coded: video (AVMEDIA_TYPE_VIDEO), audio (AVMEDIA_TYPE_AUDIO), subtitles (AVMEDIA_TYPE_SUBTITLE), etc. It uses the same AVMediaType enum shown in 2.4.2.

2.5.2. const AVCodec *codec: the encoder or decoder in use, e.g. H.264 or H.265.

2.5.3. enum AVCodecID codec_id: the codec ID, described in detail in 2.4.1.

2.5.4. void *priv_data: the codec's private context, which holds codec-specific options (for libx264, e.g., "preset" and "tune", which can be set with av_opt_set()).

2.5.5. int64_t bit_rate: the average bit rate in bits per second; it applies to both audio and video.

2.5.6. int thread_count: the number of threads used for encoding/decoding. It is set by the user and is usually chosen according to the number of CPU cores.

2.5.7. AVRational time_base: the time base of the codec's timestamps; multiplying a pts by it converts the pts to seconds.

2.5.8. int width, height: the width and height of each video frame.

2.5.9. int gop_size: the number of pictures in a group of pictures (GOP), i.e. the keyframe interval; used for video encoding only.

2.5.10. enum AVPixelFormat pix_fmt: the pixel format; set by the user when encoding.

2.5.11. int refs: the number of reference frames.

2.5.12. enum AVColorSpace colorspace: the YUV color space type.

2.5.13. enum AVColorRange color_range: the YUV value range, MPEG (limited) or JPEG (full).

2.5.14. int sample_rate: the audio sample rate.

2.5.15. int channels: the number of audio channels.

2.5.16. enum AVSampleFormat sample_fmt: the audio sample format.

2.5.17. int frame_size: the number of samples per channel in one audio frame.

2.5.18. int profile: the codec profile (e.g. H.264 Baseline/Main/High).

2.5.19. int level: the codec level.

2.6. AVPacket

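AVPacket is one of FFmpeg's core structures and stores compressed audio/video data: on the decoding side, data that has been demuxed but not yet decoded; on the encoding side, data that has been encoded but not yet muxed (which is how this project uses it to push the RV1126 stream). Besides the compressed data itself, it records key parameters such as the presentation timestamp (pts), the decoding timestamp (dts), the duration and the stream index.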

For video, an AVPacket typically carries an H.264/H.265 bitstream; for audio, mainly AAC/MP3 data.

typedef struct AVPacket {
    /**
     * A reference to the reference-counted buffer where the packet data is
     * stored.
     * May be NULL, then the packet data is not reference-counted.
     */
    AVBufferRef *buf;
    /**
     * Presentation timestamp in AVStream->time_base units; the time at which
     * the decompressed packet will be presented to the user.
     * Can be AV_NOPTS_VALUE if it is not stored in the file.
     * pts MUST be larger or equal to dts as presentation cannot happen before
     * decompression, unless one wants to view hex dumps. Some formats misuse
     * the terms dts and pts/cts to mean something different. Such timestamps
     * must be converted to true pts/dts before they are stored in AVPacket.
     */
    int64_t pts;
    /**
     * Decompression timestamp in AVStream->time_base units; the time at which
     * the packet is decompressed.
     * Can be AV_NOPTS_VALUE if it is not stored in the file.
     */
    int64_t dts;
    uint8_t *data;
    int   size;
    int   stream_index;
    /**
     * A combination of AV_PKT_FLAG values
     */
    int   flags;
    /**
     * Additional packet data that can be provided by the container.
     * Packet can contain several types of side information.
     */
    AVPacketSideData *side_data;
    int side_data_elems;
    /**
     * Duration of this packet in AVStream->time_base units, 0 if unknown.
     * Equals next_pts - this_pts in presentation order.
     */
    int64_t duration;
    int64_t pos; ///< byte position in stream, -1 if unknown
#if FF_API_CONVERGENCE_DURATION
    /**
     * @deprecated Same as the duration field, but as int64_t. This was required
     * for Matroska subtitles, whose duration values could overflow when the
     * duration field was still an int.
     */
    attribute_deprecated
    int64_t convergence_duration;
#endif
} AVPacket;

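2.6.1. AVBufferRef *buf: a reference to the reference-counted buffer in which the packet data is stored; if it is NULL, the packet data is not reference-counted.

2.6.2. int64_t pts: the presentation timestamp, used by players to control the order in which frames are shown and the playback speed. PTS is a relative timestamp, expressed in AVStream->time_base units, that says when the frame should be displayed. It is not inherently in milliseconds; it only counts milliseconds when the time base is 1/1000, as with FLV/RTMP.

2.6.3. int64_t dts: the decoding timestamp, which determines the order and pace in which packets are decoded. DTS is also relative and expressed in AVStream->time_base units. Without B-frames dts equals pts; with B-frames packets are decoded in a different order than they are displayed, so the two differ.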
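The relationship between pts and dts can be checked before a packet is handed to the muxer. The sketch below is a hypothetical checker for the two rules stated in the AVPacket comments and commonly enforced when muxing: pts must not be smaller than dts, and dts should increase strictly within one stream. The has_prev flag is an assumption of this sketch (FFmpeg itself marks unknown timestamps with AV_NOPTS_VALUE).

```c
#include <assert.h>
#include <stdint.h>

enum { TS_OK = 0, TS_PTS_BEFORE_DTS = 1, TS_DTS_NOT_INCREASING = 2 };

/* Validate one packet's timestamps.
 * has_prev: 1 if a previous packet of the same stream exists (prev_dts valid). */
int check_packet_ts(int has_prev, int64_t prev_dts, int64_t pts, int64_t dts)
{
    assert(has_prev == 0 || has_prev == 1);
    if (pts < dts)
        return TS_PTS_BEFORE_DTS;     /* cannot show a frame before decoding it */
    if (has_prev && dts <= prev_dts)
        return TS_DTS_NOT_INCREASING; /* decoding order must move forward */
    return TS_OK;
}
```

For example, with one B-frame the decode order I, P, B could carry (pts, dts) = (1, 0), (3, 1), (2, 2): every packet passes, although pts is not monotonic in decode order.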

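2.6.4. uint8_t *data: the actual compressed data, e.g. H.264 video or AAC audio.

2.6.5. int size: the length of data in bytes, i.e. the size of this piece of the bitstream.

2.6.6. int stream_index: the index of the stream (in AVFormatContext.streams) that the packet belongs to. Which index is video and which is audio depends on the order in which the streams were created: if the video stream is created first with avformat_new_stream(), video packets use stream_index = 0 and audio packets stream_index = 1.

2.6.7. int flags: a combination of AV_PKT_FLAG_* values. For video the important one is AV_PKT_FLAG_KEY, which must be set on every packet that contains a keyframe (for H.264, an IDR frame) so that muxers and players can find the points where decoding can start; it should not be set on every packet. If keyframes are not marked, the receiver may be unable to decode the picture correctly.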
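When the encoder (here, the RV1126) delivers a raw H.264 Annex B buffer, the pusher has to decide by itself whether to set AV_PKT_FLAG_KEY. A minimal sketch, assuming the buffer uses 00 00 01 / 00 00 00 01 start codes: h264_has_idr() is a hypothetical helper that scans the NAL units and reports whether one of them is an IDR slice (nal_unit_type 5, the low 5 bits of the NAL header byte). It covers H.264 only; H.265 uses a different NAL header layout.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Return 1 if the Annex B buffer contains an IDR slice (nal_unit_type 5). */
int h264_has_idr(const uint8_t *buf, size_t len)
{
    assert(buf != NULL || len == 0);
    for (size_t i = 0; i + 3 < len; i++) {
        /* a 3-byte start code also matches the tail of a 4-byte one */
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1) {
            uint8_t nal_type = buf[i + 3] & 0x1F; /* byte after the start code */
            if (nal_type == 5)
                return 1;
            i += 2; /* continue scanning after the start code */
        }
    }
    return 0;
}
```

A pusher could then write `if (h264_has_idr(data, size)) pkt->flags |= AV_PKT_FLAG_KEY;` before sending the packet to the muxer.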

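2.6.8. AVPacketSideData *side_data: additional packet data that the container can provide; a packet can carry several types of side information.

2.6.9. int side_data_elems: the number of elements in side_data.

2.6.10. int64_t duration: the duration of the packet in AVStream->time_base units, 0 if unknown.

2.6.11. int64_t pos: the byte position of the packet in the stream, -1 if unknown; rarely used.

2.7. AVFrame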

AVFrame usually stores raw (uncompressed) audio/video data: YUV/RGB pictures for video, PCM samples for audio. It also carries a number of important related properties.

typedef struct AVFrame {
#define AV_NUM_DATA_POINTERS 8
    /**
     * pointer to the picture/channel planes.
     * This might be different from the first allocated byte
     *
     * Some decoders access areas outside 0,0 - width,height, please
     * see avcodec_align_dimensions2(). Some filters and swscale can read
     * up to 16 bytes beyond the planes, if these filters are to be used,
     * then 16 extra bytes must be allocated.
     *
     * NOTE: Except for hwaccel formats, pointers not needed by the format
     * MUST be set to NULL.
     */
    uint8_t *data[AV_NUM_DATA_POINTERS];
    /**
     * For video, size in bytes of each picture line.
     * For audio, size in bytes of each plane.
     *
     * For audio, only linesize[0] may be set. For planar audio, each channel
     * plane must be the same size.
     *
     * For video the linesizes should be multiples of the CPUs alignment
     * preference, this is 16 or 32 for modern desktop CPUs.
     * Some code requires such alignment other code can be slower without
     * correct alignment, for yet other it makes no difference.
     *
     * @note The linesize may be larger than the size of usable data -- there
     * may be extra padding present for performance reasons.
     */
    int linesize[AV_NUM_DATA_POINTERS];
    /**
     * pointers to the data planes/channels.
     *
     * For video, this should simply point to data[].
     *
     * For planar audio, each channel has a separate data pointer, and
     * linesize[0] contains the size of each channel buffer.
     * For packed audio, there is just one data pointer, and linesize[0]
     * contains the total size of the buffer for all channels.
     *
     * Note: Both data and extended_data should always be set in a valid frame,
     * but for planar audio with more channels that can fit in data,
     * extended_data must be used in order to access all channels.
     */
    uint8_t **extended_data;
    /**
     * @name Video dimensions
     * Video frames only. The coded dimensions (in pixels) of the video frame,
     * i.e. the size of the rectangle that contains some well-defined values.
     *
     * @note The part of the frame intended for display/presentation is further
     * restricted by the @ref cropping "Cropping rectangle".
     * @{
     */
    int width, height;
    /**
     * @}
     */
    /** number of audio samples (per channel) described by this frame */
    int nb_samples;
    /**
     * format of the frame, -1 if unknown or unset
     * Values correspond to enum AVPixelFormat for video frames,
     * enum AVSampleFormat for audio)
     */
    int format;
    /** 1 -> keyframe, 0-> not */
    int key_frame;
    /** Picture type of the frame. */
    enum AVPictureType pict_type;
    /** Sample aspect ratio for the video frame, 0/1 if unknown/unspecified. */
    AVRational sample_aspect_ratio;
    /** Presentation timestamp in time_base units (time when frame should be shown to user). */
    int64_t pts;
#if FF_API_PKT_PTS
    /**
     * PTS copied from the AVPacket that was decoded to produce this frame.
     * @deprecated use the pts field instead
     */
    attribute_deprecated
    int64_t pkt_pts;
#endif
    /**
     * DTS copied from the AVPacket that triggered returning this frame. (if frame threading isn't used)
     * This is also the Presentation time of this AVFrame calculated from
     * only AVPacket.dts values without pts values.
     */
    int64_t pkt_dts;
    /** picture number in bitstream order */
    int coded_picture_number;
    /** picture number in display order */
    int display_picture_number;
    /** quality (between 1 (good) and FF_LAMBDA_MAX (bad)) */
    int quality;
    /** for some private data of the user */
    void *opaque;
#if FF_API_ERROR_FRAME
    /** @deprecated unused */
    attribute_deprecated
    uint64_t error[AV_NUM_DATA_POINTERS];
#endif
    /**
     * When decoding, this signals how much the picture must be delayed.
     * extra_delay = repeat_pict / (2*fps)
     */
    int repeat_pict;
    /** The content of the picture is interlaced. */
    int interlaced_frame;
    /** If the content is interlaced, is top field displayed first. */
    int top_field_first;
    /** Tell user application that palette has changed from previous frame. */
    int palette_has_changed;
    /**
     * reordered opaque 64 bits (generally an integer or a double precision float
     * PTS but can be anything).
     * The user sets AVCodecContext.reordered_opaque to represent the input at
     * that time,
     * the decoder reorders values as needed and sets AVFrame.reordered_opaque
     * to exactly one of the values provided by the user through AVCodecContext.reordered_opaque
     */
    int64_t reordered_opaque;
    /** Sample rate of the audio data. */
    int sample_rate;
    /** Channel layout of the audio data. */
    uint64_t channel_layout;
    /**
     * AVBuffer references backing the data for this frame. If all elements of
     * this array are NULL, then this frame is not reference counted. This array
     * must be filled contiguously -- if buf[i] is non-NULL then buf[j] must
     * also be non-NULL for all j < i.
     *
     * There may be at most one AVBuffer per data plane, so for video this array
     * always contains all the references. For planar audio with more than
     * AV_NUM_DATA_POINTERS channels, there may be more buffers than can fit in
     * this array. Then the extra AVBufferRef pointers are stored in the
     * extended_buf array.
     */
    AVBufferRef *buf[AV_NUM_DATA_POINTERS];
    /**
     * For planar audio which requires more than AV_NUM_DATA_POINTERS
     * AVBufferRef pointers, this array will hold all the references which
     * cannot fit into AVFrame.buf.
     *
     * Note that this is different from AVFrame.extended_data, which always
     * contains all the pointers. This array only contains the extra pointers,
     * which cannot fit into AVFrame.buf.
     *
     * This array is always allocated using av_malloc() by whoever constructs
     * the frame. It is freed in av_frame_unref().
     */
    AVBufferRef **extended_buf;
    /** Number of elements in extended_buf. */
    int nb_extended_buf;
    AVFrameSideData **side_data;
    int nb_side_data;
    /**
     * @defgroup lavu_frame_flags AV_FRAME_FLAGS
     * @ingroup lavu_frame
     * Flags describing additional frame properties.
     *
     * @{
     */
    /** The frame data may be corrupted, e.g. due to decoding errors. */
#define AV_FRAME_FLAG_CORRUPT (1 << 0)
    /** A flag to mark the frames which need to be decoded, but shouldn't be output. */
#define AV_FRAME_FLAG_DISCARD (1 << 2)
    /**
     * @}
     */
    /** Frame flags, a combination of @ref lavu_frame_flags */
    int flags;
    /**
     * MPEG vs JPEG YUV range.
     * - encoding: Set by user
     * - decoding: Set by libavcodec
     */
    enum AVColorRange color_range;
    enum AVColorPrimaries color_primaries;
    enum AVColorTransferCharacteristic color_trc;
    /**
     * YUV colorspace type.
     * - encoding: Set by user
     * - decoding: Set by libavcodec
     */
    enum AVColorSpace colorspace;
    enum AVChromaLocation chroma_location;
    /**
     * frame timestamp estimated using various heuristics, in stream time base
     * - encoding: unused
     * - decoding: set by libavcodec, read by user.
     */
    int64_t best_effort_timestamp;
    /**
     * reordered pos from the last AVPacket that has been input into the decoder
     * - encoding: unused
     * - decoding: Read by user.
     */
    int64_t pkt_pos;
    /**
     * duration of the corresponding packet, expressed in
     * AVStream->time_base units, 0 if unknown.
     * - encoding: unused
     * - decoding: Read by user.
     */
    int64_t pkt_duration;
    /**
     * metadata.
     * - encoding: Set by user.
     * - decoding: Set by libavcodec.
     */
    AVDictionary *metadata;
    /**
     * decode error flags of the frame, set to a combination of
     * FF_DECODE_ERROR_xxx flags if the decoder produced a frame, but there
     * were errors during the decoding.
     * - encoding: unused
     * - decoding: set by libavcodec, read by user.
     */
    int decode_error_flags;
#define FF_DECODE_ERROR_INVALID_BITSTREAM   1
#define FF_DECODE_ERROR_MISSING_REFERENCE   2
#define FF_DECODE_ERROR_CONCEALMENT_ACTIVE  4
#define FF_DECODE_ERROR_DECODE_SLICES       8
    /**
     * number of audio channels, only used for audio.
     * - encoding: unused
     * - decoding: Read by user.
     */
    int channels;
    /**
     * size of the corresponding packet containing the compressed
     * frame.
     * It is set to a negative value if unknown.
     * - encoding: unused
     * - decoding: set by libavcodec, read by user.
     */
    int pkt_size;
#if FF_API_FRAME_QP
    /** QP table */
    attribute_deprecated
    int8_t *qscale_table;
    /** QP store stride */
    attribute_deprecated
    int qstride;
    attribute_deprecated
    int qscale_type;
    attribute_deprecated
    AVBufferRef *qp_table_buf;
#endif
    /**
     * For hwaccel-format frames, this should be a reference to the
     * AVHWFramesContext describing the frame.
     */
    AVBufferRef *hw_frames_ctx;
    /**
     * AVBufferRef for free use by the API user. FFmpeg will never check the
     * contents of the buffer ref. FFmpeg calls av_buffer_unref() on it when
     * the frame is unreferenced. av_frame_copy_props() calls create a new
     * reference with av_buffer_ref() for the target frame's opaque_ref field.
     *
     * This is unrelated to the opaque field, although it serves a similar
     * purpose.
     */
    AVBufferRef *opaque_ref;
    /**
     * @anchor cropping
     * @name Cropping
     * Video frames only. The number of pixels to discard from the the
     * top/bottom/left/right border of the frame to obtain the sub-rectangle of
     * the frame intended for presentation.
     * @{
     */
    size_t crop_top;
    size_t crop_bottom;
    size_t crop_left;
    size_t crop_right;
    /**
     * @}
     */
    /**
     * AVBufferRef for internal use by a single libav* library.
     * Must not be used to transfer data between libraries.
     * Has to be NULL when ownership of the frame leaves the respective library.
     *
     * Code outside the FFmpeg libs should never check or change the contents of the buffer ref.
     *
     * FFmpeg calls av_buffer_unref() on it when the frame is unreferenced.
     * av_frame_copy_props() calls create a new reference with av_buffer_ref()
     * for the target frame's private_ref field.
     */
    AVBufferRef *private_ref;
} AVFrame;

Important fields:

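2.7.1. uint8_t *data[AV_NUM_DATA_POINTERS]: the raw data: YUV or RGB planes for video, PCM samples for audio.

2.7.2. int linesize[AV_NUM_DATA_POINTERS]: for video, the size in bytes of one line of each plane in data. Note that this value is not necessarily equal to the image width (or to the number of bytes of visible pixels in a row): rows are often padded for alignment, so linesize is frequently larger.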
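The sketch below shows why linesize matters when touching pixel data. It assumes a YUV420P picture whose rows are padded to a power-of-two alignment; ALIGN_UP uses the same formula as FFmpeg's FFALIGN macro, and copy_plane() follows the same idea as av_image_copy_plane(): copy only the visible bytes of each row and step by each plane's own linesize. The helper names are hypothetical, and FFmpeg's own allocators choose the actual alignment themselves.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Round x up to a multiple of a (a must be a power of two). */
#define ALIGN_UP(x, a) (((x) + (a) - 1) & ~((a) - 1))

/* Linesize of the Y plane and of the U/V planes of a YUV420P picture
 * whose rows are padded to `align` bytes. */
int yuv420p_luma_linesize(int width, int align)   { return ALIGN_UP(width, align); }
int yuv420p_chroma_linesize(int width, int align) { return ALIGN_UP((width + 1) / 2, align); }

/* Copy `height` rows of `bytewidth` visible bytes between two planes whose
 * rows are dst_linesize / src_linesize bytes apart; padding is skipped. */
void copy_plane(uint8_t *dst, int dst_linesize,
                const uint8_t *src, int src_linesize,
                int bytewidth, int height)
{
    assert(bytewidth <= dst_linesize && bytewidth <= src_linesize);
    for (int y = 0; y < height; y++) {
        memcpy(dst, src, (size_t)bytewidth);
        dst += dst_linesize;
        src += src_linesize;
    }
}
```

For a 1918-pixel-wide picture with 32-byte alignment, the luma linesize becomes 1920 and the chroma linesize 960, so treating data[0] as a tightly packed width × height array would read the wrong bytes from the second row on.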

2.7.3. int width, height: the width and height of the video frame.

2.7.4. int nb_samples: the number of audio samples per channel contained in this frame (for AAC-LC typically 1024).
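nb_samples together with the channel count and sample format determines how large an audio frame's buffers are. A minimal sketch with hypothetical helper names, ignoring the alignment padding that FFmpeg's av_samples_get_buffer_size() can add: packed (interleaved) formats such as S16 keep all channels in one plane, planar formats such as FLTP keep one plane per channel.

```c
#include <assert.h>

/* Bytes in one plane of an audio frame (no alignment padding). */
int audio_plane_size(int nb_samples, int channels, int bytes_per_sample, int planar)
{
    assert(nb_samples >= 0 && channels > 0 && bytes_per_sample > 0);
    return planar ? nb_samples * bytes_per_sample             /* one channel per plane */
                  : nb_samples * channels * bytes_per_sample; /* all channels interleaved */
}

/* Number of planes (data[] entries) used by the frame. */
int audio_plane_count(int channels, int planar)
{
    assert(channels > 0);
    return planar ? channels : 1;
}
```

For 1024 stereo samples, packed S16 needs a single 4096-byte plane, while planar float needs two planes of 4096 bytes each.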

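2.7.5. int format: the format of the raw data, e.g. YUV420P, YUV422P or RGB24 for video (an AVPixelFormat value) or an AVSampleFormat value for audio; -1 if unknown.

2.7.6. int key_frame: 1 if the frame is a keyframe, 0 otherwise.

2.7.7. enum AVPictureType pict_type: the picture type (I, P or B frame, etc.). AVPictureType is defined as: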

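enum AVPictureType {
    AV_PICTURE_TYPE_NONE = 0, ///< Undefined
    AV_PICTURE_TYPE_I,        ///< Intra
    AV_PICTURE_TYPE_P,        ///< Predicted
    AV_PICTURE_TYPE_B,        ///< Bi-dir predicted
    AV_PICTURE_TYPE_S,        ///< S(GMC)-VOP MPEG-4
    AV_PICTURE_TYPE_SI,       ///< Switching Intra
    AV_PICTURE_TYPE_SP,       ///< Switching Predicted
    AV_PICTURE_TYPE_BI,       ///< BI type
};

2.7.8. AVRational sample_aspect_ratio: the sample (pixel) aspect ratio, i.e. the shape of a single pixel, 0/1 if unknown. It is not the display aspect ratio of the picture such as 16:9 or 4:3; that follows from combining it with the frame size: DAR = (width × SAR.num) : (height × SAR.den).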
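The DAR formula above can be sketched as follows. Rational stands in for AVRational, and display_aspect_ratio() is a hypothetical helper that reduces the result by its greatest common divisor (FFmpeg has av_reduce() for this). Treating an unknown SAR (0/1) as square pixels is an assumption of this sketch.

```c
#include <assert.h>
#include <stdint.h>

/* Stand-in for AVRational. */
typedef struct { int num, den; } Rational;

static int64_t gcd64(int64_t a, int64_t b)
{
    while (b != 0) {
        int64_t t = a % b;
        a = b;
        b = t;
    }
    return a;
}

/* DAR = (width * sar.num) : (height * sar.den), reduced to lowest terms. */
Rational display_aspect_ratio(int width, int height, Rational sar)
{
    assert(width > 0 && height > 0);
    if (sar.num == 0 || sar.den == 0)
        sar = (Rational){ 1, 1 }; /* unknown SAR: assume square pixels */
    int64_t num = (int64_t)width * sar.num;
    int64_t den = (int64_t)height * sar.den;
    int64_t g = gcd64(num, den);
    return (Rational){ (int)(num / g), (int)(den / g) };
}
```

For instance, 1920x1080 with square pixels gives 16:9, while 720x576 with SAR 16:15 gives 4:3.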

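2.7.9. int64_t pts: the presentation timestamp, in time_base units.

2.7.10. int coded_picture_number: the picture number in bitstream (coding) order.

2.7.11. int display_picture_number: the picture number in display order.

2.8. The AVIOContext structure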

AVIOContext is the structure FFmpeg uses to manage input and output. Let's look at its more important members:

typedef struct {
    /**
     * A class for private options.
     *
     * If this AVIOContext is created by avio_open2(), av_class is set and
     * passes the options down to protocols.
     *
     * If this AVIOContext is manually allocated, then av_class may be set by
     * the caller.
     *
     * warning -- this field can be NULL, be sure to not pass this AVIOContext
     * to any av_opt_* functions in that case.
     */
    AVClass *av_class;
    unsigned char *buffer;  /**< Start of the buffer. */
    int buffer_size;        /**< Maximum buffer size */
    unsigned char *buf_ptr; /**< Current position in the buffer */
    unsigned char *buf_end; /**< End of the data, may be less than
                                 buffer+buffer_size if the read function returned
                                 less data than requested, e.g. for streams where
                                 no more data has been received yet. */
    void *opaque;           /**< A private pointer, passed to the read/write/seek/...
                                 functions. */
    int (*read_packet)(void *opaque, uint8_t *buf, int buf_size);
    int (*write_packet)(void *opaque, uint8_t *buf, int buf_size);
    int64_t (*seek)(void *opaque, int64_t offset, int whence);
    int64_t pos;            /**< position in the file of the current buffer */
    int must_flush;         /**< true if the next seek should flush */
    int eof_reached;        /**< true if eof reached */
    int write_flag;         /**< true if open for writing */
    int max_packet_size;
    unsigned long checksum;
    unsigned char *checksum_ptr;
    unsigned long (*update_checksum)(unsigned long checksum, const uint8_t *buf, unsigned int size);
    int error;              /**< contains the error code or 0 if no error happened */
    /**
     * Pause or resume playback for network streaming protocols - e.g. MMS.
     */
    int (*read_pause)(void *opaque, int pause);
    /**
     * Seek to a given timestamp in stream with the specified stream_index.
     * Needed for some network streaming protocols which don't support seeking
     * to byte position.
     */
    int64_t (*read_seek)(void *opaque, int stream_index,
                         int64_t timestamp, int flags);
    /**
     * A combination of AVIO_SEEKABLE_ flags or 0 when the stream is not seekable.
     */
    int seekable;
    /**
     * max filesize, used to limit allocations
     * This field is internal to libavformat and access from outside is not allowed.
     */
    int64_t maxsize;
} AVIOContext;

Important fields:

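2.8.1. unsigned char *buffer: the start of the buffer.

2.8.2. int buffer_size: the maximum size of the buffer.

2.8.3. unsigned char *buf_end: the end of the data in the buffer; when reading, it may be less than buffer + buffer_size if the read function returned less data than requested.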
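To see how buffer, buf_ptr, buf_end, opaque and write_packet work together on the writing side, here is a deliberately simplified toy model. ToyIO reuses the AVIOContext field names but is not FFmpeg's implementation (the real logic lives in avio_write() and avio_flush()): bytes are staged in the buffer and handed to write_packet(opaque, ...) whenever the buffer is full or on an explicit flush. MemSink, mem_write_packet() and toy_demo_calls() are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

typedef struct {
    unsigned char *buffer;  /* start of the staging buffer        */
    int buffer_size;        /* capacity of the buffer             */
    unsigned char *buf_ptr; /* next free byte                     */
    unsigned char *buf_end; /* buffer + buffer_size when writing  */
    void *opaque;           /* passed through to write_packet     */
    int (*write_packet)(void *opaque, uint8_t *buf, int buf_size);
} ToyIO;

void toy_init(ToyIO *s, unsigned char *buf, int size, void *opaque,
              int (*write_packet)(void *, uint8_t *, int))
{
    s->buffer = s->buf_ptr = buf;
    s->buffer_size = size;
    s->buf_end = buf + size;
    s->opaque = opaque;
    s->write_packet = write_packet;
}

/* Hand any staged bytes to write_packet and reset buf_ptr. */
void toy_flush(ToyIO *s)
{
    int n = (int)(s->buf_ptr - s->buffer);
    if (n > 0)
        s->write_packet(s->opaque, s->buffer, n);
    s->buf_ptr = s->buffer;
}

/* Stage data in the buffer, flushing each time it becomes full. */
void toy_write(ToyIO *s, const unsigned char *data, int len)
{
    while (len > 0) {
        int room = (int)(s->buf_end - s->buf_ptr);
        int n = len < room ? len : room;
        memcpy(s->buf_ptr, data, (size_t)n);
        s->buf_ptr += n;
        data += n;
        len -= n;
        if (s->buf_ptr == s->buf_end)
            toy_flush(s);
    }
}

/* Example sink: what `opaque` points to in this demo. */
typedef struct { unsigned char data[64]; int size; int calls; } MemSink;

int mem_write_packet(void *opaque, uint8_t *buf, int buf_size)
{
    MemSink *sink = opaque;
    assert(sink->size + buf_size <= (int)sizeof sink->data);
    memcpy(sink->data + sink->size, buf, (size_t)buf_size);
    sink->size += buf_size;
    sink->calls++;
    return buf_size;
}

/* Write `total` bytes through a `buffer_size`-byte buffer, flush,
 * and return how many times write_packet was called. */
int toy_demo_calls(int buffer_size, int total)
{
    unsigned char buf[64], data[64];
    MemSink sink = { .size = 0, .calls = 0 };
    ToyIO io;
    assert(buffer_size > 0 && buffer_size <= 64 && total >= 0 && total <= 64);
    for (int i = 0; i < total; i++)
        data[i] = (unsigned char)i;
    toy_init(&io, buf, buffer_size, &sink, mem_write_packet);
    toy_write(&io, data, total);
    toy_flush(&io);
    assert(sink.size == total && memcmp(sink.data, data, (size_t)total) == 0);
    return sink.calls;
}
```

With real FFmpeg, a custom sink like this would be plugged in through avio_alloc_context(buffer, buffer_size, 1, opaque, NULL, write_cb, NULL) and assigned to AVFormatContext.pb.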

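2.8.4. void *opaque: a private pointer passed to the read/write/seek callbacks. When libavformat opens the context itself (e.g. with avio_open2()), opaque leads to the URLContext of the underlying protocol, either directly or through a small internal wrapper depending on the FFmpeg version. Let's look at URLContext in detail.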

URLContext members:

typedef struct URLContext {
    const AVClass *av_class;    ///< information for av_log(). Set by url_open().
    struct URLProtocol *prot;
    int flags;
    int is_streamed;            /**< true if streamed (no seek possible), default = false */
    int max_packet_size;        /**< if non zero, the stream is packetized with this max packet size */
    void *priv_data;
    char *filename;             /**< specified URL */
    int is_connected;
    AVIOInterruptCB interrupt_callback;
} URLContext;

2.8.4.1. AVClass: used in many places in FFmpeg. It plays a role loosely similar to a base class in C++: any struct whose first member is a const AVClass * can be passed to generic functions such as av_log() and the av_opt_*() family, which use the AVClass to obtain the class name and the table of options (AVOption) that the struct supports.

2.8.4.2. URLProtocol: each protocol (file, tcp, rtmp, ...) is described by one URLProtocol. This structure is not in FFmpeg's public headers either; it is internal to libavformat (url.h). Its definition:

typedef struct URLProtocol {
    const char *name;
    int     (*url_open)(URLContext *h, const char *url, int flags);
    int     (*url_read)(URLContext *h, unsigned char *buf, int size);
    int     (*url_write)(URLContext *h, const unsigned char *buf, int size);
    int64_t (*url_seek)(URLContext *h, int64_t pos, int whence);
    int     (*url_close)(URLContext *h);
    struct URLProtocol *next;
    int (*url_read_pause)(URLContext *h, int pause);
    int64_t (*url_read_seek)(URLContext *h, int stream_index,
                             int64_t timestamp, int flags);
    int (*url_get_file_handle)(URLContext *h);
    int priv_data_size;
    const AVClass *priv_data_class;
    int flags;
    int (*url_check)(URLContext *h, int mask);
} URLProtocol;

2.8.4.2.1. url_open: open the resource at the given URL.

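2.8.4.2.2. url_read: read data from the opened resource.

2.8.4.2.3. url_write: write data to the opened resource.

2.8.4.2.4. url_seek: move the read/write position.

2.8.4.2.5. url_close: close the resource.

The structure therefore consists mainly of callback function pointers, and each protocol provides its own implementation of them. Relationship diagram: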