Using Koa to call the DeepSeek API and build an AI chat for the front end

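The flow: the front end calls a chat endpoint, and that endpoint acts as a relay. We use Node.js (Koa makes this quick and convenient) to call the DeepSeek model and fetch the result. To run the DeepSeek model locally, you first need to download and install Ollama.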

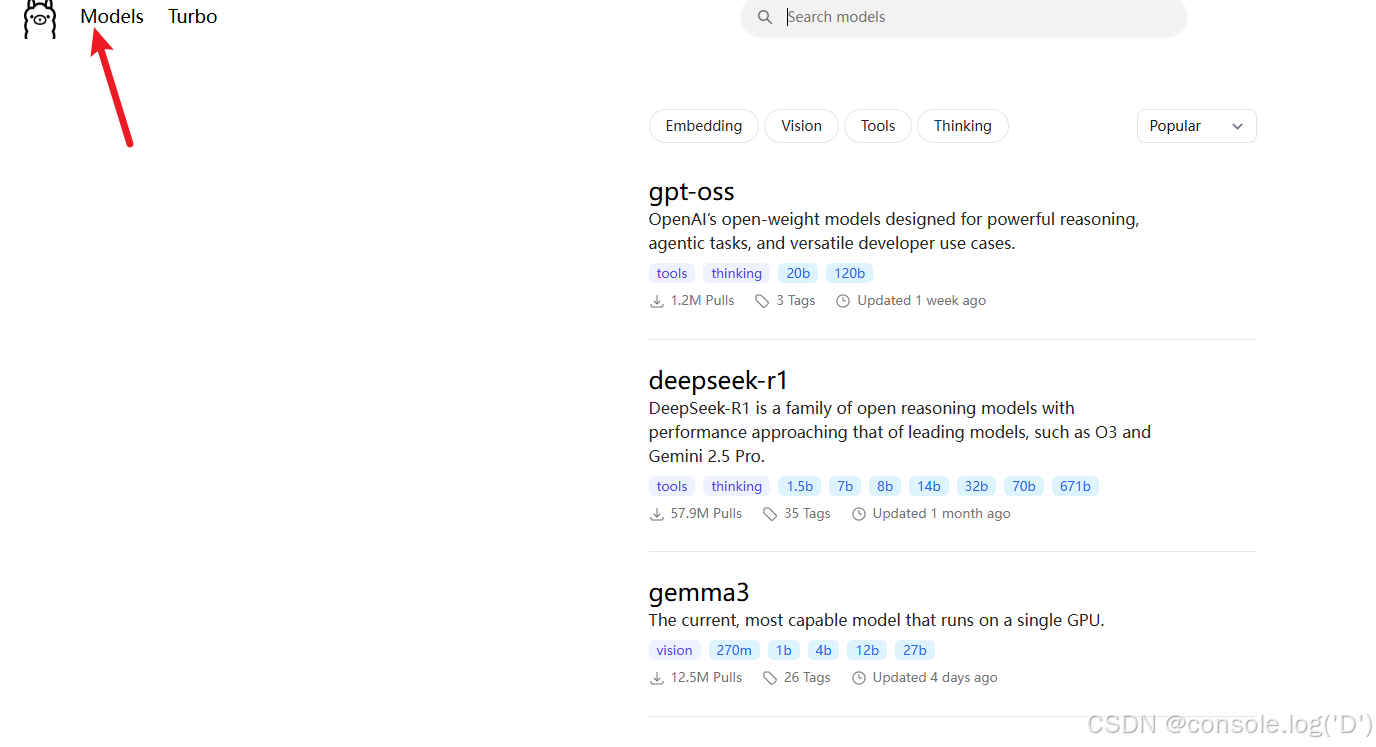

2. After the installation succeeds, download the model.

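3. Open a command window (for example, press Win+R and run cmd), then start the model with the command ollama run deepseek-r1:1.xxxxxx, using the tag that matches the model you downloaded.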
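Before starting the Koa relay, it can help to confirm that Ollama is running and that the model is installed. The sketch below assumes Node 18+ (for the global fetch) and Ollama's default local address http://127.0.0.1:11434. The names hasModel and checkOllama are hypothetical helpers for illustration, not part of Ollama.

```javascript
// Hypothetical helper: does an /api/tags response list the given model?
function hasModel(tagsResponse, modelName) {
  const models = (tagsResponse && tagsResponse.models) || [];
  return models.some((m) => m.name === modelName);
}

// Assumes Node 18+ (global fetch) and Ollama's default local address.
async function checkOllama(modelName, baseUrl = 'http://127.0.0.1:11434') {
  const res = await fetch(`${baseUrl}/api/tags`);
  if (!res.ok) {
    throw new Error(`Ollama responded with HTTP ${res.status}`);
  }
  return hasModel(await res.json(), modelName);
}
```

For example, checkOllama('deepseek-r1:1.5b').then(console.log) should log true once the model used later in this post has been pulled, and it rejects if Ollama is not reachable.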

1. Koa code (the relay, effectively the back end, which calls DeepSeek)

const Koa = require('koa');
const Router = require('koa-router');
const axios = require('axios');
const bodyParser = require('koa-bodyparser'); // request body parsing middleware

// Create the app and router instances
const app = new Koa();
const router = new Router();

app.use(bodyParser());
app.use(router.routes()).use(router.allowedMethods());

// Import data
// const indexData = require('./datas/index.json')

// Address of the local Ollama service
const OLLAMA_BASE_URL = 'http://127.0.0.1:11434';

// Get the list of models
router.get('/models', async (ctx) => {
  try {
    const response = await axios.get(`${OLLAMA_BASE_URL}/api/tags`);
    ctx.body = {
      success: true,
      models: response.data.models,
    };
  } catch (error) {
    ctx.status = 500;
    ctx.body = {
      success: false,
      error: error.message,
    };
  }
});

// Chat with the DeepSeek model

router.post('/api/chat', async (ctx) => {
  try {
    const { prompt } = ctx.request.body;
    if (!prompt) {
      ctx.status = 400;
      ctx.body = { error: 'Prompt is required' };
      return;
    }
    const response = await axios.post(`${OLLAMA_BASE_URL}/api/generate`, {
      model: 'deepseek-r1:1.5b',
      prompt,
      stream: false, // set to true if you need a streaming response
    });
    ctx.body = {
      code: 200,
      success: true,
      response: response.data,
    };
  } catch (error) {
    console.error('Ollama API error:', error);
    ctx.status = 500;
    ctx.body = {
      error: 'Failed to get response from Ollama',
      details: error.message,
    };
  }
});

// Listen on a port

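const server = app.listen(3001, '0.0.0.0', () => {
  console.log('Server started at 127.0.0.1:3001');
});

// The listen callback receives no error argument, so startup failures
// (for example, the port already being in use) arrive via the 'error' event.
server.on('error', (error) => {
  console.log('Server failed to start', error);
});

2. Front-end code: just call the endpoint exposed by Koa as usual (focus on the usage; adjust the parameters to your needs)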

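// Send a chat message
onChatSubmit() {
  let keyword = this.chatKeyword;
  this.chatKeyword = "";
  // Show the user's own message in the chat list first
  this.chatAiList.push({
    ai: "",
    my: keyword || "",
  });
  chatApi
    .chat({ prompt: keyword || "" })
    .then((res) => {
      // res.response is the Ollama payload; its response field holds the generated text
      this.aiChatWord = res?.response?.response || "";
      this.chatAiList.push({
        ai: this.aiChatWord || "",
        my: "",
      });
    })
    .catch((err) => {
      console.error("Chat request failed:", err);
    });
},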
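The post does not show how chatApi is implemented, so here is one possible shape for it, as a minimal sketch only. createChatApi, baseUrl, and fetchImpl are hypothetical names. The sketch assumes the page can reach the relay on port 3001 (same origin, a dev proxy, or CORS enabled on the Koa side) and that fetch is available (browsers or Node 18+).

```javascript
// Hypothetical chatApi wrapper; the original post does not show its implementation.
// fetchImpl is injectable so the wrapper can be exercised without a running server.
function createChatApi(baseUrl = 'http://127.0.0.1:3001', fetchImpl = globalThis.fetch) {
  return {
    async chat({ prompt }) {
      const res = await fetchImpl(`${baseUrl}/api/chat`, {
        method: 'POST',
        headers: { 'Content-Type': 'application/json' },
        body: JSON.stringify({ prompt }),
      });
      if (!res.ok) {
        throw new Error(`Chat request failed with HTTP ${res.status}`);
      }
      // Resolves to the relay's JSON body: { code, success, response },
      // where response.response is the text generated by the model.
      return res.json();
    },
  };
}

const chatApi = createChatApi();
```

With this shape, chatApi.chat({ prompt }) resolves to the body returned by the Koa route, which is why the handler above reads res.response.response.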

If the AI's reply appears in the chat list, the call succeeded.

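The comment next to stream: false in the Koa route mentions streaming. With stream: true, Ollama's /api/generate returns newline-delimited JSON, where each line carries a response text fragment and a done flag. The following is a minimal sketch of assembling those fragments. createStreamCollector is a hypothetical helper, and the sample chunks are made up for illustration. How the chunks are obtained (for instance, axios with responseType: 'stream') is left out here.

```javascript
// Hypothetical helper: accumulate text from Ollama's NDJSON stream.
// Network chunks can end mid-line, so an incomplete trailing line is
// buffered until the rest of it arrives.
function createStreamCollector() {
  let buffer = '';
  let text = '';
  return {
    push(chunk) {
      buffer += chunk;
      const lines = buffer.split('\n');
      buffer = lines.pop(); // keep the (possibly incomplete) last line
      for (const line of lines) {
        if (!line.trim()) continue;
        text += JSON.parse(line).response || '';
      }
      return text; // text assembled so far
    },
  };
}

// Made-up sample chunks; the second JSON line is split across two chunks.
const collector = createStreamCollector();
collector.push('{"response":"Hel","done":false}\n{"respo');
console.log(collector.push('nse":"lo","done":false}\n')); // prints: Hello
```

Each call to push returns the text assembled so far, so the relay or the front end could forward partial text as it arrives instead of waiting for the full reply.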