AI Competitor Intelligence Agent Team

GitHub:

Shubhamsaboo/awesome-llm-apps: Collection of awesome LLM apps with AI Agents and RAG using OpenAI, Anthropic, Gemini and opensource models.

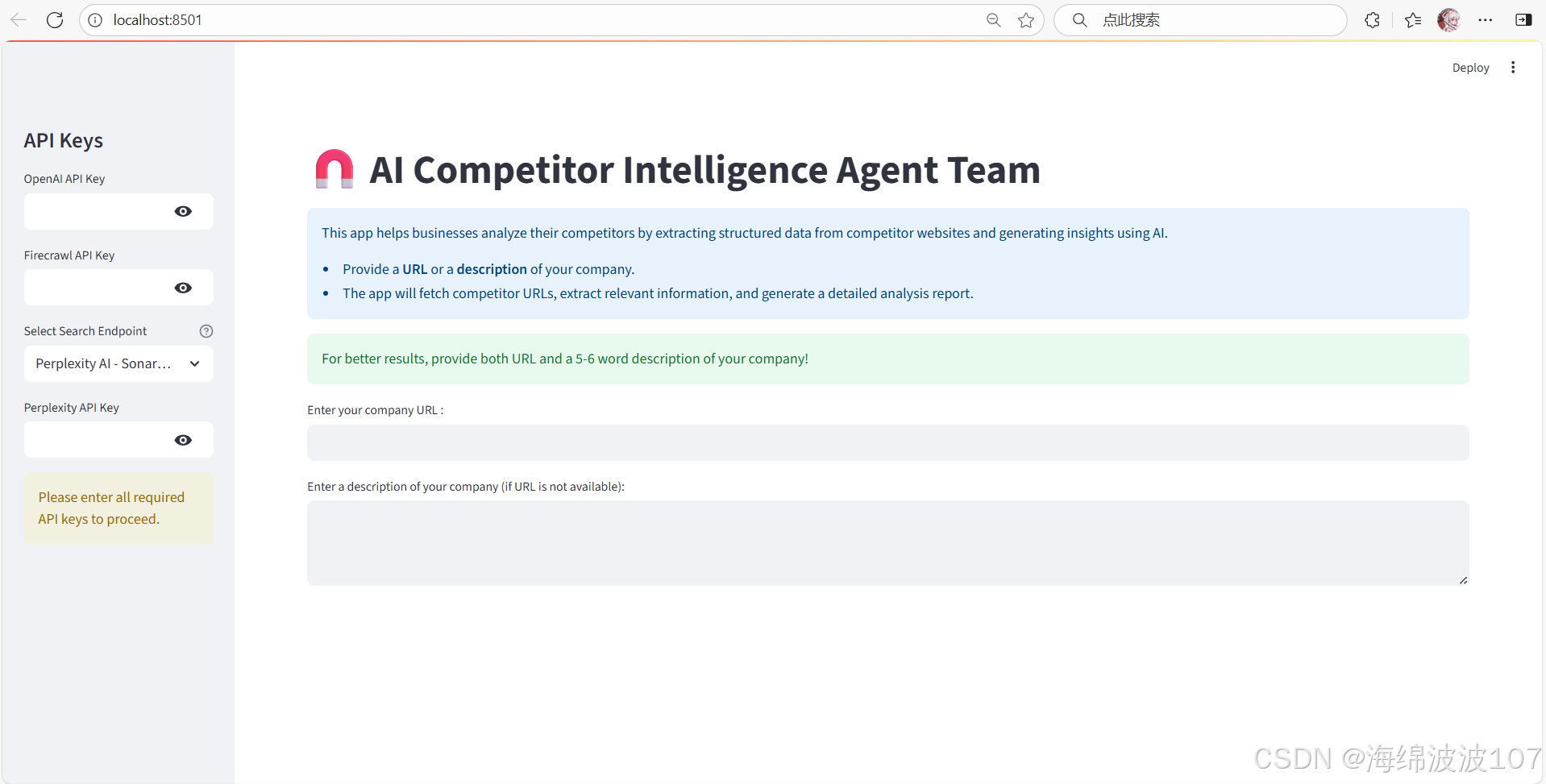

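After entering the three API keys, I used 艾可蓝集团 (Aikelan Group) as the example company and generated a competitor report.

The app gathers information with a search engine plus a crawler and then writes the report.

The result is only passable: it has the right shape, but the analysis is shallow and there is plenty of room for improvement. First, though, let's look at how the current implementation works: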

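1. Overall Architecture

The app is modular and splits into four main parts:

- User interface layer: a web UI built with Streamlit
- API integration layer: wraps several external services (OpenAI, Exa, Perplexity, Firecrawl, and others)
- Business logic layer: the core competitor-analysis logic
- Data model layer: data structures defined with Pydantic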

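2. Main Components

2.1 User Interface

```python
import streamlit as st

# Streamlit UI configuration
st.set_page_config(page_title="AI Competitor Intelligence Agent Team", layout="wide")

# Sidebar: API key inputs
st.sidebar.title("API Keys")
openai_api_key = st.sidebar.text_input("OpenAI API Key", type="password")
firecrawl_api_key = st.sidebar.text_input("Firecrawl API Key", type="password")

# Main area
st.title("🧲 AI Competitor Intelligence Agent Team")
st.info("App description...")
```

The UI is built with Streamlit and includes:

- A sidebar for entering the API keys
- A main area showing the app title and description
- Input fields for the company URL or description
- An "Analyze Competitors" button that triggers the whole analysis pipeline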
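In the full source code, the sidebar asks for a different third key depending on the selected search engine, and only stores the keys in `st.session_state` once all three are filled in; otherwise it shows a warning. A minimal, Streamlit-free sketch of that rule, so it can be tested on its own. `REQUIRED_KEYS` and `missing_api_keys` are hypothetical names; only the two option labels come from the original app:

```python
from typing import Dict, List

# Hypothetical mapping: which keys each search-engine option needs.
# The option labels match the selectbox options in the original app.
REQUIRED_KEYS: Dict[str, List[str]] = {
    "Perplexity AI - Sonar Pro": ["openai_api_key", "firecrawl_api_key", "perplexity_api_key"],
    "Exa AI": ["openai_api_key", "firecrawl_api_key", "exa_api_key"],
}


def missing_api_keys(search_engine: str, keys: Dict[str, str]) -> List[str]:
    """Return the names of required keys that are absent or blank for this engine."""
    if search_engine not in REQUIRED_KEYS:
        raise ValueError(f"Unknown search engine: {search_engine!r}")
    return [name for name in REQUIRED_KEYS[search_engine] if not keys.get(name, "").strip()]


# Placeholder values, not real keys.
entered = {"openai_api_key": "sk-...", "firecrawl_api_key": "fc-...", "exa_api_key": ""}
print(missing_api_keys("Exa AI", entered))  # ['exa_api_key']
```

In the app, a non-empty result would correspond to the existing `st.sidebar.warning(...)` branch, and an empty one to copying the keys into `st.session_state`.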

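2.2 Core Modules

2.2.1 Fetching Competitor URLs

```python
# search_engine is the value chosen in the sidebar selectbox
def get_competitor_urls(url: str = None, description: str = None) -> list[str]:
    if search_engine == "Perplexity AI - Sonar Pro":
        # Use the Perplexity API to get URLs of similar companies
        ...
    else:  # Exa AI
        # Use the Exa API to get URLs of similar companies
        ...
```

Depending on the search engine the user picks (Perplexity or Exa), this function calls the corresponding API to get competitor URLs. It can find similar companies from either a company URL or a description. The Agno framework also provides powerful crawling tools: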

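```python
import streamlit as st
from agno.agent import Agent
from agno.models.openai import OpenAIChat
from agno.tools.duckduckgo import DuckDuckGoTools

# firecrawl_tools is the FirecrawlTools instance created earlier in the full source code
firecrawl_agent = Agent(
    model=OpenAIChat(id="gpt-4o", api_key=st.session_state.openai_api_key),
    tools=[firecrawl_tools, DuckDuckGoTools()],  # search tool + crawl/extract tool
    show_tool_calls=True,
    markdown=True,
)
```

- `DuckDuckGoTools`: discovers and searches for competitor URLs
- `FirecrawlTools`: crawls those URLs and extracts detailed data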

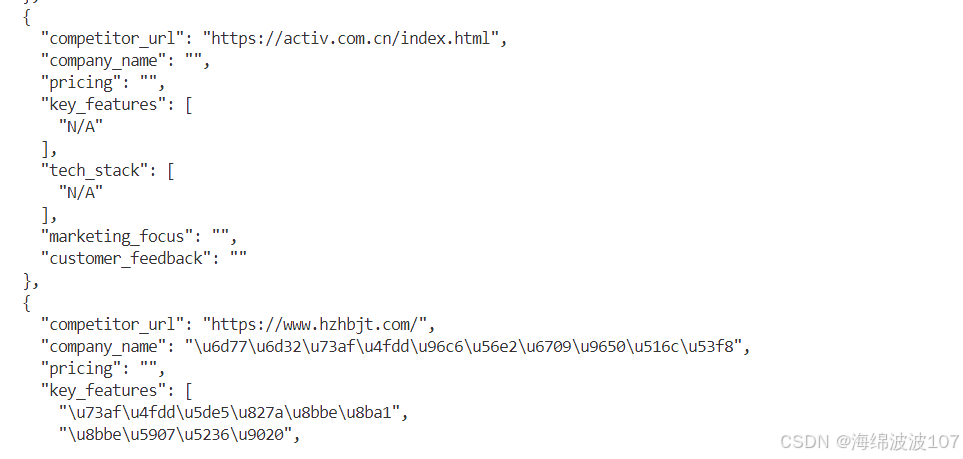
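Back in `get_competitor_urls`, the Perplexity branch in the full source code builds the query from whichever of URL and description is available, then splits the model's reply on newlines. If the model ignores the "ONLY RESPOND WITH THE URLS" instruction and adds numbering or Markdown links, those fragments end up in the returned list. A hedged sketch of both steps as pure functions: `build_query` mirrors the original string assembly, while `extract_urls` is a possible regex-based hardening, not the original behaviour. Both names are hypothetical:

```python
import re
from typing import List, Optional

# Loose http(s) URL pattern: stops at whitespace, brackets, parentheses and quotes,
# so a Markdown link like [Acme](https://acme.example) yields just the URL.
URL_PATTERN = re.compile(r"https?://[^\s<>()\[\]\"']+")


def build_query(url: Optional[str] = None, description: Optional[str] = None) -> str:
    """Assemble the user message the same way the original Perplexity branch does."""
    if not url and not description:
        raise ValueError("Please provide either a URL or a description.")
    content = "Find me 3 competitor company URLs similar to the company with "
    if url and description:
        content += f"URL: {url} and description: {description}"
    elif url:
        content += f"URL: {url}"
    else:
        content += f"description: {description}"
    return content + ". ONLY RESPOND WITH THE URLS, NO OTHER TEXT."


def extract_urls(reply: str, limit: int = 3) -> List[str]:
    """Pull bare URLs out of a model reply, dropping numbering, Markdown and duplicates."""
    urls: List[str] = []
    for match in URL_PATTERN.findall(reply):
        cleaned = match.rstrip(".,;:")  # trailing sentence punctuation is not part of the URL
        if cleaned not in urls:
            urls.append(cleaned)
    return urls[:limit]


reply = "1. https://example.com\n2. [Acme](https://acme.example).\n3. https://example.com"
print(extract_urls(reply))  # ['https://example.com', 'https://acme.example']
```

The original `split('\n')` works when the model follows instructions exactly; matching URLs instead of lines simply tolerates the cases where it does not.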

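2.2.2 Extracting Competitor Information

```python
from typing import List, Optional

from pydantic import BaseModel, Field


class CompetitorDataSchema(BaseModel):
    company_name: str = Field(description="Company name")
    pricing: str = Field(description="Pricing details")
    key_features: List[str] = Field(description="Key features")
    tech_stack: List[str] = Field(description="Tech stack")
    marketing_focus: str = Field(description="Marketing focus")
    customer_feedback: str = Field(description="Customer feedback")


def extract_competitor_info(competitor_url: str) -> Optional[dict]:
    # Use Firecrawl to extract information from the website
    ...
```

This part defines the data model for competitor information and uses the Firecrawl API to pull structured information from each competitor's website.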

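Why does Firecrawl need a prompt?

Because Firecrawl performs AI-driven extraction rather than traditional web scraping.

AI-driven data extraction

Traditional crawlers vs. AI crawlers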

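| Traditional crawler | Firecrawl AI crawler |
|---|---|
| Locates data by HTML tags | Extracts data through semantic analysis |
| Needs precise CSS selectors | Accepts natural-language descriptions |
| Breaks easily when the site is redesigned | Adapts to structural changes |
| Can only extract fixed formats | Understands context and meaning |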
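To make the left-hand column concrete, here is a toy selector-style scraper built on Python's standard `html.parser`: it grabs the text of elements carrying a given CSS class, which is exactly the dependency on markup that breaks when a site is redesigned. It is an illustrative sketch, not code from the project; the HTML snippets and class names are made up, and it only handles text placed directly inside the matching tag:

```python
from html.parser import HTMLParser
from typing import List


class ClassTextScraper(HTMLParser):
    """Collect the first text chunk directly inside tags whose class list contains css_class."""

    def __init__(self, css_class: str) -> None:
        super().__init__()
        self.css_class = css_class
        self.capture = False
        self.values: List[str] = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; class may hold several names
        classes = (dict(attrs).get("class") or "").split()
        self.capture = self.css_class in classes

    def handle_data(self, data):
        if self.capture and data.strip():
            self.values.append(data.strip())
            self.capture = False


def scrape(html: str, css_class: str) -> List[str]:
    parser = ClassTextScraper(css_class)
    parser.feed(html)
    return parser.values


old_page = '<div><span class="price">$29/month</span></div>'
new_page = '<div><span class="plan-cost">$29/month</span></div>'  # same content, renamed class
print(scrape(old_page, "price"))  # ['$29/month']
print(scrape(new_page, "price"))  # []
```

An extraction prompt instead describes *what* to find ("pricing details, plans, and tiers") rather than *where* it sits in the markup, which is why the right-hand column does not depend on class names.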

The core role of the prompt

1. Guide the AI to understand the requirements precisely

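```python
extraction_prompt = """
Extract detailed information about the company's offerings, including:
- Company name and basic information
- Pricing details, plans, and tiers
- Key features and main capabilities
- Technology stack and technical details
- Marketing focus and target audience
- Customer feedback and testimonials

Analyze the entire website content to provide comprehensive information for each field.
"""
```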

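2. Standardize the output structure

```python
import streamlit as st
from firecrawl import FirecrawlApp

# extraction_prompt and CompetitorDataSchema are defined above
app = FirecrawlApp(api_key=st.session_state.firecrawl_api_key)
url_pattern = f"{competitor_url}/*"  # wildcard so subpages are included

response = app.extract(
    [url_pattern],
    prompt=extraction_prompt,
    schema=CompetitorDataSchema.model_json_schema(),  # defines the structured output
)
```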

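Why the prompt is necessary

1. Semantic recognition

- Correctly identify how "price" is expressed in different contexts

- Understand synonyms such as "fee" and "plan"

2. Contextual linking

- Link related information across pages

- Tell "core features" apart from "add-on features"

3. Data categorization

- Make sure information is classified accurately

- Keep the data consistent

By combining a traditional crawler's data collection with AI's semantic understanding, Firecrawl provides an intelligent data-extraction solution.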

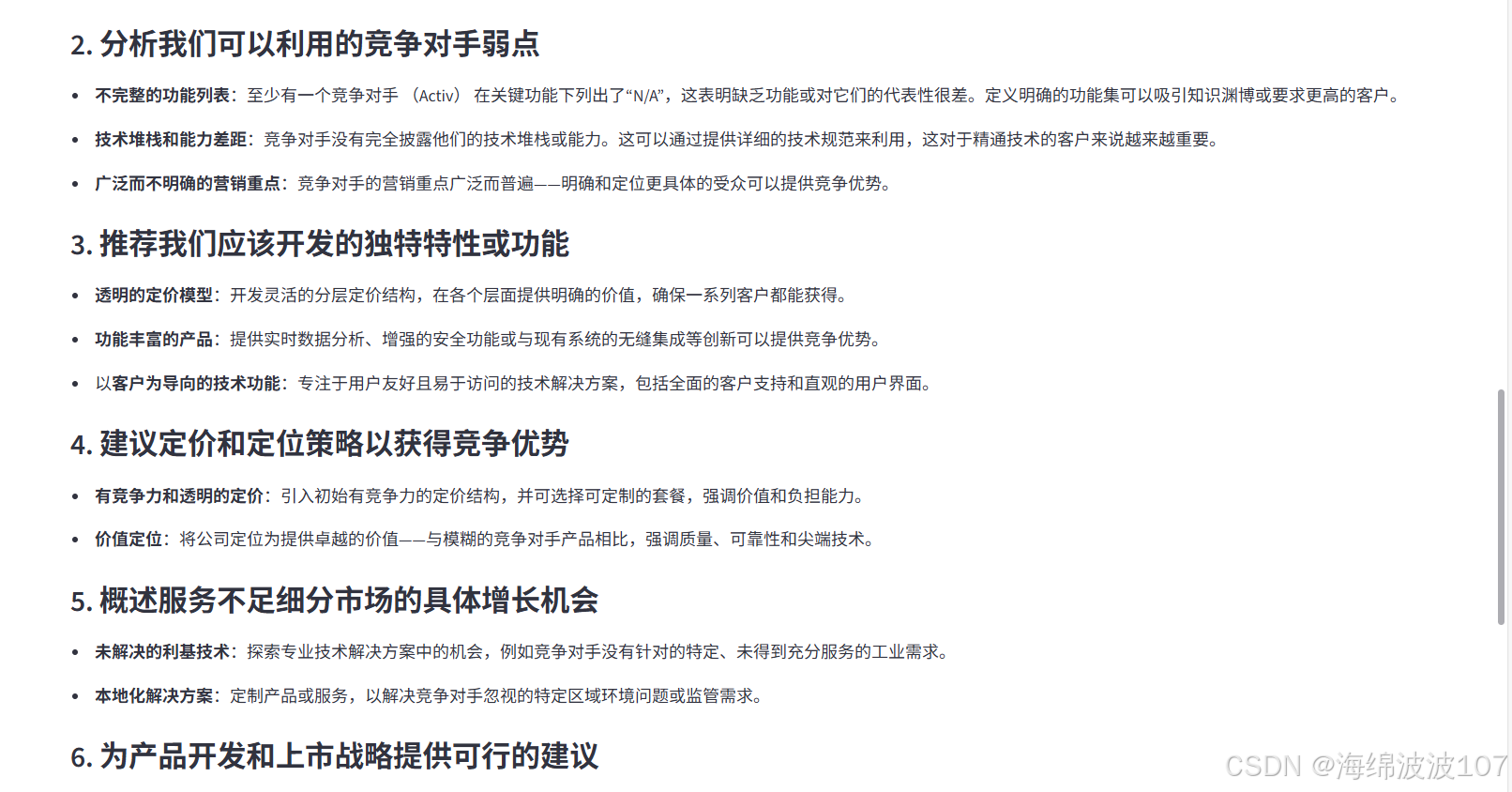
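In the full source code, `extract_competitor_info` converts `response.data` into a flat record using one long `isinstance(..., dict)` / `getattr` ternary per field, because the data may come back either as a dict or as an object. A hedged refactoring sketch of the same idea with a single accessor; `normalize_competitor` and `_field` are hypothetical names. It keeps the `"N/A"` defaults and the 5-item cap on list fields, but simplifies the edge cases (for example, an empty list always becomes `["N/A"]`), so it is close to, not identical with, the original behaviour:

```python
from types import SimpleNamespace
from typing import Any, Dict, List

TEXT_FIELDS = ("company_name", "pricing", "marketing_focus", "customer_feedback")
LIST_FIELDS = ("key_features", "tech_stack")


def _field(data: Any, name: str) -> Any:
    """Read a field from either a dict or an attribute-style object; None if absent."""
    if isinstance(data, dict):
        return data.get(name)
    return getattr(data, name, None)


def normalize_competitor(competitor_url: str, data: Any, max_items: int = 5) -> Dict[str, Any]:
    """Flatten extracted data into the record used by the table and the analysis prompt."""
    record: Dict[str, Any] = {"competitor_url": competitor_url}
    for name in TEXT_FIELDS:
        record[name] = _field(data, name) or "N/A"  # missing, None or "" -> "N/A"
    for name in LIST_FIELDS:
        items: List[Any] = list(_field(data, name) or [])
        record[name] = items[:max_items] if items else ["N/A"]
    return record


as_dict = {"company_name": "Acme", "key_features": ["a", "b", "c", "d", "e", "f"]}
as_object = SimpleNamespace(company_name="Beta", pricing="$10/month", tech_stack=["Python"])

print(normalize_competitor("https://acme.example", as_dict)["key_features"])  # ['a', 'b', 'c', 'd', 'e']
print(normalize_competitor("https://beta.example", as_object)["pricing"])  # $10/month
```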

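2.2.3 Analysis and Report Generation

```python
def generate_comparison_report(competitor_data: list) -> None:
    # Build the comparison table
    ...


def generate_analysis_report(competitor_data: list):
    # Use AI to generate the analysis report
    ...
```

These two functions are responsible for:

- Turning the extracted competitor data into a table that is easy to compare
- Using GPT-4o to generate in-depth market analysis and recommendations

In practice, the search engine + AI crawler combination still misses a lot of information, and the quality of the collected data is mediocre. This is the main area the project needs to improve.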
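In the full source code, `generate_comparison_report` builds each table row by cutting long text to 100 characters (plus `...`) and joining the first three features and tech-stack items. One fragile spot: `len(competitor.get('pricing', ''))` raises `TypeError` when the key exists but holds `None`. A pure-Python sketch of the row builder with that case guarded; `truncate`, `join_items` and `build_row` are hypothetical names, and the returned dicts could be passed to `pd.DataFrame(...)` as the original does:

```python
from typing import Any, Dict, List


def truncate(text: Any, limit: int = 100) -> str:
    """Shorten text to `limit` characters plus '...', treating None or empty as 'N/A'."""
    if not text:
        return "N/A"
    text = str(text)
    return text[:limit] + "..." if len(text) > limit else text


def join_items(items: Any, count: int = 3) -> str:
    """Join the first `count` list items, or return 'N/A' when the list is missing or empty."""
    return ", ".join(map(str, items[:count])) if items else "N/A"


def build_row(competitor: Dict[str, Any]) -> Dict[str, str]:
    """One table row per competitor, with the same columns as the original table."""
    name = competitor.get("company_name") or "N/A"
    url = competitor.get("competitor_url") or "N/A"
    return {
        "Company": f"{name} ({url})",
        "Pricing": truncate(competitor.get("pricing")),
        "Key Features": join_items(competitor.get("key_features")),
        "Tech Stack": join_items(competitor.get("tech_stack")),
        "Marketing Focus": truncate(competitor.get("marketing_focus")),
        "Customer Feedback": truncate(competitor.get("customer_feedback")),
    }


rows: List[Dict[str, str]] = [
    build_row({"company_name": "Acme", "pricing": None, "key_features": ["API", "SSO", "Audit", "SLA"]})
]
print(rows[0]["Key Features"])  # API, SSO, Audit
```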

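2.3 Agent System

```python
# Constructor arguments omitted; see the full source code
firecrawl_agent = Agent(...)
analysis_agent = Agent(...)
comparison_agent = Agent(...)
```

The app defines three different AI agents:

- `firecrawl_agent`: handles web-scraping tasks
- `analysis_agent`: generates the analysis report
- `comparison_agent`: compares competitor data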

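All three agents are defined, but the rest of the code barely uses them: `analysis_agent` is called exactly once, at the very end, and the other two are never used.

The structured data collected earlier is inserted into the prompt, and the analysis agent is run: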

```python
import json

formatted_data = json.dumps(competitor_data, indent=2)  # competitor records as JSON text

report = analysis_agent.run(f"""Analyze the following competitor data in JSON format and identify market opportunities to improve my own company:

{formatted_data}

Tasks:
1. Identify market gaps and opportunities based on competitor offerings
2. Analyze competitor weaknesses that we can capitalize on
3. Recommend unique features or capabilities we should develop
4. Suggest pricing and positioning strategies to gain competitive advantage
5. Outline specific growth opportunities in underserved market segments
6. Provide actionable recommendations for product development and go-to-market strategy

Focus on finding opportunities where we can differentiate and do better than competitors.
Highlight any unmet customer needs or pain points we can address.
""")
```

The original plan was probably to give each agent its own task, but the implementation ended up calling `FirecrawlApp` directly and using plain functions instead. That simplified things, but the unused agent code was never cleaned up.
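One detail matters when the target is a Chinese company such as 艾可蓝集团: `json.dumps(competitor_data, indent=2)` keeps the default `ensure_ascii=True`, so any Chinese text in the extracted records reaches the model as `\uXXXX` escape sequences, which are harder to read and likely cost more tokens. A minimal sketch of the serialization step with `ensure_ascii=False`; `format_competitor_data` is a hypothetical helper, not part of the original code:

```python
import json
from typing import Any, Dict, List


def format_competitor_data(competitor_data: List[Dict[str, Any]]) -> str:
    """Serialize competitor records for the analysis prompt, keeping non-ASCII text as-is."""
    return json.dumps(competitor_data, indent=2, ensure_ascii=False)


records = [{"company_name": "艾可蓝", "pricing": "N/A"}]

print(json.dumps(records))              # Chinese name appears as escape sequences
print(format_competitor_data(records))  # Chinese name appears unchanged
```

Both strings decode to the same data with `json.loads`; only the text the model sees differs.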

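3. Workflow

The app's end-to-end workflow:

- User input:
  - Provide the company URL or a description
  - Enter the required API keys
- Fetch competitor URLs:
  - Find similar companies with the selected search engine (Perplexity or Exa)
- Extract competitor information:
  - For each competitor URL, extract structured data with Firecrawl
  - The data covers company name, pricing, features, tech stack, and more
- Generate reports:
  - Build a visual comparison table
  - Generate market analysis and recommendations with GPT-4o
- Display results:
  - Show the analysis in the Streamlit interface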
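In the full source code, the fetch and extract steps run inline under `if st.button("Analyze Competitors"):`, counting successful and failed extractions as they go. That control flow can be sketched independently of Streamlit and the APIs by passing the search and extraction steps in as functions. `run_pipeline` and the stub services below are hypothetical, for illustration only:

```python
from typing import Callable, Dict, List, Optional, Tuple

Record = Dict[str, str]


def run_pipeline(
    find_urls: Callable[[], List[str]],
    extract: Callable[[str], Optional[Record]],
) -> Tuple[List[Record], List[str]]:
    """Find competitor URLs, extract each one, and split results into successes and failures."""
    records: List[Record] = []
    failed: List[str] = []
    for url in find_urls():
        info = extract(url)  # None signals a failed extraction, as in extract_competitor_info
        if info is None:
            failed.append(url)
        else:
            records.append(info)
    return records, failed


# Hypothetical stand-ins for the search API and Firecrawl.
def fake_find_urls() -> List[str]:
    return ["https://a.example", "https://b.example", "https://c.example"]


def fake_extract(url: str) -> Optional[Record]:
    return None if url.startswith("https://b.") else {"competitor_url": url}


records, failed = run_pipeline(fake_find_urls, fake_extract)
print(f"Successfully analyzed {len(records)}/{len(records) + len(failed)} competitors")
# → Successfully analyzed 2/3 competitors
print("Failed:", failed)  # → Failed: ['https://b.example']
```

In the app, `find_urls` would correspond to `get_competitor_urls(url=url, description=description)` and `extract` to `extract_competitor_info`; `failed` carries the same information as the original `failed_extractions` counter, plus which URLs failed.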

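4. Key Technical Points

4.1 Multi-API Integration

The app integrates several API services:

- Exa/Perplexity: finding similar companies
- Firecrawl: extracting web content
- OpenAI: generating the analysis report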

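4.2 Structured Data Extraction

Pydantic models define the data structure, so the extracted information always has a consistent format:

```python
from pydantic import BaseModel, Field


class CompetitorDataSchema(BaseModel):
    company_name: str = Field(...)
    pricing: str = Field(...)
    ...
```

4.3 Error Handling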

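The code has error handling in several places, so a failing API call does not crash the app:

```python
# Pattern used inside get_competitor_urls (excerpt)
try:
    ...  # API call
except Exception as e:
    st.error(f"Error fetching...")
    return []
```

4.4 User Experience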

- `st.spinner()` shows a loading indicator
- Detailed error messages
- Multiple input options (URL or description)
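The error handling in 4.3 gives up after the first failure, while the app's own fallback message lists API rate limits as a likely cause and suggests trying again in a few minutes. One possible refinement, not part of the original code, is to retry transient failures automatically with exponential backoff. A hedged sketch; `with_retries` is a hypothetical helper, and its delays are arbitrary defaults:

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(
    call: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 1.0,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying on `retry_on` errors with delays of base_delay * 2**n.

    After the last attempt the exception is re-raised unchanged, so an existing
    try/except around the call (st.error + empty result) still handles it.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: propagate the last error
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")


# Simulated flaky API: fails twice (e.g. rate-limited), then succeeds.
calls = {"count": 0}
delays = []


def flaky_api() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("simulated rate limit")
    return "ok"


print(with_retries(flaky_api, sleep=delays.append))  # ok
print(delays)  # [1.0, 2.0]
```

In `get_competitor_urls` it could wrap a small function that runs `requests.post(...)` followed by `response.raise_for_status()`, so that HTTP errors such as 429 also count as failures; the existing `except` block then runs only once every attempt has failed.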

Source code:

```python
import streamlit as st
from exa_py import Exa
from agno.agent import Agent
from agno.tools.firecrawl import FirecrawlTools
from agno.models.openai import OpenAIChat
from agno.tools.duckduckgo import DuckDuckGoTools
import pandas as pd
import requests
from firecrawl import FirecrawlApp
from pydantic import BaseModel, Field
from typing import List, Optional
import json

# Streamlit UI
st.set_page_config(page_title="AI Competitor Intelligence Agent Team", layout="wide")

# Sidebar for API keys
st.sidebar.title("API Keys")
openai_api_key = st.sidebar.text_input("OpenAI API Key", type="password")
firecrawl_api_key = st.sidebar.text_input("Firecrawl API Key", type="password")

# Add search engine selection before API keys
search_engine = st.sidebar.selectbox(
    "Select Search Endpoint",
    options=["Perplexity AI - Sonar Pro", "Exa AI"],
    help="Choose which AI service to use for finding competitor URLs"
)

# Show relevant API key input based on selection
if search_engine == "Perplexity AI - Sonar Pro":
    perplexity_api_key = st.sidebar.text_input("Perplexity API Key", type="password")
    # Store API keys in session state
    if openai_api_key and firecrawl_api_key and perplexity_api_key:
        st.session_state.openai_api_key = openai_api_key
        st.session_state.firecrawl_api_key = firecrawl_api_key
        st.session_state.perplexity_api_key = perplexity_api_key
    else:
        st.sidebar.warning("Please enter all required API keys to proceed.")
else:  # Exa AI
    exa_api_key = st.sidebar.text_input("Exa API Key", type="password")
    # Store API keys in session state
    if openai_api_key and firecrawl_api_key and exa_api_key:
        st.session_state.openai_api_key = openai_api_key
        st.session_state.firecrawl_api_key = firecrawl_api_key
        st.session_state.exa_api_key = exa_api_key
    else:
        st.sidebar.warning("Please enter all required API keys to proceed.")

# Main UI
st.title("🧲 AI Competitor Intelligence Agent Team")
st.info(
    """This app helps businesses analyze their competitors by extracting structured data from competitor websites and generating insights using AI.
- Provide a **URL** or a **description** of your company.
- The app will fetch competitor URLs, extract relevant information, and generate a detailed analysis report."""
)
st.success("For better results, provide both URL and a 5-6 word description of your company!")

# Input fields for URL and description
url = st.text_input("Enter your company URL :")
description = st.text_area("Enter a description of your company (if URL is not available):")

# Initialize API keys and tools
if "openai_api_key" in st.session_state and "firecrawl_api_key" in st.session_state:
    if (search_engine == "Perplexity AI - Sonar Pro" and "perplexity_api_key" in st.session_state) or \
       (search_engine == "Exa AI" and "exa_api_key" in st.session_state):
        # Initialize Exa only if selected
        if search_engine == "Exa AI":
            exa = Exa(api_key=st.session_state.exa_api_key)

        firecrawl_tools = FirecrawlTools(
            api_key=st.session_state.firecrawl_api_key,
            scrape=False,
            crawl=True,
            limit=5
        )

        firecrawl_agent = Agent(
            model=OpenAIChat(id="gpt-4o", api_key=st.session_state.openai_api_key),
            tools=[firecrawl_tools, DuckDuckGoTools()],
            show_tool_calls=True,
            markdown=True
        )

        analysis_agent = Agent(
            model=OpenAIChat(id="gpt-4o", api_key=st.session_state.openai_api_key),
            show_tool_calls=True,
            markdown=True
        )

        # New agent for comparing competitor data
        comparison_agent = Agent(
            model=OpenAIChat(id="gpt-4o", api_key=st.session_state.openai_api_key),
            show_tool_calls=True,
            markdown=True
        )

        def get_competitor_urls(url: str = None, description: str = None) -> list[str]:
            if not url and not description:
                raise ValueError("Please provide either a URL or a description.")

            if search_engine == "Perplexity AI - Sonar Pro":
                perplexity_url = "https://api.perplexity.ai/chat/completions"

                content = "Find me 3 competitor company URLs similar to the company with "
                if url and description:
                    content += f"URL: {url} and description: {description}"
                elif url:
                    content += f"URL: {url}"
                else:
                    content += f"description: {description}"
                content += ". ONLY RESPOND WITH THE URLS, NO OTHER TEXT."

                payload = {
                    "model": "sonar-pro",
                    "messages": [
                        {
                            "role": "system",
                            "content": "Be precise and only return 3 company URLs ONLY."
                        },
                        {
                            "role": "user",
                            "content": content
                        }
                    ],
                    "max_tokens": 1000,
                    "temperature": 0.2,
                }
                headers = {
                    "Authorization": f"Bearer {st.session_state.perplexity_api_key}",
                    "Content-Type": "application/json"
                }

                try:
                    response = requests.post(perplexity_url, json=payload, headers=headers)
                    response.raise_for_status()
                    urls = response.json()['choices'][0]['message']['content'].strip().split('\n')
                    return [url.strip() for url in urls if url.strip()]
                except Exception as e:
                    st.error(f"Error fetching competitor URLs from Perplexity: {str(e)}")
                    return []

            else:  # Exa AI
                try:
                    if url:
                        result = exa.find_similar(
                            url=url,
                            num_results=3,
                            exclude_source_domain=True,
                            category="company"
                        )
                    else:
                        result = exa.search(
                            description,
                            type="neural",
                            category="company",
                            use_autoprompt=True,
                            num_results=3
                        )
                    return [item.url for item in result.results]
                except Exception as e:
                    st.error(f"Error fetching competitor URLs from Exa: {str(e)}")
                    return []

        class CompetitorDataSchema(BaseModel):
            company_name: str = Field(description="Name of the company")
            pricing: str = Field(description="Pricing details, tiers, and plans")
            key_features: List[str] = Field(description="Main features and capabilities of the product/service")
            tech_stack: List[str] = Field(description="Technologies, frameworks, and tools used")
            marketing_focus: str = Field(description="Main marketing angles and target audience")
            customer_feedback: str = Field(description="Customer testimonials, reviews, and feedback")

        def extract_competitor_info(competitor_url: str) -> Optional[dict]:
            try:
                # Initialize FirecrawlApp with API key
                app = FirecrawlApp(api_key=st.session_state.firecrawl_api_key)

                # Add wildcard to crawl subpages
                url_pattern = f"{competitor_url}/*"

                extraction_prompt = """
                Extract detailed information about the company's offerings, including:
                - Company name and basic information
                - Pricing details, plans, and tiers
                - Key features and main capabilities
                - Technology stack and technical details
                - Marketing focus and target audience
                - Customer feedback and testimonials

                Analyze the entire website content to provide comprehensive information for each field.
                """

                response = app.extract(
                    [url_pattern],
                    prompt=extraction_prompt,
                    schema=CompetitorDataSchema.model_json_schema()
                )

                # Handle ExtractResponse object
                try:
                    if hasattr(response, 'success') and response.success:
                        if hasattr(response, 'data') and response.data:
                            extracted_info = response.data

                            # Create JSON structure
                            competitor_json = {
                                "competitor_url": competitor_url,
                                "company_name": extracted_info.get('company_name', 'N/A') if isinstance(extracted_info, dict) else getattr(extracted_info, 'company_name', 'N/A'),
                                "pricing": extracted_info.get('pricing', 'N/A') if isinstance(extracted_info, dict) else getattr(extracted_info, 'pricing', 'N/A'),
                                "key_features": extracted_info.get('key_features', [])[:5] if isinstance(extracted_info, dict) and extracted_info.get('key_features') else getattr(extracted_info, 'key_features', [])[:5] if hasattr(extracted_info, 'key_features') else ['N/A'],
                                "tech_stack": extracted_info.get('tech_stack', [])[:5] if isinstance(extracted_info, dict) and extracted_info.get('tech_stack') else getattr(extracted_info, 'tech_stack', [])[:5] if hasattr(extracted_info, 'tech_stack') else ['N/A'],
                                "marketing_focus": extracted_info.get('marketing_focus', 'N/A') if isinstance(extracted_info, dict) else getattr(extracted_info, 'marketing_focus', 'N/A'),
                                "customer_feedback": extracted_info.get('customer_feedback', 'N/A') if isinstance(extracted_info, dict) else getattr(extracted_info, 'customer_feedback', 'N/A')
                            }
                            return competitor_json
                        else:
                            return None
                    else:
                        return None
                except Exception as response_error:
                    return None

            except Exception as e:
                return None

        def generate_comparison_report(competitor_data: list) -> None:
            # Create DataFrame directly from competitor data
            if not competitor_data:
                st.error("No competitor data available for comparison")
                return

            # Prepare data for DataFrame
            table_data = []
            for competitor in competitor_data:
                row = {
                    'Company': f"{competitor.get('company_name', 'N/A')} ({competitor.get('competitor_url', 'N/A')})",
                    'Pricing': competitor.get('pricing', 'N/A')[:100] + '...' if len(competitor.get('pricing', '')) > 100 else competitor.get('pricing', 'N/A'),
                    'Key Features': ', '.join(competitor.get('key_features', [])[:3]) if competitor.get('key_features') else 'N/A',
                    'Tech Stack': ', '.join(competitor.get('tech_stack', [])[:3]) if competitor.get('tech_stack') else 'N/A',
                    'Marketing Focus': competitor.get('marketing_focus', 'N/A')[:100] + '...' if len(competitor.get('marketing_focus', '')) > 100 else competitor.get('marketing_focus', 'N/A'),
                    'Customer Feedback': competitor.get('customer_feedback', 'N/A')[:100] + '...' if len(competitor.get('customer_feedback', '')) > 100 else competitor.get('customer_feedback', 'N/A')
                }
                table_data.append(row)

            # Create DataFrame
            df = pd.DataFrame(table_data)

            # Display the table
            st.subheader("Competitor Comparison")
            st.dataframe(df, use_container_width=True)

            # Also show raw data for debugging
            with st.expander("View Raw Competitor Data"):
                st.json(competitor_data)

        def generate_analysis_report(competitor_data: list):
            # Format the competitor data for the prompt
            formatted_data = json.dumps(competitor_data, indent=2)
            print("Analysis Data:", formatted_data)  # For debugging

            report = analysis_agent.run(
                f"""Analyze the following competitor data in JSON format and identify market opportunities to improve my own company:

                {formatted_data}

                Tasks:
                1. Identify market gaps and opportunities based on competitor offerings
                2. Analyze competitor weaknesses that we can capitalize on
                3. Recommend unique features or capabilities we should develop
                4. Suggest pricing and positioning strategies to gain competitive advantage
                5. Outline specific growth opportunities in underserved market segments
                6. Provide actionable recommendations for product development and go-to-market strategy

                Focus on finding opportunities where we can differentiate and do better than competitors.
                Highlight any unmet customer needs or pain points we can address.
                """
            )
            return report.content

        # Run analysis when the user clicks the button
        if st.button("Analyze Competitors"):
            if url or description:
                with st.spinner("Fetching competitor URLs..."):
                    competitor_urls = get_competitor_urls(url=url, description=description)
                    st.write(f"Found {len(competitor_urls)} competitor URLs")

                if not competitor_urls:
                    st.error("No competitor URLs found!")
                    st.stop()

                competitor_data = []
                successful_extractions = 0
                failed_extractions = 0

                for i, comp_url in enumerate(competitor_urls):
                    with st.spinner(f"Analyzing Competitor {i+1}/{len(competitor_urls)}: {comp_url}"):
                        competitor_info = extract_competitor_info(comp_url)
                        if competitor_info is not None:
                            competitor_data.append(competitor_info)
                            successful_extractions += 1
                            st.success(f"✓ Successfully analyzed {comp_url}")
                        else:
                            failed_extractions += 1
                            st.error(f"✗ Failed to analyze {comp_url}")

                if competitor_data:
                    st.success(f"Successfully analyzed {successful_extractions}/{len(competitor_urls)} competitors!")

                    # Generate and display comparison report
                    with st.spinner("Generating comparison table..."):
                        generate_comparison_report(competitor_data)

                    # Generate and display final analysis report
                    with st.spinner("Generating analysis report..."):
                        analysis_report = generate_analysis_report(competitor_data)
                        st.subheader("Competitor Analysis Report")
                        st.markdown(analysis_report)

                    st.success("Analysis complete!")
                else:
                    st.error("Could not extract data from any competitor URLs")
                    st.write("This might be due to:")
                    st.write("- API rate limits (try again in a few minutes)")
                    st.write("- Website access issues (some sites block automated access)")
                    st.write("- Invalid URLs (try with a different company description)")
            else:
                st.error("Please provide either a URL or a description.")
```