Streaming output in LangChain LangGraph: stream_mode

I. Supported stream modes

Reference: the official LangGraph documentation.

stream_mode accepts values, updates, custom, messages, and debug.

values:

Emits the full state after every step.

updates:

Emits only the state keys that a node changed, keyed by the node name.

messages:

Emits only the output of LLM calls made inside a node (for example, generate_poem).

debug:

Emits more detailed information about each node.

custom:

Streams custom data from inside a graph node.

    from langgraph.config import get_stream_writer

    # Call these two lines inside a node function
    writer = get_stream_writer()
    writer({"custom_key": "Generating custom data inside node"})

    from typing import TypedDict

    from langgraph.graph import StateGraph, START, END

    # Local module that exposes a chat model instance (here a Qwen model)
    from qwen_model import model


    class State(TypedDict):
        topic: str
        joke: str


    def refine_topic(state: State):
        return {"topic": state["topic"] + " and cats"}


    def generate_joke(state: State):
        return {"joke": f"This is a joke about {state['topic']}"}


    def generate_poem(state: State):
        # Prompt: "Generate a short, simple poem"
        topic = model.invoke("生成一首简单的小诗").content
        return {"topic": topic}


    graph = (
        StateGraph(State)
        .add_node(refine_topic)
        .add_node(generate_joke)
        .add_node(generate_poem)
        .add_edge(START, "refine_topic")
        .add_edge("refine_topic", "generate_joke")
        .add_edge("generate_joke", "generate_poem")
        .add_edge("generate_poem", END)
        .compile()
    )

    # The stream() method returns an iterator that yields streamed outputs
    for stream_event in graph.stream(
        {"topic": "ice cream"},
        # Because stream_mode is a list, each item is a (mode, chunk) tuple.
        # Replace "values" with "updates", "messages", "debug", or "custom"
        # to try the other modes described above.
        stream_mode=["values"],
    ):
        stream_mode, event = stream_event
        print(f"stream_mode:{stream_mode},chunk={event}")
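When stream_mode is a list, every streamed item is a (mode, chunk) tuple, so code that requests several modes usually branches on the mode name. The following is a minimal sketch of that dispatch step using hand-written sample events rather than a real graph; the handler names and the HANDLERS table are hypothetical and not part of LangGraph.

```python
from typing import Any, Callable


# Hypothetical handlers, one per stream mode; the names are illustrative only.
def on_values(chunk: dict) -> str:
    return f"full state: {chunk}"


def on_updates(chunk: dict) -> str:
    # An "updates" chunk maps a node name to the keys that node returned.
    parts = [f"{node} -> {delta}" for node, delta in chunk.items()]
    return "; ".join(parts)


HANDLERS: dict[str, Callable[[Any], str]] = {
    "values": on_values,
    "updates": on_updates,
}


def dispatch(stream_event: tuple[str, Any]) -> str:
    """Route one (mode, chunk) tuple to the handler registered for its mode."""
    mode, chunk = stream_event
    handler = HANDLERS.get(mode)
    if handler is None:
        return f"unhandled mode {mode!r}"
    return handler(chunk)


# Hand-written events shaped like items from a multi-mode stream.
sample_events = [
    ("values", {"topic": "ice cream"}),
    ("updates", {"refine_topic": {"topic": "ice cream and cats"}}),
    ("values", {"topic": "ice cream and cats"}),
]

for ev in sample_events:
    print(dispatch(ev))
```

Keeping the handlers in a table makes it easy to start consuming another mode later without touching the loop that reads the stream.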

values:

Prints the full state after each node. The first chunk is the input received at START.

stream_mode:values,chunk={'topic': 'ice cream'}

stream_mode:values,chunk={'topic': 'ice cream and cats'}

stream_mode:values,chunk={'topic': 'ice cream and cats', 'joke': 'This is a joke about ice cream and cats'}

stream_mode:values,chunk={'topic': '春风拂柳绿, \n花开映水清。 \n燕语呢喃起, \n轻风送我行。', 'joke': 'This is a joke about ice cream and cats'}

updates:

Emits only the state keys that a node changed, keyed by the node name.

stream_mode:updates,chunk={'refine_topic': {'topic': 'ice cream and cats'}}

stream_mode:updates,chunk={'generate_joke': {'joke': 'This is a joke about ice cream and cats'}}

stream_mode:updates,chunk={'generate_poem': {'topic': '春风拂柳青, \n花开映水清。 \n燕语呢喃起, \n山色入画屏。'}}

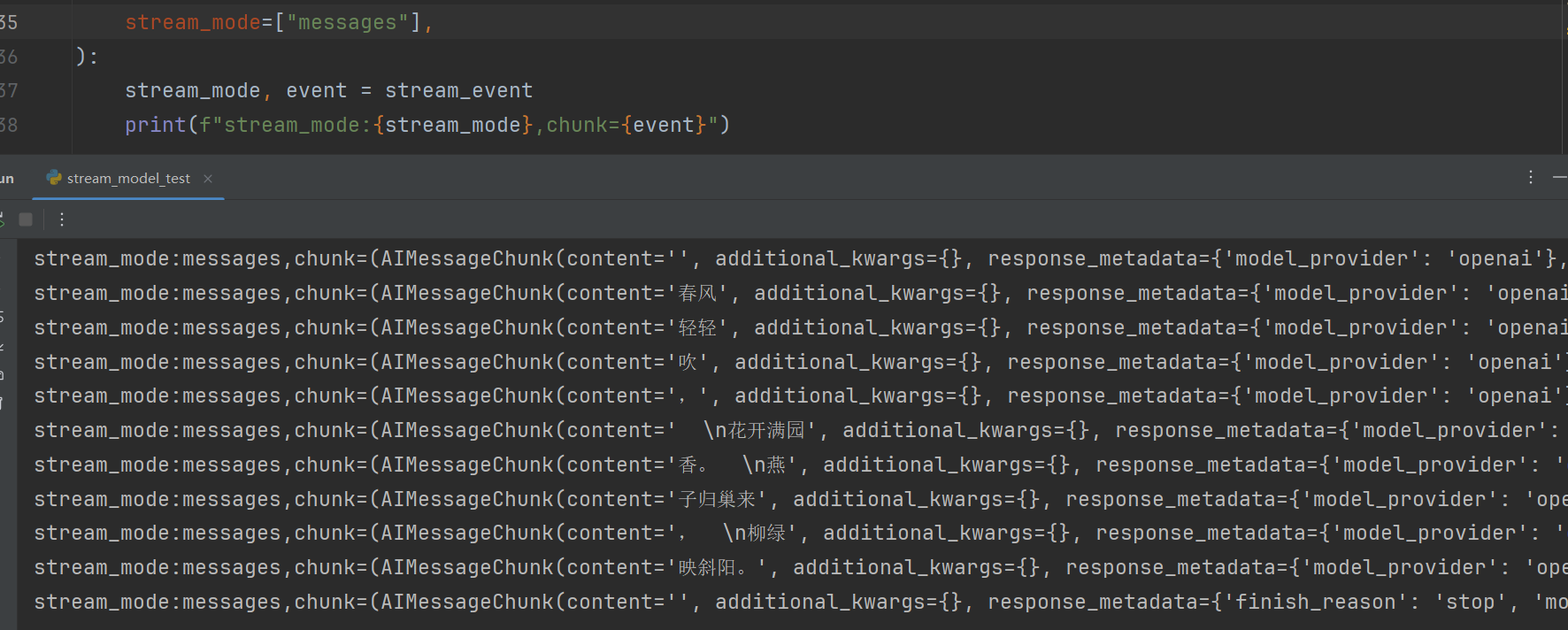
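The difference between the values and updates outputs can be reproduced without LangGraph. The sketch below is a simplified stand-in for a linear graph, not LangGraph's own implementation: run_linear is a hypothetical helper, and it assumes each node's return value simply overwrites the matching state keys. It uses only the two deterministic nodes from the example.

```python
from typing import Callable, Iterator

State = dict
Node = Callable[[State], dict]


def refine_topic(state: State) -> dict:
    return {"topic": state["topic"] + " and cats"}


def generate_joke(state: State) -> dict:
    return {"joke": f"This is a joke about {state['topic']}"}


def run_linear(nodes: list[Node], initial: State, mode: str) -> Iterator[dict]:
    """Run nodes in order and yield chunks shaped like the given stream mode."""
    state = dict(initial)
    if mode == "values":
        yield dict(state)  # the input state is emitted first
    for node in nodes:
        delta = node(state)  # what the node returned
        state.update(delta)  # merge it into the running state
        if mode == "values":
            yield dict(state)  # full state after this step
        elif mode == "updates":
            yield {node.__name__: delta}  # only this node's changes


nodes = [refine_topic, generate_joke]
values_chunks = list(run_linear(nodes, {"topic": "ice cream"}, "values"))
updates_chunks = list(run_linear(nodes, {"topic": "ice cream"}, "updates"))

for chunk in values_chunks:
    print("values:", chunk)
for chunk in updates_chunks:
    print("updates:", chunk)
```

The values stream grows with every step because it repeats the whole state, while the updates stream carries just the delta, which is usually the cheaper choice when the state is large.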

messages:

Emits only the output of LLM calls made inside a node (for example, generate_poem).

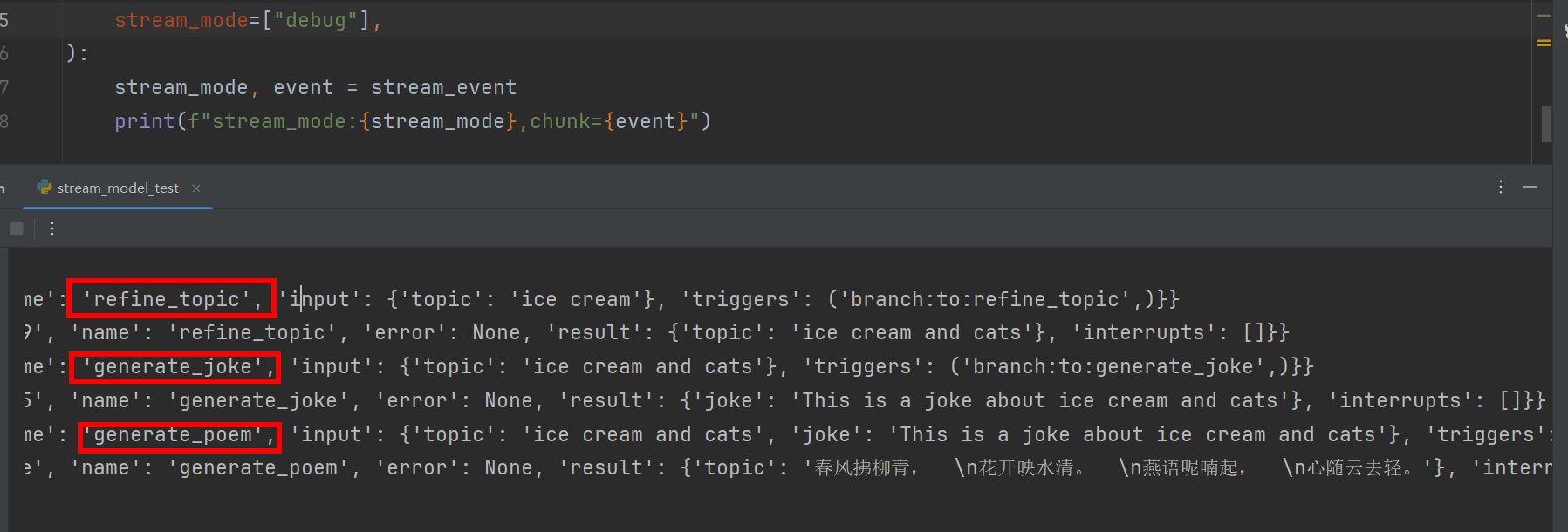
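In messages mode each chunk is a (message_chunk, metadata) tuple, and the metadata records which node made the LLM call under the langgraph_node key. A minimal sketch of collecting the tokens from one node follows; FakeChunk is a hypothetical stand-in for the real message chunk object (only its content attribute is used), and the sample events are hand-written.

```python
from dataclasses import dataclass


@dataclass
class FakeChunk:
    """Hypothetical stand-in for an LLM message chunk; only .content is used."""

    content: str


def collect_tokens(events, node_name: str) -> str:
    """Join token text from (message_chunk, metadata) tuples for one node."""
    tokens = []
    for msg, metadata in events:
        if metadata.get("langgraph_node") != node_name:
            continue  # token came from a different node
        if msg.content:  # skip empty chunks
            tokens.append(msg.content)
    return "".join(tokens)


# Hand-written events shaped like "messages" mode output.
sample = [
    (FakeChunk("春风"), {"langgraph_node": "generate_poem"}),
    (FakeChunk(""), {"langgraph_node": "generate_poem"}),
    (FakeChunk("拂柳绿"), {"langgraph_node": "generate_poem"}),
    (FakeChunk("ignored"), {"langgraph_node": "other_node"}),
]

print(collect_tokens(sample, "generate_poem"))
```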

debug:

Emits more detailed information about each node.

II. A streaming output API for the frontend

Use FastAPI's StreamingResponse with the text/event-stream media type. message_generator is an async generator that yields the text to send.
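With text/event-stream, each message is one or more "field: value" lines followed by a blank line. The sketch below shows one way to frame a JSON payload; format_sse is a hypothetical helper, not part of FastAPI. Serializing with json.dumps matters here because LLM output such as the poem above contains newlines, and a raw newline inside a data line would split the message.

```python
import json
from typing import Optional


def format_sse(payload: dict, event: Optional[str] = None) -> str:
    """Frame one JSON payload as a single Server-Sent Events message."""
    lines = []
    if event is not None:
        lines.append(f"event: {event}")
    # json.dumps escapes newlines inside strings, so the payload stays on one line.
    lines.append(f"data: {json.dumps(payload, ensure_ascii=False)}")
    # A blank line terminates the message.
    return "\n".join(lines) + "\n\n"


frame = format_sse({"type": "token", "content": "line one\nline two"})
print(repr(frame))
```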

    import json
    from collections.abc import AsyncGenerator

    from fastapi import APIRouter
    from fastapi.responses import StreamingResponse
    from langchain_core.messages import HumanMessage
    from pydantic import BaseModel, Field

    # `agent` is the compiled LangGraph graph; it is assumed to be defined or imported elsewhere.


    class StreamInput(BaseModel):
        """Basic user input for the agent."""

        message: str = Field(
            description="User input to the agent.",
            examples=["What is the weather in Tokyo?"],
        )
        thread_id: str | None = Field(
            description="Thread ID to persist and continue a multi-turn conversation.",
            default=None,
            examples=["847c6285-8fc9-4560-a83f-4e6285809254"],
        )


    router = APIRouter()


    @router.post("/stream", response_class=StreamingResponse)
    async def stream(user_input: StreamInput) -> StreamingResponse:
        return StreamingResponse(
            message_generator(user_input),
            media_type="text/event-stream",
        )


    async def message_generator(user_input: StreamInput) -> AsyncGenerator[str, None]:
        user_msg = {"messages": [HumanMessage(content=user_input.message)]}
        kwargs = {"input": user_msg}
        # Run the graph
        async for stream_event in agent.astream(
            **kwargs, stream_mode=["updates", "messages", "custom"]
        ):
            stream_mode, event = stream_event
            if stream_mode == "messages":
                # In "messages" mode each event is a (message_chunk, metadata) tuple
                msg, metadata = event
                content = msg.content
                if content:
                    yield f"data: {json.dumps({'type': 'token', 'content': content})}\n\n"
        # Tell the client that the stream has finished
        yield "data: [DONE]\n\n"
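On the receiving side, a client reads the response line by line, decodes each data payload, and stops at the [DONE] sentinel (a convention of this endpoint, not part of the SSE format). The sketch below parses a hand-written response body instead of a live HTTP stream; iter_tokens is a hypothetical helper name.

```python
import json
from typing import Iterable, Iterator


def iter_tokens(lines: Iterable[str]) -> Iterator[str]:
    """Yield token text from SSE lines until the [DONE] sentinel arrives."""
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line.startswith("data:"):
            continue  # blank separators, comments, or other fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break
        message = json.loads(data)
        if message.get("type") == "token":
            yield message["content"]


# Hand-written body shaped like the output of the /stream endpoint.
body = (
    'data: {"type": "token", "content": "Hello"}\n\n'
    'data: {"type": "token", "content": ", world"}\n\n'
    "data: [DONE]\n\n"
)
text = "".join(iter_tokens(body.splitlines()))
print(text)
```

With a real HTTP client the same function can be fed the response's line iterator, so the parsing logic stays independent of the transport.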