Debugging the Transformers source code in VS Code

- First, confirm that Transformers has not already been installed with `pip install` in your conda env (uninstall it first if it has);

- Download the source code and install it from the local clone:

git clone https://github.com/huggingface/transformers.git

cd transformers# pip

pip install '.[torch]'

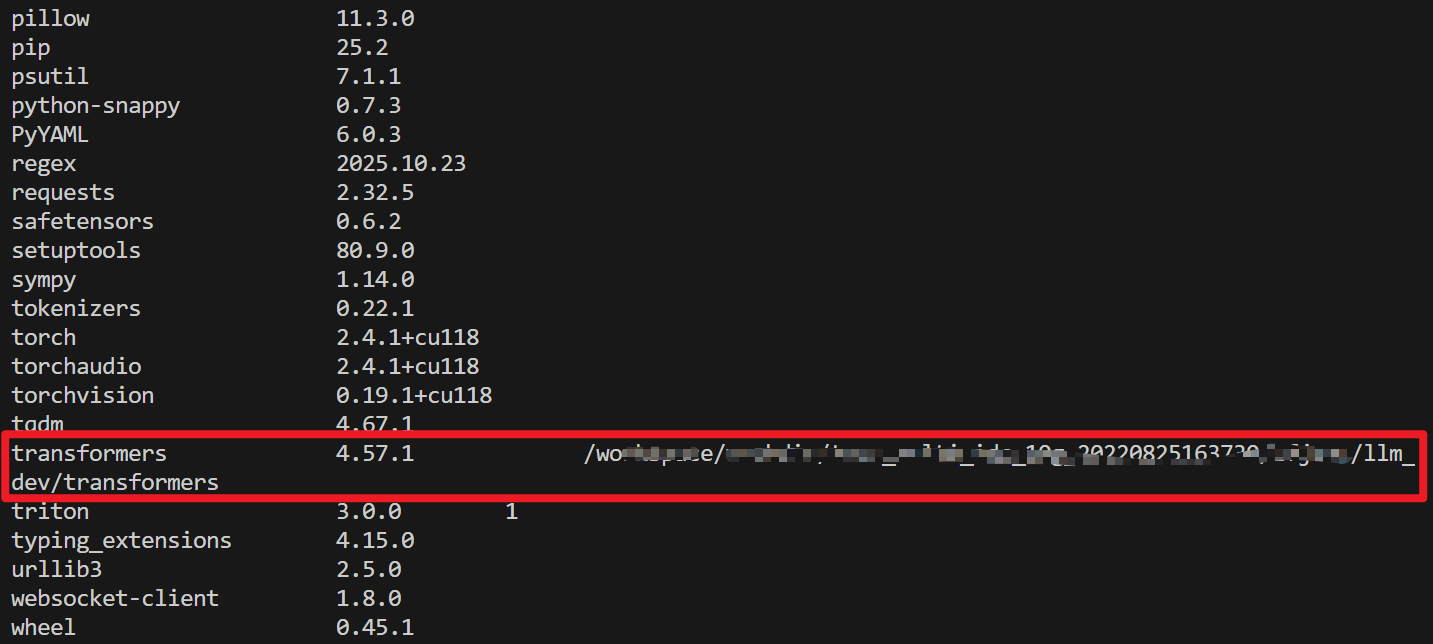

安装后通过pip list 可以看到是指向你的本地目录:
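Besides `pip list`, the same check can be done from Python. The following is a minimal sketch, not part of the original setup: the helper names `package_location` and `is_inside` are made up for illustration, and the script assumes it is run from the root of the cloned repository.

```python
import importlib.util
from pathlib import Path
from typing import Optional


def package_location(name: str) -> Optional[Path]:
    """Return the directory a top-level package would be imported from, or None."""
    spec = importlib.util.find_spec(name)  # locates the package without importing it
    if spec is None or spec.origin is None:
        return None
    return Path(spec.origin).resolve().parent


def is_inside(path: Path, root: Path) -> bool:
    """True if `path` is `root` itself or lies somewhere below it."""
    path, root = path.resolve(), root.resolve()
    return path == root or root in path.parents


if __name__ == "__main__":
    location = package_location("transformers")
    if location is None:
        print("transformers is not installed in this environment")
    else:
        # Assumption: the current working directory is the cloned repository.
        print("transformers is imported from:", location)
        print("inside the current directory:", is_inside(location, Path.cwd()))
```

If the second line prints `False`, the interpreter is picking up a copy somewhere else (for example in `site-packages`), and breakpoints set in the clone would not be hit.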

- Self-test demo; placing it in the repository root is sufficient:

    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_name = "Qwen/Qwen3-0.6B"

    # load the tokenizer and the model
    tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True, use_fast=False)
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        trust_remote_code=True,
        dtype="auto",
        device_map="auto",
    )

    # prepare the model input
    prompt = "Give me a short introduction to large language model."
    messages = [
        {"role": "user", "content": prompt}
    ]
    text = tokenizer.apply_chat_template(
        messages,
        tokenize=False,
        add_generation_prompt=True,
    )
    model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

    # conduct text completion
    generated_ids = model.generate(
        **model_inputs,
        max_new_tokens=512,
    )
    output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

    content = tokenizer.decode(output_ids, skip_special_tokens=True)
    print("content:", content)
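To decide where to put a breakpoint, it helps to know which source file defines the class or method you want to step through. The sketch below uses only the standard `inspect` module. The helper name `source_location` is hypothetical, and the example is demonstrated on a standard-library class so that it runs without downloading a model.

```python
import inspect
import json


def source_location(obj):
    """Return (file, first_line) where a class, function, or method is defined."""
    # Follow functools.wraps-style decorators back to the original definition.
    target = inspect.unwrap(obj) if callable(obj) else obj
    file = inspect.getsourcefile(target)
    _, first_line = inspect.getsourcelines(target)
    return file, first_line


if __name__ == "__main__":
    # Stand-in example using the standard library.
    print(source_location(json.JSONDecoder))
    # In the demo above one would instead call, after loading the model:
    #   source_location(type(model))
    #   source_location(model.generate)
```

Open the reported file in VS Code and set the breakpoint at or below the reported line.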

- Debugging in VS Code: debug the demo the same way you would any other Python code;

-

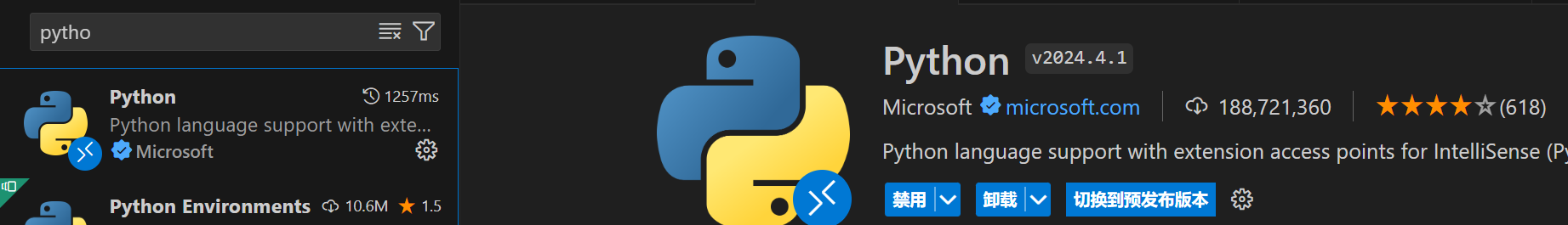

安装插件

-

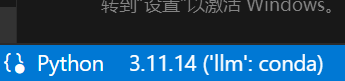

vscode 右下角选择你的env,如下

-

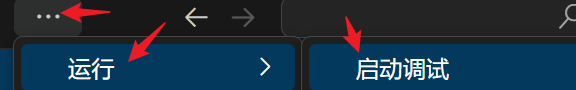

点击上方的三个点-》运行-》启动调试
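If the debugger steps over library code instead of entering it, a launch configuration with `justMyCode` disabled is a common remedy. The original notes do not use one, so treat the following as an optional sketch. It assumes the current Python Debugger extension (debug type `debugpy`), and whether it is needed at all for an editable install has not been verified here. The function names are hypothetical.

```python
import json
from pathlib import Path


def build_launch_config(program: str = "${file}") -> dict:
    """Build a VS Code launch configuration that also steps into library code."""
    return {
        "version": "0.2.0",
        "configurations": [
            {
                "name": "Python: current file (step into libraries)",
                "type": "debugpy",  # assumption: Python Debugger extension is installed
                "request": "launch",
                "program": program,  # "${file}" means the file open in the editor
                "console": "integratedTerminal",
                "justMyCode": False,  # allow stepping into installed packages
            }
        ],
    }


def write_launch_config(workspace: Path, program: str = "${file}") -> Path:
    """Write .vscode/launch.json under `workspace`, overwriting any existing file."""
    target = workspace / ".vscode" / "launch.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    text = json.dumps(build_launch_config(program), indent=4) + "\n"
    target.write_text(text, encoding="utf-8")
    return target


if __name__ == "__main__":
    # Assumption: run from the repository root that is opened as the workspace.
    print("wrote", write_launch_config(Path.cwd()))
```

The generated file can equally be written by hand. The script form is used here only to keep the examples in one language.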