OpenAI Agents Framework, Part 1

Reference blog: datawhale

Environment setup

Environment image used: datawhalechina/llm-preview/llm-preview

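Here we download the model locally. The OpenAI Agents framework reaches non-OpenAI models through an OpenAI-compatible endpoint, which is usually provided by Ollama. Downloading Ollama on the server was slow, so we expose the locally downloaded model as an API with vLLM instead: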

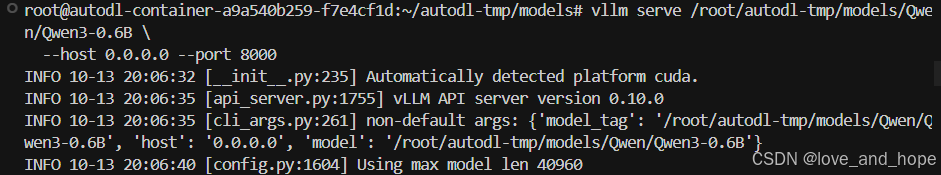

Download the model (Python):

from modelscope import snapshot_download

model_dir = snapshot_download('Qwen/Qwen3-0.6B', cache_dir='/root/autodl-tmp/models', revision='master')

Serve it with vLLM (shell):

vllm serve /root/autodl-tmp/models/Qwen/Qwen3-0.6B \
  --host 0.0.0.0 \
  --port 8000 \
  --enable-auto-tool-choice \
  --tool-call-parser qwen3_coder

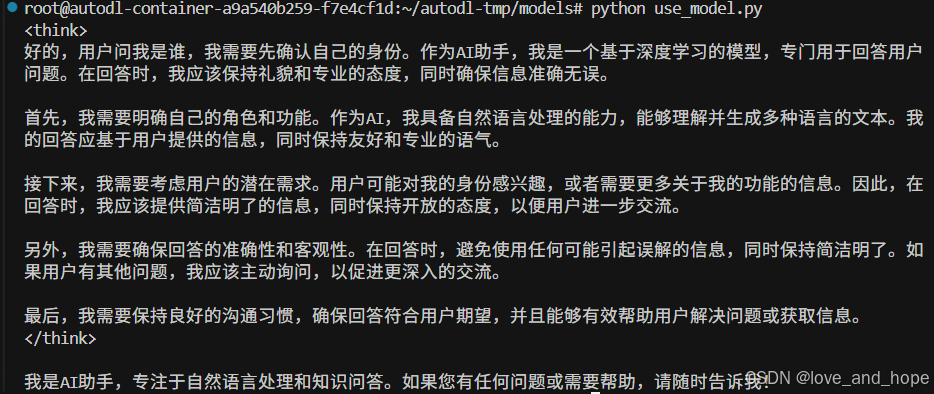

from litellm import completion

# Call the local vLLM service through LiteLLM
response = completion(
    model="openai//root/autodl-tmp/models/Qwen/Qwen3-0.6B",  # call the local model in openai format
    api_base="http://127.0.0.1:8000/v1",  # points at the vLLM service's v1 endpoint
    api_key="EMPTY",  # vLLM does not validate the key by default
    messages=[{"role": "user", "content": "Hello, who are you?"}],
)

# Print the model's reply
print(response.choices[0].message.content)
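The double slash in the model string is easy to mistake for a typo. LiteLLM reads the text before the first `/` as the provider and sends the rest as the model name, and vLLM by default serves the model under the path passed to `vllm serve`, which itself starts with `/`. A minimal sketch of that composition, where `litellm_model_id` and `split_model_id` are hypothetical helpers written for illustration and not part of LiteLLM:

```python
def litellm_model_id(provider: str, served_name: str) -> str:
    """Join a provider prefix and the served model name with a single '/'."""
    return f"{provider}/{served_name}"


def split_model_id(model_id: str) -> tuple[str, str]:
    """Split on the first '/' only, so a served name that is a path survives intact."""
    provider, served_name = model_id.split("/", 1)
    return provider, served_name


# Assumption: vLLM was started without --served-model-name, so the served
# name is the local path that was passed to `vllm serve`.
SERVED_NAME = "/root/autodl-tmp/models/Qwen/Qwen3-0.6B"

model_id = litellm_model_id("openai", SERVED_NAME)
print(model_id)
print(split_model_id(model_id))
```

If the server were started with a custom served name, only `SERVED_NAME` would change and the double slash would disappear.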

Configuring the model through LiteLLM's OpenAI-compatible endpoint

chat_model = "openai//root/autodl-tmp/models/Qwen/Qwen3-0.6B"
base_url = "http://127.0.0.1:8000/v1"
api_key = "EMPTY"

from agents import Agent, Runner, set_tracing_disabled

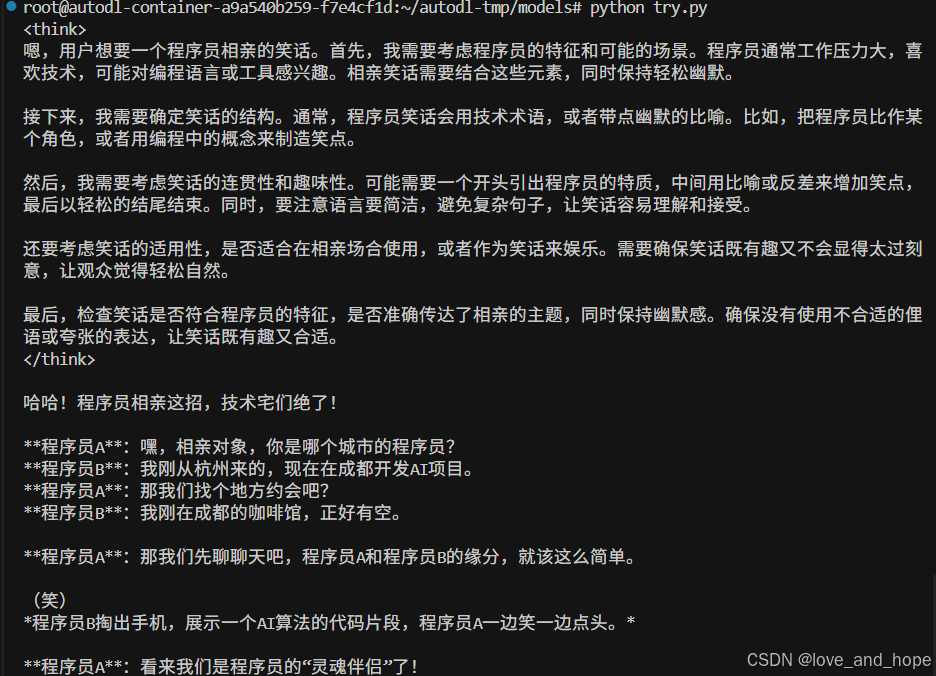

from agents.extensions.models.litellm_model import LitellmModel

set_tracing_disabled(disabled=True)
llm = LitellmModel(model=chat_model, api_key=api_key, base_url=base_url)

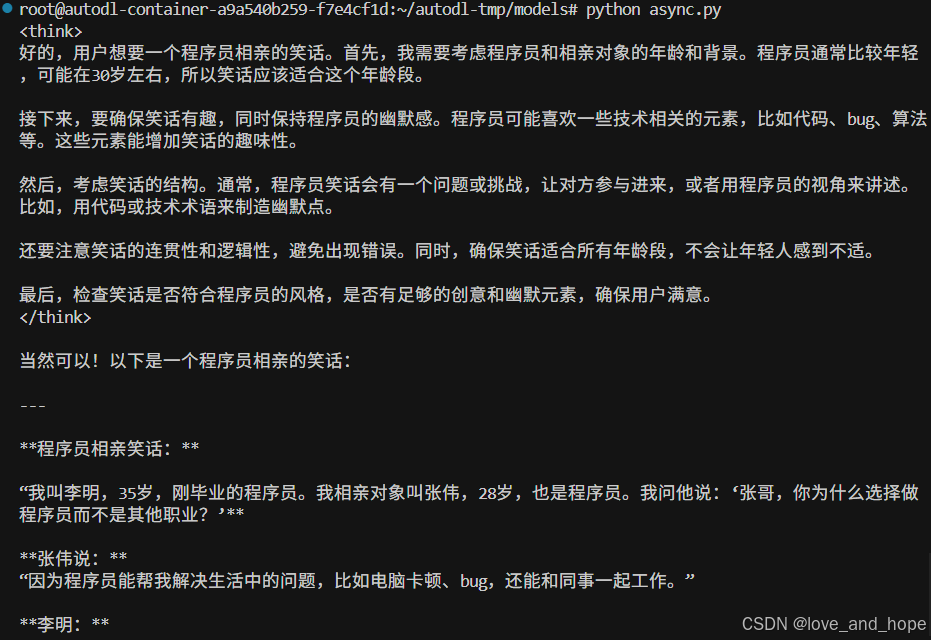

agent = Agent(name="Assistant", model=llm, instructions="You are a helpful assistant")
result = Runner.run_sync(agent, "Tell me a joke about a programmer on a blind date")
print(result.final_output)

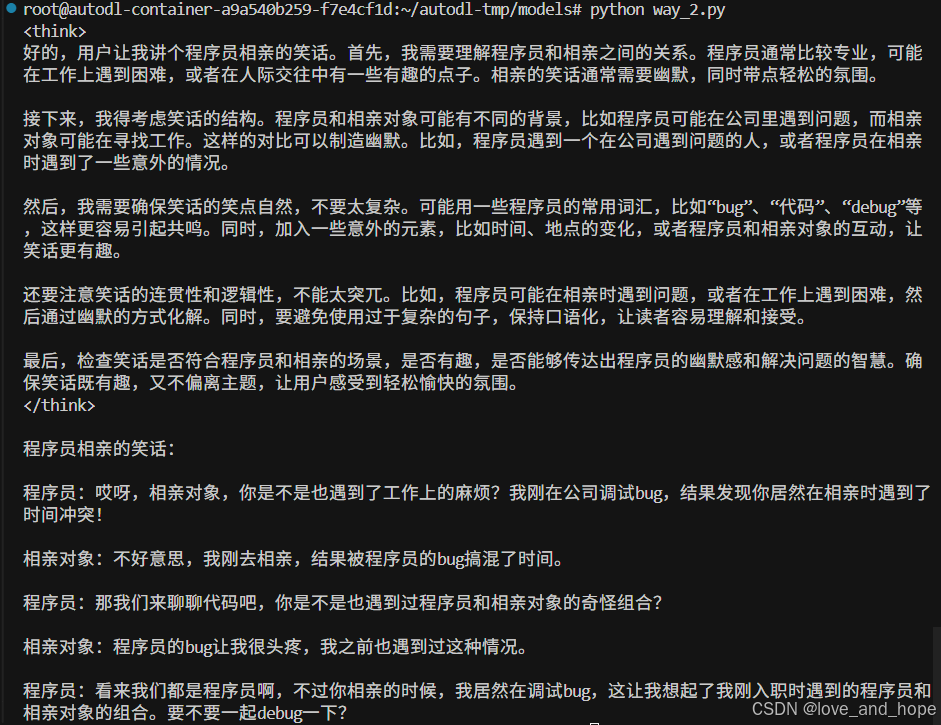

Configuring the model with the openai package

from agents import Agent, Runner, set_tracing_disabled, set_default_openai_api, set_default_openai_client
from openai import AsyncOpenAI
import os
# from dotenv import load_dotenv

# Load environment variables
# load_dotenv()

chat_model = "openai//root/autodl-tmp/models/Qwen/Qwen3-0.6B"
client = AsyncOpenAI(
    base_url="http://127.0.0.1:8000/v1",
    api_key="EMPTY",
)
set_default_openai_client(client=client, use_for_tracing=False)
set_default_openai_api("chat_completions")
set_tracing_disabled(disabled=True)

agent = Agent(name="Assistant", model=chat_model, instructions="You are a helpful assistant")
result = Runner.run_sync(agent, "Tell me a joke about a programmer on a blind date")
print(result.final_output)

The versions above run synchronously and cannot run concurrently, as the call to Runner.run_sync shows.

Asynchronous version:

from agents import Agent, Runner, set_tracing_disabled
from agents.extensions.models.litellm_model import LitellmModel
import os
import asyncio
# from dotenv import load_dotenv

# Load environment variables
# load_dotenv()
# Read dashscope_api_key from the environment
# dashscope_api_key = os.getenv('DASHSCOPE_API_KEY')

dashscope_base_url = 'http://127.0.0.1:8000/v1'
dashscope_model = "openai//root/autodl-tmp/models/Qwen/Qwen3-0.6B"
llm = LitellmModel(model=dashscope_model, api_key="EMPTY", base_url=dashscope_base_url)

agent = Agent(name="Assistant", model=llm, instructions="You are a helpful assistant")

async def main():
    result = await Runner.run(agent, "Tell me a joke about a programmer on a blind date")
    print(result.final_output)

if __name__ == "__main__":
    asyncio.run(main())
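What the asynchronous form buys is the ability to have several runs in flight at once. The sketch below shows the pattern with `asyncio.gather`, using only the standard library: `fake_run` is a hypothetical stand-in for `await Runner.run(agent, prompt)` and sleeps instead of calling a model, and `InFlightCounter` exists only to make the overlap visible.

```python
import asyncio


class InFlightCounter:
    """Tracks how many fake runs are active at the same time."""

    def __init__(self) -> None:
        self.current = 0
        self.peak = 0


async def fake_run(prompt: str, counter: InFlightCounter) -> str:
    """Hypothetical stand-in for `await Runner.run(agent, prompt)`."""
    counter.current += 1
    counter.peak = max(counter.peak, counter.current)
    await asyncio.sleep(0.05)  # simulates waiting on the model server
    counter.current -= 1
    return f"answer to: {prompt}"


async def run_all(prompts: list[str]) -> tuple[list[str], int]:
    counter = InFlightCounter()
    # gather schedules every coroutine before waiting, so the waits overlap
    answers = await asyncio.gather(*(fake_run(p, counter) for p in prompts))
    return list(answers), counter.peak


answers, peak = asyncio.run(run_all(["Q1", "Q2", "Q3"]))
print(answers)
print("peak concurrent runs:", peak)
```

With real agents the same shape should apply: build one coroutine per prompt and await them together, rather than calling `Runner.run_sync` in a loop.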

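A first taste

Three core concepts:

① Agents: an agent is an entity that is given a task and can carry out specific actions on its own. Agents are usually equipped with instructions and tools, and can perform complex tasks according to those instructions.

Example:

Suppose you have a "travel planning assistant" agent whose job is to help you plan a trip. Based on the user's needs, such as destination, budget, and travel dates, the agent can look up flights, hotels, and transport information and put the travel plan together.

② Handoffs: a handoff means that when an agent runs into a task it cannot complete, it can delegate that task to another, more specialized agent or system. This keeps the overall workflow moving.

Example:

In the travel planning example, suppose the user asks the agent to find "the best local restaurant" for a particular date. The travel planning assistant has no restaurant recommendation ability, so it hands the task to a dedicated "restaurant recommendation agent", which finds a suitable restaurant based on the user's preferences.

③ Guardrails: a guardrail is a mechanism that restricts or validates an agent's behavior so that the agent does not take inappropriate or non-compliant actions. It is typically used for input validation and output control, to keep the agent from producing wrong or unsuitable content.

Example:

In the travel planning example, suppose the user enters a very unrealistic request, such as "plan a trip to Paris tomorrow on a 500 USD budget". The guardrail can check the request, find that it falls outside a reasonable budget range, and return a warning that tells the user the plan is unrealistic and suggests adjusting the budget or the travel dates.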
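The three ideas can be tried out without any model at all. The following is a toy sketch of the travel example using only the standard library: `ToyAgent`, `budget_guardrail`, and `plan` are hypothetical names, keyword matching stands in for the model's routing decision, and the 1000 USD threshold is an arbitrary value picked for illustration. It is not how the Agents SDK is implemented.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAgent:
    """Hypothetical stand-in for an agent: a name plus agents it can hand off to."""
    name: str
    keywords: tuple[str, ...] = ()
    handoffs: list["ToyAgent"] = field(default_factory=list)

    def handle(self, request: str) -> str:
        # Handoff: delegate to the first specialist whose keywords match.
        for specialist in self.handoffs:
            if any(word in request.lower() for word in specialist.keywords):
                return specialist.handle(request)
        return f"[{self.name}] handling: {request}"


def budget_guardrail(budget_usd: float, minimum_usd: float = 1000.0) -> bool:
    """Guardrail: return True (tripwire) when the budget is unrealistically low."""
    return budget_usd < minimum_usd


restaurant_agent = ToyAgent(name="Restaurant Recommender", keywords=("restaurant",))
travel_agent = ToyAgent(name="Travel Planner", handoffs=[restaurant_agent])


def plan(request: str, budget_usd: float) -> str:
    # The guardrail runs first; the agent only sees requests that pass it.
    if budget_guardrail(budget_usd):
        return "Warning: this budget looks unrealistic; adjust the budget or the dates."
    return travel_agent.handle(request)


print(plan("Book a flight to Paris", 3000))
print(plan("Find the best local restaurant", 3000))
print(plan("Go to Paris tomorrow", 500))
```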

Setting up the LLM

from agents import Agent, Runner, set_tracing_disabled
from agents.extensions.models.litellm_model import LitellmModel
import os
import asyncio
# from dotenv import load_dotenv

# Load environment variables
# load_dotenv()
# Read dashscope_api_key from the environment
# dashscope_api_key = os.getenv('DASHSCOPE_API_KEY')

dashscope_base_url = 'http://127.0.0.1:8000/v1'
dashscope_model = "openai//root/autodl-tmp/models/Qwen/Qwen3-0.6B"
llm = LitellmModel(model=dashscope_model, api_key="EMPTY", base_url=dashscope_base_url)

Building the first agent

agent = Agent(
    name="Math Tutor",
    model=llm,
    instructions="You provide help with math problems. Explain your reasoning at each step and include examples",
)

Adding more agents

Add further agents and give each one a handoff_description parameter, which tells the routing agent when a task should be handed to it.

from agents import Agent

history_tutor_agent = Agent(
    name="History Tutor",
    handoff_description="Specialist agent for historical questions",
    instructions="You provide assistance with historical queries. Explain important events and context clearly.",
    model=llm,
)

math_tutor_agent = Agent(
    name="Math Tutor",
    handoff_description="Specialist agent for math questions",
    instructions="You provide help with math problems. Explain your reasoning at each step and include examples",
    model=llm,
)

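handoff_description describes an agent's role in a multi-agent system and the kind of task it specializes in. When an agent meets a task it cannot solve, it can hand the task off to another, specialized agent. handoff_description states which kinds of task that agent is good at and what range of content it can handle.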
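One way to picture this: the routing agent sees each possible handoff as a tool it may call, and handoff_description becomes part of that tool's description. The sketch below approximates the idea with the standard library only. `toy_handoff_tool` is a hypothetical helper, and the exact tool names and wording the SDK generates may differ from what is shown here.

```python
import re


def toy_handoff_tool(agent_name: str, handoff_description: str) -> dict[str, str]:
    """Approximate how a handoff might be advertised to the triage model.

    Hypothetical helper: the real SDK builds this internally and its exact
    wording may differ.
    """
    # Tool names must be identifier-like, so replace other characters with '_'.
    slug = re.sub(r"[^a-z0-9_]", "_", agent_name.lower())
    return {
        "name": f"transfer_to_{slug}",
        "description": f"Handoff to the {agent_name} agent. {handoff_description}",
    }


tools = [
    toy_handoff_tool("History Tutor", "Specialist agent for historical questions"),
    toy_handoff_tool("Math Tutor", "Specialist agent for math questions"),
]
for tool in tools:
    print(tool["name"], "->", tool["description"])
```

Seen this way, a vague handoff_description gives the routing model little to choose on, which matters more the smaller the model is.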

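Defining handoffs

On each agent you can define a list of outgoing handoff options that the agent can choose from when deciding how to make progress on a task.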

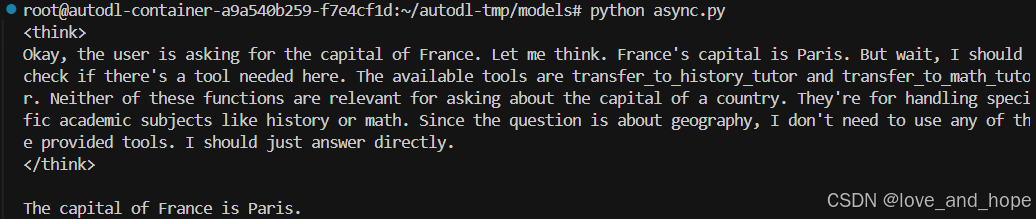

async def main():
    result = await Runner.run(triage_agent, "What is the capital of France?")
    print(result.final_output)

if __name__ == "__main__":
    asyncio.run(main())

Adding guardrails

You can define custom guardrails that run on the input or on the output.

from agents import GuardrailFunctionOutput, InputGuardrail
from pydantic import BaseModel

class HomeworkOutput(BaseModel):  # the guardrail agent's output is converted to this format
    is_homework: bool
    reasoning: str

guardrail_agent = Agent(  # guardrail agent
    name="Guardrail check",
    instructions="Check if the user is asking about homework.",
    output_type=HomeworkOutput,
    model=llm,
)

async def homework_guardrail(ctx, agent, input_data):  # guardrail function; ctx carries the run context
    result = await Runner.run(guardrail_agent, input_data, context=ctx.context)
    final_output = result.final_output_as(HomeworkOutput)
    return GuardrailFunctionOutput(
        output_info=final_output,
        tripwire_triggered=not final_output.is_homework,
    )

The triage_agent also needs an input_guardrails parameter:

triage_agent = Agent(
    name="Triage Agent",
    instructions="You determine which agent to use based on the user's homework question",
    handoffs=[history_tutor_agent, math_tutor_agent],
    input_guardrails=[
        InputGuardrail(guardrail_function=homework_guardrail),
    ],
    model=llm,
)
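The guardrail depends on the guardrail agent returning output that fits HomeworkOutput, that is, JSON with a boolean is_homework and a string reasoning. The sketch below walks through that step with the standard library only. `HomeworkCheck`, `parse_check`, and `tripwire_triggered` are hypothetical names, and the SDK performs this validation through pydantic rather than through this code.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class HomeworkCheck:
    """Plain-dataclass stand-in for the HomeworkOutput model."""
    is_homework: bool
    reasoning: str


def parse_check(raw: str) -> Optional[HomeworkCheck]:
    """Return the parsed check, or None when the text is not the expected JSON."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    if not isinstance(data.get("is_homework"), bool) or not isinstance(data.get("reasoning"), str):
        return None
    return HomeworkCheck(data["is_homework"], data["reasoning"])


def tripwire_triggered(check: HomeworkCheck) -> bool:
    # Same rule as homework_guardrail: block anything that is not homework.
    return not check.is_homework


good = parse_check('{"is_homework": true, "reasoning": "asks for a proof"}')
off_topic = parse_check('{"is_homework": false, "reasoning": "health question"}')
malformed = parse_check("Sure! I think this is homework.")

print(tripwire_triggered(good))
print(tripwire_triggered(off_topic))
print(malformed)
```

The third case is the one to watch with a small model: if the reply is free text instead of the expected JSON, there is nothing to evaluate and the run fails before the tripwire rule is ever applied.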

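In the OpenAI Agents SDK (the agents package), ctx is the context wrapper that the Runner creates when it runs an agent and passes into the guardrail function. Its context attribute holds the context object you supplied to Runner.run, which is how state is carried through a run.

ctx explained:

# Round 1: pass your own context object; guardrails see it as ctx.context
shared_context = {}
res1 = await Runner.run(triage_agent, "Q1", context=shared_context)

# Round 2: pass the same object again to reuse whatever round 1 stored in it
res2 = await Runner.run(triage_agent, "Q2", context=shared_context)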
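The reason a context object can carry state between runs is that it is wrapped, not copied. A minimal sketch of that behavior with the standard library only, where `ToyContextWrapper`, `SessionState`, and `toy_run` are hypothetical stand-ins rather than SDK classes:

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ToyContextWrapper:
    """Hypothetical stand-in for the wrapper a guardrail receives as ctx."""
    context: Any


@dataclass
class SessionState:
    """Hypothetical user-defined state shared across runs."""
    questions: list[str] = field(default_factory=list)


def toy_run(question: str, context: SessionState) -> None:
    # The runner wraps the caller's object; it does not copy it.
    ctx = ToyContextWrapper(context=context)
    ctx.context.questions.append(question)


state = SessionState()
toy_run("Q1", state)
toy_run("Q2", state)
print(state.questions)
```

Because both calls receive the same `state` object, anything a guardrail or tool writes during the first run is still there in the second.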

整体代码

from agents.agent import Agent

from agents.run import Runner

from agents.tracing import set_tracing_disabled

from agents.guardrail import GuardrailFunctionOutput, InputGuardrail

from agents.extensions.models.litellm_model import LitellmModel

from pydantic import BaseModel

import os

import asyncio

#from dotenv import load_dotenv# 加载环境变量

# load_dotenv()

# 从环境变量中读取dashscope_api_key

# dashscope_api_key = os.getenv('DASHSCOPE_API_KEY')

dashscope_base_url = 'http://127.0.0.1:8000/v1'

dashscope_model = "openai//root/autodl-tmp/models/Qwen/Qwen3-0.6B"

llm = LitellmModel(model=dashscope_model, api_key="EMPTY", base_url=dashscope_base_url)class HomeworkOutput(BaseModel): # 后续要将输出转换为该格式进行返回is_homework: boolreasoning: strguardrail_agent = Agent( #护栏agentname="Guardrail check",instructions="Check if the user is asking about homework.",output_type=HomeworkOutput,model=llm,

)async def homework_guardrail(ctx, agent, input_data): # 护栏函数,ctx为上下文,用于获取上下文信息result = await Runner.run(guardrail_agent, input_data, context=ctx.context)final_output = result.final_output_as(HomeworkOutput)return GuardrailFunctionOutput(output_info=final_output,tripwire_triggered=not final_output.is_homework,)history_tutor_agent = Agent(name="History Tutor",handoff_description="Specialist agent for historical questions",instructions="You provide assistance with historical queries. Explain important events and context clearly.",model=llm,

)math_tutor_agent = Agent(name="Math Tutor",handoff_description="Specialist agent for math questions",instructions="You provide help with math problems. Explain your reasoning at each step and include examples",model=llm,

)triage_agent = Agent(name="Triage Agent",instructions="You determine which agent to use based on the user's homework question",handoffs=[history_tutor_agent, math_tutor_agent],input_guardrails=[InputGuardrail(guardrail_function=homework_guardrail),],model=llm,

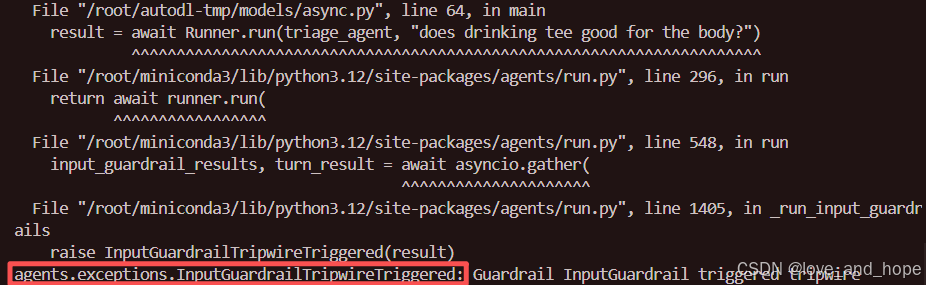

)async def main():result = await Runner.run(triage_agent, "does drinking tee good for the body?")print(result.final_output)

if __name__ == "__main__":asyncio.run(main())

With try…except:

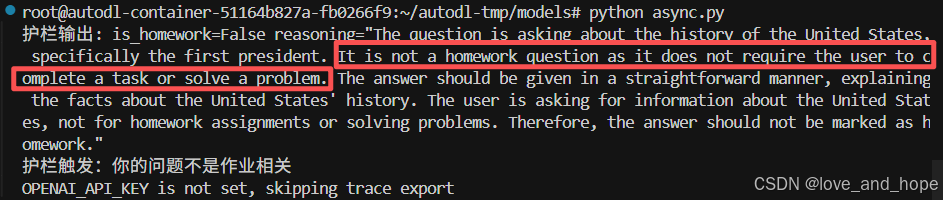

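from agents import InputGuardrailTripwireTriggered

async def main():
    try:
        # print("starting")
        result = await Runner.run(triage_agent, "How do you prove the Pythagorean theorem?")
        # print(result.final_output)
        print("Result:", result.final_output)
    except InputGuardrailTripwireTriggered:
        print("Guardrail triggered: your question is not homework-related")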

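Question: who was the first president of the United States

I changed the prompt several times and every attempt failed, so I think the Qwen model I chose is simply too small. At only 0.6B parameters, its results were not very good.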