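Installing and Deploying Kubernetes v1.28.2

Contents

- Preface

- 1. Preparing the Environment for the K8s Cluster

- 1.1 Environment architecture

- 1.2 Set the hostnames

- 1.3 Disable the firewall and SELinux

- 1.4 Disable swap

- 1.5 Configure and tune kernel parameters

- 1.6 Install ipset and ipvsadm

- 1.7 Install containerd

- 1.7.1 Install dependency packages

- 1.7.2 Add the Aliyun Docker repository

- 1.7.3 Load the overlay and br_netfilter modules

- 1.7.4 Install containerd (latest version)

- 1.7.5 Create the containerd configuration file

- 1.7.6 Start containerd

- 2. Install kubectl, kubelet, and kubeadm

- 2.1 Add the Aliyun Kubernetes repository

- 2.2 Install kubectl, kubelet, and kubeadm

- 2.2.1 List all available versions

- 2.2.2 Install version 1.28.2 (the latest at the time of writing)

- 2.2.3 Start kubelet

- 3. Deploy the Kubernetes Cluster

- 3.1 Initialize the Kubernetes cluster

- 3.1.1 List the images required to initialize k8s v1.28.2

- 3.1.2 Initialize the K8s cluster

- 3.2 Configure kubectl as prompted

- 3.3 Check the nodes and Pods

- 3.4 Install the Calico Pod network plugin (CNI)

- 3.4.1 Comparison of k8s networking options

- ① The flannel option

- Deploying flannel

- ② The Calico option

- ③ Installing Calico

- 3.5 Check the Pods and nodes again

- 3.6 Join the worker nodes to the Kubernetes cluster

- 3.7 Check the nodes again

- 3.8 kubectl command completion

- 3.9 Install the nerdctl management tool

- 4. Install kubernetes-dashboard

- 4.1 Download the manifest

- 4.2 Edit the manifest

- 4.3 Check the Pods and Services

- 4.4 Open the Dashboard page

- 4.5 Create a user

- 4.6 Create a token

- 4.7 Retrieve the token

- 4.8 Log in to the Dashboard with the token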

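Preface

This is a quick install of Kubernetes v1.28.2. Unlike my earlier v1.20.2 install, which used Docker, this one uses containerd as the container runtime, so the deployment steps differ. Pod networking here uses Calico, whereas the earlier install used flannel.

1. Preparing the Environment for the K8s Cluster

1.1 Environment architecture

| IP | Hostname | Operating system | Kubelet version | Role |
|---|---|---|---|---|
| 192.168.10.123 | master01 | CentOS 7.9.2009 | v1.28.2 | Control-plane node |
| 192.168.10.124 | node01 | CentOS 7.9.2009 | v1.28.2 | Worker node |
| 192.168.10.125 | node02 | CentOS 7.9.2009 | v1.28.2 | Worker node |

1.2 Set the hostnames

Note: the steps in this chapter must be run on every node. The exception is hostnamectl: run each command below only on the node it names.

# On master01

hostnamectl set-hostname master01

# On node01

hostnamectl set-hostname node01

# On node02

hostnamectl set-hostname node02

# Add the host mappings (all nodes)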

cat >>/etc/hosts <<EOF

192.168.10.123 master01

192.168.10.124 node01

192.168.10.125 node02

EOF
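Because `cat >>` appends unconditionally, rerunning this step leaves duplicate lines in /etc/hosts. A minimal sketch of a rerunnable variant follows. The helper name `add_host_entry` and the scratch file are illustrative assumptions, not part of the original procedure.

```shell
#!/bin/sh
# Append "IP name" to a hosts-style file only if that name is not mapped yet.
# The body runs in a subshell so its variables stay local.
add_host_entry() (
  file=$1 ip=$2 name=$3
  # Look for the name as a whole field on a non-comment line.
  if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    printf '%s %s\n' "$ip" "$name" >>"$file"
  fi
)

# Demo against a scratch file; pass /etc/hosts (as root) for real use.
hosts_file=$(mktemp)
for round in 1 2; do            # the second round must add nothing
  add_host_entry "$hosts_file" 192.168.10.123 master01
  add_host_entry "$hosts_file" 192.168.10.124 node01
  add_host_entry "$hosts_file" 192.168.10.125 node02
done
cat "$hosts_file"
```

Running the loop twice is only there to show that the file still ends up with three entries.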

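1.3 Disable the firewall and SELinux

# Stop and disable the firewall

systemctl stop firewalld.service

systemctl disable firewalld.service

# Disable SELinux (setenforce 0 takes effect immediately; the config file change applies after a reboot)

setenforce 0

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

1.4 Disable swap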

swapoff -a

sed -i '/swap/s/^/#/g' /etc/fstab
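The sed expression above prefixes every line that contains `swap` with `#`, including lines that are already commented, so repeated runs stack up `#` characters. Below is a hedged sketch of a rerunnable variant, shown on a made-up sample file rather than the real /etc/fstab. The function name `disable_swap_entries` is hypothetical.

```shell
#!/bin/sh
# Comment out active swap entries in an fstab-style file given as $1.
disable_swap_entries() {
  # Skip lines that are already comments; only touch lines with a "swap" field.
  sed -i -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Demo on a throwaway file (the device names are illustrative only).
sample=$(mktemp)
cat >"$sample" <<'EOF'
/dev/mapper/centos-root /       xfs     defaults        0 0
/dev/mapper/centos-swap swap    swap    defaults        0 0
EOF
disable_swap_entries "$sample"
disable_swap_entries "$sample"   # a second run must not add another '#'
cat "$sample"
```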

1.5 Configure and tune kernel parameters

# Note: the net.bridge.* keys only exist once the br_netfilter module is loaded (section 1.7.3). If sysctl --system reports them as missing, rerun it after that step.

cat >/etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl --system
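To confirm the settings took effect, the values in the file can be compared with the live kernel values under /proc/sys. The following is a small sketch under stated assumptions: `conf_value` is a hypothetical helper, and the demo reads a scratch copy of the file so it can run anywhere.

```shell
#!/bin/sh
# Print the value assigned to key $2 in a sysctl.d-style file $1 ("key = value").
conf_value() {
  awk -F= -v k="$2" '
    /^[ \t]*[#;]/ { next }                                  # skip comment lines
    { name = $1; gsub(/[ \t]/, "", name) }                  # trim the key
    name == k { val = $2; gsub(/[ \t]/, "", val); print val }
  ' "$1"
}

# Scratch copy of the settings from this section.
conf=$(mktemp)
cat >"$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

for key in net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward; do
  want=$(conf_value "$conf" "$key")
  proc="/proc/sys/$(printf '%s' "$key" | tr . /)"
  if [ -r "$proc" ]; then have=$(cat "$proc"); else have="missing (is br_netfilter loaded?)"; fi
  echo "$key: file=$want live=$have"
done
```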

1.6 Install ipset and ipvsadm

yum -y install conntrack ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git

cat >/etc/modules-load.d/ipvs.conf <<EOF

# Load IPVS at boot

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

nf_conntrack_ipv4

EOF

systemctl enable --now systemd-modules-load.service

# Confirm that the kernel modules are loaded

[root@master01 ~]# lsmod |egrep "ip_vs|nf_conntrack_ipv4"
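Reading the lsmod output by eye is easy to get wrong, so a small check that prints only the missing modules can help. This is a sketch: `missing_modules` is a hypothetical helper that reads lsmod-style text on stdin, and the sample input (including the sizes) is a placeholder. On newer kernels (4.19 and later) nf_conntrack_ipv4 was merged into nf_conntrack and would show up as missing. The CentOS 7 kernel used here still ships it.

```shell
#!/bin/sh
# Print each required module (given as arguments) that is absent from
# the lsmod-style listing read on stdin.
missing_modules() (
  loaded=$(awk 'NR > 1 {print $1}')   # drop the "Module Size Used by" header
  for m in "$@"; do
    printf '%s\n' "$loaded" | grep -qxF "$m" || echo "$m"
  done
)

# Real use on a node:
#   lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack nf_conntrack_ipv4

# Demo with placeholder input so the sketch runs anywhere.
sample_lsmod='Module                  Size  Used by
ip_vs                 145497  0
nf_conntrack          133095  1 ip_vs'
result=$(printf '%s\n' "$sample_lsmod" | missing_modules ip_vs ip_vs_rr nf_conntrack)
echo "missing: $result"
```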

1.7 Install containerd

1.7.1 Install dependency packages

yum -y install yum-utils device-mapper-persistent-data lvm2

1.7.2 Add the Aliyun Docker repository

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

1.7.3 Load the overlay and br_netfilter modules

cat >>/etc/modules-load.d/containerd.conf <<EOF

overlay

br_netfilter

EOF

modprobe overlay

modprobe br_netfilter

1.7.4 Install containerd (latest version)

yum -y install containerd.io

1.7.5 Create the containerd configuration file

mkdir -p /etc/containerd

containerd config default > /etc/containerd/config.toml

sed -i '/SystemdCgroup/s/false/true/g' /etc/containerd/config.toml

sed -i '/sandbox_image/s/registry.k8s.io/registry.aliyuncs.com\/google_containers/g' /etc/containerd/config.toml
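The kubeadm log in section 3.1.2 warns that the runtime's sandbox image (pause:3.6) differs from the pause:3.9 image kubeadm expects. One way to avoid that warning is to pin the whole sandbox_image value instead of only swapping the registry. The sketch below works on a two-line sample fragment. The function name `patch_containerd_config` is an assumption, and the fragment only imitates the relevant lines of config.toml.

```shell
#!/bin/sh
# Enable the systemd cgroup driver and pin the sandbox (pause) image in the
# containerd config file given as $1.
patch_containerd_config() {
  sed -i -E \
    -e 's|^([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*)false|\1true|' \
    -e 's|^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*).*|\1"registry.aliyuncs.com/google_containers/pause:3.9"|' \
    "$1"
}

# Demo on a sample fragment; use /etc/containerd/config.toml on a real node.
cfg=$(mktemp)
cat >"$cfg" <<'EOF'
    sandbox_image = "registry.k8s.io/pause:3.6"
            SystemdCgroup = false
EOF
patch_containerd_config "$cfg"
cat "$cfg"
```

After patching the real file, containerd has to be restarted for the change to apply.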

1.7.6 Start containerd

systemctl enable containerd

systemctl start containerd

2. Install kubectl, kubelet, and kubeadm

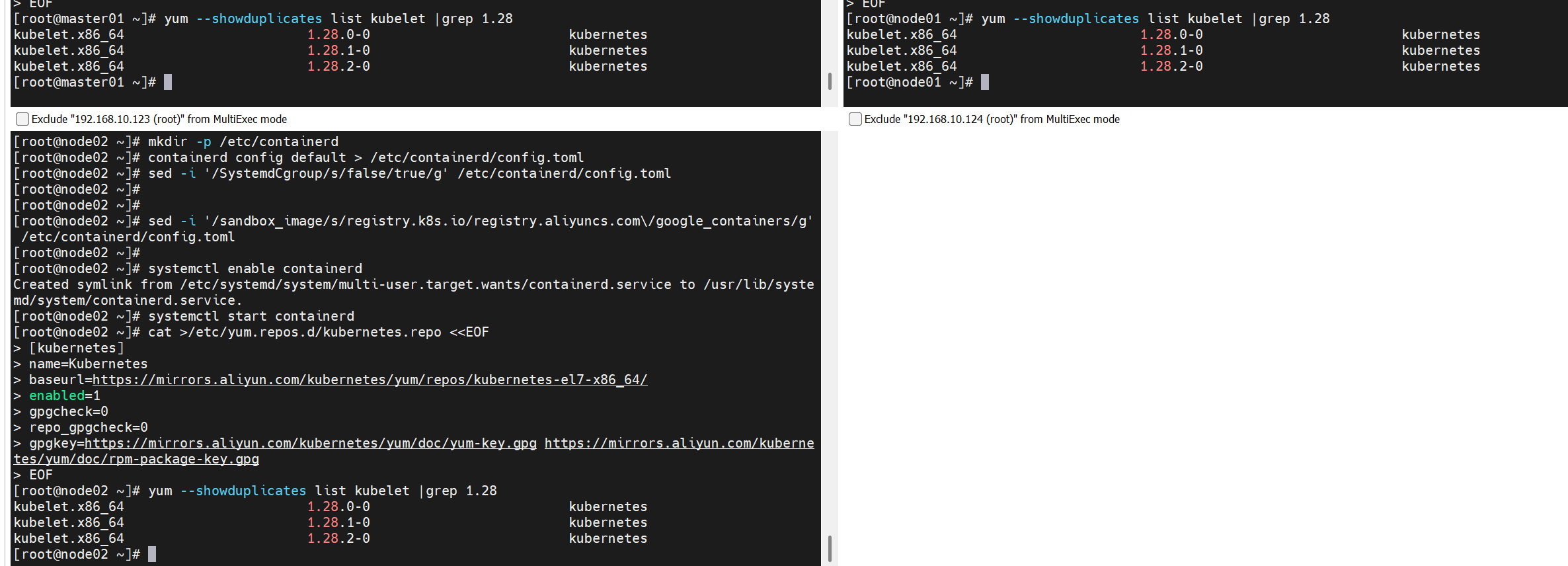

2.1 Add the Aliyun Kubernetes repository

cat >/etc/yum.repos.d/kubernetes.repo <<EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2.2 Install kubectl, kubelet, and kubeadm

2.2.1 List all available versions

[root@master01 opt]# yum --showduplicates list kubelet |grep 1.28

kubelet.x86_64 1.28.0-0 kubernetes

kubelet.x86_64 1.28.1-0 kubernetes

kubelet.x86_64 1.28.2-0 kubernetes
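If the version should be picked by a script rather than by eye, the listing can be sorted with a version-aware sort. This is a sketch fed with the three lines printed above. `latest_version` is a hypothetical helper, and `sort -V` assumes GNU coreutils.

```shell
#!/bin/sh
# Read "package version repo" lines on stdin and print the highest version
# of the package named in $1.
latest_version() {
  awk -v p="$1" '$1 == p {print $2}' | sort -V | tail -n 1
}

# Real use:  yum --showduplicates list kubelet | latest_version kubelet.x86_64
latest=$(printf '%s\n' \
  'kubelet.x86_64 1.28.0-0 kubernetes' \
  'kubelet.x86_64 1.28.1-0 kubernetes' \
  'kubelet.x86_64 1.28.2-0 kubernetes' | latest_version kubelet.x86_64)

# Strip the RPM release suffix ("-0") to get the form used by yum install.
echo "yum -y install kubelet-${latest%-*}"
```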

2.2.2 Install version 1.28.2 (the latest at the time of writing)

yum -y install kubectl-1.28.2 kubelet-1.28.2 kubeadm-1.28.2

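2.2.3 Start kubelet

Enable and start kubelet before initializing the cluster. Until kubeadm init (or kubeadm join) has run, kubelet restarts in a loop while it waits for its configuration, which is expected.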

systemctl enable kubelet

systemctl start kubelet

3. Deploy the Kubernetes Cluster

3.1 Initialize the Kubernetes cluster

3.1.1 List the images required to initialize k8s v1.28.2

kubeadm config images list --kubernetes-version=v1.28.2

3.1.2 Initialize the K8s cluster

cat >/tmp/kubeadm-init.yaml <<EOF

apiVersion: kubeadm.k8s.io/v1beta3

kind: ClusterConfiguration

kubernetesVersion: v1.28.2

controlPlaneEndpoint: 192.168.10.123:6443

imageRepository: registry.aliyuncs.com/google_containers

networking:
  podSubnet: 172.16.0.0/16
  serviceSubnet: 172.15.0.0/16

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

EOF

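The variant below is the one used in the enterprise environment. It differs only in the Pod and Service subnets. Use one of the two files, not both: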

cat >/tmp/kubeadm-init.yaml <<EOF

apiVersion: kubeadm.k8s.io/v1beta3

kind: ClusterConfiguration

kubernetesVersion: v1.28.2

controlPlaneEndpoint: 192.168.10.123:6443

imageRepository: registry.aliyuncs.com/google_containers

networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/16

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

EOF
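Whichever subnets are chosen, the Pod and Service ranges should not overlap each other or the node network (192.168.10.0/24 in section 1.1). The following sketch checks for such overlaps with plain shell arithmetic. The function names are illustrative. The 192.168.0.0/16 entry is included on the assumption that it is the commented-out default pool in the Calico manifest, which would collide with this node network.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer (subshell keeps IFS local).
ip_to_int() (
  IFS=.
  set -- $1                     # split the address into $1..$4
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Print "first last" (as integers) for a CIDR such as 10.244.0.0/16.
cidr_range() (
  ip=${1%/*}; bits=${1#*/}
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  first=$(( base & mask ))
  last=$(( first | (~mask & 0xFFFFFFFF) ))
  echo "$first $last"
)

# Succeed (exit 0) when the two CIDRs share at least one address.
cidrs_overlap() (
  set -- $(cidr_range "$1") $(cidr_range "$2")   # start1 end1 start2 end2
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

node_net=192.168.10.0/24        # host network from section 1.1
for cidr in 172.16.0.0/16 172.15.0.0/16 10.244.0.0/16 10.96.0.0/16 192.168.0.0/16; do
  if cidrs_overlap "$node_net" "$cidr"; then
    echo "$cidr overlaps the node network $node_net"
  else
    echo "$cidr is clear of $node_net"
  fi
done
```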

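Run the initialization command on master01 only:

kubeadm init --config=/tmp/kubeadm-init.yaml --ignore-preflight-errors=all

Do not run kubeadm init on every node: nodes that were initialized separately cannot be combined into one highly available cluster. If a node was initialized by mistake, reset it as follows:

# Reset the kubeadm state (control-plane components, certificates, configuration files, and so on)

kubeadm reset -f

# Remove leftover network configuration (CNI plugin files)

rm -rf /etc/cni/net.d

# Clear the kubelet data directory

rm -rf /var/lib/kubelet/*

# Restart the kubelet service

systemctl restart kubelet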

Initialization log (key excerpts):

[root@master01 opt]# kubeadm init --config=/tmp/kubeadm-init.yaml --ignore-preflight-errors=all

[init] Using Kubernetes version: v1.28.2

[preflight] Running pre-flight checks

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

W1007 18:39:07.263117 20257 checks.go:835] detected that the sandbox image "registry.aliyuncs.com/google_containers/pause:3.6" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.aliyuncs.com/google_containers/pause:3.9" as the CRI sandbox image.

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master01] and IPs [172.15.0.1 192.168.10.123]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master01] and IPs [192.168.10.123 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master01] and IPs [192.168.10.123 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 7.003452 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: kq3r2z.fmyp8jp31m74w6sq

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities
and service account keys on each node and then running the following as root:

  kubeadm join 192.168.10.123:6443 --token kq3r2z.fmyp8jp31m74w6sq \
    --discovery-token-ca-cert-hash sha256:babe339bb09fe24d1d794be3d91cffeebb40f859ceb104ca286f9121412972de \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.10.123:6443 --token kq3r2z.fmyp8jp31m74w6sq \
    --discovery-token-ca-cert-hash sha256:babe339bb09fe24d1d794be3d91cffeebb40f859ceb104ca286f9121412972de

Note: save the last part of this output. The other nodes need the kubeadm join command it contains in order to join the cluster.

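3.2 Configure kubectl as prompted

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Alternatively, when working as root, point KUBECONFIG at the admin kubeconfig instead:

export KUBECONFIG=/etc/kubernetes/admin.conf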

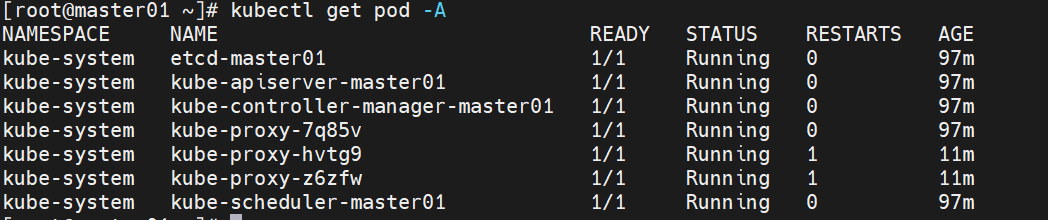

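3.3 Check the nodes and Pods

[root@master01 opt]# kubectl get node

[root@master01 opt]# kubectl get pod -A

Note: the node reports NotReady at this point because no Pod network (CNI) plugin is installed yet, which also keeps the CoreDNS Pods from starting.

3.4 Install the Calico Pod network plugin (CNI)

3.4.1 Comparison of k8s networking options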

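① The flannel option

On each node, flannel encapsulates packets destined for containers and sends them through a tunnel to the node that runs the target Pod. The target node strips the encapsulation and delivers the inner packet to the target Pod. This extra encapsulation noticeably reduces network performance.

Deploying flannel

- Pod network communication in K8S:

  - Container to container within a Pod: containers in the same Pod (a Pod never spans hosts) share one network namespace, as if they were on the same machine, so they can reach each other's ports through localhost.

  - Pod to Pod on the same node: every Pod has a real, cluster-wide IP address, and Pods on the same node can talk to each other directly by Pod IP. Pod1 and Pod2 are both attached through veth pairs to the same docker0/cni0 bridge and sit in the same subnet, so they communicate directly.

  - Pod to Pod across nodes: Pod addresses are in the docker0 subnet, which is different from the subnet of the host NIC, and traffic between nodes can only travel over the hosts' physical NICs. Cross-node Pod traffic therefore has to be addressed and carried through the hosts' physical IP addresses. Two conditions must hold: Pod IPs must not conflict, and each Pod IP must be associated with the IP of the node it runs on, so that Pods on different nodes can reach each other over the internal network through this mapping.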

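- Overlay network: a virtual network layered on top of a layer-2 or layer-3 underlay, in which hosts are connected by virtual tunnel links. The overlay technique (think of it as tunneling) wraps the original packet in an extra layer-4 (UDP) header and routes it over the host network. This costs some performance, mainly because the original packet has to be rewrapped. VXLAN is currently the dominant overlay technology.

- VXLAN: encapsulates the source packet in UDP, uses the underlay network's IP/MAC addresses as the outer header, and sends the result over Ethernet. At the destination, the tunnel endpoint decapsulates it and delivers the data to the target address.

- Flannel: Flannel gives the containers created on different nodes of the cluster virtual IP addresses that are unique across the whole cluster. Flannel is a kind of overlay network: it encapsulates the original packets inside another network packet for routing and forwarding. It currently supports three forwarding modes: UDP, VXLAN, and host-gw.

- How Flannel UDP mode works: data leaves the source container in a Pod on host A and is forwarded by the host's docker0/cni0 interface to the flannel0 interface. The flanneld service listens on the other end of the flannel0 virtual NIC. Flannel maintains an inter-node routing table through etcd. flanneld on host A wraps the original data in a UDP packet and, according to its routing table, sends it through the physical NIC to flanneld on the destination host B. There the packet is unwrapped and enters the flannel0 interface of host B, is forwarded to the docker0/cni0 bridge, and finally reaches the target container just like local container traffic.

- What etcd provides for Flannel: it stores and manages the IP address ranges that Flannel can allocate. Flannel watches the actual address of each Pod in etcd and builds a Pod-to-node routing table in memory. Because UDP mode forwards in user space and adds one more tunnel encapsulation, it performs worse than VXLAN mode, which forwards in kernel space.

- VXLAN mode: VXLAN mode is simple to use. flannel creates a VXLAN NIC named flannel.1 on each node (the VTEP device, responsible for VXLAN encapsulation and decapsulation). In VXLAN mode, encapsulation and decapsulation are done by the kernel. flannel does not forward data itself and only maintains the ARP and MAC table entries dynamically. The flannel0 NIC of UDP mode forwards at layer 3: it builds a layer-3 network on top of the physical network, which amounts to IP in UDP. VXLAN mode is a layer-2 implementation in which the overlay carries Ethernet frames, which amounts to MAC in UDP.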

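- How Flannel VXLAN mode works across hosts:

  - A data frame leaves the source container in a Pod on host A and is forwarded by the host's docker0/cni0 interface to the flannel.1 interface.

  - flannel.1 adds a VXLAN header to the frame and encapsulates it in a UDP packet.

  - Host A sends the encapsulated packet through its physical NIC to the physical NIC of host B.

  - Host B receives the packet on the VXLAN UDP port and hands it to flannel.1 for decapsulation (4789 is the IANA-assigned VXLAN port; flannel on Linux defaults to the kernel's port 8472 unless configured otherwise).

  - After decapsulation, the kernel sends the frame to cni0, which delivers it to container B attached to that bridge.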
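The "MAC in UDP" wrapping described above has a concrete cost: every packet carries extra headers, so the MTU available inside Pods is smaller than the MTU of the physical network. Here is a small worked sketch of that arithmetic, assuming an IPv4 underlay and standard header sizes. The variable and function names are illustrative.

```shell
#!/bin/sh
# Header sizes, in bytes, that VXLAN adds around the Pod's IP packet.
outer_ip=20      # outer IPv4 header
outer_udp=8      # outer UDP header
vxlan_hdr=8      # VXLAN header
inner_eth=14     # the encapsulated (inner) Ethernet header

overhead=$(( outer_ip + outer_udp + vxlan_hdr + inner_eth ))

# MTU left for Pod traffic, given the MTU of the physical interface.
pod_mtu() { echo $(( $1 - overhead )); }

echo "VXLAN overhead: $overhead bytes"
echo "Pod MTU on a 1500-byte network: $(pod_mtu 1500)"
```

With a 1500-byte physical MTU this gives 1450, which is why VXLAN interfaces such as flannel.1 typically show an MTU of 1450.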

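② The Calico option

In its native BGP mode, Calico does not use tunnels or NAT for forwarding. It treats each host as a router on the Internet, synchronizes routes with BGP, and uses iptables for security policy to forward traffic across hosts. This direct-routing approach has the lowest performance cost because packets are not modified. In complex networks, however, the routing tables become complex too, which places higher demands on the operations team.

- Main Calico components:

  - Calico CNI plugin: integrates with Kubernetes and is called by kubelet.

  - Felix: maintains the routing rules, the FIB (forwarding information base), and related state on the host.

  - BIRD: distributes routes, much like a router.

  - Confd: the configuration management component.

- How Calico works: Calico maintains Pod connectivity through routing tables. Its CNI plugin sets up a veth pair for each container and places the other end in the host network namespace. Because there is no bridge, the CNI plugin also has to add a route on the host for each container's veth device so that incoming IP packets can be received. With the veth pair in place, an IP packet sent by a container reaches the host, which forwards it to the correct gateway according to the next hop in its routing rules. The packet then reaches the target host and finally the target container. Felix maintains these routing rules, and the routing information itself is distributed by the Calico BIRD component over BGP. In effect, Calico treats every node in the cluster as a border router. Together the nodes form a full mesh and exchange routes over BGP. These nodes are called BGP peers.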
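A full mesh means every node peers with every other node, so the number of BGP sessions grows quadratically with the node count. The sketch below illustrates that growth with a hypothetical `mesh_sessions` helper. It gives a sense of why large clusters usually move away from a full mesh, and it is not a Calico command.

```shell
#!/bin/sh
# Number of BGP sessions in a full mesh of N nodes: N * (N - 1) / 2.
mesh_sessions() { echo $(( $1 * ($1 - 1) / 2 )); }

for nodes in 3 10 50 100; do
  echo "$nodes nodes -> $(mesh_sessions "$nodes") BGP sessions"
done
```

For the three-node cluster in this article that is only 3 sessions, while 100 nodes would already need 4950.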

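Choosing between them: flannel and Calico are currently the most commonly used CNI network components. flannel is simpler and has no support for complex network policies. Calico is a capable network management plugin, but that capability usually means its own configuration is more complex. Relatively speaking, flannel suits small and simple clusters, while Calico is the better choice if the cluster is expected to grow, with more devices joining the network and more network policies to configure.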

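③ Installing Calico

# Option 1: apply the manifest directly from the Calico archive

kubectl apply -f https://docs.tigera.io/archive/v3.24/manifests/calico.yaml

# Option 2: download the manifest first so that the Pod CIDR can be edited (note that this URL points to v3.25 while the command above uses v3.24; use one release consistently)

[root@master01 opt]# wget https://docs.tigera.io/archive/v3.25/manifests/calico.yaml

# Edit the file

[root@master01 opt]# vim calico.yaml

# Lines to change (around lines 4601-4602 of the file; uncomment them if they are commented out). The value must match the podSubnet in /tmp/kubeadm-init.yaml:

- name: CALICO_IPV4POOL_CIDR

  value: "10.244.0.0/16"

# Apply the edited manifest

[root@master01 opt]# kubectl apply -f calico.yaml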
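The manual vim edit can also be scripted. The sketch below assumes the two lines appear in the manifest commented out in the form shown in the sample fragment (an assumption about the file layout, so check it against the downloaded calico.yaml before relying on it). `set_calico_pool_cidr` is a hypothetical helper name.

```shell
#!/bin/sh
# Uncomment CALICO_IPV4POOL_CIDR in manifest $1 and set its value to CIDR $2.
set_calico_pool_cidr() {
  sed -i -E \
    -e 's|^([[:space:]]*)# - name: CALICO_IPV4POOL_CIDR|\1- name: CALICO_IPV4POOL_CIDR|' \
    -e '/- name: CALICO_IPV4POOL_CIDR/{n;s|^([[:space:]]*)#   value:|\1  value:|;s|^([[:space:]]*value:).*|\1 "'"$2"'"|;}' \
    "$1"
}

# Demo on a sample fragment instead of the full manifest.
frag=$(mktemp)
cat >"$frag" <<'EOF'
            # - name: CALICO_IPV4POOL_CIDR
            #   value: "192.168.0.0/16"
EOF
set_calico_pool_cidr "$frag" 10.244.0.0/16
set_calico_pool_cidr "$frag" 10.244.0.0/16   # rerunning leaves the result unchanged
cat "$frag"
```

Pass the podSubnet that was actually used for kubeadm init (172.16.0.0/16 for the first configuration file, 10.244.0.0/16 for the second).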

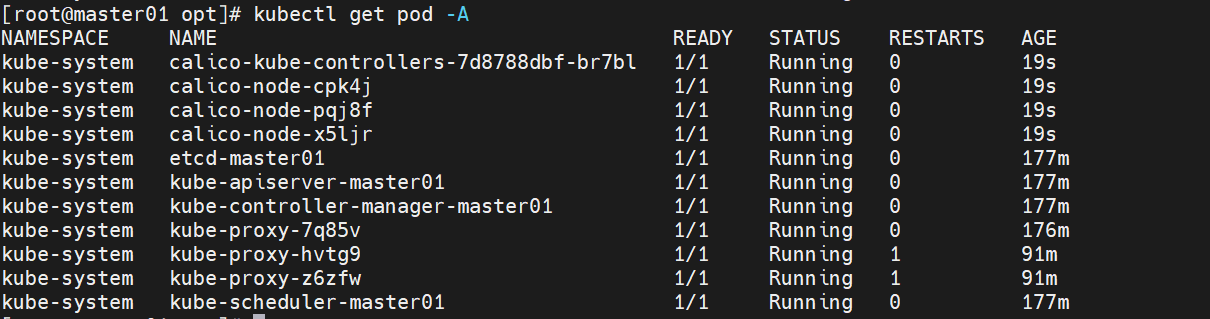

3.5 Check the Pods and nodes again

[root@master01 ~]# kubectl get pod -A

[root@master01 ~]# kubectl get node

Alternatively, load pre-downloaded image archives into containerd's k8s.io namespace with nerdctl (installed in section 3.9), then apply the manifest:

nerdctl -n k8s.io load -i coredns-v1.10.1.tar

nerdctl -n k8s.io load -i calico-cni-v3.24.5.tar

nerdctl -n k8s.io load -i calico-node-v3.24.5.tar

nerdctl -n k8s.io load -i calico-controllers-v3.24.5.tar

kubectl apply -f calico.yaml

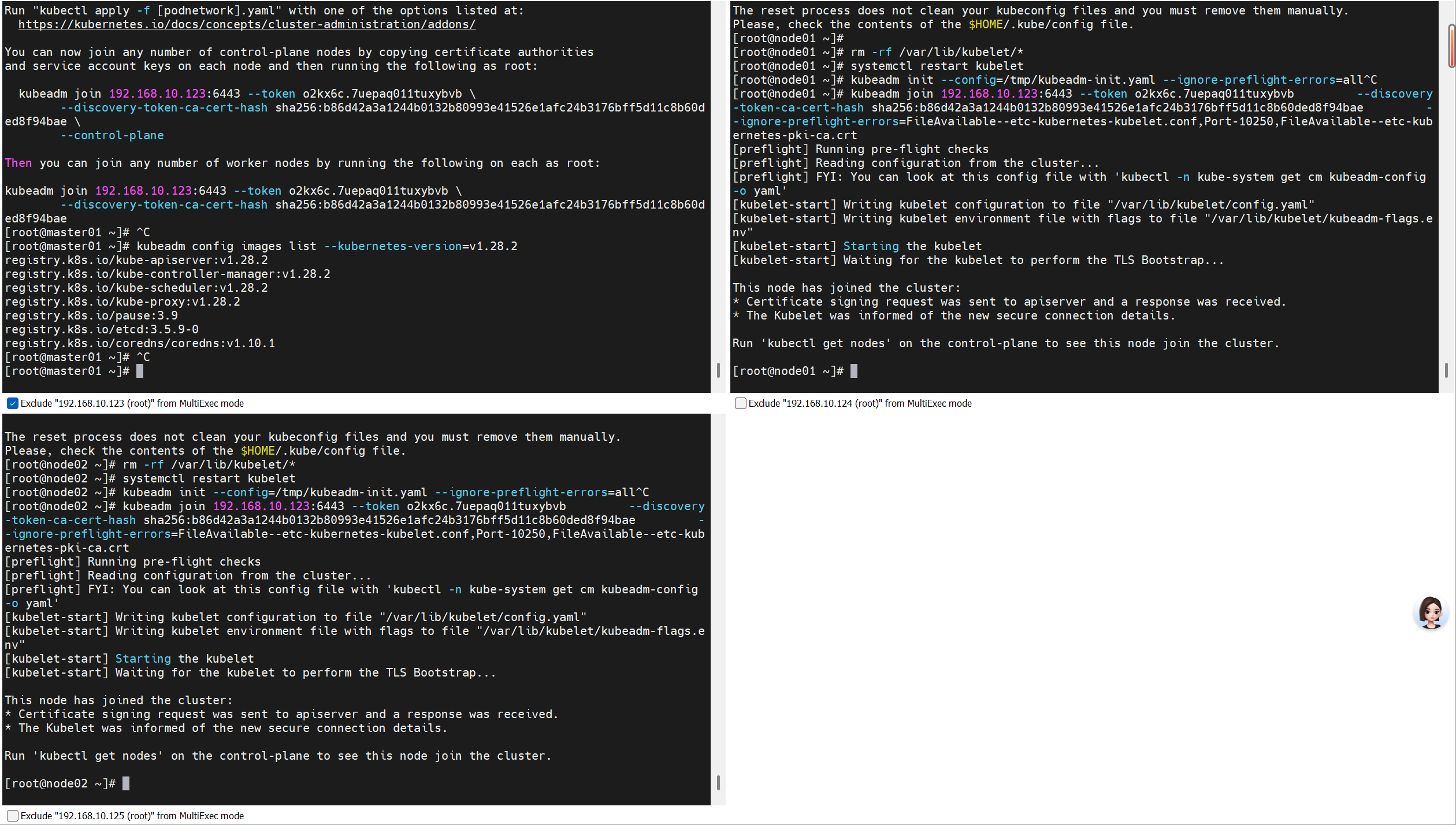

3.6 Join the worker nodes to the Kubernetes cluster

Run on node01:

[root@node01 ~]# kubeadm join 192.168.10.123:6443 --token kq3r2z.fmyp8jp31m74w6sq \

--discovery-token-ca-cert-hash sha256:babe339bb09fe24d1d794be3d91cffeebb40f859ceb104ca286f9121412972de

Run on node02:

[root@node02 ~]# kubeadm join 192.168.10.123:6443 --token kq3r2z.fmyp8jp31m74w6sq \

--discovery-token-ca-cert-hash sha256:babe339bb09fe24d1d794be3d91cffeebb40f859ceb104ca286f9121412972de
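A mistyped token or hash makes kubeadm join fail only after it has contacted the API server, so a quick format check beforehand can save a round trip. The sketch below validates the two values against the bootstrap token format (six characters, a dot, sixteen characters, all lowercase letters or digits) and a SHA-256 hex digest. `valid_join_args` is a hypothetical helper. If the token has expired, a fresh join command can be printed on the control-plane node with `kubeadm token create --print-join-command`.

```shell
#!/bin/sh
# Succeed when $1 looks like a bootstrap token and $2 like a sha256 CA hash.
valid_join_args() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' &&
    printf '%s\n' "$2" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# Values copied from the kubeadm init output in section 3.1.2.
token=kq3r2z.fmyp8jp31m74w6sq
hash=sha256:babe339bb09fe24d1d794be3d91cffeebb40f859ceb104ca286f9121412972de

if valid_join_args "$token" "$hash"; then
  echo "join arguments look well-formed"
else
  echo "check the token and hash for copy errors" >&2
fi
```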

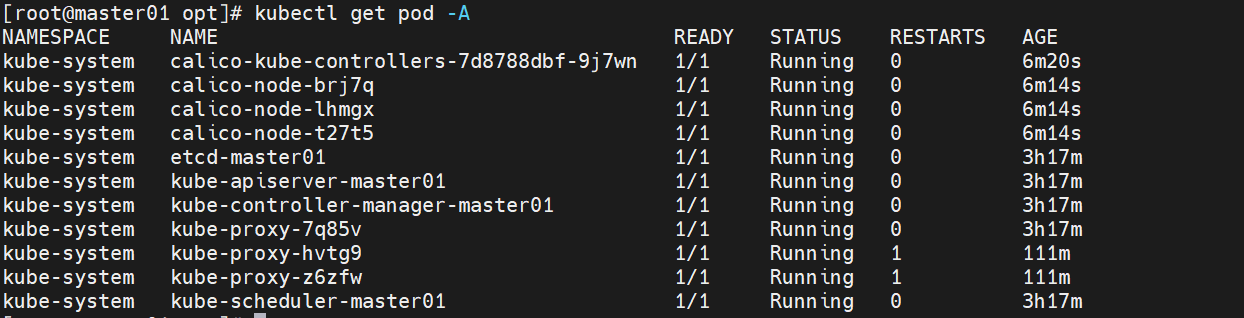

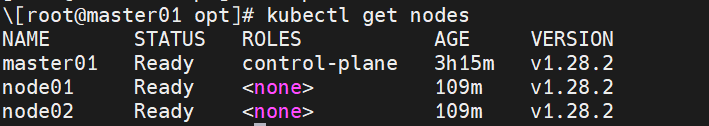

3.7 Check the nodes again

kubectl get pod -A

kubectl get nodes

If every Pod listed by kubectl get pod -A is Running but node01 and node02 still show NotReady in kubectl get nodes, restart containerd and kubelet on node01 and node02:

systemctl restart containerd

systemctl restart kubelet

3.8 kubectl command completion

yum -y install bash-completion

echo "source <(kubectl completion bash)" >> /etc/profile

source /etc/profile

3.9 Install the nerdctl management tool

Install nerdctl on all nodes:

[root@master01 opt]# yum -y install wget

[root@master01 opt]# wget -q -c https://github.com/containerd/nerdctl/releases/download/v1.7.0/nerdctl-1.7.0-linux-amd64.tar.gz

[root@master01 opt]# tar xf nerdctl-1.7.0-linux-amd64.tar.gz -C /usr/local/bin

# List the containers in the k8s.io namespace

[root@master01 opt]# nerdctl -n k8s.io ps

# Enable command completion permanently

echo "source <(nerdctl completion bash)" >> ~/.bashrc

source ~/.bashrc

# Test (can be run on any node)

nerdctl run -d --name nginx -p 8080:80 nginx:alpine

4. Install kubernetes-dashboard

The official Dashboard manifest does not expose the service through a NodePort, so download the YAML file locally and add a NodePort to the Service.

4.1 下载配置文件

[root@master01 opt]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

curl -k -o recommended.yaml https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

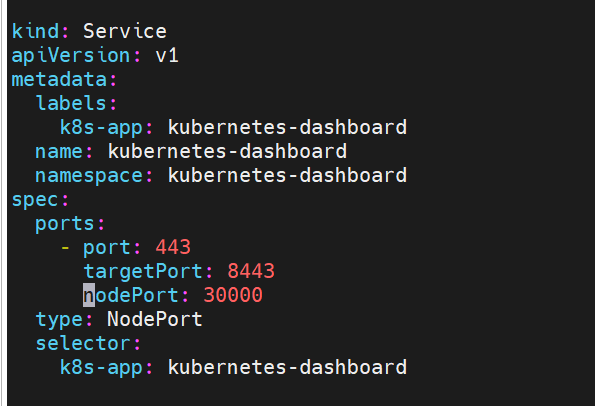

4.2 修改配置文件

[root@master01 opt]#vim recommended.yaml

# 或者使用以下命令直接创建修改后的部署

kubectl apply -f - <<EOF

apiVersion: v1

kind: Namespace

metadata:
  name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

---

apiVersion: v1

kind: Service

metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30000  # changed
  type: NodePort  # changed
  selector:
    k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard

type: Opaque

data:
  csrf: ""

---

apiVersion: v1

kind: Secret

metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: ConfigMap

metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
    # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
    # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["get"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

apiVersion: apps/v1

kind: Deployment

metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.7.0
          imagePullPolicy: IfNotPresent  # key change
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

apiVersion: v1

kind: Service

metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper

---

apiVersion: apps/v1

kind: Deployment

metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.8
          imagePullPolicy: IfNotPresent  # key change
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /
              port: 8000
              scheme: HTTP
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

EOF

# Or, if recommended.yaml was edited by hand, apply that file instead

[root@master01 opt]# kubectl apply -f recommended.yaml

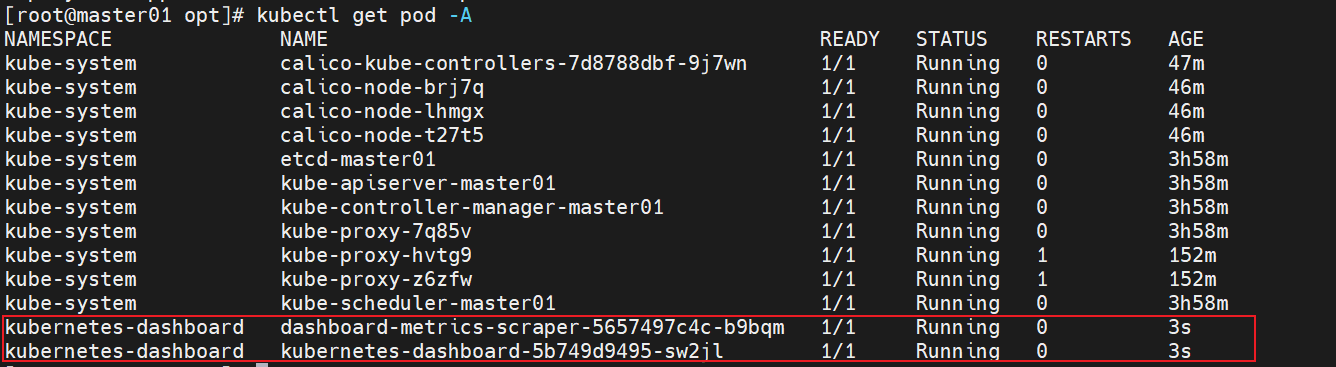

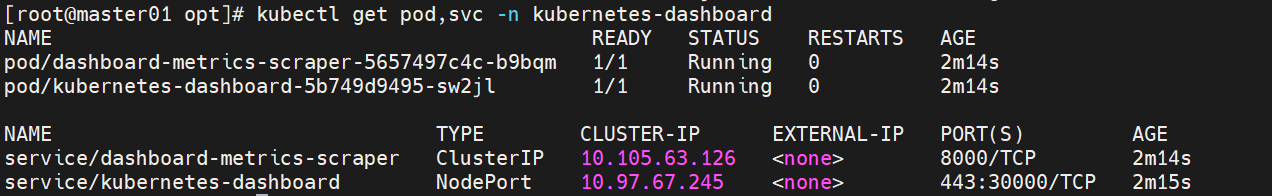

4.3 Check the Pods and Services

[root@master01 ~]# kubectl get pod,svc -n kubernetes-dashboard

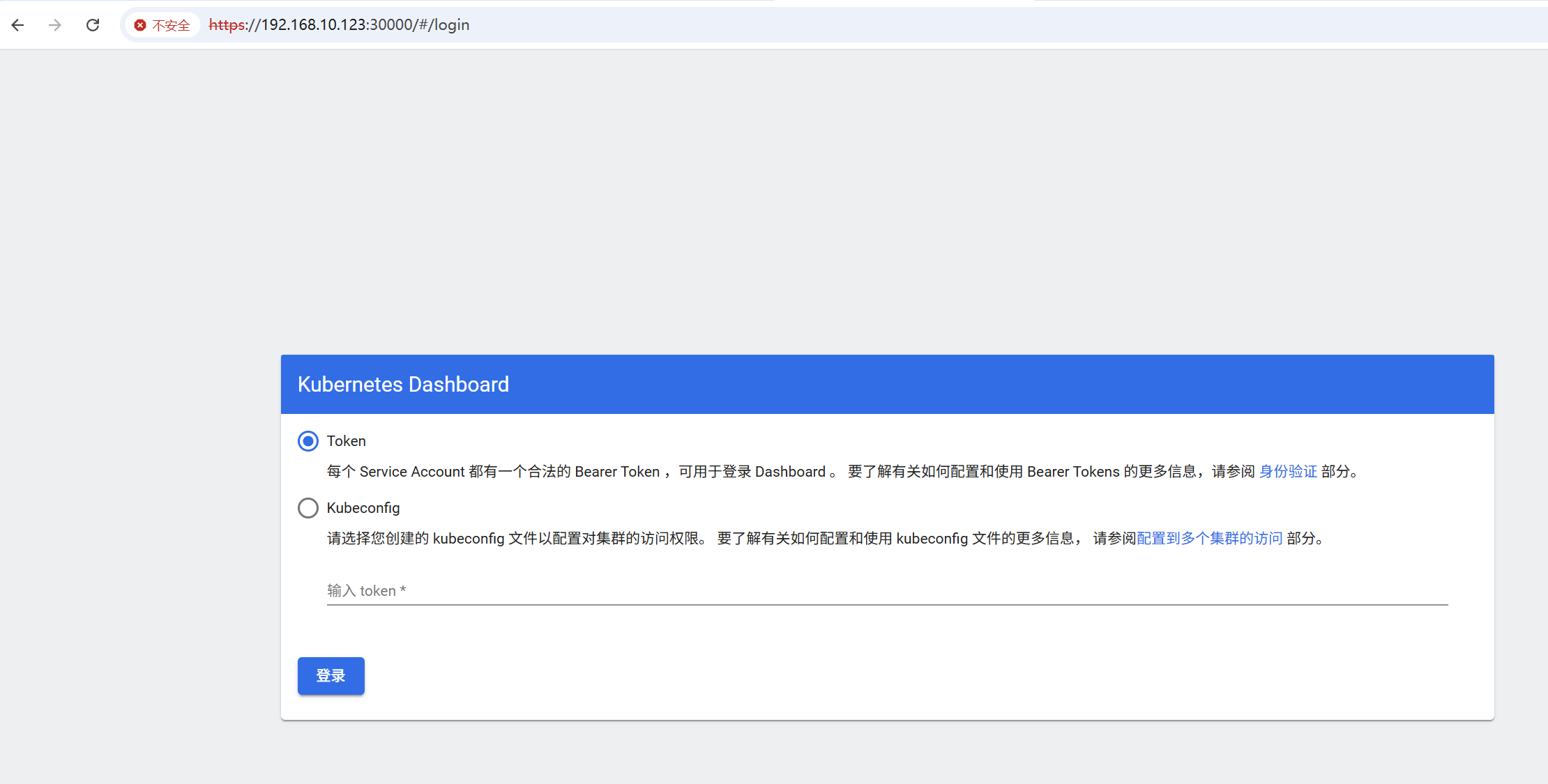

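4.4 Open the Dashboard page

Open https://192.168.10.123:30000/ in a browser. On the first visit the browser warns that "Your connection is not private". Click "Proceed to 192.168.10.123 (unsafe)" to reach the login page, which supports two login methods: "Token" and "Kubeconfig".

4.5 Create a user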

[root@master01 opt]# vim dashboard-admin.yaml

apiVersion: v1

kind: ServiceAccount

metadata:
  name: admin
  namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:
  name: admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin
    namespace: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:
  name: kubernetes-dashboard-admin
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: "admin"

type: kubernetes.io/service-account-token

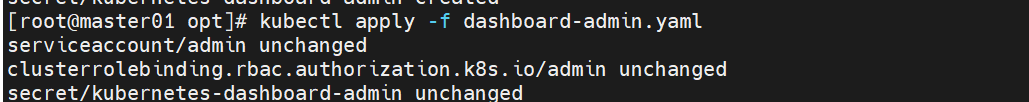

[root@master01 opt]# kubectl apply -f dashboard-admin.yaml

4.6 Create a token

[root@master01 opt]# kubectl -n kubernetes-dashboard create token admin

eyJhbGciOiJSUzI1NiIsImtpZCI6InFaUjIwY3NNSzlRXzZDc0NDcG9IYzBYWkU5R09qbl9SZXlSTDdJek1fNVUifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNzYwMDIzOTM1LCJpYXQiOjE3NjAwMjAzMzUsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbiIsInVpZCI6IjgwNDFjNWI3LWQ2MWEtNDg4Ni1hMjY5LTg0Zjc3OTYzZDRlNCJ9fSwibmJmIjoxNzYwMDIwMzM1LCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4ifQ.oxxLTRhlxuoZzs-AMEvIQUcWTSa9f-Ba2e8CiELV5vMIGmmawiXjB8cqjD3mKY26Kmif6h6Uj_kaHvWboRDNYgCxTCVhJOD55xavUW3IUYIUPyDEsqY3XNUfsLhciAu0GHJzPw3BMYSuXr7xLdsHon8n1D0DFvXm5byeZegyfLYmz_SCrpWl_rCHWGkcV5GlHTlveM_OpDgQgGwGjowQNQDfb1AzQ9xhyrnuEsDgFbnDX-E7fkzSbzNc7Zw7ZZp1eMTY4n9qUyG_WU8XsTiae2gqI9NrucMcUVDBmaeLYsEgia5qQXzkC2HiW4ohPyISfVcND8UBUzIknSL60oWeug

4.7 Retrieve the token

[root@master01 opt]# Token=$(kubectl -n kubernetes-dashboard get secret |awk '/kubernetes-dashboard-admin/ {print $1}')

[root@master01 opt]# kubectl describe secrets -n kubernetes-dashboard ${Token} |grep token |awk 'NR==NF {print $2}'

eyJhbGciOiJSUzI1NiIsImtpZCI6InFaUjIwY3NNSzlRXzZDc0NDcG9IYzBYWkU5R09qbl9SZXlSTDdJek1fNVUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjgwNDFjNWI3LWQ2MWEtNDg4Ni1hMjY5LTg0Zjc3OTYzZDRlNCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbiJ9.Gq4lOspaNq02Azoadc_Mwk6UuZNuolCB3DBK3q4wJjbzJmXmGBp44mompXv4QNowxU04S3OL-nibOp4qEj-nwzkl7aeq5ap5bJbQysbB7RrdLa3lij2iJQXWsQXsutx2P4iVJLZpwGLiMr-P6Ts90TrHlfj6_EaP4J5ugsDzENGbEEMPCLtCtCi5bCmMrahRxji7Tq2wNlyDQh-JvtoAbuXi9uE0nLyA6eCvoPind-becZrLZgyBPXCoQNxDchhp_WBkxftK98BYBxumzdm6vaFI6g4SuuDxfw3rnDKDVEa6oExFNRtAQWt86G801EFDBuz9ZwVOoHfn0ioGpfqcXQ
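The two tokens behave differently. Decoding the payload of the token printed in 4.6 shows `exp` 1760023935 and `iat` 1760020335, a lifetime of one hour, whereas the Secret-based token from 4.7 appears to carry no `exp` claim and therefore does not expire on its own. The sketch below shows how such a payload can be inspected. `jwt_payload` and `jwt_lifetime` are hypothetical helpers, and the demo builds a stand-in token from those two claims rather than copying the full token.

```shell
#!/bin/sh
# Decode the payload (second dot-separated segment) of a JWT given as $1.
jwt_payload() (
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')   # base64url -> base64
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do seg="${seg}="; done   # restore padding
  printf '%s' "$seg" | base64 -d
)

# Print the token lifetime in seconds, or a note when there is no expiry.
jwt_lifetime() (
  json=$(jwt_payload "$1")
  exp=$(printf '%s' "$json" | sed -nE 's/.*"exp":([0-9]+).*/\1/p')
  iat=$(printf '%s' "$json" | sed -nE 's/.*"iat":([0-9]+).*/\1/p')
  if [ -n "$exp" ] && [ -n "$iat" ]; then
    echo $(( exp - iat ))
  else
    echo "no exp claim"
  fi
)

# Stand-in token carrying the exp/iat claims decoded from the 4.6 output.
payload='{"exp":1760023935,"iat":1760020335}'
body=$(printf '%s' "$payload" | base64 | tr -d '=\n' | tr '/+' '_-')
token="header.${body}.signature"
echo "lifetime: $(jwt_lifetime "$token") seconds"
```

For real use, pass the full token string from `kubectl -n kubernetes-dashboard create token admin` to `jwt_lifetime`.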

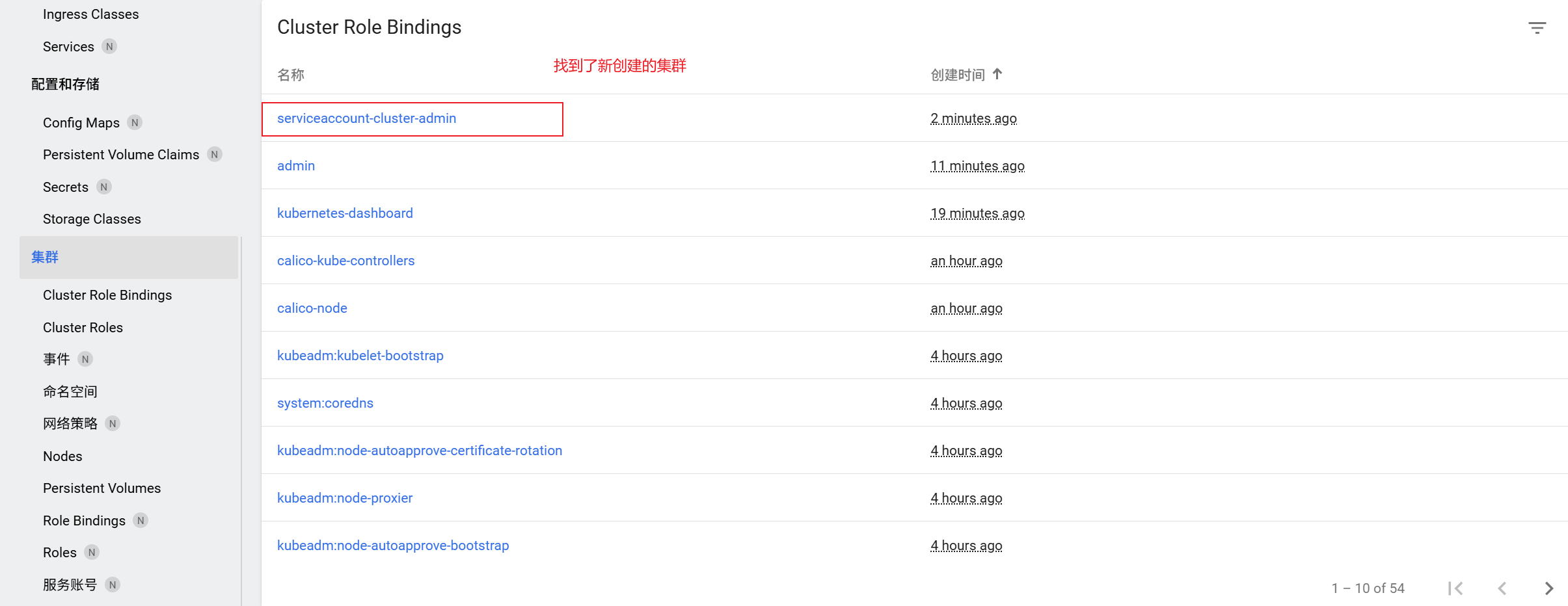

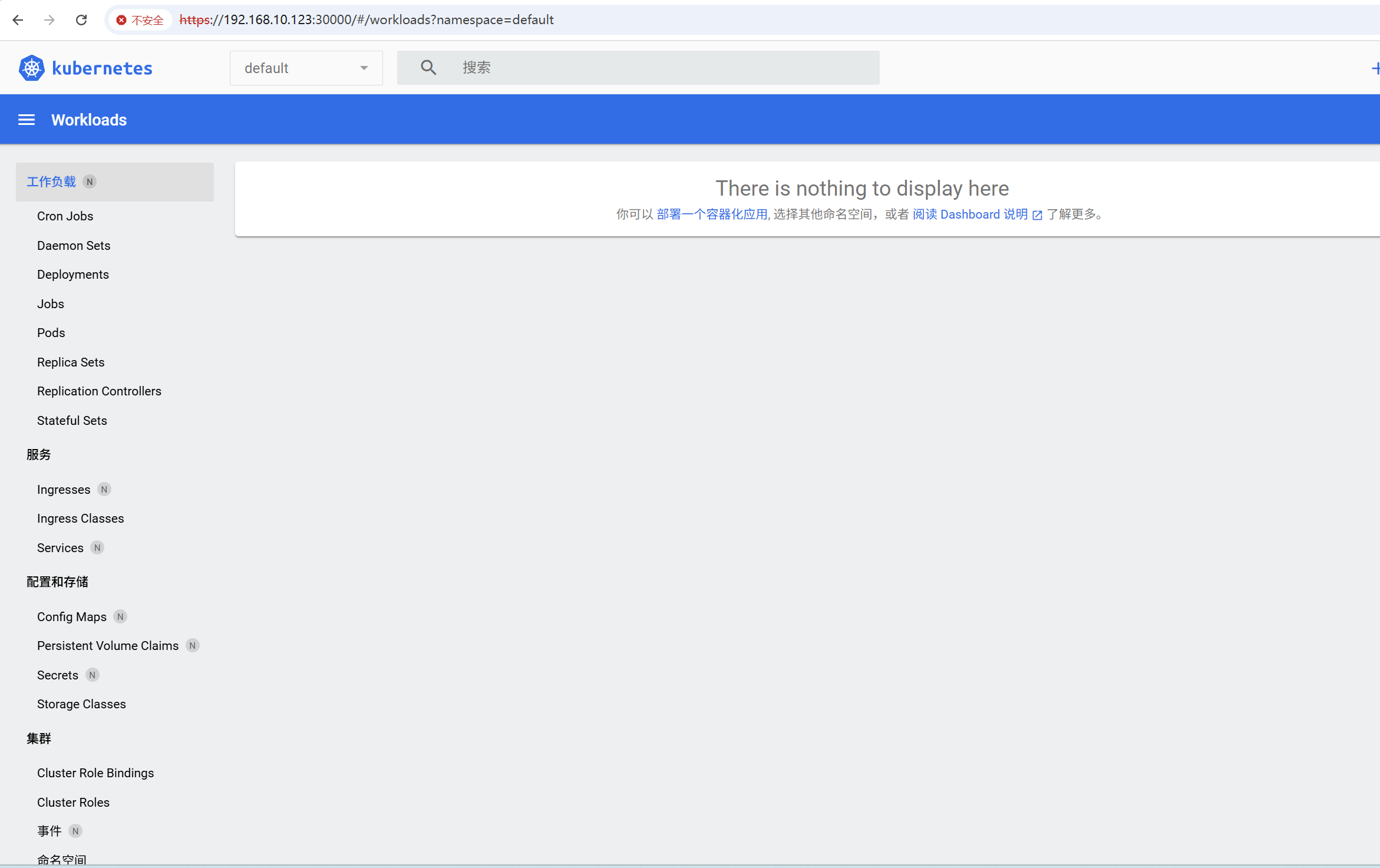

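4.8 Log in to the Dashboard with the token

Paste the retrieved token into the "Enter token" field on the login page and click "Sign in" to reach the Dashboard home page. If the Dashboard then reports that resources cannot be found, or no namespace can be selected, grant access with the following command: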

kubectl create clusterrolebinding serviceaccount-cluster-admin --clusterrole=cluster-admin --user=system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard