Memblock-3

Use memblock before paging_init()

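Even before paging_init() has finished, part of memblock can already be used. For example, you can add memory with memblock_add() and register reserved memory with memblock_reserve(). However, because the kernel's linear mapping is not yet set up, you cannot allocate memory with memblock_alloc()!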

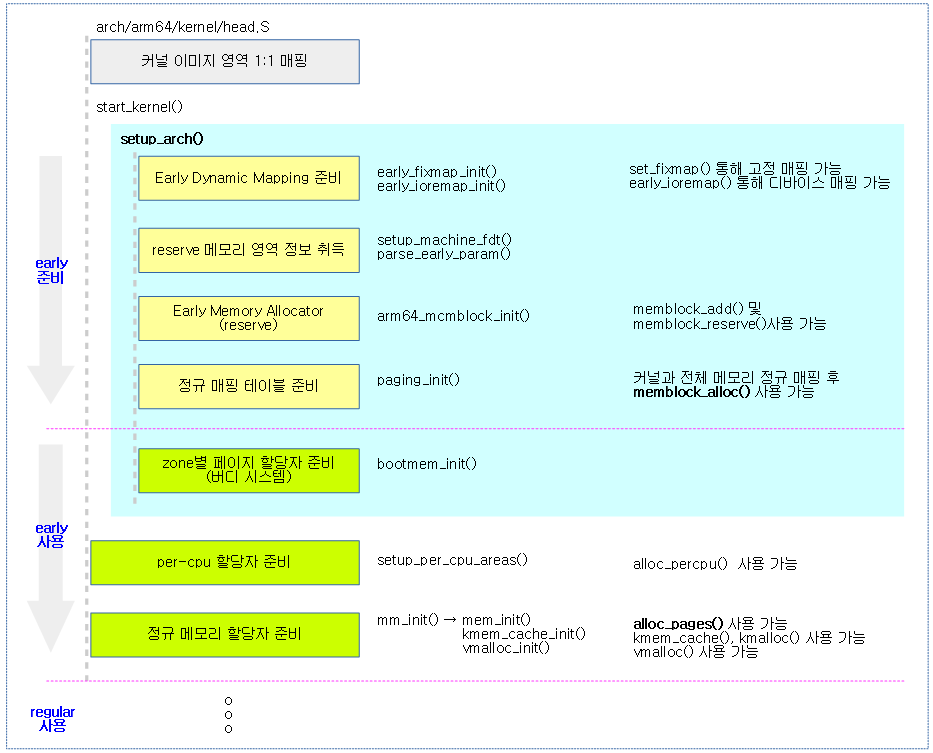

The following figure shows the main memory mapping and the step-by-step state of the allocators before the regular memory allocators are ready.

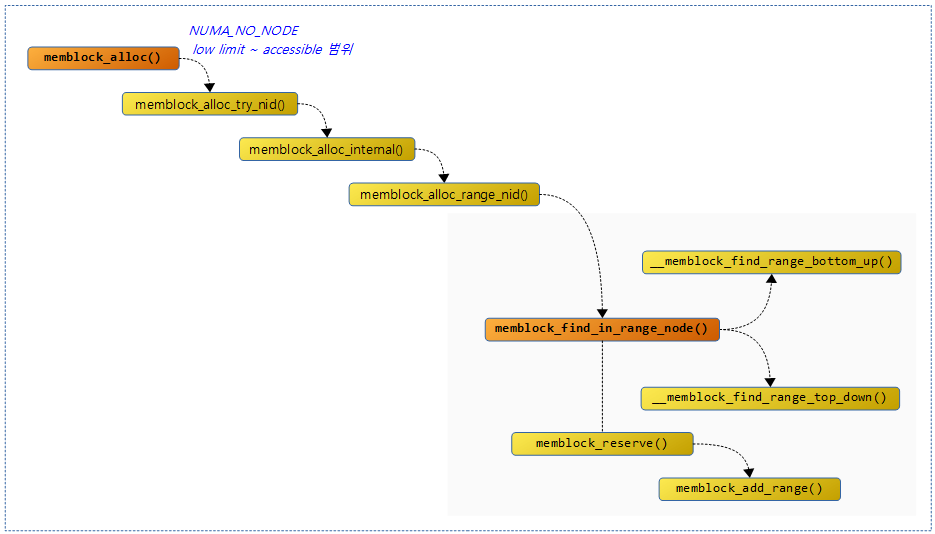

The following figure shows the call chain of memblock_alloc():

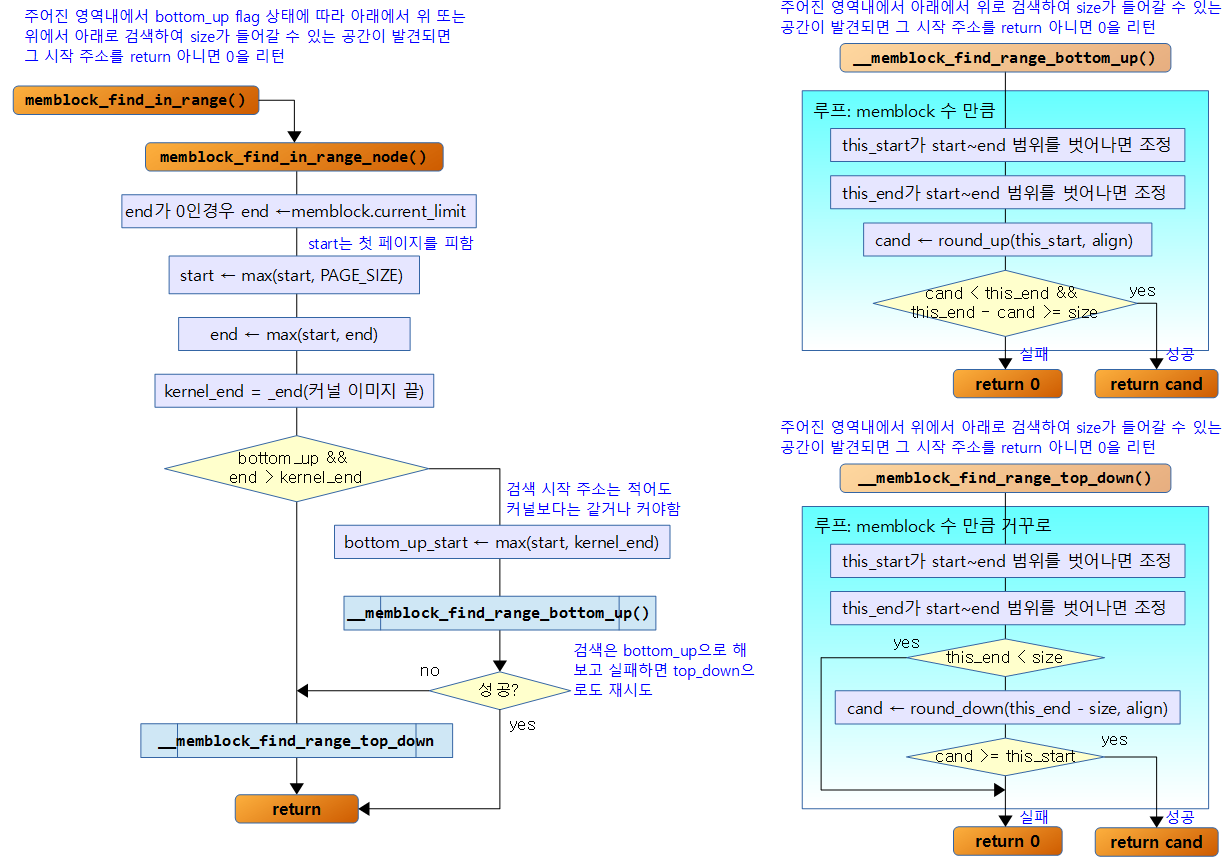

memblock_find_in_range_node()

MEMBLOCK_HOTPLUG

- With the addition of memory hotplug functionality to Linux, a new movable attribute field was added to memory nodes.

- For movable nodes, code was added that migrates all in-use pages on the node to another node when the node is hot-removed.

- The kernel and the memory it uses are placed on nodes that do not support hotplug; these nodes always have the non-movable attribute.

- Currently, large-capacity x86 64-bit servers support memory hotplug. Hotpluggable nodes are at least 16 GB in size and usually sit at higher addresses than the non-movable node that holds the kernel image. Because the kernel allocates memory starting from the top, its allocations land in the highest, movable node first. As a result, when a movable node is removed, a large amount of allocated, in-use memory has to be migrated from it to another node.

- To reduce page migration caused by hotplug, a bottom-up allocation mode (from low to high addresses) was needed. Code was added so that allocation starts at the kernel's location, that is, from the end of the kernel image (_end) upward. Memory allocated this way is very likely to lie on the non-movable node that holds the kernel.

- Unlike CPU hotplug, memory hotplug is not supported on ARM as of kernel v4.4, so memory is allocated top-down there.

- Related kernel options: CONFIG_MEMORY_HOTPLUG, CONFIG_HOTPLUG_CPU

- See also: mm/memblock.c: introduce bottom-up allocation mode
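To make the bottom-up idea concrete, here is a minimal userspace sketch, not the kernel's code. `struct free_range`, `find_bottom_up()` and the `kernel_end` parameter (standing in for the address of `_end`) are hypothetical names, and the free ranges are assumed to be sorted by address. The search walks free ranges from low to high addresses but never goes below the end of the kernel image, and returns 0 when nothing fits.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Simplified free physical range [start, end); hypothetical, not a kernel type. */
struct free_range {
    uint64_t start;
    uint64_t end;
};

static uint64_t clamp_u64(uint64_t v, uint64_t lo, uint64_t hi)
{
    return v < lo ? lo : (v > hi ? hi : v);
}

static uint64_t round_up_u64(uint64_t x, uint64_t align)
{
    return (x + align - 1) & ~(align - 1);
}

/*
 * Bottom-up search: scan free ranges in ascending order, clipped to
 * [max(start, kernel_end), end), and return the lowest align-aligned address
 * with room for size bytes. Returns 0 when nothing fits.
 */
static uint64_t find_bottom_up(const struct free_range *fr, size_t n,
                               uint64_t start, uint64_t end,
                               uint64_t kernel_end,
                               uint64_t size, uint64_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);  /* power of two */
    if (start < kernel_end)
        start = kernel_end;                /* never allocate below _end */

    for (size_t k = 0; k < n; k++) {       /* low to high addresses */
        uint64_t s = clamp_u64(fr[k].start, start, end);
        uint64_t e = clamp_u64(fr[k].end, start, end);
        uint64_t cand = round_up_u64(s, align);

        if (cand < e && e - cand >= size)
            return cand;
    }
    return 0;
}
```

For example, with free ranges [0x00100000, 0x08000000), [0x09000000, 0x40000000) and [0x40000000, 0x80000000) and `kernel_end` = 0x09000000, a 64 KiB request with 4 KiB alignment returns 0x09000000, right above the kernel, rather than an address near the top of memory where a movable node would sit.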

MEMBLOCK_MIRROR

- Some x86 Xeon server systems can mirror memory for higher reliability. If all memory is mirrored, no kernel involvement is needed. If only part of memory is mirrored, the MEMBLOCK_MIRROR flag is set on the corresponding registered memblock memory regions so that the kernel can allocate its own memory from them first. The server's UEFI firmware passes the mirror configuration to the kernel.

Reference: address range mirror (2016), Taku Izumi
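A minimal sketch of the "mirrored memory first" policy, assuming a simplified region array and a hypothetical `REGION_MIRROR` flag in place of MEMBLOCK_MIRROR. Whole regions are matched by size only (no alignment, no partial use) to keep the focus on the flag filter. As far as I know, mainline memblock behaves similarly: when a MEMBLOCK_MIRROR request cannot be satisfied, it prints a warning and retries without the flag.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical flag bit standing in for MEMBLOCK_MIRROR. */
#define REGION_MIRROR 0x1u

/* Simplified memory region; not the kernel's struct memblock_region. */
struct region {
    uint64_t base;
    uint64_t size;
    unsigned int flags;
};

/* Index of the first region that has all bits of want set and is at least
 * size bytes long, or -1 if there is none. */
static int find_region(const struct region *r, size_t n, uint64_t size,
                       unsigned int want)
{
    for (size_t i = 0; i < n; i++)
        if ((r[i].flags & want) == want && r[i].size >= size)
            return (int)i;
    return -1;
}

/* Try mirrored regions first; if none is large enough, retry without the
 * mirror requirement and report the fallback through *fell_back. */
static int alloc_prefer_mirror(const struct region *r, size_t n,
                               uint64_t size, int *fell_back)
{
    assert(fell_back != NULL);
    int idx = find_region(r, n, size, REGION_MIRROR);

    *fell_back = 0;
    if (idx < 0) {
        idx = find_region(r, n, size, 0);  /* any region will do */
        *fell_back = idx >= 0;
    }
    return idx;
}
```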

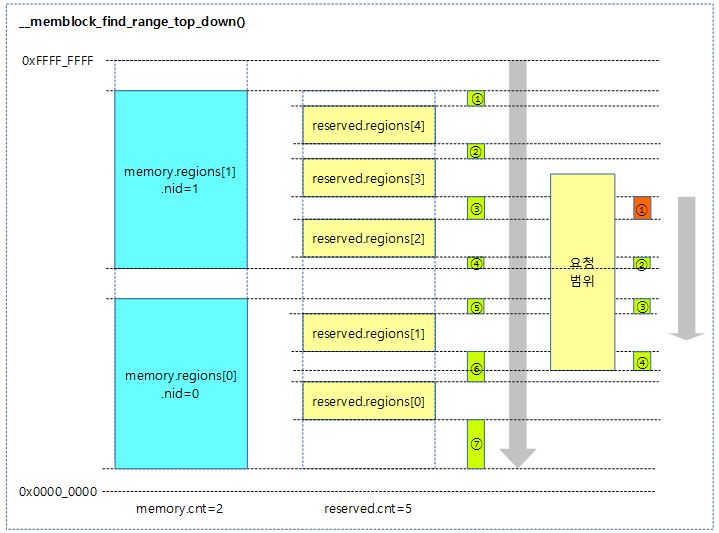

The following figure shows the processing of the memblock_find_in_range() function.

In the figure below, __memblock_find_range_top_down() obtains seven free areas through for_each_free_mem_range_reverse(). Of these, the ones that fall within the start to end range narrow down to four. They are checked in order from 1 to 4, and the address of the first one that can hold the requested size is returned.

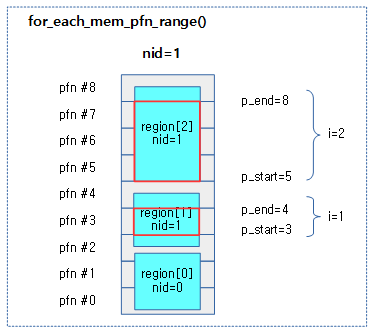
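The top-down search in the figure can be sketched in userspace C as follows. It walks the free ranges from the highest one down, clips each to [start, end), and takes the highest aligned address that still leaves room for the request. `struct free_range` and `find_top_down()` are hypothetical simplifications, the array stands in for what for_each_free_mem_range_reverse() would yield, and 0 signals failure.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Simplified free physical range [start, end); hypothetical, not a kernel type. */
struct free_range {
    uint64_t start;
    uint64_t end;
};

static uint64_t clamp_u64(uint64_t v, uint64_t lo, uint64_t hi)
{
    return v < lo ? lo : (v > hi ? hi : v);
}

/*
 * Top-down search over ranges sorted by address: visit them in reverse,
 * clip to [start, end), and return the highest align-aligned address that
 * leaves room for size bytes. Returns 0 on failure.
 */
static uint64_t find_top_down(const struct free_range *fr, size_t n,
                              uint64_t start, uint64_t end,
                              uint64_t size, uint64_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);  /* power of two */

    for (size_t k = n; k-- > 0; ) {                      /* reverse order */
        uint64_t this_start = clamp_u64(fr[k].start, start, end);
        uint64_t this_end = clamp_u64(fr[k].end, start, end);

        if (this_end < size)
            continue;                                    /* avoid underflow */
        uint64_t cand = (this_end - size) & ~(align - 1); /* round down */
        if (cand >= this_start)
            return cand;
    }
    return 0;
}
```

With free ranges [0x1000, 0x3000), [0x5000, 0x6000), [0x8000, 0xA000) and end = 0x9000, a 0x800-byte request fits in the clipped top range and returns 0x8000, while a 0x1800-byte request only fits in the lowest range and returns 0x1000.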

for_each_mem_pfn_range()

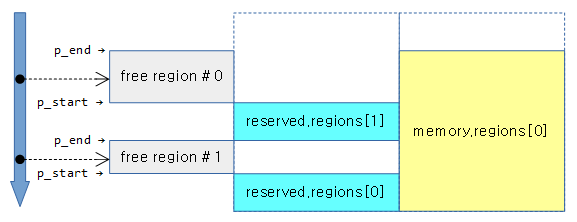

The figure below shows, in red boxes, the cases that match when the for_each_mem_pfn_range() loop runs with nid=1. The values are as follows:

- The memblock area with nid=0 at the very bottom does not match, so there are two matches.

- With i = 1: p_start=3, p_end=4, p_nid=1.

- With i = 2: p_start=5, p_end=8, p_nid=1.

- Real memory memblocks span far more pages than the ones in the figure below and are almost always aligned, so they look quite different; the figure is only meant to illustrate the calculation.

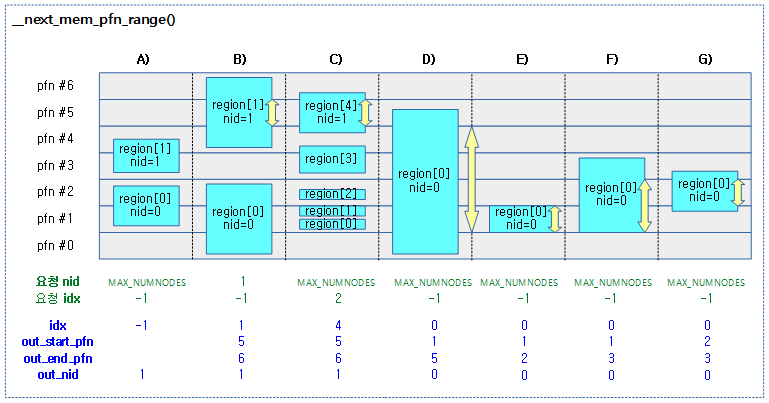

__next_mem_pfn_range()

The figure below shows how __next_mem_pfn_range() searches for the next block that matches the conditions, starting from the requested idx + 1, in each of seven cases. As before, the regions in the figure are much smaller than real memblocks, so use it only to understand the calculation.

- In case A), the region contains no complete, unfragmented page.

- In case B), a node ID is specified.

- @idx: index of the matched memblock area

- @out_start_pfn: first pfn of the non-fragmented pages

- @out_end_pfn: last pfn of the non-fragmented pages + 1

- @out_nid: node id of the matched memblock area
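The search described above, together with the loop around it, can be modelled in userspace as below. This is a simplified sketch: the region array is passed in explicitly instead of being read from memblock.memory, `SKETCH_ANY_NID` (-1) is a hypothetical stand-in for "any node", and `next_pfn_range()`/`for_each_pfn_range()` are hypothetical names. The structure follows the description: start at idx = -1, search from idx + 1, skip regions without a whole page (case A), filter by node (case B), and set idx to -1 when nothing is left.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SKETCH_PAGE_SHIFT 12                    /* assumed 4 KiB pages */
#define SKETCH_PAGE_SIZE  ((uint64_t)1 << SKETCH_PAGE_SHIFT)
#define SKETCH_PFN_UP(x)   (((x) + SKETCH_PAGE_SIZE - 1) >> SKETCH_PAGE_SHIFT)
#define SKETCH_PFN_DOWN(x) ((x) >> SKETCH_PAGE_SHIFT)
#define SKETCH_ANY_NID (-1)                     /* hypothetical "any node" */

struct mem_region {
    uint64_t base;
    uint64_t size;
    int nid;
};

/* Advance *idx to the next region after *idx that holds at least one whole
 * page and belongs to nid; set *idx = -1 when there is none.
 * The output pointers may be NULL. */
static void next_pfn_range(const struct mem_region *r, int cnt, int *idx,
                           int nid, uint64_t *out_start_pfn,
                           uint64_t *out_end_pfn, int *out_nid)
{
    assert(idx != NULL);
    while (++*idx < cnt) {
        const struct mem_region *m = &r[*idx];

        if (SKETCH_PFN_UP(m->base) >= SKETCH_PFN_DOWN(m->base + m->size))
            continue;                           /* case A: fragments only */
        if (nid == SKETCH_ANY_NID || nid == m->nid)
            break;                              /* case B: node matches */
    }
    if (*idx >= cnt) {
        *idx = -1;                              /* no more matches */
        return;
    }
    if (out_start_pfn)
        *out_start_pfn = SKETCH_PFN_UP(r[*idx].base);
    if (out_end_pfn)
        *out_end_pfn = SKETCH_PFN_DOWN(r[*idx].base + r[*idx].size);
    if (out_nid)
        *out_nid = r[*idx].nid;
}

/* Loop wrapper in the style of for_each_mem_pfn_range(). */
#define for_each_pfn_range(r, cnt, i, nid, ps, pe, pn)                       \
    for ((i) = -1, next_pfn_range((r), (cnt), &(i), (nid), (ps), (pe), (pn)); \
         (i) >= 0;                                                            \
         next_pfn_range((r), (cnt), &(i), (nid), (ps), (pe), (pn)))
```

With made-up regions {0x0, 0x2000, nid 0}, {0x2800, 0x2000, nid 1}, {0x5000, 0x3000, nid 1} and {0x9200, 0x400, nid 1}, looping with nid = 1 matches at i = 1 ([3, 4)) and i = 2 ([5, 8)); region 0 is on node 0 and region 3 holds no whole page.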

__next_mem_range()

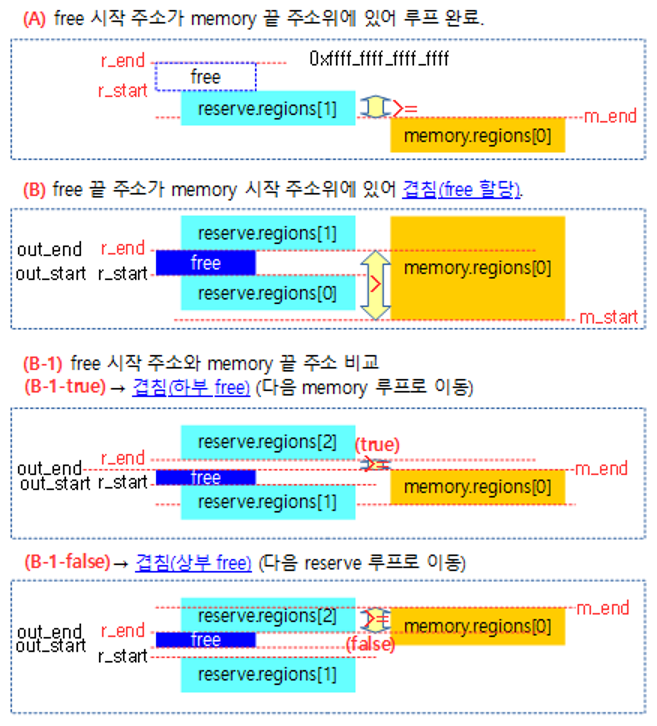

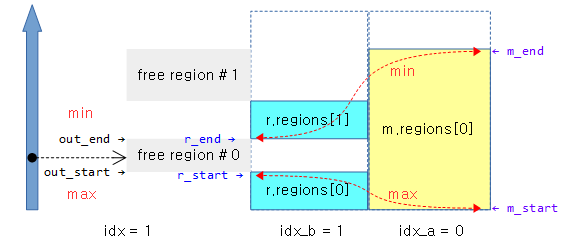

The following figure shows the cases used to compute free areas when the __next_mem_range() function loops to find free areas between reserved areas.

Below is an example of a __next_mem_range(0x1UUL) call when one memory memblock and two reserved memblocks are registered.

- In loop 1, idx_a is 0, so the information from memory.regions[0] is used.

- In loop 2, idx_b is 1, so reserve.regions[1] and the preceding reserved region (reserve.regions[0]) are used.

- For the resulting free area, r_start is the end address of reserve.regions[0] and r_end is the start address of reserve.regions[1].

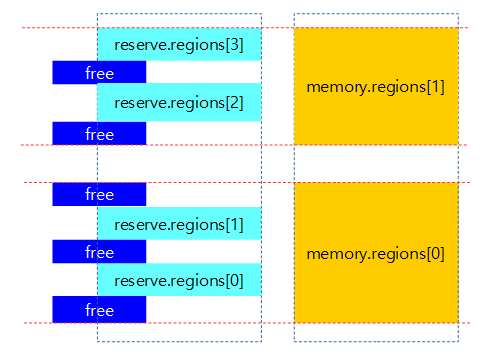

With two memory memblocks and four reserved memblocks registered as below, the figure shows the ranges in which __next_mem_range(0x1UUL) searches for free areas.

As shown below, the parts of the memory regions that are not covered by reserved regions are free areas, and the reverse loop returns two of them.

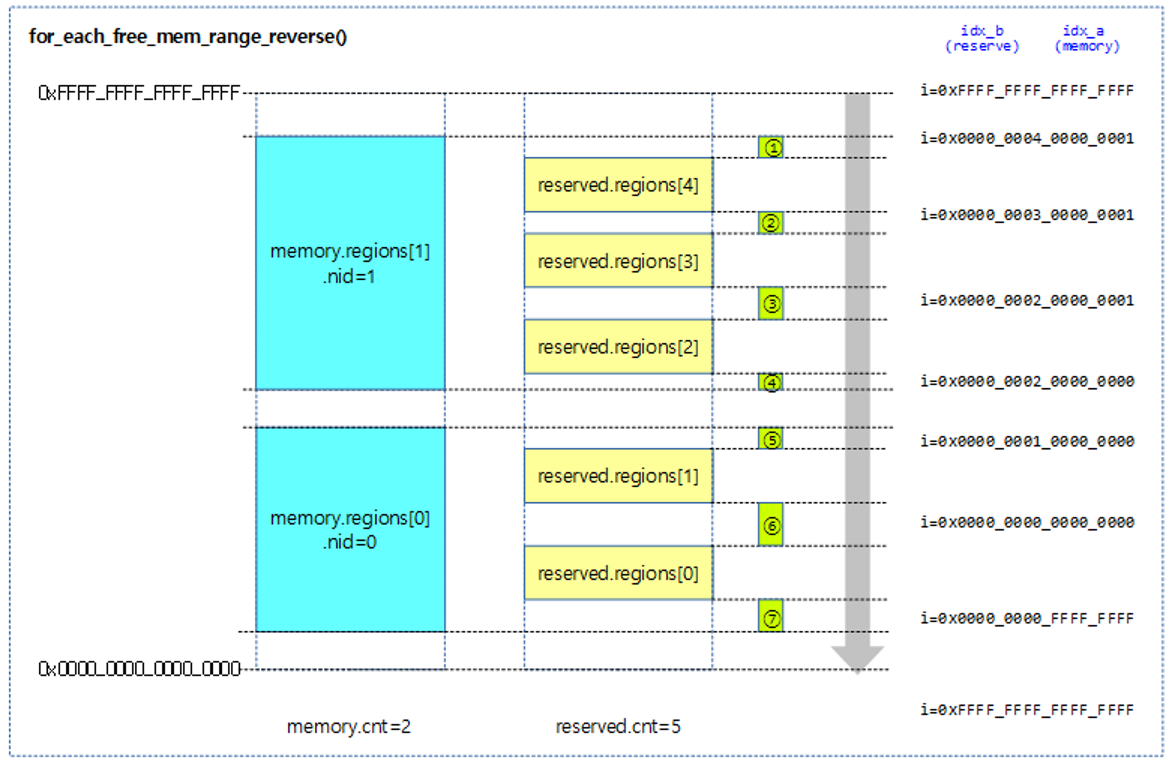

When there are multiple memory regions, the value of i is tracked as follows.

- i starts at 0xffff_ffff_ffff_ffff and changes each time a free area is returned.

- When the loop ends, i is set back to 0xffff_ffff_ffff_ffff.