NFS volumes, the rc, deploy, and ds controllers, how kube-proxy works, and the MetalLB component

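🌟Volume example: NFS

What is NFS

NFS stands for Network File System. It has a client side and a server side, and the server must be deployed separately.

For K8S to use NFS volumes, the NFS packages must be installed on the cluster nodes.

NFS use cases:

- 1. Sharing data between Pods that run on different nodes;

- 2. Storing data across nodes.

Deploying nfs-server on Ubuntu

Install the NFS package on all nodes of the K8S cluster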

apt -y install nfs-kernel-server

Create the shared directory on the server

[root@master231 ~]# mkdir -pv /zhu/data/nfs-server

mkdir: created directory '/zhu/data'

mkdir: created directory '/zhu/data/nfs-server'

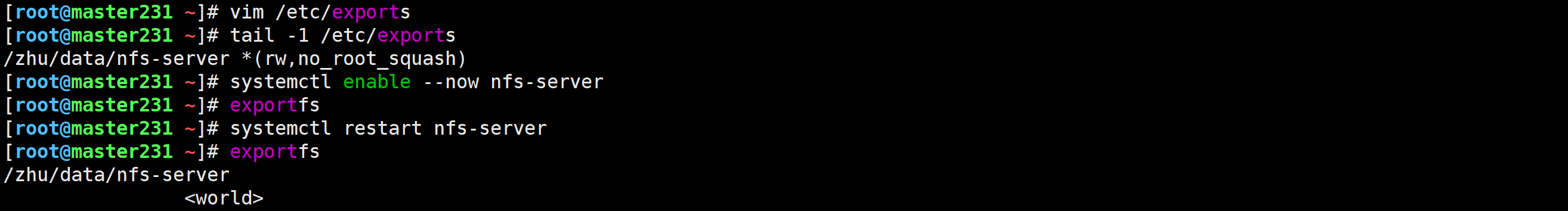

Configure the export and restart the service so it takes effect

[root@master231 ~]# tail -1 /etc/exports

/zhu/data/nfs-server *(rw,no_root_squash)

[root@master231 ~]# systemctl enable --now nfs-server

[root@master231 ~]# exportfs

[root@master231 ~]# systemctl restart nfs-server

[root@master231 ~]# exportfs

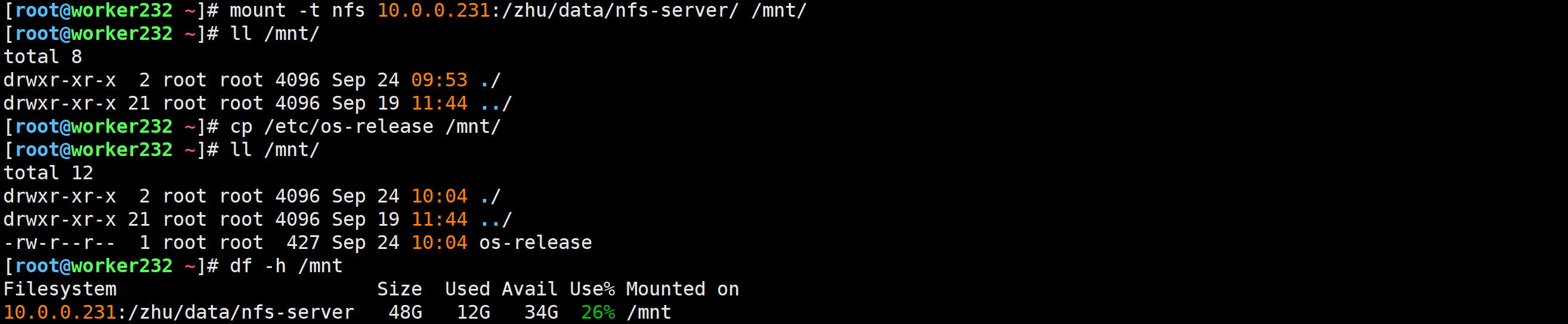
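The export entry above is a single line in /etc/exports. A minimal sketch of adding such a line idempotently, so that re-running a setup script does not duplicate it. The helper name add_export and the scratch file are assumptions made for illustration; on a real server the target would be /etc/exports.

```sh
# add_export FILE ENTRY (hypothetical helper, not part of the NFS tooling):
# append ENTRY to FILE only if that exact line is not already present.
add_export() {
    exports_file=$1
    entry=$2
    # -x: whole-line match, -F: fixed string (no regex), -q: quiet
    if ! grep -qxF -- "$entry" "$exports_file" 2>/dev/null; then
        printf '%s\n' "$entry" >> "$exports_file"
    fi
}

# Try it against a scratch file instead of the real /etc/exports.
demo_file=$(mktemp)
add_export "$demo_file" '/zhu/data/nfs-server *(rw,no_root_squash)'
add_export "$demo_file" '/zhu/data/nfs-server *(rw,no_root_squash)'   # second call changes nothing
cat "$demo_file"
rm -f "$demo_file"
```

After editing the real /etc/exports, running exportfs -r re-exports the entries as an alternative to restarting nfs-server.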

客户端worker232验证测试

[root@worker232 ~]# mount -t nfs 10.0.0.231:/zhu/data/nfs-server/ /mnt/

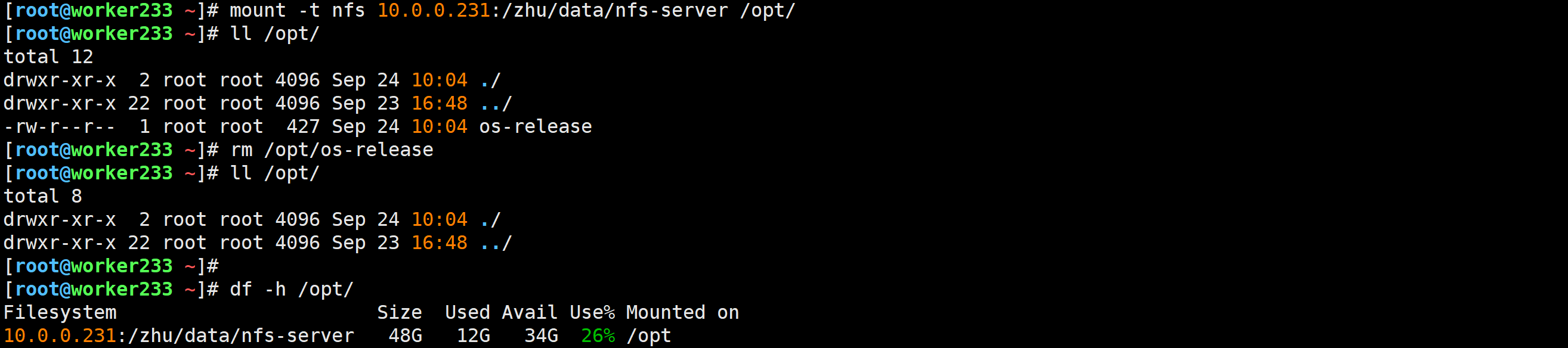

客户端work233验证测试

[root@worker233 ~]# mount -t nfs 10.0.0.231:/zhu/data/nfs-server /opt/

Example: using an NFS volume in K8S

Write the resource manifest

[root@master231 case-demo]# cat ../volumes/06-pods-nfs.yaml

apiVersion: v1
kind: Pod
metadata:
  name: xiuxian-apps-v1
  labels:
    apps: xiuxian
spec:
  volumes:
  - name: data
    # The volume type is nfs
    nfs:
      # Address of the NFS server
      server: 10.0.0.231
      # Path exported by the NFS server
      path: /zhu/data/nfs-server
  nodeName: worker232
  containers:
  - name: c1
    image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
    volumeMounts:
    - name: data
      mountPath: /usr/share/nginx/html/
---
apiVersion: v1
kind: Pod
metadata:
  name: xiuxian-apps-v2
  labels:
    apps: xiuxian
spec:
  volumes:
  - name: data
    nfs:
      server: 10.0.0.231
      path: /zhu/data/nfs-server
  nodeName: worker233
  containers:
  - name: c1
    image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v2
    volumeMounts:
    - name: data
      mountPath: /usr/share/nginx/html/

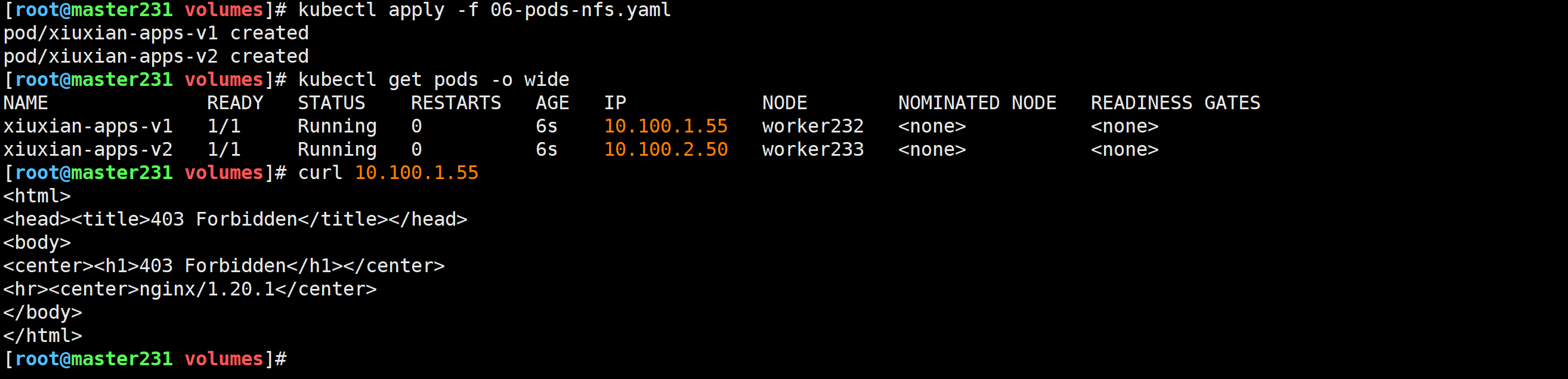

Create the Pods and test

[root@master231 volumes]# kubectl apply -f 06-pods-nfs.yaml

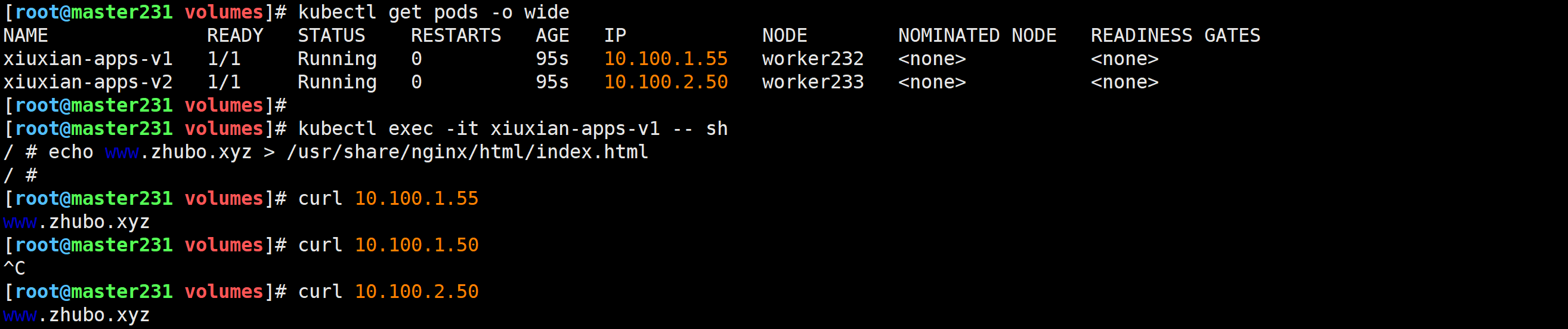

Modify the data from inside a Pod and verify

[root@master231 volumes]# kubectl exec -it xiuxian-apps-v1 -- sh

/ # echo www.zhubo.xyz > /usr/share/nginx/html/index.html

/ #

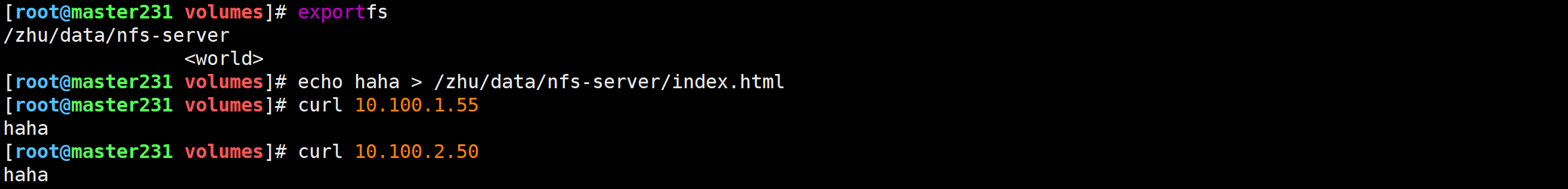

Modify the file on the NFS server and verify

[root@master231 volumes]# echo haha > /zhu/data/nfs-server/index.html

Delete the resources

[root@master231 volumes]# kubectl delete -f 06-pods-nfs.yaml

pod "xiuxian-apps-v1" deleted

pod "xiuxian-apps-v2" deleted

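🌟Deploying WordPress on K8S

Deployment requirements

- MySQL runs on the worker232 node;

- WordPress runs on the worker233 node and must be reachable from the Windows host;

- Verify that after the MySQL and WordPress containers are deleted, no data is lost and recovery takes only seconds.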

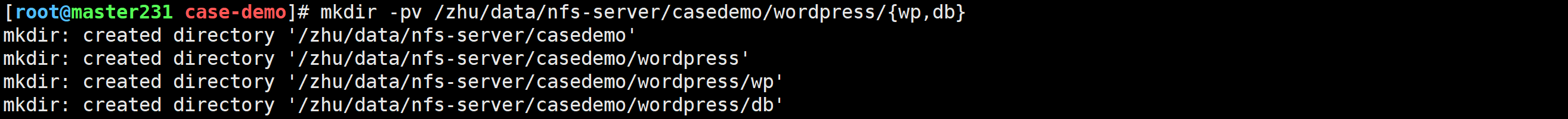

Create the data directories

[root@master231 case-demo]# mkdir -pv /zhu/data/nfs-server/casedemo/wordpress/{wp,db}

Write the resource manifest

[root@master231 case-demo]# cat 02-po-svc-volumes-wordpress.yaml

apiVersion: v1
kind: Pod
metadata:
  name: db
  namespace: default
  labels:
    app: db
spec:
  volumes:
  - name: data
    nfs:
      server: 10.0.0.231
      path: /zhu/data/nfs-server/casedemo/wordpress/db
  nodeName: worker232
  hostNetwork: true
  containers:
  - name: db
    image: harbor250.zhubl.xyz/zhubl-db/mysql:8.0.36-oracle
    volumeMounts:
    - name: data
      mountPath: /var/lib/mysql
    ports:
    - containerPort: 3306
      name: mysql-server
    args:
    - --character-set-server=utf8
    - --collation-server=utf8_bin
    - --default-authentication-plugin=mysql_native_password
    env:
    - name: MYSQL_ALLOW_EMPTY_PASSWORD
      value: "yes"
    - name: MYSQL_DATABASE
      value: "wordpress"
    - name: MYSQL_USER
      value: wordpress
    - name: MYSQL_PASSWORD
      value: wordpress
---
apiVersion: v1
kind: Pod
metadata:
  name: wp
  namespace: default
  labels:
    app: wp
spec:
  volumes:
  - name: data
    nfs:
      server: 10.0.0.231
      path: /zhu/data/nfs-server/casedemo/wordpress/wp
  nodeName: worker233
  containers:
  - name: wp
    image: harbor250.zhubl.xyz/zhubl-wordpress/wordpress:6.7.1-php8.1-apache
    volumeMounts:
    - name: data
      mountPath: /var/www/html
    ports:
    - containerPort: 80
      name: web
    env:
    - name: WORDPRESS_DB_HOST
      value: "10.0.0.232"
    - name: WORDPRESS_DB_NAME
      value: "wordpress"
    - name: WORDPRESS_DB_USER
      value: wordpress
    - name: WORDPRESS_DB_PASSWORD
      value: wordpress
---
apiVersion: v1
kind: Service
metadata:
  name: svc-wp
  namespace: default
spec:
  type: NodePort
  selector:
    app: wp
  ports:
  - port: 80
    targetPort: web
    nodePort: 30080

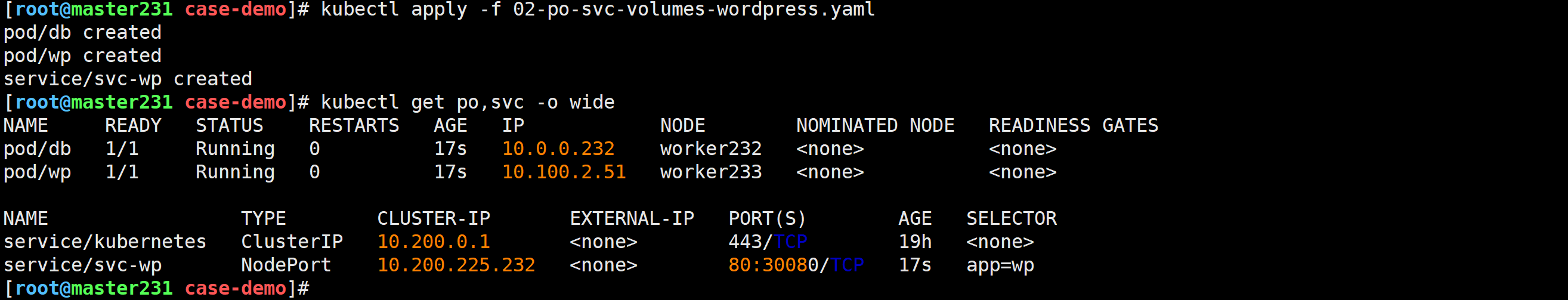

Create the resources

[root@master231 case-demo]# kubectl apply -f 02-po-svc-volumes-wordpress.yaml

pod/db created

pod/wp created

service/svc-wp created

Access the web UI

http://10.0.0.231:30080

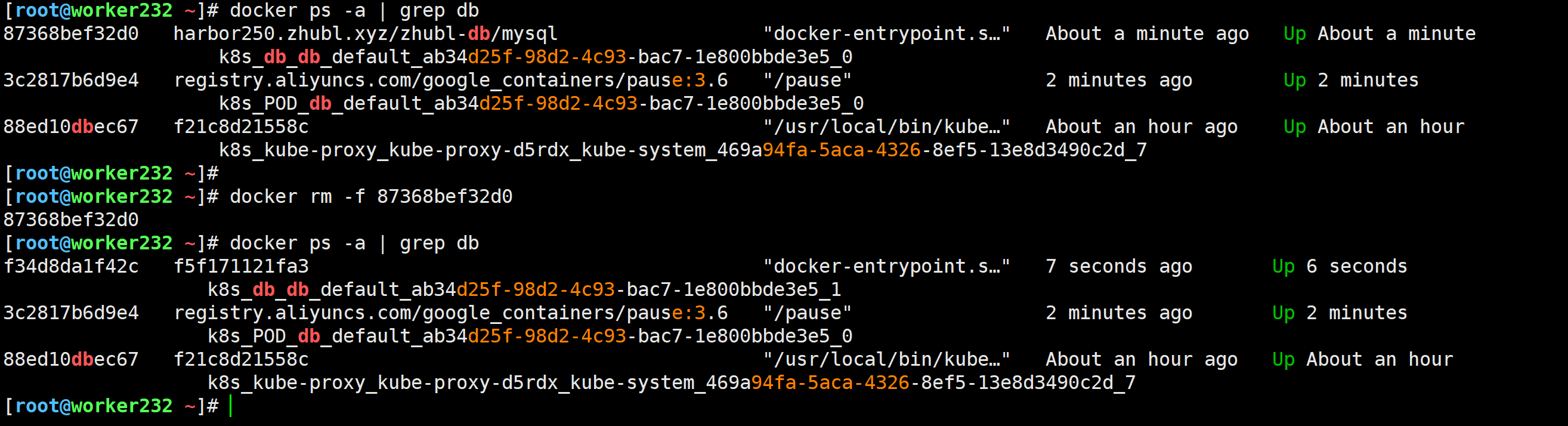

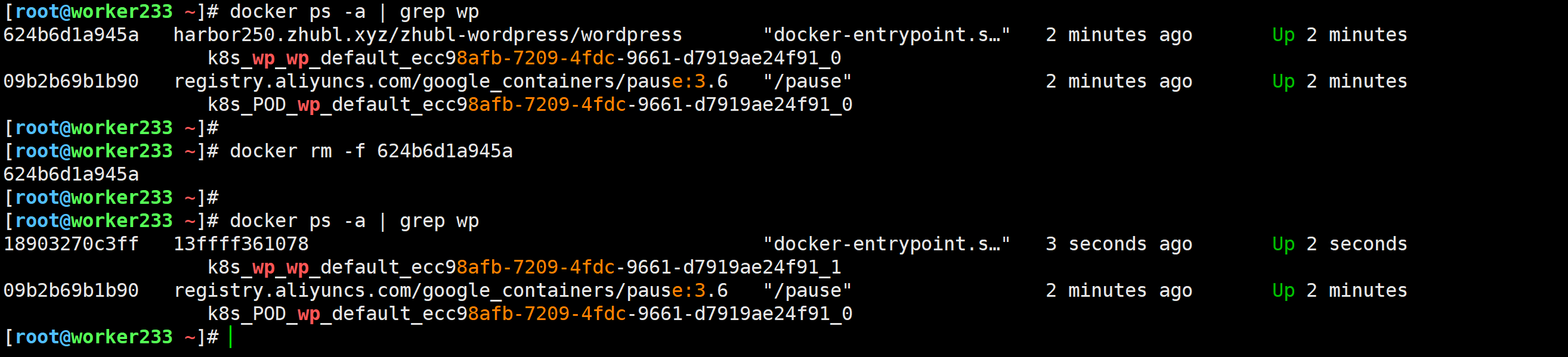

Delete the db and wp containers

[root@worker232 ~]# docker rm -f 87368bef32d0

[root@worker233 ~]# docker rm -f 624b6d1a945a

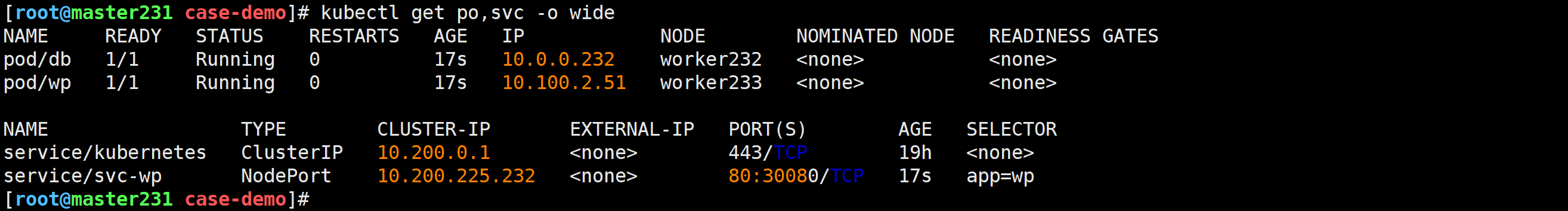

Check whether the Pods restarted

[root@master231 case-demo]# kubectl get po,svc -o wide

Verify whether any data was lost

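🌟The ReplicaSet controller

What is rs

Like rc, rs controls the number of Pod replicas. rs is short for "replicasets".

Compared with rc, rs is lighter-weight and more capable; in particular, its selector supports expression-based matching (matchExpressions) in addition to plain label matching.

Using rs to get the same effect as rc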

[root@master231 replicasets]# cat 01-rs-xiuxian.yaml

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: rs-xiuxian
spec:
  replicas: 3
  # Label selector that associates the controller with Pods
  selector:
    # Match on exact labels
    matchLabels:
      app: xiuxian
  template:
    metadata:
      labels:
        app: xiuxian
        version: v1
    spec:
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1

Create the resource

[root@master231 replicasets]# kubectl apply -f 01-rs-xiuxian.yaml

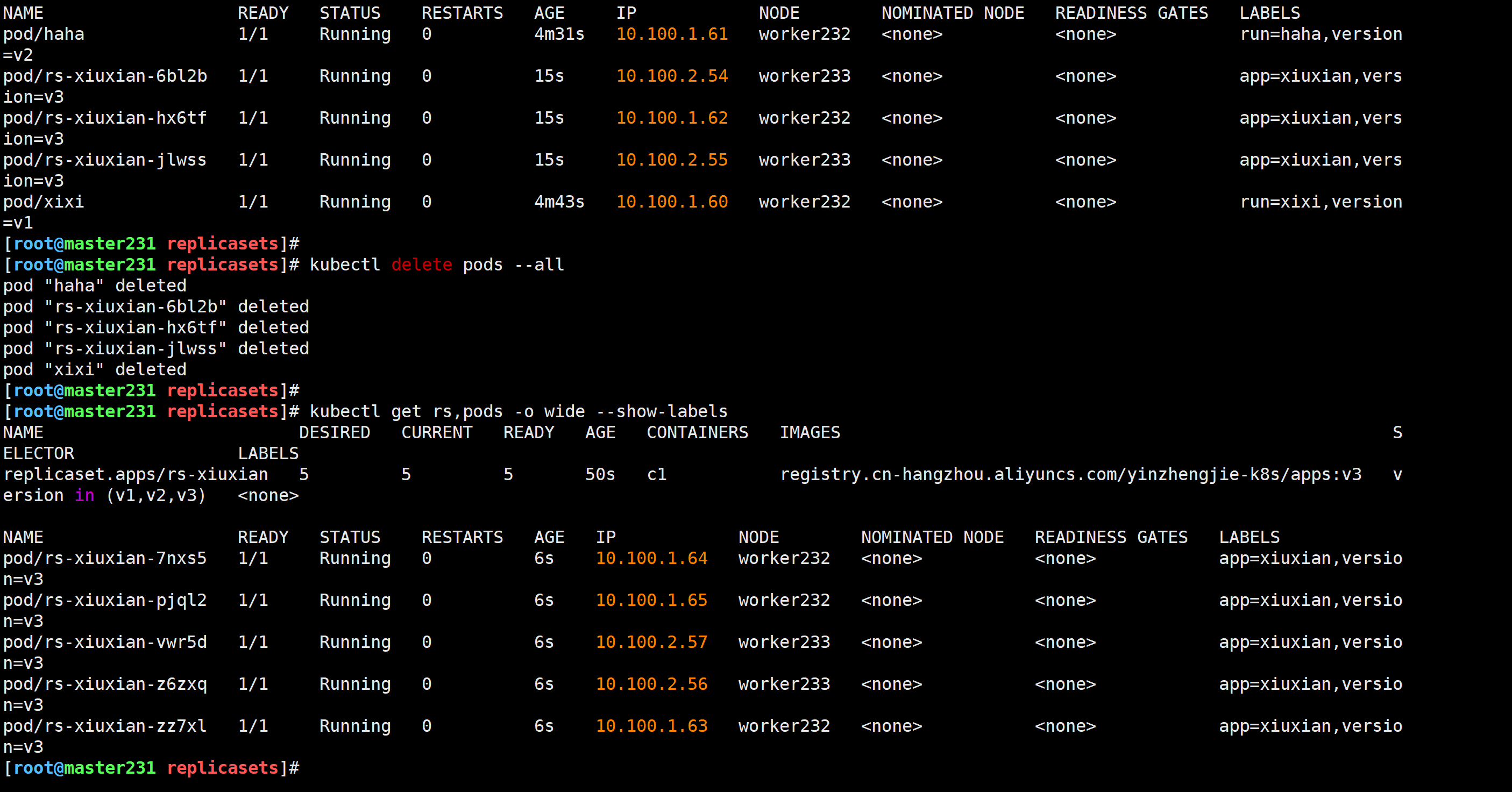

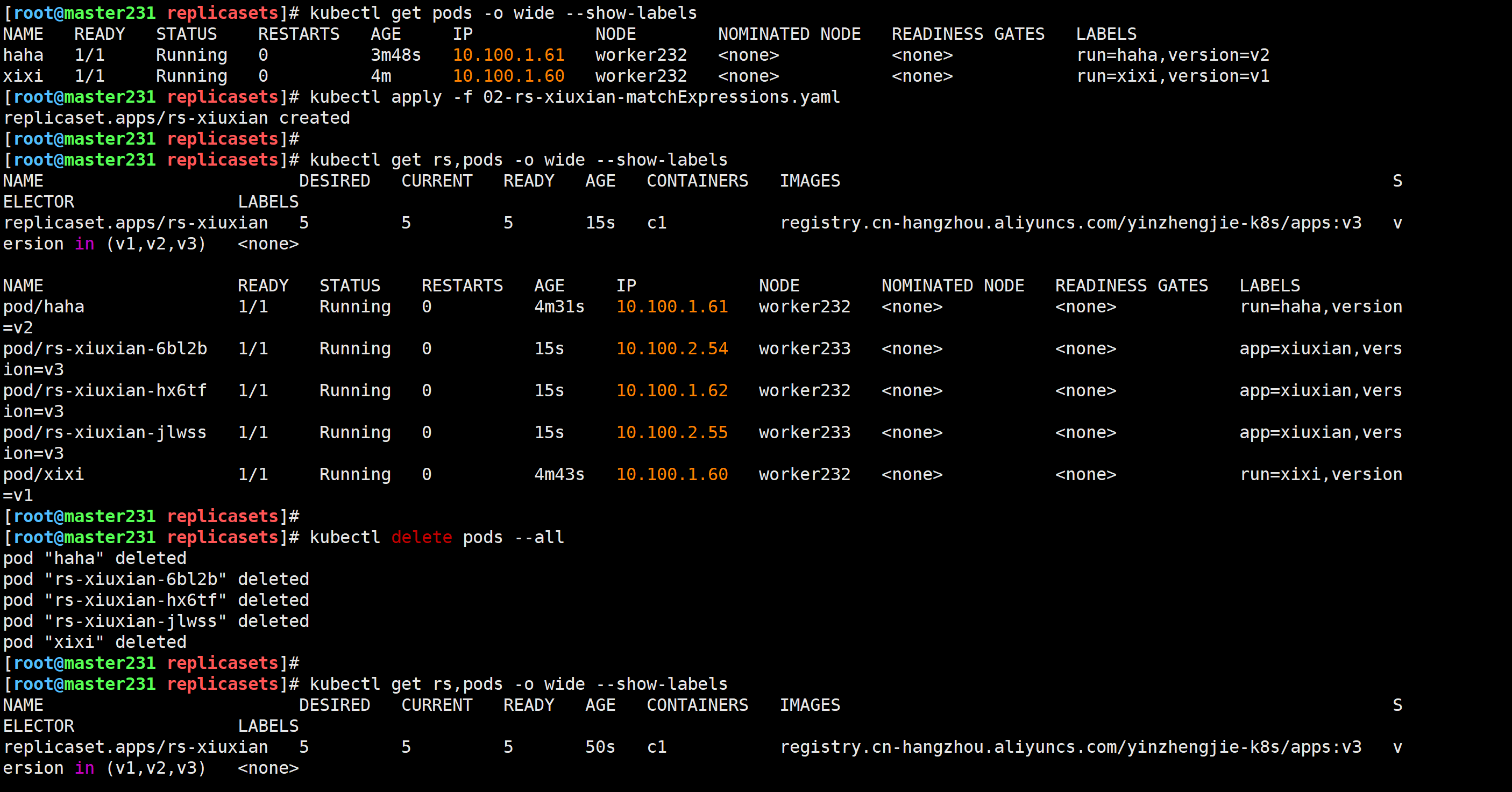

Where rs does better than rc

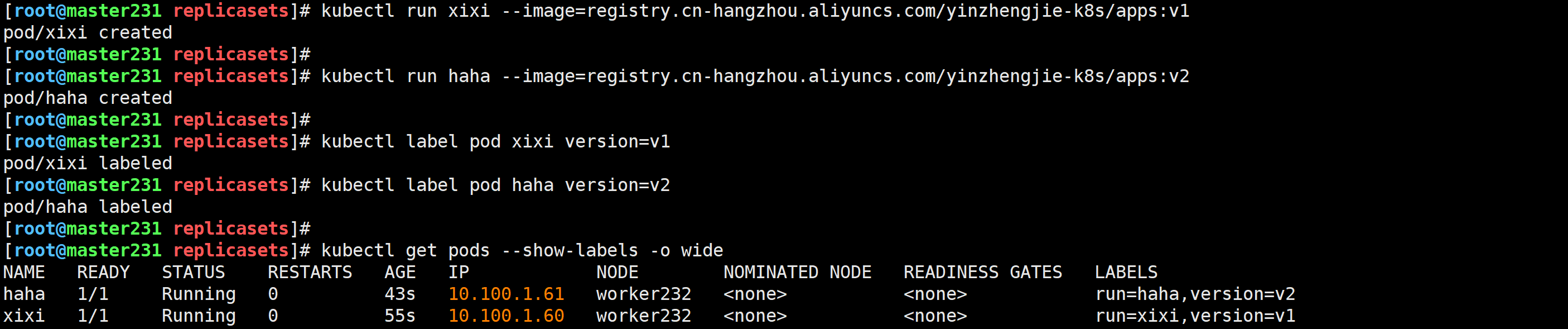

Create test Pods

[root@master231 replicasets]# kubectl run xixi --image=registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1

[root@master231 replicasets]# kubectl run haha --image=registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v2

Write the resource manifest

[root@master231 replicasets]# cat 02-rs-xiuxian-matchExpressions.yaml

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: rs-xiuxian
spec:
  replicas: 5
  selector:
    # Match using label expressions
    matchExpressions:
    - key: version
      values:
      - v1
      - v2
      - v3
      # Relationship between key and values. Valid operators: In, NotIn, Exists, DoesNotExist
      # In: the key's value must be one of the entries in the values list.
      # NotIn: the opposite of In.
      # Exists: the key only has to exist; values must be left empty.
      # DoesNotExist: the key must not exist; values must be left empty.
      operator: In
  template:
    metadata:
      labels:
        app: xiuxian
        version: v3
    spec:
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v3

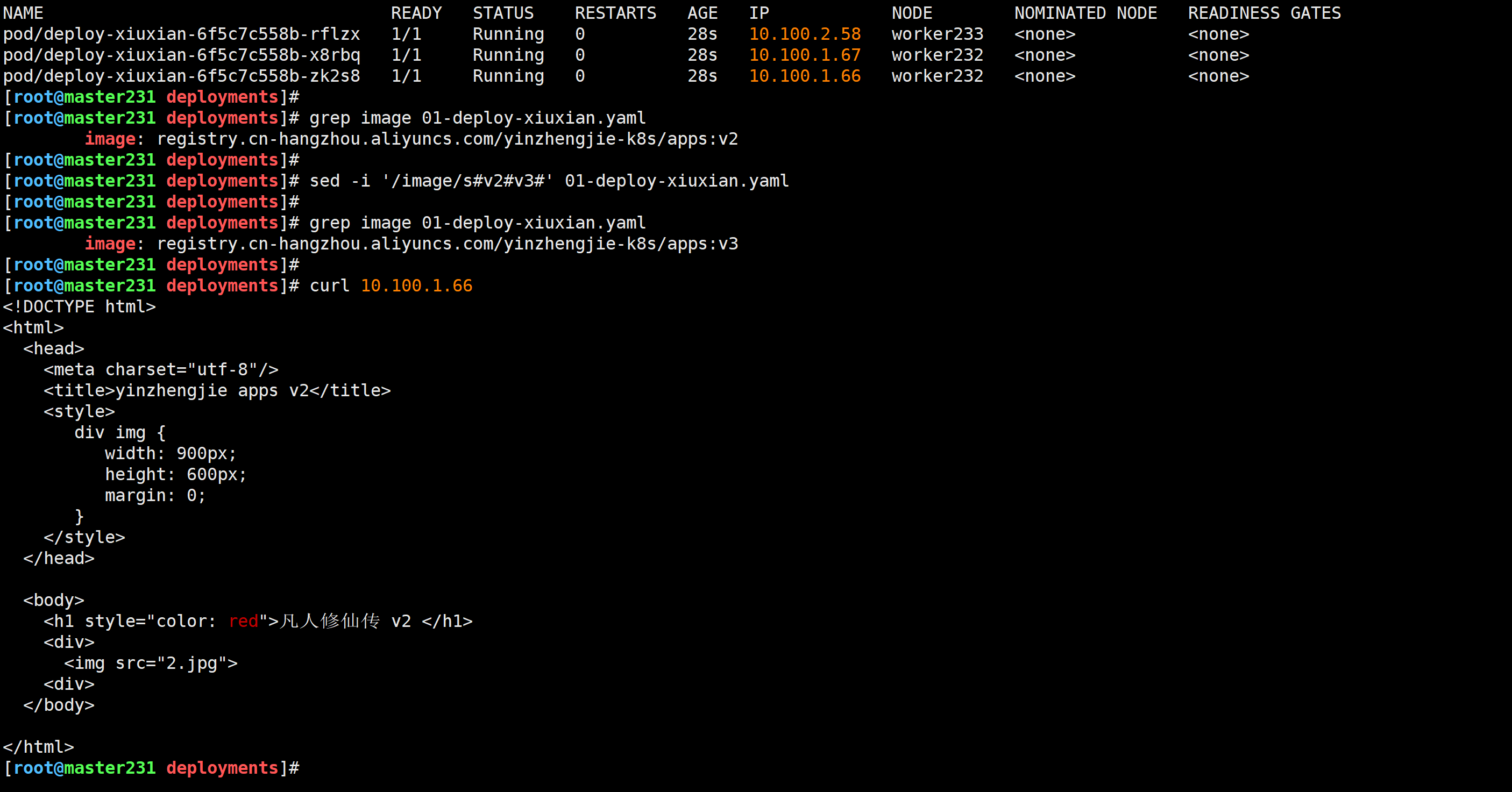
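To make the four operators concrete, here is a small sketch that evaluates one matchExpressions entry against a Pod's labels. It is a plain-shell model of the matching rules, not how the controller is implemented; the function name match_expression and the comma-separated input format are assumptions for illustration.

```sh
# match_expression OPERATOR KEY VALUES LABELS   (hypothetical helper)
#   VALUES: comma-separated list such as "v1,v2,v3" (empty for Exists/DoesNotExist)
#   LABELS: comma-separated key=value pairs such as "app=xiuxian,version=v2"
# Returns 0 when the labels satisfy the expression, 1 otherwise, 2 for an unknown operator.
match_expression() {
    operator=$1
    key=$2
    values=$3
    labels=$4
    has_key=no
    label_value=
    in_values=no
    old_ifs=$IFS
    IFS=,
    # Look up the label named KEY.
    for pair in $labels; do
        if [ "${pair%%=*}" = "$key" ]; then
            has_key=yes
            label_value=${pair#*=}
        fi
    done
    # Check whether that label's value is one of VALUES.
    if [ "$has_key" = yes ]; then
        for v in $values; do
            if [ "$v" = "$label_value" ]; then
                in_values=yes
            fi
        done
    fi
    IFS=$old_ifs
    case $operator in
        In)           [ "$in_values" = yes ] ;;
        NotIn)        [ "$in_values" = no ] ;;   # also true when the key is absent
        Exists)       [ "$has_key" = yes ] ;;
        DoesNotExist) [ "$has_key" = no ] ;;
        *)            return 2 ;;
    esac
}

# Evaluate the selector from the manifest above against three label sets.
for pod_labels in "app=xiuxian,version=v1" "app=xiuxian,version=v4" "run=xixi"; do
    if match_expression In version "v1,v2,v3" "$pod_labels"; then
        echo "$pod_labels: selected"
    else
        echo "$pod_labels: not selected"
    fi
done
```

Only the first label set is selected: the second has a version outside the list, and the third has no version label at all.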

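🌟The Deployment controller

What is deploy

deploy is short for "deployments". This controller does not manage Pods directly; it controls them indirectly through the rs controller underneath.

Compared with rs, deploy supports declarative updates.

Write the resource manifest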

[root@master231 deployments]# cat 01-deploy-xiuxian.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-xiuxian
spec:
  replicas: 3
  selector:
    matchLabels:
      app: xiuxian
  template:
    metadata:
      labels:
        app: xiuxian
        version: v1
    spec:
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v2

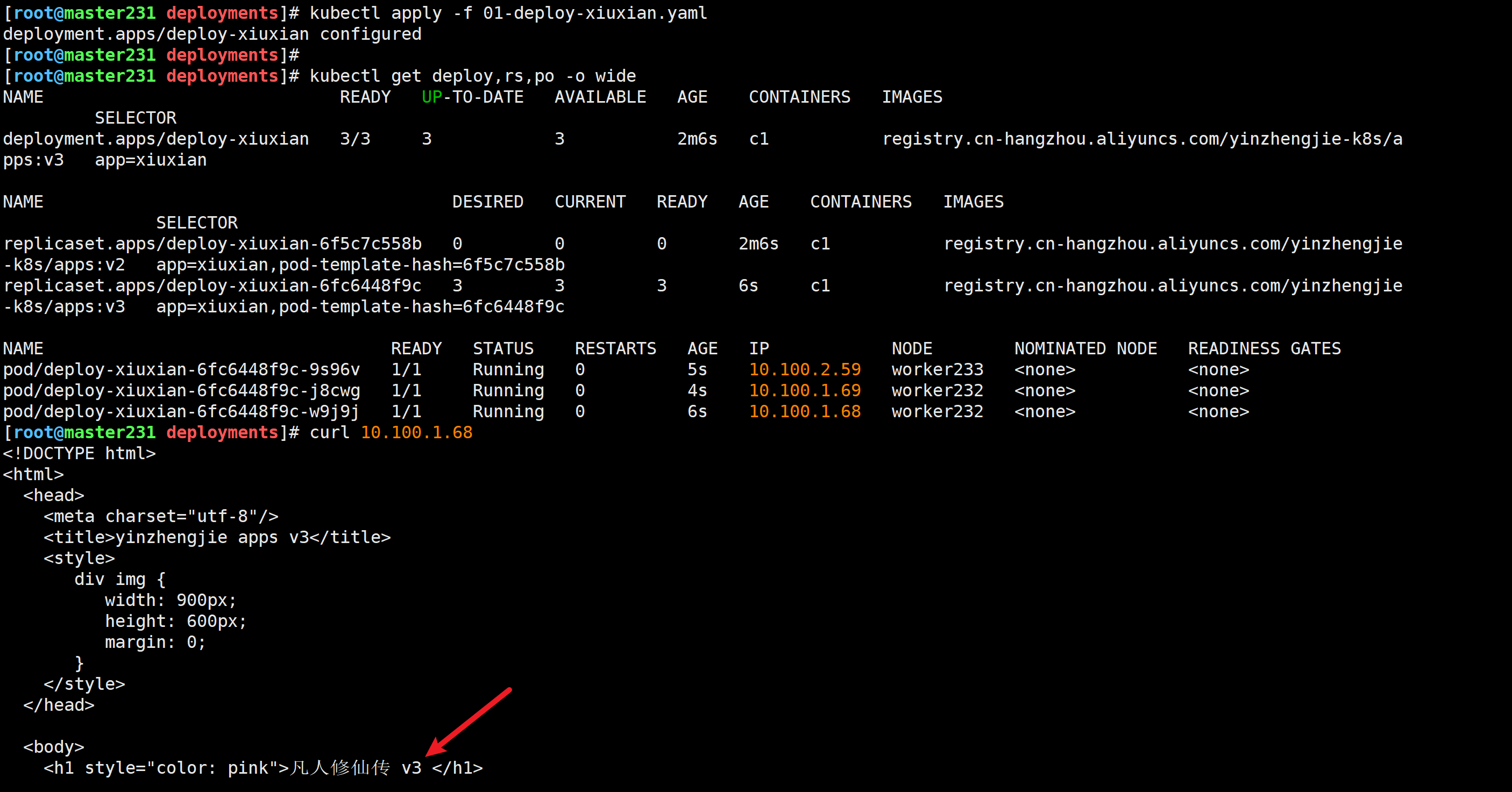

Verify the declarative update

[root@master231 deployments]# sed -i '/image/s#v2#v3#' 01-deploy-xiuxian.yaml

[root@master231 deployments]# kubectl apply -f 01-deploy-xiuxian.yaml
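The sed expression used above only rewrites lines that contain "image", so other fields are left alone. A minimal sketch that applies the same expression to two lines taken from the manifest; the wrapper name update_image_tag is an assumption for illustration, and the sketch reads stdin instead of editing the file in place.

```sh
# update_image_tag (hypothetical wrapper): same expression as the sed -i call above,
# applied to stdin. On lines containing "image", the first "v2" becomes "v3".
update_image_tag() {
    sed '/image/s#v2#v3#'
}

printf '%s\n' \
    '        version: v1' \
    '        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v2' |
    update_image_tag
```

The version label line passes through unchanged, while the image tag changes from v2 to v3. Applying the edited manifest is what makes the update declarative: the desired state changes in the file and the controller reconciles toward it.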

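🌟The DaemonSet controller

What is the ds controller

ds is short for "daemonsets". This controller ensures that every worker node runs exactly one copy of the Pod.

Its main use case is when one application instance has to run on every node, for example zabbix-agent, node-exporter, kube-proxy, …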

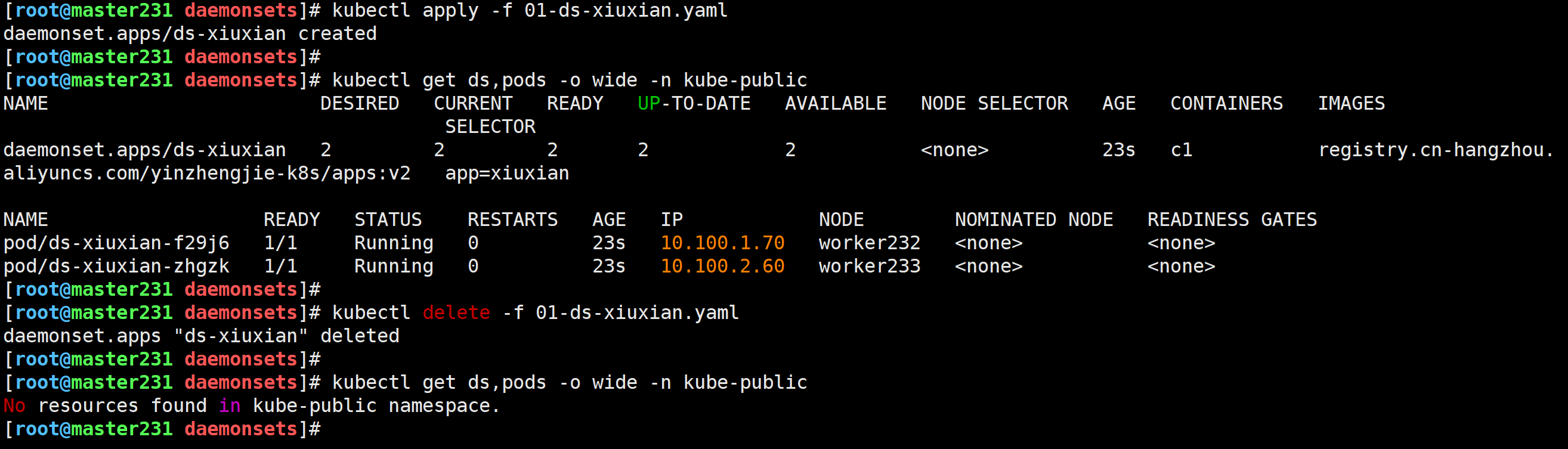

Write the resource manifest

[root@master231 daemonsets]# cat 01-ds-xiuxian.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: ds-xiuxian
  namespace: kube-public
spec:
  selector:
    matchLabels:
      app: xiuxian
  template:
    metadata:
      labels:
        app: xiuxian
        version: v1
    spec:
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v2

Create the resource and verify

[root@master231 daemonsets]# kubectl apply -f 01-ds-xiuxian.yaml

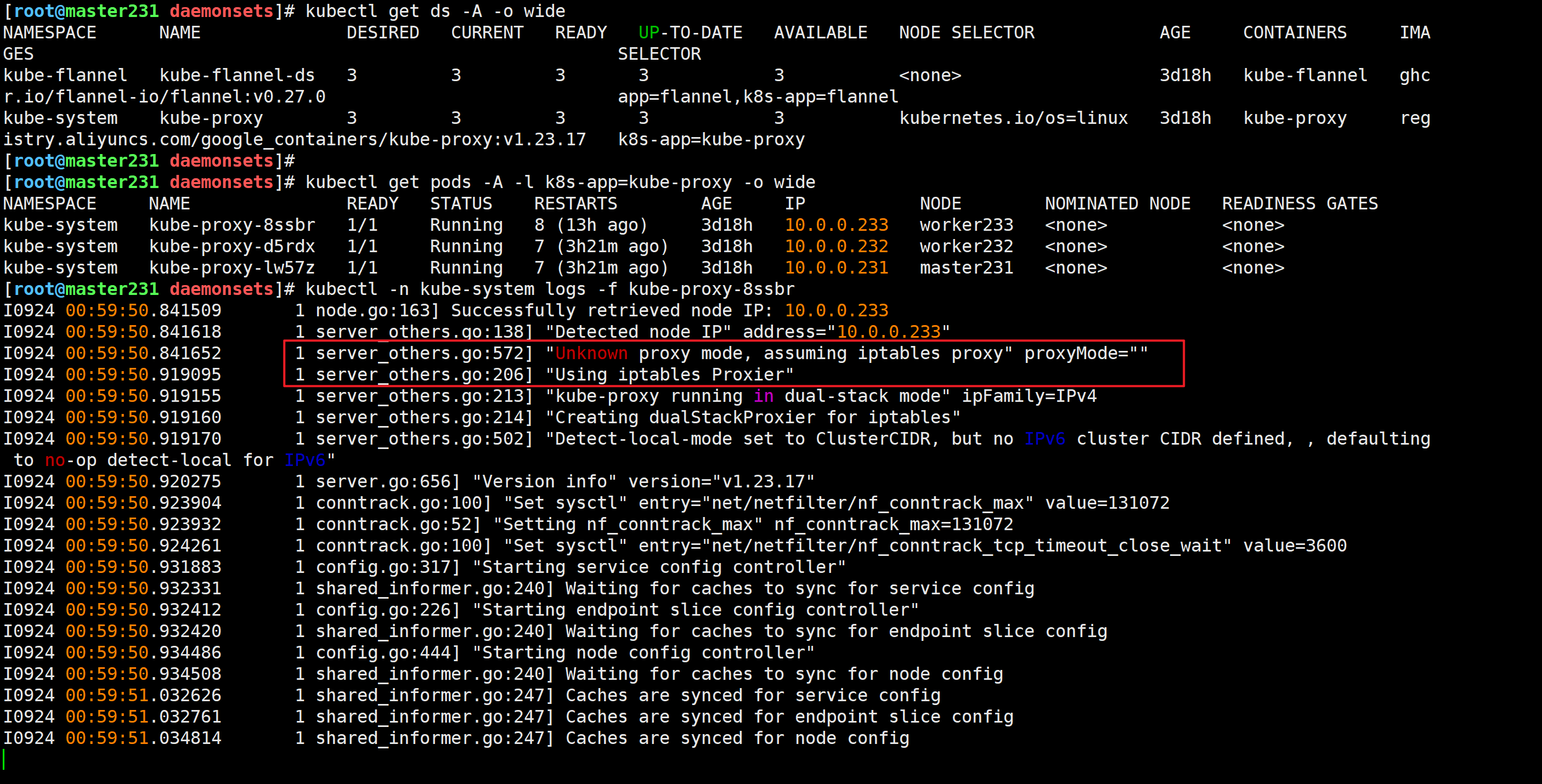

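🌟Switching kube-proxy to ipvs mode

How Service works underneath

Service relies on the kube-proxy component to implement proxying.

kube-proxy supports two working modes: iptables and ipvs.

Check the kube-proxy Pod log to see the default proxy mode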

[root@master231 daemonsets]# kubectl -n kube-system logs -f kube-proxy-8ssbr

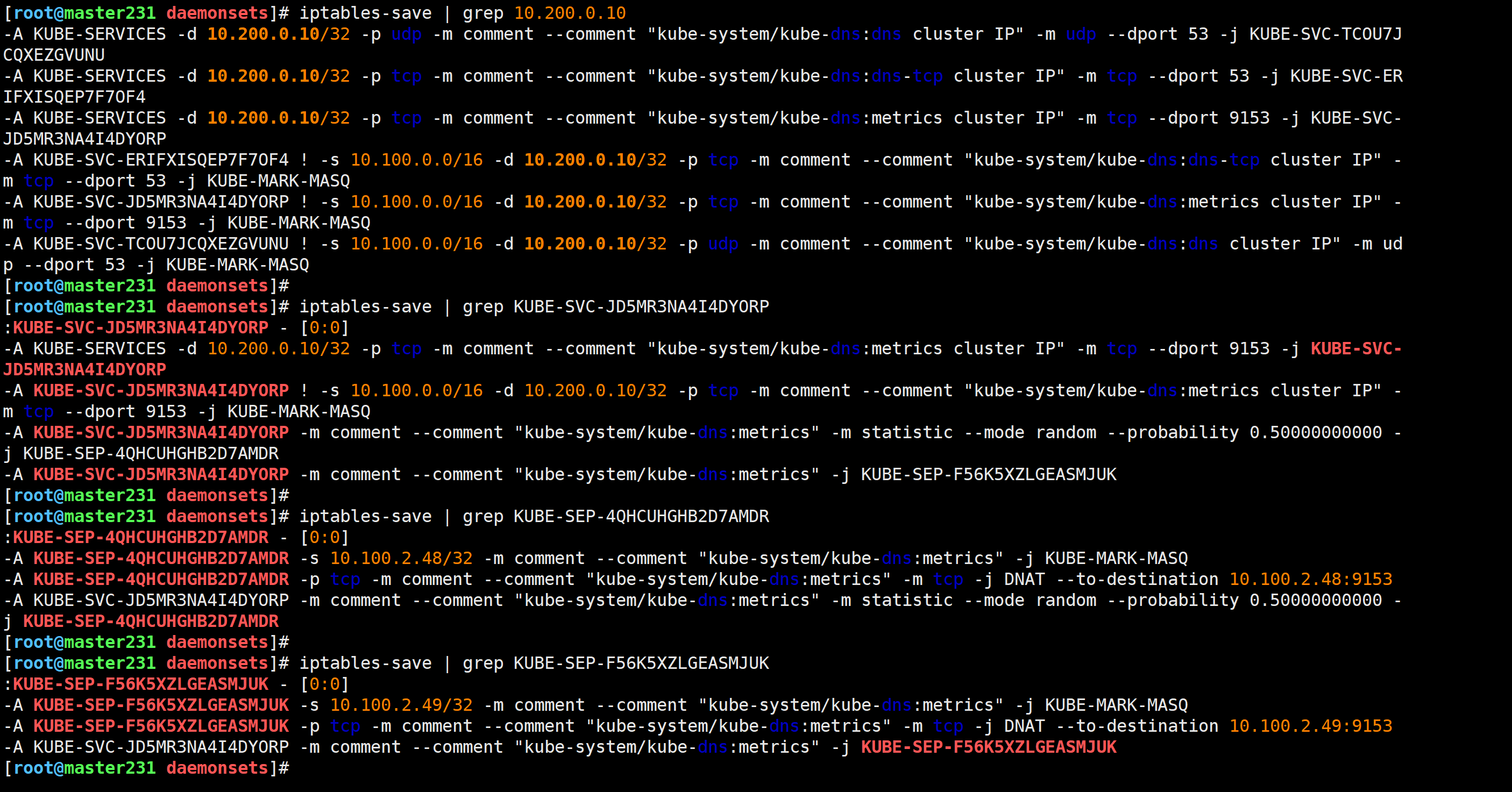

Verify that the implementation is indeed based on iptables

[root@master231 daemonsets]# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.200.0.1 <none> 443/TCP 21h

kube-system kube-dns ClusterIP 10.200.0.10 <none> 53/UDP,53/TCP,9153/TCP 3d18h

[root@master231 daemonsets]#

[root@master231 daemonsets]# iptables-save | grep 10.200.0.10

-A KUBE-SERVICES -d 10.200.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU

-A KUBE-SERVICES -d 10.200.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4

-A KUBE-SERVICES -d 10.200.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP

-A KUBE-SVC-ERIFXISQEP7F7OF4 ! -s 10.100.0.0/16 -d 10.200.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.100.0.0/16 -d 10.200.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ

-A KUBE-SVC-TCOU7JCQXEZGVUNU ! -s 10.100.0.0/16 -d 10.200.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-MARK-MASQ

[root@master231 daemonsets]#

[root@master231 daemonsets]# iptables-save | grep KUBE-SVC-JD5MR3NA4I4DYORP

:KUBE-SVC-JD5MR3NA4I4DYORP - [0:0]

-A KUBE-SERVICES -d 10.200.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP

-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.100.0.0/16 -d 10.200.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-4QHCUHGHB2D7AMDR

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-SEP-F56K5XZLGEASMJUK

[root@master231 daemonsets]#

[root@master231 daemonsets]# iptables-save | grep KUBE-SEP-4QHCUHGHB2D7AMDR

:KUBE-SEP-4QHCUHGHB2D7AMDR - [0:0]

-A KUBE-SEP-4QHCUHGHB2D7AMDR -s 10.100.2.48/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-4QHCUHGHB2D7AMDR -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.100.2.48:9153

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-4QHCUHGHB2D7AMDR

[root@master231 daemonsets]#

[root@master231 daemonsets]# iptables-save | grep KUBE-SEP-F56K5XZLGEASMJUK

:KUBE-SEP-F56K5XZLGEASMJUK - [0:0]

-A KUBE-SEP-F56K5XZLGEASMJUK -s 10.100.2.49/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-F56K5XZLGEASMJUK -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.100.2.49:9153

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-SEP-F56K5XZLGEASMJUK

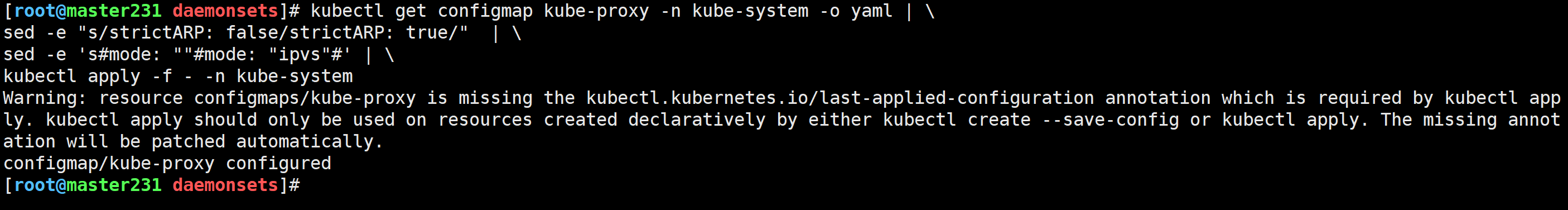
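In the rules above, the first KUBE-SEP jump matches with probability 0.5 and the second one is unconditional, which splits traffic evenly across the two endpoints. A small sketch of how that pattern generalizes to N endpoints, assuming each rule is only evaluated when the earlier ones did not match; the function name endpoint_probabilities is an assumption for illustration.

```sh
# endpoint_probabilities N (hypothetical helper): print the per-rule probability a
# "statistic --mode random" chain needs so that each of N endpoints gets a 1/N share.
endpoint_probabilities() {
    LC_ALL=C awk -v n="$1" 'BEGIN {
        for (i = 0; i < n; i++) {
            # Rule i+1 is reached only if the previous i rules did not match,
            # so it has to take 1/(n-i) of the traffic that is left.
            printf "rule %d: probability %.5f\n", i + 1, 1 / (n - i)
        }
    }'
}

endpoint_probabilities 2
endpoint_probabilities 3
```

With two endpoints this reproduces the 0.5 seen in the KUBE-SVC-JD5MR3NA4I4DYORP chain; the last rule always has probability 1, which is why it appears without a statistic match.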

Change the kube-proxy proxy mode

kubectl get configmap kube-proxy -n kube-system -o yaml | \
sed -e "s/strictARP: false/strictARP: true/" | \
sed -e 's#mode: ""#mode: "ipvs"#' | \
kubectl apply -f - -n kube-system

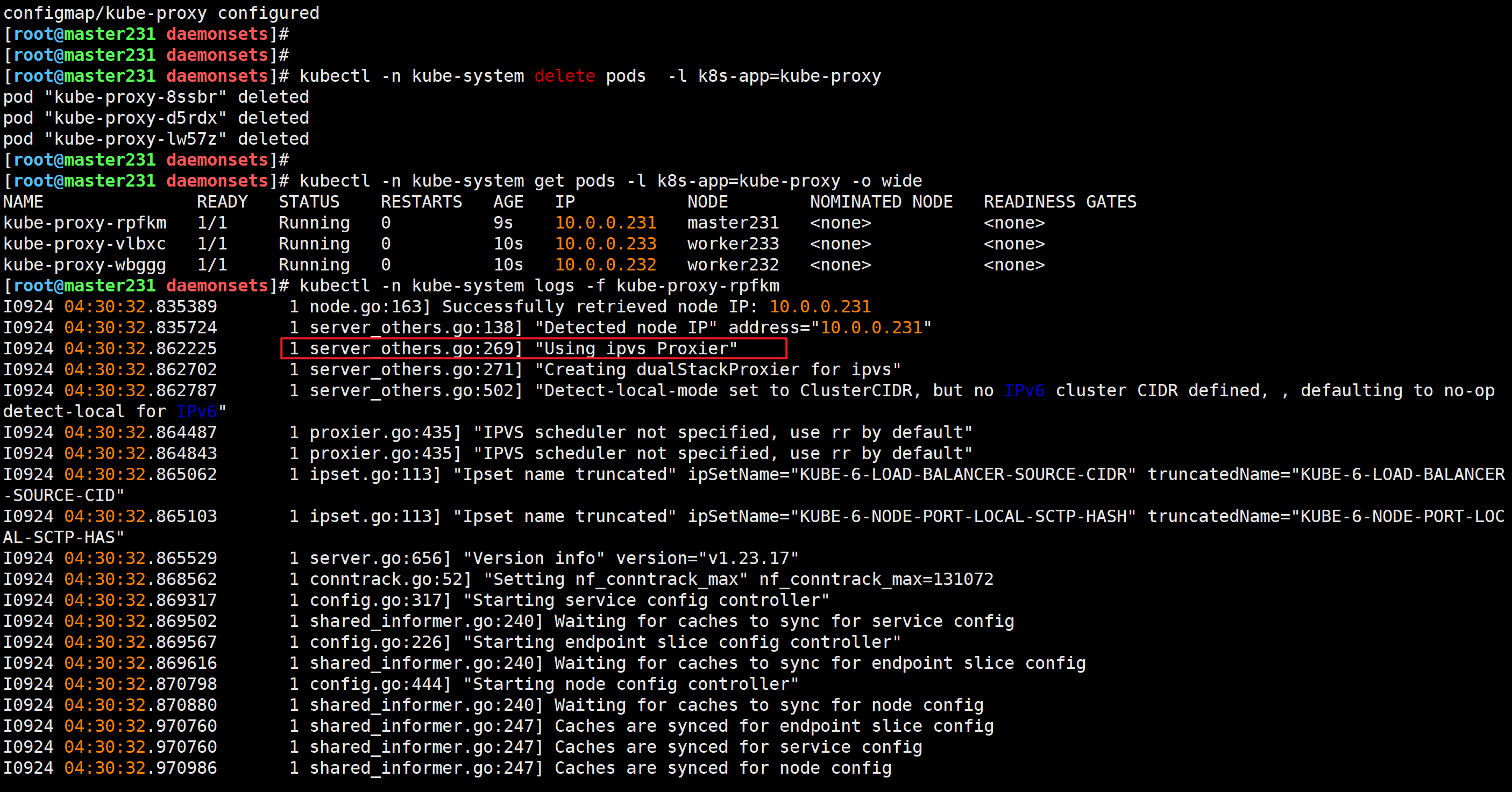
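To see what the two sed expressions change without touching the cluster, they can be run against a small sample. The excerpt below is an abbreviated, hypothetical stand-in for the kube-proxy configuration, and the function name patch_kube_proxy_config is an assumption for illustration.

```sh
# patch_kube_proxy_config (hypothetical wrapper): the same two substitutions as the
# pipeline above, applied to whatever arrives on stdin.
patch_kube_proxy_config() {
    sed -e "s/strictARP: false/strictARP: true/" |
        sed -e 's#mode: ""#mode: "ipvs"#'
}

# Abbreviated sample input, not the full ConfigMap.
printf '%s\n' \
    'ipvs:' \
    '  strictARP: false' \
    'mode: ""' |
    patch_kube_proxy_config
```

Note that the second expression only matches an empty mode (mode: ""). If the mode has already been set to another value, the substitution is a no-op and the ConfigMap has to be edited another way.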

Delete the Pods so the new configuration takes effect

[root@master231 daemonsets]# kubectl -n kube-system delete pods -l k8s-app=kube-proxy

[root@master231 daemonsets]# kubectl -n kube-system get pods -l k8s-app=kube-proxy -o wide

[root@master231 daemonsets]# kubectl -n kube-system logs -f kube-proxy-rpfkm

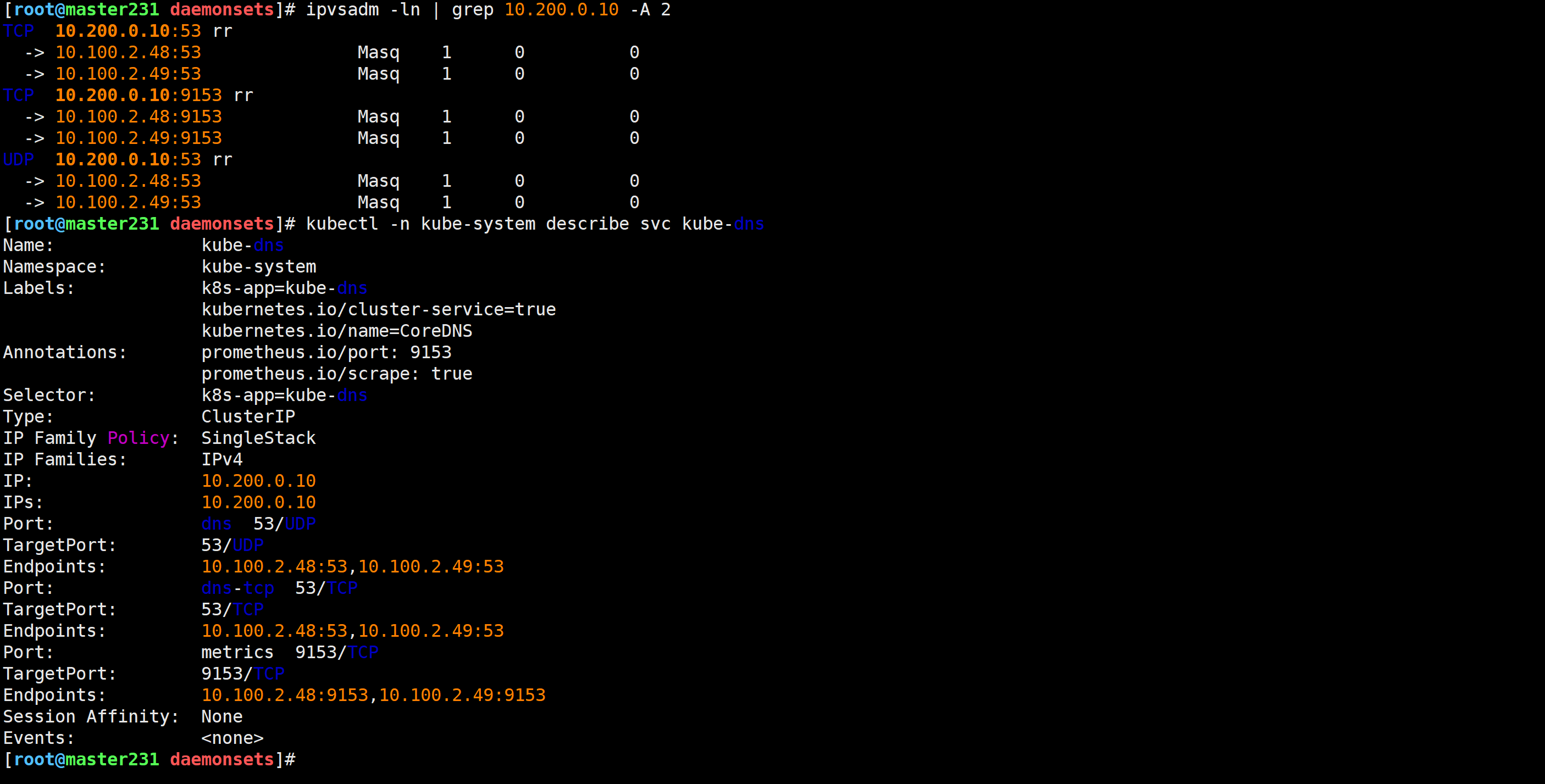

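Verify the ipvs implementation

Compared with the iptables rules, the ipvs rules are easier to read, and ipvs also performs better.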

[root@master231 daemonsets]# ipvsadm -ln | grep 10.200.0.10 -A 2

[root@master231 daemonsets]# kubectl -n kube-system describe svc kube-dns

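🌟Deploying MetalLB to implement LoadBalancer Services

MetalLB overview

If you need to expose applications through LoadBalancer Services in your own Kubernetes cluster, MetalLB is a good solution.

MetalLB official site: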

https://metallb.universe.tf/installation/

https://metallb.universe.tf/configuration/_advanced_bgp_configuration/

As an alternative, you can also consider OpenELB, a component open-sourced by KubeSphere in China.

Reference: https://www.cnblogs.com/yinzhengjie/p/18962461

Deploy MetalLB

Set the kube-proxy proxy mode to ipvs

kubectl get configmap kube-proxy -n kube-system -o yaml | \
sed -e "s/strictARP: false/strictARP: true/" | \
sed -e 's#mode: ""#mode: "ipvs"#' | \
kubectl apply -f - -n kube-system

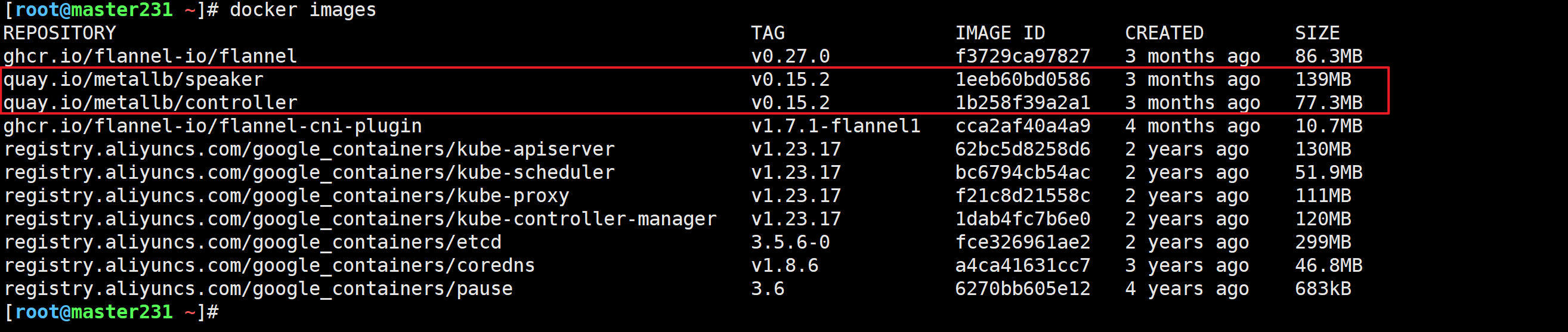

Import the images on all nodes of the K8S cluster

docker load -i metallb-controller-v0.15.2.tar.gz

docker load -i metallb-speaker-v0.15.2.tar.gz

Download the MetalLB resource manifest

[root@master231 metallb]# wget https://raw.githubusercontent.com/metallb/metallb/v0.15.2/config/manifests/metallb-native.yaml

Deploy MetalLB

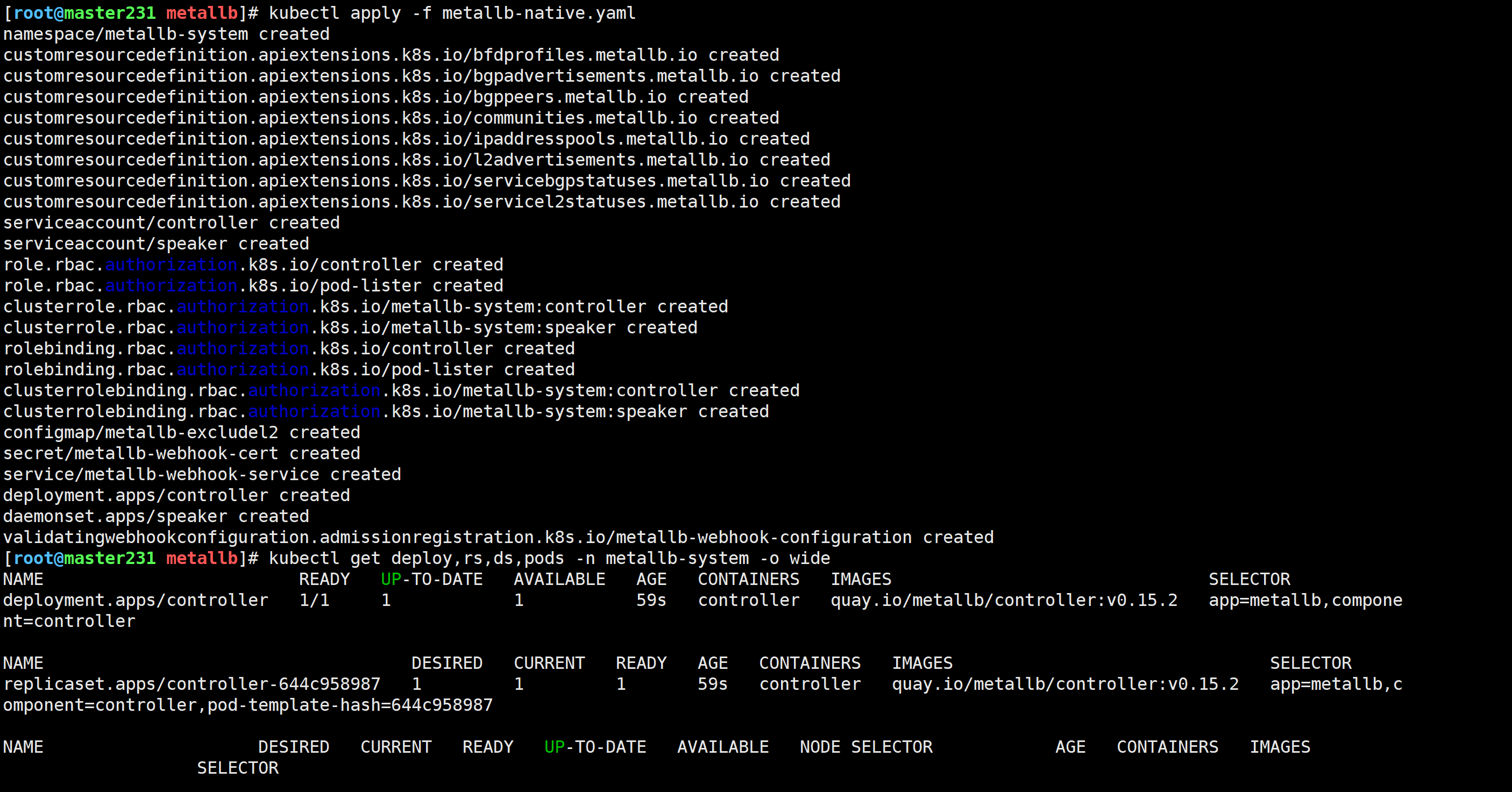

[root@master231 metallb]# kubectl apply -f metallb-native.yaml

Create the IP address pool

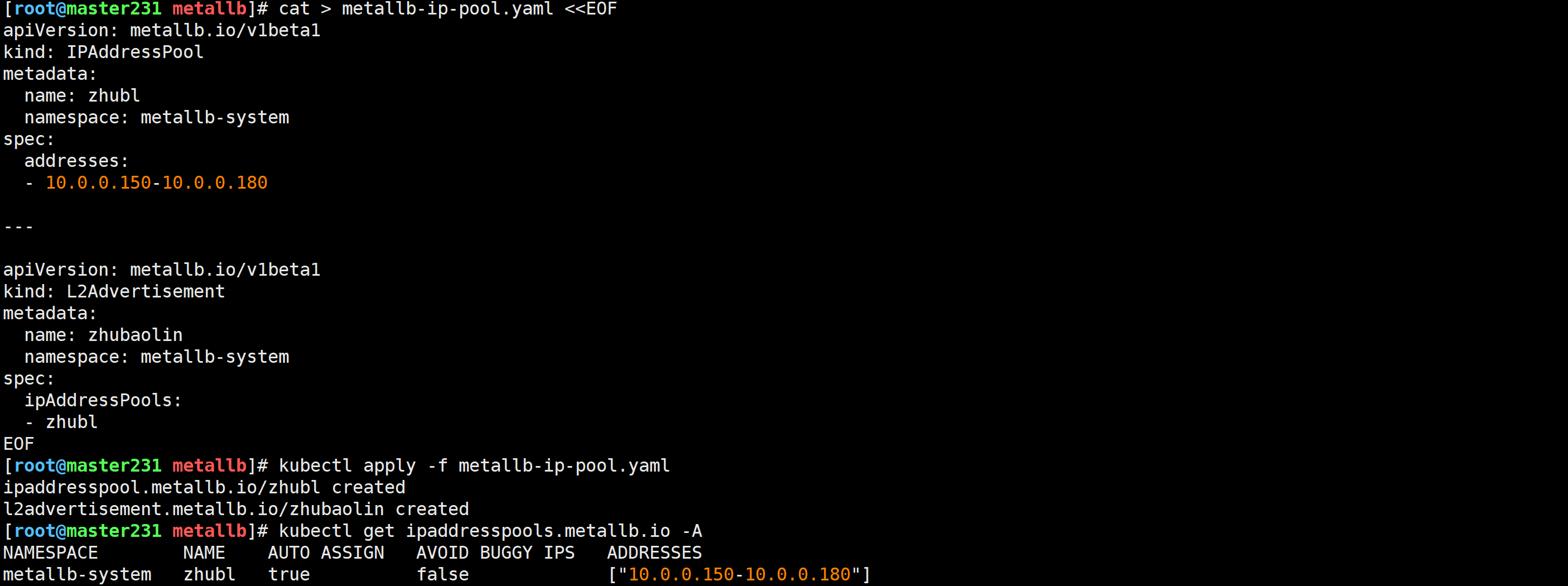

[root@master231 metallb]# cat > metallb-ip-pool.yaml <<EOF
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: zhubl
  namespace: metallb-system
spec:
  addresses:
  # Change this to the IP range you allocate to MetalLB. Pick a range that your Windows host can reach (the VMware VMnet8 subnet is recommended).
  - 10.0.0.150-10.0.0.180
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: zhubaolin
  namespace: metallb-system
spec:
  ipAddressPools:
  - zhubl
EOF

[root@master231 metallb]# kubectl apply -f metallb-ip-pool.yaml

[root@master231 metallb]# kubectl get ipaddresspools.metallb.io -A

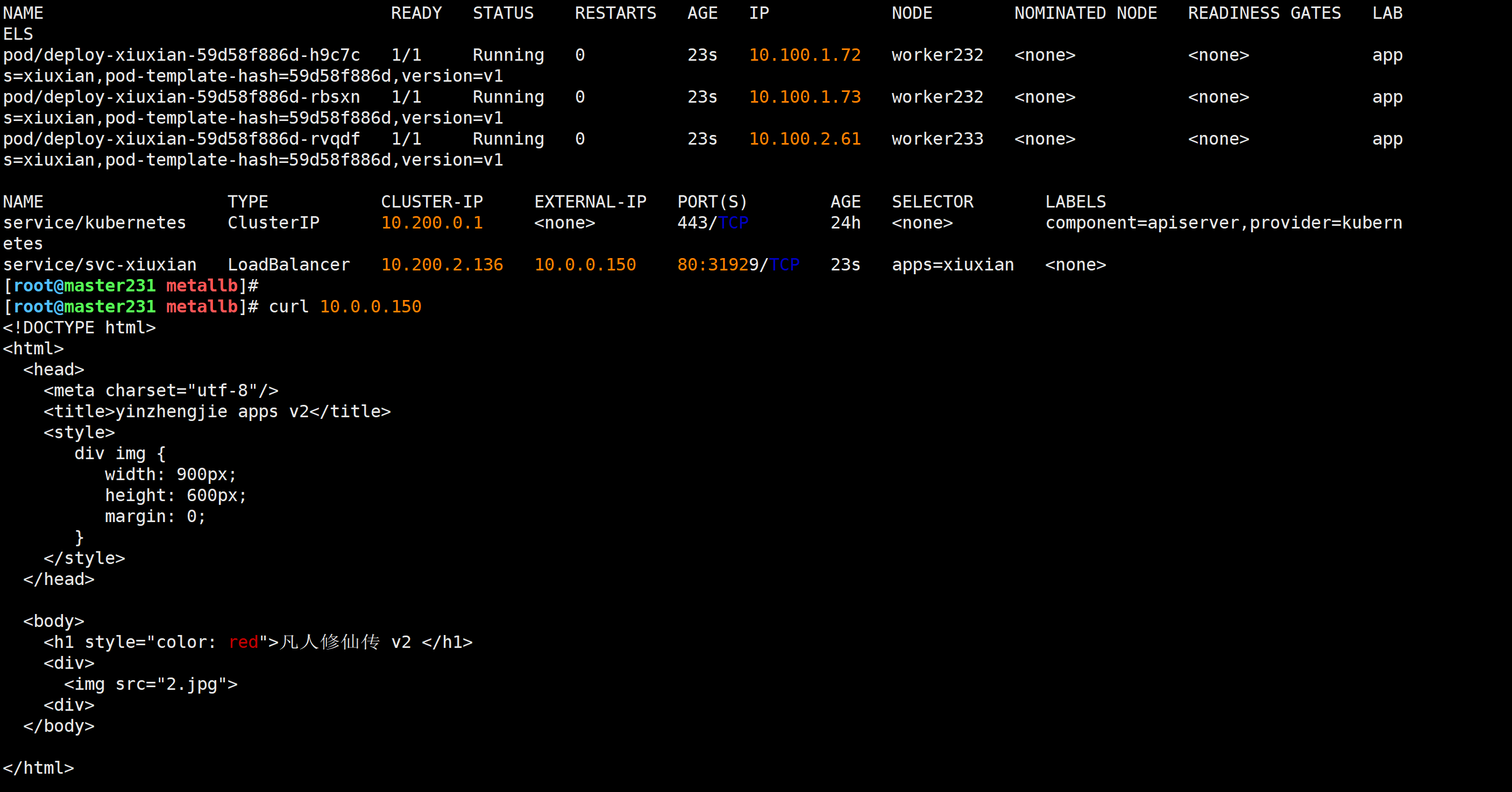
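Once a LoadBalancer Service receives an EXTERNAL-IP, it should fall inside the pool defined above. A minimal sketch for checking that an address lies within a start-end range; the helper names ip_to_int and ip_in_pool are assumptions for illustration, and only plain dotted-quad IPv4 addresses without leading zeros are handled.

```sh
# ip_to_int IP (hypothetical helper): convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# ip_in_pool IP START-END (hypothetical helper): succeed when IP is inside the inclusive range.
ip_in_pool() {
    ip=$(ip_to_int "$1")
    start=$(ip_to_int "${2%-*}")
    end=$(ip_to_int "${2#*-}")
    [ "$ip" -ge "$start" ] && [ "$ip" -le "$end" ]
}

for candidate in 10.0.0.150 10.0.0.181; do
    if ip_in_pool "$candidate" 10.0.0.150-10.0.0.180; then
        echo "$candidate is inside the pool"
    else
        echo "$candidate is outside the pool"
    fi
done
```

The range is inclusive at both ends, so 10.0.0.150 and 10.0.0.180 are both assignable addresses.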

Create a LoadBalancer Service to test and verify

[root@master231 metallb]# cat deploy-svc-xiuxian.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-xiuxian
spec:
  replicas: 3
  selector:
    matchLabels:
      apps: xiuxian
  template:
    metadata:
      labels:
        apps: xiuxian
        version: v1
    spec:
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v2
        ports:
        - containerPort: 80
          name: web
---
apiVersion: v1
kind: Service
metadata:
  name: svc-xiuxian
spec:
  type: LoadBalancer
  selector:
    apps: xiuxian
  ports:
  - port: 80

[root@master231 metallb]# kubectl apply -f deploy-svc-xiuxian.yaml

[root@master231 metallb]# kubectl get deploy,rs,po,svc -o wide --show-labels

Delete the resources

[root@master231 metallb]# kubectl delete -f deploy-svc-xiuxian.yaml

🌟Changing the Service NodePort range

By default, the NodePort range for a svc is 30000-32767.

Edit the api-server manifest

[root@master231 ~]# cat /etc/kubernetes/manifests/kube-apiserver.yaml

apiVersion: v1
kind: Pod
metadata:
  ...
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-apiserver
    - --service-node-port-range=3000-50000
    ...

Make kubelet reload the static Pod manifest (optional)

[root@master231 ~]# mv /etc/kubernetes/manifests/kube-apiserver.yaml /opt/

[root@master231 ~]# mv /opt/kube-apiserver.yaml /etc/kubernetes/manifests/

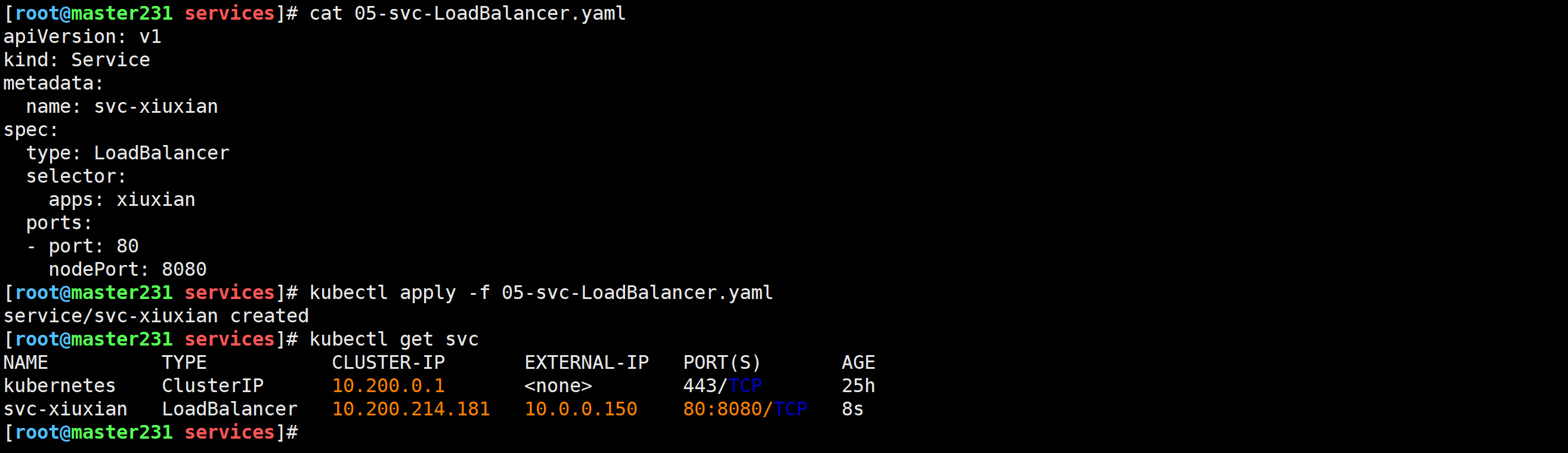

Modify the svc manifest

[root@master231 services]# cat 05-svc-LoadBalancer.yaml

apiVersion: v1
kind: Service
metadata:
  name: svc-xiuxian
spec:
  type: LoadBalancer
  selector:
    apps: xiuxian
  ports:
  - port: 80
    nodePort: 8080

Create the resource

[root@master231 services]# kubectl apply -f 05-svc-LoadBalancer.yaml

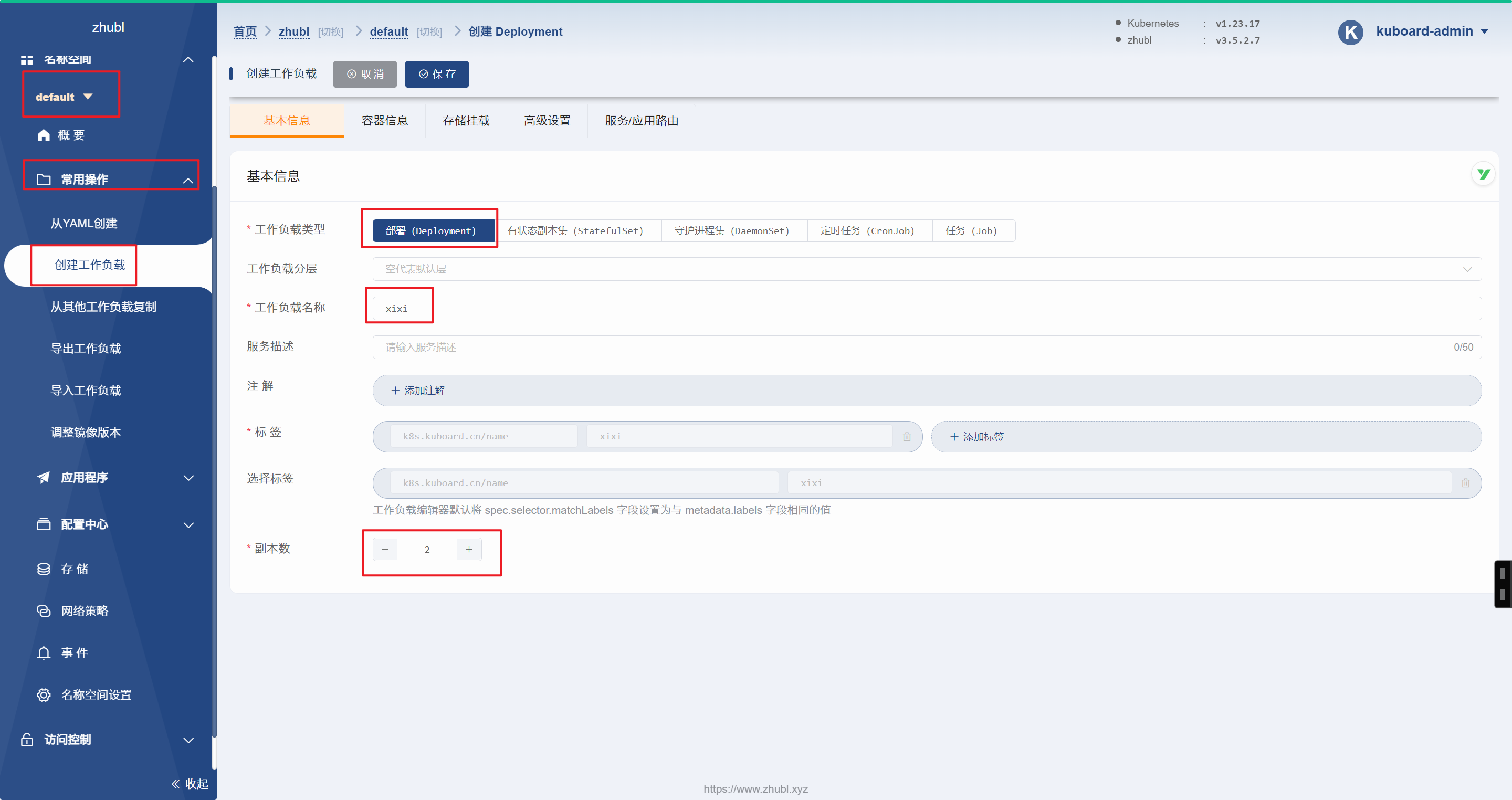

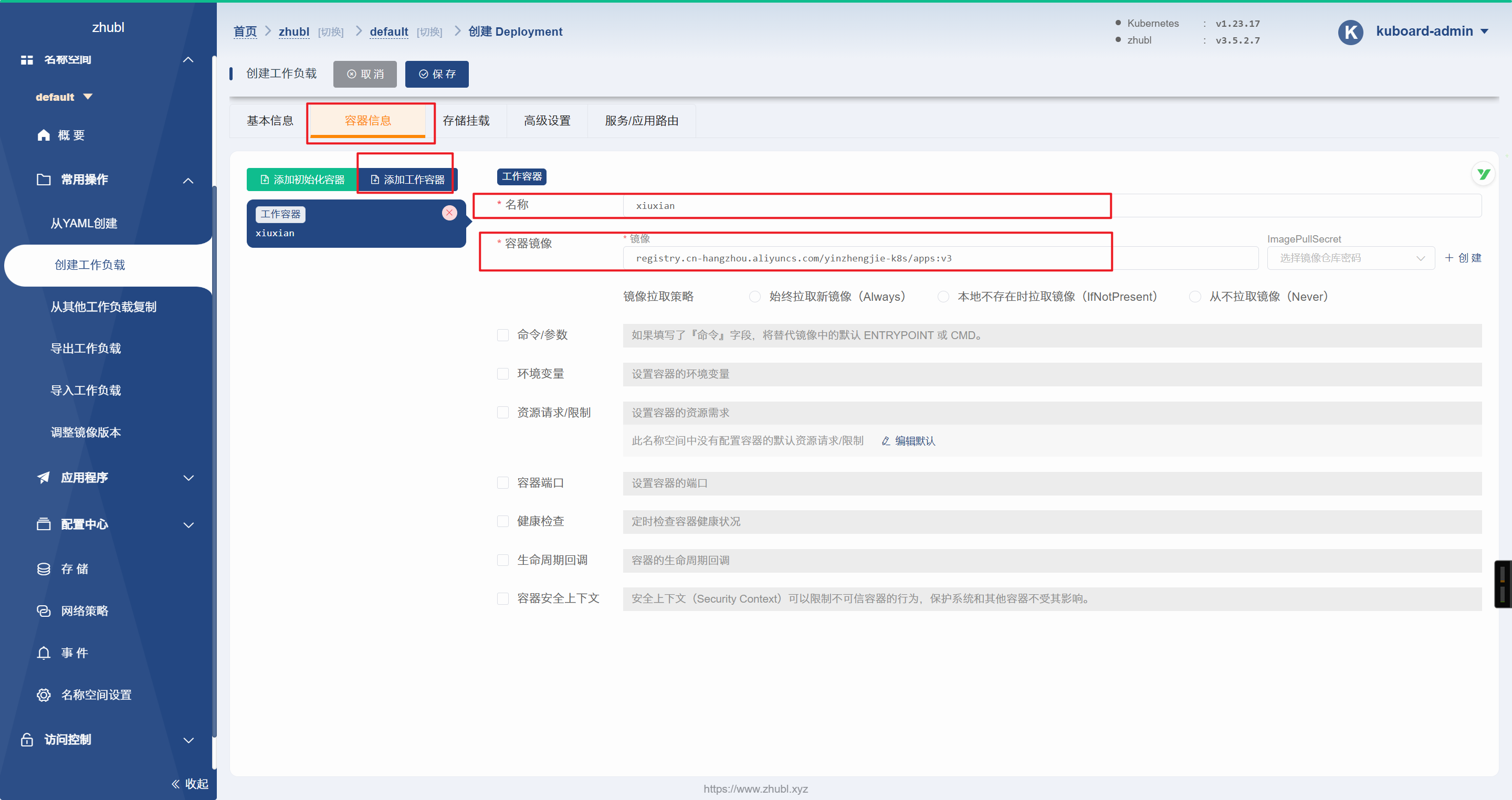

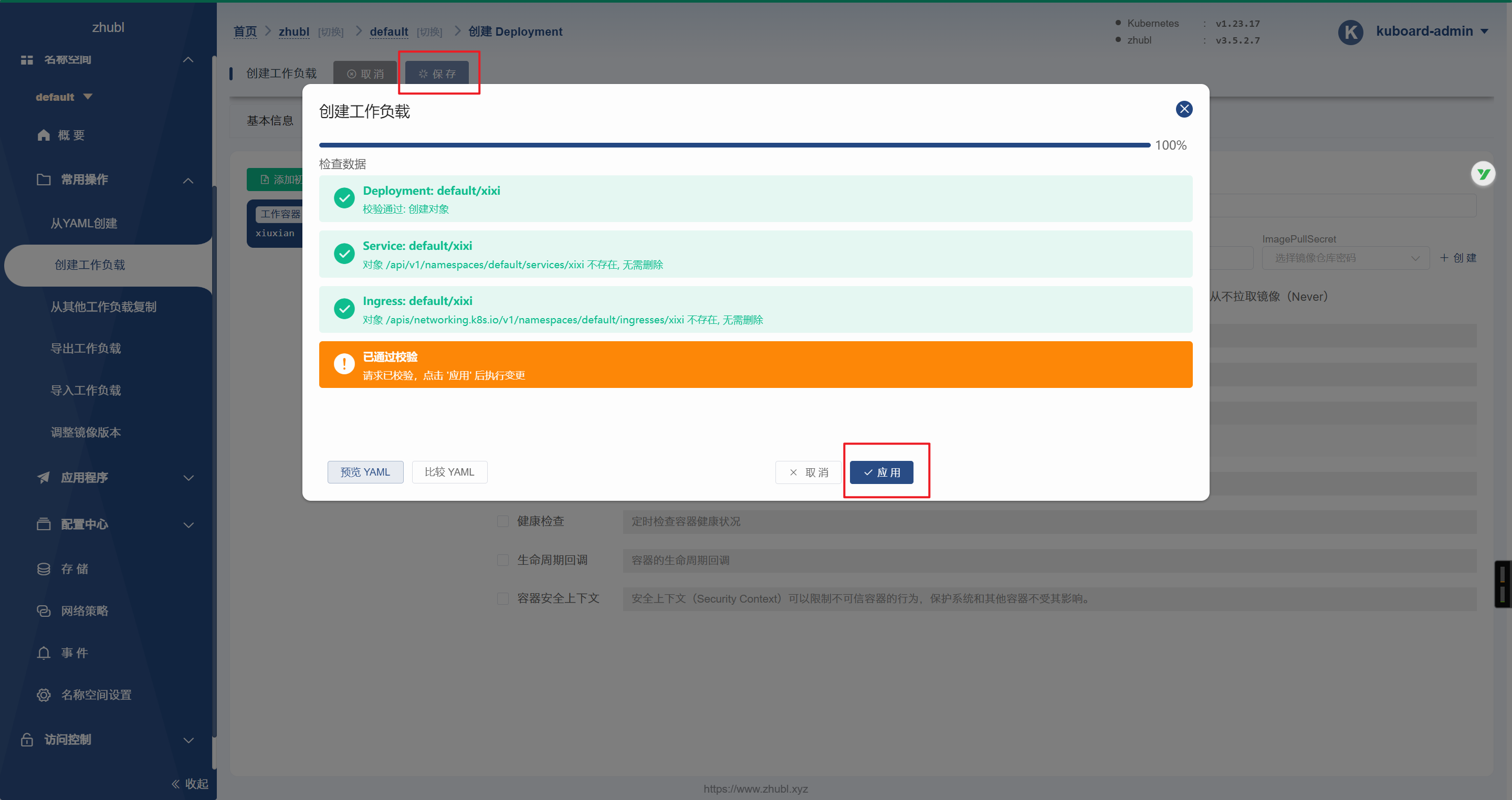

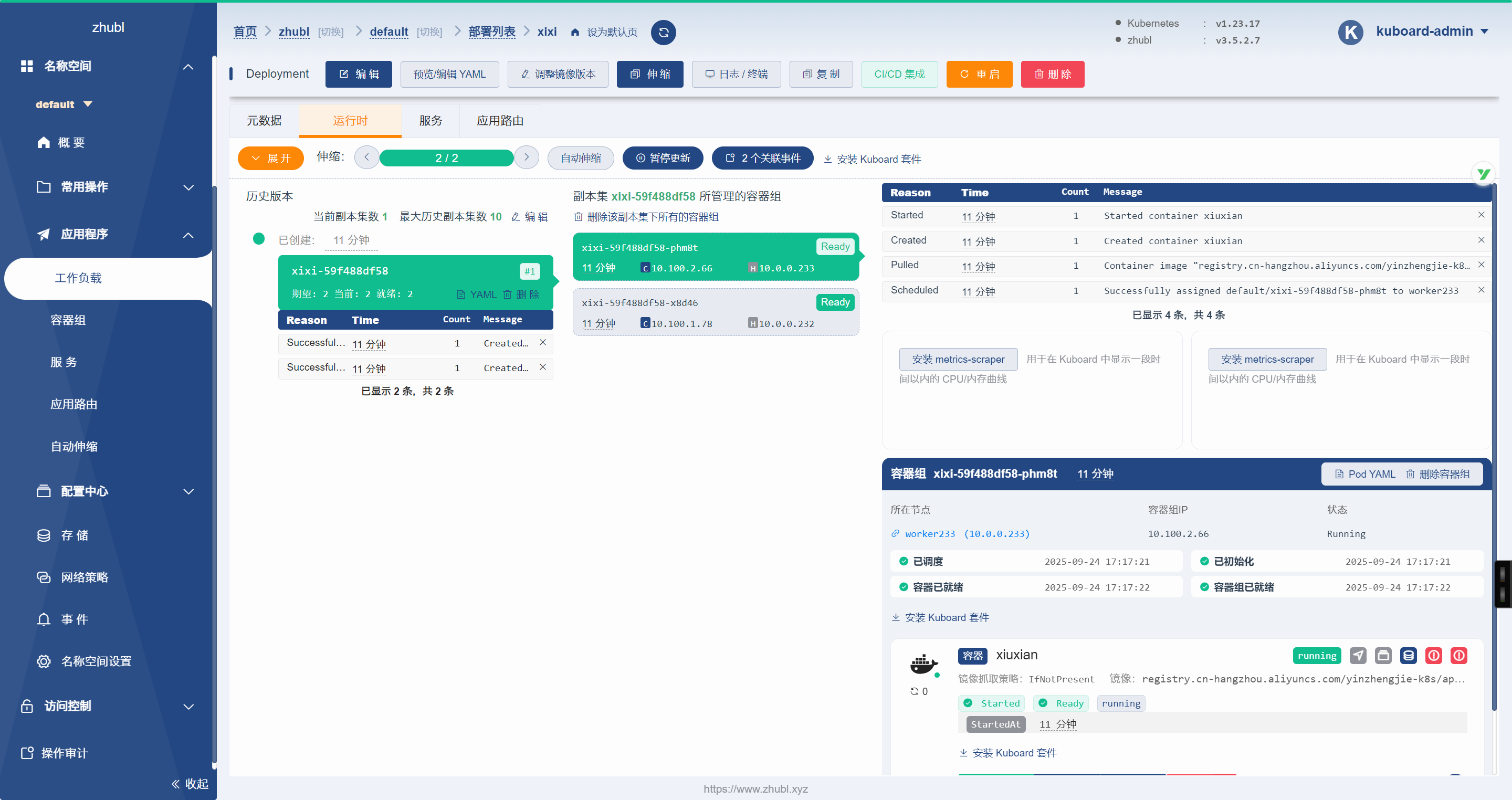
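The nodePort 8080 requested above is only accepted because the range was widened to 3000-50000; under the default range it would be rejected. A small sketch of that range check, where the helper name port_in_range is an assumption for illustration:

```sh
# port_in_range PORT RANGE (hypothetical helper): succeed when PORT lies inside
# RANGE, written as "low-high" and treated as inclusive.
port_in_range() {
    low=${2%-*}
    high=${2#*-}
    [ "$1" -ge "$low" ] && [ "$1" -le "$high" ]
}

for range in 30000-32767 3000-50000; do
    if port_in_range 8080 "$range"; then
        echo "nodePort 8080 fits in $range"
    else
        echo "nodePort 8080 is outside $range"
    fi
done
```

Running a check like this before applying a manifest gives a quick hint about whether the api-server is likely to refuse the requested port.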

🌟Tips for managing a K8S cluster with Kuboard

Create a Deployment

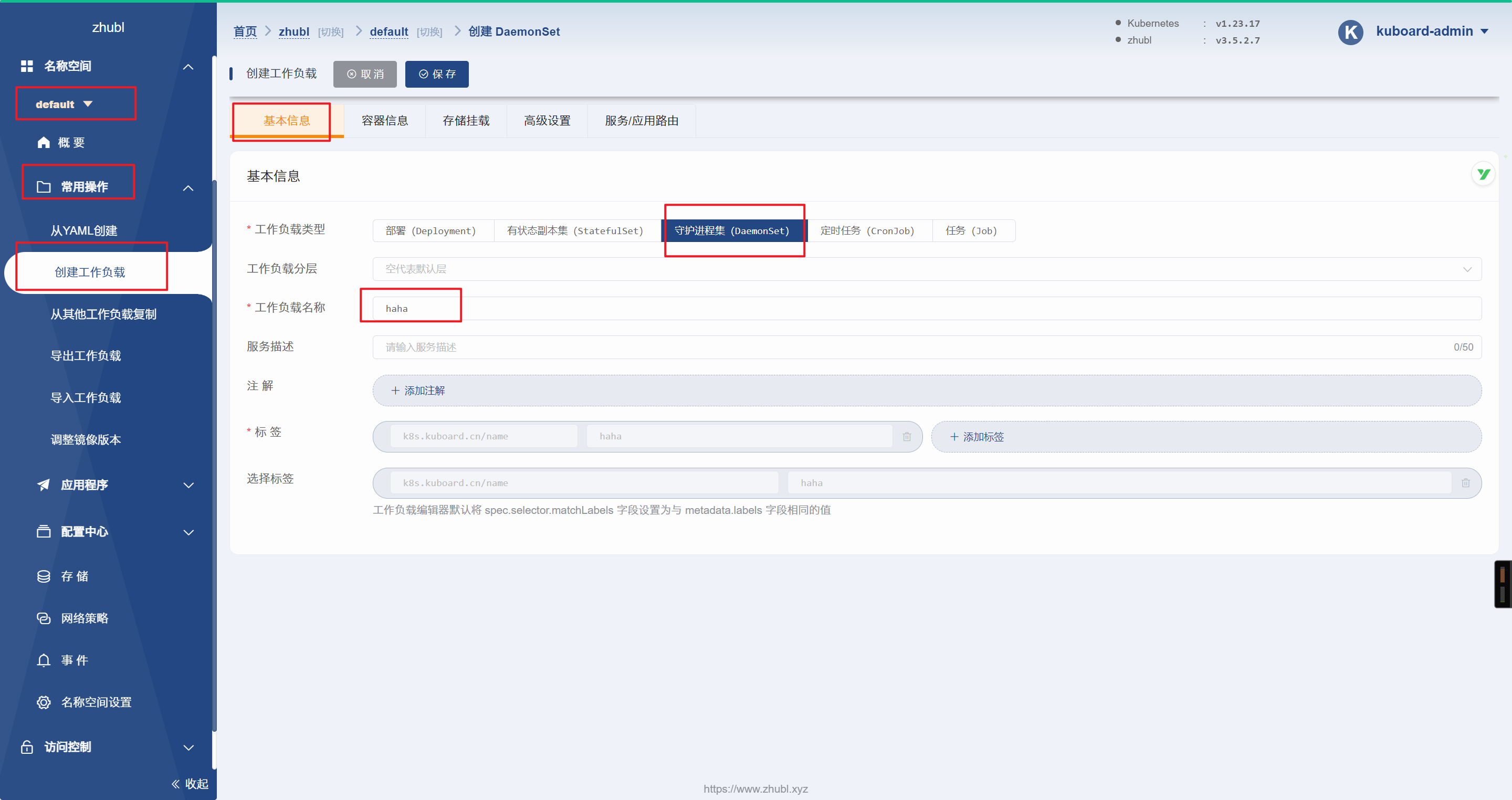

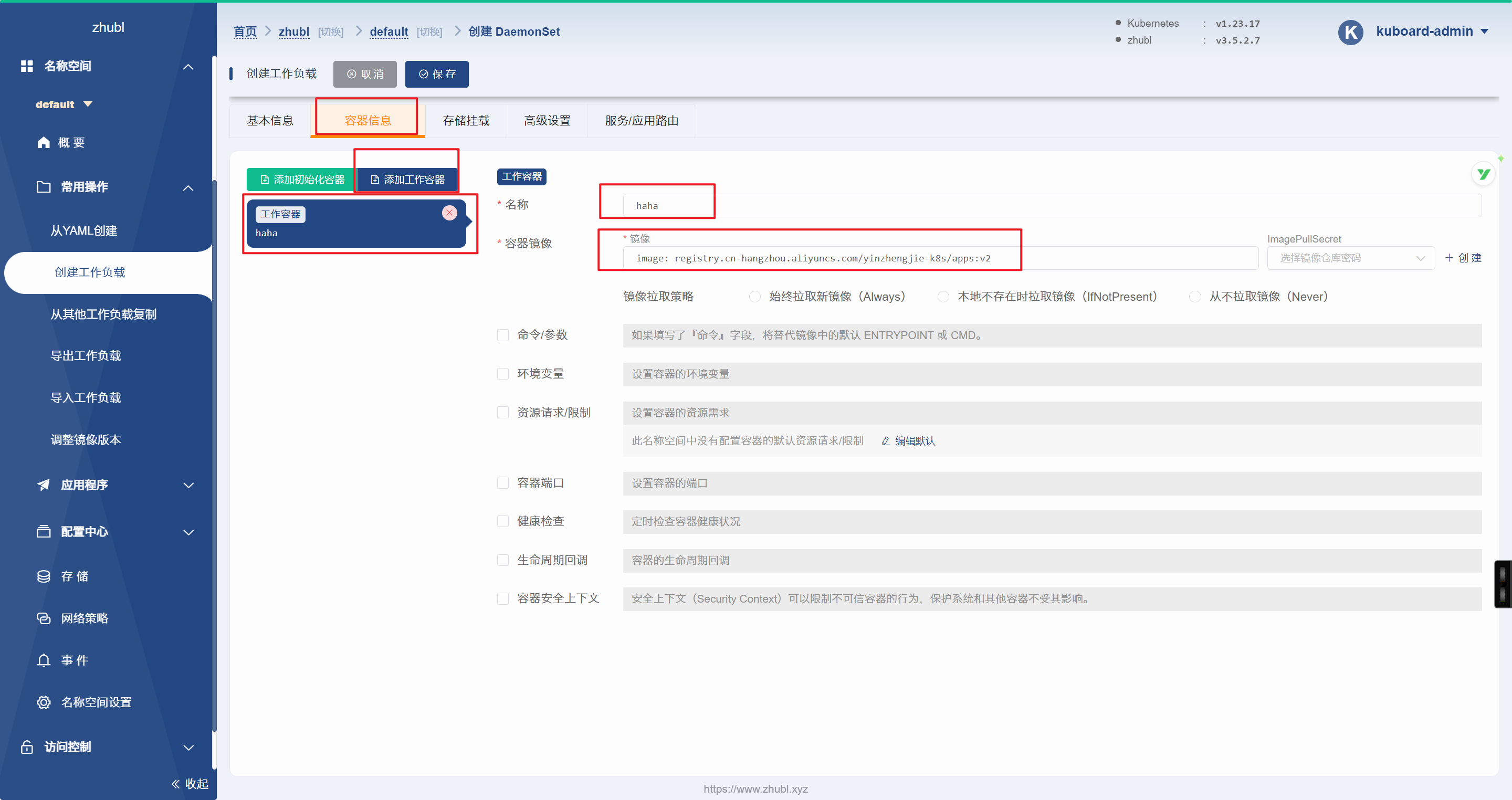

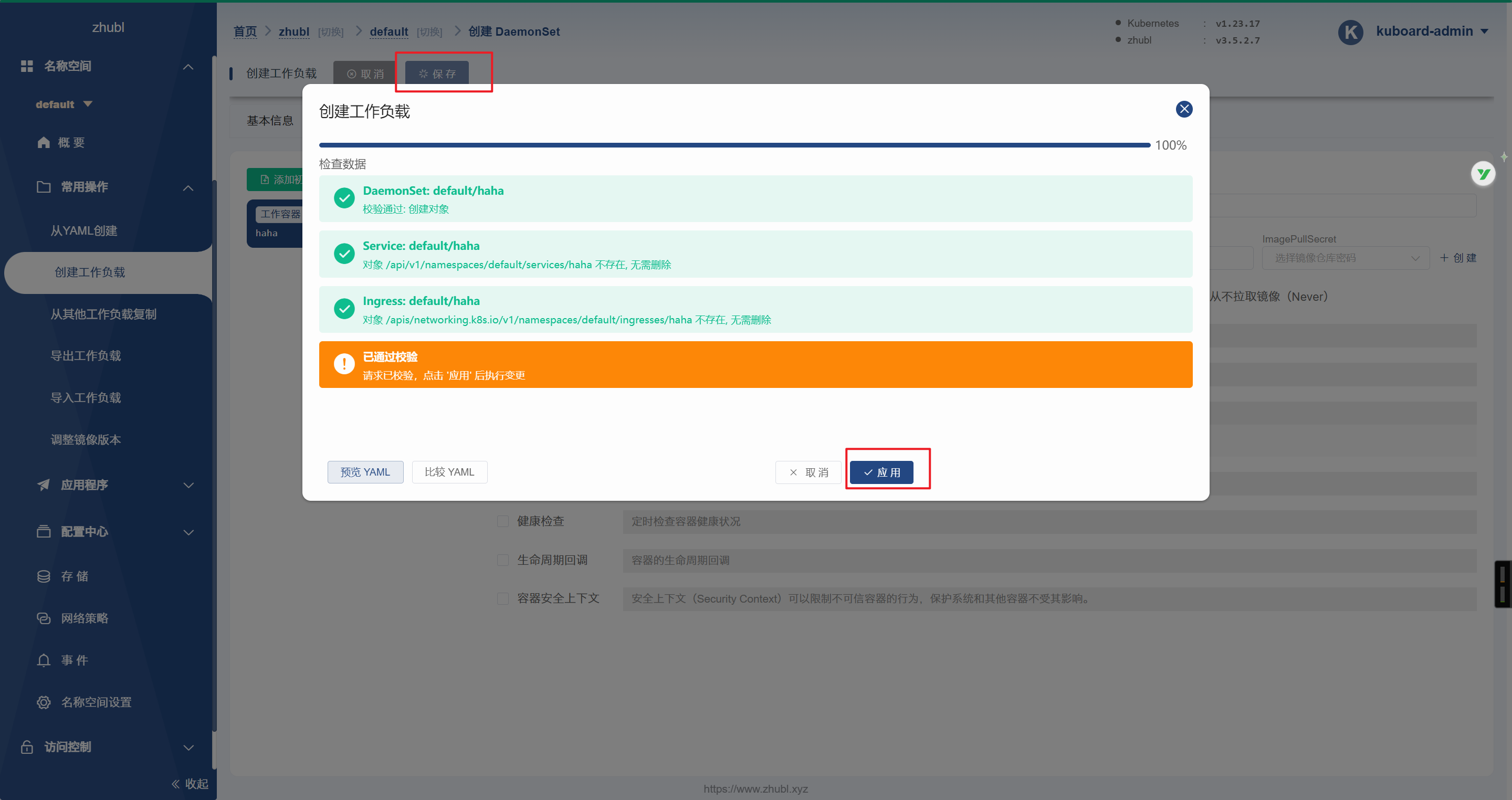

创建守护进程集(DaemonSet)

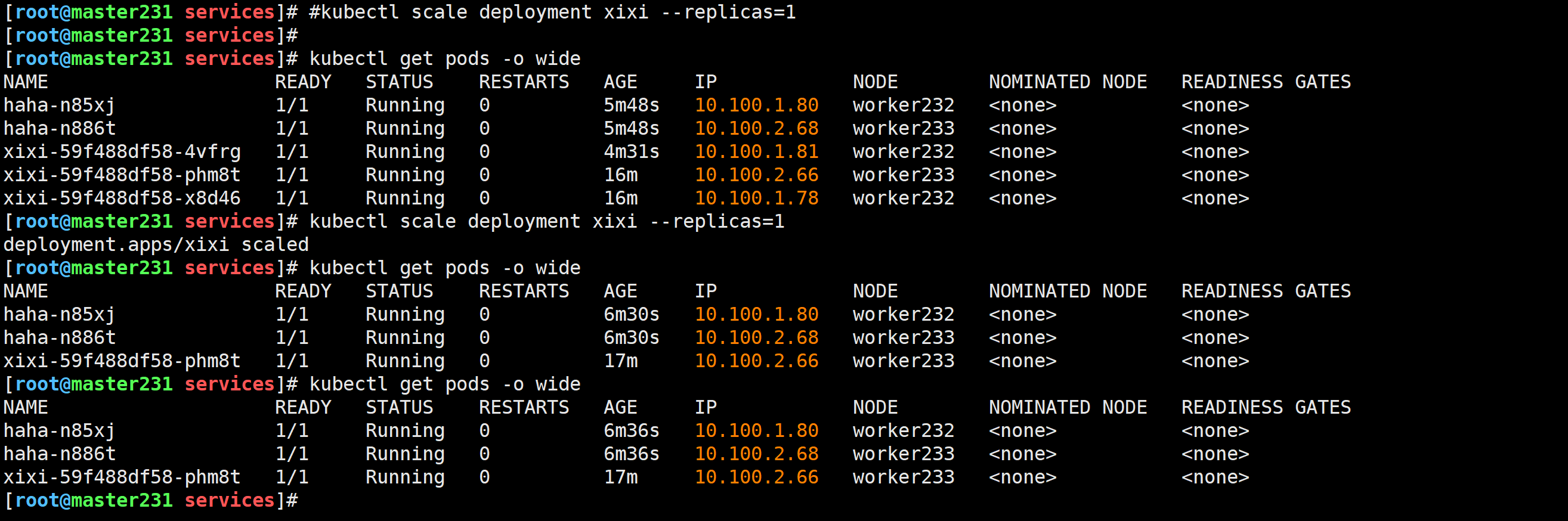

命令行修改pod的副本数量

[root@master231 ~]# kubectl scale deployment xixi --replicas=1