The Ceph PG scrub flow

mgr side

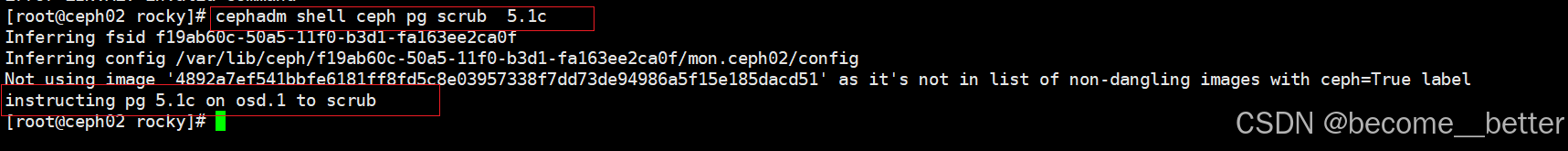

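The mgr sends a MOSDScrub2 message to the OSD to make it run a scrub. The message printed on the client side ("instructing pg ... on osd.N to ...") is produced by the mgr. The excerpt below is abridged.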

    if (prefix == "pg scrub" ||
        prefix == "pg repair" ||
        prefix == "pg deep-scrub") {
      string scrubop = prefix.substr(3, string::npos);
      pg_t pgid;
      spg_t spgid;
      string pgidstr;
      cmd_getval(cmdctx->cmdmap, "pgid", pgidstr);
      if (!pgid.parse(pgidstr.c_str())) {
        ss << "invalid pgid '" << pgidstr << "'";
        cmdctx->reply(-EINVAL, ss);
        return true;
      }
      for (auto& con : p->second) {
        assert(HAVE_FEATURE(con->get_features(), SERVER_OCTOPUS));
        std::vector<spg_t> pgs = { spgid };
        con->send_message(new MOSDScrub2(monc->get_fsid(),
                                         epoch,
                                         pgs,
                                         scrubop == "repair",
                                         scrubop == "deep-scrub"));
      }
      // here: this is the message printed on the client side
      ss << "instructing pg " << spgid << " on osd." << acting_primary
         << " to " << scrubop;
      cmdctx->reply(0, ss);
      return true;
    }
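
The way the command prefix turns into the two boolean arguments of MOSDScrub2 can be shown in isolation. This is a minimal sketch, not Ceph code: `ScrubFlags` and `flags_from_prefix` are hypothetical names introduced only for illustration, and the sketch assumes the prefix always starts with "pg " as in the excerpt.

```cpp
#include <string>

// Hypothetical helper type (not part of Ceph): the two booleans that the
// mgr excerpt passes to the MOSDScrub2 constructor.
struct ScrubFlags {
  bool repair;
  bool deep;
};

// Mirrors the excerpt: drop the leading "pg " (3 characters) from the
// command prefix, then compare the remainder with the operation names.
inline ScrubFlags flags_from_prefix(const std::string& prefix) {
  const std::string scrubop = prefix.substr(3, std::string::npos);
  return ScrubFlags{scrubop == "repair", scrubop == "deep-scrub"};
}
```

With this sketch, "pg scrub" yields neither flag, "pg repair" sets only `repair`, and "pg deep-scrub" sets only `deep`.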

OSD side

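The OSD has a dedicated handler for MOSDScrub2 messages, which serves as the entry point for scrub. For each PG named in the message, it wraps the request in a peering event (PeeringState::RequestScrub) and enqueues it on the op_shardedwq queue.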

    void OSD::handle_fast_scrub(MOSDScrub2 *m)
    {
      dout(10) << __func__ << " " << *m << dendl;
      if (!require_mon_or_mgr_peer(m)) {
        m->put();
        return;
      }
      if (m->fsid != monc->get_fsid()) {
        dout(0) << __func__ << " fsid " << m->fsid << " != " << monc->get_fsid()
                << dendl;
        m->put();
        return;
      }
      for (auto pgid : m->scrub_pgs) {
        enqueue_peering_evt(
          pgid,
          PGPeeringEventRef(
            std::make_shared<PGPeeringEvent>(
              m->epoch,
              m->epoch,
              PeeringState::RequestScrub(m->deep, m->repair))));
      }
      m->put();
    }
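
The handler's shape (validate the cluster fsid, then fan the request out as one event per PG) can be modelled without any Ceph types. The following is a simplified sketch: `ScrubRequest`, `QueuedEvent` and `handle_scrub_request` are hypothetical stand-ins, and the sender check done by require_mon_or_mgr_peer() is left out.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-in for the fields of MOSDScrub2 used by the handler.
struct ScrubRequest {
  std::string fsid;
  unsigned epoch;
  std::vector<std::string> scrub_pgs;
  bool deep;
  bool repair;
};

// Hypothetical stand-in for one queued RequestScrub peering event.
struct QueuedEvent {
  std::string pgid;
  unsigned epoch;
  bool deep;
  bool repair;
};

// Returns the events that would be enqueued. A request carrying a foreign
// fsid is dropped, so the result is empty in that case.
inline std::vector<QueuedEvent> handle_scrub_request(const ScrubRequest& m,
                                                     const std::string& local_fsid) {
  std::vector<QueuedEvent> out;
  if (m.fsid != local_fsid) {
    return out;
  }
  for (const auto& pgid : m.scrub_pgs) {
    out.push_back({pgid, m.epoch, m.deep, m.repair});
  }
  return out;
}
```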

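A worker thread dequeues the item from op_shardedwq and, through virtual dispatch, invokes the run() method of the item's concrete class. From this point on we are in the scrub-specific flow.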

    void PGScrub::run(OSD* osd, OSDShard* sdata, PGRef& pg, ThreadPool::TPHandle& handle)  // here
    {
      pg->scrub(epoch_queued, handle);
      pg->unlock();
    }
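
The "dequeue, then dispatch through an inherited run()" pattern is plain virtual dispatch. A minimal sketch, assuming nothing about the real queue: `WorkItem`, `ScrubItem`, `ScrubReschedItem` and `drain` are hypothetical names, and each item merely records the name of the scrubber event it would eventually produce.

```cpp
#include <deque>
#include <memory>
#include <string>
#include <vector>

// Hypothetical base class playing the role of a queueable work item.
struct WorkItem {
  virtual ~WorkItem() = default;
  virtual void run(std::vector<std::string>& log) = 0;
};

// Stand-in for PGScrub: its run() leads to the StartScrub event.
struct ScrubItem : WorkItem {
  void run(std::vector<std::string>& log) override { log.push_back("StartScrub"); }
};

// Stand-in for PGScrubResched: its run() leads to InternalSchedScrub.
struct ScrubReschedItem : WorkItem {
  void run(std::vector<std::string>& log) override { log.push_back("InternalSchedScrub"); }
};

// The worker does not know the concrete type; it only calls run().
inline std::vector<std::string> drain(std::deque<std::unique_ptr<WorkItem>>& q) {
  std::vector<std::string> log;
  while (!q.empty()) {
    auto item = std::move(q.front());
    q.pop_front();
    item->run(log);
  }
  return log;
}
```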

Starting from this method we enter the scrubber's state machine, which drives the whole scrub flow. It is defined in: https://github.com/ceph/ceph/blob/38057ce2c14ca9a6b81617399480510fb0eae951/src/osd/scrubber/scrub_machine.h

Initial state: PrimaryIdle. Event: StartScrub. Target state: ReservingReplicas.

This step mainly checks and updates the epoch.

    void PgScrubber::initiate_regular_scrub(epoch_t epoch_queued)
    {
      dout(10) << fmt::format("{}: epoch:{} is PrimaryIdle:{}", __func__, epoch_queued,
                              m_fsm->is_primary_idle())
               << dendl;
      // we may have lost our Primary status while the message languished in the
      // queue
      if (check_interval(epoch_queued)) {
        dout(10) << "scrubber event -->> StartScrub epoch: " << epoch_queued
                 << dendl;
        m_fsm->process_event(StartScrub{});
        dout(10) << "scrubber event --<< StartScrub" << dendl;
      }
    }

    sc::result PrimaryIdle::react(const StartScrub&)
    {
      dout(10) << "PrimaryIdle::react(const StartScrub&)" << dendl;
      DECLARE_LOCALS;
      scrbr->reset_epoch();
      return transit<ReservingReplicas>();
    }

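Because ReservingReplicas is a sub-state of Session, this transition also enters the Session state. ReservingReplicas is an intermediate state that waits for, and handles, the responses from the replicas.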

    struct ReservingReplicas : sc::state<ReservingReplicas, Session>, NamedSimply {
      explicit ReservingReplicas(my_context ctx);
      ~ReservingReplicas() = default;
      using reactions = mpl::list<
        sc::custom_reaction<ReplicaGrant>,
        sc::custom_reaction<ReplicaReject>,
        sc::transition<AbortIfReserving, PrimaryIdle>,
        sc::transition<RemotesReserved, ActiveScrubbing>>;

      ScrubTimePoint entered_at = ScrubClock::now();

      /// a "raw" event carrying a peer's grant response
      sc::result react(const ReplicaGrant&);

      /// a "raw" event carrying a peer's denial response
      sc::result react(const ReplicaReject&);
    };

While in ReservingReplicas, the primary sends MOSDScrubReserve messages to the other OSDs of the PG, one at a time, to reserve scrub resources on them.

    bool ReplicaReservations::send_next_reservation_or_complete()
    {
      if (m_next_to_request == m_sorted_secondaries.cend()) {
        // granted by all replicas
        dout(10) << "remote reservation complete" << dendl;
        log_success_and_duration();
        return true;  // done
      }
      // send the next reservation request
      const auto peer = *m_next_to_request;
      const auto epoch = m_pg->get_osdmap_epoch();
      m_last_request_sent_nonce++;
      auto m = make_message<MOSDScrubReserve>(
        spg_t{m_pgid, peer.shard}, epoch, MOSDScrubReserve::REQUEST, m_pg->pg_whoami,
        m_last_request_sent_nonce);
      m_pg->send_cluster_message(peer.osd, m, epoch, false);
      m_last_request_sent_at = ScrubClock::now();
      dout(10) << fmt::format(
                    "reserving {} (the {} of {} replicas) e:{} nonce:{}",
                    *m_next_to_request, active_requests_cnt() + 1,
                    m_sorted_secondaries.size(), epoch, m_last_request_sent_nonce)
               << dendl;
      m_next_to_request++;
      return false;
    }
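
The function above requests replicas one at a time and reports completion only when there is nobody left to ask. A minimal model of that loop is sketched here. `ReservationSketch` is a hypothetical class, and `on_grant()` encodes an assumption (not shown in the excerpts) that each grant simply triggers the next request.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical model of the sequential reservation process.
class ReservationSketch {
 public:
  explicit ReservationSketch(std::vector<int> replicas)
      : replicas_(std::move(replicas)) {}

  // Returns true when there is no replica left to ask (all granted);
  // otherwise records a request to the next replica and returns false.
  bool send_next_or_complete() {
    if (next_ == replicas_.size()) {
      return true;  // done
    }
    ++nonce_;  // each request carries a fresh nonce
    sent_.push_back(replicas_[next_]);
    ++next_;
    return false;
  }

  // Assumption: a grant from the last-asked replica triggers the next request.
  bool on_grant() { return send_next_or_complete(); }

  const std::vector<int>& sent() const { return sent_; }
  unsigned nonce() const { return nonce_; }

 private:
  std::vector<int> replicas_;
  std::size_t next_ = 0;
  unsigned nonce_ = 0;
  std::vector<int> sent_;
};
```

With two replicas, the first call sends one request, the first grant sends the second, and only the second grant reports completion.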

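Reservation messages are handled according to their type. The same handler runs on both the primary and the replicas:

- REQUEST: a replica receives a reservation request from the primary OSD.

- GRANT: the primary OSD receives a replica's confirmation that the reservation was granted.

- REJECT: the primary OSD receives a replica's notice that the reservation was rejected.

- RELEASE: the primary OSD or a replica requests the release of resources already reserved.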

    void PgScrubber::handle_scrub_reserve_msgs(OpRequestRef op)
    {
      dout(10) << fmt::format("{}: {}", __func__, *op->get_req()) << dendl;
      op->mark_started();
      if (should_drop_message(op)) {
        return;
      }
      auto m = op->get_req<MOSDScrubReserve>();
      switch (m->type) {
        case MOSDScrubReserve::REQUEST:
          m_fsm->process_event(ReplicaReserveReq{op, m->from});
          break;
        case MOSDScrubReserve::GRANT:
          m_fsm->process_event(ReplicaGrant{op, m->from});
          break;
        case MOSDScrubReserve::REJECT:
          m_fsm->process_event(ReplicaReject{op, m->from});
          break;
        case MOSDScrubReserve::RELEASE:
          m_fsm->process_event(ReplicaRelease{op, m->from});
          break;
      }
    }
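
The mapping from message type to state-machine event is one-to-one, which the following sketch makes explicit. `ReserveMsgType` and `event_for` are hypothetical names; only the four type names and the four event names come from the handler above.

```cpp
#include <string>

// Hypothetical mirror of the four MOSDScrubReserve message types.
enum class ReserveMsgType { REQUEST, GRANT, REJECT, RELEASE };

// Name of the scrubber event that each message type is turned into.
inline std::string event_for(ReserveMsgType t) {
  switch (t) {
    case ReserveMsgType::REQUEST:
      return "ReplicaReserveReq";
    case ReserveMsgType::GRANT:
      return "ReplicaGrant";
    case ReserveMsgType::REJECT:
      return "ReplicaReject";
    case ReserveMsgType::RELEASE:
      return "ReplicaRelease";
  }
  return "";  // not reached for valid enumerators
}
```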

Initial state: ReservingReplicas. Event: ReplicaGrant. Target state: ActiveScrubbing. The transition happens only once handle_reserve_grant() reports that the reservation process is done; until then the event is discarded.

    sc::result ReservingReplicas::react(const ReplicaGrant& ev)
    {
      DECLARE_LOCALS;  // 'scrbr' & 'pg_id' aliases
      dout(10) << "ReservingReplicas::react(const ReplicaGrant&)" << dendl;
      const auto& m = ev.m_op->get_req<MOSDScrubReserve>();

      auto& session = context<Session>();
      ceph_assert(session.m_reservations);
      if (session.m_reservations->handle_reserve_grant(*m, ev.m_from)) {
        // we are done with the reservation process
        return transit<ActiveScrubbing>();
      }
      return discard_event();
    }

The ActiveScrubbing constructor is where the scrub is initialized (scrbr->on_init()).

    ActiveScrubbing::ActiveScrubbing(my_context ctx)
        : my_base(ctx)
        , NamedSimply(context<ScrubMachine>().m_scrbr, "Session/ActiveScrubbing")
    {
      dout(10) << "-- state -->> ActiveScrubbing" << dendl;
      DECLARE_LOCALS;  // 'scrbr' & 'pg_id' aliases
      auto& session = context<Session>();
      session.m_osd_counters->inc(session.m_counters_idx->active_started_cnt);
      scrbr->get_clog()->debug()
        << fmt::format("{} {} starts", pg_id.pgid, scrbr->get_op_mode_text());
      scrbr->on_init();
    }

The initial sub-state of ActiveScrubbing is PendingTimer, so whenever the state machine enters ActiveScrubbing it automatically enters PendingTimer as well.

Initial state: ActiveScrubbing. Event: none (initial sub-state). Target state: PendingTimer.

    struct ActiveScrubbing
        : sc::state<ActiveScrubbing, Session, PendingTimer>, NamedSimply {
      explicit ActiveScrubbing(my_context ctx);  // constructor
      ~ActiveScrubbing();                        // destructor
    };

Unless a scrub sleep time is configured, the PendingTimer constructor immediately wraps a PGScrubResched item and puts it on the OSD work queue. Otherwise it schedules a SleepComplete timer event first.

    void OSDService::queue_for_scrub_resched(PG* pg, Scrub::scrub_prio_t with_priority)
    {
      // Resulting scrub event: 'InternalSchedScrub'
      queue_scrub_event_msg_default_cost<PGScrubResched>(pg, with_priority);
    }

    PendingTimer::PendingTimer(my_context ctx)
        : my_base(ctx)
        , NamedSimply(context<ScrubMachine>().m_scrbr, "Session/Act/PendingTimer")
    {
      dout(10) << "-- state -->> Session/Act/PendingTimer" << dendl;
      DECLARE_LOCALS;  // 'scrbr' & 'pg_id' aliases
      auto sleep_time = scrbr->get_scrub_sleep_time();
      if (sleep_time.count()) {
        // the following log line is used by osd-scrub-test.sh
        dout(20) << __func__ << " scrub state is PendingTimer, sleeping" << dendl;
        dout(20) << "PgScrubber: " << scrbr->get_spgid()
                 << " sleeping for " << sleep_time << dendl;
        m_sleep_timer = machine.schedule_timer_event_after<SleepComplete>(sleep_time);
      } else {
        scrbr->queue_for_scrub_resched(Scrub::scrub_prio_t::high_priority);  // here
      }
    }
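
The branch taken by the PendingTimer constructor depends only on whether the sleep time is zero. A tiny sketch of that decision follows; `PendingAction` and `on_enter_pending_timer` are hypothetical names, and the use of milliseconds is an assumption made for the example.

```cpp
#include <chrono>

// Hypothetical labels for the two branches of the PendingTimer constructor.
enum class PendingAction { ScheduleSleepTimer, RequeueNow };

// A non-zero sleep time means "arm a timer that later fires SleepComplete";
// zero means "queue the reschedule item right away".
inline PendingAction on_enter_pending_timer(std::chrono::milliseconds sleep_time) {
  return sleep_time.count() ? PendingAction::ScheduleSleepTimer
                            : PendingAction::RequeueNow;
}
```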

When the PGScrubResched item is dequeued from the OSD work queue, it delivers the InternalSchedScrub event to the state machine.

    void PGScrubResched::run(OSD* osd,
                             OSDShard* sdata,
                             PGRef& pg,
                             ThreadPool::TPHandle& handle)
    {
      pg->scrub_send_scrub_resched(epoch_queued, handle);
      pg->unlock();
    }

    void PgScrubber::send_scrub_resched(epoch_t epoch_queued)
    {
      dout(10) << "scrubber event -->> " << __func__ << " epoch: " << epoch_queued
               << dendl;
      if (is_message_relevant(epoch_queued)) {
        m_fsm->process_event(InternalSchedScrub{});
      }
      dout(10) << "scrubber event --<< " << __func__ << dendl;
    }

Initial state: PendingTimer. Event: InternalSchedScrub. Target state: NewChunk.

    struct PendingTimer : sc::state<PendingTimer, ActiveScrubbing>, NamedSimply {
      explicit PendingTimer(my_context ctx);

      using reactions = mpl::list<
        sc::transition<InternalSchedScrub, NewChunk>,
        sc::custom_reaction<SleepComplete>>;

      ScrubTimePoint entered_at = ScrubClock::now();
      ScrubMachine::timer_event_token_t m_sleep_timer;
      sc::result react(const SleepComplete&);
    };

The NewChunk constructor selects the next range of objects to scrub. If that range is free, a PGScrubChunkIsFree item is wrapped and put on the OSD queue.

    void OSDService::queue_scrub_chunk_free(PG* pg,
                                            Scrub::scrub_prio_t with_priority,
                                            uint64_t cost)
    {
      // Resulting scrub event: 'SelectedChunkFree'
      queue_scrub_event_msg<PGScrubChunkIsFree>(pg, with_priority, cost);
    }

    template <class MSG_TYPE>
    void OSDService::queue_scrub_event_msg(PG* pg,
                                           Scrub::scrub_prio_t with_priority,
                                           uint64_t cost)
    {
      const auto epoch = pg->get_osdmap_epoch();
      auto msg = new MSG_TYPE(pg->get_pgid(), epoch);
      dout(15) << "queue a scrub event (" << *msg << ") for " << *pg
               << ". Epoch: " << epoch << dendl;
      enqueue_back(OpSchedulerItem(
        unique_ptr<OpSchedulerItem::OpQueueable>(msg), cost,
        pg->scrub_requeue_priority(with_priority), ceph_clock_now(), 0, epoch));
    }

    /**
     * Preconditions:
     * - preemption data was set
     * - epoch start was updated
     */
    NewChunk::NewChunk(my_context ctx)
        : my_base(ctx)
        , NamedSimply(context<ScrubMachine>().m_scrbr, "Session/Act/NewChunk")
    {
      dout(10) << "-- state -->> Session/Act/NewChunk" << dendl;
      DECLARE_LOCALS;  // 'scrbr' & 'pg_id' aliases
      scrbr->get_preemptor().adjust_parameters();

      // choose range to work on
      // select_range_n_notify() will signal either SelectedChunkFree or
      // ChunkIsBusy. If 'busy', we transition to Blocked, and wait for the
      // range to become available.
      scrbr->select_range_n_notify();
    }

A worker thread dequeues the PGScrubChunkIsFree item, which results in the SelectedChunkFree event.

    void PGScrubChunkIsFree::run([[maybe_unused]] OSD* osd,
                                 OSDShard* sdata,
                                 PGRef& pg,
                                 ThreadPool::TPHandle& handle)
    {
      pg->scrub_send_chunk_free(epoch_queued, handle);
      pg->unlock();
    }

    void scrub_send_chunk_free(epoch_t queued, ThreadPool::TPHandle& handle)
    {
      forward_scrub_event(&ScrubPgIF::send_chunk_free, queued, "SelectedChunkFree");
    }

Initial state: NewChunk. Event: SelectedChunkFree. Target state: WaitPushes.

    sc::result NewChunk::react(const SelectedChunkFree&)
    {
      DECLARE_LOCALS;  // 'scrbr' & 'pg_id' aliases
      dout(10) << "NewChunk::react(const SelectedChunkFree&)" << dendl;

      scrbr->set_subset_last_update(scrbr->search_log_for_updates());
      return transit<WaitPushes>();
    }

The WaitPushes constructor posts an ActivePushesUpd event.

    WaitPushes::WaitPushes(my_context ctx)
        : my_base(ctx)
        , NamedSimply(context<ScrubMachine>().m_scrbr, "Session/Act/WaitPushes")
    {
      dout(10) << " -- state -->> Session/Act/WaitPushes" << dendl;
      post_event(ActivePushesUpd{});
    }

Initial state: WaitPushes. Event: ActivePushesUpd. Target state: WaitLastUpdate. The transition is taken only when no active pushes are pending.

    sc::result WaitPushes::react(const ActivePushesUpd&)
    {
      DECLARE_LOCALS;  // 'scrbr' & 'pg_id' aliases
      dout(10)
        << "WaitPushes::react(const ActivePushesUpd&) pending_active_pushes: "
        << scrbr->pending_active_pushes() << dendl;

      if (!scrbr->pending_active_pushes()) {
        // done waiting
        return transit<WaitLastUpdate>();
      }
      return discard_event();
    }

The WaitLastUpdate constructor posts an UpdatesApplied event.

    WaitLastUpdate::WaitLastUpdate(my_context ctx)
        : my_base(ctx)
        , NamedSimply(context<ScrubMachine>().m_scrbr, "Session/Act/WaitLastUpdate")
    {
      dout(10) << " -- state -->> Session/Act/WaitLastUpdate" << dendl;
      post_event(UpdatesApplied{});
    }

Handling UpdatesApplied in turn posts an InternalAllUpdates event, provided has_pg_marked_new_updates() returns true.

    void WaitLastUpdate::on_new_updates(const UpdatesApplied&)
    {
      DECLARE_LOCALS;  // 'scrbr' & 'pg_id' aliases
      dout(10) << "WaitLastUpdate::on_new_updates(const UpdatesApplied&)" << dendl;

      if (scrbr->has_pg_marked_new_updates()) {
        post_event(InternalAllUpdates{});
      } else {
        // will be requeued by op_applied
        dout(10) << "wait for EC read/modify/writes to queue" << dendl;
      }
    }

Initial state: WaitLastUpdate. Event: InternalAllUpdates. Target state: BuildMap.

    sc::result WaitLastUpdate::react(const InternalAllUpdates&)
    {
      DECLARE_LOCALS;  // 'scrbr' & 'pg_id' aliases
      dout(10) << "WaitLastUpdate::react(const InternalAllUpdates&)" << dendl;

      scrbr->get_replicas_maps(scrbr->get_preemptor().is_preemptable());
      return transit<BuildMap>();
    }

The BuildMap constructor posts an IntLocalMapDone event once the local scrub map has been built (or IntBmPreempted if the scrub was preempted).

    BuildMap::BuildMap(my_context ctx)
        : my_base(ctx)
        , NamedSimply(context<ScrubMachine>().m_scrbr, "Session/Act/BuildMap")
    {
      dout(10) << " -- state -->> Session/Act/BuildMap" << dendl;
      DECLARE_LOCALS;  // 'scrbr' & 'pg_id' aliases
      auto& session = context<Session>();

      // no need to check for an epoch change, as all possible flows that brought
      // us here have a check_interval() verification of their final event.

      if (scrbr->get_preemptor().was_preempted()) {
        // we were preempted, either directly or by a replica
        dout(10) << __func__ << " preempted!!!" << dendl;
        scrbr->mark_local_map_ready();
        post_event(IntBmPreempted{});
        session.m_perf_set->inc(scrbcnt_preempted);
      } else {
        // note that build_primary_map_chunk() may return -EINPROGRESS, but no
        // other error value (as those errors would cause it to crash the OSD).
        if (scrbr->build_primary_map_chunk() == -EINPROGRESS) {
          // must wait for the backend to finish. No specific event provided.
          // build_primary_map_chunk() has already requeued us.
          dout(20) << "waiting for the backend..." << dendl;
        } else {
          // the local map was created
          post_event(IntLocalMapDone{});  // here
        }
      }
    }

Initial state: BuildMap. Event: IntLocalMapDone. Target state: WaitReplicas.

    sc::result BuildMap::react(const IntLocalMapDone&)
    {
      DECLARE_LOCALS;  // 'scrbr' & 'pg_id' aliases
      dout(10) << "BuildMap::react(const IntLocalMapDone&)" << dendl;

      scrbr->mark_local_map_ready();
      return transit<WaitReplicas>();
    }

The WaitReplicas constructor posts a GotReplicas event.

    WaitReplicas::WaitReplicas(my_context ctx)
        : my_base(ctx)
        , NamedSimply(context<ScrubMachine>().m_scrbr, "Session/Act/WaitReplicas")
    {
      dout(10) << "-- state -->> Session/Act/WaitReplicas" << dendl;
      post_event(GotReplicas{});
    }

Initial state: WaitReplicas. Event: GotReplicas. Target state: WaitDigestUpdate (or back to PendingTimer if the chunk was preempted).

    sc::result WaitReplicas::react(const GotReplicas&)
    {
      DECLARE_LOCALS;  // 'scrbr' & 'pg_id' aliases
      dout(10) << "WaitReplicas::react(const GotReplicas&)" << dendl;

      if (!all_maps_already_called && scrbr->are_all_maps_available()) {
        dout(10) << "WaitReplicas::react(const GotReplicas&) got all" << dendl;

        all_maps_already_called = true;

        // were we preempted?
        if (scrbr->get_preemptor().disable_and_test()) {  // a test&set
          dout(10) << "WaitReplicas::react(const GotReplicas&) PREEMPTED!" << dendl;
          return transit<PendingTimer>();
        } else {
          scrbr->maps_compare_n_cleanup();
          return transit<WaitDigestUpdate>();  // here
        }
      } else {
        return discard_event();
      }
    }
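
The three possible outcomes of this reaction can be captured as a small pure decision function. This is only an illustrative sketch: `Next` and `WaitReplicasSketch` are hypothetical names, and the two booleans stand in for are_all_maps_available() and the preemptor's disable_and_test().

```cpp
// Hypothetical labels for the outcomes of WaitReplicas::react(GotReplicas).
enum class Next { Stay, PendingTimer, WaitDigestUpdate };

struct WaitReplicasSketch {
  bool all_maps_already_called = false;

  Next on_got_replicas(bool all_maps_available, bool preempted) {
    if (!all_maps_already_called && all_maps_available) {
      all_maps_already_called = true;  // act on the full set of maps only once
      return preempted ? Next::PendingTimer : Next::WaitDigestUpdate;
    }
    return Next::Stay;  // corresponds to discard_event()
  }
};
```

The one-shot flag is what keeps a late, duplicate GotReplicas event from comparing the maps a second time.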

The WaitDigestUpdate constructor posts a DigestUpdate event.

    WaitDigestUpdate::WaitDigestUpdate(my_context ctx)
        : my_base(ctx)
        , NamedSimply(context<ScrubMachine>().m_scrbr, "Session/Act/WaitDigestUpdate")
    {
      DECLARE_LOCALS;  // 'scrbr' & 'pg_id' aliases
      dout(10) << "-- state -->> Session/Act/WaitDigestUpdate" << dendl;

      // perform an initial check: maybe we already
      // have all the updates we need:
      // (note that DigestUpdate is usually an external event)
      post_event(DigestUpdate{});
    }

Handling DigestUpdate calls on_digest_updates(). Once the last chunk has been processed, this puts a PGScrubScrubFinished item on the queue.

    sc::result WaitDigestUpdate::react(const DigestUpdate&)
    {
      DECLARE_LOCALS;  // 'scrbr' & 'pg_id' aliases
      dout(10) << "WaitDigestUpdate::react(const DigestUpdate&)" << dendl;

      // on_digest_updates() will either:
      // - do nothing - if we are still waiting for updates, or
      // - finish the scrubbing of the current chunk, and:
      //   - send NextChunk, or
      //   - send ScrubFinished
      scrbr->on_digest_updates();
      return discard_event();
    }

    void OSDService::queue_scrub_is_finished(PG *pg)
    {
      // Resulting scrub event: 'ScrubFinished'
      queue_scrub_event_msg_default_cost<PGScrubScrubFinished>(
        pg, Scrub::scrub_prio_t::high_priority);
    }

The PGScrubScrubFinished item triggers the ScrubFinished event.

    void PGScrubScrubFinished::run([[maybe_unused]] OSD* osd,
                                   OSDShard* sdata,
                                   PGRef& pg,
                                   ThreadPool::TPHandle& handle)
    {
      pg->scrub_send_scrub_is_finished(epoch_queued, handle);
      pg->unlock();
    }

    void scrub_send_scrub_is_finished(epoch_t queued, ThreadPool::TPHandle& handle)
    {
      forward_scrub_event(&ScrubPgIF::send_scrub_is_finished, queued, "ScrubFinished");
    }

Initial state: WaitDigestUpdate. Event: ScrubFinished. Target state: PrimaryIdle. At this point the scrub flow is complete.

    sc::result WaitDigestUpdate::react(const ScrubFinished&)
    {
      DECLARE_LOCALS;  // 'scrbr' & 'pg_id' aliases
      dout(10) << "WaitDigestUpdate::react(const ScrubFinished&)" << dendl;
      auto& session = context<Session>();

      session.m_osd_counters->inc(session.m_counters_idx->successful_cnt);

      // set the 'scrub duration'
      auto duration = machine.get_time_scrubbing();
      scrbr->set_scrub_duration(ceil<milliseconds>(duration));
      machine.m_session_started_at.reset();
      session.m_osd_counters->tinc(
        session.m_counters_idx->successful_elapsed, duration);

      scrbr->scrub_finish();  // here
      return transit<PrimaryIdle>();
    }
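
The transitions walked through so far form a single loop from PrimaryIdle back to PrimaryIdle. As a summary, they can be written as a lookup table. This is a simplified sketch of the happy path only: the real machine is built on Boost.Statechart with nested states, whereas `scrub_transitions` and `next_state` are hypothetical helpers, the empty event name stands for "enter the initial sub-state", and guards such as "only the last grant" or "no pending pushes" are ignored.

```cpp
#include <map>
#include <string>
#include <utility>

using Key = std::pair<std::string, std::string>;  // (state, event)

// Happy-path transitions as described in this walkthrough.
inline const std::map<Key, std::string>& scrub_transitions() {
  static const std::map<Key, std::string> table = {
    {{"PrimaryIdle", "StartScrub"}, "ReservingReplicas"},
    {{"ReservingReplicas", "ReplicaGrant"}, "ActiveScrubbing"},
    {{"ActiveScrubbing", ""}, "PendingTimer"},  // initial sub-state
    {{"PendingTimer", "InternalSchedScrub"}, "NewChunk"},
    {{"NewChunk", "SelectedChunkFree"}, "WaitPushes"},
    {{"WaitPushes", "ActivePushesUpd"}, "WaitLastUpdate"},
    {{"WaitLastUpdate", "InternalAllUpdates"}, "BuildMap"},
    {{"BuildMap", "IntLocalMapDone"}, "WaitReplicas"},
    {{"WaitReplicas", "GotReplicas"}, "WaitDigestUpdate"},
    {{"WaitDigestUpdate", "ScrubFinished"}, "PrimaryIdle"},
  };
  return table;
}

// Unknown (state, event) pairs leave the state unchanged.
inline std::string next_state(const std::string& state, const std::string& event) {
  const auto it = scrub_transitions().find({state, event});
  return it == scrub_transitions().end() ? state : it->second;
}
```

Feeding the ten events in order, starting from PrimaryIdle, ends in PrimaryIdle again.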

scrub_finish() writes a cluster log line such as the following, which marks the end of the scrub:

    Aug 27 08:41:21 ceph02 ceph-osd[499833]: log_channel(cluster) log [DBG] : 3.3 deep-scrub ok

    void PgScrubber::scrub_finish()
    {
      dout(10) << __func__ << " before flags: " << m_flags << ". repair state: "
               << (state_test(PG_STATE_REPAIR) ? "repair" : "no-repair")
               << ". deep_scrub_on_error: " << m_flags.deep_scrub_on_error << dendl;

      ceph_assert(m_pg->is_locked());
      ceph_assert(is_queued_or_active());

      // if the repair request comes from auto-repair and there is a large
      // number of objects known to be damaged, we cancel the auto-repair
      if (m_is_repair && m_flags.auto_repair &&
          ScrubJob::is_repairs_count_limited(m_active_target->urgency()) &&
          m_be->authoritative_peers_count() >
            static_cast<int>(m_pg->cct->_conf->osd_scrub_auto_repair_num_errors)) {
        dout(5) << fmt::format(
                     "{}: undoing the repair. Damaged objects count ({}) is "
                     "above configured limit ({})",
                     __func__, m_be->authoritative_peers_count(),
                     m_pg->cct->_conf->osd_scrub_auto_repair_num_errors)
                << dendl;
        state_clear(PG_STATE_REPAIR);  // not expected to be set, anyway
        m_is_repair = false;
        update_op_mode_text();
      }

      m_be->update_repair_status(m_is_repair);

      // if the count of damaged objects found in shallow-scrubbing is not
      // too high - do a deep scrub to auto repair
      bool do_auto_scrub = false;
      if (m_flags.deep_scrub_on_error && m_be->authoritative_peers_count() &&
          m_be->authoritative_peers_count() <=
            static_cast<int>(m_pg->cct->_conf->osd_scrub_auto_repair_num_errors)) {
        ceph_assert(!m_is_deep);
        do_auto_scrub = true;
        dout(10) << fmt::format("{}: will initiate a deep scrub to fix {} errors",
                                __func__, m_be->authoritative_peers_count())
                 << dendl;
      }

      m_flags.deep_scrub_on_error = false;

      // type-specific finish (can tally more errors)
      _scrub_finish();

      /// \todo fix the relevant scrub test so that we would not need the extra log
      /// line here (even if the following 'if' is false)
      if (m_be->authoritative_peers_count()) {
        auto err_msg = fmt::format("{} {} {} missing, {} inconsistent objects",
                                   m_pg->info.pgid, m_mode_desc,
                                   m_be->m_missing.size(),
                                   m_be->m_inconsistent.size());
        dout(2) << err_msg << dendl;
        m_osds->clog->error() << fmt::to_string(err_msg);
      }

      // note that the PG_STATE_REPAIR might have changed above
      if (m_be->authoritative_peers_count() && m_is_repair) {
        state_clear(PG_STATE_CLEAN);
        // we know we have a problem, so it's OK to set the user-visible flag
        // even if we only reached here via auto-repair
        state_set(PG_STATE_REPAIR);
        update_op_mode_text();
        m_be->update_repair_status(true);
        m_fixed_count += m_be->scrub_process_inconsistent();
      }

      bool has_error = (m_be->authoritative_peers_count() > 0) && m_is_repair;

      {
        stringstream oss;
        oss << m_pg->info.pgid.pgid << " " << m_mode_desc << " ";
        int total_errors = m_shallow_errors + m_deep_errors;
        if (total_errors)
          oss << total_errors << " errors";
        else
          oss << "ok";
        if (!m_is_deep && m_pg->info.stats.stats.sum.num_deep_scrub_errors)
          oss << " ( " << m_pg->info.stats.stats.sum.num_deep_scrub_errors
              << " remaining deep scrub error details lost)";
        if (m_is_repair)
          oss << ", " << m_fixed_count << " fixed";
        if (total_errors)
          m_osds->clog->error(oss);
        else
          // here: Aug 27 08:41:21 ceph02 ceph-osd[499833]: log_channel(cluster) log [DBG] : 3.3 deep-scrub ok
          m_osds->clog->debug(oss);
      }

      // Since we don't know which errors were fixed, we can only clear them
      // when every one has been fixed.
      if (m_is_repair) {
        dout(15) << fmt::format("{}: {} errors. {} errors fixed",
                                __func__, m_shallow_errors + m_deep_errors,
                                m_fixed_count)
                 << dendl;
        if (m_fixed_count == m_shallow_errors + m_deep_errors) {
          ceph_assert(m_is_deep);
          m_shallow_errors = 0;
          m_deep_errors = 0;
          dout(20) << __func__ << " All may be fixed" << dendl;
        } else if (has_error) {
          // a recovery will be initiated (below). Arrange for a deep-scrub
          // after the recovery, to get the updated error counts.
          m_after_repair_scrub_required = true;
          dout(20) << fmt::format(
                        "{}: setting for deep-scrub-after-repair ({} errors. {} "
                        "errors fixed)",
                        __func__, m_shallow_errors + m_deep_errors, m_fixed_count)
                   << dendl;
        } else if (m_shallow_errors || m_deep_errors) {
          // We have errors but nothing can be fixed, so there is no repair
          // possible.
          state_set(PG_STATE_FAILED_REPAIR);
          dout(10) << __func__ << " " << (m_shallow_errors + m_deep_errors)
                   << " error(s) present with no repair possible" << dendl;
        }
      }

      {
        // finish up
        ObjectStore::Transaction t;
        m_pg->recovery_state.update_stats(
          [this](auto& history, auto& stats) {
            dout(10) << "m_pg->recovery_state.update_stats() errors:"
                     << m_shallow_errors << "/" << m_deep_errors << " deep? "
                     << m_is_deep << dendl;
            utime_t now = ceph_clock_now();
            history.last_scrub = m_pg->recovery_state.get_info().last_update;
            history.last_scrub_stamp = now;
            if (m_is_deep) {
              history.last_deep_scrub = m_pg->recovery_state.get_info().last_update;
              history.last_deep_scrub_stamp = now;
            }

            if (m_is_deep) {
              if ((m_shallow_errors == 0) && (m_deep_errors == 0)) {
                history.last_clean_scrub_stamp = now;
              }
              stats.stats.sum.num_shallow_scrub_errors = m_shallow_errors;
              stats.stats.sum.num_deep_scrub_errors = m_deep_errors;
              auto omap_stats = m_be->this_scrub_omapstats();
              stats.stats.sum.num_large_omap_objects =
                omap_stats.large_omap_objects;
              stats.stats.sum.num_omap_bytes = omap_stats.omap_bytes;
              stats.stats.sum.num_omap_keys = omap_stats.omap_keys;
              dout(19) << "scrub_finish shard " << m_pg_whoami
                       << " num_omap_bytes = " << stats.stats.sum.num_omap_bytes
                       << " num_omap_keys = " << stats.stats.sum.num_omap_keys
                       << dendl;
            } else {
              stats.stats.sum.num_shallow_scrub_errors = m_shallow_errors;
              // XXX: last_clean_scrub_stamp doesn't mean the pg is not inconsistent
              // because of deep-scrub errors
              if (m_shallow_errors == 0) {
                history.last_clean_scrub_stamp = now;
              }
            }

            stats.stats.sum.num_scrub_errors =
              stats.stats.sum.num_shallow_scrub_errors +
              stats.stats.sum.num_deep_scrub_errors;

            if (m_flags.check_repair) {
              m_flags.check_repair = false;
              if (m_pg->info.stats.stats.sum.num_scrub_errors) {
                state_set(PG_STATE_FAILED_REPAIR);
                dout(10) << "scrub_finish "
                         << m_pg->info.stats.stats.sum.num_scrub_errors
                         << " error(s) still present after re-scrub" << dendl;
              }
            }
            return true;
          },
          &t);
        int tr = m_osds->store->queue_transaction(m_pg->ch, std::move(t), nullptr);
        ceph_assert(tr == 0);
      }

      if (has_error) {
        m_pg->queue_peering_event(PGPeeringEventRef(
          std::make_shared<PGPeeringEvent>(get_osdmap_epoch(),
                                           get_osdmap_epoch(),
                                           PeeringState::DoRecovery())));
      } else {
        m_is_repair = false;
        state_clear(PG_STATE_REPAIR);
        update_op_mode_text();
      }

      cleanup_on_finish();
      m_active_target.reset();
      if (do_auto_scrub) {
        request_rescrubbing();
      }
      // determine the next scrub time
      update_scrub_job();

      if (m_pg->is_active() && m_pg->is_primary()) {
        m_pg->recovery_state.share_pg_info();
      }
    }
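
The final cluster log line is assembled from only a handful of values. The string-building part of scrub_finish() is reproduced here as a free function so that the format is easy to see. `scrub_summary` and its parameter names are hypothetical; the concatenation logic follows the stringstream block above.

```cpp
#include <sstream>
#include <string>

// Builds the summary text, e.g. "3.3 deep-scrub ok".
// remaining_deep_errors stands in for
// m_pg->info.stats.stats.sum.num_deep_scrub_errors.
inline std::string scrub_summary(const std::string& pgid,
                                 const std::string& mode_desc,
                                 int shallow_errors,
                                 int deep_errors,
                                 bool is_deep,
                                 int remaining_deep_errors,
                                 bool is_repair,
                                 int fixed_count) {
  std::ostringstream oss;
  oss << pgid << " " << mode_desc << " ";
  const int total_errors = shallow_errors + deep_errors;
  if (total_errors) {
    oss << total_errors << " errors";
  } else {
    oss << "ok";
  }
  if (!is_deep && remaining_deep_errors) {
    oss << " ( " << remaining_deep_errors
        << " remaining deep scrub error details lost)";
  }
  if (is_repair) {
    oss << ", " << fixed_count << " fixed";
  }
  return oss.str();
}
```

A clean deep scrub of PG 3.3 produces "3.3 deep-scrub ok", the text seen at the end of the log line quoted earlier. In the real code a non-zero error count sends the line to the error channel instead of the debug channel.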