Python Script for Scraping Weibo Hot Searches Daily: Upgraded Version

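Main optimizations:

Scheduling adjustments:

The scheduled task now runs every 10 minutes instead of every hour.

The random delay before each request is reduced from 1-3 seconds to 0.5-1.5 seconds.

The request timeout is shortened from 10 seconds to 8 seconds.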

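Performance optimizations:

Removed the handling of ad entries to cut unnecessary processing.

Streamlined the data structure to reduce memory usage.

Added a cleanup routine that automatically deletes data older than 7 days.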

User experience improvements:

The HTML page now refreshes itself automatically (every 5 minutes).

Added a manual refresh button.

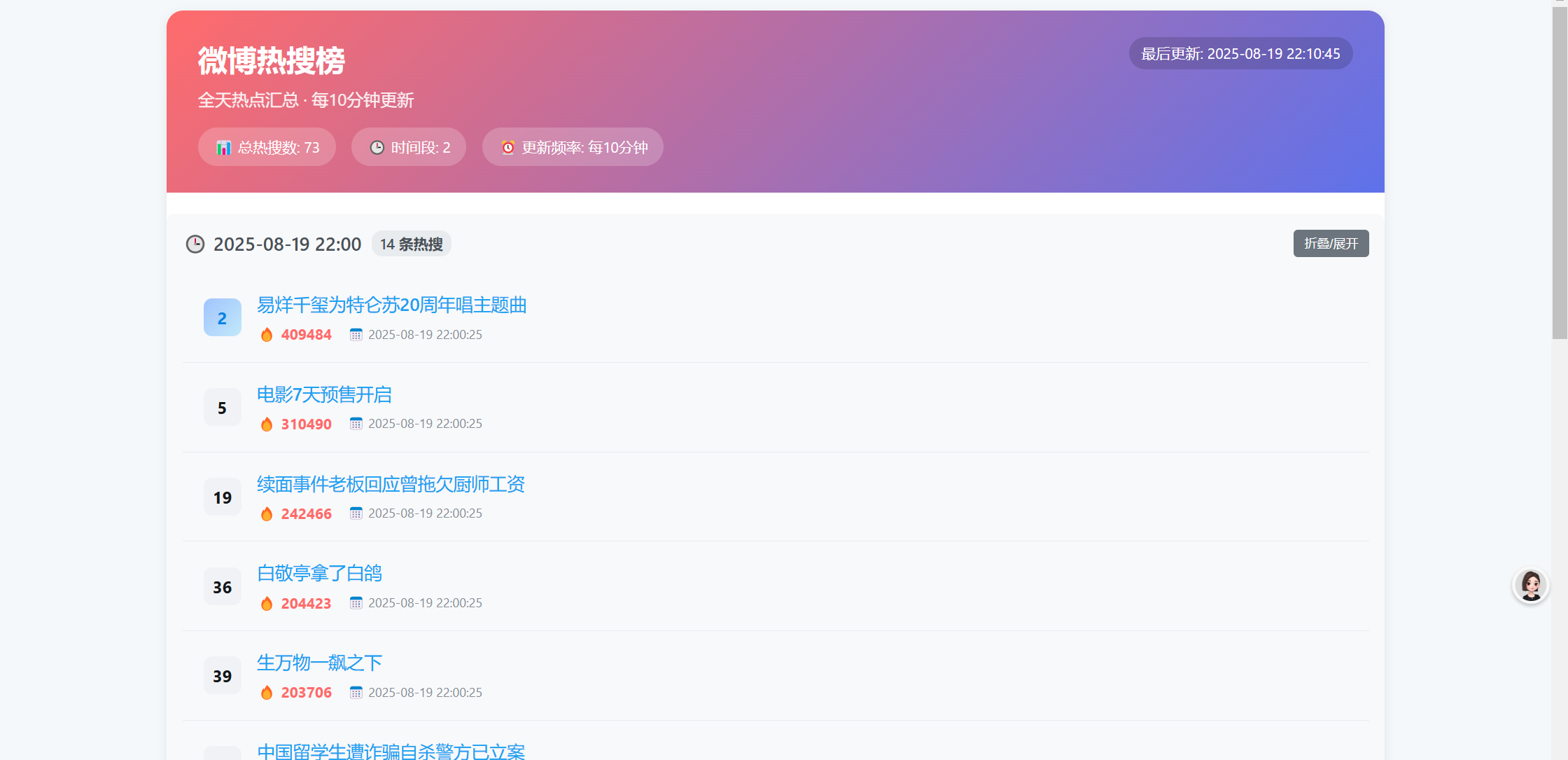

Added summary statistics (total number of hot searches, number of time slots, and so on).

Improved the layout on mobile devices.

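Code robustness:

Added exception handling around the network request, file reads and writes, and cleanup.

Improved the data storage and loading logic: entries are kept in one JSON file per day.

Added a data cleanup mechanism so stored data does not grow without bound.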

Visual polish:

Added a site icon (favicon).

Refined the color scheme and layout.

Improved the responsive design for mobile devices.

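This optimized version fetches the Weibo hot-search list every 10 minutes, merges any new entries into the current day's JSON file, and regenerates that day's HTML page, while keeping the title-based de-duplication. The page also reloads itself every 5 minutes so readers see the latest data.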
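The de-duplication mentioned above keys on the hot-search title. Here is a minimal sketch of that merge step, separated from file I/O so it can be tried in isolation. The function name `merge_new_items` and the sample data are hypothetical and not part of the script.

```python
def merge_new_items(existing, fetched):
    """Return (merged, added_count), keeping only fetched items with unseen titles."""
    seen = {item["title"] for item in existing}
    merged = list(existing)
    added = 0
    for item in fetched:
        if item["title"] not in seen:
            seen.add(item["title"])  # also guards against repeats within one fetch
            merged.append(item)
            added += 1
    return merged, added


# Sample data (made up for illustration)
existing = [{"title": "A", "timestamp": "2025-01-01 08:00:00"}]
fetched = [
    {"title": "A", "timestamp": "2025-01-01 08:10:00"},
    {"title": "B", "timestamp": "2025-01-01 08:10:00"},
]
merged, added = merge_new_items(existing, fetched)
print(len(merged), added)  # 2 1
```

Because a title is stored only the first time it appears, later changes in rank or heat for the same topic are not recorded within that day.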

import html
import json
import os
import random
import shutil
import time
from datetime import datetime, timedelta
from urllib.parse import quote

import requests
import schedule


def fetch_weibo_hot():
    """Fetch Weibo hot-search data from the JSON API (avoids breakage when the HTML structure changes)."""
    api_url = "https://weibo.com/ajax/side/hotSearch"
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36',
        'Referer': 'https://weibo.com/',
        'Cookie': 'XSRF-TOKEN=RKnNBEaQBrp=='  # replace with your own Cookie
    }
    try:
        # Random delay to reduce the chance of being blocked
        time.sleep(random.uniform(0.5, 1.5))
        response = requests.get(api_url, headers=headers, timeout=8)
        response.raise_for_status()
        # Parse the JSON payload
        data = response.json()
        # Extract the hot-search entries
        hot_items = []
        for group in data['data']['realtime']:
            # Regular hot-search entry
            if 'word' in group:
                item = {
                    'rank': group.get('rank', ''),
                    'title': group['word'],
                    'hot': group.get('num', '0'),
                    # URL-encode the keyword so characters such as '#' or spaces do not break the link
                    'link': f"https://s.weibo.com/weibo?q={quote(group['word'])}",
                    'timestamp': datetime.now().strftime("%Y-%m-%d %H:%M:%S"),
                    'source': 'weibo'
                }
                hot_items.append(item)
        # Keep only the top 50 entries
        return hot_items[:50]
    except Exception as e:
        print(f"🚨 Failed to fetch hot-search data: {e}")
        return []


def load_existing_hot_searches(date_str):
    """Load the hot-search data already stored for the given day."""
    json_file = os.path.join("weibo_hot", date_str, "hot_searches.json")
    if os.path.exists(json_file):
        try:
            with open(json_file, 'r', encoding='utf-8') as f:
                return json.load(f)
        except Exception as e:
            print(f"Failed to read existing hot-search data: {e}")
            return []
    return []


def save_hot_data_json(hot_data, date_str):
    """Merge new hot-search data into the day's JSON file."""
    daily_dir = os.path.join("weibo_hot", date_str)
    os.makedirs(daily_dir, exist_ok=True)
    json_file = os.path.join(daily_dir, "hot_searches.json")
    # Load existing data
    existing_data = load_existing_hot_searches(date_str)
    existing_titles = {item['title'] for item in existing_data}
    # Drop entries whose title is already stored
    new_data = [item for item in hot_data if item['title'] not in existing_titles]
    # Merge
    all_data = existing_data + new_data
    # Write back to the JSON file
    with open(json_file, 'w', encoding='utf-8') as f:
        json.dump(all_data, f, ensure_ascii=False, indent=2)
    return all_data, len(new_data)


def generate_html(hot_data, date_str):
    """Build the HTML page for one day."""
    if not hot_data:
        return "<html><body><h1>No hot-search data available</h1></body></html>"
    # Group entries by hour
    time_groups = {}
    for item in hot_data:
        time_key = item['timestamp'][:13]  # keep only "YYYY-MM-DD HH"
        if time_key not in time_groups:
            time_groups[time_key] = []
        time_groups[time_key].append(item)
    # Newest hour first
    sorted_times = sorted(time_groups.keys(), reverse=True)
    # Summary statistics
    total_count = len(hot_data)
    time_count = len(time_groups)
    now_str = datetime.now().strftime("%Y-%m-%d %H:%M:%S")
    html_content = f"""<!DOCTYPE html>
<html lang="zh-CN">
<head>
<meta charset="UTF-8">
<meta name="viewport" content="width=device-width, initial-scale=1.0">
<title>Weibo Hot Search {date_str}</title>
<link rel="icon" href="data:image/svg+xml,<svg xmlns=%22http://www.w3.org/2000/svg%22 viewBox=%220 0 100 100%22><text y=%22.9em%22 font-size=%2290%22>🔥</text></svg>">
<style>
* {{ box-sizing: border-box; margin: 0; padding: 0; }}
body {{ font-family: -apple-system, BlinkMacSystemFont, "Segoe UI", Roboto, "PingFang SC", "Microsoft YaHei", sans-serif; background-color: #f5f8fa; color: #14171a; line-height: 1.5; padding: 20px; max-width: 1200px; margin: 0 auto; }}
.container {{ background: white; border-radius: 16px; box-shadow: 0 3px 10px rgba(0, 0, 0, 0.08); overflow: hidden; margin-bottom: 30px; }}
.header {{ background: linear-gradient(135deg, #ff6b6b, #5e72eb); color: white; padding: 25px 30px; position: relative; }}
.title {{ font-size: 28px; font-weight: 700; margin-bottom: 5px; }}
.subtitle {{ font-size: 16px; opacity: 0.9; }}
.stats {{ margin-top: 15px; display: flex; gap: 15px; flex-wrap: wrap; }}
.stat-item {{ background: rgba(255, 255, 255, 0.2); padding: 8px 15px; border-radius: 20px; font-size: 14px; }}
.update-time {{ position: absolute; top: 25px; right: 30px; background: rgba(0, 0, 0, 0.15); padding: 5px 12px; border-radius: 20px; font-size: 14px; }}
.time-section {{ margin: 20px 0; padding: 15px; background: #f8f9fa; border-radius: 8px; }}
.time-header {{ font-size: 18px; font-weight: 600; margin-bottom: 15px; color: #495057; display: flex; align-items: center; justify-content: space-between; }}
.time-info {{ display: flex; align-items: center; }}
.time-count {{ background: #e9ecef; padding: 2px 8px; border-radius: 10px; font-size: 14px; margin-left: 10px; }}
.hot-list {{ padding: 0; }}
.hot-item {{ display: flex; align-items: center; padding: 16px 20px; border-bottom: 1px solid #e6ecf0; transition: background 0.2s; }}
.hot-item:hover {{ background-color: #f7f9fa; }}
.rank {{ width: 36px; height: 36px; line-height: 36px; text-align: center; font-weight: bold; font-size: 16px; background: #f0f2f5; border-radius: 8px; margin-right: 15px; flex-shrink: 0; }}
.top1 {{ background: linear-gradient(135deg, #ff9a9e, #fad0c4); color: #d63031; }}
.top2 {{ background: linear-gradient(135deg, #a1c4fd, #c2e9fb); color: #0984e3; }}
.top3 {{ background: linear-gradient(135deg, #ffecd2, #fcb69f); color: #e17055; }}
.hot-content {{ flex: 1; min-width: 0; }}
.hot-title {{ font-size: 17px; font-weight: 500; margin-bottom: 6px; white-space: nowrap; overflow: hidden; text-overflow: ellipsis; }}
.hot-stats {{ display: flex; align-items: center; color: #657786; font-size: 14px; gap: 15px; flex-wrap: wrap; }}
.hot-value {{ color: #ff6b6b; font-weight: 700; }}
.hot-time {{ font-size: 12px; color: #868e96; }}
.link {{ color: #1da1f2; text-decoration: none; transition: color 0.2s; }}
.link:hover {{ color: #0d8bda; text-decoration: underline; }}
.footer {{ text-align: center; padding: 20px; color: #657786; font-size: 13px; border-top: 1px solid #e6ecf0; }}
.no-data {{ text-align: center; padding: 40px; color: #657786; }}
.collapse-btn {{ background: #6c757d; color: white; border: none; padding: 5px 10px; border-radius: 4px; cursor: pointer; font-size: 12px; }}
.collapse-btn:hover {{ background: #5a6268; }}
.collapsed .hot-list {{ display: none; }}
.auto-refresh {{ text-align: center; margin: 20px 0; }}
.refresh-btn {{ background: #28a745; color: white; border: none; padding: 10px 20px; border-radius: 5px; cursor: pointer; font-size: 14px; }}
.refresh-btn:hover {{ background: #218838; }}
@media (max-width: 768px) {{
    body {{ padding: 10px; }}
    .header {{ padding: 20px 15px; }}
    .title {{ font-size: 22px; }}
    .update-time {{ position: static; margin-top: 10px; }}
    .hot-item {{ padding: 14px 15px; }}
    .hot-title {{ font-size: 16px; }}
    .hot-stats {{ flex-direction: column; align-items: flex-start; gap: 5px; }}
}}
</style>
</head>
<body>
<div class="container">
<div class="header">
<h1 class="title">Weibo Hot Search</h1>
<div class="subtitle">All-day summary · updated every 10 minutes</div>
<div class="stats">
<div class="stat-item">📊 Total hot searches: {total_count}</div>
<div class="stat-item">🕒 Time slots: {time_count}</div>
<div class="stat-item">⏰ Update frequency: every 10 minutes</div>
</div>
<div class="update-time">Last updated: {now_str}</div>
</div>
"""
    # Append one section per hour
    rank_classes = {1: "top1", 2: "top2", 3: "top3"}
    for time_key in sorted_times:
        time_display = f"{time_key}:00"
        time_data = time_groups[time_key]
        html_content += f"""<div class="time-section">
<div class="time-header">
<div class="time-info">🕒 {time_display}<span class="time-count">{len(time_data)} hot searches</span></div>
<button class="collapse-btn" onclick="this.parentElement.parentElement.classList.toggle('collapsed')">Collapse/Expand</button>
</div>
<div class="hot-list">
"""
        for item in time_data:
            rank_class = rank_classes.get(item['rank'], "")
            hot_value = f"<span class='hot-value'>🔥 {item['hot']}</span>" if item['hot'] else ""
            # Escape text that comes from the API before placing it in the markup
            title_html = html.escape(str(item['title']))
            link_html = html.escape(str(item['link']), quote=True)
            html_content += f"""<div class="hot-item">
<div class="rank {rank_class}">{item['rank']}</div>
<div class="hot-content">
<div class="hot-title"><a href="{link_html}" class="link" target="_blank">{title_html}</a></div>
<div class="hot-stats">{hot_value}<span class="hot-time">📅 {item['timestamp']}</span></div>
</div>
</div>
"""
        html_content += """</div>
</div>
"""
    html_content += f"""<div class="auto-refresh">
<button class="refresh-btn" onclick="location.reload()">🔄 Refresh page</button>
<p>Data updates every 10 minutes and the page reloads every 5 minutes; you can also refresh manually</p>
</div>
<div class="footer">Data source: Weibo Hot Search • updated every 10 minutes • last updated: {now_str} • for learning purposes only</div>
</div>
<script>
// Collapse every time section except the newest one by default
document.addEventListener('DOMContentLoaded', function() {{
    var sections = document.querySelectorAll('.time-section');
    sections.forEach(function(section, index) {{
        if (index > 0) {{ // keep the newest section expanded
            section.classList.add('collapsed');
        }}
    }});
    // Auto-refresh every 5 minutes
    setTimeout(function() {{
        location.reload();
    }}, 5 * 60 * 1000);
}});
</script>
</body>
</html>"""
    return html_content


def cleanup_old_data():
    """Delete data older than 7 days."""
    try:
        now = datetime.now()
        cutoff_date = (now - timedelta(days=7)).strftime("%Y-%m-%d")
        weibo_hot_dir = "weibo_hot"
        if os.path.exists(weibo_hot_dir):
            for date_dir in os.listdir(weibo_hot_dir):
                # Directory names are YYYY-MM-DD, so string comparison matches date order
                if date_dir < cutoff_date:
                    dir_path = os.path.join(weibo_hot_dir, date_dir)
                    shutil.rmtree(dir_path)
                    print(f"🗑️ Removed expired data: {date_dir}")
    except Exception as e:
        print(f"Error while cleaning up old data: {e}")


def save_hot_data():
    """Fetch, store, and render the current hot-search data."""
    try:
        # Create the storage directory
        os.makedirs("weibo_hot", exist_ok=True)
        # Current date
        now = datetime.now()
        date_str = now.strftime("%Y-%m-%d")
        print(f"🕒 Fetching hot-search data for {now.strftime('%Y-%m-%d %H:%M:%S')}...")
        hot_data = fetch_weibo_hot()
        if hot_data:
            print(f"✅ Fetched {len(hot_data)} hot-search entries")
            # Save to JSON and get the merged data back
            all_data, new_count = save_hot_data_json(hot_data, date_str)
            print(f"📊 {len(all_data)} entries stored, {new_count} new")
            # Build the HTML page
            html_content = generate_html(all_data, date_str)
            # Write the HTML file
            html_file = os.path.join("weibo_hot", date_str, "index.html")
            with open(html_file, "w", encoding="utf-8") as f:
                f.write(html_content)
            print(f"💾 Saved to: {html_file}")
            # Old data is removed by the daily cleanup job scheduled in __main__
        else:
            print("⚠️ No hot-search data fetched, skipping save")
    except Exception as e:
        print(f"❌ Error while saving data: {e}")


def job():
    """Scheduled task."""
    print("\n" + "=" * 60)
    print(f"⏰ Running scheduled task: {datetime.now().strftime('%Y-%m-%d %H:%M:%S')}")
    save_hot_data()
    print("=" * 60 + "\n")


if __name__ == "__main__":
    print("🔥 Weibo hot-search scraper started 🔥")
    print("⏳ The first run happens immediately, then once every 10 minutes")
    print("💡 Tip: make sure you have set a valid Cookie")
    print("=" * 60)
    # Run once right away
    job()
    # Schedule the scraper every 10 minutes
    schedule.every(10).minutes.do(job)
    # Clean up old data once a day at midnight
    schedule.every().day.at("00:00").do(cleanup_old_data)
    print("⏳ Running, press Ctrl+C to exit...")
    try:
        # Keep the process alive
        while True:
            schedule.run_pending()
            time.sleep(30)  # check every 30 seconds
    except KeyboardInterrupt:
        print("\n👋 Stopped manually")
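`cleanup_old_data` compares directory names as plain strings, which works as long as every entry under `weibo_hot` is named `YYYY-MM-DD`. A slightly stricter variant, offered as a sketch rather than a replacement, parses each name and selects only directories that are valid dates. `expired_dirs` is a hypothetical helper name, and the directory names in the demonstration are made up.

```python
import os
import tempfile
from datetime import datetime, timedelta


def expired_dirs(base_dir, keep_days=7, now=None):
    """List sub-directories named YYYY-MM-DD that are older than keep_days."""
    now = now or datetime.now()
    cutoff = (now - timedelta(days=keep_days)).date()
    expired = []
    for name in sorted(os.listdir(base_dir)):
        path = os.path.join(base_dir, name)
        if not os.path.isdir(path):
            continue
        try:
            day = datetime.strptime(name, "%Y-%m-%d").date()
        except ValueError:
            continue  # not a date-named directory, leave it alone
        if day < cutoff:
            expired.append(name)
    return expired


# Demonstration in a throwaway directory
with tempfile.TemporaryDirectory() as base:
    for name in ("2025-01-01", "2025-01-09", "notes"):
        os.makedirs(os.path.join(base, name))
    result = expired_dirs(base, keep_days=7, now=datetime(2025, 1, 10))
    print(result)  # ['2025-01-01']
```

The helper only reports which directories are expired; the caller can then pass each one to `shutil.rmtree`, which keeps the deletion step easy to review or dry-run.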