iptables -m connlimit causes memory exhaustion

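1. Problem description:

A high-rate UDP flood makes the kmalloc-64 slab cache allocate continuously until the system runs out of memory. Older kernels on the A7 do not show the problem, and neither do MA35/AM62 on kernel 6; it occurs only on the A7 with kernel 6.

Affected environment (kernel 6.6). The iptables version is identical across the environments compared.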

[root@evse ~]# uname -a

Linux evse 6.6.90-gf2b2a1246566 #1 SMP PREEMPT Wed Jun 4 15:06:07 CST 2025 armv7l GNU/Linux

[root@evse ~]# cat /proc/cpuinfo

processor : 0

model name : ARMv7 Processor rev 5 (v7l)

BogoMIPS : 64.00

Features : half thumb fastmult vfp edsp neon vfpv3 tls vfpv4 idiva idivt vfpd32 lpae

CPU implementer : 0x41

CPU architecture: 7

CPU variant : 0x0

CPU part : 0xc07

CPU revision : 5

Hardware : Freescale i.MX6 Ultralite (Device Tree)

Revision : 0000

Serial : d9656bca271e39d4

[root@evse ~]#

2. Test commands

Delete and list rules:

iptables -D INPUT 1

iptables -L INPUT --line-numbers

The two problematic rules (-m connlimit):

iptables -A INPUT -p udp -m connlimit --connlimit-above 60 --connlimit-mask 32 -m limit --limit 2/min -j LOG --log-prefix "UDP Flood Limit Exceeded: "

iptables -A INPUT -p udp -m connlimit --connlimit-above 60 --connlimit-mask 32 -j DROP

Attack the target from a virtual machine at different packet intervals:

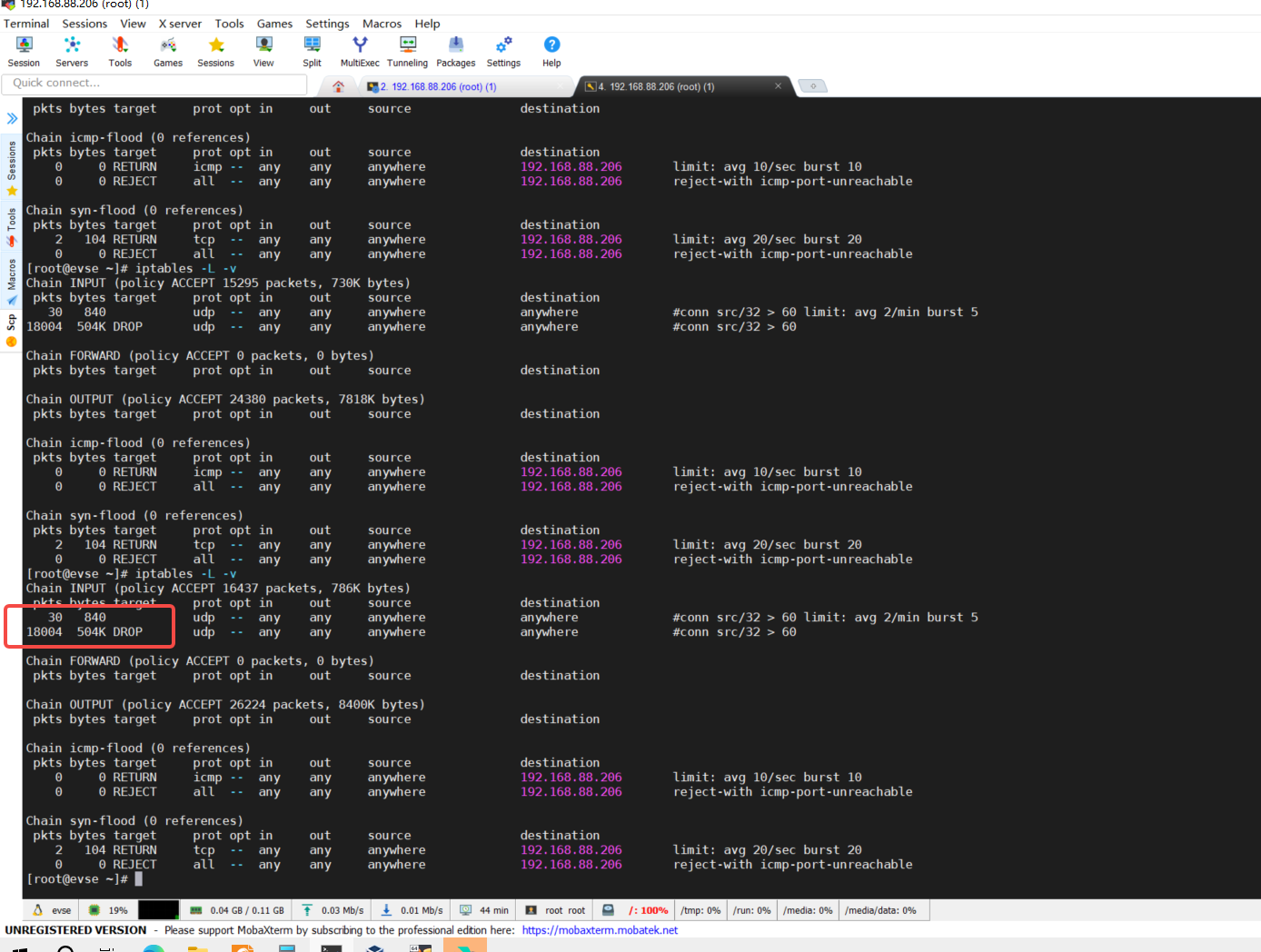

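sudo hping3 --udp -s 6666 -p 443 --flood 192.168.88.206    # kmalloc-64 keeps growing

sudo hping3 --udp -s 6666 -p 443 -i u2000 192.168.88.206    # kmalloc-64 does not keep growing

sudo hping3 --udp -s 6666 -p 443 -i u200 192.168.88.206    # kmalloc-64 does not keep growing

sudo hping3 --udp -s 6666 -p 443 -i u20 192.168.88.206    # kmalloc-64 keeps growing

After the rules are deleted, kmalloc-64 returns to its original low value. If the rules are kept and the -i u20 attack is stopped, kmalloc-64 stays at the high value. If an -i u200 attack is then started, kmalloc-64 slowly decreases from that high value and memory eventually returns to normal.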

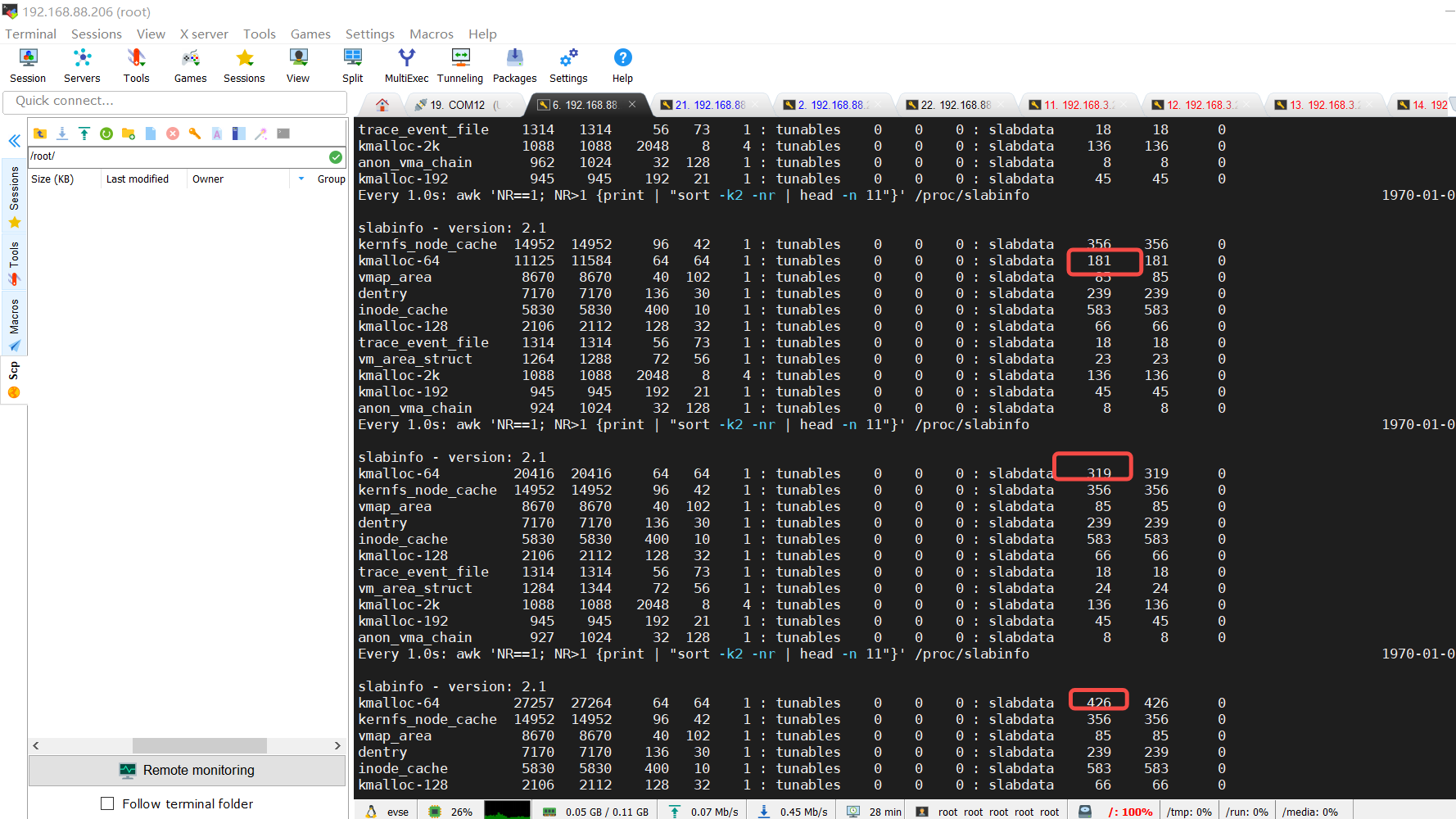

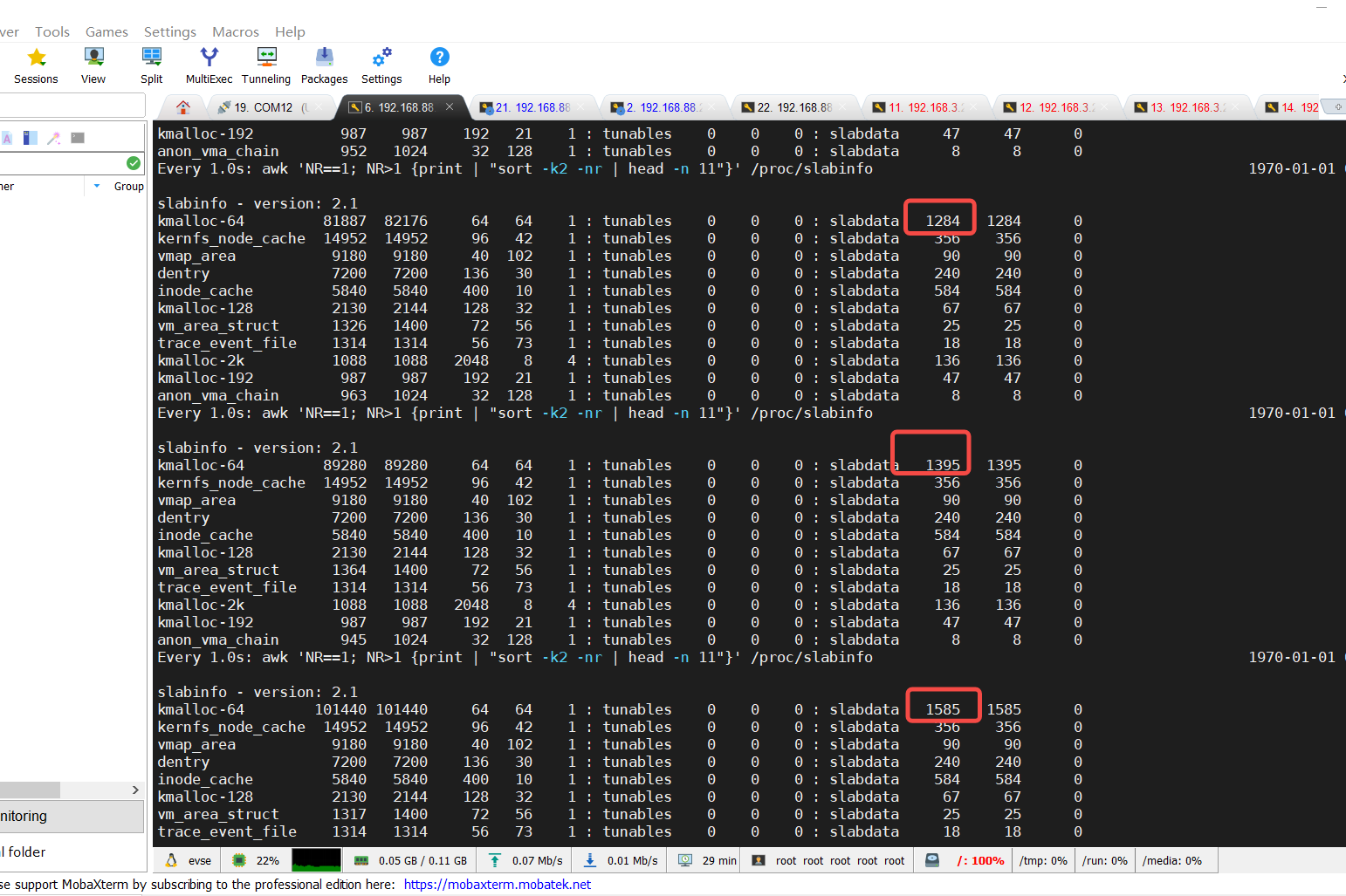

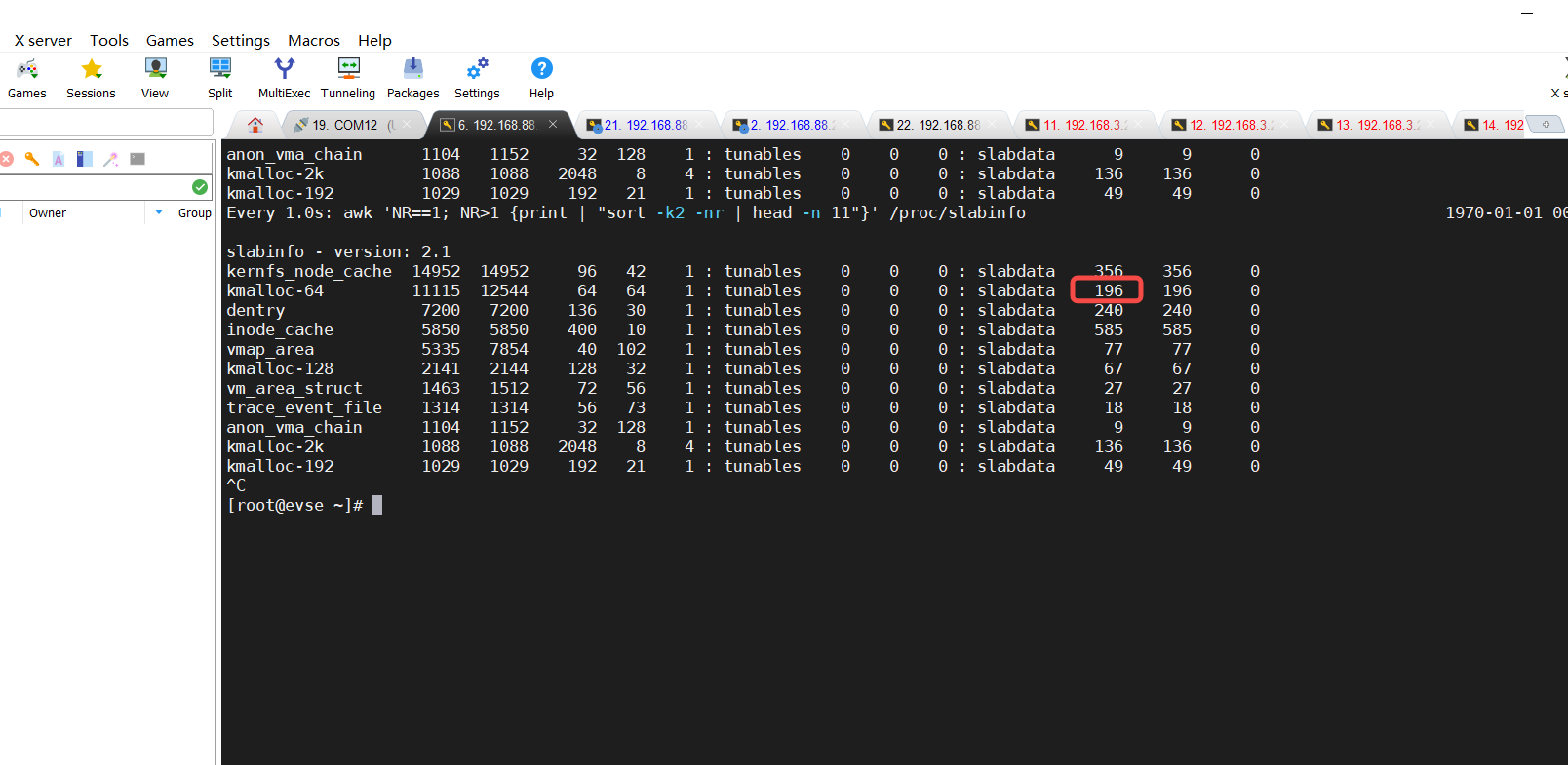

The following commands show that, while the problem is occurring, free memory drops and kmalloc-64 keeps growing:

watch -n1 'grep MemFree /proc/meminfo'

watch -n1 "awk 'NR==1; NR>1 {print | \"sort -k2 -nr | head -n 11\"}' /proc/slabinfo"

watch -n1 iptables -L -vn

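3. kernel 4.14.98 (no problem)

This kernel does not have the problem: the rules take effect and memory does not grow.

Note: in this environment the two iptables UDP rules plus the flood attack do not leak memory, but preconf in S83 of the product-line firmware makes free memory shrink steadily. (The firmware package is too old, so the product-line issue is not investigated for now.)

4. kernel 6.6 (problem present)

4.1 kmalloc-64 memory anomaly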

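Columns of /proc/slabinfo:

- Column 1: name of the slab cache.

- Column 2 (active_objs): number of objects currently in use.

- Column 3 (num_objs): total number of objects in the cache (active and inactive).

- Column 4 (objsize): size of each object in bytes.

- Column 5 (objperslab): number of objects each slab can hold.

- Column 6 (pagesperslab): number of memory pages each slab occupies, usually 1.

tunables section: tunable parameters (usually 0, meaning the defaults are used).

slabdata section:

• First value (active_slabs): number of active slab pages. (Because each slab here occupies one physical page, this value can also be read as the number of slabs.)

• Second value (num_slabs): total number of slab pages.

• Third value (sharedavail): number of shared slab pages.

Total objects = number of slabs × objects per slab, for example 82176 = 1284 × 64, of which 81887 are currently active.

Slab memory is reclaimed one slab at a time. A slab holds multiple objects, and the system can reclaim the slab only when all of its objects are inactive. Slab memory can therefore be reclaimed only after the objects themselves have been freed.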
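The column layout and the 82176 = 1284 × 64 check above can be reproduced with a small parser. This is a minimal user-space sketch, not part of the original investigation: `struct slab_stat`, `parse_slabinfo_line` and `slab_bytes` are hypothetical names, and the sample line in the usage note is rebuilt from the numbers quoted above with the remaining fields (tunables 0 0 0, active_slabs 1284, sharedavail 0) assumed.

```c
#include <stdio.h>

/* One parsed line of /proc/slabinfo, following the columns listed above. */
struct slab_stat {
    char name[32];
    unsigned long active_objs;   /* column 2 */
    unsigned long num_objs;      /* column 3 */
    unsigned int objsize;        /* column 4, bytes */
    unsigned int objperslab;     /* column 5 */
    unsigned int pagesperslab;   /* column 6 */
    unsigned long active_slabs;  /* slabdata, first value */
    unsigned long num_slabs;     /* slabdata, second value */
};

/* Parse one slabinfo line; returns 0 on success, -1 if the layout differs. */
int parse_slabinfo_line(const char *line, struct slab_stat *out)
{
    unsigned int limit, batchcount, sharedfactor; /* tunables, unused here */
    unsigned long sharedavail;                    /* slabdata, third value */
    int n = sscanf(line,
                   "%31s %lu %lu %u %u %u : tunables %u %u %u : slabdata %lu %lu %lu",
                   out->name, &out->active_objs, &out->num_objs,
                   &out->objsize, &out->objperslab, &out->pagesperslab,
                   &limit, &batchcount, &sharedfactor,
                   &out->active_slabs, &out->num_slabs, &sharedavail);
    return n == 12 ? 0 : -1;
}

/* Memory pinned by the cache: slabs x pages per slab x page size (bytes). */
unsigned long slab_bytes(const struct slab_stat *s, unsigned long page_size)
{
    return s->num_slabs * s->pagesperslab * page_size;
}
```

Feeding it the line `kmalloc-64 81887 82176 64 64 1 : tunables 0 0 0 : slabdata 1284 1284 0` gives num_objs equal to num_slabs × objperslab, and `slab_bytes` with a 4096-byte page reports 5259264 bytes held by the cache even though only 81887 objects are active, which illustrates why a slab is returned only after every object in it has been freed.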

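When the rules are deleted, kmalloc-64 immediately returns to its normal size. Alternatively, with the rules left in place, lowering the attack rate lets kmalloc-64 release slowly until it returns to its original size.

4.2 Tuning system parameters (shorter timeouts): no effect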

echo 300 > /proc/sys/net/netfilter/nf_conntrack_tcp_timeout_established

echo 1 > /proc/sys/net/netfilter/nf_conntrack_tcp_be_liberal

echo 1 > /proc/sys/net/netfilter/nf_conntrack_generic_timeout

echo 5 > /proc/sys/net/netfilter/nf_conntrack_udp_timeout

echo 1 > /proc/sys/net/netfilter/nf_conntrack_icmp_timeout

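4.3 Kernel changes: effective

CONFIG_HZ_1000, CONFIG_HZ=1000 ----- the reason is explained in detail in Q2 of the Q&A

CONNCOUNT_GC_MAX_NODES raised from 8 to 64 ----- increases the number of nodes freed per pass; according to the material consulted, 64 suits high-concurrency attack scenarios

if ((u32)jiffies == list->last_gc) changed to if ((u32)jiffies == READ_ONCE(list->last_gc)) ----- makes sure the value is loaded from memory at this point and prevents the compiler from re-reading, merging, or reordering the access

Single-core devices should set CONFIG_NR_CPUS=1 and disable CONFIG_SMP to match the hardware ----- every A7 configuration should make this change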
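For the READ_ONCE change in the list above, the following user-space sketch shows the idea behind the macro. It is a simplified stand-in and not the kernel's actual definition: `struct conncount_list` is a hypothetical reduction of `struct nf_conncount_list`, `gc_ran_this_jiffy` is an illustrative helper, and `__typeof__` is a GCC/Clang extension.

```c
#include <stdint.h>

/*
 * Simplified stand-in for the kernel's READ_ONCE(): the volatile cast makes
 * the compiler emit exactly one load of x at this point instead of caching,
 * merging, or repeating the access.
 */
#define READ_ONCE(x) (*(const volatile __typeof__(x) *)&(x))

/* Hypothetical reduced version of struct nf_conncount_list. */
struct conncount_list {
    uint32_t last_gc;   /* jiffy in which the list was last walked or extended */
};

/* Returns 1 when the eviction walk would be skipped for this jiffy. */
int gc_ran_this_jiffy(struct conncount_list *list, uint32_t now)
{
    return now == READ_ONCE(list->last_gc);
}
```

The comparison itself is unchanged by the macro; it only pins down when and how often `last_gc` is read, which matters when another context may update the field concurrently.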

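4.4 Code trace

Adding the rule

iptables -A INPUT -p udp -m connlimit --connlimit-above 60 --connlimit-mask 32 -j DROP

triggers connlimit_mt_check -> nf_conncount_init; insert_tree shows up in the log afterwards, once the first matching packet arrives (see the logs in 4.5).

Deleting that rule with iptables -D INPUT 1 triggers connlimit_mt_destroy -> nf_conncount_destroy -> destroy_tree.

Attacking with sudo hping3 --udp -s 6666 -p 443 -i u2000000 192.168.88.206:

Because the interval is 2 seconds, this is not a high-rate attack and no packet is dropped. In this case neither __nf_conncount_add nor conn_free is executed.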

4.4.1 Node creation:

__nf_conncount_add -> kmem_cache_alloc(conncount_conn_cachep, GFP_ATOMIC) (this call allocates the object from the slab cache)

4.4.2 Node release:

find_or_evict looks up expired nodes and frees them.

- Release while adding a node:

__nf_conncount_add -> find_or_evict -> conn_free -> kmem_cache_free(conncount_conn_cachep, conn) (this call frees the slab cache object)

- Release elsewhere:

connlimit_mt -> nf_conncount_count -> count_tree, which can continue along three paths:

count_tree -> nf_conncount_gc_list -> find_or_evict

count_tree -> insert_tree -> nf_conncount_gc_list -> find_or_evict

count_tree -> insert_tree -> schedule_gc_worker -> schedule_work

schedule_work queues tree_gc_worker on the workqueue: tree_gc_worker -> nf_conncount_gc_list -> find_or_evict

connlimit_mt mainly limits the number of connections per IP address.

nf_conncount_count counts the connections that belong to the current key.

4.5 Attack tests

4.5.1 Attack with sudo hping3 --udp -s 6666 -p 443 -i u200000 192.168.88.206 for about 6 seconds:

Welcome to EVSE

evse login: [ 106.484184] **[connlimit_mt_check:95]**

[ 106.488585] **[nf_conncount_init:539]**

[ 110.288686] **[insert_tree:366]**

Welcome to EVSE

evse login:

Welcome to EVSE

evse login:

Welcome to EVSE

evse login:

Welcome to EVSE

evse login: [ 132.168009] **[insert_tree:366]**

[ 132.377315] **[__nf_conncount_add:186]**

[ 132.588389] **[__nf_conncount_add:186]**

[ 132.792491] **[__nf_conncount_add:186]**

[ 132.992824] **[__nf_conncount_add:186]**

[ 133.196611] **[__nf_conncount_add:186]**

[ 133.398087] **[__nf_conncount_add:186]**

[ 133.602361] **[__nf_conncount_add:186]**

[ 133.810499] **[__nf_conncount_add:186]**

[ 134.013903] **[__nf_conncount_add:186]**

[ 134.215048] **[__nf_conncount_add:186]**

[ 134.420325] **[__nf_conncount_add:186]**

[ 134.625043] **[__nf_conncount_add:186]**

[ 134.825720] **[__nf_conncount_add:186]**

[ 135.028171] **[__nf_conncount_add:186]**

[ 135.230085] **[__nf_conncount_add:186]**

[ 135.430352] **[__nf_conncount_add:186]**

[ 135.631057] **[__nf_conncount_add:186]**

[ 135.835375] **[__nf_conncount_add:186]**

[ 136.044689] **[__nf_conncount_add:186]**

[ 136.249517] **[__nf_conncount_add:186]**

[ 136.449621] **[__nf_conncount_add:186]**

[ 136.652949] **[__nf_conncount_add:186]**

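4.5.2 Following the previous step, wait a while and then attack again with sudo hping3 --udp -s 6666 -p 443 -i u200000 192.168.88.206 for about 2 seconds. The log shows that the previously created nodes are freed first: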

[ 246.340577] **[conn_free:92]**

[ 246.343988] **[conn_free:92]**

[ 246.347228] **[conn_free:92]**

[ 246.350448] **[conn_free:92]**

[ 246.353663] **[conn_free:92]**

[ 246.356968] **[conn_free:92]**

[ 246.360190] **[conn_free:92]**

[ 246.363408] **[conn_free:92]**

[ 246.366622] **[conn_free:92]**

[ 246.369831] **[__nf_conncount_add:186]**

[ 246.544568] **[conn_free:92]**

[ 246.547849] **[conn_free:92]**

[ 246.551030] **[conn_free:92]**

[ 246.554201] **[conn_free:92]**

[ 246.557367] **[conn_free:92]**

[ 246.560535] **[conn_free:92]**

[ 246.563701] **[conn_free:92]**

[ 246.566866] **[conn_free:92]**

[ 246.570031] **[conn_free:92]**

[ 246.573193] **[__nf_conncount_add:186]**

[ 246.749883] **[conn_free:92]**

[ 246.753196] **[conn_free:92]**

[ 246.756645] **[conn_free:92]**

[ 246.759876] **[conn_free:92]**

[ 246.763095] **[conn_free:92]**

[ 246.766407] **[__nf_conncount_add:186]**

[ 246.961836] **[__nf_conncount_add:186]**

[ 247.162273] **[__nf_conncount_add:186]**

[ 247.362697] **[__nf_conncount_add:186]**

[ 247.563052] **[__nf_conncount_add:186]**

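4.5.3 After a power cycle, attack with sudo hping3 --udp -s 6666 -p 443 -i u200000 192.168.88.206 for about 30 seconds

The first 60 nodes are created back to back (one packet every 200 ms, so exactly 60 in 12 seconds) with no conn_free in between; this is because of the --connlimit-above 60 parameter set earlier. After that, every newly added node is accompanied by one freed node. The kernel's nf_conncount module frees the oldest connections first (FIFO policy).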

Welcome to EVSE

evse login: [ 6588.953200] **[connlimit_mt_check:95]**

[ 6588.964224] **[nf_conncount_init:539]**

Welcome to EVSE

evse login: [ 6623.889501] **[insert_tree:366]**

[ 6624.092922] **[__nf_conncount_add:186]**

[ 6624.293990] **[__nf_conncount_add:186]**

[ 6624.496559] **[__nf_conncount_add:186]**

[ 6624.704156] **[__nf_conncount_add:186]**

[ 6624.905262] **[__nf_conncount_add:186]**

[ 6625.117401] **[__nf_conncount_add:186]**

[ 6625.332664] **[__nf_conncount_add:186]**

[ 6625.534785] **[__nf_conncount_add:186]**

[ 6625.735500] **[__nf_conncount_add:186]**

[ 6625.936776] **[__nf_conncount_add:186]**

[ 6626.142550] **[__nf_conncount_add:186]**

[ 6626.464095] **[__nf_conncount_add:186]**

[ 6626.672264] **[__nf_conncount_add:186]**

[ 6626.876006] **[__nf_conncount_add:186]**

[ 6627.080153] **[__nf_conncount_add:186]**

[ 6627.280599] **[__nf_conncount_add:186]**

[ 6627.485330] **[__nf_conncount_add:186]**

[ 6627.688502] **[__nf_conncount_add:186]**

[ 6627.890940] **[__nf_conncount_add:186]**

[ 6628.093806] **[__nf_conncount_add:186]**

[ 6628.313874] **[__nf_conncount_add:186]**

[ 6628.515991] **[__nf_conncount_add:186]**

[ 6628.720403] **[__nf_conncount_add:186]**

[ 6628.924391] **[__nf_conncount_add:186]**

[ 6629.126770] **[__nf_conncount_add:186]**

[ 6629.330207] **[__nf_conncount_add:186]**

[ 6629.533838] **[__nf_conncount_add:186]**

[ 6629.734090] **[__nf_conncount_add:186]**

[ 6629.934281] **[__nf_conncount_add:186]**

[ 6630.135378] **[__nf_conncount_add:186]**

[ 6630.335804] **[__nf_conncount_add:186]**

[ 6630.537249] **[__nf_conncount_add:186]**

[ 6630.740110] **[__nf_conncount_add:186]**

[ 6630.942853] **[__nf_conncount_add:186]**

[ 6631.143030] **[__nf_conncount_add:186]**

[ 6631.346782] **[__nf_conncount_add:186]**

[ 6631.547754] **[__nf_conncount_add:186]**

[ 6631.747852] **[__nf_conncount_add:186]**

[ 6631.948470] **[__nf_conncount_add:186]**

[ 6632.152779] **[__nf_conncount_add:186]**

[ 6632.364964] **[__nf_conncount_add:186]**

[ 6632.576077] **[__nf_conncount_add:186]**

[ 6632.796017] **[__nf_conncount_add:186]**

[ 6632.997078] **[__nf_conncount_add:186]**

[ 6633.203338] **[__nf_conncount_add:186]**

[ 6633.406403] **[__nf_conncount_add:186]**

[ 6633.607636] **[__nf_conncount_add:186]**

[ 6633.812599] **[__nf_conncount_add:186]**

[ 6634.024786] **[__nf_conncount_add:186]**

[ 6634.225224] **[__nf_conncount_add:186]**

[ 6634.427251] **[__nf_conncount_add:186]**

[ 6634.642583] **[__nf_conncount_add:186]**

[ 6634.842924] **[__nf_conncount_add:186]**

[ 6635.042996] **[__nf_conncount_add:186]**

[ 6635.243920] **[__nf_conncount_add:186]**

[ 6635.461265] **[__nf_conncount_add:186]**

[ 6635.670469] **[__nf_conncount_add:186]**

[ 6635.871427] **[__nf_conncount_add:186]**

[ 6636.079939] **[__nf_conncount_add:186]**

[ 6636.280148] **[__nf_conncount_add:186]**

[ 6636.481285] **[conn_free:92]**

[ 6636.484515] **[__nf_conncount_add:186]**

[ 6636.682712] **[conn_free:92]**

[ 6636.686019] **[__nf_conncount_add:186]**

[ 6636.883322] **[conn_free:92]**

[ 6636.886627] **[__nf_conncount_add:186]**

[ 6637.093071] **[conn_free:92]**

[ 6637.096471] **[__nf_conncount_add:186]**

[ 6637.308856] **[conn_free:92]**

[ 6637.312146] **[__nf_conncount_add:186]**

[ 6637.509549] **[conn_free:92]**

[ 6637.512862] **[__nf_conncount_add:186]**

[ 6637.715686] **[conn_free:92]**

[ 6637.718980] **[__nf_conncount_add:186]**

[ 6637.918133] **[conn_free:92]**

[ 6637.921462] **[__nf_conncount_add:186]**

[ 6638.122847] **[conn_free:92]**

[ 6638.126249] **[__nf_conncount_add:186]**

[ 6638.346137] **[conn_free:92]**

[ 6638.349367] **[__nf_conncount_add:186]**

[ 6638.562855] **[conn_free:92]**

[ 6638.566084] **[__nf_conncount_add:186]**

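4.5.4 High-rate attack with sudo hping3 --udp -s 6666 -p 443 -i u20 192.168.88.206

The log shows that nodes are created much faster than they are freed. Kernel 6.6 refactored the connection-tracking module and added stricter connection-state checks, so handling each connection takes more CPU cycles.

Creation is fast because it only allocates memory, whereas release has to go through time-consuming steps such as hash-table operations and state cleanup.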

[10060.558838] **[__nf_conncount_add:186]**

[10060.558854] **[__nf_conncount_add:186]**

[10060.558873] **[__nf_conncount_add:186]**

[10060.558889] **[__nf_conncount_add:186]**

[10060.558907] **[__nf_conncount_add:186]**

[10060.559033] **[__nf_conncount_add:186]**

[10060.559058] **[__nf_conncount_add:186]**

[10060.559081] **[__nf_conncount_add:186]**

[10060.559098] **[__nf_conncount_add:186]**

[10060.559116] **[__nf_conncount_add:186]**

[10060.559134] **[__nf_conncount_add:186]**

[10060.559152] **[__nf_conncount_add:186]**

[10060.559170] **[__nf_conncount_add:186]**

[10060.559294] **[__nf_conncount_add:186]**

[10060.559318] **[__nf_conncount_add:186]**

[10060.559342] **[__nf_conncount_add:186]**

[10060.559360] **[__nf_conncount_add:186]**

[10060.559380] **[__nf_conncount_add:186]**

[10060.559399] **[__nf_conncount_add:186]**

[10060.559417] **[__nf_conncount_add:186]**

[10060.559436] **[__nf_conncount_add:186]**

[10060.559562] **[__nf_conncount_add:186]**

[10060.559587] **[__nf_conncount_add:186]**

[10060.559607] **[__nf_conncount_add:186]**

[10060.559624] **[__nf_conncount_add:186]**

[10060.559642] **[__nf_conncount_add:186]**

[10060.559660] **[__nf_conncount_add:186]**

[10060.559678] **[__nf_conncount_add:186]**

[10060.559696] **[__nf_conncount_add:186]**

[10060.559833] **[__nf_conncount_add:186]**

[10060.559861] **[__nf_conncount_add:186]**

[10060.559880] **[__nf_conncount_add:186]**

[10060.559899] **[__nf_conncount_add:186]**

[10060.559918] **[__nf_conncount_add:186]**

[10060.559936] **[__nf_conncount_add:186]**

[10060.559954] **[__nf_conncount_add:186]**

[10060.559972] **[__nf_conncount_add:186]**

[10060.560100] **[__nf_conncount_add:186]**

[10060.560125] **[__nf_conncount_add:186]**

[10060.560147] **[__nf_conncount_add:186]**

[10060.560164] **[__nf_conncount_add:186]**

[10060.560182] **[__nf_conncount_add:186]**

[10060.560201] **[__nf_conncount_add:186]**

[10060.560219] **[__nf_conncount_add:186]**

[10060.560239] **[__nf_conncount_add:186]**

[10060.560371] **[__nf_conncount_add:186]**

[10060.560394] **[__nf_conncount_add:186]**

[10060.560413] **[__nf_conncount_add:186]**

[10060.560429] **[__nf_conncount_add:186]**

[10060.560445] **[__nf_conncount_add:186]**

[10060.560482] **[__nf_conncount_add:186]**

[10060.560500] **[__nf_conncount_add:186]**

[10060.560518] **[__nf_conncount_add:186]**

[10060.560646] **[__nf_conncount_add:186]**

[10060.560671] **[__nf_conncount_add:186]**

[10060.560688] **[__nf_conncount_add:186]**

[10060.560705] **[__nf_conncount_add:186]**

[10060.560842] **[conn_free:92]**

[10060.560870] **[conn_free:92]**

[10060.560883] **[conn_free:92]**

[10060.560895] **[conn_free:92]**

[10060.560906] **[conn_free:92]**

[10060.560917] **[conn_free:92]**

[10060.560929] **[conn_free:92]**

[10060.560940] **[conn_free:92]**

[10060.560952] **[conn_free:92]**

[10060.560961] **[__nf_conncount_add:186]**

[10060.560982] **[__nf_conncount_add:186]**

[10060.561001] **[__nf_conncount_add:186]**

[10060.561018] **[__nf_conncount_add:186]**

[10060.561171] **[__nf_conncount_add:186]**

[10060.561201] **[__nf_conncount_add:186]**

[10060.561222] **[__nf_conncount_add:186]**

[10060.561240] **[__nf_conncount_add:186]**

[10060.561257] **[__nf_conncount_add:186]**

[10060.561274] **[__nf_conncount_add:186]**

[10060.561290] **[__nf_conncount_add:186]**

[10060.561307] **[__nf_conncount_add:186]**

[10060.561439] **[__nf_conncount_add:186]**

[10060.561463] **[__nf_conncount_add:186]**

[10060.561480] **[__nf_conncount_add:186]**

[10060.561497] **[__nf_conncount_add:186]**

[10060.561513] **[__nf_conncount_add:186]**

[10060.561531] **[__nf_conncount_add:186]**

[10060.561547] **[__nf_conncount_add:186]**

[10060.561563] **[__nf_conncount_add:186]**

[10060.561688] **[__nf_conncount_add:186]**

[10060.561712] **[__nf_conncount_add:186]**

5 Q&A

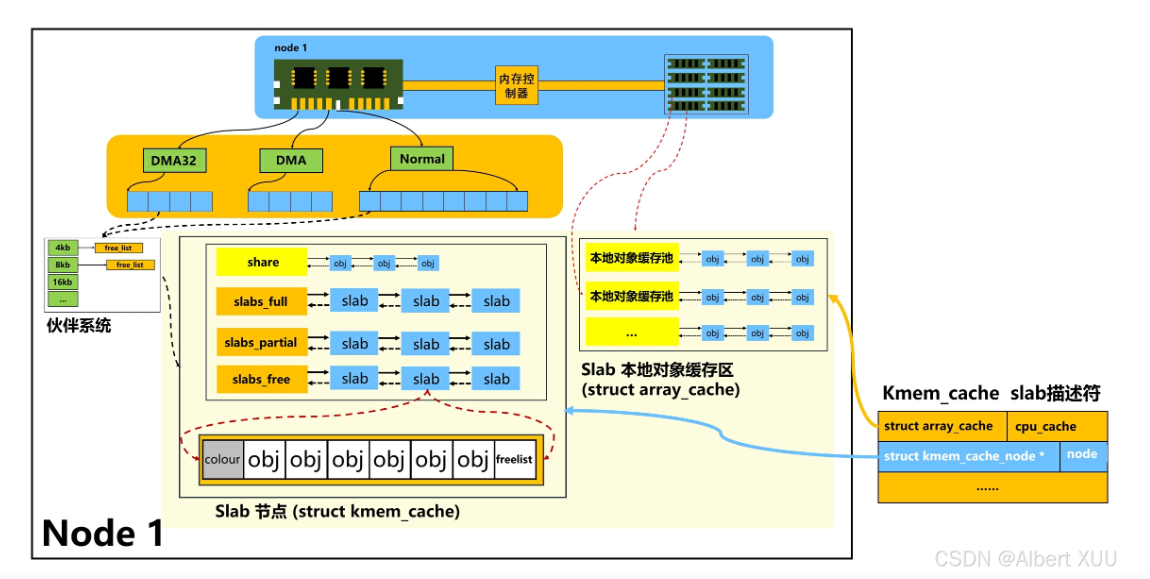

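Q1: The code below shows that the slab cache should be named nf_conncount_tuple. Why is it missing from /proc/slabinfo, and why is the slab that grows named kmalloc-64 instead?

conncount_conn_cachep = kmem_cache_create("nf_conncount_tuple",
                                          sizeof(struct nf_conncount_tuple),
                                          0, 0, NULL);

The nf_conncount_tuple cache is not listed in /proc/slabinfo because its object size matches a general-purpose cache and nothing forces a dedicated allocation, so the kernel reuses the kmalloc-64 cache. That is why kmalloc-64 is the cache that keeps growing under a high-rate attack.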

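Q2: Why does a high-rate UDP attack make the kmalloc-64 slab allocate continuously until memory runs out?

Under a high-rate attack, every new connection creates a node (add_new_node), which allocates one slab object (kmem_cache_alloc).

find_or_evict is the function that frees invalid or expired nodes. Note the check if ((u32)jiffies == READ_ONCE(list->last_gc)): the A7 originally used CONFIG_HZ=100, so one jiffy is 10 ms. For every call that falls within the same 10 ms jiffy, the function jumps straight to add_new_node to allocate an object and skips the logic that frees expired nodes. At a high rate (for example -i u20, one packet every 20 µs), 500 nodes are allocated back to back within 10 ms.

The find_or_evict release logic is reached again only in the next 10 ms jiffy, so allocations are far too dense.

There is one more point about the release logic: each pass frees at most 9 nodes. The walk stops once collect exceeds CONNCOUNT_GC_MAX_NODES (8), that is, after 9 nodes have been collected.

Under a high-rate attack, too many nodes are created in a short time while at most 9 are freed per pass, so over time kmalloc-64 gradually consumes the available memory.

Kernel 6 therefore needs the following changes: CONFIG_HZ_1000 with CONFIG_HZ=1000, and CONNCOUNT_GC_MAX_NODES raised from 8 to 64 (64 suits high-concurrency environments).

(Other platforms that later move to a non-RT kernel 6 should also watch this point.)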
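The arithmetic in this answer can be checked with a small model. This is only a sketch under stated assumptions, not kernel code: `simulate_backlog` is a hypothetical helper, it assumes that every node already on the list is stale and can be evicted, and it ignores conntrack timeouts, the second rule, and the workqueue path.

```c
/*
 * Simplified model of the behaviour described above: in every jiffy only the
 * first packet runs the eviction walk, freeing at most gc_max_nodes + 1
 * nodes; every packet, including the first, then allocates one node.
 * Assumption: all nodes already on the list are stale and can be evicted.
 * Returns the number of nodes left on the list, or -1 on invalid input.
 */
long simulate_backlog(unsigned int hz, unsigned int interval_us,
                      unsigned int gc_max_nodes, unsigned int seconds)
{
    long pkts_per_jiffy, per_pass, backlog = 0;
    unsigned long j, jiffies_total;

    if (hz == 0 || hz > 1000000u || interval_us == 0)
        return -1;

    pkts_per_jiffy = (1000000u / hz) / interval_us; /* packets in one jiffy */
    per_pass = (long)gc_max_nodes + 1;  /* walk stops once collect > max */
    jiffies_total = (unsigned long)hz * seconds;

    for (j = 0; j < jiffies_total; j++) {
        long freed = backlog < per_pass ? backlog : per_pass;

        backlog -= freed;           /* first packet of the jiffy evicts */
        backlog += pkts_per_jiffy;  /* every packet adds one node */
    }
    return backlog;
}
```

With HZ=100, a 20 µs interval and CONNCOUNT_GC_MAX_NODES=8, `simulate_backlog(100, 20, 8, 1)` leaves 49109 nodes after one second. With HZ=1000 and 64, `simulate_backlog(1000, 20, 64, 1)` stays at 50. Changing only HZ (`simulate_backlog(1000, 20, 8, 1)`, 41009 nodes) still grows in this model, which is consistent with applying both changes together.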

static int __nf_conncount_add(struct net *net,
                              struct nf_conncount_list *list,
                              const struct nf_conntrack_tuple *tuple,
                              const struct nf_conntrack_zone *zone)
{
    const struct nf_conntrack_tuple_hash *found;
    struct nf_conncount_tuple *conn, *conn_n;
    struct nf_conn *found_ct;
    unsigned int collect = 0;

    if ((u32)jiffies == READ_ONCE(list->last_gc))
        goto add_new_node;

    /* check the saved connections */
    list_for_each_entry_safe(conn, conn_n, &list->head, node) {
        if (collect > CONNCOUNT_GC_MAX_NODES)
            break;

        found = find_or_evict(net, list, conn);
        if (IS_ERR(found)) {
            /* Not found, but might be about to be confirmed */
            if (PTR_ERR(found) == -EAGAIN) {
                if (nf_ct_tuple_equal(&conn->tuple, tuple) &&
                    nf_ct_zone_id(&conn->zone, conn->zone.dir) ==
                    nf_ct_zone_id(zone, zone->dir))
                    return 0; /* already exists */
            } else {
                collect++;
            }
            continue;
        }

        found_ct = nf_ct_tuplehash_to_ctrack(found);

        if (nf_ct_tuple_equal(&conn->tuple, tuple) &&
            nf_ct_zone_equal(found_ct, zone, zone->dir)) {
            /*
             * We should not see tuples twice unless someone hooks
             * this into a table without "-p tcp --syn".
             *
             * Attempt to avoid a re-add in this case.
             */
            nf_ct_put(found_ct);
            return 0;
        } else if (already_closed(found_ct)) {
            /*
             * we do not care about connections which are
             * closed already -> ditch it
             */
            nf_ct_put(found_ct);
            conn_free(list, conn);
            collect++;
            continue;
        }

        nf_ct_put(found_ct);
    }

add_new_node:
    if (WARN_ON_ONCE(list->count > INT_MAX))
        return -EOVERFLOW;

    conn = kmem_cache_alloc(conncount_conn_cachep, GFP_ATOMIC);
    if (conn == NULL)
        return -ENOMEM;

    conn->tuple = *tuple;
    conn->zone = *zone;
    conn->cpu = raw_smp_processor_id();
    conn->jiffies32 = (u32)jiffies;
    list_add_tail(&conn->node, &list->head);
    list->count++;
    list->last_gc = (u32)jiffies;
    return 0;
}

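Q3: Why does kmalloc-64 return to its original low value after the rule is deleted?

Deleting the rule with iptables -D INPUT 1 triggers connlimit_mt_destroy -> nf_conncount_destroy -> destroy_tree -> kmem_cache_free, which frees every slab object in use, so kmalloc-64 returns to its original low value.

Q4: Why does kmalloc-64 stay at the high value if the rules are kept and the attack is paused?

find_or_evict frees invalid or expired nodes. Besides __nf_conncount_add, which was described above, it is also called from several other functions.

Log tracing shows, however, that in practice find_or_evict runs only from __nf_conncount_add. Memory is therefore released only when a new node arrives and the code enters find_or_evict, so with the attack paused kmalloc-64 stays at the high value.

Q5: Why does kmalloc-64 slowly decrease from the high value and finally return to normal if a low-rate attack is then started?

Under a low-rate attack, fewer calls to __nf_conncount_add fall within the same 10 ms and jump straight to add_new_node, while each pass through the release logic can free up to 9 objects. Nodes are then freed faster than new ones are created, so kmalloc-64 slowly decreases from the high value and memory eventually returns to normal.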

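Q6: find_or_evict is called from several functions, so why does it actually run only from __nf_conncount_add? If the other callers also ran, nodes would be freed faster and the problem could be avoided.

The call chain analyzed earlier:

connlimit_mt -> nf_conncount_count -> count_tree, which can continue along three paths:

count_tree -> nf_conncount_gc_list -> find_or_evict

count_tree -> insert_tree -> nf_conncount_gc_list -> find_or_evict

count_tree -> insert_tree -> schedule_gc_worker -> schedule_work

schedule_work queues tree_gc_worker on the workqueue:

tree_gc_worker -> nf_conncount_gc_list -> find_or_evict

First, an addition to Q4:

Both connlimit_mt and __nf_conncount_add contain node-release logic, but both are triggered only when a packet actually arrives.

When the high-rate attack stops and no new packets come in, nothing triggers the release, so the kmalloc-64 memory already allocated (which holds the connection-count nodes) cannot be freed and memory usage stays high.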

static unsigned int
count_tree(struct net *net,
           struct nf_conncount_data *data,
           const u32 *key,
           const struct nf_conntrack_tuple *tuple,
           const struct nf_conntrack_zone *zone)
{
    struct rb_root *root;
    struct rb_node *parent;
    struct nf_conncount_rb *rbconn;
    unsigned int hash;

    printk("**[%s:%d]** \n", __func__, __LINE__);
    hash = jhash2(key, data->keylen, conncount_rnd) % CONNCOUNT_SLOTS;
    root = &data->root[hash];

    parent = rcu_dereference_raw(root->rb_node);
    while (parent) {
        int diff;

        rbconn = rb_entry(parent, struct nf_conncount_rb, node);

        diff = key_diff(key, rbconn->key, data->keylen);
        if (diff < 0) {
            parent = rcu_dereference_raw(parent->rb_left);
        } else if (diff > 0) {
            parent = rcu_dereference_raw(parent->rb_right);
        } else {
            int ret;

            printk("**[%s:%d]** \n", __func__, __LINE__);
            if (!tuple) {
                printk("**[%s:%d]** \n", __func__, __LINE__);
                nf_conncount_gc_list(net, &rbconn->list);
                return rbconn->list.count;
            }

            spin_lock_bh(&rbconn->list.list_lock);
            /* Node might be about to be free'd.
             * We need to defer to insert_tree() in this case.
             */
            if (rbconn->list.count == 0) {
                spin_unlock_bh(&rbconn->list.list_lock);
                break;
            }

            /* same source network -> be counted! */
            ret = __nf_conncount_add(net, &rbconn->list, tuple, zone);
            spin_unlock_bh(&rbconn->list.list_lock);
            if (ret)
                return 0; /* hotdrop */
            else
                return rbconn->list.count;
        }
    }

    printk("**[%s:%d]** \n", __func__, __LINE__);
    if (!tuple)
        return 0;

    printk("**[%s:%d]** \n", __func__, __LINE__);
    return insert_tree(net, data, root, hash, key, tuple, zone);
}

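Why count_tree does not reach the release paths listed above:

- The caller always passes a valid tuple argument, so the branch that calls nf_conncount_gc_list directly is skipped.

- Once the code enters __nf_conncount_add it returns straight from there and never goes on to call insert_tree, so none of the later release paths are reached.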