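ELK Log Analysis: Installing and Configuring Logstash, Elasticsearch, and Kibana

Contents

ELK log analysis

1. Install lrzsz

2. Download the packages

3. Extract the archive and install Elasticsearch, Kibana, and Logstash

4. Configure Elasticsearch

5. Configure name resolution

6. Configure Kibana

7. Configure Logstash

8. Test

ELK log analysis

1. Install lrzsz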

[root@localhost ~]# hostnamectl set-hostname elk ## change the hostname

[root@localhost ~]# bash

[root@elk ~]# yum install -y lrzsz

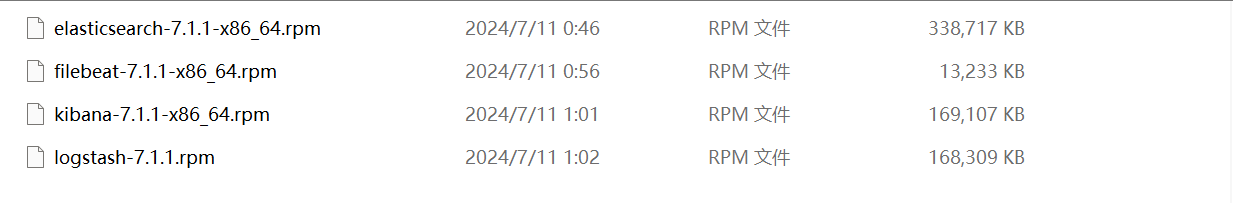

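2. Download the packages

https://www.elastic.co/downloads/past-releases/ ## download page

Pack the downloaded RPM packages into an archive named elfk.tar.gz and upload it to the host.

3. Extract the archive and install Elasticsearch, Kibana, and Logstash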

[root@elk ~]# tar xf elfk.tar.gz

[root@elk ~]# cd /rpm

[root@elk rpm]# ls

elasticsearch-7.1.1-x86_64.rpm filebeat-7.1.1-x86_64.rpm kibana-7.1.1-x86_64.rpm logstash-7.1.1.rpm

[root@elk rpm]# yum localinstall elasticsearch-7.1.1-x86_64.rpm

[root@elk rpm]# yum localinstall kibana-7.1.1-x86_64.rpm

[root@elk rpm]# yum localinstall logstash-7.1.1.rpm

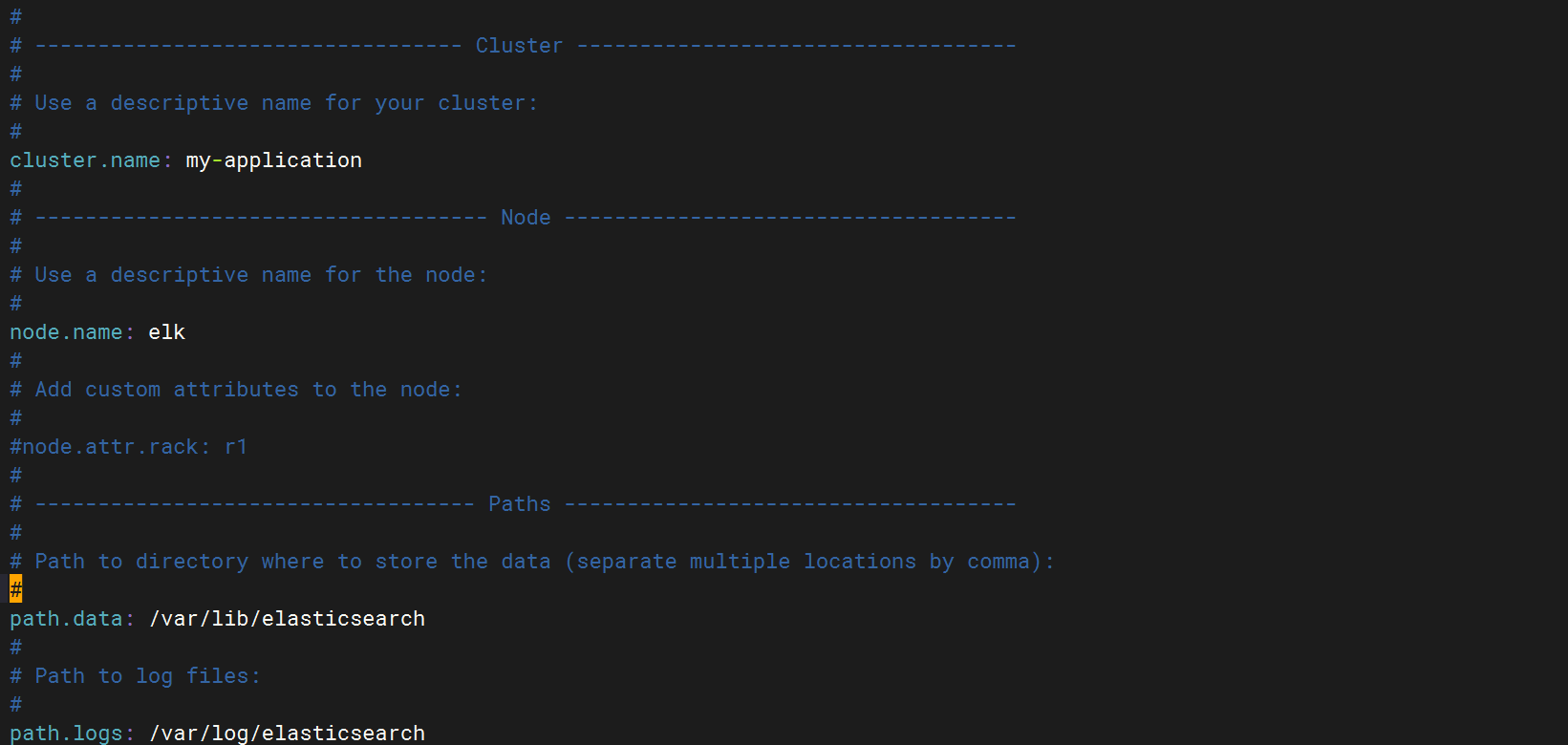

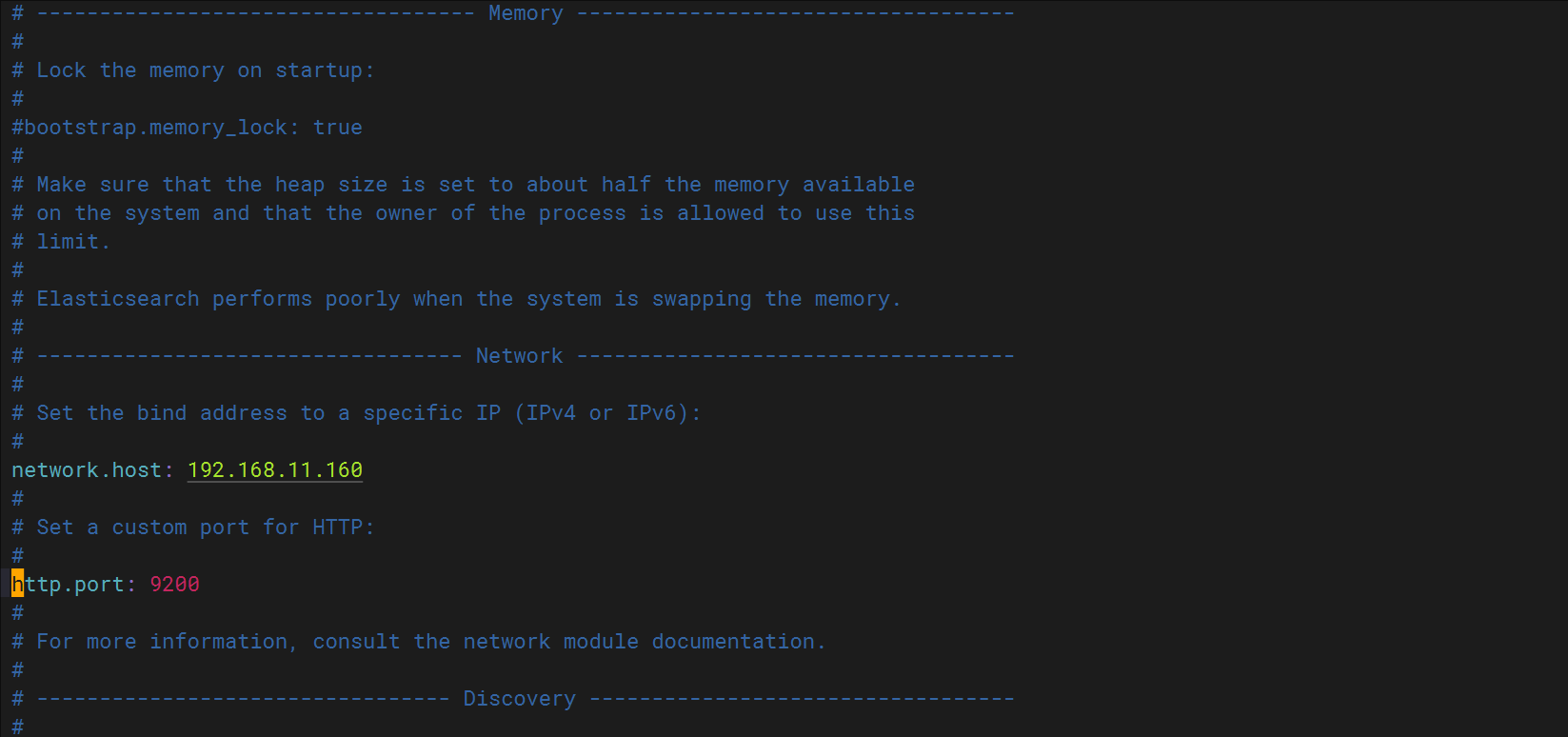

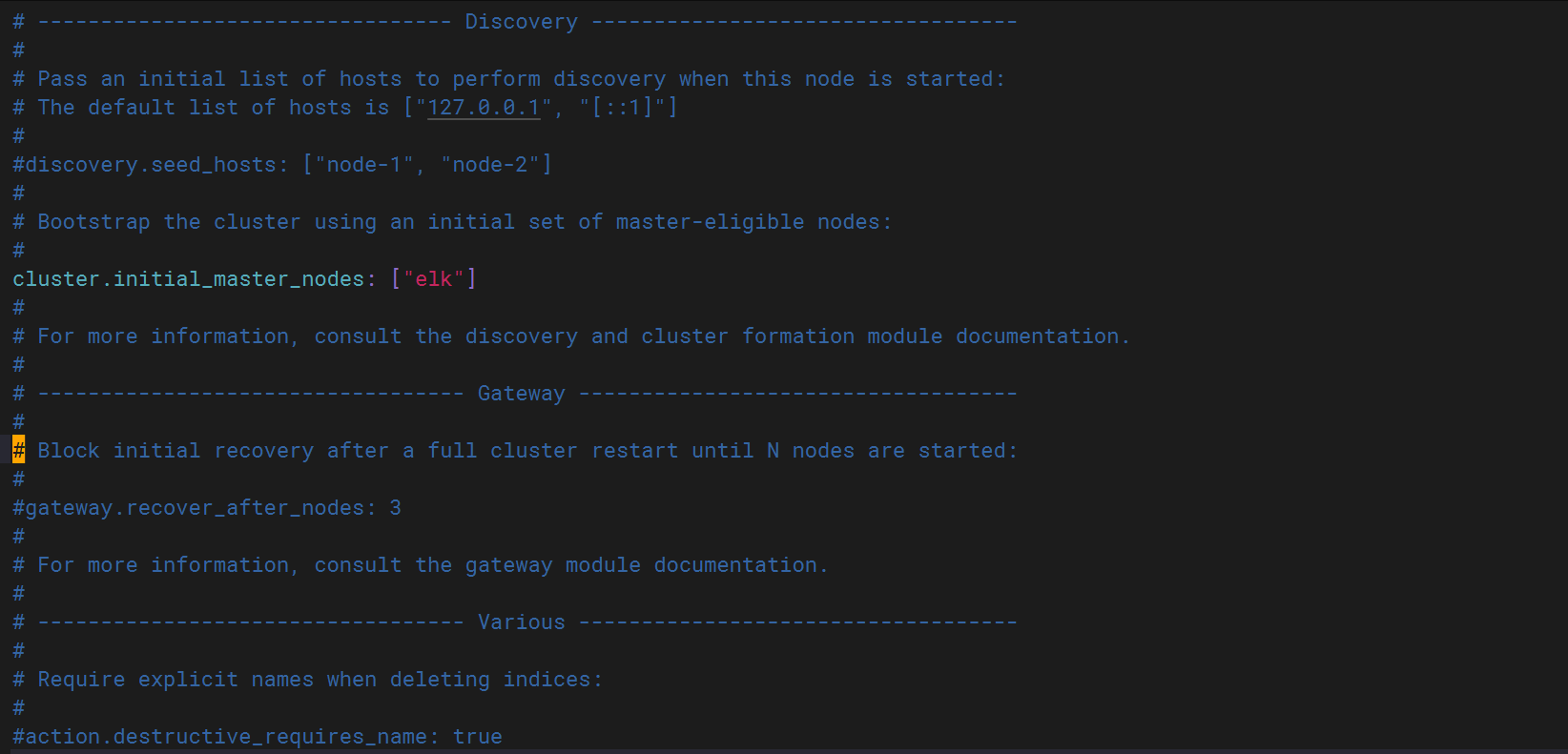

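4. Configure Elasticsearch

[root@elk ~]# vim /etc/elasticsearch/elasticsearch.yml ## edit the Elasticsearch configuration

Find the Cluster section:

cluster.name: my-application ## Elasticsearch cluster name

Find the Node section:

node.name: elk ## node name

Find the Network section:

network.host: 192.168.11.160 ## IP address of this host

http.port: 9200 ## HTTP port

Find the Discovery section:

cluster.initial_master_nodes ## initial master nodes of the cluster; list this node's node.name (elk) here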
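Because elasticsearch.yml consists mostly of commented-out defaults, it can help to print only the active settings before starting the service. The following is a minimal sketch; the helper name show_active_settings is made up for illustration and is not part of Elasticsearch.

```shell
#!/usr/bin/env bash
# Print only the active (non-comment, non-blank) lines of a config file.
# show_active_settings is a hypothetical helper name, not part of Elasticsearch.
show_active_settings() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Example (path taken from the step above):
#   show_active_settings /etc/elasticsearch/elasticsearch.yml
```

The same helper should work for /etc/kibana/kibana.yml, since that file uses the same comment syntax.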

[root@elk ~]# systemctl start elasticsearch.service ## start the service

[root@elk kibana]# netstat -anptu | grep java ## check that Elasticsearch is listening

tcp6 0 0 192.168.11.160:9200 :::* LISTEN 48445/java

tcp6 0 0 192.168.11.160:9300 :::* LISTEN 48445/java

tcp6 0 0 192.168.11.160:9200 192.168.11.160:58732 ESTABLISHED 48445/java

tcp6 0 0 192.168.11.160:9200 192.168.11.160:58738 ESTABLISHED 48445/java

5. Configure name resolution

[root@elk ~]# vim /etc/hosts ## add an entry for the elk host

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.11.160 elk
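To confirm that the new entry is picked up, `getent hosts elk` asks the system resolver, which on a default setup consults /etc/hosts. The sketch below performs a similar lookup directly against a hosts-format file; lookup_host is a hypothetical helper name used only for illustration.

```shell
#!/usr/bin/env bash
# Look up a host name in a hosts-format file and print the first matching IP.
# lookup_host is a hypothetical helper; usage: lookup_host FILE NAME
lookup_host() {
    awk -v name="$2" '
        { sub(/#.*/, "") }                 # drop comments
        { for (i = 2; i <= NF; i++)        # fields after the IP are names and aliases
              if ($i == name) { print $1; exit } }
    ' "$1"
}

# Example:
#   lookup_host /etc/hosts elk
#   getent hosts elk        # the same question asked through the system resolver
```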

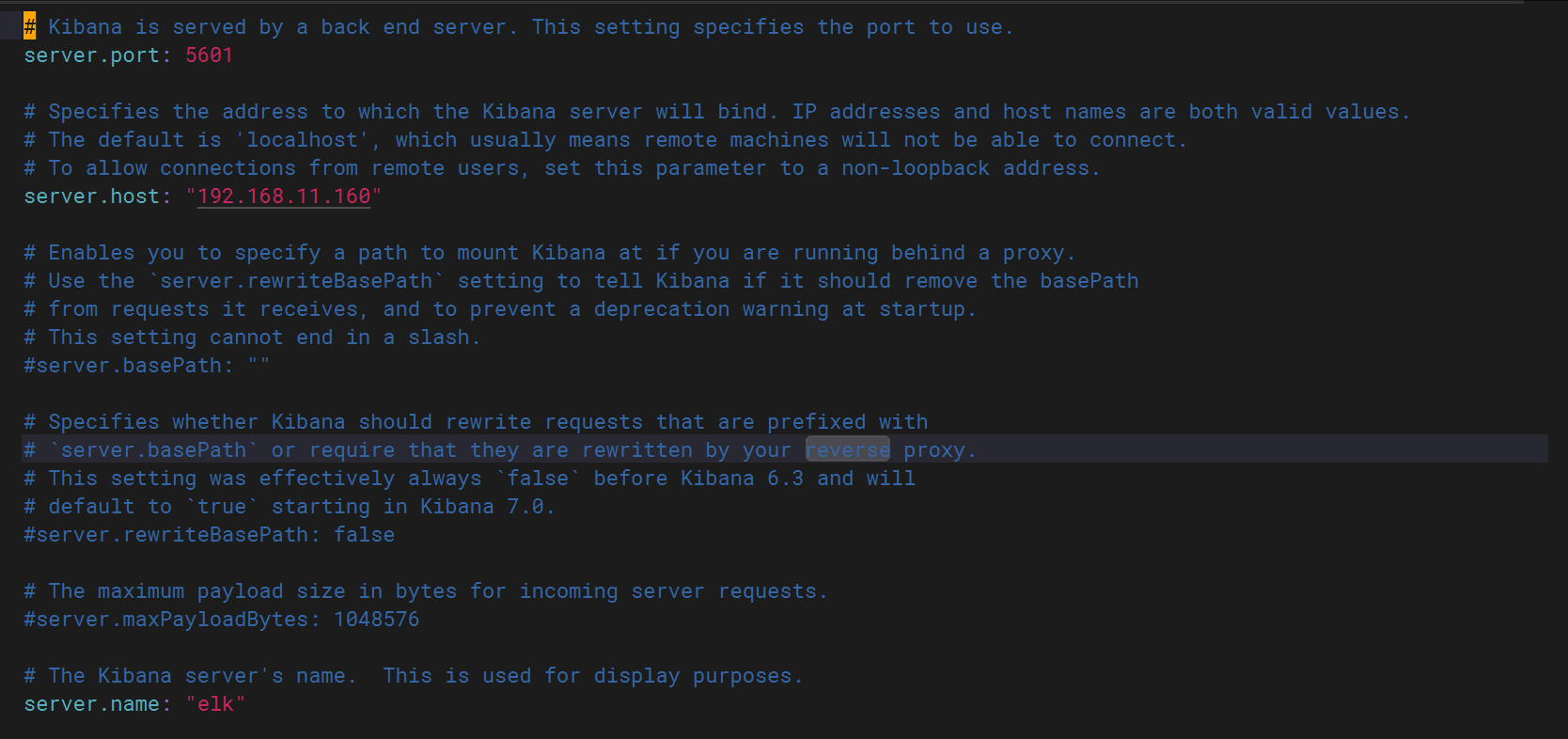

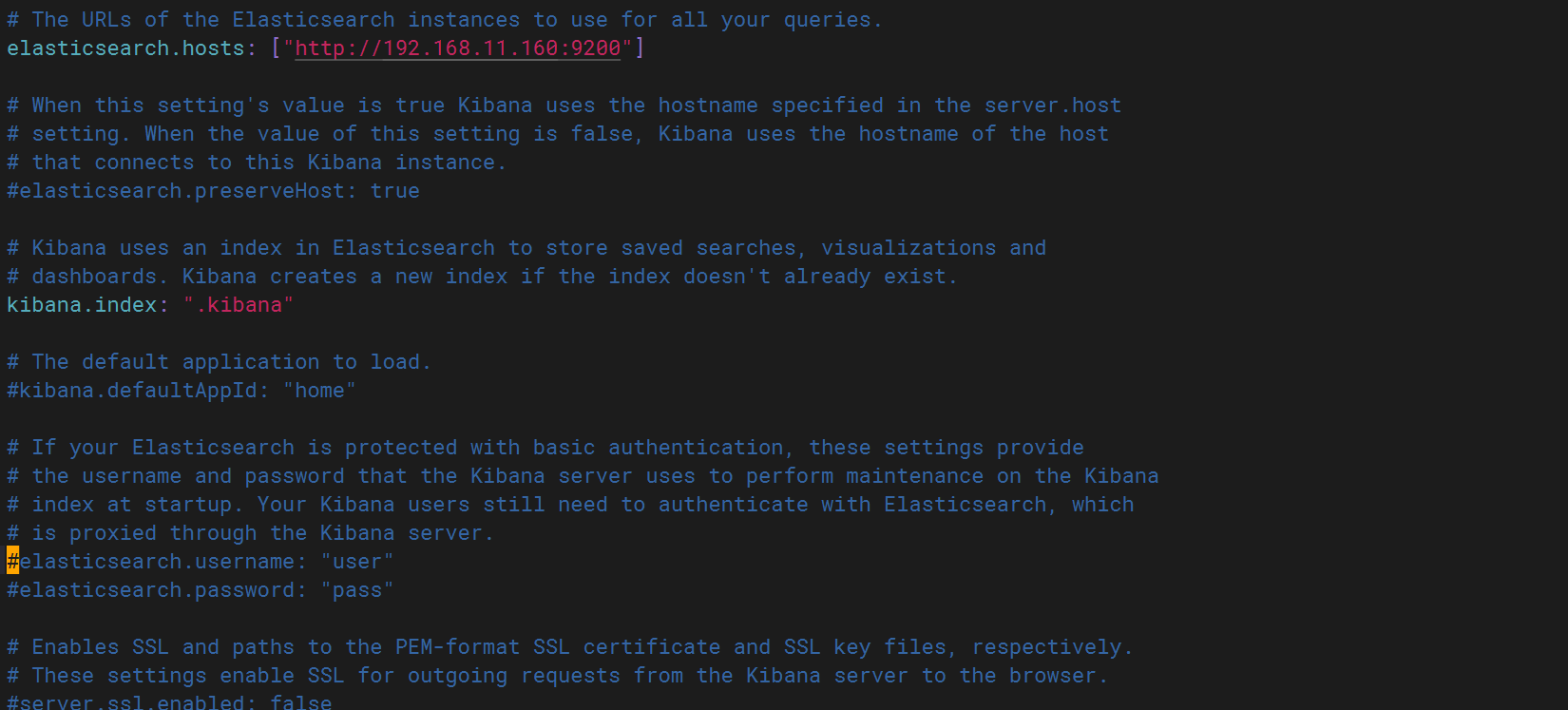

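6. Configure Kibana

[root@elk ~]# vim /etc/kibana/kibana.yml ## edit the Kibana configuration

server.port: 5601 ## port Kibana listens on

server.host: "192.168.11.160" ## address Kibana binds to (elk also works, because /etc/hosts now maps it)

server.name: "elk" ## server name

elasticsearch.hosts: ["http://192.168.11.160:9200"] ## Elasticsearch host

kibana.index: ".kibana" ## index Kibana uses for its own data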

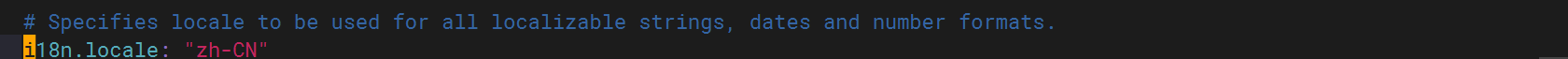

i18n.locale: "zh-CN" ## display the interface in Chinese

[root@elk ~]# systemctl start kibana ## start Kibana

[root@elk ~]# netstat -anptu | grep 5601 ## check that Kibana is listening

tcp 0 0 192.168.11.160:5601 0.0.0.0:* LISTEN 57307/node

7. Configure Logstash

[root@elk ~]# cat /etc/logstash/logstash.yml ## view the Logstash settings; no changes are needed here

[root@elk ~]# vim /etc/logstash/conf.d/pipline.conf ## create pipline.conf with the following content

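    input {
      ## read data from a file with the file plugin
      file {
        ## path of the log file to read
        path => "/var/log/messages"
        ## where to start reading on the first run;
        ## "beginning" reads the whole file from the start
        start_position => "beginning"
      }
    }

    output {
      ## output section: defines where the data goes
      ## send the data to Elasticsearch with the elasticsearch plugin
      elasticsearch {
        ## address of the Elasticsearch node; several addresses can be listed for high availability
        hosts => ["http://192.168.11.160:9200"]
        ## index name template; a new index is created for each day
        index => "system-log-%{+YYYY.MM.dd}"
      }
      ## also print events to standard output (the console)
      stdout {
        ## rubydebug prints each event in a readable, JSON-like format
        codec => rubydebug
      }
    }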
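The %{+YYYY.MM.dd} part of the index name is filled in from each event's @timestamp, which Logstash keeps in UTC, so the daily index can differ from the local calendar date. The sketch below computes the index name for a given timestamp; it assumes GNU date (for the -d option), and index_for_date is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Compute the index name that "system-log-%{+YYYY.MM.dd}" would yield for a timestamp.
# Assumes GNU date (for -d); index_for_date is a hypothetical helper name.
index_for_date() {
    date -u -d "$1" +'system-log-%Y.%m.%d'
}

# Example:
#   index_for_date now
#   curl "http://192.168.11.160:9200/_cat/indices/system-log-*?v"   # list matching indices
```

For instance, an event logged at 03:24 on 17 July in a UTC+8 timezone lands in system-log-2025.07.16. Before starting the pipeline, `logstash -f /etc/logstash/conf.d/pipline.conf --config.test_and_exit` can be used to check the file's syntax.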

8. Test

Test Logstash

[root@elk ~]# logstash -e 'input{ stdin{} }output { stdout{} }'

[INFO ] 2025-07-16 21:21:29.007 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

1

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/awesome_print-1.7.0/lib/awesome_print/formatters/base_formatter.rb:31: warning: constant ::Fixnum is deprecated

    {
        "message" => "1",
        "@version" => "1",
        "host" => "elk",
        "@timestamp" => 2025-07-16T13:21:33.148Z
    }

[root@elk conf.d]# logstash -e 'input{ stdin{} }output { stdout{ codec=>rubydebug }}'

[INFO ] 2025-07-16 21:23:52.835 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

1

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/awesome_print-1.7.0/lib/awesome_print/formatters/base_formatter.rb:31: warning: constant ::Fixnum is deprecated

    {
        "@timestamp" => 2025-07-16T13:23:54.454Z,
        "@version" => "1",
        "host" => "elk",
        "message" => "1"
    }

[root@elk conf.d]# logstash -f /etc/logstash/conf.d/pipline.conf

[INFO ] 2025-07-16 20:11:13.660 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/awesome_print-1.7.0/lib/awesome_print/formatters/base_formatter.rb:31: warning: constant ::Fixnum is deprecated

    {
        "message" => "Jul 17 03:24:55 elk systemd[2400]: Starting Tracker metadata extractor...",
        "@version" => "1",
        "path" => "/var/log/messages",
        "@timestamp" => 2025-07-16T12:11:15.233Z,
        "host" => "elk"
    }

...... ## the output keeps scrolling until Logstash is stopped manually

Test Elasticsearch

[root@localhost ~]# curl 192.168.11.160:9200

    {
      "name" : "elk",
      "cluster_name" : "my-application",
      "cluster_uuid" : "WzA4MSNsRV6vMYSgX5EePw",
      "version" : {
        "number" : "7.1.1",
        "build_flavor" : "default",
        "build_type" : "rpm",
        "build_hash" : "7a013de",
        "build_date" : "2019-05-23T14:04:00.380842Z",
        "build_snapshot" : false,
        "lucene_version" : "8.0.0",
        "minimum_wire_compatibility_version" : "6.8.0",
        "minimum_index_compatibility_version" : "6.0.0-beta1"
      },
      "tagline" : "You Know, for Search"
    }
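For a scripted check, the version number can be pulled out of this response. The sketch below uses only grep and sed; es_version is a hypothetical helper name, and a JSON-aware tool such as jq would be more robust where it is installed.

```shell
#!/usr/bin/env bash
# Read an Elasticsearch root-endpoint response on stdin and print version.number.
# es_version is a hypothetical helper; it relies on the "number" key
# appearing only inside the "version" object, as in the response above.
es_version() {
    grep -o '"number" *: *"[^"]*"' | head -n 1 | sed 's/.*"\([^"]*\)"$/\1/'
}

# Example:
#   curl -s 192.168.11.160:9200 | es_version
```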

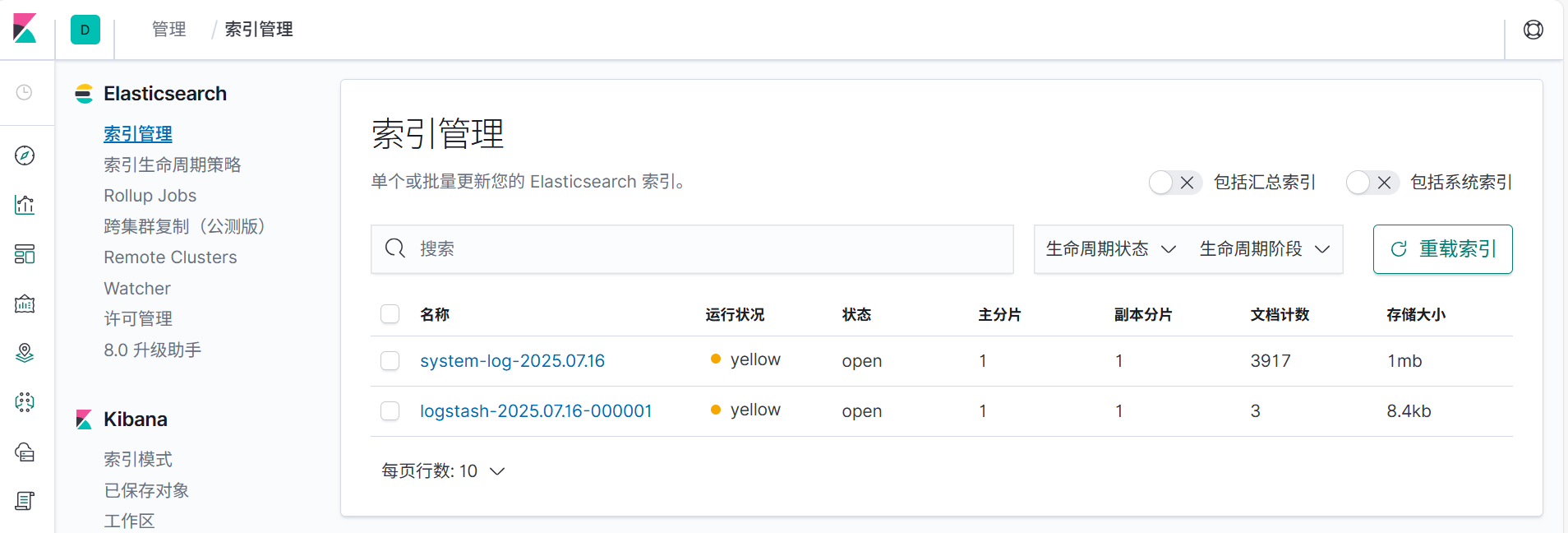

Test Kibana

Open http://192.168.11.160:5601 in a browser.

After the following command has run, the system-log index appears, as shown in the figure:

[root@elk conf.d]# logstash -f /etc/logstash/conf.d/pipline.conf

If you see the screen shown above, Kibana is configured correctly.