Building and Deploying the OpenStack Virtualization Platform (T Release)

1 Environment Preparation

1.1 Host Planning

1.1.1 Hostname resolution in /etc/hosts

[root@controller ~]# cat >> /etc/hosts <<EOF
> #controller
> 11.0.1.10 controller
>
> #compute1
> 11.0.1.11 compute1
> EOF

[root@compute1 ~]# cat >> /etc/hosts <<EOF
> #controller
> 11.0.1.10 controller
>
> #compute1
> 11.0.1.11 compute1
> EOF
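Because `cat >>` appends unconditionally, rerunning this step duplicates the entries. A minimal sketch of an idempotent alternative, assuming bash and awk are available; `add_host` is a hypothetical helper written for this example, not part of OpenStack or Ubuntu:

```shell
#!/usr/bin/env bash
# add_host FILE IP NAME  (hypothetical helper)
# Append "IP NAME" to FILE only if NAME is not already mapped on a
# non-comment line, so rerunning the setup does not duplicate entries.
add_host() {
  local file="$1" ip="$2" name="$3"
  # awk exits 0 when NAME appears in a hostname column (2..NF), 1 otherwise
  if ! awk -v n="$name" '
      $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

Usage on each node (as root): `add_host /etc/hosts 11.0.1.10 controller` and `add_host /etc/hosts 11.0.1.11 compute1`; `getent hosts compute1` should then resolve to 11.0.1.11.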

1.1.2 Disable the firewall

sudo systemctl stop ufw

sudo systemctl disable ufw

1.1.3 Disable SELinux

Check that SELinux is disabled on both nodes:

root@controller:~# sestatus
SELinux status: disabled

root@compute1:~# sestatus
SELinux status: disabled

1.2 Install the OpenStack base packages

# Add the Ubuntu Cloud Archive repository (Zed release)
add-apt-repository cloud-archive:zed

# Install the packages
apt install nova-compute -y

apt install python3-openstackclient -y

1.3 Install the database (controller node)

1.3.1 Install the packages

apt install mariadb-server python3-pymysql -y

1.3.2 Database configuration

cat >> /etc/mysql/mariadb.conf.d/99-openstack.cnf <<EOF
[mysqld]
bind-address = 11.0.1.10
default-storage-engine = innodb
innodb_file_per_table = on
max_connections = 4096
collation-server = utf8_general_ci
character-set-server = utf8
EOF

# Restart MariaDB so the new settings take effect
systemctl restart mariadb

# Secure the installation
mysql_secure_installation

root@controller:~# mysql_secure_installation

NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MariaDB
      SERVERS IN PRODUCTION USE!  PLEASE READ EACH STEP CAREFULLY!

In order to log into MariaDB to secure it, we'll need the current
password for the root user. If you've just installed MariaDB, and
haven't set the root password yet, you should just press enter here.

Enter current password for root (enter for none):
OK, successfully used password, moving on...

Setting the root password or using the unix_socket ensures that nobody
can log into the MariaDB root user without the proper authorisation.

You already have your root account protected, so you can safely answer 'n'.

Switch to unix_socket authentication [Y/n]
Enabled successfully!
Reloading privilege tables..
 ... Success!

You already have your root account protected, so you can safely answer 'n'.

Change the root password? [Y/n] n
 ... skipping.

By default, a MariaDB installation has an anonymous user, allowing anyone
to log into MariaDB without having to have a user account created for
them. This is intended only for testing, and to make the installation
go a bit smoother. You should remove them before moving into a
production environment.

Remove anonymous users? [Y/n] y
 ... Success!

Normally, root should only be allowed to connect from 'localhost'. This
ensures that someone cannot guess at the root password from the network.

Disallow root login remotely? [Y/n] y
 ... Success!

By default, MariaDB comes with a database named 'test' that anyone can
access. This is also intended only for testing, and should be removed
before moving into a production environment.

Remove test database and access to it? [Y/n] y
 - Dropping test database...
 ... Success!
 - Removing privileges on test database...
 ... Success!

Reloading the privilege tables will ensure that all changes made so far
will take effect immediately.

Reload privilege tables now? [Y/n] y
 ... Success!

Cleaning up...

All done! If you've completed all of the above steps, your MariaDB
installation should now be secure.

Thanks for using MariaDB!

1.4 Install the message queue (controller node)

1.4.1 Install the packages

# Install RabbitMQ
root@controller:~# apt install rabbitmq-server -y

# Enable the service at boot and start it now
root@controller:~# systemctl enable --now rabbitmq-server

Synchronizing state of rabbitmq-server.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable rabbitmq-server

root@controller:~# systemctl status rabbitmq-server
● rabbitmq-server.service - RabbitMQ Messaging Server
     Loaded: loaded (/lib/systemd/system/rabbitmq-server.service; enabled; vendor preset: enabled)
     Active: active (running) since Sun 2025-08-10 15:02:29 CST; 1min 59s ago
   Main PID: 2234 (beam.smp)
      Tasks: 24 (limit: 4516)
     Memory: 92.1M
        CPU: 5.538s
     CGroup: /system.slice/rabbitmq-server.service
             ├─2234 /usr/lib/erlang/erts-12.2.1/bin/beam.smp -W w -MBas ageffcbf -MHas ageffcbf -MBlmbcs 512 -MHlmbcs 512 -MMmcs 30 -P 1048576 -t 5000000 -stbt db -zdbbl 128000 -sbwt no>
             ├─2246 erl_child_setup 65536
             ├─2297 inet_gethost 4
             ├─2298 inet_gethost 4
             └─2301 /bin/sh -s rabbit_disk_monitor

Aug 10 15:02:23 controller systemd[1]: Starting RabbitMQ Messaging Server...
Aug 10 15:02:29 controller systemd[1]: Started RabbitMQ Messaging Server.


1.4.2 Configuration

# Add the user: openstack
rabbitmqctl add_user openstack RABBIT_PASS

# Grant the openstack user configure, write and read permissions
rabbitmqctl set_permissions openstack ".*" ".*" ".*"
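The two rabbitmqctl commands can be wrapped so that the step is safe to repeat. A sketch assuming bash; `ensure_rabbit_user` is a hypothetical name, and it assumes the user name is the first column of `rabbitmqctl list_users` output:

```shell
#!/usr/bin/env bash
# ensure_rabbit_user USER PASS  (hypothetical helper)
# Create USER only if `rabbitmqctl list_users` does not already show it,
# then grant configure/write/read on everything in the default vhost "/".
ensure_rabbit_user() {
  local user="$1" pass="$2"
  # first column of list_users holds the user names; -x matches the whole name
  if ! rabbitmqctl list_users | awk '{print $1}' | grep -qx "$user"; then
    rabbitmqctl add_user "$user" "$pass"
  fi
  rabbitmqctl set_permissions "$user" ".*" ".*" ".*"
}
```

Usage: `ensure_rabbit_user openstack RABBIT_PASS`; afterwards `rabbitmqctl list_permissions` should list the openstack user with `.*` for configure, write and read.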

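1.5 Memcached in-memory cache (controller node)

1.5.1 Install the packages

root@controller:~# apt install memcached python3-memcache -y

1.5.2 Configuration

root@controller:~# vim /etc/memcached.conf    # change one line: replace -l 127.0.0.1 with the controller's management IP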

#...

-l 11.0.1.10

1.5.3 Enable at boot

# Start the service
root@controller:~# systemctl enable --now memcached
Synchronizing state of memcached.service with SysV service script with /lib/systemd/systemd-sysv-install.
Executing: /lib/systemd/systemd-sysv-install enable memcached

root@controller:~# systemctl status memcached.service
● memcached.service - memcached daemon
     Loaded: loaded (/lib/systemd/system/memcached.service; enabled; vendor preset: enabled)
     Active: active (running) since Sun 2025-08-10 15:10:58 CST; 2min 17s ago
       Docs: man:memcached(1)
   Main PID: 3325 (memcached)
      Tasks: 10 (limit: 4516)
     Memory: 2.1M
        CPU: 62ms
     CGroup: /system.slice/memcached.service
             └─3325 /usr/bin/memcached -m 64 -p 11211 -u memcache -l 127.0.0.1 -P /var/run/memcached/memcached.pid

Aug 10 15:10:58 controller systemd[1]: Started memcached daemon.

Note: the running process still listens on -l 127.0.0.1, not 11.0.1.10. Installing the package already started memcached with its default configuration, and systemctl enable --now does not restart a service that is already running, so run systemctl restart memcached after editing /etc/memcached.conf.
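The one-line edit to /etc/memcached.conf can also be scripted. A minimal sketch, assuming GNU sed (`sed -i`) and the Debian/Ubuntu memcached.conf layout with one option per line; `set_memcached_listen` is hypothetical:

```shell
#!/usr/bin/env bash
# set_memcached_listen FILE ADDR  (hypothetical helper)
# Replace the active "-l ..." line in a Debian/Ubuntu-style memcached.conf
# with "-l ADDR", or append one if no active -l line exists.
set_memcached_listen() {
  local file="$1" addr="$2"
  if grep -q '^-l ' "$file"; then
    sed -i "s/^-l .*/-l ${addr}/" "$file"
  else
    # "--" stops printf from treating the leading "-l" as an option
    printf -- '-l %s\n' "$addr" >> "$file"
  fi
}
```

Usage: `set_memcached_listen /etc/memcached.conf 11.0.1.10 && systemctl restart memcached`; `ss -ltn | grep 11211` should then show 11.0.1.10:11211. The explicit restart matters because the service is already running after installation.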

1.6 Etcd key-value store (controller node)

1.6.1 Install the packages

root@controller:~# apt install etcd -y

1.6.2 Configuration

root@controller:~# cp /etc/default/etcd /etc/default/etcd.bak

root@controller:~# grep -Ev '^$|#' /etc/default/etcd.bak > /etc/default/etcd

root@controller:~# vim /etc/default/etcd

ETCD_NAME="controller"

ETCD_DATA_DIR="/var/lib/etcd"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER="controller=http://11.0.1.10:2380"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://11.0.1.10:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://11.0.1.10:2379"

ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="http://11.0.1.10:2379"

root@controller:~# systemctl enable --now etcd
Synchronizing state of etcd.service with SysV service script with /lib/systemd/systemd-sysv-install.
Executing: /lib/systemd/systemd-sysv-install enable etcd

root@controller:~# systemctl status etcd
● etcd.service - etcd - highly-available key value store
     Loaded: loaded (/lib/systemd/system/etcd.service; enabled; vendor preset: enabled)
     Active: active (running) since Sun 2025-08-10 15:32:46 CST; 6min ago
       Docs: https://etcd.io/docs
             man:etcd
   Main PID: 4638 (etcd)
      Tasks: 8 (limit: 4516)
     Memory: 5.5M
        CPU: 1.529s
     CGroup: /system.slice/etcd.service
             └─4638 /usr/bin/etcd

Aug 10 15:32:46 controller etcd[4638]: 8e9e05c52164694d received MsgVoteResp from 8e9e05c52164694d at term 2

Aug 10 15:32:46 controller etcd[4638]: 8e9e05c52164694d became leader at term 2

Aug 10 15:32:46 controller etcd[4638]: raft.node: 8e9e05c52164694d elected leader 8e9e05c52164694d at term 2

Aug 10 15:32:46 controller etcd[4638]: setting up the initial cluster version to 3.3

Aug 10 15:32:46 controller etcd[4638]: set the initial cluster version to 3.3

Aug 10 15:32:46 controller etcd[4638]: enabled capabilities for version 3.3

Aug 10 15:32:46 controller etcd[4638]: published {Name:controller ClientURLs:[http://localhost:2379]} to cluster cdf818194e3a8c32

Aug 10 15:32:46 controller etcd[4638]: ready to serve client requests

Aug 10 15:32:46 controller etcd[4638]: serving insecure client requests on 127.0.0.1:2379, this is strongly discouraged!

Aug 10 15:32:46 controller systemd[1]: Started etcd - highly-available key value store.

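root@controller:~#

Note: the log above still reports ClientURLs:[http://localhost:2379] and "serving insecure client requests on 127.0.0.1:2379", so etcd is running with the package defaults rather than the edited /etc/default/etcd (installing the package starts the service, and systemctl enable --now does not restart it). Run systemctl restart etcd to apply the settings above.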
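Since every address in /etc/default/etcd derives from the node name and the management IP, the file can be generated instead of edited by hand. A sketch assuming bash; `write_etcd_defaults` is hypothetical and overwrites the target file, so keep the .bak copy made earlier:

```shell
#!/usr/bin/env bash
# write_etcd_defaults FILE NAME IP  (hypothetical helper)
# Write a single-member etcd environment file with the same settings as
# the hand-edited /etc/default/etcd, parameterized by node name and IP.
write_etcd_defaults() {
  local file="$1" name="$2" ip="$3"
  cat > "$file" <<EOF
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"
ETCD_INITIAL_CLUSTER="${name}=http://${ip}:2380"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://${ip}:2379"
ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"
ETCD_LISTEN_CLIENT_URLS="http://${ip}:2379"
EOF
}
```

Usage: `write_etcd_defaults /etc/default/etcd controller 11.0.1.10 && systemctl restart etcd`; then `etcdctl --endpoints=http://11.0.1.10:2379 member list` should show a single member named controller.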

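2 Installing the OpenStack Service Components

Note: installing each OpenStack service follows the same general steps:

- Create the database and grant privileges
- Create the service account in Keystone
- Create the service entity in Keystone
- Install the service packages
- Edit the service configuration file
- Synchronize the database
- Start the service
- Verify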

2.1 Identity service Keystone (controller node)

2.1.1 Create the database

root@controller:~# mysql
Welcome to the MariaDB monitor.  Commands end with ; or \g.
Your MariaDB connection id is 40
Server version: 10.6.22-MariaDB-0ubuntu0.22.04.1 Ubuntu 22.04

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE keystone;
Query OK, 1 row affected (0.018 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' \
    -> IDENTIFIED BY 'KEYSTONE_DBPASS';
Query OK, 0 rows affected (0.019 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' \
    -> IDENTIFIED BY 'KEYSTONE_DBPASS';
Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> quit
Bye
root@controller:~#
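Every service repeats this create-and-grant pattern (keystone here, glance in 2.2.1), so the statements can be generated from the service name and password. A sketch assuming bash; `db_grant_sql` is hypothetical, and `IF NOT EXISTS` is an addition to the statements above so that rerunning it does not fail:

```shell
#!/usr/bin/env bash
# db_grant_sql NAME PASS  (hypothetical helper)
# Print the CREATE DATABASE / GRANT statements for one OpenStack service;
# the database and the DB user are both called NAME. Pipe into `mysql`.
db_grant_sql() {
  local name="$1" pass="$2"
  cat <<EOF
CREATE DATABASE IF NOT EXISTS ${name};
GRANT ALL PRIVILEGES ON ${name}.* TO '${name}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${name}.* TO '${name}'@'%' IDENTIFIED BY '${pass}';
EOF
}
```

Usage: `db_grant_sql keystone KEYSTONE_DBPASS | mysql` runs the same statements as the interactive session above; `db_grant_sql glance GLANCE_DBPASS | mysql` covers the Glance database.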

2.1.2 Install and configure the packages

2.1.2.1 Install and configure Keystone

# Install the packages
root@controller:~# apt install keystone -y

# Configure: in [database] set connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone,
# and in [token] set provider = fernet
root@controller:~# cp /etc/keystone/keystone.conf /etc/keystone/keystone.conf.bak
root@controller:~# grep -Ev '^$|#' /etc/keystone/keystone.conf.bak > /etc/keystone/keystone.conf
root@controller:~# vim /etc/keystone/keystone.conf

# Populate the Identity service database
root@controller:~# su -s /bin/sh -c "keystone-manage db_sync" keystone

# Initialize the Fernet key repositories
root@controller:~# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone
root@controller:~# keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

# Bootstrap the Identity service
root@controller:~# keystone-manage bootstrap --bootstrap-password ADMIN_PASS \
  --bootstrap-admin-url http://controller:5000/v3/ \
  --bootstrap-internal-url http://controller:5000/v3/ \
  --bootstrap-public-url http://controller:5000/v3/ \
  --bootstrap-region-id RegionOne
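The keystone.conf edit made with vim before db_sync sets two values: `connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone` under [database] and `provider = fernet` under [token]. A minimal sketch of making such INI edits non-interactively, assuming bash and awk; `ini_set` is a hypothetical helper, not an OpenStack tool:

```shell
#!/usr/bin/env bash
# ini_set FILE SECTION KEY VALUE  (hypothetical helper)
# Set "KEY = VALUE" inside [SECTION]: the first KEY line in that section is
# replaced and further KEY lines there are dropped; if the section has no
# KEY, it is added at the end of the section; if the section is missing,
# "[SECTION]" and the key are appended to the file.
# Note: awk -v interprets backslash escapes, so VALUE must not contain "\".
ini_set() {
  local file="$1" section="$2" key="$3" value="$4" tmp rc
  tmp=$(mktemp) || return 1
  awk -v s="[$section]" -v k="$key" -v v="$value" '
    function flush() { if (insec && !done) { print k " = " v; done = 1 } }
    /^\[/ { flush(); insec = ($0 == s); if (insec) seen = 1 }
    insec && ($0 ~ ("^[ \t]*" k "[ \t]*=")) {
      if (!done) { print k " = " v; done = 1 }
      next
    }
    { print }
    END { flush(); if (!seen) { print s; print k " = " v } }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

Usage: `ini_set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone` followed by `ini_set /etc/keystone/keystone.conf token provider fernet`. Writing back with `cat` instead of `mv` keeps the file's existing owner and permissions, which keystone relies on to read it.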

2.1.2.2 Configure the Apache HTTP server

root@controller:~# echo "ServerName controller" >> /etc/apache2/apache2.conf

root@controller:~# systemctl restart apache2.service

2.1.2.3 Set environment variables for the admin account

root@controller:~# cat > /etc/profile.d/keystone.sh <<EOF

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

EOF

root@controller:~# . /etc/profile.d/keystone.sh

root@controller:~# env|grep OS

OS_AUTH_URL=http://controller:5000/v3

OS_PROJECT_DOMAIN_NAME=Default

LESSCLOSE=/usr/bin/lesspipe %s %s

OS_USERNAME=admin

OS_USER_DOMAIN_NAME=Default

OS_PROJECT_NAME=admin

OS_PASSWORD=ADMIN_PASS

OS_IDENTITY_API_VERSION=3
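`env|grep OS` also matches unrelated variables such as LESSCLOSE; `env | grep '^OS_'` is tighter. In scripts, checking that every variable exported by keystone.sh is set lets them fail early, before any openstack command runs. A sketch assuming bash (it uses `${!v}` indirect expansion); `check_os_env` is hypothetical:

```shell
#!/usr/bin/env bash
# check_os_env  (hypothetical helper)
# Report each OS_* variable from keystone.sh that is unset or empty.
# Returns 0 when all are present, 1 otherwise.
check_os_env() {
  local v missing=0
  for v in OS_USERNAME OS_PASSWORD OS_PROJECT_NAME OS_USER_DOMAIN_NAME \
           OS_PROJECT_DOMAIN_NAME OS_AUTH_URL OS_IDENTITY_API_VERSION; do
    # ${!v} expands the variable whose name is stored in v
    if [ -z "${!v:-}" ]; then
      echo "missing: $v" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `. /etc/profile.d/keystone.sh && check_os_env && openstack token issue`.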

2.1.3 Create a domain, projects, users, and roles

root@controller:~# openstack domain create --description "An Example Domain" example

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | An Example Domain |

| enabled | True |

| id | 0281405e0afc4e5aaa8e61433fe779ae |

| name | example |

| options | {} |

| tags | [] |

+-------------+----------------------------------+

root@controller:~# openstack project create --domain default \
  --description "Service Project" service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | default |

| enabled | True |

| id | bf36f7ce3b9744a4b3816eaf5f04d6be |

| is_domain | False |

| name | service |

| options | {} |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

root@controller:~# openstack project create --domain default \
  --description "Demo Project" myproject

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Demo Project |

| domain_id | default |

| enabled | True |

| id | 576be1db3aea44149d1b4477fb708cb2 |

| is_domain | False |

| name | myproject |

| options | {} |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

root@controller:~# openstack user create --domain default \
  --password 123456 myuser

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | cc796956b5d246039c4f2d0e6d9284fb |

| name | myuser |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

root@controller:~# openstack role create myrole

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | None |

| domain_id | None |

| id | 143f348c19254090b77e49eafeca16a6 |

| name | myrole |

| options | {} |

+-------------+----------------------------------+

root@controller:~# openstack role add --project myproject --user myuser myrole

root@controller:~#

2.1.4 Verify

root@controller:~# openstack token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2025-08-10T10:21:42+0000 |

| id | gAAAAABomGSm2gTzfhMK8gXGXvltqoWTJVChjPV7AAISvSCncRfLCvnUltRuy3XOv-X6CQ0IlbssqJegDLNOmaWYxUzOEagQYxIYw5tQ51u86DOowqaXc5V5StpszycPMRW0pruOVEq0Lcp73cmwFMISRGuKjvFI-EDFRiCNWNEg7y10hfs9e2s |

| project_id | 00286ab0bea74e97ae6307e8245b98af |

| user_id | 42a4c1c0c4a84b49935fb1370a0b93b2 |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

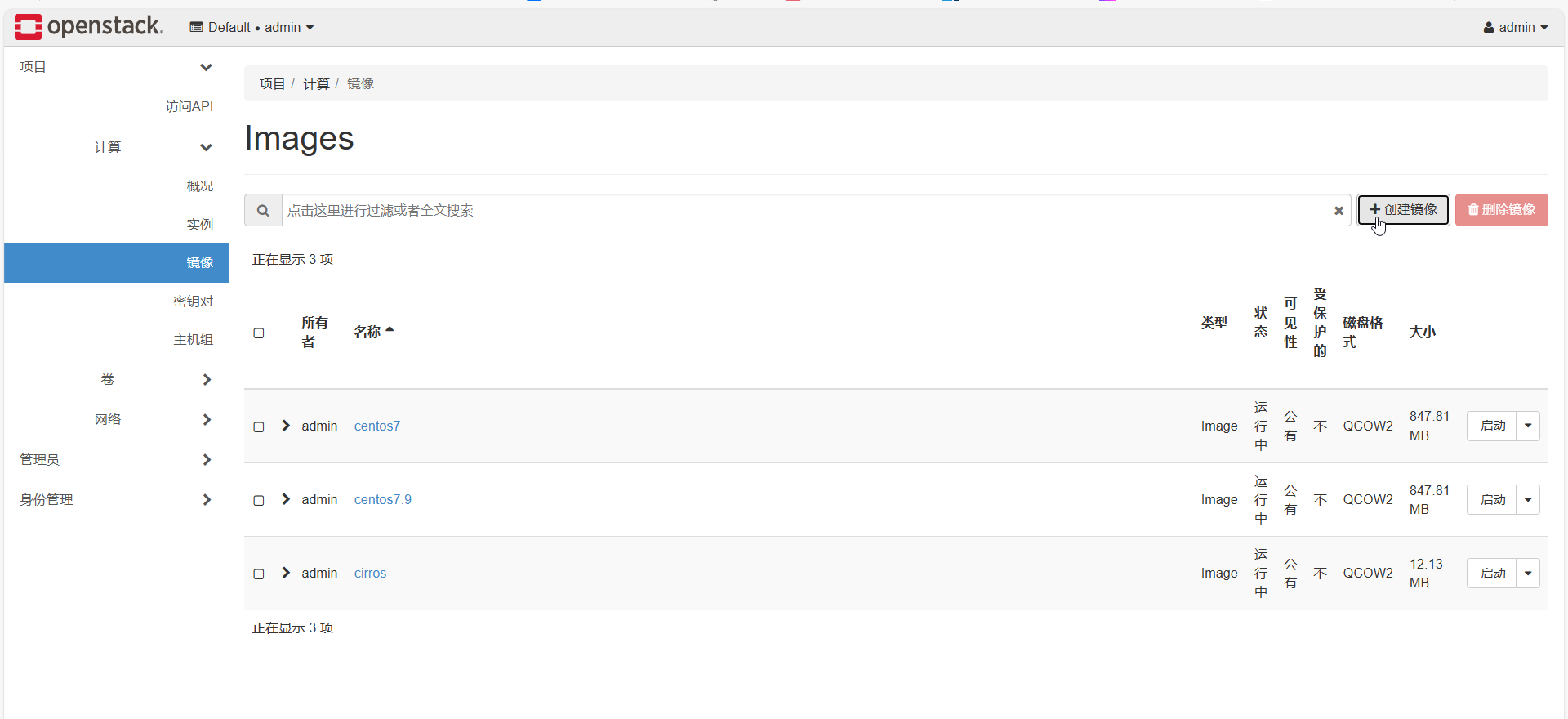

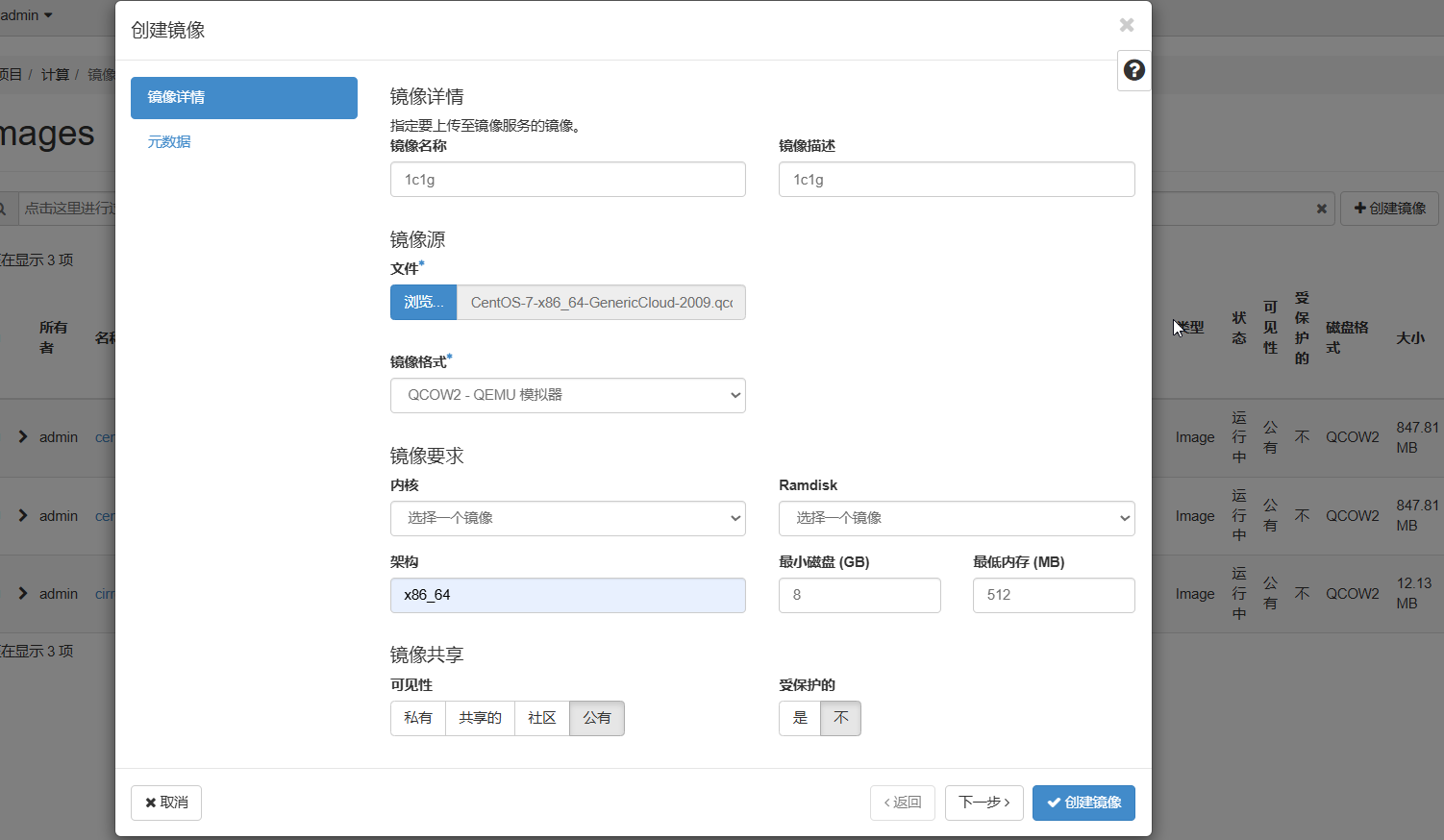

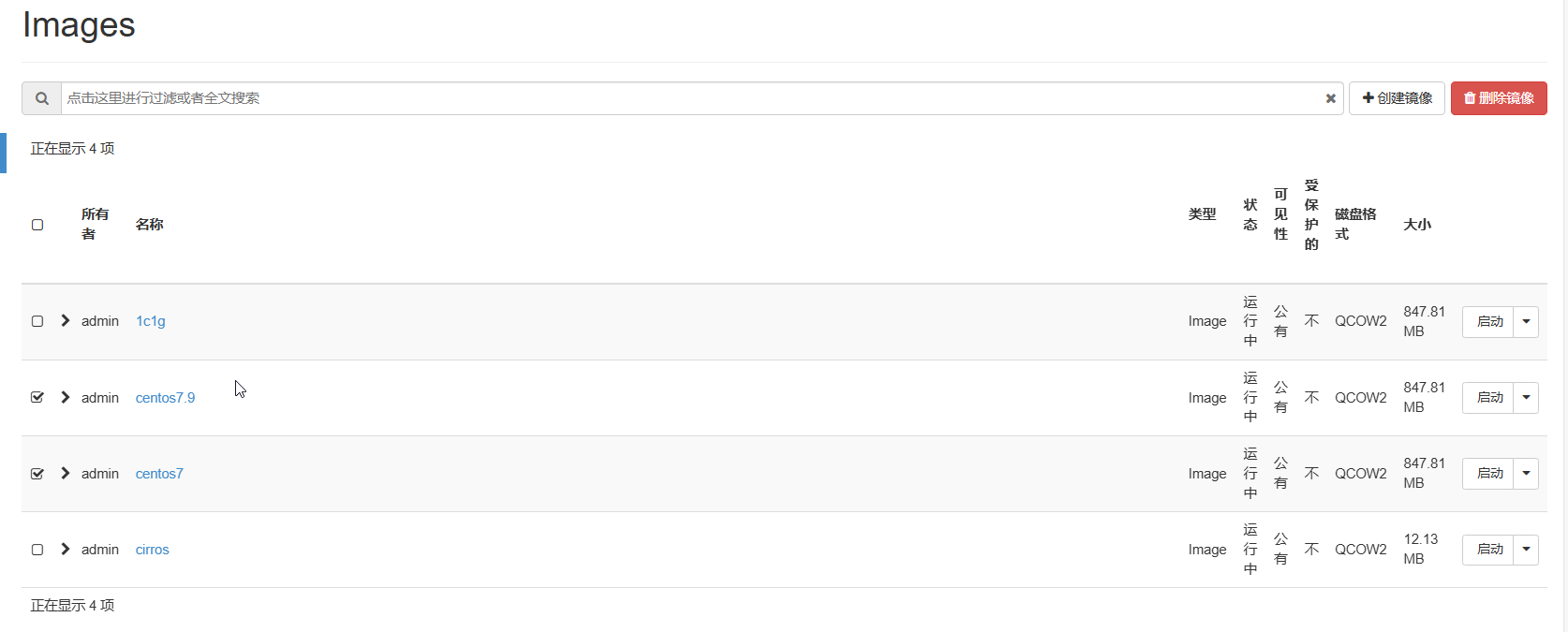
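The token above was issued for admin. To confirm that myuser from 2.1.3 can also authenticate, the admin variables can be overridden for a single command. A sketch assuming bash; `as_user` is hypothetical, and it only changes OS_USERNAME, OS_PROJECT_NAME and OS_PASSWORD, keeping the Default domains from keystone.sh:

```shell
#!/usr/bin/env bash
# as_user USER PROJECT PASSWORD CMD [ARGS...]  (hypothetical helper)
# Run CMD with the admin credentials from keystone.sh overridden for that
# one command only; the caller's environment is left unchanged.
as_user() {
  local user="$1" project="$2" pass="$3"
  shift 3
  OS_USERNAME="$user" OS_PROJECT_NAME="$project" OS_PASSWORD="$pass" "$@"
}
```

Usage: `as_user myuser myproject 123456 openstack token issue` should print a token whose project_id matches myproject (576be1db3aea44149d1b4477fb708cb2 in the output above).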

2.2 Image service Glance (controller node)

2.2.1 Create the database and grant privileges

# Create the database
root@controller:~# mysql
Welcome to the MariaDB monitor.  Commands end with ; or \g.
Your MariaDB connection id is 43
Server version: 10.6.22-MariaDB-0ubuntu0.22.04.1 Ubuntu 22.04

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE glance;
Query OK, 1 row affected (0.001 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \
    -> IDENTIFIED BY 'GLANCE_DBPASS';
Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \
    -> IDENTIFIED BY 'GLANCE_DBPASS';
Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> quit
Bye
root@controller:~#

# Create the service credentials
## Create the user: glance (its password must match password = GLANCE_PASS in glance-api.conf)
root@controller:~# openstack user create --domain default --password GLANCE_PASS glance

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 7cdc21f956004f5a83e8d1c005d24577 |

| name | glance |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Add the admin role to the glance user in the service project
root@controller:~# openstack role add --project service --user glance admin

# Create the glance service entity
root@controller:~# openstack service create --name glance \
  --description "OpenStack Image" image

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Image |

| enabled | True |

| id | a96d664ba14e4b03b2132bfa1f1f3c88 |

| name | glance |

| type | image |

+-------------+----------------------------------+

# Create the Image service API endpoints
root@controller:~# openstack endpoint create --region RegionOne \
  image public http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 9312ad9182e9460083525f711bb03cef |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | a96d664ba14e4b03b2132bfa1f1f3c88 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

root@controller:~# openstack endpoint create --region RegionOne \
  image internal http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 82777a1290cf4483a9f78d82821220ec |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | a96d664ba14e4b03b2132bfa1f1f3c88 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

root@controller:~# openstack endpoint create --region RegionOne \
  image admin http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | efac96d9f41c4c8bb61598400034d620 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | a96d664ba14e4b03b2132bfa1f1f3c88 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+
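The three endpoint commands differ only in the interface name, so they can be issued in a loop. A sketch assuming bash; `create_endpoints` and the `OPENSTACK_CMD` override (set it to `echo` for a dry run) are hypothetical conveniences, not features of the openstack client:

```shell
#!/usr/bin/env bash
# create_endpoints SERVICE_TYPE URL [REGION]  (hypothetical helper)
# Create the public, internal and admin endpoints of one service.
# OPENSTACK_CMD defaults to "openstack"; set it to "echo" for a dry run.
create_endpoints() {
  local type="$1" url="$2" region="${3:-RegionOne}" iface
  for iface in public internal admin; do
    "${OPENSTACK_CMD:-openstack}" endpoint create --region "$region" \
      "$type" "$iface" "$url" || return 1
  done
}
```

Usage: `create_endpoints image http://controller:9292` reproduces the three commands above; later services can reuse it with their own type and URL.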

2.2.2 Install and configure the components

root@controller:~# apt install glance -y

root@controller:~# cp /etc/glance/glance-api.conf /etc/glance/glance-api.conf.bak

root@controller:~# grep -Ev '^$|#' /etc/glance/glance-api.conf.bak > /etc/glance/glance-api.conf

root@controller:~# vim /etc/glance/glance-api.conf

root@controller:~# cat /etc/glance/glance-api.conf

[DEFAULT]

enabled_backends=fs:file

# (Optional) enable per-tenant quotas
use_keystone_limits = True

[barbican]

[barbican_service_user]

[cinder]

[cors]

# Configure database access in the [database] section

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance
# (remove the packaged "connection = sqlite:////var/lib/glance/glance.sqlite" line; if both are present, the later one takes effect)

backend = sqlalchemy

[file]

[fs]

filesystem_store_datadir = /var/lib/glance/images/

[glance.store.http.store]

[glance.store.rbd.store]

[glance.store.s3.store]

[glance.store.swift.store]

[glance.store.vmware_datastore.store]

# Configure the local file system store and the image file location in [glance_store]

[glance_store]

default_backend = fs

[healthcheck]

[image_format]

disk_formats = ami,ari,aki,vhd,vhdx,vmdk,raw,qcow2,vdi,iso,ploop.root-tar

[key_manager]

# Configure Identity service access in [keystone_authtoken] and [paste_deploy]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = GLANCE_PASS

[os_brick]

[oslo_concurrency]

# Configure access to Keystone in [oslo_limit]

[oslo_limit]

auth_url = http://controller:5000

auth_type = password

user_domain_id = default

username = glance

system_scope = all

password = GLANCE_PASS

endpoint_id = ENDPOINT_ID

region_name = RegionOne

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[paste_deploy]

flavor = keystone

[profiler]

[store_type_location_strategy]

[task]

[taskflow_executor]

[vault]

[wsgi]

root@controller:~# openstack endpoint list --service glance --region RegionOne
+----------------------------------+-----------+--------------+--------------+---------+-----------+------------------------+

| ID | Region | Service Name | Service Type | Enabled | Interface | URL |

+----------------------------------+-----------+--------------+--------------+---------+-----------+------------------------+

| 82777a1290cf4483a9f78d82821220ec | RegionOne | glance | image | True | internal | http://controller:9292 |

| 9312ad9182e9460083525f711bb03cef | RegionOne | glance | image | True | public | http://controller:9292 |

| efac96d9f41c4c8bb61598400034d620 | RegionOne | glance | image | True | admin | http://controller:9292 |

+----------------------------------+-----------+--------------+--------------+---------+-----------+------------------------+

root@controller:~#
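The `endpoint_id = ENDPOINT_ID` placeholder in [oslo_limit] has to be replaced with one of the IDs listed above. A sketch, assuming GNU sed and assuming the public endpoint is the one to use (the source does not say which interface was chosen); `set_endpoint_id` is hypothetical:

```shell
#!/usr/bin/env bash
# set_endpoint_id FILE ID  (hypothetical helper)
# Replace the "endpoint_id = ENDPOINT_ID" placeholder with a real ID.
set_endpoint_id() {
  local file="$1" id="$2"
  # reject empty input or anything that is not lowercase hex
  case "$id" in
    "" | *[!0-9a-f]*) echo "invalid endpoint id: $id" >&2; return 1 ;;
  esac
  sed -i "s/^endpoint_id = ENDPOINT_ID$/endpoint_id = ${id}/" "$file"
}
```

Usage: `id=$(openstack endpoint list --service glance --region RegionOne --interface public -f value -c ID)` and then `set_endpoint_id /etc/glance/glance-api.conf "$id"`. With the listing above, `$id` would be 9312ad9182e9460083525f711bb03cef.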

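# Give the glance user reader access to system-scope resources such as limits (needed by [oslo_limit])
root@controller:~# openstack role add --user glance --user-domain Default --system all reader
root@controller:~#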

root@controller:~# vim /etc/glance/glance-api.conf

root@controller:~# su -s /bin/sh -c "glance-manage db_sync" glance

2025-08-10 18:19:46.932 4387 INFO alembic.runtime.migration [-] Context impl SQLiteImpl.

2025-08-10 18:19:46.933 4387 INFO alembic.runtime.migration [-] Will assume non-transactional DDL.

2025-08-10 18:19:46.939 4387 INFO alembic.runtime.migration [-] Context impl SQLiteImpl.

2025-08-10 18:19:46.939 4387 INFO alembic.runtime.migration [-] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Context impl SQLiteImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Running upgrade -> liberty, liberty initial

INFO [alembic.runtime.migration] Running upgrade liberty -> mitaka01, add index on created_at and updated_at columns of 'images' table

INFO [alembic.runtime.migration] Running upgrade mitaka01 -> mitaka02, update metadef os_nova_server

INFO [alembic.runtime.migration] Running upgrade mitaka02 -> ocata_expand01, add visibility to images

INFO [alembic.runtime.migration] Running upgrade ocata_expand01 -> pike_expand01, empty expand for symmetry with pike_contract01

INFO [alembic.runtime.migration] Running upgrade pike_expand01 -> queens_expand01

INFO [alembic.runtime.migration] Running upgrade queens_expand01 -> rocky_expand01, add os_hidden column to images table

INFO [alembic.runtime.migration] Running upgrade rocky_expand01 -> rocky_expand02, add os_hash_algo and os_hash_value columns to images table

INFO [alembic.runtime.migration] Running upgrade rocky_expand02 -> train_expand01, empty expand for symmetry with train_contract01

INFO [alembic.runtime.migration] Running upgrade train_expand01 -> ussuri_expand01, empty expand for symmetry with ussuri_expand01

INFO [alembic.runtime.migration] Running upgrade ussuri_expand01 -> wallaby_expand01, add image_id, request_id, user columns to tasks table"

INFO [alembic.runtime.migration] Context impl SQLiteImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Upgraded database to: wallaby_expand01, current revision(s): wallaby_expand01

INFO [alembic.runtime.migration] Context impl SQLiteImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Context impl SQLiteImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Database migration is up to date. No migration needed.

INFO [alembic.runtime.migration] Context impl SQLiteImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Context impl SQLiteImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Running upgrade mitaka02 -> ocata_contract01, remove is_public from images

INFO [alembic.runtime.migration] Running upgrade ocata_contract01 -> pike_contract01, drop glare artifacts tables

INFO [alembic.runtime.migration] Running upgrade pike_contract01 -> queens_contract01

INFO [alembic.runtime.migration] Running upgrade queens_contract01 -> rocky_contract01

INFO [alembic.runtime.migration] Running upgrade rocky_contract01 -> rocky_contract02

INFO [alembic.runtime.migration] Running upgrade rocky_contract02 -> train_contract01

INFO [alembic.runtime.migration] Running upgrade train_contract01 -> ussuri_contract01

INFO [alembic.runtime.migration] Running upgrade ussuri_contract01 -> wallaby_contract01

INFO [alembic.runtime.migration] Context impl SQLiteImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Upgraded database to: wallaby_contract01, current revision(s): wallaby_contract01

INFO [alembic.runtime.migration] Context impl SQLiteImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

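Database is synced successfully.

Note: the "Context impl SQLiteImpl." lines above show that this db_sync ran against the packaged SQLite database, not MariaDB: the default connection = sqlite:////var/lib/glance/glance.sqlite line, when left in [database] after the MySQL URL, takes precedence. With only the mysql+pymysql connection line present, alembic reports MySQLImpl instead; rerun glance-manage db_sync after correcting the file.

root@controller:~# systemctl daemon-reload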

root@controller:~# systemctl enable --now glance-api.service

Synchronizing state of glance-api.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable glance-api

root@controller:~# systemctl status glance-api.service
● glance-api.service - OpenStack Image Service API
     Loaded: loaded (/lib/systemd/system/glance-api.service; enabled; vendor preset: enabled)
     Active: active (running) since Sun 2025-08-10 18:08:00 CST; 12min ago
       Docs: man:glance-api(1)
   Main PID: 3881 (glance-api)
      Tasks: 3 (limit: 4516)
     Memory: 108.9M
        CPU: 9.124s
     CGroup: /system.slice/glance-api.service
             ├─3881 /usr/bin/python3 /usr/bin/glance-api --config-file=/etc/glance/glance-api.conf --config-dir=/etc/glance/ --log-file=/var/log/glance/glance-api.log
             ├─3939 /usr/bin/python3 /usr/bin/glance-api --config-file=/etc/glance/glance-api.conf --config-dir=/etc/glance/ --log-file=/var/log/glance/glance-api.log
             └─3940 /usr/bin/python3 /usr/bin/glance-api --config-file=/etc/glance/glance-api.conf --config-dir=/etc/glance/ --log-file=/var/log/glance/glance-api.log

Aug 10 18:08:00 controller systemd[1]: Started OpenStack Image Service API.

Note: glance-api has been running since 18:08:00, before the last edit of glance-api.conf and the db_sync at 18:19:46, so run systemctl restart glance-api.service to make it use the final configuration.

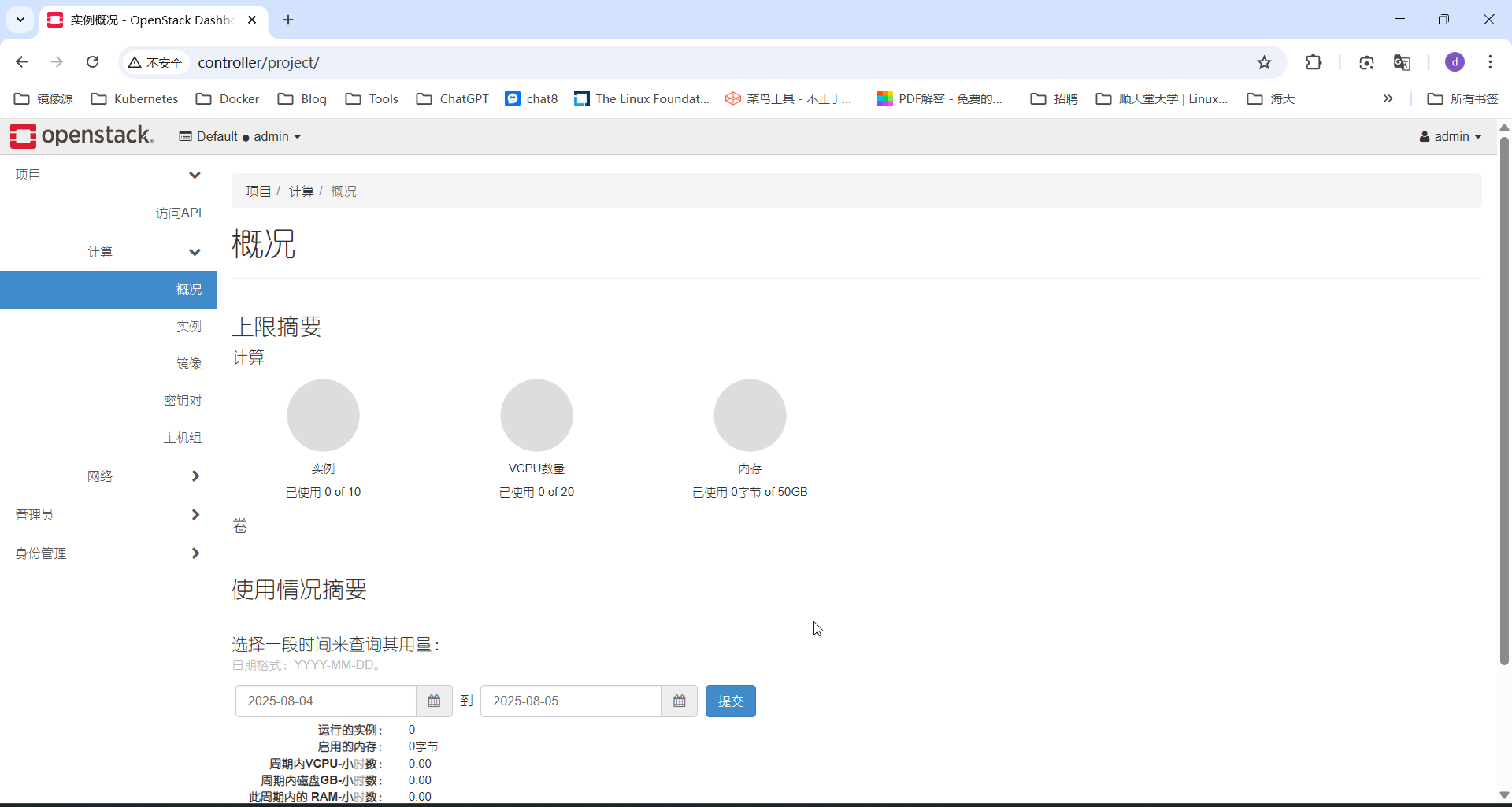

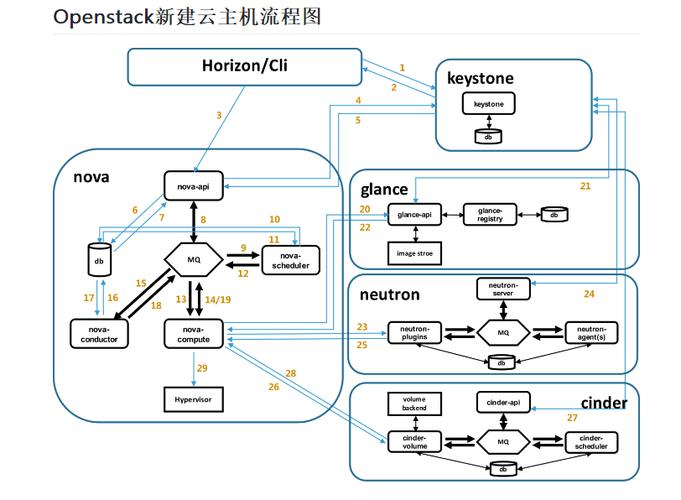
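Whether db_sync actually reached MariaDB can be read off the alembic lines in its output: `Context impl SQLiteImpl.` means the SQLite file was used, `Context impl MySQLImpl.` means the MySQL connection was. A sketch assuming bash, grep and awk; `db_backend_from_log` is hypothetical:

```shell
#!/usr/bin/env bash
# db_backend_from_log  (hypothetical helper)
# Read db_sync output on stdin and print each distinct alembic
# implementation name found in its "Context impl <Name>Impl." lines.
db_backend_from_log() {
  grep -o 'Context impl [A-Za-z]*Impl' | awk '{print $3}' | sort -u
}
```

Usage: `su -s /bin/sh -c "glance-manage db_sync" glance 2>&1 | db_backend_from_log` should print MySQLImpl once [database] contains only the mysql+pymysql connection line.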

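1 OpenStack Architecture and Deployment (Train Release)

OpenStack is a management platform for KVM virtual machines.

Related components:

keystone: identity service (OpenStack accounts and passwords)

nova: compute service (provides compute resources)

neutron: networking service (provides network connectivity); OpenStack is multi-tenant, so users can define their own networks (VPCs)

glance: image service (disk files with an operating system already installed); OpenStack instances are linked clones, so they are built on top of a base image

cinder: block storage (cloud disks)

horizon: web dashboard

ceilometer: monitoring and metering (billing)

swift: object storage

heat: orchestration; launches VMs in batches from YAML templates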

Installation and deployment, using the Train release as an example

Environment preparation (CentOS 7)

System initialization

# Host address plan

#controller
11.0.1.7 controller    # the official documentation uses 10.0.0.11

#compute1
11.0.1.17 compute1    # the official documentation uses 10.0.0.31

# Run the following on both the controller and the compute node
[root@centos7 ~]# hostnamectl set-hostname controller    # on the controller node
[root@centos7 ~]# hostnamectl set-hostname compute1      # on the compute node

# Command to run on each node
cat >> /etc/hosts <<EOF
#controller
11.0.1.7 controller
#compute1
11.0.1.17 compute1
EOF

[root@controller ~]# cat >> /etc/hosts <<EOF
> #controller
> 11.0.1.7 controller
>
> #compute1
> 11.0.1.17 compute1
> EOF

[root@compute1 ~]# cat >> /etc/hosts <<EOF
> #controller
> 11.0.1.7 controller
>
> #compute1
> 11.0.1.17 compute1
> EOF

# Test

[root@controller ~]# ping compute1

PING compute1 (11.0.1.17) 56(84) bytes of data.

64 bytes from compute1 (11.0.1.17): icmp_seq=1 ttl=64 time=0.503 ms

64 bytes from compute1 (11.0.1.17): icmp_seq=2 ttl=64 time=0.412 ms

64 bytes from compute1 (11.0.1.17): icmp_seq=3 ttl=64 time=0.449 ms

64 bytes from compute1 (11.0.1.17): icmp_seq=4 ttl=64 time=0.297 ms

^C

--- compute1 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3002ms

rtt min/avg/max/mdev = 0.297/0.415/0.503/0.076 ms

[root@compute1 ~]# ping controller

PING controller (11.0.1.7) 56(84) bytes of data.

64 bytes from controller (11.0.1.7): icmp_seq=1 ttl=64 time=0.560 ms

64 bytes from controller (11.0.1.7): icmp_seq=2 ttl=64 time=0.481 ms

64 bytes from controller (11.0.1.7): icmp_seq=3 ttl=64 time=0.617 ms

64 bytes from controller (11.0.1.7): icmp_seq=4 ttl=64 time=0.760 ms

^C

--- controller ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3001ms

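rtt min/avg/max/mdev = 0.481/0.604/0.760/0.104 ms

# Install the chrony time service (chronyd): the nodes' clocks must agree for the services to work correctly and for tokens to validate consistently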

[root@controller ~]# yum install -y chrony    # the controller node acts as the time server

[root@compute1 ~]# yum install -y chrony    # the compute node is a client that syncs its clock with the controller

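# Controller: set the upstream time source (server line) and allow clients in 11.0.1.0/24 to sync (allow line)
[root@controller ~]# grep -Ev '^(#|$)' /etc/chrony.conf
server ntp.aliyun.com iburst
driftfile /var/lib/chrony/drift
makestep 1.0 3
rtcsync
allow 11.0.1.0/24
logdir /var/log/chrony

# Edit the configuration file on the compute node so that it syncs from the controller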

[root@compute1 ~]# grep -Ev '^(#|$)' /etc/chrony.conf

server controller iburst

driftfile /var/lib/chrony/drift

makestep 1.0 3

rtcsync

logdir /var/log/chrony

## Restart the service
[root@controller ~]# systemctl restart chronyd    # ss -unltp should then show UDP port 123 listening

[root@compute1 ~]# systemctl restart chronyd

# Verify

[root@controller ~]# date

Tue Aug 5 10:25:36 CST 2025

[root@compute1 ~]# date

Tue Aug 5 10:25:36 CST 2025
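Comparing `date` output only shows agreement to the second. `chronyc tracking` reports the measured offset in its `System time` line; a sketch that checks it against a threshold, assuming bash and awk, with a hypothetical function name `clock_ok` and an arbitrary default limit of 0.5 s:

```shell
#!/usr/bin/env bash
# clock_ok [MAX_SECONDS]  (hypothetical helper)
# Read `chronyc tracking` output on stdin; succeed only if a "System time"
# line is present and its offset (4th field, in seconds) is below MAX_SECONDS.
clock_ok() {
  awk -v max="${1:-0.5}" '
    /^System time/ { found = 1; off = $4 + 0 }
    END { exit !(found && off < max + 0) }'
}
```

Usage on compute1: `chronyc tracking | clock_ok 0.1 && echo in sync`; `chronyc sources` should also list controller as the time source.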

Install the OpenStack base packages (Stein release)

# Install the repository package for the chosen release
yum install centos-release-openstack-stein -y    # the older release repositories have been discontinued

# Configure the package sources
[root@controller ~]# cat > /etc/yum.repos.d/openstack.repo <<EOF
[base]
name=CentOS-\$releasever - Base
baseurl=http://mirrors.aliyun.com/centos/\$releasever/os/\$basearch/
gpgcheck=0

[updates]
name=CentOS-\$releasever - Updates
baseurl=http://mirrors.aliyun.com/centos/\$releasever/updates/\$basearch/
gpgcheck=0

[extras]
name=CentOS-\$releasever - Extras
baseurl=http://mirrors.aliyun.com/centos/\$releasever/extras/\$basearch/
gpgcheck=0

[cloud]
name=CentOS-\$releasever - Cloud
baseurl=https://mirrors.aliyun.com/centos/\$releasever/cloud/x86_64/openstack-train/
gpgcheck=0

[virt]
name=CentOS-\$releasever - Virt
baseurl=https://mirrors.aliyun.com/centos/\$releasever/virt/x86_64/kvm-common/
gpgcheck=0
EOF

# Clear the cache and update
yum clean all && yum update

# Install the client
yum install -y python-openstackclient

Install the SQL database

Official documentation:

https://docs.openstack.org/install-guide/environment-sql-database-rdo.html

# Install the packages
[root@controller ~]# yum install mariadb mariadb-server python2-PyMySQL -y

# Start MariaDB and enable it at boot (the server must be running before mysql_secure_installation)
[root@controller ~]# systemctl enable --now mariadb.service

# Initialize the database
[root@controller ~]# mysql_secure_installation

NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MariaDB
      SERVERS IN PRODUCTION USE!  PLEASE READ EACH STEP CAREFULLY!

In order to log into MariaDB to secure it, we'll need the current
password for the root user. If you've just installed MariaDB, and
you haven't set the root password yet, the password will be blank,
so you should just press enter here.

Enter current password for root (enter for none):
OK, successfully used password, moving on...

Setting the root password ensures that nobody can log into the MariaDB
root user without the proper authorisation.

Set root password? [Y/n] n
 ... skipping.

By default, a MariaDB installation has an anonymous user, allowing anyone
to log into MariaDB without having to have a user account created for
them. This is intended only for testing, and to make the installation
go a bit smoother. You should remove them before moving into a
production environment.

Remove anonymous users? [Y/n] y
 ... Success!

Normally, root should only be allowed to connect from 'localhost'. This
ensures that someone cannot guess at the root password from the network.

Disallow root login remotely? [Y/n] y
 ... Success!

By default, MariaDB comes with a database named 'test' that anyone can
access. This is also intended only for testing, and should be removed
before moving into a production environment.

Remove test database and access to it? [Y/n] y
 - Dropping test database...
 ... Success!
 - Removing privileges on test database...
 ... Success!

Reloading the privilege tables will ensure that all changes made so far
will take effect immediately.

Reload privilege tables now? [Y/n] y
 ... Success!

Cleaning up...

All done! If you've completed all of the above steps, your MariaDB
installation should now be secure.

Thanks for using MariaDB!

Message queue: RabbitMQ

Official documentation:

https://docs.openstack.org/install-guide/environment-messaging-rdo.html

[root@controller ~]# yum install rabbitmq-server -y

[root@controller ~]# systemctl enable --now rabbitmq-server.service

# Create the openstack user with its password

[root@controller ~]# rabbitmqctl add_user openstack RABBIT_PASS

Creating user "openstack"

# Grant the openstack user configure, write and read permissions

[root@controller ~]# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/"

Cache: Memcached

Official documentation:

https://docs.openstack.org/install-guide/environment-memcached-rdo.html

[root@controller ~]# yum install memcached python-memcached -y

# Edit the configuration file: add controller to the listen addresses in OPTIONS
[root@controller ~]# cat /etc/sysconfig/memcached
PORT="11211"
USER="memcached"
MAXCONN="1024"
CACHESIZE="64"
OPTIONS="-l 127.0.0.1,::1,controller"

# Enable at boot and start the service
[root@controller ~]# systemctl enable --now memcached.service

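Etcd (optional)

Official documentation:

https://docs.openstack.org/install-guide/environment-etcd.html

Install the Keystone identity service

Official documentation:

https://docs.openstack.org/keystone/2024.2/install/index.html

Installation and configuration

# Create the database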

[root@controller ~]# mysql

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 14

Server version: 10.3.20-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE keystone;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' \
    -> IDENTIFIED BY 'KEYSTONE_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' \
    -> IDENTIFIED BY 'KEYSTONE_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> quit

Bye

#Install the packages

[root@controller ~]# yum install openstack-keystone httpd mod_wsgi

##This may fail with a dependency error; if so, install the missing dependency manually and retry

[root@controller ~]# yum --disablerepo=epel install qpid-proton-c-0.26.0-2.el7.x86_64

[root@controller ~]# yum install openstack-keystone httpd mod_wsgi -y

[root@controller ~]# cp /etc/keystone/keystone.conf /etc/keystone/keystone.conf.bak

[root@controller ~]# grep -Ev '^#|^$' /etc/keystone/keystone.conf.bak > /etc/keystone/keystone.conf

[root@controller ~]# vim /etc/keystone/keystone.conf

......

[database]

connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

#......

[token]

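provider = fernet

#Command: su -s /bin/sh -c "keystone-manage db_sync" keystone

#Explanation: su switches user; -s /bin/sh sets the shell, -c gives the command to run, and the last argument (keystone) is the user to run it as

#Initialize the database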

[root@controller ~]# su -s /bin/sh -c "keystone-manage db_sync" keystone

#Initialize the Fernet key repositories

[root@controller ~]# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

[root@controller ~]# keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

[root@controller ~]# ls /etc/keystone/ -l

total 124

drwx------ 2 keystone keystone 24 Aug 5 12:26 credential-keys

-rw-r----- 1 root keystone 2303 Jun 7 2021 default_catalog.templates

drwx------ 2 keystone keystone 24 Aug 5 12:26 fernet-keys

-rw-r----- 1 root keystone 693 Aug 5 12:11 keystone.conf

-rw-r----- 1 root root 106413 Aug 5 12:09 keystone.conf.bak

-rw-r----- 1 root keystone 1046 Jun 7 2021 logging.conf

-rw-r----- 1 root keystone 3 Jun 8 2021 policy.json

-rw-r----- 1 keystone keystone 665 Jun 7 2021 sso_callback_template.html

#Bootstrap the Identity service and set the admin password

[root@controller ~]# keystone-manage bootstrap --bootstrap-password ADMIN_PASS \
  --bootstrap-admin-url http://controller:5000/v3/ \
  --bootstrap-internal-url http://controller:5000/v3/ \
  --bootstrap-public-url http://controller:5000/v3/ \
  --bootstrap-region-id RegionOne

#Configure httpd

[root@controller ~]# echo "ServerName controller" >> /etc/httpd/conf/httpd.conf

[root@controller ~]# ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

[root@controller ~]# systemctl restart httpd.service

[root@controller ~]# ss -ntlp |grep 5000 #should be listening on port 5000

LISTEN 0 128 [::]:5000 [::]:* users:(("httpd",pid=15654,fd=6),("httpd",pid=15653,fd=6),("httpd",pid=15652,fd=6),("httpd",pid=15651,fd=6),("httpd",pid=15650,fd=6),("httpd",pid=15644,fd=6))

#Create the environment variable file

[root@controller ~]# cat > /etc/profile.d/keystone.sh <<EOF

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

EOF

[root@controller ~]# . /etc/profile.d/keystone.sh

[root@controller ~]# env|grep OS

HOSTNAME=controller

OS_USER_DOMAIN_NAME=Default

OS_PROJECT_NAME=admin

OS_IDENTITY_API_VERSION=3

OS_PASSWORD=ADMIN_PASS

OS_AUTH_URL=http://controller:5000/v3

OS_USERNAME=admin

OS_PROJECT_DOMAIN_NAME=Default
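The `env|grep OS` check above relies on reading the output by eye. A small sketch that fails loudly when one of the variables from /etc/profile.d/keystone.sh is missing; it uses `printenv`, so it only sees exported variables, which are also the only ones the `openstack` client can read. `check_os_env` is a hypothetical helper:

```shell
# Hypothetical helper: fail if any variable set in /etc/profile.d/keystone.sh
# is not exported (or is exported but empty).
check_os_env() {
  local name missing=0
  for name in OS_USERNAME OS_PASSWORD OS_PROJECT_NAME \
      OS_USER_DOMAIN_NAME OS_PROJECT_DOMAIN_NAME \
      OS_AUTH_URL OS_IDENTITY_API_VERSION; do
    # printenv only sees exported variables, like the openstack client itself
    if [ -z "$(printenv "$name")" ]; then
      echo "not set: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `. /etc/profile.d/keystone.sh && check_os_env && openstack token issue`.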

Create a domain, projects, users, and roles

[root@controller ~]# openstack domain create --description "An Example Domain" example

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | An Example Domain |

| enabled | True |

| id | b01e7f05376b43a193b9190ea93e82e2 |

| name | example |

| options | {} |

| tags | [] |

+-------------+----------------------------------+

[root@controller ~]# openstack project create --domain default \

> --description "Service Project" service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | default |

| enabled | True |

| id | a1f9fd14361248aba88bfffacbfb314e |

| is_domain | False |

| name | service |

| options | {} |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

[root@controller ~]# openstack project create --domain default \

> --description "Demo Project" myproject

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Demo Project |

| domain_id | default |

| enabled | True |

| id | e7fee4783d1b4b639a44050a47038759 |

| is_domain | False |

| name | myproject |

| options | {} |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

[root@controller ~]# openstack user create --domain default --password 123456 myuser

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | e21f3888a90c48159526084ceda5a419 |

| name | myuser |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

[root@controller ~]# openstack role create myrole

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | None |

| domain_id | None |

| id | 89d5f21d603342c5b564cada4a61d21f |

| name | myrole |

| options | {} |

+-------------+----------------------------------+

[root@controller ~]# openstack role add --project myproject --user myuser myrole

Load the environment variables

#Generate the environment variable file

cat <<EOF | tee admin-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

source admin-openrc

Verification

#If a token is issued successfully, the Keystone service is deployed correctly

[root@controller ~]# . admin-openrc

[root@controller ~]# openstack token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2025-08-05T06:04:39+0000 |

| id | gAAAAABokZDnbF5uLuKbKaimyhuQxngf5-epp8xe_R8sXcMq_FaBQgfgTt5o3KbY1rFD1-GV1mUbDUXHH61yhXTE7I0THj9zd_023DTKFLtkhQ9uOf_mz0uqsyjgyzFWCdzsDz0S9u2ZOYC2CJD8muXH_dGvs6UgJKLltMEanhl8ob1vMj3KWWA |

| project_id | a005165483ef415abe2521ef39ec1674 |

| user_id | 2c7d1f2ce2ea4d13a0b669cfbd3c45fd |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

Install the Glance image service

Official documentation:

https://docs.openstack.org/glance/2024.2/install/

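General steps for installing an OpenStack service

- Create the database and grant privileges

- Create the service user in Keystone

- Create the service entity and API endpoints in Keystone

- Install the service packages

- Edit the service configuration files

- Sync the database

- Start the services

- Verify

Create the database

#Create the database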

[root@controller ~]# mysql

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 23

Server version: 10.3.20-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE glance;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \
    -> IDENTIFIED BY 'GLANCE_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \
    -> IDENTIFIED BY 'GLANCE_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> quit

Bye
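Every service in this guide repeats the same CREATE DATABASE statement followed by two GRANTs (localhost and '%'). A sketch of a generator whose output can be piped into `mysql`, assuming the MariaDB server used here; `gen_db_sql` is a hypothetical helper, and `IF NOT EXISTS` is added so that a re-run is harmless:

```shell
# Hypothetical helper: print the SQL that creates each database and grants
# USER full access to it from localhost and from any host ('%').
# Usage: gen_db_sql USER PASSWORD DB [DB...]
gen_db_sql() {
  local user="$1" pass="$2" db host
  shift 2
  for db in "$@"; do
    echo "CREATE DATABASE IF NOT EXISTS ${db};"
    for host in localhost '%'; do
      # As in the transcripts above, MariaDB creates the account on
      # GRANT ... IDENTIFIED BY if it does not exist yet.
      echo "GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'${host}' IDENTIFIED BY '${pass}';"
    done
  done
}
```

Usage: `gen_db_sql glance GLANCE_DBPASS glance | mysql`; for Nova one call covers all three databases: `gen_db_sql nova NOVA_DBPASS nova_api nova nova_cell0 | mysql`. MySQL 8 no longer accepts `IDENTIFIED BY` inside `GRANT`, so this sketch only targets MariaDB.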

#Create the user

[root@controller ~]# openstack user create --domain default --password GLANCE_PASS glance

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | fbddd318ddc54c0ba29ce7ef299cf715 |

| name | glance |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

#Add the admin role to the glance user in the service project

[root@controller ~]# openstack role add --project service --user glance admin

#Create the glance service entity

[root@controller ~]# openstack service create --name glance \

> --description "OpenStack Image" image

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Image |

| enabled | True |

| id | ef627660145745f296e085173e581206 |

| name | glance |

| type | image |

+-------------+----------------------------------+

#Create the Image service API endpoints

[root@controller ~]# openstack endpoint create --region RegionOne \

> image public http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 784e3a56038f4fe1b8d31c6be16440e6 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | ef627660145745f296e085173e581206 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \

> image internal http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 8e03a87f9d0c4663b62e23aa5b1f3d81 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | ef627660145745f296e085173e581206 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \

> image admin http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 5cc714ce7e864ec7954612439ffdf5d6 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | ef627660145745f296e085173e581206 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+
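The three `endpoint create` calls differ only in the interface name. A sketch that loops over public, internal and admin, assuming the `openstack` client and the admin environment are already loaded; `create_endpoints` is a hypothetical helper:

```shell
# Hypothetical helper: create the public, internal and admin endpoints of one
# service type in RegionOne, all pointing at the same URL.
# Usage: create_endpoints SERVICE_TYPE URL
create_endpoints() {
  local service="$1" url="$2" iface
  for iface in public internal admin; do
    # stop at the first failure and return its exit status
    openstack endpoint create --region RegionOne "$service" "$iface" "$url" || return
  done
}
```

Usage: `create_endpoints image http://controller:9292`. The Placement and Compute endpoints created later in this guide follow the same pattern (`placement` with `http://controller:8778`, `compute` with `http://controller:8774/v2.1`).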

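Install the service packages

[root@controller ~]# yum install openstack-glance -y

#Problem encountered: the python and python-libs package versions do not match

tkinter depends on python-2.7.5-89.el7, but python-2.7.5-92.el7_9 is already installed, which causes a version conflict.

The installed kpartx version also conflicts and does not work with device-mapper-multipath.

#Solution: downgrade python and python-libs to the version tkinter requires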

sudo yum downgrade python-2.7.5-89.el7 python-libs-2.7.5-89.el7

Edit the service configuration files

#glance-api configuration file

[root@controller ~]# cp /etc/glance/glance-api.conf /etc/glance/glance-api.conf.bak

[root@controller ~]# grep -Ev '^$|#' /etc/glance/glance-api.conf.bak > /etc/glance/glance-api.conf

[root@controller ~]# vim /etc/glance/glance-api.conf

[root@controller ~]# cat /etc/glance/glance-api.conf

[DEFAULT]

[cinder]

[cors]

#database connection

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

[file]

[glance.store.http.store]

[glance.store.rbd.store]

[glance.store.sheepdog.store]

[glance.store.swift.store]

[glance.store.vmware_datastore.store]

#image store backends and storage path

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

[image_format]

#configure Identity service access

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = GLANCE_PASS

[oslo_concurrency]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

#configure Identity service access

[paste_deploy]

flavor = keystone

[profiler]

[store_type_location_strategy]

[task]

[taskflow_executor]

#glance-registry configuration file

[root@controller ~]# cp /etc/glance/glance-registry.conf /etc/glance/glance-registry.conf.bak

[root@controller ~]# grep -Ev '^$|#' /etc/glance/glance-registry.conf.bak > /etc/glance/glance-registry.conf

[root@controller ~]# vim /etc/glance/glance-registry.conf

[DEFAULT]

#database connection

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

#configure Identity service access

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = GLANCE_PASS

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_policy]

#configure Identity service access

[paste_deploy]

flavor = keystone

[profiler]
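Because a misspelled key or a key placed in the wrong section is typically just ignored by the service, it helps to read the values back after editing. A sketch of a read-only INI lookup in awk; `ini_get` is a hypothetical helper (if the `crudini` package is available, `crudini --get FILE SECTION KEY` serves the same purpose):

```shell
# Hypothetical helper: print the value of KEY in [SECTION] of an INI-style
# file; exit status 1 if the key is not found in that section.
# Usage: ini_get FILE SECTION KEY
ini_get() {
  awk -v sec="[$2]" -v key="$3" '
    function trim(s) { sub(/^[ \t]+/, "", s); sub(/[ \t]+$/, "", s); return s }
    /^[ \t]*[#;]/ { next }                               # comment lines
    /^[ \t]*\[/   { insec = (trim($0) == sec); next }    # section header
    insec {
      n = index($0, "=")
      if (n > 0 && trim(substr($0, 1, n - 1)) == key) {
        print trim(substr($0, n + 1)); found = 1; exit
      }
    }
    END { exit !found }
  ' "$1"
}
```

Usage: `ini_get /etc/glance/glance-api.conf glance_store default_store` should print `file`; `ini_get /etc/glance/glance-registry.conf database connection` prints the database URL.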

Sync the database

#Initialize the database

[root@controller ~]# su -s /bin/sh -c "glance-manage db_sync" glance

#The first run failed with the following output:

[root@controller ~]# su -s /bin/sh -c "glance-manage db_sync" glance

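/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:1371: OsloDBDeprecationWarning: EngineFacade is deprecated; please use oslo_db.sqlalchemy.enginefacade
  expire_on_commit=expire_on_commit, _conf=conf)

The warning itself only says that EngineFacade in oslo_db is deprecated and that oslo_db.sqlalchemy.enginefacade should be used instead.

#It turned out that the glance database and grants had not been created successfully; after creating them again, the sync succeeded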

[root@controller ~]# mysql

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 38

Server version: 10.3.10-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| keystone |

| mysql |

| performance_schema |

+--------------------+

MariaDB [(none)]> SHOW GRANTS FOR glance;

ERROR 1141 (42000): There is no such grant defined for user 'glance' on host '%'

MariaDB [(none)]> create database glance;

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| glance |

| information_schema |

| keystone |

| mysql |

| performance_schema |

+--------------------+

5 rows in set (0.000 sec)

MariaDB [(none)]> show grants for glance;

+-------------------------------------------------------------------------------------------------------+

| Grants for glance@% |

+-------------------------------------------------------------------------------------------------------+

| GRANT USAGE ON *.* TO 'glance'@'%' IDENTIFIED BY PASSWORD '*C0CE56F2C0C7234791F36D89700B02691C1CAB8E' |

| GRANT ALL PRIVILEGES ON `glance`.* TO 'glance'@'%' |

+-------------------------------------------------------------------------------------------------------+

2 rows in set (0.000 sec)

MariaDB [(none)]> quit

Bye

#Run su -s /bin/sh -c "glance-manage db_sync" glance again

Start the services

[root@controller ~]# systemctl restart openstack-glance-api.service openstack-glance-registry.service

[root@controller ~]# ss -lntup|grep -E '9292|9191'

tcp LISTEN 0 128 *:9191 *:* users:(("glance-registry",pid=20532,fd=4),("glance-registry",pid=20531,fd=4),("glance-registry",pid=20510,fd=4))

tcp LISTEN 0 128 *:9292 *:* users:(("glance-api",pid=20535,fd=4),("glance-api",pid=20534,fd=4),("glance-api",pid=20509,fd=4))
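Right after `systemctl restart` an API may need a few seconds before it binds its port, so a single `ss` call can give a false negative. A sketch that polls instead, assuming `ss` from iproute2 and its default `-ntl` column layout; `wait_for_port` is a hypothetical helper:

```shell
# Hypothetical helper: poll `ss -ntl` once per second until something is
# listening on PORT; give up after TIMEOUT seconds (default 30).
# Usage: wait_for_port PORT [TIMEOUT]
wait_for_port() {
  local port="$1" timeout="${2:-30}" i=0
  while [ "$i" -lt "$timeout" ]; do
    # Column 4 of `ss -ntl` is "Local Address:Port", e.g. "*:9292" or "[::]:5000";
    # match on the ":PORT" suffix so that 9292 does not also match 19292.
    if ss -ntl | awk -v p=":$port" \
        'substr($4, length($4) - length(p) + 1) == p { ok = 1 } END { exit !ok }'; then
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  echo "nothing is listening on port $port after ${timeout}s" >&2
  return 1
}
```

Usage: `systemctl restart openstack-glance-api.service openstack-glance-registry.service && wait_for_port 9292 && wait_for_port 9191`.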

Verification

#Load the environment variables

[root@controller ~]# source admin-openrc

#Download the official CirrOS test image

[root@controller ~]# wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

[root@controller ~]# glance image-create --name "cirros" \

> --file cirros-0.4.0-x86_64-disk.img \

> --disk-format qcow2 --container-format bare \

> --visibility=public

+------------------+----------------------------------------------------------------------------------+

| Property | Value |

+------------------+----------------------------------------------------------------------------------+

| checksum | 443b7623e27ecf03dc9e01ee93f67afe |

| container_format | bare |

| created_at | 2025-08-05T10:22:10Z |

| disk_format | qcow2 |

| id | 75a52417-be1a-4289-81cc-afd1e8178f92 |

| min_disk | 0 |

| min_ram | 0 |

| name | cirros |

| os_hash_algo | sha512 |

| os_hash_value | 6513f21e44aa3da349f248188a44bc304a3653a04122d8fb4535423c8e1d14cd6a153f735bb0982e |

| | 2161b5b5186106570c17a9e58b64dd39390617cd5a350f78 |

| os_hidden | False |

| owner | a005165483ef415abe2521ef39ec1674 |

| protected | False |

| size | 12716032 |

| status | active |

| tags | [] |

| updated_at | 2025-08-05T10:22:10Z |

| virtual_size | Not available |

| visibility | public |

+------------------+----------------------------------------------------------------------------------+

[root@controller ~]# glance image-list

+--------------------------------------+--------+

| ID | Name |

+--------------------------------------+--------+

| 75a52417-be1a-4289-81cc-afd1e8178f92 | cirros |

+--------------------------------------+--------+
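Glance's `checksum` field is the MD5 of the uploaded data, so comparing it with the local file is a quick way to confirm that the upload was complete. A minimal sketch assuming GNU coreutils `md5sum`; `verify_image_md5` is a hypothetical helper:

```shell
# Hypothetical helper: compare the MD5 of a local file with the "checksum"
# value Glance reported for the uploaded image.
# Usage: verify_image_md5 FILE EXPECTED_MD5
verify_image_md5() {
  local file="$1" expected="$2" actual
  [ -r "$file" ] || { echo "cannot read $file" >&2; return 2; }
  actual=$(md5sum "$file" | awk '{print $1}')
  if [ "$actual" = "$expected" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file (local $actual, expected $expected)" >&2
    return 1
  fi
}
```

Usage: `verify_image_md5 cirros-0.4.0-x86_64-disk.img 443b7623e27ecf03dc9e01ee93f67afe`, using the checksum from the `glance image-create` output above; `openstack image show cirros -f value -c checksum` prints the same value.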

Install the Nova compute service

Install the Placement service

Official documentation:

https://docs.openstack.org/placement/2024.2/install/install-rdo.html#finalize-installation

#Create the database

[root@controller ~]# mysql

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 13

Server version: 10.3.20-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE placement;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' \
    -> IDENTIFIED BY 'PLACEMENT_DBPASS';

Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' \
    -> IDENTIFIED BY 'PLACEMENT_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> quit

Bye

#Create the user and endpoints

[root@controller ~]# openstack user create --domain default --password PLACEMENT_PASS placement

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 0ecbe1ec839742d9b363658d811e5f27 |

| name | placement |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

[root@controller ~]# openstack role add --project service --user placement admin

[root@controller ~]# openstack service create --name placement \

> --description "Placement API" placement

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Placement API |

| enabled | True |

| id | e171a705c8a847288c7f46ba81bc27c5 |

| name | placement |

| type | placement |

+-------------+----------------------------------+

[root@controller ~]#

[root@controller ~]# openstack endpoint create --region RegionOne \

> placement public http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 8b04f9c7b64740aba44afd93de211258 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | e171a705c8a847288c7f46ba81bc27c5 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

[root@controller ~]#

[root@controller ~]# openstack endpoint create --region RegionOne \

> placement internal http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 3219d8b9d9e246729a3906e77b186828 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | e171a705c8a847288c7f46ba81bc27c5 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

[root@controller ~]#

[root@controller ~]# openstack endpoint create --region RegionOne \

> placement admin http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 61f6e57ee71546d1996d6730bb6ce665 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | e171a705c8a847288c7f46ba81bc27c5 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

Install and configure the components

#Install the packages

[root@controller ~]# yum install openstack-placement-api -y

[root@controller ~]# cp /etc/placement/placement.conf /etc/placement/placement.conf.bak

[root@controller ~]# grep -Ev '^$|#' /etc/placement/placement.conf.bak > /etc/placement/placement.conf

[root@controller ~]# vim /etc/placement/placement.conf

[DEFAULT]

#configure Identity service access: [api] and [keystone_authtoken]

[api]

auth_strategy = keystone

[cors]

#configure Identity service access

[keystone_authtoken]

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = PLACEMENT_PASS

[oslo_policy]

[placement]

#configure database access

[placement_database]

connection = mysql+pymysql://placement:PLACEMENT_DBPASS@controller/placement

[profiler]

#Populate the placement database; the warning below can be ignored

[root@controller ~]# su -s /bin/sh -c "placement-manage db sync" placement

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1280, u"Name 'alembic_version_pkc' ignored for PRIMARY key.")
  result = self._query(query)

#Contents of the generated httpd configuration file

[root@controller ~]# ls /etc/httpd/conf.d/00-placement-api.conf

/etc/httpd/conf.d/00-placement-api.conf

[root@controller ~]# cat /etc/httpd/conf.d/00-placement-api.conf

Listen 8778

<VirtualHost *:8778>
  WSGIProcessGroup placement-api
  WSGIApplicationGroup %{GLOBAL}
  WSGIPassAuthorization On
  WSGIDaemonProcess placement-api processes=3 threads=1 user=placement group=placement
  WSGIScriptAlias / /usr/bin/placement-api
  <IfVersion >= 2.4>
    ErrorLogFormat "%M"
  </IfVersion>
  ErrorLog /var/log/placement/placement-api.log
  #SSLEngine On
  #SSLCertificateFile ...
  #SSLCertificateKeyFile ...
</VirtualHost>

Alias /placement-api /usr/bin/placement-api
<Location /placement-api>
  SetHandler wsgi-script
  Options +ExecCGI
  WSGIProcessGroup placement-api
  WSGIApplicationGroup %{GLOBAL}
  WSGIPassAuthorization On
</Location>

#Add the following content to the file

<Directory /usr/bin>
  <IfVersion >= 2.4>
    Require all granted
  </IfVersion>
  <IfVersion < 2.4>
    Order allow,deny
    Allow from all
  </IfVersion>
</Directory>

#Restart httpd

[root@controller ~]# systemctl restart httpd.service

[root@controller ~]# ss -ntlu|grep 8778

tcp LISTEN 0 128 [::]:8778 [::]:*
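The manual edit of 00-placement-api.conf can also be scripted so that re-running it does not append a second copy of the block. A sketch assuming the file path used above; `add_placement_dir_block` is a hypothetical helper:

```shell
# Hypothetical helper: append the <Directory /usr/bin> access block to the
# Placement vhost once; a second run finds the block and changes nothing.
# Usage: add_placement_dir_block [CONF_FILE]
add_placement_dir_block() {
  local conf="${1:-/etc/httpd/conf.d/00-placement-api.conf}"
  [ -f "$conf" ] || return 2
  if grep -q '^<Directory /usr/bin>' "$conf"; then
    return 0
  fi
  cat >> "$conf" <<'EOF'

<Directory /usr/bin>
  <IfVersion >= 2.4>
    Require all granted
  </IfVersion>
  <IfVersion < 2.4>
    Order allow,deny
    Allow from all
  </IfVersion>
</Directory>
EOF
}
```

Usage: `add_placement_dir_block && systemctl restart httpd.service`.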

Verify the service

[root@controller ~]# placement-status upgrade check

+----------------------------------+

| Upgrade Check Results |

+----------------------------------+

| Check: Missing Root Provider IDs |

| Result: Success |

| Details: None |

+----------------------------------+

| Check: Incomplete Consumers |

| Result: Success |

| Details: None |

+----------------------------------+

Install Nova on the controller node

Create the databases

[root@controller ~]# mysql

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 17

Server version: 10.3.20-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE nova_api;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> CREATE DATABASE nova;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> CREATE DATABASE nova_cell0;

Query OK, 1 row affected (0.001 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' \
    -> IDENTIFIED BY 'NOVA_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' \
    -> IDENTIFIED BY 'NOVA_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' \
    -> IDENTIFIED BY 'NOVA_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' \
    -> IDENTIFIED BY 'NOVA_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' \
    -> IDENTIFIED BY 'NOVA_DBPASS';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' \
    -> IDENTIFIED BY 'NOVA_DBPASS';

Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> quit

Bye
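The glance sync failure earlier in this guide was caused by a database that had not actually been created. A sketch that confirms the databases exist before running `nova-manage`, assuming `mysql` can log in as root without a password prompt, as in the transcripts above; `check_dbs` is a hypothetical helper:

```shell
# Hypothetical helper: report which of the given databases do not exist.
# Returns 0 if all exist, 1 if any is missing, 2 if mysql itself failed.
# Usage: check_dbs DB [DB...]
check_dbs() {
  local existing db missing=0
  # -N drops the column header, -e runs the statement and exits
  existing=$(mysql -N -e 'SHOW DATABASES') || return 2
  for db in "$@"; do
    if ! printf '%s\n' "$existing" | grep -qxF -- "$db"; then
      echo "missing database: $db" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `check_dbs nova_api nova nova_cell0 && su -s /bin/sh -c "nova-manage api_db sync" nova`.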

[root@controller ~]# openstack user create --domain default --password NOVA_PASS nova

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 28d2728c5aff49b5b380d8bc8eb0b0af |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

[root@controller ~]# openstack role add --project service --user nova admin

[root@controller ~]# openstack service create --name nova \

> --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | 1578a0605755416797cf4ea6fbf8b4ac |

| name | nova |

| type | compute |

+-------------+----------------------------------+

[root@controller ~]#

[root@controller ~]# openstack endpoint create --region RegionOne \

> compute public http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 2f8e6cecd95e432281fb53b2e72ba297 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 1578a0605755416797cf4ea6fbf8b4ac |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \

> compute internal http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | f0af0f0a6b0b44008e290f737e142370 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 1578a0605755416797cf4ea6fbf8b4ac |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \

> compute admin http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | b2a9b83a8f504687b801063037bb800f |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 1578a0605755416797cf4ea6fbf8b4ac |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]#

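Install and configure the components

Official documentation:

https://docs.openstack.org/nova/2024.2/install/controller-install-rdo.html

openstack-nova-scheduler: selects the most suitable compute node (hypervisor) to run a new instance, according to the scheduling policy.

openstack-nova-api: provides the API through which users and other components call Nova.

openstack-nova-novncproxy: provides a browser-based VNC console.

openstack-nova-conductor: handles database requests on behalf of the compute nodes so that they never access the database directly; it also coordinates the scheduling instructions sent to the compute nodes.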

[root@controller ~]# yum install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler

[root@controller ~]# cp /etc/nova/nova.conf /etc/nova/nova.conf.bak

[root@controller ~]# grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

[root@controller ~]# vim /etc/nova/nova.conf

[root@controller ~]# cat /etc/nova/nova.conf

[DEFAULT]

my_ip = 11.0.1.10

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:RABBIT_PASS@controller:5672/

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api]

auth_strategy = keystone

[api_database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

[barbican]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

[libvirt]

[metrics]

[mks]

[neutron]

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = PLACEMENT_PASS

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

#The [service_user] settings below require HTTPS (port 443) on the Identity service, so they are left commented out

[service_user]

#send_service_user_token = true

#auth_url = https://controller/identity

#auth_strategy = keystone

#auth_type = password

#project_domain_name = Default

#project_name = service

#user_domain_name = Default

#username = nova

#password = NOVA_PASS

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

[workarounds]

[wsgi]

[xenserver]

[xvp]

[zvm]
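`my_ip` should be this node's management address as listed in /etc/hosts. A sketch that looks it up instead of typing it by hand; `host_ip` is a hypothetical helper, and it assumes the usual "address name [aliases]" hosts-file layout:

```shell
# Hypothetical helper: print the address that a hosts file maps NAME to
# (first match wins); exit status 1 if NAME is not listed.
# Usage: host_ip NAME [HOSTS_FILE]
host_ip() {
  awk -v h="$1" '
    /^[ \t]*#/ { next }                       # skip comment lines
    { for (i = 2; i <= NF; i++) if ($i == h) { print $1; found = 1; exit } }
    END { exit !found }
  ' "${2:-/etc/hosts}"
}
```

Usage: `sed -i "s/^my_ip = .*/my_ip = $(host_ip controller)/" /etc/nova/nova.conf` writes the controller's address from /etc/hosts into nova.conf.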

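#Initialize the databases. With the Stein packages the initialization may fail because the version is too old; switch the package repository to Train and reinstall

[root@controller ~]# yum remove openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler -y #remove the old version

[root@controller ~]# yum install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler -y #install the new version

#The following commands then ran successfully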

[root@controller ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

38d3c733-6de2-4fd6-b75d-05e18c92c948

[root@controller ~]# su -s /bin/sh -c "nova-manage db sync" nova

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1831, u'Duplicate index `block_device_mapping_instance_uuid_virtual_name_device_name_idx`. This is deprecated and will be disallowed in a future release')
  result = self._query(query)

/usr/lib/python2.7/site-packages/pymysql/cursors.py:170: Warning: (1831, u'Duplicate index `uniq_instances0uuid`. This is deprecated and will be disallowed in a future release')
  result = self._query(query)

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@controller/nova_cell0 | False |

| cell1 | 38d3c733-6de2-4fd6-b75d-05e18c92c948 | rabbit://openstack:****@controller:5672/ | mysql+pymysql://nova:****@controller/nova | False |

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

# Start the services and enable them at boot

[root@controller ~]# systemctl enable --now \
    openstack-nova-api.service \
    openstack-nova-scheduler.service \
    openstack-nova-conductor.service \
    openstack-nova-novncproxy.service
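Once the services are running, the State column of openstack compute service list shows whether each one has checked in. The sketch below reads that listing in value format and reports any service that is not "up". nova_services_down is a hypothetical helper name. It assumes the columns arrive in the order Binary, Host, State.

```shell
#!/usr/bin/env bash
# Sketch: report Nova services whose State is not "up".
# stdin: lines of "<binary> <host> <state>", for example from
#   openstack compute service list -f value -c Binary -c Host -c State
# Exit status is 1 if any service is not up, 0 otherwise.
nova_services_down() {
    awk '
        NF >= 3 && $3 != "up" { print $1 " on " $2 " is " $3; bad = 1 }
        END { exit bad }
    '
}
```

Usage: openstack compute service list -f value -c Binary -c Host -c State | nova_services_down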

Verification

[root@controller ~]# openstack compute service list

+----+----------------+------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+----------------+------------+----------+---------+-------+----------------------------+

| 2 | nova-conductor | controller | internal | enabled | up | 2025-08-05T11:38:57.000000 |

| 5 | nova-scheduler | controller | internal | enabled | up | 2025-08-05T11:38:58.000000 |

+----+----------------+------------+----------+---------+-------+----------------------------+

## nova-status upgrade check fails with HTTP 403 (Forbidden); the fix is as follows

[root@controller ~]# nova-status upgrade check

Error:

Traceback (most recent call last):
  File "/usr/lib/python2.7/site-packages/nova/cmd/status.py", line 398, in main
    ret = fn(*fn_args, **fn_kwargs)
  File "/usr/lib/python2.7/site-packages/oslo_upgradecheck/upgradecheck.py", line 102, in check
    result = func(self)
  File "/usr/lib/python2.7/site-packages/nova/cmd/status.py", line 164, in _check_placement
    versions = self._placement_get("/")
  File "/usr/lib/python2.7/site-packages/nova/cmd/status.py", line 154, in _placement_get
    return client.get(path, raise_exc=True).json()
  File "/usr/lib/python2.7/site-packages/keystoneauth1/adapter.py", line 386, in get
    return self.request(url, 'GET', **kwargs)
  File "/usr/lib/python2.7/site-packages/keystoneauth1/adapter.py", line 248, in request
    return self.session.request(url, method, **kwargs)
  File "/usr/lib/python2.7/site-packages/keystoneauth1/session.py", line 961, in request
    raise exceptions.from_response(resp, method, url)
Forbidden: Forbidden (HTTP 403)

## Fix

vim /etc/httpd/conf.d/00-nova-placement-api.conf # append the following

<Directory /usr/bin>
  <IfVersion >= 2.4>
    Require all granted
  </IfVersion>
  <IfVersion < 2.4>
    Order allow,deny
    Allow from all
  </IfVersion>
</Directory>

systemctl restart httpd

# Issue resolved
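The vim edit above can also be done non-interactively. In this sketch the block is appended only when it is not already present, so running it twice does not duplicate the directive. add_placement_dir_access is a hypothetical helper name. It defaults to the file path used in this tutorial; if your placement vhost file is named differently, pass that path instead.

```shell
#!/usr/bin/env bash
# Sketch: append the <Directory /usr/bin> access block to the placement
# vhost file only if it is missing (idempotent).
add_placement_dir_access() {
    local conf="${1:-/etc/httpd/conf.d/00-nova-placement-api.conf}"
    if grep -q '^<Directory /usr/bin>' "$conf" 2>/dev/null; then
        echo "already present: $conf"
        return 0
    fi
    cat >> "$conf" <<'EOF'

<Directory /usr/bin>
  <IfVersion >= 2.4>
    Require all granted
  </IfVersion>
  <IfVersion < 2.4>
    Order allow,deny
    Allow from all
  </IfVersion>
</Directory>
EOF
    echo "appended to: $conf"
}
```

Usage: add_placement_dir_access && systemctl restart httpd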

[root@controller ~]# nova-status upgrade check

+--------------------------------------------------------------------+

| Upgrade Check Results |

+--------------------------------------------------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: No host mappings or compute nodes were found. Remember to |

| run command 'nova-manage cell_v2 discover_hosts' when new |

| compute hosts are deployed. |

+--------------------------------------------------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

| Check: Ironic Flavor Migration |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

| Check: Cinder API |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+
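With several checks in the table, failures are easy to miss by eye. nova-status also reports the overall outcome in its exit status, where 0 means every check passed. The sketch below lists each check whose Result is not "Success". failed_upgrade_checks is a hypothetical helper name, and it assumes the "| Check: ... |" and "| Result: ... |" row layout shown above.

```shell
#!/usr/bin/env bash
# Sketch: print "<check name>: <result>" for every check in the
# nova-status upgrade check table (read from stdin) that did not succeed.
failed_upgrade_checks() {
    awk -F'|' '
        /Check:/ {
            name = $2
            sub(/^[ \t]*Check:[ \t]*/, "", name)
            sub(/[ \t]+$/, "", name)
        }
        /Result:/ {
            res = $2
            sub(/^[ \t]*Result:[ \t]*/, "", res)
            sub(/[ \t]+$/, "", res)
            if (res != "Success") print name ": " res
        }
    '
}
```

Usage: nova-status upgrade check | failed_upgrade_checks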

Nova service on the compute node

# Install the service

[root@compute1 ~]# sudo yum install openstack-nova-compute
......
Error: Package: python2-qpid-proton-0.22.0-1.el7.x86_64 (openstack)
           Requires: qpid-proton-c(x86-64) = 0.22.0-1.el7
           Installed: qpid-proton-c-0.37.0-1.el7.x86_64 (@epel)
               qpid-proton-c(x86-64) = 0.37.0-1.el7
           Available: qpid-proton-c-0.14.0-2.el7.x86_64 (extras)
               qpid-proton-c(x86-64) = 0.14.0-2.el7
           Available: qpid-proton-c-0.22.0-1.el7.x86_64 (openstack)
               qpid-proton-c(x86-64) = 0.22.0-1.el7
 You could try using --skip-broken to work around the problem
 You could try running: rpm -Va --nofiles --nodigest

# Downgrade qpid-proton-c (installed from EPEL) to the 0.22.0 build that python2-qpid-proton requires, then retry the install

[root@compute1 ~]# sudo yum downgrade qpid-proton-c-0.22.0-1.el7

[root@compute1 yum.repos.d]# yum install openstack-nova-compute

[root@compute1 ~]# cp /etc/nova/nova.conf /etc/nova/nova.conf.bak

[root@compute1 ~]# grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf
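The filter '^$|#' drops blank lines, but it also drops any line that merely contains "#", for example a password with "#" in it. The sketch below removes only blank lines and whole-line comments. strip_config_comments is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: remove blank lines and whole-line '#' comments (optionally
# indented), keeping lines that only contain '#' inside a value.
strip_config_comments() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}
```

Usage: strip_config_comments /etc/nova/nova.conf.bak > /etc/nova/nova.conf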

[root@compute1 ~]# vim /etc/nova/nova.conf # edit the configuration file step by step as follows

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:RABBIT_PASS@controller

# Management IP address of this compute node (compute1 is 11.0.1.11 in the host plan)

my_ip = 11.0.1.11

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api]

auth_strategy = keystone

[api_database]

[barbican]

[cache]

[cells]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[database]

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

[libvirt]

[metrics]

[mks]

[neutron]

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = PLACEMENT_PASS

[placement_database]

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

# The [service_user] settings below require HTTPS to be configured, with port 443 open

[service_user]

#send_service_user_token = true

#auth_url = https://controller/identity

#auth_strategy = keystone

#auth_type = password

#project_domain_name = Default

#project_name = service

#user_domain_name = Default

#username = nova

#password = NOVA_PASS

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

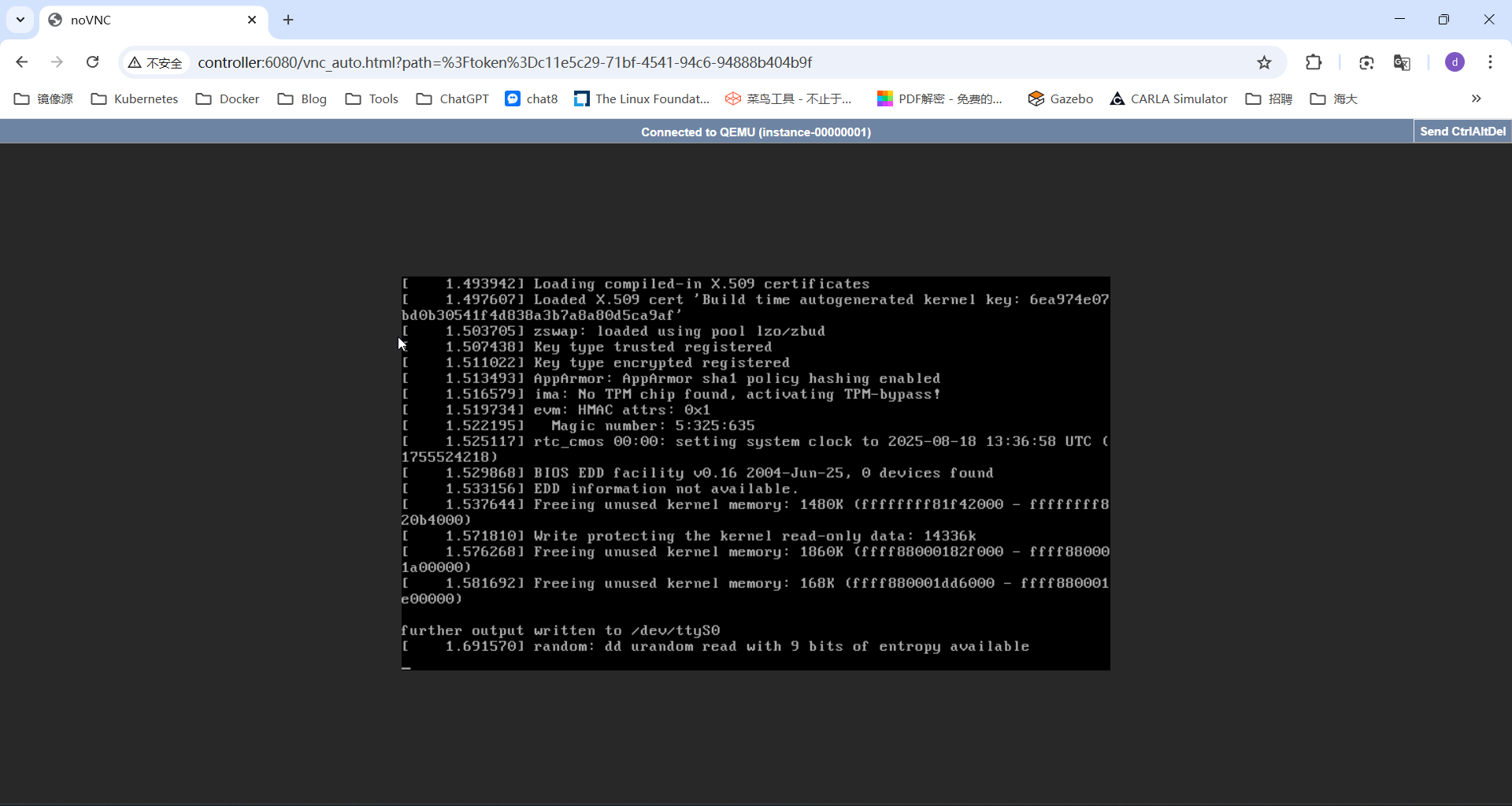

novncproxy_base_url = http://controller:6080/vnc_auto.html

[workarounds]

[wsgi]

[xenserver]

[xvp]

[zvm]

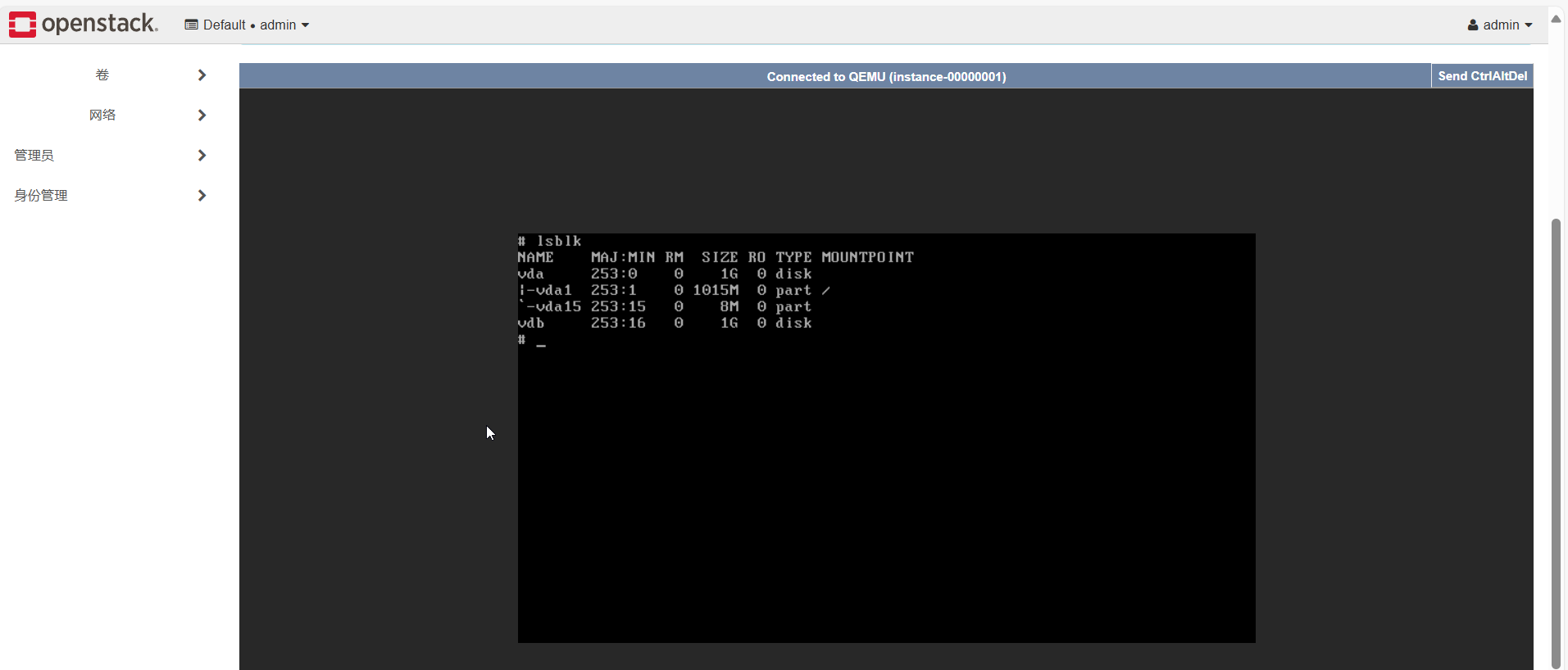
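After editing, read back a few values to catch typos, such as my_ip and the [vnc] addresses. The sketch below prints the value of one key in one section of an INI-style file. ini_get is a hypothetical helper name. It is a minimal parser under stated assumptions: it does not handle multi-line values, and the first match wins.

```shell
#!/usr/bin/env bash
# Sketch: print the value of <key> in [<section>] of an INI-style file.
# Commented-out keys ("#key = ...") are ignored; no multi-line values.
ini_get() {
    local file="$1" section="$2" key="$3"
    awk -v want="[$section]" -v key="$key" '
        /^[ \t]*\[/ { in_sec = ($0 == want); next }   # track current section
        in_sec {
            line = $0
            sub(/^[ \t]+/, "", line)
            if (index(line, key) == 1) {
                rest = substr(line, length(key) + 1)
                if (rest ~ /^[ \t]*=/) {               # exact key, not a prefix
                    sub(/^[ \t]*=[ \t]*/, "", rest)
                    sub(/[ \t]+$/, "", rest)
                    print rest
                    exit
                }
            }
        }
    ' "$file"
}
```

Usage: ini_get /etc/nova/nova.conf DEFAULT my_ip, or ini_get /etc/nova/nova.conf vnc server_listen.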

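# Check whether the CPU supports hardware virtualization acceleration (vmx = Intel VT-x, svm = AMD-V)

[root@compute1 ~]# egrep -c '(vmx|svm)' /proc/cpuinfo

2

# A non-zero count means hardware acceleration is available; if it returns 0, set virt_type = qemu in the [libvirt] section of /etc/nova/nova.conf

# Enable the services at boot and start them now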

[root@compute1 ~]# systemctl enable --now libvirtd.service openstack-nova-compute.service

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-compute.service to /usr/lib/systemd/system/openstack-nova-compute.service.
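The CPU flag check earlier in this step decides which hypervisor libvirt should use. If vmx or svm is present, KVM acceleration is available. Otherwise the install guide's advice is to set virt_type = qemu under [libvirt]. The sketch below turns that decision into a function. suggest_virt_type is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: suggest a [libvirt] virt_type from CPU flags.
# vmx (Intel VT-x) or svm (AMD-V) present -> kvm; otherwise -> qemu.
suggest_virt_type() {
    local cpuinfo="${1:-/proc/cpuinfo}" count
    # grep -c prints 0 and exits 1 when nothing matches; keep the 0.
    count=$(grep -Ec '(vmx|svm)' "$cpuinfo" || true)
    if [ "${count:-0}" -gt 0 ]; then
        echo kvm
    else
        echo qemu
    fi
}
```

If it prints qemu, add virt_type = qemu under [libvirt] and restart openstack-nova-compute.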

Add the compute node to the cell database

# Run the following commands on the controller node

[root@controller ~]# openstack compute service list --service nova-compute

+----+--------------+----------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+--------------+----------+------+---------+-------+----------------------------+

| 7 | nova-compute | compute1 | nova | enabled | up | 2025-08-05T13:56:44.000000 |

+----+--------------+----------+------+---------+-------+----------------------------+
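discover_hosts can only map compute hosts that have already registered with the cell database, so it helps to wait until nova-compute reports "up" first. The sketch below polls openstack compute service list for one host. wait_for_compute is a hypothetical helper name. The retry count and delay defaults are arbitrary assumptions.

```shell
#!/usr/bin/env bash
# Sketch: wait until nova-compute on <host> reports "up".
# Arguments: host [tries=30] [delay_seconds=10]; returns 1 on timeout.
wait_for_compute() {
    local host="$1" tries="${2:-30}" delay="${3:-10}" i state
    for ((i = 1; i <= tries; i++)); do
        state=$(openstack compute service list --service nova-compute \
                    -f value -c Host -c State |
                awk -v h="$host" '$1 == h { print $2 }')
        if [ "$state" = "up" ]; then
            echo "$host is up"
            return 0
        fi
        sleep "$delay"
    done
    echo "$host not up after $tries attempts" >&2
    return 1
}
```

Usage: wait_for_compute compute1 && su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova. As an alternative to running discover_hosts by hand, set discover_hosts_in_cells_interval (in seconds) in the [scheduler] section of the controller's nova.conf to discover new hosts periodically.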

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': 38d3c733-6de2-4fd6-b75d-05e18c92c948

Checking host mapping for compute host 'compute1': 534c3790-2e61-4544-993e-4cde6f94c2f6

Creating host mapping for compute host 'compute1': 534c3790-2e61-4544-993e-4cde6f94c2f6

Found 1 unmapped computes in cell: 38d3c733-6de2-4fd6-b75d-05e18c92c948

# Verify

[root@controller ~]# openstack compute service list

+----+----------------+------------+----------+---------+-------+----------------------------+

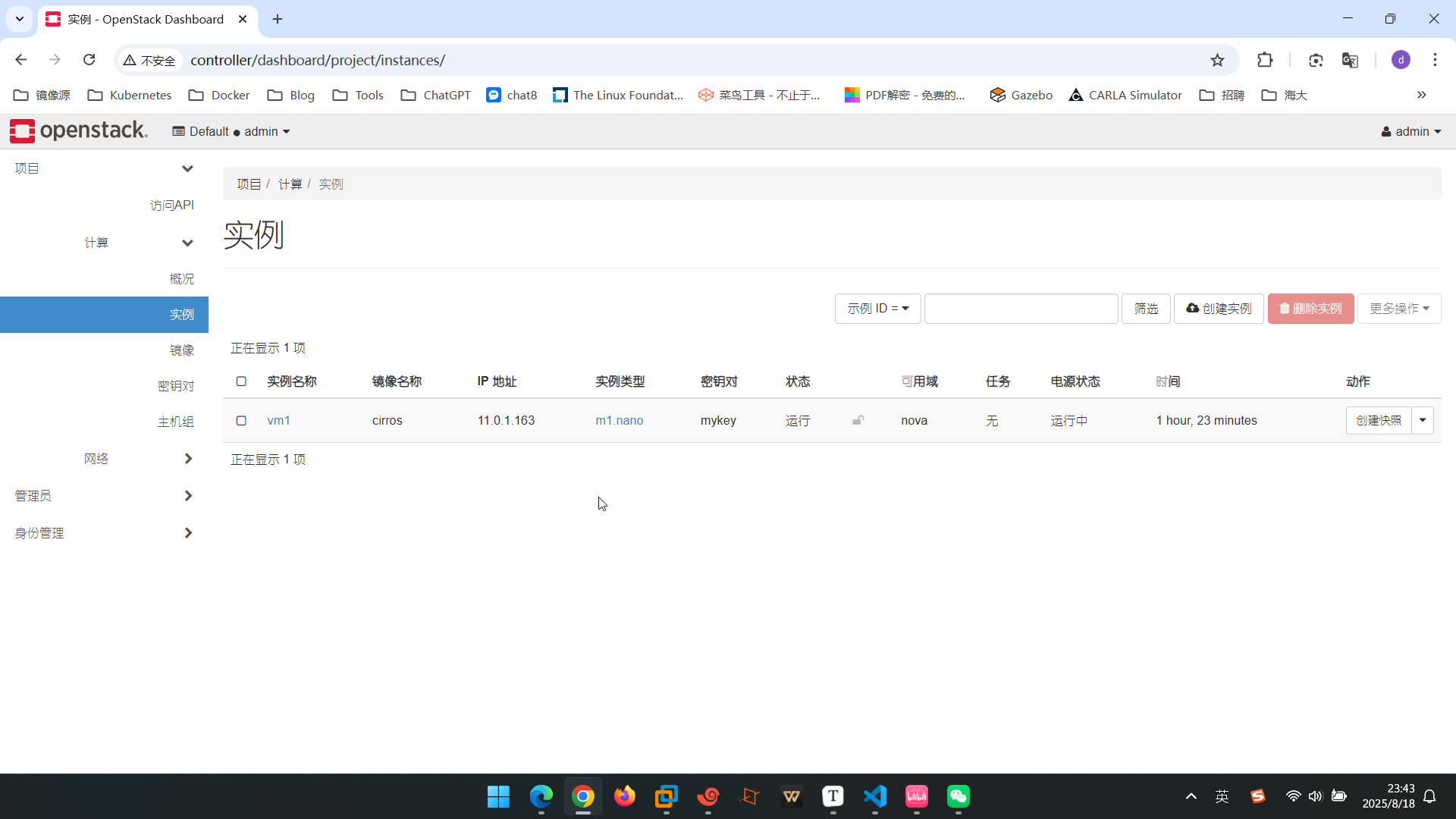

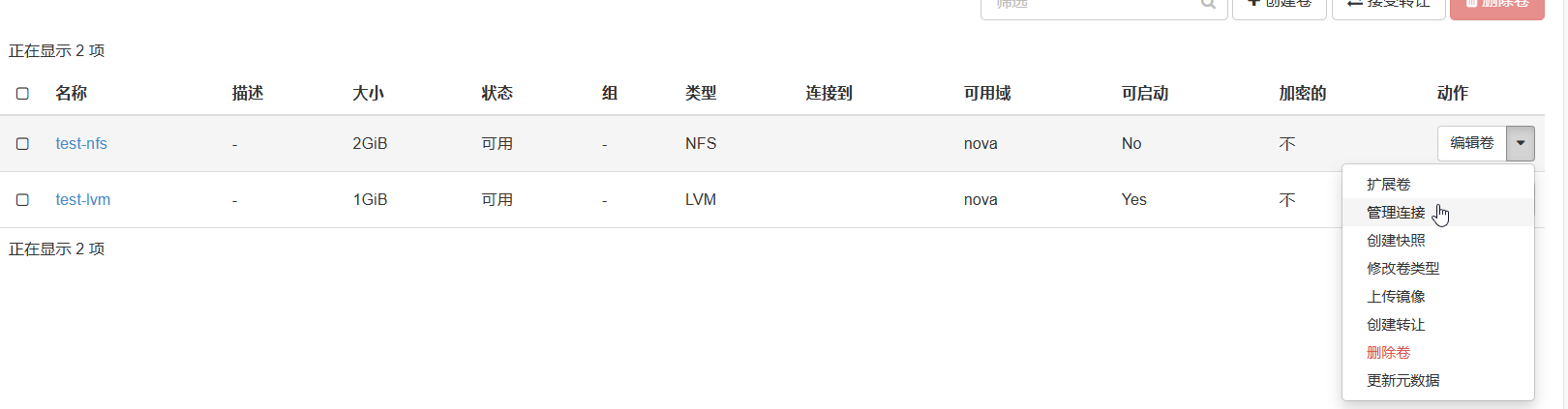

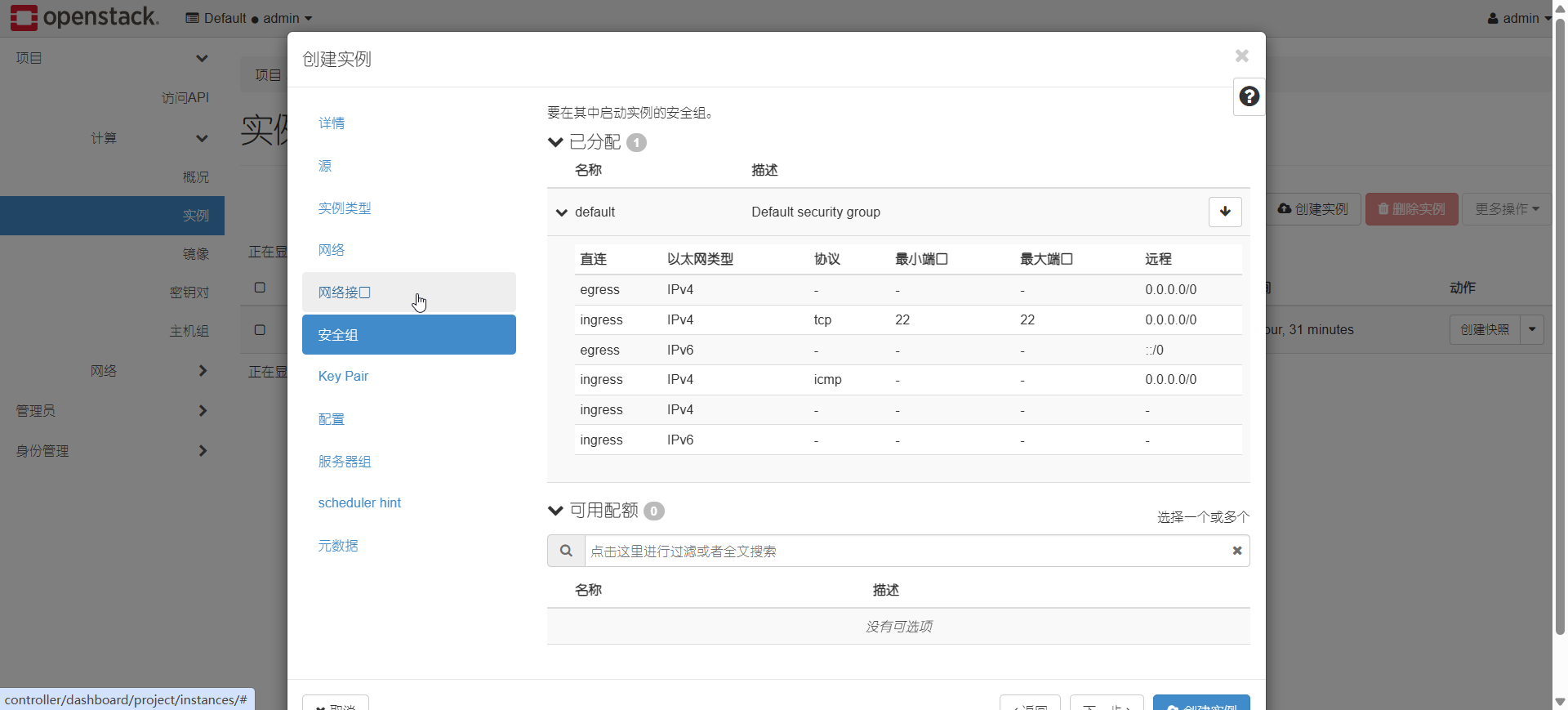

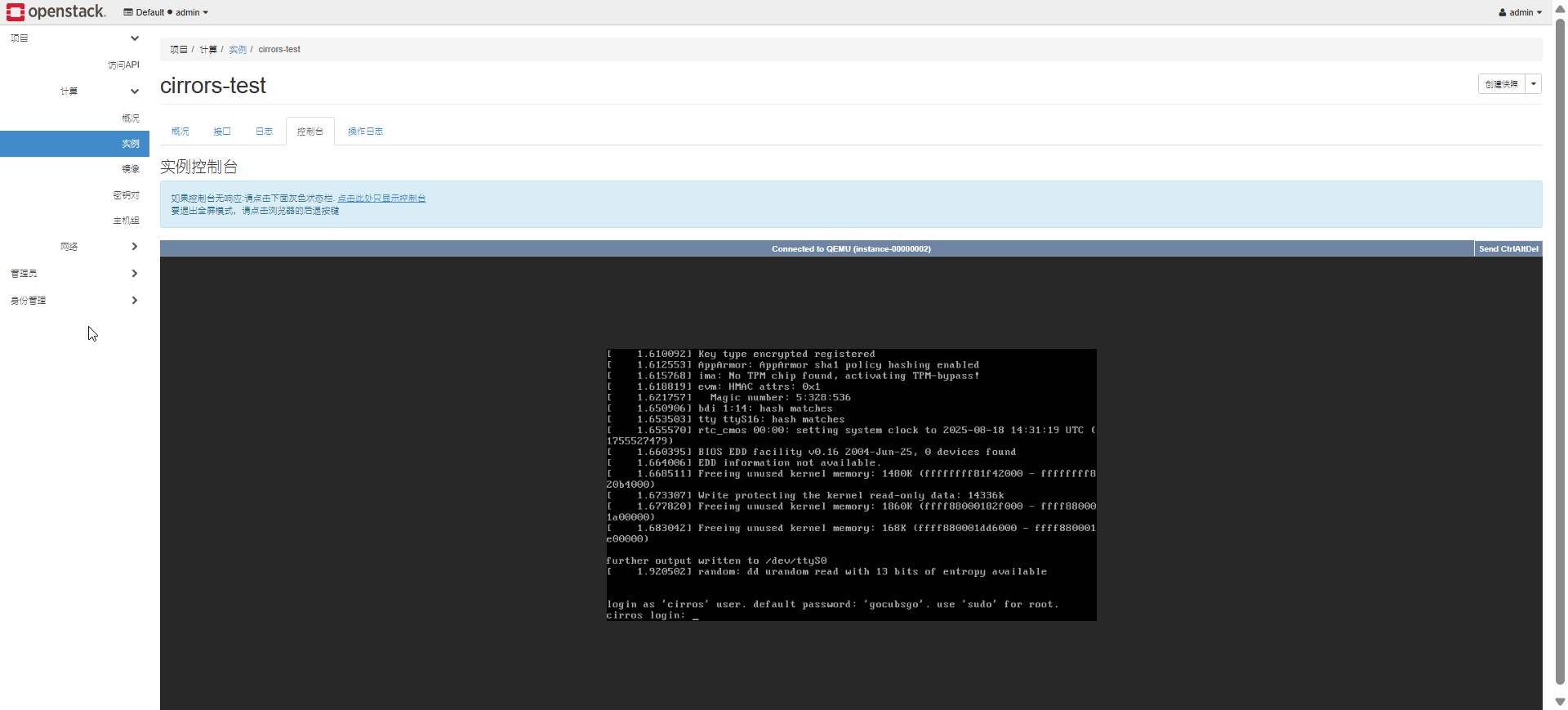

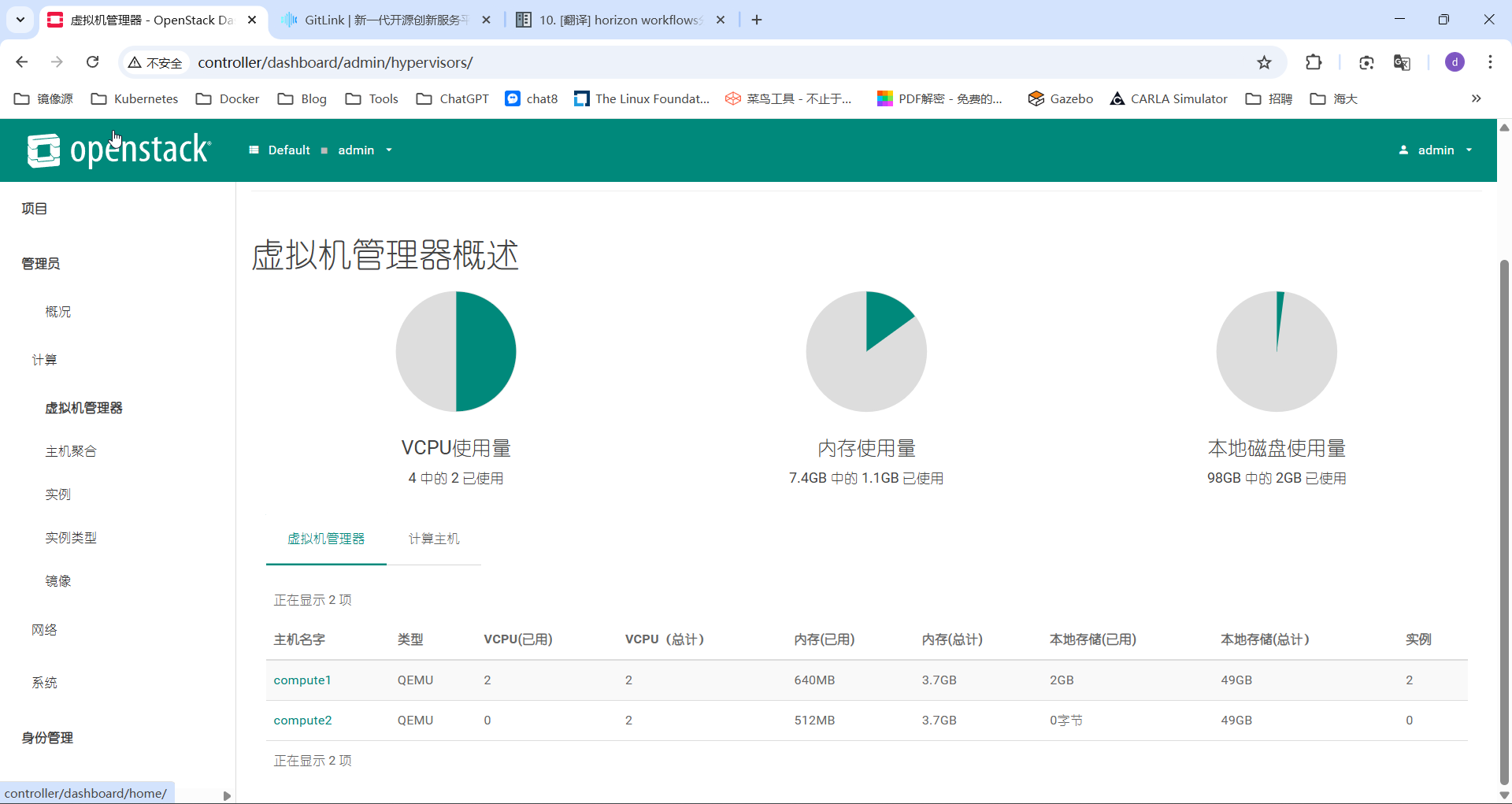

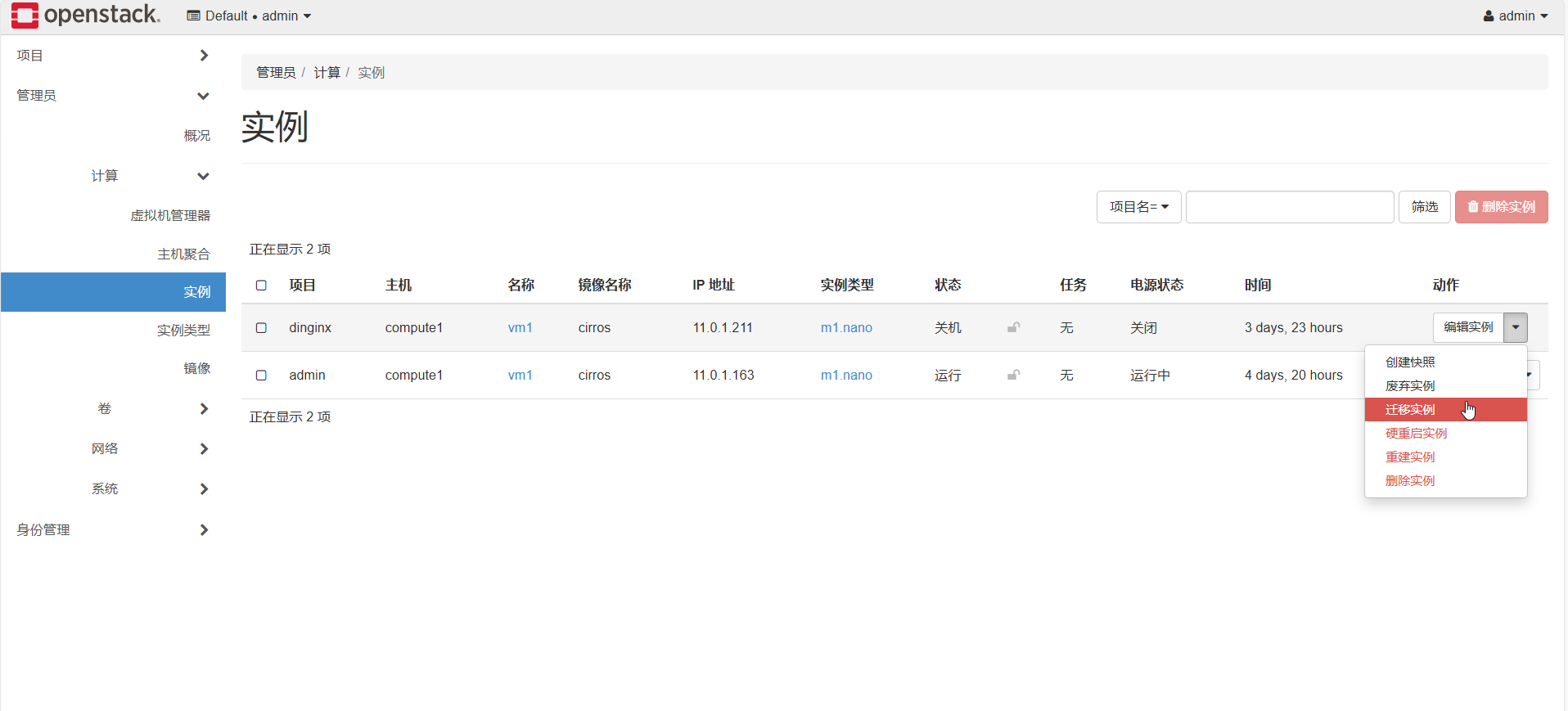

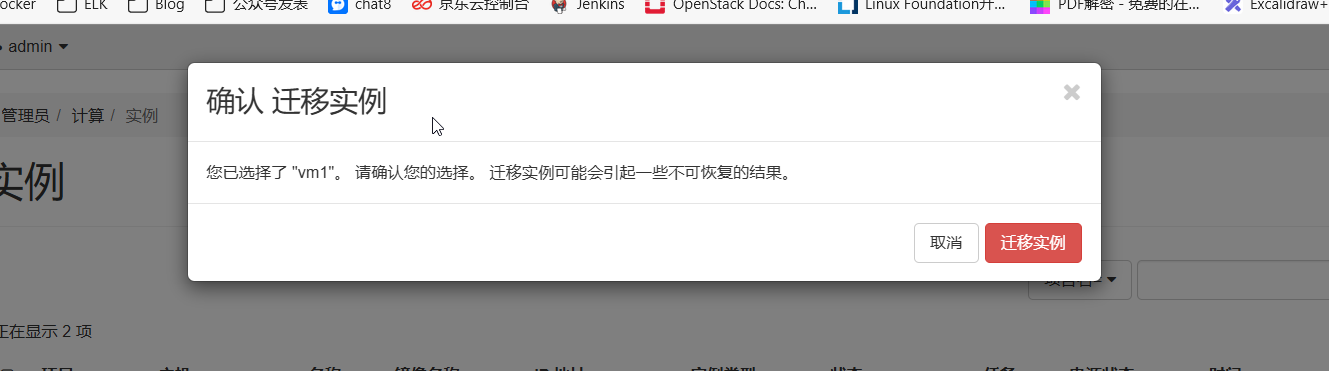

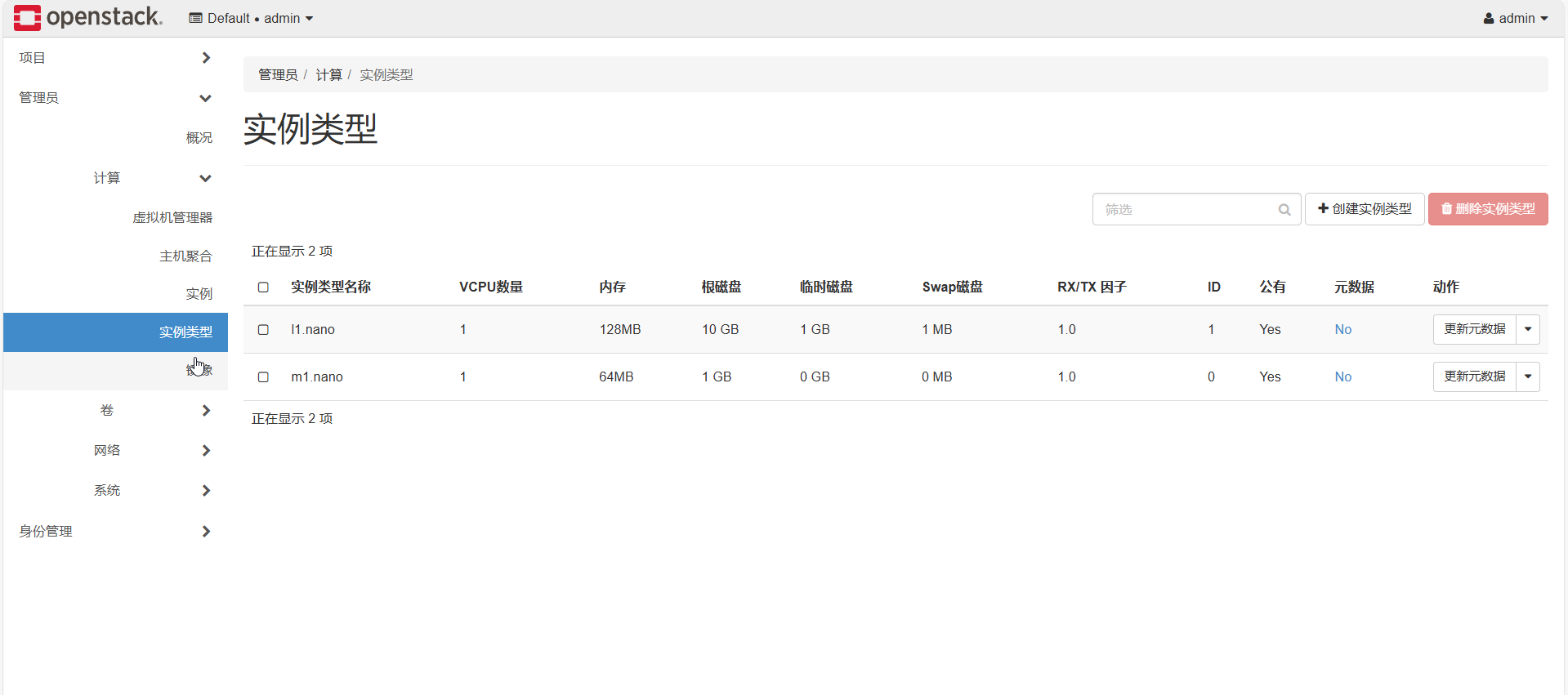

| ID | Binary | Host | Zone | Status | State | Updated At |