pip install gptqmodel fails with: error: subprocess-exited-with-error

The quantization export feature in LLamaFactory requires gptqmodel>=2.0.0, but installing it failed. The full error output:

    error: subprocess-exited-with-error

    × Getting requirements to build wheel did not run successfully.
    │ exit code: 1
    ╰─> [30 lines of output]
        Traceback (most recent call last):
          File "<string>", line 168, in _detect_torch_version
          File "/root/anaconda3/envs/llamaf_env_new/lib/python3.10/importlib/metadata/__init__.py", line 996, in version
            return distribution(distribution_name).version
          File "/root/anaconda3/envs/llamaf_env_new/lib/python3.10/importlib/metadata/__init__.py", line 969, in distribution
            return Distribution.from_name(distribution_name)
          File "/root/anaconda3/envs/llamaf_env_new/lib/python3.10/importlib/metadata/__init__.py", line 548, in from_name
            raise PackageNotFoundError(name)
        importlib.metadata.PackageNotFoundError: No package metadata was found for torch

        During handling of the above exception, another exception occurred:

        Traceback (most recent call last):
          File "/root/anaconda3/envs/llamaf_env_new/lib/python3.10/site-packages/pip/_vendor/pyproject_hooks/_in_process/_in_process.py", line 389, in <module>
            main()
          File "/root/anaconda3/envs/llamaf_env_new/lib/python3.10/site-packages/pip/_vendor/pyproject_hooks/_in_process/_in_process.py", line 373, in main
            json_out["return_val"] = hook(**hook_input["kwargs"])
          File "/root/anaconda3/envs/llamaf_env_new/lib/python3.10/site-packages/pip/_vendor/pyproject_hooks/_in_process/_in_process.py", line 143, in get_requires_for_build_wheel
            return hook(config_settings)
          File "/tmp/pip-build-env-rpgqnoz1/overlay/lib/python3.10/site-packages/setuptools/build_meta.py", line 331, in get_requires_for_build_wheel
            return self._get_build_requires(config_settings, requirements=[])
          File "/tmp/pip-build-env-rpgqnoz1/overlay/lib/python3.10/site-packages/setuptools/build_meta.py", line 301, in _get_build_requires
            self.run_setup()
          File "/tmp/pip-build-env-rpgqnoz1/overlay/lib/python3.10/site-packages/setuptools/build_meta.py", line 512, in run_setup
            super().run_setup(setup_script=setup_script)
          File "/tmp/pip-build-env-rpgqnoz1/overlay/lib/python3.10/site-packages/setuptools/build_meta.py", line 317, in run_setup
            exec(code, locals())
          File "<string>", line 232, in <module>
          File "<string>", line 172, in _detect_torch_version
        Exception: Unable to detect torch version via uv/pip/conda/importlib. Please install torch >= 2.7.1
        [end of output]

    note: This error originates from a subprocess, and is likely not a problem with pip.

    error: subprocess-exited-with-error

    × Getting requirements to build wheel did not run successfully.
    │ exit code: 1
    ╰─> See above for output.

    note: This error originates from a subprocess, and is likely not a problem with pip.

I went through quite a few tutorials. Some of them suggest running:

    pip cache purge
    pip install --upgrade setuptools wheel
    # then run:
    pip install gptqmodel

and report that the install then succeeds. That did not seem to help in my case; I still got the same error.

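My torch was already installed correctly, and reinstalling torch made no difference either. In the end I tracked down the prebuilt package, downloaded it for offline use, and installed it successfully that way.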
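The traceback above shows the build script looking torch up through `importlib.metadata` and hitting `PackageNotFoundError`. The sketch below does the same kind of lookup with the environment's own interpreter, to see whether torch's package metadata is visible there. The helper name `installed_version` is made up for illustration; this is not GPTQModel's actual setup code.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if no metadata is found."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        # Same exception type that appears in the traceback for "torch".
        return None


if __name__ == "__main__":
    for name in ("torch", "gptqmodel"):
        version = installed_version(name)
        print(f"{name}: {version if version else 'no package metadata found'}")
```

Note that the setuptools frames in the traceback live under `/tmp/pip-build-env-rpgqnoz1/overlay`, which suggests the build ran in pip's temporary build environment. A version printed here therefore does not necessarily reflect what the build step saw. pip's `--no-build-isolation` option makes the build use the active environment instead; whether that resolves this particular error was not tested here.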

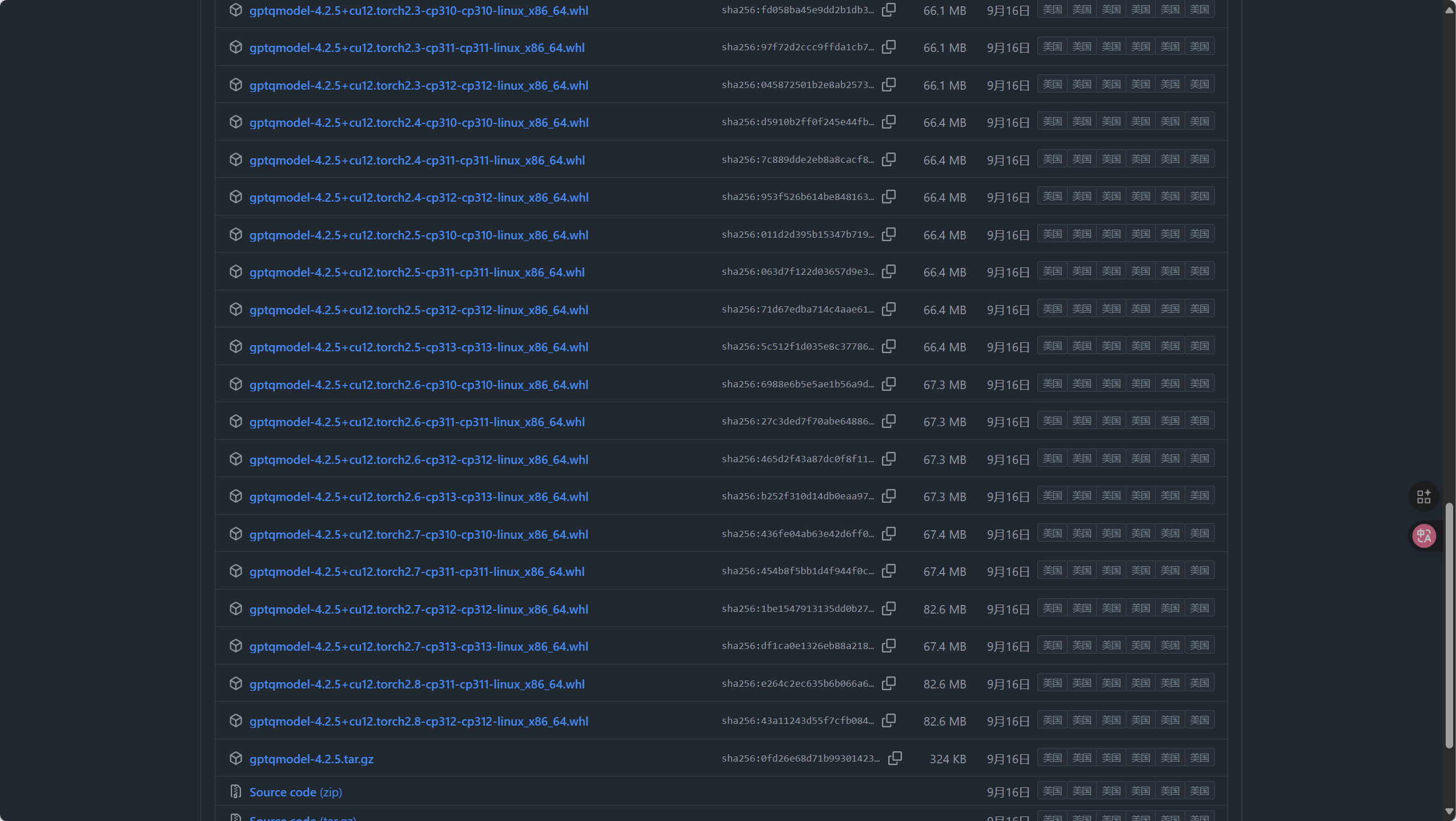

Release GPT-QModel v4.2.5 · ModelCloud/GPTQModel

On the release page above, find the build that matches your environment:

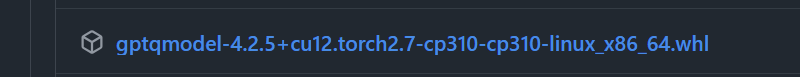

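My virtual environment uses Python 3.10, so I picked this build: gptqmodel-4.2.5+cu12.torch2.7-cp310-cp310-linux_x86_64.whl

Each build needs matching versions of its dependencies, and these are spelled out in the file name. For mine, that is:

cu12, torch2.7, Python 3.10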
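To make the naming scheme concrete, here is a small sketch that splits the wheel file name used in this post into its parts and checks the Python tag against the running interpreter. The function names are illustrative. The parser assumes the common five-part wheel name (name, version, Python tag, ABI tag, platform tag) with no optional build tag.

```python
import sys


def parse_wheel_name(filename: str) -> dict:
    """Split a wheel file name into name, version, local labels, and tags."""
    stem = filename[: -len(".whl")] if filename.endswith(".whl") else filename
    # Assumption: no optional build tag, so exactly five dash-separated parts.
    name, version, python_tag, abi_tag, platform_tag = stem.split("-")
    # Everything after "+" is the local version label, e.g. "cu12.torch2.7".
    public, _, local = version.partition("+")
    return {
        "name": name,
        "version": public,
        "local": local.split(".", 1) if local else [],
        "python_tag": python_tag,
        "abi_tag": abi_tag,
        "platform_tag": platform_tag,
    }


def matches_interpreter(python_tag: str) -> bool:
    """True if a CPython tag such as 'cp310' matches the running interpreter."""
    return python_tag == f"cp{sys.version_info.major}{sys.version_info.minor}"


if __name__ == "__main__":
    info = parse_wheel_name(
        "gptqmodel-4.2.5+cu12.torch2.7-cp310-cp310-linux_x86_64.whl"
    )
    print(info)
    print("matches this Python:", matches_interpreter(info["python_tag"]))
```

Running it inside the target virtual environment gives a quick sanity check before downloading a wheel that pip would later reject as unsupported on this platform.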

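The command to install the matching versions of torch, torchvision, and torchaudio is:

    pip install torch==2.7.0+cu126 torchvision==0.22.0+cu126 torchaudio==2.7.0+cu126 --index-url https://download.pytorch.org/whl/cu126

I use this combination a lot and it has been fairly stable. Downloading with this command needs a proxy, though. If your network cannot reach that index, drop the trailing "--index-url https://download.pytorch.org/whl/cu126" and try installing from the Tsinghua mirror instead. In that case you will probably also need to drop the "+cu126" suffixes, since PyPI-style mirrors generally do not carry local-version builds.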
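After installing, it is worth confirming that the torch build in the environment lines up with the wheel's "cu12" and "torch2.7" labels. The sketch below is one way to do that. `build_matches` is a hypothetical helper, and the default targets are simply the values from this post. It reads `torch.__version__` and `torch.version.cuda`, the latter being `None` on CPU-only builds.

```python
from typing import Optional


def build_matches(
    torch_version: str,
    cuda_version: Optional[str],
    want_torch: str = "2.7",
    want_cuda_major: str = "12",
) -> bool:
    """Check a torch version string and CUDA version against the wheel's labels."""
    # "2.7.0+cu126" -> "2.7.0" -> "2.7"
    public = torch_version.split("+")[0]
    major_minor = ".".join(public.split(".")[:2])
    # cuda_version is None when torch was built without CUDA support.
    cuda_ok = cuda_version is not None and cuda_version.split(".")[0] == want_cuda_major
    return major_minor == want_torch and cuda_ok


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("torch is not importable in this environment")
    else:
        print("torch:", torch.__version__, "| CUDA:", torch.version.cuda)
        print("matches wheel labels:", build_matches(torch.__version__, torch.version.cuda))
```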

Once the offline package has been downloaded, copy it to a location on the server and install it:

    pip install /your/path/gptqmodel-4.2.5+cu12.torch2.7-cp310-cp310-linux_x86_64.whl

Installed successfully~