A Walkthrough of Common Functions in the Transformers Package

1. AutoTokenizer.from_pretrained(model_path, use_fast=False)

1.1. First, the config file is read: model_path/tokenizer_config.json

The file name tokenizer_config.json is defined in transformers/tokenization_utils_base.py:

    # Slow tokenizers used to be saved in three separated files
    SPECIAL_TOKENS_MAP_FILE = "special_tokens_map.json"
    ADDED_TOKENS_FILE = "added_tokens.json"
    TOKENIZER_CONFIG_FILE = "tokenizer_config.json"

It is then read in transformers/models/auto/tokenization_auto.py:

    commit_hash = kwargs.get("_commit_hash", None)
    resolved_config_file = cached_file(
        pretrained_model_name_or_path,
        TOKENIZER_CONFIG_FILE,
        cache_dir=cache_dir,
        force_download=force_download,
        resume_download=resume_download,
        proxies=proxies,
        use_auth_token=use_auth_token,
        revision=revision,
        local_files_only=local_files_only,
        subfolder=subfolder,
        _raise_exceptions_for_missing_entries=False,
        _raise_exceptions_for_connection_errors=False,
        _commit_hash=commit_hash,
    )

cached_file is defined in transformers/utils/hub.py:

    def cached_file(
        path_or_repo_id: Union[str, os.PathLike],
        filename: str,
        cache_dir: Optional[Union[str, os.PathLike]] = None,
        force_download: bool = False,
        resume_download: bool = False,
        proxies: Optional[Dict[str, str]] = None,
        use_auth_token: Optional[Union[bool, str]] = None,
        revision: Optional[str] = None,
        local_files_only: bool = False,
        subfolder: str = "",
        repo_type: Optional[str] = None,
        user_agent: Optional[Union[str, Dict[str, str]]] = None,
        _raise_exceptions_for_missing_entries: bool = True,
        _raise_exceptions_for_connection_errors: bool = True,
        _commit_hash: Optional[str] = None,
    ):
        if is_offline_mode() and not local_files_only:
            logger.info("Offline mode: forcing local_files_only=True")
            local_files_only = True
        if subfolder is None:
            subfolder = ""
        path_or_repo_id = str(path_or_repo_id)
        full_filename = os.path.join(subfolder, filename)
        if os.path.isdir(path_or_repo_id):
            resolved_file = os.path.join(os.path.join(path_or_repo_id, subfolder), filename)
            if not os.path.isfile(resolved_file):
                if _raise_exceptions_for_missing_entries:
                    raise EnvironmentError(
                        f"{path_or_repo_id} does not appear to have a file named {full_filename}. Checkout "
                        f"'https://huggingface.co/{path_or_repo_id}/{revision}' for available files."
                    )
                else:
                    return None
            return resolved_file

So the configuration used by AutoTokenizer comes from model_path/tokenizer_config.json.
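
The local-directory branch of this lookup can be sketched with the standard library alone. This is a simplified illustration, not the Transformers implementation: `resolve_local_file` and its `raise_on_missing` flag are hypothetical names, and a temporary directory stands in for model_path.

```python
import json
import os
import tempfile
from typing import Optional


def resolve_local_file(model_dir: str, filename: str, subfolder: str = "",
                       raise_on_missing: bool = True) -> Optional[str]:
    """Return the path of `filename` inside `model_dir`, or None if it is absent."""
    candidate = os.path.join(model_dir, subfolder, filename)
    if os.path.isfile(candidate):
        return candidate
    if raise_on_missing:
        raise EnvironmentError(f"{model_dir} does not appear to have a file named {filename}.")
    return None


# A temporary directory plays the role of model_path (assumption for the demo).
with tempfile.TemporaryDirectory() as model_dir:
    with open(os.path.join(model_dir, "tokenizer_config.json"), "w") as f:
        json.dump({"tokenizer_class": "LlamaTokenizer"}, f)

    found = resolve_local_file(model_dir, "tokenizer_config.json")
    missing = resolve_local_file(model_dir, "added_tokens.json", raise_on_missing=False)

    found_name = os.path.basename(found)
    with open(found, "r") as f:
        tokenizer_class_name = json.load(f)["tokenizer_class"]

print(found_name, missing, tokenizer_class_name)
```

Passing `raise_on_missing=False` mirrors the way the tokenizer lookup above tolerates a missing file and simply gets `None` back.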

One of the values defined there is:

    "tokenizer_class": "LlamaTokenizer",

This value is then used in transformers/models/auto/tokenization_auto.py:

    tokenizer_class = tokenizer_class_from_name(tokenizer_class_candidate)

    def tokenizer_class_from_name(class_name: str):
        if class_name == "PreTrainedTokenizerFast":
            return PreTrainedTokenizerFast
        for module_name, tokenizers in TOKENIZER_MAPPING_NAMES.items():
            if class_name in tokenizers:
                module_name = model_type_to_module_name(module_name)
                module = importlib.import_module(f".{module_name}", "transformers.models")
                try:
                    return getattr(module, class_name)
                except AttributeError:
                    continue
        return None

The returned class is <class 'transformers.models.llama.tokenization_llama.LlamaTokenizer'>.
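
The name-to-class lookup follows a common pattern: find the module that declares the name, import it lazily, then fetch the attribute. Below is a minimal sketch of that pattern using standard-library modules only; `CLASS_REGISTRY` and `class_from_name` are hypothetical stand-ins for the mapping and function quoted above.

```python
import importlib
from typing import Optional

# Hypothetical registry: module name -> class names that module provides.
CLASS_REGISTRY = {
    "collections": ["OrderedDict", "Counter"],
    "fractions": ["Fraction"],
}


def class_from_name(class_name: str) -> Optional[type]:
    """Import the module that declares `class_name` and return the class object."""
    for module_name, class_names in CLASS_REGISTRY.items():
        if class_name in class_names:
            module = importlib.import_module(module_name)
            try:
                return getattr(module, class_name)
            except AttributeError:
                continue
    return None


counter_cls = class_from_name("Counter")
unknown_cls = class_from_name("LlamaTokenizer")  # not in this toy registry
print(counter_cls, unknown_cls)
```

Returning `None` for an unknown name leaves the decision about how to fail to the caller.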

from_pretrained is then called on that class in transformers/models/auto/tokenization_auto.py:

    if tokenizer_class is None:
        tokenizer_class_candidate = config_tokenizer_class
        tokenizer_class = tokenizer_class_from_name(tokenizer_class_candidate)
    return tokenizer_class.from_pretrained(pretrained_model_name_or_path, *inputs, **kwargs)

Next, the candidate vocabulary files are collected:

    # At this point pretrained_model_name_or_path is either a directory or a model identifier name
    additional_files_names = {
        "added_tokens_file": ADDED_TOKENS_FILE,
        "special_tokens_map_file": SPECIAL_TOKENS_MAP_FILE,
        "tokenizer_config_file": TOKENIZER_CONFIG_FILE,
    }
    vocab_files = {**cls.vocab_files_names, **additional_files_names}

Here cls is <class 'transformers.models.llama.tokenization_llama.LlamaTokenizer'>, so:

    vocab_files = {
        'vocab_file': 'tokenizer.model',
        'added_tokens_file': 'added_tokens.json',
        'special_tokens_map_file': 'special_tokens_map.json',
        'tokenizer_config_file': 'tokenizer_config.json'
    }

The absolute path of each of these files is then resolved. A file that does not exist resolves to None:

    resolved_vocab_files = {
        'vocab_file': 'model_path/tokenizer.model',
        'added_tokens_file': None,
        'special_tokens_map_file': 'model_path/special_tokens_map.json',
        'tokenizer_config_file': 'model_path/tokenizer_config.json'
    }
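
The merge-then-resolve step can be reproduced in a few lines. This is a sketch under the assumption that the model lives in a local directory; `resolve_vocab_files` is a hypothetical helper, and the empty files created in the temporary directory only stand in for real tokenizer files.

```python
import os
import tempfile
from typing import Dict, Optional


def resolve_vocab_files(model_dir: str, vocab_files: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Map each file id to its path under `model_dir`, or to None if the file is missing."""
    resolved = {}
    for file_id, file_name in vocab_files.items():
        path = os.path.join(model_dir, file_name)
        resolved[file_id] = path if os.path.isfile(path) else None
    return resolved


# Class-specific files first, then the files every slow tokenizer may have.
class_vocab_files = {"vocab_file": "tokenizer.model"}
additional_files = {
    "added_tokens_file": "added_tokens.json",
    "special_tokens_map_file": "special_tokens_map.json",
    "tokenizer_config_file": "tokenizer_config.json",
}
vocab_files = {**class_vocab_files, **additional_files}

with tempfile.TemporaryDirectory() as model_dir:
    # Create every file except added_tokens.json, as in the example above.
    for name in ("tokenizer.model", "special_tokens_map.json", "tokenizer_config.json"):
        open(os.path.join(model_dir, name), "w").close()
    resolved = resolve_vocab_files(model_dir, vocab_files)
    present = sorted(key for key, value in resolved.items() if value is not None)

print(present)
```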

The resolved files are then parsed:

    return cls._from_pretrained(
        resolved_vocab_files,
        pretrained_model_name_or_path,
        init_configuration,
        *init_inputs,
        use_auth_token=token,
        cache_dir=cache_dir,
        local_files_only=local_files_only,
        _commit_hash=commit_hash,
        _is_local=is_local,
        **kwargs,
    )

Here cls is <class 'transformers.models.llama.tokenization_llama.LlamaTokenizer'>, and the class is initialized with the following parameters:

    {
        "add_bos_token": true,
        "add_eos_token": false,
        "bos_token": "<s>",
        "clean_up_tokenization_spaces": false,
        "eos_token": "</s>",
        "legacy": false,
        "model_max_length": 2048,
        "pad_token": null,
        "padding_side": "right",
        "sp_model_kwargs": {},
        "unk_token": "<unk>",
        "vocab_file": "./work_dirs/llama-vid/llama-vid-7b-full-224-video-fps-1/tokenizer.model",
        "special_tokens_map_file": "./work_dirs/llama-vid/llama-vid-7b-full-224-video-fps-1/special_tokens_map.json",
        "name_or_path": "./work_dirs/llama-vid/llama-vid-7b-full-224-video-fps-1"
    }

    try:
        tokenizer = cls(*init_inputs, **init_kwargs)
    except OSError:
        raise OSError(
            "Unable to load vocabulary from file. "
            "Please check that the provided vocabulary is accessible and not corrupted."
        )

The vocabulary is then loaded during initialization in transformers/models/llama/tokenization_llama.py:

    import sentencepiece as spm
    self.sp_model = spm.SentencePieceProcessor(**self.sp_model_kwargs)
    self.sp_model.Load(vocab_file)

where vocab_file='tokenizer.model'.

Finally, a tokenizer object is returned:

    LlamaTokenizer(
        name_or_path='./work_dirs/llama-vid/llama-vid-7b-full-224-video-fps-1',
        vocab_size=32000,
        model_max_length=2048,
        is_fast=False,
        padding_side='right',
        truncation_side='right',
        special_tokens={
            'bos_token': AddedToken("<s>", rstrip=False, lstrip=False, single_word=False, normalized=False),
            'eos_token': AddedToken("</s>", rstrip=False, lstrip=False, single_word=False, normalized=False),
            'unk_token': AddedToken("<unk>", rstrip=False, lstrip=False, single_word=False, normalized=False),
            'pad_token': '<unk>'
        },
        clean_up_tokenization_spaces=False
    )

2. LlavaLlamaAttForCausalLM.from_pretrained

First, the configuration parameters are loaded:

    if not isinstance(config, PretrainedConfig):
        config_path = config if config is not None else pretrained_model_name_or_path
        config, model_kwargs = cls.config_class.from_pretrained(
            config_path,
            cache_dir=cache_dir,
            return_unused_kwargs=True,
            force_download=force_download,
            resume_download=resume_download,
            proxies=proxies,
            local_files_only=local_files_only,
            token=token,
            revision=revision,
            subfolder=subfolder,
            _from_auto=from_auto_class,
            _from_pipeline=from_pipeline,
            **kwargs,
        )
    else:
        model_kwargs = kwargs

At this point:

    cls: <class 'llamavid.model.language_model.llava_llama_vid.LlavaLlamaAttForCausalLM'>
    cls.config_class: <class 'llamavid.model.language_model.llava_llama_vid.LlavaConfig'>

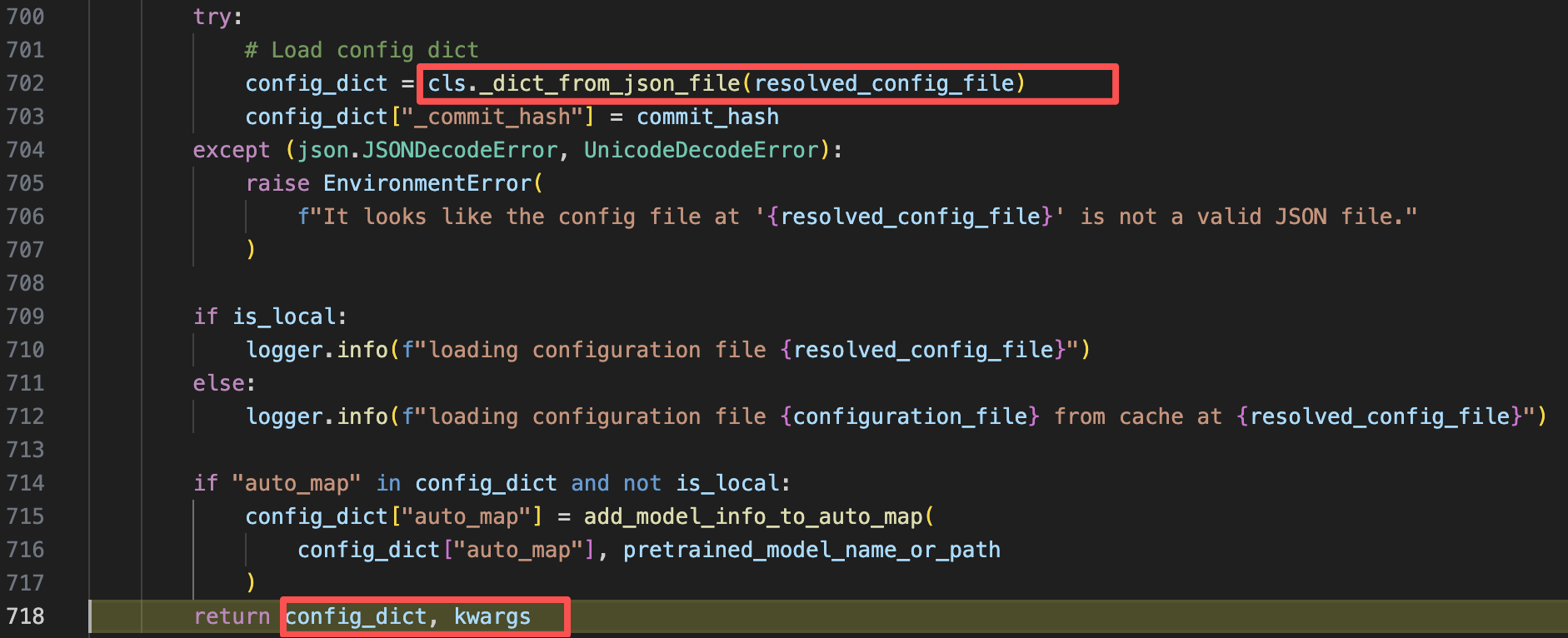

model_path/config.json is then fetched in transformers/configuration_utils.py:

    else:
        configuration_file = kwargs.pop("_configuration_file", CONFIG_NAME)
        try:
            # Load from local folder or from cache or download from model Hub and cache
            resolved_config_file = cached_file(
                pretrained_model_name_or_path,
                configuration_file,
                cache_dir=cache_dir,
                force_download=force_download,
                proxies=proxies,
                resume_download=resume_download,
                local_files_only=local_files_only,
                use_auth_token=use_auth_token,
                user_agent=user_agent,
                revision=revision,
                subfolder=subfolder,
                _commit_hash=commit_hash,
            )

CONFIG_NAME is defined in transformers/utils/__init__.py:

    WEIGHTS_NAME = "pytorch_model.bin"
    WEIGHTS_INDEX_NAME = "pytorch_model.bin.index.json"
    ADAPTER_CONFIG_NAME = "adapter_config.json"
    ADAPTER_WEIGHTS_NAME = "adapter_model.bin"
    ADAPTER_SAFE_WEIGHTS_NAME = "adapter_model.safetensors"
    TF2_WEIGHTS_NAME = "tf_model.h5"
    TF2_WEIGHTS_INDEX_NAME = "tf_model.h5.index.json"
    TF_WEIGHTS_NAME = "model.ckpt"
    FLAX_WEIGHTS_NAME = "flax_model.msgpack"
    FLAX_WEIGHTS_INDEX_NAME = "flax_model.msgpack.index.json"
    SAFE_WEIGHTS_NAME = "model.safetensors"
    SAFE_WEIGHTS_INDEX_NAME = "model.safetensors.index.json"
    CONFIG_NAME = "config.json"
    FEATURE_EXTRACTOR_NAME = "preprocessor_config.json"
    IMAGE_PROCESSOR_NAME = FEATURE_EXTRACTOR_NAME
    GENERATION_CONFIG_NAME = "generation_config.json"
    MODEL_CARD_NAME = "modelcard.json"

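The file is then parsed from JSON into a dict, which is returned.

Finally, the dict is turned into an instance of cls, which here is <class 'llamavid.model.language_model.llava_llama_vid.LlavaConfig'>:

    return cls.from_dict(config_dict, **kwargs)
    # -> inside from_dict:
    config = cls(**config_dict)
    return config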
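
The JSON-to-object step can be illustrated with a toy class. `TinyConfig` below is a hypothetical stand-in, not the Transformers class: it only shows how explicit constructor parameters pick up known keys while every other key in config.json becomes a plain attribute.

```python
import json
import os
import tempfile


class TinyConfig:
    """Hypothetical stand-in for a config class; not the Transformers implementation."""

    def __init__(self, hidden_size: int = 4096, vocab_size: int = 32000, **kwargs):
        self.hidden_size = hidden_size
        self.vocab_size = vocab_size
        # Any key without an explicit parameter simply becomes an attribute.
        for key, value in kwargs.items():
            setattr(self, key, value)

    @classmethod
    def from_dict(cls, config_dict: dict) -> "TinyConfig":
        return cls(**config_dict)

    @classmethod
    def from_json_file(cls, path: str) -> "TinyConfig":
        with open(path, "r", encoding="utf-8") as f:
            return cls.from_dict(json.load(f))


with tempfile.TemporaryDirectory() as model_dir:
    config_path = os.path.join(model_dir, "config.json")
    with open(config_path, "w", encoding="utf-8") as f:
        json.dump({"hidden_size": 4096, "mm_hidden_size": 1408, "model_type": "llava"}, f)
    config = TinyConfig.from_json_file(config_path)

print(config.hidden_size, config.vocab_size, config.mm_hidden_size)
```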

This mainly loads settings such as hidden_size:

    class LlamaConfig(PretrainedConfig):
        model_type = "llama"
        keys_to_ignore_at_inference = ["past_key_values"]

        def __init__(
            self,
            vocab_size=32000,
            hidden_size=4096,
            intermediate_size=11008,
            num_hidden_layers=32,
            num_attention_heads=32,
            num_key_value_heads=None,
            hidden_act="silu",
            max_position_embeddings=2048,
            initializer_range=0.02,
            rms_norm_eps=1e-6,
            use_cache=True,
            pad_token_id=0,
            bos_token_id=1,
            eos_token_id=2,
            pretraining_tp=1,
            tie_word_embeddings=False,
            rope_scaling=None,
            **kwargs,
        ):
            self.vocab_size = vocab_size
            self.max_position_embeddings = max_position_embeddings
            self.hidden_size = hidden_size
            self.intermediate_size = intermediate_size
            self.num_hidden_layers = num_hidden_layers
            self.num_attention_heads = num_attention_heads

            # for backward compatibility
            if num_key_value_heads is None:
                num_key_value_heads = num_attention_heads

            self.num_key_value_heads = num_key_value_heads
            self.hidden_act = hidden_act
            self.initializer_range = initializer_range
            self.rms_norm_eps = rms_norm_eps
            self.pretraining_tp = pretraining_tp
            self.use_cache = use_cache
            self.rope_scaling = rope_scaling
            self._rope_scaling_validation()

            super().__init__(
                pad_token_id=pad_token_id,
                bos_token_id=bos_token_id,
                eos_token_id=eos_token_id,
                tie_word_embeddings=tie_word_embeddings,
                **kwargs,
            )

All remaining parameters are handled in transformers/configuration_utils.py:

    class PretrainedConfig(PushToHubMixin):
        model_type: str = ""
        is_composition: bool = False
        attribute_map: Dict[str, str] = {}
        _auto_class: Optional[str] = None

        def __setattr__(self, key, value):
            if key in super().__getattribute__("attribute_map"):
                key = super().__getattribute__("attribute_map")[key]
            super().__setattr__(key, value)

        def __getattribute__(self, key):
            if key != "attribute_map" and key in super().__getattribute__("attribute_map"):
                key = super().__getattribute__("attribute_map")[key]
            return super().__getattribute__(key)

        def __init__(self, **kwargs):
            # Attributes with defaults
            self.return_dict = kwargs.pop("return_dict", True)
            self.output_hidden_states = kwargs.pop("output_hidden_states", False)
            self.output_attentions = kwargs.pop("output_attentions", False)
            self.torchscript = kwargs.pop("torchscript", False)  # Only used by PyTorch models
            self.torch_dtype = kwargs.pop("torch_dtype", None)  # Only used by PyTorch models
            self.use_bfloat16 = kwargs.pop("use_bfloat16", False)
            self.tf_legacy_loss = kwargs.pop("tf_legacy_loss", False)  # Only used by TensorFlow models
            self.pruned_heads = kwargs.pop("pruned_heads", {})
            # Whether input and output word embeddings should be tied for all MLM, LM and Seq2Seq models.
            self.tie_word_embeddings = kwargs.pop("tie_word_embeddings", True)

            # Is decoder is used in encoder-decoder models to differentiate encoder from decoder
            self.is_encoder_decoder = kwargs.pop("is_encoder_decoder", False)
            self.is_decoder = kwargs.pop("is_decoder", False)
            self.cross_attention_hidden_size = kwargs.pop("cross_attention_hidden_size", None)
            self.add_cross_attention = kwargs.pop("add_cross_attention", False)
            self.tie_encoder_decoder = kwargs.pop("tie_encoder_decoder", False)

            # Parameters for sequence generation
            self.max_length = kwargs.pop("max_length", 20)
            self.min_length = kwargs.pop("min_length", 0)
            self.do_sample = kwargs.pop("do_sample", False)
            self.early_stopping = kwargs.pop("early_stopping", False)
            self.num_beams = kwargs.pop("num_beams", 1)
            self.num_beam_groups = kwargs.pop("num_beam_groups", 1)
            self.diversity_penalty = kwargs.pop("diversity_penalty", 0.0)
            self.temperature = kwargs.pop("temperature", 1.0)
            self.top_k = kwargs.pop("top_k", 50)
            self.top_p = kwargs.pop("top_p", 1.0)
            self.typical_p = kwargs.pop("typical_p", 1.0)
            self.repetition_penalty = kwargs.pop("repetition_penalty", 1.0)
            self.length_penalty = kwargs.pop("length_penalty", 1.0)
            self.no_repeat_ngram_size = kwargs.pop("no_repeat_ngram_size", 0)
            self.encoder_no_repeat_ngram_size = kwargs.pop("encoder_no_repeat_ngram_size", 0)
            self.bad_words_ids = kwargs.pop("bad_words_ids", None)
            self.num_return_sequences = kwargs.pop("num_return_sequences", 1)
            self.chunk_size_feed_forward = kwargs.pop("chunk_size_feed_forward", 0)
            self.output_scores = kwargs.pop("output_scores", False)
            self.return_dict_in_generate = kwargs.pop("return_dict_in_generate", False)
            self.forced_bos_token_id = kwargs.pop("forced_bos_token_id", None)
            self.forced_eos_token_id = kwargs.pop("forced_eos_token_id", None)
            self.remove_invalid_values = kwargs.pop("remove_invalid_values", False)
            self.exponential_decay_length_penalty = kwargs.pop("exponential_decay_length_penalty", None)
            self.suppress_tokens = kwargs.pop("suppress_tokens", None)
            self.begin_suppress_tokens = kwargs.pop("begin_suppress_tokens", None)

            # Fine-tuning task arguments
            self.architectures = kwargs.pop("architectures", None)
            self.finetuning_task = kwargs.pop("finetuning_task", None)
            self.id2label = kwargs.pop("id2label", None)
            self.label2id = kwargs.pop("label2id", None)
            if self.label2id is not None and not isinstance(self.label2id, dict):
                raise ValueError("Argument label2id should be a dictionary.")
            if self.id2label is not None:
                if not isinstance(self.id2label, dict):
                    raise ValueError("Argument id2label should be a dictionary.")
                num_labels = kwargs.pop("num_labels", None)
                if num_labels is not None and len(self.id2label) != num_labels:
                    logger.warning(
                        f"You passed along `num_labels={num_labels}` with an incompatible id to label map: "
                        f"{self.id2label}. The number of labels wil be overwritten to {self.num_labels}."
                    )
                self.id2label = {int(key): value for key, value in self.id2label.items()}
                # Keys are always strings in JSON so convert ids to int here.
            else:
                self.num_labels = kwargs.pop("num_labels", 2)

            if self.torch_dtype is not None and isinstance(self.torch_dtype, str):
                # we will start using self.torch_dtype in v5, but to be consistent with
                # from_pretrained's torch_dtype arg convert it to an actual torch.dtype object
                if is_torch_available():
                    import torch

                    self.torch_dtype = getattr(torch, self.torch_dtype)

            # Tokenizer arguments TODO: eventually tokenizer and models should share the same config
            self.tokenizer_class = kwargs.pop("tokenizer_class", None)
            self.prefix = kwargs.pop("prefix", None)
            self.bos_token_id = kwargs.pop("bos_token_id", None)
            self.pad_token_id = kwargs.pop("pad_token_id", None)
            self.eos_token_id = kwargs.pop("eos_token_id", None)
            self.sep_token_id = kwargs.pop("sep_token_id", None)
            self.decoder_start_token_id = kwargs.pop("decoder_start_token_id", None)

            # task specific arguments
            self.task_specific_params = kwargs.pop("task_specific_params", None)

            # regression / multi-label classification
            self.problem_type = kwargs.pop("problem_type", None)
            allowed_problem_types = ("regression", "single_label_classification", "multi_label_classification")
            if self.problem_type is not None and self.problem_type not in allowed_problem_types:
                raise ValueError(
                    f"The config parameter `problem_type` was not understood: received {self.problem_type} "
                    "but only 'regression', 'single_label_classification' and 'multi_label_classification' are valid."
                )

            # TPU arguments
            if kwargs.pop("xla_device", None) is not None:
                logger.warning(
                    "The `xla_device` argument has been deprecated in v4.4.0 of Transformers. It is ignored and you can "
                    "safely remove it from your `config.json` file."
                )

            # Name or path to the pretrained checkpoint
            self._name_or_path = str(kwargs.pop("name_or_path", ""))
            # Config hash
            self._commit_hash = kwargs.pop("_commit_hash", None)

            # Drop the transformers version info
            self.transformers_version = kwargs.pop("transformers_version", None)

            # Deal with gradient checkpointing
            if kwargs.get("gradient_checkpointing", False):
                warnings.warn(
                    "Passing `gradient_checkpointing` to a config initialization is deprecated and will be removed in v5 "
                    "Transformers. Using `model.gradient_checkpointing_enable()` instead, or if you are using the "
                    "`Trainer` API, pass `gradient_checkpointing=True` in your `TrainingArguments`."
                )

            # Additional attributes without default values
            for key, value in kwargs.items():
                try:
                    setattr(self, key, value)
                except AttributeError as err:
                    logger.error(f"Can't set {key} with value {value} for {self}")
                    raise err
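
Two mechanisms in this class are easy to miss: the `attribute_map` redirection in `__setattr__` / `__getattribute__`, and the `kwargs.pop(key, default)` pattern that leaves only unknown keys for the final `setattr` loop. The sketch below isolates both. `AliasedConfig` and its `n_embd` alias are hypothetical and chosen only for illustration.

```python
class AliasedConfig:
    # Maps an alias to the real attribute name (hypothetical example mapping).
    attribute_map = {"n_embd": "hidden_size"}

    def __setattr__(self, key, value):
        # Writes to an alias are redirected to the real attribute.
        if key in super().__getattribute__("attribute_map"):
            key = super().__getattribute__("attribute_map")[key]
        super().__setattr__(key, value)

    def __getattribute__(self, key):
        # Reads from an alias are redirected the same way.
        if key != "attribute_map" and key in super().__getattribute__("attribute_map"):
            key = super().__getattribute__("attribute_map")[key]
        return super().__getattribute__(key)

    def __init__(self, **kwargs):
        # Known keys are popped with a default ...
        self.use_cache = kwargs.pop("use_cache", True)
        self.hidden_size = kwargs.pop("hidden_size", 4096)
        # ... so whatever is left has no default and is stored as-is.
        for key, value in kwargs.items():
            setattr(self, key, value)


config = AliasedConfig(use_cache=False, num_query=32)
config.n_embd = 1024  # actually stored under "hidden_size"
print(config.hidden_size, config.n_embd, sorted(vars(config)))
```

This is why custom keys such as `num_query` or `mm_hidden_size` in config.json end up as ordinary attributes on the config object without being declared anywhere.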

The end result is an instance of <class 'llamavid.model.language_model.llava_llama_vid.LlavaConfig'>:

    LlavaConfig {
        "_name_or_path": "/mnt/bn/liyan1994/test_th2tf/LLaMA-VID/model_zoo/LLM/vicuna/7B-V1.5",
        "architectures": ["LlavaLlamaAttForCausalLM"],
        "bert_type": "qformer_pretrain",
        "bos_token_id": 1,
        "compress_type": "mean",
        "eos_token_id": 2,
        "freeze_mm_mlp_adapter": false,
        "hidden_act": "silu",
        "hidden_size": 4096,
        "image_aspect_ratio": "pad",
        "image_grid_pinpoints": null,
        "image_processor": "/mnt/bn/liyan1994/test_th2tf/LLaMA-VID/llamavid/processor/clip-patch14-224",
        "initializer_range": 0.02,
        "intermediate_size": 11008,
        "max_position_embeddings": 4096,
        "max_token": 2048,
        "mm_hidden_size": 1408,
        "mm_projector_type": "mlp2x_gelu",
        "mm_use_im_patch_token": false,
        "mm_use_im_start_end": false,
        "mm_vision_select_feature": "patch",
        "mm_vision_select_layer": -2,
        "mm_vision_tower": "/mnt/bn/liyan1994/test_th2tf/LLaMA-VID/model_zoo/LAVIS/eva_vit_g.pth",
        "model_type": "llava",
        "num_attention_heads": 32,
        "num_hidden_layers": 32,
        "num_key_value_heads": 32,
        "num_query": 32,
        "pad_token_id": 0,
        "pretraining_tp": 1,
        "rms_norm_eps": 1e-05,
        "rope_scaling": null,
        "tie_word_embeddings": false,
        "torch_dtype": "bfloat16",
        "transformers_version": "4.31.0",
        "tune_mm_mlp_adapter": false,
        "use_cache": false,
        "use_mm_proj": true,
        "vocab_size": 32000
    }

Next, 'model_path/pytorch_model.bin.index.json' is located. This file records which .bin file (shard) holds each of the model's weights, for example:

    {
        "metadata": {"total_size": 15882784512},
        "weight_map": {
            "lm_head.weight": "pytorch_model-00002-of-00002.bin",
            "model.embed_tokens.weight": "pytorch_model-00001-of-00002.bin",
            "model.layers.0.input_layernorm.weight": "pytorch_model-00001-of-00002.bin",
            "model.layers.0.mlp.down_proj.weight": "pytorch_model-00001-of-00002.bin",
            "model.layers.0.mlp.gate_proj.weight": "pytorch_model-00001-of-00002.bin",
            "model.layers.0.mlp.up_proj.weight": "pytorch_model-00001-of-00002.bin",
            "model.layers.0.post_attention_layernorm.weight": "pytorch_model-00001-of-00002.bin",
            "model.layers.0.self_attn.k_proj.weight": "pytorch_model-00001-of-00002.bin",
            "model.layers.0.self_attn.o_proj.weight": "pytorch_model-00001-of-00002.bin",
            "model.layers.0.self_attn.q_proj.weight": "pytorch_model-00001-of-00002.bin",
            "model.layers.0.self_attn.rotary_emb.inv_freq": "pytorch_model-00001-of-00002.bin",
            "model.layers.0.self_attn.v_proj.weight": "pytorch_model-00001-of-00002.bin",
            ...
        }
    }

Next, the list of shard files is built in transformers/utils/hub.py:

    def get_checkpoint_shard_files(
        pretrained_model_name_or_path,
        index_filename,
        cache_dir=None,
        force_download=False,
        proxies=None,
        resume_download=False,
        local_files_only=False,
        use_auth_token=None,
        user_agent=None,
        revision=None,
        subfolder="",
        _commit_hash=None,
    ):
        import json

        if not os.path.isfile(index_filename):
            raise ValueError(f"Can't find a checkpoint index ({index_filename}) in {pretrained_model_name_or_path}.")

        with open(index_filename, "r") as f:
            index = json.loads(f.read())

        shard_filenames = sorted(set(index["weight_map"].values()))
        sharded_metadata = index["metadata"]
        sharded_metadata["all_checkpoint_keys"] = list(index["weight_map"].keys())
        sharded_metadata["weight_map"] = index["weight_map"].copy()

        # First, let's deal with local folder.
        if os.path.isdir(pretrained_model_name_or_path):
            shard_filenames = [os.path.join(pretrained_model_name_or_path, subfolder, f) for f in shard_filenames]
            return shard_filenames, sharded_metadata
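
The core of this function is a de-duplication: many weights point at the same shard, so the set of values in `weight_map` gives the list of files to open. A minimal standalone sketch, assuming a local directory and using a hypothetical `list_shards` helper with a two-entry index:

```python
import json
import os
import tempfile


def list_shards(model_dir: str, index_name: str = "pytorch_model.bin.index.json"):
    """Return the sorted, de-duplicated shard paths plus the weight -> shard map."""
    index_path = os.path.join(model_dir, index_name)
    if not os.path.isfile(index_path):
        raise ValueError(f"Can't find a checkpoint index ({index_path}) in {model_dir}.")
    with open(index_path, "r") as f:
        index = json.load(f)
    shard_names = sorted(set(index["weight_map"].values()))
    shard_paths = [os.path.join(model_dir, name) for name in shard_names]
    return shard_paths, index["weight_map"]


# Tiny example index in the same layout as the file shown above.
example_index = {
    "metadata": {"total_size": 15882784512},
    "weight_map": {
        "lm_head.weight": "pytorch_model-00002-of-00002.bin",
        "model.embed_tokens.weight": "pytorch_model-00001-of-00002.bin",
        "model.layers.0.input_layernorm.weight": "pytorch_model-00001-of-00002.bin",
    },
}

with tempfile.TemporaryDirectory() as model_dir:
    with open(os.path.join(model_dir, "pytorch_model.bin.index.json"), "w") as f:
        json.dump(example_index, f)
    shard_paths, weight_map = list_shards(model_dir)
    shard_names = [os.path.basename(path) for path in shard_paths]

print(shard_names)
```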

The model is then constructed, going from transformers/modeling_utils.py into 'llamavid.model.language_model.llava_llama_vid.LlavaLlamaAttForCausalLM':

    with ContextManagers(init_contexts):
        model = cls(config, *model_args, **model_kwargs)

Model initialization:

    class LlavaLlamaAttForCausalLM(LlamaForCausalLM, LLaMAVIDMetaForCausalLM):
        config_class = LlavaConfig

        def __init__(self, config):
            super(LlamaForCausalLM, self).__init__(config)
            self.model = LlavaAttLlamaModel(config)
            self.lm_head = nn.Linear(config.hidden_size, config.vocab_size, bias=False)

            # Initialize weights and apply final processing
            self.post_init()
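
Note the call `super(LlamaForCausalLM, self).__init__(config)`: it starts the method lookup after LlamaForCausalLM in the MRO, so LlamaForCausalLM's own `__init__` is skipped and the subclass can install its own `self.model`. The toy hierarchy below demonstrates that behaviour in plain Python. All class names are hypothetical stand-ins, and the reading of the original author's intent is an interpretation.

```python
calls = []  # records which __init__ methods actually run


class Base:
    def __init__(self, config):
        calls.append("Base")
        self.config = config


class CausalLM(Base):
    def __init__(self, config):
        calls.append("CausalLM")
        super().__init__(config)
        self.model = "plain backbone"


class MultimodalMixin:
    def describe(self):
        return f"{type(self).__name__} using {self.model}"


class MultimodalCausalLM(CausalLM, MultimodalMixin):
    def __init__(self, config):
        # Start the MRO lookup *after* CausalLM: CausalLM.__init__ never runs,
        # so the plain backbone is never built.
        super(CausalLM, self).__init__(config)
        self.model = "multimodal backbone"


m = MultimodalCausalLM({"hidden_size": 4096})
print(calls, m.describe())
```

Skipping the parent constructor this way avoids building a backbone that would immediately be replaced.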

In transformers/models/llama/modeling_llama.py:

    @add_start_docstrings(
        "The bare LLaMA Model outputting raw hidden-states without any specific head on top.",
        LLAMA_START_DOCSTRING,
    )
    class LlamaModel(LlamaPreTrainedModel):
        """
        Transformer decoder consisting of *config.num_hidden_layers* layers. Each layer is a [`LlamaDecoderLayer`]

        Args:
            config: LlamaConfig
        """

        def __init__(self, config: LlamaConfig):
            super().__init__(config)
            self.padding_idx = config.pad_token_id
            self.vocab_size = config.vocab_size

            self.embed_tokens = nn.Embedding(config.vocab_size, config.hidden_size, self.padding_idx)
            self.layers = nn.ModuleList([LlamaDecoderLayer(config) for _ in range(config.num_hidden_layers)])
            self.norm = LlamaRMSNorm(config.hidden_size, eps=config.rms_norm_eps)

            self.gradient_checkpointing = False
            # Initialize weights and apply final processing
            self.post_init()

The vision_tower and projector are built in LLaMA-VID/llamavid/model/llamavid_arch.py:

    class LLaMAVIDMetaModel:
        def __init__(self, config):
            super(LLaMAVIDMetaModel, self).__init__(config)

            if hasattr(config, "mm_vision_tower"):
                self.vision_tower = build_vision_tower(config, delay_load=True)
                self.mm_projector = build_vision_projector(config)

        def get_vision_tower(self):
            vision_tower = getattr(self, 'vision_tower', None)
            if type(vision_tower) is list:
                vision_tower = vision_tower[0]
            return vision_tower

Both the vision_tower and the image_processor are defined in model_path/config.json (see the mm_vision_tower and image_processor keys):

    {
        "_name_or_path": "/mnt/bn/liyan1994/test_th2tf/LLaMA-VID/model_zoo/LLM/vicuna/7B-V1.5",
        "architectures": ["LlavaLlamaAttForCausalLM"],
        "bert_type": "qformer_pretrain",
        "bos_token_id": 1,
        "compress_type": "mean",
        "eos_token_id": 2,
        "freeze_mm_mlp_adapter": false,
        "hidden_act": "silu",
        "hidden_size": 4096,
        "image_aspect_ratio": "pad",
        "image_grid_pinpoints": null,
        "image_processor": "/mnt/bn/liyan1994/test_th2tf/LLaMA-VID/llamavid/processor/clip-patch14-224",
        "initializer_range": 0.02,
        "intermediate_size": 11008,
        "max_position_embeddings": 4096,
        "max_token": 2048,
        "mm_hidden_size": 1408,
        "mm_projector_type": "mlp2x_gelu",
        "mm_use_im_patch_token": false,
        "mm_use_im_start_end": false,
        "mm_vision_select_feature": "patch",
        "mm_vision_select_layer": -2,
        "mm_vision_tower": "/mnt/bn/liyan1994/test_th2tf/LLaMA-VID/model_zoo/LAVIS/eva_vit_g.pth",
        "model_type": "llava",
        "num_attention_heads": 32,
        "num_hidden_layers": 32,
        "num_key_value_heads": 32,
        "num_query": 32,
        "pad_token_id": 0,
        "pretraining_tp": 1,
        "rms_norm_eps": 1e-05,
        "rope_scaling": null,
        "tie_word_embeddings": false,
        "torch_dtype": "bfloat16",
        "transformers_version": "4.31.0",
        "tune_mm_mlp_adapter": false,
        "use_cache": false,
        "use_mm_proj": true,
        "vocab_size": 32000
    }

    def build_vision_tower(vision_tower_cfg, **kwargs):
        vision_tower = getattr(vision_tower_cfg, 'mm_vision_tower', getattr(vision_tower_cfg, 'vision_tower', None))
        image_processor = getattr(vision_tower_cfg, 'image_processor', getattr(vision_tower_cfg, 'image_processor', "/mnt/bn/liyan1994/test_th2tf/LLaMA-VID/model_zoo/OpenAI/clip-vit-large-patch14"))
        is_absolute_path_exists = os.path.exists(vision_tower)

        if not is_absolute_path_exists:
            raise ValueError(f'Not find vision tower: {vision_tower}')

        if "openai" in vision_tower.lower() or "laion" in vision_tower.lower():
            return CLIPVisionTower(vision_tower, args=vision_tower_cfg, **kwargs)
        elif "lavis" in vision_tower.lower() or "eva" in vision_tower.lower():
            return EVAVisionTowerLavis(vision_tower, image_processor, args=vision_tower_cfg, **kwargs)
        else:
            raise ValueError(f'Unknown vision tower: {vision_tower}')

Definition of the vision_tower:

    class EVAVisionTowerLavis(nn.Module):
        def __init__(self, vision_tower, image_processor, args, use_checkpoint=False, drop_path_rate=0.0, delay_load=False, dtype=torch.float32):
            super().__init__()

            self.is_loaded = False
            self.use_checkpoint = use_checkpoint
            self.vision_tower_name = vision_tower
            self.image_processor_name = image_processor
            self.drop_path_rate = drop_path_rate
            self.patch_size = 14
            self.out_channel = 1408
            if not delay_load:
                self.load_model()

            self.vision_config = CLIPVisionConfig.from_pretrained(image_processor)

        def load_model(self):
            self.image_processor = CLIPImageProcessor.from_pretrained(self.image_processor_name)
            self.vision_tower = VisionTransformer(
                img_size=self.image_processor.size['shortest_edge'],
                patch_size=self.patch_size,
                use_mean_pooling=False,
                embed_dim=self.out_channel,
                depth=39,
                num_heads=self.out_channel//88,
                mlp_ratio=4.3637,
                qkv_bias=True,
                drop_path_rate=self.drop_path_rate,
                norm_layer=partial(nn.LayerNorm, eps=1e-6),
                use_checkpoint=self.use_checkpoint,
            )

            state_dict = torch.load(self.vision_tower_name, map_location="cpu")
            interpolate_pos_embed(self.vision_tower, state_dict)
            incompatible_keys = self.vision_tower.load_state_dict(state_dict, strict=False)
            print(incompatible_keys)
            self.vision_tower.requires_grad_(False)

            self.is_loaded = True

        @torch.no_grad()
        def forward(self, images):
            if type(images) is list:
                image_features = []
                for image in images:
                    image_forward_out = self.vision_tower(image.to(device=self.device, dtype=self.dtype).unsqueeze(0))
                    image_feature = image_forward_out.to(image.dtype)
                    image_features.append(image_feature)
            else:
                image_forward_outs = self.vision_tower(images.to(device=self.device, dtype=self.dtype))
                image_features = image_forward_outs.to(images.dtype)

            return image_features

        @property
        def num_patches(self):
            return (self.image_processor.size['shortest_edge'] // self.patch_size) ** 2
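
The sizes in this class fit together with simple integer arithmetic. The worked example below uses `patch_size` and `out_channel` from the class; the `shortest_edge` value of 224 is an assumption inferred from the "clip-patch14-224" processor directory name, not something the source states.

```python
patch_size = 14       # from EVAVisionTowerLavis.__init__
out_channel = 1408    # embedding dimension of the vision transformer
shortest_edge = 224   # assumption: taken from the "clip-patch14-224" directory name

# num_patches property: patches per side, squared.
patches_per_side = shortest_edge // patch_size
num_patches = patches_per_side ** 2

# num_heads argument passed to VisionTransformer, and the resulting per-head width.
num_heads = out_channel // 88
head_dim = out_channel // num_heads

print(patches_per_side, num_patches, num_heads, head_dim)
```

Under that assumption each image yields a 16 x 16 grid of patch tokens, and `out_channel` matches the `mm_hidden_size` of 1408 in config.json.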

Loading the weights:

    from safetensors import safe_open
    from safetensors.torch import load_file as safe_load_file
    from safetensors.torch import save_file as safe_save_file

    if checkpoint_file.endswith(".safetensors") and is_safetensors_available():
        # Check format of the archive
        with safe_open(checkpoint_file, framework="pt") as f:
            metadata = f.metadata()
        if metadata.get("format") not in ["pt", "tf", "flax"]:
            raise OSError(
                f"The safetensors archive passed at {checkpoint_file} does not contain the valid metadata. Make sure "
                "you save your model with the `save_pretrained` method."
            )
        elif metadata["format"] != "pt":
            raise NotImplementedError(
                f"Conversion from a {metadata['format']} safetensors archive to PyTorch is not implemented yet."
            )
        return safe_load_file(checkpoint_file)

If the checkpoint is instead in the 'model_path/pytorch_model-00001-of-00002.bin' format, then:

    return torch.load(checkpoint_file, map_location="cpu")
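
The format check can be reproduced without torch or safetensors installed. The sketch below relies on the safetensors file layout (an 8-byte little-endian header length followed by a JSON header whose optional `__metadata__` entry holds the format tag). `read_safetensors_metadata` and `pick_loader` are hypothetical helpers that only report which loader would be chosen; they do not load any tensors.

```python
import json
import os
import struct
import tempfile


def read_safetensors_metadata(path: str) -> dict:
    """Read the `__metadata__` dict from a safetensors header without loading tensors."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))
        header = json.loads(f.read(header_len).decode("utf-8"))
    return header.get("__metadata__", {})


def pick_loader(checkpoint_file: str) -> str:
    """Return the name of the loader the branch above would use."""
    if checkpoint_file.endswith(".safetensors"):
        fmt = read_safetensors_metadata(checkpoint_file).get("format")
        if fmt not in ("pt", "tf", "flax"):
            raise OSError(f"{checkpoint_file} does not contain valid metadata.")
        if fmt != "pt":
            raise NotImplementedError(f"Conversion from a {fmt} archive is not implemented.")
        return "safetensors"
    return "torch.load"


with tempfile.TemporaryDirectory() as tmp:
    # Write a header-only file: valid layout, zero tensors.
    path = os.path.join(tmp, "model.safetensors")
    header = json.dumps({"__metadata__": {"format": "pt"}}).encode("utf-8")
    with open(path, "wb") as f:
        f.write(struct.pack("<Q", len(header)) + header)

    st_choice = pick_loader(path)
    bin_choice = pick_loader(os.path.join(tmp, "pytorch_model-00001-of-00002.bin"))

print(st_choice, bin_choice)
```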

The weights of one shard file are then loaded into the model in transformers/modeling_utils.py:

    state_dict = load_state_dict()
    if low_cpu_mem_usage:
        new_error_msgs, offload_index, state_dict_index = _load_state_dict_into_meta_model(
            model_to_load,
            state_dict,
            loaded_keys,
            start_prefix,
            expected_keys,
            device_map=device_map,
            offload_folder=offload_folder,
            offload_index=offload_index,
            state_dict_folder=state_dict_folder,
            state_dict_index=state_dict_index,
            dtype=dtype,
            is_quantized=is_quantized,
            is_safetensors=is_safetensors,
            keep_in_fp32_modules=keep_in_fp32_modules,
        )
        error_msgs += new_error_msgs
    else:
        error_msgs += _load_state_dict_into_model(model_to_load, state_dict, start_prefix)

    # force memory release
    del state_dict
    gc.collect()

The parameters are loaded in transformers/modeling_utils.py. The key functions are set_module_tensor_to_device and set_module_quantized_tensor_to_device:

    def _load_state_dict_into_meta_model(
        model,
        state_dict,
        loaded_state_dict_keys,  # left for now but could be removed, see below
        start_prefix,
        expected_keys,
        device_map=None,
        offload_folder=None,
        offload_index=None,
        state_dict_folder=None,
        state_dict_index=None,
        dtype=None,
        is_quantized=False,
        is_safetensors=False,
        keep_in_fp32_modules=None,
    ):
        if is_quantized:
            from .utils.bitsandbytes import set_module_quantized_tensor_to_device

        error_msgs = []

        old_keys = []
        new_keys = []
        for key in state_dict.keys():
            new_key = None
            if "gamma" in key:
                new_key = key.replace("gamma", "weight")
            if "beta" in key:
                new_key = key.replace("beta", "bias")
            if new_key:
                old_keys.append(key)
                new_keys.append(new_key)
        for old_key, new_key in zip(old_keys, new_keys):
            state_dict[new_key] = state_dict.pop(old_key)

        for param_name, param in state_dict.items():
            # First part of the test is always true as load_state_dict_keys always contains state_dict keys.
            if param_name not in loaded_state_dict_keys or param_name not in expected_keys:
                continue

            if param_name.startswith(start_prefix):
                param_name = param_name[len(start_prefix) :]

            module_name = param_name
            set_module_kwargs = {}

            # We convert floating dtypes to the `dtype` passed. We want to keep the buffers/params
            # in int/uint/bool and not cast them.
            if dtype is not None and torch.is_floating_point(param):
                if (
                    keep_in_fp32_modules is not None
                    and any(module_to_keep_in_fp32 in param_name for module_to_keep_in_fp32 in keep_in_fp32_modules)
                    and dtype == torch.float16
                ):
                    param = param.to(torch.float32)

                    # For backward compatibility with older versions of `accelerate`
                    # TODO: @sgugger replace this check with version check at the next `accelerate` release
                    if "dtype" in list(inspect.signature(set_module_tensor_to_device).parameters):
                        set_module_kwargs["dtype"] = torch.float32
                else:
                    param = param.to(dtype)

            # For compatibility with PyTorch load_state_dict which converts state dict dtype to existing dtype in model
            if dtype is None:
                old_param = model
                splits = param_name.split(".")
                for split in splits:
                    old_param = getattr(old_param, split)
                    if old_param is None:
                        break

                if old_param is not None:
                    param = param.to(old_param.dtype)

            set_module_kwargs["value"] = param

            if device_map is None:
                param_device = "cpu"
            else:
                # find next higher level module that is defined in device_map:
                # bert.lm_head.weight -> bert.lm_head -> bert -> ''
                while len(module_name) > 0 and module_name not in device_map:
                    module_name = ".".join(module_name.split(".")[:-1])
                if module_name == "" and "" not in device_map:
                    # TODO: group all errors and raise at the end.
                    raise ValueError(f"{param_name} doesn't have any device set.")
                param_device = device_map[module_name]

            if param_device == "disk":
                if not is_safetensors:
                    offload_index = offload_weight(param, param_name, offload_folder, offload_index)
            elif param_device == "cpu" and state_dict_index is not None:
                state_dict_index = offload_weight(param, param_name, state_dict_folder, state_dict_index)
            elif not is_quantized:
                # For backward compatibility with older versions of `accelerate`
                set_module_tensor_to_device(model, param_name, param_device, **set_module_kwargs)
            else:
                if param.dtype == torch.int8 and param_name.replace("weight", "SCB") in state_dict.keys():
                    fp16_statistics = state_dict[param_name.replace("weight", "SCB")]
                else:
                    fp16_statistics = None

                if "SCB" not in param_name:
                    set_module_quantized_tensor_to_device(
                        model, param_name, param_device, value=param, fp16_statistics=fp16_statistics
                    )

        return error_msgs, offload_index, state_dict_index
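
Two small pieces of this function are worth isolating: the renaming of legacy `gamma` / `beta` keys, and the walk up the dotted module path until an entry in `device_map` matches. The sketch below reimplements both with plain dicts so it runs without torch. `rename_legacy_keys` and `find_device` are hypothetical helper names, and the `device_map` contents are made up for the demo.

```python
from typing import Dict


def rename_legacy_keys(state_dict: Dict[str, object]) -> Dict[str, object]:
    """Rename old-style LayerNorm keys: gamma -> weight, beta -> bias."""
    renamed = {}
    for key, value in state_dict.items():
        new_key = key
        if "gamma" in key:
            new_key = key.replace("gamma", "weight")
        if "beta" in key:
            new_key = key.replace("beta", "bias")
        renamed[new_key] = value
    return renamed


def find_device(param_name: str, device_map: Dict[str, str]) -> str:
    """Strip trailing path components until a prefix is found in `device_map`."""
    module_name = param_name
    while len(module_name) > 0 and module_name not in device_map:
        module_name = ".".join(module_name.split(".")[:-1])
    if module_name == "" and "" not in device_map:
        raise ValueError(f"{param_name} doesn't have any device set.")
    return device_map[module_name]


# Made-up placement: first decoder layer on GPU 0, output head on CPU.
device_map = {"model.embed_tokens": "cuda:0", "model.layers.0": "cuda:0", "lm_head": "cpu"}

dev_q_proj = find_device("model.layers.0.self_attn.q_proj.weight", device_map)
dev_head = find_device("lm_head.weight", device_map)
dev_default = find_device("anything.weight", {"": "cpu"})  # "" is the catch-all entry
renamed = rename_legacy_keys({"norm.gamma": 1, "norm.beta": 2, "proj.weight": 3})

print(dev_q_proj, dev_head, dev_default, renamed)
```

The empty-string key acts as a catch-all, which is why a map such as `{"": "cpu"}` places every parameter on a single device.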

The parameters are then actually assigned, with support for quantization, by the following code in accelerate/utils/modeling.py:

    if value is not None:
        new_value = value.to(device)
    else:
        new_value = old_value.to(device)

    if is_buffer:
        module._buffers[tensor_name] = new_value
    else:
        module._parameters[tensor_name] = param_cls(new_value, requires_grad=old_value.requires_grad).to(device)

Once this completes, control returns to transformers/modeling_utils.py:

    elif from_pt:
        # restore default dtype
        if dtype_orig is not None:
            torch.set_default_dtype(dtype_orig)

        (
            model,
            missing_keys,
            unexpected_keys,
            mismatched_keys,
            offload_index,
            error_msgs,
        ) = cls._load_pretrained_model(
            model,
            state_dict,
            loaded_state_dict_keys,  # XXX: rename?
            resolved_archive_file,
            pretrained_model_name_or_path,
            ignore_mismatched_sizes=ignore_mismatched_sizes,
            sharded_metadata=sharded_metadata,
            _fast_init=_fast_init,
            low_cpu_mem_usage=low_cpu_mem_usage,
            device_map=device_map,
            offload_folder=offload_folder,
            offload_state_dict=offload_state_dict,
            dtype=torch_dtype,
            is_quantized=(load_in_8bit or load_in_4bit),
            keep_in_fp32_modules=keep_in_fp32_modules,
        )

The GenerationConfig is then loaded in transformers/modeling_utils.py. The file it needs is model_path/generation_config.json:

    if model.can_generate():
        try:
            model.generation_config = GenerationConfig.from_pretrained(
                pretrained_model_name_or_path,
                cache_dir=cache_dir,
                force_download=force_download,
                resume_download=resume_download,
                proxies=proxies,
                local_files_only=local_files_only,
                token=token,
                revision=revision,
                subfolder=subfolder,
                _from_auto=from_auto_class,
                _from_pipeline=from_pipeline,
                **kwargs,
            )
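
The excerpt stops at the `try:` and does not show what happens when generation_config.json is missing. The sketch below assumes the simplest fallback, namely keeping default generation settings, and should be read as an illustration of that pattern rather than the library's exact behaviour. `load_generation_config` is a hypothetical helper; the default values are the ones listed in PretrainedConfig earlier.

```python
import json
import os
import tempfile

# Defaults taken from the PretrainedConfig generation parameters shown earlier.
DEFAULT_GENERATION = {"max_length": 20, "do_sample": False, "temperature": 1.0}


def load_generation_config(model_dir: str) -> dict:
    """Overlay generation_config.json on the defaults; fall back to defaults if absent."""
    path = os.path.join(model_dir, "generation_config.json")
    try:
        with open(path, "r", encoding="utf-8") as f:
            overrides = json.load(f)
    except OSError:
        # Assumed fallback: no file means default settings.
        overrides = {}
    return {**DEFAULT_GENERATION, **overrides}


with tempfile.TemporaryDirectory() as model_dir:
    without_file = load_generation_config(model_dir)

    with open(os.path.join(model_dir, "generation_config.json"), "w", encoding="utf-8") as f:
        json.dump({"temperature": 0.7, "do_sample": True}, f)
    with_file = load_generation_config(model_dir)

print(without_file, with_file)
```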

That covers everything done by model = AutoModelForCausalLM.from_pretrained(model_path, low_cpu_mem_usage=True, **kwargs).

The following single call loads the tokenizer, model, and image_processor together:

tokenizer, model, image_processor, context_len = load_pretrained_model(args.model_path, args.model_base, model_name, args.model_max_length)