pv-pvc-sc advanced storage volumes - sts - basic manifest management with helm

🌟Relationship between pv, pvc and sc

pv

A pv is the resource that connects to the backend storage; each pv is tied to a concrete piece of backend storage.

sc

An sc is a resource that creates pvs dynamically; it is also associated with the backend storage.

pvc

A pvc requests resources from a pv or from an sc to obtain a specific piece of storage.

A pod only needs to declare in its volumes which pvc to use.

🌟Manually creating a pv and a pvc and referencing them from a pod

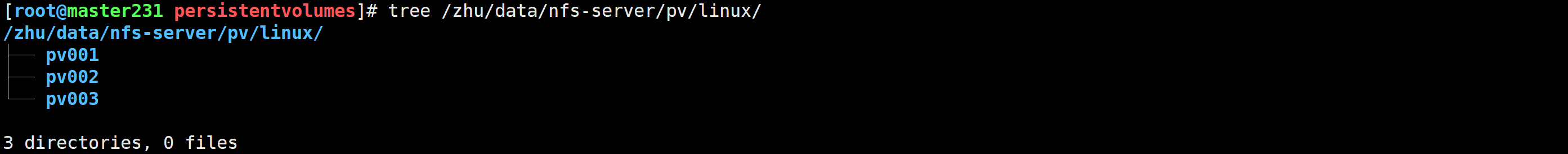

Create the working directories

[root@master231 persistentvolumes]# mkdir -pv /zhu/data/nfs-server/pv/linux/pv00{1,2,3}

mkdir: created directory '/zhu/data/nfs-server/pv'

mkdir: created directory '/zhu/data/nfs-server/pv/linux'

mkdir: created directory '/zhu/data/nfs-server/pv/linux/pv001'

mkdir: created directory '/zhu/data/nfs-server/pv/linux/pv002'

mkdir: created directory '/zhu/data/nfs-server/pv/linux/pv003'

Create the manifest

[root@master231 persistentvolumes]# cat 01-manual-pv.yaml

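apiVersion: v1
kind: PersistentVolume
metadata:
  name: linux-pv01
  labels:
    linux: pv
spec:
  # Access modes of the PV. The common ones are "ReadWriteOnce", "ReadOnlyMany" and "ReadWriteMany":
  #   ReadWriteOnce (short: "RWO"):
  #     Only a single worker node may mount the volume read-write, but multiple Pods on that node can access it at the same time.
  #   ReadOnlyMany (short: "ROX"):
  #     Multiple worker nodes may mount the volume read-only.
  #   ReadWriteMany (short: "RWX"):
  #     Multiple worker nodes may mount the volume read-write.
  #   ReadWriteOncePod (short: "RWOP"):
  #     The volume can be mounted read-write by a single Pod.
  #     Use ReadWriteOncePod if you want to ensure that only one Pod in the whole cluster can read or write the PVC.
  #     This only applies to CSI volumes and Kubernetes 1.22+.
  accessModes:
  - ReadWriteMany
  # The volume type is nfs
  nfs:
    path: /zhu/data/nfs-server/pv/linux/pv001
    server: 10.0.0.231
  # Reclaim policy of the volume; the common ones are "Retain" and "Delete".
  #   Retain:
  #     The "Retain" policy allows manual reclamation of the resource.
  #     When the PersistentVolumeClaim is deleted, the PersistentVolume still exists and the volume is considered "Released".
  #     Until an administrator reclaims it manually, other Pods cannot use it directly.
  #   Delete:
  #     For volume plugins that support the Delete policy, k8s deletes the PV together with the data on the backing volume.
  #   Recycle:
  #     The "Recycle" policy is deprecated upstream; the recommended approach is dynamic provisioning instead.
  #     If the underlying volume plugin supports it, Recycle performs a basic scrub (rm -rf /thevolume/*) and makes the volume available for a new claim again.
  persistentVolumeReclaimPolicy: Retain
  # Capacity of the volume
  capacity:
    storage: 2Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: linux-pv02
  labels:
    linux: pv
spec:
  accessModes:
  - ReadWriteMany
  nfs:
    path: /zhu/data/nfs-server/pv/linux/pv002
    server: 10.0.0.231
  persistentVolumeReclaimPolicy: Retain
  capacity:
    storage: 5Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: linux-pv03
  labels:
    linux: pv
spec:
  accessModes:
  - ReadWriteMany
  nfs:
    path: /zhu/data/nfs-server/pv/linux/pv003
    server: 10.0.0.231
  persistentVolumeReclaimPolicy: Retain
  capacity:
    storage: 10Gi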

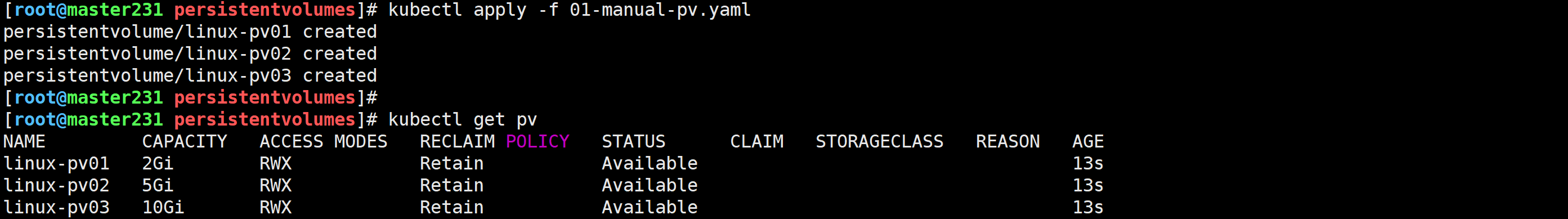

Create the pvs

[root@master231 persistentvolumes]# kubectl apply -f 01-manual-pv.yaml

[root@master231 persistentvolumes]# kubectl get pv

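Column descriptions:

NAME: name of the pv.

CAPACITY: capacity of the pv.

ACCESS MODES: access modes of the pv.

RECLAIM POLICY: reclaim policy of the pv.

STATUS: status of the pv.

CLAIM: the pvc that is bound to the pv.

STORAGECLASS: name of the sc.

REASON: reason shown when the pv is in an error state.

AGE: time elapsed since the pv was created.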

Create the pvc manually

[root@master231 persistentvolumes]# cat > 01-manual-pvc.yaml <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc001
spec:
  # Declare which pv to use
  # volumeName: linux-pv03
  # Access modes requested
  accessModes:
  - ReadWriteMany
  # Amount of storage requested
  resources:
    limits:
      storage: 4Gi
    requests:
      storage: 3Gi
EOF
[root@master231 persistentvolumes]# kubectl apply -f 01-manual-pvc.yaml

persistentvolumeclaim/pvc001 created

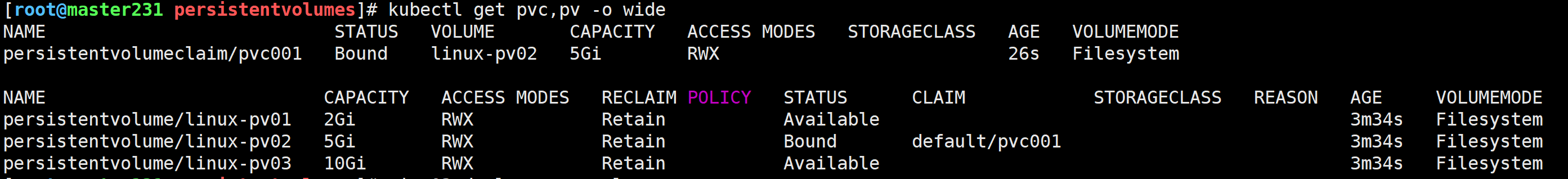

[root@master231 persistentvolumes]# kubectl get pvc,pv -o wide
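Why does pvc001 land on linux-pv02? pvc001 requests 3Gi with ReadWriteMany, and the lookup further below shows it bound to linux-pv02 (5Gi) rather than linux-pv03 (10Gi): among the PVs that satisfy the request, the smallest one is chosen. The sketch below models only that rule. The `name:capacityGi:modes` encoding and the `pick_pv` function are hypothetical; the real binder also checks storageClassName, label selectors, volumeMode and whether a PV is still Available.

```bash
#!/usr/bin/env bash
# Simplified, hypothetical model of static PV binding (not the real controller code).
# Each PV is written as "name:capacityGi:modes", where modes is a comma-separated
# list such as RWO,RWX. The real binder also checks storageClassName, selectors,
# volumeMode and whether the PV is still Available.

# pick_pv <requestGi> <accessMode> <pv>...
# Prints the smallest PV that offers the access mode and has capacity >= request.
pick_pv() {
  local request=$1 mode=$2
  shift 2
  local best="" best_size="" pv name size modes
  for pv in "$@"; do
    IFS=: read -r name size modes <<< "$pv"
    (( size >= request )) || continue            # too small
    [[ ",$modes," == *",$mode,"* ]] || continue  # access mode not offered
    if [[ -z $best_size ]] || (( size < best_size )); then
      best=$name
      best_size=$size
    fi
  done
  [[ -n $best ]] || return 1   # no match: the PVC would stay Pending
  echo "$best"
}

# The three PVs from 01-manual-pv.yaml and pvc001's request (3Gi, ReadWriteMany)
pick_pv 3 RWX linux-pv01:2:RWX linux-pv02:5:RWX linux-pv03:10:RWX   # prints linux-pv02
```

Binding uses `requests`, not `limits`, which is why the 4Gi limit in pvc001 plays no role here.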

Reference the pvc from a Pod

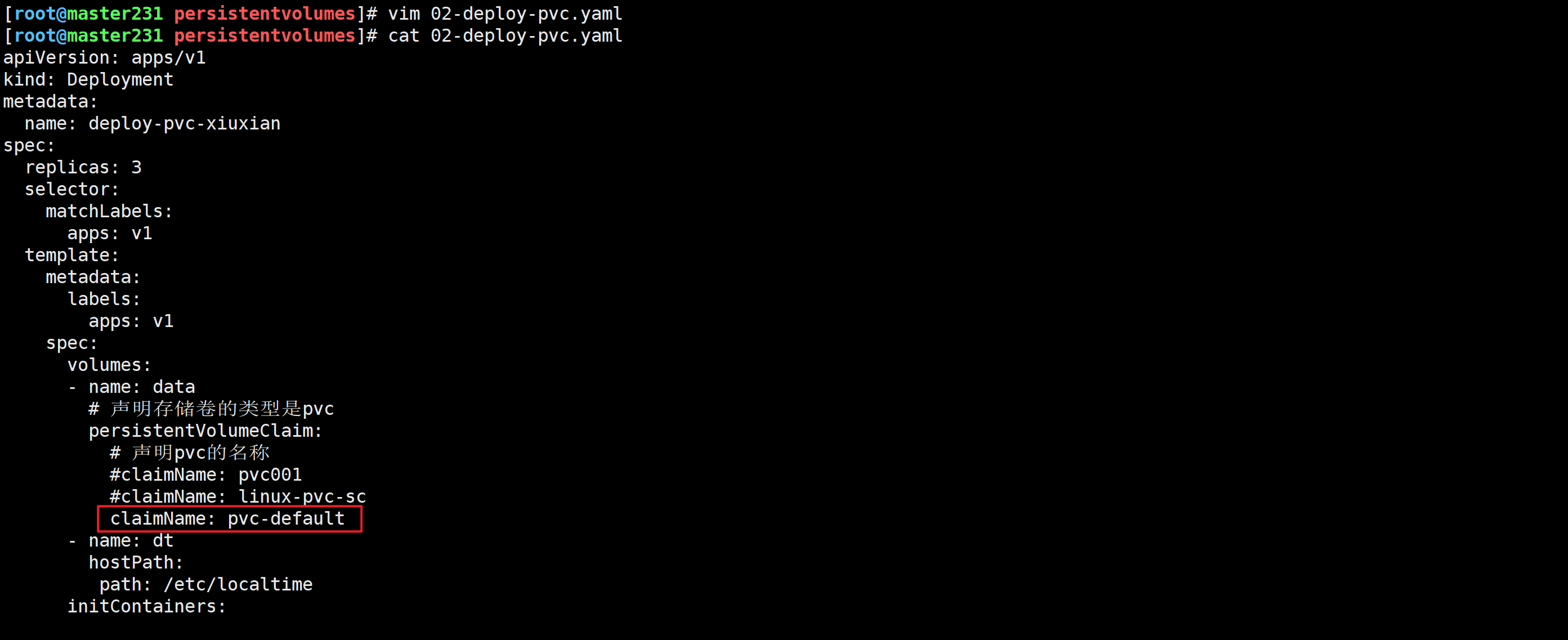

[root@master231 persistentvolumes]# cat 02-deploy-pvc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-pvc-xiuxian
spec:
  replicas: 3
  selector:
    matchLabels:
      apps: v1
  template:
    metadata:
      labels:
        apps: v1
    spec:
      volumes:
      - name: data
        # The volume type is pvc
        persistentVolumeClaim:
          # Name of the pvc
          claimName: pvc001
      - name: dt
        hostPath:
          path: /etc/localtime
      initContainers:
      - name: init01
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        volumeMounts:
        - name: data
          mountPath: /zhu
        - name: dt
          mountPath: /etc/localtime
        command:
        - /bin/sh
        - -c
        - date -R > /zhu/index.html ; echo www.zhubl.xyz >> /zhu/index.html
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
        - name: dt
          mountPath: /etc/localtime
[root@master231 persistentvolumes]# kubectl apply -f 02-deploy-pvc.yaml

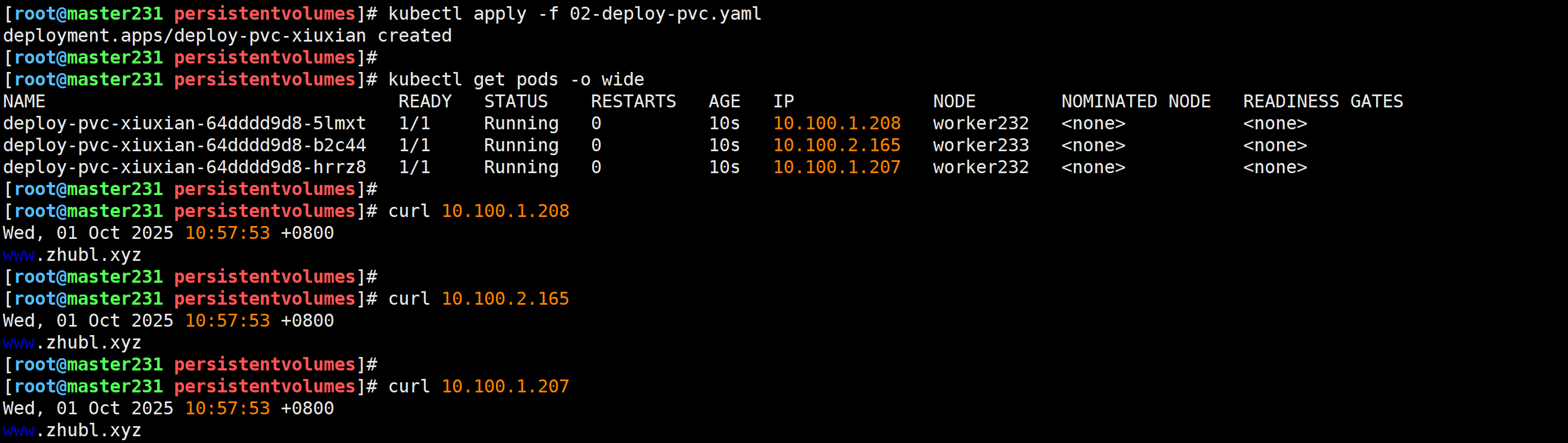

deployment.apps/deploy-pvc-xiuxian created

[root@master231 persistentvolumes]# kubectl get pods -o wide

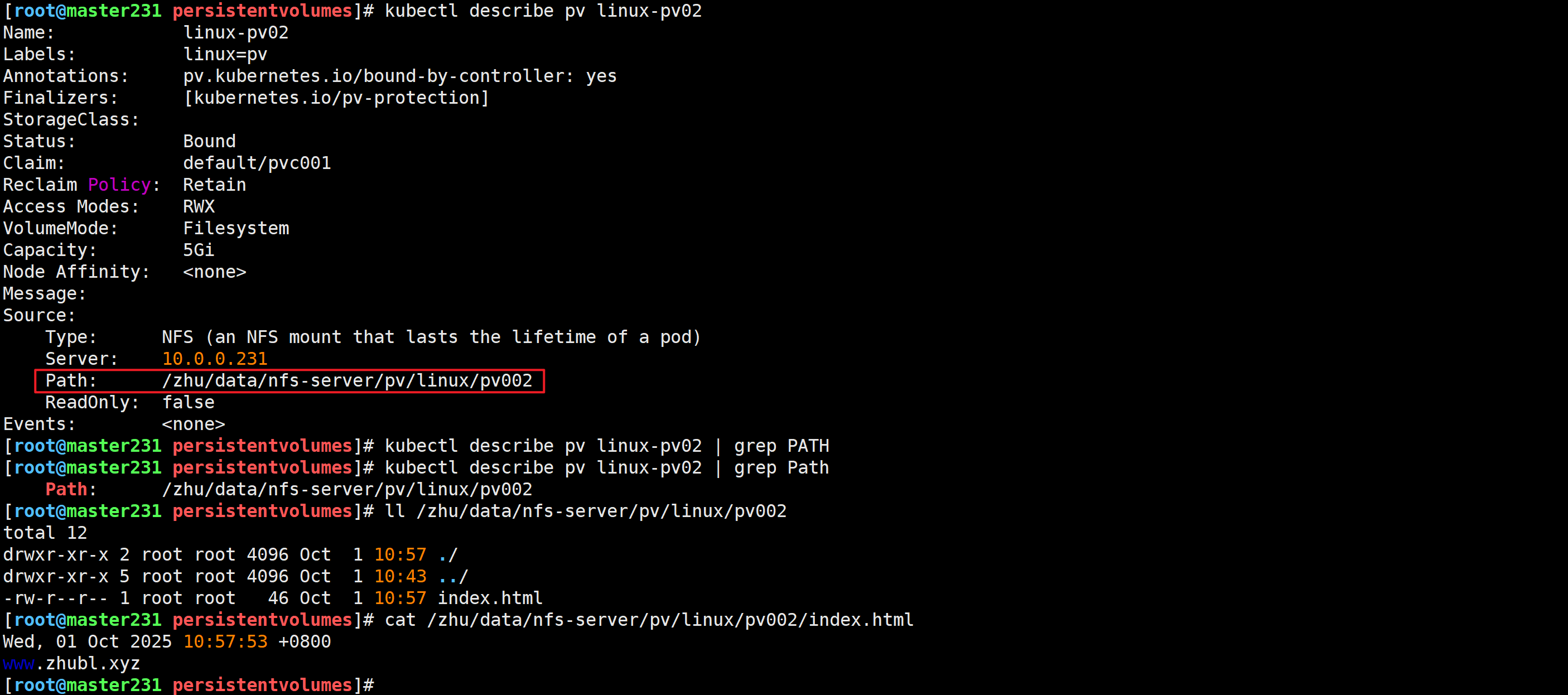

Find the backend pv starting from a Pod

Find the name of the pvc

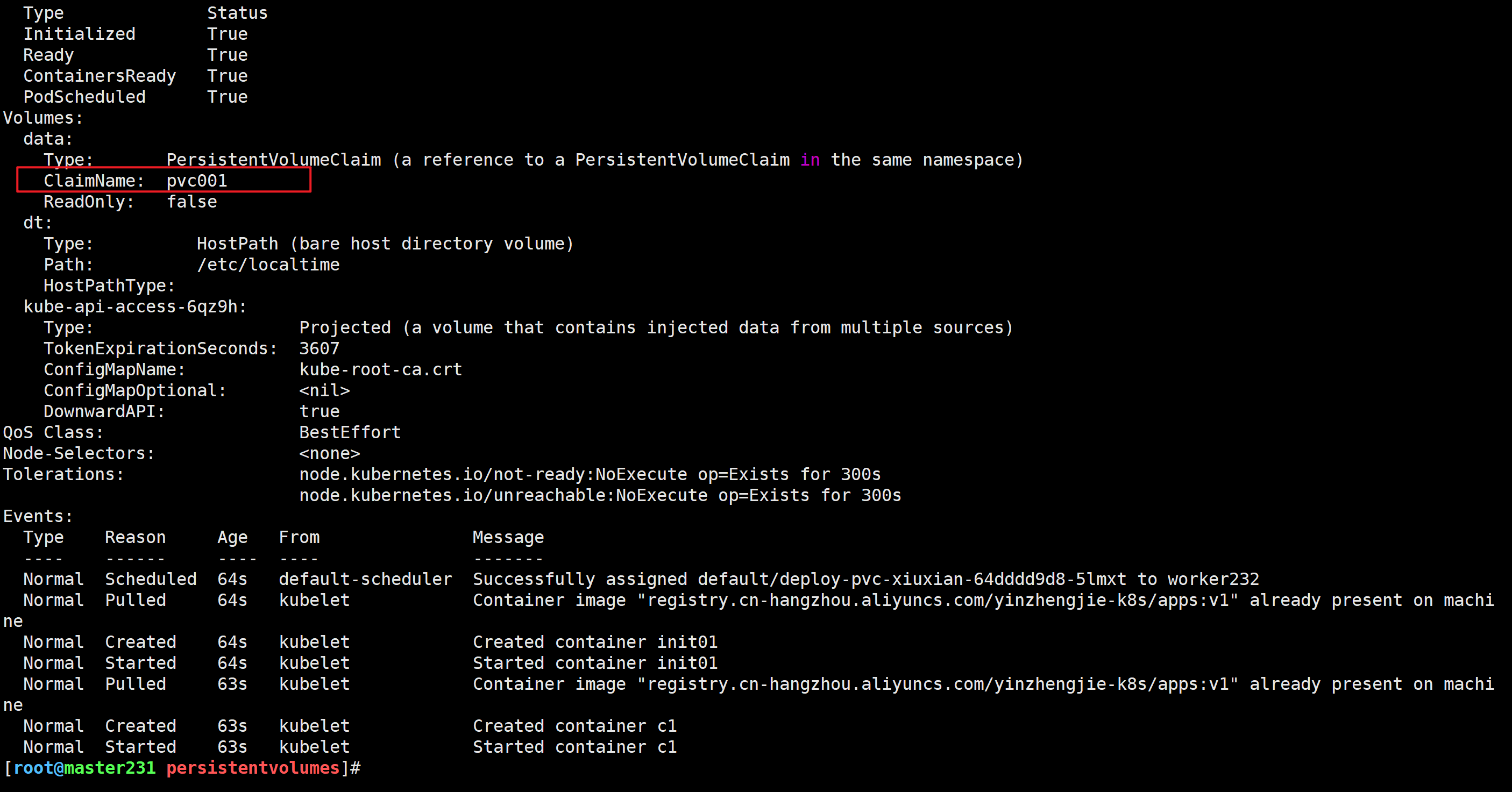

[root@master231 persistentvolumes]# kubectl describe pod deploy-pvc-xiuxian-64dddd9d8-5lmxt

Name: deploy-pvc-xiuxian-86f6d8d54d-24b28

Namespace: default

...

Volumes:
  data:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  pvc001
    ReadOnly:   false

...

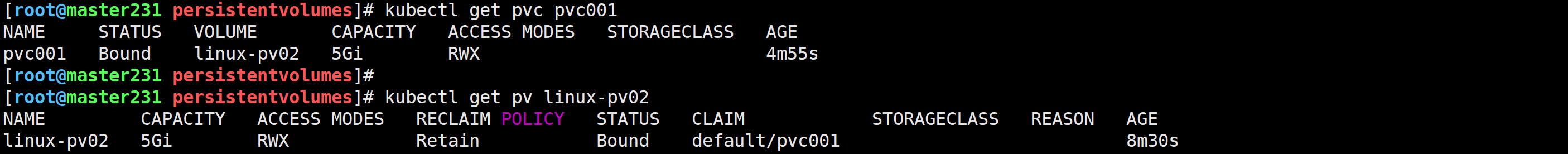

Find the pv bound to the pvc

[root@master231 persistentvolumes]# kubectl get pvc pvc001

[root@master231 persistentvolumes]# kubectl get pv linux-pv02

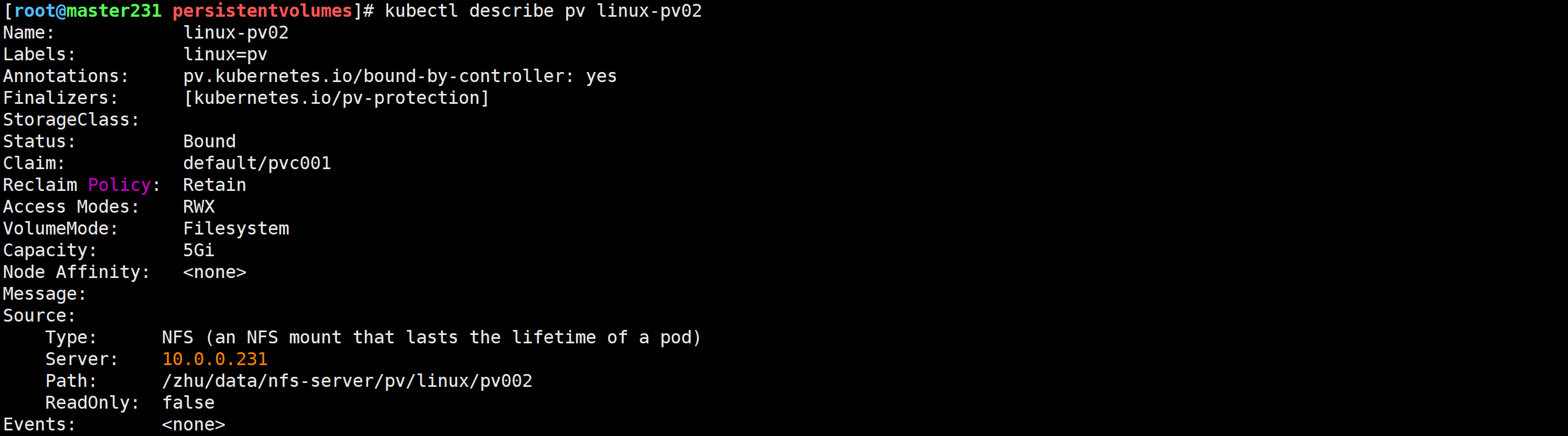

View the pv details

[root@master231 persistentvolumes]# kubectl describe pv linux-pv02

Verify the data

[root@master231 persistentvolumes]# kubectl describe pv linux-pv02 | grep Path
    Path:      /zhu/data/nfs-server/pv/linux/pv002

[root@master231 persistentvolumes]# cat /zhu/data/nfs-server/pv/linux/pv002/index.html

Wed, 01 Oct 2025 10:57:53 +0800

www.zhubl.xyz

🌟Differences between CSI, CRI and CNI

CSI:

Container Storage Interface: the storage interface for containers. K8S can store data in any storage system whose driver implements this interface.

CRI:

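Container Runtime Interface: the runtime interface for containers. k8s can use any container runtime that implements this interface as its underlying container management tool.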

CNI:

Container Network Interface: the network interface for containers. Any CNI plugin that implements this interface can provide networking for k8s Pods.

🌟Implementing a dynamic storage class with the NFS CSI driver v4.9.0

Recommended reading:

https://github.com/kubernetes-csi/csi-driver-nfs/blob/master/docs/install-csi-driver-v4.9.0.md

https://kubernetes.io/docs/concepts/storage/storage-classes/#nfs

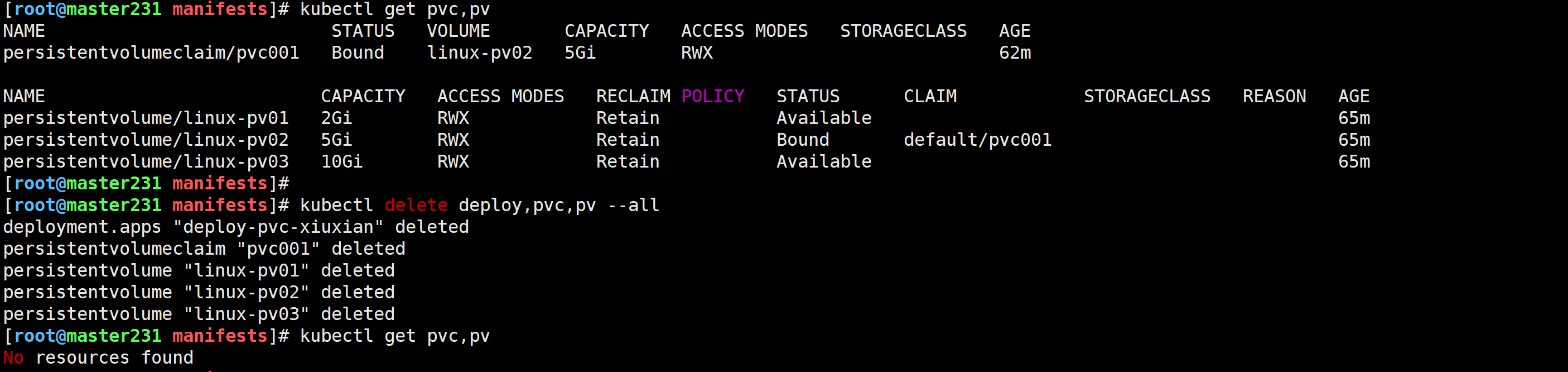

Delete the resources

[root@master231 manifests]# kubectl delete deploy,pvc,pv --all

deployment.apps "deploy-pvc-xiuxian" deleted

persistentvolumeclaim "pvc001" deleted

persistentvolume "linux-pv01" deleted

persistentvolume "linux-pv02" deleted

persistentvolume "linux-pv03" deleted

Import the images (on all nodes)

docker load -i csi-nfs-node-v4.9.0.tar.gz

docker load -i csi-nfs-controller-v4.9.0.tar.gz

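Get the code (either clone the repository or extract the v4.9.0 source tarball; the steps below continue from the extracted tarball)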

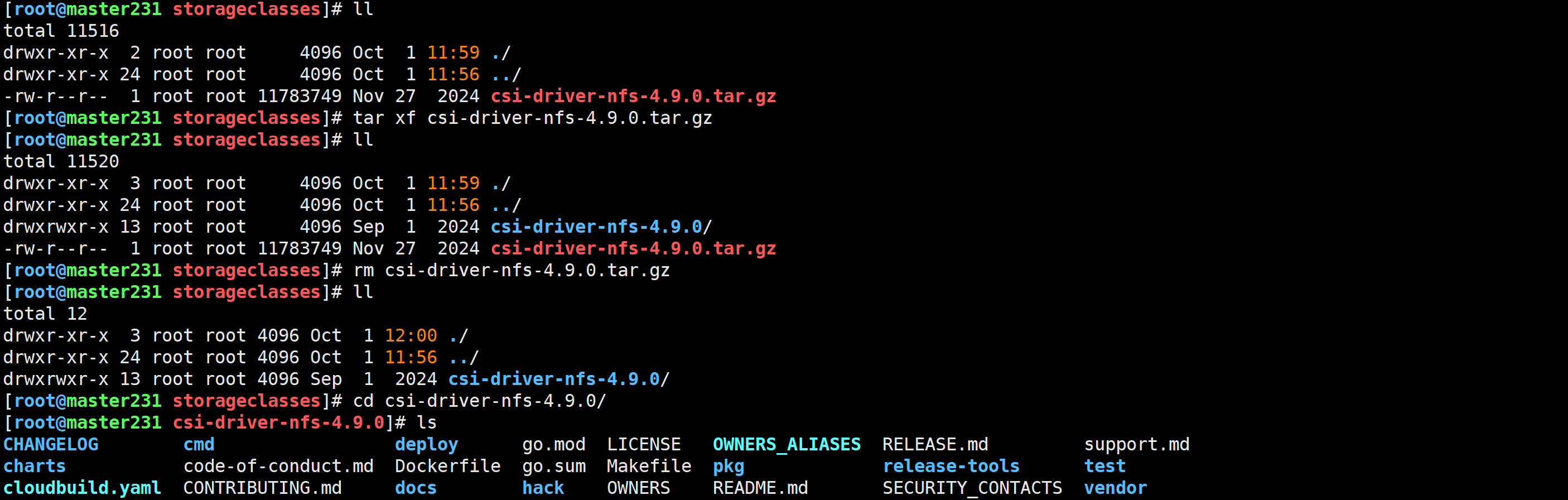

[root@master231 manifests]# cd storageclasses/

[root@master231 storageclasses]# cd ../persistentvolumes/

[root@master231 storageclasses]# git clone https://github.com/kubernetes-csi/csi-driver-nfs.git

[root@master231 storageclasses]# tar xf csi-driver-nfs-4.9.0.tar.gz

[root@master231 storageclasses]# cd csi-driver-nfs-4.9.0/

Install the NFS CSI driver

[root@master231 csi-driver-nfs-4.9.0]# ./deploy/install-driver.sh v4.9.0 local

use local deploy

Installing NFS CSI driver, version: v4.9.0 ...

serviceaccount/csi-nfs-controller-sa created

serviceaccount/csi-nfs-node-sa created

clusterrole.rbac.authorization.k8s.io/nfs-external-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/nfs-csi-provisioner-binding created

csidriver.storage.k8s.io/nfs.csi.k8s.io created

deployment.apps/csi-nfs-controller created

daemonset.apps/csi-nfs-node created

NFS CSI driver installed successfully.

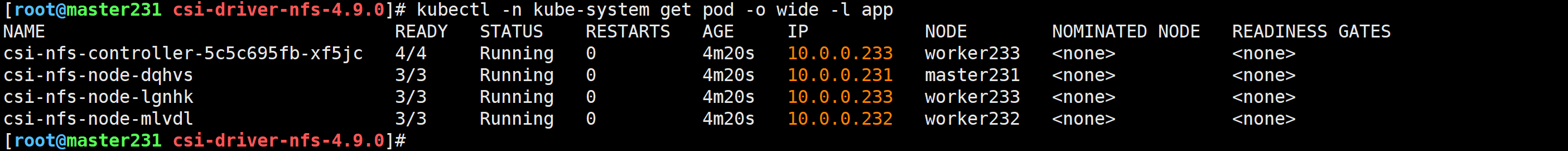

Verify the installation

[root@master231 csi-driver-nfs-4.9.0]# kubectl -n kube-system get pod -o wide -l app

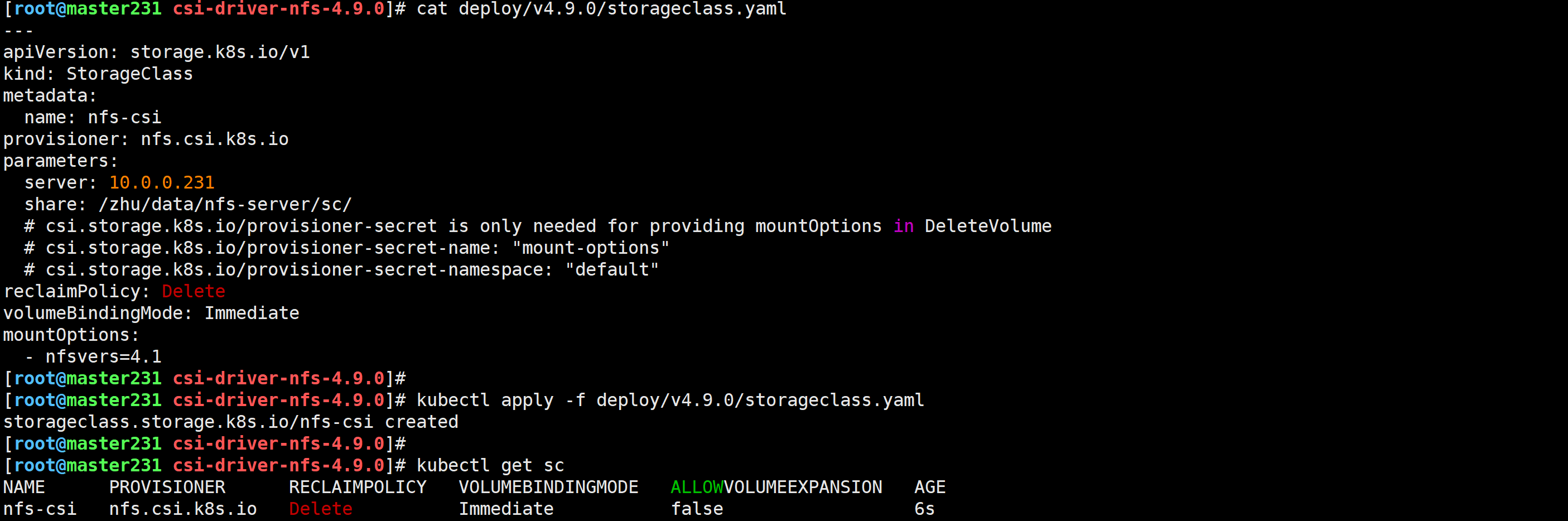

Create the storage class

[root@master231 csi-driver-nfs-4.9.0]# mkdir /zhu/data/nfs-server/sc/

[root@master231 csi-driver-nfs-4.9.0]# cat deploy/v4.9.0/storageclass.yaml

---

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-csi
provisioner: nfs.csi.k8s.io
parameters:
  server: 10.0.0.231
  share: /zhu/data/nfs-server/sc/
  # csi.storage.k8s.io/provisioner-secret is only needed for providing mountOptions in DeleteVolume
  # csi.storage.k8s.io/provisioner-secret-name: "mount-options"
  # csi.storage.k8s.io/provisioner-secret-namespace: "default"
reclaimPolicy: Delete
volumeBindingMode: Immediate
mountOptions:
  - nfsvers=4.1
[root@master231 csi-driver-nfs-4.9.0]# kubectl apply -f deploy/v4.9.0/storageclass.yaml

storageclass.storage.k8s.io/nfs-csi created

[root@master231 csi-driver-nfs-4.9.0]#

[root@master231 csi-driver-nfs-4.9.0]# kubectl get sc

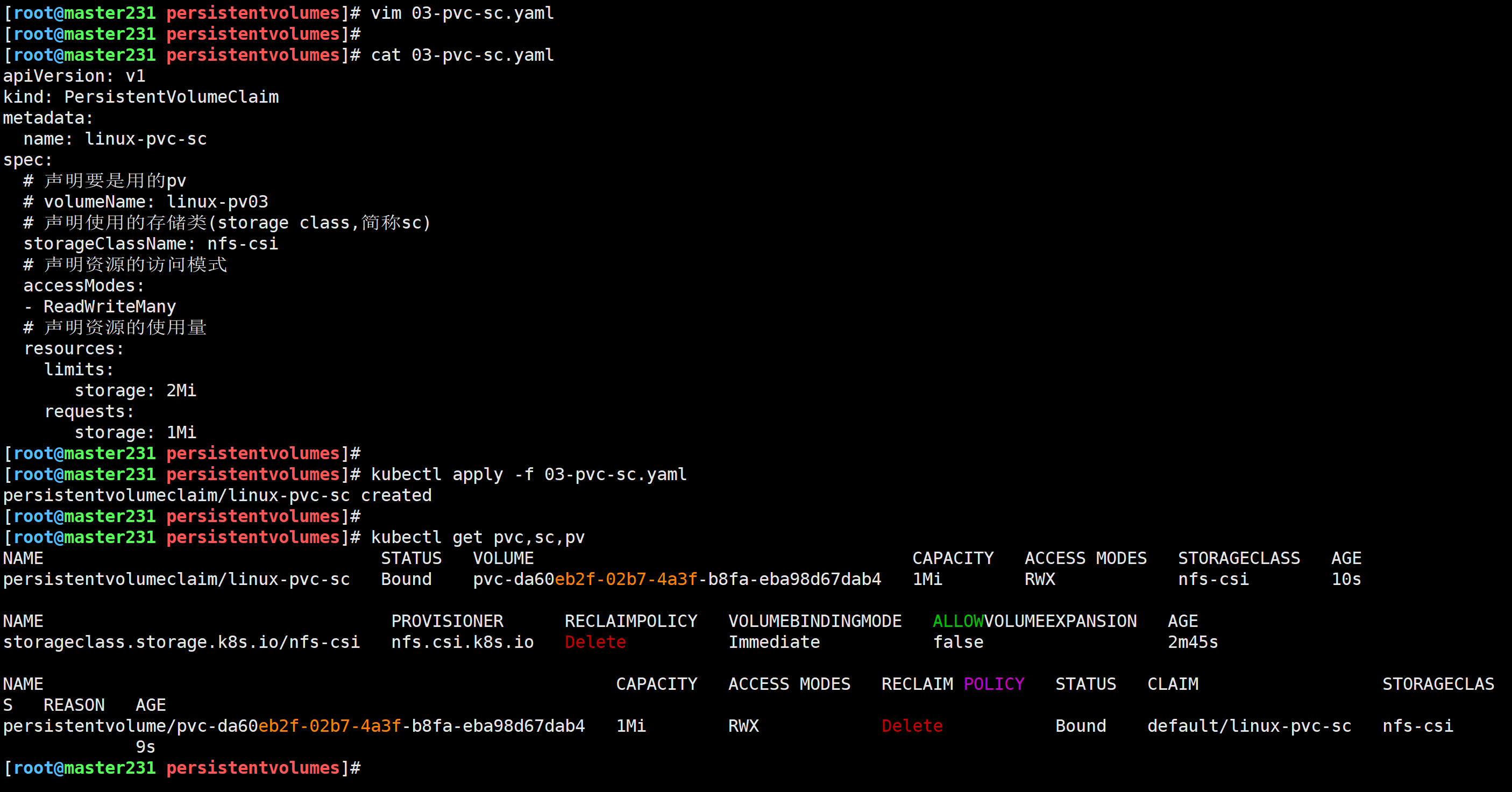

Create a pvc for testing

[root@master231 persistentvolumes]# cat 03-pvc-sc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: linux-pvc-sc
spec:
  # Declare which pv to use
  # volumeName: linux-pv03
  # Declare the storage class (StorageClass, sc for short) to use
  storageClassName: nfs-csi
  # Access modes requested
  accessModes:
  - ReadWriteMany
  # Amount of storage requested
  resources:
    limits:
      storage: 2Mi
    requests:
      storage: 1Mi

[root@master231 persistentvolumes]# kubectl apply -f 03-pvc-sc.yaml

persistentvolumeclaim/linux-pvc-sc created

[root@master231 persistentvolumes]#

[root@master231 persistentvolumes]# kubectl get pvc,sc,pv

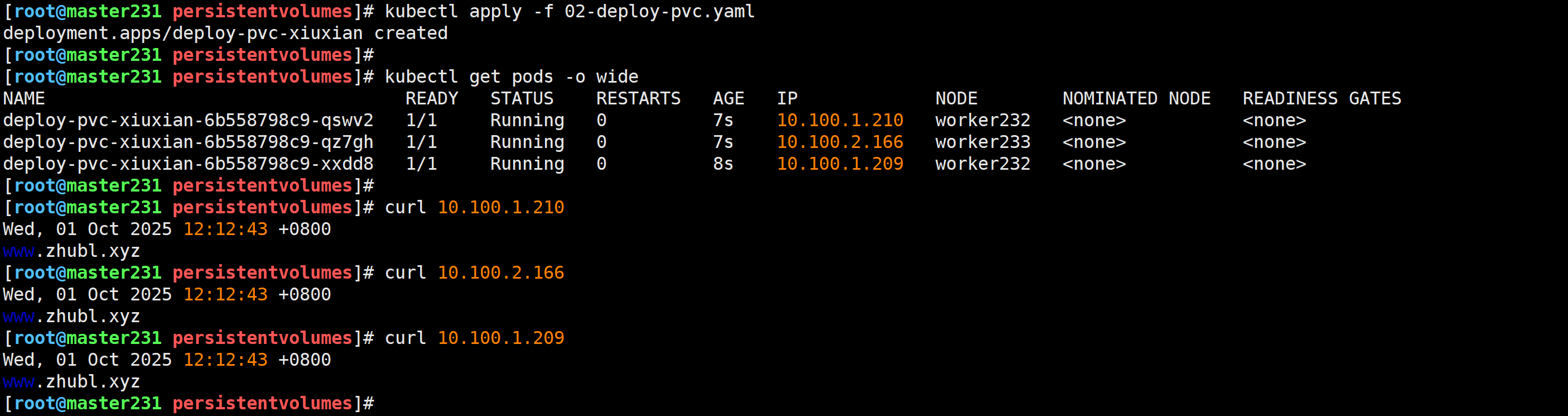

Reference the pvc from the pod

[root@master231 persistentvolumes]# cat 02-deploy-pvc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-pvc-xiuxian
spec:
  replicas: 3
  selector:
    matchLabels:
      apps: v1
  template:
    metadata:
      labels:
        apps: v1
    spec:
      volumes:
      - name: data
        # The volume type is pvc
        persistentVolumeClaim:
          # Name of the pvc
          #claimName: pvc001
          claimName: linux-pvc-sc
      - name: dt
        hostPath:
          path: /etc/localtime
      initContainers:
      - name: init01
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        volumeMounts:
        - name: data
          mountPath: /zhu
        - name: dt
          mountPath: /etc/localtime
        command:
        - /bin/sh
        - -c
        - date -R > /zhu/index.html ; echo www.zhubl.xyz >> /zhu/index.html
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
        - name: dt
          mountPath: /etc/localtime
[root@master231 persistentvolumes]# kubectl apply -f 02-deploy-pvc.yaml

deployment.apps/deploy-pvc-xiuxian created

[root@master231 persistentvolumes]#

[root@master231 persistentvolumes]# kubectl get pods -o wide

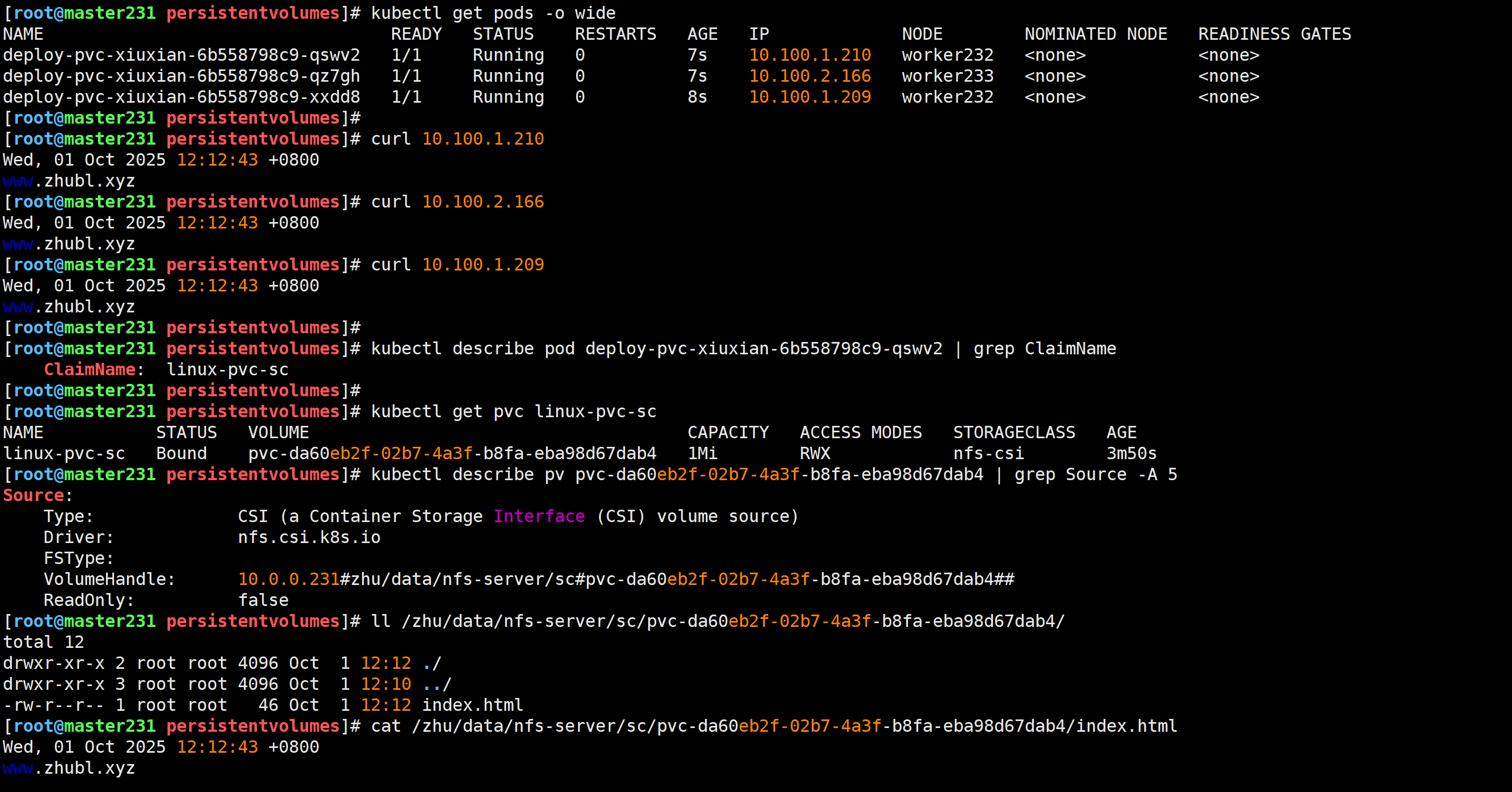

Verify the pod's backend storage data

[root@master231 persistentvolumes]# kubectl describe pod deploy-pvc-xiuxian-6b558798c9-qswv2 | grep ClaimName
    ClaimName:  linux-pvc-sc

[root@master231 persistentvolumes]# kubectl get pvc linux-pvc-sc

[root@master231 persistentvolumes]# kubectl describe pv pvc-da60eb2f-02b7-4a3f-b8fa-eba98d67dab4 | grep Source -A 5

[root@master231 persistentvolumes]# cat /zhu/data/nfs-server/sc/pvc-da60eb2f-02b7-4a3f-b8fa-eba98d67dab4/index.html

Wed, 01 Oct 2025 12:12:43 +0800

www.zhubl.xyz

🌟Configuring a default storage class in K8S and defining multiple storage classes

Tips:

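- 1. K8S can define multiple storage classes;

- 2. If a pvc does not specify an sc, the default storage class is used;

- 3. Only one storage class should be marked as the default in a k8s cluster;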
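The tips above boil down to a small decision: an explicit storageClassName wins, otherwise the single default class is used. The sketch below models that decision. The `name:isDefault` encoding, the `UNSET` placeholder and `resolve_sc` are hypothetical; when more than one class is marked default, this sketch simply refuses, which is a simplification of how the API server actually handles it.

```bash
#!/usr/bin/env bash
# Illustration only: which StorageClass a new PVC ends up with.
# StorageClasses are given as "name:isDefault" (isDefault is true/false).
# Pass the literal word UNSET when the PVC omits storageClassName.
resolve_sc() {
  local requested=$1
  shift
  if [[ $requested != UNSET ]]; then
    # an explicit name wins; "" would mean "no class" (static PVs only)
    echo "$requested"
    return 0
  fi
  local sc name is_default defaults=()
  for sc in "$@"; do
    IFS=: read -r name is_default <<< "$sc"
    [[ $is_default == true ]] && defaults+=("$name")
  done
  if (( ${#defaults[@]} == 1 )); then
    echo "${defaults[0]}"
  elif (( ${#defaults[@]} == 0 )); then
    echo "no default StorageClass" >&2
    return 1
  else
    # simplification: refuse instead of applying the API server's own handling
    echo "more than one default: ${defaults[*]}" >&2
    return 1
  fi
}

resolve_sc UNSET nfs-csi:false sc-xixi:false sc-haha:true    # prints sc-haha
resolve_sc nfs-csi nfs-csi:false sc-xixi:false sc-haha:true  # prints nfs-csi
```

The class set used in the demo mirrors the state reached later in this section, where nfs-csi is no longer the default and sc-haha carries `is-default-class: "true"`.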

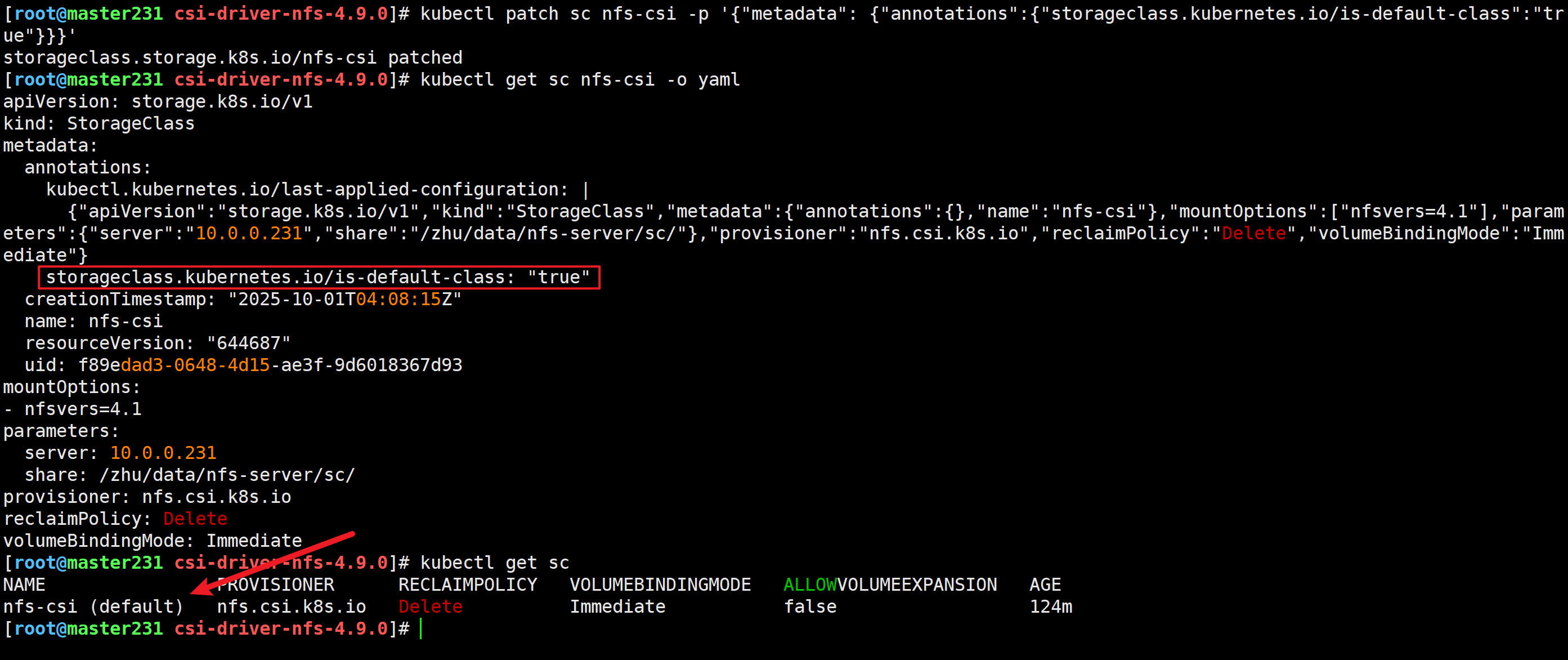

Set the default storage class imperatively

[root@master231 csi-driver-nfs-4.9.0]# kubectl patch sc nfs-csi -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

[root@master231 csi-driver-nfs-4.9.0]# kubectl get sc

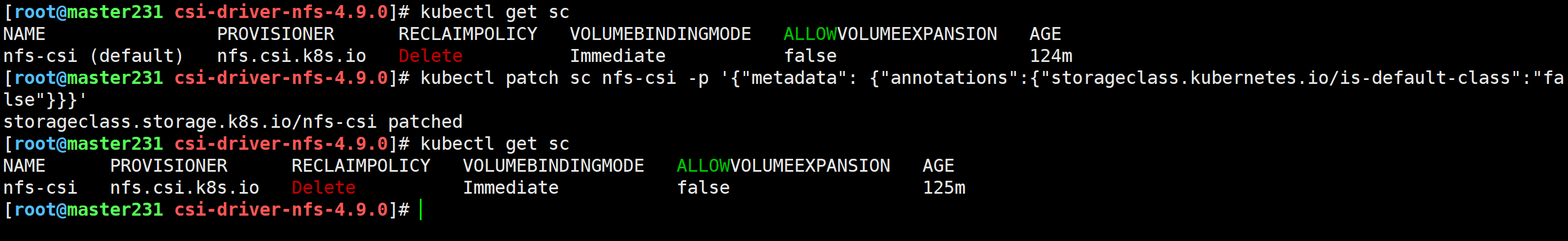

Unset the default storage class imperatively

[root@master231 csi-driver-nfs-4.9.0]# kubectl patch sc nfs-csi -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}'

storageclass.storage.k8s.io/nfs-csi patched

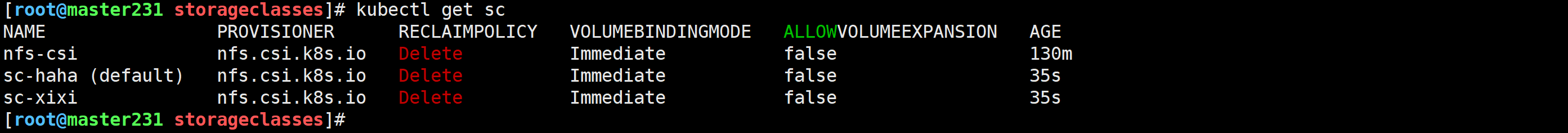

Define multiple storage classes declaratively

[root@master231 storageclasses]# cat sc-multiple.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: sc-xixi
  # Resource annotations (generally used to attach configuration information to a resource)
  annotations:
    storageclass.kubernetes.io/is-default-class: "false"
provisioner: nfs.csi.k8s.io
parameters:
  server: 10.0.0.231
  share: /zhu/data/nfs-server/sc-xixi
reclaimPolicy: Delete
volumeBindingMode: Immediate
mountOptions:
  - nfsvers=4.1
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: sc-haha
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: nfs.csi.k8s.io
parameters:
  server: 10.0.0.231
  share: /zhu/data/nfs-server/sc-haha

[root@master231 storageclasses]#

[root@master231 storageclasses]# kubectl apply -f sc-multiple.yaml

storageclass.storage.k8s.io/sc-xixi created

storageclass.storage.k8s.io/sc-haha created

Prepare the directories

[root@master231 storageclasses]# mkdir -pv /zhu/data/nfs-server/sc-{xixi,haha}

mkdir: created directory '/zhu/data/nfs-server/sc-xixi'

mkdir: created directory '/zhu/data/nfs-server/sc-haha'

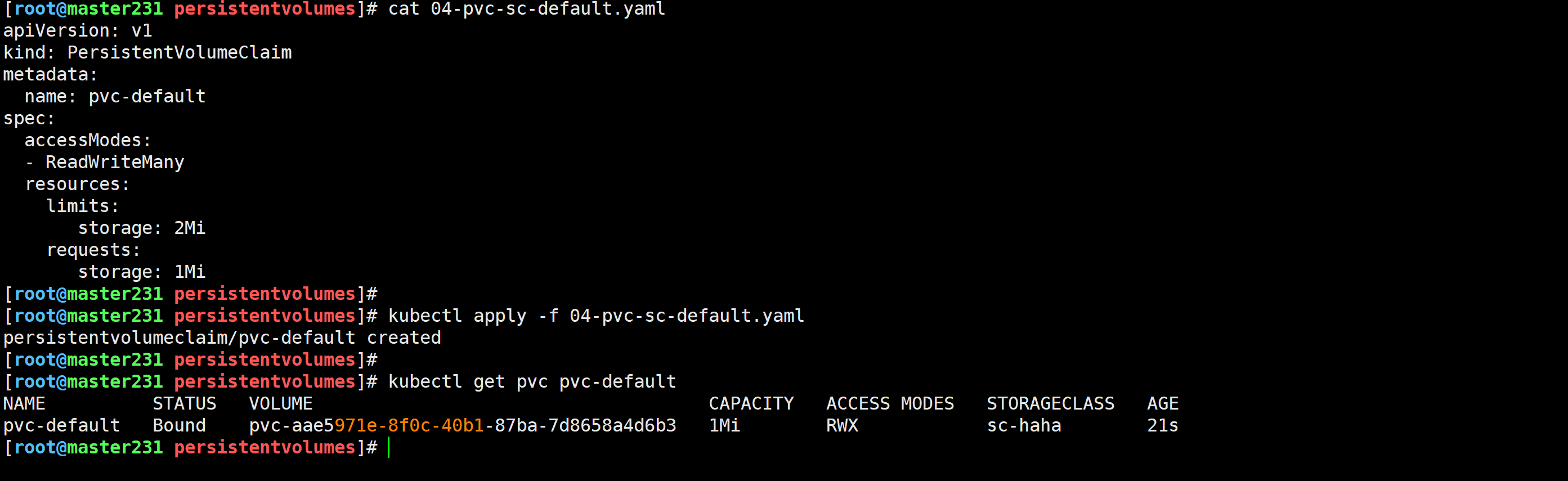

Test

[root@master231 persistentvolumes]# cat 04-pvc-sc-default.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-default
spec:
  accessModes:
  - ReadWriteMany
  resources:
    limits:
      storage: 2Mi
    requests:
      storage: 1Mi

Reference the pvc from the pod

[root@master231 persistentvolumes]# cat 02-deploy-pvc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-pvc-xiuxian
spec:
  replicas: 3
  selector:
    matchLabels:
      apps: v1
  template:
    metadata:
      labels:
        apps: v1
    spec:
      volumes:
      - name: data
        # The volume type is pvc
        persistentVolumeClaim:
          # Name of the pvc
          #claimName: pvc001
          #claimName: linux-pvc-sc
          claimName: pvc-default
      - name: dt
        hostPath:
          path: /etc/localtime
      initContainers:
      - name: init01
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        volumeMounts:
        - name: data
          mountPath: /zhu
        - name: dt
          mountPath: /etc/localtime
        command:
        - /bin/sh
        - -c
        - date -R > /zhu/index.html ; echo www.zhubl.xyz >> /zhu/index.html
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
        - name: dt
          mountPath: /etc/localtime

[root@master231 persistentvolumes]#

[root@master231 persistentvolumes]#

[root@master231 persistentvolumes]# kubectl apply -f 02-deploy-pvc.yaml

deployment.apps/deploy-pvc-xiuxian configured

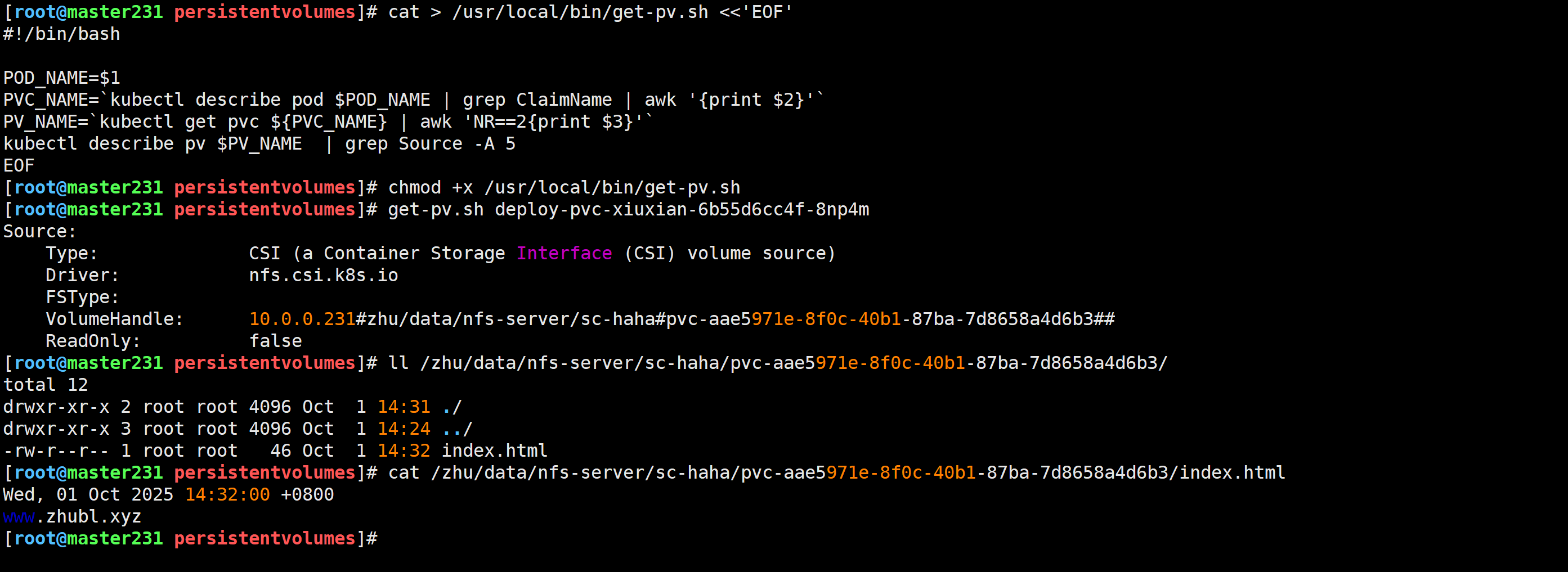

Verify the backend storage

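[root@master231 persistentvolumes]# cat > /usr/local/bin/get-pv.sh <<'EOF'
#!/bin/bash
# Usage: get-pv.sh <pod-name>
# Prints the backend storage (Source section) of the pv behind the pod's pvc.
POD_NAME=$1
[ -n "$POD_NAME" ] || { echo "Usage: $0 <pod-name>" >&2; exit 1; }
# Name of the pvc referenced by the pod
PVC_NAME=$(kubectl get pod "$POD_NAME" -o jsonpath='{.spec.volumes[*].persistentVolumeClaim.claimName}')
# Name of the pv bound to that pvc
PV_NAME=$(kubectl get pvc "$PVC_NAME" -o jsonpath='{.spec.volumeName}')
kubectl describe pv "$PV_NAME" | grep Source -A 5
EOF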

[root@master231 persistentvolumes]# chmod +x /usr/local/bin/get-pv.sh

[root@master231 persistentvolumes]# get-pv.sh deploy-pvc-xiuxian-6b55d6cc4f-8np4m

🌟The StatefulSet (sts) controller in practice

Reference links:

https://kubernetes.io/zh-cn/docs/concepts/workloads/controllers/statefulset/

https://kubernetes.io/zh-cn/docs/tutorials/stateful-application/basic-stateful-set/

StatefulSets overview

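Take Nginx as an example: when any Nginx instance fails, the recovery logic is always the same, namely creating a new Pod replica. Services like this are called stateless services.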

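Take MySQL master-slave replication as an example: if either the master or the slave fails, the recovery logic is different in each case. Services like this are called stateful services.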

Challenges faced by stateful services:

(1) start/stop order; (2) each pod instance stores its data independently; (3) a fixed IP address or hostname is required;

StatefulSets are generally used for stateful services. They are valuable for applications that need one or more of the following:

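(1) Stable, unique network identifiers. (2) Stable, dedicated persistent storage. (3) Ordered, graceful deployment and scaling. (4) Ordered, automated rolling updates.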

Stable network identity:

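Under the hood this is a Service resource that has no VIP; such a Service is called a headless service. The headless service maintains each Pod's network identity (a stable DNS name), while the StatefulSet assigns each Pod a numeric ordinal and deploys the Pods in ordinal order. In summary, a headless service setup requires two things: (1) set the svc's clusterIP field to None, i.e. "clusterIP: None"; (2) set the sts resource's serviceName field to the name of the headless service;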

Dedicated storage:

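A StatefulSet's volumes are created from volumeClaimTemplates ("volume claim templates"). When the sts creates PVCs from volumeClaimTemplates, it creates a uniquely numbered pvc for each Pod, and each pvc is bound to its own pv, which guarantees that every Pod has independent storage.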
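The "uniquely numbered pvc" follows a fixed pattern: `<template-name>-<statefulset-name>-<ordinal>`. A minimal sketch that prints the names to expect; the function name `sts_pvc_names` is hypothetical, and the arguments reuse the `data` template of the sts-xiuxian manifest in the dedicated-storage section.

```bash
#!/usr/bin/env bash
# Illustration: names of the PVCs a StatefulSet creates from volumeClaimTemplates.
# Pattern: <template-name>-<statefulset-name>-<ordinal>
sts_pvc_names() {
  local template=$1 sts=$2 replicas=$3 i
  for (( i = 0; i < replicas; i++ )); do
    echo "${template}-${sts}-${i}"
  done
}

sts_pvc_names data sts-xiuxian 3
# data-sts-xiuxian-0
# data-sts-xiuxian-1
# data-sts-xiuxian-2
```

Because the name depends only on the ordinal, a recreated Pod such as sts-xiuxian-1 reattaches to data-sts-xiuxian-1 and therefore to the same pv.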

🌟StatefulSets controller - unique network identity via a headless service

Write the manifest

[root@master231 statefulsets]# cat 01-statefulset-headless-network.yaml

apiVersion: v1
kind: Service
metadata:
  name: svc-headless
spec:
  ports:
  - port: 80
    name: web
  # Setting clusterIP to None makes this a headless service, i.e. the svc is not assigned a VIP.
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: sts-xiuxian
spec:
  selector:
    matchLabels:
      app: nginx
  # Declare the headless service
  serviceName: svc-headless
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        imagePullPolicy: Always

[root@master231 statefulsets]#

Create the resources

[root@master231 statefulsets]# kubectl apply -f 01-statefulset-headless-network.yaml

service/svc-headless created

statefulset.apps/sts-xiuxian created

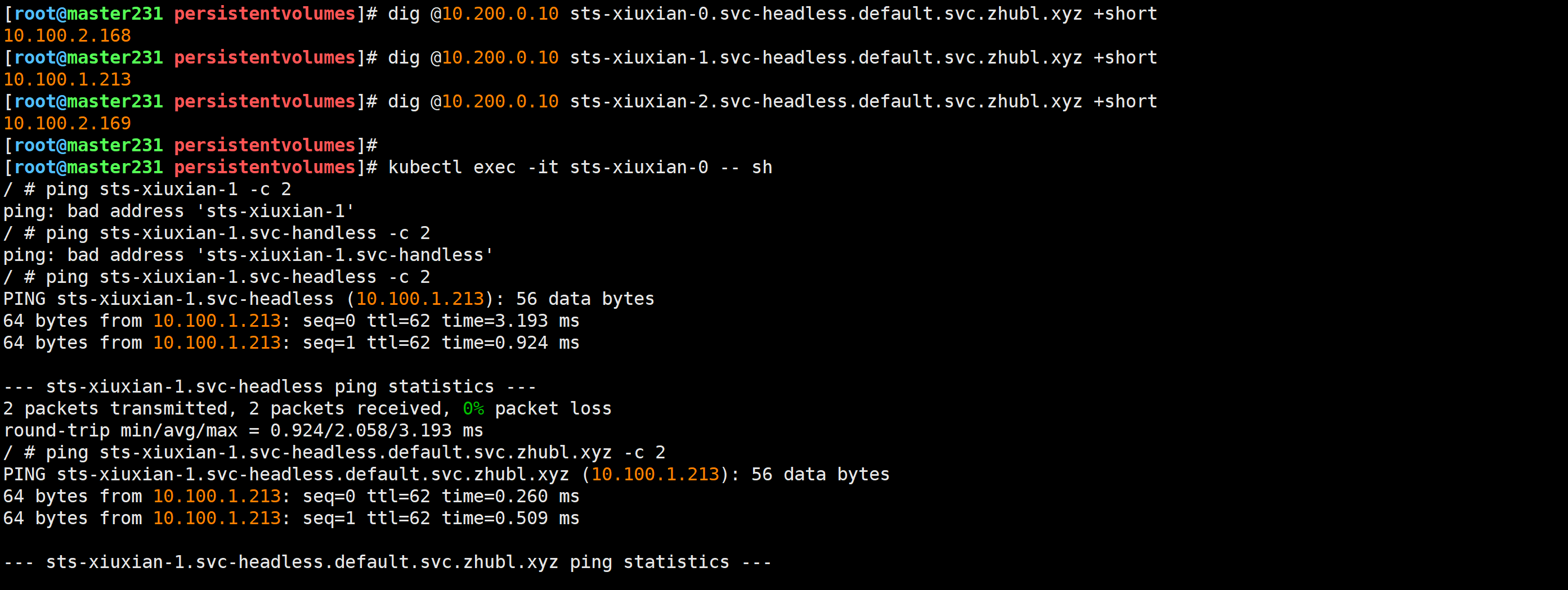

Verify

[root@master231 persistentvolumes]# dig @10.200.0.10 sts-xiuxian-0.svc-headless.default.svc.zhubl.xyz +short

10.100.2.168

[root@master231 persistentvolumes]# dig @10.200.0.10 sts-xiuxian-1.svc-headless.default.svc.zhubl.xyz +short

10.100.1.213

[root@master231 persistentvolumes]# dig @10.200.0.10 sts-xiuxian-2.svc-headless.default.svc.zhubl.xyz +short

10.100.2.169

[root@master231 persistentvolumes]#
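The names queried with dig above follow the pattern `<pod>.<service>.<namespace>.svc.<cluster-domain>`; the cluster domain in this lab is zhubl.xyz (the Kubernetes default is cluster.local). A small sketch that generates them; `sts_fqdns` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Illustration: per-Pod DNS names behind a headless Service.
# Pattern: <pod>.<service>.<namespace>.svc.<cluster-domain>
sts_fqdns() {
  local sts=$1 svc=$2 ns=$3 domain=$4 replicas=$5 i
  for (( i = 0; i < replicas; i++ )); do
    echo "${sts}-${i}.${svc}.${ns}.svc.${domain}"
  done
}

# Prints the three names that were resolved with dig above
sts_fqdns sts-xiuxian svc-headless default zhubl.xyz 3
```

On the cluster the output can be fed straight to dig, e.g. `for n in $(sts_fqdns sts-xiuxian svc-headless default zhubl.xyz 3); do dig @10.200.0.10 "$n" +short; done`.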

[root@master231 persistentvolumes]# kubectl exec -it sts-xiuxian-0 -- sh

/ # ping sts-xiuxian-1 -c 2
/ # ping sts-xiuxian-1.svc-headless -c 2
/ # ping sts-xiuxian-1.svc-headless.default.svc.zhubl.xyz -c 2

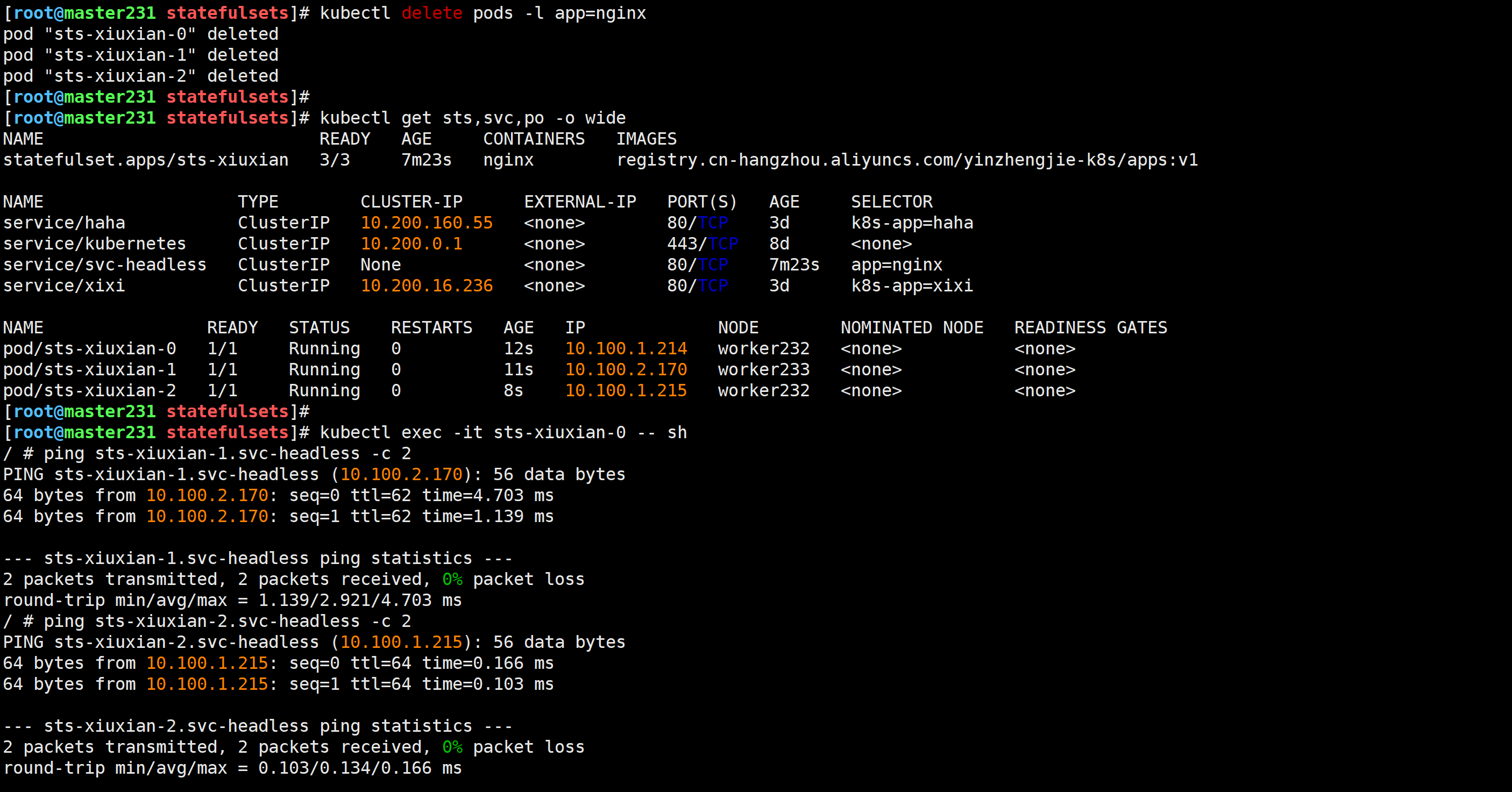

[root@master231 statefulsets]# kubectl delete pods -l app=nginx

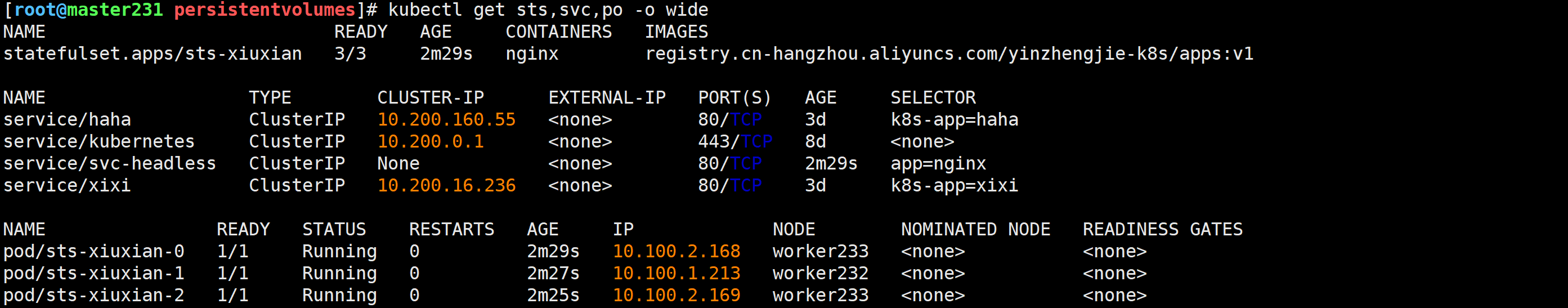

[root@master231 statefulsets]# kubectl get sts,svc,po -o wide

[root@master231 statefulsets]# kubectl exec -it sts-xiuxian-0 -- sh

/ # ping sts-xiuxian-1.svc-headless -c 2

/ # ping sts-xiuxian-2.svc-headless -c 2

🌟StatefulSets controller - dedicated storage

Write the manifest

[root@master231 statefulsets]# cat 02-statefulset-headless-volumeClaimTemplates.yaml

apiVersion: v1
kind: Service
metadata:
  name: svc-headless
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: sts-xiuxian
spec:
  selector:
    matchLabels:
      app: nginx
  serviceName: svc-headless
  replicas: 3
  # Volume claim template: a unique pvc is created for each Pod and attached to it!
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      # Use our own dynamic storage class, i.e. the sc resource.
      storageClassName: "sc-xixi"
      resources:
        requests:
          storage: 2Gi
  template:
    metadata:
      labels:
        app: nginx
    spec:
      initContainers:
      - name: i1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        env:
        - name: pod_name
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: pod_ip
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        volumeMounts:
        - name: data
          mountPath: /zhu
        command:
        - /bin/sh
        - -c
        - echo "${pod_name} --- ${pod_ip}" > /zhu/index.html
      containers:
      - name: nginx
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        ports:
        - containerPort: 80
          name: xiuxian
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
---
apiVersion: v1
kind: Service
metadata:
  name: svc-sts-xiuxian
spec:
  type: ClusterIP
  clusterIP: 10.200.0.200
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: xiuxian

Create the resources

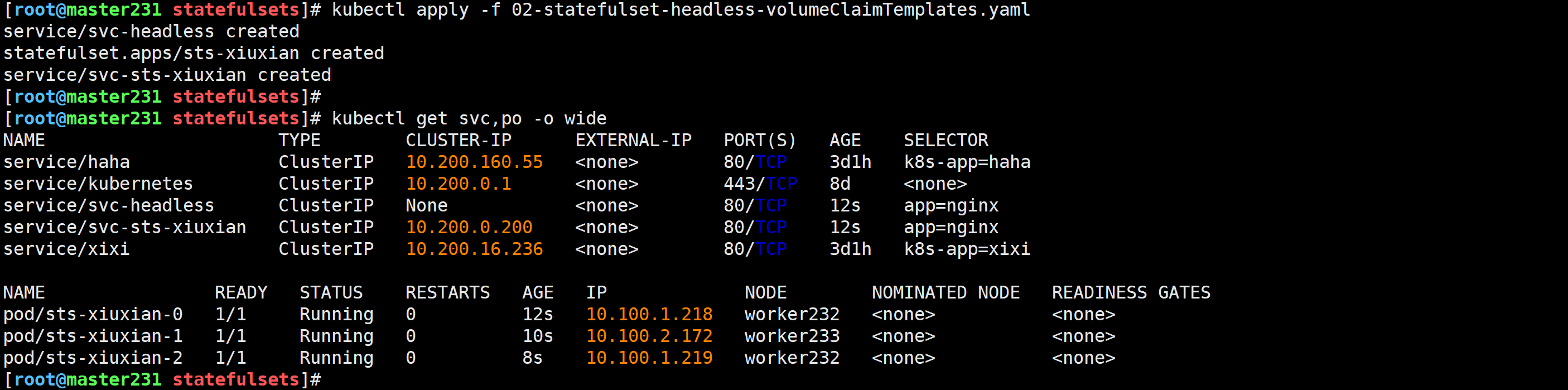

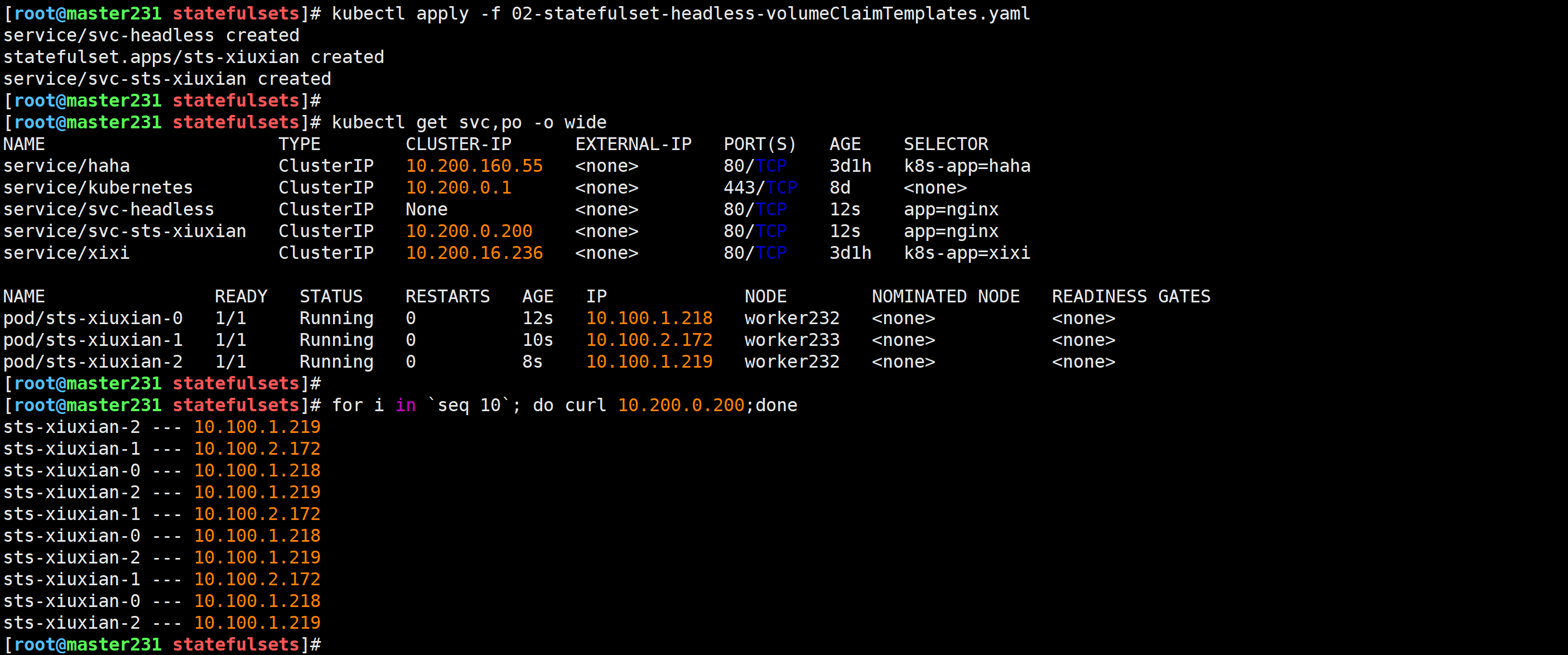

[root@master231 statefulsets]# kubectl apply -f 02-statefulset-headless-volumeClaimTemplates.yaml

service/svc-headless created

statefulset.apps/sts-xiuxian created

service/svc-sts-xiuxian created

Test

[root@master231 statefulsets]# for i in `seq 10`; do curl 10.200.0.200;done

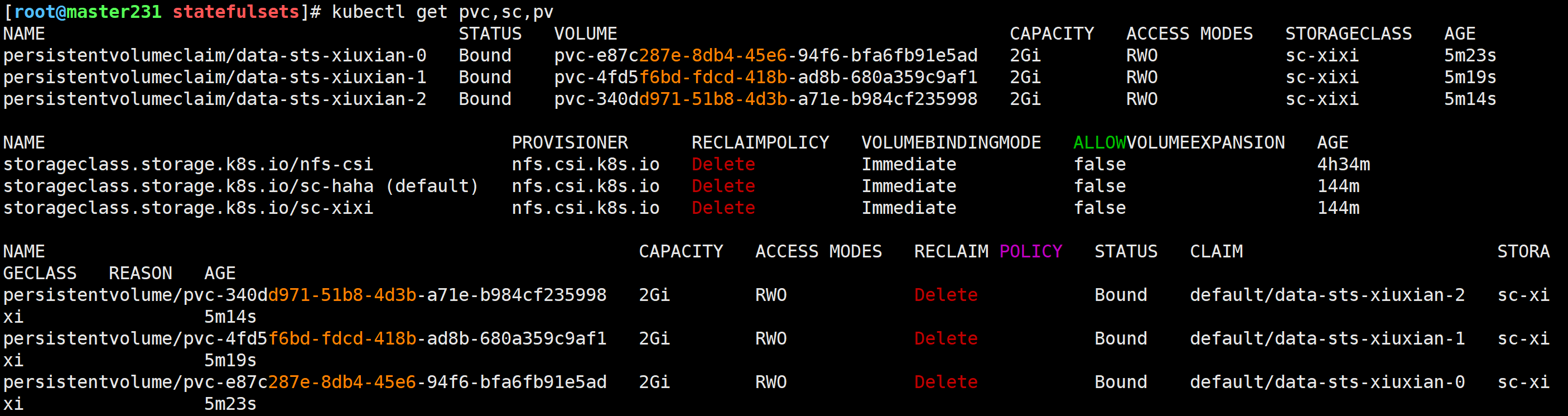

Verify the backend storage

kubectl get pvc -l app=nginx | awk 'NR>=2{print $3}' | xargs kubectl describe pv | grep VolumeHandle

🌟Partitioned (staged) updates of an sts

Write the manifest

[root@master231 statefulsets]# cat 03-statefuleset-updateStrategy-partition.yaml

apiVersion: v1
kind: Service
metadata:
  name: sts-headless
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: web
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: sts-web
spec:
  # Update strategy of the sts
  updateStrategy:
    # Rolling update settings
    rollingUpdate:
      # Pods whose ordinal is less than 3 are not updated; in other words, only Pods with ordinal >= 3 are updated!
      partition: 3
  selector:
    matchLabels:
      app: web
  serviceName: sts-headless
  replicas: 5
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: c1
        ports:
        - containerPort: 80
          name: xiuxian
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
---
apiVersion: v1
kind: Service
metadata:
  name: sts-svc
spec:
  selector:
    app: web
  ports:
  - port: 80
    targetPort: xiuxian

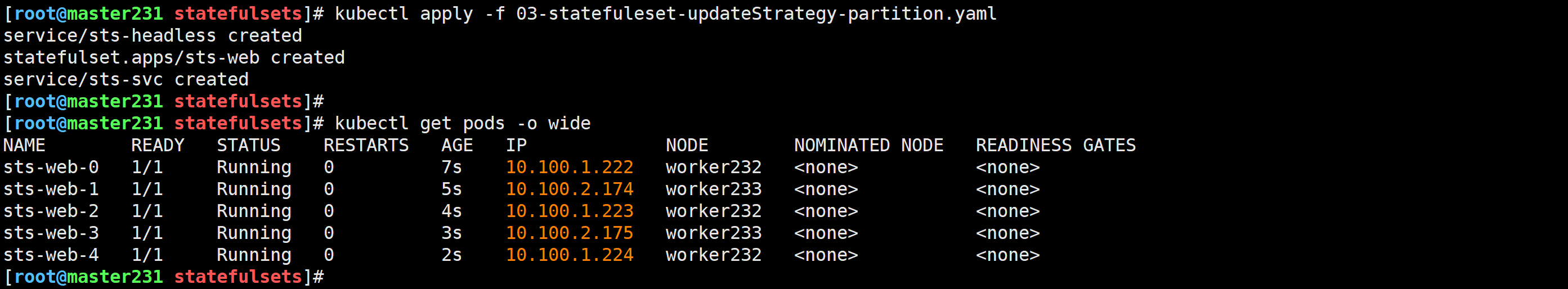

Create the resources

[root@master231 statefulsets]# kubectl apply -f 03-statefuleset-updateStrategy-partition.yaml

service/sts-headless created

statefulset.apps/sts-web created

service/sts-svc created

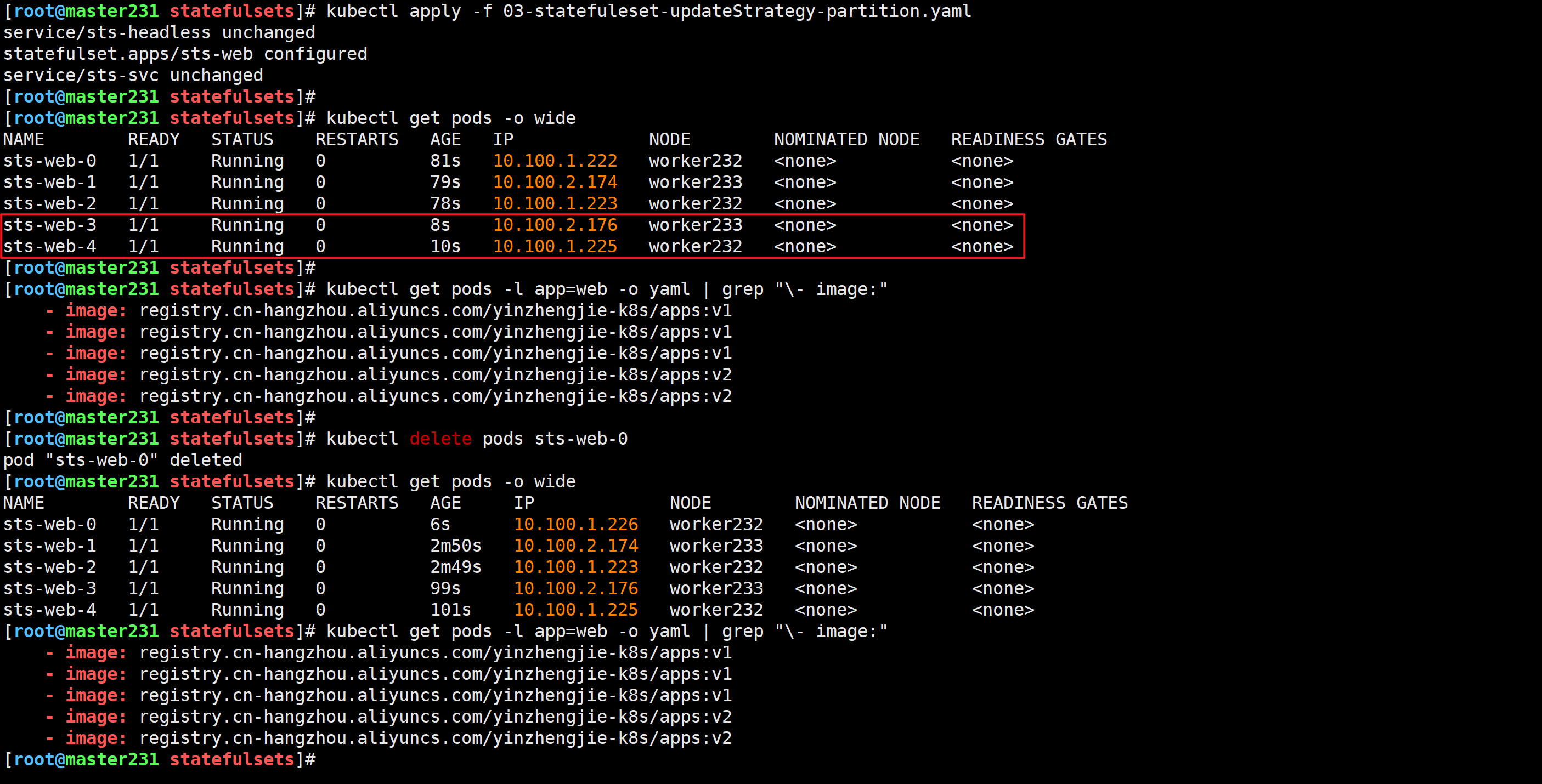

Verify

[root@master231 statefulsets]# kubectl get pods -l app=web -o yaml | grep "\- image:"

[root@master231 statefulsets]# grep hangzhou 03-statefuleset-updateStrategy-partition.yaml

[root@master231 statefulsets]# sed -i '/hangzhou/s#v1#v2#' 03-statefuleset-updateStrategy-partition.yaml

[root@master231 statefulsets]# kubectl apply -f 03-statefuleset-updateStrategy-partition.yaml

service/sts-headless unchanged

statefulset.apps/sts-web configured

service/sts-svc unchanged
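With `replicas: 5` and `partition: 3`, the apply above moves only sts-web-3 and sts-web-4 to v2; sts-web-0 to sts-web-2 keep v1. The sketch below just computes that set of ordinals; `updated_ordinals` is a hypothetical helper, and the real controller additionally rolls the selected Pods one at a time in reverse ordinal order.

```bash
#!/usr/bin/env bash
# Illustration: which Pods a RollingUpdate with a partition touches.
# Pods with ordinal >= partition get the new template; the rest keep the old one.
updated_ordinals() {
  local replicas=$1 partition=$2 i out=()
  for (( i = partition; i < replicas; i++ )); do
    out+=("$i")
  done
  echo "${out[*]}"
}

updated_ordinals 5 3   # prints: 3 4
```

To roll out the rest once the canary Pods look healthy, lower the partition, for example `kubectl patch sts sts-web -p '{"spec":{"updateStrategy":{"rollingUpdate":{"partition":0}}}}'`.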

🌟Deploying a ZooKeeper cluster with an sts

Reference link: https://kubernetes.io/zh-cn/docs/tutorials/stateful-application/zookeeper/

Import the image on all K8S nodes

kubernetes-zookeeper-v1.0-3.4.10.tar.gz

docker load -i kubernetes-zookeeper-v1.0-3.4.10.tar.gz

Write the manifest

[root@master231 statefulsets]# cat 04-sts-zookeeper-cluster.yaml

apiVersion: v1
kind: Service
metadata:
  name: zk-hs
  labels:
    app: zk
spec:
  ports:
  - port: 2888
    name: server
  - port: 3888
    name: leader-election
  clusterIP: None
  selector:
    app: zk
---
apiVersion: v1
kind: Service
metadata:
  name: zk-cs
  labels:
    app: zk
spec:
  ports:
  - port: 2181
    name: client
  selector:
    app: zk
---
apiVersion: policy/v1
# This kind limits how many Pods of a group may be down at the same time due to voluntary disruptions, i.e. the maximum number of unavailable Pods.
# In general, a distributed cluster that must tolerate N failures needs at least 2N+1 Pods.
kind: PodDisruptionBudget
metadata:
  name: zk-pdb
spec:
  # Match the Pods
  selector:
    matchLabels:
      app: zk
  # Maximum number of unavailable Pods. This means the zookeeper cluster needs at least 2*1+1 = 3 Pods.
  maxUnavailable: 1
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zk
spec:
  selector:
    matchLabels:
      app: zk
  serviceName: zk-hs
  replicas: 3
  updateStrategy:
    type: RollingUpdate
  podManagementPolicy: OrderedReady
  template:
    metadata:
      labels:
        app: zk
    spec:
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: "app"
                operator: In
                values:
                - zk
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: kubernetes-zookeeper
        imagePullPolicy: IfNotPresent
        image: "registry.k8s.io/kubernetes-zookeeper:1.0-3.4.10"
        resources:
          requests:
            memory: "1Gi"
            cpu: "0.5"
        ports:
        - containerPort: 2181
          name: client
        - containerPort: 2888
          name: server
        - containerPort: 3888
          name: leader-election
        command:
        - sh
        - -c
        - "start-zookeeper \
          --servers=3 \
          --data_dir=/var/lib/zookeeper/data \
          --data_log_dir=/var/lib/zookeeper/data/log \
          --conf_dir=/opt/zookeeper/conf \
          --client_port=2181 \
          --election_port=3888 \
          --server_port=2888 \
          --tick_time=2000 \
          --init_limit=10 \
          --sync_limit=5 \
          --heap=512M \
          --max_client_cnxns=60 \
          --snap_retain_count=3 \
          --purge_interval=12 \
          --max_session_timeout=40000 \
          --min_session_timeout=4000 \
          --log_level=INFO"
        readinessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        volumeMounts:
        - name: datadir
          mountPath: /var/lib/zookeeper
      securityContext:
        runAsUser: 1000
        fsGroup: 1000
  volumeClaimTemplates:
  - metadata:
      name: datadir
    spec:
      # No storageClassName: the default storage class is used
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 10Gi
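The 2N+1 rule in the PodDisruptionBudget comments comes from majority quorum: an ensemble of n members needs floor(n/2)+1 of them alive, so it survives floor((n-1)/2) failures. With 3 members that is exactly 1, which is why `maxUnavailable: 1` never lets a voluntary disruption break quorum. A tiny calculator, with hypothetical function names:

```bash
#!/usr/bin/env bash
# Majority-quorum arithmetic for an ensemble of n members (illustration).
quorum()             { echo $(( $1 / 2 + 1 )); }    # members that must stay up
tolerated_failures() { echo $(( ($1 - 1) / 2 )); }  # members that may be lost

for n in 3 4 5; do
  echo "n=$n quorum=$(quorum "$n") tolerated_failures=$(tolerated_failures "$n")"
done
# n=3 quorum=2 tolerated_failures=1
# n=4 quorum=3 tolerated_failures=1
# n=5 quorum=3 tolerated_failures=2
```

The n=4 row shows why odd sizes are preferred: a fourth member raises the quorum without tolerating any extra failure.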

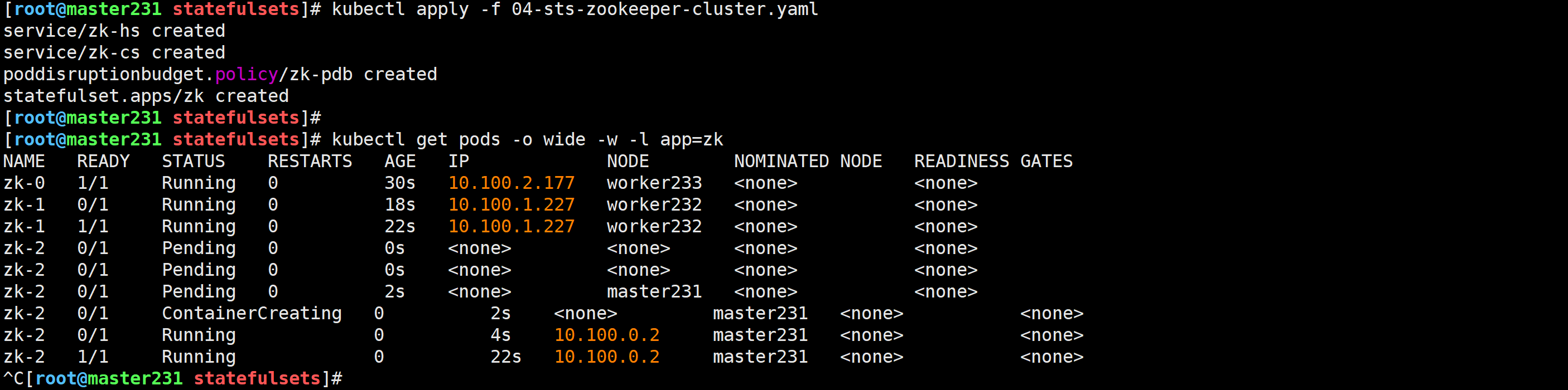

Watch the Pod status in real time

[root@master231 statefulsets]# kubectl apply -f 04-sts-zookeeper-cluster.yaml

service/zk-hs created

service/zk-cs created

poddisruptionbudget.policy/zk-pdb created

statefulset.apps/zk created

[root@master231 statefulsets]#

[root@master231 statefulsets]# kubectl get pods -o wide -w -l app=zk

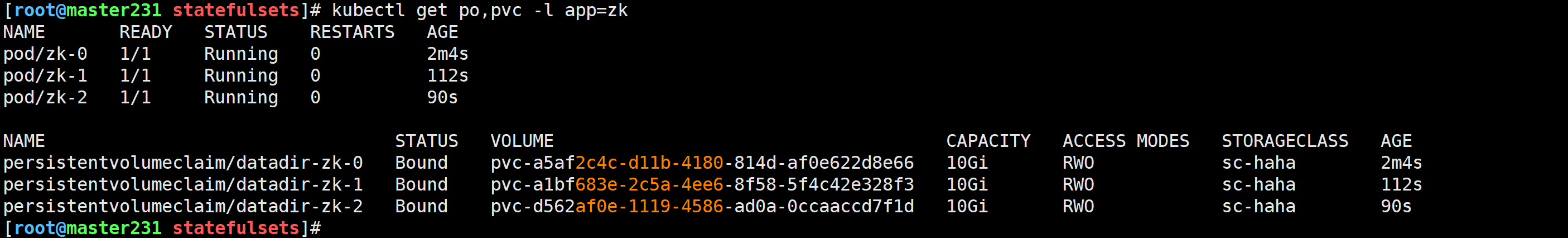

Check the backend storage

[root@master231 statefulsets]# kubectl get po,pvc -l app=zk

Verify that the cluster is healthy

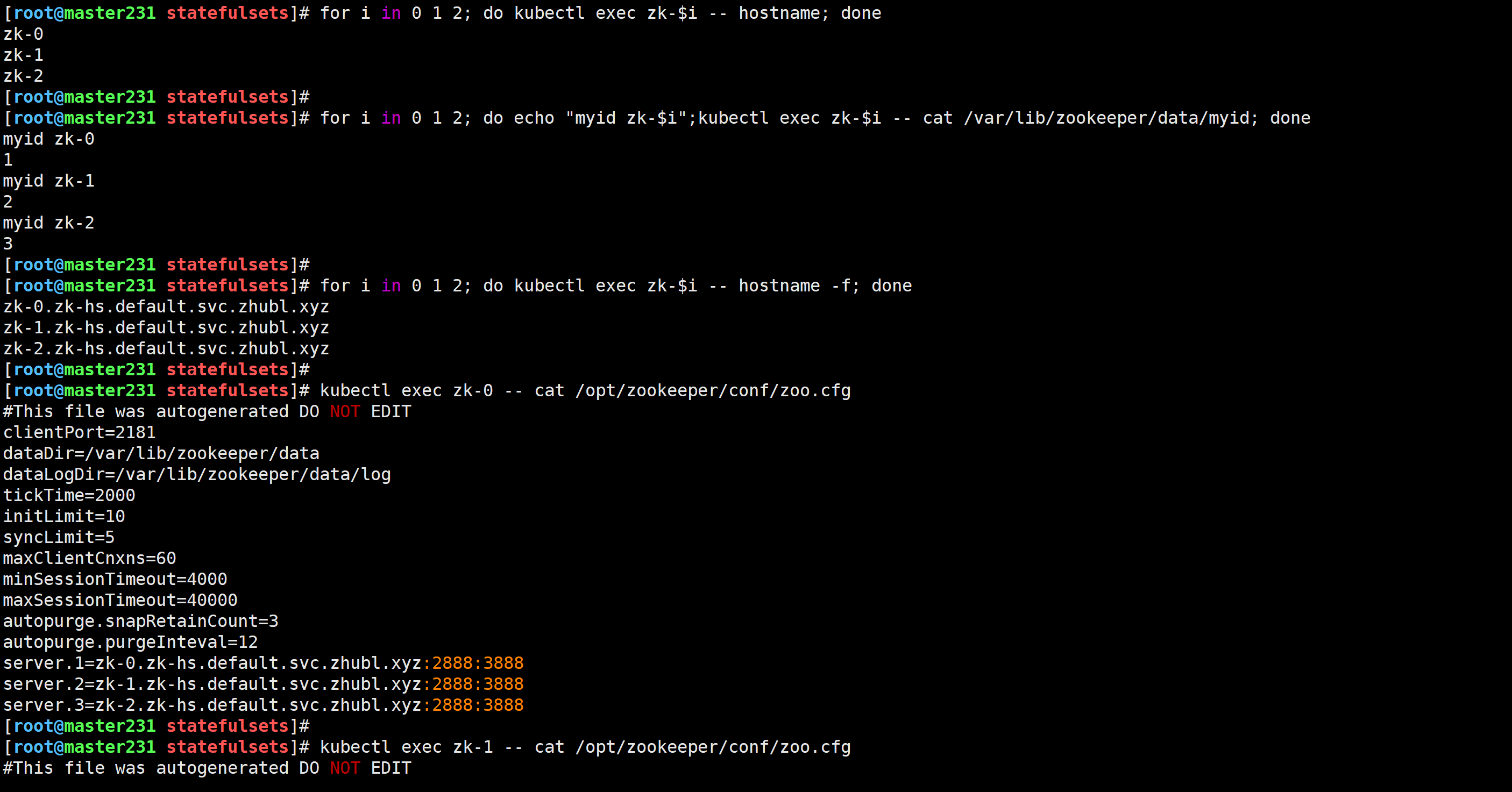

[root@master231 statefulsets]# for i in 0 1 2; do kubectl exec zk-$i -- hostname; done

[root@master231 statefulsets]# for i in 0 1 2; do echo "myid zk-$i";kubectl exec zk-$i -- cat /var/lib/zookeeper/data/myid; done

[root@master231 statefulsets]# for i in 0 1 2; do kubectl exec zk-$i -- hostname -f; done
[root@master231 statefulsets]# kubectl exec zk-0 -- cat /opt/zookeeper/conf/zoo.cfg

#This file was autogenerated DO NOT EDIT

clientPort=2181

dataDir=/var/lib/zookeeper/data

dataLogDir=/var/lib/zookeeper/data/log

tickTime=2000

initLimit=10

syncLimit=5

maxClientCnxns=60

minSessionTimeout=4000

maxSessionTimeout=40000

autopurge.snapRetainCount=3

autopurge.purgeInteval=12

server.1=zk-0.zk-hs.default.svc.zhubl.xyz:2888:3888

server.2=zk-1.zk-hs.default.svc.zhubl.xyz:2888:3888

server.3=zk-2.zk-hs.default.svc.zhubl.xyz:2888:3888

[root@master231 statefulsets]#

[root@master231 statefulsets]# kubectl exec zk-1 -- cat /opt/zookeeper/conf/zoo.cfg

#This file was autogenerated DO NOT EDIT

clientPort=2181

dataDir=/var/lib/zookeeper/data

dataLogDir=/var/lib/zookeeper/data/log

tickTime=2000

initLimit=10

syncLimit=5

maxClientCnxns=60

minSessionTimeout=4000

maxSessionTimeout=40000

autopurge.snapRetainCount=3

autopurge.purgeInteval=12

server.1=zk-0.zk-hs.default.svc.zhubl.xyz:2888:3888

server.2=zk-1.zk-hs.default.svc.zhubl.xyz:2888:3888

server.3=zk-2.zk-hs.default.svc.zhubl.xyz:2888:3888

[root@master231 statefulsets]#

[root@master231 statefulsets]# kubectl exec zk-2 -- cat /opt/zookeeper/conf/zoo.cfg

#This file was autogenerated DO NOT EDIT

clientPort=2181

dataDir=/var/lib/zookeeper/data

dataLogDir=/var/lib/zookeeper/data/log

tickTime=2000

initLimit=10

syncLimit=5

maxClientCnxns=60

minSessionTimeout=4000

maxSessionTimeout=40000

autopurge.snapRetainCount=3

autopurge.purgeInteval=12

server.1=zk-0.zk-hs.default.svc.zhubl.xyz:2888:3888

server.2=zk-1.zk-hs.default.svc.zhubl.xyz:2888:3888

server.3=zk-2.zk-hs.default.svc.zhubl.xyz:2888:3888
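All three zoo.cfg files list the same servers, and server.N always points at zk-(N-1): each member's myid is its StatefulSet ordinal plus one. The sketch below reproduces that mapping; it is not the start-zookeeper script itself, and `zk_myid` / `zk_servers` are hypothetical helpers with the ports taken from the manifest.

```bash
#!/usr/bin/env bash
# Sketch (not the start-zookeeper script) of how the StatefulSet ordinal
# maps to the ZooKeeper myid and to the server.N lines in zoo.cfg.

# zk_myid <hostname>: myid is the trailing ordinal plus one (zk-0 -> 1)
zk_myid() {
  local ord=${1##*-}
  echo $(( ord + 1 ))
}

# zk_servers <sts> <headless-svc> <namespace> <cluster-domain> <replicas>
zk_servers() {
  local sts=$1 svc=$2 ns=$3 domain=$4 replicas=$5 i
  for (( i = 0; i < replicas; i++ )); do
    echo "server.$(( i + 1 ))=${sts}-${i}.${svc}.${ns}.svc.${domain}:2888:3888"
  done
}

zk_myid zk-2                              # prints 3
zk_servers zk zk-hs default zhubl.xyz 3   # the three server.N lines shown above
```

This is why the stable Pod names from the headless service matter: the ensemble membership is written in terms of those DNS names, not Pod IPs.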

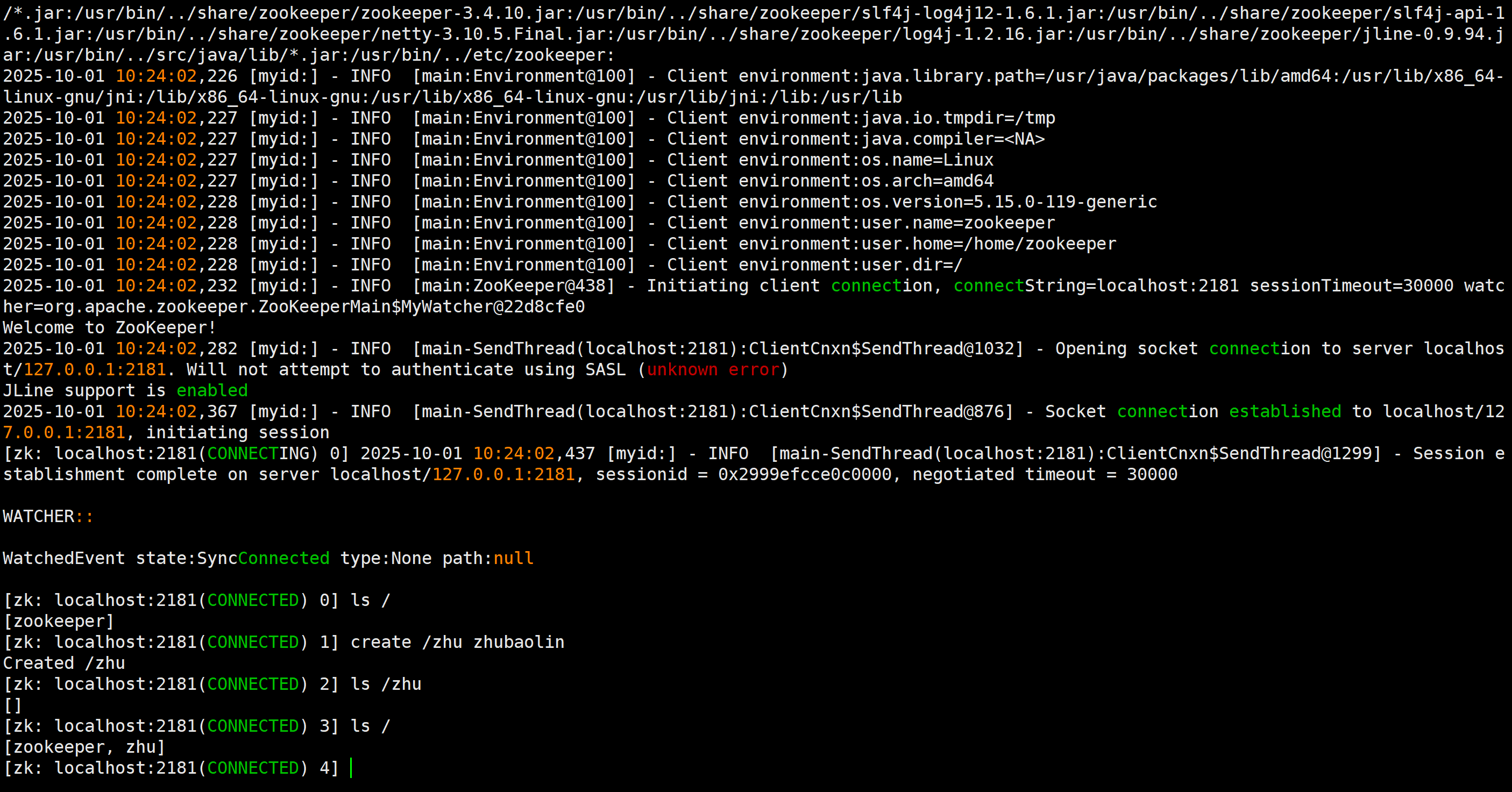

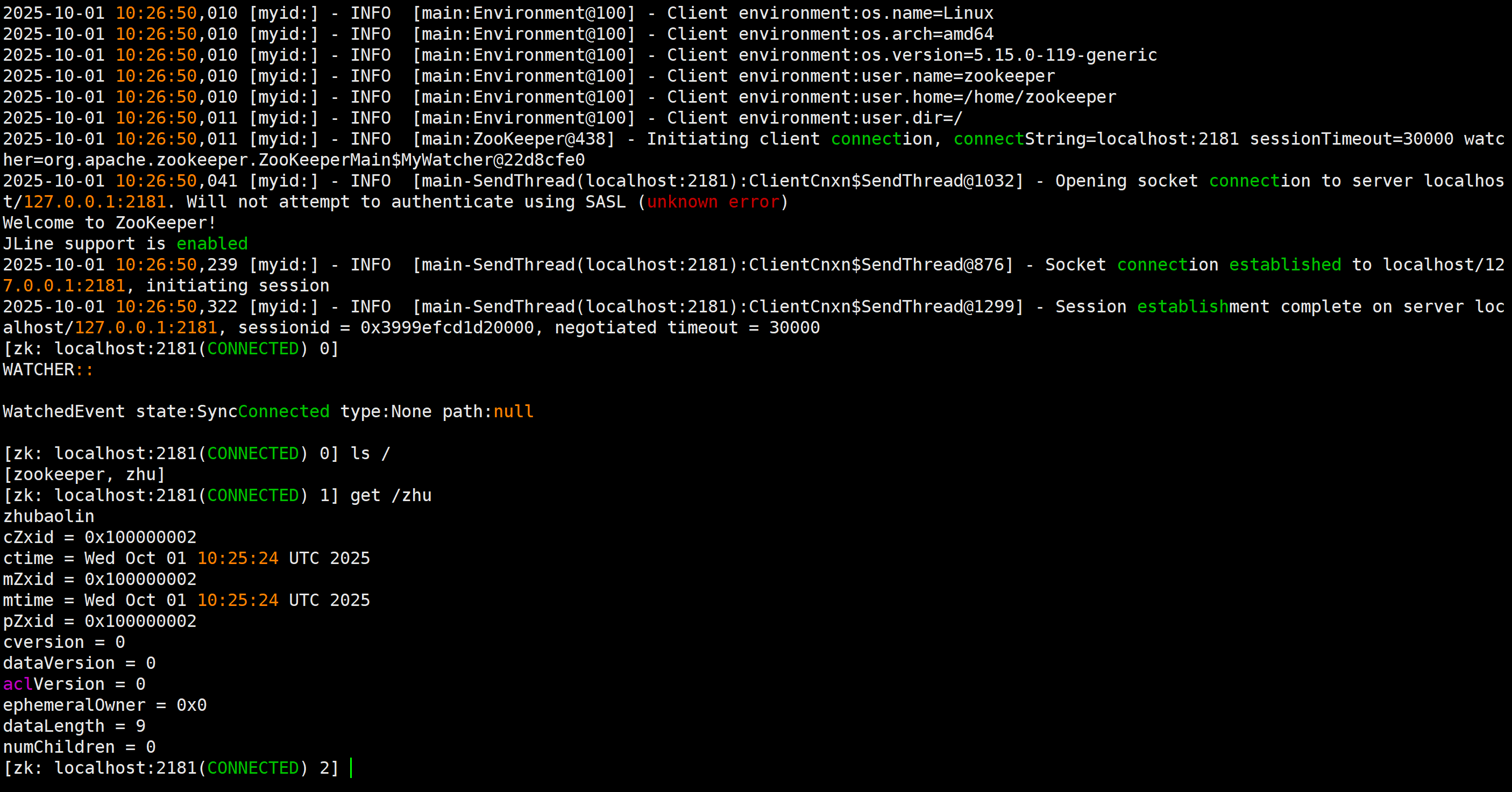

Create test data

Write data in one Pod

[root@master231 statefulsets]# kubectl exec -it zk-1 -- zkCli.sh

Read the data from another Pod

[root@master231 statefulsets]# kubectl exec -it zk-2 -- zkCli.sh

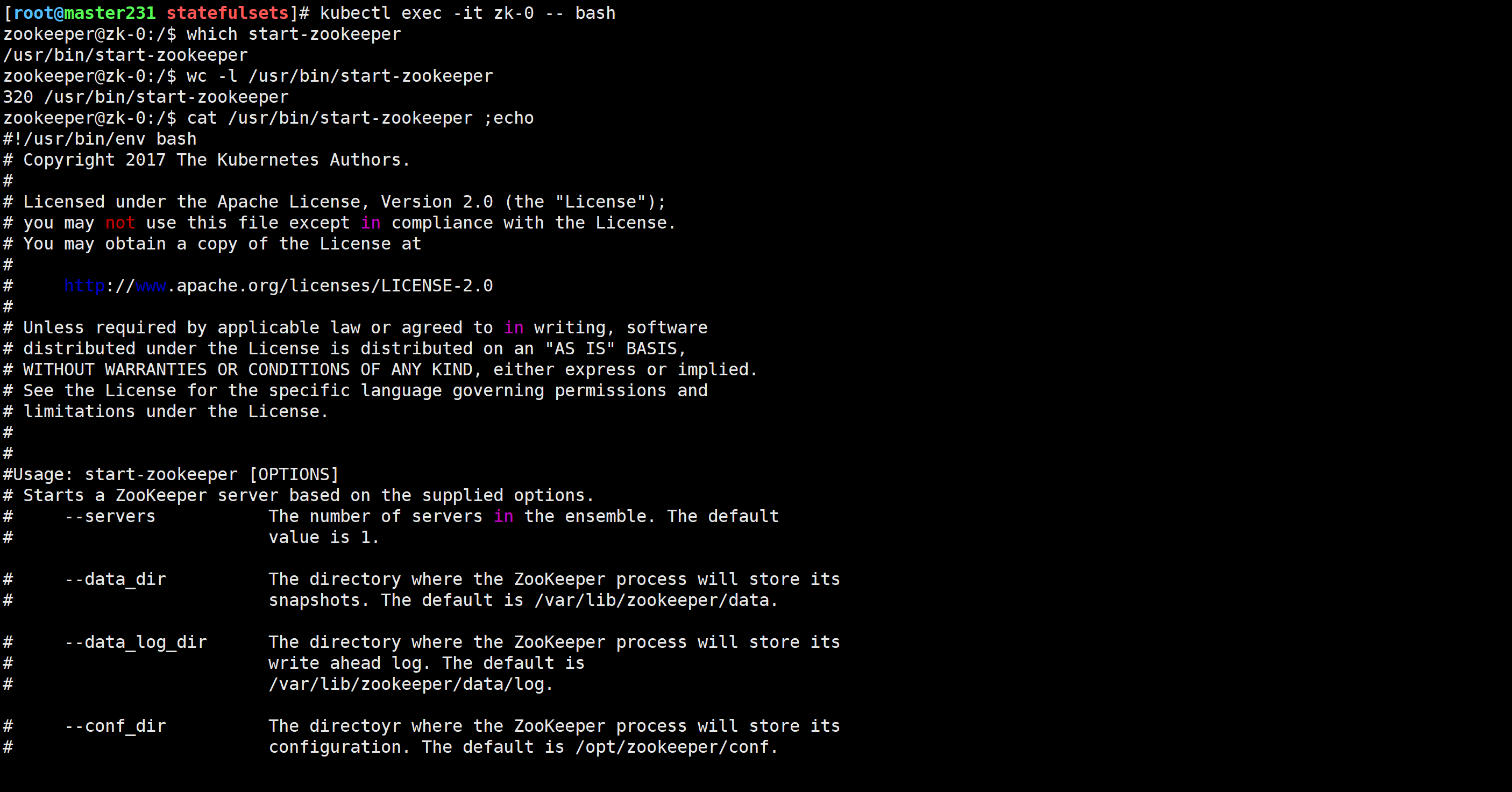

Inspect the logic of the start-zookeeper script

[root@master231 statefulsets]# kubectl exec -it zk-0 -- bash

zookeeper@zk-0:/$ which start-zookeeper

/usr/bin/start-zookeeper

zookeeper@zk-0:/$ wc -l /usr/bin/start-zookeeper

320 /usr/bin/start-zookeeper

zookeeper@zk-0:/$ cat /usr/bin/start-zookeeper ;echo

Tip:

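In practice the industry is somewhat wary of the sts controller: we know it is meant for deploying stateful services, yet we rarely use it directly.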

So CoreOS developed the Operator framework (roughly sts + CRD), on which all kinds of services can be deployed.

🌟Quickly deploying a helm environment

helm overview

helm is somewhat like yum or apt on Linux: it helps us manage the resource manifests of a K8S cluster.

Helm helps you manage Kubernetes applications: Helm Charts help you define, install, and upgrade even the most complex Kubernetes application.

Charts are easy to create, version, share, and publish, so stop copying and pasting and start using Helm.

Helm is a graduated project in the CNCF and is maintained by the Helm community.

Official documentation: https://helm.sh/zh/

Choosing a helm version (architecture)

In November 2019 the Helm team released v3. The biggest change compared with v2 is the removal of Tiller, and most of the code was refactored.

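helm v3 also brings many other improvements over v2; for example, v3 allows releases with the same name in different namespaces. We recommend v3 for production, not only because it is more capable but also because it is more stable.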

Official installation guide: https://helm.sh/docs/intro/install/

GitHub releases: https://github.com/helm/helm/releases

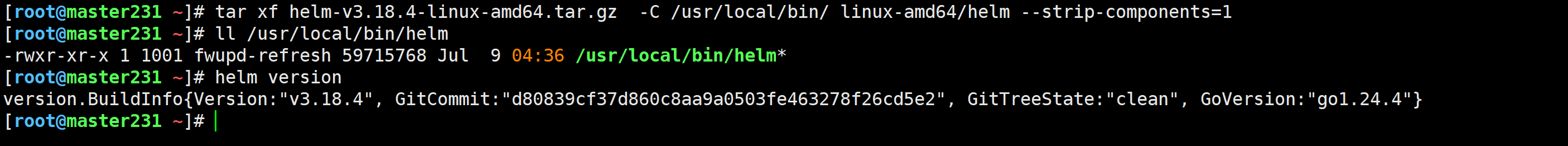

Install helm

wget https://get.helm.sh/helm-v3.18.4-linux-amd64.tar.gz
[root@master231 ~]# tar xf helm-v3.18.4-linux-amd64.tar.gz -C /usr/local/bin/ linux-amd64/helm --strip-components=1
[root@master231 ~]# ll /usr/local/bin/helm
-rwxr-xr-x 1 1001 fwupd-refresh 59715768 Jul 9 04:36 /usr/local/bin/helm*
[root@master231 ~]# helm version

version.BuildInfo{Version:"v3.18.4", GitCommit:"d80839cf37d860c8aa9a0503fe463278f26cd5e2", GitTreeState:"clean", GoVersion:"go1.24.4"}

[root@master231 ~]#

Enable helm shell auto-completion

[root@master231 ~]# helm completion bash > /etc/bash_completion.d/helm

[root@master231 ~]# source /etc/bash_completion.d/helm

[root@master231 ~]# echo 'source /etc/bash_completion.d/helm' >> ~/.bashrc

🌟Basic Chart management with helm

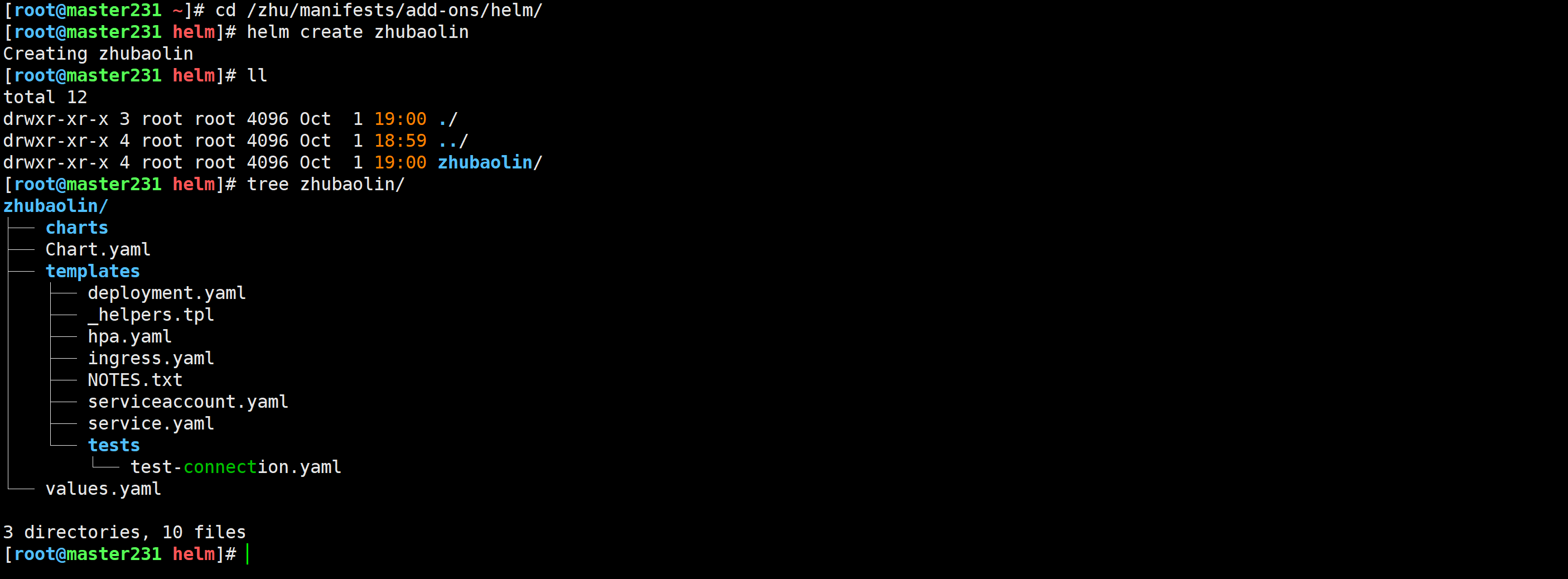

Create a Chart

[root@master231 helm]# helm create zhubaolin

[root@master231 helm]# tree zhubaolin/

zhubaolin/

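├── charts                  # other charts this chart depends on
├── Chart.yaml              # YAML file containing information about the chart
├── templates               # template directory; combined with values it generates valid Kubernetes manifest files
│   ├── deployment.yaml     # Deployment manifest template
│   ├── _helpers.tpl        # custom template helpers
│   ├── hpa.yaml            # HPA manifest template
│   ├── ingress.yaml        # Ingress manifest template
│   ├── NOTES.txt           # optional: plain-text file with short usage notes
│   ├── serviceaccount.yaml # ServiceAccount (sa) manifest template
│   ├── service.yaml        # Service (svc) manifest template
│   └── tests               # test directory
│       └── test-connection.yaml
└── values.yaml             # default configuration values of the chart

3 directories, 10 files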

[root@master231 helm]#

Reference link: https://helm.sh/zh/docs/topics/charts/#chart-%E6%96%87%E4%BB%B6%E7%BB%93%E6%9E%84

Modify the default values.yaml

[root@master231 helm]# egrep "repository:|tag:" zhubaolin/values.yaml
  repository: nginx
  tag: ""

[root@master231 helm]#

[root@master231 helm]# sed -i "/repository\:/s#nginx#registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps#" zhubaolin/values.yaml

[root@master231 helm]#

[root@master231 helm]# sed -ri '/tag\:/s#tag: ""#tag: v1#' zhubaolin/values.yaml

[root@master231 helm]#

[root@master231 helm]# egrep "repository:|tag:" zhubaolin/values.yaml
  repository: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps
  tag: v1

[root@master231 helm]#

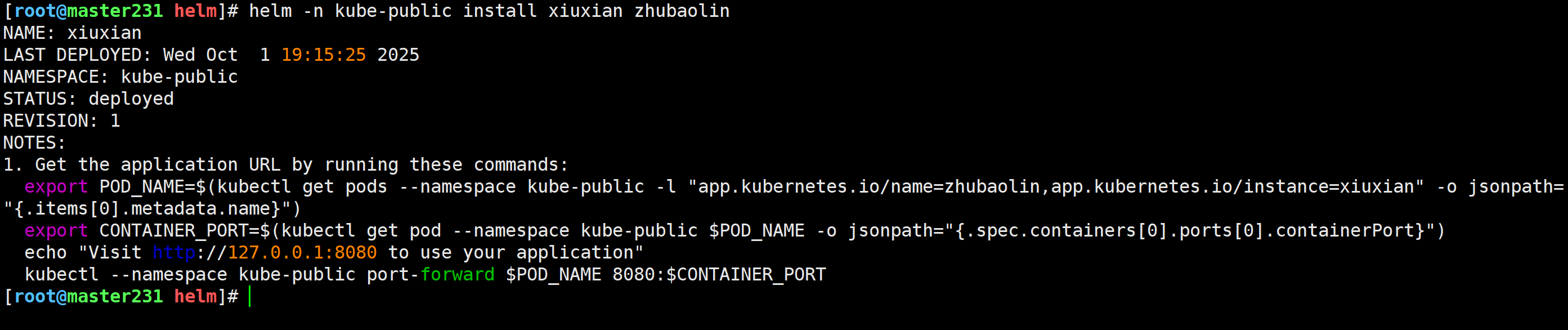

Install a service from the Chart (create a Release)

[root@master231 helm]# kubectl get deploy,hpa,svc,sa,po -o wide -n kube-public

NAME SECRETS AGE

serviceaccount/default 1 11d
[root@master231 helm]# helm -n kube-public install xiuxian zhubaolin

NAME: xiuxian

LAST DEPLOYED: Wed Oct 1 19:15:25 2025

NAMESPACE: kube-public

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:
  export POD_NAME=$(kubectl get pods --namespace kube-public -l "app.kubernetes.io/name=zhubaolin,app.kubernetes.io/instance=xiuxian" -o jsonpath="{.items[0].metadata.name}")
  export CONTAINER_PORT=$(kubectl get pod --namespace kube-public $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")
  echo "Visit http://127.0.0.1:8080 to use your application"
  kubectl --namespace kube-public port-forward $POD_NAME 8080:$CONTAINER_PORT

[root@master231 helm]#

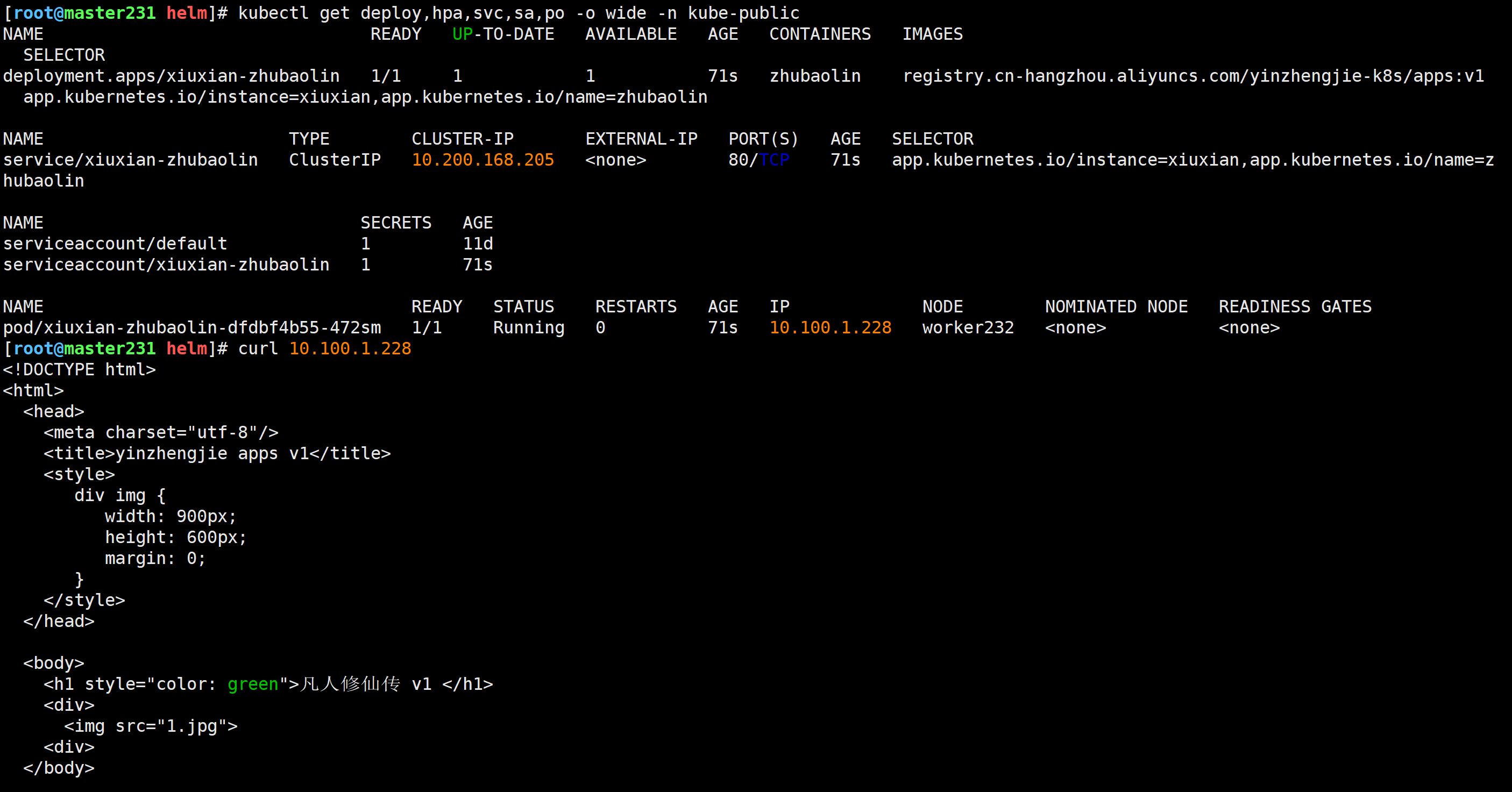

Check the service

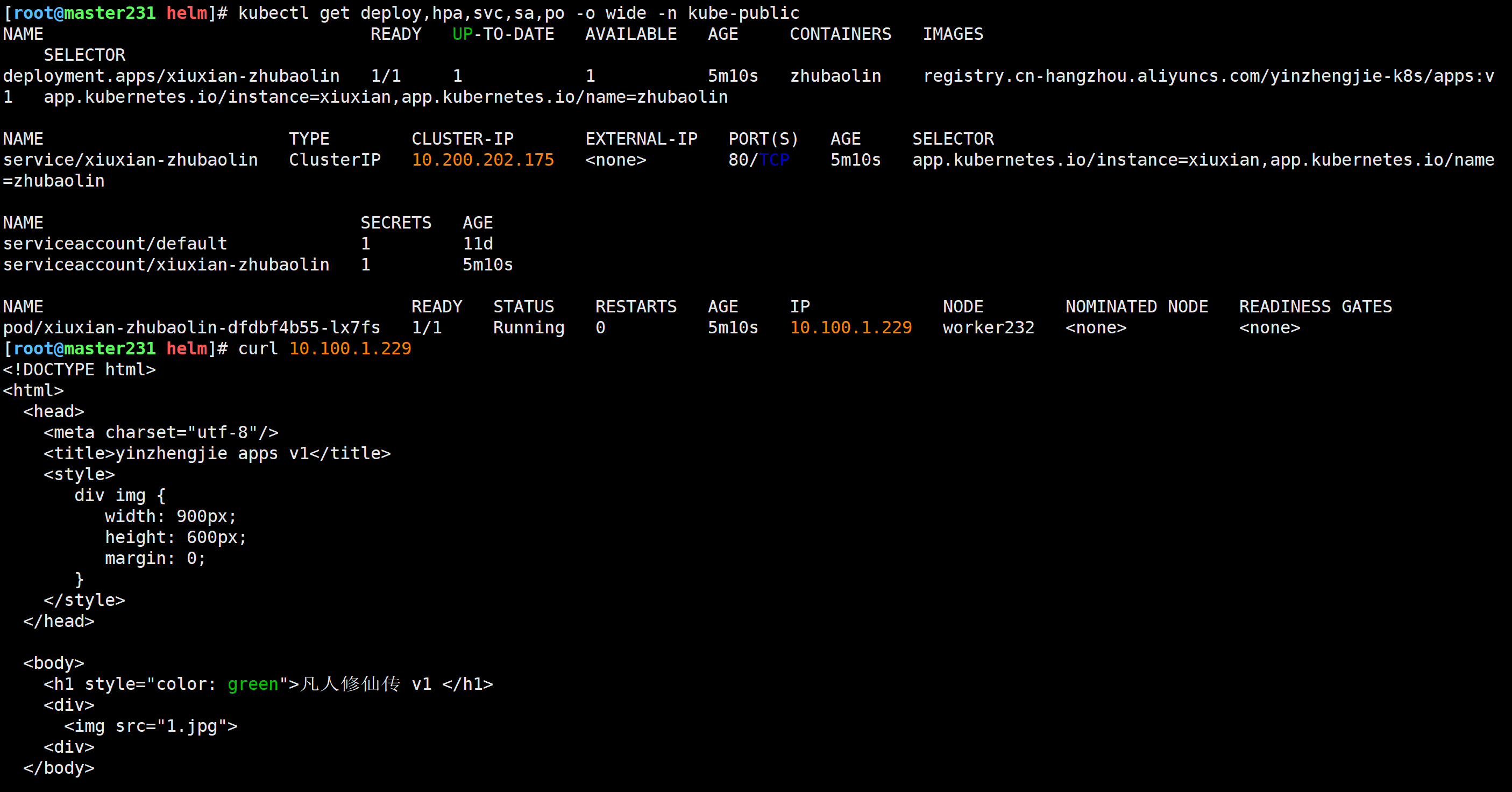

[root@master231 helm]# kubectl get deploy,hpa,svc,sa,po -o wide -n kube-public

[root@master231 helm]# curl 10.100.1.228

Uninstall the service

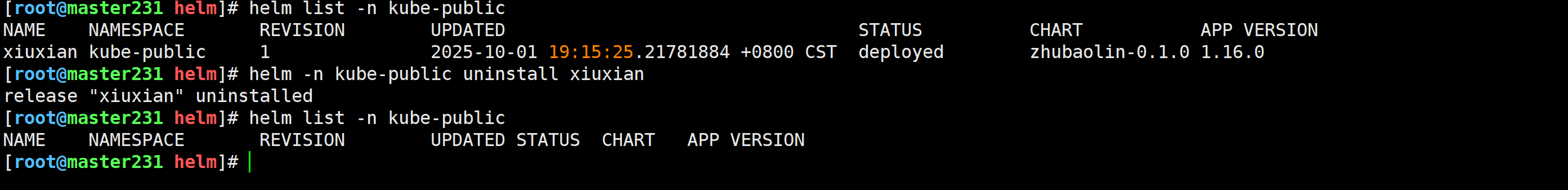

[root@master231 helm]# helm list -n kube-public

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

xiuxian kube-public 1 2025-10-01 19:15:25.21781884 +0800 CST deployed zhubaolin-0.1.0 1.16.0

[root@master231 helm]# helm -n kube-public uninstall xiuxian

release "xiuxian" uninstalled

[root@master231 helm]# helm list -n kube-public

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

[root@master231 helm]#

🌟Two ways to upgrade with helm

Install the old version of the service

[root@master231 helm]# helm -n kube-public install xiuxian zhubaolin

NAME: xiuxian

LAST DEPLOYED: Wed Oct 1 20:04:19 2025

NAMESPACE: kube-public

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:
  export POD_NAME=$(kubectl get pods --namespace kube-public -l "app.kubernetes.io/name=zhubaolin,app.kubernetes.io/instance=xiuxian" -o jsonpath="{.items[0].metadata.name}")
  export CONTAINER_PORT=$(kubectl get pod --namespace kube-public $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")
  echo "Visit http://127.0.0.1:8080 to use your application"
  kubectl --namespace kube-public port-forward $POD_NAME 8080:$CONTAINER_PORT

[root@master231 helm]#

Modify the parameters to be upgraded

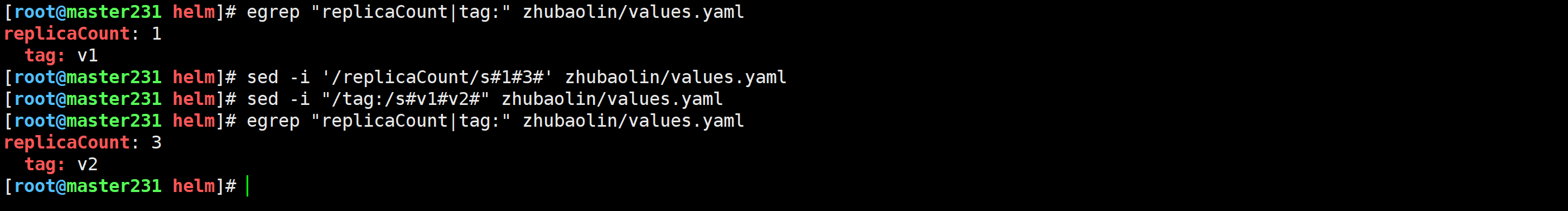

[root@master231 helm]# egrep "replicaCount|tag:" zhubaolin/values.yaml

replicaCount: 1
  tag: v1

[root@master231 helm]# sed -i '/replicaCount/s#1#3#' zhubaolin/values.yaml

[root@master231 helm]# sed -i "/tag:/s#v1#v2#" zhubaolin/values.yaml

[root@master231 helm]# egrep "replicaCount|tag:" zhubaolin/values.yaml

replicaCount: 3
  tag: v2

[root@master231 helm]#

Upgrade using a values file (-f)

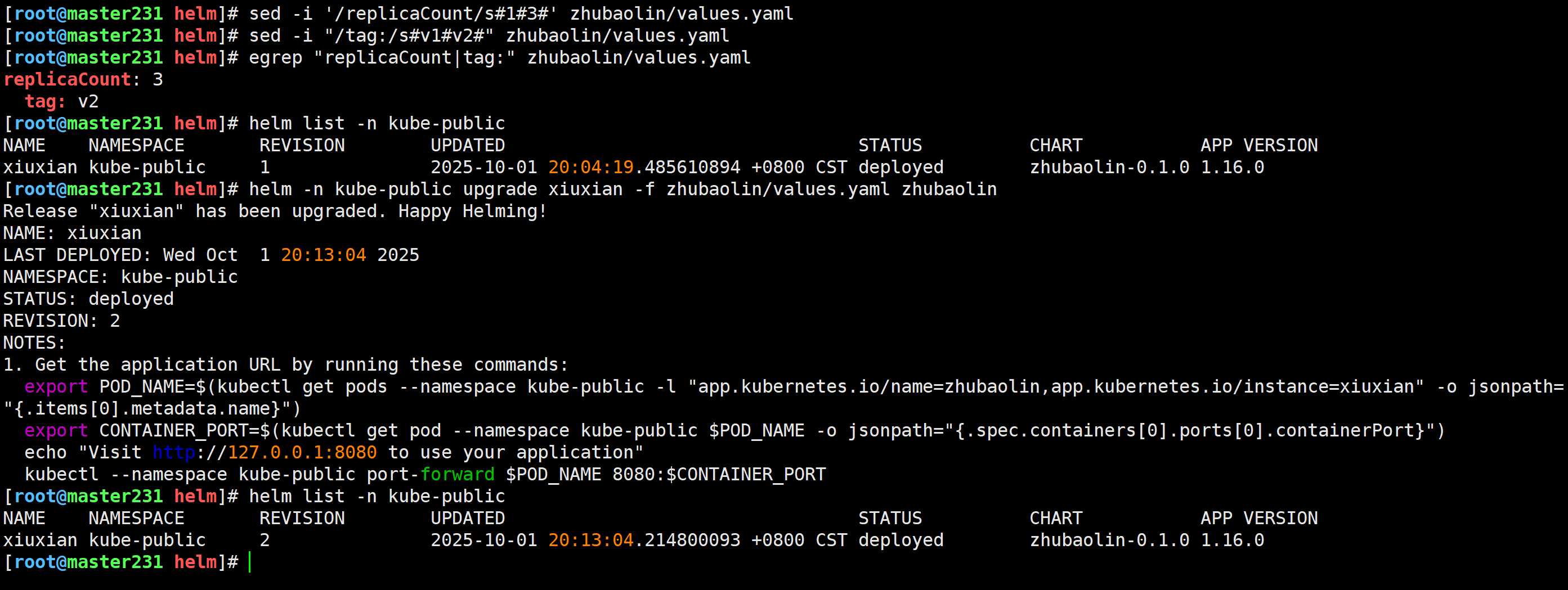

[root@master231 helm]# helm -n kube-public upgrade xiuxian -f zhubaolin/values.yaml zhubaolin

Release "xiuxian" has been upgraded. Happy Helming!

NAME: xiuxian

LAST DEPLOYED: Wed Oct 1 20:13:04 2025

NAMESPACE: kube-public

STATUS: deployed

REVISION: 2

NOTES:

1. Get the application URL by running these commands:
  export POD_NAME=$(kubectl get pods --namespace kube-public -l "app.kubernetes.io/name=zhubaolin,app.kubernetes.io/instance=xiuxian" -o jsonpath="{.items[0].metadata.name}")
  export CONTAINER_PORT=$(kubectl get pod --namespace kube-public $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")
  echo "Visit http://127.0.0.1:8080 to use your application"
  kubectl --namespace kube-public port-forward $POD_NAME 8080:$CONTAINER_PORT

[root@master231 helm]#

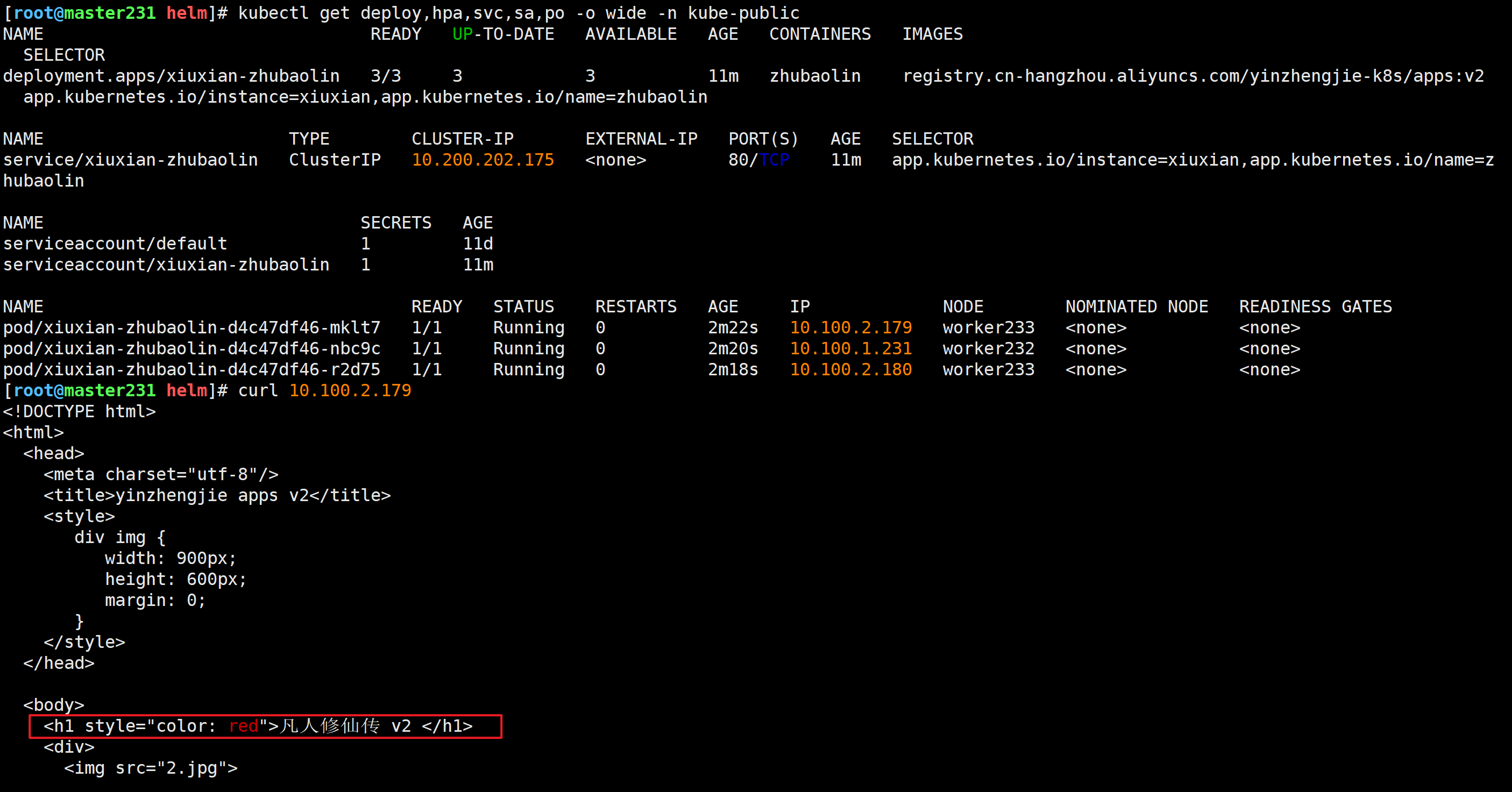

Verify the upgrade

[root@master231 helm]# helm list -n kube-public

[root@master231 helm]# kubectl get deploy,hpa,svc,sa,po -o wide -n kube-public

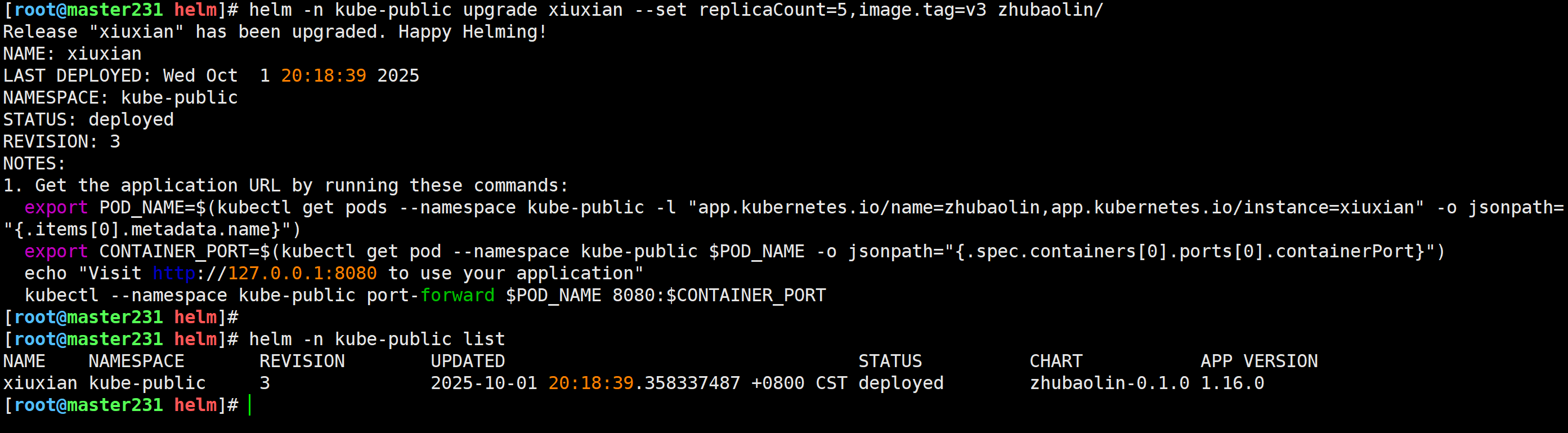

Upgrade by setting values on the command line (--set)

[root@master231 helm]# helm -n kube-public upgrade xiuxian --set replicaCount=5,image.tag=v3 zhubaolin/

Release "xiuxian" has been upgraded. Happy Helming!

NAME: xiuxian

LAST DEPLOYED: Wed Oct 1 20:18:39 2025

NAMESPACE: kube-public

STATUS: deployed

REVISION: 3

NOTES:

1. Get the application URL by running these commands:
  export POD_NAME=$(kubectl get pods --namespace kube-public -l "app.kubernetes.io/name=zhubaolin,app.kubernetes.io/instance=xiuxian" -o jsonpath="{.items[0].metadata.name}")
  export CONTAINER_PORT=$(kubectl get pod --namespace kube-public $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")
  echo "Visit http://127.0.0.1:8080 to use your application"
  kubectl --namespace kube-public port-forward $POD_NAME 8080:$CONTAINER_PORT

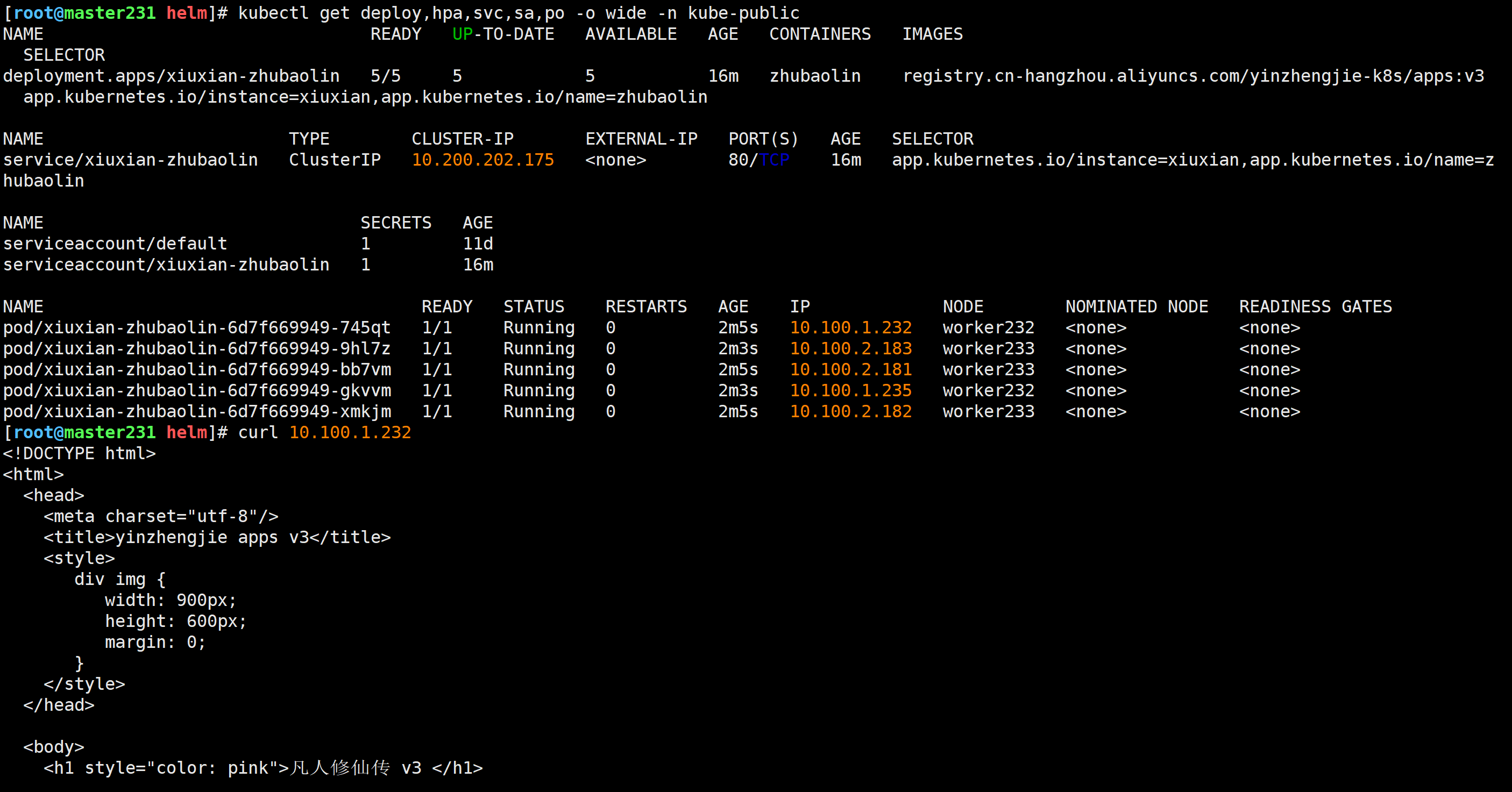

Verify the upgrade again

[root@master231 helm]# kubectl get deploy,hpa,svc,sa,po -o wide -n kube-public
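Why does revision 3 end up with 5 replicas and tag v3? For an upgrade that passes `-f` and/or `--set` (as both upgrades here do), helm layers the values: the chart's values.yaml first, then `-f` files in order, then `--set`; later layers win key by key. The sketch below models only that precedence. Flattening nested keys to dotted names and the `apply_layer` helper are simplifications of my own, and flags such as `--reuse-values` change the behaviour and are not modelled.

```bash
#!/usr/bin/env bash
# Simplified model of helm's value layering for an upgrade that passes -f/--set
# (illustration only; nested keys are flattened to dotted names; needs bash 4+).
# Layers are applied in order: chart values.yaml, then -f files, then --set.
declare -A effective

# apply_layer key=value ...: later calls override earlier ones, key by key
apply_layer() {
  local kv
  for kv in "$@"; do
    effective["${kv%%=*}"]="${kv#*=}"
  done
}

apply_layer replicaCount=3 image.tag=v2   # zhubaolin/values.yaml after the sed edits
apply_layer replicaCount=5 image.tag=v3   # --set replicaCount=5,image.tag=v3

echo "replicaCount=${effective[replicaCount]} image.tag=${effective[image.tag]}"
# replicaCount=5 image.tag=v3
```

It also shows that `-f zhubaolin/values.yaml` in the first upgrade added nothing beyond the chart defaults, since it is the chart's own values file; the change to 3 replicas and v2 came from the sed edits.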

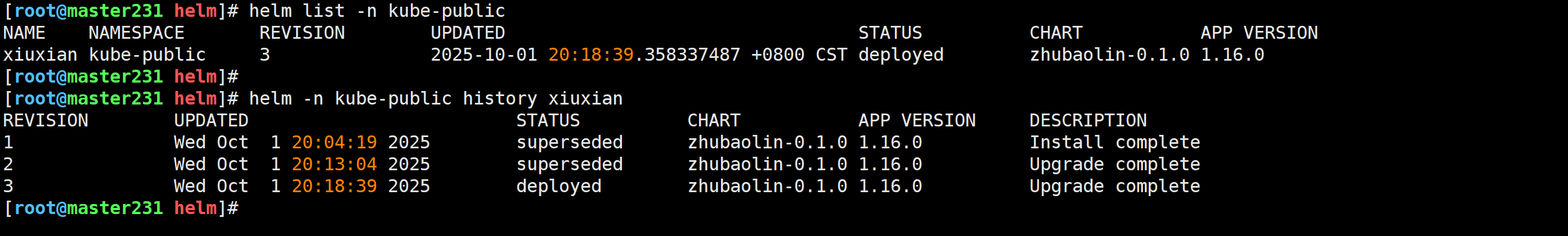

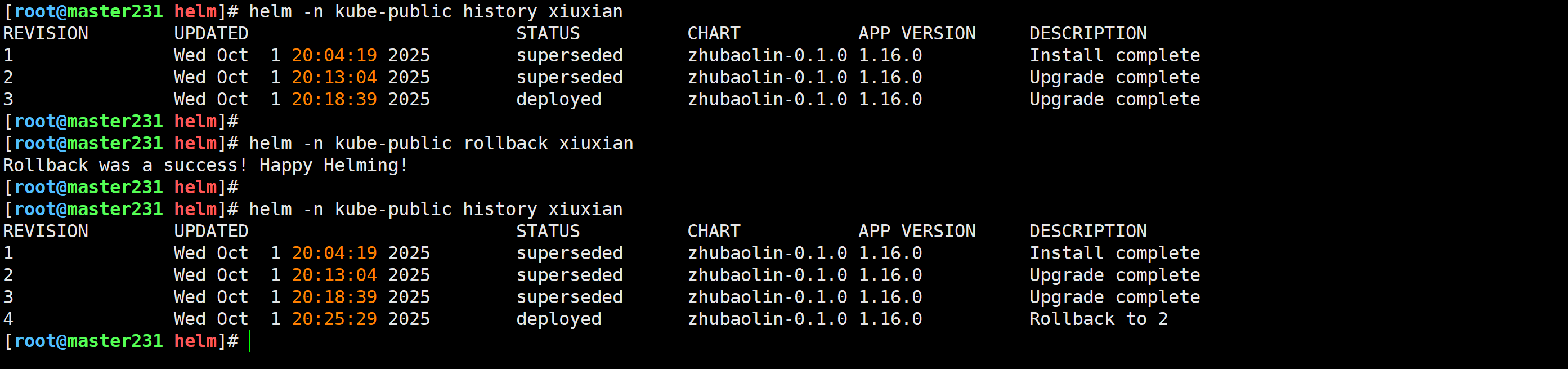

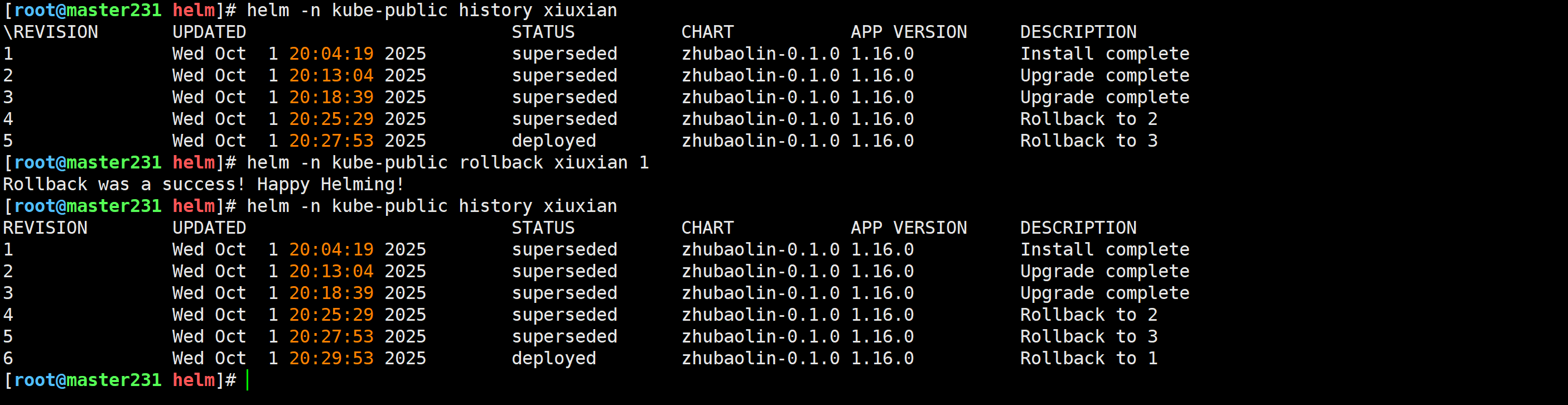

🌟Rolling back with helm

View the RELEASE revision history

[root@master231 helm]# helm list -n kube-public

[root@master231 helm]# helm -n kube-public history xiuxian

Roll back to the previous revision

[root@master231 helm]# helm -n kube-public rollback xiuxian

Rollback was a success! Happy Helming!

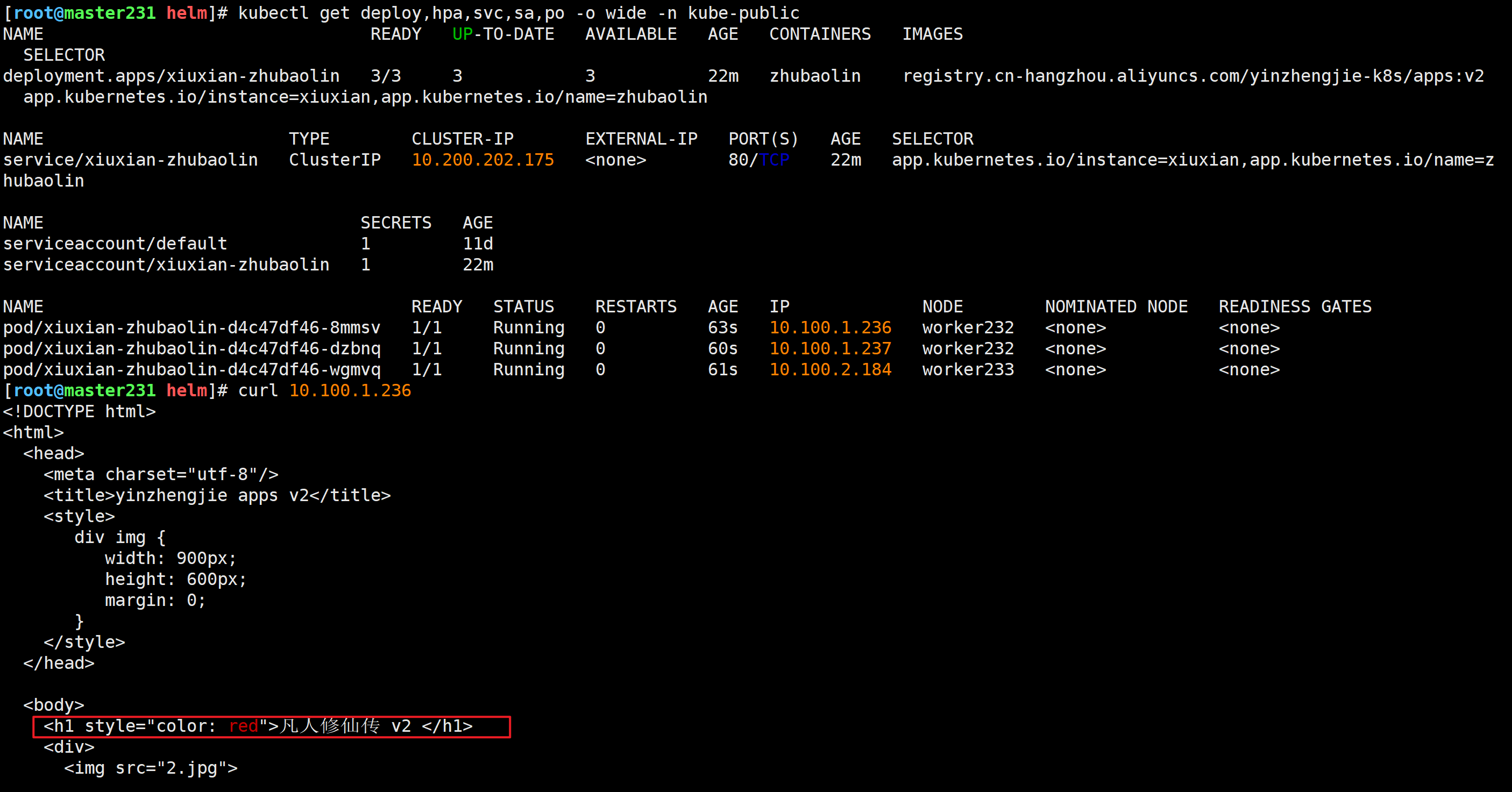

Verify the rollback

[root@master231 helm]# kubectl get deploy,hpa,svc,sa,po -o wide -n kube-public

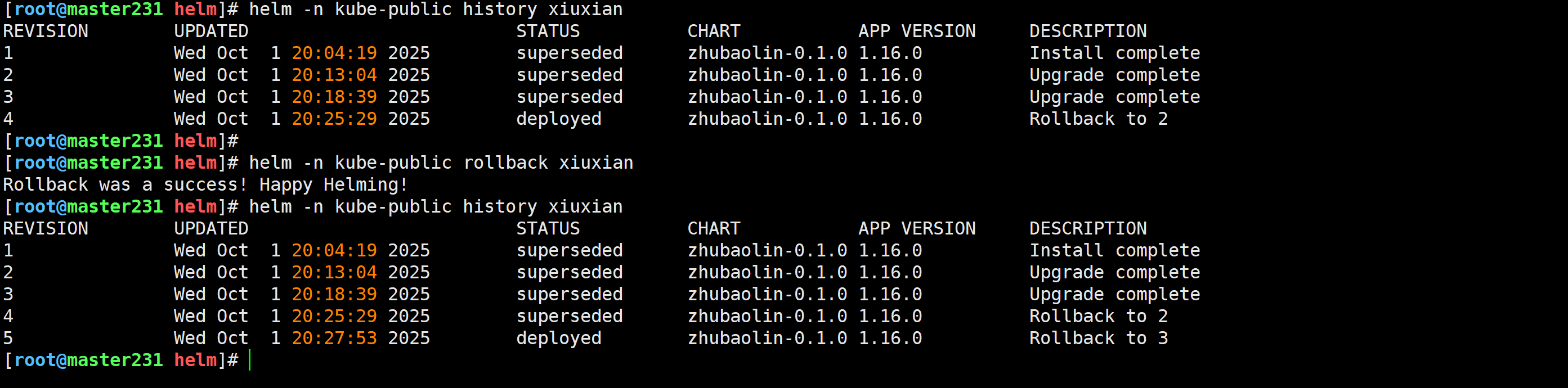

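Note: roll back to the previous revision again. Each rollback is recorded as a new revision, so this second rollback returns to the state from before the first rollback.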

[root@master231 helm]# helm -n kube-public rollback xiuxian

Rollback was a success! Happy Helming!

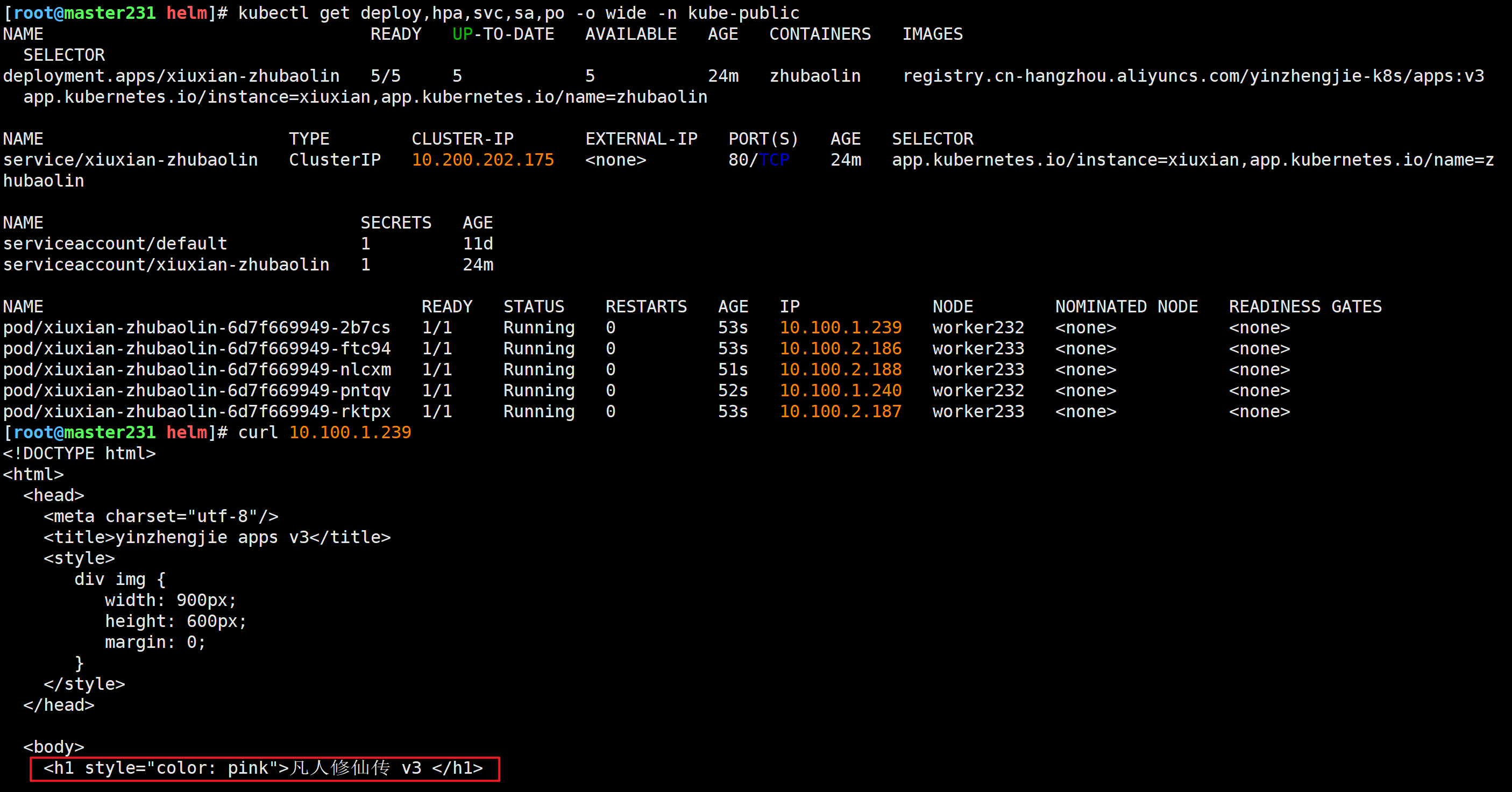

Verify the result

[root@master231 helm]# kubectl get deploy,hpa,svc,sa,po -o wide -n kube-public

Roll back to a specific revision

[root@master231 helm]# helm -n kube-public rollback xiuxian 1

Rollback was a success! Happy Helming!

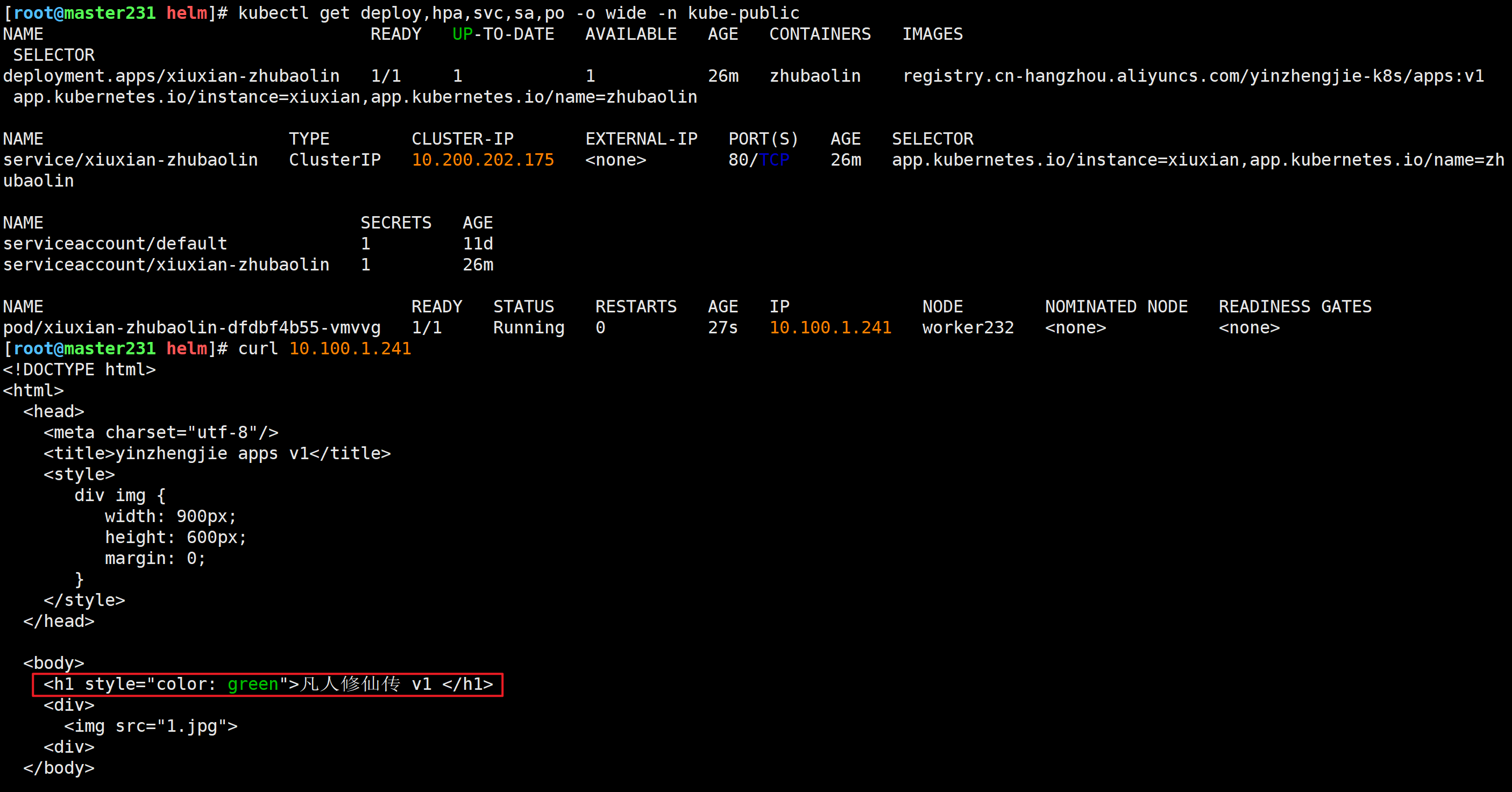

Verify the result

[root@master231 helm]# kubectl get deploy,hpa,svc,sa,po -o wide -n kube-public
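Why two plain rollbacks toggle back and forth can be seen in a toy model of the revision bookkeeping. This is not Helm code; it is a bash sketch assuming Helm's documented rule that `helm rollback RELEASE` with no revision (or 0) targets "current revision minus one", and that every rollback is stored as a new revision copying the target. The revision labels are hypothetical placeholders for the states created earlier in this section.

```shell
#!/usr/bin/env bash
# Toy model (not Helm itself) of release revisions under `helm rollback`.
set -euo pipefail

# revisions[i] stands for the state of revision i; index 0 is unused.
revisions=("" "rev1-install" "rev2-upgrade" "rev3-upgrade-set")

rollback() {
  local current target
  current=$(( ${#revisions[@]} - 1 ))
  # No argument: roll back to current - 1, like `helm rollback RELEASE`.
  target=${1:-$(( current - 1 ))}
  # A rollback never rewrites history; it appends a new revision copying the target.
  revisions+=("${revisions[$target]}")
  echo "revision $(( current + 1 )) = copy of revision ${target} (${revisions[$target]})"
}

rollback    # revision 4 = copy of revision 2
rollback    # revision 5 = copy of revision 3, i.e. back to the pre-rollback state
rollback 1  # revision 6 = copy of revision 1
```

To keep going further back instead of toggling, pass an explicit revision number taken from `helm history`, as in `helm -n kube-public rollback xiuxian 1`.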

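🌟Helm public repository management and deploying es-exporter

Overview of popular Chart repositories

Public Chart repositories on the Internet, whose ready-made Chart packages can be used directly:

Microsoft (Azure) mirror: http://mirror.azure.cn/kubernetes/charts/

Alibaba Cloud repository: https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts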

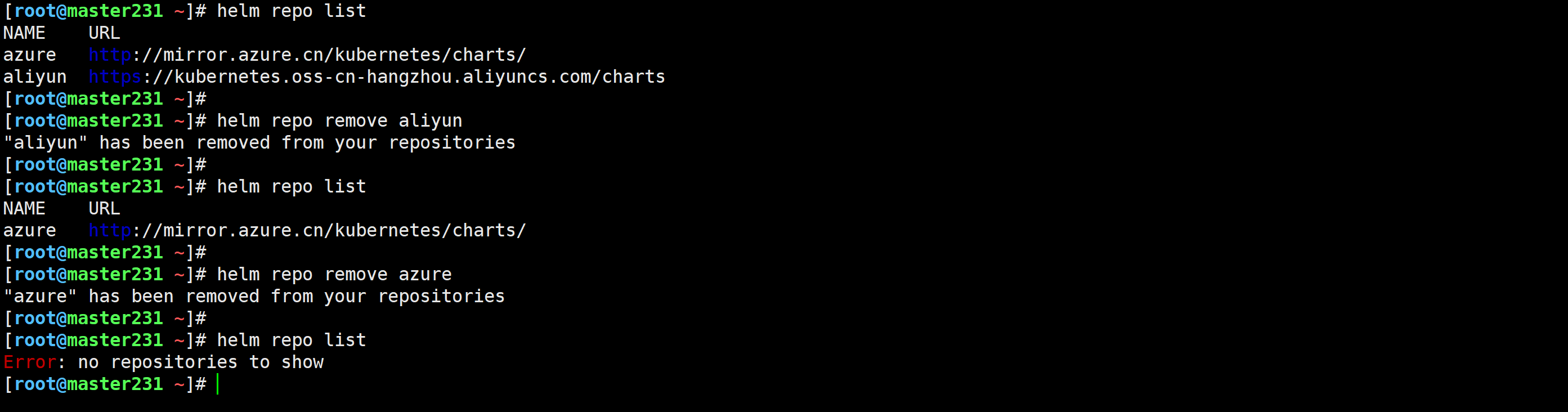

Add the public repositories

[root@master231 ~]# helm repo add azure http://mirror.azure.cn/kubernetes/charts/

"azure" has been added to your repositories

[root@master231 ~]#

[root@master231 ~]# helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

"aliyun" has been added to your repositories

[root@master231 ~]#

List the locally configured repositories

[root@master231 ~]# helm repo list

NAME URL

azure http://mirror.azure.cn/kubernetes/charts/

aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

[root@master231 ~]#

Update the local repository index

[root@master231 ~]# helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "aliyun" chart repository

...Successfully got an update from the "azure" chart repository

Update Complete. ⎈Happy Helming!⎈

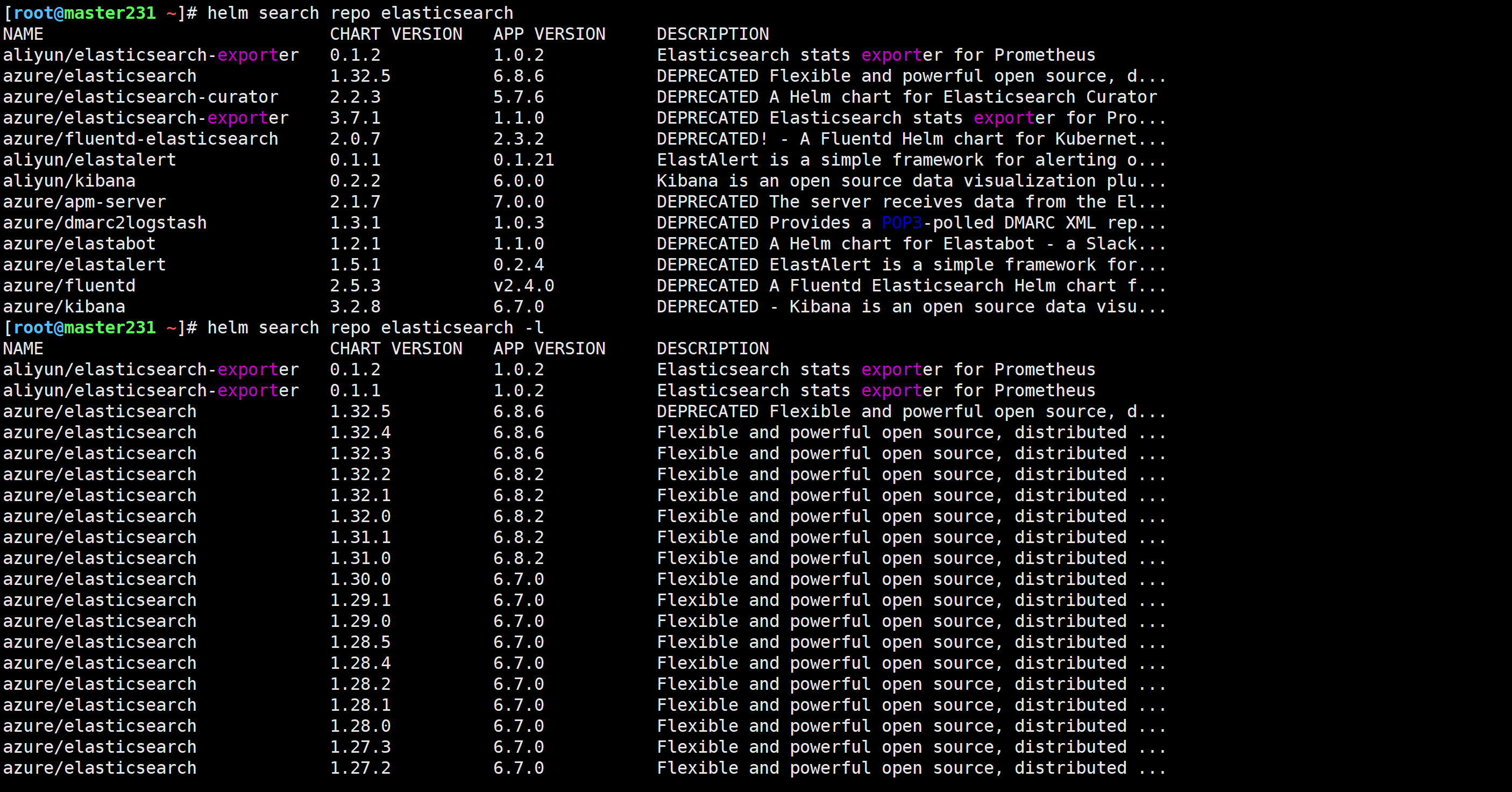

Search for the Chart we need

[root@master231 ~]# helm search repo elasticsearch

# -l lists every available version instead of only the latest

[root@master231 ~]# helm search repo elasticsearch -l

DEPRECATED: marks a chart that has been deprecated

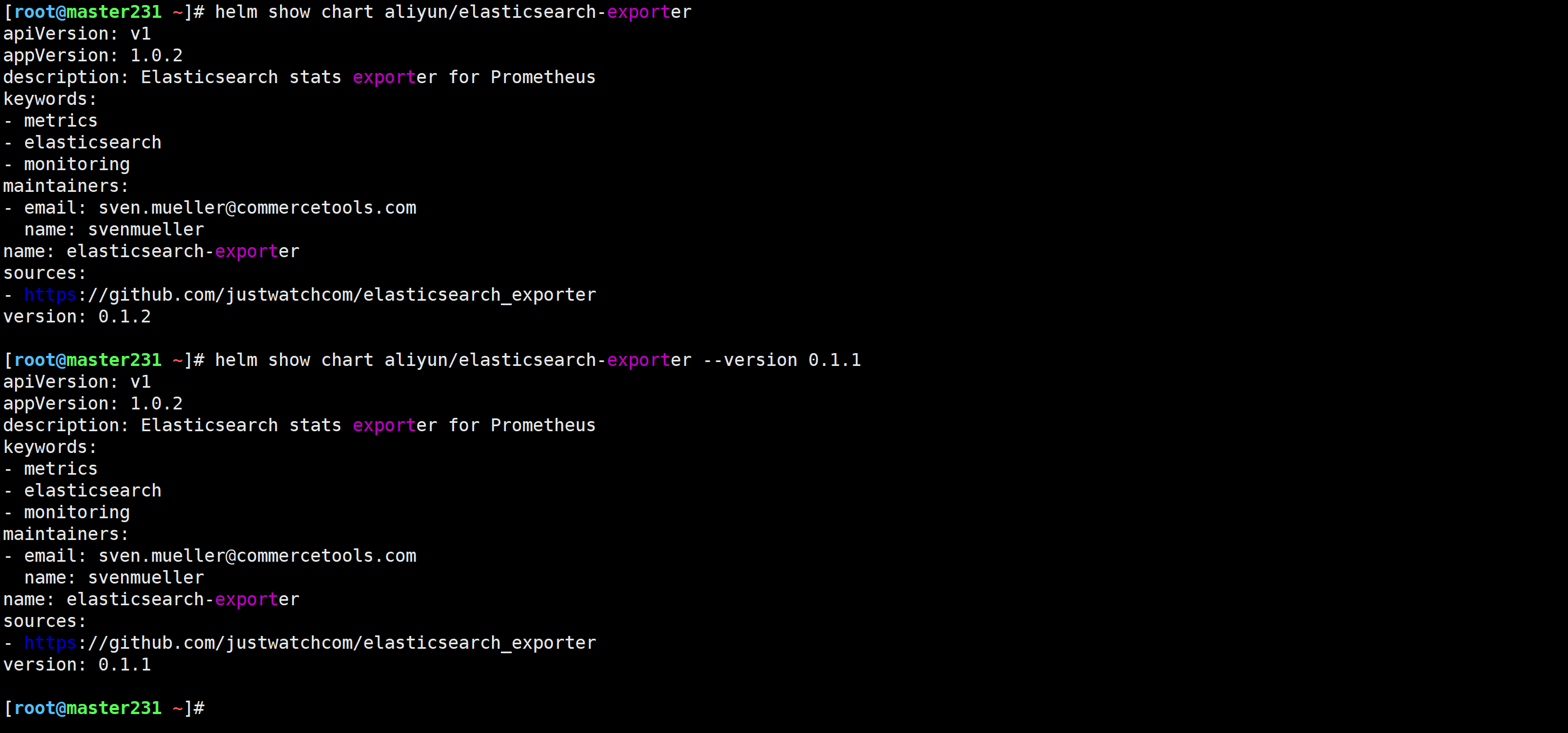

View Chart details

[root@master231 ~]# helm show chart aliyun/elasticsearch-exporter

[root@master231 ~]# helm show chart aliyun/elasticsearch-exporter --version 0.1.1

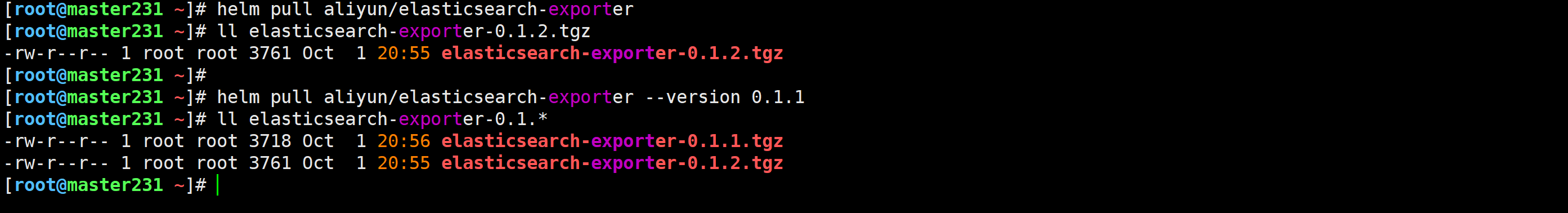

Pull the Chart

# Without --version, the latest Chart version is pulled

[root@master231 ~]# helm pull aliyun/elasticsearch-exporter

# Pull a specific Chart version

[root@master231 ~]# helm pull aliyun/elasticsearch-exporter --version 0.1.1

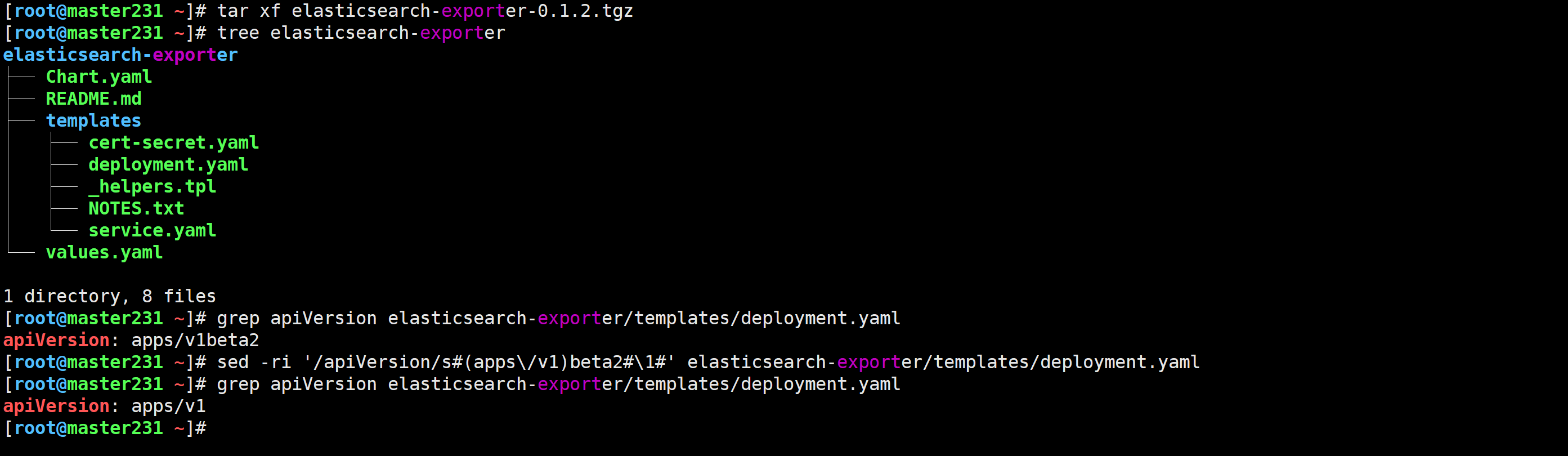

Extract the Chart package

[root@master231 ~]# tar xf elasticsearch-exporter-0.1.2.tgz

[root@master231 ~]# tree elasticsearch-exporter

[root@master231 ~]# grep apiVersion elasticsearch-exporter/templates/deployment.yaml

apiVersion: apps/v1beta2

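# Deployments under apps/v1beta2 are no longer served by Kubernetes 1.16 and later, so switch the template to apps/v1

[root@master231 ~]# sed -ri '/apiVersion/s#(apps\/v1)beta2#\1#' elasticsearch-exporter/templates/deployment.yaml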

[root@master231 ~]# grep apiVersion elasticsearch-exporter/templates/deployment.yaml

apiVersion: apps/v1

[root@master231 ~]#
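The sed command above fixes only `deployment.yaml`. Older charts sometimes use the same deprecated group in other templates (StatefulSet, DaemonSet), so a sweep over the whole `templates/` directory is safer. A minimal sketch, assuming GNU `sed` (as the original command does) and the hypothetical function name `fix_apps_api_version`. It only swaps the string: `apps/v1` also requires `spec.selector` to match the Pod template labels, which charts written for `apps/v1beta1` may lack, and other groups such as `extensions/v1beta1` Ingress need schema changes rather than a rename.

```shell
#!/usr/bin/env bash
# Rewrite apps/v1beta1 and apps/v1beta2 apiVersions to apps/v1 in every template
# of an unpacked chart (default: the elasticsearch-exporter directory above).
set -euo pipefail

fix_apps_api_version() {
  local chart_dir=${1:-elasticsearch-exporter}
  local f
  if [ ! -d "${chart_dir}/templates" ]; then
    echo "no templates directory under: ${chart_dir}" >&2
    return 1
  fi
  # -r: recurse, -l: print matching file names only, -E: extended regex.
  # "|| true" keeps "no match" (grep exit 1) from aborting under pipefail.
  { grep -rlE 'apiVersion:[[:space:]]*apps/v1beta[12]' "${chart_dir}/templates" || true; } |
    while IFS= read -r f; do
      # Keep "apiVersion: apps/v1" and drop the beta1/beta2 suffix in place.
      sed -ri 's#(apiVersion:[[:space:]]*apps/v1)beta[12]#\1#' "$f"
      echo "fixed: $f"
    done
}
```

Usage: source the snippet, run `fix_apps_api_version elasticsearch-exporter`, then re-run the `grep apiVersion` check above before installing.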

Import the image (on every node that may run the Pod, unless the image can be pulled from a registry)

docker load -i elasticsearch_exporter-v1.0.2.tar.gz

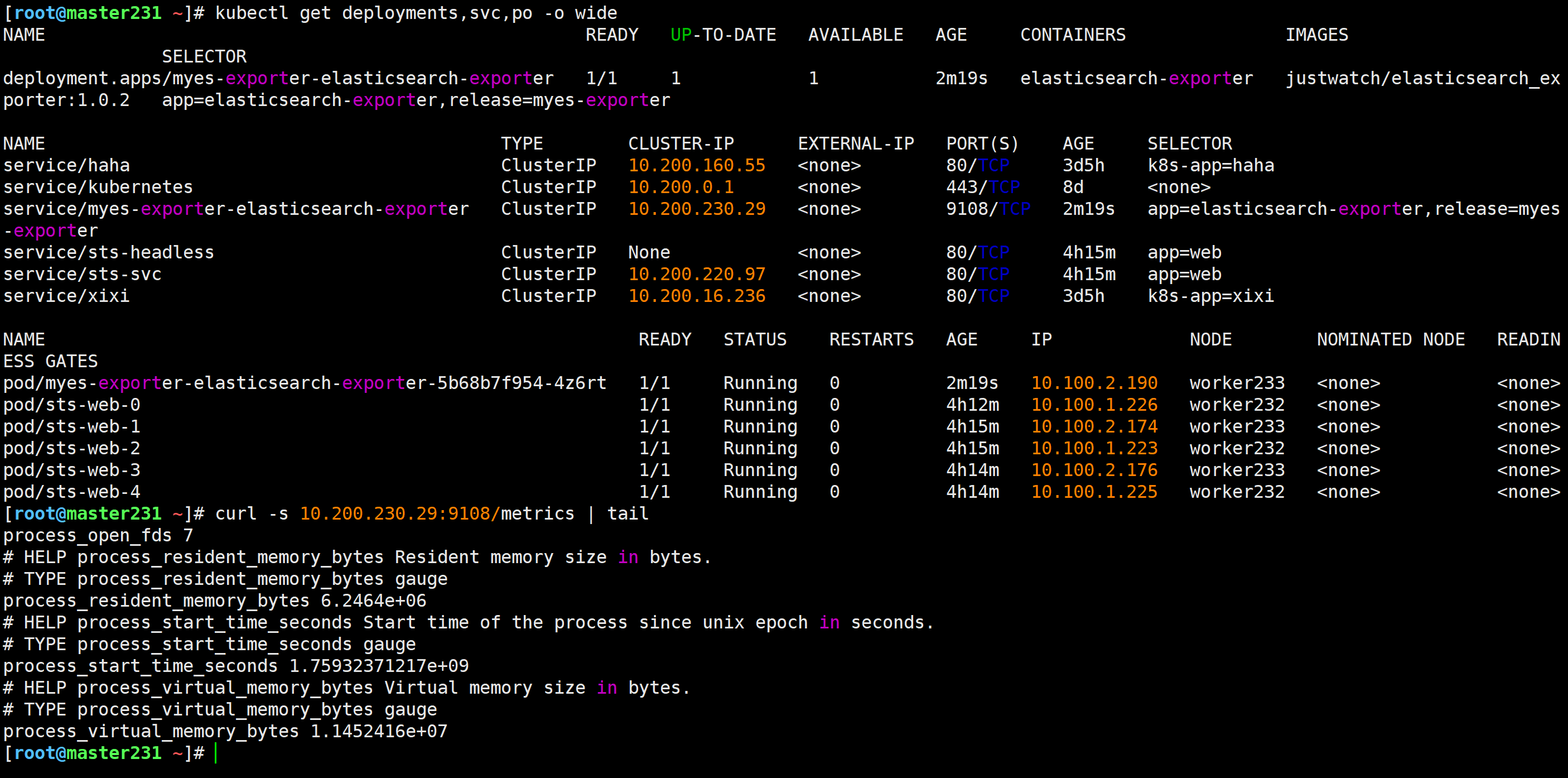

Install a Release from the Chart

[root@master231 ~]# helm install myes-exporter elasticsearch-exporter

NAME: myes-exporter

LAST DEPLOYED: Wed Oct 1 21:00:11 2025

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

  export POD_NAME=$(kubectl get pods --namespace default -l "app=myes-exporter-elasticsearch-exporter" -o jsonpath="{.items[0].metadata.name}")

  echo "Visit http://127.0.0.1:9108/metrics to use your application"

  kubectl port-forward $POD_NAME 9108:9108 --namespace default

Verify the metrics endpoint

[root@master231 ~]# curl -s 10.200.230.29:9108/metrics | tail
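The `curl ... | tail` check only shows the last few lines of a long Prometheus text-format page. A small filter helps find specific metrics: in that format, lines starting with `#` are `HELP`/`TYPE` comments and every other line is a sample. A minimal sketch, assuming the hypothetical function name `filter_metrics` and that the exporter's metric names start with `elasticsearch_`.

```shell
#!/usr/bin/env bash
# Read Prometheus text-format metrics on stdin, drop "# HELP"/"# TYPE" comment
# lines, and keep only samples whose metric name starts with the given prefix.
set -euo pipefail

filter_metrics() {
  # Assumed default prefix for the elasticsearch exporter's metrics.
  local prefix=${1:-elasticsearch_}
  # grep exits 1 when nothing matches; "|| true" stops that from aborting set -e callers.
  grep -v '^#' | grep -- "^${prefix}" || true
}
```

Usage: `curl -s 10.200.230.29:9108/metrics | filter_metrics elasticsearch_cluster_health`.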

Remove the third-party repositories

[root@master231 ~]# helm repo list

NAME URL

azure http://mirror.azure.cn/kubernetes/charts/

aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

[root@master231 ~]#

[root@master231 ~]# helm repo remove aliyun

"aliyun" has been removed from your repositories

[root@master231 ~]#

[root@master231 ~]# helm repo list

NAME URL

azure http://mirror.azure.cn/kubernetes/charts/

[root@master231 ~]#

[root@master231 ~]# helm repo remove azure

"azure" has been removed from your repositories

[root@master231 ~]#

[root@master231 ~]# helm repo list

Error: no repositories to show

[root@master231 ~]#