How Flannel Works, Flannel Troubleshooting Cases, Image Pull Policies, Secrets with Harbor, and ServiceAccounts in Practice

🌟How Flannel Works (Diagram)

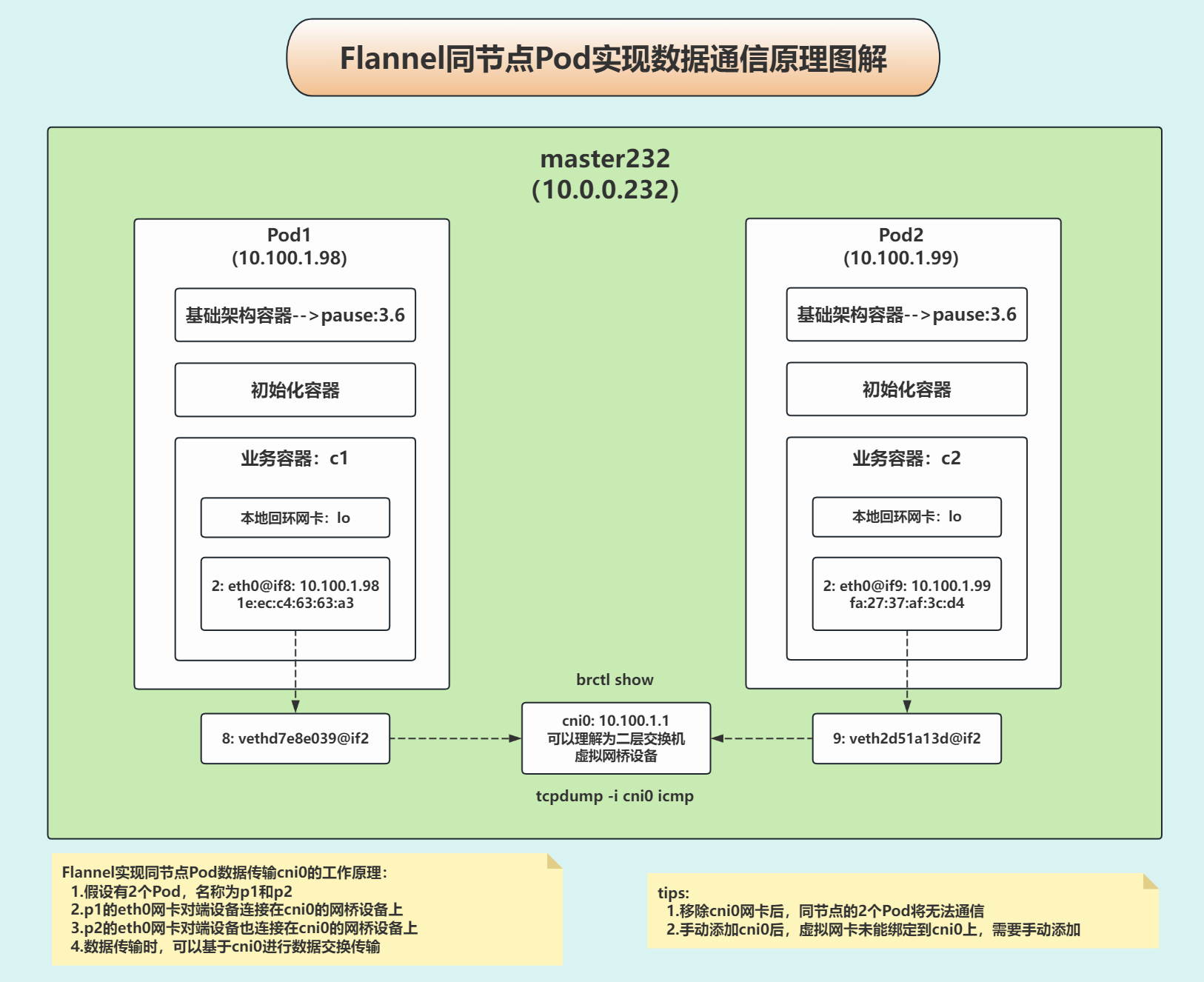

How Pods on the Same Node Communicate

Environment preparation

[root@master231 flannel]# cat 01-deploy-one-worker.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-xiuxian
spec:
  replicas: 2
  selector:
    matchLabels:
      app: xiuxian
  template:
    metadata:
      labels:
        app: xiuxian
        version: v1
    spec:
      nodeName: worker232
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v2

[root@master231 flannel]# kubectl apply -f 01-deploy-one-worker.yaml

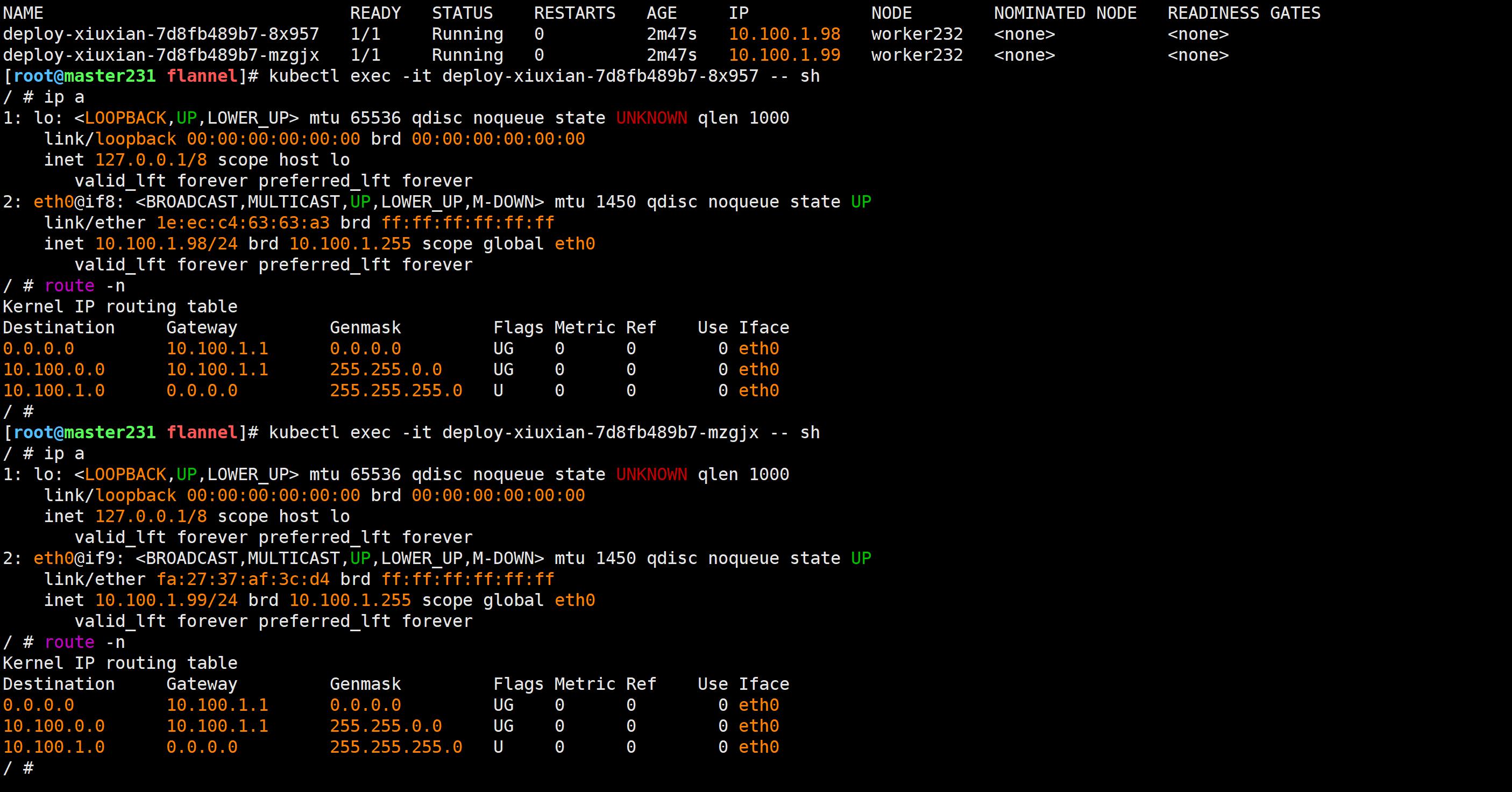

[root@master231 flannel]# kubectl get pods -o wide

[root@master231 flannel]# kubectl exec -it deploy-xiuxian-7d8fb489b7-8x957 -- sh

[root@master231 flannel]# kubectl exec -it deploy-xiuxian-7d8fb489b7-mzgjx -- sh

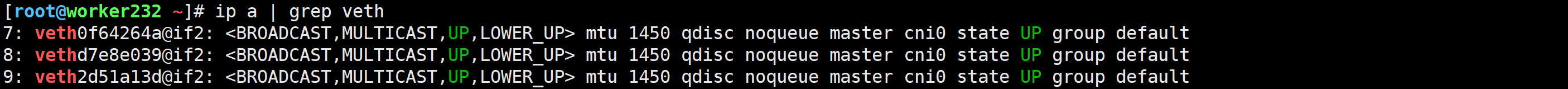

Verify on the worker node

[root@worker232 ~]# ip a | grep veth

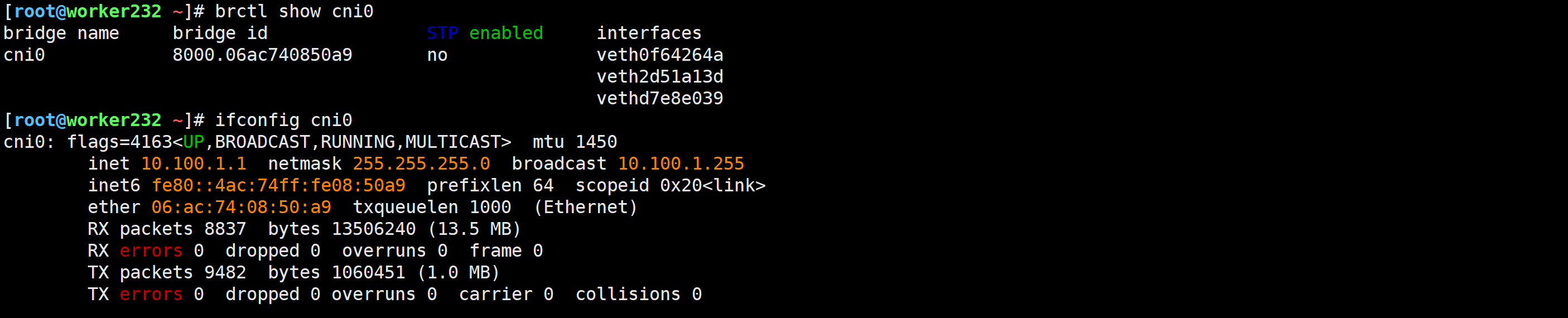

Verify the cni0 bridge

[root@worker232 ~]# apt install bridge-utils

[root@worker232 ~]# brctl show cni0

bridge name     bridge id               STP enabled     interfaces
cni0            8000.06ac740850a9       no              veth0f64264a
                                                        veth2d51a13d
                                                        vethd7e8e039
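The same bridge membership can be read without bridge-utils, because the kernel exposes each bridge's ports under sysfs. The function below is a minimal sketch, assuming a standard Linux sysfs layout; the function name and its optional second argument (a sysfs root used only to make it testable) are hypothetical.

```shell
#!/usr/bin/env bash
# List the ports of a Linux bridge from sysfs (/sys/class/net/<bridge>/brif),
# which works even when bridge-utils (brctl) is not installed.
# The optional second argument is a hypothetical testing hook; on a real
# node leave it out so it defaults to /sys/class/net.
bridge_ports() {
  local bridge="$1" sysfs="${2:-/sys/class/net}"
  local dir="$sysfs/$bridge/brif"
  if [ ! -d "$dir" ]; then
    echo "bridge $bridge not found under $sysfs" >&2
    return 1
  fi
  # One entry per attached interface (the host ends of the Pods' veth pairs)
  ls -1 "$dir"
}

# Usage on worker232:
#   bridge_ports cni0
#   ip link show master cni0   # iproute2 view of the same membership
```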

Packet capture test

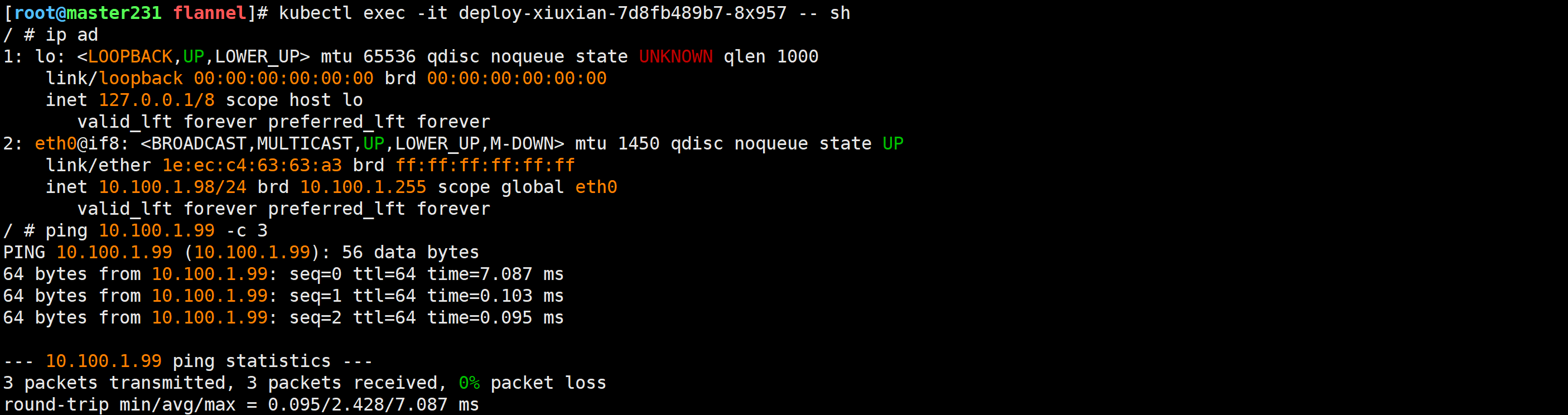

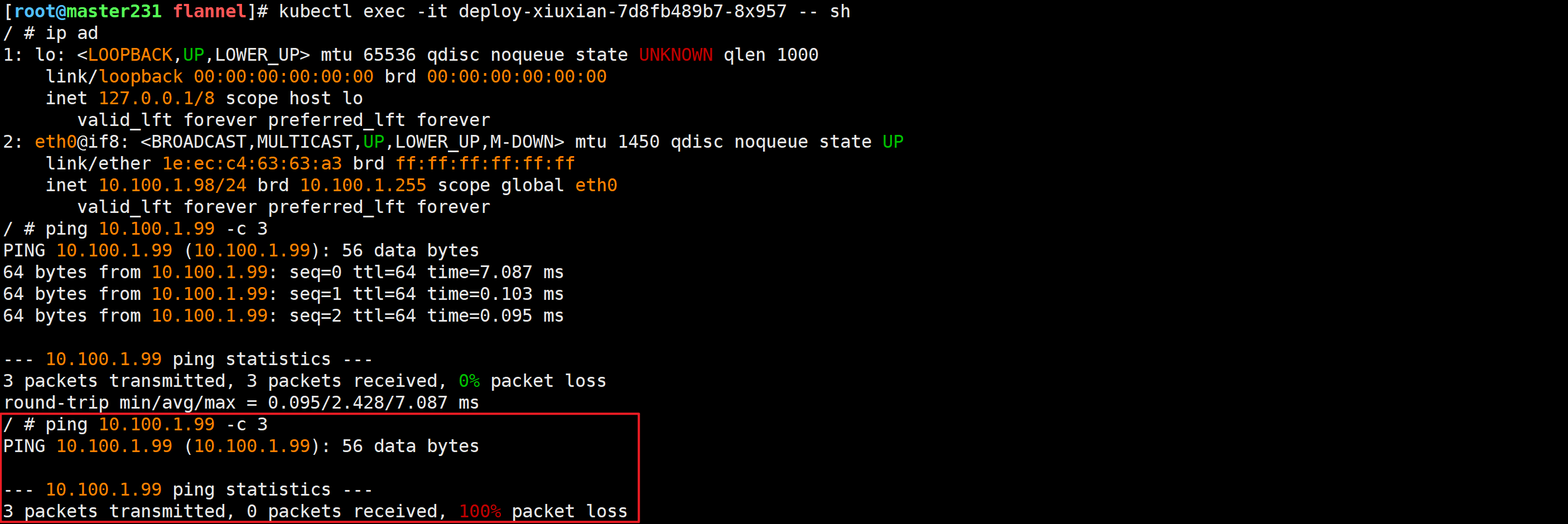

[root@master231 flannel]# kubectl exec -it deploy-xiuxian-7d8fb489b7-8x957 -- sh

/ # ip ad

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: eth0@if8: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue state UP
    link/ether 1e:ec:c4:63:63:a3 brd ff:ff:ff:ff:ff:ff
    inet 10.100.1.98/24 brd 10.100.1.255 scope global eth0
       valid_lft forever preferred_lft forever

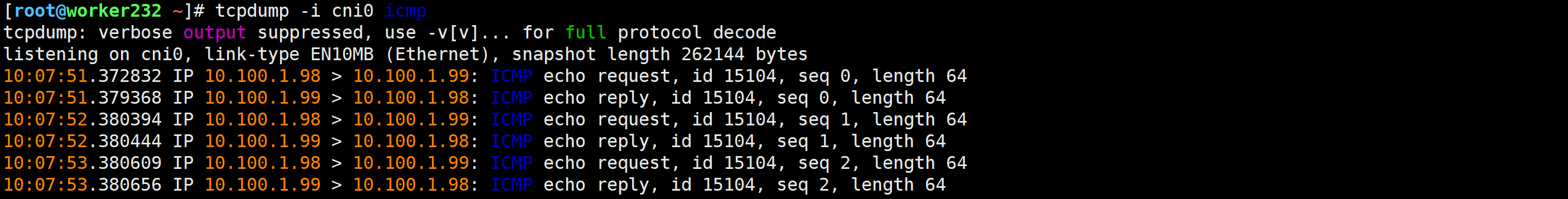

/ # ping 10.100.1.99 -c 3

[root@worker232 ~]# tcpdump -i cni0 icmp

Verify that the cni0 bridge is what lets different Pods on the same node communicate

[root@worker232 ~]# ip link delete cni0

/ # ping 10.100.1.99 -c 3
PING 10.100.1.99 (10.100.1.99): 56 data bytes

--- 10.100.1.99 ping statistics ---
3 packets transmitted, 0 packets received, 100% packet loss

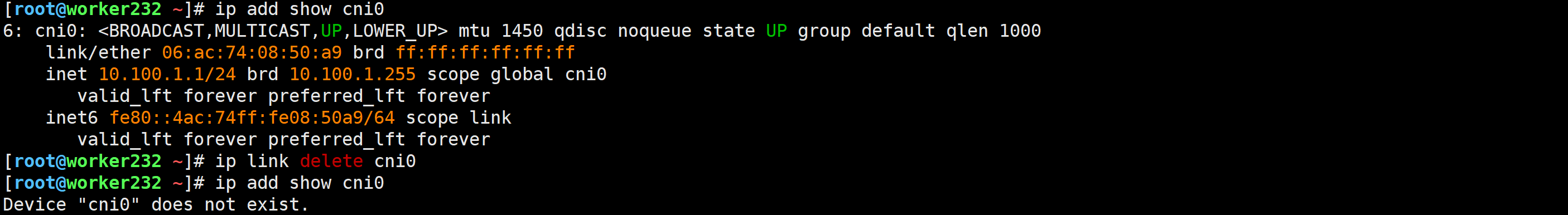

Re-create the cni0 bridge

1. Add the cni0 bridge

[root@worker232 ~]# ip link add cni0 type bridge

[root@worker232 ~]# ip addr add 10.100.1.1/24 dev cni0

[root@worker232 ~]# ip add show cni0

2. Attach the veth devices to the cni0 bridge

[root@worker232 ~]# brctl addif cni0 vethd7e8e039

[root@worker232 ~]# brctl addif cni0 veth2d51a13d

3. Bring the bridge up

[root@worker232 ~]# ip link set dev cni0 up

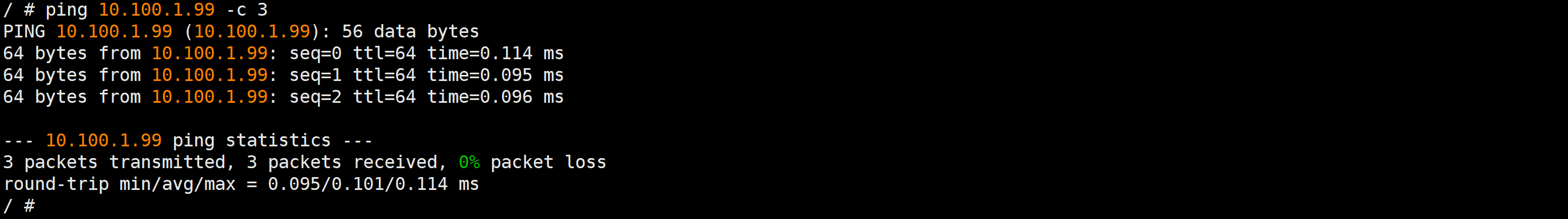

How Pods on Different Nodes Communicate

Environment preparation

[root@master231 flannel]# cat 02-ds-two-worker.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: ds-xiuxian
spec:
  selector:
    matchLabels:
      app: xiuxian
  template:
    metadata:
      labels:
        app: xiuxian
        version: v1
    spec:
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v2

[root@master231 flannel]# kubectl apply -f 02-ds-two-worker.yaml

daemonset.apps/ds-xiuxian created

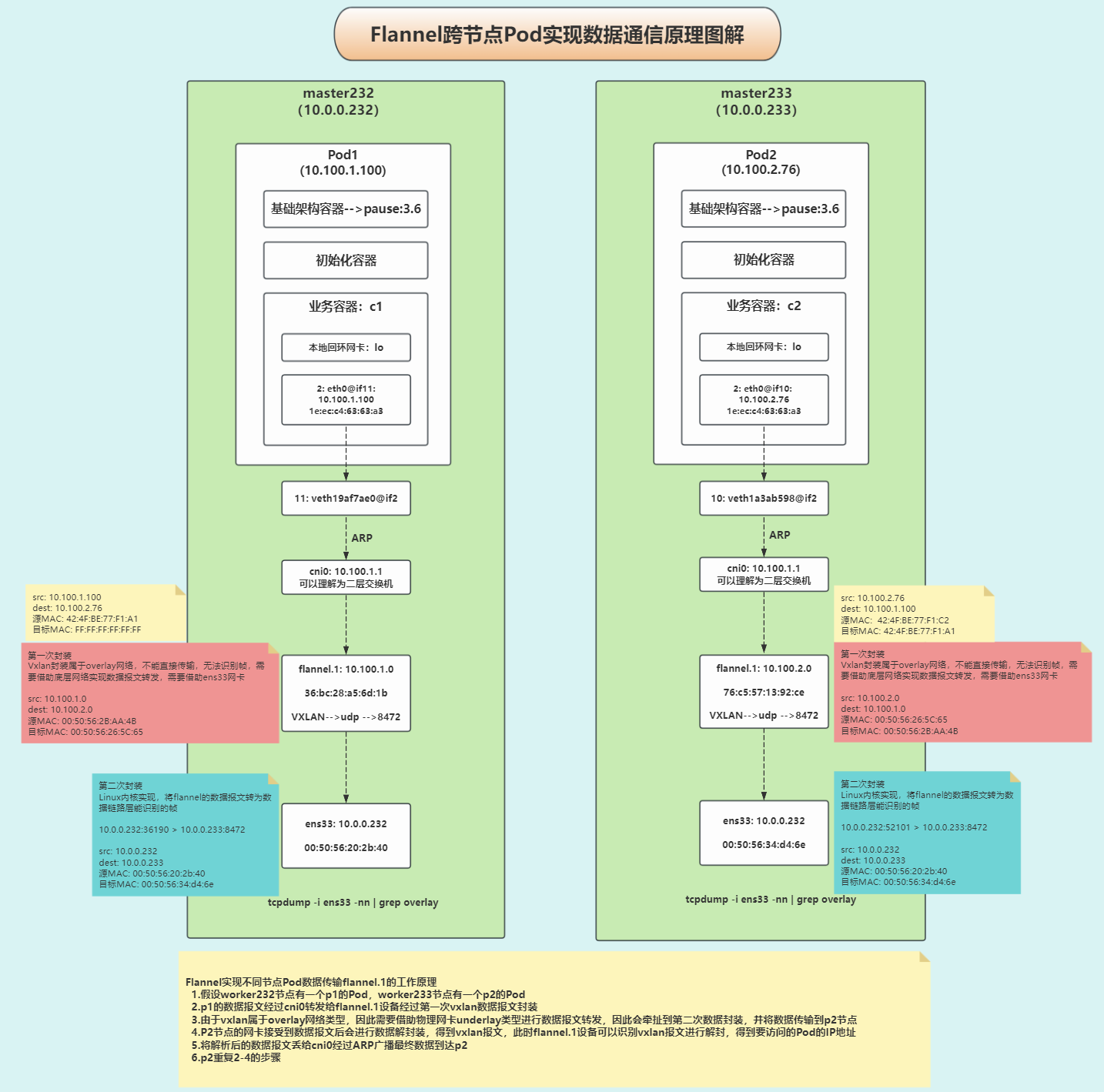

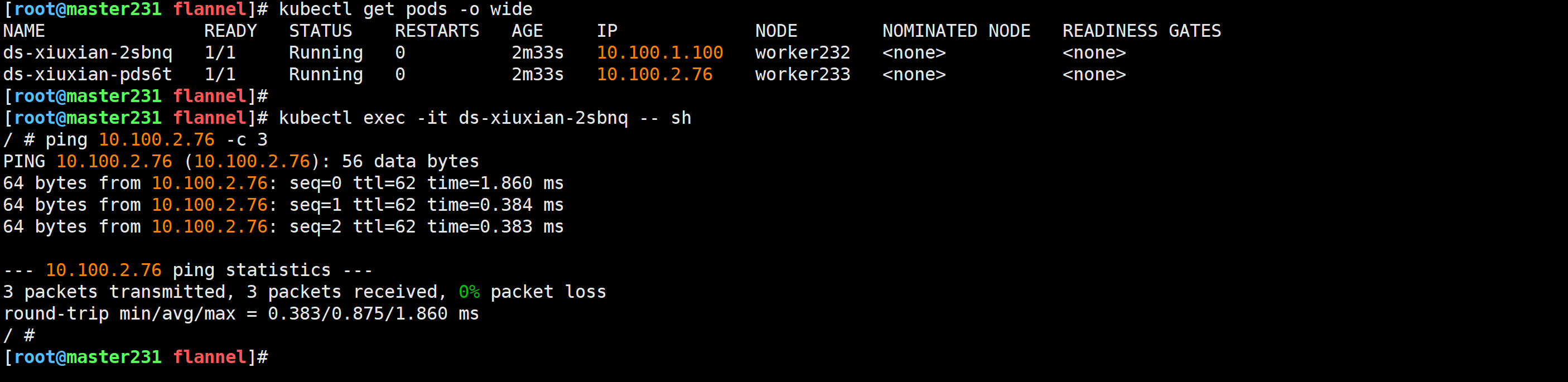

[root@master231 flannel]# kubectl get pods -o wide

[root@master231 flannel]# kubectl exec -it ds-xiuxian-2sbnq -- sh

[root@master231 flannel]# kubectl exec -it ds-xiuxian-q4rzd -- sh

Ping test

[root@master231 flannel]# kubectl exec -it ds-xiuxian-2sbnq -- sh

/ # ping 10.100.2.76 -c 3

PING 10.100.2.76 (10.100.2.76): 56 data bytes

64 bytes from 10.100.2.76: seq=0 ttl=62 time=1.860 ms

64 bytes from 10.100.2.76: seq=1 ttl=62 time=0.384 ms

64 bytes from 10.100.2.76: seq=2 ttl=62 time=0.383 ms

--- 10.100.2.76 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.383/0.875/1.860 ms

/ #

[root@master231 flannel]#

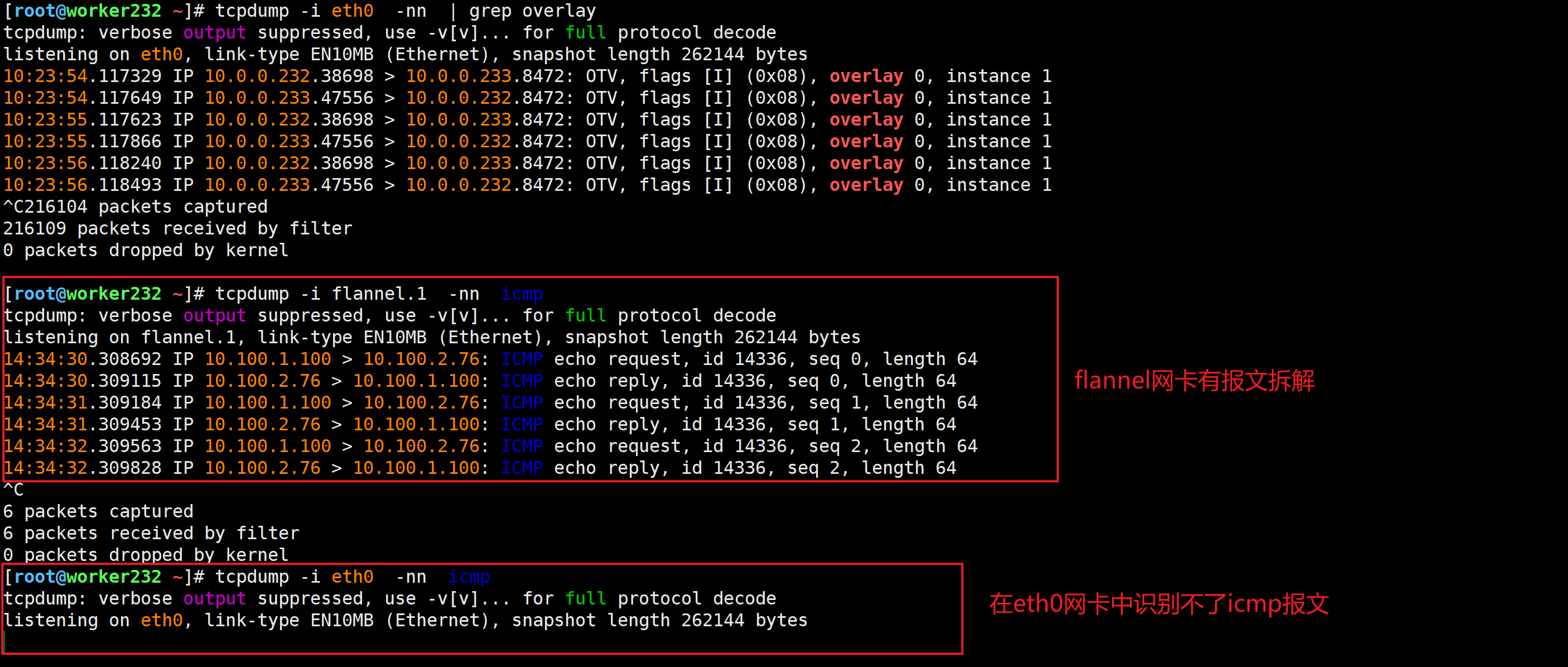

Packet capture test

Flannel considerations for production

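- 1. If Pods cannot be reached, check the routing table on every node. Without the corresponding routes, packets cannot be forwarded.

Our Pod network is 10.100.0.0/16.

- 2. By default, Flannel assigns each host a /24 (a "Class C"-sized) subnet.

Note the subnet mask "255.255.255.0": all Pods on the current node get addresses from 10.100.1.0/24.

- In summary:

- 1. A /24 contains 256 addresses. Removing the network and broadcast addresses leaves 254, and one of those (.1) is taken by the cni0 gateway, so a node can hold at most 253 Pod IPs. In practice the kubelet's default limit of 110 Pods per node is usually reached first.

- 2. Because each node takes one /24, the 10.100.0.0/16 Pod network can be split into 256 such subnets, so the cluster should be kept to at most 256 nodes.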
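The capacity arithmetic above can be checked with a couple of shell functions. This is a small sketch, assuming Flannel's one-subnet-per-node layout described here; the function names are hypothetical, and the per-node figure subtracts the network address, the broadcast address and the cni0 gateway.

```shell
#!/usr/bin/env bash
# Capacity of Flannel's default one-subnet-per-node layout.

# Usable Pod IPs in one node subnet: all addresses minus the network
# address, the broadcast address and the cni0 gateway (.1).
pod_ips_per_node() {
  local node_prefix="$1"            # e.g. 24 for a /24 per node
  echo $(( (1 << (32 - node_prefix)) - 3 ))
}

# Number of node subnets the cluster CIDR can be split into.
max_node_subnets() {
  local cluster_prefix="$1" node_prefix="$2"   # e.g. 16 and 24
  echo $(( 1 << (node_prefix - cluster_prefix) ))
}

echo "Pod IPs per node: $(pod_ips_per_node 24)"      # 253
echo "Node subnets:     $(max_node_subnets 16 24)"   # 256
```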

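Switching Flannel's backend (working mode)

Flannel backends

- udp: an early backend. Because of its poor performance, the project has deprecated it.

- vxlan: wraps the original Layer-2 frame in a UDP packet (VXLAN, MAC-in-UDP) and sends it to the other host through the physical NIC.

- host-gw: writes the routes for the Pod networks directly into each host's routing table, using the other nodes as gateways. It is efficient, but all nodes must be in the same Layer-2 network; it cannot route across subnets.

- directrouting: combines vxlan and host-gw. Nodes in the same subnet get direct host-gw-style routes, and VXLAN is used for everything else.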

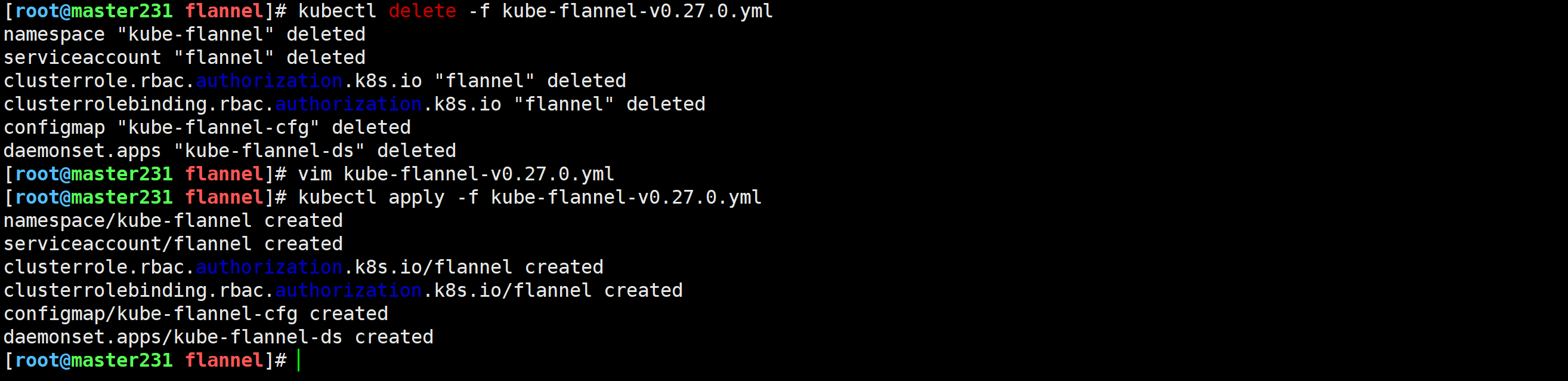
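The backend is selected entirely through the net-conf.json key in Flannel's ConfigMap, which is edited in each of the sections that follow. As a hedged sketch, the helper below prints that JSON for a given backend; the function name and argument order are hypothetical, while the keys (Network, Backend, Type, DirectRouting) are the ones Flannel's configuration uses. It deliberately rejects udp because that backend is deprecated.

```shell
#!/usr/bin/env bash
# Print a Flannel net-conf.json for a given backend.
#   flannel_net_conf NETWORK TYPE [direct]
#   TYPE:   vxlan | host-gw
#   direct: pass "direct" together with vxlan to enable DirectRouting
flannel_net_conf() {
  local network="$1" type="$2" direct="${3:-}"
  case "$type" in
    vxlan|host-gw) ;;
    *) echo "unsupported backend: $type" >&2; return 1 ;;
  esac
  local backend="\"Type\": \"$type\""
  if [ "$direct" = "direct" ]; then
    if [ "$type" != "vxlan" ]; then
      echo "DirectRouting only applies to the vxlan backend" >&2
      return 1
    fi
    backend="$backend, \"DirectRouting\": true"
  fi
  printf '{"Network": "%s", "Backend": {%s}}\n' "$network" "$backend"
}

# Example: the recommended configuration for this lab
flannel_net_conf 10.100.0.0/16 vxlan direct
```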

Switch Flannel's backend to "vxlan"

1. Edit the configuration file

[root@master231 flannel]# vim kube-flannel-v0.27.0.yml
...
  net-conf.json: |
    {
      "Network": "10.100.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

2. Re-create the resources

[root@master231 cni]# kubectl delete -f kube-flannel.yml

[root@master231 cni]# kubectl apply -f kube-flannel.yml

3. Check the network

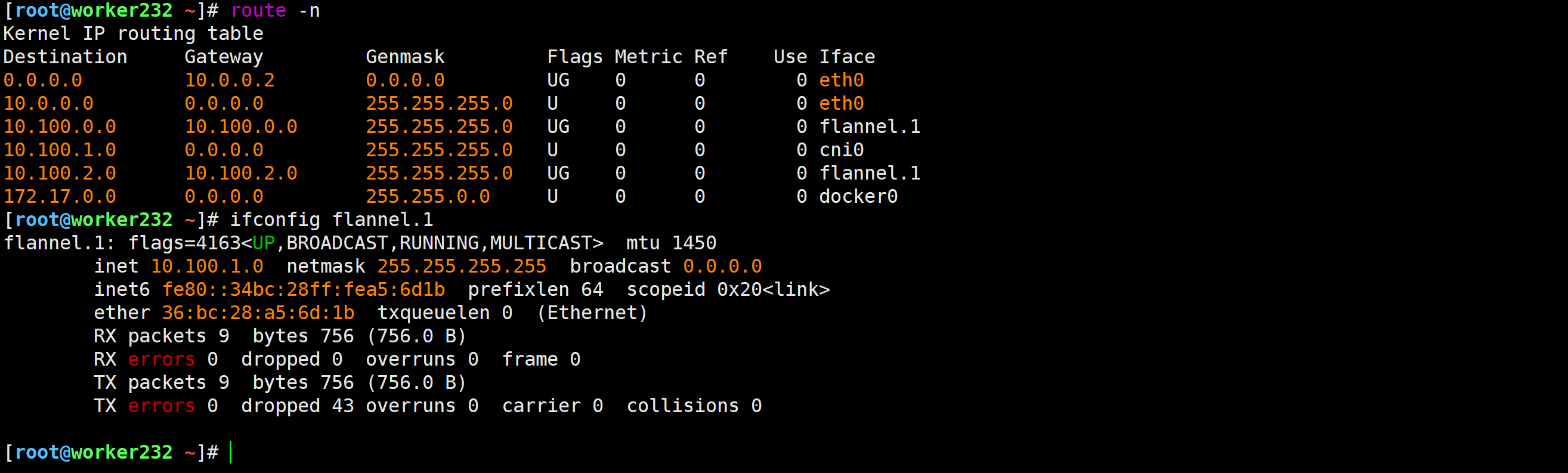

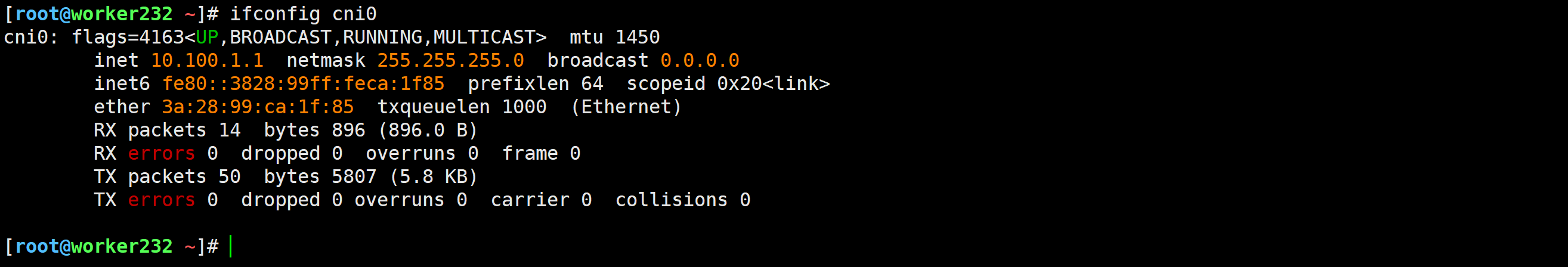

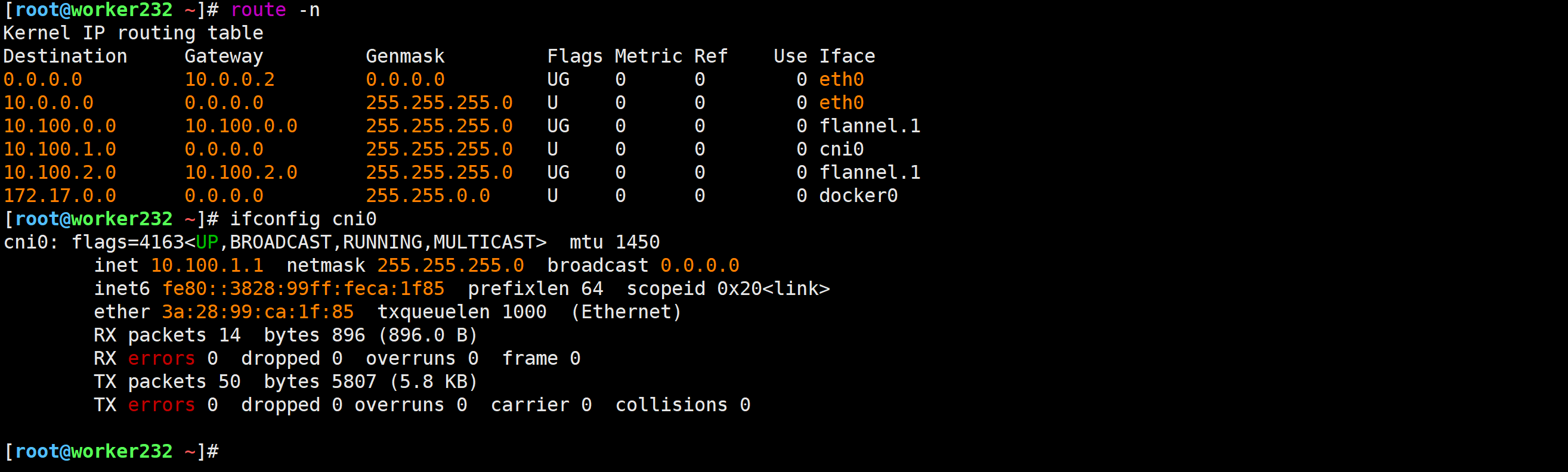

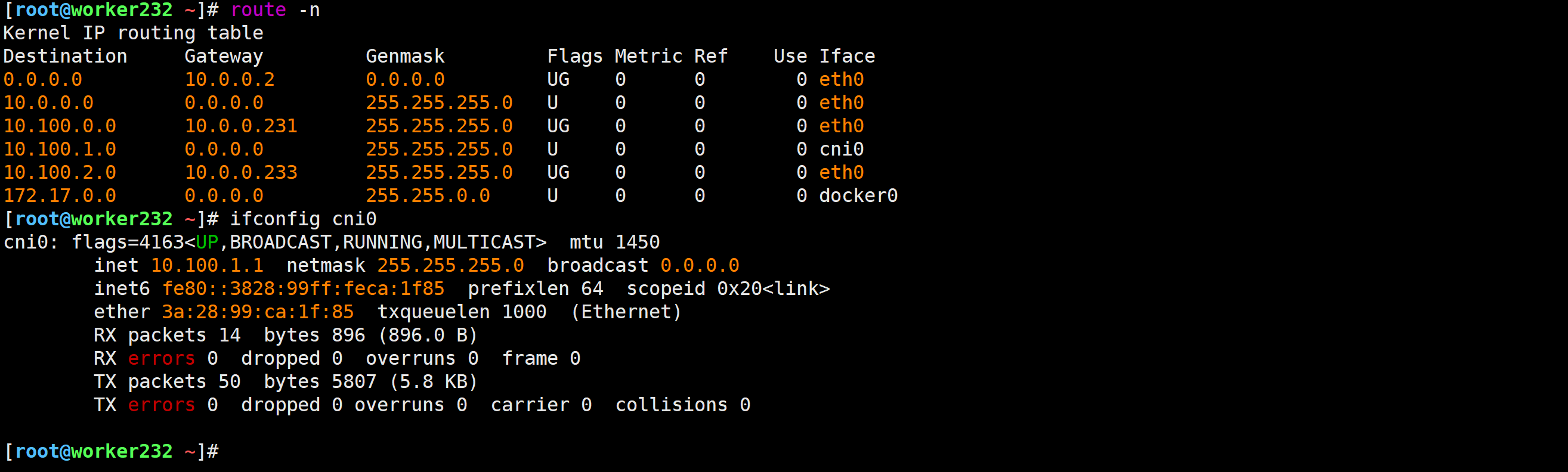

[root@worker232 ~]# route -n

Switch Flannel's backend to "host-gw"

1. Edit the configuration file

[root@master231 flannel]# vim kube-flannel-v0.27.0.yml
...
  net-conf.json: |
    {
      "Network": "10.100.0.0/16",
      "Backend": {
        "Type": "host-gw"
      }
    }

2. Re-create the resources

[root@master231 cni]# kubectl delete -f kube-flannel.yml

[root@master231 cni]# kubectl apply -f kube-flannel.yml

3. Check the network

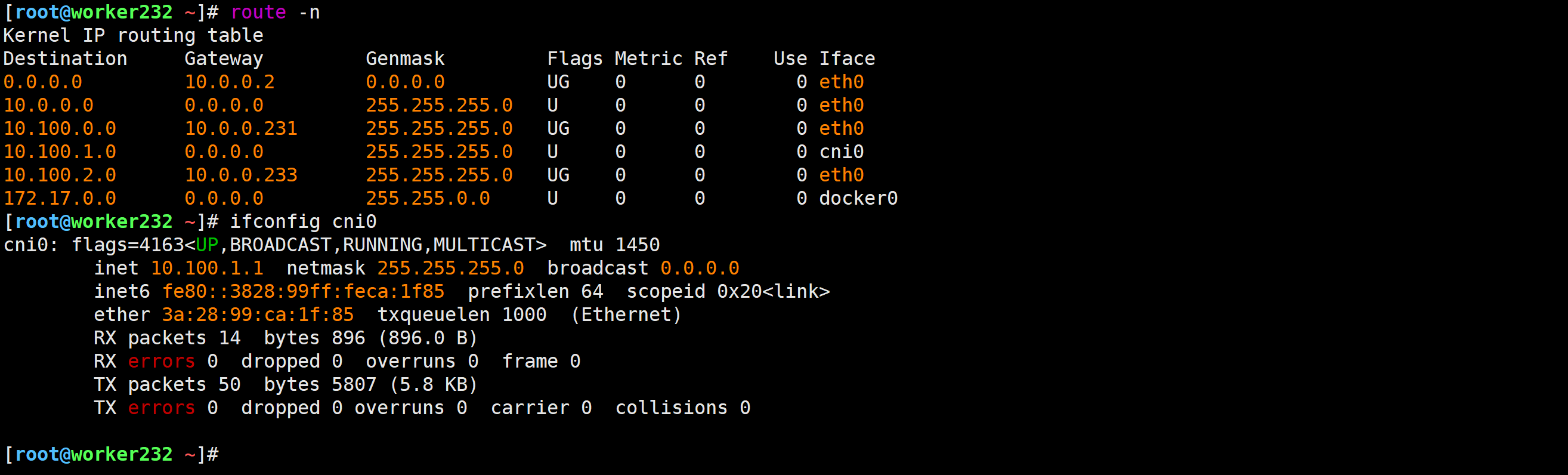

[root@worker232 ~]# route -n

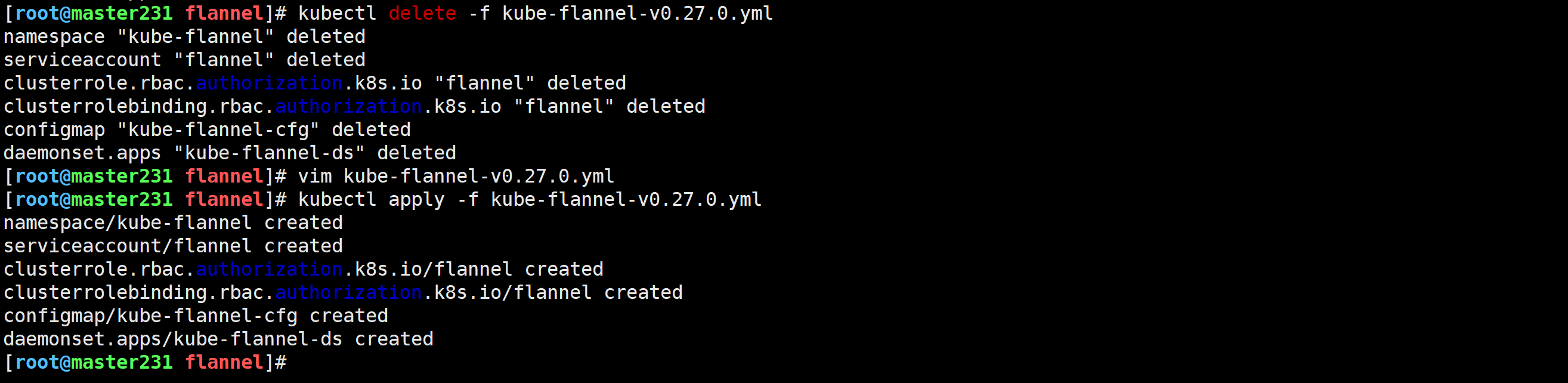

Switch Flannel's backend to "DirectRouting" (recommended)

1. Edit the configuration file

[root@master231 cni]# vim kube-flannel.yml
...
  net-conf.json: |
    {
      "Network": "10.100.0.0/16",
      "Backend": {
        "Type": "vxlan",
        "DirectRouting": true
      }
    }

2. Re-create the resources

[root@master231 ~]# kubectl delete -f kube-flannel-v0.27.0.yml

[root@master231 ~]# kubectl apply -f kube-flannel-v0.27.0.yml

3. Check the network

[root@worker232 ~]# route -n
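After each switch, `route -n` on worker232 shows which path Pod traffic takes. The helper below is a hypothetical sketch that labels each Pod route from that output: routes on flannel.1 go through the VXLAN tunnel, routes that leave through a physical NIC with another node as gateway are direct (host-gw or DirectRouting) routes, and the cni0 route is the node's own subnet. It assumes the standard eight-column `route -n` layout and this lab's 10.100. prefix.

```shell
#!/usr/bin/env bash
# Classify Flannel Pod routes from `route -n` output read on stdin.
# Columns: Destination Gateway Genmask Flags Metric Ref Use Iface
classify_pod_routes() {
  local prefix="${1:-10.100.}"
  awk -v p="$prefix" '
    index($1, p) == 1 {
      if ($8 == "flannel.1")  mode = "vxlan"    # tunnelled through VXLAN
      else if ($8 == "cni0")  mode = "local"    # this node'"'"'s own Pod subnet
      else if ($4 ~ /G/)      mode = "direct"   # via another node, host-gw style
      else                    mode = "other"
      print $1, $2, $8, mode
    }'
}

# Usage on a node:
#   route -n | classify_pod_routes
```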

🌟Flannel Troubleshooting Cases

Case 1: Flannel plugin not installed

Symptoms

[root@master231 pods]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

xixi 0/1 ContainerCreating 0 169m <none> worker233 <none> <none>

[root@master231 pods]#

[root@master231 pods]# kubectl describe pod xixi

Name: xixi

Namespace: default

Priority: 0

Node: worker233/10.0.0.233
...
Events:
  Type     Reason                  Age                      From     Message
  ----     ------                  ----                     ----     -------
  Warning  FailedCreatePodSandBox  9m43s (x3722 over 169m)  kubelet  (combined from similar events): Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "ae48b3c943557dafdc5f8a3b06897da299233021ed2fd907818cc5acf86c16eb" network for pod "xixi": networkPlugin cni failed to set up pod "xixi_default" network: loadFlannelSubnetEnv failed: open /run/flannel/subnet.env: no such file or directory
  Normal   SandboxChanged          4m43s (x3850 over 169m)  kubelet  Pod sandbox changed, it will be killed and re-created.

[root@master231 pods]#

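Cause

The file /run/flannel/subnet.env is missing, which means the Flannel plugin has not been deployed (flanneld writes this file once it is running on the node). Installing the plugin fixes the problem.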

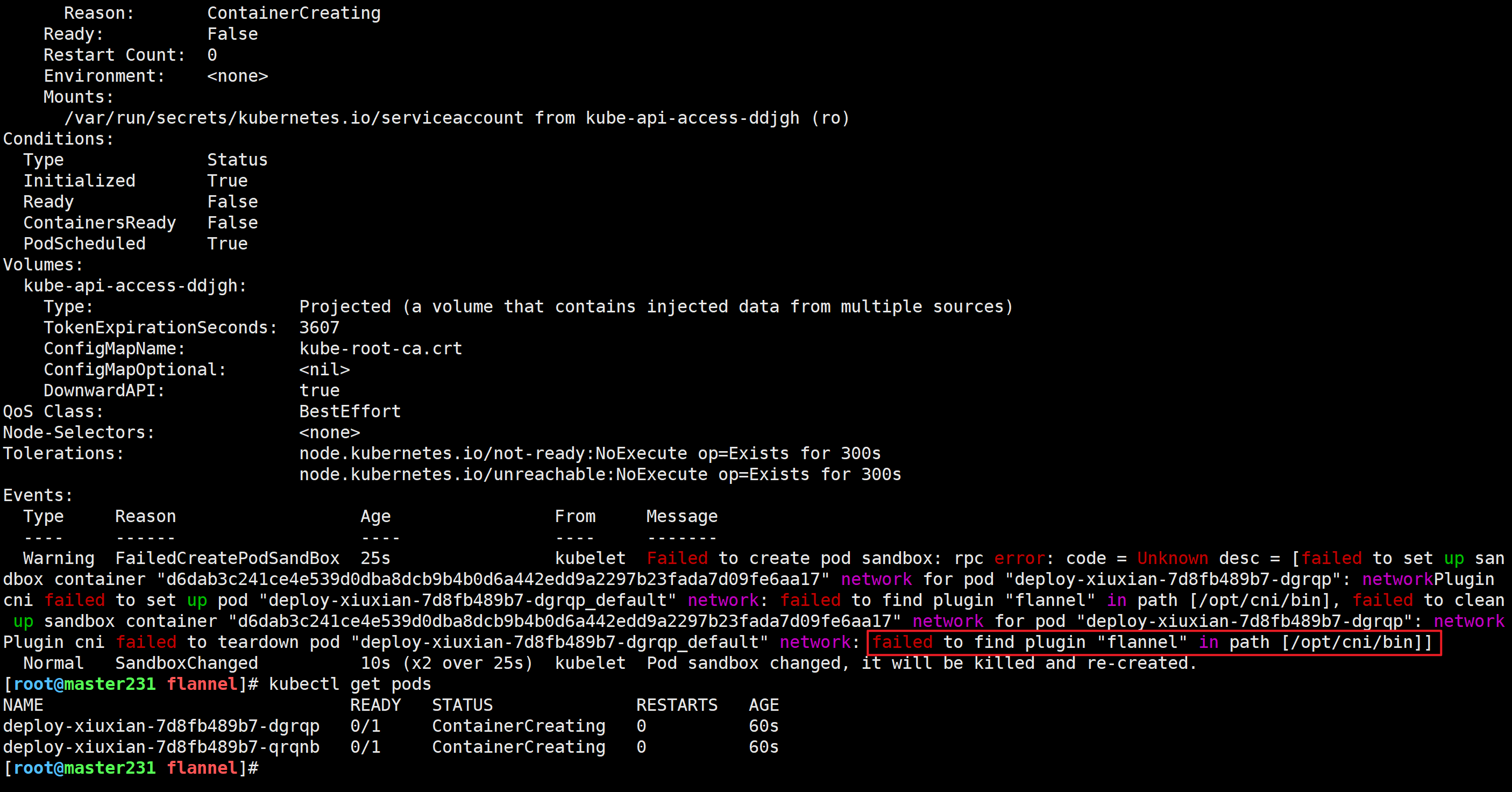

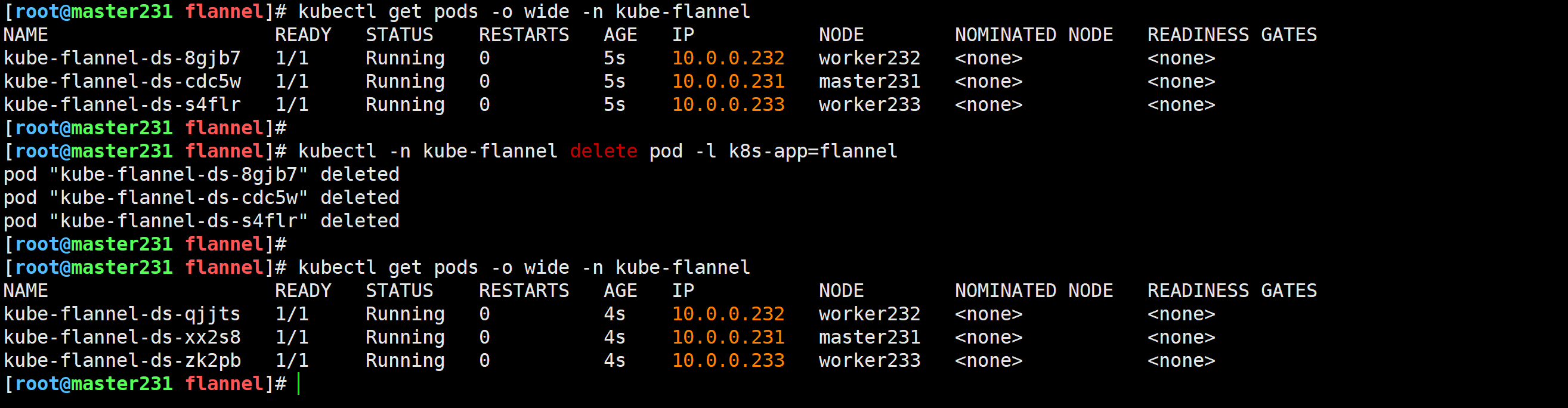
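A quick node-side check for this case is to read the subnet that flanneld recorded. The sketch below assumes the usual KEY=VALUE layout of subnet.env (FLANNEL_NETWORK, FLANNEL_SUBNET, FLANNEL_MTU, FLANNEL_IPMASQ); the function name and its optional path argument are hypothetical and exist only to make it testable.

```shell
#!/usr/bin/env bash
# Print FLANNEL_SUBNET from flanneld's subnet.env, or fail with a hint
# when the file is missing (the Case 1 symptom).
flannel_subnet() {
  local env_file="${1:-/run/flannel/subnet.env}"
  if [ ! -f "$env_file" ]; then
    echo "missing $env_file: is the kube-flannel DaemonSet running on this node?" >&2
    return 1
  fi
  # The file holds KEY=VALUE lines; print only the subnet value.
  sed -n 's/^FLANNEL_SUBNET=//p' "$env_file"
}

# Usage on a worker:
#   flannel_subnet        # e.g. 10.100.1.1/24 on worker232
```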

Case 2: Missing flannel binary

Symptoms

[root@master231 flannel]# kubectl describe pods deploy-xiuxian-7d8fb489b7-dgrqp

Name: deploy-xiuxian-7d8fb489b7-dgrqp

Namespace: default

Priority: 0

Node: worker232/10.0.0.232

......

Events:
  Type     Reason                  Age                From     Message
  ----     ------                  ----               ----     -------
  Warning  FailedCreatePodSandBox  25s                kubelet  Failed to create pod sandbox: rpc error: code = Unknown desc = [failed to set up sandbox container "d6dab3c241ce4e539d0dba8dcb9b4b0d6a442edd9a2297b23fada7d09fe6aa17" network for pod "deploy-xiuxian-7d8fb489b7-dgrqp": networkPlugin cni failed to set up pod "deploy-xiuxian-7d8fb489b7-dgrqp_default" network: failed to find plugin "flannel" in path [/opt/cni/bin], failed to clean up sandbox container "d6dab3c241ce4e539d0dba8dcb9b4b0d6a442edd9a2297b23fada7d09fe6aa17" network for pod "deploy-xiuxian-7d8fb489b7-dgrqp": networkPlugin cni failed to teardown pod "deploy-xiuxian-7d8fb489b7-dgrqp_default" network: failed to find plugin "flannel" in path [/opt/cni/bin]]
  Normal   SandboxChanged          10s (x2 over 25s)  kubelet  Pod sandbox changed, it will be killed and re-created.

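Cause

Cause: there is no binary named "flannel" under "/opt/cni/bin".

Solution

The official Flannel manifest includes an init container that copies the flannel binary into /opt/cni/bin on the host. Deleting the Flannel Pods makes the DaemonSet re-create them, and the init container restores the file.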

[root@master231 flannel]# kubectl -n kube-flannel delete pod -l k8s-app=flannel

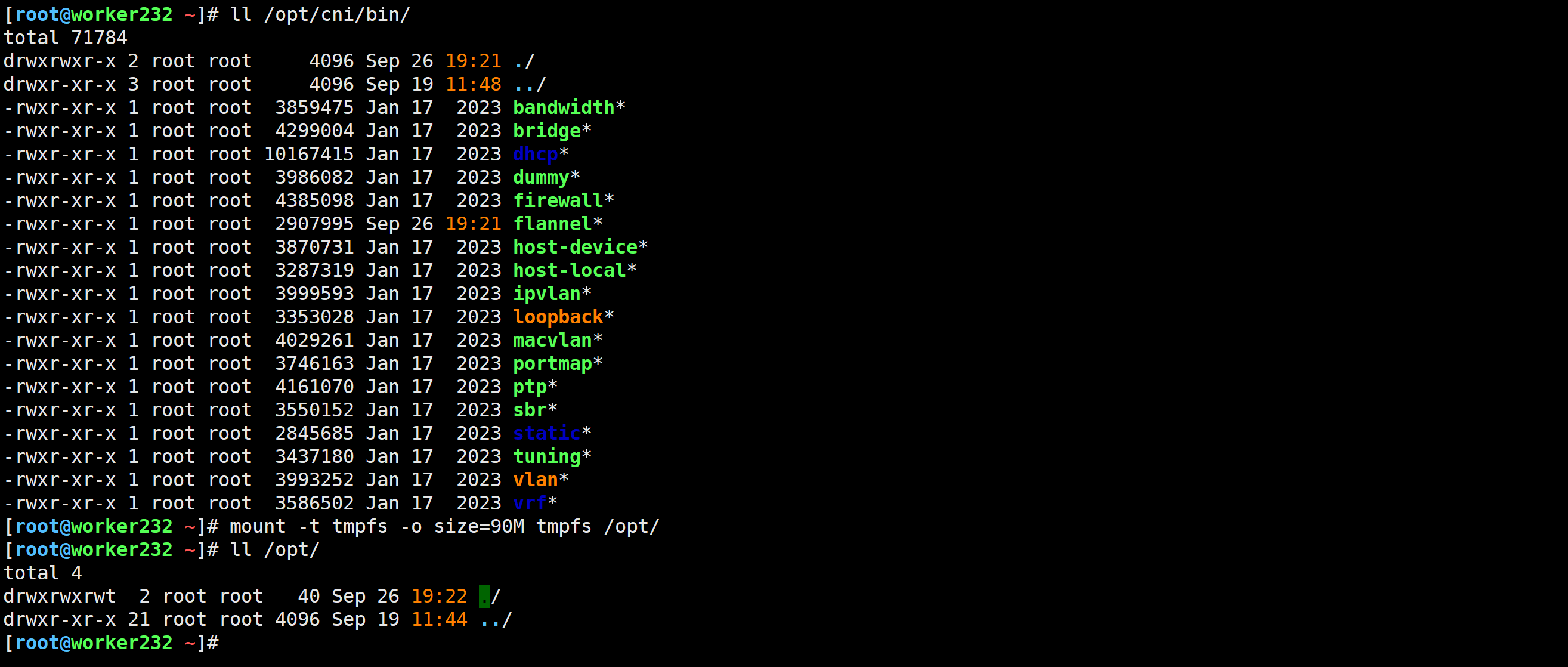

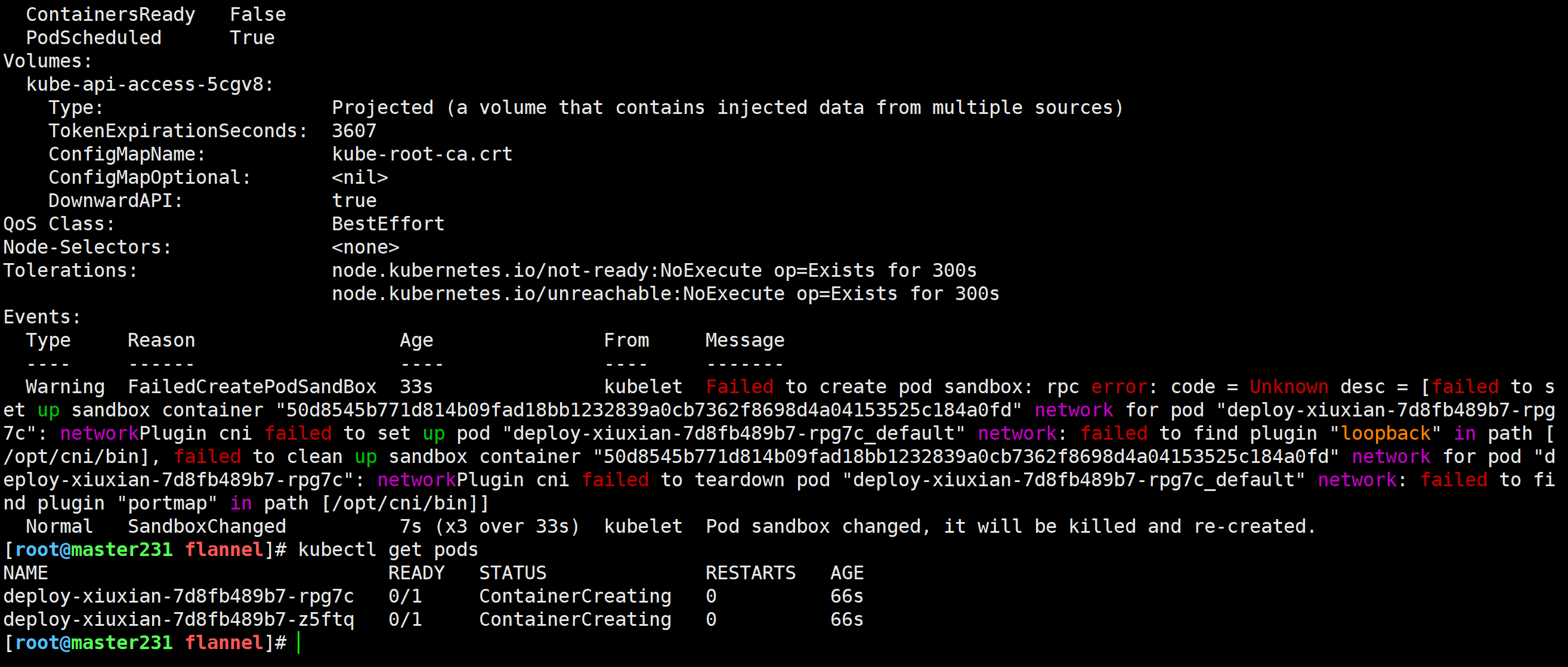
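Cases 2 to 4 all come down to binaries missing from /opt/cni/bin, so it can help to check the directory directly on the node. This is a sketch only: the function name is hypothetical, and the default plugin list (flannel, loopback, portmap, bridge, host-local) is an assumption based on the errors in these cases and Flannel's default CNI config; adjust it to whatever your CNI configuration references.

```shell
#!/usr/bin/env bash
# Print each CNI plugin that is missing or not executable in a directory
# (default /opt/cni/bin); return non-zero if anything is missing.
missing_cni_plugins() {
  local dir="${1:-/opt/cni/bin}"
  shift || true
  local plugins=("$@")
  if [ "${#plugins[@]}" -eq 0 ]; then
    plugins=(flannel loopback portmap bridge host-local)   # assumed default set
  fi
  local p rc=0
  for p in "${plugins[@]}"; do
    if [ ! -x "$dir/$p" ]; then
      echo "$p"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on a worker:
#   missing_cni_plugins || echo "restore the CNI binaries above"
```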

Case 3: CNI plugin binaries missing

Reproducing the fault

[root@worker232 ~]# mount -t tmpfs -o size=90M tmpfs /opt/

Create a test Pod

[root@master231 flannel]# kubectl apply -f 01-deploy-one-worker.yaml

[root@master231 flannel]# kubectl describe pod deploy-xiuxian-7d8fb489b7-rpg7c

Name: deploy-xiuxian-7d8fb489b7-rpg7c

Namespace: default

Priority: 0

Node: worker232/10.0.0.232

...

Events:
  Type     Reason                  Age                From     Message
  ----     ------                  ----               ----     -------
  Warning  FailedCreatePodSandBox  33s                kubelet  Failed to create pod sandbox: rpc error: code = Unknown desc = [failed to set up sandbox container "50d8545b771d814b09fad18bb1232839a0cb7362f8698d4a04153525c184a0fd" network for pod "deploy-xiuxian-7d8fb489b7-rpg7c": networkPlugin cni failed to set up pod "deploy-xiuxian-7d8fb489b7-rpg7c_default" network: failed to find plugin "loopback" in path [/opt/cni/bin], failed to clean up sandbox container "50d8545b771d814b09fad18bb1232839a0cb7362f8698d4a04153525c184a0fd" network for pod "deploy-xiuxian-7d8fb489b7-rpg7c": networkPlugin cni failed to teardown pod "deploy-xiuxian-7d8fb489b7-rpg7c_default" network: failed to find plugin "portmap" in path [/opt/cni/bin]]
  Normal   SandboxChanged          7s (x3 over 33s)   kubelet  Pod sandbox changed, it will be killed and re-created.

[root@master231 flannel]#

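Cause

The "loopback" and "portmap" plugins are missing from "/opt/cni/bin"; portmap is the plugin that handles port mappings.

Check whether the directory actually contains the plugin binaries. The problem can also be caused by a colleague mistakenly mounting something over the path, so look for conflicting mount points.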

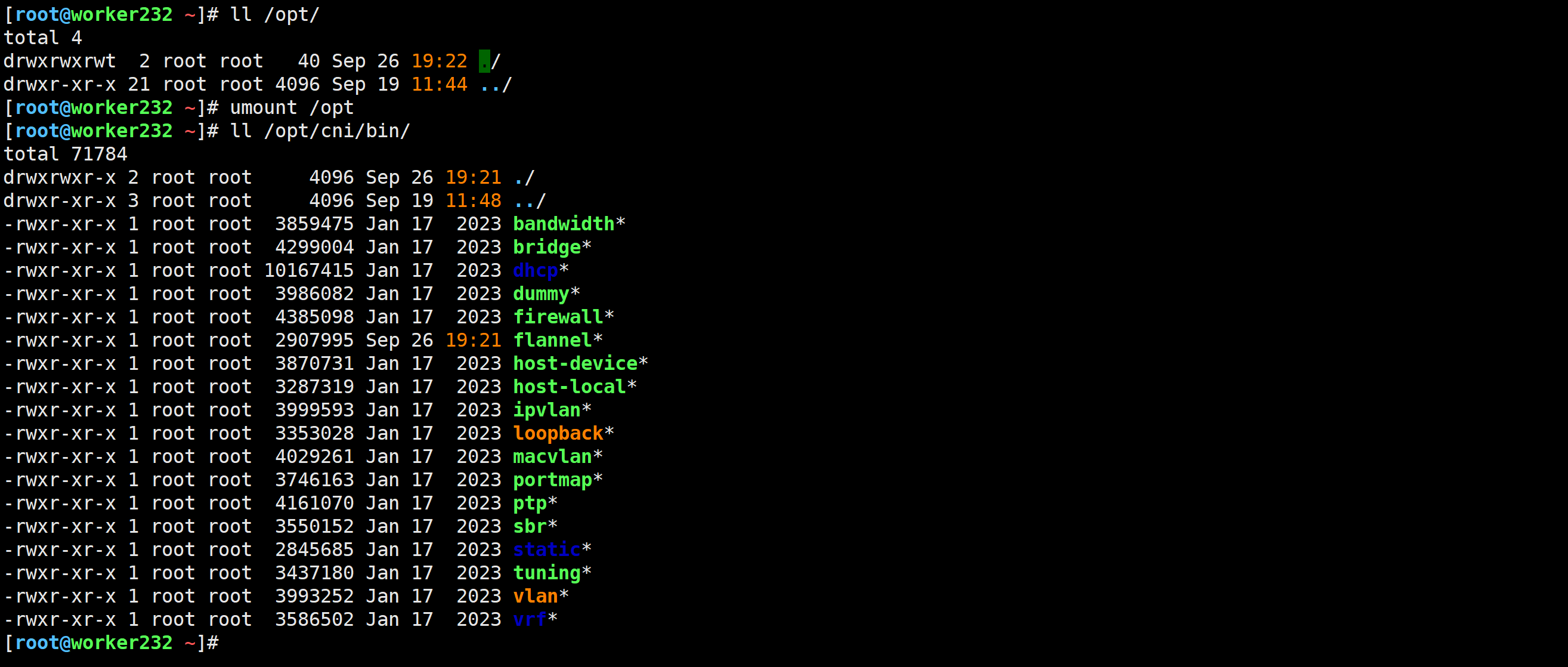
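When several plugins are missing, the event message lists them in a long, hard-to-read line. The helper below is a hypothetical sketch that extracts the plugin names from that text; it assumes the message keeps the `failed to find plugin "NAME"` wording seen in these events.

```shell
#!/usr/bin/env bash
# Read `kubectl describe pod` output (or an event message) on stdin and
# print each CNI plugin reported as missing, once.
missing_plugins_from_events() {
  grep -o 'failed to find plugin "[^"]*"' |
    sed 's/^failed to find plugin "\(.*\)"$/\1/' |
    sort -u
}

# Usage:
#   kubectl describe pod deploy-xiuxian-7d8fb489b7-rpg7c | missing_plugins_from_events
```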

Solution

Unmount the conflicting filesystem

[root@worker232 ~]# umount /opt

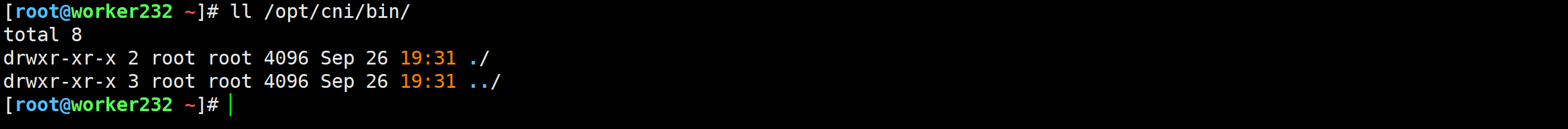

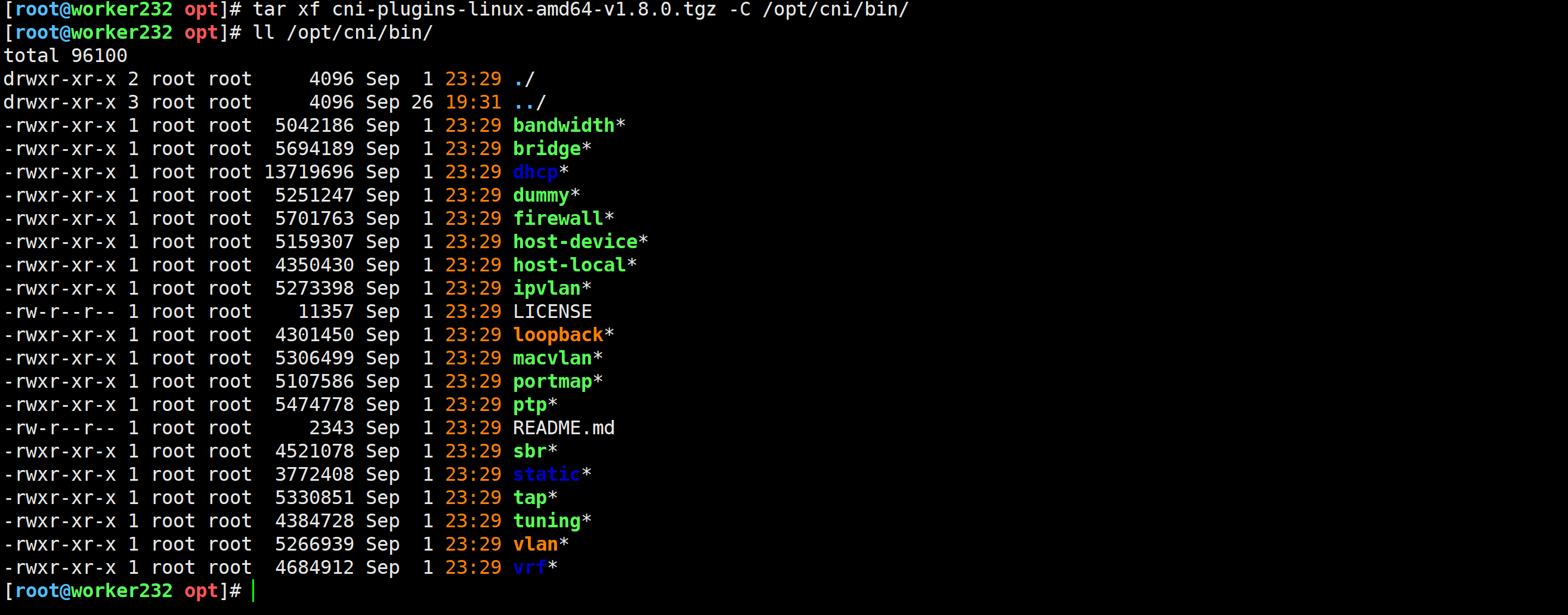

Case 4: CNI plugin package not installed

Reference: https://github.com/containernetworking/plugins

[root@worker232 ~]# ll /opt/cni/bin/

[root@master231 flannel]# kubectl describe pod deploy-xiuxian-7d8fb489b7-95cv5

Name: deploy-xiuxian-7d8fb489b7-95cv5

Namespace: default

Priority: 0

Node: worker232/10.0.0.232

...

Events:
  Type     Reason                  Age               From     Message
  ----     ------                  ----              ----     -------
  Warning  FailedCreatePodSandBox  93s               kubelet  Failed to create pod sandbox: rpc error: code = Unknown desc = [failed to set up sandbox container "e8924c0c808ce4cfafe523e60c99427cbdce870eb69021a1141a79146cf8c57c" network for pod "deploy-xiuxian-7d8fb489b7-95cv5": networkPlugin cni failed to set up pod "deploy-xiuxian-7d8fb489b7-95cv5_default" network: failed to find plugin "loopback" in path [/opt/cni/bin], failed to clean up sandbox container "e8924c0c808ce4cfafe523e60c99427cbdce870eb69021a1141a79146cf8c57c" network for pod "deploy-xiuxian-7d8fb489b7-95cv5": networkPlugin cni failed to teardown pod "deploy-xiuxian-7d8fb489b7-95cv5_default" network: failed to find plugin "portmap" in path [/opt/cni/bin]]
  Normal   SandboxChanged          4s (x9 over 92s)  kubelet  Pod sandbox changed, it will be killed and re-created.

Download the CNI plugin package

[root@worker232 ~]# wget https://github.com/containernetworking/plugins/releases/download/v1.8.0/cni-plugins-linux-amd64-v1.8.0.tgz

Extract the package into /opt/cni/bin

[root@worker232 opt]# tar xf cni-plugins-linux-amd64-v1.8.0.tgz -C /opt/cni/bin/

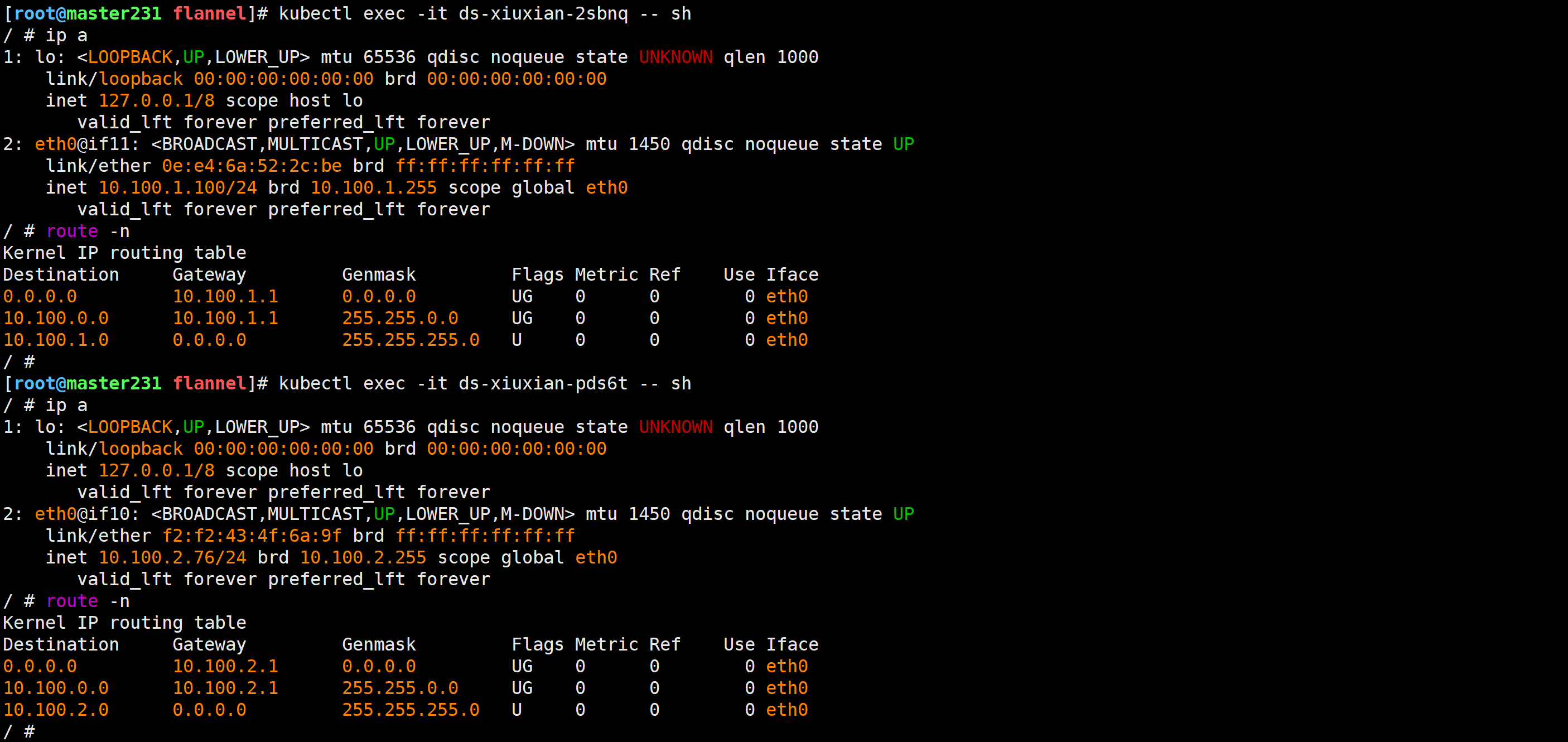

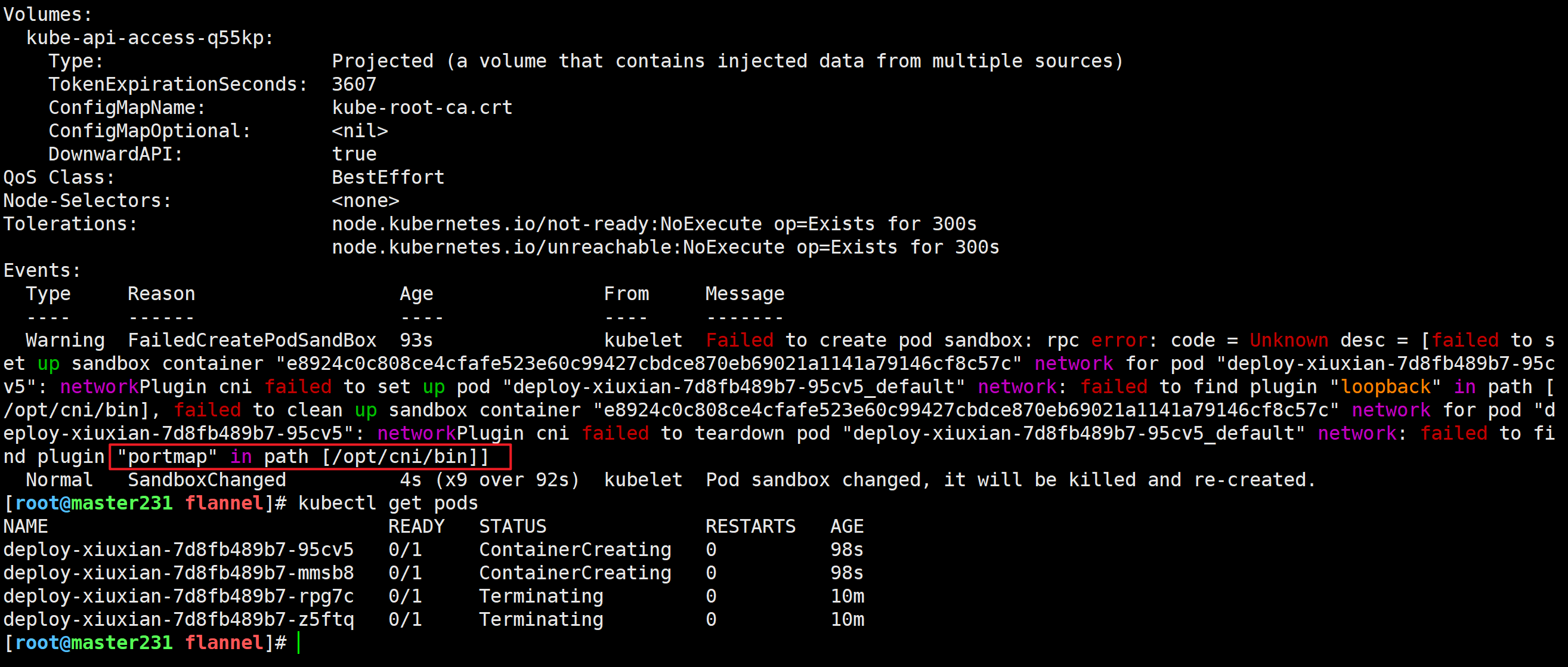
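For other versions or CPU architectures, only the version and arch parts of the release URL change. The function below is a small hypothetical helper that rebuilds the URL used in the wget command above; the pattern is copied from that command, and the defaults match it.

```shell
#!/usr/bin/env bash
# Build the release URL of the containernetworking/plugins tarball.
cni_plugins_url() {
  local version="${1:-v1.8.0}" arch="${2:-amd64}"
  echo "https://github.com/containernetworking/plugins/releases/download/${version}/cni-plugins-linux-${arch}-${version}.tgz"
}

# Usage on a worker (not executed here):
#   wget "$(cni_plugins_url v1.8.0 amd64)"
#   mkdir -p /opt/cni/bin
#   tar xf cni-plugins-linux-amd64-v1.8.0.tgz -C /opt/cni/bin/
```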

Case 5: Missing Flannel routes

Check the routes

# Before deleting the Flannel Pods: the local routing table has no routes into 10.100.0.0/16

[root@master231 deployments]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.0.0.254 0.0.0.0 UG 0 0 0 eth0

10.0.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

Solution

[root@master231 deployments]# kubectl -n kube-flannel delete pods --all

pod "kube-flannel-ds-c9mp9" deleted

pod "kube-flannel-ds-pphgj" deleted

pod "kube-flannel-ds-vqr86" deleted

# After deleting them, the local routing table contains routes into 10.100.0.0/16, so the Pods are reachable again.

[root@master231 deployments]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.0.0.254 0.0.0.0 UG 0 0 0 eth0

10.0.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

10.100.1.0 10.0.0.232 255.255.255.0 UG 0 0 0 eth0

10.100.2.0 10.0.0.233 255.255.255.0 UG 0 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0
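The before/after comparison above can be turned into a pass/fail check, for example in a node health script. This is a hedged sketch: the function name is hypothetical, it reads `route -n` output on stdin, and the 10.100. prefix matches this lab's 10.100.0.0/16 Pod network.

```shell
#!/usr/bin/env bash
# Count routes into the Pod network in `route -n` output (stdin) and
# return non-zero when there are none, which is the Case 5 symptom.
has_pod_routes() {
  local prefix="${1:-10.100.}" n
  n=$(awk -v p="$prefix" 'index($1, p) == 1 { c++ } END { print c + 0 }')
  echo "pod routes: $n"
  [ "$n" -gt 0 ]
}

# Usage on master231:
#   route -n | has_pod_routes || kubectl -n kube-flannel delete pods --all
```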

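🌟Image Pull Policy

What is an image pull policy

An image pull policy controls whether the kubelet pulls the image from a registry when it creates a container.

Kubernetes supports three image pull policies: Always, Never, and IfNotPresent.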

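- Never:

- If the image exists locally, try to start the container.

- If the image does not exist locally, do not pull it from the remote registry.

- IfNotPresent:

- If the image exists locally, try to start the container.

- If the image does not exist locally, pull it from the remote registry.

- Always:

- If the image exists locally, compare the digest of the local image with the one in the remote registry. If they match, use the locally cached image; if they differ, pull the remote image and replace the local one.

- The digest can be thought of as a checksum, much like an MD5 sum (in practice it is a SHA-256 hash). Its purpose is to detect whether the image has changed and to keep the local image consistent with the one in the registry.

- If the image does not exist locally, pull it from the remote registry.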

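The default pull policy depends on the image tag: if the tag is "latest" (or no tag is given, which implies latest), the default is "Always"; for any other tag, the default is "IfNotPresent".

For example:

- "alpine:latest" and "nginx:latest" use the "latest" tag, so their default pull policy is "Always".

- "nginx:1.27.1-alpine", "ubuntu:22.04" and "centos:7.9" do not use "latest", so their default pull policy is "IfNotPresent".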

[root@master231 ~]# kubectl explain po.spec.containers.imagePullPolicy

KIND: Pod

VERSION: v1

FIELD: imagePullPolicy <string>

DESCRIPTION:
     Image pull policy. One of Always, Never, IfNotPresent. Defaults to Always
     if :latest tag is specified, or IfNotPresent otherwise. Cannot be updated.
     More info:
     https://kubernetes.io/docs/concepts/containers/images#updating-images

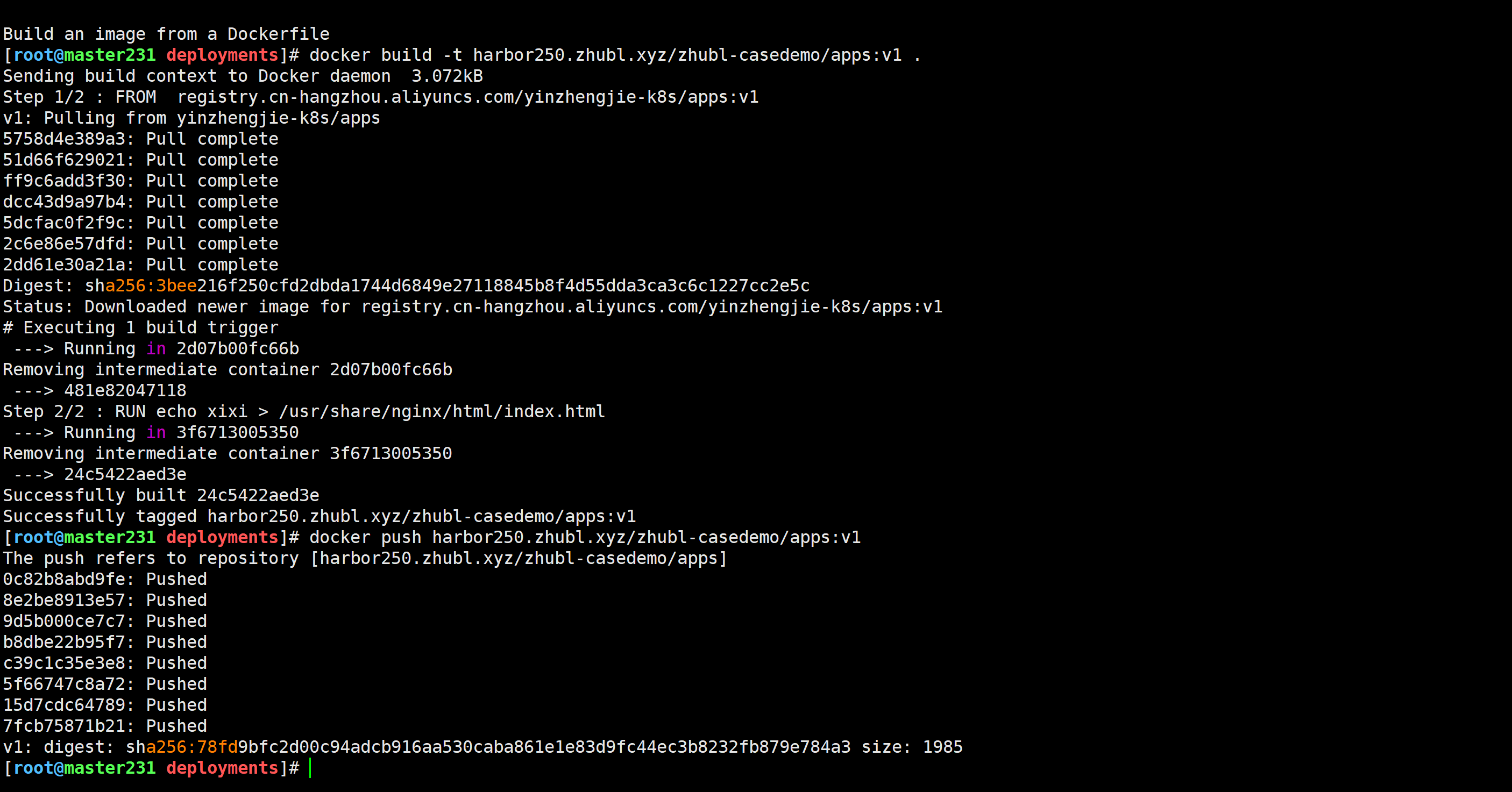
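The defaulting rule can be reproduced in a few lines of shell to predict what policy a manifest will get when imagePullPolicy is omitted. This is a sketch that mirrors the documented behaviour (latest or no tag gives Always, any other tag gives IfNotPresent, and an image pinned only by digest gets IfNotPresent); the function name is hypothetical, and it does not validate image references.

```shell
#!/usr/bin/env bash
# Predict the default imagePullPolicy for an image reference.
default_pull_policy() {
  local image="$1" has_digest=0 tag=""
  if [[ "$image" == *@* ]]; then
    has_digest=1
    image="${image%%@*}"              # drop "@sha256:..."
  fi
  local last="${image##*/}"           # last path part, so "host:5000/" is ignored
  if [[ "$last" == *:* ]]; then
    tag="${last##*:}"
  elif (( has_digest == 0 )); then
    tag="latest"                      # no tag and no digest implies :latest
  fi
  if [[ "$tag" == "latest" ]]; then
    echo Always
  else
    echo IfNotPresent
  fi
}

default_pull_policy nginx:latest                               # Always
default_pull_policy harbor250.zhubl.xyz/zhubl-casedemo/apps:v1 # IfNotPresent
```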

Environment preparation

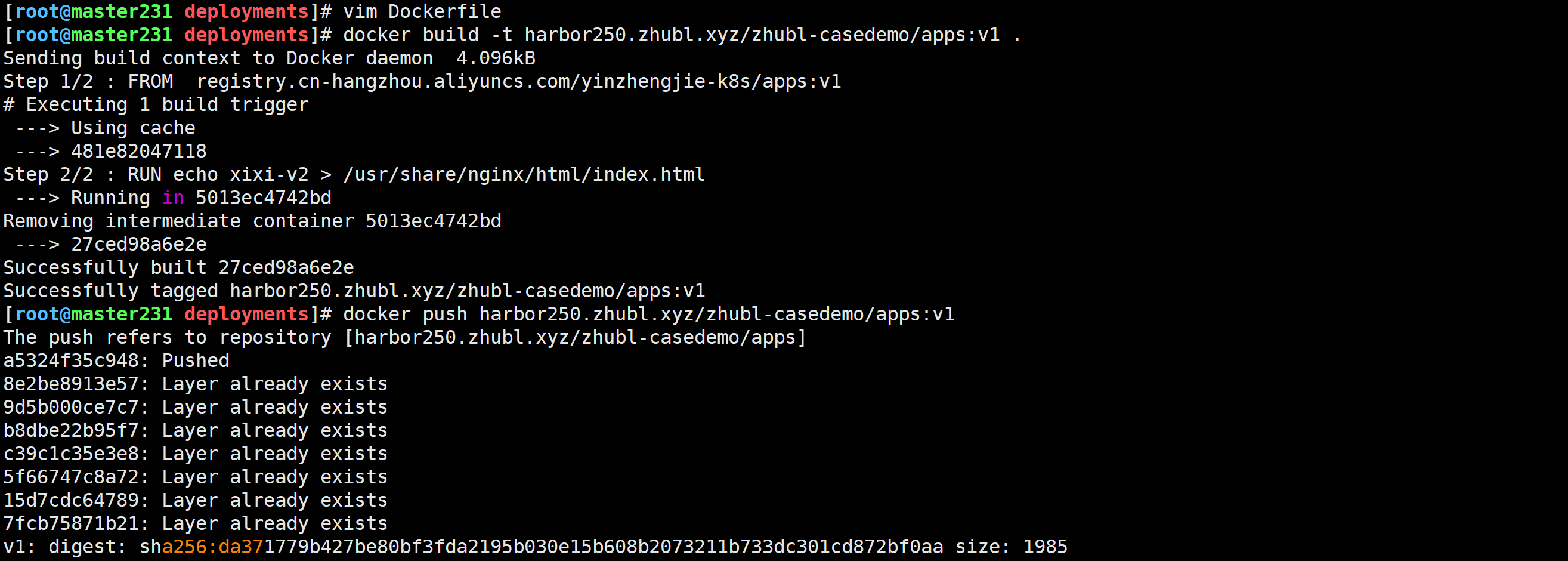

[root@master231 deployments]# cat Dockerfile

FROM registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
RUN echo xixi > /usr/share/nginx/html/index.html

[root@master231 deployments]# docker build -t harbor250.zhubl.xyz/zhubl-casedemo/apps:v1 .

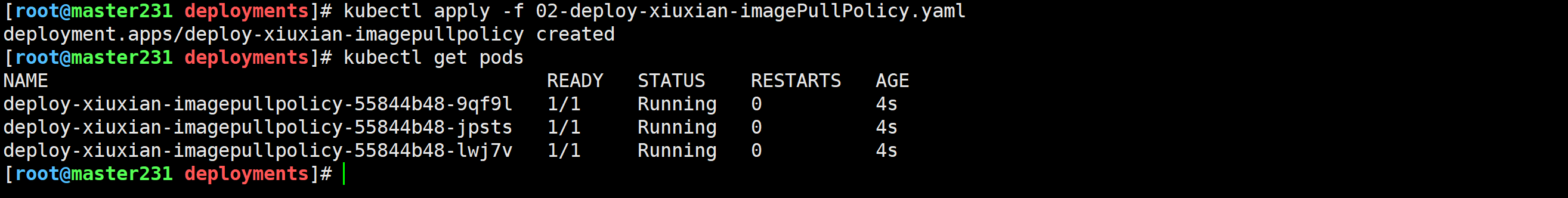

Test the Never pull policy

[root@master231 deployments]# cat 02-deploy-xiuxian-imagePullPolicy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-xiuxian-imagepullpolicy
spec:
  replicas: 3
  selector:
    matchLabels:
      app: xiuxian
  template:
    metadata:
      labels:
        app: xiuxian
        version: v1
    spec:
      nodeName: worker232
      containers:
      - name: c1
        image: harbor250.zhubl.xyz/zhubl-casedemo/apps:v1
        imagePullPolicy: Never

Test the IfNotPresent pull policy

[root@master231 deployments]# cat 02-deploy-xiuxian-imagePullPolicy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-xiuxian-imagepullpolicy
spec:
  replicas: 3
  selector:
    matchLabels:
      app: xiuxian
  template:
    metadata:
      labels:
        app: xiuxian
        version: v1
    spec:
      nodeName: worker232
      containers:
      - name: c1
        image: harbor250.zhubl.xyz/zhubl-casedemo/apps:v1
        #imagePullPolicy: Never
        imagePullPolicy: IfNotPresent

Change the image content in the remote registry

[root@master231 deployments]# cat Dockerfile

FROM registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
RUN echo xixi-v2 > /usr/share/nginx/html/index.html

[root@master231 deployments]# docker build -t harbor250.zhubl.xyz/zhubl-casedemo/apps:v1 .

[root@master231 deployments]# docker push harbor250.zhubl.xyz/zhubl-casedemo/apps:v1

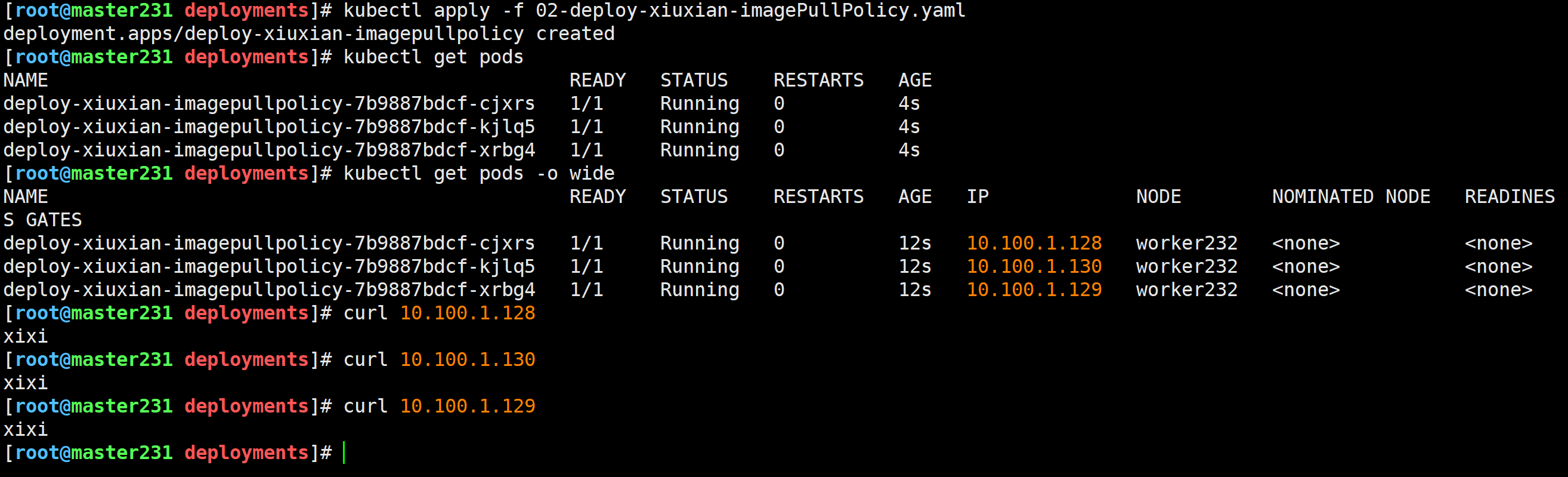

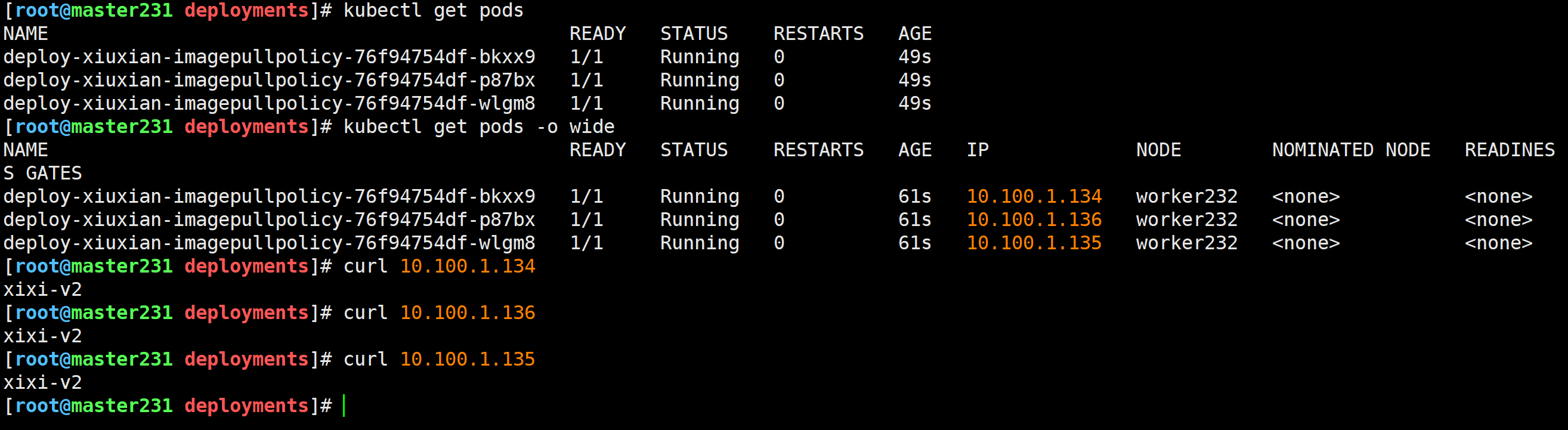

Test the Always pull policy

[root@master231 deployments]# cat 02-deploy-xiuxian-imagePullPolicy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-xiuxian-imagepullpolicy
spec:
  replicas: 3
  selector:
    matchLabels:
      app: xiuxian
  template:
    metadata:
      labels:
        app: xiuxian
        version: v1
    spec:
      nodeName: worker232
      containers:
      - name: c1
        image: harbor250.zhubl.xyz/zhubl-casedemo/apps:v1
        #imagePullPolicy: Never
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always

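Check the images on the worker node (the newly pulled image now carries the v1 tag, and the old one is left untagged as <none>)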

[root@worker232 opt]# docker images | grep harbor250.zhubl.xyz/zhubl-casedemo/apps

harbor250.zhubl.xyz/zhubl-casedemo/apps v1 27ced98a6e2e 8 minutes ago 23MB

harbor250.zhubl.xyz/zhubl-casedemo/apps <none> 24c5422aed3e 4 hours ago 23MB

[root@worker232 opt]#

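Tips

- In production, avoid the latest tag and use explicit version tags instead.

- If you must always guarantee that the deployed image matches the one in the remote registry, use Always.

- However, some overseas registries such as k8s.io and docker.io may be unreachable. In that case, pull the images manually, upload them to the worker nodes, and set the pull policy to IfNotPresent.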

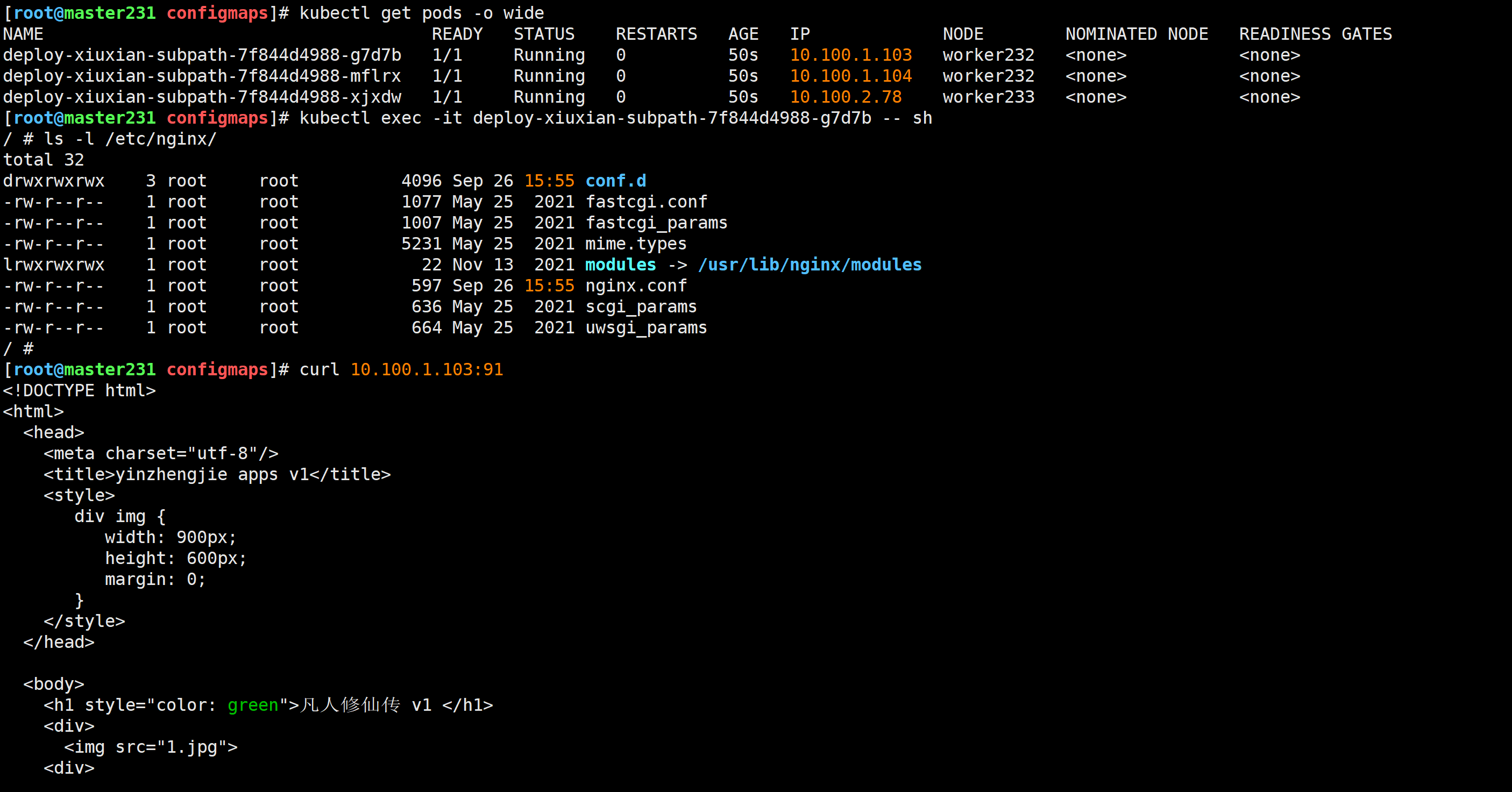

🌟Mounting a single ConfigMap file with subPath

Write the manifest

[root@master231 configmaps]# cat 05-deploy-cm-subPath.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-xiuxian-subpath
spec:
  replicas: 3
  selector:
    matchLabels:
      app: xiuxian
  template:
    metadata:
      labels:
        app: xiuxian
        version: v1
    spec:
      volumes:
      - name: dt
        hostPath:
          path: /etc/localtime
      - name: data
        configMap:
          name: cm-nginx
          items:
          - key: default.conf
            path: xiuxian.conf
      - name: main
        configMap:
          name: cm-nginx
          items:
          - key: main.conf
            path: xixi.conf
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        volumeMounts:
        - name: data
          mountPath: /etc/nginx/conf.d/
        - name: dt
          mountPath: /etc/localtime
        - name: main
          mountPath: /etc/nginx/nginx.conf
          # When subPath equals the path of an item in the ConfigMap volume, mountPath is mounted as a single file rather than a directory.
          # Note: files mounted through subPath do not receive later ConfigMap updates.
          subPath: xixi.conf
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: cm-nginx
data:
  main.conf: |
    user  nginx;
    worker_processes  auto;
    error_log  /var/log/nginx/error.log notice;
    pid        /var/run/nginx.pid;
    events {
        worker_connections  1024;
    }
    http {
        include       /etc/nginx/mime.types;
        default_type  application/octet-stream;
        log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                          '$status $body_bytes_sent "$http_referer" '
                          '"$http_user_agent" "$http_x_forwarded_for"';
        access_log  /var/log/nginx/access.log  main;
        sendfile        on;
        keepalive_timeout  65;
        include /etc/nginx/conf.d/*.conf;
    }
  default.conf: |
    server {
        listen       91;
        listen  [::]:91;
        server_name  localhost;
        location / {
            root   /usr/share/nginx/html;
            index  index.html index.htm;
        }
        error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   /usr/share/nginx/html;
        }
    }

Test and verify

[root@master231 configmaps]# kubectl exec -it deploy-xiuxian-subpath-7f844d4988-g7d7b -- sh

/ # ls -l /etc/nginx/

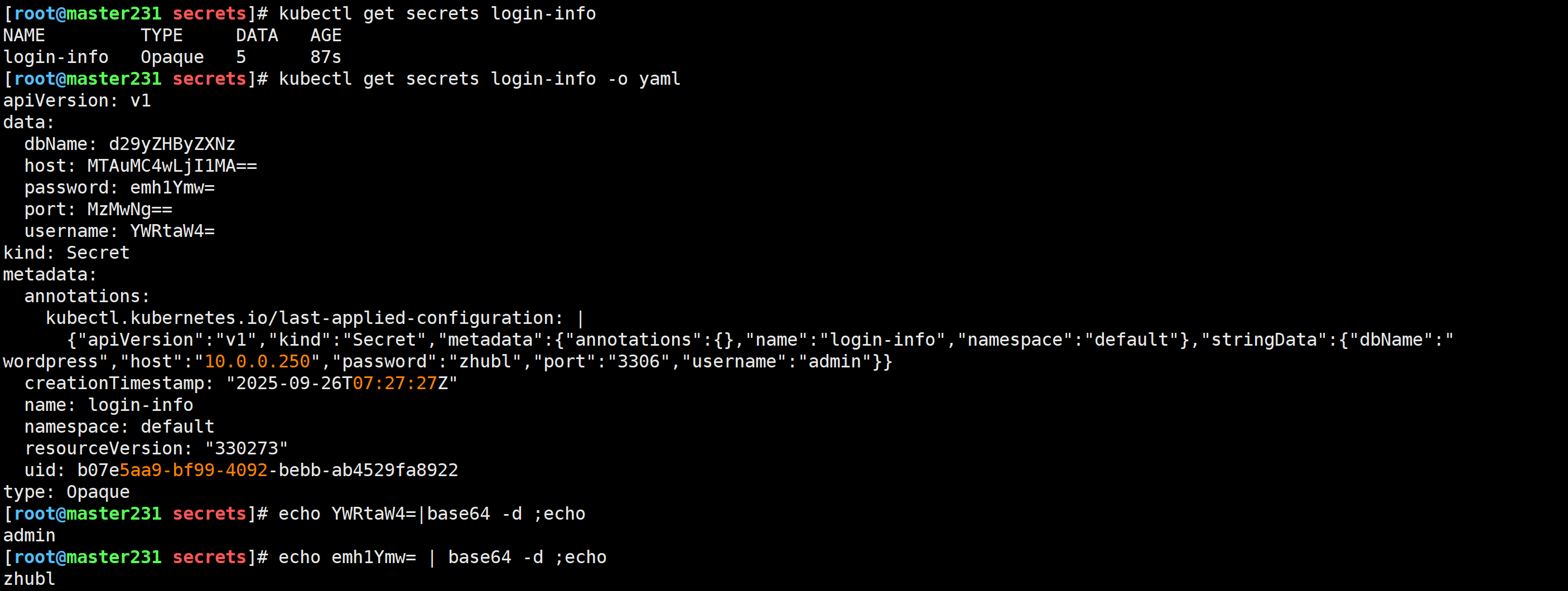

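🌟Two Ways for a Pod to Reference a Secret

What is a Secret

A Secret is similar to a ConfigMap in that it stores data, but it is meant for sensitive data such as credentials, X.509 certificates, and login information.

Kubernetes stores Secret data base64-encoded in etcd. Base64 is an encoding, not encryption, so anyone who can read the Secret can recover the values.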
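A quick way to see that base64 offers no secrecy is to encode and decode one of the values used below. This sketch only uses coreutils `base64`; the password "zhubl" comes from the login-info Secret in this section.

```shell
#!/usr/bin/env bash
# Secret values are only base64-encoded: decoding needs no key at all.
encoded=$(printf '%s' 'zhubl' | base64)
echo "$encoded"                              # emh1Ymw=
printf '%s' "$encoded" | base64 -d; echo     # zhubl

# The same applies to a stored Secret (run against the cluster):
#   kubectl get secret login-info -o jsonpath='{.data.password}' | base64 -d
```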

Write the manifest

[root@master231 secrets]# cat 01-secrets-demo.yaml

apiVersion: v1
kind: Secret
metadata:
  name: login-info
stringData:
  host: 10.0.0.250
  port: "3306"
  dbName: wordpress
  username: admin
  password: zhubl

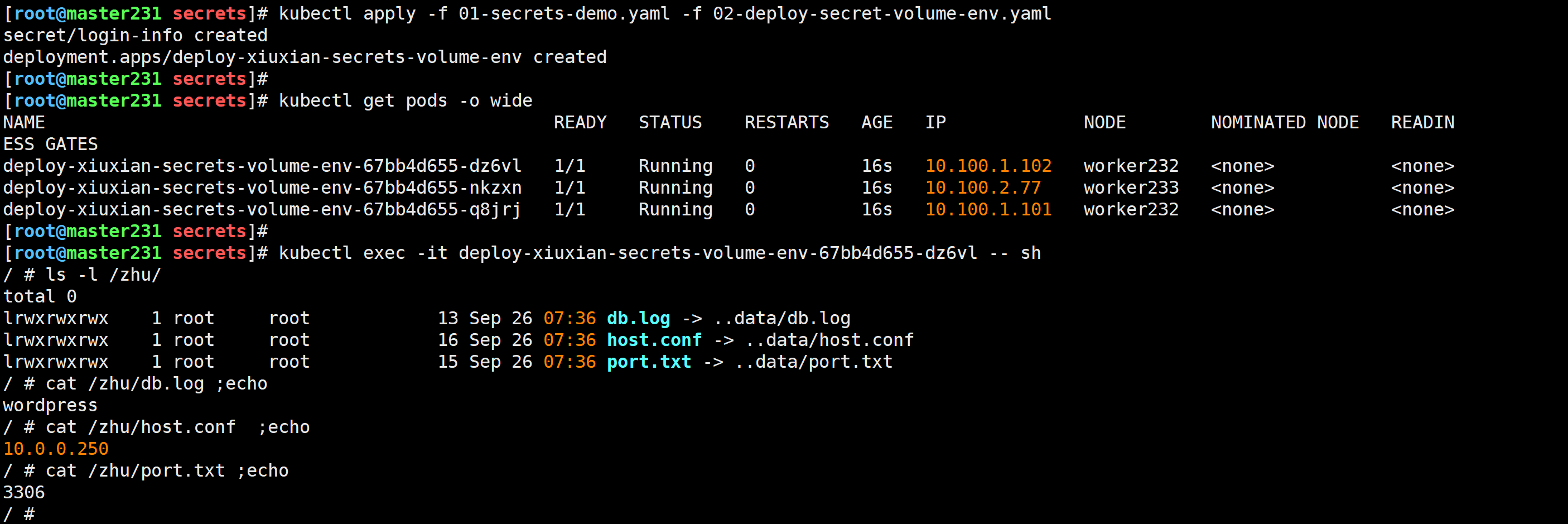

Test and verify

[root@master231 secrets]# kubectl apply -f 01-secrets-demo.yaml

secret/login-info created

[root@master231 secrets]# kubectl get -f 01-secrets-demo.yaml

[root@master231 secrets]# kubectl describe secrets login-info

[root@master231 secrets]# kubectl get secrets login-info

[root@master231 secrets]# kubectl get secrets login-info -o yaml

Two ways for a Pod to reference the Secret: as a volume and as environment variables

[root@master231 secrets]# cat 02-deploy-secret-volume-env.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-xiuxian-secrets-volume-env
spec:
  replicas: 3
  selector:
    matchLabels:
      apps: v1
  template:
    metadata:
      labels:
        apps: v1
    spec:
      volumes:
      - name: data
        # The volume type is a Secret
        secret:
          # Name of the Secret
          secretName: login-info
          # Keys to project from the Secret; if omitted, all keys are mounted
          items:
          - key: host
            path: host.conf
          - key: port
            path: port.txt
          - key: dbName
            path: db.log
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        volumeMounts:
        - name: data
          mountPath: /zhu
        env:
        - name: ZHU-USER
          valueFrom:
            # Take the value from a Secret
            secretKeyRef:
              # Name of the Secret
              name: login-info
              # Key to read from the Secret
              key: username
        - name: ZHU-PWD
          valueFrom:
            secretKeyRef:
              name: login-info
              key: password

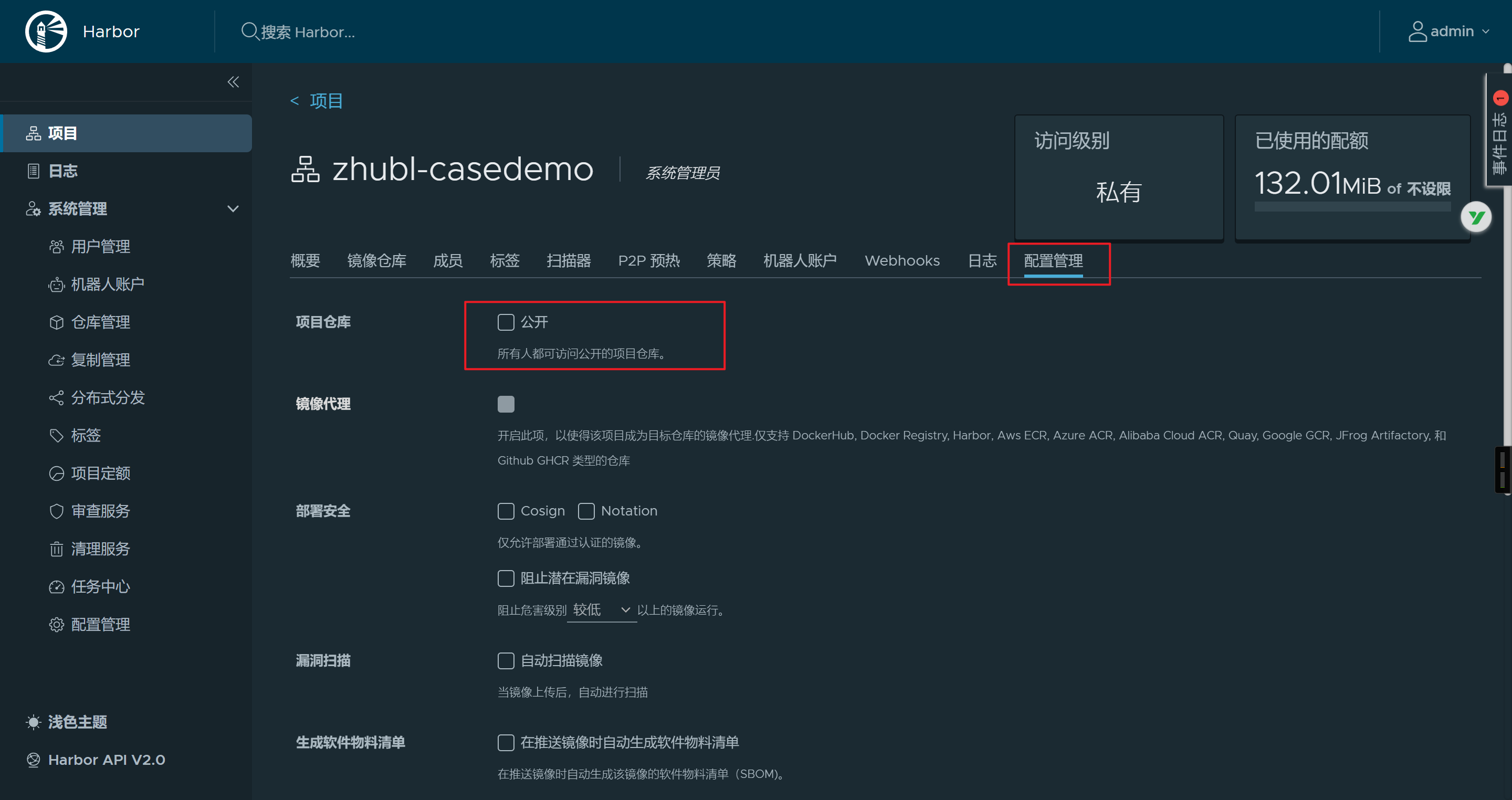

🌟secret实战案例之harbor私有仓库镜像拉取

harbor仓库修改为私有

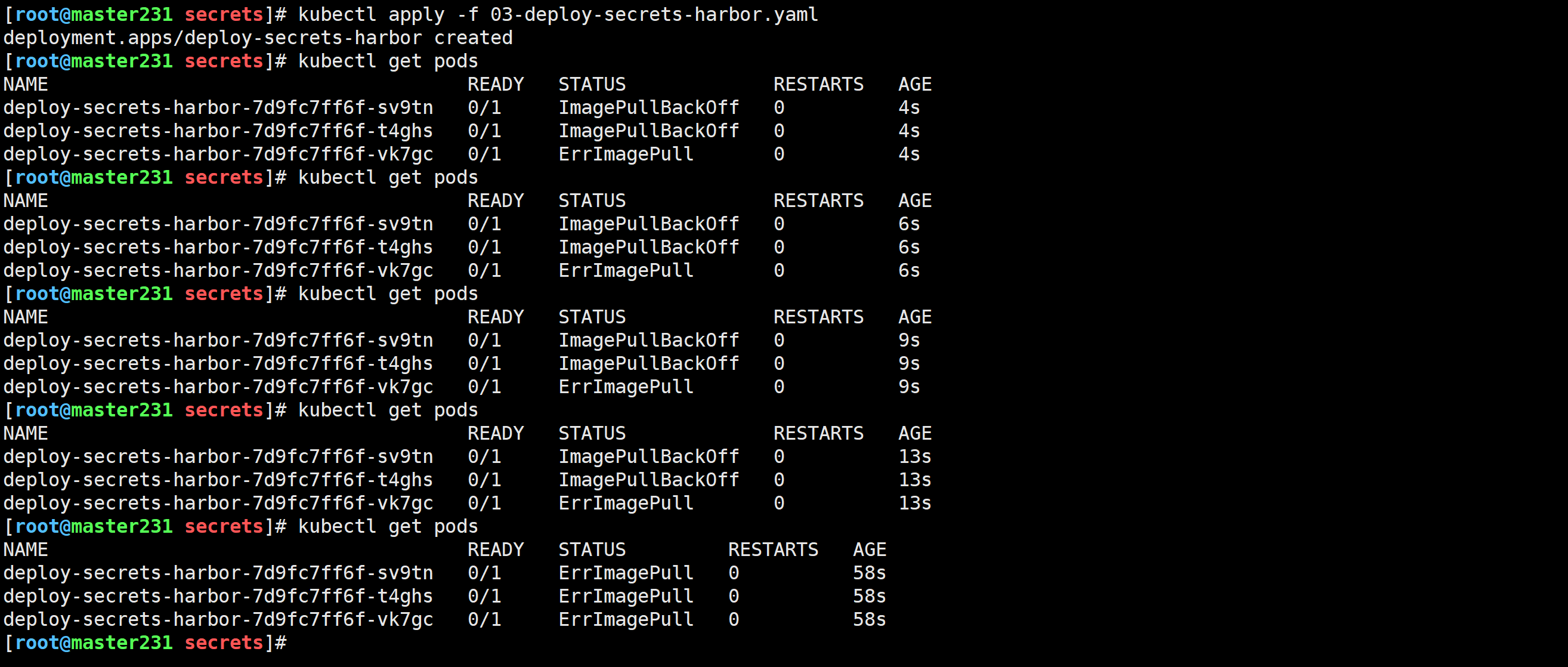

私有仓库未认证时无法拉取镜像

[root@master231 secrets]# cat 03-deploy-secrets-harbor.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-secrets-harbor
spec:
  replicas: 3
  selector:
    matchLabels:
      apps: v1
  template:
    metadata:
      labels:
        apps: v1
    spec:
      containers:
      - name: c1
        image: harbor250.zhubl.xyz/zhubl-casedemo/apps:v1
        imagePullPolicy: Always

[root@master231 secrets]# kubectl apply -f 03-deploy-secrets-harbor.yaml

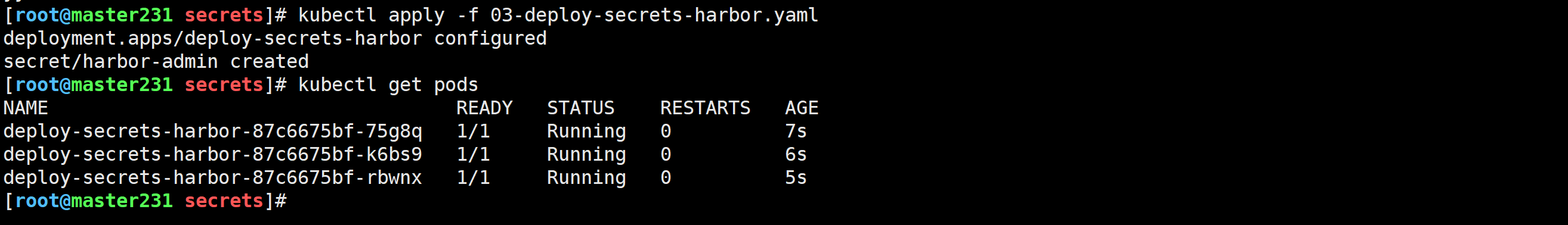

Authenticate as the admin user

[root@master231 secrets]# cat 03-deploy-secrets-harbor.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-secrets-harbor
spec:
  replicas: 3
  selector:
    matchLabels:
      apps: v1
  template:
    metadata:
      labels:
        apps: v1
    spec:
      # Credentials the Pod uses to pull its container images
      imagePullSecrets:
      # Name of the Secret defined below; it is used when the image is pulled
      - name: "harbor-admin"
      containers:
      - name: c1
        image: harbor250.zhubl.xyz/zhubl-casedemo/apps:v1
        imagePullPolicy: Always
---
apiVersion: v1
kind: Secret
metadata:
  name: harbor-admin
type: kubernetes.io/dockerconfigjson
stringData:
  # Note: in your environment, set the auth field to the output of 'echo -n admin:1 | base64', substituting your own username and password.
  .dockerconfigjson: '{"auths":{"harbor250.zhubl.xyz":{"username":"admin","password":"1","email":"admin@zhubl.xyz","auth":"YWRtaW46MQ=="}}}'

[root@master231 secrets]# kubectl apply -f 03-deploy-secrets-harbor.yaml
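Writing the .dockerconfigjson string by hand is error-prone, because the auth field must be base64("username:password") and must agree with the other fields. The function below is a hypothetical sketch that builds the string from its parts; it assumes the values contain no double quotes or backslashes, since it does no JSON escaping. Alternatively, `kubectl create secret docker-registry harbor-admin --docker-server=harbor250.zhubl.xyz --docker-username=admin --docker-password=1 --docker-email=admin@zhubl.xyz` creates an equivalent Secret directly.

```shell
#!/usr/bin/env bash
# Build the value of .dockerconfigjson for a kubernetes.io/dockerconfigjson
# Secret. The "auth" field is base64("username:password").
# Assumption: values contain no double quotes or backslashes (no escaping).
make_dockerconfigjson() {
  local server="$1" user="$2" pass="$3" email="$4" auth
  # tr removes the line wrapping GNU base64 adds to long output
  auth=$(printf '%s:%s' "$user" "$pass" | base64 | tr -d '\n')
  printf '{"auths":{"%s":{"username":"%s","password":"%s","email":"%s","auth":"%s"}}}\n' \
    "$server" "$user" "$pass" "$email" "$auth"
}

make_dockerconfigjson harbor250.zhubl.xyz admin 1 admin@zhubl.xyz
```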

Authenticate as a regular user

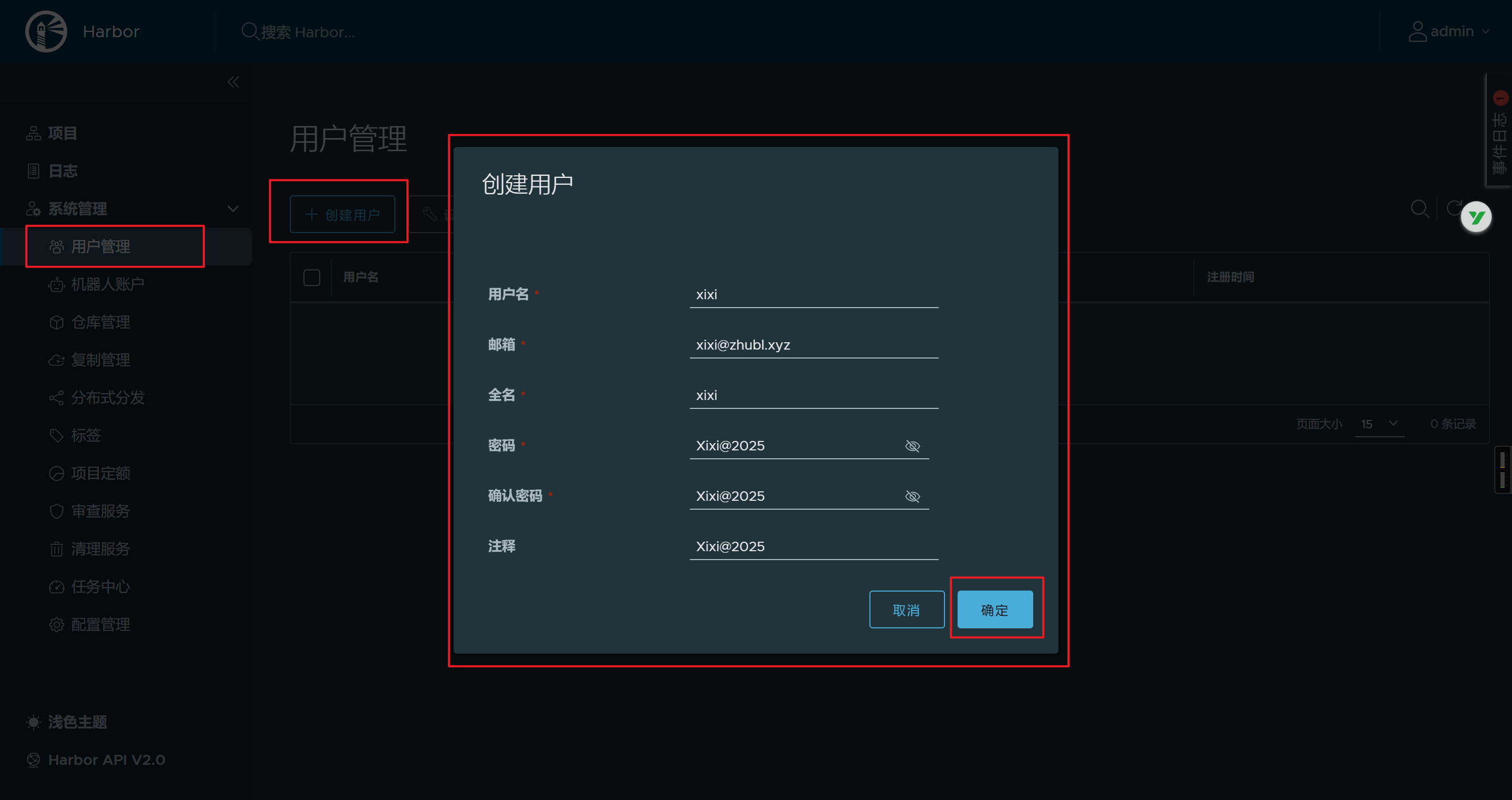

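Create the user xixi in Harbor

Username: xixi

Password: Xixi@2025

Email: xixi@zhubl.xyz

Grant the new user access to the project

- Create the user

- Grant the project to the user

Base64-encode the credentials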

[root@master231 secrets]# echo -n xixi:Xixi@2025 | base64

eGl4aTpYaXhpQDIwMjU=

[root@master231 secrets]#

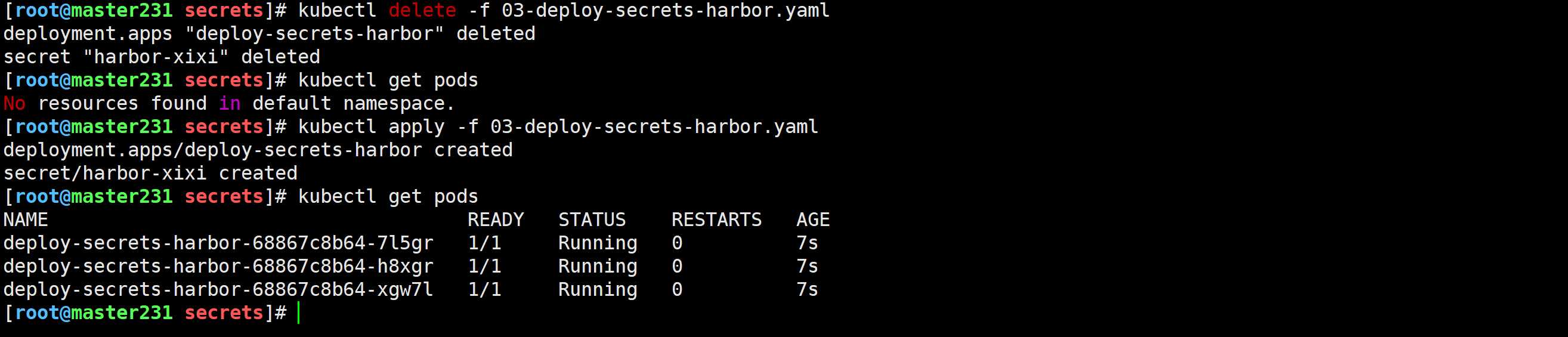

Update the manifest

[root@master231 secrets]# cat 03-deploy-secrets-harbor.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-secrets-harbor
spec:
  replicas: 3
  selector:
    matchLabels:
      apps: v1
  template:
    metadata:
      labels:
        apps: v1
    spec:
      # Credentials the Pod uses to pull its container images
      imagePullSecrets:
      # Name of the Secret defined below; it is used when the image is pulled
      - name: "harbor-xixi"
      containers:
      - name: c1
        image: harbor250.zhubl.xyz/zhubl-casedemo/apps:v1
        imagePullPolicy: Always
---
apiVersion: v1
kind: Secret
metadata:
  name: harbor-xixi
type: kubernetes.io/dockerconfigjson
stringData:
  # Note: in your environment, set the auth field to the output of 'echo -n xixi:Xixi@2025 | base64', substituting your own username and password.
  .dockerconfigjson: '{"auths":{"harbor250.zhubl.xyz":{"username":"xixi","password":"Xixi@2025","email":"xixi@zhubl.xyz","auth":"eGl4aTpYaXhpQDIwMjU="}}}'

[root@master231 secrets]#

Create the resources

[root@master231 secrets]# kubectl delete -f 03-deploy-secrets-harbor.yaml

[root@master231 secrets]# kubectl apply -f 03-deploy-secrets-harbor.yaml

[root@master231 secrets]# kubectl get pods

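🌟Pods Authenticating to Harbor through a ServiceAccount

What is an SA

"sa" is short for "ServiceAccount", a resource that Pods use to authenticate (it gives the Pod an identity).

In Kubernetes 1.23 and earlier, creating an SA automatically creates a Secret holding its token. Starting with Kubernetes 1.24, creating an SA no longer creates that Secret or token automatically.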

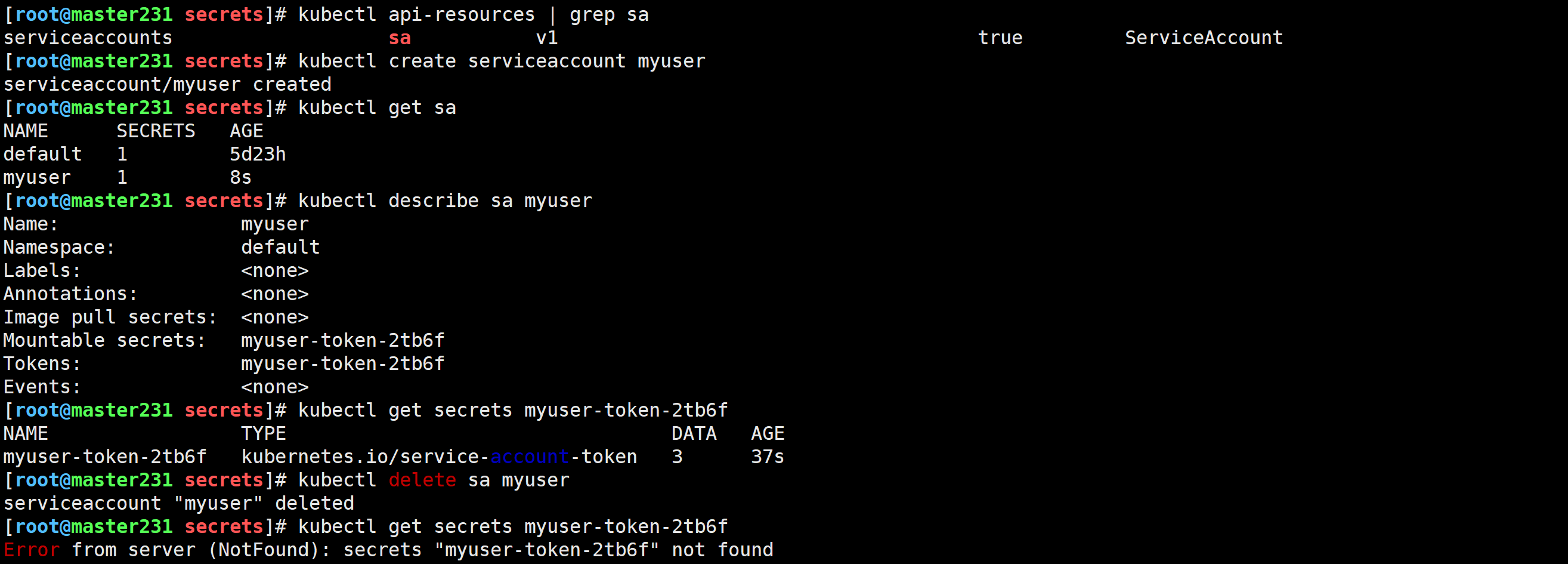

Basic SA management

Managing SAs imperatively

[root@master231 secrets]# kubectl api-resources | grep sa

[root@master231 secrets]# kubectl create serviceaccount myuser

[root@master231 secrets]# kubectl get sa

[root@master231 secrets]# kubectl describe sa myuser

[root@master231 secrets]# kubectl get secrets myuser-token-2tb6f

[root@master231 secrets]# kubectl delete sa myuser

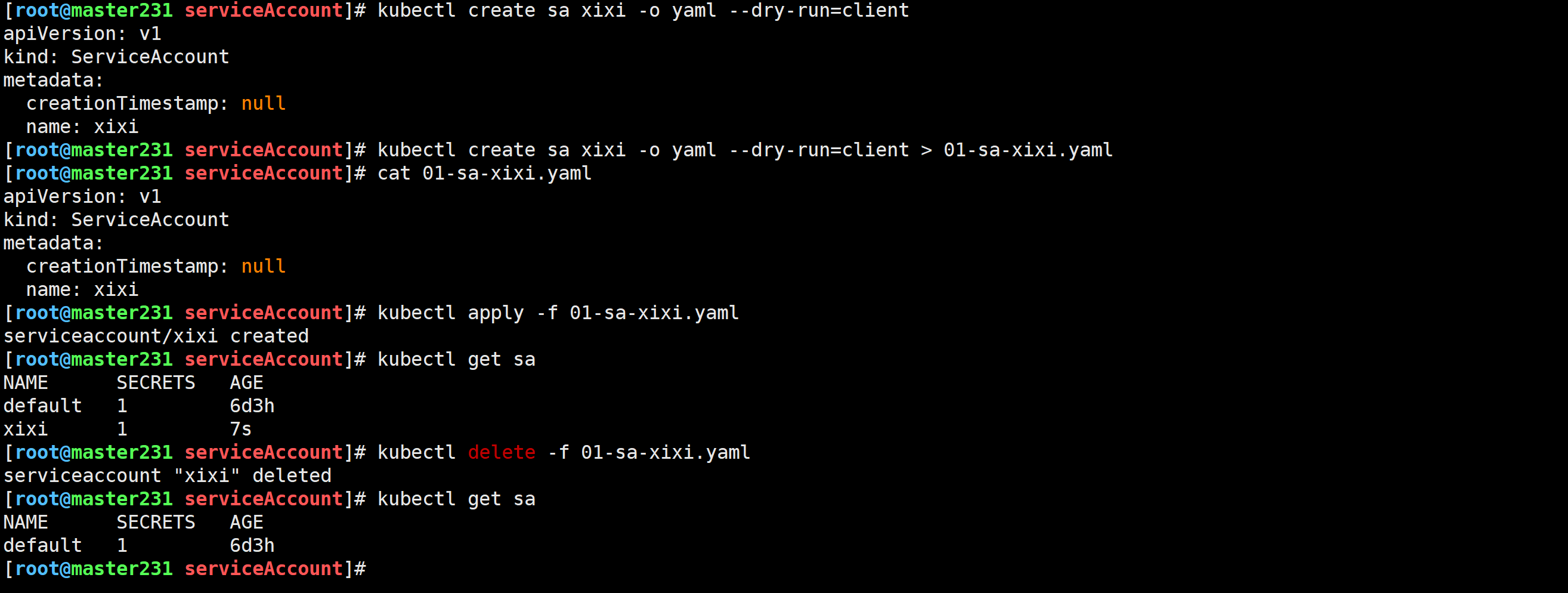

Managing SAs declaratively

[root@master231 serviceAccount]# kubectl create sa xixi -o yaml --dry-run=client > 01-sa-xixi.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  creationTimestamp: null
  name: xixi

[root@master231 serviceAccount]# kubectl apply -f 01-sa-xixi.yaml

serviceaccount/xixi created

[root@master231 serviceAccount]# kubectl get sa

NAME SECRETS AGE

default 1 6d3h

xixi 1 7s

[root@master231 serviceAccount]# kubectl delete -f 01-sa-xixi.yaml

serviceaccount "xixi" deleted

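Example: pulling images with a Secret referenced by an SA

Create the account in Harbor

Username: xixi

Password: Xixi@2025

Email: xixi@zhubl.xyz

Grant the private project to user xixi

Write the manifest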

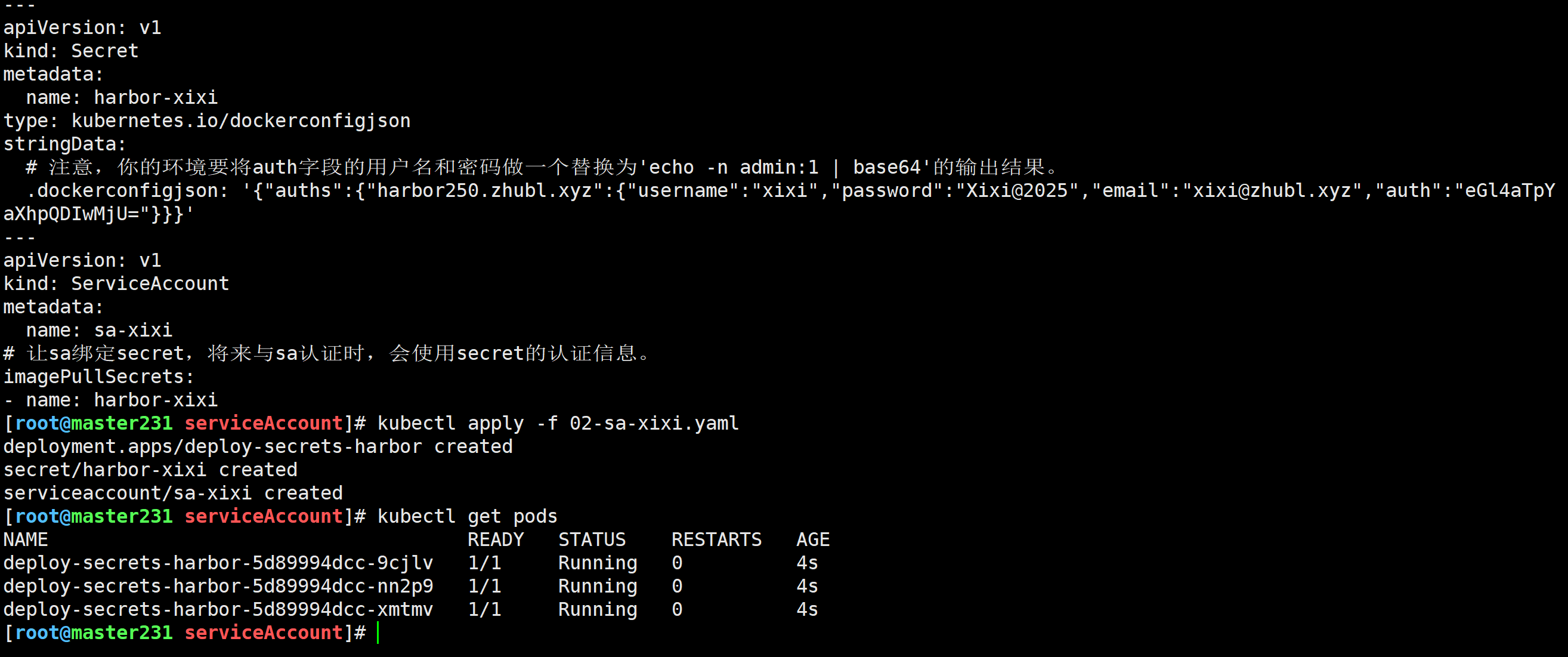

[root@master231 serviceAccount]# cat 02-sa-xixi.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-secrets-harbor
spec:
  replicas: 3
  selector:
    matchLabels:
      apps: v1
  template:
    metadata:
      labels:
        apps: v1
    spec:
      # Specifies the service account; this field is deprecated, use 'serviceAccountName' instead.
      # serviceAccount: sa-xixi
      # Preferred way to specify the SA; if omitted, the SA named "default" is used.
      serviceAccountName: sa-xixi
      containers:
      - name: c1
        image: harbor250.zhubl.xyz/zhubl-casedemo/apps:v1
        imagePullPolicy: Always

---

apiVersion: v1
kind: Secret
metadata:
  name: harbor-xixi
type: kubernetes.io/dockerconfigjson
stringData:
  # Note: in your environment, set the auth field to the output of 'echo -n xixi:Xixi@2025 | base64', substituting your own username and password.
  .dockerconfigjson: '{"auths":{"harbor250.zhubl.xyz":{"username":"xixi","password":"Xixi@2025","email":"xixi@zhubl.xyz","auth":"eGl4aTpYaXhpQDIwMjU="}}}'

---

apiVersion: v1

kind: ServiceAccount

metadata:
  name: sa-xixi
# Bind the Secret to the SA: Pods that use this SA pull images with the Secret's credentials.
imagePullSecrets:
- name: harbor-xixi
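An existing ServiceAccount can also be given an imagePullSecret without re-applying the whole manifest, using `kubectl patch serviceaccount`. The helper below is a hypothetical sketch that only builds the patch body (so it can be tested offline); the kubectl command in the usage comment is the standard one, and the SA and Secret names are the ones from this example.

```shell
#!/usr/bin/env bash
# Build the patch body that sets imagePullSecrets on a ServiceAccount.
sa_pull_secret_patch() {
  local secret="$1"
  printf '{"imagePullSecrets": [{"name": "%s"}]}\n' "$secret"
}

# Usage on the cluster (not executed here):
#   kubectl patch serviceaccount sa-xixi -p "$(sa_pull_secret_patch harbor-xixi)"
```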

[root@master231 serviceAccount]#