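Analysis of a task stall in a sched_ext scheduler

While debugging a scheduler built on sched_ext on kernel 6.15.7, I found that after the scheduler had been running for a while it hit a task stall error.

I. sched_ext scheduler error log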

    DEBUG DUMP
    ================================================================================

    kworker/u521:3[124093] triggered exit kind 1026:
      runnable task stall (kworker/32:2[86288] failed to run for 30.342s)

    Backtrace:
      check_rq_for_timeouts+0xdf/0x120
      scx_watchdog_workfn+0x64/0x90
      process_one_work+0x1a0/0x3d0
      worker_thread+0x246/0x2d0
      kthread+0xe3/0x120
      ret_from_fork+0x34/0x50
      ret_from_fork_asm+0x1a/0x30

    CPU states

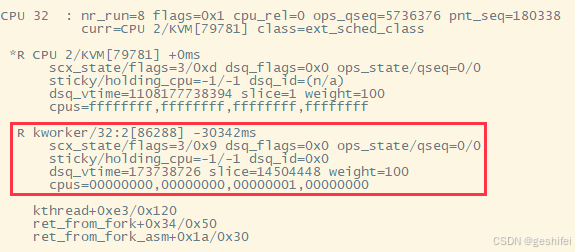

    ----------

    CPU 0 : nr_run=3 flags=0x9 cpu_rel=0 ops_qseq=7039535 pnt_seq=430828
      curr=CPU 3/KVM[79687] class=ext_sched_class

    *R CPU 3/KVM[79687] -21ms
        scx_state/flags=3/0xd dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=(n/a)
        dsq_vtime=1029029013654 slice=154313 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff

     R CPU 7/KVM[79691] -18ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=1274313129134 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

     R CPU 0/KVM[79684] -11ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=1120945256694 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

    CPU 16 : nr_run=7 flags=0x1 cpu_rel=0 ops_qseq=5462922 pnt_seq=138451
      curr=CPU 4/KVM[79736] class=ext_sched_class

    *R CPU 4/KVM[79736] -2ms
        scx_state/flags=3/0xd dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=(n/a)
        dsq_vtime=1264236327455 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff

     R kworker/16:0[98272] -23040ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x0
        dsq_vtime=1351482184 slice=1954793 weight=100
        cpus=00000000,00000000,00000000,00010000
          kthread+0xe3/0x120
          ret_from_fork+0x34/0x50
          ret_from_fork_asm+0x1a/0x30

     R ksoftirqd/16[114] -21351ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x0
        dsq_vtime=1044750 slice=19898860 weight=100
        cpus=00000000,00000000,00000000,00010000
          kthread+0xe3/0x120
          ret_from_fork+0x34/0x50
          ret_from_fork_asm+0x1a/0x30

     R CPU 5/KVM[79737] -2ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=801157709582 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

     R CPU 0/KVM[79732] -1ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=1013508900643 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          vcpu_run+0x228/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

     R CPU 1/KVM[79733] +0ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=1294246227978 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          vcpu_run+0x228/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

     R CPU 6/KVM[79738] +0ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=855894411030 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

    CPU 32 : nr_run=8 flags=0x1 cpu_rel=0 ops_qseq=5736376 pnt_seq=180338
      curr=CPU 2/KVM[79781] class=ext_sched_class

    *R CPU 2/KVM[79781] +0ms
        scx_state/flags=3/0xd dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=(n/a)
        dsq_vtime=1108177738394 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff

     R kworker/32:2[86288] -30342ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x0
        dsq_vtime=173738726 slice=14504448 weight=100
        cpus=00000000,00000000,00000001,00000000
          kthread+0xe3/0x120
          ret_from_fork+0x34/0x50
          ret_from_fork_asm+0x1a/0x30

     R ksoftirqd/32[211] -22323ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x0
        dsq_vtime=1270010 slice=19889240 weight=100
        cpus=00000000,00000000,00000001,00000000
          kthread+0xe3/0x120
          ret_from_fork+0x34/0x50
          ret_from_fork_asm+0x1a/0x30

     R CPU 1/KVM[79780] +0ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=1154751017694 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

     R CPU 0/KVM[79779] +0ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=1240368691518 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

     R CPU 4/KVM[79783] +0ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=750392301274 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

     R CPU 5/KVM[79784] +0ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=1047896341206 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

     R CPU 3/KVM[79782] +0ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=1071860899169 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

    CPU 34 : nr_run=1 flags=0x1 cpu_rel=0 ops_qseq=1219013 pnt_seq=612166
      curr=kworker/u521:3[124093] class=ext_sched_class

    *R kworker/u521:3[124093] +0ms
        scx_state/flags=3/0xd dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=(n/a)
        dsq_vtime=8951300 slice=19323950 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          scx_dump_state+0x78d/0x890
          scx_ops_error_irq_workfn+0x40/0x50
          irq_work_run_list+0x4f/0x90
          irq_work_run+0x18/0x50
          __sysvec_irq_work+0x1c/0xb0
          sysvec_irq_work+0x70/0x90
          asm_sysvec_irq_work+0x1a/0x20
          check_rq_for_timeouts+0xf4/0x120
          scx_watchdog_workfn+0x64/0x90
          process_one_work+0x1a0/0x3d0
          worker_thread+0x246/0x2d0
          kthread+0xe3/0x120
          ret_from_fork+0x34/0x50
          ret_from_fork_asm+0x1a/0x30

    CPU 48 : nr_run=2 flags=0x9 cpu_rel=0 ops_qseq=5868204 pnt_seq=147402
      curr=CPU 4/KVM[79831] class=ext_sched_class

    *R CPU 4/KVM[79831] -20ms
        scx_state/flags=3/0xd dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=(n/a)
        dsq_vtime=751667972205 slice=203103 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff

     R CPU 5/KVM[79832] -17ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=1171908792555 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

    CPU 64 : nr_run=4 flags=0x9 cpu_rel=0 ops_qseq=5490610 pnt_seq=147467
      curr=CPU 0/KVM[79874] class=ext_sched_class

    *R CPU 0/KVM[79874] -17ms
        scx_state/flags=3/0xd dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=(n/a)
        dsq_vtime=1155511725076 slice=3148754 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff

     R CPU 2/KVM[79876] -16ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=755928821202 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

     R CPU 6/KVM[79880] -14ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=976444823305 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

     R CPU 1/KVM[79875] -8ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=1023524740038 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

    CPU 80 : nr_run=3 flags=0x9 cpu_rel=0 ops_qseq=5678793 pnt_seq=152247
      curr=CPU 2/KVM[79923] class=ext_sched_class

    *R CPU 2/KVM[79923] -12ms
        scx_state/flags=3/0xd dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=(n/a)
        dsq_vtime=1206399319762 slice=8102776 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff

     R CPU 6/KVM[79927] -9ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=1084765425604 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

     R CPU 0/KVM[79921] -2ms
        scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0
        sticky/holding_cpu=-1/-1 dsq_id=0x8000000000000002
        dsq_vtime=1151287547870 slice=1 weight=100
        cpus=ffffffff,ffffffff,ffffffff,ffffffff
          kvm_vcpu_halt+0x6b/0x330 [kvm]
          vcpu_run+0x1e7/0x260 [kvm]
          kvm_arch_vcpu_ioctl_run+0x143/0x490 [kvm]
          kvm_vcpu_ioctl+0x232/0x770 [kvm]
          __x64_sys_ioctl+0x97/0xc0
          do_syscall_64+0x5d/0x170
          entry_SYSCALL_64_after_hwframe+0x76/0x7e

    Event counters
    --------------
    SCX_EV_SELECT_CPU_FALLBACK: 0
    SCX_EV_DISPATCH_LOCAL_DSQ_OFFLINE: 0
    SCX_EV_DISPATCH_KEEP_LAST: 1581
    SCX_EV_ENQ_SKIP_EXITING: 0
    SCX_EV_ENQ_SKIP_MIGRATION_DISABLED: 0
    SCX_EV_ENQ_SLICE_DFL: 1581
    SCX_EV_BYPASS_DURATION: 25274190
    SCX_EV_BYPASS_DISPATCH: 0
    SCX_EV_BYPASS_ACTIVATE: 1
    ================================================================================

    EXIT: runnable task stall (kworker/32:2[86288] failed to run for 30.342s)

    [root@hadoop03 sched_ext]#

II. Log analysis

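1. Key log 1

    runnable task stall (kworker/32:2[86288] failed to run for 30.342s)

This line shows that the kworker/32:2 thread had gone 30.342s without being scheduled, so the sched_ext watchdog reported a task stall.

kworker thread naming rule: kworker/<CPU number>:<worker ID>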
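The check behind this message can be modelled in a few lines. The sketch below is a simplified userspace model, not the kernel code: task_stalled and STALL_TIMEOUT_MS are hypothetical names, and the 30 s timeout is an assumption that happens to match the 30.342s in the log.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumption: 30 s watchdog timeout, which matches the 30.342s in the log. */
#define STALL_TIMEOUT_MS 30000u

/*
 * Simplified model of the stall check, not the kernel code: a task that
 * became runnable at runnable_at_ms and has not run since is reported
 * once more than timeout_ms have passed. Requires now_ms >= runnable_at_ms.
 */
static bool task_stalled(uint64_t now_ms, uint64_t runnable_at_ms,
                         uint32_t timeout_ms)
{
    return now_ms - runnable_at_ms > timeout_ms;
}
```

Under this model kworker/32:2 (waiting 30342ms) trips the check, while kworker/16:0 (waiting 23040ms in the same dump) has not yet reached the timeout.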

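2. Key log 2

Find the entry for kworker/32:2 under CPU 32 in the error log.

2.1 R kworker/32:2[86288] -30342ms

R: the task state (Running: either runnable and waiting to be scheduled, or currently running).

kworker/32:2: the task name (a kernel worker thread).

[86288]: the task PID.

-30342ms: when the task became runnable, relative to the time of the dump. The negative value means it has been waiting for 30342ms without running.

2.2 scx_state/flags=3/0x9 dsq_flags=0x0 ops_state/qseq=0/0

scx_state=3: the task's state in the sched_ext scheduler. In the enum below, 3 is SCX_TASK_ENABLED.

include/linux/sched/ext.h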

    /* scx_entity.flags & SCX_TASK_STATE_MASK */
    enum scx_task_state {
        SCX_TASK_NONE,      /* ops.init_task() not called yet */
        SCX_TASK_INIT,      /* ops.init_task() succeeded, but task can be cancelled */
        SCX_TASK_READY,     /* fully initialized, but not in sched_ext */
        SCX_TASK_ENABLED,   /* fully initialized and in sched_ext */

        SCX_TASK_NR_STATES,
    };

flags=0x9: the flag bits are also defined in include/linux/sched/ext.h

    /* scx_entity.flags */
    enum scx_ent_flags {
        SCX_TASK_QUEUED            = 1 << 0, /* on ext runqueue */
        SCX_TASK_RESET_RUNNABLE_AT = 1 << 2, /* runnable_at should be reset */
        SCX_TASK_DEQD_FOR_SLEEP    = 1 << 3, /* last dequeue was for SLEEP */

        SCX_TASK_STATE_SHIFT       = 8,      /* bit 8 and 9 are used to carry scx_task_state */
        SCX_TASK_STATE_BITS        = 2,
        SCX_TASK_STATE_MASK        = ((1 << SCX_TASK_STATE_BITS) - 1) << SCX_TASK_STATE_SHIFT,

        SCX_TASK_CURSOR            = 1 << 31, /* iteration cursor, not a task */
    };

Binary values of the flag bits (the low 8 bits):

SCX_TASK_QUEUED = 0x1 → 0001

SCX_TASK_RESET_RUNNABLE_AT = 0x4 → 0100

SCX_TASK_DEQD_FOR_SLEEP = 0x8 → 1000

So flags=0x9 means:

- the task is on a queue (SCX_TASK_QUEUED)

- the last dequeue was for sleep (SCX_TASK_DEQD_FOR_SLEEP)

- runnable_at does not need to be reset (SCX_TASK_RESET_RUNNABLE_AT = 0)
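The same decoding can be done mechanically. This is a small sketch whose bit values are copied from enum scx_ent_flags above; the helper scx_flags_to_str is a hypothetical name, not a kernel function.

```c
#include <stdint.h>
#include <stdio.h>

/* Bit values copied from enum scx_ent_flags quoted in the article. */
#define SCX_TASK_QUEUED            (1u << 0)
#define SCX_TASK_RESET_RUNNABLE_AT (1u << 2)
#define SCX_TASK_DEQD_FOR_SLEEP    (1u << 3)

/* Write the names of the set flag bits into buf, separated by '|'. */
static const char *scx_flags_to_str(uint32_t flags, char *buf, size_t len)
{
    static const struct { uint32_t bit; const char *name; } tbl[] = {
        { SCX_TASK_QUEUED,            "QUEUED" },
        { SCX_TASK_RESET_RUNNABLE_AT, "RESET_RUNNABLE_AT" },
        { SCX_TASK_DEQD_FOR_SLEEP,    "DEQD_FOR_SLEEP" },
    };
    size_t used = 0;

    if (len == 0)
        return buf;
    buf[0] = '\0';
    for (size_t i = 0; i < sizeof(tbl) / sizeof(tbl[0]); i++) {
        if (!(flags & tbl[i].bit))
            continue;
        int n = snprintf(buf + used, len - used, "%s%s",
                         used ? "|" : "", tbl[i].name);
        if (n < 0 || (size_t)n >= len - used)
            break; /* output truncated, stop appending */
        used += (size_t)n;
    }
    return buf;
}
```

Calling it with 0x9 yields the two flags listed above; the tasks marked with * in the dump carry 0xd, which additionally has SCX_TASK_RESET_RUNNABLE_AT set.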

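dsq_flags=0x0: the task's flags on its DSQ.

0 means no extra state; 1 means the task is queued on the DSQ's priority queue.

    /* scx_entity.dsq_flags */
    enum scx_ent_dsq_flags {
        SCX_TASK_DSQ_ON_PRIQ = 1 << 0, /* task is queued on the priority queue of a dsq */
    };

ops_state=0: the task's ops state, which tracks ownership of the task between the SCX core and the BPF scheduler. 0 is SCX_OPSS_NONE, i.e. the task is owned by the SCX core.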

kernel/sched/ext.c

    /*
     * sched_ext_entity->ops_state
     *
     * Used to track the task ownership between the SCX core and the BPF scheduler.
     * State transitions look as follows:
     *
     * NONE -> QUEUEING -> QUEUED -> DISPATCHING
     *   ^              |                 |
     *   |              v                 v
     *   \-------------------------------/
     *
     * QUEUEING and DISPATCHING states can be waited upon. See wait_ops_state() call
     * sites for explanations on the conditions being waited upon and why they are
     * safe. Transitions out of them into NONE or QUEUED must store_release and the
     * waiters should load_acquire.
     *
     * Tracking scx_ops_state enables sched_ext core to reliably determine whether
     * any given task can be dispatched by the BPF scheduler at all times and thus
     * relaxes the requirements on the BPF scheduler. This allows the BPF scheduler
     * to try to dispatch any task anytime regardless of its state as the SCX core
     * can safely reject invalid dispatches.
     */
    enum scx_ops_state {
        SCX_OPSS_NONE,          /* owned by the SCX core */
        SCX_OPSS_QUEUEING,      /* in transit to the BPF scheduler */
        SCX_OPSS_QUEUED,        /* owned by the BPF scheduler */
        SCX_OPSS_DISPATCHING,   /* in transit back to the SCX core */

        /*
         * QSEQ brands each QUEUED instance so that, when dispatch races
         * dequeue/requeue, the dispatcher can tell whether it still has a claim
         * on the task being dispatched.
         *
         * As some 32bit archs can't do 64bit store_release/load_acquire,
         * p->scx.ops_state is atomic_long_t which leaves 30 bits for QSEQ on
         * 32bit machines. The dispatch race window QSEQ protects is very narrow
         * and runs with IRQ disabled. 30 bits should be sufficient.
         */
        SCX_OPSS_QSEQ_SHIFT     = 2,
    };

qseq=0: the sequence number stamped on the task when it is queued.

Each time a task enters the QUEUED state, it is stamped with a QSEQ.

Before the dispatcher commits to dispatching a task, it checks whether the task's current QSEQ still matches the one it saw originally.

If they differ, the task was re-enqueued in the meantime, so the dispatcher drops the stale dispatch to avoid an error.
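The split between state and QSEQ, and the "is my claim still valid" test, can be sketched as follows. The enum values come from the definition above; opss_pack, opss_state, opss_qseq and claim_still_valid are hypothetical helpers written for illustration and only model the idea, not the kernel's atomic implementation.

```c
#include <stdbool.h>

enum scx_ops_state {
    SCX_OPSS_NONE,
    SCX_OPSS_QUEUEING,
    SCX_OPSS_QUEUED,
    SCX_OPSS_DISPATCHING,
    SCX_OPSS_QSEQ_SHIFT = 2,
};

/* The low SCX_OPSS_QSEQ_SHIFT bits hold the state, the rest hold QSEQ. */
#define OPSS_STATE_MASK ((1UL << SCX_OPSS_QSEQ_SHIFT) - 1)

static unsigned long opss_pack(unsigned long state, unsigned long qseq)
{
    return state | (qseq << SCX_OPSS_QSEQ_SHIFT);
}

static unsigned long opss_state(unsigned long v)
{
    return v & OPSS_STATE_MASK;
}

static unsigned long opss_qseq(unsigned long v)
{
    return v >> SCX_OPSS_QSEQ_SHIFT;
}

/*
 * A dispatcher that remembered seen_qseq still owns the task only if the
 * task is still QUEUED and carries the same QSEQ.
 */
static bool claim_still_valid(unsigned long cur, unsigned long seen_qseq)
{
    return opss_state(cur) == SCX_OPSS_QUEUED && opss_qseq(cur) == seen_qseq;
}
```

With this layout the dumped value ops_state/qseq=0/0 decodes to SCX_OPSS_NONE with QSEQ 0, so no dispatch claim on the task is outstanding.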

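2.3 sticky/holding_cpu=-1/-1 dsq_id=0x0

sticky=-1: the task has no sticky CPU, i.e. it is not held on a particular CPU.

holding_cpu=-1: no CPU is currently holding it.

dsq_id=0x0: the ID of the DSQ the task is on. This field is the important one.

include/linux/sched/ext.h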

    /*
     * DSQ (dispatch queue) IDs are 64bit of the format:
     *
     *   Bits: [63] [62 ..  0]
     *         [ B] [   ID   ]
     *
     *    B: 1 for IDs for built-in DSQs, 0 for ops-created user DSQs
     *   ID: 63 bit ID
     *
     * Built-in IDs:
     *
     *   Bits: [63] [62] [61..32] [31 ..  0]
     *         [ 1] [ L] [   R  ] [    V   ]
     *
     *    1: 1 for built-in DSQs.
     *    L: 1 for LOCAL_ON DSQ IDs, 0 for others
     *    V: For LOCAL_ON DSQ IDs, a CPU number. For others, a pre-defined value.
     */
    enum scx_dsq_id_flags {
        SCX_DSQ_FLAG_BUILTIN    = 1LLU << 63,
        SCX_DSQ_FLAG_LOCAL_ON   = 1LLU << 62,

        SCX_DSQ_INVALID         = SCX_DSQ_FLAG_BUILTIN | 0,
        SCX_DSQ_GLOBAL          = SCX_DSQ_FLAG_BUILTIN | 1,
        SCX_DSQ_LOCAL           = SCX_DSQ_FLAG_BUILTIN | 2,
        SCX_DSQ_LOCAL_ON        = SCX_DSQ_FLAG_BUILTIN | SCX_DSQ_FLAG_LOCAL_ON,
        SCX_DSQ_LOCAL_CPU_MASK  = 0xffffffffLLU,
    };

| Constant | Value | Meaning |
| --- | --- | --- |
| SCX_DSQ_FLAG_BUILTIN | 1 << 63 | Marks a built-in DSQ |
| SCX_DSQ_FLAG_LOCAL_ON | 1 << 62 | Flag bit that marks a LOCAL_ON DSQ ID |
| SCX_DSQ_INVALID | SCX_DSQ_FLAG_BUILTIN \| 0 | Invalid DSQ |
| SCX_DSQ_GLOBAL | SCX_DSQ_FLAG_BUILTIN \| 1 | Global DSQ (one per system) |
| SCX_DSQ_LOCAL | SCX_DSQ_FLAG_BUILTIN \| 2 | Local DSQ (one per CPU) |
| SCX_DSQ_LOCAL_ON | SCX_DSQ_FLAG_BUILTIN \| SCX_DSQ_FLAG_LOCAL_ON | Base ID of a LOCAL_ON DSQ; OR it with a CPU number to address that CPU's local DSQ |
| SCX_DSQ_LOCAL_CPU_MASK | 0xffffffff | The low 32 bits of a LOCAL_ON ID, which hold the CPU number |
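To read the dsq_id values in the dump, the bit layout above can be turned into a small classifier. The constants mirror enum scx_dsq_id_flags; enum dsq_kind and classify_dsq are hypothetical names introduced only for this sketch.

```c
#include <stdint.h>

#define SCX_DSQ_FLAG_BUILTIN   (1ULL << 63)
#define SCX_DSQ_FLAG_LOCAL_ON  (1ULL << 62)
#define SCX_DSQ_INVALID        (SCX_DSQ_FLAG_BUILTIN | 0)
#define SCX_DSQ_GLOBAL         (SCX_DSQ_FLAG_BUILTIN | 1)
#define SCX_DSQ_LOCAL          (SCX_DSQ_FLAG_BUILTIN | 2)
#define SCX_DSQ_LOCAL_ON       (SCX_DSQ_FLAG_BUILTIN | SCX_DSQ_FLAG_LOCAL_ON)
#define SCX_DSQ_LOCAL_CPU_MASK 0xffffffffULL

enum dsq_kind {
    DSQ_USER,            /* created by the BPF scheduler */
    DSQ_INVALID,
    DSQ_GLOBAL,
    DSQ_LOCAL,
    DSQ_LOCAL_ON,        /* *cpu receives the CPU number */
    DSQ_UNKNOWN_BUILTIN,
};

/* Classify a DSQ ID; *cpu is set to -1 unless the ID is a LOCAL_ON ID. */
static enum dsq_kind classify_dsq(uint64_t id, int64_t *cpu)
{
    *cpu = -1;
    if (!(id & SCX_DSQ_FLAG_BUILTIN))
        return DSQ_USER;
    if (id & SCX_DSQ_FLAG_LOCAL_ON) {
        *cpu = (int64_t)(id & SCX_DSQ_LOCAL_CPU_MASK);
        return DSQ_LOCAL_ON;
    }
    switch (id) {
    case SCX_DSQ_INVALID: return DSQ_INVALID;
    case SCX_DSQ_GLOBAL:  return DSQ_GLOBAL;
    case SCX_DSQ_LOCAL:   return DSQ_LOCAL;
    default:              return DSQ_UNKNOWN_BUILTIN;
    }
}
```

Applied to the dump, the KVM vCPU threads with dsq_id=0x8000000000000002 sit on a local DSQ, while the kworker and ksoftirqd threads with dsq_id=0x0 sit on a user-created DSQ.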

dsq_id=0x0 is a DSQ created by the scheduler itself:

    #define SHARED_DSQ 0

    s32 BPF_STRUCT_OPS_SLEEPABLE(numa_aware_init)
    {
        return scx_bpf_create_dsq(SHARED_DSQ, -1);
    }

2.4 dsq_vtime=173738726 slice=14504448 weight=100

dsq_vtime=173738726: virtual runtime, in ns.

slice=14504448: the remaining time slice, in ns. The default is SCX_SLICE_DFL = 20 * 1000000, /* 20ms */

weight=100: the task weight, used when computing virtual runtime.
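How weight feeds into virtual runtime depends on the BPF scheduler. As an illustration only, the sketch below follows the charging rule used by the upstream scx_simple example scheduler; whether the scheduler in this article does the same is not shown, so treat it as an assumption. charge_vtime is a hypothetical helper, and it assumes slice_left <= SCX_SLICE_DFL and weight > 0.

```c
#include <stdint.h>

#define SCX_SLICE_DFL (20ULL * 1000000) /* 20 ms in ns */

/*
 * Advance vtime by the part of the slice that was consumed, scaled
 * inversely by the task weight (100 is the default weight).
 */
static uint64_t charge_vtime(uint64_t vtime, uint64_t slice_left,
                             uint32_t weight)
{
    uint64_t used = SCX_SLICE_DFL - slice_left;

    return vtime + used * 100 / weight;
}
```

For kworker/32:2, slice=14504448 means 5495552 ns of its last 20ms slice were consumed; at weight 100 that is charged one-to-one, while a task with weight 200 would be charged half as much.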

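2.5 cpus=00000000,00000000,00000001,00000000

The mask is printed as comma-separated 32-bit hexadecimal words with the highest-numbered CPUs on the left: the rightmost word covers CPUs 0-31 and the leftmost word covers CPUs 96-127 (this system has 128 CPUs). Each hex digit covers 4 CPUs. Here only bit 0 of the second word from the right is set, which is CPU 32. The cpus field carries the same information as the more readable output of the following command:

    [root@hadoop03 86288]# cat /proc/86288/status | grep Cpus_allowed
    Cpus_allowed: 00000000,00000000,00000001,00000000
    Cpus_allowed_list: 32
    [root@hadoop03 86288]#

Cpus_allowed_list shows that the task is allowed to run only on CPU 32 (CPUs are numbered from 0).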
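A short parser makes the word order concrete. This is a sketch for masks of up to 128 CPUs as printed in this dump; parse_cpumask and cpu_in_mask are hypothetical helpers, not kernel or libc functions.

```c
#include <stdbool.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

#define MAX_WORDS 4 /* 4 x 32 bits = 128 CPUs, as on this machine */

/*
 * Parse a mask such as "00000000,00000000,00000001,00000000" into
 * words[], where words[0] covers CPUs 0-31. Returns false on bad input.
 */
static bool parse_cpumask(const char *s, uint32_t words[MAX_WORDS])
{
    int n = 1;

    for (const char *p = s; *p; p++)
        if (*p == ',')
            n++;
    if (n > MAX_WORDS)
        return false;

    memset(words, 0, MAX_WORDS * sizeof(words[0]));
    /* The leftmost word is the most significant, so fill from the top. */
    for (int i = n - 1; i >= 0; i--) {
        char *end;
        unsigned long v = strtoul(s, &end, 16);

        if (end == s || v > 0xffffffffUL)
            return false;
        if (*end != (i > 0 ? ',' : '\0'))
            return false;
        words[i] = (uint32_t)v;
        if (i > 0)
            s = end + 1;
    }
    return true;
}

/* Test whether a CPU number is set in a parsed mask. */
static bool cpu_in_mask(const uint32_t words[MAX_WORDS], int cpu)
{
    if (cpu < 0 || cpu >= MAX_WORDS * 32)
        return false;
    return (words[cpu / 32] >> (cpu % 32)) & 1;
}
```

Parsing the kworker/32:2 mask sets only CPU 32, and the kworker/16:0 mask 00000000,00000000,00000000,00010000 sets only CPU 16, which agrees with the thread names.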

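2.6 Log summary

The kworker/32:2 thread is allowed to run only on CPU 32, is in the Running (runnable) state, and sits in the custom SHARED_DSQ. It was not scheduled for 30342ms, so the watchdog detected a task stall and raised the error.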

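III. Code analysis

1. The scheduler (debug build, not a release version)

The scheduler treats each of the 6 stress-test processes together with its threads as a group, places the group on a single node, and runs it on a designated CPU of that node.

The node and CPU are specified by task_ext_map.

The eBPF-side code is as follows: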

    /*
    {node, cpu}
    {0, 0},   // process a: node 0, cpu 0
    {1, 16},  // process b: node 1, cpu 16
    {2, 32},  // process c
    {3, 48},  // process d
    {4, 64},  // process e
    {5, 80},  // process f
    };
    */
    struct node_cpu_entry {
        s32 node;
        s32 cpu;
    };

    struct {
        __uint(type, BPF_MAP_TYPE_ARRAY);
        __uint(max_entries, 6);
        __type(key, u32);
        __type(value, struct node_cpu_entry);
    } node_cpu_table SEC(".maps");

User-space code:

    // === initialize node_cpu_table ===
    struct node_cpu_entry vals[6] = {
        {0, 0}, {1, 16}, {2, 32},
        {3, 48}, {4, 64}, {5, 80},
    };

    int map_fd = bpf_map__fd(skel->maps.node_cpu_table);
    if (map_fd < 0) {
        fprintf(stderr, "failed to get node_cpu_table fd\n");
        return 1;
    }

    for (u32 i = 0; i < 6; i++) {
        if (bpf_map_update_elem(map_fd, &i, &vals[i], BPF_ANY)) {
            perror("bpf_map_update_elem");
            return 1;
        }
    }
    // === initialization done ===

Stress-test process a and its threads run on node 0, CPU 0.

Stress-test process b and its threads run on node 1, CPU 16.

Stress-test process c and its threads run on node 2, CPU 32.

And so on.

    [root@hadoop03 ~]# bpftool map dump name task_ext_map
    [
        {"key": 79663, "value": {"preferred_node": 0, "preferred_cpu": 0}},
        {"key": 79761, "value": {"preferred_node": 2, "preferred_cpu": 32}},
        {"key": 79903, "value": {"preferred_node": 5, "preferred_cpu": 80}},
        {"key": 79714, "value": {"preferred_node": 1, "preferred_cpu": 16}},
        {"key": 79857, "value": {"preferred_node": 4, "preferred_cpu": 64}},
        {"key": 79809, "value": {"preferred_node": 3, "preferred_cpu": 48}}
    ]

2. Task enqueue operation

    void BPF_STRUCT_OPS(numa_aware_enqueue, struct task_struct *p, u64 enq_flags)
    {
        struct task_struct_ext *ext;
        u32 tgid = BPF_CORE_READ(p, tgid);

        ext = bpf_map_lookup_elem(&task_ext_map, &tgid);
        if (ext) {
            s32 target = ext->preferred_cpu;

            stat_inc(1);
            if (target >= 0 && bpf_cpumask_test_cpu(target, p->cpus_ptr)) {
                scx_bpf_dsq_insert(p, SCX_DSQ_LOCAL_ON | target, SCX_SLICE_DFL, enq_flags);
                scx_bpf_kick_cpu(target, SCX_KICK_IDLE);
            }
            return;
        }

        stat_inc(1); /* count global queueing */
        // If no suitable CPU was found, fall back to the shared queue
        scx_bpf_dsq_insert(p, SHARED_DSQ, 0, enq_flags);
    }

kworker/32:2 is not one of the stress-test processes, so numa_aware_enqueue puts it on SHARED_DSQ.

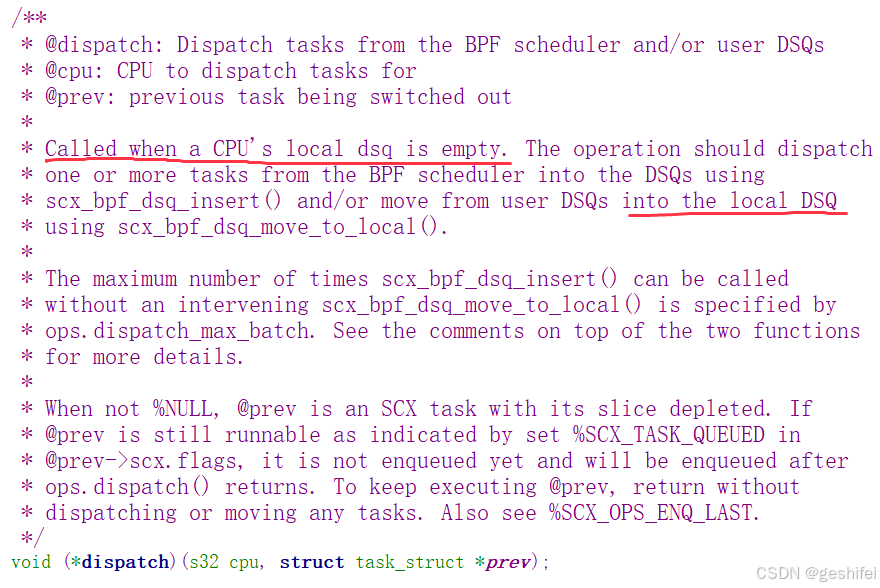
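The routing decision made by this enqueue path can be modelled in userspace. The sketch below hard-codes the task_ext_map contents from the bpftool dump and assumes the preferred CPU is always inside the task's cpumask; struct task_pref and route_original are hypothetical names for illustration.

```c
#include <stddef.h>
#include <stdint.h>

#define SHARED_DSQ        0ULL
#define SCX_DSQ_LOCAL_ON  ((1ULL << 63) | (1ULL << 62))

struct task_pref {
    uint32_t tgid;
    int32_t  preferred_cpu;
};

/* Contents of task_ext_map as shown by the bpftool dump in the article. */
static const struct task_pref prefs[] = {
    { 79663, 0 },  { 79714, 16 }, { 79761, 32 },
    { 79809, 48 }, { 79857, 64 }, { 79903, 80 },
};

/*
 * Return the DSQ the original enqueue path picks for a task with this
 * tgid: the preferred CPU's local DSQ if the tgid is in the map,
 * otherwise SHARED_DSQ.
 */
static uint64_t route_original(uint32_t tgid)
{
    for (size_t i = 0; i < sizeof(prefs) / sizeof(prefs[0]); i++)
        if (prefs[i].tgid == tgid)
            return SCX_DSQ_LOCAL_ON | (uint64_t)prefs[i].preferred_cpu;
    return SHARED_DSQ;
}
```

A thread of process 79761 is routed to CPU 32's local DSQ, while kworker/32:2 (tgid 86288, not in the map) is routed to SHARED_DSQ regardless of its CPU affinity.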

3. The dispatch function

When a CPU's local DSQ is empty, the sched_ext core calls dispatch() to refill that local DSQ. The CPU then takes the next task to run from its local DSQ.

    void BPF_STRUCT_OPS(numa_aware_dispatch, s32 cpu, struct task_struct *prev)
    {
        scx_bpf_dsq_move_to_local(SHARED_DSQ);
    }

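Inside dispatch() there are two ways to fill the CPU's local DSQ:

1) Insert a task directly:

    scx_bpf_dsq_insert(p, SCX_DSQ_LOCAL, slice, flags);

This inserts task p into the CPU's local DSQ (or into another DSQ).

The number of consecutive calls is limited by ops.dispatch_max_batch.

2) Pull a task from a user DSQ:

    scx_bpf_dsq_move_to_local(SHARED_DSQ);

This moves a task from a DSQ created by the scheduler (such as the shared queue here) to the local DSQ of the CPU that is dispatching.

Each call moves at most one task and returns true if a task was moved.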

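4. Dispatch flow on CPU 32

Once the stress test starts, CPU 32 is fully loaded, which means its local DSQ is never empty. As a result numa_aware_dispatch never gets a chance to pull tasks from SHARED_DSQ into CPU 32's local DSQ. kworker/32:2 happens to sit in SHARED_DSQ, so under high load CPU 32 never runs tasks from SHARED_DSQ, and therefore never runs kworker/32:2.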

5. Dispatch flow on CPUs other than CPU 32

The system has 128 CPUs. Apart from the 6 CPUs named in task_ext_map, the other CPUs are idle, so they do call numa_aware_dispatch to pull tasks from SHARED_DSQ into their local DSQs.

The call path is numa_aware_dispatch -> scx_bpf_dsq_move_to_local -> consume_dispatch_q. The following code walks the tasks in SHARED_DSQ and tries to pull one into the local DSQ:

    nldsq_for_each_task(p, dsq) {
        struct rq *task_rq = task_rq(p);

        if (rq == task_rq) {
            task_unlink_from_dsq(p, dsq);
            move_local_task_to_local_dsq(p, 0, dsq, rq);
            raw_spin_unlock(&dsq->lock);
            return true;
        }

        if (task_can_run_on_remote_rq(p, rq, false)) {
            if (likely(consume_remote_task(rq, p, dsq, task_rq)))
                return true;
            goto retry;
        }
    }

In this scenario the dispatching CPU is not CPU 32, so if (rq == task_rq) can never be true for kworker/32:2: rq is the runqueue of the dispatching CPU, while task_rq is the runqueue kworker/32:2 was last on, i.e. CPU 32's. Execution therefore reaches:

    if (task_can_run_on_remote_rq(p, rq, false)) {
        if (likely(consume_remote_task(rq, p, dsq, task_rq)))
            return true;
        goto retry;
    }

task_can_run_on_remote_rq -> task_allowed_on_cpu checks whether the task is allowed to run on the currently idle CPU (which is not CPU 32):

    static inline bool task_allowed_on_cpu(struct task_struct *p, int cpu)
    {
        /* When not in the task's cpumask, no point in looking further. */
        if (!cpumask_test_cpu(cpu, p->cpus_ptr))
            return false;

        /* Can @cpu run a user thread? */
        if (!(p->flags & PF_KTHREAD) && !task_cpu_possible(cpu, p))
            return false;

        return true;
    }

Because kworker/32:2 is allowed to run only on CPU 32, task_allowed_on_cpu returns false and consume_dispatch_q moves on to the next task in the DSQ. On this path, too, kworker/32:2 can never be picked up by any CPU other than CPU 32.
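The effect of this check on kworker/32:2 can be reproduced with a userspace model. struct task_model, cpu_possible_for_user, allowed_on_cpu and remote_cpus_allowed are hypothetical stand-ins for illustration; in particular, treating every CPU as able to run user threads is an assumption.

```c
#include <stdbool.h>
#include <stdint.h>

#define NR_CPUS 128

/* Hypothetical stand-in for the two task_struct fields the check reads. */
struct task_model {
    uint32_t cpus[NR_CPUS / 32]; /* cpus[0] covers CPUs 0-31 */
    bool     is_kthread;         /* models p->flags & PF_KTHREAD */
};

/* Assumption: every CPU can run user threads on this machine. */
static bool cpu_possible_for_user(int cpu)
{
    (void)cpu;
    return true;
}

/* Userspace model of the two tests made by task_allowed_on_cpu(). */
static bool allowed_on_cpu(const struct task_model *p, int cpu)
{
    if (!((p->cpus[cpu / 32] >> (cpu % 32)) & 1))
        return false;
    if (!p->is_kthread && !cpu_possible_for_user(cpu))
        return false;
    return true;
}

/* Count the CPUs other than home_cpu that could pull the task. */
static int remote_cpus_allowed(const struct task_model *p, int home_cpu)
{
    int n = 0;

    for (int cpu = 0; cpu < NR_CPUS; cpu++)
        if (cpu != home_cpu && allowed_on_cpu(p, cpu))
            n++;
    return n;
}
```

For a task whose mask contains only CPU 32, remote_cpus_allowed returns 0, so no idle CPU can ever consume it from SHARED_DSQ; for an unrestricted task it returns 127.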

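6. Conclusion

Under the stress test, CPU 32's local DSQ is never empty because of the high load, so dispatch is never called on CPU 32 and kworker/32:2 cannot run there. Some other CPUs are idle and do call dispatch, but kworker/32:2's CPU mask does not allow it to run on any CPU other than CPU 32, so they cannot run it either. No CPU in the system can run kworker/32:2, which produces the kworker/32:2 task stall.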
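The whole argument fits in a toy simulation. The model below is a deliberate simplification: a busy CPU never calls dispatch(), an idle CPU pulls the pinned task only if the affinity check passes, and the six busy CPUs are the ones listed in the article. struct sim, sim_init and pinned_task_runs are hypothetical names.

```c
#include <stdbool.h>
#include <string.h>

#define NR_CPUS 128

struct sim {
    bool local_busy[NR_CPUS]; /* true: local DSQ never drains, no dispatch() */
    int  pinned_cpu;          /* the only CPU the SHARED_DSQ task may run on */
};

/* Mark the six stress-test CPUs from the article as permanently busy. */
static void sim_init(struct sim *s, int pinned_cpu)
{
    static const int busy[] = { 0, 16, 32, 48, 64, 80 };

    memset(s, 0, sizeof(*s));
    s->pinned_cpu = pinned_cpu;
    for (size_t i = 0; i < sizeof(busy) / sizeof(busy[0]); i++)
        s->local_busy[busy[i]] = true;
}

/* Return true if any CPU picks up the pinned task within the given rounds. */
static bool pinned_task_runs(const struct sim *s, int rounds)
{
    for (int r = 0; r < rounds; r++) {
        for (int cpu = 0; cpu < NR_CPUS; cpu++) {
            if (s->local_busy[cpu])
                continue;    /* local DSQ not empty: dispatch() not called */
            if (cpu == s->pinned_cpu)
                return true; /* affinity check passes, task is pulled */
            /* any other idle CPU fails the affinity check and skips it */
        }
    }
    return false;
}
```

With CPU 32 busy, the task pinned to CPU 32 never runs no matter how many rounds are simulated; as soon as CPU 32's local DSQ is allowed to drain, it runs in the first round.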

7. Fix

Add handling for CPU-pinned tasks to numa_aware_enqueue. After a long soak test with this change, the problem was resolved.

    void BPF_STRUCT_OPS(numa_aware_enqueue, struct task_struct *p, u64 enq_flags)
    {
        int cpu;
        struct task_struct_ext *ext;
        u32 tgid = BPF_CORE_READ(p, tgid);

        ext = bpf_map_lookup_elem(&task_ext_map, &tgid);
        if (ext) {
            /* The task has an ext entry: put it on a local queue */
            s32 target = ext->preferred_cpu;

            stat_inc(1);
            if (target >= 0 && bpf_cpumask_test_cpu(target, p->cpus_ptr)) {
                if (fifo_sched) {
                    scx_bpf_dsq_insert(p, SHARED_DSQ, SCX_SLICE_DFL, enq_flags);
                } else {
                    scx_bpf_dsq_insert(p, SCX_DSQ_LOCAL_ON | target, SCX_SLICE_DFL, enq_flags);
                    scx_bpf_kick_cpu(target, SCX_KICK_IDLE);
                }
            }
            return;
        }

        /* Handle CPU-pinned tasks */
    #pragma unroll
        for (cpu = 0; cpu < 128; cpu++) { // the system has 128 CPUs
            if (bpf_cpumask_test_cpu(cpu, p->cpus_ptr)) {
                scx_bpf_dsq_insert(p, SCX_DSQ_LOCAL_ON | cpu, SCX_SLICE_DFL, enq_flags);
                scx_bpf_kick_cpu(cpu, SCX_KICK_IDLE);
                return;
            }
        }

        // If no suitable CPU was found, fall back to the shared queue
        scx_bpf_dsq_insert(p, SHARED_DSQ, SCX_SLICE_DFL, enq_flags);
    }
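One thing to watch in this fix: as written, the loop appears to pick the lowest-numbered allowed CPU, so a task that may run anywhere would be sent to CPU 0 and the SHARED_DSQ fallback would rarely be reached. A possible refinement, shown here only as a userspace model and not as the author's code, is to pin a task to a local DSQ only when its mask contains exactly one CPU. route_unmapped is a hypothetical helper; in BPF the equivalent test would presumably read p->nr_cpus_allowed, which is an assumption about how this could be adapted.

```c
#include <stdint.h>

#define NR_WORDS          4 /* 4 x 32 bits = 128 CPUs */
#define SHARED_DSQ        0ULL
#define SCX_DSQ_LOCAL_ON  ((1ULL << 63) | (1ULL << 62))

/*
 * Pick a DSQ for a task that has no task_ext_map entry.
 * cpus[0] covers CPUs 0-31.
 */
static uint64_t route_unmapped(const uint32_t cpus[NR_WORDS])
{
    int count = 0, first = -1;

    for (int cpu = 0; cpu < NR_WORDS * 32; cpu++) {
        if ((cpus[cpu / 32] >> (cpu % 32)) & 1) {
            if (first < 0)
                first = cpu;
            count++;
        }
    }

    /* Only a task pinned to exactly one CPU goes to that CPU's local DSQ. */
    if (count == 1)
        return SCX_DSQ_LOCAL_ON | (uint64_t)first;
    return SHARED_DSQ;
}
```

Under this rule kworker/32:2 still lands on CPU 32's local DSQ, while unrestricted tasks keep using SHARED_DSQ. A task restricted to a few CPUs that are all busy could still stall, so this sketch narrows the problem rather than ruling it out.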