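Installing Kubernetes 1.27.1 on CentOS 7 with kubeadm

1. Prepare the machines

| Host | Description |
|---|---|
| 192.168.65.137 | master node; needs internet access; official minimum is 2 CPUs and 2 GB RAM |
| 192.168.65.163 | worker node; needs internet access; official minimum is 2 CPUs and 2 GB RAM |
| 192.168.65.197 | worker node; needs internet access; official minimum is 2 CPUs and 2 GB RAM |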

2. Server environment configuration

2.1 Disable the firewall (all nodes)

Stop the firewall and keep it from starting at boot:

systemctl stop firewalld

systemctl disable firewalld

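2.3 Disable the swap partition (all nodes)

swapoff -a turns swap off immediately; the /etc/fstab change makes it permanent and applies after a reboot.

swapoff -a

# Disable swap permanently: delete or comment out the swap mount entry in /etc/fstab

vim /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0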
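The manual fstab edit can also be scripted. The following is a minimal sketch, assuming a standard six-field fstab layout; the function name `comment_out_swap` and the `.bak` backup suffix are illustrative choices, not part of the original procedure.

```shell
# Comment out every active swap entry in an fstab-style file.
# Usage: comment_out_swap /etc/fstab
comment_out_swap() {
  fstab="${1:-/etc/fstab}"
  tmp="$(mktemp)"
  # Keep a backup before touching the file.
  cp "$fstab" "$fstab.bak"
  # Prefix '#' to uncommented lines whose third field (filesystem type) is "swap".
  sed -E 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' \
    "$fstab" > "$tmp" && cat "$tmp" > "$fstab"
  rm -f "$tmp"
}
```

Running `comment_out_swap /etc/fstab` as root after `swapoff -a` should leave all non-swap entries untouched.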

2.4 Upgrade the CentOS 7 kernel (all nodes)

The 3.10.x kernel shipped with CentOS 7.x has bugs that make Docker and Kubernetes unstable and can prevent kube-proxy from forwarding traffic.

# Check the current kernel version

[root@k8s-master ~]# uname -a

Linux worker01 3.10.0-1160.el7.x86_64 #1 SMP Mon Oct 19 16:18:59 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

# List the kernel versions yum can upgrade to

yum list kernel --showduplicates

# If the list contains the version you need, upgrade directly with yum update. Usually it does not, so follow the steps below.

# Import the ELRepo public key

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

# Install ELRepo on CentOS 7

yum install https://www.elrepo.org/elrepo-release-7.el7.elrepo.noarch.rpm

# Install ELRepo on CentOS 8

yum install https://www.elrepo.org/elrepo-release-8.el8.elrepo.noarch.rpm

# List the kernel versions ELRepo provides

yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

# kernel-lt: longterm, the long-term support kernel

# kernel-ml: mainline, the current mainline kernel

# Install the mainline kernel (on 32-bit systems install kernel-ml)

yum --enablerepo=elrepo-kernel install kernel-ml.x86_64

# List the kernels available on the system and set the boot entry

[root@k8s-master ~]# grub2-mkconfig -o /boot/grub2/grub.cfg

[root@k8s-master ~]# sudo awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

0 : CentOS Linux (6.4.2-1.el7.elrepo.x86_64) 7 (Core)

1 : CentOS Linux (3.10.0-1160.el7.x86_64) 7 (Core)

2 : CentOS Linux (0-rescue-39c2c38c09f24319a63e3eaeee20324f) 7 (Core)

# Choose the kernel to boot by default

# Back up the grub defaults first

cp /etc/default/grub /etc/default/grub-bak

# Entry 0 in the list above is the new kernel

grub2-set-default 0

# Regenerate the grub configuration

grub2-mkconfig -o /boot/grub2/grub.cfg

# Refresh the yum cache and reboot

yum makecache

reboot

# Verify the kernel

[root@k8s-master ~]# uname -a

Linux 192-168-65-137 6.4.2-1.el7.elrepo.x86_64 #1 SMP PREEMPT_DYNAMIC Wed Jul 5 16:22:27 EDT 2023 x86_64 x86_64 x86_64 GNU/Linux
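The kernel check after the reboot can be automated on each node. This is a small sketch that compares version strings with `sort -V` (GNU coreutils); the function name `kernel_at_least` and the 4.19 threshold in the usage line are assumptions chosen for illustration, not a requirement stated by this guide.

```shell
# Succeed if kernel release $1 is at least version $2.
kernel_at_least() {
  current="${1%%-*}"   # strip the release suffix: 6.4.2-1.el7.elrepo.x86_64 -> 6.4.2
  minimum="$2"
  # sort -V orders version strings; if the minimum sorts first (or ties), the check passes.
  [ "$(printf '%s\n%s\n' "$minimum" "$current" | sort -V | head -n1)" = "$minimum" ]
}
```

For example, `kernel_at_least "$(uname -r)" 4.19 || echo "kernel upgrade still needed"` could be run on every node before continuing.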

2.5 Set the hostname (all nodes)

# Each node must have a different name

hostnamectl set-hostname k8s-master

# Configure hosts

vim /etc/hosts

192.168.65.137 k8s-master

192.168.65.163 k8s-node1

192.168.65.197 k8s-node2
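Because the same three entries go into /etc/hosts on every node, appending them from a script avoids typos. The helper below is a sketch; `add_host_entry` is a hypothetical name, and the third argument exists only so the function can be tried on a scratch file first.

```shell
# Append "IP NAME" to a hosts file unless the hostname is already mapped there.
add_host_entry() {
  ip="$1"
  name="$2"
  file="${3:-/etc/hosts}"
  # Skip if some line already contains this exact hostname as a field.
  if grep -Eq "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Calling `add_host_entry 192.168.65.137 k8s-master`, then the same for `k8s-node1` and `k8s-node2`, is safe to repeat because existing entries are left alone.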

2.6 Synchronize time (all nodes)

yum install ntpdate -y && ntpdate time.windows.com

2.7 Configure iptables rules

iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat && iptables -P FORWARD ACCEPT

# Set kernel parameters

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

# Apply the bridge settings

sysctl --system
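The `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded, and Kubernetes networking also expects IPv4 forwarding to be on. The sketch below writes both settings persistently; it assumes the module is not yet loaded on a fresh node. The function name `write_k8s_net_config` and its optional root-prefix argument are illustrative and exist only so the function can be tried against a scratch directory.

```shell
# Write the module-load and sysctl files needed for bridged pod traffic.
# $1 is an optional root prefix; leave it empty to write to the real /etc.
write_k8s_net_config() {
  root="${1:-}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # Load br_netfilter at boot so the net.bridge.* sysctl keys are available.
  printf 'br_netfilter\n' > "$root/etc/modules-load.d/k8s.conf"
  cat > "$root/etc/sysctl.d/k8s.conf" <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

On a real node this would be followed by `modprobe br_netfilter && sysctl --system` to apply the settings without a reboot.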

3. Install Docker (all nodes)

3.1 Install docker-ce

Install the required package; yum-utils provides yum-config-manager.

yum install -y yum-utils

# Add the yum repository and refresh the package index

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum makecache fast

# List the Docker versions in the repository and pick one to install

yum list docker-ce --showduplicates | sort -r

# Install the chosen Docker version

yum install -y docker-ce-3:24.0.2-1.el7.x86_64

3.2 Configure the registry mirror and cgroup driver

# Create the file if it does not exist

[root@k8s-master ~]# vim /etc/docker/daemon.json

{
  "registry-mirrors": ["https://jbw52uwf.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}

# "exec-opts": ["native.cgroupdriver=systemd"] sets the cgroup driver Docker uses. The kubelet also uses systemd, and the two must match.

# Restart Docker and enable it at boot

systemctl restart docker

systemctl enable docker

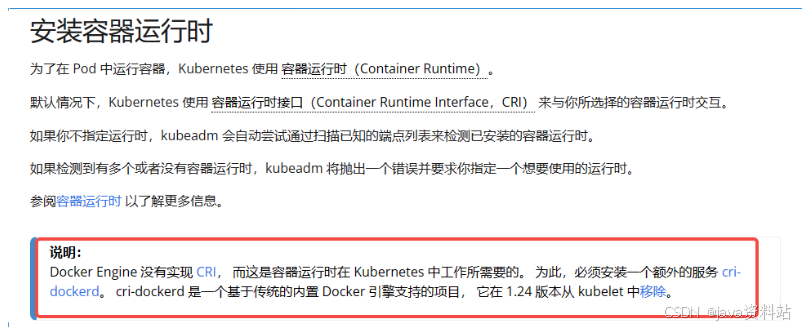

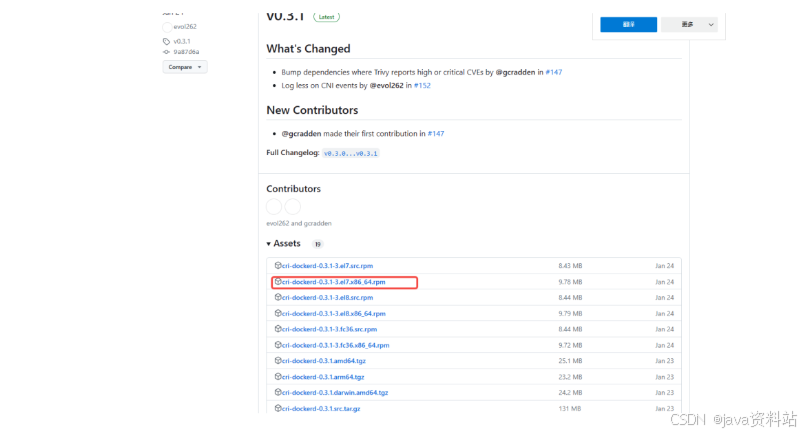

4. Install cri-dockerd (all nodes)

Kubernetes removed the built-in dockershim in v1.24, so using Docker Engine as the runtime now requires the cri-dockerd adapter, which must be installed manually.

# https://github.com/Mirantis/cri-dockerd/releases

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.1/cri-dockerd-0.3.1-3.el7.x86_64.rpm

rpm -ivh cri-dockerd-0.3.1-3.el7.x86_64.rpm

# Edit the ExecStart line in /usr/lib/systemd/system/cri-docker.service

vim /usr/lib/systemd/system/cri-docker.service

ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7

# Reload systemd and start cri-docker now and at boot

systemctl daemon-reload

systemctl enable --now cri-docker
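Editing the packaged unit file works, but a package update may overwrite it. An alternative is a systemd drop-in, sketched below under the assumption that the same ExecStart line as above is wanted. The function name and the drop-in file name `10-k8s.conf` are hypothetical; the empty `ExecStart=` line is what clears the packaged command before the new one is set.

```shell
# Write a systemd drop-in that overrides ExecStart for cri-docker.service.
# $1 is the drop-in directory; the default is the standard systemd location.
write_cri_dockerd_dropin() {
  dir="${1:-/etc/systemd/system/cri-docker.service.d}"
  mkdir -p "$dir"
  cat > "$dir/10-k8s.conf" <<'EOF'
[Service]
ExecStart=
ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7
EOF
}
```

After `write_cri_dockerd_dropin`, run `systemctl daemon-reload && systemctl restart cri-docker` so the override takes effect.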

5. Install kubeadm, kubelet, and kubectl with yum (all nodes)

# Set SELinux to permissive mode

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

# Configure the k8s yum repository

vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

# List the available kubeadm versions

yum list --showduplicates | grep kubeadm

# Without a version yum installs the latest release; install k8s 1.27.1 explicitly

sudo yum install -y kubelet-1.27.1 kubeadm-1.27.1 kubectl-1.27.1

# Enable kubelet at boot

sudo systemctl enable --now kubelet

# Point crictl at cri-dockerd, the runtime endpoint used throughout this guide

crictl config runtime-endpoint unix:///var/run/cri-dockerd.sock

# Check the version

kubeadm version

# Pre-pull the images k8s needs from the given image repository

kubeadm config images pull --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers --cri-socket unix:///var/run/cri-dockerd.sock

6. Initialize the control plane (master node)

# kubeadm init --help shows the full list of parameters

# Run the initialization on the master node only (not on worker nodes)

# --apiserver-advertise-address: the IP the API server advertises, i.e. the master node's IP

# --image-repository: use a mirror registry reachable from mainland China

# --kubernetes-version: the k8s version, which must match the kubeadm version

# --service-cidr: the virtual IP range for Services

# --pod-network-cidr: the IP range for Pods; the network plugin must be configured with the same range

# --cri-socket: use cri-dockerd as the CRI

kubeadm init \
--apiserver-advertise-address=192.168.65.137 \
--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
--kubernetes-version v1.27.1 \
--service-cidr=10.96.0.0/12 \
--pod-network-cidr=10.244.0.0/16 \
--cri-socket unix:///var/run/cri-dockerd.sock \
--ignore-preflight-errors=all

# If something goes wrong, check the kubelet logs

journalctl -xefu kubelet

The command returns:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.65.137:6443 --token 9rde5p.16x7v9bh3bt1kwdn \
--discovery-token-ca-cert-hash sha256:eb1601e65e9a4e47504d270b0972a01c6476d35fc717462621a8d6920320c4b4

This output confirms that initialization succeeded.
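The `kubeadm init` flags used in this section can also be kept in a configuration file, which is easier to review and reuse. The sketch below writes such a file using the kubeadm `v1beta3` config API with the same values as the flags; the function name and the default file name `kubeadm-config.yaml` are illustrative assumptions.

```shell
# Write a kubeadm configuration file mirroring the init flags used in this guide.
write_kubeadm_config() {
  out="${1:-kubeadm-config.yaml}"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.65.137
nodeRegistration:
  criSocket: unix:///var/run/cri-dockerd.sock
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.27.1
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
EOF
}
```

With the file written, `kubeadm init --config kubeadm-config.yaml` would replace the long flag list.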

# Run on the master node

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

export KUBECONFIG=/etc/kubernetes/admin.conf

# Run on each worker node that should join the cluster (not on the master)

# --cri-socket unix:///var/run/cri-dockerd.sock tells kubeadm which CRI socket to use, here cri-dockerd, the adapter between the kubelet and the Docker daemon.

kubeadm join 192.168.65.137:6443 --token 9rde5p.16x7v9bh3bt1kwdn \
--discovery-token-ca-cert-hash sha256:eb1601e65e9a4e47504d270b0972a01c6476d35fc717462621a8d6920320c4b4 \
--cri-socket unix:///var/run/cri-dockerd.sock

# If you have lost the token above, or a new worker node needs to join, run this on the master to generate a new join command

kubeadm token create --print-join-command

# List pods in all namespaces

kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-65dcc469f7-mff2n 1/1 Running 0 69m

kube-system coredns-65dcc469f7-r5gl9 1/1 Running 0 69m

kube-system etcd-k8s-m1 1/1 Running 1 71m

kube-system kube-apiserver-k8s-m1 1/1 Running 2 71m

kube-system kube-controller-manager-k8s-m1 1/1 Running 27 (70m ago) 71m

kube-system kube-proxy-np46m 1/1 Running 0 52s

kube-system kube-proxy-wbp65 1/1 Running 0 69m

kube-system kube-proxy-xwqc7 1/1 Running 0 2m5s

kube-system kube-scheduler-k8s-m1 1/1 Running 26 71m

# If a pod fails to start, inspect its details with the command below

kubectl describe pod coredns-65dcc469f7-mff2n -n kube-system
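The join command printed by `kubeadm token create --print-join-command` typically carries only the token and CA hash, so on hosts with several CRI sockets the `--cri-socket` option still has to be added by hand. The helper below is a sketch of that step; `with_cri_socket` is a hypothetical name and the default socket path is the one used in this guide.

```shell
# Append --cri-socket to a kubeadm join command unless it is already present.
with_cri_socket() {
  join_cmd="$1"
  socket="${2:-unix:///var/run/cri-dockerd.sock}"
  case "$join_cmd" in
    *--cri-socket*) printf '%s\n' "$join_cmd" ;;
    *) printf '%s --cri-socket %s\n' "$join_cmd" "$socket" ;;
  esac
}
```

On the master, `with_cri_socket "$(kubeadm token create --print-join-command)"` prints a command that can be pasted on the worker node.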

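7. Install a network plugin

Install either flannel or calico; one is enough.

flannel and calico are the most common choices today. flannel is simple: it cannot express complex network configurations and does not support network policies. calico is a more capable network plugin, but that capability comes with a more complex configuration. A small, simple cluster is therefore usually fine with flannel; if you expect to scale out, add more devices to the network, or configure more policies later, calico is the better choice.

flannel plugin

Official flannel address for k8s

# Download flannel

wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

# Set Network to the pod address range chosen at initialization (--pod-network-cidr)

vim kube-flannel.yml

...

  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

...

# Install flannel

kubectl apply -f kube-flannel.yml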
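If the pod CIDR differs from flannel's default, the `Network` value can be changed with sed before applying the manifest instead of editing it in vim. This is a sketch that assumes the key appears in the manifest as `"Network": "<cidr>"`; `set_flannel_network` is a hypothetical helper name.

```shell
# Set the "Network" value inside net-conf.json in a flannel manifest.
# Usage: set_flannel_network kube-flannel.yml 10.244.0.0/16
set_flannel_network() {
  file="$1"
  cidr="$2"
  tmp="$(mktemp)"
  # Replace only the quoted value that follows the "Network" key.
  sed -E "s#(\"Network\":[[:space:]]*\")[^\"]*(\")#\1${cidr}\2#" "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

The value passed here must equal the `--pod-network-cidr` given to `kubeadm init`.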

calico plugin

File shared via Baidu Netdisk: calico.yaml

Link: https://pan.baidu.com/s/1XC4yoTGxkmhHxo-86j2Bpg Extraction code: gi4k

# Download calico.yaml

wget https://docs.projectcalico.org/v3.25/manifests/calico.yaml --no-check-certificate

# List the images calico uses

grep image: calico.yaml

image: docker.io/calico/cni:v3.25.0

image: docker.io/calico/cni:v3.25.0

image: docker.io/calico/node:v3.25.0

image: docker.io/calico/node:v3.25.0

image: docker.io/calico/kube-controllers:v3.25.0

# The default calico.yaml manifest does not require a Pod subnet to be set by hand (set CALICO_IPV4POOL_CIDR if you need one). By default it uses

# the value of kube-controller-manager's "--cluster-cidr" option, i.e. the "--pod-network-cidr" passed to kubeadm init or the "podSubnet" value in the kubeadm configuration file.

vim calico.yaml

...

# Set the value to the pod address range chosen at initialization

- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"

...

# Install calico

kubectl apply -f calico.yaml

# List all pods

kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-6c99c8747f-lkq25 1/1 Running 2 (4m14s ago) 10m

kube-system calico-node-854xw 1/1 Running 1 (4m47s ago) 10m

kube-system coredns-65dcc469f7-9w584 1/1 Running 0 60m

kube-system coredns-65dcc469f7-s44ww 1/1 Running 0 60m

kube-system etcd-k8s-m1 1/1 Running 1 60m

kube-system kube-apiserver-k8s-m1 1/1 Running 5 (63m ago) 60m

kube-system kube-controller-manager-k8s-m1 1/1 Running 44 (9m14s ago) 60m

kube-system kube-proxy-zqpvp 1/1 Running 0 60m

kube-system kube-scheduler-k8s-m1 1/1 Running 43 (5m30s ago) 26s

# When reinstalling calico, remove the old configuration first

rm -rf /etc/cni/net.d/

rm -rf /var/lib/calico
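The CALICO_IPV4POOL_CIDR edit described above can be scripted as well. The sketch below assumes the v3.25 manifest ships the setting commented out as `# - name: CALICO_IPV4POOL_CIDR` followed by `#   value: "192.168.0.0/16"`; if your copy differs, the expressions need adjusting. `set_calico_pool_cidr` is a hypothetical helper name.

```shell
# Uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set its value.
# Usage: set_calico_pool_cidr calico.yaml 10.244.0.0/16
set_calico_pool_cidr() {
  file="$1"
  cidr="$2"
  tmp="$(mktemp)"
  sed -E \
    -e 's|^([[:space:]]*)# (- name: CALICO_IPV4POOL_CIDR)|\1\2|' \
    -e "s|^([[:space:]]*)#   value: \"192\.168\.0\.0/16\"|\1  value: \"${cidr}\"|" \
    "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

The two expressions keep the original indentation, so the uncommented entry stays aligned with the other environment variables in the manifest.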

Verify that the cluster is usable

# Check the nodes

# A STATUS of Ready means the cluster is running normally

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-m1 Ready control-plane 73m v1.27.1

k8s-n1 Ready <none> 2m58s v1.27.1

k8s-n2 Ready <none> 4m12s v1.27.1查看集群健康情况

kubectl get cs

NAME STATUS MESSAGE ERROR

etcd-0 Healthy {"health":"true","reason":""}

scheduler Healthy ok

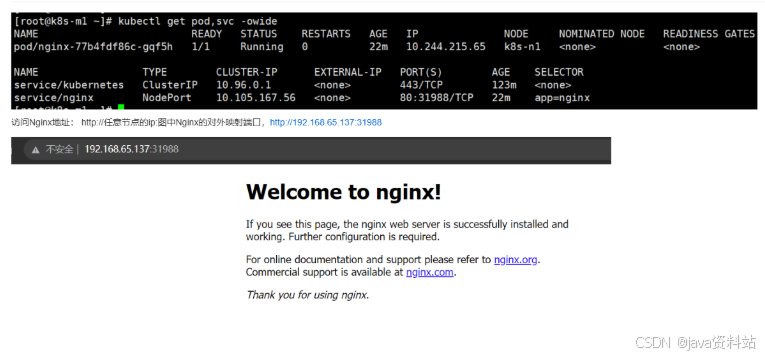

controller-manager Healthy ok**8.测试:**用K8S部署Nginx

Run on the k8s-master machine:

# Create a deployment

kubectl create deployment nginx --image=nginx

# --port is the port exposed on the Service's virtual IP

kubectl expose deployment nginx --port=80 --type=NodePort

# Show the Nginx pod and service

kubectl get pod,svc -owide
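To confirm that Nginx is reachable, the NodePort assigned to the Service has to be read from the PORT(S) column. The helper below is a sketch that parses one line of `kubectl get svc --no-headers` output; the function name and the port 31234 mentioned in its comment are illustrative, since the real port is assigned by the cluster.

```shell
# Print the node port from one line of `kubectl get svc --no-headers` output.
# Column 5 is PORT(S), for example 80:31234/TCP.
node_port_from_svc_line() {
  printf '%s\n' "$1" | awk '{ split($5, a, /[:\/]/); print a[2] }'
}
```

A possible use is `curl "http://192.168.65.137:$(node_port_from_svc_line "$(kubectl get svc nginx --no-headers)")"`, which should return the Nginx welcome page from any node IP.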

9. Common errors

- After installing the flannel plugin, a pod reports: loadFlannelSubnetEnv failed: open /run/flannel/subnet.env: no such file or directory

Check whether every node, including the master, has /run/flannel/subnet.env. Its content should look like this:

FLANNEL_NETWORK=10.244.0.0/16

FLANNEL_SUBNET=10.244.0.1/24

FLANNEL_MTU=1450

FLANNEL_IPMASQ=true

If a node lacks the file, copy it over from another node. Note that FLANNEL_SUBNET is specific to each node.

- Failed to pull image "docker.io/flannel/flannel:v0.22.0"

# Pull the flannel image locally with docker

docker pull docker.io/flannel/flannel:v0.22.0

- Found multiple CRI endpoints on the host. Please define which one do you wish to use by setting the 'criSocket' field in the kubeadm configuration file: unix:///var/run/containerd/containerd.sock, unix:///var/run/cri-dockerd.sock To see the stack trace of this error execute with --v=5 or higher

Cause: the host has more than one CRI socket and kubeadm was not told to use cri-dockerd.

Fix: append the following option to the command

--cri-socket unix:///var/run/cri-dockerd.sock

- Running kubectl on a worker node fails with: couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

echo "export KUBECONFIG=/etc/kubernetes/kubelet.conf" >> /etc/profile

source /etc/profile

Enable kubectl auto-completion

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

10. Reset the k8s cluster

# Run on the master node

kubeadm reset --cri-socket unix:///var/run/cri-dockerd.sock

Manually clean up the remaining configuration

kubeadm reset does not clean up the CNI configuration, iptables rules, IPVS tables, or kubeconfig files. Clean them up manually with the following steps:

1. Clean up the CNI configuration: remove the CNI configuration files under /etc/cni/net.d:

rm -rf /etc/cni/net.d

2. Clean up iptables rules: reset them with the iptables command:

iptables -F

iptables -X

iptables -t nat -F

iptables -t nat -X

iptables -t mangle -F

iptables -t mangle -X

iptables -P INPUT ACCEPT

iptables -P FORWARD ACCEPT

iptables -P OUTPUT ACCEPT

3. Clean up the IPVS tables (only for clusters that use IPVS): reset them with:

ipvsadm --clear

4. Clean up the kubeconfig file: inspect $HOME/.kube/config and delete or clean it up as needed:

rm $HOME/.kube/config

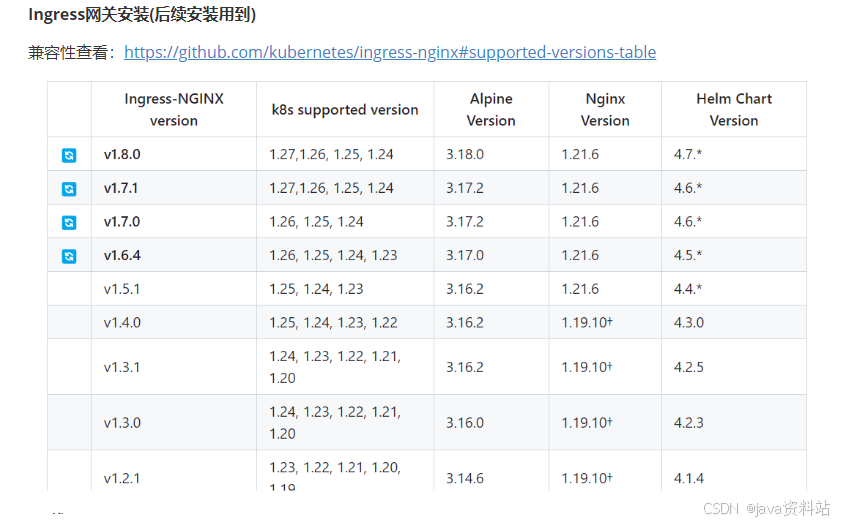

Installing the Ingress gateway (used in later installations)

Compatibility table: https://github.com/kubernetes/ingress-nginx#supported-versions-table

Download deploy.yaml:

https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.8.0/deploy/static/provider/aws/nlb-with-tls-termination/deploy.yaml

Alternatively, use the deploy.yaml provided here (v1.8.0):

apiVersion: v1
kind: Namespace
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  name: ingress-nginx
---
apiVersion: v1
automountServiceAccountToken: true
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-admission
  namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resourceNames:
  - ingress-nginx-leader
  resources:
  - leases
  verbs:
  - get
  - update
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-admission
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - secrets
  verbs:
  - get
  - create

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-admission
rules:
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - validatingwebhookconfigurations
  verbs:
  - get
  - update

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-admission
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-admission
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx

---

apiVersion: v1
data:
  allow-snippet-annotations: "true"
  http-snippet: |
    server {
      listen 2443;
      return 308 https://$host$request_uri;
    }
  proxy-real-ip-cidr: 192.168.0.0/16
  use-forwarded-headers: "true"
kind: ConfigMap
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-controller
  namespace: ingress-nginx
---
apiVersion: v1
kind: Service
metadata:
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-connection-idle-timeout: "60"
    service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"
    service.beta.kubernetes.io/aws-load-balancer-ssl-cert: arn:aws:acm:us-west-2:XXXXXXXX:certificate/XXXXXX-XXXXXXX-XXXXXXX-XXXXXXXX
    service.beta.kubernetes.io/aws-load-balancer-ssl-ports: https
    service.beta.kubernetes.io/aws-load-balancer-type: nlb
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  externalTrafficPolicy: Local
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - appProtocol: http
    name: http
    port: 80
    protocol: TCP
    targetPort: tohttps
  - appProtocol: https
    name: https
    port: 443
    protocol: TCP
    targetPort: http
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: NodePort
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  ports:
  - appProtocol: https
    name: https-webhook
    port: 443
    targetPort: webhook
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: ClusterIP

---

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  minReadySeconds: 0
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/name: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.0
    spec:
      containers:
      - args:
        - /nginx-ingress-controller
        - --publish-service=$(POD_NAMESPACE)/ingress-nginx-controller
        - --election-id=ingress-nginx-leader
        - --controller-class=k8s.io/ingress-nginx
        - --ingress-class=nginx
        - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
        - --validating-webhook=:8443
        - --validating-webhook-certificate=/usr/local/certificates/cert
        - --validating-webhook-key=/usr/local/certificates/key
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: LD_PRELOAD
          value: /usr/local/lib/libmimalloc.so
        image: giantswarm/ingress-nginx-controller:v1.8.1
        imagePullPolicy: IfNotPresent
        lifecycle:
          preStop:
            exec:
              command:
              - /wait-shutdown
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        name: controller
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
        - containerPort: 80
          name: https
          protocol: TCP
        - containerPort: 2443
          name: tohttps
          protocol: TCP
        - containerPort: 8443
          name: webhook
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources:
          requests:
            cpu: 100m
            memory: 90Mi
        securityContext:
          allowPrivilegeEscalation: true
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - ALL
          runAsUser: 101
        volumeMounts:
        - mountPath: /usr/local/certificates/
          name: webhook-cert
          readOnly: true
      dnsPolicy: ClusterFirst
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
      - name: webhook-cert
        secret:
          secretName: ingress-nginx-admission

---

apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-admission-create
  namespace: ingress-nginx
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.0
      name: ingress-nginx-admission-create
    spec:
      containers:
      - args:
        - create
        - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
        - --namespace=$(POD_NAMESPACE)
        - --secret-name=ingress-nginx-admission
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: dyrnq/kube-webhook-certgen:v20230407
        imagePullPolicy: IfNotPresent
        name: create
        securityContext:
          allowPrivilegeEscalation: false
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: OnFailure
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
      serviceAccountName: ingress-nginx-admission
---
apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-admission-patch
  namespace: ingress-nginx
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.8.0
      name: ingress-nginx-admission-patch
    spec:
      containers:
      - args:
        - patch
        - --webhook-name=ingress-nginx-admission
        - --namespace=$(POD_NAMESPACE)
        - --patch-mutating=false
        - --secret-name=ingress-nginx-admission
        - --patch-failure-policy=Fail
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: dyrnq/kube-webhook-certgen:v20230407
        imagePullPolicy: IfNotPresent
        name: patch
        securityContext:
          allowPrivilegeEscalation: false
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: OnFailure
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
      serviceAccountName: ingress-nginx-admission

---

apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: nginx
spec:
  controller: k8s.io/ingress-nginx
---
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-admission
webhooks:
- admissionReviewVersions:
  - v1
  clientConfig:
    service:
      name: ingress-nginx-controller-admission
      namespace: ingress-nginx
      path: /networking/v1/ingresses
  failurePolicy: Fail
  matchPolicy: Equivalent
  name: validate.nginx.ingress.kubernetes.io
  rules:
  - apiGroups:
    - networking.k8s.io
    apiVersions:
    - v1
    operations:
    - CREATE
    - UPDATE
    resources:
    - ingresses
  sideEffects: None

Install ingress

# List the images the upstream manifest uses; registry.k8s.io cannot be pulled from mainland China

grep image: deploy.yaml

image: registry.k8s.io/ingress-nginx/controller:v1.8.1@sha256:e5c4824e7375fcf2a393e1c03c293b69759af37a9ca6abdb91b13d78a93da8bd

image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20230407@sha256:543c40fd093964bc9ab509d3e791f9989963021f1e9e4c9c7b6700b02bfb227b

image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20230407@sha256:543c40fd093964bc9ab509d3e791f9989963021f1e9e4c9c7b6700b02bfb227b

# Because the default registry is unreachable from mainland China, switch to the equivalent images on Docker Hub

docker pull giantswarm/ingress-nginx-controller:v1.8.1

docker pull dyrnq/kube-webhook-certgen:v20230407

# Install

kubectl apply -f deploy.yaml

# Check that the installation succeeded

kubectl get pods -n ingress-nginx -owide
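If you start from the upstream deploy.yaml rather than the copy provided here, the image references have to be pointed at the Docker Hub mirrors before applying it. The sketch below does that with sed; `use_mirror_images` is a hypothetical helper name, and it drops the `@sha256` digests on the assumption that the mirrored images may not share the upstream digests.

```shell
# Rewrite registry.k8s.io ingress-nginx images in a manifest to the Docker Hub
# mirrors used in this guide, keeping the tag and dropping the digest.
use_mirror_images() {
  file="$1"
  tmp="$(mktemp)"
  sed -E \
    -e 's#registry\.k8s\.io/ingress-nginx/controller:([^@[:space:]]+)(@sha256:[0-9a-f]+)?#giantswarm/ingress-nginx-controller:\1#' \
    -e 's#registry\.k8s\.io/ingress-nginx/kube-webhook-certgen:([^@[:space:]]+)(@sha256:[0-9a-f]+)?#dyrnq/kube-webhook-certgen:\1#' \
    "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

After `use_mirror_images deploy.yaml`, running `grep image: deploy.yaml` again should list only the giantswarm and dyrnq images.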
