Using a data breakpoint to debug how __state changes when a task is woken up

1. Background

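When Linux runs in kernel mode, there are many situations in which a task is switched out with D (TASK_UNINTERRUPTIBLE) as its prev_state. A task in the D state cannot be killed with an ordinary kill. D-state tasks are normally woken with wake_up_process, and wake_up_process is just a call to try_to_wake_up.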
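To make the difference between an ordinary kill and wake_up_process concrete, here is a small user-space model of the state-mask check that try_to_wake_up performs before doing anything else. It is a sketch under assumptions: the constant values mirror recent versions of include/linux/sched.h, the two masks mirror what wake_up_process and the fatal-signal path pass to try_to_wake_up as far as I know, and model_state_match is a hypothetical name rather than a kernel function. Check your own tree before relying on any of them.

```c
#include <assert.h>
#include <stdbool.h>

/* Assumed values, taken from recent include/linux/sched.h. */
#define TASK_RUNNING          0x0000u
#define TASK_INTERRUPTIBLE    0x0001u
#define TASK_UNINTERRUPTIBLE  0x0002u   /* the D state */
#define TASK_WAKEKILL         0x0100u
#define TASK_NORMAL   (TASK_INTERRUPTIBLE | TASK_UNINTERRUPTIBLE)
#define TASK_KILLABLE (TASK_WAKEKILL | TASK_UNINTERRUPTIBLE)

/* Mask that wake_up_process() hands to try_to_wake_up(). */
#define WAKE_UP_PROCESS_MASK  TASK_NORMAL
/* Mask used when a fatal signal is delivered (assumption). */
#define FATAL_SIGNAL_MASK     (TASK_INTERRUPTIBLE | TASK_WAKEKILL)

/* Hypothetical model of the check: the wakeup only proceeds when the
 * sleeper's current state shares at least one bit with the waker's mask. */
bool model_state_match(unsigned int task_state, unsigned int wake_mask)
{
	return (task_state & wake_mask) != 0;
}

/* Sanity check of the three cases discussed in the text. */
void model_state_match_self_check(void)
{
	assert(model_state_match(TASK_UNINTERRUPTIBLE, WAKE_UP_PROCESS_MASK));
	assert(!model_state_match(TASK_UNINTERRUPTIBLE, FATAL_SIGNAL_MASK));
	assert(model_state_match(TASK_KILLABLE, FATAL_SIGNAL_MASK));
}
```

Under these assumptions a plain D-state sleeper matches the wake_up_process mask but not the signal mask, while a TASK_KILLABLE sleeper matches both, which is why only the latter reacts to a fatal signal.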

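An earlier post, "The sched_waking event and an analysis of the try_to_wake_up function", went through try_to_wake_up, the core function of the kernel's wakeup logic, in detail. This post starts from a concrete example and follows how a task's (task_struct) __state changes once wake_up_process has entered try_to_wake_up.

Section 2 presents an example: a task sleeps in the D state via msleep, and another process then tries to wake it. We check whether the wakeup succeeds and how the woken task's state changes.

The program in Section 2 also uses the kernel's built-in data breakpoint facility to see exactly when task_struct's __state is modified.

Section 3 explains the experimental results from Section 2.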

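2. Sleeping in the D state with msleep, then trying to wake the task with wake_up_process

The ko in this section uses a data breakpoint. For more details on using data breakpoints, see the earlier post "Watching whether a given memory location is read or written, and printing the call stack when the condition triggers".

2.1 A ko that provides two ioctls: one puts the calling task to sleep in the D state, the other wakes the D-state task

The source of the ko is as follows: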

#include <linux/module.h>
#include <linux/capability.h>
#include <linux/sched.h>
#include <linux/uaccess.h>
#include <linux/proc_fs.h>
#include <linux/ctype.h>
#include <linux/seq_file.h>
#include <linux/poll.h>
#include <linux/types.h>
#include <linux/ioctl.h>
#include <linux/errno.h>
#include <linux/stddef.h>
#include <linux/lockdep.h>
#include <linux/kthread.h>
#include <linux/delay.h>
#include <linux/wait.h>
#include <linux/init.h>
#include <asm/atomic.h>
#include <trace/events/workqueue.h>
#include <linux/sched/clock.h>
#include <linux/string.h>
#include <linux/mm.h>
#include <linux/interrupt.h>
#include <linux/tracepoint.h>
#include <trace/events/osmonitor.h>
#include <trace/events/sched.h>
#include <trace/events/irq.h>
#include <trace/events/kmem.h>
#include <linux/ptrace.h>
#include <asm/processor.h>
#include <linux/sched/task_stack.h>
#include <linux/nmi.h>
#include <asm/apic.h>
#include <linux/version.h>
#include <linux/sched/mm.h>
#include <asm/irq_regs.h>
#include <linux/kallsyms.h>
#include <linux/kprobes.h>
#include <linux/stop_machine.h>

MODULE_LICENSE("GPL");

MODULE_AUTHOR("zhaoxin");
MODULE_DESCRIPTION("Module for debug D tasks.");
MODULE_VERSION("1.0");

#define TESTDTASK_NODE_TYPEMAGIC 0x73
#define TESTDTASK_IO_BASE 0x10

typedef struct testdtask_ioctl_dstatus_enter {
	int i_bio; // 0 or 1, 1 means io_schedule
} testdtask_ioctl_dstatus_enter;

typedef struct testdtask_ioctl_dstatus_wake {
	int i_pid; // wake up specific pid D task
} testdtask_ioctl_dstatus_wake;

#define TESTDTASK_IOCTL_DSTATUS_ENTER \
	_IOWR(TESTDTASK_NODE_TYPEMAGIC, TESTDTASK_IO_BASE + 1, testdtask_ioctl_dstatus_enter)
#define TESTDTASK_IOCTL_DSTATUS_WAKE \
	_IOWR(TESTDTASK_NODE_TYPEMAGIC, TESTDTASK_IO_BASE + 2, testdtask_ioctl_dstatus_wake)

/* Task currently sleeping inside the ENTER ioctl; NULL when there is none. */
volatile struct task_struct *p_wake = NULL;

#include <linux/err.h>
#include <linux/perf_event.h>
#include <linux/hw_breakpoint.h>

struct perf_event * __percpu *sample_hbp;
u32 *_p;	/* address being watched, read back in the handler */

/* Runs every time the watched 4 bytes are written, on whichever CPU wrote them. */
static void sample_hbp_handler(struct perf_event *bp,
			       struct perf_sample_data *data,
			       struct pt_regs *regs)
{
	printk(KERN_INFO "currcpu[%d]currpid[%d]state value is changed[%d]\n",
	       smp_processor_id(), current->pid, *_p);
	dump_stack();
	printk(KERN_INFO "Dump stack from sample_hbp_handler\n");
}

void register_data_breakpoint(void* p)
{
	struct perf_event_attr attr;

	_p = p;
	hw_breakpoint_init(&attr);
	attr.bp_addr = (unsigned long)(p);
	attr.bp_len = HW_BREAKPOINT_LEN_4;
	attr.bp_type = HW_BREAKPOINT_W;	/* trigger on writes only */
	sample_hbp = register_wide_hw_breakpoint(&attr, sample_hbp_handler, NULL);
	if (IS_ERR((void __force *)sample_hbp)) {
		/* Remember the failure so that unregistering becomes a no-op. */
		printk(KERN_INFO "register_wide_hw_breakpoint failed[%ld]\n",
		       PTR_ERR((void __force *)sample_hbp));
		sample_hbp = NULL;
	}
}

void unregister_data_breakpoint(void)
{
	if (sample_hbp) {
		unregister_wide_hw_breakpoint(sample_hbp);
		sample_hbp = NULL;
	}
}

static long testdtask_proc_ioctl(struct file *i_pfile, u32 i_cmd, long unsigned int i_arg)
{
	switch (i_cmd) {
	case TESTDTASK_IOCTL_DSTATUS_ENTER:
	{
		void __user* parg = (void __user*)i_arg;
		testdtask_ioctl_dstatus_enter enter;

		if (copy_from_user(&enter, parg, sizeof(enter))) {
			printk("copy_from_user failed\n");
			return -EFAULT;
		}
		if (enter.i_bio) {
			current->in_iowait = 1;
		}
		printk("pid[%d] before msleep interruptible\n", current->pid);
		p_wake = current;
		/* Despite the log text, this is msleep(): a D-state sleep. */
		msleep(20000);
		p_wake = NULL;
		printk("pid[%d] after msleep interruptible\n", current->pid);
		return 0;
	}
	case TESTDTASK_IOCTL_DSTATUS_WAKE:
	{
		void __user* parg = (void __user*)i_arg;
		testdtask_ioctl_dstatus_wake wake;
		struct pid* pid_struct;
		struct task_struct* ptask;

		if (copy_from_user(&wake, parg, sizeof(wake))) {
			printk("copy_from_user failed\n");
			return -EFAULT;
		}
		if (p_wake) {
			printk("curr_pid[%d]before p_wake[%d][%s]stat[%d]\n",
			       current->pid, p_wake->pid, p_wake->comm, p_wake->__state);
			/* Watch writes to the sleeper's __state while the wakeup runs. */
			register_data_breakpoint(&p_wake->__state);
			wake_up_process(p_wake);
			printk("curr_pid[%d]after wake p_wake[%d][%s]stat[%d]\n",
			       current->pid, p_wake->pid, p_wake->comm, p_wake->__state);
			/* Keep the breakpoint armed long enough to catch remote writes. */
			msleep(1000);
			unregister_data_breakpoint();
		}
		// if (wake.i_pid) {
		//     printk("curr[%s]wake pid[%d]\n", current->comm, wake.i_pid);
		//     pid_struct = find_get_pid(wake.i_pid);
		//     if (pid_struct) {
		//         ptask = get_pid_task(pid_struct, PIDTYPE_PID);
		//         if (ptask) {
		//             wake_up_process(ptask);
		//             put_task_struct(ptask);
		//         }
		//         else {
		//             printk("No exist task of i_pid[%d]!\n", wake.i_pid);
		//             put_pid(pid_struct);
		//             return 0;
		//         }
		//         put_pid(pid_struct);
		//     }
		//     else {
		//         printk("No exist task of i_pid[%d]!\n", wake.i_pid);
		//         return 0;
		//     }
		// }
		return 0;
	}
	default:
		return -EINVAL;
	}
	return 0;
}

static int testdtask_proc_open(struct inode *i_pinode, struct file *i_pfile)
{
	return 0;
}

static int testdtask_proc_release(struct inode *i_inode, struct file *i_file)
{
	return 0;
}

typedef struct testdtask_env {
	struct proc_dir_entry* testdtask;
} testdtask_env;

static testdtask_env _env;

static const struct proc_ops testdtask_proc_ops = {
	.proc_read = NULL,
	.proc_write = NULL,
	.proc_open = testdtask_proc_open,
	.proc_release = testdtask_proc_release,
	.proc_ioctl = testdtask_proc_ioctl,
};

#define PROC_TESTDTASK_NAME "testdtask"

static int __init testdtask_init(void)
{
	_env.testdtask = proc_create(PROC_TESTDTASK_NAME, 0666, NULL, &testdtask_proc_ops);
	//msleep(10000);
	return 0;
}

static void __exit testdtask_exit(void)
{
	remove_proc_entry(PROC_TESTDTASK_NAME, NULL);
}

module_init(testdtask_init);
module_exit(testdtask_exit);
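The ko and the user-space programs must agree on the two ioctl command numbers, so it can help to see what _IOWR actually packs into them. The sketch below rebuilds the ENTER command in user space and splits it back into its fields with the _IOC_* decoder macros from <linux/ioctl.h>. The ioctl_fields struct and decode_ioctl are hypothetical helpers introduced only for this illustration.

```c
#include <assert.h>
#include <linux/ioctl.h>   /* _IOWR, _IOC and the _IOC_* decoder macros */

#define TESTDTASK_NODE_TYPEMAGIC 0x73
#define TESTDTASK_IO_BASE 0x10

typedef struct testdtask_ioctl_dstatus_enter {
	int i_bio;
} testdtask_ioctl_dstatus_enter;

#define TESTDTASK_IOCTL_DSTATUS_ENTER \
	_IOWR(TESTDTASK_NODE_TYPEMAGIC, TESTDTASK_IO_BASE + 1, testdtask_ioctl_dstatus_enter)

/* Hypothetical helper type: the four fields packed into a command number. */
struct ioctl_fields {
	unsigned int dir;   /* _IOC_READ and/or _IOC_WRITE */
	unsigned int type;  /* the "magic" byte */
	unsigned int nr;    /* command index within that magic */
	unsigned int size;  /* sizeof the argument struct */
};

/* Split a command number into its fields and verify the round trip. */
struct ioctl_fields decode_ioctl(unsigned long cmd)
{
	struct ioctl_fields f = {
		.dir  = _IOC_DIR(cmd),
		.type = _IOC_TYPE(cmd),
		.nr   = _IOC_NR(cmd),
		.size = _IOC_SIZE(cmd),
	};

	/* Re-encoding the fields must give back the same number. */
	assert(_IOC(f.dir, f.type, f.nr, f.size) == cmd);
	return f;
}
```

Calling decode_ioctl(TESTDTASK_IOCTL_DSTATUS_ENTER) should report the magic 0x73, the index 0x11, a size equal to sizeof(int), and both direction bits set, since _IOWR marks the argument as copied in both directions.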

2.2 Two user-space programs, testdtask_denter and testdtask_dwake, for entering the D state and for waking

2.2.1 User-space code of testdtask_denter

#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>
#include <fcntl.h>
#include <string.h>
#include <sys/types.h>
#include <sys/stat.h>
#include <sys/ioctl.h>

#define TESTDTASK_NODE_TYPEMAGIC 0x73
#define TESTDTASK_IO_BASE 0x10

typedef struct testdtask_ioctl_dstatus_enter {
	int i_bio; // 0 or 1, 1 means io_schedule
} testdtask_ioctl_dstatus_enter;

typedef struct testdtask_ioctl_dstatus_wake {
	int i_pid; // wake up specific pid D task
} testdtask_ioctl_dstatus_wake;

#define TESTDTASK_IOCTL_DSTATUS_ENTER \
	_IOWR(TESTDTASK_NODE_TYPEMAGIC, TESTDTASK_IO_BASE + 1, testdtask_ioctl_dstatus_enter)
#define TESTDTASK_IOCTL_DSTATUS_WAKE \
	_IOWR(TESTDTASK_NODE_TYPEMAGIC, TESTDTASK_IO_BASE + 2, testdtask_ioctl_dstatus_wake)

int main(int i_argc, char* i_argv[]) {
	testdtask_ioctl_dstatus_enter enter;
	int convertfd;

	if (i_argc < 2 || (*(i_argv[1]) != '0' && *(i_argv[1]) != '1')) {
		printf("invalid input, parameter 1 should be 0 or 1!\n");
		return 1;
	}
	convertfd = open("/proc/testdtask", O_RDWR);
	if (convertfd < 0) {
		perror("open");
		return 1;
	}
	// Compare against the character '1', not the integer 1.
	if (*i_argv[1] == '1') {
		enter.i_bio = 1;
		printf("bio=1\n");
	} else {
		enter.i_bio = 0;
		printf("bio=0\n");
	}
	// Blocks in the kernel (D state) until msleep in the ko returns.
	if (ioctl(convertfd, TESTDTASK_IOCTL_DSTATUS_ENTER, &enter) < 0) {
		perror("ioctl");
		return 1;
	}
	return 0;
}

2.2.2 User-space code of testdtask_dwake

#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>
#include <fcntl.h>
#include <string.h>
#include <sys/types.h>
#include <sys/stat.h>
#include <sys/ioctl.h>

#define TESTDTASK_NODE_TYPEMAGIC 0x73
#define TESTDTASK_IO_BASE 0x10

typedef struct testdtask_ioctl_dstatus_enter {
	int i_bio; // 0 or 1, 1 means io_schedule
} testdtask_ioctl_dstatus_enter;

typedef struct testdtask_ioctl_dstatus_wake {
	int i_pid; // wake up specific pid D task
} testdtask_ioctl_dstatus_wake;

#define TESTDTASK_IOCTL_DSTATUS_ENTER \
	_IOWR(TESTDTASK_NODE_TYPEMAGIC, TESTDTASK_IO_BASE + 1, testdtask_ioctl_dstatus_enter)
#define TESTDTASK_IOCTL_DSTATUS_WAKE \
	_IOWR(TESTDTASK_NODE_TYPEMAGIC, TESTDTASK_IO_BASE + 2, testdtask_ioctl_dstatus_wake)

int main(int i_argc, char* i_argv[]) {
	testdtask_ioctl_dstatus_wake wake;
	int convertfd;

	if (i_argc < 2) {
		printf("usage: %s <pid>\n", i_argv[0]);
		return 1;
	}
	convertfd = open("/proc/testdtask", O_RDWR);
	if (convertfd < 0) {
		perror("open");
		return 1;
	}
	// The ko currently ignores i_pid and wakes the task recorded by the ENTER ioctl.
	wake.i_pid = atoi(i_argv[1]);
	if (ioctl(convertfd, TESTDTASK_IOCTL_DSTATUS_WAKE, &wake) < 0) {
		perror("ioctl");
		return 1;
	}
	return 0;
}

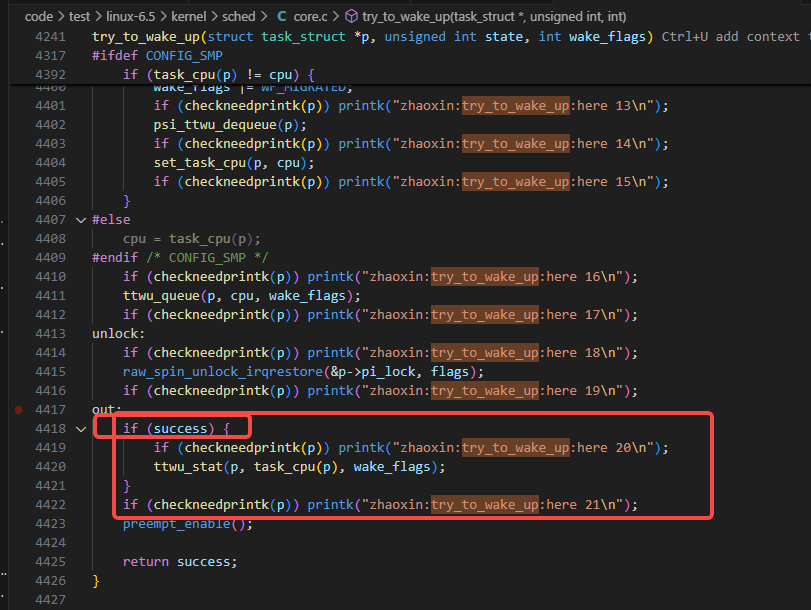

2.3 To debug what happens inside try_to_wake_up, printks were added to try_to_wake_up

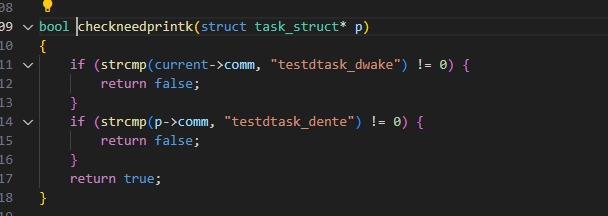

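The added printks only fire when a task with one specific comm wakes a task with another specific comm:

In the figure below, testdtask_dente and testdtask_dwake are the task_struct->comm of the task sleeping in the D state and of the task waking it, respectively (comm holds at most 15 characters, so testdtask_denter is truncated to testdtask_dente).

The modified try_to_wake_up is as follows: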

static int
try_to_wake_up(struct task_struct *p, unsigned int state, int wake_flags)
{
	unsigned long flags;
	int cpu, success = 0;

	if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:enter\n");
	preempt_disable();
	if (p == current) {
		/*
		 * We're waking current, this means 'p->on_rq' and 'task_cpu(p)
		 * == smp_processor_id()'. Together this means we can special
		 * case the whole 'p->on_rq && ttwu_runnable()' case below
		 * without taking any locks.
		 *
		 * In particular:
		 *  - we rely on Program-Order guarantees for all the ordering,
		 *  - we're serialized against set_special_state() by virtue of
		 *    it disabling IRQs (this allows not taking ->pi_lock).
		 */
		if (!ttwu_state_match(p, state, &success))
			goto out;

		trace_sched_waking(p);
		ttwu_do_wakeup(p);
		goto out;
	}
	if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 0\n");

	/*
	 * If we are going to wake up a thread waiting for CONDITION we
	 * need to ensure that CONDITION=1 done by the caller can not be
	 * reordered with p->state check below. This pairs with smp_store_mb()
	 * in set_current_state() that the waiting thread does.
	 */
	raw_spin_lock_irqsave(&p->pi_lock, flags);
	if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 1\n");
	smp_mb__after_spinlock();
	if (!ttwu_state_match(p, state, &success)) {
		if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 2\n");
		goto unlock;
	}
	if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 3\n");

	trace_sched_waking(p);

	/*
	 * Ensure we load p->on_rq _after_ p->state, otherwise it would
	 * be possible to, falsely, observe p->on_rq == 0 and get stuck
	 * in smp_cond_load_acquire() below.
	 *
	 * sched_ttwu_pending()                 try_to_wake_up()
	 *   STORE p->on_rq = 1                   LOAD p->state
	 *   UNLOCK rq->lock
	 *
	 * __schedule() (switch to task 'p')
	 *   LOCK rq->lock                        smp_rmb();
	 *   smp_mb__after_spinlock();
	 *   UNLOCK rq->lock
	 *
	 * [task p]
	 *   STORE p->state = UNINTERRUPTIBLE     LOAD p->on_rq
	 *
	 * Pairs with the LOCK+smp_mb__after_spinlock() on rq->lock in
	 * __schedule(). See the comment for smp_mb__after_spinlock().
	 *
	 * A similar smb_rmb() lives in try_invoke_on_locked_down_task().
	 */
	smp_rmb();
	if (READ_ONCE(p->on_rq) && ttwu_runnable(p, wake_flags)) {
		if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 4\n");
		goto unlock;
	}
	if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 5\n");

#ifdef CONFIG_SMP
	/*
	 * Ensure we load p->on_cpu _after_ p->on_rq, otherwise it would be
	 * possible to, falsely, observe p->on_cpu == 0.
	 *
	 * One must be running (->on_cpu == 1) in order to remove oneself
	 * from the runqueue.
	 *
	 * __schedule() (switch to task 'p')    try_to_wake_up()
	 *   STORE p->on_cpu = 1                  LOAD p->on_rq
	 *   UNLOCK rq->lock
	 *
	 * __schedule() (put 'p' to sleep)
	 *   LOCK rq->lock                        smp_rmb();
	 *   smp_mb__after_spinlock();
	 *   STORE p->on_rq = 0                   LOAD p->on_cpu
	 *
	 * Pairs with the LOCK+smp_mb__after_spinlock() on rq->lock in
	 * __schedule(). See the comment for smp_mb__after_spinlock().
	 *
	 * Form a control-dep-acquire with p->on_rq == 0 above, to ensure
	 * schedule()'s deactivate_task() has 'happened' and p will no longer
	 * care about it's own p->state. See the comment in __schedule().
	 */
	smp_acquire__after_ctrl_dep();

	/*
	 * We're doing the wakeup (@success == 1), they did a dequeue (p->on_rq
	 * == 0), which means we need to do an enqueue, change p->state to
	 * TASK_WAKING such that we can unlock p->pi_lock before doing the
	 * enqueue, such as ttwu_queue_wakelist().
	 */
	WRITE_ONCE(p->__state, TASK_WAKING);
	if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 6\n");

	/*
	 * If the owning (remote) CPU is still in the middle of schedule() with
	 * this task as prev, considering queueing p on the remote CPUs wake_list
	 * which potentially sends an IPI instead of spinning on p->on_cpu to
	 * let the waker make forward progress. This is safe because IRQs are
	 * disabled and the IPI will deliver after on_cpu is cleared.
	 *
	 * Ensure we load task_cpu(p) after p->on_cpu:
	 *
	 * set_task_cpu(p, cpu);
	 *   STORE p->cpu = @cpu
	 * __schedule() (switch to task 'p')
	 *   LOCK rq->lock
	 *   smp_mb__after_spin_lock()          smp_cond_load_acquire(&p->on_cpu)
	 *   STORE p->on_cpu = 1                LOAD p->cpu
	 *
	 * to ensure we observe the correct CPU on which the task is currently
	 * scheduling.
	 */
	if (smp_load_acquire(&p->on_cpu) &&
	    ttwu_queue_wakelist(p, task_cpu(p), wake_flags)) {
		if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 7\n");
		goto unlock;
	}
	if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 8\n");

	/*
	 * If the owning (remote) CPU is still in the middle of schedule() with
	 * this task as prev, wait until it's done referencing the task.
	 *
	 * Pairs with the smp_store_release() in finish_task().
	 *
	 * This ensures that tasks getting woken will be fully ordered against
	 * their previous state and preserve Program Order.
	 */
	smp_cond_load_acquire(&p->on_cpu, !VAL);
	if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 9\n");

	cpu = select_task_rq(p, p->wake_cpu, wake_flags | WF_TTWU);
	if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 10\n");
	if (task_cpu(p) != cpu) {
		if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 11\n");
		if (p->in_iowait) {
			if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 12\n");
			delayacct_blkio_end(p);
			atomic_dec(&task_rq(p)->nr_iowait);
		}

		wake_flags |= WF_MIGRATED;
		if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 13\n");
		psi_ttwu_dequeue(p);
		if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 14\n");
		set_task_cpu(p, cpu);
		if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 15\n");
	}
#else
	cpu = task_cpu(p);
#endif /* CONFIG_SMP */

	if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 16\n");
	ttwu_queue(p, cpu, wake_flags);
	if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 17\n");
unlock:
	if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 18\n");
	raw_spin_unlock_irqrestore(&p->pi_lock, flags);
	if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 19\n");
out:
	if (success) {
		if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 20\n");
		ttwu_stat(p, task_cpu(p), wake_flags);
	}
	if (checkneedprintk(p)) printk("zhaoxin:try_to_wake_up:here 21\n");
	preempt_enable();

	return success;

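}

2.4 How to run and results

2.4.1 How to run

First insmod the ko:

insmod testdtask.ko

Then run the demo program that enters the D state:

./testdtask_denter 0

The argument 0 in the command above can be ignored for now. It was added while writing the program to debug details of the iowait metric. For iowait, and for the current->in_iowait field in the ko that this 0/1 argument maps to, see the earlier post "The CPU iowait metric explained, with examples".

Then run the demo program that wakes the D-state task:

./testdtask_dwake 0

The argument 0 in this command can also be ignored for now. It was originally meant to carry a pid, which the ko would use to look up the task_struct and then wake it. The current code does not use that lookup path; instead it records the task_struct pointer directly when testdtask_denter issues its ioctl. For how to get a given task's task_struct and its cmdline inside the kernel, see section 2.1.2 of the earlier post "Getting the cmdline of the current process and its parent in a kernel module: methods and caveats, covering parent/child process management and a first introduction to RCU".

Before triggering the wakeup, you can clear dmesg first so that the kernel log is easier to read:

dmesg -c;clear;dmesg -w

2.4.2 Results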

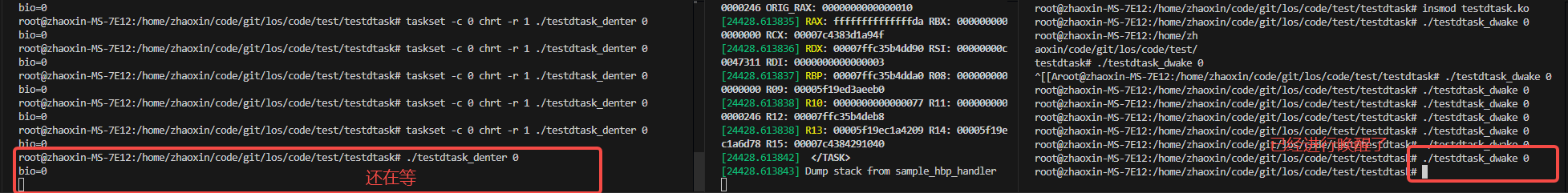

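After running this, you can see that testdtask_dwake did not make testdtask_denter leave msleep right away. testdtask_denter only leaves msleep once the timeout passed to msleep has expired.

The kernel log in the figure below shows the same thing (msleep returned only after the full 20000 ms currently configured, i.e. 20 seconds):

3. Explaining the results

3.1 wake_up_process really can wake a D-state task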

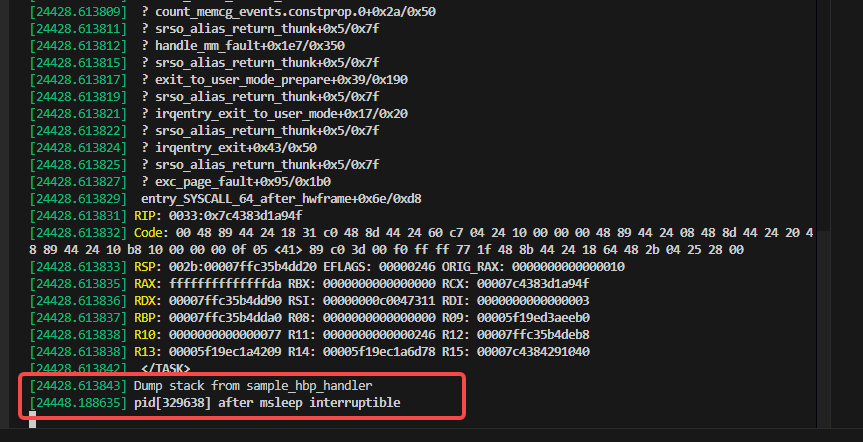

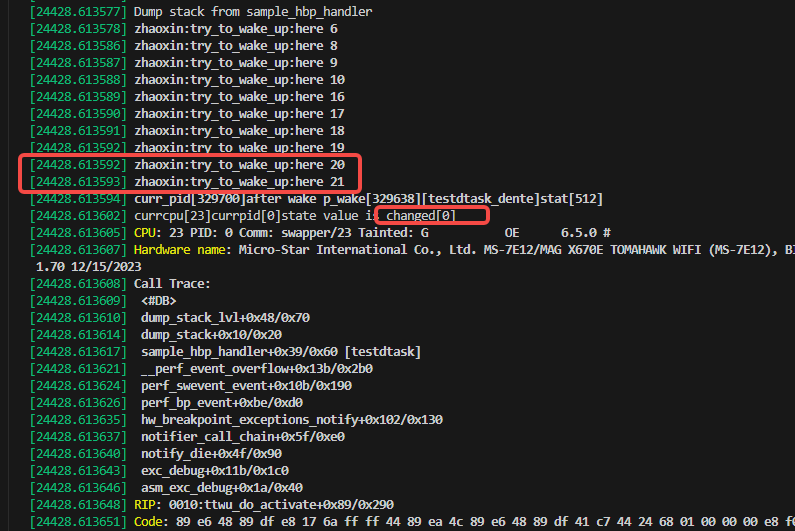

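The kernel log in the figure below shows that try_to_wake_up reached the "here 20" and "here 21" printks:

"here 20" and "here 21" are the following:

The "here 20" printk sits inside the if (success) branch, so it is only reached when the task was woken successfully ("here 21" is printed unconditionally).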

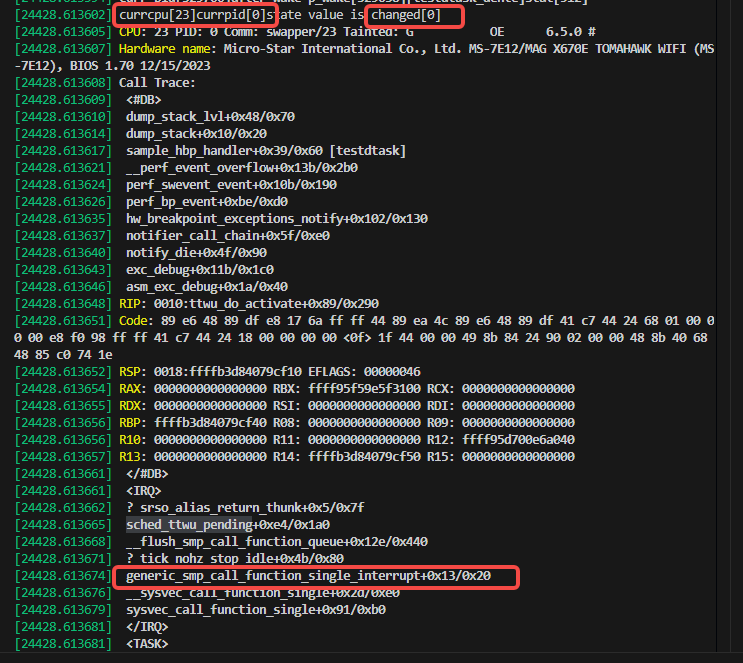

In addition, the kernel log contains the output shown below: the data breakpoint detected that the woken task's __state changed to 0 (TASK_RUNNING):

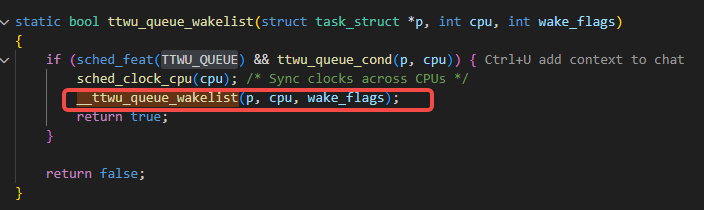

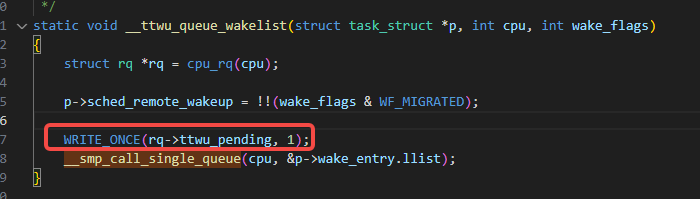

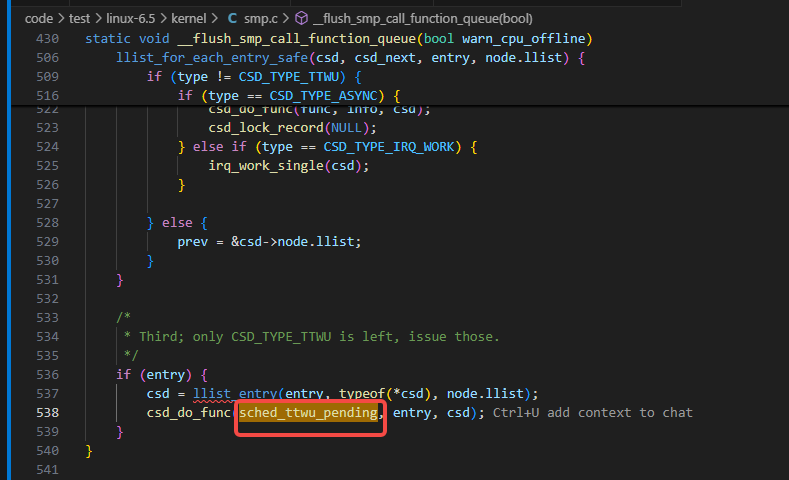

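The sched_ttwu_pending in the figure above comes from the TTWU_QUEUE feature, which the kernel enables by default, and goes through the logic in the figure below:

It is triggered by __ttwu_queue_wakelist calling __smp_call_single_queue:

as in the call shown in the figure below:

The TTWU_QUEUE feature is described in section 2.2.8 of the earlier post "The sched_waking event and an analysis of the try_to_wake_up function".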
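The __state writes discussed in this section can be replayed in a small user-space model. This is only a sketch: model_task, model_write_state and model_remote_wakeup are hypothetical names, the constant values are assumed from recent include/linux/sched.h, and the model collapses the waker CPU and the target CPU into one function. What it keeps is the order of the two stores: TASK_WAKING written by try_to_wake_up, then TASK_RUNNING written on the enqueue side.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed values, taken from recent include/linux/sched.h. */
#define TASK_RUNNING          0x0000u
#define TASK_UNINTERRUPTIBLE  0x0002u
#define TASK_NORMAL           0x0003u
#define TASK_WAKING           0x0200u

#define MAX_WRITES 8

/* Hypothetical stand-in for the watched task: the state word plus a log
 * that plays the role of the data breakpoint handler. */
struct model_task {
	unsigned int state;
	unsigned int writes[MAX_WRITES];
	size_t nr_writes;
};

/* Every store to the state goes through here, like a write watchpoint. */
void model_write_state(struct model_task *t, unsigned int new_state)
{
	assert(t->nr_writes < MAX_WRITES);
	t->state = new_state;
	t->writes[t->nr_writes++] = new_state;
}

/* Wakeup of a sleeper that is already off the runqueue. Returns 1 on success. */
int model_remote_wakeup(struct model_task *t, unsigned int wake_mask)
{
	if (!(t->state & wake_mask))
		return 0;                        /* state mismatch: nothing is written */
	model_write_state(t, TASK_WAKING);   /* store done by the waker */
	model_write_state(t, TASK_RUNNING);  /* store done when the task is enqueued */
	return 1;
}

/* Wake a fresh D-state task once and report the n-th logged write. */
unsigned int model_nth_write_after_wakeup(size_t n)
{
	struct model_task t = { .state = TASK_UNINTERRUPTIBLE };

	model_remote_wakeup(&t, TASK_NORMAL);
	assert(n < t.nr_writes);
	return t.writes[n];
}
```

In this model the last logged write is 0, which lines up with the value the data breakpoint handler printed for the woken task.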

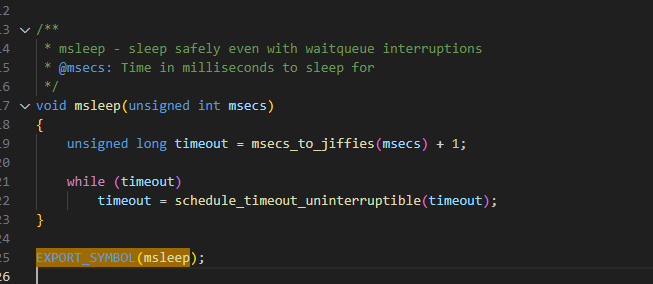

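3.2 msleep and schedule_timeout_uninterruptible

Although 3.1 shows that the D-state task really was woken, testdtask_denter sleeps in the D state through msleep, and msleep is implemented as a loop that keeps the task in the D state until the timeout has fully elapsed. The task may be woken in the meantime, but if the timeout has not expired yet it simply goes back to sleep inside the loop, as the msleep implementation in the figure below shows:

However, if the msleep in the code path that testdtask_denter ends up calling is replaced with schedule_timeout_uninterruptible (which takes its timeout in jiffies rather than milliseconds), testdtask_denter returns shortly after the wakeup.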

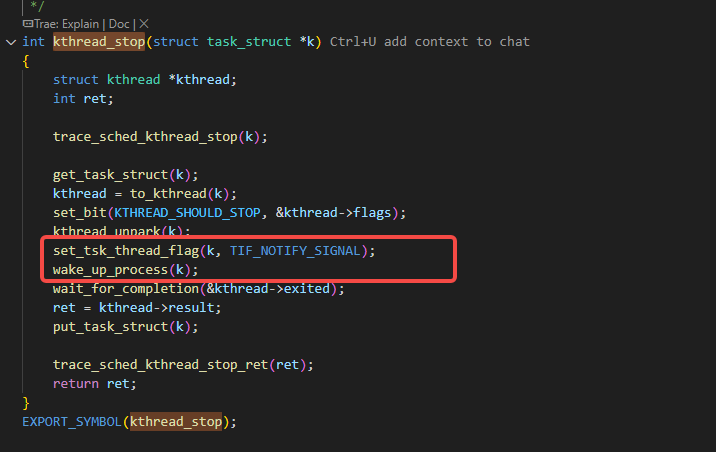
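The difference between the two sleep primitives in 3.2 can be reproduced with a small user-space model. It is a sketch, not kernel code: time is counted in abstract ticks, wake events arrive at fixed ticks, and every name here (wake_events, model_schedule_timeout, model_msleep and the two helpers) is hypothetical. The model assumes msleep is a loop of the form "while (timeout) timeout = schedule_timeout_uninterruptible(timeout);".

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model: one wake event arrives at each listed tick, measured
 * from the start of the sleep. Ticks must be in ascending order. */
struct wake_events {
	const unsigned long *ticks;
	size_t count;
	size_t next;        /* index of the first event not yet consumed */
	unsigned long now;  /* ticks elapsed so far */
};

/* Model of schedule_timeout_uninterruptible(): sleep until the timeout
 * expires or the next wake event arrives, and return the ticks left. */
unsigned long model_schedule_timeout(struct wake_events *ev, unsigned long timeout)
{
	unsigned long deadline;

	assert(ev && (ev->count == 0 || ev->ticks));
	deadline = ev->now + timeout;
	if (ev->next < ev->count && ev->ticks[ev->next] < deadline) {
		ev->now = ev->ticks[ev->next++];  /* woken early */
		return deadline - ev->now;
	}
	ev->now = deadline;                   /* timer expired */
	return 0;
}

/* Model of msleep(): go back to sleep until no time is left. */
unsigned long model_msleep(struct wake_events *ev, unsigned long timeout)
{
	while (timeout)
		timeout = model_schedule_timeout(ev, timeout);
	return ev->now;  /* total ticks spent inside the call */
}

/* Ticks spent in an msleep-style sleep that receives one wakeup. */
unsigned long ticks_in_msleep(unsigned long timeout, unsigned long wake_tick)
{
	struct wake_events ev = { .ticks = &wake_tick, .count = 1 };

	return model_msleep(&ev, timeout);
}

/* Ticks spent in a single schedule_timeout-style sleep with one wakeup. */
unsigned long ticks_in_single_sleep(unsigned long timeout, unsigned long wake_tick)
{
	struct wake_events ev = { .ticks = &wake_tick, .count = 1 };

	model_schedule_timeout(&ev, timeout);
	return ev.now;
}
```

With a 20000-tick timeout and one wakeup at tick 100, ticks_in_msleep stays asleep for the whole 20000 ticks while ticks_in_single_sleep returns at tick 100, which is the behaviour seen with testdtask_denter.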

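3.3 kthread_stop, commonly used in the kernel, and msleep_interruptible

Kernel code makes heavy use of kernel threads to do work and uses kthread_stop to stop them. The figure below shows the implementation of kthread_stop; it also relies on wake_up_process:

Following the analysis in 3.1 and 3.2, if a kernel thread sleeps in the D state with msleep, then even kthread_stop, which goes through wake_up_process, cannot make the thread leave msleep before the timeout expires. Switching to schedule_timeout_uninterruptible solves this. Depending on the situation, msleep_interruptible can also be used in place of msleep, but that raises the chance of the task being killed by a user's mistaken operation, and a signal sent to the task by mistake makes msleep_interruptible return before the expected expiry time.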
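The early return of msleep_interruptible mentioned above can be sketched the same way. The model below assumes that msleep_interruptible loops while time is left and no signal is pending, and that it returns the time still remaining. It handles a single wake event that may or may not leave a signal pending. model_msleep_interruptible and its parameters are hypothetical, and time is again counted in abstract ticks.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical single-event model of msleep_interruptible(): one wake event
 * arrives at wake_tick and optionally leaves a signal pending. Returns the
 * ticks still left when the call returns (0 means it slept the full time). */
unsigned long model_msleep_interruptible(unsigned long timeout,
					 unsigned long wake_tick,
					 bool wake_is_signal)
{
	bool signal_pending = false;
	bool event_used = false;
	unsigned long now = 0;

	assert(timeout > 0);
	while (timeout && !signal_pending) {
		unsigned long deadline = now + timeout;

		if (!event_used && wake_tick < deadline) {
			now = wake_tick;              /* woken before the timer fired */
			event_used = true;
			signal_pending = wake_is_signal;
			timeout = deadline - now;
		} else {
			now = deadline;               /* timer fired */
			timeout = 0;
		}
	}
	return timeout;
}
```

In this model a plain wakeup at tick 100 still sleeps the full 20000 ticks, while a signal at tick 100 makes the call return with 19900 ticks left, which is the early return the paragraph above warns about.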