Deploying a YOLOv5 Object Detection Model with TensorRT 8 (Part 1)

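Based on experience from real projects, this article reorganizes the file structure of my original code so that the new deployment code is easier to embed in different projects and is also more robust. Because different projects use different versions of the deployment framework, this article uses the TensorRT 8 API to deploy the YOLOv5 object detection model. With the original model's input and output sizes unchanged, tests on videos recorded with a mobile phone reach an average of 170 fps (without multithreading).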
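Keeping the model's input size fixed means every video frame has to be scaled into the network input before inference, and the detected boxes have to be mapped back afterwards. TrtModel.hpp declares `float i2d[6]` and `d2i[6]` for this purpose. The following is a minimal sketch of how such a letterbox affine pair can be computed, assuming a uniform scale with the padding split evenly on both sides; the function names `computeLetterboxAffine` and `mapToImage` are hypothetical and not taken from the original code.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper: fill i2d (original image -> network input) and its
// inverse d2i, both stored row-major as 2x3 affine matrices [a b c; d e f].
// Assumes a uniform scale with the padding split evenly on both sides.
void computeLetterboxAffine(int srcW, int srcH, int dstW, int dstH,
                            float i2d[6], float d2i[6])
{
    // Largest uniform scale at which the whole frame still fits the input.
    const float scale = std::min(static_cast<float>(dstW) / srcW,
                                 static_cast<float>(dstH) / srcH);
    i2d[0] = scale; i2d[1] = 0.f;   i2d[2] = (dstW - scale * srcW) * 0.5f;
    i2d[3] = 0.f;   i2d[4] = scale; i2d[5] = (dstH - scale * srcH) * 0.5f;

    // Invert: the 2x2 linear part is inverted directly, and the
    // translation becomes -(A^-1 * t).
    const float det = i2d[0] * i2d[4] - i2d[1] * i2d[3];
    assert(det != 0.f);
    const float a =  i2d[4] / det, b = -i2d[1] / det;
    const float d = -i2d[3] / det, e =  i2d[0] / det;
    d2i[0] = a; d2i[1] = b; d2i[2] = -(a * i2d[2] + b * i2d[5]);
    d2i[3] = d; d2i[4] = e; d2i[5] = -(d * i2d[2] + e * i2d[5]);
}

// Hypothetical helper: map a point from network-input coordinates back to
// the original frame, e.g. a box corner predicted by the model.
void mapToImage(const float d2i[6], float x, float y, float& ox, float& oy)
{
    ox = d2i[0] * x + d2i[1] * y + d2i[2];
    oy = d2i[3] * x + d2i[4] * y + d2i[5];
}
```

For a 1920×1080 frame and a 640×640 input, the scale is 1/3 and the resized frame is padded by 140 rows above and below; mapping the input centre (320, 320) through `d2i` gives back the frame centre (960, 540). Wrapped in a 2×3 `cv::Mat`, a matrix like `i2d` is the form `cv::warpAffine` accepts, which is one common way to perform this preprocessing.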

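To make it easy to add attention modules or other improvements to the original YOLOv5 source code without touching the deployment code, the whole workflow follows the path "train the model → .pt model → ONNX model → TensorRT inference engine": the model is trained first and then exported to ONNX. This article starts from the ONNX model, converts it into an engine, and then runs inference with that engine.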
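Building an engine from ONNX is slow, so TrtModel.hpp declares both `genEngine()` and `load_engine_file()`, which suggests a build-once, load-afterwards pattern. The sketch below shows only the file-handling side of that idea in standard C++17, as an assumption about how such caching could work; the names `enginePathFor`, `loadEngineFile` and `getEngineBlob` are hypothetical, and the real TensorRT build step is replaced by a caller-supplied function rather than the builder API.

```cpp
#include <cassert>
#include <filesystem>
#include <fstream>
#include <iterator>
#include <string>
#include <vector>

// Hypothetical helper: derive the engine path from the ONNX path,
// e.g. "yolov5s.onnx" -> "yolov5s.engine".
std::string enginePathFor(const std::string& onnxPath)
{
    std::filesystem::path p(onnxPath);
    p.replace_extension(".engine");
    return p.string();
}

// Read a serialized engine into memory; returns an empty vector on failure.
std::vector<unsigned char> loadEngineFile(const std::string& enginePath)
{
    std::ifstream file(enginePath, std::ios::binary);
    if (!file) return {};
    return std::vector<unsigned char>(std::istreambuf_iterator<char>(file),
                                      std::istreambuf_iterator<char>());
}

// Build-once logic: run the (slow) ONNX -> engine build only when no cached
// engine file exists. `build` stands in for the real TensorRT build step and
// must write the serialized engine to `enginePath`, returning true on success.
template <typename BuildFn>
std::vector<unsigned char> getEngineBlob(const std::string& onnxPath, BuildFn build)
{
    const std::string enginePath = enginePathFor(onnxPath);
    if (!std::filesystem::exists(enginePath)) {
        if (!build(onnxPath, enginePath)) return {};
    }
    return loadEngineFile(enginePath);
}
```

In TensorRT 8 the returned bytes are what `IRuntime::deserializeCudaEngine(data, size)` takes. Because a serialized engine is specific to the GPU model and TensorRT version that built it, a cached `.engine` file should be regenerated when either changes.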

Without further ado, here is the code. The deployment code is split into three files: utils.hpp, TrtModel.hpp and TrtModel.cpp.

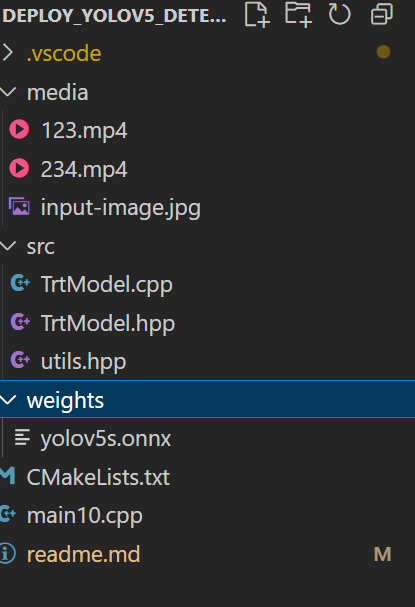

我的项目文件结构如下图:

The utils.hpp file is as follows:

```cpp
#ifndef UTILS_HPP
#define UTILS_HPP

#include <opencv2/opencv.hpp>
#include <cuda_runtime.h>
#include <cassert>
#include <iostream>
#include <memory>

#ifndef CUDA_CHECK
// Check the return code of a CUDA runtime call; print the error and assert on failure.
#define CUDA_CHECK(call)                                                    \
    do {                                                                    \
        cudaError_t err__ = (call);                                         \
        if (err__ != cudaSuccess) {                                         \
            std::cerr << "CUDA error [" << static_cast<int>(err__) << "] "  \
                      << cudaGetErrorString(err__) << " at " << __FILE__    \
                      << ":" << __LINE__ << std::endl;                      \
            assert(false);                                                  \
        }                                                                   \
    } while (0)
#endif

// Manage TensorRT/NV objects with shared_ptr: the deleter calls p->destroy().
// (destroy() is deprecated since TensorRT 8.0; `delete p` is the recommended replacement.)
template<typename T>
inline std::shared_ptr<T> make_nvshared(T* ptr)
{
    return std::shared_ptr<T>(ptr, [](T* p){ if (p) p->destroy(); });
}

/*-------------------------- YOLOV5_DETECT --------------------------*/
struct detectRes {
    int label { -1 };
    float confidence { 0.f };
    cv::Rect box {};
    cv::Scalar box_color {};
};
/*-------------------------- END YOLOV5_DETECT ----------------------*/

#endif // UTILS_HPP
```

The TrtModel.hpp file is as follows:

    #ifndef TRTMODEL_HPP
    #define TRTMODEL_HPP

    #include <NvInfer.h>
    #include <NvOnnxParser.h>
    #include "logger.h"
    #include "common.h"
    #include <fstream>
    #include <iostream>
    #include <vector>
    #include <string>
    #include <random>
    #include <cuda_runtime_api.h>
    #include <unordered_map>
    #include "utils.hpp"

    class TrtModel
    {
    public:
        TrtModel(std::string onnxfilepath, bool fp16);
        ~TrtModel();                                     /* destructor */
        std::vector<detectRes> detect_postprocess(cv::Mat& frame);
        void det_drawResult(cv::Mat& image, const std::vector<detectRes>& outputs);

    private:
        bool genEngine();                                /* convert the ONNX model into an engine */
        std::vector<unsigned char> load_engine_file();   /* load the serialized engine */
        bool Runtime();                                  /* create the runtime and execution context from the engine */
        bool trtIOMemory();
        void preprocess(cv::Mat srcimg);                 /* image preprocessing */

        std::shared_ptr<nvinfer1::IRuntime> m_runtime {nullptr};           /* inference runtime */
        std::shared_ptr<nvinfer1::ICudaEngine> m_engine {nullptr};         /* deserialized engine */
        std::shared_ptr<nvinfer1::IExecutionContext> m_context {nullptr};  /* execution context */
        cudaStream_t m_stream {nullptr};                                   /* CUDA stream */

        // Binding names/indices (TRT 8.5+ recommends using I/O tensor names)
        std::string m_inputName, m_outputName;
        int m_inputIndex {-1}, m_outputIndex {-1};

        float* m_input_device_memory {nullptr};
        float* m_input_host_memory {nullptr};
        float* m_detect_bindings[2] {nullptr, nullptr};
        float* m_detect_host_memory {nullptr};
        float* m_detect_device_memory {nullptr};

        nvinfer1::Dims m_inputDims{};    /* input tensor dimensions */
        nvinfer1::Dims m_detectDims{};
        std::string m_enginePath {};     /* path of the generated engine */
        std::string onnx_file_path {};   /* path of the input ONNX model */
        bool FP16 {};                    /* whether to build the engine with FP16 (half) precision */
        int m_inputSize {};              /* size of the preprocessed input image data */
        int m_imgArea {};                /* per-channel offset used when writing the preprocessed image through a pointer */
        int m_detectSize {};
        int kInputH {};                  /* network input height, preferably a multiple of 32 */
        int kInputW {};                  /* network input width, preferably a multiple of 32 */
        float i2d[6] {}, d2i[6] {};
        float kNmsThresh = 0.2f;         /* NMS threshold used in post-processing */
        float kConfThresh = 0.5f;        /* confidence threshold for detections */
        const std::vector<std::string> CLASS_NAMES = {  /* target classes to detect */
            "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat",
            "traffic light", "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat",
            "dog", "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe", "backpack",
            "umbrella", "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball",
            "kite", "baseball bat", "baseball glove", "skateboard", "surfboard", "tennis racket",
            "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",
            "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair",
            "couch", "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse",
            "remote", "keyboard", "cell phone", "microwave", "oven", "toaster", "sink", "refrigerator",
            "book", "clock", "vase", "sc