K8s Quick Start: The Service Chapter

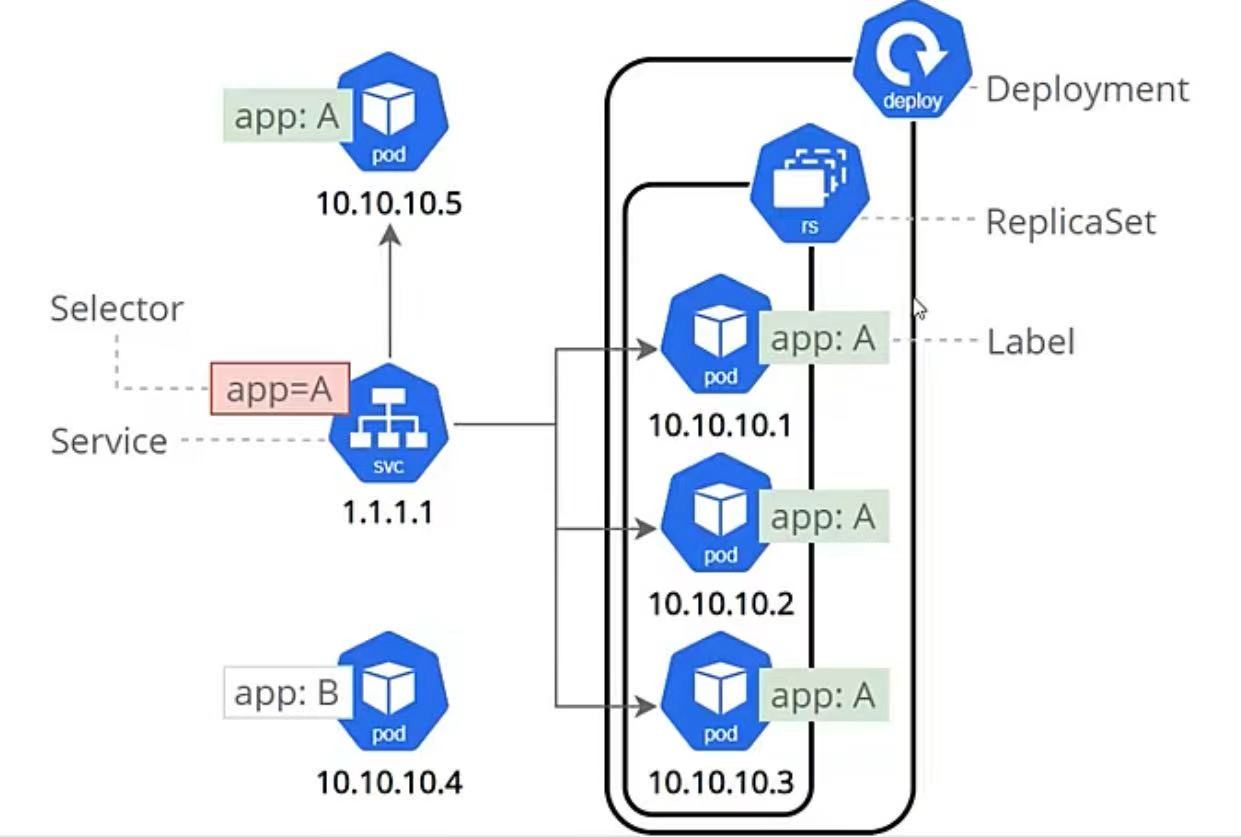

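A Service is the interface that a group of Pods providing the same service exposes to its clients.

With a Service, applications get service discovery and load balancing.

By default a Service only provides layer-4 load balancing and has no layer-7 features. (Layer 7 is handled by Ingress.)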

Cluster IP


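For Services we usually use kubectl expose followed by a controller. kubectl create also works, but it offers fewer options. Here we first set up a Deployment as the controller for testing the Services below.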

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginxtest
  name: nginxtest
  namespace: dev
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginxtest
  template:
    metadata:
      labels:
        app: nginxtest
    spec:
      containers:
      - image: nginx:1.17.1
        name: nginx
        command:
        - /bin/bash
        - -c
        args:
        - |
          echo "test from $(hostname -I)" > /usr/share/nginx/html/index.html
          exec nginx -g "daemon off;"

ClusterIP

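ClusterIP is the default Service type. It is used for communication inside the cluster: it gives a group of Pods a stable IP and provides in-cluster service discovery and load balancing.

For example, create a controller or a Pod: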

[root@k8sMaster ~]# kubectl create deployment nginxtest --image=nginx:1.17.1 --replicas=1 -n dev

deployment.apps/nginxtest created

Then expose this controller through a ClusterIP Service:

[root@k8sMaster ~]# kubectl expose -n dev deployment.apps/nginxtest --name=svc-nginx --type=ClusterIP --port=80 --target-port=80

When running the commands above you can append --dry-run=client -o yaml to print the result as YAML, which is easier to read and to edit. The YAML for the two objects above is:

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginxtest
  name: nginxtest
  namespace: dev
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginxtest
  template:
    metadata:
      labels:
        app: nginxtest
    spec:
      containers:
      - image: nginx:1.17.1
        name: nginx
        command:
        - /bin/bash
        - -c
        args:
        - |
          echo "test from $(hostname -I)" > /usr/share/nginx/html/index.html
          exec nginx -g "daemon off;"
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginxtest
  name: svc-nginx
  namespace: dev
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginxtest
  type: ClusterIP

From this we can see that a Service uses its selector to choose the Pods it fronts: the labels in selector must match the labels on the Pods. In ports, port is the port the Service exposes and targetPort is the port the Pod exposes:

[root@k8sMaster ~]# kubectl get svc -n dev

NAME        TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
svc-nginx   ClusterIP   10.100.84.97   <none>        80/TCP    0s

You can now curl this CLUSTER-IP directly. To assign a specific IP instead of an automatic one, use this field:

[root@k8sMaster ~]# kubectl explain svc.spec.clusterIP
KIND:       Service
VERSION:    v1

FIELD: clusterIP <string>

# For example, set the IP to 10.96.0.100
spec:
  type: ClusterIP
  clusterIP: 10.96.0.100

Now test it (load balancing is on by default):

[root@k8sMaster ~]# curl 10.100.84.97

test from 10.244.249.6

[root@k8sMaster ~]# curl 10.100.84.97

test from 10.244.185.202
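The selector matching and the alternating curl responses above can be modeled in a few lines. The Python sketch below is only an illustration and not how kube-proxy is implemented: the `Pod` class and the `pick_backends` helper are hypothetical names, the third Pod is made up, and plain round-robin is an assumption for simplicity (the real choice of backend depends on the kube-proxy mode).

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Pod:
    ip: str
    labels: dict


def pick_backends(pods, selector):
    """Return the IPs of Pods whose labels contain every selector pair."""
    return [p.ip for p in pods
            if all(p.labels.get(k) == v for k, v in selector.items())]


# The first two Pod IPs come from the curl output above; the third Pod is
# made up to show that Pods with non-matching labels are ignored.
pods = [
    Pod("10.244.249.6", {"app": "nginxtest"}),
    Pod("10.244.185.202", {"app": "nginxtest"}),
    Pod("10.244.1.99", {"app": "other"}),
]

backends = pick_backends(pods, {"app": "nginxtest"})
rotation = cycle(backends)  # assumed round-robin, for illustration only

for _ in range(4):
    print("test from", next(rotation))
```

The point of the sketch is that the Service never names Pods directly: whatever carries the label `app: nginxtest` becomes a backend.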

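Headless

A Headless Service drops the ClusterIP and maps the Pod IPs directly into DNS. Traffic no longer passes through the ClusterIP proxy, that is, it bypasses ipvs/iptables, so kube-proxy does no load balancing and the client connects to one of the Pod IPs returned by DNS. It is commonly paired with a StatefulSet (covered in detail later):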

apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  namespace: dev
spec:
  type: ClusterIP
  clusterIP: None   # just set this value to None
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80

NodePort

NodePort maps a port on every node to the Service, so external hosts can reach the Pods through <node external IP>:<port>.

[root@k8sMaster ~]# kubectl expose deployment.apps/nginxtest --name=svc-nginx --type=NodePort --port=80 --target-port=80 -n dev --dry-run=client -o yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: nginxtest
  name: svc-nginx
  namespace: dev
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginxtest
  type: NodePort
status:
  loadBalancer: {}

After deploying it:

[root@k8sMaster ~]# kubectl get svc -n dev

NAME        TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
svc-nginx   NodePort   10.110.170.144   <none>        80:32244/TCP   10s

Then access port 32244 on any host in the cluster:

[root@k8sMaster ~]# curl 192.168.118.201:32244

test from 10.244.249.7

[root@k8sMaster ~]# curl 192.168.118.202:32244

test from 10.244.249.7

[root@k8sMaster ~]# curl 192.168.118.200:32244

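test from 10.244.249.7

LoadBalancer

A NodePort Service lets us reach in-cluster services through any node's IP plus the port, but it cannot balance incoming traffic across the nodes themselves. That is what LoadBalancer is for:

1. An external IP is allocated automatically, and the cloud platform's load balancer distributes traffic to all nodes.

2. The load balancer automatically removes unhealthy nodes and steers traffic to healthy ones.

3. Clients only see the load balancer IP; node IPs are hidden from them.

4. The cloud load balancer scales automatically to handle large request volumes.

This example uses metallb-native.yaml and two images (metallb/controller and metallb/speaker):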

controller: quay.io/metallb/controller:v0.14.8

speaker: quay.io/metallb/speaker:v0.14.8

metallb-native.yaml:

wget https://raw.githubusercontent.com/metallb/metallb/v0.13.12/config/manifests/metallb-native.yaml

Pull the images above and push them to your own Harbor registry: run docker tag quay.io/metallb/controller:v0.14.8 OpenLabTest/metallb/controller:v0.14.8 and then docker push (OpenLabTest is the name of my own Harbor).

[root@k8sMaster ~]# docker images | grep meta

OpenLabTest/metallb/controller v0.14.8 eabbe97a78ee 12 months ago 63.4MB

OpenLabTest/metallb/speaker v0.14.8 50d3d2d1712d 12 months ago 119MB

Create

First change every image in metallb-native.yaml to the address of the image in our own registry:

vim metallb-native.yaml

Inside vim, run :%s/quay.io/OpenLabTest/g to replace every occurrence with your own registry address.

[root@k8sMaster ~]# mkdir metallb

[root@k8sMaster ~]# mv metallb-native.yaml metallb/

[root@k8sMaster metallb]# kubectl -n metallb-system get all

NAME                              READY   STATUS              RESTARTS   AGE
pod/controller-56884d9bc4-zdrdw   1/1     Running             0          74s
pod/speaker-bcz24                 0/1     ContainerCreating   0          74s
pod/speaker-dhxgx                 1/1     Running             0          74s
pod/speaker-dvj99                 0/1     ContainerCreating   0          74s

NAME                              TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
service/metallb-webhook-service   ClusterIP   10.111.61.117   <none>        443/TCP   74s

NAME                     DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
daemonset.apps/speaker   3         3         1       3            1           kubernetes.io/os=linux   74s

NAME                         READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/controller   1/1     1            1           74s

NAME                                    DESIRED   CURRENT   READY   AGE
replicaset.apps/controller-56884d9bc4   1         1         1       74s

Then create an IP pool and choose the MetalLB mode:

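vim configmap.yml

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool
  namespace: metallb-system
spec:
  addresses:
  - 192.168.118.100-192.168.118.150   # must be in the same broadcast-domain subnet as your real physical NIC
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement   # L2 mode
metadata:
  name: metallb-l2   # resource names cannot contain uppercase letters
  namespace: metallb-system
spec:
  ipAddressPools:
  - first-pool

In L2 mode, one node's speaker is elected to own a VIP and announces that IP on the local network through ARP. Once traffic for the VIP reaches that node, it is forwarded into the cluster in much the same way as NodePort traffic.

Note: the controller container gets list/watch/update permission on services (in all namespaces) through a ClusterRole, so it sees any Service of type: LoadBalancer in any namespace and assigns it a VIP.

The speaker likewise uses a ClusterRole to list/watch Services, Nodes, IPAddressPools and so on, so that every node knows which VIPs it is responsible for.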
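To make the address range in the IPAddressPool concrete, here is a minimal Python sketch that expands the `start-end` notation and hands out addresses. It is an illustration only: `expand_range` and `allocate` are hypothetical helpers, and "lowest free address first" is an assumption, not a statement about MetalLB's actual allocation logic.

```python
import ipaddress


def expand_range(spec):
    """Expand 'start-end' into a list of IPv4Address objects (inclusive)."""
    start_s, end_s = spec.split("-")
    start = ipaddress.IPv4Address(start_s.strip())
    end = ipaddress.IPv4Address(end_s.strip())
    return [ipaddress.IPv4Address(n) for n in range(int(start), int(end) + 1)]


def allocate(pool, in_use):
    """Return the first pool address that is not already assigned."""
    for ip in pool:
        if ip not in in_use:
            return ip
    return None  # pool exhausted


# Range copied from the IPAddressPool above.
pool = expand_range("192.168.118.100-192.168.118.150")
in_use = set()

first = allocate(pool, in_use)
in_use.add(first)
second = allocate(pool, in_use)

print(len(pool), first, second)
```

The pool size matters in practice: each LoadBalancer Service consumes one address, so the range bounds how many such Services the cluster can expose.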

Test

kubectl apply -f metallb-native.yaml

kubectl apply -f configmap.yml

[root@k8sMaster ~]# kubectl expose deployments.apps/nginxtest -n dev --name=svc-nginx --type=LoadBalancer --port=80 --target-port=80 --dry-run=client -o yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: nginxtest
  name: svc-nginx
  namespace: dev
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginxtest
  type: LoadBalancer

Check the result:

[root@k8sMaster metallb]# kubectl get svc -n dev

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc-nginx LoadBalancer 10.106.37.88 192.168.118.100 80:31612/TCP 10s

[root@k8sMaster metallb]# curl 192.168.118.100

test page which from nginx-deploy-7f998cb497-b7zbt:10.244.1.2

[root@k8sMaster metallb]# curl 192.168.118.100

test page which from nginx-deploy-7f998cb497-s4274:10.244.2.8

[root@k8sMaster metallb]# curl 192.168.118.100

test page which from nginx-deploy-7f998cb497-vrtj2:10.244.2.7

ExternalName

This is like adding a DNS record inside the cluster: the Service name (serviceName.namespace.svc.cluster.local) is inserted into the cluster DNS as a CNAME that points to an external domain such as www.baidu.com.

[root@k8sMaster ~]# kubectl create service externalname reflect --external-name www.baidu.com --dry-run=client -o yaml

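apiVersion: v1
kind: Service
metadata:
  labels:
    app: reflect
  name: reflect
  namespace: dev
spec:
  externalName: www.baidu.com
  selector:
    app: reflect
  type: ExternalName

The purpose is to avoid baking external domain names into images: workloads reach the external service through a fixed in-cluster name.

Verify by querying DNS: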

[root@k8sMaster ~]# kubectl -n kube-system get svc kube-dns

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 3d11h

[root@k8sMaster ~]# dig reflect.dev.svc.cluster.local. @10.96.0.10

; <<>> DiG 9.16.23-RH <<>> reflect.dev.svc.cluster.local. @10.96.0.10

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 30096

;; flags: qr aa rd; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: a256fefc3351c2fe (echoed)

;; QUESTION SECTION:

;reflect.dev.svc.cluster.local. IN A

;; ANSWER SECTION:

reflect.dev.svc.cluster.local. 30 IN CNAME www.baidu.com.

www.baidu.com. 30 IN CNAME www.a.shifen.com.

www.a.shifen.com. 30 IN A 183.2.172.17

www.a.shifen.com. 30 IN A 183.2.172.177

;; Query time: 77 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Thu Aug 14 10:09:18 CST 2025

;; MSG SIZE rcvd: 233
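The answer section above is a CNAME chain that ends in A records. As a rough illustration of how a resolver walks that chain, the Python sketch below uses the records from the dig output as a static table; the `resolve` function is a hypothetical helper, not part of any DNS library.

```python
# Records copied from the dig answer section above.
CNAME = {
    "reflect.dev.svc.cluster.local.": "www.baidu.com.",
    "www.baidu.com.": "www.a.shifen.com.",
}
A = {
    "www.a.shifen.com.": ["183.2.172.17", "183.2.172.177"],
}


def resolve(name, max_hops=8):
    """Follow CNAME records until an A record set is found."""
    chain = [name]
    for _ in range(max_hops):
        if name in A:
            return chain, A[name]
        if name not in CNAME:
            break
        name = CNAME[name]
        chain.append(name)
    return chain, []


chain, addresses = resolve("reflect.dev.svc.cluster.local.")
print(" -> ".join(chain))
print(addresses)
```

Only the first hop is created by the ExternalName Service; the remaining hops belong to the external domain's own DNS setup.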

Ingress-nginx

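Every traditional Service exposes its own IP address, and a Service only works at layer 4. Ingress adds nginx-like layer-7 routing: based on the requested domain name and path, it directs traffic to the appropriate Service.

It requires two images: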

[root@k8sNode1 ~]# docker images | grep ingress

OpenLabTest/ingress-nginx/controller v1.13.1 2686819d3b67 45 hours ago 318MB

OpenLabTest/ingress-nginx/kube-webhook-certgen v1.6.1 8884abbb6ca0 5 days ago 70MB

controller: registry.k8s.io/ingress-nginx/controller:v1.13.1

kube-webhook-certgen: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v1.6.1

The controller watches all Ingress objects, generates the reverse-proxy configuration, maintains the upstreams, publishes addresses, handles redirects, and so on. By default:

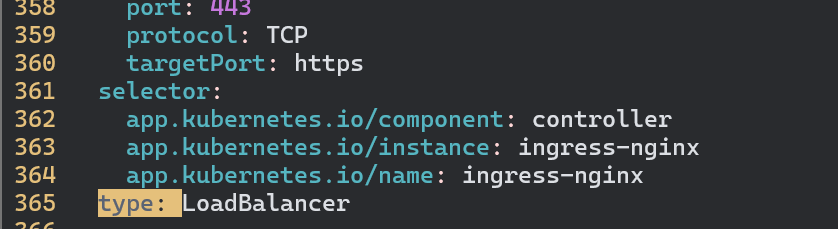

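The controller is usually given a Service of type: LoadBalancer.

One Ingress Controller (backed by one LoadBalancer Service) gets only one external IP.

Every Ingress object that uses this controller shares that single IP; traffic is told apart by Host / Path and forwarded to different backend Services.

To give one particular Ingress its own public IP, you must either deploy another Ingress Controller (a new Deployment plus a new LoadBalancer Service) or use a cloud-vendor controller that supports "IP-per-Ingress" (for example the ALB IngressClass of the AWS LoadBalancer Controller, the GCP GKE IngressClass, and so on).

As with MetalLB above, change the image addresses in deploy.yml (the official deployment YAML), which is linked from: ingress-nginx/docs/deploy/index.md at main · kubernetes/ingress-nginx · GitHub

Then find the controller's Service in that file and, if it is not already of type LoadBalancer as used earlier, change it (depending on the manifest variant it may be another type).

An Ingress must live in the same namespace as the Services it proxies. The Ingress passes the request path through unchanged, so the Pod must serve content at that same path; otherwise the rewrite feature is needed.

Test

First create two small Deployments, then create a Service for each of them for service discovery: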

[root@k8sMaster ~]# kubectl create deployment nginx-deploy1 -n dev --image=nginx:1.17.1 --replicas=1

[root@k8sMaster ~]# kubectl create deployment nginx-deploy2 -n dev --image=nginx:1.17.1 --replicas=1

[root@k8sMaster ingress]# kubectl expose deployment/nginx-deploy1 --name=svc-nginx1 --type=ClusterIP --port 80 --target-port 80 -n dev

service/svc-nginx1 exposed

[root@k8sMaster ingress]# kubectl expose deployment/nginx-deploy2 --name=svc-nginx2 --type=ClusterIP --port 80 --target-port 80 -n dev

service/svc-nginx2 exposed

#----------

[root@k8sMaster ingress]# curl 10.98.91.16/foo

test page which from nginx-deploy1-85687ff5c9-kkmcc:10.244.1.10

[root@k8sMaster ingress]# curl 10.107.194.52/fun

test page which from nginx-deploy2-66fcb88fd9-prjw4:10.244.2.17

Then create an Ingress for testing:

kubectl create ingress nginx-proxy --class nginx --rule='/nginx-service1=nginx-service1:80' -n dev --dry-run=client -o yaml > ingress-nginx-test.yaml

Then refine the generated file:

[root@k8sMaster ingress]# cat ingress-nginx-test.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-proxy
  namespace: dev
spec:
  ingressClassName: nginx
  rules:   # much like an haproxy config: each entry names its backend
  - http:
      paths:
      - backend:
          service:
            name: svc-nginx1
            port:
              number: 80
        path: /foo
        pathType: Exact   # exact match
      - backend:
          service:
            name: svc-nginx2
            port:
              number: 80
        path: /fun
        pathType: Exact

Exact means that only a request path of exactly /foo is sent to the backend Service; even one extra character will not match.

So after creating this Ingress we also have to enter the Pod and create a file at /usr/share/nginx/html/foo:

[root@k8sMaster ~]# kubectl exec -it pod/nginx-deploy1-85687ff5c9-kkmcc -c nginx -n dev -- /bin/bash

root@nginx-deploy1-85687ff5c9-kkmcc:/# echo "test page which from `hostname`:`hostname -I`" > /usr/share/nginx/html/foo

Do the same in the other Pod (using /usr/share/nginx/html/fun), then deploy the Ingress and check it:

[root@k8sMaster ingress]# kubectl get ingress -n dev

NAME          CLASS   HOSTS   ADDRESS           PORTS   AGE
nginx-proxy   nginx   *       192.168.118.100   80      92s

Access test:

[root@k8sMaster ingress]# curl 192.168.118.100/foo

test page which from nginx-deploy1-85687ff5c9-kkmcc:10.244.1.10

[root@k8sMaster ingress]# curl 192.168.118.100/fun

test page which from nginx-deploy2-66fcb88fd9-prjw4:10.244.2.17

To rewrite the route, add an annotation:

metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /   # add this
  name: nginx-proxy
  namespace: dev

For host-based routing, use the host field:

[root@k8sMaster ingress]# kubectl explain ingress.spec.rules.host

GROUP: networking.k8s.io

KIND: Ingress

VERSION: v1

FIELD: host <string>

...

For example (pathType does not have to be Exact; it can take any of the values listed below):

spec:
  ingressClassName: nginx
  rules:
  - host: www.openlab.com   # here
    http:
      paths:
      - path: /nginx-service1
        backend:
          service:
            name: svc-nginx1
            port:
              number: 80
        pathType: Exact

[root@k8sMaster ~]# kubectl explain ingress.spec.rules.http.paths.pathType

GROUP: networking.k8s.io

KIND: Ingress

VERSION: v1

FIELD: pathType <string>

ENUM:

Exact

ImplementationSpecific

Prefix

...

* ImplementationSpecific: Interpretation of the Path matching is up to

the IngressClass. Implementations can treat this as a separate PathType

or treat it identically to Prefix or Exact path types.

Implementations are required to support all path types.

Possible enum values:

- `"Exact"` matches the URL path exactly and with case sensitivity.

- `"ImplementationSpecific"` matching is up to the IngressClass.

Implementations can treat this as a separate PathType or treat it

identically to Prefix or Exact path types.

- `"Prefix"` matches based on a URL path prefix split by '/'. Matching is

case sensitive and done on a path element by element basis. A path element

refers to the list of labels in the path split by the '/' separator. A

request is a match for path p if every p is an element-wise prefix of p of

the request path. Note that if the last element of the path is a substring

of the last element in request path, it is not a match (e.g. /foo/bar

matches /foo/bar/baz, but does not match /foo/barbaz). If multiple matching

paths exist in an Ingress spec, the longest matching path is given priority.

Examples: - /foo/bar does not match requests to /foo/barbaz - /foo/bar

matches request to /foo/bar and /foo/bar/baz - /foo and /foo/ both match

requests to /foo and /foo/. If both paths are present in an Ingress spec,

the longest matching path (/foo/) is given priority.
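The Exact and Prefix rules quoted above are easy to get wrong, so here is a small Python model of them. It is a sketch based only on the description above, not the controller's implementation; `split_path` and `matches` are hypothetical helper names, and ImplementationSpecific is deliberately left out because its behavior depends on the IngressClass.

```python
def split_path(path):
    """Split a URL path into its non-empty elements."""
    return [part for part in path.split("/") if part]


def matches(rule_path, path_type, request_path):
    """Model Exact and Prefix matching as described in the text above."""
    if path_type == "Exact":
        return rule_path == request_path
    if path_type == "Prefix":
        rule, req = split_path(rule_path), split_path(request_path)
        # Element-wise prefix: every rule element must equal the
        # request element at the same position.
        return req[:len(rule)] == rule
    raise ValueError("ImplementationSpecific is left to the IngressClass")


for request in ["/foo/bar", "/foo/bar/baz", "/foo/barbaz"]:
    print(request, matches("/foo/bar", "Prefix", request))
```

The comparison is per path element rather than per character, which is why /foo/barbaz does not match the /foo/bar prefix.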

TLS

First create a certificate:

openssl req -newkey rsa:2048 -nodes -keyout tls.key -x509 \
  -days 365 -subj "/CN=nginxsvc/O=nginxsvc" -out tls.crt

Then use the certificate to create a Secret in the cluster:

[root@k8sMaster ingress]# kubectl create secret tls ingress-tls --key=tls.key --cert=tls.crt

secret/ingress-tls created

Then recreate the Ingress and add the tls field to it, listing the sites that should be served over HTTPS:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-proxy
  namespace: dev
spec:
  tls:   # new field
  - hosts:   # the virtual hosts to cover
    - foo.openlab.com
    - fun.openlab.com
    secretName: ingress-tls   # the cluster Secret that holds the generated certificate
  ingressClassName: nginx
  rules:
  - http:
      paths:
      - backend:
          service:
            name: svc-nginx1
            port:
              number: 80
        path: /foo
        pathType: Exact
    host: foo.openlab.com
  - http:
      paths:
      - backend:
          service:
            name: svc-nginx2
            port:
              number: 80
        path: /fun
        pathType: Exact
    host: fun.openlab.com

That is the HTTPS-enabled Ingress.

Basic auth

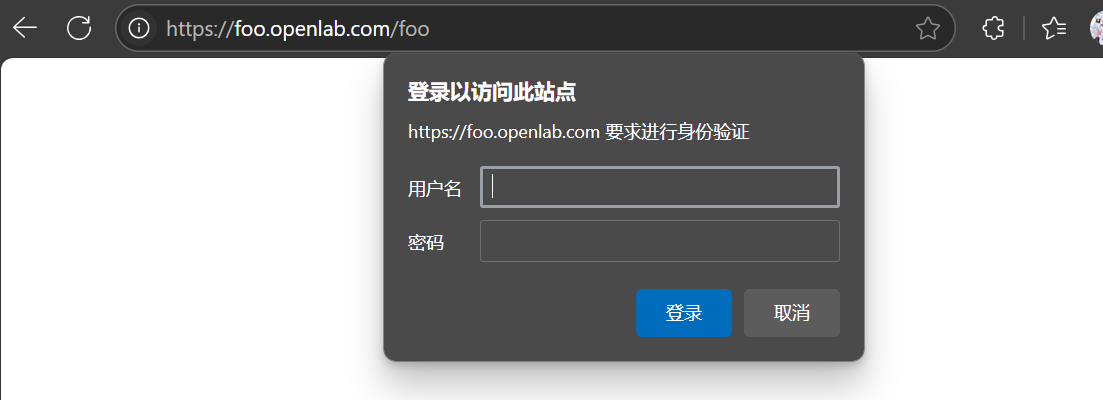

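This is for pages that require a username and password: the browser pops up a dialog, and the page is only served after valid credentials are entered.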
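For context, what the browser sends after that dialog is an Authorization header containing the base64 of user:password. The Python sketch below shows the encoding only; `basic_auth_header` is a hypothetical helper and the password is made up.

```python
import base64


def basic_auth_header(user, password):
    """Build the Authorization header value for HTTP Basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8"))
    return "Basic " + token.decode("ascii")


# "openlab" is an example user name; "secret" is a made-up password.
header = basic_auth_header("openlab", "secret")
print(header)
```

Because base64 is an encoding and not encryption, Basic auth should be combined with the TLS setup from the previous section.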

First create a user. The file must be named auth. If htpasswd is not installed, find the package that provides it with: dnf whatprovides */htpasswd

[root@k8sMaster ingress]# htpasswd -cm auth openlab

New password:

Re-type new password:

Adding password for user openlab

Then create a Secret from the generated file:

[root@k8sMaster ingress]# kubectl create secret generic ingress-auth --from-file auth -n dev

secret/ingress-auth created

Then make a small change to the Ingress:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-proxy
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: ingress-auth
    nginx.ingress.kubernetes.io/auth-realm: "input the passwd of this page"
  namespace: dev
spec:
  tls:
  - hosts:
    - foo.openlab.com
    - fun.openlab.com
    secretName: ingress-tls
  ingressClassName: nginx
  rules:
  - http:
      paths:
      - backend:
          service:
            name: svc-nginx1
            port:
              number: 80
        path: /foo
        pathType: Exact
    host: foo.openlab.com
  - http:
      paths:
      - backend:
          service:
            name: svc-nginx2
            port:
              number: 80
        path: /fun
        pathType: Exact
    host: fun.openlab.com

A note on the rewrite feature:

annotations:
  nginx.ingress.kubernetes.io/rewrite-target: /

Original request: /foo/index.html

Matched prefix: /foo

Rewritten result: /

The backend Pod receives just /, with no remaining path segment.

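Rewrite

To pass the rest of the requested path on to the backend service, the rewrite needs a capture group. pathType: Prefix only does prefix matching and does not treat (.*) as a regex capture group, whereas ImplementationSpecific lets ingress-nginx treat the whole path as a regular expression.

It should be written like this: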

nginx.ingress.kubernetes.io/rewrite-target: /$1

...

path: /foo/(.*)

pathType: ImplementationSpecific
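The effect of the capture group can be tried out with an ordinary regular expression. The Python sketch below only imitates the substitution for illustration; `rewrite` is a hypothetical helper, and the real rewriting is done by nginx inside the controller.

```python
import re


def rewrite(path_regex, rewrite_target, request_path):
    """Return the rewritten path, or None when the request does not match."""
    match = re.match("^" + path_regex, request_path)
    if match is None:
        return None
    # Replace $1, $2, ... in the target with the captured groups.
    return re.sub(r"\$(\d+)",
                  lambda m: match.group(int(m.group(1))),
                  rewrite_target)


print(rewrite("/foo/(.*)", "/$1", "/foo/index.html"))  # capture kept
print(rewrite("/foo/(.*)", "/", "/foo/index.html"))    # capture dropped
```

With the target /$1 the backend sees /index.html, while with a plain / target everything after the prefix is lost.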

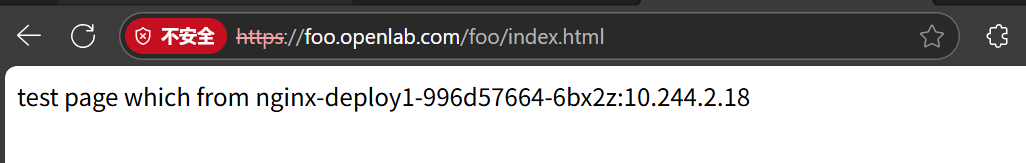

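Canary release

First publish an Ingress for the old version, then create one for the new version. Its name does not need to match the old one, but it must set nginx.ingress.kubernetes.io/canary: "true"; traffic is then split according to the rules given in the new Ingress, and the ratio between old and new can be adjusted step by step with kubectl edit.

Why does the name not need to match? The Ingress Controller merges the rules of the two Ingress objects that target the same host+path and then splits traffic by the weight/Header/Cookie conditions in the annotations. So all that matters is that host+path are the same.

Only the metadata needs to be extended; everything else stays unchanged: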

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-canary
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$1
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "nginx"
    nginx.ingress.kubernetes.io/canary-by-header-value: "grey"
  namespace: dev

With this, any request that carries the header nginx: grey is sent to the new version:

[root@k8sNode1 ~]# curl fun.openlab.com/index.html

test page which from nginx-deploy1-996d57664-6bx2z:10.244.2.18

[root@k8sNode1 ~]# curl -H "nginx: grey" fun.openlab.com/index.html

test page which from nginx-deploy2-7957b9dcf-9sdkf:10.244.1.11

Traffic can also be split by weight:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-canary
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$1
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-weight: "10"
    nginx.ingress.kubernetes.io/canary-weight-total: "100"
  namespace: dev
spec:
  ingressClassName: nginx
  rules:
  - http:
      paths:
      - backend:
          service:
            name: svc-nginx2
            port:
              number: 80
        path: /(.*)
        pathType: ImplementationSpecific
    host: fun.openlab.com

Start with 10% of the traffic; if nothing goes wrong, raise the weight with kubectl edit ingress/nginx-canary -n dev.
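The header rule and the weight rule can be pictured as one routing decision per request. The Python sketch below is a simplified model under stated assumptions: `route` is a hypothetical function, the header check is evaluated before the weight, and the `draw` argument stands in for the controller's random choice so that the result is reproducible.

```python
def route(headers, draw, header_name="nginx", header_value="grey",
          weight=10, weight_total=100):
    """Return 'canary' or 'stable' for one request.

    `draw` is a number in [0, weight_total) that replaces the random
    choice, which keeps this model deterministic.
    """
    if headers.get(header_name) == header_value:
        return "canary"
    if draw < weight:
        return "canary"
    return "stable"


# Walk through every possible draw once to see the resulting split.
canary_hits = sum(route({}, d) == "canary" for d in range(100))
print(canary_hits, "of 100 requests go to the canary")
print(route({"nginx": "grey"}, 99))
```

Raising the weight step by step in this model corresponds to the gradual kubectl edit rollout described above.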