Mineral Classification System Development Notes (1): Data Preprocessing

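1. Data and Preprocessing Goals

This mineral classification system is built on 矿物数据.xlsx, a dataset of 1044 samples with 13 element features (chlorine, sodium, magnesium, and so on) and 4 mineral class labels (A, B, C, D). The data is sensitive and cannot be shared, so only the code is provided, for learning purposes. The core goal of preprocessing is to give model training a reliable input by normalizing formats, handling missing values, and balancing the classes.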

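2. Preprocessing Steps and Code Walkthrough

2.1 Data loading and initial cleaning

First load the data and remove invalid entries:

import pandas as pd

# Load the data
data = pd.read_excel('矿物数据.xlsx', sheet_name='Sheet1')

# Drop the special class E (only 1 sample, no statistical value)
# .copy() avoids chained-assignment warnings when columns are overwritten below
data = data[data['矿物类型'] != 'E'].copy()

# Convert data types: non-numeric symbols (such as "/" or spaces) become NaN
for col in data.columns:
    if col not in ['序号', '矿物类型']:  # the ID and label columns are not converted
        # errors='coerce' turns values that cannot be parsed into NaN
        data[col] = pd.to_numeric(data[col], errors='coerce')

This step resolves the inconsistent formatting in the raw data and ensures every feature column is numeric, which all later steps depend on.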
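As a small illustration of the coercion behavior described above, the sketch below runs `pd.to_numeric` on a made-up column (the values are hypothetical, not taken from the dataset) and counts how many entries end up as NaN.

```python
import pandas as pd

# Hypothetical raw column mixing numbers, a stray symbol, a blank, and a None
raw = pd.Series(['12.5', '/', ' ', '3', None, '7.0'])

# errors='coerce' replaces anything that cannot be parsed with NaN
numeric = pd.to_numeric(raw, errors='coerce')

print(numeric.tolist())
print('missing after coercion:', int(numeric.isna().sum()))
```

Counting the NaN values per column right after this step gives a quick picture of how much each feature will rely on the missing-value handling that follows.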

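2.2 Label encoding

Convert the letter labels (A/B/C/D) into integers that a model can work with:

# Define the label mapping
label_dict = {'A': 0, 'B': 1, 'C': 2, 'D': 3}

# Convert the labels while keeping the DataFrame structure
data['矿物类型'] = data['矿物类型'].map(label_dict)

# Separate features and labels
X = data.drop(['序号', '矿物类型'], axis=1)  # feature set
y = data['矿物类型']  # label set

After encoding, the labels take values 0 to 3, the integer format that machine learning models expect.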
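Once a model predicts integer codes, it is usually convenient to map them back to the letter labels. The sketch below inverts the same mapping; `predicted_codes` is a hypothetical model output used only for illustration.

```python
label_dict = {'A': 0, 'B': 1, 'C': 2, 'D': 3}

# Invert the mapping: integer code -> letter label
inverse_label_dict = {code: name for name, code in label_dict.items()}

predicted_codes = [2, 0, 3]  # hypothetical model output
predicted_names = [inverse_label_dict[c] for c in predicted_codes]
print(predicted_names)
```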

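2.3 Handling missing values

Six strategies were implemented for the missing values in the data:

(1) Drop samples that contain missing values

Suitable when the missing rate is very low (below 1%); the incomplete samples are simply removed:

def drop_missing(train_data, train_label):
    # Join features and labels so rows can be dropped together
    combined = pd.concat([train_data, train_label], axis=1)
    combined = combined.reset_index(drop=True)  # reset the index so it stays contiguous
    cleaned = combined.dropna()  # drop rows that contain missing values
    # Separate features and labels again
    return cleaned.drop('矿物类型', axis=1), cleaned['矿物类型']

The advantage of this method is that no values are invented, so it adds no imputation bias (provided the values are missing at random). The drawback is that it can throw away useful information when the missing rate is high.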
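Before choosing deletion, it helps to measure how many rows would actually be lost. The following is a minimal sketch on a toy frame; the helper name `row_missing_rate` and the column names `Cl` and `Na` are assumptions made for this example.

```python
import numpy as np
import pandas as pd

def row_missing_rate(df):
    """Return the fraction of rows that contain at least one NaN."""
    return float(df.isna().any(axis=1).mean())

# Toy data: one of four rows has a missing value
demo = pd.DataFrame({
    'Cl': [1.0, 2.0, np.nan, 4.0],
    'Na': [10.0, 20.0, 30.0, 40.0],
})

rate = row_missing_rate(demo)
print(f'rows with missing values: {rate:.1%}')
```

If the rate is well above the roughly 1% guideline mentioned above, one of the fill strategies is probably a better choice than deletion.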

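(2) Fill with the per-class mean

For numeric features, group the samples by mineral type, compute the mean within each group, and fill each group's missing values with its own mean (this reduces interference between classes):

def mean_fill(train_data, train_label):
    combined = pd.concat([train_data, train_label], axis=1)
    combined = combined.reset_index(drop=True)
    # Fill group by group, one mineral type at a time
    filled_groups = []
    for type_id in combined['矿物类型'].unique():
        group = combined[combined['矿物类型'] == type_id]
        # Use the group's own feature means to fill its missing values
        filled_group = group.fillna(group.mean())
        filled_groups.append(filled_group)
    # Concatenate the groups back together
    filled = pd.concat(filled_groups, axis=0).reset_index(drop=True)
    return filled.drop('矿物类型', axis=1), filled['矿物类型']

This works well when feature values are fairly evenly distributed, and it avoids mixing the means of different classes.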
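The same per-class fill can also be written with `groupby(...).transform('mean')`, which keeps the rows in their original order. This is an alternative sketch, not the code used in this project; `mean_fill_groupby` and the toy data are assumptions for illustration.

```python
import numpy as np
import pandas as pd

def mean_fill_groupby(features, labels):
    """Fill NaNs in each feature with the mean of the row's own class."""
    # transform('mean') returns a frame shaped like `features`,
    # where every cell holds the mean of that row's class
    class_means = features.groupby(labels).transform('mean')
    return features.fillna(class_means)

X_demo = pd.DataFrame({'Na': [1.0, np.nan, 3.0, 10.0, np.nan, 30.0]})
y_demo = pd.Series([0, 0, 0, 1, 1, 1], name='矿物类型')

filled = mean_fill_groupby(X_demo, y_demo)
print(filled)
```

Swapping `'mean'` for `'median'` gives the per-class median variant with no other changes.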

(3) Fill with the per-class median

When a feature has extreme values (for example, a few samples whose sodium content is far above the mean), filling with the median is more robust:

def median_fill(train_data, train_label):
    combined = pd.concat([train_data, train_label], axis=1)
    combined = combined.reset_index(drop=True)
    filled_groups = []
    for type_id in combined['矿物类型'].unique():
        group = combined[combined['矿物类型'] == type_id]
        # The median is insensitive to extreme values
        filled_group = group.fillna(group.median())
        filled_groups.append(filled_group)
    filled = pd.concat(filled_groups, axis=0).reset_index(drop=True)
    return filled.drop('矿物类型', axis=1), filled['矿物类型']
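To make the robustness argument concrete, here is a tiny made-up example (the numbers are hypothetical) in which a single extreme value drags the mean far from the typical range while the median barely moves.

```python
import pandas as pd

# Four typical values and one extreme outlier
na_content = pd.Series([10.0, 12.0, 11.0, 13.0, 1000.0])

mean_value = na_content.mean()
median_value = na_content.median()
print(f'mean: {mean_value}, median: {median_value}')
```

Filling a missing entry with the mean here would insert a value that none of the typical samples come close to, which is the situation the median fill is meant to avoid.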

(4) Fill with the per-class mode

For discrete features (for example, element contents stored as integer codes), fill with the mode, the most frequent value:

def mode_fill(train_data, train_label):
    combined = pd.concat([train_data, train_label], axis=1)
    combined = combined.reset_index(drop=True)
    filled_groups = []
    for type_id in combined['矿物类型'].unique():
        group = combined[combined['矿物类型'] == type_id]
        # Take the mode of each column; None if the column has no mode (all NaN)
        fill_values = group.apply(lambda x: x.mode().iloc[0] if not x.mode().empty else None)
        filled_group = group.fillna(fill_values)
        filled_groups.append(filled_group)
    filled = pd.concat(filled_groups, axis=0).reset_index(drop=True)
    return filled.drop('矿物类型', axis=1), filled['矿物类型']

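(5) Fill with linear regression

Use linear correlations between features (such as the relationship between chlorine and sodium content) to predict the missing values:

from sklearn.linear_model import LinearRegression

def linear_reg_fill(train_data, train_label):
    combined = pd.concat([train_data, train_label], axis=1)
    features = combined.drop('矿物类型', axis=1)
    # Process columns in ascending order of missing count (fewest first)
    null_counts = features.isnull().sum().sort_values()
    done_cols = []  # columns that are already complete or already filled
    for col in null_counts.index:
        if null_counts[col] > 0:
            missing = features[col].isnull()
            # Predict the current column only from columns that contain no NaN;
            # this assumes at least one column has no missing values at all
            X_known = features.loc[~missing, done_cols]
            y_known = features.loc[~missing, col]
            X_pred = features.loc[missing, done_cols]
            # Train the linear regression model
            lr = LinearRegression()
            lr.fit(X_known, y_known)
            # Predict and fill the missing values
            features.loc[missing, col] = lr.predict(X_pred)
        done_cols.append(col)
    return features, combined['矿物类型']

This method needs some degree of linear relationship between features, so it suits cases where element contents vary roughly in proportion to each other.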
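To check the idea on data where the right answer is known, the sketch below builds a synthetic two-column frame with an exact linear relation, hides three values, and recovers them with `LinearRegression`. The column names `Cl` and `Na` and the relation `Na = 2 * Cl + 1` are assumptions made up for this example.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(0)
cl = rng.uniform(1, 10, size=50)
demo = pd.DataFrame({'Cl': cl, 'Na': 2.0 * cl + 1.0})  # exact linear relation

# Hide three known values so the fill can be checked afterwards
hidden_rows = [3, 7, 11]
true_vals = demo.loc[hidden_rows, 'Na'].copy()
demo.loc[hidden_rows, 'Na'] = np.nan

# Fit on rows where Na is present, predict rows where it is missing
missing = demo['Na'].isna()
lr = LinearRegression().fit(demo.loc[~missing, ['Cl']], demo.loc[~missing, 'Na'])
demo.loc[missing, 'Na'] = lr.predict(demo.loc[missing, ['Cl']])

max_err = float((demo.loc[hidden_rows, 'Na'] - true_vals).abs().max())
print(f'largest fill error: {max_err:.2e}')
```

On real measurements the relation is never exact, so the error will not vanish like this; the example only shows the mechanics of fitting on observed rows and predicting the missing ones.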

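(6) Fill with a random forest

When the relationships between features are nonlinear, predict the missing values with a random forest model:

from sklearn.ensemble import RandomForestRegressor

def rf_fill(train_data, train_label):
    combined = pd.concat([train_data, train_label], axis=1)
    features = combined.drop('矿物类型', axis=1)
    null_counts = features.isnull().sum().sort_values()
    done_cols = []  # columns that are already complete or already filled
    for col in null_counts.index:
        if null_counts[col] > 0:
            # Separate the training rows from the rows to be filled;
            # this assumes at least one column has no missing values at all
            missing = features[col].isnull()
            X_known = features.loc[~missing, done_cols]
            y_known = features.loc[~missing, col]
            X_pred = features.loc[missing, done_cols]
            # Random forest regressor (100 trees, fixed seed for reproducibility)
            rfr = RandomForestRegressor(n_estimators=100, random_state=10)
            rfr.fit(X_known, y_known)
            # Fill in the predictions
            features.loc[missing, col] = rfr.predict(X_pred)
        done_cols.append(col)
    return features, combined['矿物类型']

A random forest can capture complex relationships between features, so its fills are often more accurate than a linear model's when the relationships are nonlinear, at a somewhat higher computational cost.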
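The difference between the two regressors is easiest to see on a deliberately nonlinear toy problem. The sketch below uses synthetic data with a purely quadratic relation (an assumption for illustration, unrelated to the mineral dataset) and compares the mean absolute error of both models on values treated as missing.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=200).reshape(-1, 1)
y = x.ravel() ** 2  # purely quadratic, so a straight line fits poorly

# Treat the last 20 targets as missing values to be predicted
x_known, y_known = x[:180], y[:180]
x_missing, y_true = x[180:], y[180:]

lr = LinearRegression().fit(x_known, y_known)
rf = RandomForestRegressor(n_estimators=100, random_state=10).fit(x_known, y_known)

lr_err = float(np.abs(lr.predict(x_missing) - y_true).mean())
rf_err = float(np.abs(rf.predict(x_missing) - y_true).mean())
print(f'linear MAE: {lr_err:.2f}, random forest MAE: {rf_err:.2f}')
```

When the underlying relation really is linear, the ranking can reverse, which is why it is worth trying both fills and comparing downstream model performance.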

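2.4 Feature standardization

The element contents differ greatly in magnitude (sodium can reach the thousands while selenium is mostly between 0 and 1), so the effect of scale has to be removed:

from sklearn.preprocessing import StandardScaler

def standardize_features(X_train, X_test):
    # Standardize with the training set's mean and standard deviation (avoids test-set leakage)
    scaler = StandardScaler()
    X_train_scaled = scaler.fit_transform(X_train)  # fit on the training set and transform it
    X_test_scaled = scaler.transform(X_test)  # transform the test set with the same parameters
    # Convert back to DataFrames, keeping feature names and the original row index
    # (keeping the index lets the features stay aligned with y_train / y_test)
    return (
        pd.DataFrame(X_train_scaled, columns=X_train.columns, index=X_train.index),
        pd.DataFrame(X_test_scaled, columns=X_test.columns, index=X_test.index),
    )

After standardization every training feature has mean 0 and standard deviation 1 (the test features are only approximately so, because they reuse the training statistics), which keeps the model from being dominated by features with large raw values.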
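The sketch below checks that behavior on synthetic data: the scaler is fit on the training part only, and the resulting statistics are printed for both parts. The feature names `Na` and `Se` and their value ranges are assumptions chosen to mimic the scale gap mentioned above.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

def make_features(n):
    """Synthetic features with very different scales."""
    return pd.DataFrame({
        'Na': rng.normal(1000, 300, n),  # large-valued feature
        'Se': rng.uniform(0, 1, n),      # small-valued feature
    })

X_tr, X_te = make_features(70), make_features(30)

scaler = StandardScaler().fit(X_tr)   # statistics come from the training part only
tr_scaled = scaler.transform(X_tr)
te_scaled = scaler.transform(X_te)

print('train mean:', tr_scaled.mean(axis=0), 'train std:', tr_scaled.std(axis=0))
print('test mean: ', te_scaled.mean(axis=0), 'test std: ', te_scaled.std(axis=0))
```

The training statistics come out as 0 and 1 up to floating-point error, while the test statistics land near those values without matching them exactly, which is expected.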

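2.5 Dataset split and class balancing

(1) Split into training and test sets

Split 7:3 while keeping the class distribution consistent:

from sklearn.model_selection import train_test_split

# stratify=y keeps the class proportions of the test set consistent with the original data
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)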
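A quick way to confirm what `stratify` does is to split a toy label vector with a known 60/30/10 class ratio and count the classes in the test part. The data here is synthetic and only serves as an illustration.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# 100 toy samples with a 60/30/10 class ratio
y_demo = pd.Series([0] * 60 + [1] * 30 + [2] * 10)
X_demo = pd.DataFrame({'f': np.arange(100)})

X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.3, random_state=0, stratify=y_demo
)

test_counts = y_te.value_counts().sort_index()
print(test_counts)
```

The 30 test samples keep the 60/30/10 ratio of the full set, so even the smallest class is represented in the evaluation.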

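(2) Handle class imbalance

Use the SMOTE algorithm to generate synthetic minority-class samples and even out the class counts:

from imblearn.over_sampling import SMOTE

# Oversample the training set only (the test set keeps its original distribution)
# X_train must already be free of NaN, so apply one of the fills from 2.3 first
smote = SMOTE(k_neighbors=1, random_state=0)  # 1 nearest neighbor, intended to limit added noise
X_train_balanced, y_train_balanced = smote.fit_resample(X_train, y_train)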

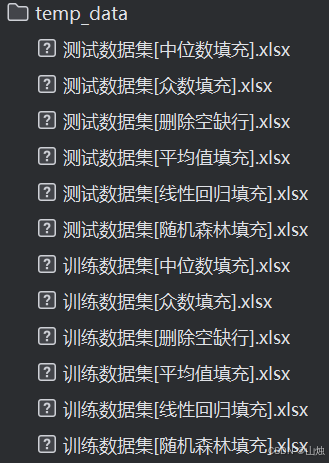
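For intuition about what SMOTE generates, the following is a simplified NumPy sketch of its core interpolation step: a synthetic sample is placed at a random position on the segment between a minority sample and one of its same-class neighbors. It is not the imblearn implementation, and `smote_like_point` is a hypothetical helper written for this example.

```python
import numpy as np

def smote_like_point(x, neighbor, rng):
    """Return a synthetic point on the segment between x and neighbor."""
    gap = rng.uniform(0.0, 1.0)  # random position along the segment
    return x + gap * (neighbor - x)

rng = np.random.default_rng(0)
x = np.array([1.0, 2.0])    # a minority-class sample
nb = np.array([3.0, 6.0])   # its nearest same-class neighbor

new = smote_like_point(x, nb, rng)
print(new)
```

With `k_neighbors=1`, each synthetic point is always interpolated toward the single closest same-class sample, so the new points stay close to existing ones.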

2.6 Saving the data

Store the preprocessed data for use in model training:

def save_processed_data(X_train, y_train, X_test, y_test, method):
    # Join features and labels
    train_df = pd.concat([X_train, pd.DataFrame(y_train, columns=['矿物类型'])], axis=1)
    test_df = pd.concat([X_test, pd.DataFrame(y_test, columns=['矿物类型'])], axis=1)
    # Save as Excel, with the preprocessing method in the file name
    train_df.to_excel(f'训练集_{method}.xlsx', index=False)
    test_df.to_excel(f'测试集_{method}.xlsx', index=False)

# Example: save data that was filled by random forest and standardized
save_processed_data(X_train_balanced, y_train_balanced, X_test, y_test, 'rf_fill_standardized')

3. Full Code

数据预处理.py:

import pandas as pd
from sklearn.preprocessing import StandardScaler
from sklearn.model_selection import train_test_split
import fill_data

data = pd.read_excel('矿物数据.xlsx')
data = data[data['矿物类型'] != 'E']  # drop the special class E; the whole dataset has only 1 such sample
null_num = data.isnull()
null_total = data.isnull().sum()

X_whole = data.drop('矿物类型', axis=1).drop('序号', axis=1)
y_whole = data.矿物类型

# Encode the letter labels as integers
label_dict = {'A': 0, 'B': 1, 'C': 2, 'D': 3}
encoded_labels = [label_dict[label] for label in y_whole]
y_whole = pd.DataFrame(encoded_labels, columns=['矿物类型'])

# Convert string data to float; abnormal entries ('\' and spaces) become NaN
for column_name in X_whole.columns:
    X_whole[column_name] = pd.to_numeric(X_whole[column_name], errors='coerce')

# Z-score standardization
# Note: this script fits the scaler on the whole dataset before splitting,
# unlike the train-only fit described in section 2.4
scaler = StandardScaler()
X_whole_Z = scaler.fit_transform(X_whole)
X_whole = pd.DataFrame(X_whole_Z, columns=X_whole.columns)  # fit_transform returns a NumPy array; convert back to pandas

# Split the dataset (no stratify argument is passed here)
X_train_w, X_test_w, y_train_w, y_test_w = train_test_split(X_whole, y_whole, test_size=0.3, random_state=0)

# Fill missing values: 6 methods, enable one at a time

# # 1. Drop rows with missing values
# X_train_fill, y_train_fill = fill_data.cca_train_fill(X_train_w, y_train_w)
# X_test_fill, y_test_fill = fill_data.cca_test_fill(X_test_w, y_test_w)
# os_x_train, os_y_train = fill_data.oversampling(X_train_fill, y_train_fill)
# fill_data.cca_save_file(os_x_train, os_y_train, X_test_fill, y_test_fill)
#
# # 2. Mean fill
# X_train_fill, y_train_fill = fill_data.mean_train_fill(X_train_w, y_train_w)
# X_test_fill, y_test_fill = fill_data.mean_test_fill(X_train_fill, y_train_fill, X_test_w, y_test_w)
# os_x_train, os_y_train = fill_data.oversampling(X_train_fill, y_train_fill)
# fill_data.mean_save_file(os_x_train, os_y_train, X_test_fill, y_test_fill)
#
# # 3. Median fill
# X_train_fill, y_train_fill = fill_data.median_train_fill(X_train_w, y_train_w)
# X_test_fill, y_test_fill = fill_data.median_test_fill(X_train_fill, y_train_fill, X_test_w, y_test_w)
# os_x_train, os_y_train = fill_data.oversampling(X_train_fill, y_train_fill)
# fill_data.median_save_file(os_x_train, os_y_train, X_test_fill, y_test_fill)
#
# # 4. Mode fill
# X_train_fill, y_train_fill = fill_data.mode_train_fill(X_train_w, y_train_w)
# X_test_fill, y_test_fill = fill_data.mode_test_fill(X_train_fill, y_train_fill, X_test_w, y_test_w)
# os_x_train, os_y_train = fill_data.oversampling(X_train_fill, y_train_fill)
# fill_data.mode_save_file(os_x_train, os_y_train, X_test_fill, y_test_fill)
#
# # 5. Linear regression fill
# X_train_fill, y_train_fill = fill_data.linear_train_fill(X_train_w, y_train_w)
# X_test_fill, y_test_fill = fill_data.linear_test_fill(X_train_fill, y_train_fill, X_test_w, y_test_w)
# os_x_train, os_y_train = fill_data.oversampling(X_train_fill, y_train_fill)
# fill_data.linear_save_file(os_x_train, os_y_train, X_test_fill, y_test_fill)

# 6. Random forest fill
X_train_fill, y_train_fill = fill_data.RandomForest_train_fill(X_train_w, y_train_w)
X_test_fill, y_test_fill = fill_data.RandomForest_test_fill(X_train_fill, y_train_fill, X_test_w, y_test_w)
os_x_train, os_y_train = fill_data.oversampling(X_train_fill, y_train_fill)
fill_data.RandomForest_save_file(os_x_train, os_y_train, X_test_fill, y_test_fill)

fill_data.py:

import pandas as pd
from imblearn.over_sampling import SMOTE
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression


# Oversampling
def oversampling(train_data, train_label):
    oversampler = SMOTE(k_neighbors=1, random_state=0)
    os_x_train, os_y_train = oversampler.fit_resample(train_data, train_label)
    return os_x_train, os_y_train


# 1. Drop rows with missing values
def cca_train_fill(train_data, train_label):
    data = pd.concat([train_data, train_label], axis=1)
    data = data.reset_index(drop=True)  # reset the index
    df_filled = data.dropna()  # drop rows that contain missing values
    return df_filled.drop('矿物类型', axis=1), df_filled.矿物类型


def cca_test_fill(test_data, test_label):
    data = pd.concat([test_data, test_label], axis=1)
    data = data.reset_index(drop=True)
    df_filled = data.dropna()
    return df_filled.drop('矿物类型', axis=1), df_filled.矿物类型


def cca_save_file(os_x_train, os_y_train, X_test_fill, y_test_fill):
    data_train = pd.concat([os_x_train, os_y_train], axis=1)
    data_test = pd.concat([X_test_fill, y_test_fill], axis=1)
    data_train.to_excel(r'..//temp_data//训练数据集[删除空缺行].xlsx', index=False)
    data_test.to_excel(r'..//temp_data//测试数据集[删除空缺行].xlsx', index=False)


# 2. Mean fill
def mean_train_method(data):
    fill_values = data.mean()
    return data.fillna(fill_values)


def mean_test_method(train_data, test_data):
    # The test set is filled with statistics computed on the training set
    fill_values = train_data.mean()
    return test_data.fillna(fill_values)


def mean_train_fill(train_data, train_label):
    data = pd.concat([train_data, train_label], axis=1)
    data = data.reset_index(drop=True)
    A = data[data['矿物类型'] == 0]
    B = data[data['矿物类型'] == 1]
    C = data[data['矿物类型'] == 2]
    D = data[data['矿物类型'] == 3]
    A = mean_train_method(A)
    B = mean_train_method(B)
    C = mean_train_method(C)
    D = mean_train_method(D)
    df_filled = pd.concat([A, B, C, D], axis=0)
    df_filled = df_filled.reset_index(drop=True)
    return df_filled.drop('矿物类型', axis=1), df_filled.矿物类型


def mean_test_fill(train_data, train_label, test_data, test_label):
    train_data_all = pd.concat([train_data, train_label], axis=1)
    train_data_all = train_data_all.reset_index(drop=True)
    test_data_all = pd.concat([test_data, test_label], axis=1)
    test_data_all = test_data_all.reset_index(drop=True)
    A_train = train_data_all[train_data_all['矿物类型'] == 0]
    B_train = train_data_all[train_data_all['矿物类型'] == 1]
    C_train = train_data_all[train_data_all['矿物类型'] == 2]
    D_train = train_data_all[train_data_all['矿物类型'] == 3]
    A_test = test_data_all[test_data_all['矿物类型'] == 0]
    B_test = test_data_all[test_data_all['矿物类型'] == 1]
    C_test = test_data_all[test_data_all['矿物类型'] == 2]
    D_test = test_data_all[test_data_all['矿物类型'] == 3]
    A = mean_test_method(A_train, A_test)
    B = mean_test_method(B_train, B_test)
    C = mean_test_method(C_train, C_test)
    D = mean_test_method(D_train, D_test)
    df_filled = pd.concat([A, B, C, D], axis=0)
    df_filled = df_filled.reset_index(drop=True)
    return df_filled.drop('矿物类型', axis=1), df_filled.矿物类型


def mean_save_file(os_x_train, os_y_train, X_test_fill, y_test_fill):
    data_train = pd.concat([os_x_train, os_y_train], axis=1)
    data_test = pd.concat([X_test_fill, y_test_fill], axis=1)
    data_train.to_excel(r'..//temp_data//训练数据集[平均值填充].xlsx', index=False)
    data_test.to_excel(r'..//temp_data//测试数据集[平均值填充].xlsx', index=False)


# 3. Median fill
def median_train_method(data):
    fill_values = data.median()
    return data.fillna(fill_values)


def median_test_method(train_data, test_data):
    fill_values = train_data.median()
    return test_data.fillna(fill_values)


def median_train_fill(train_data, train_label):
    data = pd.concat([train_data, train_label], axis=1)
    data = data.reset_index(drop=True)
    A = data[data['矿物类型'] == 0]
    B = data[data['矿物类型'] == 1]
    C = data[data['矿物类型'] == 2]
    D = data[data['矿物类型'] == 3]
    A = median_train_method(A)
    B = median_train_method(B)
    C = median_train_method(C)
    D = median_train_method(D)
    df_filled = pd.concat([A, B, C, D], axis=0)
    df_filled = df_filled.reset_index(drop=True)
    return df_filled.drop('矿物类型', axis=1), df_filled.矿物类型


def median_test_fill(train_data, train_label, test_data, test_label):
    train_data_all = pd.concat([train_data, train_label], axis=1)
    train_data_all = train_data_all.reset_index(drop=True)
    test_data_all = pd.concat([test_data, test_label], axis=1)
    test_data_all = test_data_all.reset_index(drop=True)
    A_train = train_data_all[train_data_all['矿物类型'] == 0]
    B_train = train_data_all[train_data_all['矿物类型'] == 1]
    C_train = train_data_all[train_data_all['矿物类型'] == 2]
    D_train = train_data_all[train_data_all['矿物类型'] == 3]
    A_test = test_data_all[test_data_all['矿物类型'] == 0]
    B_test = test_data_all[test_data_all['矿物类型'] == 1]
    C_test = test_data_all[test_data_all['矿物类型'] == 2]
    D_test = test_data_all[test_data_all['矿物类型'] == 3]
    A = median_test_method(A_train, A_test)
    B = median_test_method(B_train, B_test)
    C = median_test_method(C_train, C_test)
    D = median_test_method(D_train, D_test)
    df_filled = pd.concat([A, B, C, D], axis=0)
    df_filled = df_filled.reset_index(drop=True)
    return df_filled.drop('矿物类型', axis=1), df_filled.矿物类型


def median_save_file(os_x_train, os_y_train, X_test_fill, y_test_fill):
    data_train = pd.concat([os_x_train, os_y_train], axis=1)
    data_test = pd.concat([X_test_fill, y_test_fill], axis=1)
    data_train.to_excel(r'..//temp_data//训练数据集[中位数填充].xlsx', index=False)
    data_test.to_excel(r'..//temp_data//测试数据集[中位数填充].xlsx', index=False)


# 4. Mode fill
def mode_train_method(data):
    # First mode of each column; None if the column has no mode (all NaN)
    fill_values = data.apply(lambda x: x.mode().iloc[0] if len(x.mode()) > 0 else None)
    return data.fillna(fill_values)


def mode_test_method(train_data, test_data):
    fill_values = train_data.apply(lambda x: x.mode().iloc[0] if len(x.mode()) > 0 else None)
    return test_data.fillna(fill_values)


def mode_train_fill(train_data, train_label):
    data = pd.concat([train_data, train_label], axis=1)
    data = data.reset_index(drop=True)
    A = data[data['矿物类型'] == 0]
    B = data[data['矿物类型'] == 1]
    C = data[data['矿物类型'] == 2]
    D = data[data['矿物类型'] == 3]
    A = mode_train_method(A)
    B = mode_train_method(B)
    C = mode_train_method(C)
    D = mode_train_method(D)
    df_filled = pd.concat([A, B, C, D], axis=0)
    df_filled = df_filled.reset_index(drop=True)
    return df_filled.drop('矿物类型', axis=1), df_filled.矿物类型


def mode_test_fill(train_data, train_label, test_data, test_label):
    train_data_all = pd.concat([train_data, train_label], axis=1)
    train_data_all = train_data_all.reset_index(drop=True)
    test_data_all = pd.concat([test_data, test_label], axis=1)
    test_data_all = test_data_all.reset_index(drop=True)
    A_train = train_data_all[train_data_all['矿物类型'] == 0]
    B_train = train_data_all[train_data_all['矿物类型'] == 1]
    C_train = train_data_all[train_data_all['矿物类型'] == 2]
    D_train = train_data_all[train_data_all['矿物类型'] == 3]
    A_test = test_data_all[test_data_all['矿物类型'] == 0]
    B_test = test_data_all[test_data_all['矿物类型'] == 1]
    C_test = test_data_all[test_data_all['矿物类型'] == 2]
    D_test = test_data_all[test_data_all['矿物类型'] == 3]
    A = mode_test_method(A_train, A_test)
    B = mode_test_method(B_train, B_test)
    C = mode_test_method(C_train, C_test)
    D = mode_test_method(D_train, D_test)
    df_filled = pd.concat([A, B, C, D], axis=0)
    df_filled = df_filled.reset_index(drop=True)
    return df_filled.drop('矿物类型', axis=1), df_filled.矿物类型


def mode_save_file(os_x_train, os_y_train, X_test_fill, y_test_fill):
    data_train = pd.concat([os_x_train, os_y_train], axis=1)
    data_test = pd.concat([X_test_fill, y_test_fill], axis=1)
    data_train.to_excel(r'..//temp_data//训练数据集[众数填充].xlsx', index=False)
    data_test.to_excel(r'..//temp_data//测试数据集[众数填充].xlsx', index=False)


# 5. Linear regression fill
def linear_train_fill(train_data, train_label):
    train_data_all = pd.concat([train_data, train_label], axis=1)
    train_data_all = train_data_all.reset_index(drop=True)
    train_data_X = train_data_all.drop('矿物类型', axis=1)
    null_num = train_data_X.isnull().sum()
    null_num_sorted = null_num.sort_values(ascending=True)
    filling_feature = []  # columns processed so far (complete or already filled)
    for i in null_num_sorted.index:
        filling_feature.append(i)
        if null_num_sorted[i] != 0:
            # Predict column i from the columns processed before it
            X = train_data_X[filling_feature].drop(i, axis=1)
            y = train_data_X[i]
            row_numbers_mg_null = train_data_X[train_data_X[i].isnull()].index.tolist()
            X_train = X.drop(row_numbers_mg_null)
            y_train = y.drop(row_numbers_mg_null)
            X_test = X.iloc[row_numbers_mg_null]
            lr = LinearRegression()
            lr.fit(X_train, y_train)
            y_pred = lr.predict(X_test)
            train_data_X.loc[row_numbers_mg_null, i] = y_pred
            print(f'Finished filling column {i} of the training set')
    return train_data_X, train_data_all.矿物类型


def linear_test_fill(train_data, train_label, test_data, test_label):
    train_data_all = pd.concat([train_data, train_label], axis=1)
    train_data_all = train_data_all.reset_index(drop=True)
    test_data_all = pd.concat([test_data, test_label], axis=1)
    test_data_all = test_data_all.reset_index(drop=True)
    train_data_X = train_data_all.drop('矿物类型', axis=1)
    test_data_X = test_data_all.drop('矿物类型', axis=1)
    null_num = test_data_X.isnull().sum()
    null_num_sorted = null_num.sort_values(ascending=True)
    filling_feature = []
    for i in null_num_sorted.index:
        filling_feature.append(i)
        if null_num_sorted[i] != 0:
            # The model is trained on the (already filled) training set
            X_train = train_data_X[filling_feature].drop(i, axis=1)
            y_train = train_data_X[i]
            X_test = test_data_X[filling_feature].drop(i, axis=1)
            row_numbers_mg_null = test_data_X[test_data_X[i].isnull()].index.tolist()
            X_test = X_test.iloc[row_numbers_mg_null]
            lr = LinearRegression()
            lr.fit(X_train, y_train)
            y_pred = lr.predict(X_test)
            test_data_X.loc[row_numbers_mg_null, i] = y_pred
            print(f'Finished filling column {i} of the test set')
    return test_data_X, test_data_all.矿物类型


def linear_save_file(os_x_train, os_y_train, X_test_fill, y_test_fill):
    data_train = pd.concat([os_x_train, os_y_train], axis=1)
    data_test = pd.concat([X_test_fill, y_test_fill], axis=1)
    data_train.to_excel(r'..//temp_data//训练数据集[线性回归填充].xlsx', index=False)
    data_test.to_excel(r'..//temp_data//测试数据集[线性回归填充].xlsx', index=False)


# 6. Random forest fill
def RandomForest_train_fill(train_data, train_label):
    train_data_all = pd.concat([train_data, train_label], axis=1)
    train_data_all = train_data_all.reset_index(drop=True)
    train_data_X = train_data_all.drop('矿物类型', axis=1)
    null_num = train_data_X.isnull().sum()
    null_num_sorted = null_num.sort_values(ascending=True)
    filling_feature = []
    for i in null_num_sorted.index:
        filling_feature.append(i)
        if null_num_sorted[i] != 0:
            X = train_data_X[filling_feature].drop(i, axis=1)
            y = train_data_X[i]
            row_numbers_mg_null = train_data_X[train_data_X[i].isnull()].index.tolist()
            X_train = X.drop(row_numbers_mg_null)
            y_train = y.drop(row_numbers_mg_null)
            X_test = X.iloc[row_numbers_mg_null]
            rfg = RandomForestRegressor(n_estimators=100, random_state=10)
            rfg.fit(X_train, y_train)
            y_pred = rfg.predict(X_test)
            train_data_X.loc[row_numbers_mg_null, i] = y_pred
            print(f'Finished filling column {i} of the training set')
    return train_data_X, train_data_all.矿物类型


def RandomForest_test_fill(train_data, train_label, test_data, test_label):
    train_data_all = pd.concat([train_data, train_label], axis=1)
    train_data_all = train_data_all.reset_index(drop=True)
    test_data_all = pd.concat([test_data, test_label], axis=1)
    test_data_all = test_data_all.reset_index(drop=True)
    train_data_X = train_data_all.drop('矿物类型', axis=1)
    test_data_X = test_data_all.drop('矿物类型', axis=1)
    null_num = test_data_X.isnull().sum()
    null_num_sorted = null_num.sort_values(ascending=True)
    filling_feature = []
    for i in null_num_sorted.index:
        filling_feature.append(i)
        if null_num_sorted[i] != 0:
            X_train = train_data_X[filling_feature].drop(i, axis=1)
            y_train = train_data_X[i]
            X_test = test_data_X[filling_feature].drop(i, axis=1)
            row_numbers_mg_null = test_data_X[test_data_X[i].isnull()].index.tolist()
            X_test = X_test.iloc[row_numbers_mg_null]
            rfg = RandomForestRegressor(n_estimators=100, random_state=10)
            rfg.fit(X_train, y_train)
            y_pred = rfg.predict(X_test)
            test_data_X.loc[row_numbers_mg_null, i] = y_pred
            print(f'Finished filling column {i} of the test set')
    return test_data_X, test_data_all.矿物类型


def RandomForest_save_file(os_x_train, os_y_train, X_test_fill, y_test_fill):
    data_train = pd.concat([os_x_train, os_y_train], axis=1)
    data_test = pd.concat([X_test_fill, y_test_fill], axis=1)
    data_train.to_excel(r'..//temp_data//训练数据集[随机森林填充].xlsx', index=False)
    data_test.to_excel(r'..//temp_data//测试数据集[随机森林填充].xlsx', index=False)

4. Preprocessing Summary

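Data preprocessing accomplished the following key tasks:

- Cleaned out invalid samples and abnormal symbols, and unified the data format;

- Handled missing values with several methods to suit different data characteristics;

- Standardized the features to remove differences in scale;

- Split and balanced the dataset in preparation for model training.

The processed data now meets the input requirements of the models. The next stage is model training, which includes:

- Choosing classification algorithms such as random forest and SVM;

- Carrying out model evaluation and hyperparameter tuning;

- Comparing the classification performance of different models.

The follow-up post will use the data preprocessed here to walk through model training and result analysis in detail.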