Getting Started with LangChain: Build a Local RAG Application

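This guide shows how to run a RAG application locally (for example, on your laptop) with local embeddings and a local large language model, using the model provider Ollama.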

Setup

First, we need to set up Ollama.

https://github.com/ollama/ollama

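- Download and run the Ollama desktop app

- From the command line, pull models from https://ollama.com/library. For this guide you will need:

  - A general-purpose model such as llama3.1:8b, which you can pull with a command like `ollama pull llama3.1:8b`

  - A text embedding model such as nomic-embed-text, which you can pull with a command like `ollama pull nomic-embed-text`

- While the app is running, all models are served automatically on localhost:11434

- Note that your choice of models will depend on your hardware capabilities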

Document Loading

Now let's load and split an example document.

We'll use Lilian Weng's blog post on agents as an example.

```python
from langchain_community.document_loaders import WebBaseLoader
from langchain_text_splitters import RecursiveCharacterTextSplitter

# Load the blog post as a list of LangChain Document objects
loader = WebBaseLoader("https://lilianweng.github.io/posts/2023-06-23-agent/")
data = loader.load()

# Split into chunks of at most 500 characters, with no overlap between chunks
text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)
all_splits = text_splitter.split_documents(data)
```
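To make `chunk_size=500` and `chunk_overlap=0` more concrete, here is a simplified, stdlib-only sketch of the recursive idea: try the coarsest separator first (paragraphs, then lines, then words, then single characters) and fall back to a finer one only when a piece is still longer than `chunk_size`. The separator order mirrors the splitter's default, but this is an illustration under simplifying assumptions (no overlap, separators dropped at chunk boundaries), not LangChain's implementation; `recursive_split` is a hypothetical name.

```python
def recursive_split(text, chunk_size=500, separators=("\n\n", "\n", " ", "")):
    """Hypothetical simplified recursive splitter (no overlap)."""
    if len(text) <= chunk_size:
        return [text] if text else []
    sep, finer = separators[0], separators[1:]
    if sep == "":
        # Last resort: hard-cut the text every chunk_size characters
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small pieces into one chunk
            continue
        if current:
            chunks.append(current)
        if len(piece) <= chunk_size:
            current = piece
        else:
            # Piece is still too long: split it with the next, finer separator
            chunks.extend(recursive_split(piece, chunk_size, finer))
            current = ""
    if current:
        chunks.append(current)
    return chunks
```

Preferring paragraph and line boundaries keeps related sentences together, which tends to make each chunk a more self-contained unit for retrieval.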

Next, initialize the vector store. We use nomic-embed-text as the embedding model:

```python
from langchain_chroma import Chroma
from langchain_ollama import OllamaEmbeddings

# Embed each chunk locally through Ollama and index the vectors in Chroma
local_embeddings = OllamaEmbeddings(model="nomic-embed-text")
vectorstore = Chroma.from_documents(documents=all_splits, embedding=local_embeddings)
```

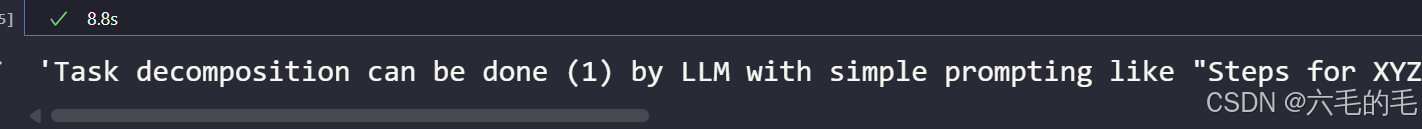
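Conceptually, the vector store embeds every chunk once and keeps the vectors; a query is embedded the same way and compared against them. The stdlib-only sketch below illustrates that flow with a deliberately crude, hypothetical bag-of-words "embedding" (`toy_embed`) and cosine similarity standing in for nomic-embed-text and Chroma. Real embeddings come from a neural model and Chroma's distance metric is configurable, so scores will differ; `ToyVectorStore` is a hypothetical name, and `k=4` mirrors the default number of results `similarity_search` returns.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Hypothetical stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())  # Counter gives 0 for missing words
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store illustrating embed-once, search-many."""

    def __init__(self, texts):
        # Embed every chunk up front, as the real store does when it is built
        self.entries = [(toy_embed(t), t) for t in texts]

    def similarity_search(self, query, k=4):
        # Embed the query the same way and return the k most similar chunks
        q = toy_embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[0]), reverse=True)
        return [text for _, text in ranked[:k]]
```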

Test that similarity search works:

```python
question = "What are the approaches to Task Decomposition?"
docs = vectorstore.similarity_search(question)
len(docs)  # Number of documents retrieved (4 by default)
```

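Next, set up a model. Here we use smollm2, a small model; pull it first with `ollama pull smollm2`, or substitute the llama3.1:8b model pulled during setup:

```python
from langchain_ollama import ChatOllama

model = ChatOllama(model="smollm2")
```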

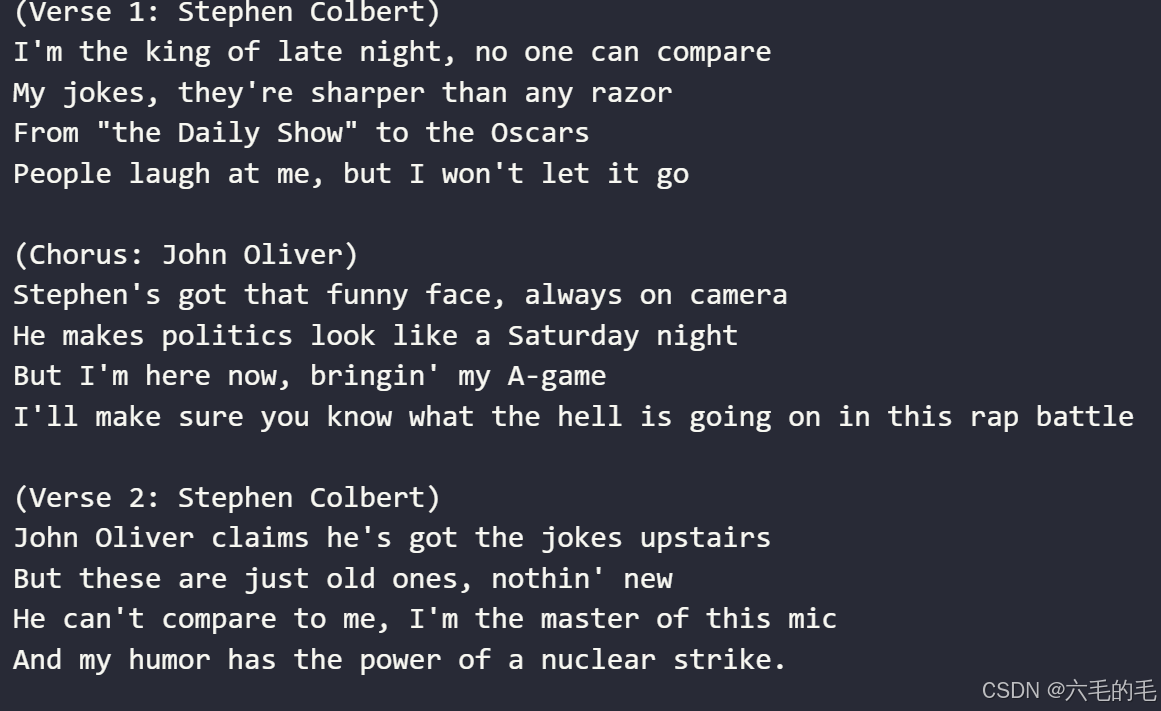

```python
response_message = model.invoke(
    "Simulate a rap battle between Stephen Colbert and John Oliver"
)

print(response_message.content)
```
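`ChatOllama` talks to the local Ollama server over HTTP. To see roughly what such a call looks like without LangChain, here is a stdlib-only sketch against Ollama's `POST /api/chat` endpoint with streaming turned off. The helper names are hypothetical, the address assumes the default port 11434, and LangChain's own requests may set additional options.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default address of the local server


def build_chat_request(model, prompt, base_url=OLLAMA_URL):
    """Hypothetical helper: build a non-streaming POST /api/chat request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for a single JSON object instead of streamed chunks
    }
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_chat_response(body):
    """Extract the assistant's text from a non-streaming /api/chat response."""
    return json.loads(body)["message"]["content"]


def chat(model, prompt, timeout=120.0):
    """Send the request and return the reply text (needs a running Ollama server)."""
    with urllib.request.urlopen(build_chat_request(model, prompt), timeout=timeout) as resp:
        return parse_chat_response(resp.read().decode("utf-8"))
```

With the app running, `chat("smollm2", "Simulate a rap battle between Stephen Colbert and John Oliver")` returns the reply as a plain string, comparable to `response_message.content`; the text itself will vary from run to run.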

Using It in a Chain

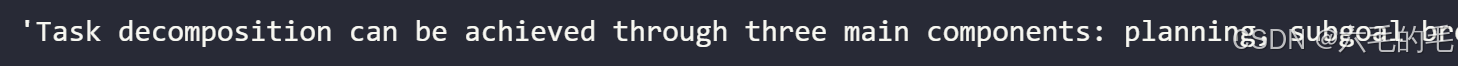

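We can build a summarization chain with either model by passing in the retrieved documents and a simple prompt.

The prompt template is filled in with the provided input key values, and the formatted prompt is passed to the specified model:

```python
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate

prompt = ChatPromptTemplate.from_template(
    "Summarize the main themes in these retrieved docs: {docs}"
)


# Join the text of the retrieved Documents into a single string
def format_docs(docs):
    return "\n\n".join(doc.page_content for doc in docs)


# The dict maps the chain input (a list of docs) to the prompt variable "docs"
chain = {"docs": format_docs} | prompt | model | StrOutputParser()

question = "What are the approaches to Task Decomposition?"
docs = vectorstore.similarity_search(question)
chain.invoke(docs)
```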

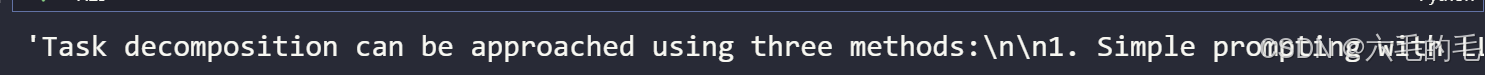
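The `|` operator feeds each step's output into the next step, and a plain dict such as `{"docs": format_docs}` becomes a step that runs each value on the same input and collects the results by key. The stdlib-only toy below imitates that behaviour to show why `chain.invoke(docs)` works. `Step`, `coerce`, `Doc`, and the fake model are hypothetical names; this is not LangChain's `Runnable` implementation.

```python
from dataclasses import dataclass


class Step:
    """Hypothetical toy stand-in for a LangChain Runnable: wraps a one-argument function."""

    def __init__(self, fn):
        self.fn = fn

    def invoke(self, value):
        return self.fn(value)

    def __or__(self, other):
        # step | next: pass this step's output to the next step
        nxt = coerce(other)
        return Step(lambda value: nxt.invoke(self.invoke(value)))

    def __ror__(self, other):
        # dict | step: dict's own "|" rejects non-dicts, so Python falls back to this
        return coerce(other) | self


def coerce(obj):
    """Turn dicts and plain functions into Steps."""
    if isinstance(obj, Step):
        return obj
    if isinstance(obj, dict):
        branches = {key: coerce(value) for key, value in obj.items()}
        # Run every branch on the same input and collect the outputs by key
        return Step(lambda value: {key: b.invoke(value) for key, b in branches.items()})
    if callable(obj):
        return Step(obj)
    raise TypeError(f"cannot coerce {type(obj).__name__} to a Step")


@dataclass
class Doc:
    page_content: str  # hypothetical stand-in for a LangChain Document


def format_docs(docs):
    return "\n\n".join(doc.page_content for doc in docs)


TEMPLATE = "Summarize the main themes in these retrieved docs: {docs}"
prompt = Step(lambda values: TEMPLATE.format(**values))
fake_model = Step(lambda text: text.upper())  # hypothetical "model" that upper-cases its prompt
chain = {"docs": format_docs} | prompt | fake_model
```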

Question Answering

You can also do question answering with the local model and vector store. Here is an example using a simple string prompt:

```python
from langchain_core.runnables import RunnablePassthrough

RAG_TEMPLATE = """
You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.

<context>
{context}
</context>

Answer the following question:

{question}"""

rag_prompt = ChatPromptTemplate.from_template(RAG_TEMPLATE)

chain = (
    # Keep "question" as-is and replace the "context" Documents with formatted text
    RunnablePassthrough.assign(context=lambda input: format_docs(input["context"]))
    | rag_prompt
    | model
    | StrOutputParser()
)

question = "What are the approaches to Task Decomposition?"
docs = vectorstore.similarity_search(question)

# Run the chain with the retrieved documents passed in explicitly
chain.invoke({"context": docs, "question": question})
```
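`RunnablePassthrough.assign(...)` returns its input dict with the computed keys added or overwritten, so `question` survives while `context` is replaced by a formatted string before the prompt is filled in. The stdlib-only sketch below mimics that step with a hypothetical `assign` helper and fills the same template with `str.format`, which works here because `ChatPromptTemplate.from_template` uses f-string-style `{placeholders}` by default. It is an illustration, not LangChain code; `Doc` is a hypothetical stand-in for a Document.

```python
from dataclasses import dataclass

RAG_TEMPLATE = """
You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.

<context>
{context}
</context>

Answer the following question:

{question}"""


@dataclass
class Doc:
    page_content: str  # hypothetical stand-in for a LangChain Document


def format_docs(docs):
    return "\n\n".join(doc.page_content for doc in docs)


def assign(inputs, **computed):
    """Hypothetical analogue of RunnablePassthrough.assign: keep inputs, add/overwrite keys."""
    return {**inputs, **{key: fn(inputs) for key, fn in computed.items()}}


def fill_prompt(inputs):
    # Replace the list of docs with one string, then fill the template placeholders
    values = assign(inputs, context=lambda x: format_docs(x["context"]))
    return RAG_TEMPLATE.format(**values)
```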

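Question Answering with Retrieval

Instead of retrieving documents by hand, you can turn the vector store into a retriever and let the chain fetch the context from the question itself:

```python
retriever = vectorstore.as_retriever()

qa_chain = (
    # The question string goes to both the retriever and the passthrough
    {"context": retriever | format_docs, "question": RunnablePassthrough()}
    | rag_prompt
    | model
    | StrOutputParser()
)

qa_chain.invoke(question)
```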
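In `qa_chain`, the plain question string is the input to both branches of the dict: the retriever turns it into documents (which `format_docs` joins into text), while `RunnablePassthrough()` forwards it unchanged. Here is a stdlib-only sketch of that fan-out, with a hypothetical keyword-overlap retriever standing in for `vectorstore.as_retriever()`; it is not LangChain code, and the real retriever ranks by embedding similarity rather than shared words.

```python
import re


def tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def make_retriever(chunks, k=4):
    """Hypothetical retriever: rank plain-text chunks by word overlap with the query."""
    def retrieve(query):
        q = tokens(query)
        ranked = sorted(chunks, key=lambda c: len(q & tokens(c)), reverse=True)
        return ranked[:k]
    return retrieve


def build_inputs(question, retrieve):
    # Both branches receive the same input string, mirroring
    # {"context": retriever | format_docs, "question": RunnablePassthrough()}
    return {
        "context": "\n\n".join(retrieve(question)),  # chunks are plain strings here
        "question": question,
    }
```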