Installing a 5-node Kubernetes (k8s) cluster with sealos

Reference:

"使用sealos快速搭建kubernetes集群!!!" (Quickly building a Kubernetes cluster with sealos), CSDN blog

Installing the sealos tool

Reference:

K8s > Quick-start > Install-cli | Sealos Docs: https://sealos.run/docs/k8s/quick-start/install-cli


$ echo "deb [trusted=yes] https://apt.fury.io/labring/ /" | sudo tee /etc/apt/sources.list.d/labring.list

$ sudo apt update

$ sudo apt install sealos
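After the package install, it can help to confirm that the binary is actually on PATH before going further. The following is a minimal sketch, not part of the original notes: `check_tool` is a hypothetical helper name, and the `sealos version` call assumes the CLI exposes a `version` subcommand as current releases do.

```shell
#!/bin/sh
# check_tool is a hypothetical helper (not from the original notes).
# It reports whether a command is available on PATH.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1 found at $(command -v "$1")"
    else
        echo "$1 not found on PATH"
        return 1
    fi
}

# Only ask sealos for its version when the binary is present.
if check_tool sealos; then
    sealos version
fi
```

If the tool is reported as missing, re-running `sudo apt update` and checking `/etc/apt/sources.list.d/labring.list` is a reasonable first step.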

Create the cluster with three master nodes and two worker nodes:

$ sealos run registry.cn-shanghai.aliyuncs.com/labring/kubernetes:v1.29.9 registry.cn-shanghai.aliyuncs.com/labring/helm:v3.9.4 registry.cn-shanghai.aliyuncs.com/labring/cilium:v1.13.4 \
    --masters 192.168.0.178,192.168.0.179,192.168.0.180 \
    --nodes 192.168.0.181,192.168.0.182 -p '<password>'

192.168.0.179:22 net.ipv4.conf.default.accept_source_route = 0

192.168.0.179:22 sysctl: setting key "net.ipv4.conf.all.accept_source_route": Invalid argument

192.168.0.179:22 net.ipv4.conf.default.promote_secondaries = 1

192.168.0.179:22 sysctl: setting key "net.ipv4.conf.all.promote_secondaries": Invalid argument

192.168.0.179:22 net.ipv4.ping_group_range = 0 2147483647

192.168.0.179:22 net.core.default_qdisc = fq_codel

192.168.0.179:22 fs.protected_hardlinks = 1

192.168.0.179:22 fs.protected_symlinks = 1

192.168.0.179:22 fs.protected_regular = 1

192.168.0.179:22 fs.protected_fifos = 1

192.168.0.179:22 * Applying /usr/lib/sysctl.d/50-pid-max.conf ...

192.168.0.179:22 kernel.pid_max = 4194304

192.168.0.179:22 * Applying /usr/lib/sysctl.d/99-protect-links.conf ...

192.168.0.179:22 fs.protected_fifos = 1

192.168.0.179:22 fs.protected_hardlinks = 1

192.168.0.179:22 fs.protected_regular = 2

192.168.0.179:22 fs.protected_symlinks = 1

192.168.0.179:22 * Applying /etc/sysctl.d/99-sysctl.conf ...

192.168.0.179:22 vm.swappiness = 0

192.168.0.179:22 kernel.sysrq = 1

192.168.0.179:22 net.ipv4.neigh.default.gc_stale_time = 120

192.168.0.179:22 net.ipv4.conf.all.rp_filter = 0

192.168.0.179:22 net.ipv4.conf.default.rp_filter = 0

192.168.0.179:22 net.ipv4.conf.default.arp_announce = 2

192.168.0.179:22 net.ipv4.conf.lo.arp_announce = 2

192.168.0.179:22 net.ipv4.conf.all.arp_announce = 2

192.168.0.179:22 net.ipv4.tcp_max_tw_buckets = 5000

192.168.0.179:22 net.ipv4.tcp_syncookies = 1

192.168.0.179:22 net.ipv4.tcp_max_syn_backlog = 1024

192.168.0.179:22 net.ipv4.tcp_synack_retries = 2

192.168.0.179:22 net.ipv4.tcp_slow_start_after_idle = 0

192.168.0.179:22 fs.file-max = 1048576 # sealos

192.168.0.179:22 net.bridge.bridge-nf-call-ip6tables = 1 # sealos

192.168.0.179:22 net.bridge.bridge-nf-call-iptables = 1 # sealos

192.168.0.179:22 net.core.somaxconn = 65535 # sealos

192.168.0.179:22 net.ipv4.conf.all.rp_filter = 0 # sealos

192.168.0.179:22 net.ipv4.ip_forward = 1 # sealos

192.168.0.179:22 net.ipv4.ip_local_port_range = 1024 65535 # sealos

192.168.0.179:22 net.ipv4.tcp_keepalive_intvl = 30 # sealos

192.168.0.179:22 net.ipv4.tcp_keepalive_time = 600 # sealos

192.168.0.179:22 net.ipv4.vs.conn_reuse_mode = 0 # sealos

192.168.0.179:22 net.ipv4.vs.conntrack = 1 # sealos

192.168.0.179:22 net.ipv6.conf.all.forwarding = 1 # sealos

192.168.0.179:22 vm.max_map_count = 2147483642 # sealos

192.168.0.179:22 * Applying /etc/sysctl.conf ...

192.168.0.179:22 vm.swappiness = 0

192.168.0.179:22 kernel.sysrq = 1

192.168.0.179:22 net.ipv4.neigh.default.gc_stale_time = 120

192.168.0.179:22 net.ipv4.conf.all.rp_filter = 0

192.168.0.179:22 net.ipv4.conf.default.rp_filter = 0

192.168.0.179:22 net.ipv4.conf.default.arp_announce = 2

192.168.0.179:22 net.ipv4.conf.lo.arp_announce = 2

192.168.0.179:22 net.ipv4.conf.all.arp_announce = 2

192.168.0.179:22 net.ipv4.tcp_max_tw_buckets = 5000

192.168.0.179:22 net.ipv4.tcp_syncookies = 1

192.168.0.179:22 net.ipv4.tcp_max_syn_backlog = 1024

192.168.0.179:22 net.ipv4.tcp_synack_retries = 2

192.168.0.179:22 net.ipv4.tcp_slow_start_after_idle = 0

192.168.0.179:22 fs.file-max = 1048576 # sealos

192.168.0.179:22 net.bridge.bridge-nf-call-ip6tables = 1 # sealos

192.168.0.179:22 net.bridge.bridge-nf-call-iptables = 1 # sealos

192.168.0.179:22 net.core.somaxconn = 65535 # sealos

192.168.0.179:22 net.ipv4.conf.all.rp_filter = 0 # sealos

192.168.0.179:22 net.ipv4.ip_forward = 1 # sealos

192.168.0.179:22 net.ipv4.ip_local_port_range = 1024 65535 # sealos

192.168.0.179:22 net.ipv4.tcp_keepalive_intvl = 30 # sealos

192.168.0.179:22 net.ipv4.tcp_keepalive_time = 600 # sealos

192.168.0.179:22 net.ipv4.vs.conn_reuse_mode = 0 # sealos

192.168.0.179:22 net.ipv4.vs.conntrack = 1 # sealos

192.168.0.179:22 net.ipv6.conf.all.forwarding = 1 # sealos

192.168.0.179:22 vm.max_map_count = 2147483642 # sealos

192.168.0.179:22 INFO [2025-07-27 15:10:04] >> pull pause image sealos.hub:5000/pause:3.9

192.168.0.180:22 INFO [2025-07-27 15:10:04] >> pull pause image sealos.hub:5000/pause:3.9

INFO [2025-07-27 15:10:05] >> Health check containerd!

INFO [2025-07-27 15:10:05] >> containerd is running

INFO [2025-07-27 15:10:05] >> init containerd success

Created symlink /etc/systemd/system/multi-user.target.wants/image-cri-shim.service → /etc/systemd/system/image-cri-shim.service.

192.168.0.182:22 Image is up to date for sha256:e6f1816883972d4be47bd48879a08919b96afcd344132622e4d444987919323c

192.168.0.182:22 Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /etc/systemd/system/kubelet.service.

192.168.0.182:22 INFO [2025-07-27 15:10:07] >> init kubelet success

192.168.0.182:22 INFO [2025-07-27 15:10:07] >> init rootfs success

INFO [2025-07-27 15:10:08] >> Health check image-cri-shim!

INFO [2025-07-27 15:10:08] >> image-cri-shim is running

INFO [2025-07-27 15:10:08] >> init shim success

127.0.0.1 localhost

::1 ip6-localhost ip6-loopback

192.168.0.180:22 Image is up to date for sha256:e6f1816883972d4be47bd48879a08919b96afcd344132622e4d444987919323c

192.168.0.181:22 Image is up to date for sha256:e6f1816883972d4be47bd48879a08919b96afcd344132622e4d444987919323c

192.168.0.179:22 Image is up to date for sha256:e6f1816883972d4be47bd48879a08919b96afcd344132622e4d444987919323c

192.168.0.181:22 Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /etc/systemd/system/kubelet.service.

192.168.0.180:22 Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /etc/systemd/system/kubelet.service.

192.168.0.179:22 Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /etc/systemd/system/kubelet.service.

192.168.0.181:22 INFO [2025-07-27 15:10:09] >> init kubelet success

Firewall stopped and disabled on system startup

192.168.0.181:22 INFO [2025-07-27 15:10:09] >> init rootfs success

192.168.0.180:22 INFO [2025-07-27 15:10:09] >> init kubelet success

192.168.0.180:22 INFO [2025-07-27 15:10:09] >> init rootfs success

192.168.0.179:22 INFO [2025-07-27 15:10:09] >> init kubelet success

192.168.0.179:22 INFO [2025-07-27 15:10:09] >> init rootfs success

* Applying /etc/sysctl.d/10-console-messages.conf ...

kernel.printk = 4 4 1 7

* Applying /etc/sysctl.d/10-ipv6-privacy.conf ...

net.ipv6.conf.all.use_tempaddr = 2

net.ipv6.conf.default.use_tempaddr = 2

* Applying /etc/sysctl.d/10-kernel-hardening.conf ...

kernel.kptr_restrict = 1

* Applying /etc/sysctl.d/10-magic-sysrq.conf ...

kernel.sysrq = 176

* Applying /etc/sysctl.d/10-network-security.conf ...

net.ipv4.conf.default.rp_filter = 2

net.ipv4.conf.all.rp_filter = 2

* Applying /etc/sysctl.d/10-ptrace.conf ...

kernel.yama.ptrace_scope = 1

* Applying /etc/sysctl.d/10-zeropage.conf ...

vm.mmap_min_addr = 65536

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.core_uses_pid = 1

net.ipv4.conf.default.rp_filter = 2

net.ipv4.conf.default.accept_source_route = 0

sysctl: setting key "net.ipv4.conf.all.accept_source_route": Invalid argument

net.ipv4.conf.default.promote_secondaries = 1

sysctl: setting key "net.ipv4.conf.all.promote_secondaries": Invalid argument

net.ipv4.ping_group_range = 0 2147483647

net.core.default_qdisc = fq_codel

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

fs.protected_regular = 1

fs.protected_fifos = 1

* Applying /usr/lib/sysctl.d/50-pid-max.conf ...

kernel.pid_max = 4194304

* Applying /usr/lib/sysctl.d/99-protect-links.conf ...

fs.protected_fifos = 1

fs.protected_hardlinks = 1

fs.protected_regular = 2

fs.protected_symlinks = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

vm.swappiness = 0

kernel.sysrq = 1

net.ipv4.neigh.default.gc_stale_time = 120

net.ipv4.conf.all.rp_filter = 0

net.ipv4.conf.default.rp_filter = 0

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_announce = 2

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.tcp_synack_retries = 2

net.ipv4.tcp_slow_start_after_idle = 0

fs.file-max = 1048576 # sealos

net.bridge.bridge-nf-call-ip6tables = 1 # sealos

net.bridge.bridge-nf-call-iptables = 1 # sealos

net.core.somaxconn = 65535 # sealos

net.ipv4.conf.all.rp_filter = 0 # sealos

net.ipv4.ip_forward = 1 # sealos

net.ipv4.ip_local_port_range = 1024 65535 # sealos

net.ipv4.tcp_keepalive_intvl = 30 # sealos

net.ipv4.tcp_keepalive_time = 600 # sealos

net.ipv4.vs.conn_reuse_mode = 0 # sealos

net.ipv4.vs.conntrack = 1 # sealos

net.ipv6.conf.all.forwarding = 1 # sealos

vm.max_map_count = 2147483642 # sealos

* Applying /etc/sysctl.conf ...

vm.swappiness = 0

kernel.sysrq = 1

net.ipv4.neigh.default.gc_stale_time = 120

net.ipv4.conf.all.rp_filter = 0

net.ipv4.conf.default.rp_filter = 0

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_announce = 2

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.tcp_synack_retries = 2

net.ipv4.tcp_slow_start_after_idle = 0

fs.file-max = 1048576 # sealos

net.bridge.bridge-nf-call-ip6tables = 1 # sealos

net.bridge.bridge-nf-call-iptables = 1 # sealos

net.core.somaxconn = 65535 # sealos

net.ipv4.conf.all.rp_filter = 0 # sealos

net.ipv4.ip_forward = 1 # sealos

net.ipv4.ip_local_port_range = 1024 65535 # sealos

net.ipv4.tcp_keepalive_intvl = 30 # sealos

net.ipv4.tcp_keepalive_time = 600 # sealos

net.ipv4.vs.conn_reuse_mode = 0 # sealos

net.ipv4.vs.conntrack = 1 # sealos

net.ipv6.conf.all.forwarding = 1 # sealos

vm.max_map_count = 2147483642 # sealos

INFO [2025-07-27 15:10:10] >> pull pause image sealos.hub:5000/pause:3.9

Image is up to date for sha256:e6f1816883972d4be47bd48879a08919b96afcd344132622e4d444987919323c

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /etc/systemd/system/kubelet.service.

INFO [2025-07-27 15:10:12] >> init kubelet success

INFO [2025-07-27 15:10:12] >> init rootfs success

2025-07-27T15:10:12 info Executing pipeline Init in CreateProcessor.

2025-07-27T15:10:12 info Copying kubeadm config to master0

2025-07-27T15:10:12 info start to generate cert and kubeConfig...

2025-07-27T15:10:12 info start to generate and copy certs to masters...

2025-07-27T15:10:12 info apiserver altNames : {map[apiserver.cluster.local:apiserver.cluster.local kubernetes:kubernetes kubernetes.default:kubernetes.default kubernetes.default.svc:kubernetes.default.svc kubernetes.default.svc.cluster.local:kubernetes.default.svc.cluster.local localhost:localhost master01:master01] map[10.103.97.2:10.103.97.2 10.96.0.1:10.96.0.1 127.0.0.1:127.0.0.1 192.168.0.178:192.168.0.178 192.168.0.179:192.168.0.179 192.168.0.180:192.168.0.180]}

2025-07-27T15:10:12 info Etcd altnames : {map[localhost:localhost master01:master01] map[127.0.0.1:127.0.0.1 192.168.0.178:192.168.0.178 ::1:::1]}, commonName : master01

2025-07-27T15:10:14 info start to copy etc pki files to masters

2025-07-27T15:10:14 info start to create kubeconfig...

2025-07-27T15:10:15 info start to copy kubeconfig files to masters

2025-07-27T15:10:15 info start to copy static files to masters

2025-07-27T15:10:15 info start to init master0...

[config/images] Pulled registry.k8s.io/kube-apiserver:v1.29.9

[config/images] Pulled registry.k8s.io/kube-controller-manager:v1.29.9

[config/images] Pulled registry.k8s.io/kube-scheduler:v1.29.9

[config/images] Pulled registry.k8s.io/kube-proxy:v1.29.9

[config/images] Pulled registry.k8s.io/coredns/coredns:v1.11.1

[config/images] Pulled registry.k8s.io/pause:3.9

[config/images] Pulled registry.k8s.io/etcd:3.5.15-0

W0727 15:10:33.133384 3823 utils.go:69] The recommended value for "healthzBindAddress" in "KubeletConfiguration" is: 127.0.0.1; the provided value is: 0.0.0.0

[init] Using Kubernetes version: v1.29.9

[preflight] Running pre-flight checks

[WARNING FileExisting-socat]: socat not found in system path

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

W0727 15:10:33.352619 3823 checks.go:835] detected that the sandbox image "sealos.hub:5000/pause:3.9" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.k8s.io/pause:3.9" as the CRI sandbox image.

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Using existing ca certificate authority

[certs] Using existing apiserver certificate and key on disk

[certs] Using existing apiserver-kubelet-client certificate and key on disk

[certs] Using existing front-proxy-ca certificate authority

[certs] Using existing front-proxy-client certificate and key on disk

[certs] Using existing etcd/ca certificate authority

[certs] Using existing etcd/server certificate and key on disk

[certs] Using existing etcd/peer certificate and key on disk

[certs] Using existing etcd/healthcheck-client certificate and key on disk

[certs] Using existing apiserver-etcd-client certificate and key on disk

[certs] Using the existing "sa" key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/admin.conf"

[kubeconfig] Writing "super-admin.conf" kubeconfig file

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/kubelet.conf"

W0727 15:10:34.405188 3823 kubeconfig.go:273] a kubeconfig file "/etc/kubernetes/controller-manager.conf" exists already but has an unexpected API Server URL: expected: https://192.168.0.178:6443, got: https://apiserver.cluster.local:6443

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/controller-manager.conf"

W0727 15:10:34.553432 3823 kubeconfig.go:273] a kubeconfig file "/etc/kubernetes/scheduler.conf" exists already but has an unexpected API Server URL: expected: https://192.168.0.178:6443, got: https://apiserver.cluster.local:6443

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/scheduler.conf"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 17.007773 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities
and service account keys on each node and then running the following as root:

  kubeadm join apiserver.cluster.local:6443 --token <value withheld> \
    --discovery-token-ca-cert-hash sha256:fedf9ac9d94e7644dd72bdcb87361acdc6b6ff14bb706525f04ee0ab8bd67ca1 \
    --control-plane --certificate-key <value withheld>

Then you can join any number of worker nodes by running the following on each as root:

  kubeadm join apiserver.cluster.local:6443 --token <value withheld> \
    --discovery-token-ca-cert-hash sha256:fedf9ac9d94e7644dd72bdcb87361acdc6b6ff14bb706525f04ee0ab8bd67ca1

2025-07-27T15:10:55 info Executing pipeline Join in CreateProcessor.

2025-07-27T15:10:55 info [192.168.0.179:22 192.168.0.180:22] will be added as master

2025-07-27T15:10:55 info start to send manifests to masters...

2025-07-27T15:10:55 info start to copy static files to masters

2025-07-27T15:10:55 info start to copy kubeconfig files to masters

2025-07-27T15:10:55 info start to copy etc pki files to masters

2025-07-27T15:10:56 info start to get kubernetes token...

2025-07-27T15:10:57 info start to copy kubeadm join config to master: 192.168.0.180:22

2025-07-27T15:10:57 info start to copy kubeadm join config to master: 192.168.0.179:22

2025-07-27T15:10:58 info fetch certSANs from kubeadm configmap

2025-07-27T15:10:58 info start to join 192.168.0.179:22 as master

192.168.0.179:22 [config/images] Pulled registry.k8s.io/kube-apiserver:v1.29.9

192.168.0.179:22 [config/images] Pulled registry.k8s.io/kube-controller-manager:v1.29.9

192.168.0.179:22 [config/images] Pulled registry.k8s.io/kube-scheduler:v1.29.9

192.168.0.179:22 [config/images] Pulled registry.k8s.io/kube-proxy:v1.29.9

192.168.0.179:22 [config/images] Pulled registry.k8s.io/coredns/coredns:v1.11.1

192.168.0.179:22 [config/images] Pulled registry.k8s.io/pause:3.9

192.168.0.179:22 [config/images] Pulled registry.k8s.io/etcd:3.5.15-0

192.168.0.179:22 2025-07-27T15:11:19 info apiserver altNames : {map[apiserver.cluster.local:apiserver.cluster.local kubernetes:kubernetes kubernetes.default:kubernetes.default kubernetes.default.svc:kubernetes.default.svc kubernetes.default.svc.cluster.local:kubernetes.default.svc.cluster.local localhost:localhost master02:master02] map[10.103.97.2:10.103.97.2 10.96.0.1:10.96.0.1 127.0.0.1:127.0.0.1 192.168.0.178:192.168.0.178 192.168.0.179:192.168.0.179 192.168.0.180:192.168.0.180]}

192.168.0.179:22 2025-07-27T15:11:19 info Etcd altnames : {map[localhost:localhost master02:master02] map[127.0.0.1:127.0.0.1 192.168.0.179:192.168.0.179 ::1:::1]}, commonName : master02

192.168.0.179:22 2025-07-27T15:11:19 info sa.key sa.pub already exist

192.168.0.179:22 [preflight] Running pre-flight checks

192.168.0.179:22 [WARNING FileExisting-socat]: socat not found in system path

192.168.0.179:22 [preflight] Reading configuration from the cluster...

192.168.0.179:22 [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

192.168.0.179:22 W0727 15:11:20.791713 3130 utils.go:69] The recommended value for "healthzBindAddress" in "KubeletConfiguration" is: 127.0.0.1; the provided value is: 0.0.0.0

192.168.0.179:22 [preflight] Running pre-flight checks before initializing the new control plane instance

192.168.0.179:22 [preflight] Pulling images required for setting up a Kubernetes cluster

192.168.0.179:22 [preflight] This might take a minute or two, depending on the speed of your internet connection

192.168.0.179:22 [preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

192.168.0.179:22 W0727 15:11:20.837239 3130 checks.go:835] detected that the sandbox image "sealos.hub:5000/pause:3.9" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.k8s.io/pause:3.9" as the CRI sandbox image.

192.168.0.179:22 [download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

192.168.0.179:22 [download-certs] Saving the certificates to the folder: "/etc/kubernetes/pki"

192.168.0.179:22 [certs] Using certificateDir folder "/etc/kubernetes/pki"

192.168.0.179:22 [certs] Using the existing "etcd/peer" certificate and key

192.168.0.179:22 [certs] Using the existing "etcd/healthcheck-client" certificate and key

192.168.0.179:22 [certs] Using the existing "etcd/server" certificate and key

192.168.0.179:22 [certs] Using the existing "apiserver-etcd-client" certificate and key

192.168.0.179:22 [certs] Using the existing "front-proxy-client" certificate and key

192.168.0.179:22 [certs] Using the existing "apiserver-kubelet-client" certificate and key

192.168.0.179:22 [certs] Using the existing "apiserver" certificate and key

192.168.0.179:22 [certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

192.168.0.179:22 [certs] Using the existing "sa" key

192.168.0.179:22 [kubeconfig] Generating kubeconfig files

192.168.0.179:22 [kubeconfig] Using kubeconfig folder "/etc/kubernetes"

192.168.0.179:22 [kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/admin.conf"

192.168.0.179:22 W0727 15:11:22.627726 3130 kubeconfig.go:273] a kubeconfig file "/etc/kubernetes/controller-manager.conf" exists already but has an unexpected API Server URL: expected: https://192.168.0.179:6443, got: https://apiserver.cluster.local:6443

192.168.0.179:22 [kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/controller-manager.conf"

192.168.0.179:22 W0727 15:11:22.815362 3130 kubeconfig.go:273] a kubeconfig file "/etc/kubernetes/scheduler.conf" exists already but has an unexpected API Server URL: expected: https://192.168.0.179:6443, got: https://apiserver.cluster.local:6443

192.168.0.179:22 [kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/scheduler.conf"

192.168.0.179:22 [control-plane] Using manifest folder "/etc/kubernetes/manifests"

192.168.0.179:22 [control-plane] Creating static Pod manifest for "kube-apiserver"

192.168.0.179:22 [control-plane] Creating static Pod manifest for "kube-controller-manager"

192.168.0.179:22 [control-plane] Creating static Pod manifest for "kube-scheduler"

192.168.0.179:22 [check-etcd] Checking that the etcd cluster is healthy

192.168.0.179:22 [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

192.168.0.179:22 [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

192.168.0.179:22 [kubelet-start] Starting the kubelet

192.168.0.179:22 [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

192.168.0.179:22 [etcd] Announced new etcd member joining to the existing etcd cluster

192.168.0.179:22 [etcd] Creating static Pod manifest for "etcd"

192.168.0.179:22 {"level":"warn","ts":"2025-07-27T15:11:25.161572+0800","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc000700540/192.168.0.178:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}

192.168.0.179:22 {"level":"warn","ts":"2025-07-27T15:11:25.268454+0800","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc000700540/192.168.0.178:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}

192.168.0.179:22 {"level":"warn","ts":"2025-07-27T15:11:25.422631+0800","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc000700540/192.168.0.178:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}

192.168.0.179:22 {"level":"warn","ts":"2025-07-27T15:11:25.665056+0800","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc000700540/192.168.0.178:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}

192.168.0.179:22 {"level":"warn","ts":"2025-07-27T15:11:26.034455+0800","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc000700540/192.168.0.178:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}

192.168.0.179:22 {"level":"warn","ts":"2025-07-27T15:11:26.560367+0800","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc000700540/192.168.0.178:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}

192.168.0.179:22 [etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

192.168.0.179:22 The 'update-status' phase is deprecated and will be removed in a future release. Currently it performs no operation

192.168.0.179:22 [mark-control-plane] Marking the node master02 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

192.168.0.179:22 [mark-control-plane] Marking the node master02 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

192.168.0.179:22

192.168.0.179:22 This node has joined the cluster and a new control plane instance was created:

192.168.0.179:22

192.168.0.179:22 * Certificate signing request was sent to apiserver and approval was received.

192.168.0.179:22 * The Kubelet was informed of the new secure connection details.

192.168.0.179:22 * Control plane label and taint were applied to the new node.

192.168.0.179:22 * The Kubernetes control plane instances scaled up.

192.168.0.179:22 * A new etcd member was added to the local/stacked etcd cluster.

192.168.0.179:22

192.168.0.179:22 To start administering your cluster from this node, you need to run the following as a regular user:

192.168.0.179:22

192.168.0.179:22 mkdir -p $HOME/.kube

192.168.0.179:22 sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

192.168.0.179:22 sudo chown $(id -u):$(id -g) $HOME/.kube/config

192.168.0.179:22

192.168.0.179:22 Run 'kubectl get nodes' to see this node join the cluster.

192.168.0.179:22

192.168.0.179:22 2025-07-27T15:11:28 info domain apiserver.cluster.local delete success

192.168.0.179:22 2025-07-27T15:11:28 info domain apiserver.cluster.local:192.168.0.179 append success

2025-07-27T15:11:28 info succeeded in joining 192.168.0.179:22 as master
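The join output above suggests running 'kubectl get nodes' to see the new node. As a rough sketch, not part of the original notes, the helper below counts how many nodes report a `Ready` status; `count_ready` is a hypothetical name, and it assumes the default `kubectl get nodes --no-headers` column layout (NAME, STATUS, ROLES, AGE, VERSION).

```shell
#!/bin/sh
# count_ready is a hypothetical helper (not from the original notes).
# It reads `kubectl get nodes --no-headers` output on stdin and prints
# the number of rows whose STATUS column is exactly "Ready".
# Rows such as "NotReady" or "Ready,SchedulingDisabled" are not counted.
count_ready() {
    awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage on a master node with a working kubeconfig:
#   kubectl get nodes --no-headers | count_ready
```

Once all joins have finished, the count should match the five nodes passed to `sealos run`. Nodes can stay `NotReady` for a short time while the CNI pods start.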

2025-07-27T15:11:28 info start to join 192.168.0.180:22 as master

192.168.0.180:22 [config/images] Pulled registry.k8s.io/kube-apiserver:v1.29.9

192.168.0.180:22 [config/images] Pulled registry.k8s.io/kube-controller-manager:v1.29.9

192.168.0.180:22 [config/images] Pulled registry.k8s.io/kube-scheduler:v1.29.9

192.168.0.180:22 [config/images] Pulled registry.k8s.io/kube-proxy:v1.29.9

192.168.0.180:22 [config/images] Pulled registry.k8s.io/coredns/coredns:v1.11.1

192.168.0.180:22 [config/images] Pulled registry.k8s.io/pause:3.9

192.168.0.180:22 [config/images] Pulled registry.k8s.io/etcd:3.5.15-0

192.168.0.180:22 2025-07-27T15:11:46 info apiserver altNames : {map[apiserver.cluster.local:apiserver.cluster.local kubernetes:kubernetes kubernetes.default:kubernetes.default kubernetes.default.svc:kubernetes.default.svc kubernetes.default.svc.cluster.local:kubernetes.default.svc.cluster.local localhost:localhost master00002:master00002] map[10.103.97.2:10.103.97.2 10.96.0.1:10.96.0.1 127.0.0.1:127.0.0.1 192.168.0.178:192.168.0.178 192.168.0.179:192.168.0.179 192.168.0.180:192.168.0.180]}

192.168.0.180:22 2025-07-27T15:11:46 info Etcd altnames : {map[localhost:localhost master00002:master00002] map[127.0.0.1:127.0.0.1 192.168.0.180:192.168.0.180 ::1:::1]}, commonName : master00002

192.168.0.180:22 2025-07-27T15:11:46 info sa.key sa.pub already exist

192.168.0.180:22 [preflight] Running pre-flight checks

192.168.0.180:22 [WARNING FileExisting-socat]: socat not found in system path

192.168.0.180:22 [preflight] Reading configuration from the cluster...

192.168.0.180:22 [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

192.168.0.180:22 W0727 15:11:48.906919 3263 utils.go:69] The recommended value for "healthzBindAddress" in "KubeletConfiguration" is: 127.0.0.1; the provided value is: 0.0.0.0

192.168.0.180:22 [preflight] Running pre-flight checks before initializing the new control plane instance

192.168.0.180:22 [preflight] Pulling images required for setting up a Kubernetes cluster

192.168.0.180:22 [preflight] This might take a minute or two, depending on the speed of your internet connection

192.168.0.180:22 [preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

192.168.0.180:22 W0727 15:11:48.951445 3263 checks.go:835] detected that the sandbox image "sealos.hub:5000/pause:3.9" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.k8s.io/pause:3.9" as the CRI sandbox image.

192.168.0.180:22 [download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

192.168.0.180:22 [download-certs] Saving the certificates to the folder: "/etc/kubernetes/pki"

192.168.0.180:22 [certs] Using certificateDir folder "/etc/kubernetes/pki"

192.168.0.180:22 [certs] Using the existing "etcd/healthcheck-client" certificate and key

192.168.0.180:22 [certs] Using the existing "apiserver-etcd-client" certificate and key

192.168.0.180:22 [certs] Using the existing "etcd/server" certificate and key

192.168.0.180:22 [certs] Using the existing "etcd/peer" certificate and key

192.168.0.180:22 [certs] Using the existing "apiserver" certificate and key

192.168.0.180:22 [certs] Using the existing "apiserver-kubelet-client" certificate and key

192.168.0.180:22 [certs] Using the existing "front-proxy-client" certificate and key

192.168.0.180:22 [certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

192.168.0.180:22 [certs] Using the existing "sa" key

192.168.0.180:22 [kubeconfig] Generating kubeconfig files

192.168.0.180:22 [kubeconfig] Using kubeconfig folder "/etc/kubernetes"

192.168.0.180:22 [kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/admin.conf"

192.168.0.180:22 W0727 15:11:50.873597 3263 kubeconfig.go:273] a kubeconfig file "/etc/kubernetes/controller-manager.conf" exists already but has an unexpected API Server URL: expected: https://192.168.0.180:6443, got: https://apiserver.cluster.local:6443

192.168.0.180:22 [kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/controller-manager.conf"

192.168.0.180:22 W0727 15:11:51.181091 3263 kubeconfig.go:273] a kubeconfig file "/etc/kubernetes/scheduler.conf" exists already but has an unexpected API Server URL: expected: https://192.168.0.180:6443, got: https://apiserver.cluster.local:6443

192.168.0.180:22 [kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/scheduler.conf"

192.168.0.180:22 [control-plane] Using manifest folder "/etc/kubernetes/manifests"

192.168.0.180:22 [control-plane] Creating static Pod manifest for "kube-apiserver"

192.168.0.180:22 [control-plane] Creating static Pod manifest for "kube-controller-manager"

192.168.0.180:22 [control-plane] Creating static Pod manifest for "kube-scheduler"

192.168.0.180:22 [check-etcd] Checking that the etcd cluster is healthy

192.168.0.180:22 [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

192.168.0.180:22 [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

192.168.0.180:22 [kubelet-start] Starting the kubelet

192.168.0.180:22 [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

192.168.0.180:22 [etcd] Announced new etcd member joining to the existing etcd cluster

192.168.0.180:22 [etcd] Creating static Pod manifest for "etcd"

192.168.0.180:22 {"level":"warn","ts":"2025-07-27T15:11:53.474062+0800","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc00061a000/192.168.0.179:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}

192.168.0.180:22 {"level":"warn","ts":"2025-07-27T15:11:53.578202+0800","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc00061a000/192.168.0.179:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}

192.168.0.180:22 {"level":"warn","ts":"2025-07-27T15:11:53.74711+0800","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc00061a000/192.168.0.179:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}

192.168.0.180:22 {"level":"warn","ts":"2025-07-27T15:11:53.983924+0800","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc00061a000/192.168.0.179:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}

192.168.0.180:22 {"level":"warn","ts":"2025-07-27T15:11:54.390568+0800","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc00061a000/192.168.0.179:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}

192.168.0.180:22 {"level":"warn","ts":"2025-07-27T15:11:54.918149+0800","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0xc00061a000/192.168.0.179:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}

192.168.0.180:22 [etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

192.168.0.180:22 The 'update-status' phase is deprecated and will be removed in a future release. Currently it performs no operation

192.168.0.180:22 [mark-control-plane] Marking the node master00002 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

192.168.0.180:22 [mark-control-plane] Marking the node master00002 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

192.168.0.180:22

192.168.0.180:22 This node has joined the cluster and a new control plane instance was created:

192.168.0.180:22

192.168.0.180:22 * Certificate signing request was sent to apiserver and approval was received.

192.168.0.180:22 * The Kubelet was informed of the new secure connection details.

192.168.0.180:22 * Control plane label and taint were applied to the new node.

192.168.0.180:22 * The Kubernetes control plane instances scaled up.

192.168.0.180:22 * A new etcd member was added to the local/stacked etcd cluster.

192.168.0.180:22

192.168.0.180:22 To start administering your cluster from this node, you need to run the following as a regular user:

192.168.0.180:22

192.168.0.180:22 mkdir -p $HOME/.kube

192.168.0.180:22 sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

192.168.0.180:22 sudo chown $(id -u):$(id -g) $HOME/.kube/config

192.168.0.180:22

192.168.0.180:22 Run 'kubectl get nodes' to see this node join the cluster.

192.168.0.180:22

192.168.0.180:22 2025-07-27T15:11:57 info domain apiserver.cluster.local delete success

192.168.0.180:22 2025-07-27T15:11:57 info domain apiserver.cluster.local:192.168.0.180 append success

2025-07-27T15:11:58 info succeeded in joining 192.168.0.180:22 as master

2025-07-27T15:11:58 info [192.168.0.181:22 192.168.0.182:22] will be added as worker

2025-07-27T15:11:58 info fetch certSANs from kubeadm configmap

2025-07-27T15:11:58 info start to join 192.168.0.182:22 as worker

2025-07-27T15:11:58 info start to copy kubeadm join config to node: 192.168.0.182:22

2025-07-27T15:11:58 info start to join 192.168.0.181:22 as worker

2025-07-27T15:11:59 info run ipvs once module: 192.168.0.182:22

2025-07-27T15:11:59 info start to copy kubeadm join config to node: 192.168.0.181:22

192.168.0.182:22 2025-07-27T15:11:59 info Trying to add route

192.168.0.182:22 2025-07-27T15:11:59 info success to set route.(host:10.103.97.2, gateway:192.168.0.182)

2025-07-27T15:11:59 info start join node: 192.168.0.182:22

192.168.0.182:22 [preflight] Running pre-flight checks

2025-07-27T15:11:59 info run ipvs once module: 192.168.0.181:22

192.168.0.182:22 [WARNING FileExisting-socat]: socat not found in system path

192.168.0.182:22 [preflight] Reading configuration from the cluster...

192.168.0.182:22 [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

192.168.0.182:22 W0727 15:11:59.887268 3022 utils.go:69] The recommended value for "healthzBindAddress" in "KubeletConfiguration" is: 127.0.0.1; the provided value is: 0.0.0.0

192.168.0.182:22 [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

192.168.0.182:22 [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

192.168.0.182:22 [kubelet-start] Starting the kubelet

192.168.0.181:22 2025-07-27T15:11:59 info Trying to add route

192.168.0.181:22 2025-07-27T15:11:59 info success to set route.(host:10.103.97.2, gateway:192.168.0.181)

2025-07-27T15:11:59 info start join node: 192.168.0.181:22

192.168.0.181:22 [preflight] Running pre-flight checks

192.168.0.181:22 [WARNING FileExisting-socat]: socat not found in system path

192.168.0.181:22 [preflight] Reading configuration from the cluster...

192.168.0.181:22 [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

192.168.0.182:22 [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

192.168.0.181:22 W0727 15:12:00.276054 3545 utils.go:69] The recommended value for "healthzBindAddress" in "KubeletConfiguration" is: 127.0.0.1; the provided value is: 0.0.0.0

192.168.0.181:22 [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

192.168.0.181:22 [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

192.168.0.181:22 [kubelet-start] Starting the kubelet

192.168.0.181:22 [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

192.168.0.182:22

192.168.0.182:22 This node has joined the cluster:

192.168.0.182:22 * Certificate signing request was sent to apiserver and a response was received.

192.168.0.182:22 * The Kubelet was informed of the new secure connection details.

192.168.0.182:22

192.168.0.182:22 Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

192.168.0.182:22

2025-07-27T15:12:02 info succeeded in joining 192.168.0.182:22 as worker

192.168.0.181:22

192.168.0.181:22 This node has joined the cluster:

192.168.0.181:22 * Certificate signing request was sent to apiserver and a response was received.

192.168.0.181:22 * The Kubelet was informed of the new secure connection details.

192.168.0.181:22

192.168.0.181:22 Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

192.168.0.181:22

2025-07-27T15:12:02 info succeeded in joining 192.168.0.181:22 as worker

2025-07-27T15:12:02 info start to sync lvscare static pod to node: 192.168.0.181:22 master: [192.168.0.178:6443 192.168.0.179:6443 192.168.0.180:6443]

2025-07-27T15:12:02 info start to sync lvscare static pod to node: 192.168.0.182:22 master: [192.168.0.178:6443 192.168.0.179:6443 192.168.0.180:6443]

192.168.0.181:22 2025-07-27T15:12:02 info generator lvscare static pod is success

192.168.0.182:22 2025-07-27T15:12:02 info generator lvscare static pod is success

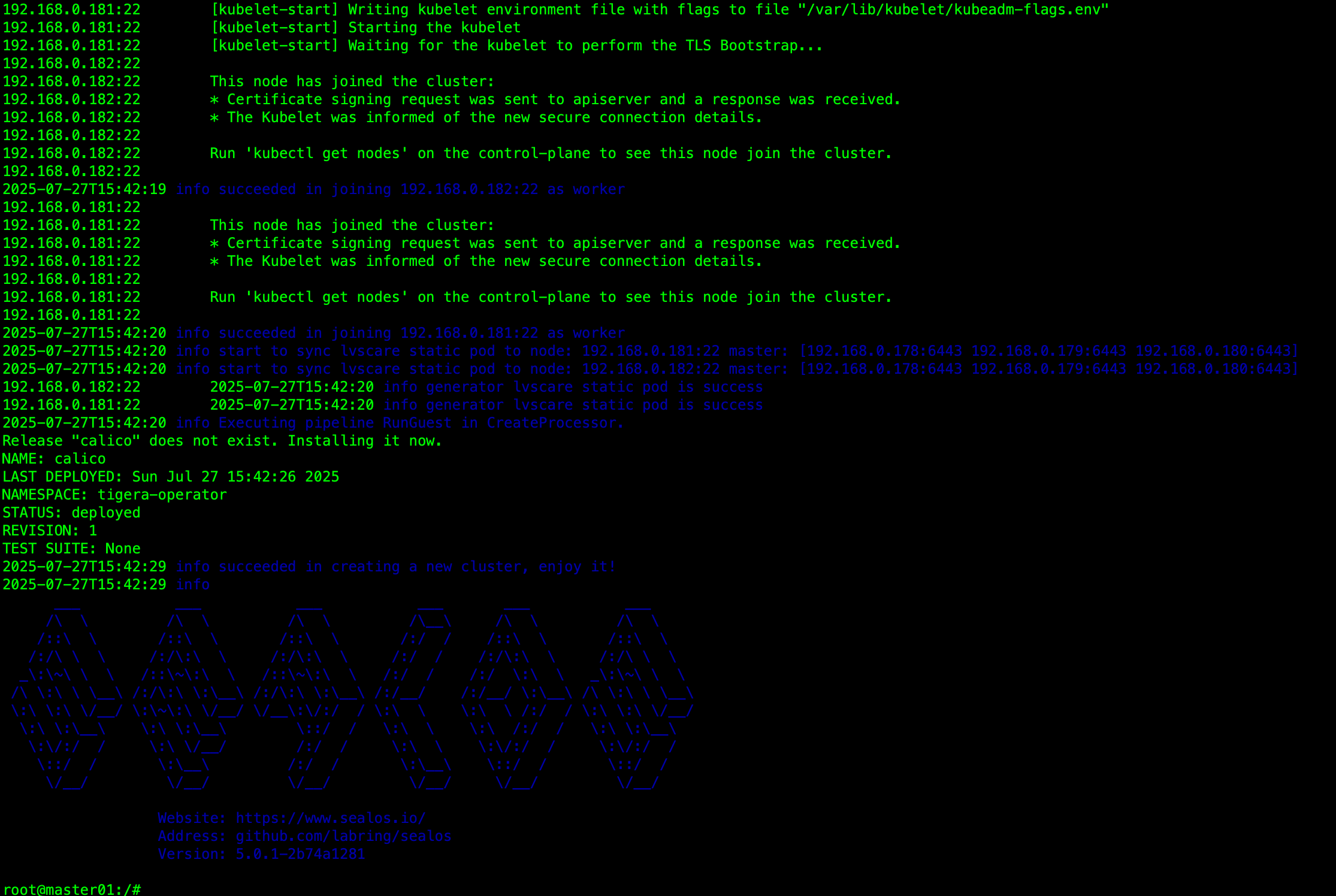

2025-07-27T15:12:02 info Executing pipeline RunGuest in CreateProcessor.

ℹ️ Using Cilium version 1.13.4

🔮 Auto-detected cluster name: kubernetes

🔮 Auto-detected datapath mode: tunnel

🔮 Auto-detected kube-proxy has been installed

2025-07-27T15:12:16 info succeeded in creating a new cluster, enjoy it!

Website: https://www.sealos.io/

Address: github.com/labring/sealos

Version: 5.0.1-2b74a1281

root@master01:/# kubectl get node

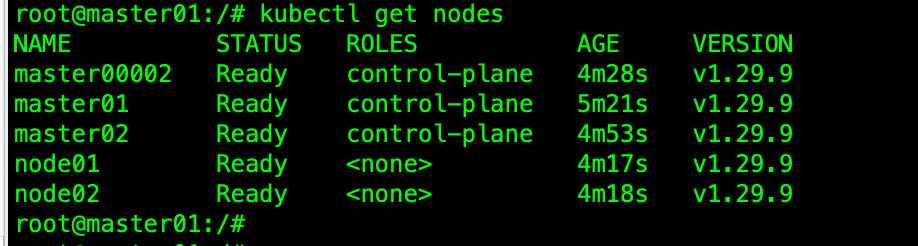

NAME STATUS ROLES AGE VERSION

master00002 Ready control-plane 6m12s v1.29.9

master01 Ready control-plane 7m17s v1.29.9

master02 Ready control-plane 6m41s v1.29.9

node01 Ready <none> 6m3s v1.29.9

node02 Ready <none> 6m4s v1.29.9
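The node listing above can also be checked mechanically instead of by eye. The following is a minimal sketch and not part of the original walkthrough: `count_not_ready` is a hypothetical helper name, and it assumes the default `kubectl get nodes` column layout, in which STATUS is the second column.

```shell
# Hypothetical helper: count nodes whose STATUS column is not exactly "Ready".
# Reads `kubectl get nodes --no-headers` style rows on stdin.
count_not_ready() {
  awk 'NF >= 2 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Example with two rows shaped like the output above
# (the NotReady row is made up for illustration); this prints 1.
printf '%s\n' \
  'master01 Ready control-plane 7m17s v1.29.9' \
  'node01 NotReady <none> 6m3s v1.29.9' | count_not_ready
```

On a live cluster the same helper would be fed with `kubectl get nodes --no-headers | count_not_ready`. The comparison is deliberately strict, so a cordoned node (`Ready,SchedulingDisabled`) is counted as not ready.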

root@master01:/# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 8m28s

root@master01:/# kubectl get ns

NAME STATUS AGE

default Active 8m49s

kube-node-lease Active 8m50s

kube-public Active 8m50s

kube-system Active 8m50s

root@master01:/# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

cilium-6b2k7 1/1 Running 0 7m45s

cilium-hhssh 1/1 Running 0 7m45s

cilium-ln6jv 1/1 Running 0 7m45s

cilium-operator-6946ccbcc5-55jpj 1/1 Running 0 7m46s

cilium-sm6l6 1/1 Running 0 7m46s

cilium-tpsgd 1/1 Running 0 7m45s

coredns-76f75df574-2c24r 1/1 Running 0 8m54s

coredns-76f75df574-jljdx 1/1 Running 0 8m54s

etcd-master00002 1/1 Running 0 8m8s

etcd-master01 1/1 Running 0 9m9s

etcd-master02 1/1 Running 0 8m36s

kube-apiserver-master00002 1/1 Running 0 8m6s

kube-apiserver-master01 1/1 Running 0 9m8s

kube-apiserver-master02 1/1 Running 0 8m35s

kube-controller-manager-master00002 1/1 Running 0 8m6s

kube-controller-manager-master01 1/1 Running 0 9m11s

kube-controller-manager-master02 1/1 Running 0 8m35s

kube-proxy-7gxt9 1/1 Running 0 8m1s

kube-proxy-94zzb 1/1 Running 0 8m

kube-proxy-ghw6z 1/1 Running 0 8m38s

kube-proxy-kvqq7 1/1 Running 0 8m9s

kube-proxy-n8824 1/1 Running 0 8m55s

kube-scheduler-master00002 1/1 Running 0 8m6s

kube-scheduler-master01 1/1 Running 0 9m9s

kube-scheduler-master02 1/1 Running 0 8m35s

kube-sealos-lvscare-node01 1/1 Running 0 7m40s

kube-sealos-lvscare-node02 1/1 Running 0 7m41s
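With this many pods in `kube-system`, a small filter makes it easier to spot anything unhealthy. This is a sketch under stated assumptions: `pods_not_ready` is a hypothetical helper name, and it assumes the default column order (NAME, READY, STATUS) shown in the listing above.

```shell
# Hypothetical helper: print the names of pods that are not "Running" or whose
# READY column (for example 1/2) shows fewer ready containers than expected.
# Reads `kubectl get pods --no-headers` style rows on stdin.
pods_not_ready() {
  awk 'NF >= 3 {
    split($2, ready, "/")
    if ($3 != "Running" || ready[1] != ready[2]) print $1
  }'
}

# Example: the second row is made up for illustration; this prints
# "coredns-example".
printf '%s\n' \
  'cilium-6b2k7 1/1 Running 0 7m45s' \
  'coredns-example 0/1 Pending 0 1m' | pods_not_ready
```

Against the cluster this would be `kubectl get pods -n kube-system --no-headers | pods_not_ready`; empty output means every pod is Running and fully ready. Pods of finished Jobs (status `Completed`) would also be listed by this simple check.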

root@master01:/# sealos run registry.cn-shanghai.aliyuncs.com/labring/helm:v3.9.4 # install helm

sealos run registry.cn-shanghai.aliyuncs.com/labring/openebs:v3.9.0 # install openebs

sealos run registry.cn-shanghai.aliyuncs.com/labring/minio-operator:latest registry.cn-shanghai.aliyuncs.com/labring/ingress-nginx:4.1.0

root@master01:/# sealos reset

2025-07-27T15:38:00 info are you sure to delete these nodes?

Do you want to continue on 'master01' cluster? Input 'master01' to continue: master01

2025-07-27T15:38:04 info start to delete Cluster: master [192.168.0.178 192.168.0.179 192.168.0.180], node [192.168.0.181 192.168.0.182]

2025-07-27T15:38:04 info start to reset nodes: [192.168.0.181:22 192.168.0.182:22]

2025-07-27T15:38:04 info start to reset node: 192.168.0.182:22

2025-07-27T15:38:04 info start to reset node: 192.168.0.181:22

192.168.0.181:22 /usr/bin/kubeadm

192.168.0.181:22 [preflight] Running pre-flight checks

192.168.0.181:22 W0727 15:38:05.232816 11808 removeetcdmember.go:106] [reset] No kubeadm config, using etcd pod spec to get data directory

192.168.0.181:22 [reset] Deleted contents of the etcd data directory: /var/lib/etcd

192.168.0.181:22 [reset] Stopping the kubelet service

192.168.0.182:22 /usr/bin/kubeadm

192.168.0.182:22 [preflight] Running pre-flight checks

192.168.0.182:22 W0727 15:38:05.279981 11728 removeetcdmember.go:106] [reset] No kubeadm config, using etcd pod spec to get data directory

192.168.0.182:22 [reset] Deleted contents of the etcd data directory: /var/lib/etcd

192.168.0.182:22 [reset] Stopping the kubelet service

192.168.0.181:22 [reset] Unmounting mounted directories in "/var/lib/kubelet"

192.168.0.182:22 [reset] Unmounting mounted directories in "/var/lib/kubelet"

192.168.0.181:22 [reset] Deleting contents of directories: [/etc/kubernetes/manifests /var/lib/kubelet /etc/kubernetes/pki]

192.168.0.181:22 [reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/super-admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

192.168.0.181:22

192.168.0.181:22 The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

192.168.0.181:22

192.168.0.181:22 The reset process does not reset or clean up iptables rules or IPVS tables.

192.168.0.181:22 If you wish to reset iptables, you must do so manually by using the "iptables" command.

192.168.0.181:22

192.168.0.181:22 If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

192.168.0.181:22 to reset your system's IPVS tables.

192.168.0.181:22

192.168.0.181:22 The reset process does not clean your kubeconfig files and you must remove them manually.

192.168.0.181:22 Please, check the contents of the $HOME/.kube/config file.

192.168.0.182:22 [reset] Deleting contents of directories: [/etc/kubernetes/manifests /var/lib/kubelet /etc/kubernetes/pki]

192.168.0.182:22 [reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/super-admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

192.168.0.182:22

192.168.0.182:22 The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

192.168.0.182:22

192.168.0.182:22 The reset process does not reset or clean up iptables rules or IPVS tables.

192.168.0.182:22 If you wish to reset iptables, you must do so manually by using the "iptables" command.

192.168.0.182:22

192.168.0.182:22 If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

192.168.0.182:22 to reset your system's IPVS tables.

192.168.0.182:22

192.168.0.182:22 The reset process does not clean your kubeconfig files and you must remove them manually.

192.168.0.182:22 Please, check the contents of the $HOME/.kube/config file.

192.168.0.181:22 2025-07-27T15:38:06 info delete IPVS service 10.103.97.2:6443

192.168.0.181:22 2025-07-27T15:38:06 info Trying to delete route

192.168.0.181:22 2025-07-27T15:38:06 info success to del route.(host:10.103.97.2, gateway:192.168.0.181)

192.168.0.182:22 2025-07-27T15:38:06 info delete IPVS service 10.103.97.2:6443

192.168.0.182:22 2025-07-27T15:38:06 info Trying to delete route

192.168.0.182:22 2025-07-27T15:38:06 info success to del route.(host:10.103.97.2, gateway:192.168.0.182)

2025-07-27T15:38:06 info start to reset masters: [192.168.0.178:22 192.168.0.179:22 192.168.0.180:22]

2025-07-27T15:38:06 info start to reset node: 192.168.0.178:22

/usr/bin/kubeadm

[reset] Reading configuration from the cluster...

[reset] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W0727 15:38:06.943874 14793 utils.go:69] The recommended value for "healthzBindAddress" in "KubeletConfiguration" is: 127.0.0.1; the provided value is: 0.0.0.0

[preflight] Running pre-flight checks

[reset] Deleted contents of the etcd data directory: /var/lib/etcd

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of directories: [/etc/kubernetes/manifests /var/lib/kubelet /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/super-admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file.

2025-07-27T15:38:09 info start to reset node: 192.168.0.179:22

192.168.0.179:22 /usr/bin/kubeadm

192.168.0.179:22 [reset] Reading configuration from the cluster...

192.168.0.179:22 [reset] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

192.168.0.179:22 W0727 15:38:10.525306 12210 utils.go:69] The recommended value for "healthzBindAddress" in "KubeletConfiguration" is: 127.0.0.1; the provided value is: 0.0.0.0

192.168.0.179:22 [preflight] Running pre-flight checks

192.168.0.179:22 [reset] Deleted contents of the etcd data directory: /var/lib/etcd

192.168.0.179:22 [reset] Stopping the kubelet service

192.168.0.179:22 [reset] Unmounting mounted directories in "/var/lib/kubelet"

192.168.0.179:22 [reset] Deleting contents of directories: [/etc/kubernetes/manifests /var/lib/kubelet /etc/kubernetes/pki]

192.168.0.179:22 [reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/super-admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

192.168.0.179:22

192.168.0.179:22 The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

192.168.0.179:22

192.168.0.179:22 The reset process does not reset or clean up iptables rules or IPVS tables.

192.168.0.179:22 If you wish to reset iptables, you must do so manually by using the "iptables" command.

192.168.0.179:22

192.168.0.179:22 If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

192.168.0.179:22 to reset your system's IPVS tables.

192.168.0.179:22

192.168.0.179:22 The reset process does not clean your kubeconfig files and you must remove them manually.

192.168.0.179:22 Please, check the contents of the $HOME/.kube/config file.

2025-07-27T15:38:12 info start to reset node: 192.168.0.180:22

192.168.0.180:22 /usr/bin/kubeadm

192.168.0.180:22 [reset] Reading configuration from the cluster...

192.168.0.180:22 [reset] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

192.168.0.180:22 W0727 15:38:13.562579 12459 utils.go:69] The recommended value for "healthzBindAddress" in "KubeletConfiguration" is: 127.0.0.1; the provided value is: 0.0.0.0

192.168.0.180:22 [preflight] Running pre-flight checks

192.168.0.180:22 [reset] Deleted contents of the etcd data directory: /var/lib/etcd

192.168.0.180:22 [reset] Stopping the kubelet service

192.168.0.180:22 [reset] Unmounting mounted directories in "/var/lib/kubelet"

192.168.0.180:22 [reset] Deleting contents of directories: [/etc/kubernetes/manifests /var/lib/kubelet /etc/kubernetes/pki]

192.168.0.180:22 [reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/super-admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

192.168.0.180:22

192.168.0.180:22 The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

192.168.0.180:22

192.168.0.180:22 The reset process does not reset or clean up iptables rules or IPVS tables.

192.168.0.180:22 If you wish to reset iptables, you must do so manually by using the "iptables" command.

192.168.0.180:22

192.168.0.180:22 If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

192.168.0.180:22 to reset your system's IPVS tables.

192.168.0.180:22

192.168.0.180:22 The reset process does not clean your kubeconfig files and you must remove them manually.

192.168.0.180:22 Please, check the contents of the $HOME/.kube/config file.

2025-07-27T15:38:16 info Executing pipeline Bootstrap in DeleteProcessor

2025-07-27T15:38:16 info domain sealos.hub delete success

192.168.0.182:22 2025-07-27T15:38:16 info domain sealos.hub delete success

192.168.0.181:22 2025-07-27T15:38:16 info domain sealos.hub delete success

192.168.0.179:22 2025-07-27T15:38:16 info domain sealos.hub delete success

192.168.0.180:22 2025-07-27T15:38:16 info domain sealos.hub delete success

192.168.0.182:22 2025-07-27T15:38:16 info domain apiserver.cluster.local delete success

192.168.0.181:22 2025-07-27T15:38:16 info domain apiserver.cluster.local delete success

192.168.0.180:22 2025-07-27T15:38:16 info domain apiserver.cluster.local delete success

192.168.0.179:22 2025-07-27T15:38:16 info domain apiserver.cluster.local delete success

192.168.0.182:22 2025-07-27T15:38:16 info domain lvscare.node.ip delete success

Removed /etc/systemd/system/multi-user.target.wants/registry.service.

192.168.0.181:22 2025-07-27T15:38:17 info domain lvscare.node.ip delete success

192.168.0.179:22 Removed /etc/systemd/system/multi-user.target.wants/kubelet.service.

192.168.0.180:22 Removed /etc/systemd/system/multi-user.target.wants/kubelet.service.

192.168.0.182:22 Removed /etc/systemd/system/multi-user.target.wants/kubelet.service.

192.168.0.181:22 Removed /etc/systemd/system/multi-user.target.wants/kubelet.service.

INFO [2025-07-27 15:38:17] >> clean registry success

2025-07-27T15:38:17 info domain apiserver.cluster.local delete success

Removed /etc/systemd/system/multi-user.target.wants/kubelet.service.

192.168.0.179:22 INFO [2025-07-27 15:38:17] >> clean kubelet success

192.168.0.179:22 Removed /etc/systemd/system/multi-user.target.wants/image-cri-shim.service.

192.168.0.180:22 INFO [2025-07-27 15:38:17] >> clean kubelet success

192.168.0.182:22 INFO [2025-07-27 15:38:17] >> clean kubelet success

192.168.0.180:22 Removed /etc/systemd/system/multi-user.target.wants/image-cri-shim.service.

192.168.0.182:22 Removed /etc/systemd/system/multi-user.target.wants/image-cri-shim.service.

192.168.0.181:22 INFO [2025-07-27 15:38:18] >> clean kubelet success

192.168.0.181:22 Removed /etc/systemd/system/multi-user.target.wants/image-cri-shim.service.

192.168.0.179:22 INFO [2025-07-27 15:38:18] >> clean shim success

192.168.0.179:22 INFO [2025-07-27 15:38:18] >> clean rootfs success

192.168.0.182:22 INFO [2025-07-27 15:38:18] >> clean shim success

192.168.0.182:22 INFO [2025-07-27 15:38:18] >> clean rootfs success

192.168.0.179:22 Removed /etc/systemd/system/multi-user.target.wants/containerd.service.

INFO [2025-07-27 15:38:18] >> clean kubelet success

192.168.0.182:22 Removed /etc/systemd/system/multi-user.target.wants/containerd.service.

Removed /etc/systemd/system/multi-user.target.wants/image-cri-shim.service.

192.168.0.181:22 INFO [2025-07-27 15:38:18] >> clean shim success

192.168.0.181:22 INFO [2025-07-27 15:38:18] >> clean rootfs success

192.168.0.181:22 Removed /etc/systemd/system/multi-user.target.wants/containerd.service.

192.168.0.180:22 INFO [2025-07-27 15:38:18] >> clean shim success

192.168.0.180:22 INFO [2025-07-27 15:38:18] >> clean rootfs success

192.168.0.180:22 Removed /etc/systemd/system/multi-user.target.wants/containerd.service.

INFO [2025-07-27 15:38:19] >> clean shim success

INFO [2025-07-27 15:38:19] >> clean rootfs success

192.168.0.182:22 INFO [2025-07-27 15:38:19] >> clean containerd success

192.168.0.182:22 INFO [2025-07-27 15:38:19] >> clean containerd cri success

Removed /etc/systemd/system/multi-user.target.wants/containerd.service.

192.168.0.181:22 INFO [2025-07-27 15:38:19] >> clean containerd success

192.168.0.181:22 INFO [2025-07-27 15:38:19] >> clean containerd cri success

192.168.0.179:22 INFO [2025-07-27 15:38:20] >> clean containerd success

192.168.0.179:22 INFO [2025-07-27 15:38:20] >> clean containerd cri success

192.168.0.180:22 INFO [2025-07-27 15:38:20] >> clean containerd success

192.168.0.180:22 INFO [2025-07-27 15:38:20] >> clean containerd cri success

INFO [2025-07-27 15:38:20] >> clean containerd success

INFO [2025-07-27 15:38:20] >> clean containerd cri success

2025-07-27T15:38:21 info succeeded in deleting current cluster

Website: https://www.sealos.io/

Address: github.com/labring/sealos

Version: 5.0.1-2b74a1281

root@master01:/#

---
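The `kubeadm reset` output above lists several things it does not clean up: `/etc/cni/net.d`, iptables rules, IPVS tables, and `$HOME/.kube/config`. The script below is a dry-run sketch of those manual steps, not something the original run executed. The function names and the `APPLY` variable are hypothetical, the iptables flush sequence is a commonly used one rather than something taken from this log, and whether `sealos reset` has already handled part of this (the log does show it deleting the IPVS service and route) has not been verified here.

```shell
# Hypothetical helpers: list the manual cleanup steps named in the kubeadm
# reset output. Intended to run as root on each node before reinstalling.
cleanup_steps() {
  cat <<'EOF'
rm -rf /etc/cni/net.d
ipvsadm --clear
iptables -F
iptables -t nat -F
iptables -t mangle -F
iptables -X
rm -f "$HOME/.kube/config"
EOF
}

# Dry run by default: only prints each step. Set APPLY=1 to execute them.
run_cleanup() {
  cleanup_steps | while IFS= read -r step; do
    if [ "${APPLY:-0}" = "1" ]; then
      echo "+ $step"
      sh -c "$step"
    else
      echo "would run: $step"
    fi
  done
}
```

Calling `run_cleanup` prints the seven steps without touching the system; `APPLY=1 run_cleanup` executes them. Flushing iptables removes all rules on the host, not only the Kubernetes ones, so the dry-run output is worth reviewing first.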

root@master01:/# sealos run registry.cn-shanghai.aliyuncs.com/labring/kubernetes:v1.29.9 registry.cn-shanghai.aliyuncs.com/labring/helm:v3.9.4 registry.cn-shanghai.aliyuncs.com/labring/calico:v3.26.5 \

--masters 192.168.0.178,192.168.0.179,192.168.0.180 \

--nodes 192.168.0.181,192.168.0.182 -p '<password>'

2025-07-27T15:39:34 info Start to create a new cluster: master [192.168.0.178 192.168.0.179 192.168.0.180], worker [192.168.0.181 192.168.0.182], registry 192.168.0.178

2025-07-27T15:39:34 info Executing pipeline Check in CreateProcessor.

2025-07-27T15:39:34 info checker:hostname [192.168.0.178:22 192.168.0.179:22 192.168.0.180:22 192.168.0.181:22 192.168.0.182:22]

2025-07-27T15:39:36 info checker:timeSync [192.168.0.178:22 192.168.0.179:22 192.168.0.180:22 192.168.0.181:22 192.168.0.182:22]

2025-07-27T15:39:37 info checker:containerd [192.168.0.178:22 192.168.0.179:22 192.168.0.180:22 192.168.0.181:22 192.168.0.182:22]

2025-07-27T15:39:37 info Executing pipeline PreProcess in CreateProcessor.

2025-07-27T15:39:37 info Executing pipeline RunConfig in CreateProcessor.

2025-07-27T15:39:37 info Executing pipeline MountRootfs in CreateProcessor.

2025-07-27T15:39:46 info render /var/lib/sealos/data/default/rootfs/etc/config.toml from /var/lib/sealos/data/default/rootfs/etc/config.toml.tmpl completed

2025-07-27T15:39:46 info render /var/lib/sealos/data/default/rootfs/etc/containerd.service from /var/lib/sealos/data/default/rootfs/etc/containerd.service.tmpl completed

2025-07-27T15:39:46 info render /var/lib/sealos/data/default/rootfs/etc/hosts.toml from /var/lib/sealos/data/default/rootfs/etc/hosts.toml.tmpl completed

2025-07-27T15:39:46 info render /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.service from /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.service.tmpl completed

2025-07-27T15:39:46 info render /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.yaml from /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.yaml.tmpl completed

2025-07-27T15:39:46 info render /var/lib/sealos/data/default/rootfs/etc/kubelet.service from /var/lib/sealos/data/default/rootfs/etc/kubelet.service.tmpl completed

2025-07-27T15:39:46 info render /var/lib/sealos/data/default/rootfs/etc/registry.service from /var/lib/sealos/data/default/rootfs/etc/registry.service.tmpl completed

2025-07-27T15:39:46 info render /var/lib/sealos/data/default/rootfs/etc/registry.yml from /var/lib/sealos/data/default/rootfs/etc/registry.yml.tmpl completed

2025-07-27T15:39:46 info render /var/lib/sealos/data/default/rootfs/etc/registry_config.yml from /var/lib/sealos/data/default/rootfs/etc/registry_config.yml.tmpl completed

2025-07-27T15:39:46 info render /var/lib/sealos/data/default/rootfs/etc/systemd/system/kubelet.service.d/10-kubeadm.conf from /var/lib/sealos/data/default/rootfs/etc/systemd/system/kubelet.service.d/10-kubeadm.conf.tmpl completed

192.168.0.181:22 2025-07-27T15:40:12 info render /var/lib/sealos/data/default/rootfs/etc/config.toml from /var/lib/sealos/data/default/rootfs/etc/config.toml.tmpl completed

192.168.0.181:22 2025-07-27T15:40:12 info render /var/lib/sealos/data/default/rootfs/etc/containerd.service from /var/lib/sealos/data/default/rootfs/etc/containerd.service.tmpl completed

192.168.0.181:22 2025-07-27T15:40:12 info render /var/lib/sealos/data/default/rootfs/etc/hosts.toml from /var/lib/sealos/data/default/rootfs/etc/hosts.toml.tmpl completed

192.168.0.181:22 2025-07-27T15:40:12 info render /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.service from /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.service.tmpl completed

192.168.0.181:22 2025-07-27T15:40:12 info render /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.yaml from /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.yaml.tmpl completed

[1/1]copying files to 192.168.0.182:22 6% [ ] (1/16, 176 it/s) [0s:0s]

192.168.0.181:22 2025-07-27T15:40:12 info render /var/lib/sealos/data/default/rootfs/etc/kubelet.service from /var/lib/sealos/data/default/rootfs/etc/kubelet.service.tmpl completed

192.168.0.181:22 2025-07-27T15:40:12 info render /var/lib/sealos/data/default/rootfs/etc/registry.service from /var/lib/sealos/data/default/rootfs/etc/registry.service.tmpl completed

192.168.0.181:22 2025-07-27T15:40:12 info render /var/lib/sealos/data/default/rootfs/etc/registry.yml from /var/lib/sealos/data/default/rootfs/etc/registry.yml.tmpl completed

192.168.0.181:22 2025-07-27T15:40:12 info render /var/lib/sealos/data/default/rootfs/etc/registry_config.yml from /var/lib/sealos/data/default/rootfs/etc/registry_config.yml.tmpl completed

192.168.0.181:22 2025-07-27T15:40:12 info render /var/lib/sealos/data/default/rootfs/etc/systemd/system/kubelet.service.d/10-kubeadm.conf from /var/lib/sealos/data/default/rootfs/etc/systemd/system/kubelet.service.d/10-kubeadm.conf.tmpl completed

192.168.0.182:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/config.toml from /var/lib/sealos/data/default/rootfs/etc/config.toml.tmpl completed

192.168.0.182:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/containerd.service from /var/lib/sealos/data/default/rootfs/etc/containerd.service.tmpl completed

192.168.0.182:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/hosts.toml from /var/lib/sealos/data/default/rootfs/etc/hosts.toml.tmpl completed

192.168.0.182:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.service from /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.service.tmpl completed

192.168.0.182:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.yaml from /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.yaml.tmpl completed

192.168.0.182:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/kubelet.service from /var/lib/sealos/data/default/rootfs/etc/kubelet.service.tmpl completed

192.168.0.182:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/registry.service from /var/lib/sealos/data/default/rootfs/etc/registry.service.tmpl completed

192.168.0.182:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/registry.yml from /var/lib/sealos/data/default/rootfs/etc/registry.yml.tmpl completed

192.168.0.182:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/registry_config.yml from /var/lib/sealos/data/default/rootfs/etc/registry_config.yml.tmpl completed

192.168.0.182:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/systemd/system/kubelet.service.d/10-kubeadm.conf from /var/lib/sealos/data/default/rootfs/etc/systemd/system/kubelet.service.d/10-kubeadm.conf.tmpl completed

[1/1]copying files to 192.168.0.180:22 93% [=============> ] (15/16, 352 it/s) [0s:0s]

192.168.0.179:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/config.toml from /var/lib/sealos/data/default/rootfs/etc/config.toml.tmpl completed

192.168.0.179:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/containerd.service from /var/lib/sealos/data/default/rootfs/etc/containerd.service.tmpl completed

192.168.0.179:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/hosts.toml from /var/lib/sealos/data/default/rootfs/etc/hosts.toml.tmpl completed

192.168.0.179:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.service from /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.service.tmpl completed

192.168.0.179:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.yaml from /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.yaml.tmpl completed

192.168.0.179:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/kubelet.service from /var/lib/sealos/data/default/rootfs/etc/kubelet.service.tmpl completed

192.168.0.179:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/registry.service from /var/lib/sealos/data/default/rootfs/etc/registry.service.tmpl completed

192.168.0.179:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/registry.yml from /var/lib/sealos/data/default/rootfs/etc/registry.yml.tmpl completed

192.168.0.179:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/registry_config.yml from /var/lib/sealos/data/default/rootfs/etc/registry_config.yml.tmpl completed

192.168.0.179:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/systemd/system/kubelet.service.d/10-kubeadm.conf from /var/lib/sealos/data/default/rootfs/etc/systemd/system/kubelet.service.d/10-kubeadm.conf.tmpl completed

192.168.0.180:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/config.toml from /var/lib/sealos/data/default/rootfs/etc/config.toml.tmpl completed

192.168.0.180:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/containerd.service from /var/lib/sealos/data/default/rootfs/etc/containerd.service.tmpl completed

192.168.0.180:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/hosts.toml from /var/lib/sealos/data/default/rootfs/etc/hosts.toml.tmpl completed

192.168.0.180:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.service from /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.service.tmpl completed

192.168.0.180:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.yaml from /var/lib/sealos/data/default/rootfs/etc/image-cri-shim.yaml.tmpl completed

192.168.0.180:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/kubelet.service from /var/lib/sealos/data/default/rootfs/etc/kubelet.service.tmpl completed

192.168.0.180:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/registry.service from /var/lib/sealos/data/default/rootfs/etc/registry.service.tmpl completed

192.168.0.180:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/registry.yml from /var/lib/sealos/data/default/rootfs/etc/registry.yml.tmpl completed

192.168.0.180:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/registry_config.yml from /var/lib/sealos/data/default/rootfs/etc/registry_config.yml.tmpl completed

192.168.0.180:22 2025-07-27T15:40:13 info render /var/lib/sealos/data/default/rootfs/etc/systemd/system/kubelet.service.d/10-kubeadm.conf from /var/lib/sealos/data/default/rootfs/etc/systemd/system/kubelet.service.d/10-kubeadm.conf.tmpl completed

2025-07-27T15:40:13 info Executing pipeline MirrorRegistry in CreateProcessor.

2025-07-27T15:40:13 info trying default http mode to sync images to hosts [192.168.0.178:22]

2025-07-27T15:40:28 info Executing pipeline Bootstrap in CreateProcessor

INFO [2025-07-27 15:40:28] >> Check port kubelet port 10249..10259, reserved port 5050..5054 inuse. Please wait...

192.168.0.182:22 INFO [2025-07-27 15:40:28] >> Check port kubelet port 10249..10259, reserved port 5050..5054 inuse. Please wait...

192.168.0.181:22 INFO [2025-07-27 15:40:28] >> Check port kubelet port 10249..10259, reserved port 5050..5054 inuse. Please wait...

192.168.0.179:22 INFO [2025-07-27 15:40:28] >> Check port kubelet port 10249..10259, reserved port 5050..5054 inuse. Please wait...

192.168.0.180:22 INFO [2025-07-27 15:40:28] >> Check port kubelet port 10249..10259, reserved port 5050..5054 inuse. Please wait...

192.168.0.182:22 INFO [2025-07-27 15:40:29] >> check root,port,cri success

192.168.0.181:22 INFO [2025-07-27 15:40:29] >> check root,port,cri success

192.168.0.180:22 INFO [2025-07-27 15:40:29] >> check root,port,cri success

192.168.0.179:22 INFO [2025-07-27 15:40:29] >> check root,port,cri success

INFO [2025-07-27 15:40:29] >> check root,port,cri success

2025-07-27T15:40:29 info domain sealos.hub:192.168.0.178 append success

192.168.0.182:22 2025-07-27T15:40:29 info domain sealos.hub:192.168.0.178 append success

192.168.0.181:22 2025-07-27T15:40:29 info domain sealos.hub:192.168.0.178 append success

192.168.0.180:22 2025-07-27T15:40:29 info domain sealos.hub:192.168.0.178 append success

192.168.0.179:22 2025-07-27T15:40:29 info domain sealos.hub:192.168.0.178 append success

Created symlink /etc/systemd/system/multi-user.target.wants/registry.service → /etc/systemd/system/registry.service.

INFO [2025-07-27 15:40:30] >> Health check registry!

INFO [2025-07-27 15:40:30] >> registry is running

INFO [2025-07-27 15:40:30] >> init registry success

2025-07-27T15:40:30 info domain apiserver.cluster.local:192.168.0.178 append success

192.168.0.181:22 2025-07-27T15:40:30 info domain apiserver.cluster.local:10.103.97.2 append success

192.168.0.182:22 2025-07-27T15:40:30 info domain apiserver.cluster.local:10.103.97.2 append success

192.168.0.180:22 2025-07-27T15:40:31 info domain apiserver.cluster.local:192.168.0.178 append success

192.168.0.179:22 2025-07-27T15:40:31 info domain apiserver.cluster.local:192.168.0.178 append success

192.168.0.182:22 2025-07-27T15:40:31 info domain lvscare.node.ip:192.168.0.182 append success

192.168.0.181:22 2025-07-27T15:40:31 info domain lvscare.node.ip:192.168.0.181 append success

192.168.0.181:22 Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /etc/systemd/system/containerd.service.

192.168.0.182:22 Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /etc/systemd/system/containerd.service.

192.168.0.179:22 Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /etc/systemd/system/containerd.service.

192.168.0.180:22 Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /etc/systemd/system/containerd.service.

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /etc/systemd/system/containerd.service.

192.168.0.181:22 INFO [2025-07-27 15:40:34] >> Health check containerd!

192.168.0.181:22 INFO [2025-07-27 15:40:34] >> containerd is running

192.168.0.181:22 INFO [2025-07-27 15:40:34] >> init containerd success

192.168.0.181:22 Created symlink /etc/systemd/system/multi-user.target.wants/image-cri-shim.service → /etc/systemd/system/image-cri-shim.service.

192.168.0.182:22 INFO [2025-07-27 15:40:34] >> Health check containerd!

192.168.0.182:22 INFO [2025-07-27 15:40:34] >> containerd is running

192.168.0.182:22 INFO [2025-07-27 15:40:34] >> init containerd success

192.168.0.182:22 Created symlink /etc/systemd/system/multi-user.target.wants/image-cri-shim.service → /etc/systemd/system/image-cri-shim.service.

192.168.0.180:22 INFO [2025-07-27 15:40:34] >> Health check containerd!

192.168.0.179:22 INFO [2025-07-27 15:40:34] >> Health check containerd!

192.168.0.180:22 INFO [2025-07-27 15:40:34] >> containerd is running

192.168.0.180:22 INFO [2025-07-27 15:40:34] >> init containerd success

192.168.0.179:22 INFO [2025-07-27 15:40:34] >> containerd is running

192.168.0.179:22 INFO [2025-07-27 15:40:34] >> init containerd success

192.168.0.179:22 Created symlink /etc/systemd/system/multi-user.target.wants/image-cri-shim.service → /etc/systemd/system/image-cri-shim.service.