Fixing OpenStack Instances That Stay in the Build State and Never Get an IP Address

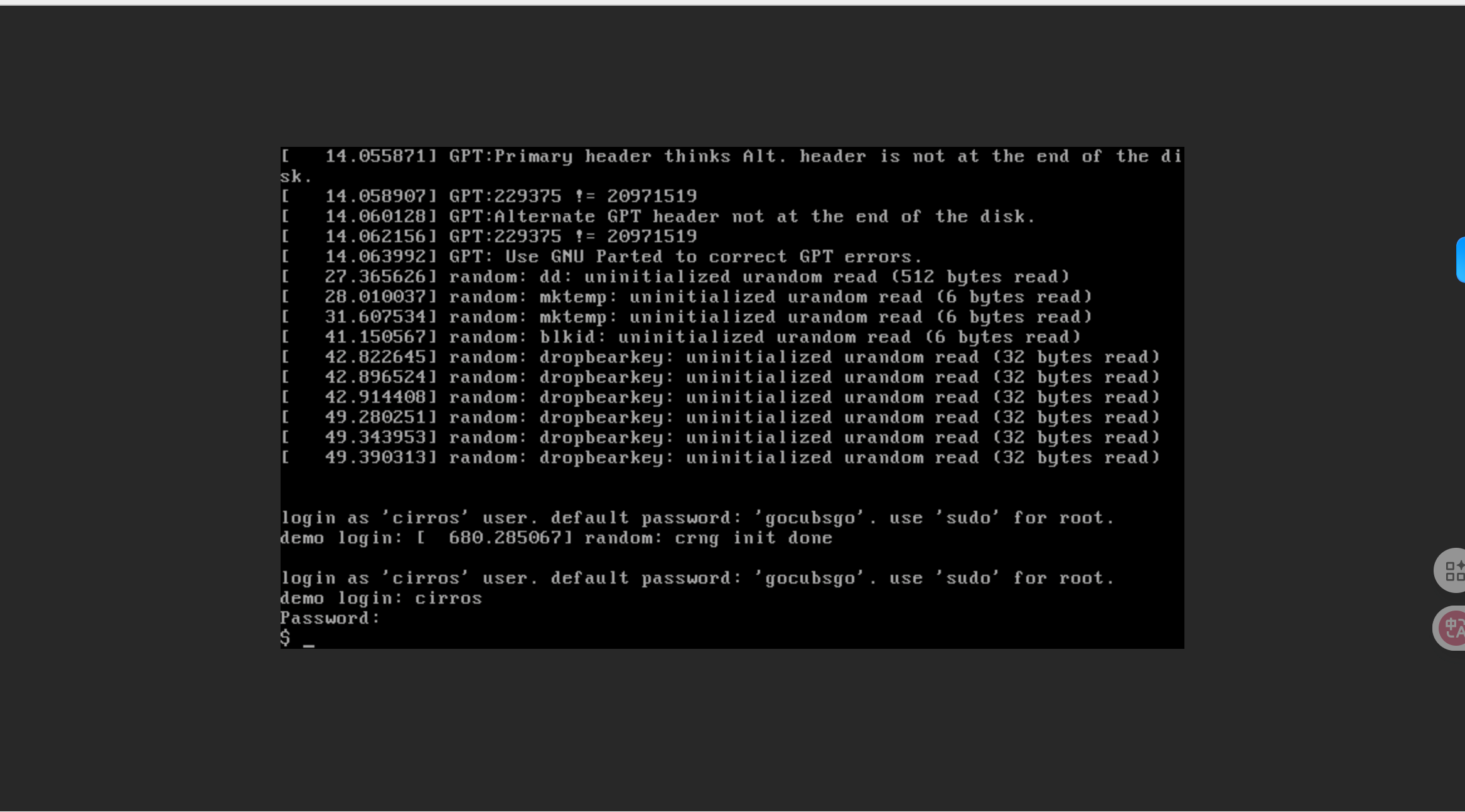

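This is the problem I ran into. At first the instance stayed in the build state with no IP address, and its power state stayed at scheduling (I forgot to take a screenshot). After it had been running for a while, it changed to the state shown below.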

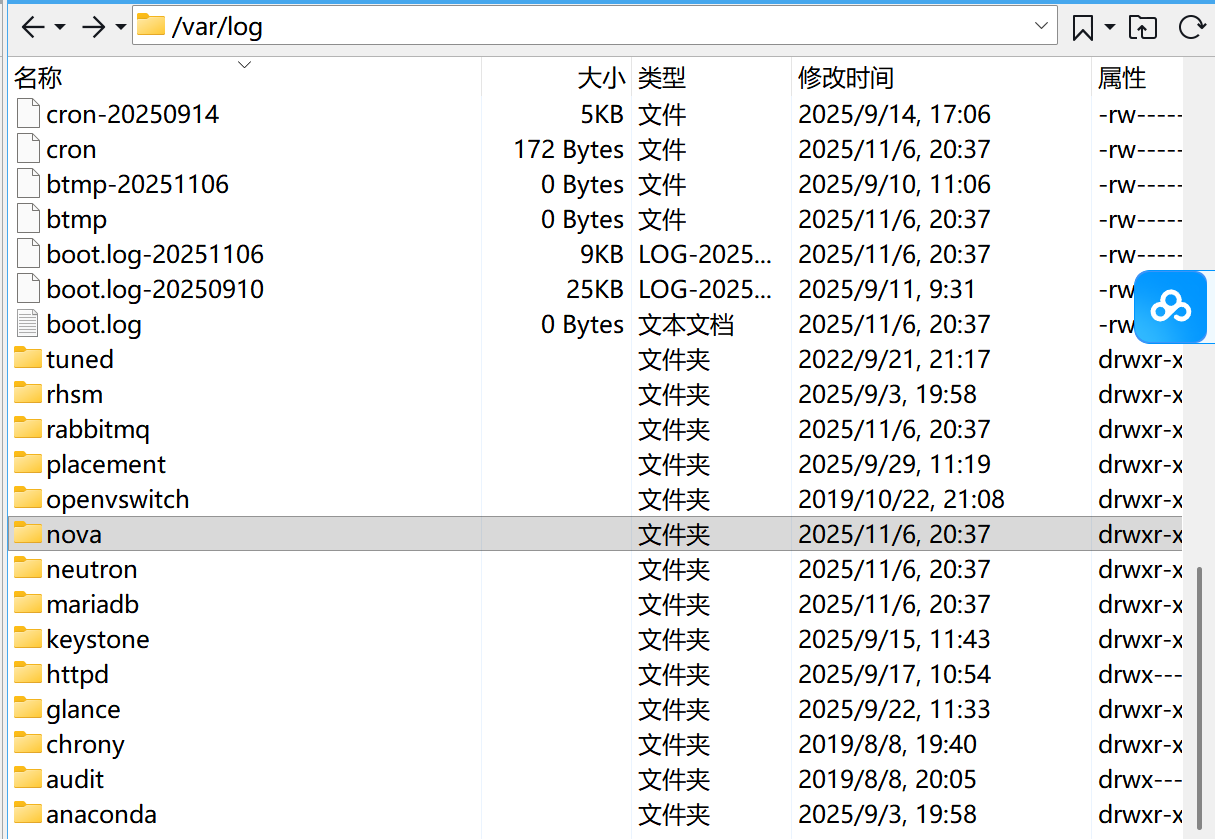

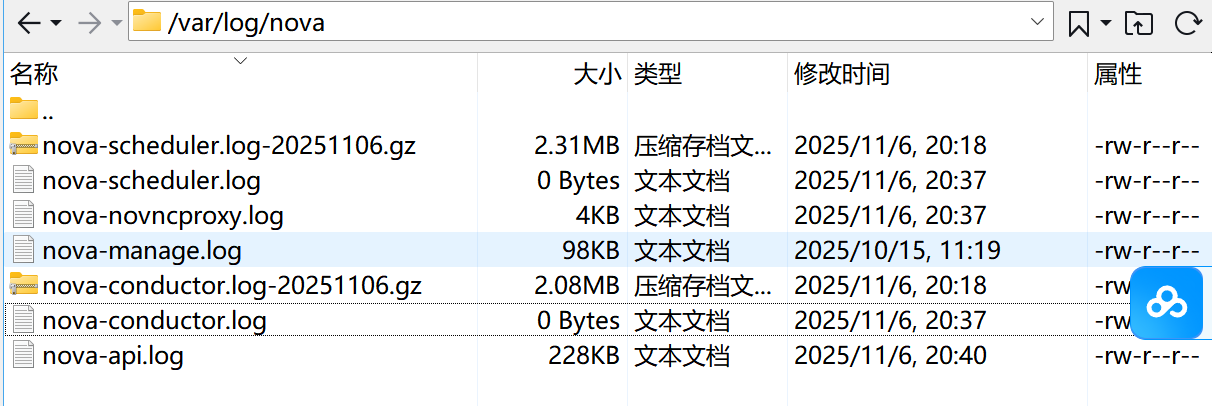

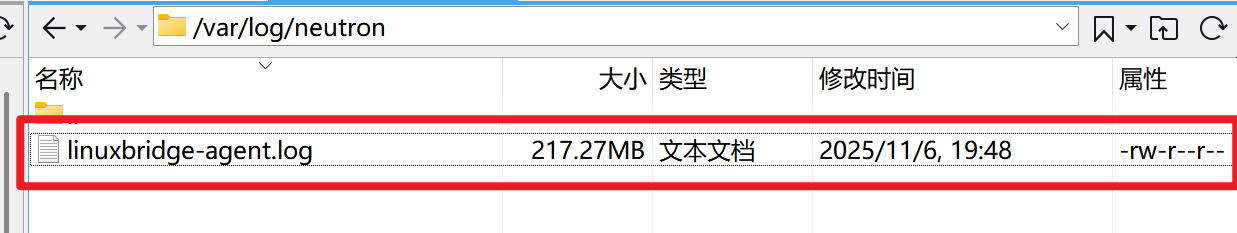

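As soon as I hit the problem I went to the log files. Each component keeps its logs in its own folder under /var/log.

I looked at the log files in the nova folder on the controller node.

If you have a graphical tool, you can use it to view them.

You can also view them from the command line.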

[root@controller ~]# sudo tail -f /var/log/nova/nova-conductor.log | grep -i error

2025-11-05 04:44:06.094 60687 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/amqp/method_framing.py", line 55, in on_frame

2025-11-05 04:44:06.094 60687 ERROR oslo_messaging.rpc.server callback(channel, method_sig, buf, None)

2025-11-05 04:44:06.094 60687 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/amqp/connection.py", line 510, in on_inbound_method

2025-11-05 04:44:06.094 60687 ERROR oslo_messaging.rpc.server method_sig, payload, content,

2025-11-05 04:44:06.094 60687 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/amqp/abstract_channel.py", line 126, in dispatch_method

2025-11-05 04:44:06.094 60687 ERROR oslo_messaging.rpc.server listener(*args)

2025-11-05 04:44:06.094 60687 ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/site-packages/amqp/connection.py", line 639, in _on_close

2025-11-05 04:44:06.094 60687 ERROR oslo_messaging.rpc.server (class_id, method_id), ConnectionError)

2025-11-05 04:44:06.094 60687 ERROR oslo_messaging.rpc.server AccessRefused: (0, 0): (403) ACCESS_REFUSED - Login was refused using authentication mechanism AMQPLAIN. For details see the broker logfile.

2025-11-05 04:44:06.094 60687 ERROR oslo_messaging.rpc.server
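A traceback like the one above is mostly stack frames, and the line that matters is the final exception. The following is a minimal sketch, not part of the original troubleshooting session, that counts those final exception lines in one or more log files. The function name `summarize_exceptions` is hypothetical, and it assumes the oslo log layout seen above (date, time, PID, level, logger, message) without an `[instance: ...]` prefix.

```shell
#!/usr/bin/env bash
# Count the distinct "SomeException: message" lines among ERROR records.
# Assumption: fields are DATE TIME PID LEVEL LOGGER MESSAGE..., so the
# exception name is field 6 and ends with a colon.
summarize_exceptions() {
    awk '$4 == "ERROR" && $6 ~ /^[A-Za-z_.]+:$/ {
             msg = $6
             for (i = 7; i <= NF; i++) msg = msg " " $i
             count[msg]++
         }
         END { for (m in count) printf "%d\t%s\n", count[m], m }' "$@"
}

# Example (path taken from this post):
#   summarize_exceptions /var/log/nova/nova-conductor.log
```

Stack-frame lines such as `File "..."` or `listener(*args)` are skipped because their sixth field does not look like an exception name.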

[root@controller ~]# tail -f /var/log/nova/nova-scheduler.log

2025-10-21 23:03:20.432 37093 WARNING oslo_config.cfg [req-a221a99e-d683-4dd6-983f-8147c2ddc268 - - - - -] Deprecated: Option "force_dhcp_release" from group "DEFAULT" is deprecated for removal (

nova-network is deprecated, as are any related configuration options.

). Its value may be silently ignored in the future.

2025-10-21 23:03:20.443 37093 WARNING oslo_config.cfg [req-a221a99e-d683-4dd6-983f-8147c2ddc268 - - - - -] Deprecated: Option "use_neutron" from group "DEFAULT" is deprecated for removal (

nova-network is deprecated, as are any related configuration options.

). Its value may be silently ignored in the future.

2025-10-21 23:04:09.574 37114 INFO nova.scheduler.host_manager [req-bfe28184-1b1f-46c2-b96c-d9bd08a387d5 - - - - -] Host mapping not found for host compute. Not tracking instance info for this host.

2025-10-21 23:04:09.576 37114 INFO nova.scheduler.host_manager [req-bfe28184-1b1f-46c2-b96c-d9bd08a387d5 - - - - -] Received a sync request from an unknown host 'compute'. Re-created its InstanceList.

2025-10-21 23:04:09.583 37113 INFO nova.scheduler.host_manager [req-bfe28184-1b1f-46c2-b96c-d9bd08a387d5 - - - - -] Host mapping not found for host compute. Not tracking instance info for this host.

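2025-10-21 23:04:09.584 37113 INFO nova.scheduler.host_manager [req-bfe28184-1b1f-46c2-b96c-d9bd08a387d5 - - - - -] Received a sync request from an unknown host 'compute'. Re-created its InstanceList.

The core problem is that RabbitMQ authentication failed. Specifically, nova-conductor was refused when it tried to log in to RabbitMQ with the AMQPLAIN authentication mechanism (error 403 ACCESS_REFUSED). The likely causes are:

- The username or password that the OpenStack services use to connect to RabbitMQ is configured incorrectly (for example, the RabbitMQ credentials in the Nova configuration file do not match what is actually set in RabbitMQ);

- The account does not have enough permissions in RabbitMQ to perform normal message queue operations.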

On the controller node, run the following command to verify whether the Nova service can connect to the RabbitMQ message queue.

[root@controller ~]# openstack hypervisor show compute

Unexpected API Error. Please report this at http://bugs.launchpad.net/nova/ and attach the Nova API log if possible.

<class 'amqp.exceptions.AccessRefused'> (HTTP 500) (Request-ID: req-af3cf025-176d-4d84-a0e6-457f7bd42359)

This showed that my Nova service could not connect to the RabbitMQ message queue.

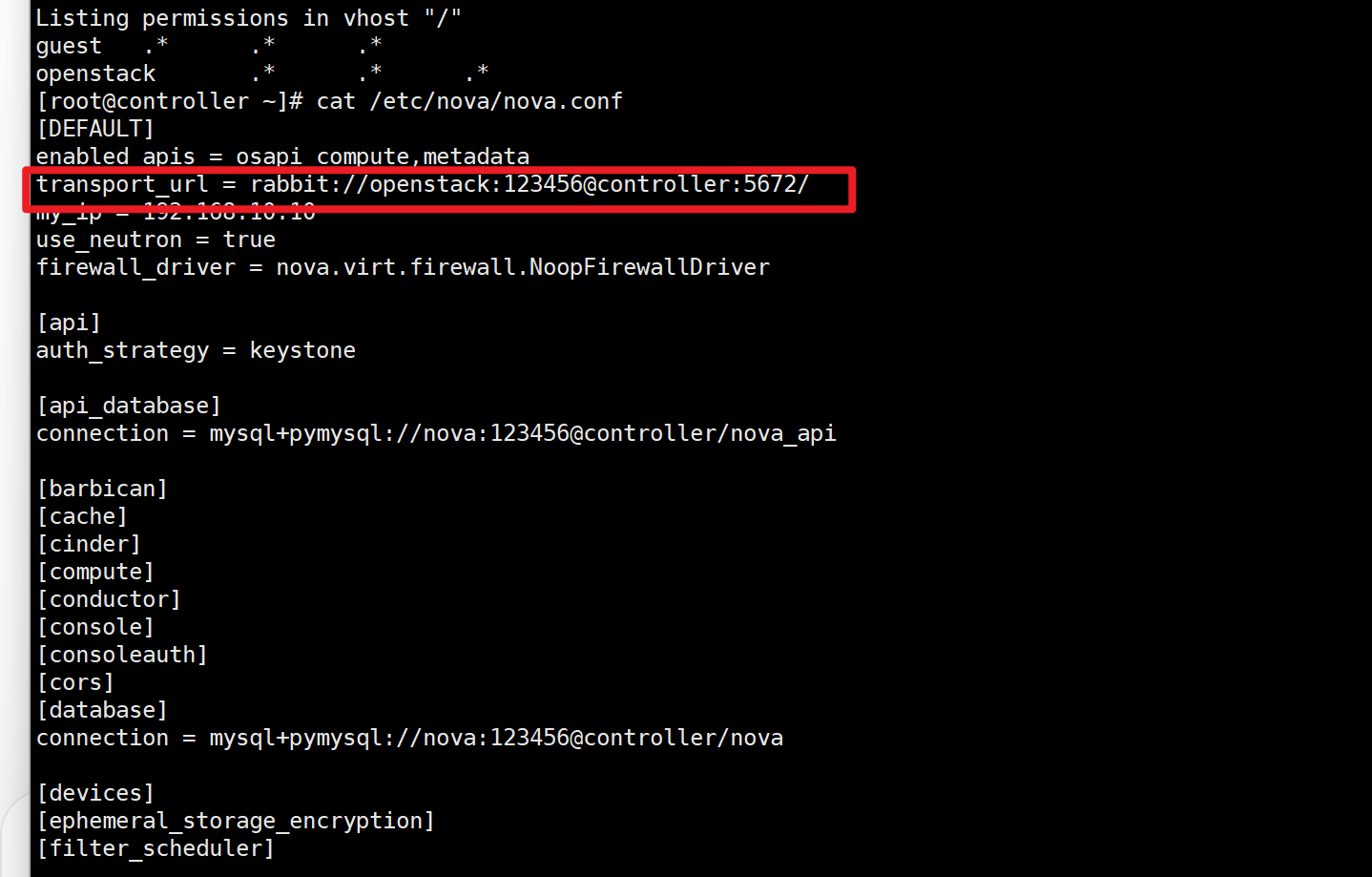

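Check whether your configuration file is wrong:

You can test from the command line whether the username and password are valid.

# Test whether the password is valid

# (replace jianglin and 123456 with your own RabbitMQ username and password)

rabbitmqctl authenticate_user jianglin 123456

If it returns

Error: failed to authenticate user "jianglin"

then the password is wrong.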
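To test exactly the credentials a service is configured with, rather than retyping them, they can be read out of the `transport_url` line. This is a rough sketch under a few assumptions: the URL has the simple single-host form `rabbit://USER:PASSWORD@HOST[:PORT]/`, the password contains no `:` or `@`, and GNU sed is available. The function names `transport_url_parts` and `check_rabbit_login` are made up for illustration.

```shell
#!/usr/bin/env bash
# Print user, password, and host[:port] from the first transport_url line
# of a config file, one value per line.
transport_url_parts() {
    sed -n -E 's#^transport_url[[:space:]]*=[[:space:]]*rabbit://([^:]+):([^@]+)@([^/]+).*#\1\n\2\n\3#p' "$1" |
        head -n 3
}

# Feed the configured user and password to rabbitmqctl authenticate_user.
# Run this on the node where RabbitMQ is installed.
check_rabbit_login() {
    local parts user pass
    parts=$(transport_url_parts "$1")
    user=$(sed -n 1p <<<"$parts")
    pass=$(sed -n 2p <<<"$parts")
    rabbitmqctl authenticate_user "$user" "$pass"
}

# Example:
#   check_rabbit_login /etc/nova/nova.conf
```

Running it once per configuration file makes it easier to spot a service that is still using an old account.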

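But when I checked my configuration files, the RabbitMQ username and password in them were fine. All I could do was keep reading the logs, and nothing I tried worked. In the end I deleted the original RabbitMQ user entirely, created a new user, and set its permissions.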

# Delete the old user (to avoid leftover permissions)

rabbitmqctl delete_user jianglin

# Create the new user openstack

rabbitmqctl add_user openstack 123456

rabbitmqctl set_permissions -p / openstack ".*" ".*" ".*"

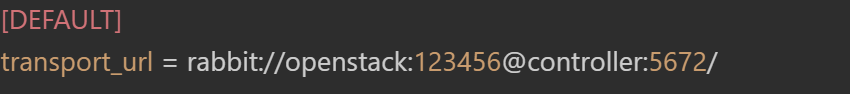

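rabbitmqctl set_user_tags openstack administrator

After that, update the Nova and Neutron configuration files. Every setting in every component that holds the RabbitMQ username and password must be updated. Note: the configuration files must be updated on both the controller node and the compute node.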

# vi /etc/nova/nova.conf

[DEFAULT]

transport_url = rabbit://openstack:123456@controller:5672/

# vi /etc/neutron/neutron.conf

[DEFAULT]

transport_url = rabbit://openstack:123456@controller:5672/

Restart the services so that the configuration takes effect.

# Controller node

[root@controller ~]# sudo systemctl restart rabbitmq-server

[root@controller ~]# sudo systemctl restart neutron-server

[root@controller ~]# sudo systemctl restart neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent

[root@controller ~]# sudo systemctl restart openstack-nova-api openstack-nova-scheduler openstack-nova-conductor

# Compute node

[root@compute ~]# sudo systemctl restart neutron-linuxbridge-agent

[root@compute ~]# sudo systemctl restart openstack-nova-compute
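Because the services depend on each other (RabbitMQ first, then Neutron, then Nova), it can help to restart them one at a time and stop as soon as one fails to come back. The sketch below is one way to do that. `restart_in_order` and the `SYSTEMCTL` override are hypothetical names introduced here so the function can be dry-run; they are not part of systemd or OpenStack.

```shell
#!/usr/bin/env bash
# Restart the given units in the order listed and stop at the first unit
# that fails to restart or is not active afterwards.
# SYSTEMCTL (hypothetical) lets you dry-run: SYSTEMCTL="echo systemctl".
restart_in_order() {
    local unit
    for unit in "$@"; do
        ${SYSTEMCTL:-systemctl} restart "$unit" || return 1
        ${SYSTEMCTL:-systemctl} is-active --quiet "$unit" || {
            echo "unit not active after restart: $unit" >&2
            return 1
        }
    done
}

# Controller node, same order as in this post:
#   restart_in_order rabbitmq-server neutron-server \
#       neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent \
#       openstack-nova-api openstack-nova-scheduler openstack-nova-conductor
# Compute node:
#   restart_in_order neutron-linuxbridge-agent openstack-nova-compute
```

A unit that reports active right after a restart can still crash a few seconds later, so this is only a first check and the logs remain the real source of truth.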

Then I ran the earlier command again to see whether it still failed, and this time it produced output.

[root@controller ~]# openstack hypervisor show compute

[root@controller ~]# rabbitmqctl delete_user jianglin

Deleting user "jianglin"

[root@controller ~]# rabbitmqctl add_user openstack 123456

Creating user "openstack"

[root@controller ~]# rabbitmqctl set_permissions -p / openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/"

[root@controller ~]# rabbitmqctl set_user_tags openstack administrator

Setting tags for user "openstack" to [administrator]

[root@controller ~]# vi /etc/nova/nova.conf

[root@controller ~]# systemctl restart rabbitmq-server

[root@controller ~]# systemctl restart openstack-nova-*

[root@controller ~]# openstack hypervisor show compute

+----------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+----------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| aggregates | [] |

| cpu_info | {"vendor": "AMD", "model": "Opteron_G2", "arch": "x86_64", "features": ["pge", "avx", "clflush", "pku", "syscall", "umip", "sse4a", "invpcid", "tsc", "fsgsbase", "xsave", "smap", "bmi2", "xsaveopt", "mmx", "erms", "cmov", "smep", "perfctr_core", "fpu", "clflushopt", "pat", "arat", "lm", "msr", "adx", "3dnowprefetch", "nx", "fxsr", "sha-ni", "vaes", "sse4.1", "pae", "sse4.2", "pclmuldq", "xgetbv1", "cmp_legacy", "fma", "vme", "xsaves", "osxsave", "cx8", "mce", "fxsr_opt", "cr8legacy", "clwb", "pse", "lahf_lm", "abm", "osvw", "rdseed", "popcnt", "mca", "pdpe1gb", "ibpb", "sse", "f16c", "invtsc", "xsavec", "pni", "aes", "svm", "ht", "mmxext", "sse2", "hypervisor", "topoext", "misalignsse", "bmi1", "apic", "ssse3", "de", "vpclmulqdq", "cx16", "extapic", "pse36", "mtrr", "movbe", "rdrand", "avx2", "ospke", "sep", "rdtscp", "wbnoinvd", "x2apic"], "topology": {"cores": 2, "cells": 1, "threads": 1, "sockets": 1}} |

| current_workload | 0 |

| disk_available_least | 37 |

| free_disk_gb | 39 |

| free_ram_mb | 3258 |

| host_ip | 192.168.10.20 |

| host_time | 22:40:38 |

| hypervisor_hostname | compute |

| hypervisor_type | QEMU |

| hypervisor_version | 2012000 |

| id | 1 |

| load_average | 0.28, 0.09, 0.06 |

| local_gb | 39 |

| local_gb_used | 0 |

| memory_mb | 3770 |

| memory_mb_used | 512 |

| running_vms | 0 |

| service_host | compute |

| service_id | 5 |

| state | up |

| status | enabled |

| uptime | 5:19 |

| users | 4 |

| vcpus | 2 |

| vcpus_used | 0 |

+----------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

[root@controller ~]# vi /etc/neutron/neutron.conf

[root@controller ~]# systemctl enable neutron-server neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent

[root@controller ~]# systemctl start neutron-server neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent

[root@controller ~]#

Verify that all network agents are UP

# Run on the controller node

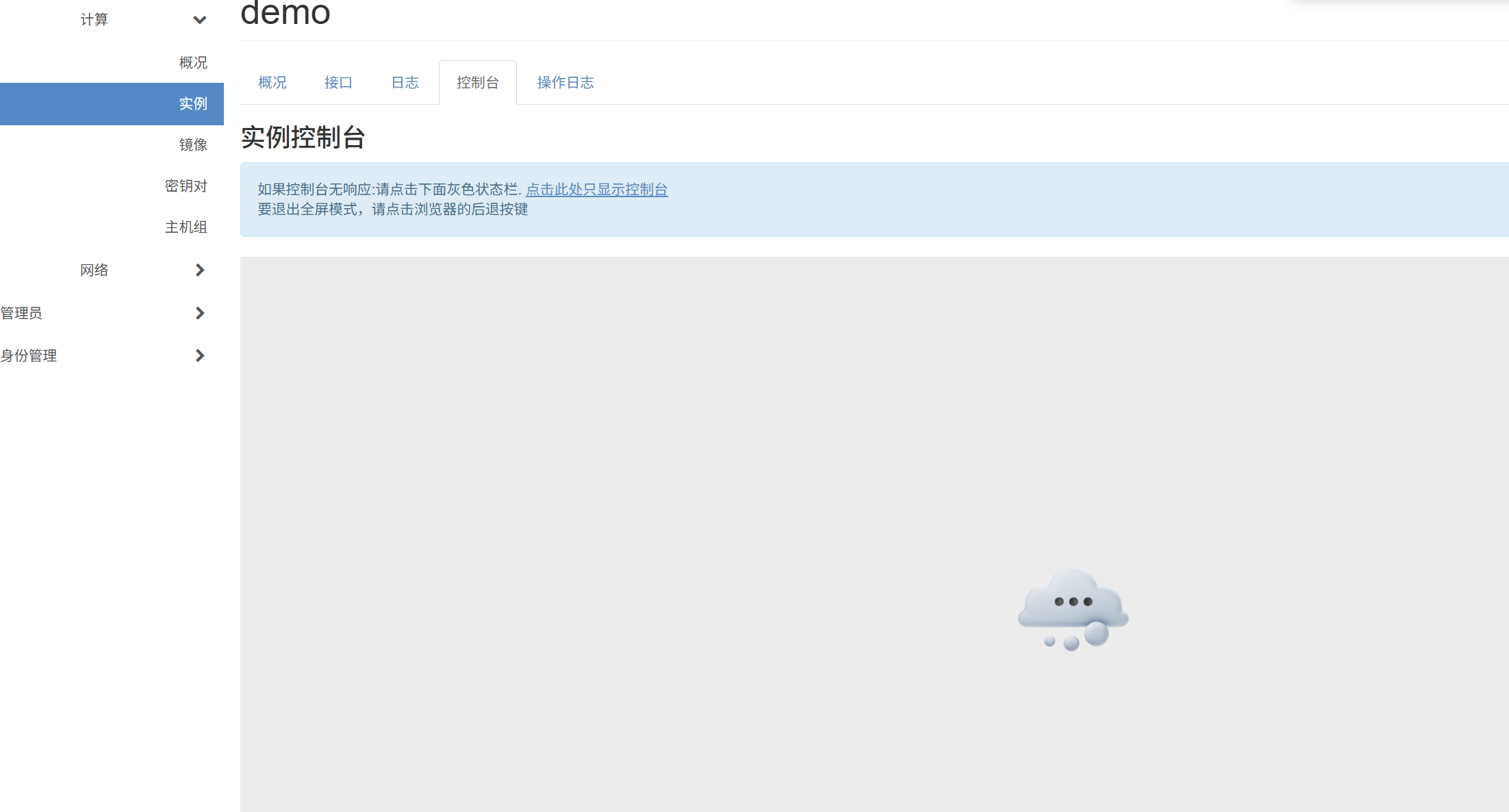

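openstack network agent list

After I created the instance again, the power state changed from scheduling to spawning, but no IP address was assigned, and the status finally showed Error.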

| Field | Value |
|---|---|
| Instance name | demo |
| Instance ID | 72528eae-d702-4217-971c-89e534f0b373 |
| Description | - |
| Project ID | 39e79bc06683420989d1d2745c22a99d |
| Status | Error |
| Locked | False (not locked) |
| Availability zone | - |
| Created | Nov. 6, 2025, 7:22 p.m. |
| Age | 2 minutes |
| Fault message | Exceeded maximum number of retries. Exhausted all hosts available for retrying build failures for instance 72528eae-d702-4217-971c-89e534f0b373. |
| Fault code | 500 (internal server error) |
| Fault details (traceback) | Traceback (most recent call last): File "/usr/lib/python2.7/site-packages/nova/conductor/manager.py", line 651, in build_instances raise exception.MaxRetriesExceeded(reason=msg) MaxRetriesExceeded: Exceeded maximum number of retries. Exhausted all hosts available for retrying build failures for instance 72528eae-d702-4217-971c-89e534f0b373. |
| Fault created | Nov. 6, 2025, 7:22 p.m. |
| Flavor name | mini |
| Flavor ID | 8803c4c2-01e3-4f0a-b9e7-e2442cded834 |
| Flavor RAM | 1GB |
| Flavor VCPUs | 1 VCPU |
| Flavor disk | 10GB |
| IP addresses | - |
| Security groups | Not available |
| Metadata: key pair name | None |
| Image name | cirros (lightweight test image) |
| Image ID | 96015ac4-c131-446b-9572-1a40de38007e |
| Volumes attached | - (no volumes attached) |

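The instance (demo) failed during creation and its status became Error. The core reason is that Nova (the compute service) tried to create the instance on every available compute node, every retry failed, and once all candidate hosts were exhausted it raised the MaxRetriesExceeded exception.

I suspected the problem was that no IP address had been assigned, so I went on to check the nova-compute log on the compute node.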

tail -f /var/log/nova/nova-compute.log

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373] bind_host_id, requested_ports_dict)

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373] File "/usr/lib/python2.7/site-packages/nova/network/neutronv2/api.py", line 1169, in _update_ports_for_instance

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373] vif.destroy()

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373] File "/usr/lib/python2.7/site-packages/oslo_utils/excutils.py", line 220, in __exit__

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373] self.force_reraise()

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373] File "/usr/lib/python2.7/site-packages/oslo_utils/excutils.py", line 196, in force_reraise

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373] six.reraise(self.type_, self.value, self.tb)

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373] File "/usr/lib/python2.7/site-packages/nova/network/neutronv2/api.py", line 1139, in _update_ports_for_instance

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373] port_client, instance, port_id, port_req_body)

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373] File "/usr/lib/python2.7/site-packages/nova/network/neutronv2/api.py", line 513, in _update_port

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373] _ensure_no_port_binding_failure(port)

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373] File "/usr/lib/python2.7/site-packages/nova/network/neutronv2/api.py", line 236, in _ensure_no_port_binding_failure

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373] raise exception.PortBindingFailed(port_id=port['id'])

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373] PortBindingFailed: Binding failed for port dad9c59e-41e1-46a1-9253-e184899d2cab, please check neutron logs for more information.

2025-11-06 06:22:19.031 27909 ERROR nova.compute.manager [instance: 72528eae-d702-4217-971c-89e534f0b373]

2025-11-06 06:22:20.134 27909 INFO nova.compute.manager [req-87e9e592-aabe-4b54-9286-64df4df97014 dd24d1840bd145fea9a2ac9069bc89b7 39e79bc06683420989d1d2745c22a99d - default default] [instance: 72528eae-d702-4217-971c-89e534f0b373] Took 0.14 seconds to deallocate network for instance.

2025-11-06 06:22:21.019 27909 INFO nova.scheduler.client.report [req-87e9e592-aabe-4b54-9286-64df4df97014 dd24d1840bd145fea9a2ac9069bc89b7 39e79bc06683420989d1d2745c22a99d - default default] Deleted allocation for instance 72528eae-d702-4217-971c-89e534f0b373

The core reason the instance creation failed is that the Neutron network port binding failed.
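The PortBindingFailed line carries the ID of the port that could not be bound, which is the handle you need when looking on the Neutron side. Below is a small sketch for pulling those IDs out of the log. The function name `failed_ports` is hypothetical, and the `openstack port show` columns in the usage comment are the ones I would expect to be useful, so treat them as an assumption for your client version.

```shell
#!/usr/bin/env bash
# Print the unique port IDs named in PortBindingFailed log lines.
failed_ports() {
    grep -h 'PortBindingFailed: Binding failed for port' "$@" |
        sed -E 's/.*Binding failed for port ([0-9a-f-]{36}).*/\1/' |
        sort -u
}

# Example, on the compute node (log path from this post):
#   failed_ports /var/log/nova/nova-compute.log
# Then, on the controller node, for each ID printed (if the port still exists):
#   openstack port show <port-id> -c binding_host_id -c binding_vif_type -c status
```

Nova cleans up the network allocation after the failure, as the "deallocate network" line in the log shows, so the port may already be gone. In that case the Neutron server and agent logs from the same timestamp are the next place to look.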

I went back to the /etc/neutron/plugins/ml2/linuxbridge_agent.ini configuration file and added the following setting to it.

[agent]

root_helper = sudo /usr/bin/neutron-rootwrap /etc/neutron/rootwrap.conf

Then I restarted Neutron again.

# Controller node

[root@controller ~]# sudo systemctl restart neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent

# Compute node

[root@compute ~]# sudo systemctl restart neutron-linuxbridge-agent

I created the instance again, and it still failed.

Verify the RabbitMQ user

On the controller node, confirm that the openstack user exists and has the correct permissions:

rabbitmqctl list_users

rabbitmqctl list_permissions -p /

Update the RabbitMQ configuration on all nodes

Edit the configuration files on all nodes:

/etc/nova/nova.conf:

[oslo_messaging_rabbit]

rabbit_userid = openstack

rabbit_password = 123456

rabbit_hosts = controller:5672

/etc/neutron/neutron.conf:

[oslo_messaging_rabbit]

rabbit_userid = openstack

rabbit_password = 123456

rabbit_hosts = controller:5672

Restart all related services

On the controller node:

systemctl restart rabbitmq-server

systemctl restart 'openstack-nova-*'

systemctl restart neutron-server neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent

On the compute node:

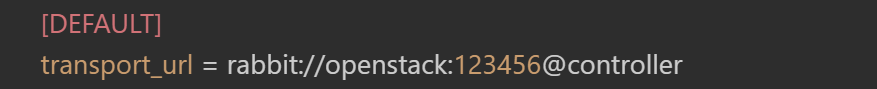

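systemctl restart neutron-linuxbridge-agent openstack-nova-compute

Also make sure that the RabbitMQ settings in the Nova configuration are identical on the compute node and the controller node.

My controller node:

My compute node: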
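Comparing the two nodes by eye is error-prone, so a textual comparison of the `transport_url` lines can help. The sketch below assumes you have copied the compute node's file to the controller first (for example with scp to a path of your choice, and the /tmp path in the comment is only a placeholder). The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Print the transport_url value(s) of a config file, without trailing spaces.
get_transport_url() {
    sed -n -E 's/^transport_url[[:space:]]*=[[:space:]]*//p' "$1" |
        sed -E 's/[[:space:]]+$//'
}

# Succeed only if both files define the same, non-empty transport_url.
same_transport_url() {
    local a b
    a=$(get_transport_url "$1")
    b=$(get_transport_url "$2")
    [ -n "$a" ] && [ "$a" = "$b" ]
}

# Example (the second path is a placeholder for the copied compute-node file):
#   same_transport_url /etc/nova/nova.conf /tmp/compute-nova.conf ||
#       echo "transport_url differs between controller and compute"
```

This is a string comparison only. Two URLs that differ in text, such as one with and one without an explicit port, are reported as different even if they would resolve to the same broker.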

Fix the compute node configuration

Modify the configuration files on the compute node:

Modify /etc/nova/nova.conf:

sudo sed -i 's#^transport_url = rabbit://openstack:123456@controller$#transport_url = rabbit://openstack:123456@controller:5672/#' /etc/nova/nova.conf

Modify /etc/neutron/neutron.conf:

sudo sed -i 's#^transport_url = rabbit://openstack:123456@controller$#transport_url = rabbit://openstack:123456@controller:5672/#' /etc/neutron/neutron.conf

Add explicit RabbitMQ settings:

Add the following to the [oslo_messaging_rabbit] section of /etc/nova/nova.conf and /etc/neutron/neutron.conf:

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_port = 5672

rabbit_userid = openstack

rabbit_password = 123456

rabbit_virtual_host = /

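sasl_mechanisms = PLAIN

Restart the compute node services

sudo systemctl restart openstack-nova-compute neutron-linuxbridge-agent

Force the agent state to resync

Run on the controller node:

# Reset the agent state

sudo neutron agent-reset-state --agent lb000c29e3f0ff

# Verify the agent list

openstack network agent list

Then verify and fix the configuration file permissions

# Run on the compute node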

sudo chown -R neutron:neutron /etc/neutron/

sudo chmod 755 /etc/neutron/

sudo restorecon -Rv /etc/neutron

Fully restart all services

On the controller node:

# Stop the services

sudo systemctl stop 'neutron-*' 'openstack-nova-*'

# Clear the cache

sudo rm -f /var/lib/neutron/* /var/lib/nova/nova.sqlite

# Start the services (order matters)

sudo systemctl start rabbitmq-server

sudo systemctl start neutron-server

sudo systemctl start neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent

sudo systemctl start openstack-nova-api openstack-nova-scheduler openstack-nova-conductor

On the compute node:

# Stop the services

sudo systemctl stop neutron-linuxbridge-agent openstack-nova-compute

# Clear the cache

sudo rm -f /var/lib/nova/nova.sqlite

# Start the services

sudo systemctl start neutron-linuxbridge-agent

sudo systemctl start openstack-nova-compute

At this point instances could be created correctly, but the console would not open and showed that the controller node refused the connection.
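A "connection refused" message usually means nothing is listening on the target port, so a quick TCP probe narrows things down before touching any configuration. The sketch below uses bash's built-in `/dev/tcp` redirection. The function name `port_open` is hypothetical, and port 6080 is simply the noVNC port that appears in this post's novncproxy_base_url.

```shell
#!/usr/bin/env bash
# Succeed if a TCP connection to HOST PORT can be opened within 3 seconds.
# Relies on bash's /dev/tcp pseudo-device and the coreutils timeout command.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Example:
#   port_open controller 6080 && echo "noVNC proxy reachable" ||
#       echo "nothing answering on controller:6080"
```

Run it both on the controller itself and from the machine where the browser runs, since the name `controller` has to resolve there as well.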

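Check the VNC proxy configuration and service status on the controller node

Key configuration:

/etc/nova/nova.conf on the controller node must set the VNC proxy parameters correctly (make sure the following is present and correct):

[vnc]

# Enable VNC

enabled = true

# Listen on all IPs (allows external access)

server_listen = 0.0.0.0

# Management IP of the controller node (must be correct)

server_proxyclient_address = 192.168.10.10

# VNC console URL (controller hostname or IP plus port 6080)

novncproxy_base_url = http://controller:6080/vnc_auto.html

Check the service status: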

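Run on the controller node:

systemctl status openstack-nova-novncproxy # Confirm that the service is running

systemctl restart openstack-nova-novncproxy # Restart the service (if it is not running)

Check the VNC configuration and instance binding on the compute node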

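Key configuration: /etc/nova/nova.conf on the compute node must set the VNC parameters correctly (make sure the following is present and correct):

[vnc]

# Enable VNC

enabled = true

# Listen on all IPs

server_listen = 0.0.0.0

# Management IP of the compute node (must be correct)

server_proxyclient_address = 192.168.10.20

# Same as on the controller node

novncproxy_base_url = http://controller:6080/vnc_auto.html

Check the instance's VNC binding state: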

virsh list --all # Find the instance's libvirt domain name (e.g. `instance-00000001`)

Once this command produced output, I checked the console again and it opened.
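To double-check the [vnc] settings on each node without opening the file, the values can be read back with a small section-aware reader. This is a sketch with hypothetical function names (`ini_get`, `check_vnc`). It also flags values that have a trailing `# ...` on the same line, because as far as I know oslo.config only treats `#` as a comment at the start of a line, so an inline comment would end up inside the value.

```shell
#!/usr/bin/env bash
# Print the raw value of KEY inside [SECTION] of an INI-style FILE.
# Usage: ini_get FILE SECTION KEY
ini_get() {
    awk -v section="[$2]" -v key="$3" '
        /^\[/ { in_section = ($0 == section); next }
        in_section && /^[[:space:]]*[#;]/ { next }
        in_section {
            pos = index($0, "=")
            if (pos == 0) next
            k = substr($0, 1, pos - 1); v = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k); gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) print v
        }' "$1"
}

# Report each [vnc] key from this post as OK, MISSING, or SUSPECT
# (SUSPECT means the value contains what looks like an inline comment).
check_vnc() {
    local key value
    for key in enabled server_listen server_proxyclient_address novncproxy_base_url; do
        value=$(ini_get "$1" vnc "$key")
        case "$value" in
            "")     echo "MISSING $key" ;;
            *" #"*) echo "SUSPECT $key = $value" ;;
            *)      echo "OK $key = $value" ;;
        esac
    done
}

# Example:
#   check_vnc /etc/nova/nova.conf
```

On the compute node, server_proxyclient_address should print that node's own management IP (192.168.10.20 in this setup), while novncproxy_base_url should match the controller's value.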