计算机视觉·TagCLIP

TagCLIP

Abstract—Contrastive Language-Image Pre-training (CLIP) has recently shown great promise in pixel-level zero-shot learning tasks. However, existing approaches utilizing CLIP’s text and patch embeddings to generate semantic masks often misidentify input pixels from unseen classes, leading to confusion between novel classes and semantically similar ones. In this work, we propose a novel approach, TagCLIP (Trusty-aware guided CLIP), to address this issue. We disentangle the ill-posed optimization problem into two parallel processes: semantic matching performed individually and reliability judgment for improving discrimination ability. Building on the idea of special tokens in language modeling representing sentence-level embeddings, we introduce a trusty token that enables distinguishing novel classes from known ones in prediction. To evaluate our approach, we conduct experiments on two benchmark datasets, PASCAL VOC 2012 and COCO-Stuff 164 K. Our results show that TagCLIP improves the Intersection over Union (IoU) of unseen classes by 7.4% and 1.7%, respectively, with negligible overheads. The code is available at here.

动机

过去的工作总是将不可见类错误分类为相似类(应该指的是可见类)。

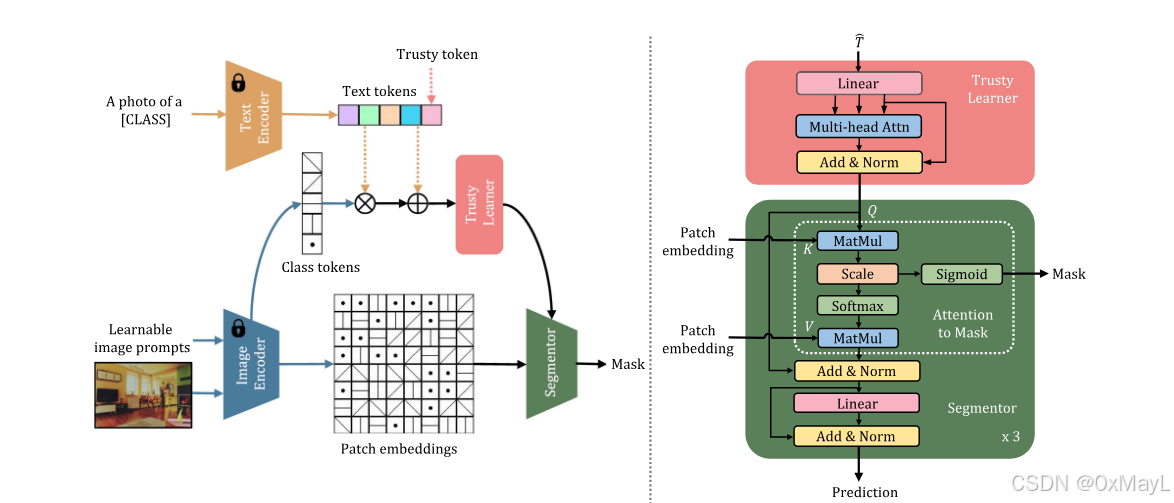

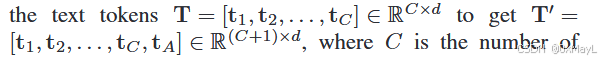

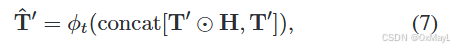

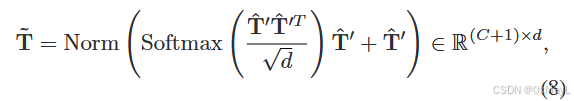

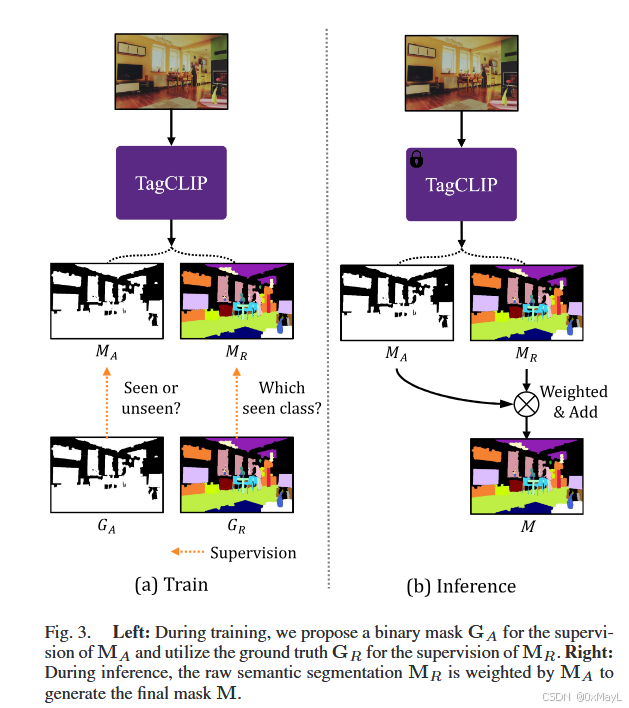

- 引入一个额外的token tCt_CtC

可信token学习器:就是一个自注意力机制。

-

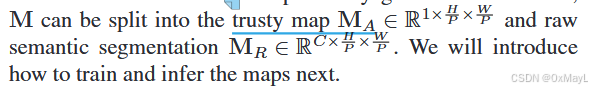

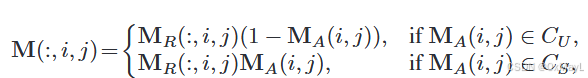

分为两个MAM_AMA和MRM_RMR,MRM_RMR用于减少对于不可见类的概率。

-

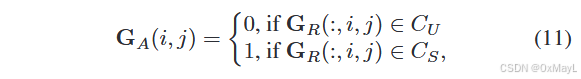

可见类为1,不可见类为0

-

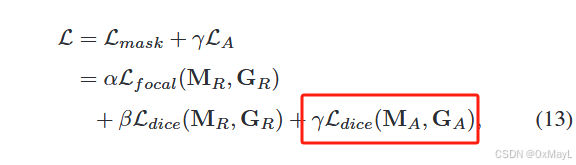

损失函数:就是一个Dice损失

推理

- 减少可见类的预测概率

- 适当调整概率

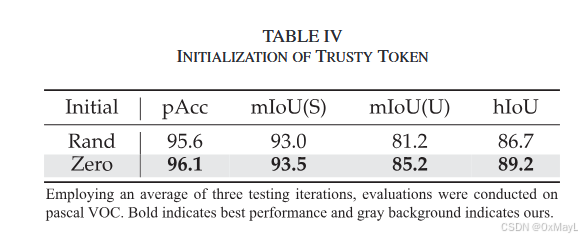

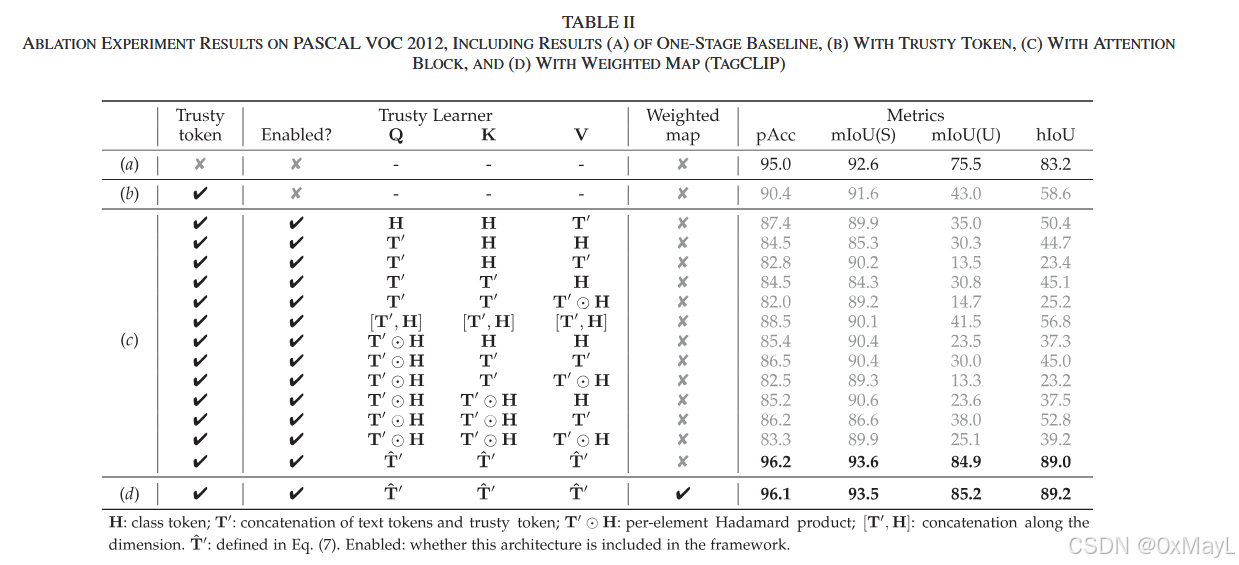

消融实验

- 作者的消融实验还是比较丰富的。可以学习以下