ELK Enterprise Log Analysis System: Study Notes

ELK Enterprise Log Analysis System

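Overview

Log analysis is the main way operations engineers troubleshoot system failures and discover problems. Logs mainly include system logs, application logs, and security logs. Operations staff and developers use logs to learn about a server's hardware and software, and to find configuration errors and their causes. Analyzing logs regularly reveals a server's load, performance, and security, so that errors can be corrected in time.

Reading logs the traditional way is impractical when there are tens of thousands of entries: it is tedious and inefficient. Centralized log management addresses this.

The open-source real-time log analysis platform ELK solves the problems described above. ELK consists of three open-source tools: Elasticsearch, Logstash, and Kibana.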

- Elasticsearch

- Logstash

- Kibana

Components

- AppServer cluster

- Logstash Agent (collector)

- Elasticsearch Cluster

- Kibana Server

- Browser

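How it works

Logstash collects the logs produced by the AppServer and stores them in the Elasticsearch cluster. Kibana queries the ES cluster, generates charts from the data, and returns them to the browser. In short, log processing and analysis generally go through the following steps:

- Centralize the logs (Beats)

- Format the logs (Logstash)

- Index and store the formatted data (Elasticsearch)

- Display the data in a front end (Kibana)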

Environment

| Host | Hostname | IP | Role |
|---|---|---|---|
| node1 | node1 | 10.1.8.20 | main |
| node2 | node2 | 10.1.8.21 | backup |
| apache | apache | 10.1.8.22 | webserver |

hosts

#node1 node2

10.1.8.20 node1

10.1.8.21 node2
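The two mappings above can simply be typed into /etc/hosts. As a minimal sketch for scripting the step, the helper below appends an entry only when the hostname is not already mapped, so it can be rerun safely. The names `add_host_entry` and `HOSTS_FILE` are illustrative and do not come from the original notes.

```shell
#!/bin/sh
# Append "IP NAME" to a hosts file unless NAME is already mapped there.
# add_host_entry and HOSTS_FILE are illustrative names (assumptions).
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

add_host_entry() {
    ip="$1"
    name="$2"
    file="${3:-$HOSTS_FILE}"
    # Look for NAME as a whole field (column 2 or later) on a non-comment line.
    if awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit found ? 0 : 1 }' "$file"; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Run as root on both nodes, this would be called as `add_host_entry 10.1.8.20 node1` and `add_host_entry 10.1.8.21 node2`.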

java

#node1 node2

[root@node ~]# java -version

openjdk version "1.8.0_181"

OpenJDK Runtime Environment (build 1.8.0_181-b13)

OpenJDK 64-Bit Server VM (build 25.181-b13, mixed mode)

#If Java is not installed:

[root@node ~]# yum install -y java
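Elasticsearch 5.x needs Java 8 or later, so it can help to check the version in a script rather than by eye. The sketch below parses the first line of `java -version` output. The helper name `java_major_version` is an assumption for illustration.

```shell
#!/bin/sh
# Extract the Java major version from `java -version` output.
# java_major_version is an illustrative helper name (assumption).
java_major_version() {
    # Pull the quoted version string, e.g. 1.8.0_181 or 11.0.2.
    ver=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
    [ -n "$ver" ] || return 1
    case "$ver" in
        # Legacy scheme: 1.8.0_181 means Java 8.
        1.*) ver=${ver#1.}; echo "${ver%%.*}" ;;
        # Modern scheme: 11.0.2 means Java 11.
        *)   echo "${ver%%[.+-]*}" ;;
    esac
}
```

Because `java -version` prints to stderr, a call would look like `java_major_version "$(java -version 2>&1)"`.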

elasticsearch

#node1 node2

[root@node ~]# cd /opt

[root@node opt]# rpm -ivh elasticsearch-5.5.0.rpm

#Reload systemd and enable the service

[root@node opt]# systemctl daemon-reload

[root@node opt]# systemctl enable elasticsearch.service

#Back up the configuration, then edit it

[root@node opt]# cp /etc/elasticsearch/elasticsearch.yml /etc/elasticsearch/elasticsearch.yml.bak

[root@node opt]# vim /etc/elasticsearch/elasticsearch.yml

#Line 17: cluster name

cluster.name: my-elk-cluster

#Line 23: node name (use node2 on node2)

node.name: node1

#Line 33: data path

path.data: /data/elk_data

#Line 37: log path

path.logs: /var/log/elasticsearch/

#Line 43: do not lock memory at startup. Locking physical memory keeps ES memory from being swapped out, that is, it keeps ES off the swap partition; frequent swapping drives IOPS up

bootstrap.memory_lock: false

#Line 55: IP address the service binds to; 0.0.0.0 means all addresses

network.host: 0.0.0.0

#Line 59: listen on port 9200

http.port: 9200

#Line 68: cluster discovery via unicast

discovery.zen.ping.unicast.hosts: ["node1", "node2"]

[root@node opt]# grep -v "^#" /etc/elasticsearch/elasticsearch.yml

cluster.name: my-elk-cluster

node.name: node1

path.data: /data/elk_data

path.logs: /var/log/elasticsearch/

bootstrap.memory_lock: false

network.host: 0.0.0.0

http.port: 9200

discovery.zen.ping.unicast.hosts: ["node1", "node2"]

#Create the data directory and start the service

[root@node opt]# mkdir -p /data/elk_data

[root@node opt]# chown elasticsearch:elasticsearch /data/elk_data/

[root@node1 elasticsearch]# systemctl start elasticsearch.service

#Verify in a browser on the host machine

#10.1.8.20:9200

#10.1.8.21:9200
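Besides opening the two URLs in a browser, the cluster can be checked from the command line through the `_cluster/health` endpoint. The sketch below is one possible way to do that. The helper names `cluster_status` and `check_cluster` are assumptions, and the status is extracted with plain text matching rather than a JSON parser.

```shell
#!/bin/sh
# Pull the "status" value (green / yellow / red) out of a _cluster/health response.
# cluster_status and check_cluster are illustrative helper names (assumptions).
cluster_status() {
    printf '%s\n' "$1" |
        sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' |
        head -n 1
}

# Query one node and print its view of the cluster status.
# The default host is node1 from the environment table.
check_cluster() {
    host="${1:-10.1.8.20}"
    body=$(curl -s "http://${host}:9200/_cluster/health?pretty") || return 1
    cluster_status "$body"
}
```

Calling `check_cluster 10.1.8.20` and `check_cluster 10.1.8.21` should print the same status once both nodes have joined `my-elk-cluster`. A `yellow` status means some replica shards are unassigned.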

Node.js

#node1 node2

[root@node opt]# yum install gcc gcc-c++ make -y

[root@node opt]# tar xzvf node-v8.2.1.tar.gz

[root@node opt]# cd node-v8.2.1/

[root@node node-v8.2.1]# ./configure

[root@node node-v8.2.1]# make -j4

[root@node node-v8.2.1]# make install

phantomjs

[root@node node-v8.2.1]# cd /usr/local/src/

[root@node src]# tar xjvf phantomjs-2.1.1-linux-x86_64.tar.bz2

[root@node src]# cd phantomjs-2.1.1-linux-x86_64/bin

[root@node bin]# cp phantomjs /usr/local/bin

elasticsearch-head

[root@node bin]# cd /usr/local/src/

[root@node src]# tar xzvf elasticsearch-head.tar.gz

[root@node src]# cd elasticsearch-head/

[root@node elasticsearch-head]# cd

[root@node ~]# vim /etc/elasticsearch/elasticsearch.yml

#Enable cross-origin (CORS) access; the default is false

http.cors.enabled: true

#Origins allowed for cross-origin access

http.cors.allow-origin: "*"

#Restart the service

[root@node ~]# systemctl restart elasticsearch

[root@node ~]# cd /usr/local/src/elasticsearch-head/

#Run in the background

[root@node elasticsearch-head]# npm run start &

[1] 114729

#Verify in a browser on the host machine

#10.1.8.20:9100

#10.1.8.21:9100
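elasticsearch-head runs in the browser and calls Elasticsearch from a different origin, which is why the two `http.cors.*` settings are needed. As a rough check that they took effect after the restart, the sketch below sends a request with an `Origin` header and looks for the `Access-Control-Allow-Origin` response header. The helper names and the `http://example.com` origin are assumptions for illustration.

```shell
#!/bin/sh
# Succeed if a block of HTTP response headers contains Access-Control-Allow-Origin.
# has_cors_header and check_cors are illustrative helper names (assumptions).
has_cors_header() {
    printf '%s\n' "$1" | grep -qi '^access-control-allow-origin:'
}

# Send a request carrying an Origin header, as a browser on another origin would,
# and check whether Elasticsearch answers with the CORS header.
# Any origin should do here because allow-origin is "*"; example.com is a placeholder.
check_cors() {
    host="${1:-10.1.8.20}"
    headers=$(curl -s -D - -o /dev/null \
        -H "Origin: http://example.com" "http://${host}:9200/") || return 1
    has_cors_header "$headers"
}
```

For example, `check_cors 10.1.8.20 && echo "CORS enabled"` prints the message only when the header is present.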

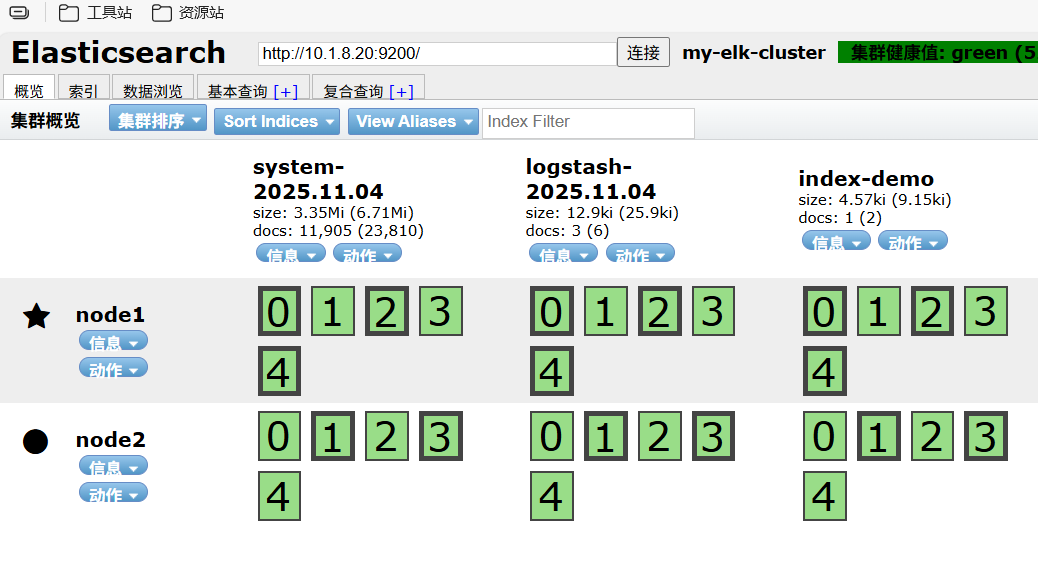

Test demo

[root@node1 ~]# curl -XPUT 'localhost:9200/index-demo/test/1?pretty' -H 'Content-Type: application/json' -d '{"user":"zhangsan","mesg":"hello world"}'

{"_index" : "index-demo","_type" : "test","_id" : "1","_version" : 1,"result" : "created","_shards" : {"total" : 2,"successful" : 2,"failed" : 0},"created" : true}

#Verify index-demo in a browser on the host machine

Hands-on

Shipping logs with Logstash

#apache

[root@apache ~]# yum install -y httpd

[root@apache ~]# systemctl enable httpd --now

#Check the Java environment

[root@apache ~]# java -version

openjdk version "1.8.0_181"

OpenJDK Runtime Environment (build 1.8.0_181-b13)

OpenJDK 64-Bit Server VM (build 25.181-b13, mixed mode)

#Install Logstash

[root@apache ~]# cd /opt

[root@apache opt]# rpm -ivh logstash-5.5.1.rpm

[root@apache opt]# systemctl start logstash.service

[root@apache opt]# systemctl enable logstash.service

#Create a symlink for the logstash binary

[root@apache opt]# ln -s /usr/share/logstash/bin/logstash /usr/local/bin/

Standard input/output test

[root@apache opt]# logstash -e 'input { stdin{} } output { stdout{} }'

#Type www.baidu.com to test

10:44:15.349 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

2025-11-04T02:44:21.730Z apache www.baidu.com

#Test structured output with the rubydebug codec

[root@apache opt]# logstash -e 'input { stdin{} } output { stdout{ codec=>rubydebug } }'

10:46:29.438 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

www.sina.com

{"@timestamp" => 2025-11-04T02:46:58.639Z, "@version" => "1", "host" => "apache", "message" => "www.sina.com"}

www.baidu.com

{"@timestamp" => 2025-11-04T02:47:36.655Z, "@version" => "1", "host" => "apache", "message" => "www.baidu.com"}

Configuring log output on the apache host

#Logstash configuration file

[root@apache log]# chmod o+r /var/log/messages

[root@apache log]# ll messages

-rw----r--. 1 root root 1007400 Nov 4 11:16 messages

[root@apache opt]# vim /etc/logstash/conf.d/system.conf

input {
  file {
    path => "/var/log/messages"
    type => "system"
    start_position => "beginning"
  }
}

output {
  elasticsearch {
    hosts => ["10.1.8.20:9200"]
    index => "system-%{+YYYY.MM.dd}"
  }
}

[root@apache opt]# systemctl restart logstash.service
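The `index => "system-%{+YYYY.MM.dd}"` setting produces one index per day. Logstash formats the date from the event's `@timestamp`, which is in UTC, so the index date can differ from the local date around midnight. The sketch below builds the expected index name and looks it up with the `_cat/indices` API. The helper names `daily_index` and `show_index` are assumptions, and 10.1.8.20 is node1 from the environment table.

```shell
#!/bin/sh
# Build the index name Logstash would write to for a given day.
# daily_index and show_index are illustrative helper names (assumptions).
# The date defaults to today in UTC, matching how %{+YYYY.MM.dd} is evaluated.
daily_index() {
    prefix="$1"
    day="${2:-$(date -u +%Y.%m.%d)}"
    printf '%s-%s\n' "$prefix" "$day"
}

# List indices on node1 and keep the header line plus the expected index.
show_index() {
    name=$(daily_index "$1")
    curl -s "http://10.1.8.20:9200/_cat/indices?v" | grep -E "^health|${name}"
}
```

After Logstash has shipped some events, `show_index system` should list today's `system-` index. If only the header line appears, no events have been indexed yet.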

Installing Kibana

#node1 node2

[root@node ~]# cd /usr/local/src/

[root@node src]# rpm -ivh kibana-5.5.1-x86_64.rpm

[root@node src]# cd /etc/kibana/

[root@node kibana]# cp kibana.yml kibana.yml.bak

[root@node kibana]# vi kibana.yml

#Line 2: port Kibana listens on

server.port: 5601

#Line 7: address Kibana listens on

server.host: "0.0.0.0"

#Line 21: Elasticsearch instance to connect to

elasticsearch.url: "http://10.1.8.20:9200"

#Line 30: Kibana keeps its own data in the .kibana index in Elasticsearch

kibana.index: ".kibana"

[root@node kibana]# systemctl start kibana.service

[root@node kibana]# systemctl enable kibana.service
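The elasticsearch.yml edits were double-checked with `grep -v "^#"`. The same idea can be applied to kibana.yml. The sketch below also skips blank and indented comment lines and reads back a single setting. The helper names `active_settings` and `setting_value` are assumptions, and the matching is deliberately simple (top-level `key: value` lines only).

```shell
#!/bin/sh
# Print only the active (non-comment, non-blank) lines of a config file.
# active_settings and setting_value are illustrative helper names (assumptions).
active_settings() {
    grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Read the value of one top-level "key: value" setting, without surrounding quotes.
setting_value() {
    active_settings "$1" |
        sed -n "s/^$2:[[:space:]]*//p" |
        head -n 1 |
        sed 's/^"\(.*\)"$/\1/'
}
```

For example, `active_settings /etc/kibana/kibana.yml` should show the four edited settings, and `setting_value /etc/kibana/kibana.yml elasticsearch.url` prints the Elasticsearch address Kibana will use.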

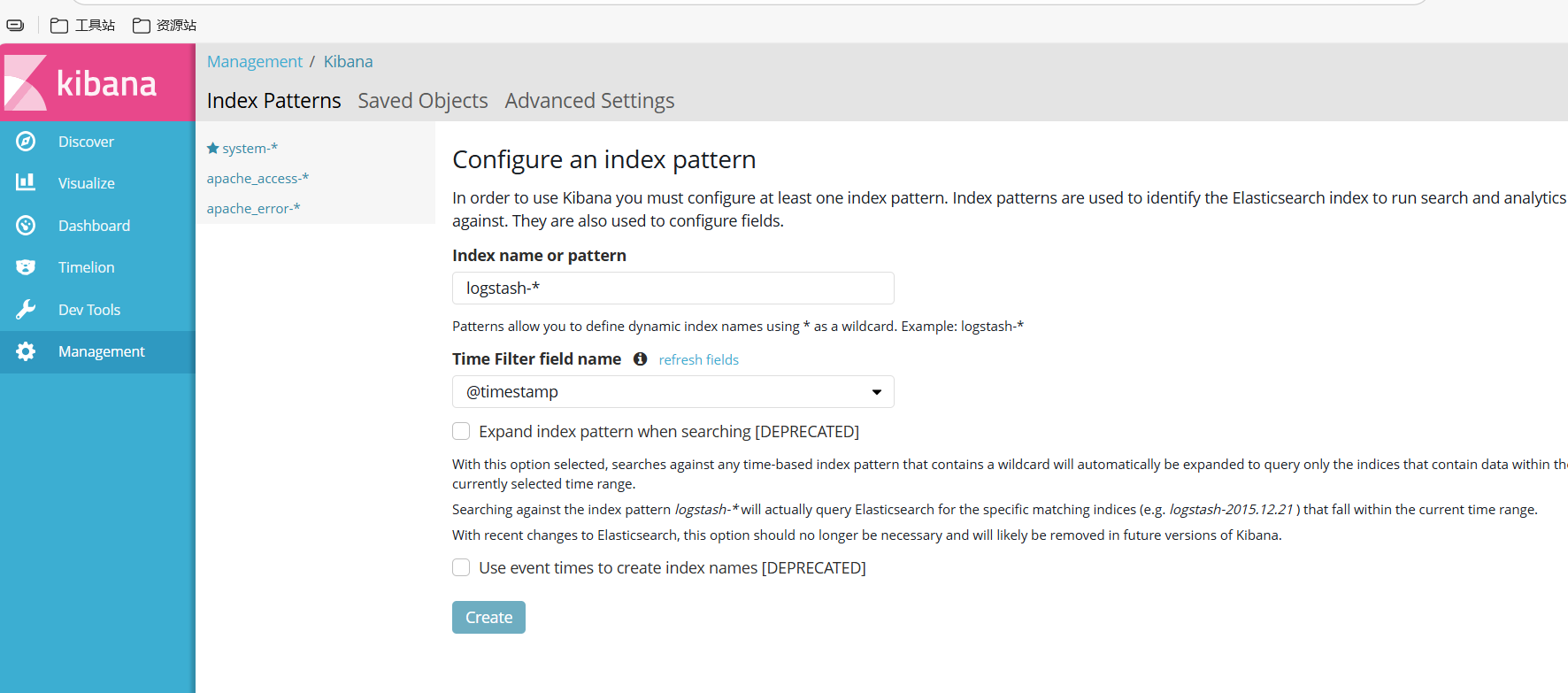

Set the index pattern to system-*

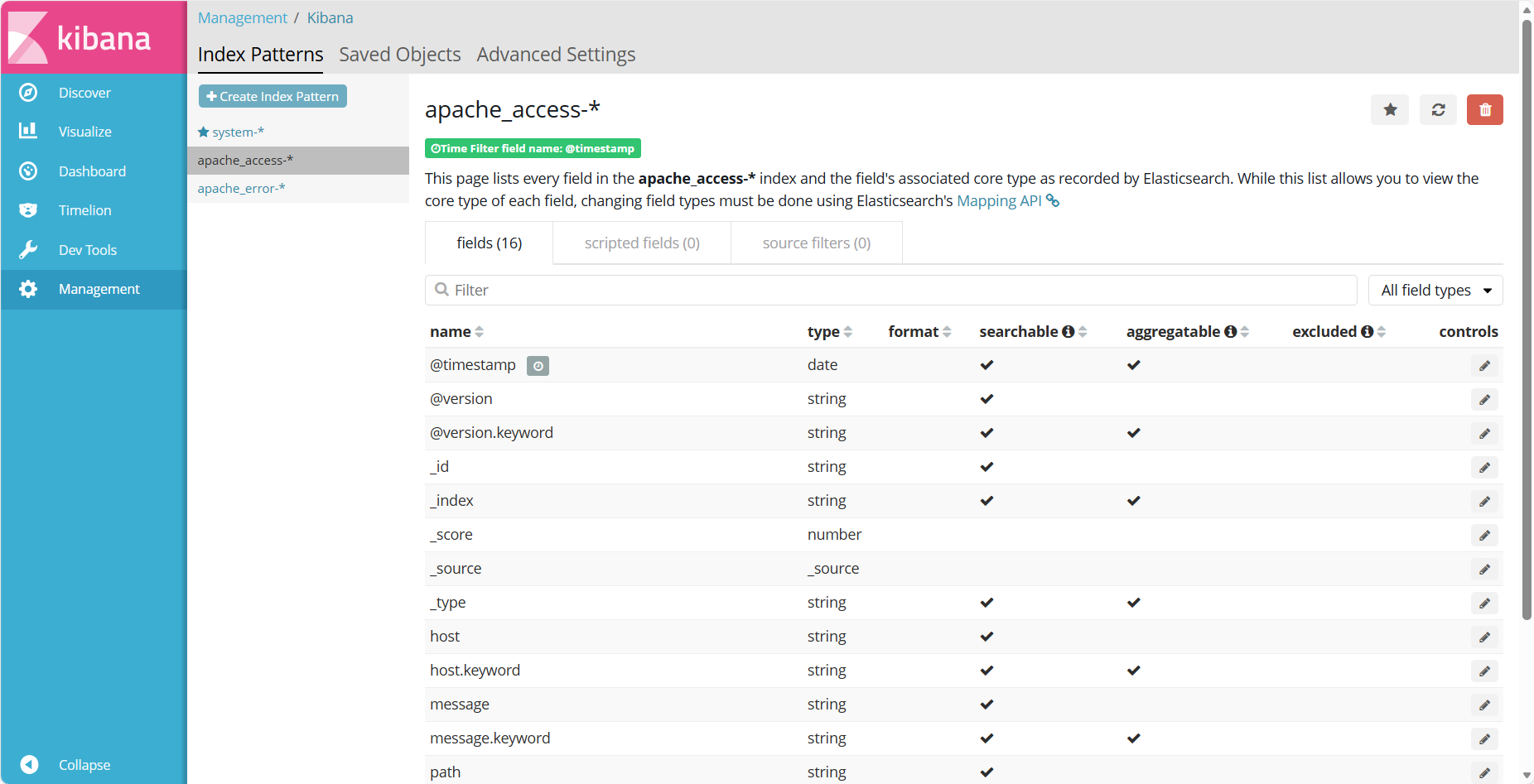

Collecting Apache logs

[root@apache opt]# cd /etc/logstash/conf.d/

[root@apache conf.d]# touch apache_log.conf

[root@apache conf.d]# vim apache_log.conf

input {
  file {
    path => "/etc/httpd/logs/access_log"
    type => "access"
    start_position => "beginning"
  }
  file {
    path => "/etc/httpd/logs/error_log"
    type => "error"
    start_position => "beginning"
  }
}

output {
  if [type] == "access" {
    elasticsearch {
      hosts => ["10.1.8.20:9200"]
      index => "apache_access-%{+YYYY.MM.dd}"
    }
  }
  if [type] == "error" {
    elasticsearch {
      hosts => ["10.1.8.20:9200"]
      index => "apache_error-%{+YYYY.MM.dd}"
    }
  }
}

[root@apache conf.d]# logstash -f apache_log.conf

#In a new terminal, restart the httpd service to generate error-log entries

#Visit the test page to generate access-log entries
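Before looking for the new indices, it can be worth confirming that the access log actually received entries. The sketch below counts requests per HTTP status code. It assumes Apache's default common or combined log format, in which the status code is the ninth whitespace-separated field. The helper name `count_by_status` is illustrative.

```shell
#!/bin/sh
# Count requests per HTTP status code in an Apache access log.
# Assumes the default common/combined log format (status code in field 9).
# count_by_status is an illustrative helper name (assumption).
count_by_status() {
    awk '{ n[$9]++ } END { for (s in n) print s, n[s] }' "$1" | sort
}
```

Requests can be generated from any machine with `curl -s -o /dev/null http://10.1.8.22/`, after which `count_by_status /etc/httpd/logs/access_log` on the apache host prints one line per status code seen.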

Creating the index pattern
