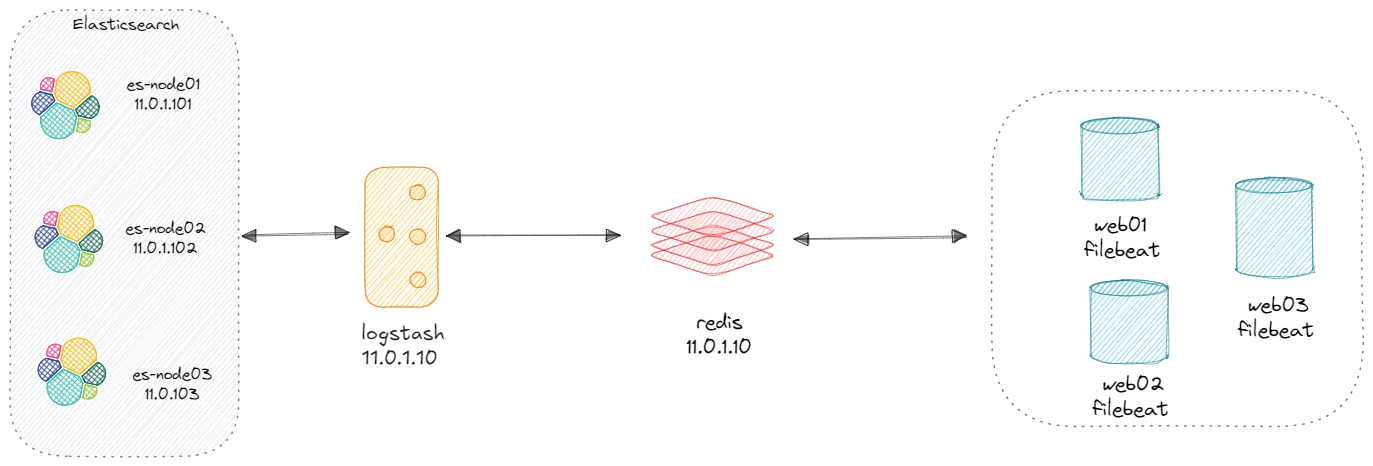

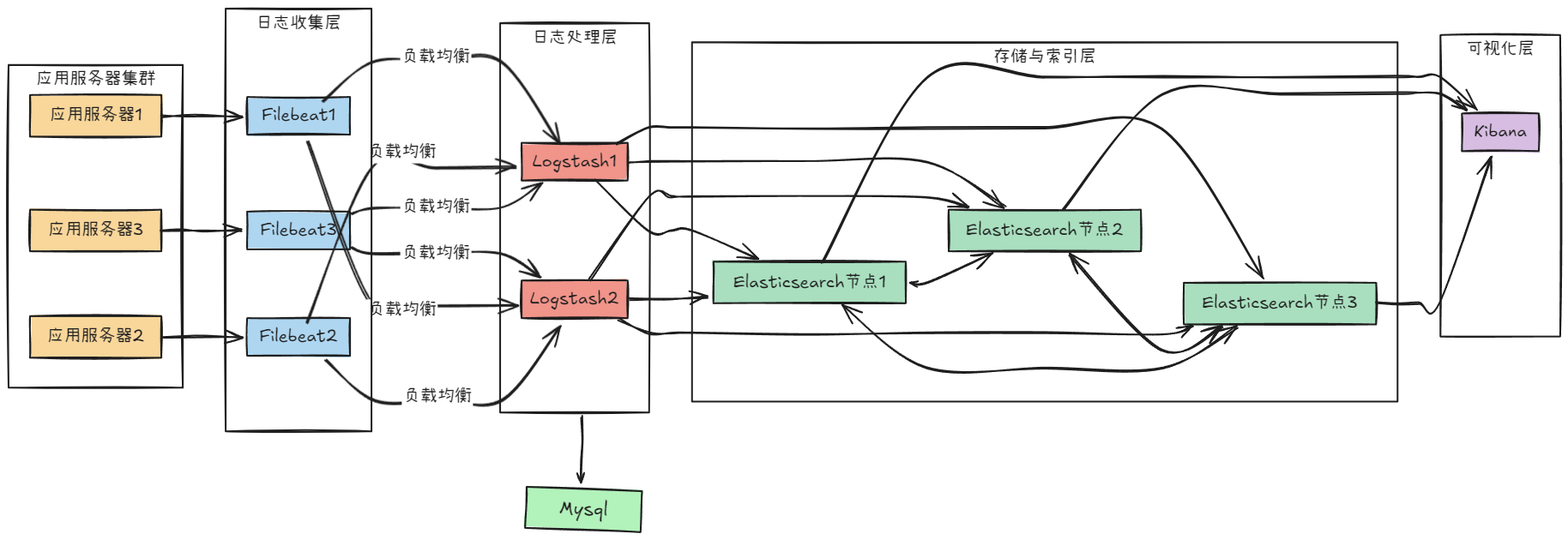

EL(F)K日志分析系统

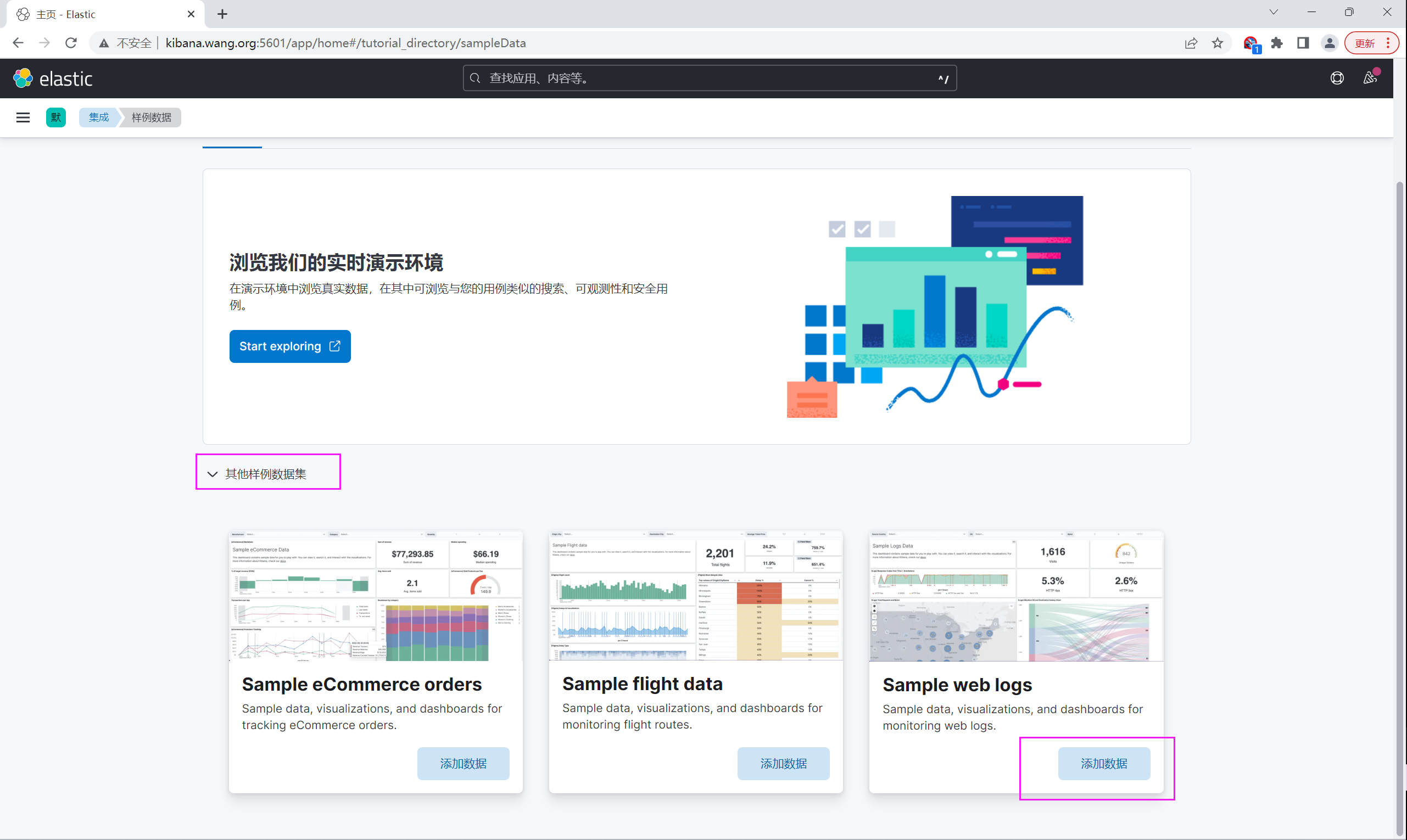

1 概述

早期IT架构中的系统和应用的日志分散在不同的主机和文件,如果应用出现问题,开发和运维人员想排

查原因,就要先找到相应的主机上的日志文件再进行查找和分析,所以非常不方便,而且还涉及到权限

安全问题,ELK的出现就很好的解决这一问题

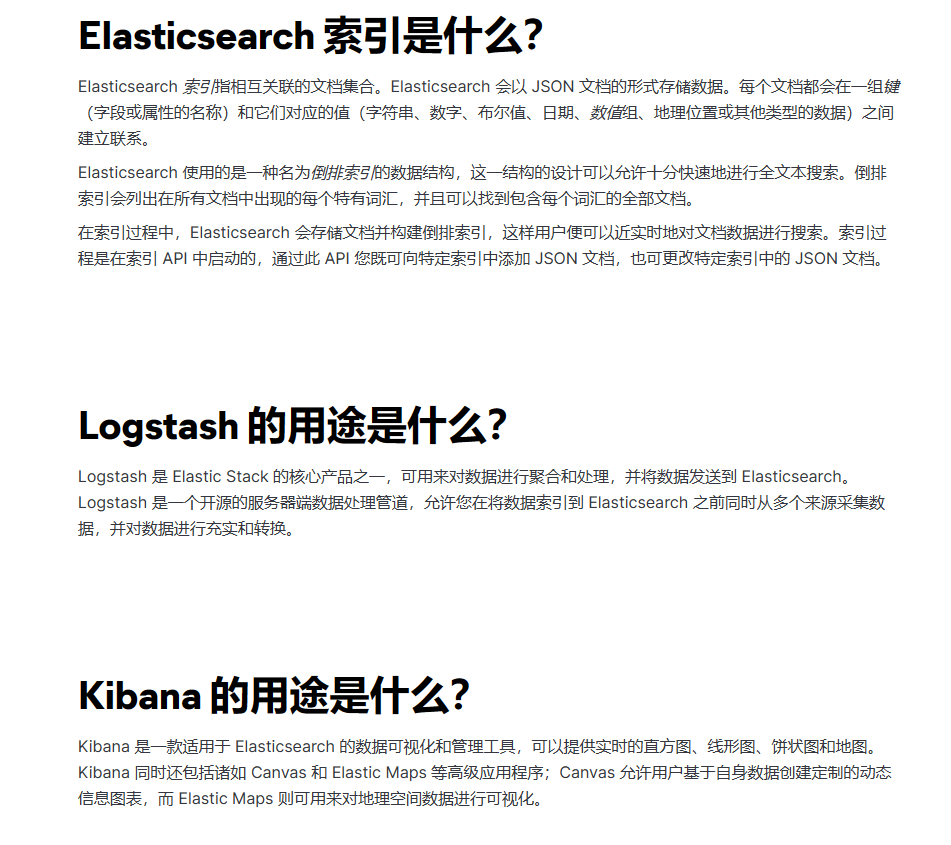

ELK 是由一家 Elastic 公司开发的三个开源项目的首字母缩写,即是三个相关的项目组成的系统

这三个项目分别是:Elasticsearch、Logstash 和 Kibana。三个项目各有不同的功能

- Elasticsearch 是一个实时的全文搜索,存储库和分析引擎。

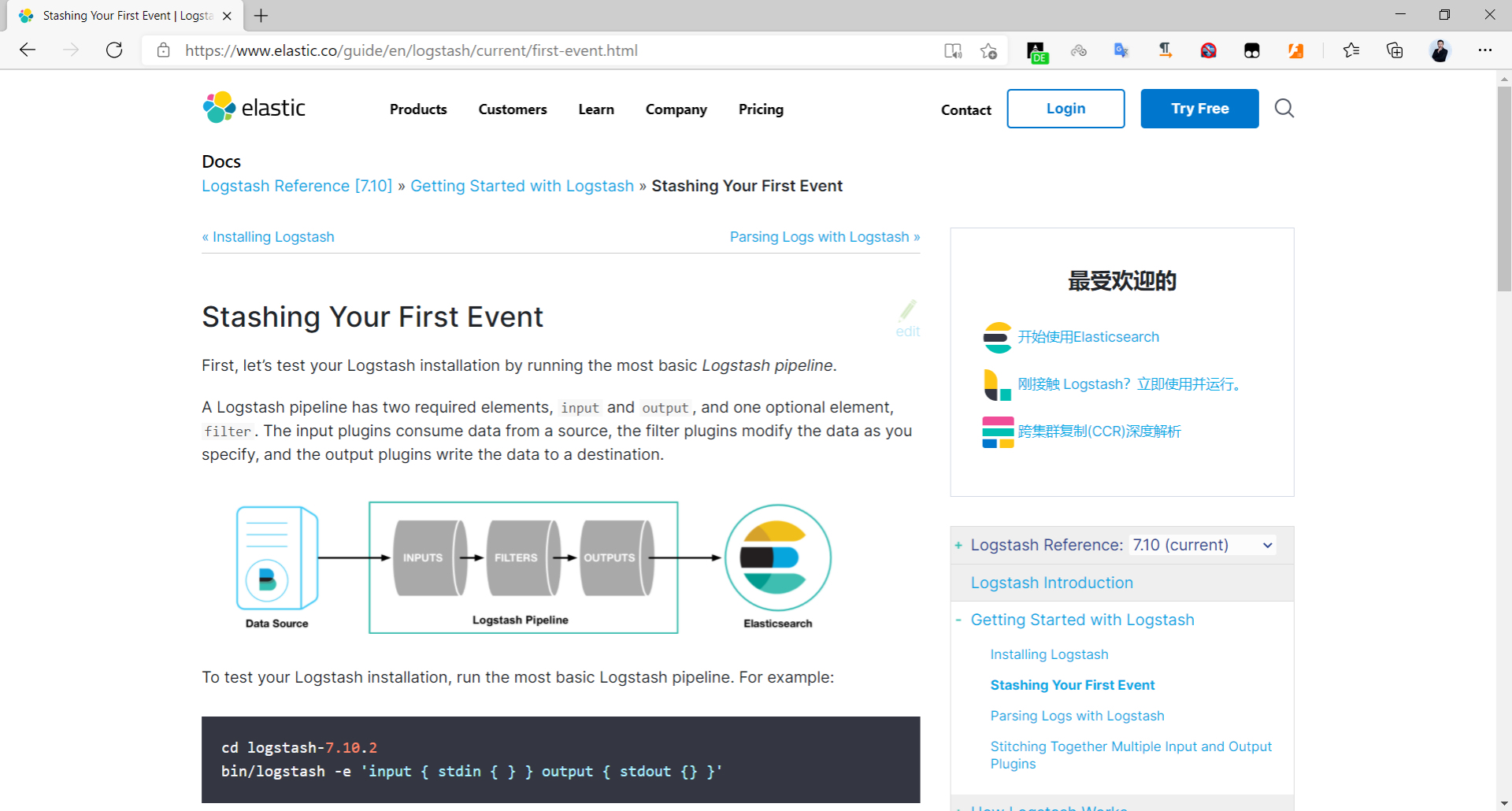

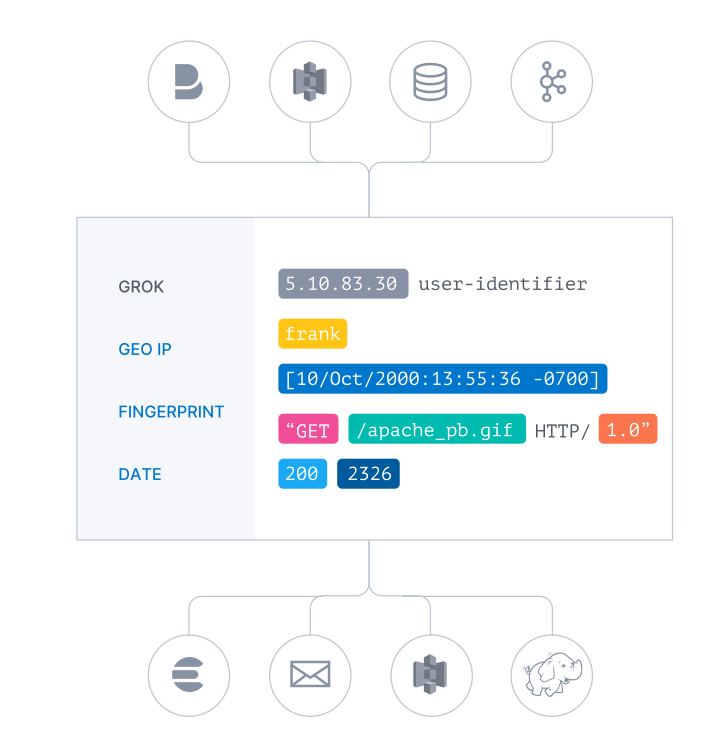

- Logstash 是数据处理的管道,能够同时从多个来源采集数据,转换数据,然后将数据发送到诸如Elasticsearch 等存储库中。

- Kibana 则可以让用户在 Elasticsearch 中使用图形和图表对数据进行可视化。

Elastic Stack 是一套适用于数据采集、扩充、存储、分析和可视化的免费开源工具。

目前 Elastic Stack 包括一系列丰富的轻量型数据采集代理,这些代理统称为 Beats,可用来向Elasticsearch 发送数据。

ELK 版本演进: 0.X,1.X,2,X,5.X,6,X,7.X,8.X …

官网: https://www.elastic.co/

ELK官方介绍: https://www.elastic.co/cn/what-is/elk-stack

ELK 下载链接: https://www.elastic.co/cn/downloads/

ELK 说明: https://www.elastic.co/guide/cn/index.html

ELK 权威指南: https://www.elastic.co/guide/cn/elasticsearch/guide/current/index.html

ELK stack的主要优点:

- 功能强大:Elasticsearch 是实时全文索引,具有强大的搜索功能

- 配置相对简单:Elasticsearch 全部基于 JSON,Logstash使用模块化配置,Kibana的配置都比较简单。

- 检索性能高效:基于优秀的设计,每次查询可以实时响应,即使百亿级数据的查询也能达到秒级响应。

- 集群线性扩展:Elasticsearch 和 Logstash都可以灵活线性扩展

- 前端操作方便:Kibana提供了比较美观UI前端,操作也比较简单

官方下载

https://www.elastic.co/cn/downloads/

EFK 由ElasticSearch、Fluentd和Kibana三个开源工具组成。

Fluentd是一个实时开源的数据收集器,和logstash功能相似,这三款开源工具的组合为日志数据提供了分布式的实时搜集与分析的监控系统。

Fluentd官网和文档:

https://www.fluentd.org/

https://docs.fluentd.org/

2 Elasticsearch 部署与管理

Elasticsearch 基于 Java 语言开发,利用全文搜索引擎 Apache Lucene 实现

- Elasticsearch 很快

- Elasticsearch 具有分布式的本质特征

- Elasticsearch 包含一系列广泛的功能

- Elastic Stack 简化了数据采集、可视化和报告过程

Elasticsearch 是一个分布式的免费开源搜索和分析引擎,适用于包括文本、数字、地理空间、结构化和非结构化数据等在内的所有类型的数据。

Elasticsearch 基于 Java 语言实现

2.1 Elasticsearch 安装说明

官方文档

https://www.elastic.co/guide/en/elastic-stack/index.html

https://www.elastic.co/guide/en/elasticsearch/reference/master/install-elasticsearch.html

部署方式

- 包安装

- 二进制安装

- Docker 部署

- Ansible 批量部署

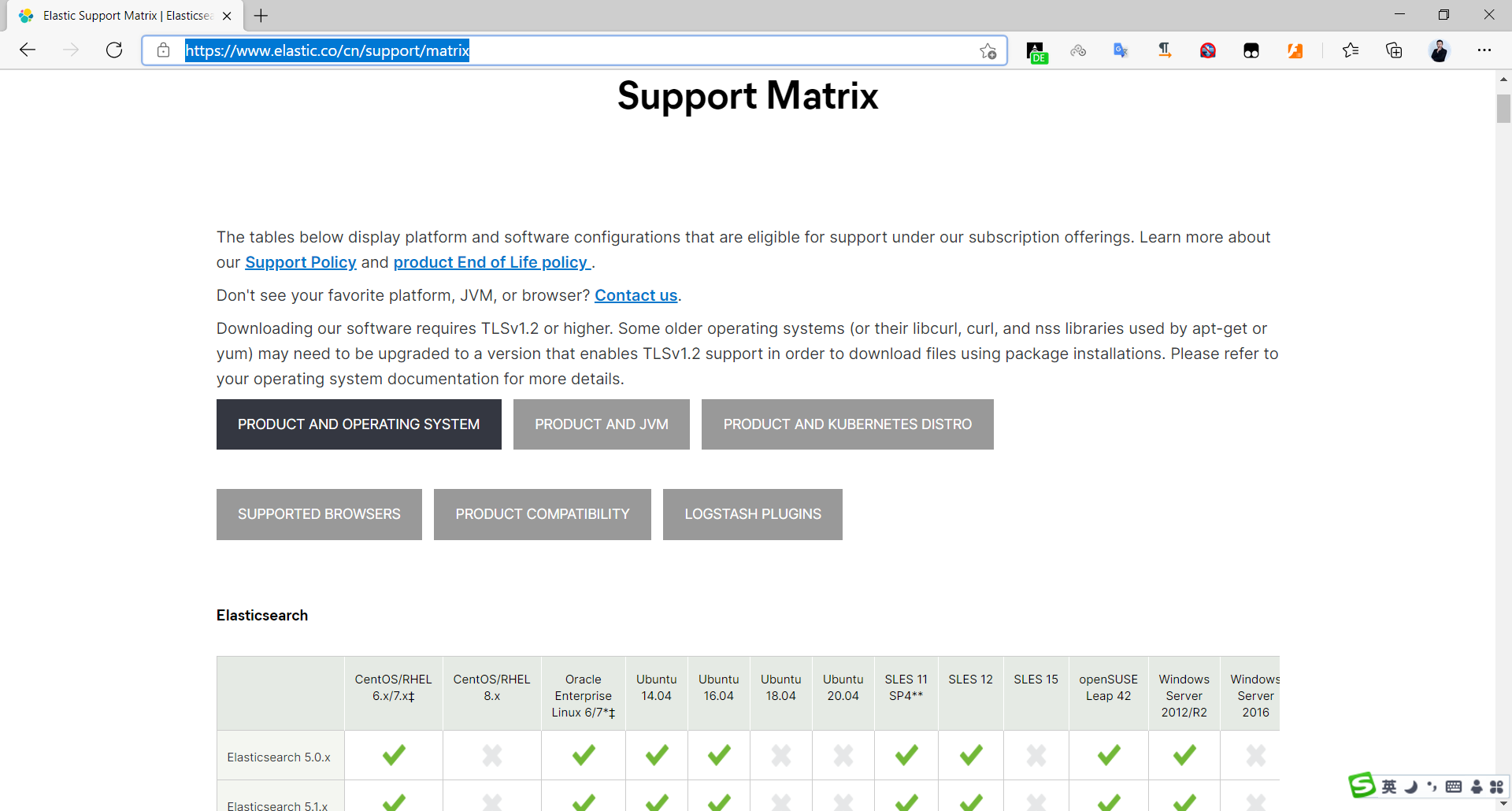

ES支持操作系统版本和 Java 版本官方说明

https://www.elastic.co/cn/support/matrix

2.2 Elasticsearch 安装前准备

2.2.1 安装前环境初始化

CPU 2C

内存4G或更多

操作系统: Ubuntu22.04,Ubuntu20.04,Ubuntu18.04,Rocky8.X,Centos 7.X

操作系统盘50G

主机名设置规则为nodeX.dinginx.org

生产环境建议准备单独的数据磁盘

2.2.1.1 主机名

#各服务器配置自己的主机名

[root@ubuntu2004 ~]# hostnamectl set-hostname es-node01.dinginx.org

[root@ubuntu2004 ~]# hostnamectl set-hostname es-node02.dinginx.org

[root@ubuntu2004 ~]# hostnamectl set-hostname es-node03.dinginx.org

2.2.1.2 关闭防火墙和SELinux

关闭防所有服务器的防火墙和 SELinux#RHEL系列的系统执行下以下配置

[root@es-node01 ~]# systemctl disable firewalld

[root@es-node01 ~]# systemctl disable NetworkManager

[root@es-node01 ~]# sed -i '/SELINUX/s/enforcing/disabled/' /etc/selinux/config

[root@es-node01 ~]# reboot

2.2.1.3 各服务器配置本地域名解析

#es-node01、es-node02、es-node03三节点执行cat >> /etc/hosts <<EOF

11.0.1.101 es-node01.dinginx.org

11.0.1.102 es-node02.dinginx.org

11.0.1.103 es-node03.dinginx.org

EOF

2.2.1.4 优化资源限制配置

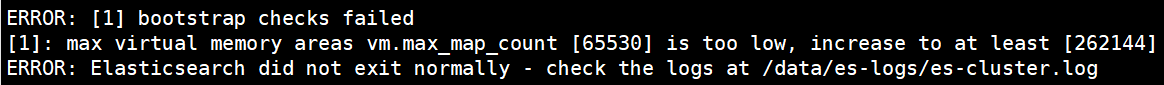

2.2.1.4.1 修改内核参数

内核参数 vm.max_map_count 用于限制一个进程可以拥有的VMA(虚拟内存区域)的数量

使用默认系统配置,二进制安装时会提示下面错误,包安装会自动修改此配置

#查看默认值

[root@es-node01 ~]#sysctl -a|grep vm.max_map_count

vm.max_map_count = 65530#修改配置

[root@es-node01 ~]#echo "vm.max_map_count = 262144" >> /etc/sysctl.conf

[root@es-node01 ~]#sysctl -p

vm.max_map_count = 262144#设置系统最大打开的文件描述符数

[root@es-node01 ~]#echo "fs.file-max = 11.0.10" >> /etc/sysctl.conf

[root@es-node01 ~]#sysctl -p

vm.max_map_count = 262144

fs.file-max = 11.0.10#Ubuntu20.04/22.04默认值已经满足要求

[root@ubuntu2204 ~]#sysctl fs.file-max

fs.file-max = 9223372036854775807

范例: Ubuntu 基于包安装后会自动修改文件

root@ubuntu2204:~# cat /usr/lib/sysctl.d/elasticsearch.conf

vm.max_map_count=262144

2.2.1.4.2 修改资源限制配置(可选)

#在node节点执行cat >> /etc/security/limits.conf <<EOF

* soft core unlimited

* hard core unlimited

* soft nproc 11.0.10

* hard nproc 11.0.10

* soft nofile 11.0.10

* hard nofile 11.0.10

* soft memlock 32000

* hard memlock 32000

* soft msgqueue 8192000

* hard msgqueue 8192000

EOF

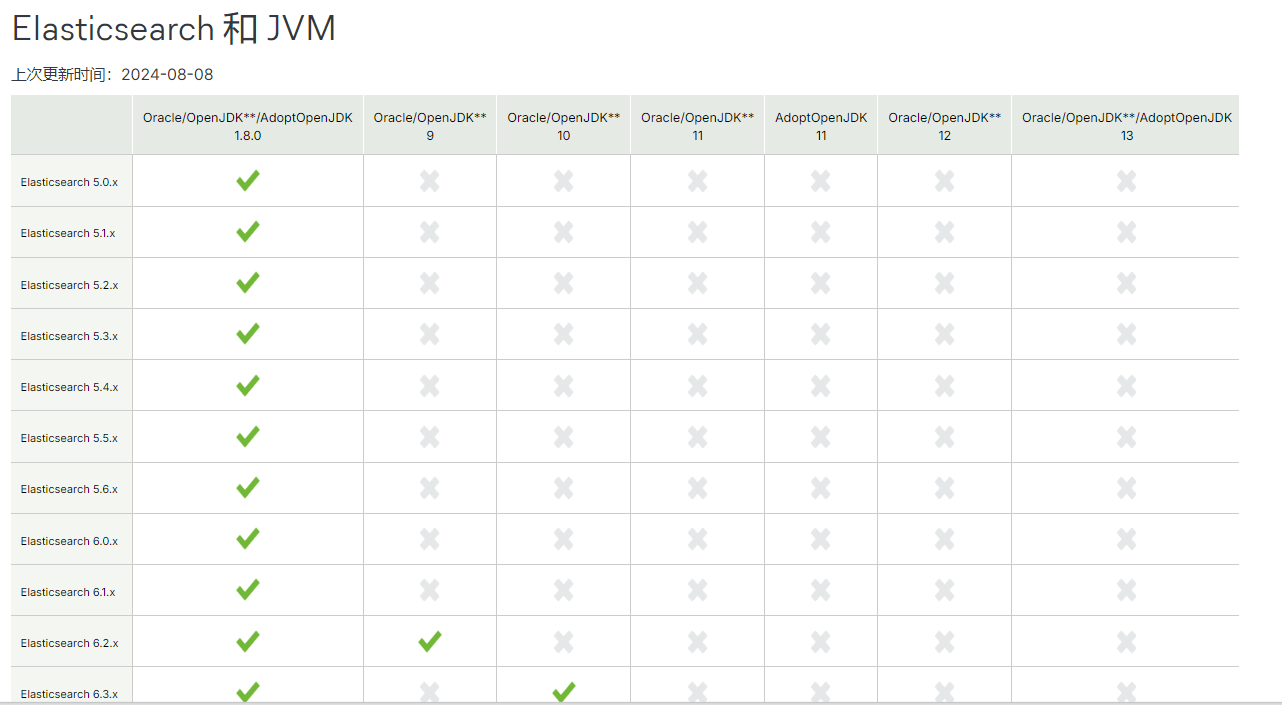

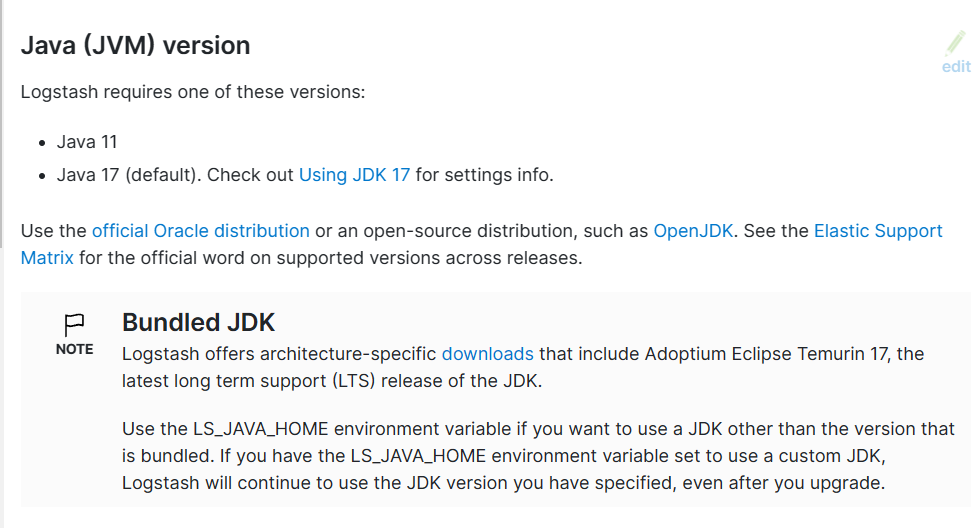

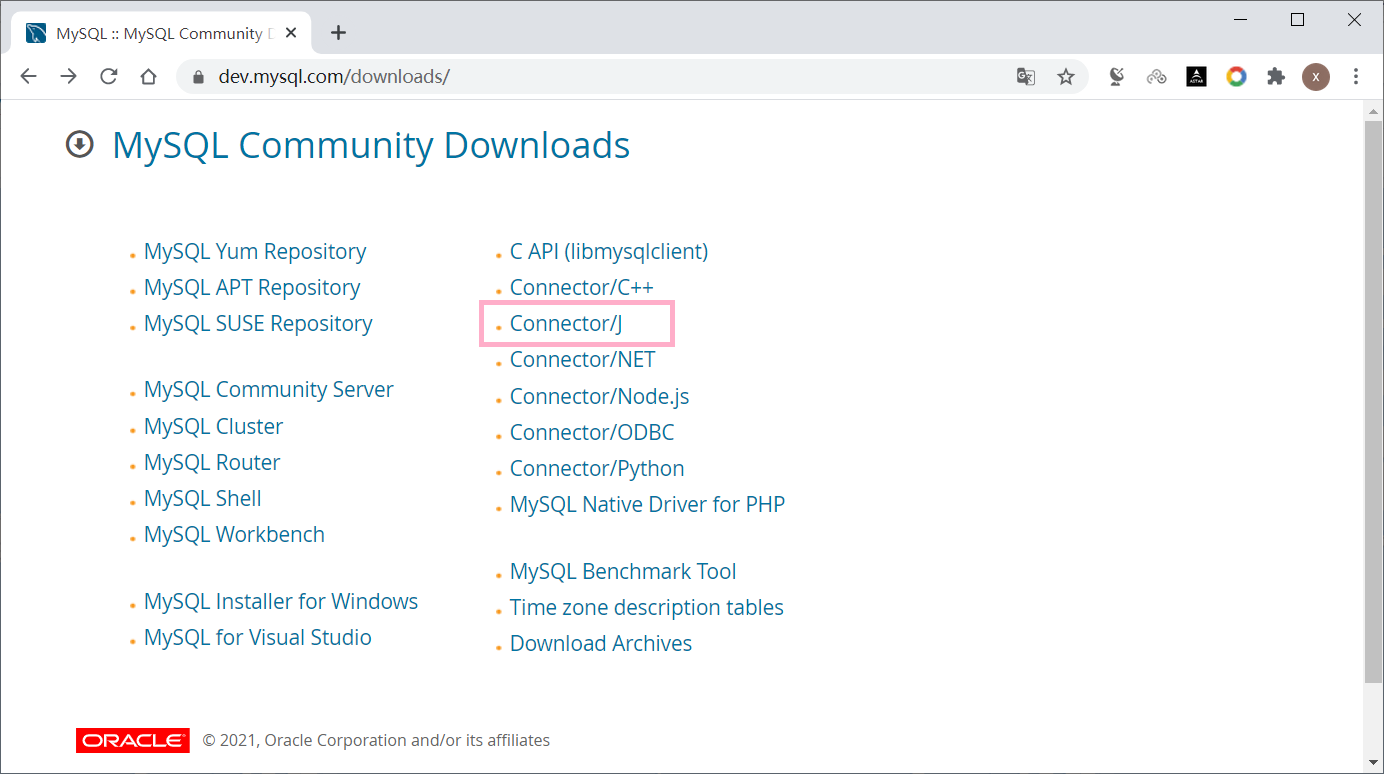

2.2.2 安装 Java 环境 (可选)

Elasticsearch 是基于java的应用,所以依赖JDK环境

注意: 安装7.X以后版本官方建议要安装集成JDK的包,所以无需再专门安装 JDK

关于JDK环境说明

1.x 2.x 5.x 6.x都没有集成JDK的安装包,也就是需要自己安装java环境

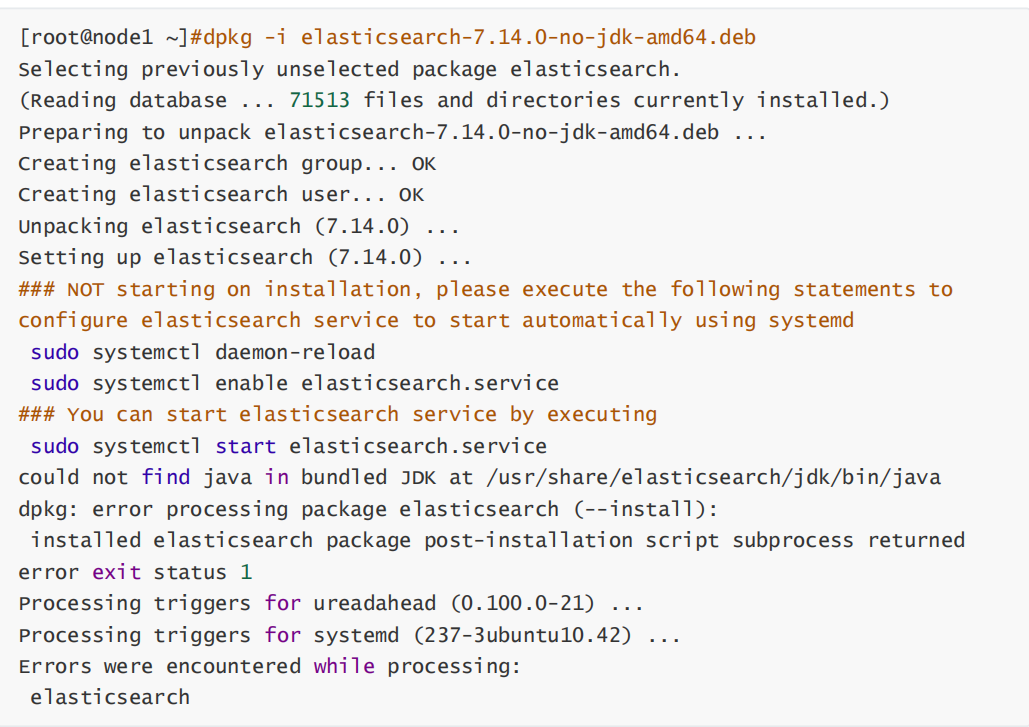

7.x 版本的安装包分为带JDK和不带JDK两种包,带JDK的包在安装时不需要再安装java,如果不带JDK的包

仍然需要自己去安装java

8.X 版本内置JDK,不再支持自行安装的JDK

如果安装no-jdk的包,才需要安装java环境

官网JAVA版支持说明

https://www.elastic.co/cn/support/matrix#matrix_jvm

因为 Elasticsearch 服务运行需要 Java环境,如果要安装没有JDK的包,需要提前安装JAVA环境,可以使用以下方式安装

如果没有java 环境,安装elasticsearch时会出下面错误提示

方式一:直接使用包管理器yum/apt安装openjdk

[root@es-node01 ~]#yum -y install java-1.8.0-openjdk

[root@es-node01 ~]#apt update;apt -y install openjdk-8-jdk

方式二:本地安装在oracle官网下载rpm安装包:

[root@es-node01 ~]# yum -y install jdk-8u92-linux-x64.rpm

方式三:安装二进制包并自定义环境变量

下载地址: http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

[root@es-node01 ~]# tar xvf jdk-8u121-linux-x64.tar.gz -C /usr/local/

[root@es-node01 ~]# ln -sv /usr/local/jdk1.8.0_121 /usr/local/jdk

[root@es-node01 ~]# ln -sv /usr/local/jdk/bin/java /usr/bin/

[root@es-node01 ~]# vim /etc/profile

export HISTTIMEFORMAT="%F %T `whoami` "

export JAVA_HOME=/usr/local/jdk

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin

[root@es-node01 ~]# source /etc/profile

[root@es-node01 ~]# java -version

java version "1.8.0_121" #确认可以出现当前的java版本号

Java(TM) SE Runtime Environment (build 1.8.0_121-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.121-b13, mixed mode)

2.3 Elasticsearch 安装

有两种包: 包含jdk和no-jdk的包

注意: 官方提示no-jdk的包将被淘汰,建议使用包含JDK的包

下载地址:

#包含JDK的版本下载

https://www.elastic.co/downloads/elasticsearch

https://mirrors.tuna.tsinghua.edu.cn/elasticstack/

#不包含JDK的版本下载

https://www.elastic.co/cn/downloads/elasticsearch-no-jdk

范例: 查看两种包

[root@es-node01 ~]#ll -h elasticsearch-8.14.3-amd64.deb

-rw-r--r-- 1 root root 562M Sep 23 21:53 elasticsearch-8.14.3-amd64.deb

2.3.1 包安装 Elasticsearch

2.3.1.1 安装 Elasticsearch 包

下载链接

https://www.elastic.co/cn/downloads/elasticsearch

https://mirrors.tuna.tsinghua.edu.cn/elasticstack/

#下载包

[root@es-node01 ~]#wget https://mirrors.tuna.tsinghua.edu.cn/elasticstack/8.x/apt/pool/main/e/elasticsearch/elasticsearch-8.14.3-amd64.deb

[root@es-node01 ~]#ll -h elasticsearch-8.14.3-amd64.deb

-rw-r--r-- 1 root root 562M Sep 23 21:53 elasticsearch-8.14.3-amd64.deb#内置jdk环境包安装

[root@es-node01 ~]#dpkg -i elasticsearch-8.14.3-amd64.deb

Selecting previously unselected package elasticsearch.

(Reading database ... 72068 files and directories currently installed.)

Preparing to unpack elasticsearch-8.14.3-amd64.deb ...

Creating elasticsearch group... OK

Creating elasticsearch user... OK

Unpacking elasticsearch (8.14.3) ...

Setting up elasticsearch (8.14.3) ...

--------------------------- Security autoconfiguration information ------------------------------Authentication and authorization are enabled.

TLS for the transport and HTTP layers is enabled and configured.The generated password for the elastic built-in superuser is : kN_N*jTGvq0UxL+lnjY2If this node should join an existing cluster, you can reconfigure this with

'/usr/share/elasticsearch/bin/elasticsearch-reconfigure-node --enrollment-token <token-here>'

after creating an enrollment token on your existing cluster.You can complete the following actions at any time:Reset the password of the elastic built-in superuser with

'/usr/share/elasticsearch/bin/elasticsearch-reset-password -u elastic'.Generate an enrollment token for Kibana instances with '/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s kibana'.Generate an enrollment token for Elasticsearch nodes with

'/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s node'.-------------------------------------------------------------------------------------------------

### NOT starting on installation, please execute the following statements to configure elasticsearch service to start automatically using systemdsudo systemctl daemon-reloadsudo systemctl enable elasticsearch.service

### You can start elasticsearch service by executingsudo systemctl start elasticsearch.service##内置JAVA

[root@es-node01 ~]#dpkg -L elasticsearch

[root@es-node01 ~]#/usr/share/elasticsearch/jdk/bin/java -version

openjdk version "22.0.1" 2024-04-16

OpenJDK Runtime Environment (build 22.0.1+8-16)

OpenJDK 64-Bit Server VM (build 22.0.1+8-16, mixed mode, sharing)#JVM优化

#########################JVM优化#################################

## IMPORTANT: JVM heap size

################################################################

##

## The heap size is automatically configured by Elasticsearch

## based on the available memory in your system and the roles

## each node is configured to fulfill. If specifying heap is

## required, it should be done through a file in jvm.options.d,

## which should be named with .options suffix, and the min and

## max should be set to the same value. For example, to set the

## heap to 4 GB, create a new file in the jvm.options.d

## directory containing these lines:

##

## -Xms4g

-Xms512m

## -Xmx4g

-Xms512m

##

## See https://www.elastic.co/guide/en/elasticsearch/reference/8.14/heap-size.html

## for more information

##

################################################################[root@es-node01 ~]#systemctl enable --now elasticsearch.service #启动服务#默认8.X开启xpack安全,导致无法直接访问

[root@es-node01 ~]#curl 127.0.0.1:9200

curl: (52) Empty reply from server##关闭xpack安全功能......# Enable security features

xpack.security.enabled: false #true改为false......[root@es-node01 ~]#curl 127.0.0.1:9200

{"name" : "es-node01.dinginx.org","cluster_name" : "elasticsearch","cluster_uuid" : "qG1IJ-a8QwCO7JFAJExb2w","version" : {"number" : "8.14.3","build_flavor" : "default","build_type" : "deb","build_hash" : "d55f984299e0e88dee72ebd8255f7ff130859ad0","build_date" : "2024-07-07T22:04:49.882652950Z","build_snapshot" : false,"lucene_version" : "9.10.0","minimum_wire_compatibility_version" : "7.17.0","minimum_index_compatibility_version" : "7.0.0"},"tagline" : "You Know, for Search"

}

2.3.1.2 编辑服务配置文件

参考文档:

https://www.ibm.com/support/knowledgecenter/zh/SSFPJS_8.5.6/com.ibm.wbpm.main.doc/topics/rfps_esearch_configoptions.html

https://www.elastic.co/guide/en/elasticsearch/reference/index.html

https://www.elastic.co/guide/en/elasticsearch/reference/master/settings.html

https://www.elastic.co/guide/en/elasticsearch/reference/master/important-settings.html

配置文件说明

[root@es-node01 ~]# grep "^[a-Z]" /etc/elasticsearch/elasticsearch.yml#ELK集群名称,单节点无需配置,同一个集群内每个节点的此项必须相同,新加集群的节点此项和其它节点相同即可加入集群,而无需再验证

cluster.name: ELK-Cluster #当前节点在集群内的节点名称,同一集群中每个节点要确保此名称唯一

node.name: es-node01 #ES 数据保存目录,包安装默认路径:/var/lib/elasticsearch/

path.data: /data/es-data #ES 日志保存目录,包安装默认路径:/var/llog/elasticsearch/

path.logs: /data/es-logs#服务启动的时候立即分配(锁定)足够的内存,防止数据写入swap,提高启动速度,但是true会导致启动失败,需要优化

bootstrap.memory_lock: true#指定该节点用于集群的监听IP,默认监听在127.0.0.1:9300,集群模式必须修改此行,单机默认即可

network.host: 0.0.0.0 #监听端口

http.port: 9200#发现集群的node节点列表,可以添加部分或全部节点IP

#在新增节点到已有集群时,此处需指定至少一个已经在集群中的节点地址

discovery.seed_hosts: ["11.0.1.101", "11.0.1.102","11.0.1.103"]#集群初始化时指定希望哪些节点可以被选举为 master,只在初始化时使用,新加节点到已有集群时此项可不配置

cluster.initial_master_nodes: ["11.0.1.101", "11.0.1.102","11.0.1.103"]#一个集群中的 N 个节点启动后,才允许进行数据恢复处理,默认是1,一般设为为所有节点的一半以上,防止出现脑裂现象

#当集群无法启动时,可以将之修改为1,或者将下面行注释掉,实现快速恢复启动

gateway.recover_after_nodes: 2#设置是否可以通过正则表达式或者_all匹配索引库进行删除或者关闭索引库,默认true表示必须需要明确指定索引库名称,不能使用正则表达式和_all,生产环境建议设置为 true,防止误删索引库。

action.destructive_requires_name: true#如果不参与主节点选举设为false,默认值为true

node.master: false#存储数据,默认值为true,此值为false则不存储数据而成为一个路由节点

#如果将原有的true改为false,需要先执行/usr/share/elasticsearch/bin/elasticsearch-noderepurpose 清理数据

node.data: true#7.x以后版本下面指令已废弃,在2.x 5.x 6.x 版本中用于配置节点发现列表

discovery.zen.ping.unicast.hosts: ["11.0.1.101", "11.0.1.102","11.0.1.103"]#8.X版后默认即开启Xpack功能,可以修改为false禁用

xpack. security. enabled : true#开启跨域访问支持,默认为false

http.cors.enabled: true# 跨域访问允许的域名地址,(允许所有域名)以上使用正则

http.cors.allow-origin: "*"

范例:8.X 单机配置

#8.X版,修改配置文件一行即可

[root@ubuntu2204 ~]#vim /etc/elasticsearch/elasticsearch.yml

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

#network.host: 0.0.0.0 #保留注释此行

xpack.security.enabled: false #只需要修改此行true为false即可,其它行默认即可

xpack.security.enrollment.enabled: true

xpack.security.http.ssl:enabled: truekeystore.path: certs/http.p12

xpack.security.transport.ssl:enabled: trueverification_mode: certificatekeystore.path: certs/transport.p12truststore.path: certs/transport.p12

cluster.initial_master_nodes: ["ubuntu2204.dinginx.org"] #默认此行不做修改

http.host: 0.0.0.0

[root@ubuntu2204 ~]#systemctl enable --now elasticsearch.service #查看9200和9300端口

root@ubuntu2204:~# ss -ntl|grep -E '9200|9300'

LISTEN 0 4096 [::ffff:127.0.0.1]:9300 *:*

LISTEN 0 4096 [::1]:9300 [::]:*

LISTEN 0 4096 *:9200 *:* #测试访问

root@ubuntu2204:~# curl 11.0.1.10:9200

{"name" : "ubuntu2204","cluster_name" : "elasticsearch","cluster_uuid" : "Lur3kHLqQLiHIQPds_8JMw","version" : {"number" : "8.8.2","build_flavor" : "default","build_type" : "deb","build_hash" : "98e1271edf932a480e4262a471281f1ee295ce6b","build_date" : "2023-06-26T05:16:16.196344851Z","build_snapshot" : false,"lucene_version" : "9.6.0","minimum_wire_compatibility_version" : "7.17.0","minimum_index_compatibility_version" : "7.0.0"},"tagline" : "You Know, for Search"

}

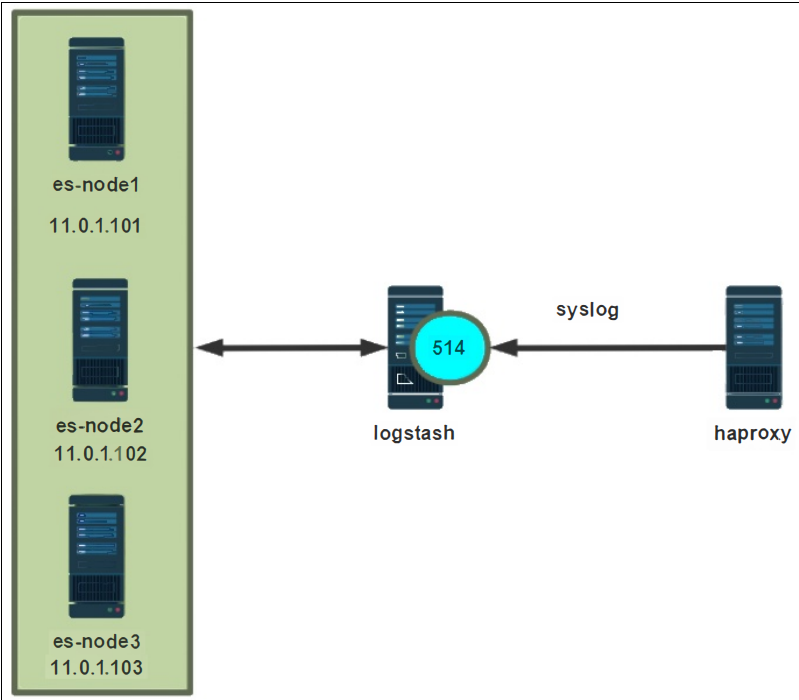

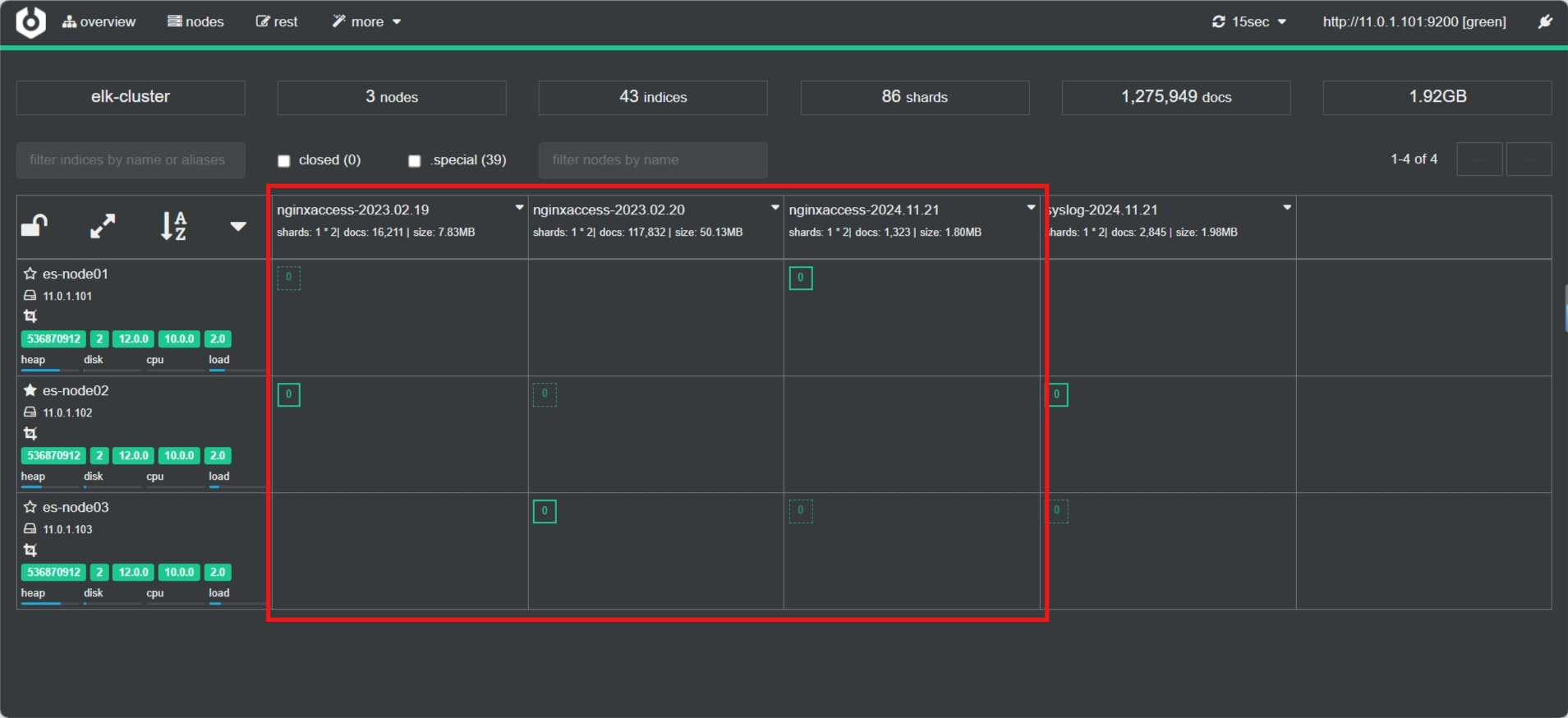

范例:8.X 集群配置

[root@es-node01 ~]#grep -Ev '^(#|$)' /etc/elasticsearch/elasticsearch.yml

cluster.name: elk-cluster

node.name: es-node01

path.data: /data/es-data

path.logs: /data/es-logs

network.host: 0.0.0.0 #集群模式必须修改此行,默认是127.0.0.1:9300,否则集群节点无法通过9300端口通信每个节点相同

bootstrap.memory_lock: true

http.port: 9200

discovery.seed_hosts: ["11.0.1.101", "11.0.1.102","11.0.1.103"] #修改此行,每个节点相同

cluster.initial_master_nodes: ["11.0.1.101", "11.0.1.102","11.0.1.103"] #修改此行,每个节点相同,和下面重复

action.destructive_requires_name: true

xpack.security.enabled: false #修改此行,每个节点相同

xpack.security.enrollment.enabled: true

xpack.security.http.ssl:enabled: truekeystore.path: certs/http.p12

xpack.security.transport.ssl:enabled: trueverification_mode: certificatekeystore.path: certs/transport.p12truststore.path: certs/transport.p12#cluster.initial_master_nodes: ["es-node01.dinginx.org"] #将此行注释

http.host: 0.0.0.0#创建存放路径并授权

[root@es-node01 ~]#mkdir /data/{es-data,es-logs} && chown elasticsearch. /data/es-* && systemctl restart elasticsearch.service#打开9200和9300端口

[root@es-node03 ~]#ss -ntl|grep -E '9200|9300'

LISTEN 0 4096 *:9300 *:*

LISTEN 0 4096 *:9200 *:* #访问测试

[root@es-node01 ~]#curl http://127.0.0.1:9200/_cluster/health?pretty=true

{"cluster_name" : "elk-cluster","status" : "green","timed_out" : false,"number_of_nodes" : 3,"number_of_data_nodes" : 3,"active_primary_shards" : 0,"active_shards" : 0,"relocating_shards" : 0,"initializing_shards" : 0,"unassigned_shards" : 0,"delayed_unassigned_shards" : 0,"number_of_pending_tasks" : 0,"number_of_in_flight_fetch" : 0,"task_max_waiting_in_queue_millis" : 0,"active_shards_percent_as_number" : 100.0

}#查看集群信息

[root@es-node01 ~]#curl 'http://127.0.0.1:9200/_cat/nodes?v'

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

11.0.1.102 32 97 0 0.40 0.51 0.44 cdfhilmrstw - es-node02

11.0.1.101 23 96 1 1.01 0.53 0.41 cdfhilmrstw * es-node01

11.0.1.103 21 96 0 0.42 0.48 0.43 cdfhilmrstw - es-node03

2.3.1.3 优化 ELK 资源配置

2.3.1.3.1 开启 bootstrap.memory_lock 优化

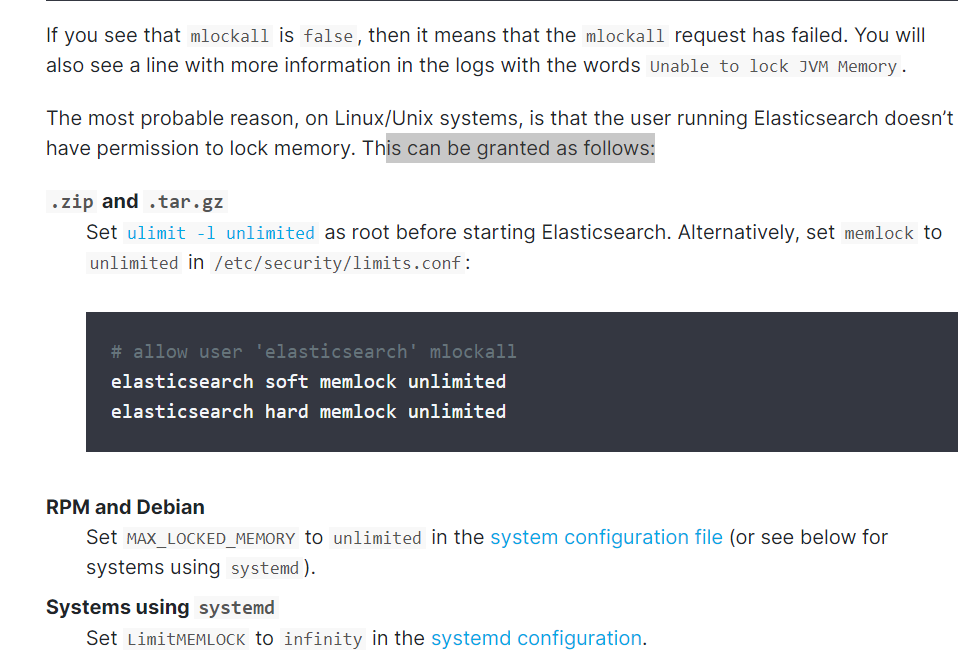

开启 bootstrap.memory_lock: true 可以优化性能,但会导致无法启动的错误解决方法

注意:开启 bootstrap.memory_lock: true 需要足够的内存,建议4G以上,否则内存不足,启动会很慢

官方文档:

https://www.elastic.co/guide/en/elasticsearch/reference/current/setup-configuration-memory.html#bootstrap-memory_lock

https://www.elastic.co/guide/en/elasticsearch/reference/current/setting-system-settings.html#systemd

[root@node01 ~]#vim /etc/elasticsearch/elasticsearch.yml

#开启此功能导致无法启动

bootstrap.memory_lock: true

[root@node01 ~]#systemctl restart elasticsearch.service

Job for elasticsearch.service failed because the control process exited with

error code.

See "systemctl status elasticsearch.service" and "journalctl -xe" for details.

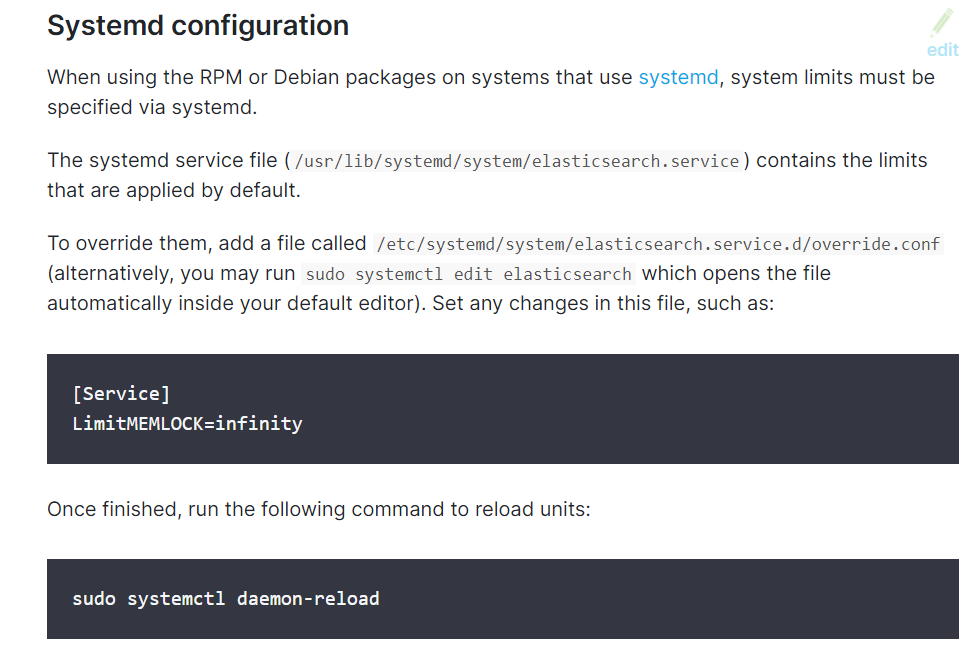

[root@node01 ~]#tail /data/es-logs/es-cluster.log#方法1:直接修改elasticsearch.service

[root@node01 ~]#vim /lib/systemd/system/elasticsearch.service

[Service]

#加下面一行

LimitMEMLOCK=infinity

#方法2:新建文件

[root@node01 ~]#systemctl edit elasticsearch

### Anything between here and the comment below will become the new contents of

the file

#加下面两行,注意加在中间位置

[Service]

LimitMEMLOCK=infinity

### Lines below this comment will be discarded

[root@node01 ~]#cat /etc/systemd/system/elasticsearch.service.d/override.conf

[Service]

LimitMEMLOCK=infinity

[root@node01 ~]#systemctl daemon-reload

[root@node01 ~]#systemctl restart elasticsearch.service

[root@node01 ~]#systemctl is-active elasticsearch.service

active

#测试访问是否成功

[root@node01 ~]#curl http://node01.dinginx.com:9200

[root@node01 ~]#curl http://node02.dinginx.com:9200

[root@node01 ~]#curl http://node03.dinginx.com:9200

2.3.1.3.2 内存优化

官方文档:

https://www.elastic.co/guide/en/elasticsearch/reference/current/important-settings.html#heap-size-settings#关于heap内存大小

## 虽然JVM可以处理大量的堆内存,但是将堆内存设置为过大的值可能导致以下问题:

堆内存分配的效率低

操作系统内存管理的限制

垃圾回收(Garbage Collection, GC): 压力变大

对象指针的大小: 在某些JVM实现(例如Oracle的HotSpot),在堆(Heap)大小超过32GB之后,对象指针的表示将从32位压缩oops(Ordinary Object Pointers)转变为64位非压缩指针,这导致了内存使用的增加。

推荐使用宿主机物理内存的一半,ES的heap内存最大不超过30G,26G是比较安全的

内存优化建议:

为了保证性能,每个ES节点的JVM内存设置具体要根据 node 要存储的数据量来估算,建议符合下面约定

- 在内存和数据量有一个建议的比例:对于一般日志类文件,1G 内存能存储48G~96GB数据

- JVM 堆内存最大不要超过30GB

- 单个分片控制在30-50GB,太大查询会比较慢,索引恢复和更新时间越长;分片太小,会导致索引碎片化越严重,性能也会下降

范例:

#假设总数据量为1TB,3个node节点,1个副本;那么实际要存储的大小为2TB

每个节点需要存储的数据量为: 2TB / 3 = 700GB,每个节点还需要预留20%的空间,所以每个node要存储大约 700*100/80=875GB 的数据;每个节点按照内存与存储数据的比率计算:875GB/48GB=18,即需要JVM内存为18GB,小于30GB

因为要尽量控制分片的大小为30GB;875GB/30GB=30个分片,即最多每个节点有30个分片#思考:假设总数据量为2TB,3个node节点,1个副本呢?

范例:指定heap内存最小和最大内存限制

#建议将heap内存设置为物理内存的一半且最小和最大设置一样大,但最大不能超过30G

[root@es-node01 ~]# vim /etc/elasticsearch/jvm.options

-Xms30g

-Xmx30g#每天产生1TB左右的数据量的建议服务器配置,还需要定期清理磁盘

16C 64G 6T硬盘 共3台服务器

范例: 修改service文件,做优化配置

[root@es-node01 ~]# vim /usr/lib/systemd/system/elasticsearch.service #修改内存限制

LimitNOFILE=11.0.10 #修改最大打开的文件数,默认值为65535

LimitNPROC=65535 #修改打开最大的进程数,默认值为4096

LimitMEMLOCK=infinity #无限制使用内存,以前旧版需要修改,否则无法启动服务,当前版本无需修改

内存锁定的配置参数:

https://discuss.elastic.co/t/memory-lock-not-working/70576

2.3.1.4 目录权限更改

在各个ES服务器创建数据和日志目录并修改目录权限为elasticsearch

#创建存放路径并授权,#必须分配权限,否则服务无法启动

[root@es-node01 ~]#mkdir /data/{es-data,es-logs} && chown elasticsearch. /data/es-* && systemctl restart elasticsearch.service[root@es-node01 ~]#ll /data/

total 32

drwxr-xr-x 5 root root 4096 Sep 24 13:05 ./

drwxr-xr-x 21 root root 4096 Sep 7 2023 ../

drwxr-xr-x 4 elasticsearch elasticsearch 4096 Sep 24 14:02 es-data/

drwxr-xr-x 2 elasticsearch elasticsearch 4096 Sep 24 13:14 es-logs/

drwx------ 2 root root 16384 Sep 7 2023 lost+found/

2.3.1.5 启动 Elasticsearch 服务并验证

#启动

[root@es-node01 ~]# systemctl enable --now elasticsearch

[root@es-node01 ~]# id elasticsearch

uid=113(elasticsearch) gid=117(elasticsearch) groups=117(elasticsearch)

[root@es-node01 ~]#ps aux|grep elasticsearch

root 10621 0.0 0.2 21876 9840 pts/3 S+ 12:18 0:00 vim /etc/elasticsearch/elasticsearch.yml

elastic+ 13029 0.1 2.6 2541072 105284 ? Ssl 13:14 0:05 /usr/share/elasticsearch/jdk/bin/java -Xms4m -Xmx64m -XX:+UseSerialGC -Dcli.name=server -Dcli.script=/usr/share/elasticsearch/bin/elasticsearch -Dcli.libs=lib/tools/server-cli -Des.path.home=/usr/share/elasticsearch -Des.path.conf=/etc/elasticsearch -Des.distribution.type=deb -cp /usr/share/elasticsearch/lib/*:/usr/share/elasticsearch/lib/cli-launcher/* org.elasticsearch.launcher.CliToolLauncher -p /var/run/elasticsearch/elasticsearch.pid --quiet

elastic+ 13102 1.6 21.5 3308948 858060 ? Sl 13:14 0:51 /usr/share/elasticsearch/jdk/bin/java -Des.networkaddress.cache.ttl=60 -Des.networkaddress.cache.negative.ttl=10 -Djava.security.manager=allow -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Dlog4j2.formatMsgNoLookups=true -Djava.locale.providers=SPI,COMPAT --add-opens=java.base/java.io=org.elasticsearch.preallocate --add-opens=org.apache.lucene.core/org.apache.lucene.store=org.elasticsearch.vec --enable-native-access=org.elasticsearch.nativeaccess -XX:ReplayDataFile=/var/log/elasticsearch/replay_pid%p.log -Djava.library.path=/usr/share/elasticsearch/lib/platform/linux-x64:/usr/java/packages/lib:/usr/lib64:/lib64:/lib:/usr/lib -Djna.library.path=/usr/share/elasticsearch/lib/platform/linux-x64:/usr/java/packages/lib:/usr/lib64:/lib64:/lib:/usr/lib -Des.distribution.type=deb -XX:+UnlockDiagnosticVMOptions -XX:G1NumCollectionsKeepPinned=11.0.100 -Xms512m -Xmx512m -XX:+UseG1GC -Djava.io.tmpdir=/tmp/elasticsearch-285470383223225162 --add-modules=jdk.incubator.vector -XX:+HeapDumpOnOutOfMemoryError -XX:+ExitOnOutOfMemoryError -XX:HeapDumpPath=/var/lib/elasticsearch -XX:ErrorFile=/var/log/elasticsearch/hs_err_pid%p.log -Xlog:gc*,gc+age=trace,safepoint:file=/var/log/elasticsearch/gc.log:utctime,level,pid,tags:filecount=32,filesize=64m -XX:MaxDirectMemorySize=268435456 -XX:G1HeapRegionSize=4m -XX:InitiatingHeapOccupancyPercent=30 -XX:G1ReservePercent=15 --module-path /usr/share/elasticsearch/lib --add-modules=jdk.net --add-modules=ALL-MODULE-PATH -m org.elasticsearch.server/org.elasticsearch.bootstrap.Elasticsearch

elastic+ 13122 0.0 0.2 108944 8868 ? Sl 13:14 0:00 /usr/share/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller

root 15002 0.0 0.0 6432 720 pts/4 S+ 14:07 0:00 grep --color=auto elasticsearch#查看监听port,9200端口集群访问端口,9300集群同步端口

[root@es-node01 ~]#ss -ntlp|grep java

LISTEN 0 4096 *:9200 *:* users:(("java",pid=13102,fd=445))

LISTEN 0 4096 *:9300 *:* users:(("java",pid=13102,fd=443)) #显示如下信息,表示服务启动,但不意味着集群是健康的

[root@es-node01 ~]#curl 127.0.0.1:9200

{"name" : "es-node01","cluster_name" : "elk-cluster","cluster_uuid" : "UgMCqg2JTWyBtLAaA_SbJw","version" : {"number" : "8.14.3","build_flavor" : "default","build_type" : "deb","build_hash" : "d55f984299e0e88dee72ebd8255f7ff130859ad0","build_date" : "2024-07-07T22:04:49.882652950Z","build_snapshot" : false,"lucene_version" : "9.10.0","minimum_wire_compatibility_version" : "7.17.0","minimum_index_compatibility_version" : "7.0.0"},"tagline" : "You Know, for Search"

}##显示如下信息表示集群是健康的

[root@es-node01 ~]#curl 127.0.0.1:9200/_cat/health

1727158170 06:09:30 elk-cluster green 3 3 0 0 0 0 0 0 - 100.0%

2.4 Elasticsearch 访问

官方文档

https://www.elastic.co/guide/en/elasticsearch/reference/master/rest-apis.html

Elasticsearch 支持各种语言使用 RESTful API 通过端口 9200 与之进行通信,可以用你习惯的 web 客户端访问 Elasticsearch

可以用三种方式和 Elasticsearch进行交互

- curl 命令和其它浏览器: 基于命令行,操作不方便

- 插件: 在node节点上安装head,Cerebro 等插件,实现图形操作,查看数据方便

- Kibana: 需要java环境并配置,图形操作,显示格式丰富

2.4.1 Shell 命令

https://www.elastic.co/guide/en/elasticsearch/reference/current/query-dsl-match-query.html

https://nasuyun.com/docs/api/

Elasticsearch 提供了功能十分丰富、多种表现形式的查询 DSL**(Domain Specific Language领域特定语言)**语言

DSL 查询使用 JSON 格式的请求体与 Elasticsearch 交互,可以实现各种各样的增删改查等功能

2.4.1.1 查看 ES 集群状态

访问 ES

#查看支持的指令

curl http://127.0.0.1:9200/_cat#查看es集群状态

curl http://127.0.0.1:9200/_cat/health

curl 'http://127.0.0.1:9200/_cat/health?v'#查看集群分健康性,获取到的是一个json格式的返回值,那就可以通过python等工具对其中的信息进行分析

#注意:status 字段为green才是正常状态

curl http://127.0.0.1:9200/_cluster/health?pretty=true#查看所有的节点信息

curl 'http://127.0.0.1:9200/_cat/nodes?v'#列出所有的索引 以及每个索引的相关信息

curl 'http://127.0.0.1:9200/_cat/indices?v'

范例:

[root@es-node01 ~]#curl http://127.0.0.1:9200/_cat

=^.^=

/_cat/allocation

/_cat/shards

/_cat/shards/{index}

/_cat/master

/_cat/nodes

/_cat/tasks

/_cat/indices

/_cat/indices/{index}

/_cat/segments

/_cat/segments/{index}

/_cat/count

/_cat/count/{index}

/_cat/recovery

/_cat/recovery/{index}

/_cat/health

/_cat/pending_tasks

/_cat/aliases

/_cat/aliases/{alias}

/_cat/thread_pool

/_cat/thread_pool/{thread_pools}

/_cat/plugins

/_cat/fielddata

/_cat/fielddata/{fields}

/_cat/nodeattrs

/_cat/repositories

/_cat/snapshots/{repository}

/_cat/templates

/_cat/component_templates/_cat/ml/anomaly_detectors

/_cat/ml/anomaly_detectors/{job_id}

/_cat/ml/datafeeds

/_cat/ml/datafeeds/{datafeed_id}

/_cat/ml/trained_models

/_cat/ml/trained_models/{model_id}

/_cat/ml/data_frame/analytics

/_cat/ml/data_frame/analytics/{id}

/_cat/transforms

/_cat/transforms/{transform_id}[root@es-node01 ~]#curl http://127.0.0.1:9200/_cat/master

hYZ9eBiJSv6BYpoWVkbmAA 11.0.1.101 11.0.1.101 es-node01[root@es-node01 ~]#curl http://127.0.0.1:9200/_cat/nodes

11.0.1.102 67 94 0 0.51 0.47 0.45 cdfhilmrstw - es-node02

11.0.1.101 41 92 0 0.43 0.45 0.45 cdfhilmrstw * es-node01

11.0.1.103 48 94 0 0.54 0.40 0.38 cdfhilmrstw - es-node03#列出所有的索引 以及每个索引的相关信息

[root@es-node01 ~]#curl '127.0.0.1:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size dataset.size

green open index2 3OXaH2FjQGeqMYL2VNohZQ 1 1 0 0 454b 227b 227b

#查看指定索引信息

curl -XPOST '127.0.0.1:9200/nginx-errorlog-0.107-2021.02.04/_search?pretty'#创建索引

[root@es-node01 ~]#curl -XPUT '127.0.0.1:9200/index01'

{"acknowledged":true,"shards_acknowledged":true,"index":"index01"}

[root@es-node01 ~]#curl -XPUT '127.0.0.1:9200/index02'

{"acknowledged":true,"shards_acknowledged":true,"index":"index02"}#查看索引

[root@es-node01 ~]#curl 127.0.0.1:9200/_cat/indices?v

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size dataset.size

green open index02 SSrQw1IJTGGrakGxX2XU9Q 1 1 0 0 454b 227b 227b

green open index01 0A60ppwsSZW697xkuPN-Xw 1 1 0 0 454b 227b 227b

2.4.1.2 创建和查看索引

https://www.elastic.co/guide/en/elasticsearch/reference/current/indices-create-index.html

示例:(副本不包含本身,区别于kafka)

#两个分片,每个分片各一个副本,共四个分片

curl -X PUT '127.0.0.1:9200/test01' \

-H 'Content-Type: application/json' \

-d '{"settings": {"index": {"number_of_shards": 3,"number_of_replicas": 2}}

}'#创建3个分片和2个副本的索引(3个分片,每个分片各2个副本,共9个分片)

[root@es-node01 ~]#curl -X PUT '127.0.0.1:9200/index3' \

> -H 'Content-Type: application/json' \

> -d '{

> "settings": {

> "index": {

> "number_of_shards": 3,

> "number_of_replicas": 2

> }

> }

> }'

{"acknowledged":true,"shards_acknowledged":true,"index":"index3"}#查看所有索引

[root@es-node01 ~]#curl '127.0.0.1:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size dataset.size

green open index02 SSrQw1IJTGGrakGxX2XU9Q 1 1 0 0 498b 249b 249b

green open index3 2WL6y08cTJuhK8tbB9LEPw 3 2 0 0 2.1kb 747b 747b

green open index2 3OXaH2FjQGeqMYL2VNohZQ 1 1 0 0 498b 249b 249b

green open index1 g8FFVd9YSXCA8UXnI0ErqA 1 1 0 0 498b 249b 249b

green open index01 0A60ppwsSZW697xkuPN-Xw 1 1 0 0 498b 249b 249b[root@es-node01 ~]#curl '127.0.0.1:9200/index3?pretty'

{"index3" : {"aliases" : { },"mappings" : { },"settings" : {"index" : {"routing" : {"allocation" : {"include" : {"_tier_preference" : "data_content"}}},"number_of_shards" : "3","provided_name" : "index3","creation_date" : "1727172087833","number_of_replicas" : "2","uuid" : "2WL6y08cTJuhK8tbB9LEPw","version" : {"created" : "8505000"}}}}

}#早期版本,如es1.X,2.X可以直在下面数据目录下直接看到index的名称,5.X版本后只会显示下面信息

#说明: 2WL6y08cTJuhK8tbB9LEPw表示索引ID

## /data/es-data/indices/索引ID/分片ID

[root@es-node01 ~]#ls /data/es-data/indices/ -l

total 16

drwxr-xr-x 6 elasticsearch elasticsearch 4096 Sep 24 18:01 2WL6y08cTJuhK8tbB9LEPw

drwxr-xr-x 4 elasticsearch elasticsearch 4096 Sep 24 15:47 3OXaH2FjQGeqMYL2VNohZQ

drwxr-xr-x 4 elasticsearch elasticsearch 4096 Sep 24 16:03 g8FFVd9YSXCA8UXnI0ErqA

drwxr-xr-x 4 elasticsearch elasticsearch 4096 Sep 24 15:52 SSrQw1IJTGGrakGxX2XU9Q[root@es-node01 ~]#ll /data/es-data/indices/2WL6y08cTJuhK8tbB9LEPw/

total 24

drwxr-xr-x 6 elasticsearch elasticsearch 4096 Sep 24 18:01 ./

drwxr-xr-x 6 elasticsearch elasticsearch 4096 Sep 24 18:01 ../

drwxr-xr-x 5 elasticsearch elasticsearch 4096 Sep 24 18:01 0/

drwxr-xr-x 5 elasticsearch elasticsearch 4096 Sep 24 18:01 1/

drwxr-xr-x 5 elasticsearch elasticsearch 4096 Sep 24 18:01 2/

drwxr-xr-x 2 elasticsearch elasticsearch 4096 Sep 24 18:01 _state/

2.4.1.3 插入文档

范例:

#创建文档时不指定_id,会自动生成

#8.X版本后因为删除了type,所以索引操作:{index}/{type}/需要修改成PUT {index}/_doc/

#index1是索引数据库,book是type

#8.X版本之后#插入文档

curl -X POST 'http://127.0.0.1:9200/test01/_doc/' \

-H 'Content-Type: application/json' \

-d '{"name": "linux","author": "dinginx","version": "1.0"

}'#查询

[root@es-node01 ~]#curl 127.0.0.1:9200/_cat/indices?v

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size dataset.size

green open index02 SSrQw1IJTGGrakGxX2XU9Q 1 1 0 0 498b 249b 249b

green open index03 axKgSJfiTiirt2XiT4cj3A 2 1 0 0 996b 498b 498b

green open test01 HbUbm1WgTKGxqYm3wH3YRg 2 1 0 0 996b 498b 498b

green open index1 g8FFVd9YSXCA8UXnI0ErqA 1 1 1 #显示一条数据 0 12.2kb 6.1kb 6.1kb

green open index01 0A60ppwsSZW697xkuPN-Xw 1 1 0 0 498b 249b 249b

green open index3 2WL6y08cTJuhK8tbB9LEPw 3 2 0 0 2.1kb 747b 747b

green open index2 3OXaH2FjQGeqMYL2VNohZQ 1 1 0 0 498b 249b 249b[root@es-node01 ~]#curl 'http://127.0.0.1:9200/index1/_search?q=name:linux&pretty'

{"took" : 3,"timed_out" : false,"_shards" : {"total" : 1,"successful" : 1,"skipped" : 0,"failed" : 0},"hits" : {"total" : {"value" : 1,"relation" : "eq"},"max_score" : 0.2876821,"hits" : [{"_index" : "index1","_id" : "naaZI5IBAmvSJbVZPOuV","_score" : 0.2876821,"_source" : {"name" : "linux","author" : "wangxiaochun","version" : "1.0"}}]}

}2.4.1.4 查询文档

范例:

#查询索引的中所有文档

[root@es-node01 ~]#curl 'http://127.0.0.1:9200/index1/_search?pretty'

{"took" : 2,"timed_out" : false,"_shards" : {"total" : 1,"successful" : 1,"skipped" : 0,"failed" : 0},"hits" : {"total" : {"value" : 2,"relation" : "eq"},"max_score" : 1.0,"hits" : [{"_index" : "index1","_id" : "naaZI5IBAmvSJbVZPOuV","_score" : 1.0,"_source" : {"name" : "linux","author" : "wangxiaochun","version" : "1.0"}},{"_index" : "index1","_id" : "3","_score" : 1.0,"_source" : {"version" : "2.0","name" : "dinginx","author" : "dingbaohang"}}]}

}

2.4.1.5 更新文档

范例:

#创建或更新文档

[root@es-node03 ~]# curl -X POST 'http://127.0.0.1:9200/test01/_doc/3' -H 'Content-Type: application/json' -d '{"version": "1.0", "name": "golang", "author": "ding"}'#获取文档

[root@es-node03 ~]#curl 127.0.0.1:9200/test01/_doc/3?pretty

{"_index" : "test01","_id" : "3","_version" : 3,"_seq_no" : 12,"_primary_term" : 2,"found" : true,"_source" : {"version" : "1.0","name" : "golang","author" : "ding"}

}#更新版本

[root@es-node03 ~]#curl -X POST 'http://127.0.0.1:9200/test01/_doc/3' -H 'Content-Type: application/json' -d '{"version": "2.0", "name": "golang", "author": "ding"}'

[root@es-node03 ~]#curl 'http://127.0.0.1:9200/test01/_doc/3?pretty'

{"_index" : "test01","_id" : "3","_version" : 2,"_seq_no" : 11,"_primary_term" : 2,"found" : true,"_source" : {"version" : "2.0","name" : "golang","author" : "ding"}

}#Elasticsearch 7.x 示例,创建或更新文档

curl -X POST 'http://127.0.0.1:9200/index1/book/3' -H 'Content-Type: application/json' -d '{"version": "2.0", "name": "golang", "author": "zhang"}'#获取文档

curl 'http://127.0.0.1:9200/index1/_doc/3?pretty'2.4.1.6 删除文档

#8.X版本

curl -XDELETE http://kibana服务器:9200/<索引名称>/_doc/<文档id>

#7.X版本前

curl -XDELETE http://kibana服务器:9200/<索引名称>/type/<文档id>

范例: 删除指定文档

#8.X

[root@es-node01 ~]#curl 'http://127.0.0.1:9200/index1/_search?pretty'

{"took" : 1,"timed_out" : false,"_shards" : {"total" : 1,"successful" : 1,"skipped" : 0,"failed" : 0},"hits" : {"total" : {"value" : 1,"relation" : "eq"},"max_score" : 1.0,"hits" : [{"_index" : "index1","_id" : "naaZI5IBAmvSJbVZPOuV","_score" : 1.0,"_source" : {"name" : "linux","author" : "wangxiaochun","version" : "1.0"}}]}

}

[root@es-node01 ~]#curl -XDELETE 'http://127.0.0.1:9200/index1/_doc/naaZI5IBAmvSJbVZPOuV'

{"_index":"index1","_id":"naaZI5IBAmvSJbVZPOuV","_version":2,"result":"deleted","_shards":{"total":2,"successful":2,"failed":0},"_seq_no":6,"_primary_term":1}[root@es-node01 ~]#

[root@es-node01 ~]#curl 'http://127.0.0.1:9200/index1/_search?pretty'

{"took" : 20,"timed_out" : false,"_shards" : {"total" : 1,"successful" : 1,"skipped" : 0,"failed" : 0},"hits" : {"total" : {"value" : 0,"relation" : "eq"},"max_score" : null,"hits" : [ ]}

}2.4.1.7 删除索引

范例:删除指定索引

[root@es-node01 ~]#curl -XDELETE http://127.0.0.1:9200/index2

{"acknowledged":true}#查看索引是否删除

[root@es-node1 ~]#curl 'http://127.0.0.1:9200/_cat/indices?pretty'#删除多个指定索引

curl -XDELETE 'http://127.0.0.1:9200/index_one,index_two#删除通配符多个索引,需要设置action.destructive_requires_name: false

curl -XDELETE 'http://127.0.0.1:9200/index_*'

范例: 删除所有索引

#以下需要设置action.destructive_requires_name: false

[root@es-node01 ~]#curl -X DELETE "http://127.0.0.1:9200/*"

[root@es-node01 ~]#curl -X DELETE "127.0.0.1:9200/_all"#以下无需配置

[root@es-node01 ~]#for i in `curl 'http://127.0.0.1:9200/_cat/indices?v'|awk '{print $3}'`;do curl -XDELETE http://127.0.0.1:9200/$i;done

2.4.2 Python 脚本:集群健康性检查

#修改脚本中参数值ELASTICSEARCH_URL为ES集群IP

##环境准备

[root@es-node01 ~]#apt install -y python3

[root@es-node01 ~]#apt install -y python3-pip

[root@es-node01 ~]#pip install elasticsearch #必要时安装[root@es-node01 ~]#cat elastic.sh

#!/usr/bin/python3

#coding:utf-8import subprocess

import json# Elasticsearch集群健康检查的URL

ELASTICSEARCH_URL = "http://11.0.1.101:9200/_cluster/health?pretty=true"# 使用subprocess来执行curl命令获取Elasticsearch集群健康状态

try:# 使用curl命令获取Elasticsearch集群的健康状态result = subprocess.Popen(("curl -sXGET " + ELASTICSEARCH_URL), shell=True, stdout=subprocess.PIPE)data = result.stdout.read() # 从stdout中读取curl返回的数据

except Exception as e:print(f"Error occurred while running curl command: {e}")data = None# 如果获取到数据,解析为字典

if data:try:es_dict = json.loads(data) # 将JSON格式的字符串转换为Python字典except json.JSONDecodeError as e:print(f"Failed to parse JSON: {e}")es_dict = {}

else:es_dict = {}# 获取集群状态

status = es_dict.get("status")# 根据状态进行判断并输出结果

if status == "green":print("OK")

elif status == "yellow":print("Warning: Cluster status is yellow.")

elif status == "red":print("Critical: Cluster status is red!")

else:print("Not OK: Unable to determine cluster status.")#执行并测试

[root@es-node01 ~]#python3.8 elastic.sh

OK

示例二:

##环境准备

[root@es-node01 ~]#apt install -y python3

[root@es-node01 ~]#apt install -y python3-pip

[root@es-node01 ~]#pip install elasticsearch #必要时安装[root@es-node01 ~]#cat es_status.py

from elasticsearch import Elasticsearch

import jsondef serialize_es_response(response):if hasattr(response, 'body'):return response.bodyelif isinstance(response, dict):return responseelse:return str(response)def check_cluster_status(es_host='localhost', es_port=9200):# 创建Elasticsearch客户端,添加scheme参数es = Elasticsearch([{'scheme': 'http', 'host': es_host, 'port': es_port}])try:# 获取集群健康状态health = es.cluster.health()print("集群健康状态:")print(json.dumps(serialize_es_response(health), indent=2))# 获取集群状态stats = es.cluster.stats()stats_body = serialize_es_response(stats)print("\n集群统计信息:")print(json.dumps({"cluster_name": stats_body.get('cluster_name'),"status": stats_body.get('status'),"number_of_nodes": stats_body.get('nodes', {}).get('count', {}).get('total'),"number_of_data_nodes": stats_body.get('nodes', {}).get('count', {}).get('data'),"active_primary_shards": stats_body.get('indices', {}).get('shards', {}).get('primary'),"active_shards": stats_body.get('indices', {}).get('shards', {}).get('total'),}, indent=2))# 获取节点信息nodes = es.nodes.info()nodes_body = serialize_es_response(nodes)print("\n节点信息:")for node_id, node_info in nodes_body.get('nodes', {}).items():print(f"节点ID: {node_id}")print(f"节点名称: {node_info.get('name')}")print(f"节点角色: {', '.join(node_info.get('roles', []))}")print(f"IP地址: {node_info.get('ip')}")print("---")except Exception as e:print(f"连接到Elasticsearch集群时发生错误: {str(e)}")if __name__ == "__main__":check_cluster_status()#执行测试

[root@es-node01 ~]#python3.8 es_status.py

集群健康状态:

{"cluster_name": "elk-cluster","status": "green","timed_out": false,"number_of_nodes": 3,"number_of_data_nodes": 3,"active_primary_shards": 1,"active_shards": 2,"relocating_shards": 0,"initializing_shards": 0,"unassigned_shards": 0,"delayed_unassigned_shards": 0,"number_of_pending_tasks": 0,"number_of_in_flight_fetch": 0,"task_max_waiting_in_queue_millis": 0,"active_shards_percent_as_number": 100.0

}集群统计信息:

{"cluster_name": "elk-cluster","status": "green","number_of_nodes": 3,"number_of_data_nodes": 3,"active_primary_shards": null,"active_shards": 2

}节点信息:

节点ID: hYZ9eBiJSv6BYpoWVkbmAA

节点名称: es-node01

节点角色: data, data_cold, data_content, data_frozen, data_hot, data_warm, ingest, master, ml, remote_cluster_client, transform

IP地址: 11.0.1.101

---

节点ID: GpWJv16KSBi9k8pHwfX-ow

节点名称: es-node02

节点角色: data, data_cold, data_content, data_frozen, data_hot, data_warm, ingest, master, ml, remote_cluster_client, transform

IP地址: 11.0.1.102

---

节点ID: BRFD5lSgQ0Cho7dZn0qCaA

节点名称: es-node03

节点角色: data, data_cold, data_content, data_frozen, data_hot, data_warm, ingest, master, ml, remote_cluster_client, transform

IP地址: 11.0.1.103

---

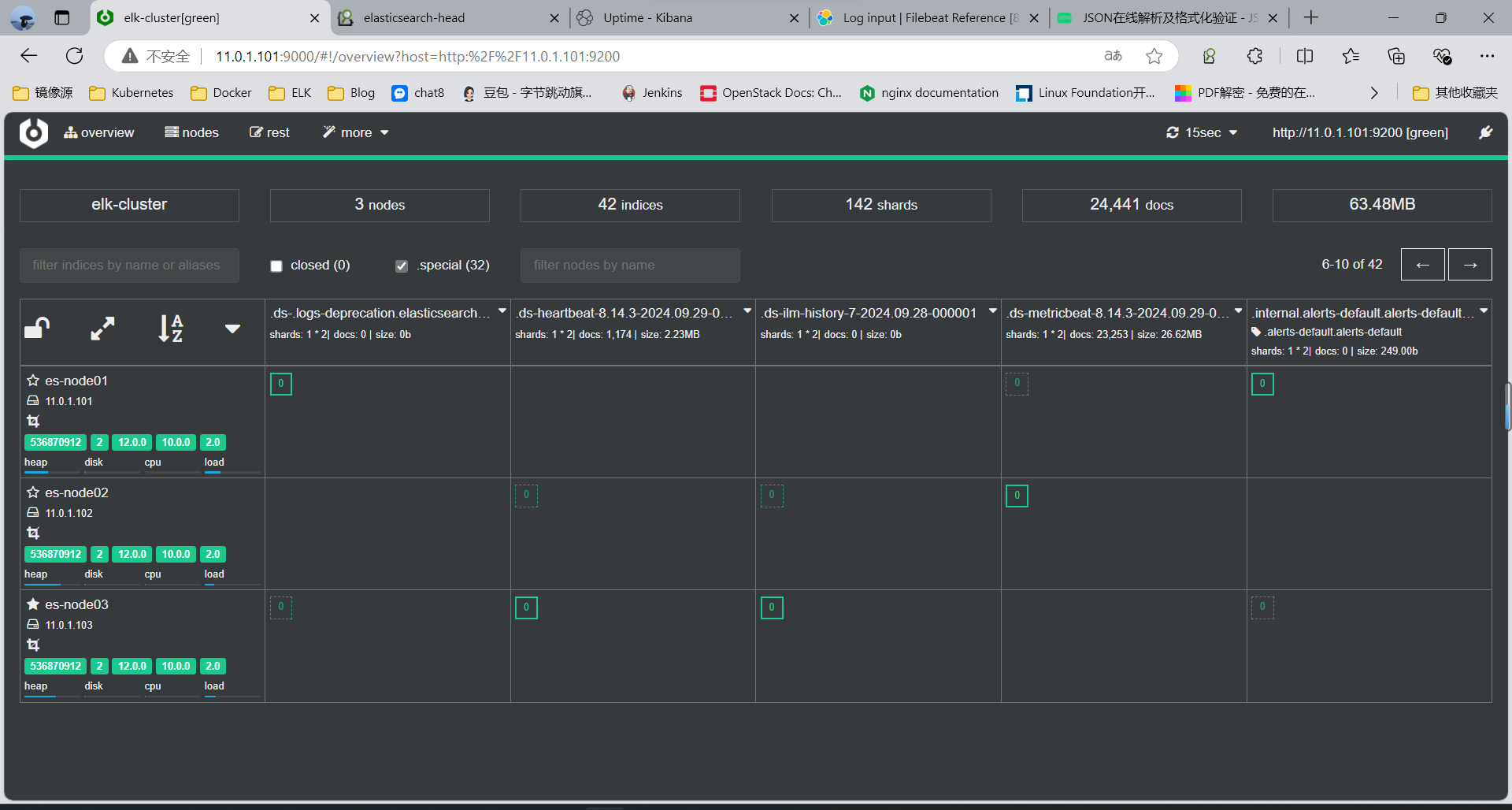

2.5 Elasticsearch 插件

通过使用各种插件可以实现对 ES 集群的状态监控, 数据访问, 管理配置等功能

ES集群状态:

- green 绿色状态:表示集群各节点运行正常,而且没有丢失任何数据,各主分片和副本分片都运行正常

- yellow 黄色状态:表示由于某个节点宕机或者其他情况引起的,node节点无法连接、所有主分片都正常分配,有副本分片丢失,但是还没有丢失任何数据

- red 红色状态:表示由于某个节点宕机或者其他情况引起的主分片丢失及数据丢失,但仍可读取数据和存储

监控下面两个条件都满足才是正常的状态

- 集群状态为 green

- 所有节点都启动

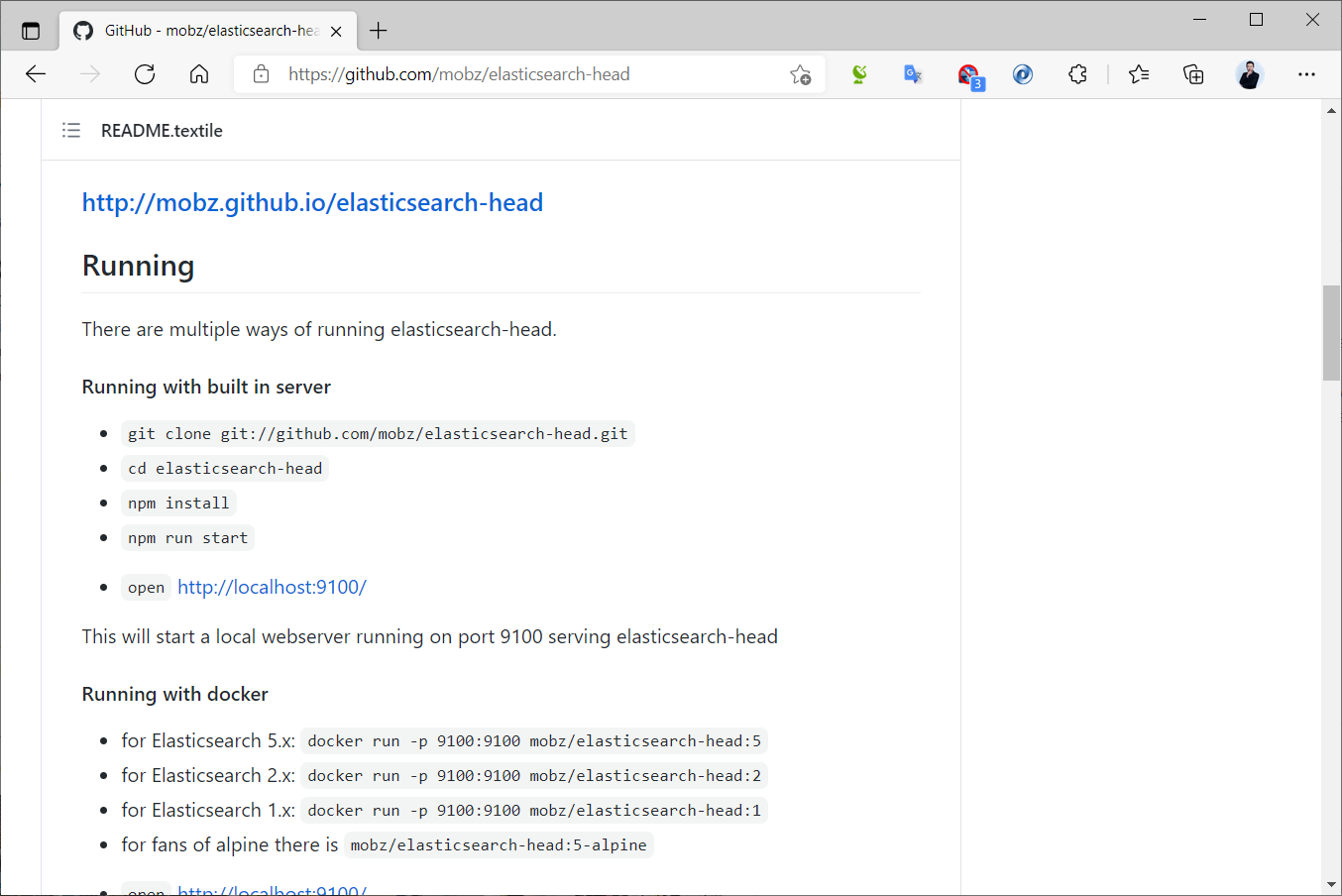

2.5.1 Head 插件

2.5.1.1 Head 介绍

Head 是一个 ES 在生产较为常用的插件,目前此插件更新较慢,还是2018年4月的版本

git地址: https://github.com/mobz/elasticsearch-head

2.5.2 Cerebro 插件

2.5.2.1 Cerebro 介绍

Cerebro 是开源的 elasticsearch 集群 Web 管理程序,此工具应用也很广泛,此项目升级比 Head 频繁

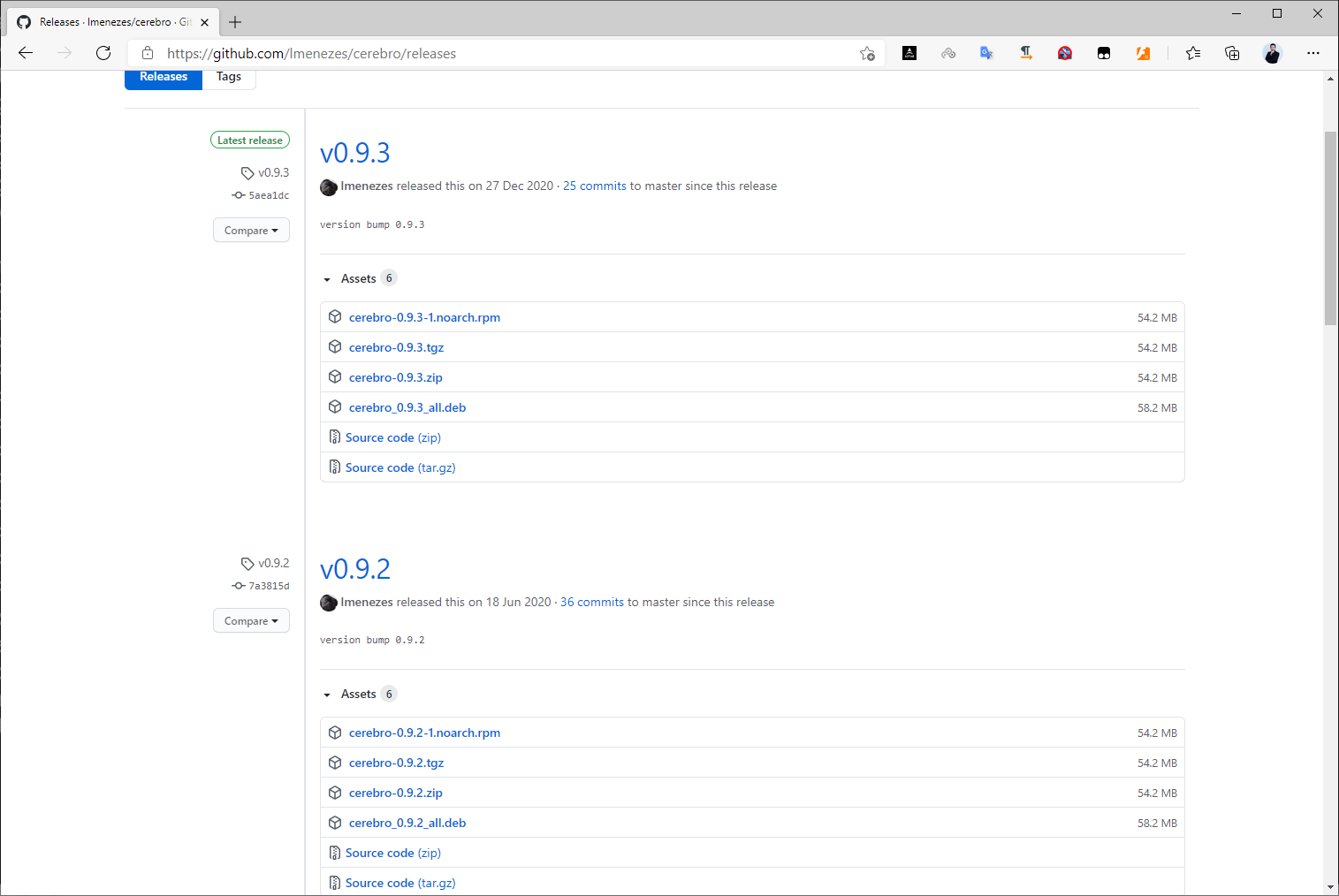

当前最新版本为Apr 10, 2021发布的v0.9.4

Cerebro v0.9.3 版本之前需要 java1.8 或者更高版本

Cerebro v0.9.4 版本更高版本需要 Java11

github链接:

https://github.com/lmenezes/cerebro

2.6 Elasticsearch 集群工作原理

官方说明

https://www.elastic.co/guide/en/elasticsearch/reference/current/index.html

单机节点 ES 存在单点可用性和性能问题,可以实现Elasticsearch多机的集群解决

Elasticsearch 支持集群模式

- 能够提高Elasticsearch可用性,即使部分节点停止服务,整个集群依然可以正常服务

- 能够增大Elasticsearch的性能和容量,如内存、磁盘,使得Elasticsearch集群可以支持PB级的数据

2.6.1 ES 节点分类

Elasticsearch 集群的每个节点的角色有所不同,但都会保存集群状态Cluster State的相关的数据信息

- 节点信息:每个节点名称和地址

- 索引信息:所有索引的名称,配置,数据等

ES的节点有下面几种

- Master 节点

有从提主,无从创从

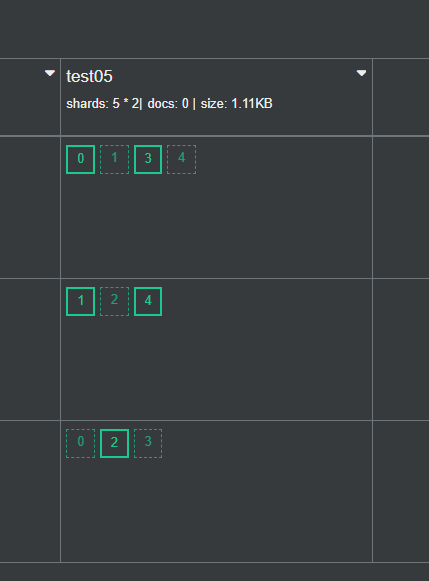

2.6.3.3 默认分片配置

默认情况下,elasticsearch将分片相关的配置从配置文件中的属性移除了,可以借助于一个默认的模板接口将索引的分片属性更改成我们想要的分片效果

curl -X PUT 'http://127.0.0.1:9200/_template/template_http_request_record' \

-H 'Content-Type: application/json' \

-d '{"index_patterns": ["*"],"settings": {"number_of_shards": 5,"number_of_replicas": 1}

}'

解释:

-

-X PUT:使用PUT方法发送请求,这是创建或替换 Elasticsearch 索引模板的标准方法。 -

'http://127.0.0.1:9200/_template/template_http_request_record':这是请求的 URL,指向 Elasticsearch 的模板 API,用于创建或更新一个名为template_http_request_record的模板。 -

-H 'Content-Type: application/json':指定请求的内容类型为application/json,这是 Elasticsearch API 的标准要求。 -

-d '...':这是请求的数据部分,包含我们要发送的 JSON 数据:"index_patterns": ["\*"]:指定模板适用的索引模式,"*"表示匹配所有索引。"number_of_shards": 5:设置每个索引的分片数量为 5。"number_of_replicas": 1:为每个分片设置 1 个副本。

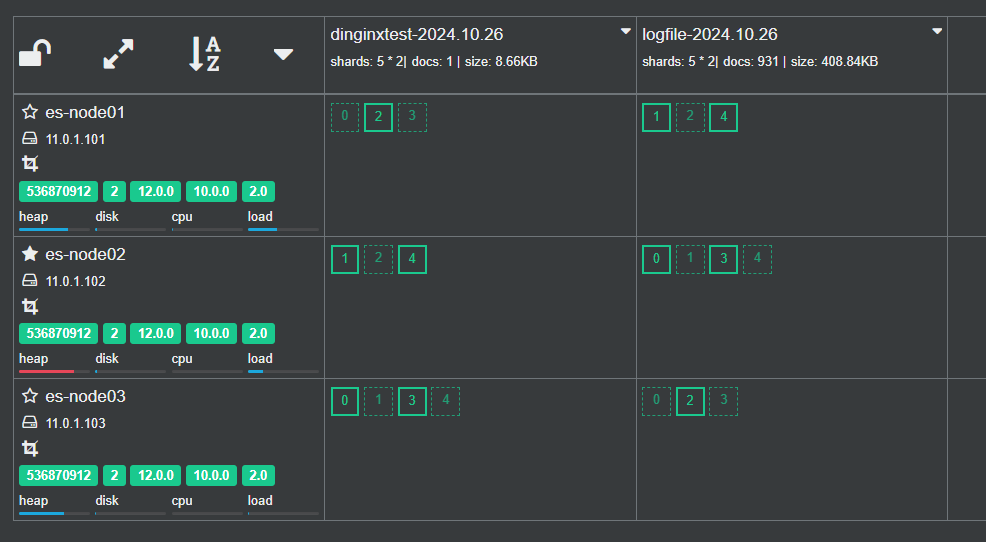

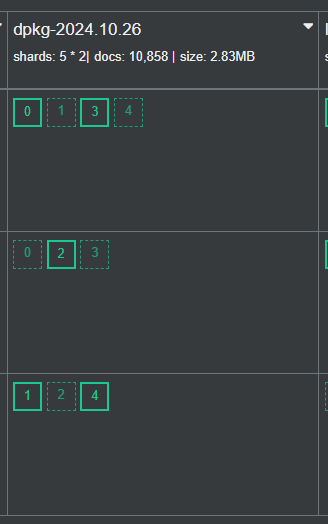

验证生成的索引:

2.6.3.4 数据同步机制

Elasticsearch主要依赖Zen Discovery协议来管理集群中节点的加入和离开,以及选举主节点(master node)。Zen Discovery是Elasticsearch自带的一个协议,不依赖于任何外部服务。

然而,Elasticsearch对于一致性的处理与传统的一致性协议(如Raft或Paxos)有所不同。它采取了一种“最终一致性”(eventual consistency)的模型。

每个索引在Elasticsearch中被分成多个分片(shard),每个分片都有一个主分片和零个或多个副本分片。主分片负责处理所有的写操作,并将写操作复制到其副本分片。当主分片失败时,一个副本分片会被提升为新的主分片。

Elasticsearch为了提高写操作的性能,允许在主分片写入数据后立即确认写操作,而不需要等待数据被所有副本分片确认写入。这就意味着,在某些情况下,主分片可能会确认写操作成功,而实际上副本分片还没有完全写入数据。这就可能导致数据在短时间内在主分片和副本分片之间不一致。然而,一旦所有副本分片都确认写入了数据,那么系统就会达到“最终一致性”。

为了保证搜索的准确性,Elasticsearch还引入了一个"refresh"机制,每隔一定时间(默认为1秒)将最新的数据加载到内存中,使其可以被搜索到。这个过程是在主分片和所有副本分片上独立进行的,所以可能存在在短时间内搜索结果在不同分片之间有些许不一致的情况,但随着时间的推移,所有分片上的数据都会达到一致状态。

综上所述,Elasticsearch通过Zen Discovery协议管理节点和选举,通过主分片和副本分片的机制保证数据的最终一致性,并通过"refresh"机制保证数据的搜索准确性。

2.6.2 ES 集群选举

ES集群的选举是由master-eligble(有资格充当的master节点)发起

当该节点发现当前节点不是master,并且该节点通过ZenDiscovery模块ping其他节点,如果发现超过mininum_master_nodes个节点无法连接master时,就会发起新的选举,选举时,优先选举ClusterStateVersion最大的Node节点,如果ClusterStateVersion相同,则选举ID最小的Node

ClusterStateVersion是集群的状态版本号,每一次集群选举ClusterStateVersion都会更新,因此最大的

ClusterStateVersion是与原有集群数据最接近或者是相同的,这样就尽可能的避免数据丢失。

Node的ID是在第一次服务启动时随机生成的,直接选用最小ID的Node,主要是为了选举的稳定性,尽量少出现选举不出来的问题。

每个集群中只有一个Master节点

每个集群中损坏的节点不能超过集群一半以上,否则集群将无法提供服务

2.6.3 ES 集群分片 Shard 和副本 Replication

2.6.3.1 分片 Shard

2.6.3.2 副本 Replication

2.6.3.3 默认分片配置

2.6.3.4 数据同步机制

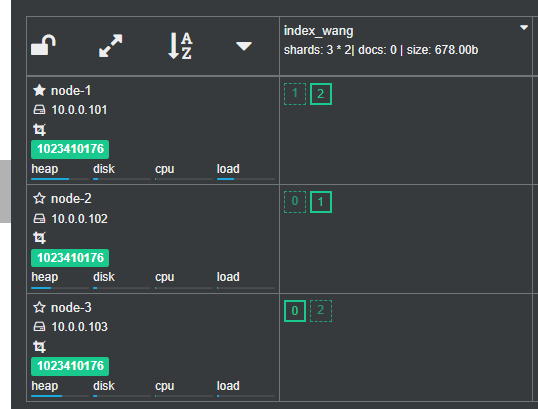

2.6.4 ES 集群故障转移

故障转移指的是,当集群中有节点发生故障时,ES集群会进行自动修复

假设由3个节点的ES集群组成,有一个索引index_wang,三个主分片,三个副本分片,如果其中一个节点宕机

ES集群的故障转移流程如下

重新选举

假设当前Master节点 node3 节点宕机,同时也导致 node3 的原有的P0和R2分片丢失node1 和 node2 发现 Master节点 node3 无法响应

过一段时间后会重新发起 master 选举

比如这次选择 node1 为 新 master 节点;此时集群状态变为 Red 状态

其实无论选举出的新Master节点是哪个节点,都不影响后续的分片的重新分布结果

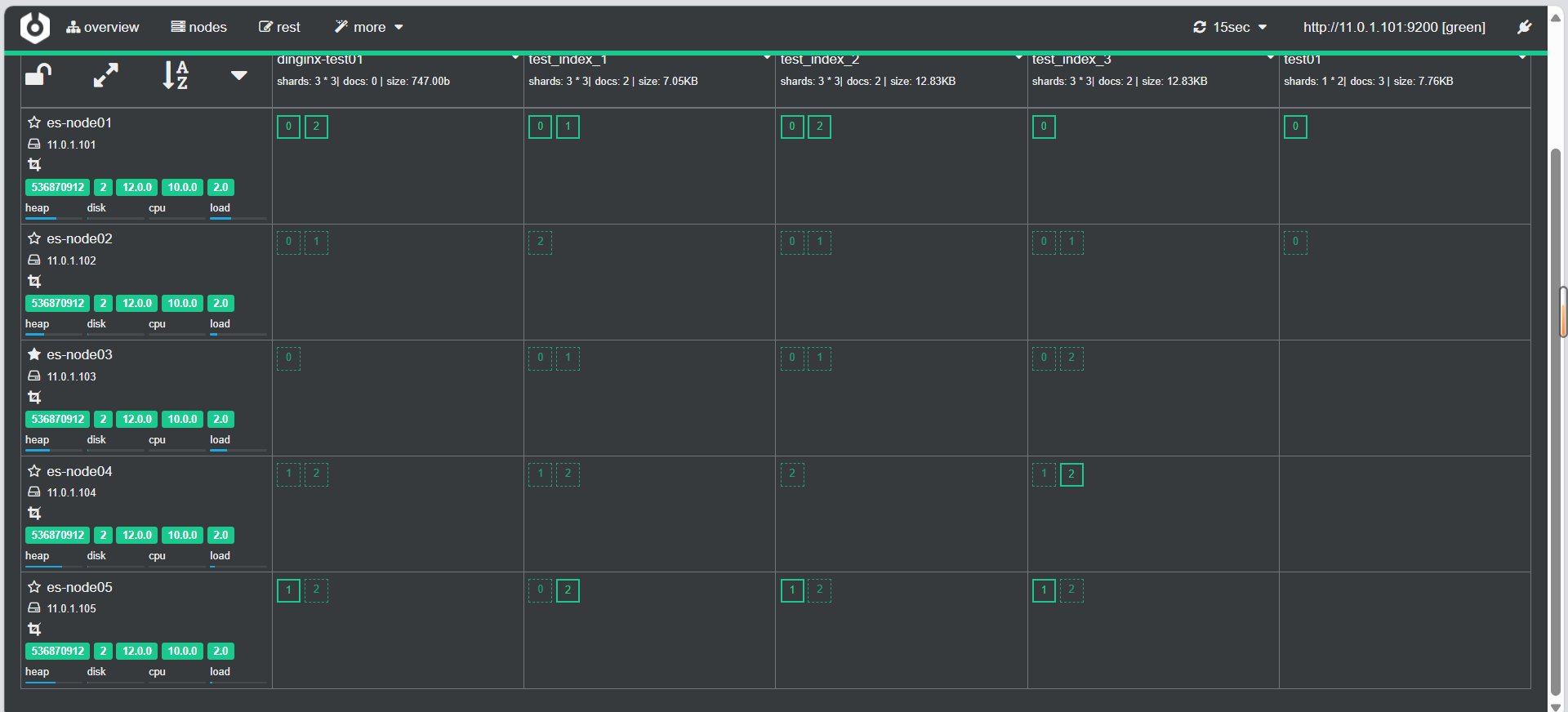

2.7 Elasticsearch 集群扩容和缩容

2.7.1 集群扩容

新加入两个节点node04和node05,变为Data节点

在两个新节点安装 ES,并配置文件如下

环境准备:

#es-node04: 11.0.1.104

#es-node05: 11.0.1.105#/etc/hosts文件

[root@es-node04 ~]#cat >> /etc/hosts <<EOF

11.0.1.101 es-node01.dinginx.org

11.0.1.102 es-node02.dinginx.org

11.0.1.103 es-node03.dinginx.org

11.0.1.104 es-node04.dinginx.org

11.0.1.105 es-node05.dinginx.org

EOF#文件准备

[root@es-node04 ~]#ll -h elasticsearch-8.14.3-amd64.deb

-rw-r--r-- 1 root root 562M Sep 23 21:53 elasticsearch-8.14.3-amd64.deb

[root@es-node04 ~]#dpkg -i elasticsearch-8.14.3-amd64.deb #同步配置文件

[root@es-node01 ~]#scp /etc/elasticsearch/elasticsearch.yml 11.0.1.104:/etc/elasticsearch/elasticsearch.yml

[root@es-node01 ~]#scp /etc/elasticsearch/elasticsearch.yml 11.0.1.105:/etc/elasticsearch/elasticsearch.yml[root@es-node01 ~]# scp /etc/elasticsearch/jvm.options 11.0.1.104:/etc/elasticsearch/jvm.options

[root@es-node01 ~]# scp /etc/elasticsearch/jvm.options 11.0.1.105:/etc/elasticsearch/jvm.options

配置文件内容修改

vim /etc/elasticsearch/elasticsearch.yml

cluster.name: elk-cluster #和原集群名称相同#当前节点在集群内的节点名称,同一集群中每个节点要确保此名称唯一

node.name: es-node04 #第二个新节点为es-node05#集群监听端口对应的IP,默认是127.0.0.1:9300

network.host: 0.0.0.0#指定任意集群节点即可

discovery.seed_hosts: ["11.0.1.101","11.0.1.102","11.0.1.103"]#集群初始化时指定希望哪些节点可以被选举为 master,只在初始化时使用,新加节点到已有集群时此项可不配置

cluster.initial_master_nodes: ["11.0.1.101","11.0.1.102","11.0.1.103"]

#cluster.initial_master_nodes: ["ubuntu2204.dinginx.org"] #如果不参与主节点选举设为false,默认值为true

node.master: false

#存储数据,默认值为true,此值为false则不存储数据而成为一个路由节点#如果将原有的true改为false,需要先执行/usr/share/elasticsearch/bin/elasticsearch-node repurpose 清理数据

node.data: true

systemctl restart elasticsearch

2.7.2 集群缩容

从集群中删除两个节点node4和node5,在两个节点停止服务,即可自动退出集群

systemctl stop elasticsearch

2.7.3 Data 节点变为Coordinating 节点

将一个节点node3变为Coordinating 节点

在node3执行下面操作

vim /etc/elasticsearch/elasticsearch.yml

node.master: false#存储数据,默认值为true,此值为false则不存储数据而成为一个路由节点,如果将原有的true改为false,需要先执行 清理数据

node.data: false#无法启动

systemctl restart elasticsearch#清理数据

/usr/share/elasticsearch/bin/elasticsearch-node repurpose#重启

systemctl start elasticsearch

#注意:Coordinating 节点在 Cerebro 等插件中前端数据页面不显示,在nodes页才会显示出来

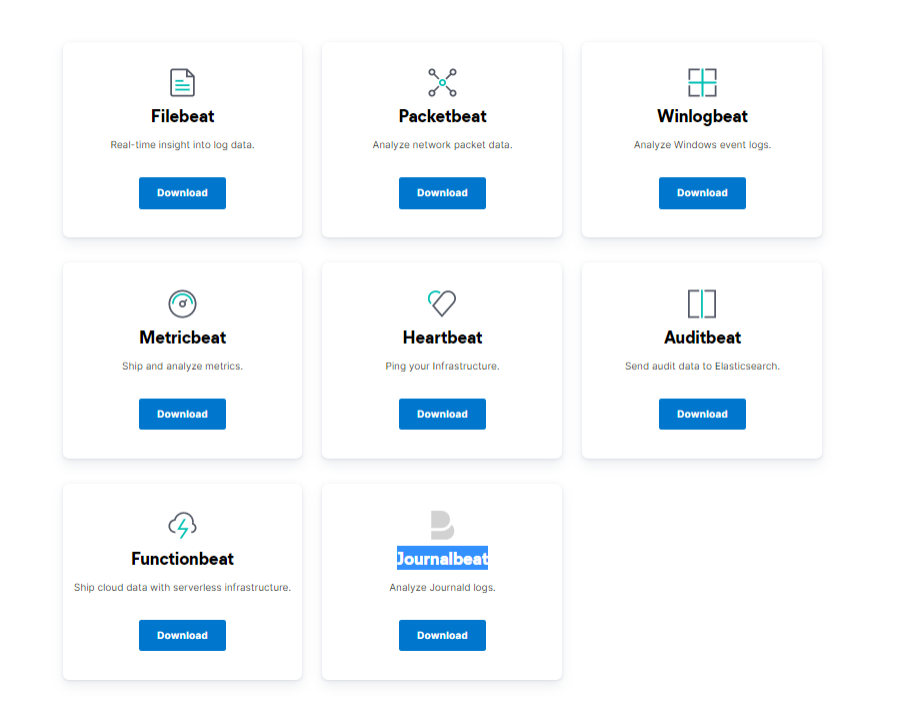

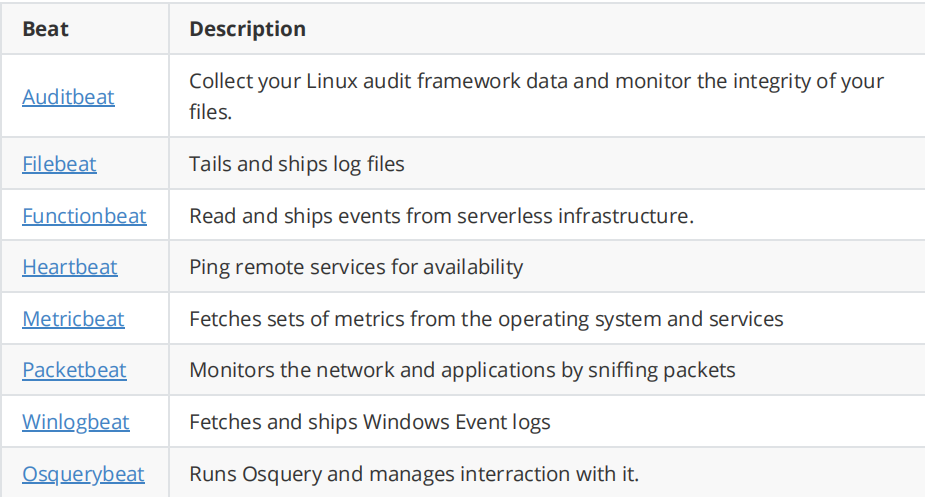

3 Beats 收集数据

Beats 是一个免费且开放的平台,集合了多种单一用途数据采集器。它们从成百上千或成千上万台机器和系统向 Logstash 或 Elasticsearch 发送数据。

虽然利用 logstash 就可以收集日志,功能强大,但由于 Logtash 是基于Java实现,需要在采集日志的主机上安装JAVA环境

logstash运行时最少也会需要额外的500M的以上的内存,会消耗比较多的内存和磁盘空间,可以采有基于Go开发的 Beat 工具代替 Logstash 收集日志,部署更为方便,而且只占用10M左右的内存空间及更小的磁盘空间。

官方链接:

https://www.elastic.co/cn/beats/#Github 链接

https://github.com/elastic/beats

https://www.elastic.co/cn/downloads/beats

Beats 是一些工具集,包括以下,其中 filebeat 应用最为广泛‘

filebeat: 收集日志文件数据。最常用的工具

packetbeat: 用于收集网络数据。一般用zabbix实现此功能

metricbeat: 从OS和服务收集指标数据,比如系统运行状态、CPU 内存利用率等。

winlogbeat: 从Windows平台日志收集工具。

heartbeat: 定时探测服务是否可用。支持ICMP、TCP 和 HTTP,也支持TLS、身份验证和代理

auditbeat: 收集审计日志

Functionbeat: 使用无服务器基础架构提供云数据。面向云端数据的无服务器采集器,处理云数据

注意: Beats 版本要和 Elasticsearch 相同的版本,否则可能会出错

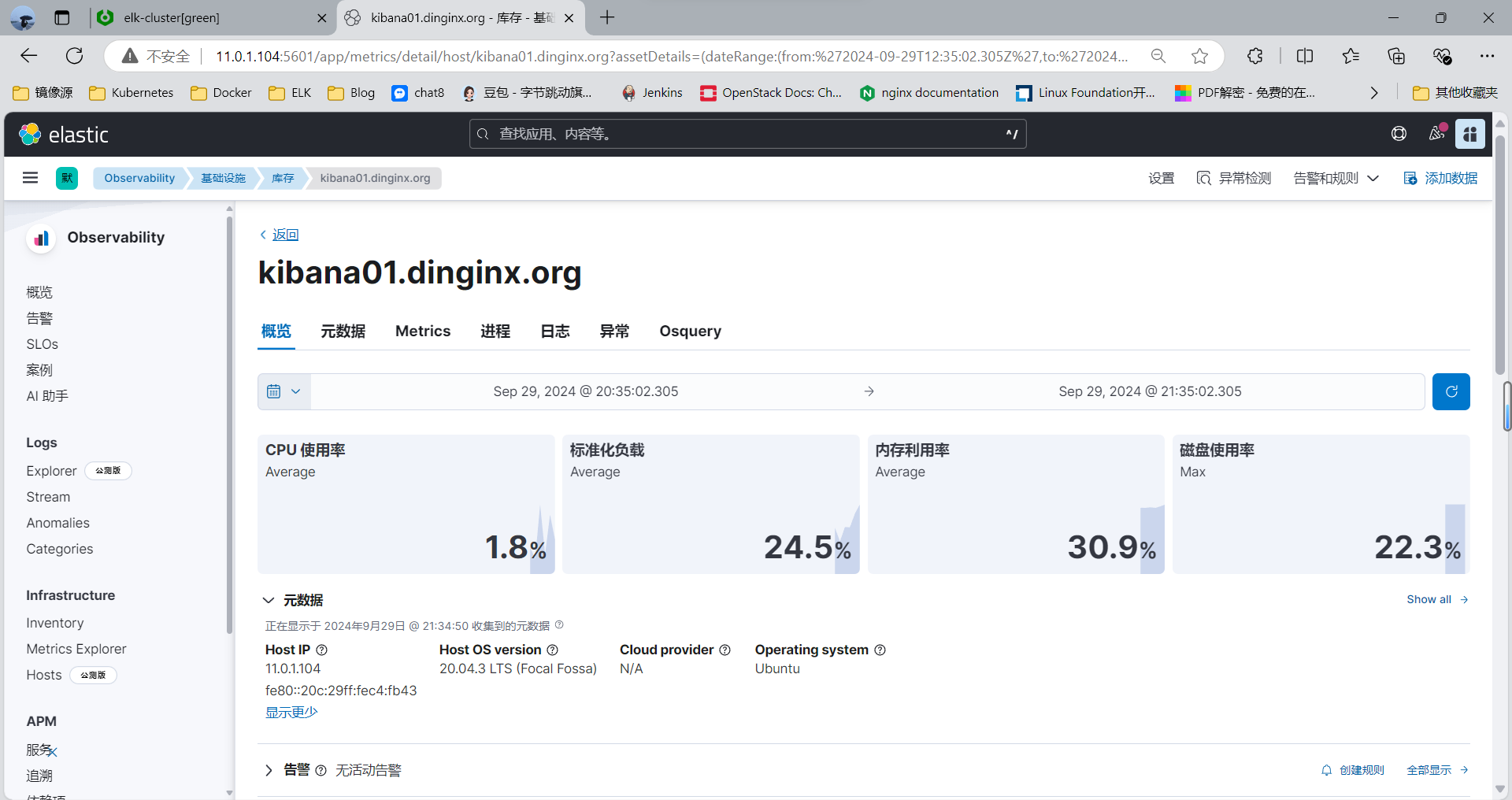

3.1 利用 Metricbeat 监控性能相关指标

Metricbeat 可以收集指标数据,比如系统运行状态、CPU、内存利用率等。

生产中一般用 zabbix 等专门的监控系统实现此功能

官方配置说明

https://www.elastic.co/guide/en/beats/metricbeat/current/configuring-howto-metricbeat.html

3.1.1 下载 metricbeat 并安装

#下载链接

https://www.elastic.co/cn/downloads/beats/metricbeat

https://mirrors.tuna.tsinghua.edu.cn/elasticstack/8.x/apt/pool/main/m/metricbeat/

https://mirrors.tuna.tsinghua.edu.cn/elasticstack/7.x/apt/pool/main/m/metricbeat/

范例:

[root@kibana01 ~]#ll metricbeat-8.14.3-amd64.deb -h

-rw-r--r-- 1 root root 53M Sep 23 22:10 metricbeat-8.14.3-amd64.deb

[root@kibana01 ~]#dpkg -i metricbeat-8.14.3-amd64.deb

3.1.2 修改配置

[root@kibana01 ~]# vim /etc/metricbeat/metricbeat.yml

#setup.kibana:

# host: "11.0.1.104:5601" #指向kabana服务器地址和端口,非必须项,即使不设置Kibana也可以通过ES获取Metrics信息

#-------------------------- Elasticsearch output ------------------------------

output.elasticsearch:

# Array of hosts to connect to.

hosts: ["11.0.1.101:9200","11.0.1.102:9200","11.0.1.103:9200"] #指向任意一个ELK集群节点即可[root@kibana01 ~]#grep -Ev '^#|^$' /etc/metricbeat/metricbeat.yml |grep -Ev '^.*#'

metricbeat.config.modules:path: ${path.config}/modules.d/*.ymlreload.enabled: false

setup.template.settings:index.number_of_shards: 1index.codec: best_compression

setup.kibana:host: "11.0.1.104:5601"

output.elasticsearch:hosts: ["11.0.1.101:9200","11.0.1.102:9200","11.0.1.103:9200"]preset: balanced

processors:- add_host_metadata: ~- add_cloud_metadata: ~- add_docker_metadata: ~- add_kubernetes_metadata: ~

3.1.3 启动服务

[root@kibana01 ~]#systemctl enable --now metricbeat.service

[root@kibana01 ~]#systemctl is-active metricbeat.service

active

3.2 利用 Heartbeat 监控

heartbeat 用来定时探测服务是否正常运行。支持ICMP、TCP 和 HTTP,也支持TLS、身份验证和代理

官方heartbeat配置文档

https://www.elastic.co/guide/en/beats/heartbeat/current/configuring-howto-heartbeat.html

3.2.1 下载并安装

#下载链接

https://www.elastic.co/cn/downloads/beats/heartbeat

https://mirrors.tuna.tsinghua.edu.cn/elasticstack/8.x/apt/pool/main/h/heartbeat-elastic/

https://mirrors.tuna.tsinghua.edu.cn/elasticstack/7.x/apt/pool/main/h/heartbeat-elastic/#新版

[root@kibana01 ~]#ll

total 1043708

drwx------ 6 root root 4096 Oct 2 13:13 ./

drwxr-xr-x 21 root root 4096 Sep 7 2023 ../

-rw-r--r-- 1 root root 8894 Sep 29 09:53 1

-rw------- 1 root root 4710 Sep 29 22:53 .bash_history

-rw-r--r-- 1 root root 3125 Nov 30 2023 .bashrc

drwx------ 2 root root 4096 Oct 2 2023 .cache/

-rw-r--r-- 1 root root 588546352 Sep 23 21:53 elasticsearch-8.14.3-amd64.deb

-rw-r--r-- 1 root root 50436520 Sep 23 22:07 filebeat-8.14.3-amd64.deb

-rw-r--r-- 1 root root 33725040 Sep 23 22:09 heartbeat-8.14.3-amd64.deb

-rw-r--r-- 1 root root 3411.0.10 Sep 23 21:57 kibana-8.14.3-amd64.deb

-rw-r--r-- 1 root root 54877340 Sep 23 22:10 metricbeat-8.14.3-amd64.deb

-rw-r--r-- 1 root root 161 Dec 5 2019 .profile

drwx------ 3 root root 4096 Sep 7 2023 snap/

drwx------ 2 root root 4096 Sep 7 2023 .ssh/

drwxr-xr-x 2 root root 4096 Oct 2 2023 .vim/

-rw------- 1 root root 12996 Sep 29 23:42 .viminfo

-rw------- 1 root root 507 Oct 2 13:13 .Xauthority

[root@kibana01 ~]#dpkg -i heartbeat-8.14.3-amd64.deb#准备需要监控的服务httpd#修改配置文件

[root@kibana01 ~]#grep -Ev '^(.*#)|^$' /etc/heartbeat/heartbeat.yml

heartbeat.config.monitors:path: ${path.config}/monitors.d/*.ymlreload.enabled: falsereload.period: 5s

heartbeat.monitors:

- type: httpenabled: true #改为trueid: dingbh.topname: dingbh.topurls: ["http://11.0.1.104:80"] #被监控http服务域名schedule: '@every 10s'- type: tcpid: myhost-tcp-echoname: My Host TCP Echohosts: ["11.0.1.105:80"] # default TCP Echo Protocolschedule: '@every 5s'

- type: icmp #添加下面5行,用于监控ICMPid: ping-myhostname: My Host Pinghosts: ["11.0.1.100"]schedule: '*/5 * * * * * *'setup.template.settings:index.number_of_shards: 1index.codec: best_compression

setup.kibana:host: "11.0.1.104:5601" #kibana服务器地址

output.elasticsearch:hosts: ["11.0.1.101:9200","11.0.1.102:9200","11.0.1.103:9200"] #es服务器IPpreset: balanced

processors:- add_observer_metadata:#启动服务

systemctl enable --now heartbeat-elastic.service

3.2.2 head 插件查看索引

3.2.3 通过 Kibana 查看收集的性能指标

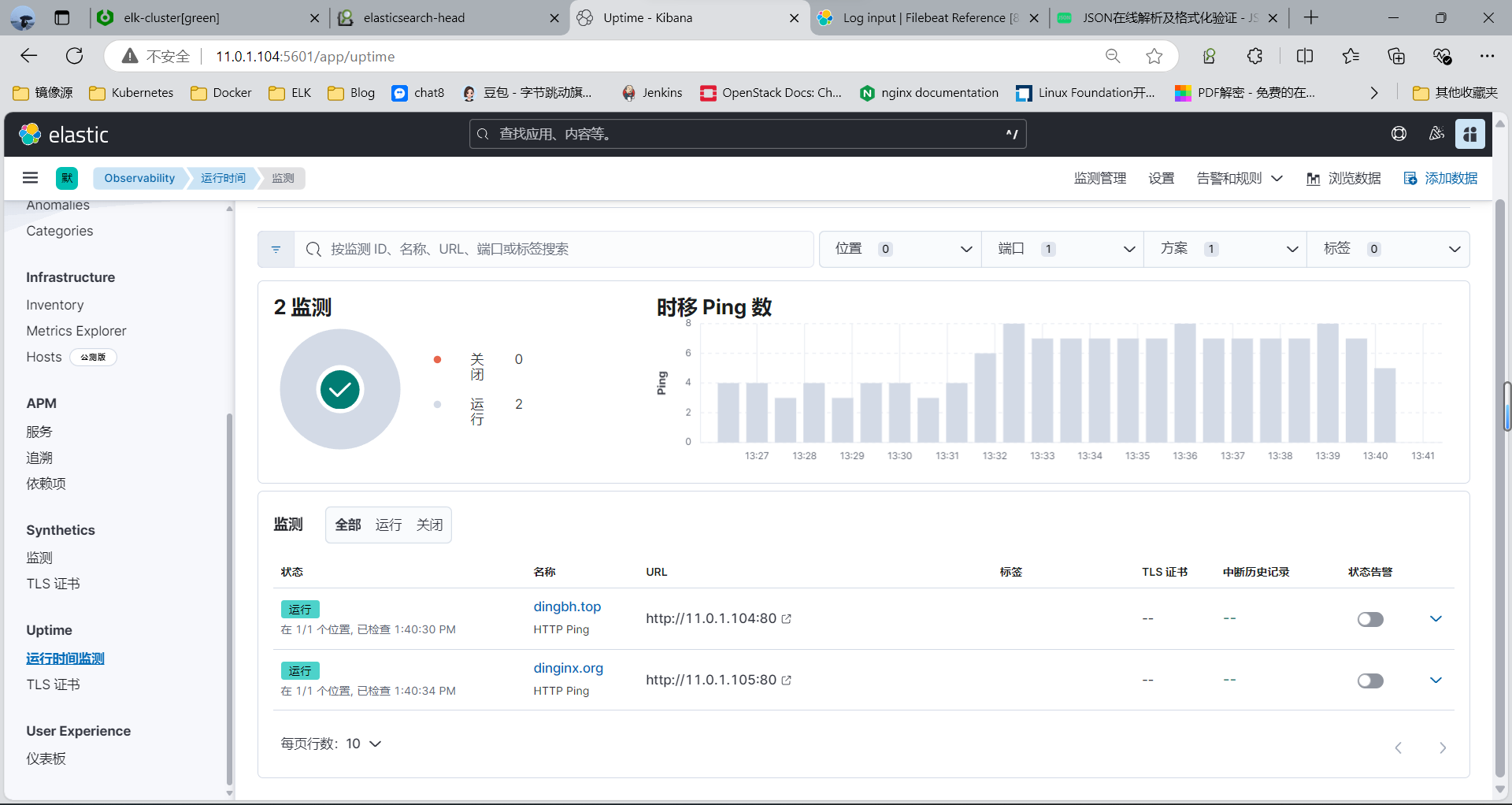

通过Kibana 可以看到主机的状态

新版

运行时间 — 监测

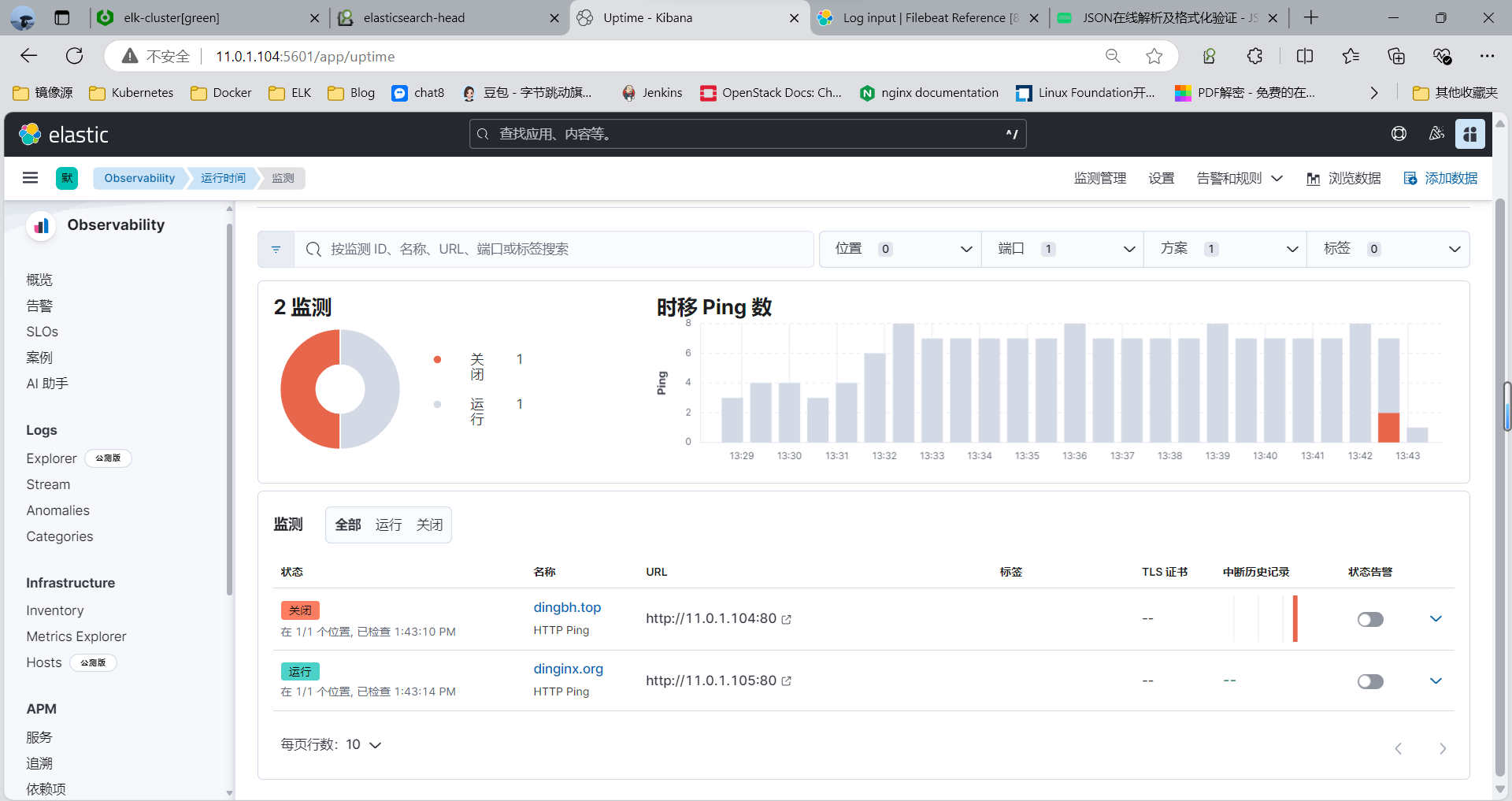

3.2.4 停止监控的服务再观察 Kibana

#Down 机后Refresh ,可以看到下面的橙色

systemctl stop nginx

3.3 利用 Filebeat 收集日志

Filebeat 是用于转发和集中日志数据的轻量级传送程序。作为服务器上的代理安装,Filebeat监视您指定的日志文件或位置,收集日志事件,并将它们转发到Elasticsearch或Logstash进行索引。

Logstash 也可以直接收集日志,但需要安装JDK并且会占用至少500M 以上的内存

生产一般使用filebeat代替logstash, 基于go开发,部署方便,重要的是只需要10M多内存,比较节约资源.

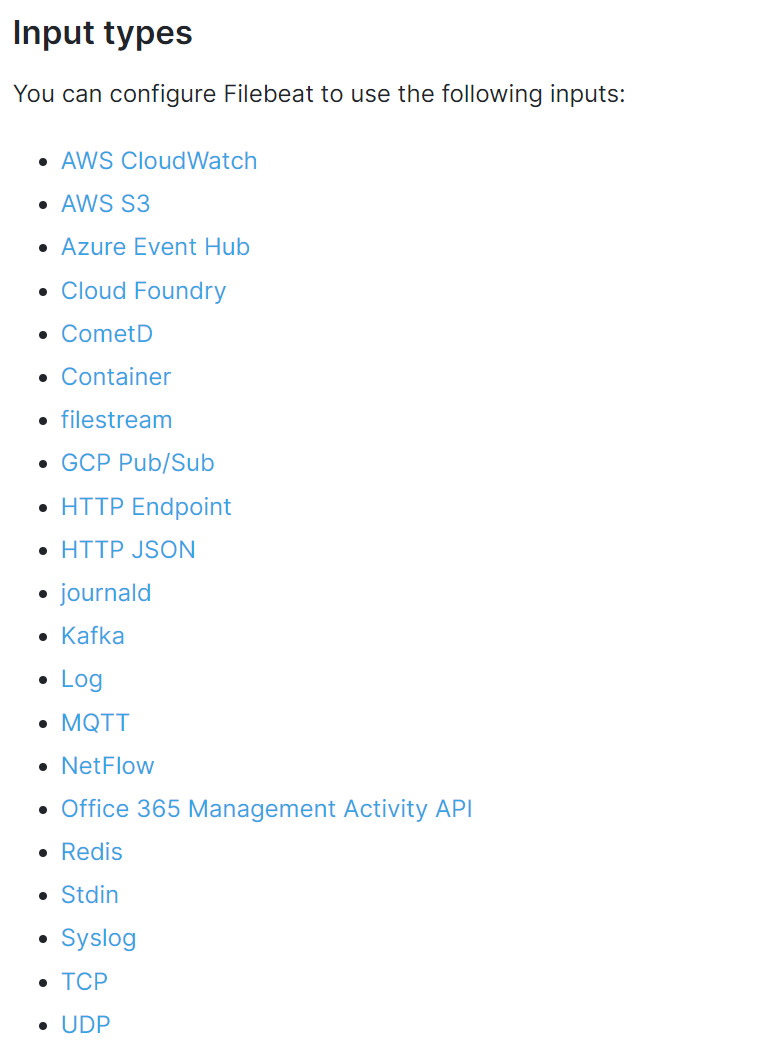

filebeat 支持从日志文件,Syslog,Redis,Docker,TCP,UDP,标准输入等读取数据,对数据做简单处理,再输出至Elasticsearch,logstash,Redis,Kafka等

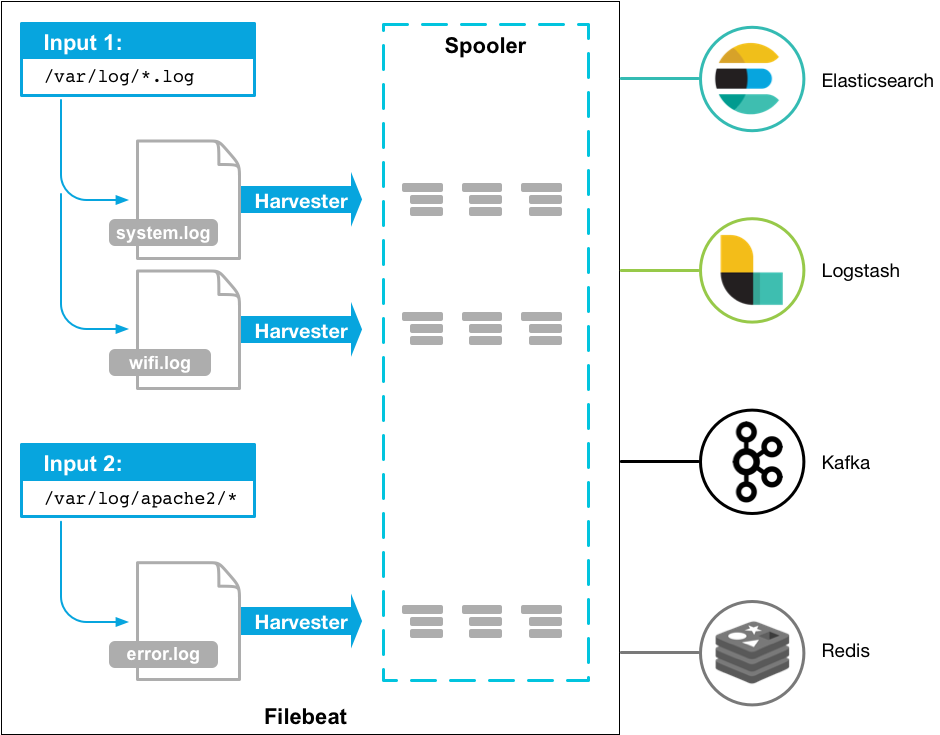

Filebeat的工作方式如下:

- 启动Filebeat时,它将启动一个或多个输入源,这些输入将在为日志数据指定的位置中查找。

- 对于Filebeat所找到的每个日志,Filebeat都会启动收集器harvester进程。

- 每个收集器harvester都读取一个日志以获取新内容,并将新日志数据发送到libbeat

- libbeat会汇总事件并将汇总的数据发送到为Filebeat配置的输出。

Filebeat 官方说明

https://www.elastic.co/guide/en/beats/filebeat/current/filebeat-overview.html

https://www.elastic.co/guide/en/beats/filebeat/current/configuring-howto-filebeat.html

输入和输入官方说明

https://www.elastic.co/guide/en/beats/filebeat/current/configuration-filebeat-options.html

https://www.elastic.co/guide/en/beats/filebeat/current/configuring-output.html

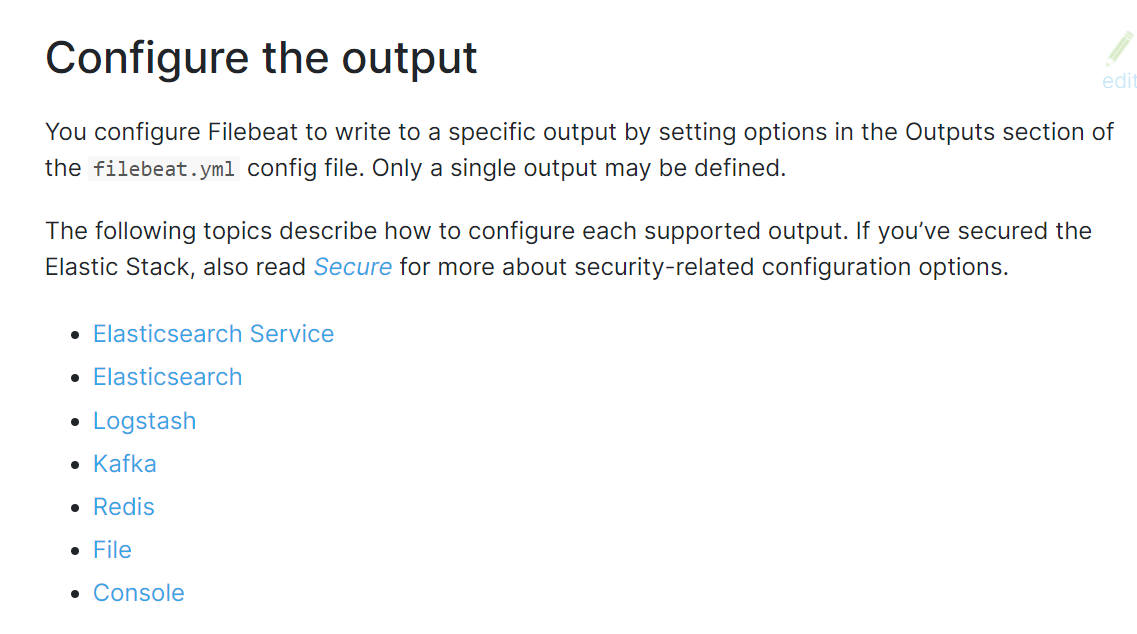

注意: Filebeat 支持多个输入,但不支持同时有多个输出,如果多输出,会报错如下

Exiting: error unpacking config data: more than one namespace configured accessing 'output' (source:'/etc/filebeat/stdout_file.yml')

3.3.1 安装 Filebeat 和配置说明

下载链接

https://www.elastic.co/cn/downloads/beats/filebeat

3.3.1.1 安装 Filebeat

[root@kibana01 ~]#ll filebeat-8.14.3-amd64.deb -h

-rw-r--r-- 1 root root 49M Sep 23 14:07 filebeat-8.14.3-amd64.deb

[root@kibana01 ~]#dpkg -i filebeat-8.14.3-amd64.deb #默认没有启动

[root@kibana01 ~]#systemctl enable --now filebeat.service #filebeat以root身份启动

[root@kibana01 ~]#systemctl status filebeat.service |ps aux|grep filebeat

root 34670 1.3 2.5 1842004 101976 ? Ssl 14:05 0:00 /usr/share/filebeat/bin/filebeat --environment systemd -c /etc/filebeat/filebeat.yml --path.home /usr/share/filebeat --path.config /etc/filebeat --path.data /var/lib/filebeat --path.logs /var/log/filebeat

root 34706 0.0 0.0 6432 660 pts/1 S+ 14:06 0:00 grep --color=auto filebeat#先停止服务,方便后续调试

[root@kibana01 ~]#systemctl stop filebeat

3.3.1.2 Filebeat 配置

配置文件官方说明

https://www.elastic.co/guide/en/beats/filebeat/current/configuring-howto-filebeat.html

https://www.elastic.co/guide/en/beats/filebeat/8.3/configuration-general-options.html

Filebeat的 默认配置文件是/etc/filebeat/filebeat.yml,遵循YAML语法。常见配置包括如下

- Filebeat

- Output

- Shipper

- Logging(可选)

- Run Options(可选)

filebeat.yml的格式说明

{input_type: log

#指定输入类型

paths

#支持基本的正则,所有golang glob都支持,支持/var/log/*/*.log

encoding

#plain, latin1, utf-8, utf-16be-bom, utf-16be, utf-16le, big5, gb18030, gbk, hzgb-2312,

euc-kr, euc-jp, iso-2022-jp, shift-jis, and so on

exclude_lines

#支持正则 排除匹配的行,如果有多行,合并成一个单一行来进行过滤

include_lines

#支持正则 include_lines执行完毕之后会执行exclude_lines。

exclude_files

#支持正则 排除匹配的文件

exclude_files: ['.gz$']

tags

#列表中添加标签,用过过滤

filebeat.inputs:

- paths: ["/var/log/app/*.json"]

tags: ["json"]

fields

#可选字段,选择额外的字段进行输出

#可以是标量值,元组,字典等嵌套类型ignore_older

#可以指定Filebeat忽略指定时间段以外修改的日志内容

#文件被忽略之前,确保文件不在被读取,必须设置ignore older时间范围大于close_inactive

#如果一个文件正在读取时候被设置忽略,它会取得到close_inactive后关闭文件,然后文件被忽略

close_*

#close_ *配置选项用于在特定标准或时间之后关闭harvester。 关闭harvester意味着关闭文件处理程

序。 如果在harvester关闭后文件被更新,则在scan_frequency过后,文件将被重新拾取。 但是,如果

在harvester关闭时移动或删除文件,Filebeat将无法再次接收文件,并且harvester未读取的任何数据都

将丢失。

close_inactive

#启动选项时,如果在制定时间没有被读取,将关闭文件句柄

#读取的最后一条日志定义为下一次读取的起始点,而不是基于文件的修改时间

#如果关闭的文件发生变化,一个新的harverster将在scan_frequency运行后被启动

#建议至少设置一个大于读取日志频率的值,配置多个prospector来实现针对不同更新速度的日志文件

#使用内部时间戳机制,来反映记录日志的读取,每次读取到最后一行日志时开始倒计时

#使用2h 5m 来表示

recursive_glob.enabled

#递归匹配日志文件,默认false

close_rename

#当选项启动,如果文件被重命名和移动,filebeat关闭文件的处理读取

close_removed

#当选项启动,文件被删除时,filebeat关闭文件的处理读取

#这个选项启动后,必须启动clean_removed

close_eof

#适合只写一次日志的文件,然后filebeat关闭文件的处理读取

close_timeout

#当选项启动时,filebeat会给每个harvester设置预定义时间,不管这个文件是否被读取,达到设定时间

后,将被关闭

close_timeout 不能等于ignore_older,会导致文件更新时,不会被读取

#如果output一直没有输出日志事件,这个timeout是不会被启动的,至少要要有一个事件发送,然后

haverter将被关闭

#设置0 表示不启动

clean_inactived

#从注册表文件中删除先前收获的文件的状态

#设置必须大于ignore_older+scan_frequency,以确保在文件仍在收集时没有删除任何状态

#配置选项有助于减小注册表文件的大小,特别是如果每天都生成大量的新文件

#此配置选项也可用于防止在Linux上重用inode的Filebeat问题

clean_removed

#启动选项后,如果文件在磁盘上找不到,将从注册表中清除filebeat

#如果关闭close removed 必须关闭clean removed

scan_frequency

#prospector检查指定用于收获的路径中的新文件的频率,默认10s

document_type

#类型事件,被用于设置输出文档的type字段,默认是logharvester_buffer_size

#每次harvester读取文件缓冲字节数,默认是16384

max_bytes

#对于多行日志信息,很有用,最大字节数

json

#这些选项使Filebeat解码日志结构化为JSON消息,逐行进行解码json

keys_under_root

#设置key为输出文档的顶级目录

overwrite_keys

#覆盖其他字段

add_error_key

#定义一个json_error

message_key

#指定json 关键建作为过滤和多行设置,与之关联的值必须是string

multiline

#控制filebeat如何处理跨多行日志的选项,多行日志通常发生在java堆栈中

multiline.pattern: '^\['

multiline.negate: true

multiline.match: after

上面匹配是将多行日志所有不是以[符号开头的行合并成一行它可以将下面的多行日志进行合并成一行

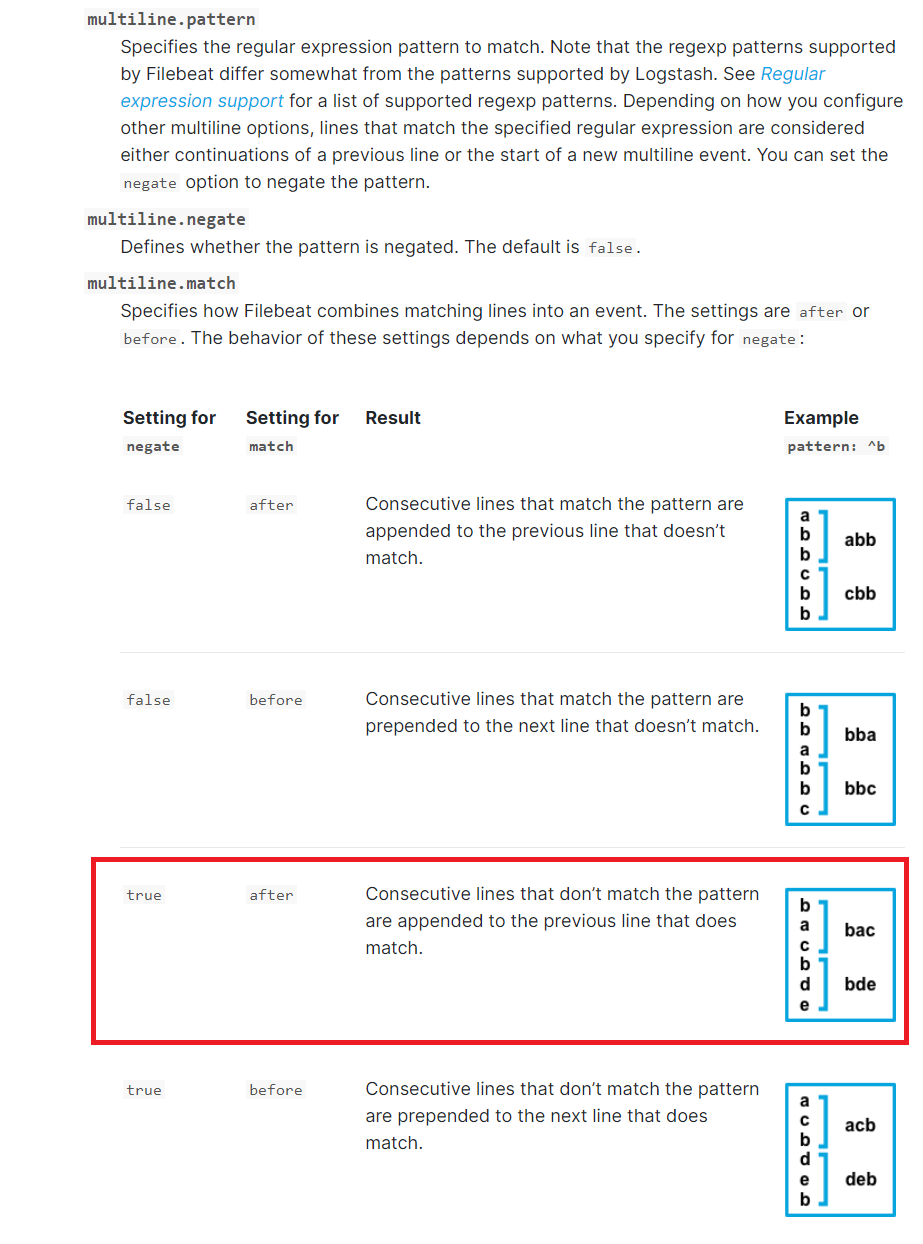

multiline.pattern

指定匹配的正则表达式,filebeat支持的regexp模式与logstash支持的模式有所不同

pattern regexp

multiline.negate

定义上面的模式匹配条件的动作是 否定的,默认是false

假如模式匹配条件'^b',默认是false模式,表示讲按照模式匹配进行匹配 将不是以b开头的日志行进行合并

如果是true,表示将不以b开头的日志行进行合并

multiline.match

#指定Filebeat如何将匹配行组合成事件,在之前或者之后,取决于上面所指定的negate

multiline.max_lines

#可以组合成一个事件的最大行数,超过将丢弃,默认500

multiline.timeout

#定义超时时间,如果开始一个新的事件在超时时间内没有发现匹配,也将发送日志,默认是5s

tail_files

#如果此选项设置为true,Filebeat将在每个文件的末尾开始读取新文件,而不是开头

#此选项适用于Filebeat尚未处理的文件

symlinks

#符号链接选项允许Filebeat除常规文件外,可以收集符号链接。收集符号链接时,即使为符号链接的路径,

Filebeat也会打开并读取原始文件。

backoff

#backoff选项指定Filebeat如何积极地抓取新文件进行更新。默认1s

#backoff选项定义Filebeat在达到EOF之后再次检查文件之间等待的时间。

max_backoff#在达到EOF之后再次检查文件之前Filebeat等待的最长时间

backoff_factor

#指定backoff尝试等待时间几次,默认是2

harvester_limit

#harvester_limit选项限制一个prospector并行启动的harvester数量,直接影响文件打开数

enabled

#控制prospector的启动和关闭

filebeat global

spool_size

#事件发送的阀值,超过阀值,强制刷新网络连接

filebeat.spool_size: 2048

publish_async

#异步发送事件,实验性功能

idle_timeout

#事件发送的超时时间,即使没有超过阀值,也会强制刷新网络连接

filebeat.idle_timeout: 5s

registry_file

#注册表文件的名称,如果使用相对路径,则被认为是相对于数据路径

#有关详细信息,请参阅目录布局部分 默认值为${path.data}/registry

filebeat.registry_file: registry

config_dir

#包含额外的prospector配置文件的目录的完整路径

#每个配置文件必须以.yml结尾

#每个配置文件也必须指定完整的Filebeat配置层次结构,即使只处理文件的prospector部分。

#所有全局选项(如spool_size)将被忽略

#必须是绝对路径

filebeat.config_dir: path/to/configs

shutdown_timeout

#Filebeat等待发布者在Filebeat关闭之前完成发送事件的时间。

Filebeat General

name

#设置名字,如果配置为空,则用该服务器的主机名

name: "my-shipper"

queue_size

#单个事件内部队列的长度 默认1000

bulk_queue_size

#批量事件内部队列的长度

max_procs

#设置最大使用cpu数量

范例:添加新字段

3.3.2 案例: 从标准输入读取再输出至标准输出

3.3.2.1 创建配置

[root@kibana01 ~]# cat /etc/filebeat/modules.d/stdin.yml

filebeat.inputs:

- type: stdinenabled: true

output.console:pretty: trueenable: true#配置检查

[root@kibana01 ~]# filebeat test config -c /etc/filebeat/modules.d/stdin.yml

Config OK

3.3.2.2 执行读取

#从指定文件中读取配置

#-e 表示Log to stderr and disable syslog/file output

[root@kibana01 ~]#filebeat -e -c /etc/filebeat/modules.d/stdin.yml ... ...hello world #标准输入内容,以下为生成的数据

{"@timestamp": "2024-10-02T06:25:26.909Z","@metadata": {"beat": "filebeat","type": "_doc","version": "8.14.3"},"input": {"type": "stdin"},"ecs": {"version": "8.0.0"},"host": {"name": "kibana01.dinginx.org"},"agent": {"type": "filebeat","version": "8.14.3","ephemeral_id": "5eb661df-0710-429e-8b00-987095f5e3bf","id": "c5e80544-fb8d-4ec1-a3de-d954f88c429a","name": "kibana01.dinginx.org"},"message": "hello world", #真正的数据只有此行,其它都是Filebeat添加的元数据"log": {"offset": 0,"file": {"path": ""}}

}

{"log.level":"error","@timestamp":"2024-10-02T14:25:36.913+0800","log.origin":{"function":"github.com/elastic/beats/v7/filebeat/input/file.(*States).CleanupWith","file.name":"file/states.go","file.line":125},"message":"State for should have been dropped, but couldn't as state is not finished.","service.name":"filebeat","ecs.version":"1.6.0"}......3.3.3 案例: 从标准输入读取再输出至 Json 格式的文件

3.3.3.1 创建配置

#8.X版本生成的文件名,json.keys_under_root: false

[root@kibana01 ~]#cat /etc/filebeat/modules.d/stdout_file.yml

filebeat.inputs:

- type: stdinjson.keys_under_root: true #添加此段

output.console:pretty: trueenable: true[root@kibana01 ~]#filebeat test config -c /etc/filebeat/modules.d/stdout_file.yml

Config OK#输入如下Json格式信息,再回车后输出如下

{"name" : "dingbaohang", "age" : "18", "phone" : "0123456789"}[root@kibana01 ~]#filebeat -c /etc/filebeat/modules.d/stdout_file.yml

{"name" : "dingbaohang", "age" : "18", "phone" : "0123456789"}

{"@timestamp": "2024-10-02T06:43:36.118Z","@metadata": {"beat": "filebeat","type": "_doc","version": "8.14.3"},"input": {"type": "stdin"},"ecs": {"version": "8.0.0"},"host": {"name": "kibana01.dinginx.org"},"agent": {"version": "8.14.3","ephemeral_id": "0ee3848b-c4cd-4126-9515-90833f26c935","id": "c5e80544-fb8d-4ec1-a3de-d954f88c429a","name": "kibana01.dinginx.org","type": "filebeat"},"name": "dingbaohang", #生成独立字段的Json数据"log": {"file": {"path": ""},"offset": 0},"age": "18","phone": "0123456789"

}#查看log文件

[root@kibana01 ~]#cat /tmp/filebeat.log-20241002.ndjson |jq{"@timestamp": "2024-10-02T07:10:06.248Z","@metadata": {"beat": "filebeat","type": "_doc","version": "8.14.3"},"message": "hello world!","input": {"type": "stdin"},"ecs": {"version": "8.0.0"},"host": {"name": "kibana01.dinginx.org"},"agent": {"ephemeral_id": "25cb3a18-6161-40ce-a158-53de61747dfb","id": "c5e80544-fb8d-4ec1-a3de-d954f88c429a","name": "kibana01.dinginx.org","type": "filebeat","version": "8.14.3"},"log": {"offset": 0,"file": {"path": ""}},"json": {}

}3.3.4 案例: 从文件读取再输出至标准输出

https://www.elastic.co/guide/en/beats/filebeat/current/filebeat-input-log.html

filebeat 会将每个文件的读取数据的相关信息记录在/var/lib/filebeat/registry/filebeat/log.json文件中,可以实现日志采集的持续性,而不会重复采集

3.3.4.1 创建配置

[root@kibana01 ~]#cat /etc/filebeat/modules.d/file.yml

filebeat.inputs:

- type: logjson.keys_under_root: truepaths:#- /var/log/syslog- /var/log/test.log

output.console:pretty: trueenable: true[root@kibana01 ~]#filebeat test config -c /etc/filebeat/modules.d/file.yml

Config OK3.3.4.2 执行读取

[root@kibana01 ~]#filebeat -e -c /etc/filebeat/modules.d/file.yml......sed -n '3p' /var/log/syslog > /var/log/test.log #模拟数据生成......{"@timestamp": "2024-10-02T07:32:27.095Z","@metadata": {"beat": "filebeat","type": "_doc","version": "8.14.3"},"log": {"offset": 128,"file": {"path": "/var/log/test.log"}},"json": {},"message": "Oct 2 12:59:06 kibana01 systemd[1]: man-db.service: Succeeded.","input": {"type": "log"},"ecs": {"version": "8.0.0"},"host": {"name": "kibana01.dinginx.org"},"agent": {"ephemeral_id": "e198106e-a522-43ed-ad17-cce6a551f14e","id": "c5e80544-fb8d-4ec1-a3de-d954f88c429a","name": "kibana01.dinginx.org","type": "filebeat","version": "8.14.3"}

}#filebeat记录日志文件读取的当前位置,以防止重复读取日志

[root@kibana01 ~]#cat /var/lib/filebeat/registry/filebeat/log.json

{"op":"set","id":1}

{"k":"filebeat::logs::","v":{"identifier_name":"","id":"","prev_id":"","source":"","timestamp":[2062186294301,1727850026],"ttl":0,"type":"","FileStateOS":{"inode":0,"device":0},"offset":1}}

{"op":"set","id":2}

{"k":"filebeat::logs::","v":{"type":"","FileStateOS":{"inode":0,"device":0},"identifier_name":"","id":"","offset":1,"timestamp":[2062186294301,1727850026],"ttl":0,"prev_id":"","source":""}}

{"op":"set","id":3}

{"k":"filebeat::logs::native::0-0","v":{"id":"native::0-0","prev_id":"","source":"","offset":1,"FileStateOS":{"device":0,"inode":0},"timestamp":[2062186294301,1727850026],"ttl":-2,"type":"","identifier_name":"native"}}

{"op":"set","id":4}

{"k":"filebeat::logs::","v":{"ttl":0,"type":"","FileStateOS":{"inode":0,"device":0},"id":"","timestamp":[2061662661557,1727850056],"offset":6,"identifier_name":"","prev_id":"","source":""}}

{"op":"set","id":5}

{"k":"filebeat::logs::native::0-0","v":{"offset":1,"ttl":-2,"type":"","FileStateOS":{"inode":0,"device":0},"id":"native::0-0","prev_id":"","source":"","timestamp":[2062186294301,1727850026],"identifier_name":"native"}}

{"op":"set","id":6}

{"k":"filebeat::logs::","v":{"type":"","identifier_name":"","timestamp":[2061662661557,1727850056],"ttl":0,"FileStateOS":{"inode":0,"device":0},"id":"","prev_id":"","source":"","offset":6}}

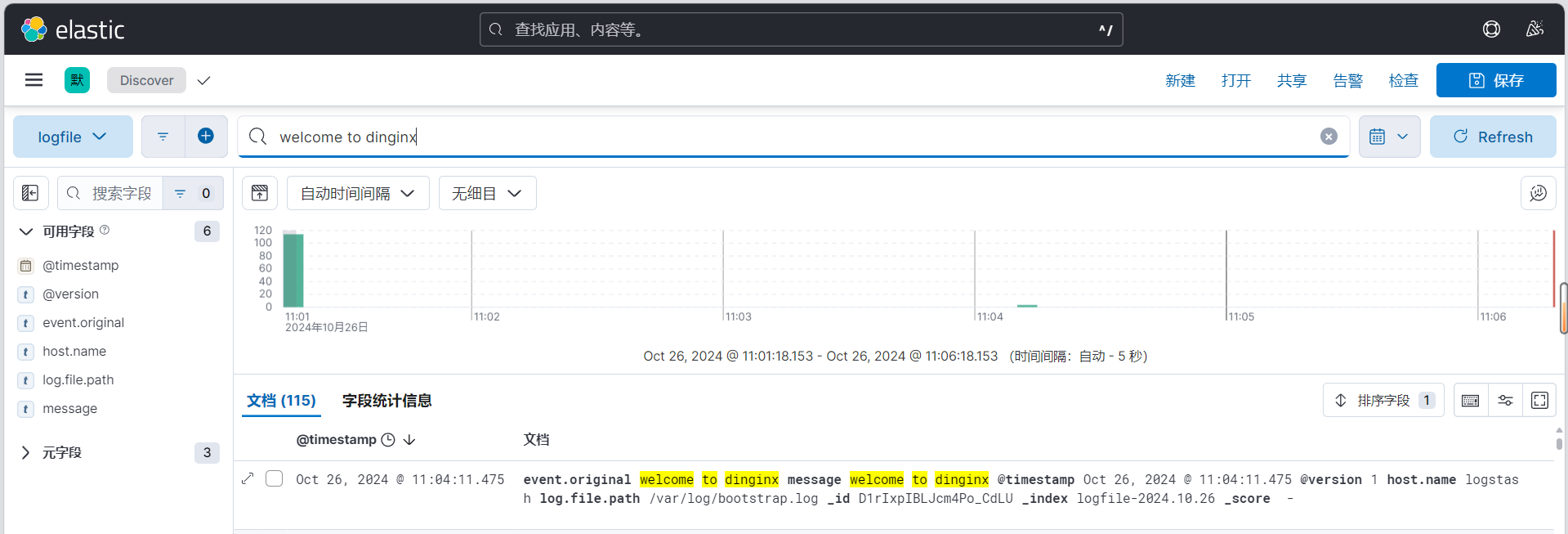

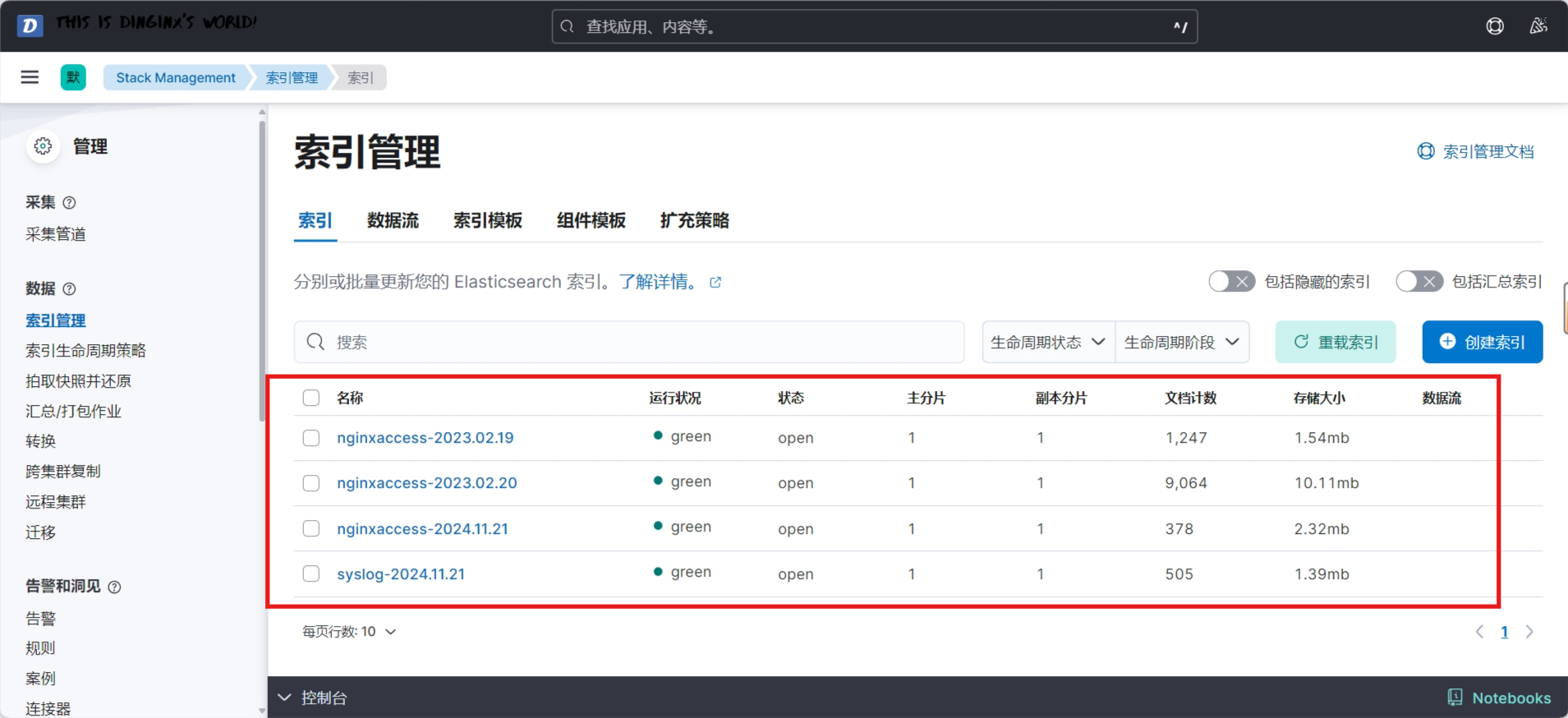

{"op":"set","id":7}......3.3.5 案例: 利用 Filebeat 收集系统日志到 ELasticsearch

https://www.elastic.co/guide/en/beats/filebeat/current/elasticsearch-output.html

Filebeat收集的日志在Elasticsearch中默认生成的索引名称为

#新版

.ds-filebeat-<版本>-<时间>-<ID>

#旧版

filebeat-<版本>-<时间>-<ID>

3.3.5.1 修改配置

#修改syslog.conf

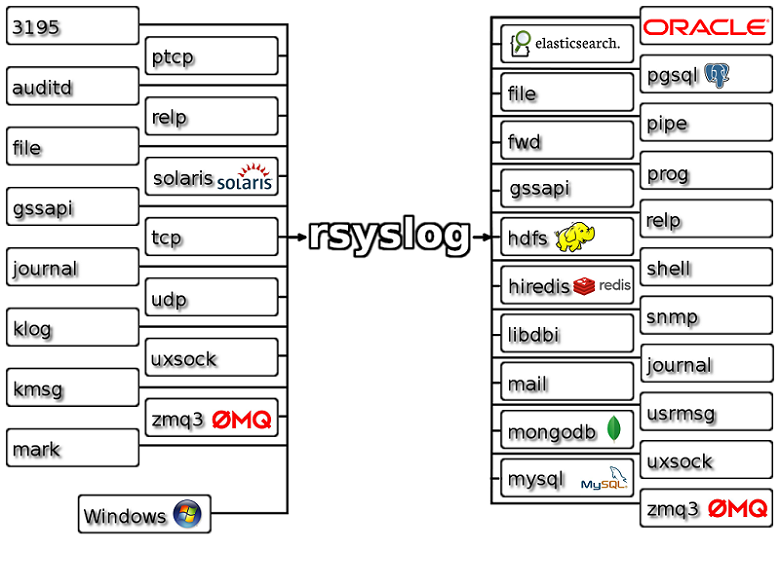

[root@kibana01 /etc/filebeat]# vim /etc/rsyslog.conf

*.* /var/log/system.log

[root@kibana01 /etc/filebeat]# systemctl restart rsyslog.service[root@kibana01 /etc/filebeat]# cat filebeat.yml

filebeat.inputs:

- type: logenabled: true #开启日志paths:- /var/log/system.log #指定收集的日志文件#-------------------------- Elasticsearch output ------------------------------

output.elasticsearch:hosts: ["11.0.1.101:9200","11.0.1.102:9200","11.0.1.103:9200"] #指定ELK集群任意节点的地址和端口,多个地址容错index: "system104-%{[agent.version]}-%{+yyyy.MM.dd}" # 定义索引名称模板,格式为 "system104-Filebeat版本号-日期"setup.ilm.enabled: false # 禁用 Elasticsearch 的 ILM(Index Lifecycle Management)功能

setup.template.name: "system104" # 定义自定义模板的名称为 "system104"

setup.template.pattern: "system104-*" # 指定模板匹配的索引模式,适用于所有以 "system104-" 开头的索引setup.template.settings: # 定义 Elasticsearch 模板的配置选项index.number_of_shards: 3 # 设置每个索引的主分片数量为 3index.number_of_replicas: 1 # 设置每个分片的副本数量为 1#启动服务

[root@kibana01 /etc/filebeat]# systemctl enable --now filebeat.service

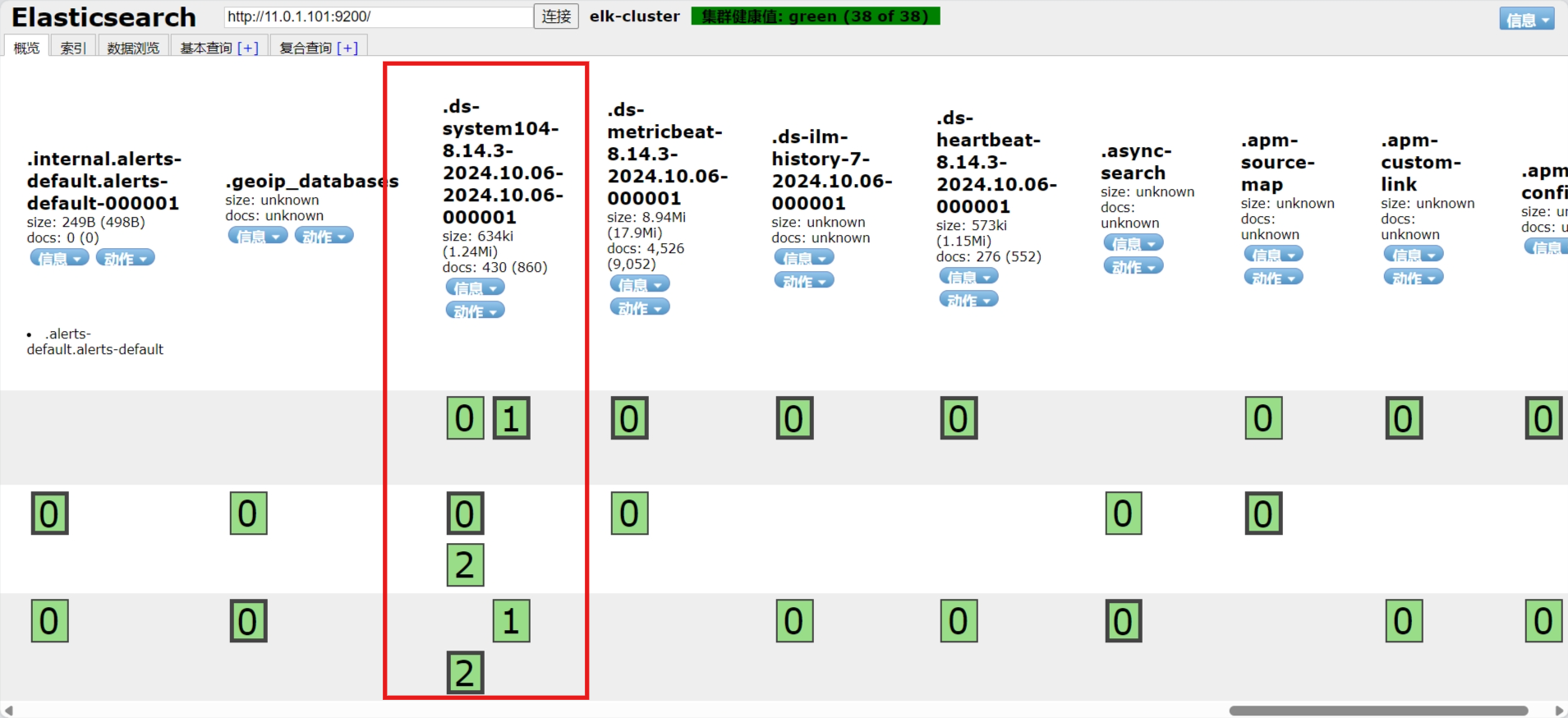

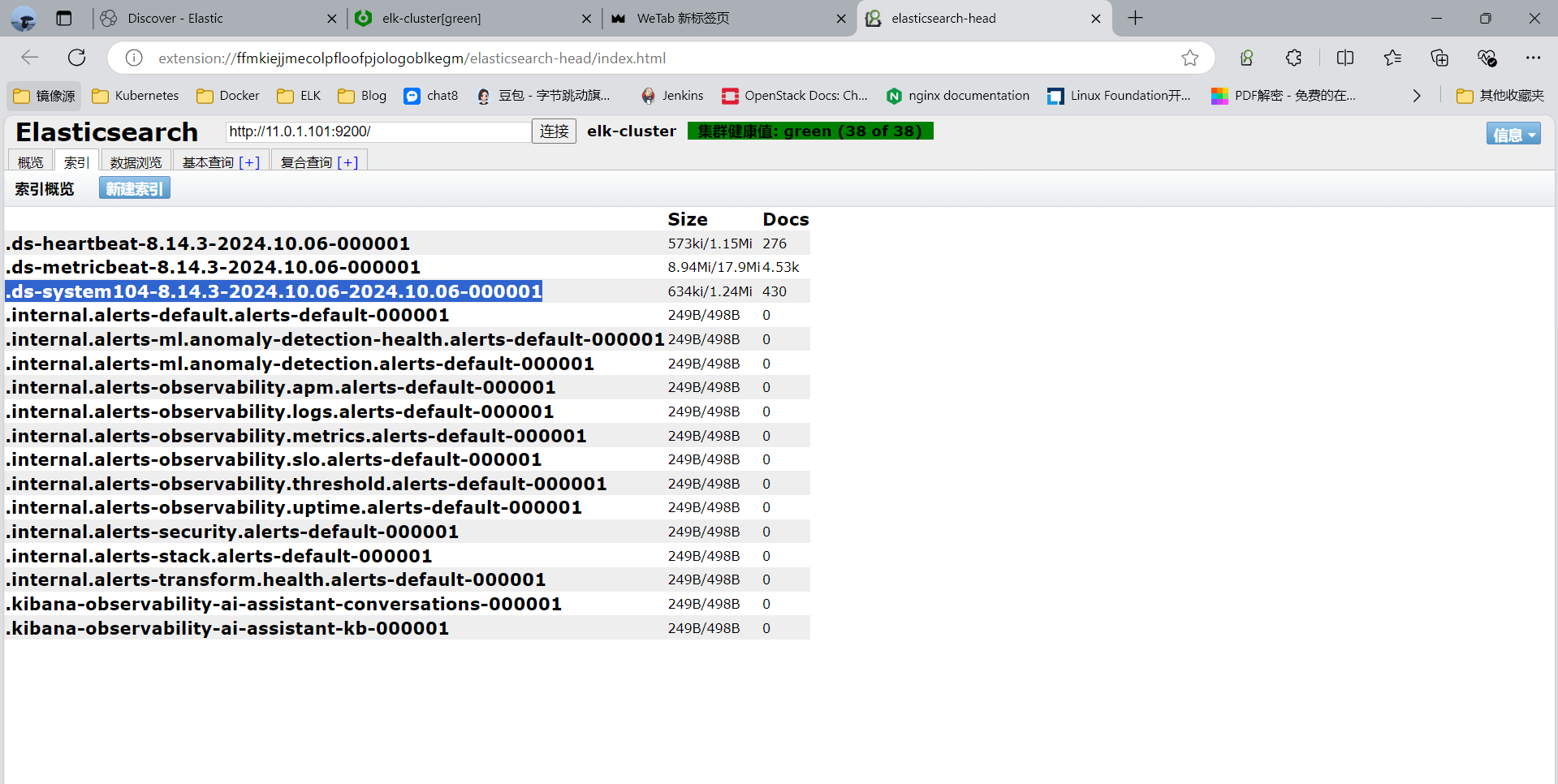

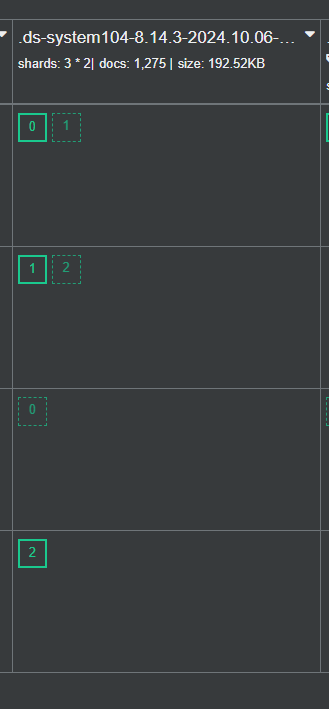

3.3.5.2 插件查看索引

测试用ssh登录,用插件查看日志

注意:8.X版后索引名默认为.ds-filebeat—

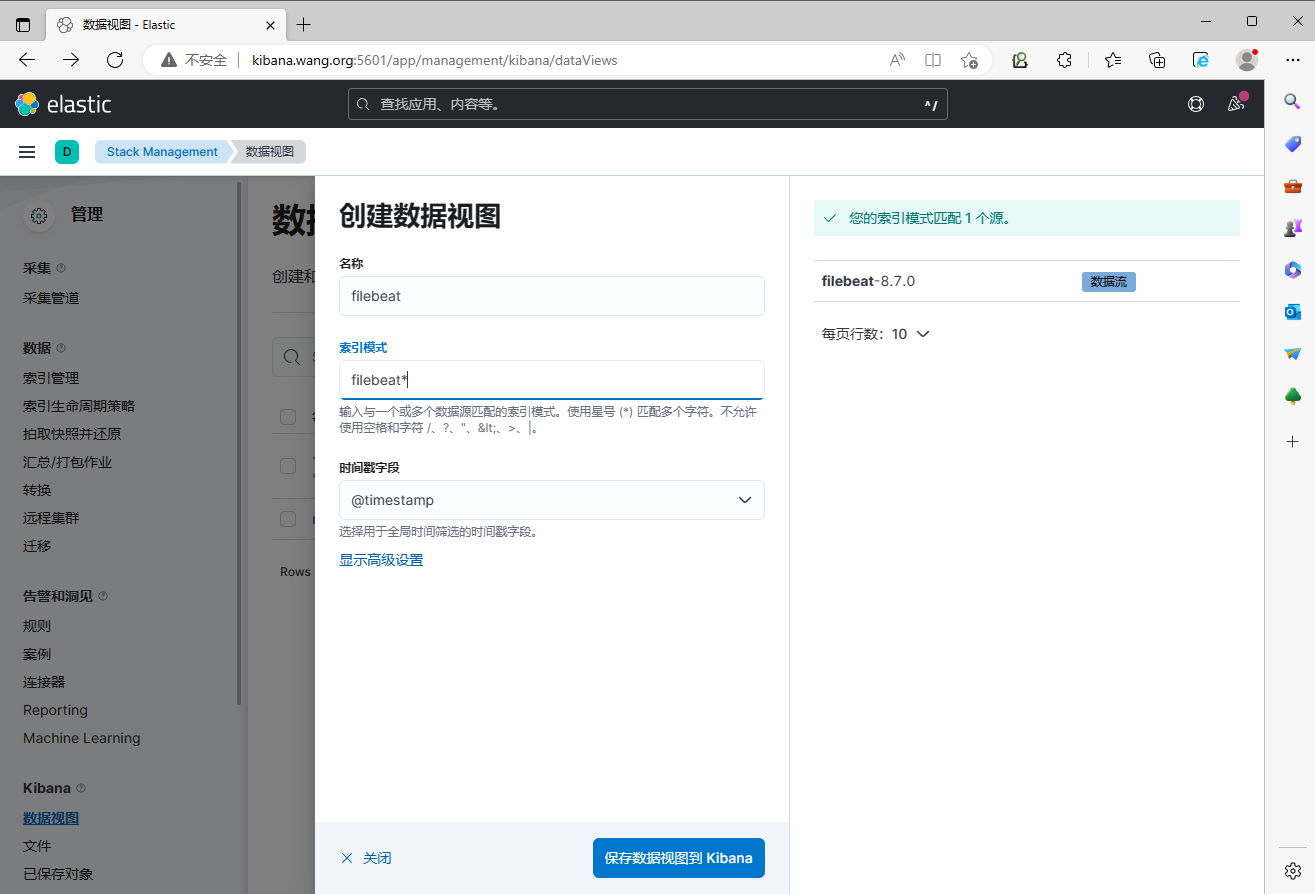

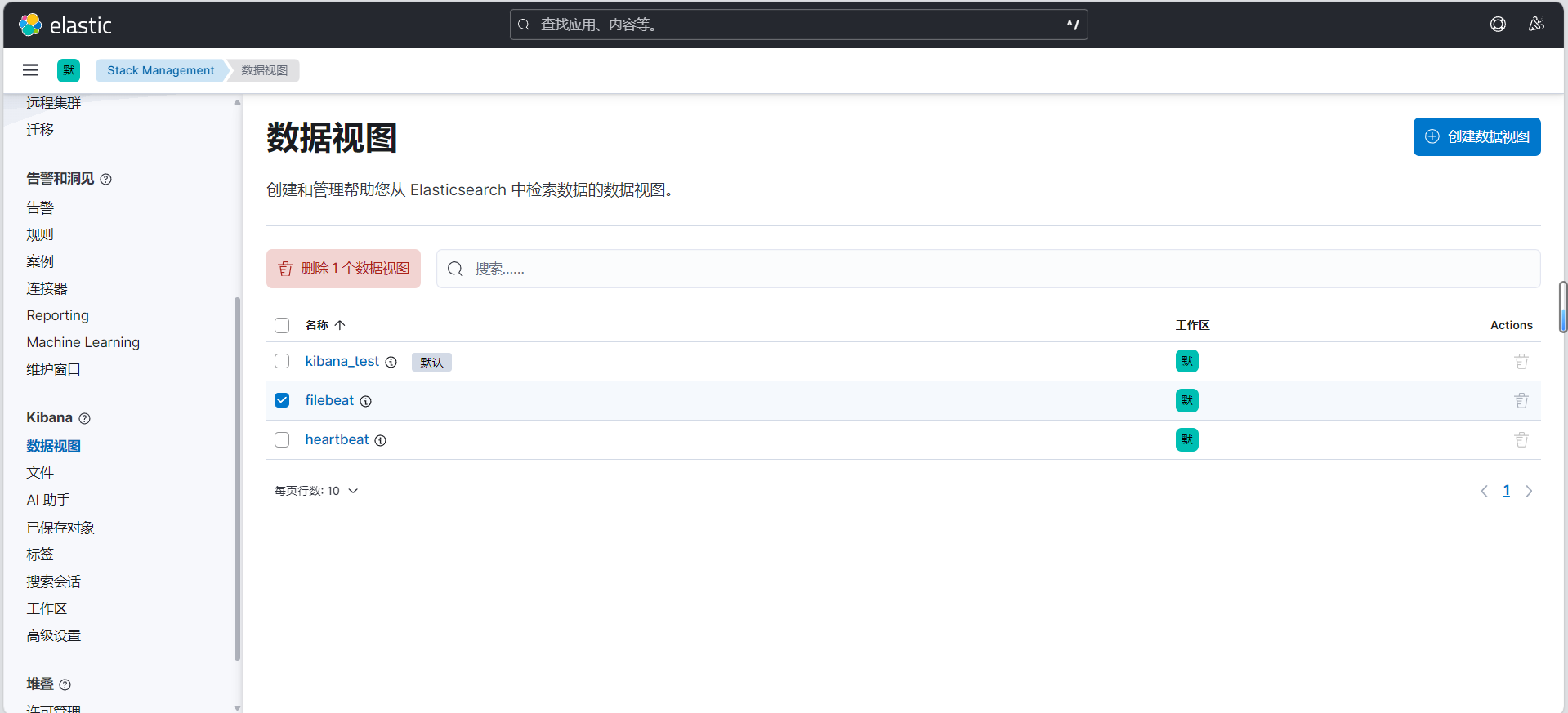

3.3.5.3 通过 Kibana 查看收集的日志信息

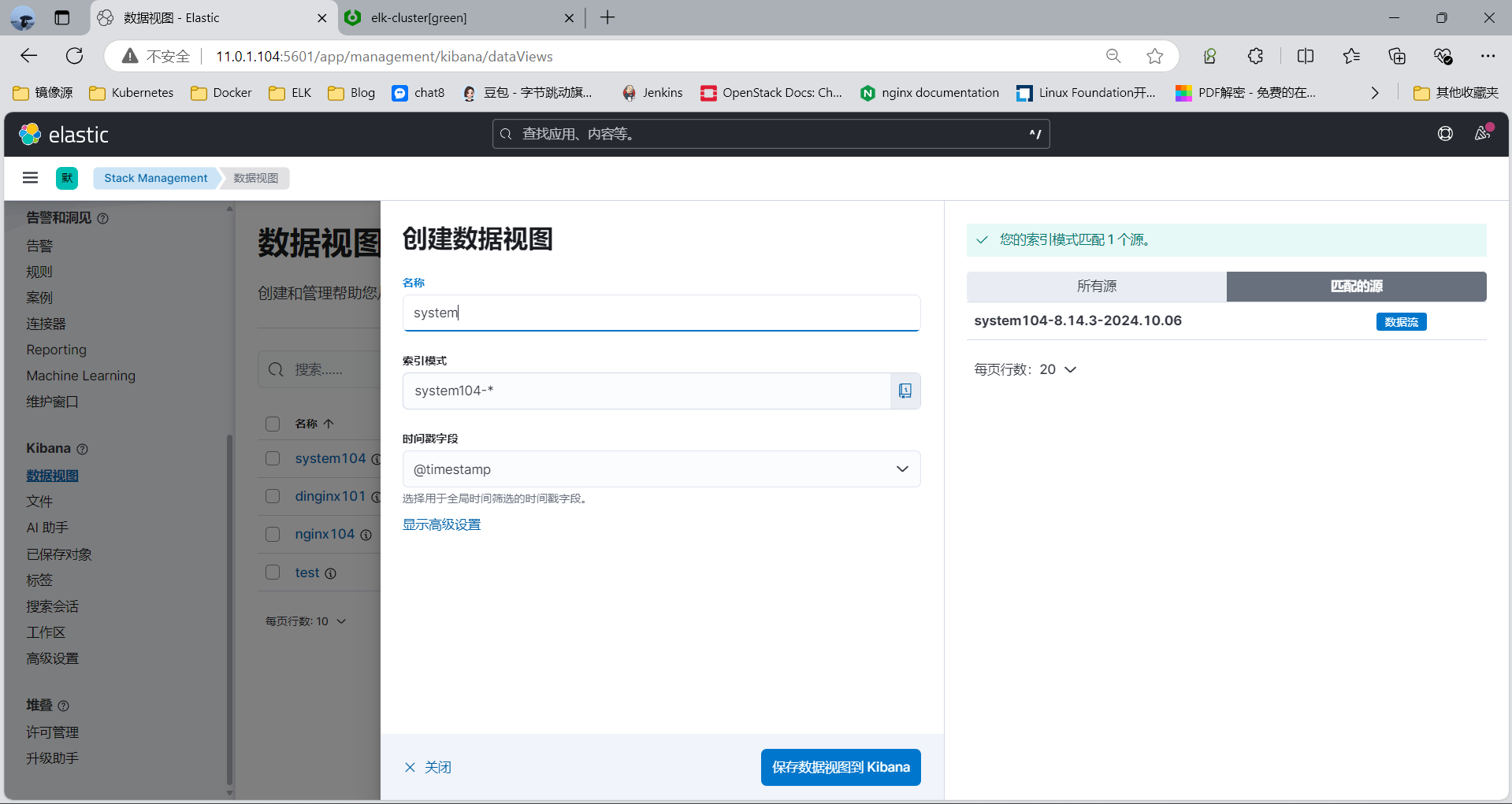

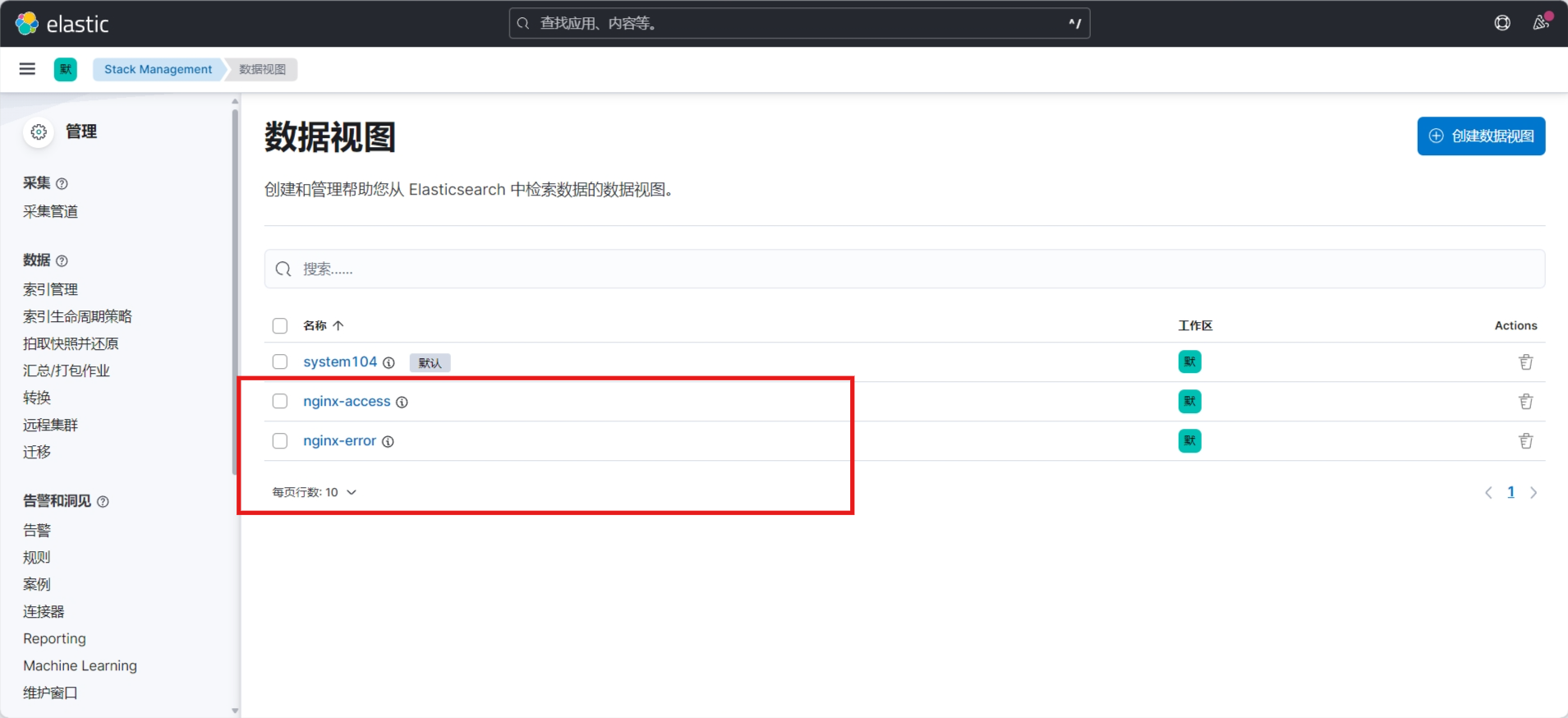

创建数据视图

新版

3.3.6 案例: 自定义索引名称收集日志到 ELasticsearch

3.3.6.1 修改配置

范例:自定义索引名称收集所有系统日志到 ELasticsearch

[root@kibana01 /etc/filebeat]#cat system104.yml

filebeat.inputs:

- type: log # 定义输入类型为日志文件enabled: true # 启用此输入项paths:- /var/log/system.log # 指定要读取的日志文件路径,这里是 /var/log/system.log 文件include_lines: ['dinginx','failed','password'] # 仅采集包含这些关键词的行:'dinginx'、'failed'、'password'output.elasticsearch:hosts: ["11.0.1.101:9200"] # 指定 Elasticsearch 集群的地址(此处指向单节点,IP 为 11.0.1.101,端口 9200)index: "system104-%{[agent.version]}-%{+yyyy.MM.dd}" # 定义索引名称模板,格式为 "system104-Filebeat版本号-日期"setup.ilm.enabled: false # 禁用 Elasticsearch 的 ILM(Index Lifecycle Management)功能

setup.template.name: "system104" # 定义自定义模板的名称为 "system104"

setup.template.pattern: "system104-*" # 指定模板匹配的索引模式,适用于所有以 "system104-" 开头的索引setup.template.settings: # 定义 Elasticsearch 模板的配置选项index.number_of_shards: 3 # 设置每个索引的主分片数量为 3index.number_of_replicas: 1 # 设置每个分片的副本数量为 1

systemctl restart filebeat.service

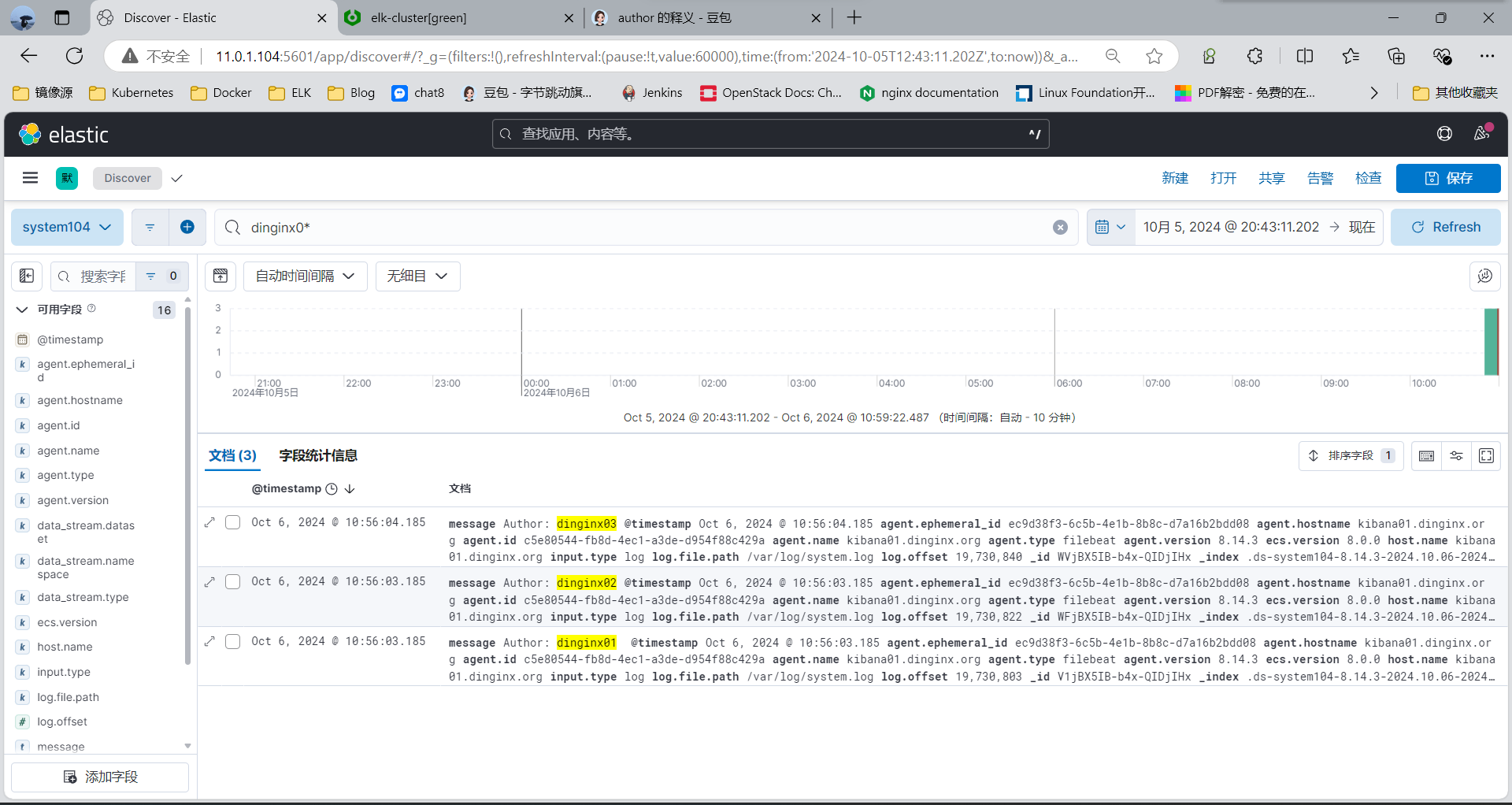

测试数据

[root@kibana01 ~]#echo "Author: dinginx01 " | tee -a /var/log/system.log

Author: dinginx01

[root@kibana01 ~]#echo "Author: dinginx02" | tee -a /var/log/system.log

Author: dinginx02

[root@kibana01 ~]#echo "Author: dinginx03" | tee -a /var/log/system.log

Author: dinginx03

3.3.6.3 通过kibana查看收集的日志信息

3.3.6.4 修改es集群索引分片副本数

默认情况下 Filebeat 写入到 ES 的索引分片为1,副本数为1,如果需要修改分片和副本数,可以通过如下实现

#方法1,修改filebeat.yml配置文件,此方式只适合直接连接ES时才有效,适用度不高

#注意:如果模板已经存在,需要先删除模板和索引才能生效,利用kibana或者cerebro(8.X不支持)插件(more--index templates--existing templates--输入搜索的模板名wang)

vim /etc/filebeat/filebeat.yml

setup.template.settings:index.number_of_shards: 3index.number_of_replicas: 1

#生效后,在cerebro插件中more--index templates--existing templates--输入搜索的模板名wang 中可以看到以下的分片和副本配置,8.X不支持#settings中number_of_shards和number_of_replication

#方法2:也可以通过修下面方式修改,8.X不支持

1.停止filebeat服务

2.在cerebro web页面修改:修改模板settings 配置,调整分片以及副本

3.删除模板关联的索引

4.启动filebeat产生新的索引

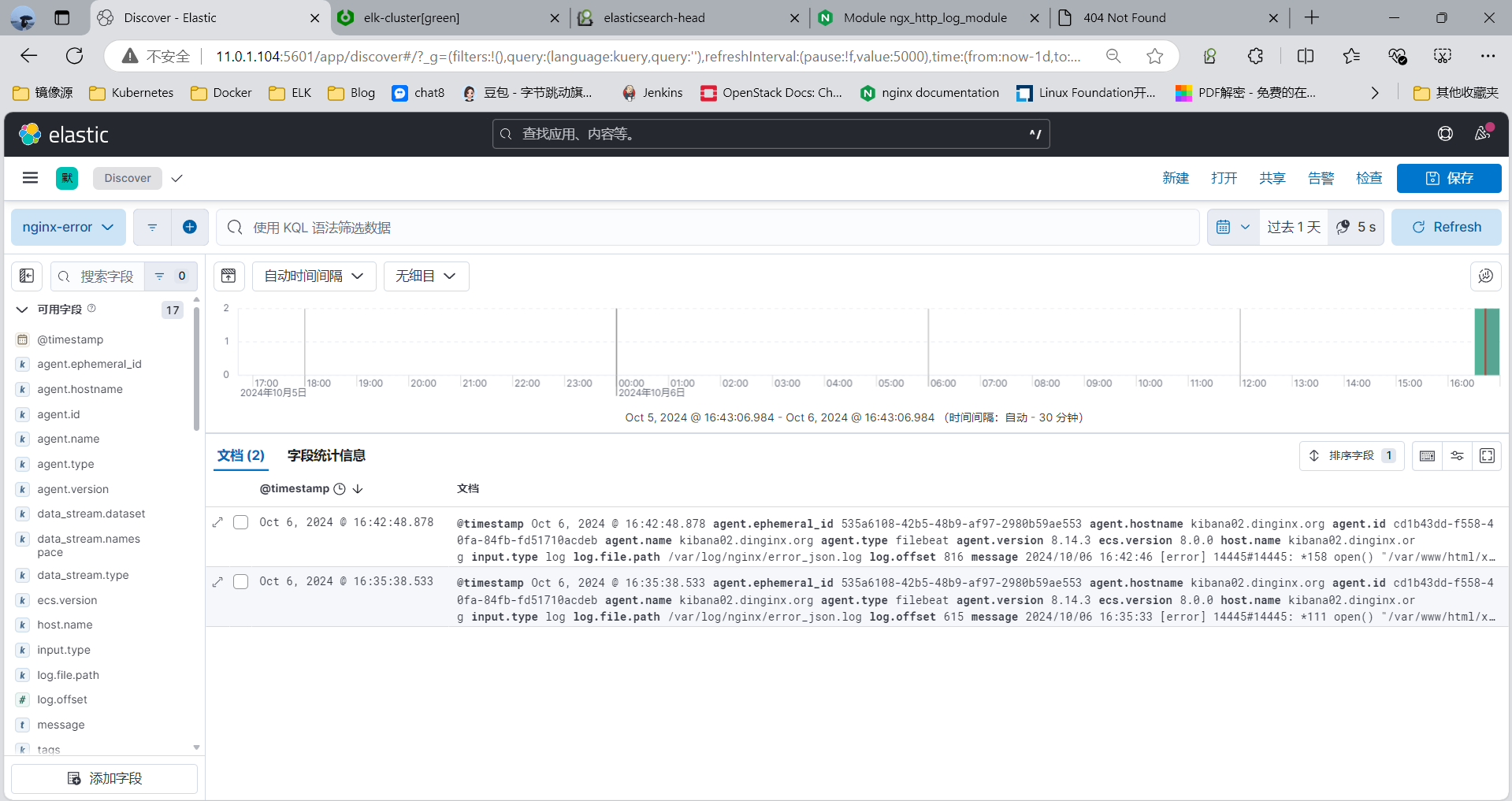

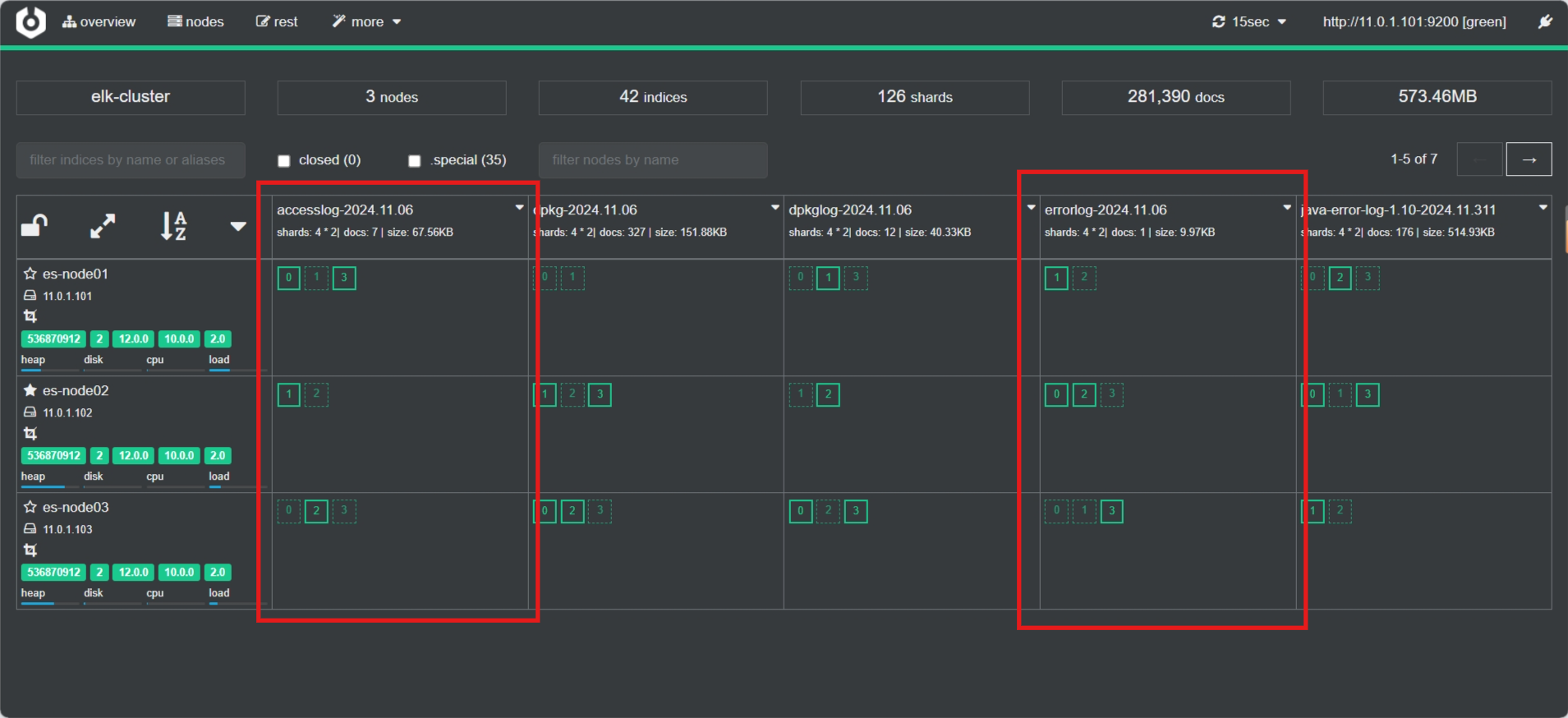

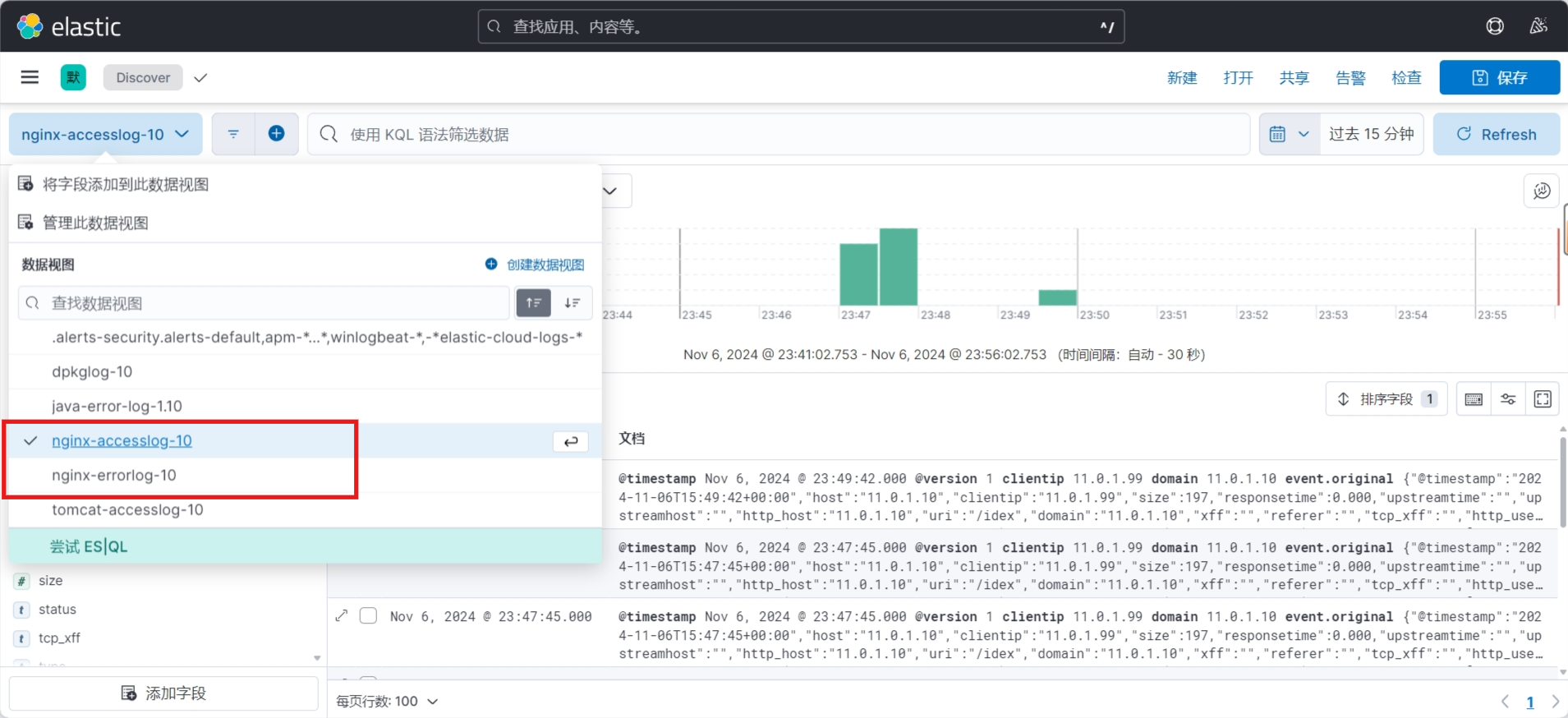

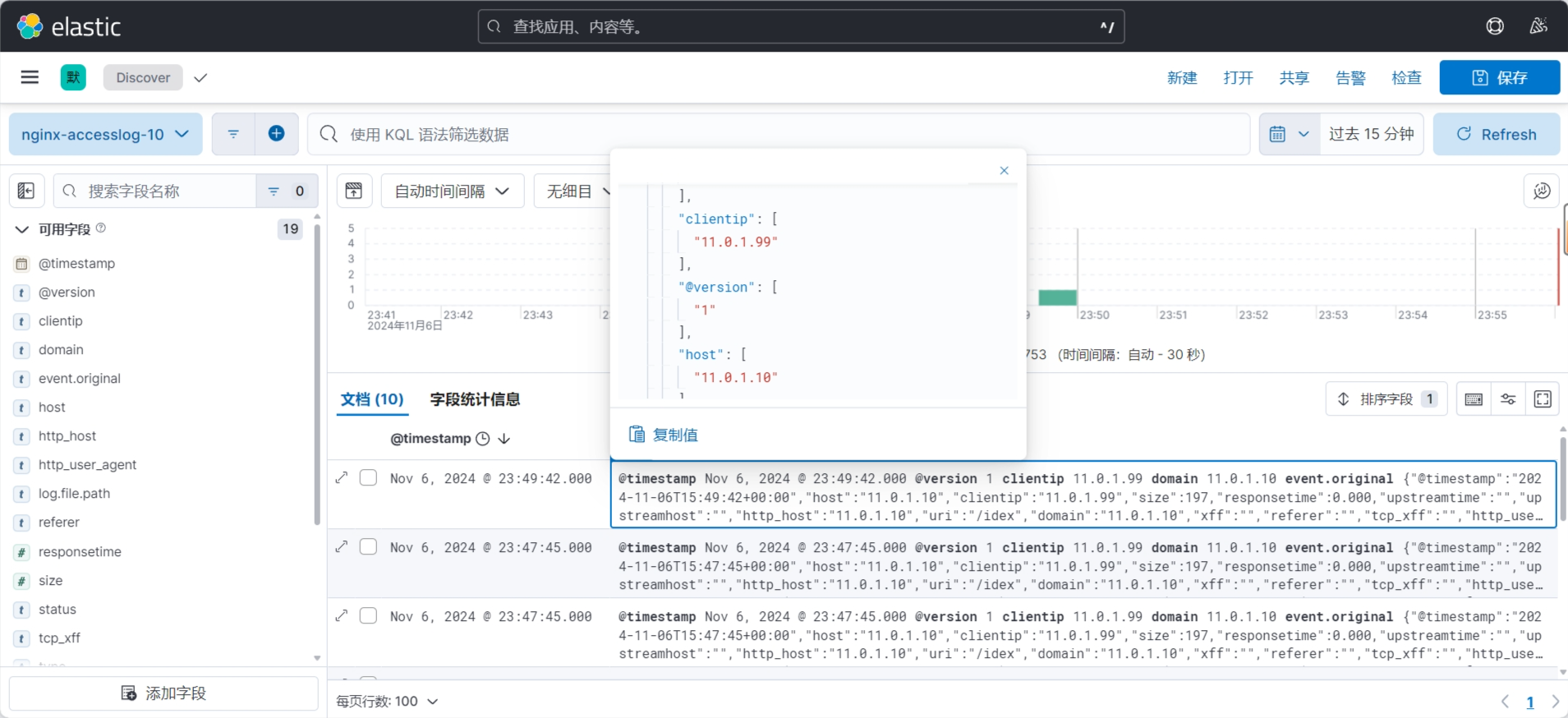

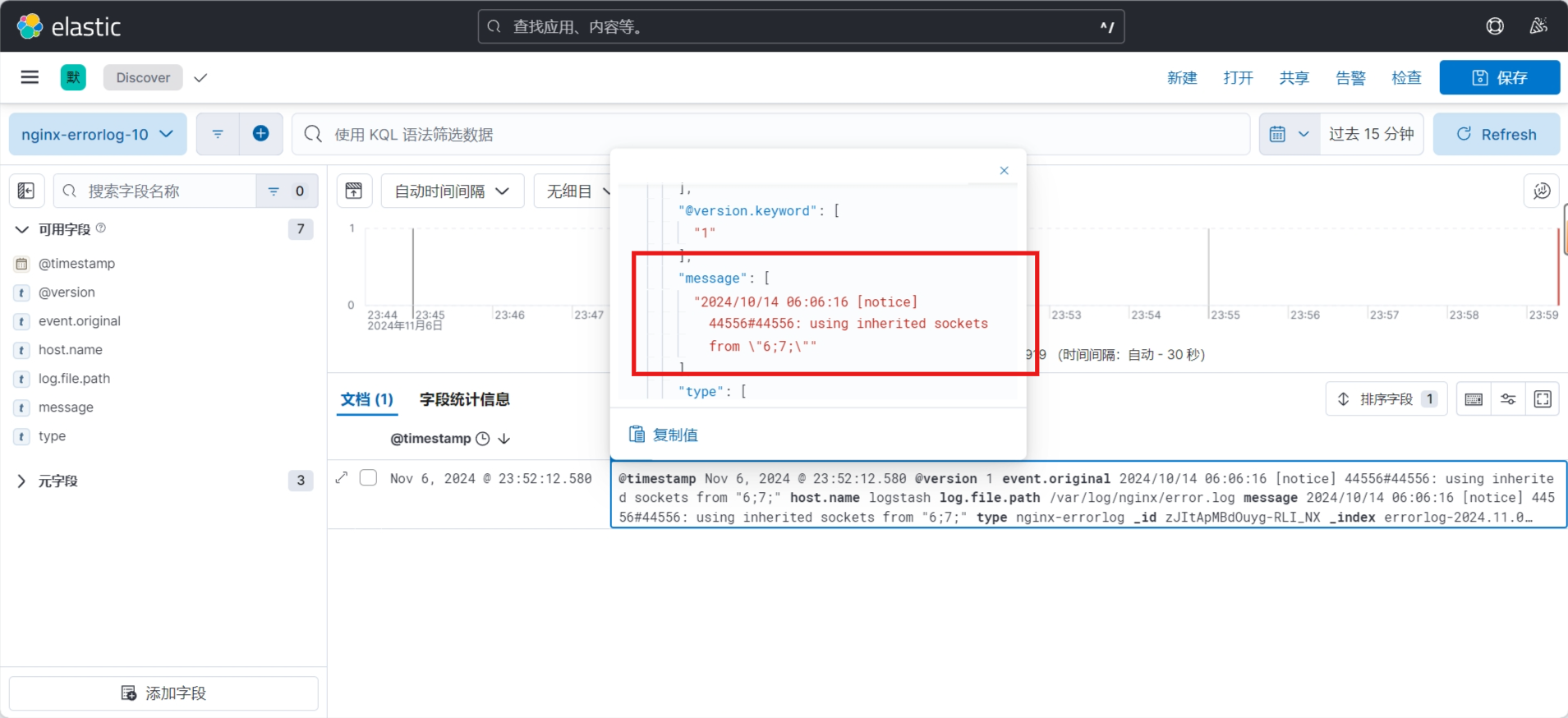

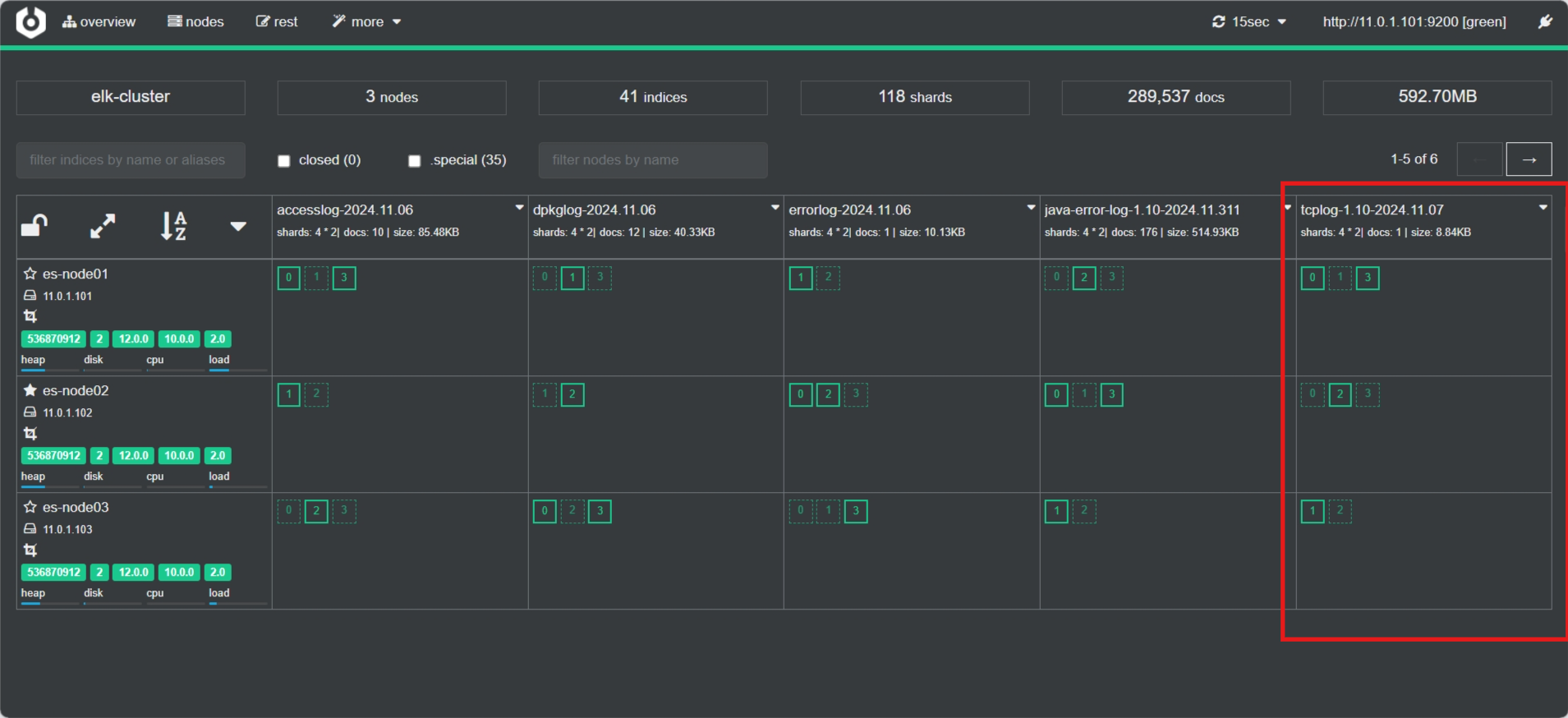

3.3.7 案例: 利用 Filebeat 收集 Nginx的 Json 格式访问日志和错误日志到 Elasticsearch 不同的索引

官方文档

https://www.elastic.co/guide/en/beats/filebeat/7.6/filebeat-input-log.html

https://www.elastic.co/guide/en/beats/filebeat/7.6/redis-output.html

生产环境中经常需要获取Web访问用户的信息,比如:网站的PV、UV、状态码、用户来自哪个地区,访问时间等

可以通过收集的Nginx的J访问日志实现

默认Nginx的每一次访问生成的访问日志是一行文本,ES没办法直接提取有效信息,不利于后续针对特定信息的分析

可以将Nginx访问日志转换为JSON格式解决这一问题

3.3.7.1 安装 nginx 配置访问日志使用 Json格式

#安装Nginx

[root@kibana02 ~]# apt update && apt -y install nginx#修改nginx访问日志为Json格式

[root@kibana02 ~]# cat /etc/nginx/nginx.conf ......http {# 定义自定义日志格式为 JSON 格式,并进行 JSON 字符转义log_format json_logs escape=json '{''"@timestamp":"$time_iso8601",' # 记录 ISO 8601 格式的时间戳'"host":"$server_addr",' # 服务器地址'"clientip":"$remote_addr",' # 客户端 IP 地址'"size":$body_bytes_sent,' # 响应发送给客户端的字节数'"responsetime":$request_time,' # 请求处理时间'"upstreamtime":"$upstream_response_time",' # 上游服务器响应时间'"upstreamhost":"$upstream_addr",' # 上游服务器地址'"http_host":"$host",' # 请求中的 Host 头部'"uri":"$uri",' # 请求的 URI'"domain":"$host",' # 请求中的域名'"xff":"$http_x_forwarded_for",' # 客户端的 X-Forwarded-For 头,表示客户端的原始 IP'"referer":"$http_referer",' # 请求的 Referer 头'"tcp_xff":"$proxy_protocol_addr",' # 通过 TCP 代理协议传递的原始客户端 IP 地址'"http_user_agent":"$http_user_agent",' # 用户代理(客户端的浏览器或其他信息)'"status":"$status"}'; # HTTP 响应状态码# 使用自定义的 JSON 格式记录访问日志access_log /var/log/nginx/access_json.log json_logs;# 指定错误日志的输出位置error_log /var/log/nginx/error_json.log;

}......#注意

#默认开启nginx的错误日志,但如果是ubuntu,还需要修改下面行才能记录错误日志

[root@kibana02 ~]# vim /etc/nginx/sites-available/default location / {# First attempt to serve request as file, then# as directory, then fall back to displaying a 404.#try_files $uri $uri/ =404; #将此行注释[root@kibana02 ~]# systemctl restart nginx.service

[root@kibana02 ~]# ll /var/log/nginx/*_json.log

-rw-r--r-- 1 root root 122291 Oct 6 15:49 /var/log/nginx/access_json.log

-rw-r--r-- 1 root root 0 Oct 6 15:09 /var/log/nginx/error_json.log[root@kibana02 /etc/filebeat]# cat filebeat.yml

# 配置 Filebeat 收集 NGINX 日志,并将其发送至 Elasticsearchfilebeat.inputs:

- type: logenabled: true # 启用该日志输入配置json.keys_under_root: truepaths:- /var/log/nginx/*_json.log # 指定 NGINX 访问日志的路径output.elasticsearch:hosts: ["11.0.1.101:9200","11.0.1.102:9200","11.0.1.103:9200"] # 目标 Elasticsearch 的 IP 地址和端口index: "nginx-access-%{[agent.version]}-%{+yyyy.MM.dd}" # 索引模式,按日期创建新索引setup.ilm.enabled: false # 禁用 Elasticsearch 的索引生命周期管理(ILM)setup.template.name: "nginx" # 模板名称,用于处理 NGINX 日志的字段映射

setup.template.pattern: "nginx-*" # 模板匹配模式,应用于所有以 "nginx-" 开头的索引setup.template.settings: # 定义 Elasticsearch 模板的配置选项index.number_of_shards: 3 # 设置每个索引的主分片数量为 3index.number_of_replicas: 1 # 设置每个分片的副本数量为 1

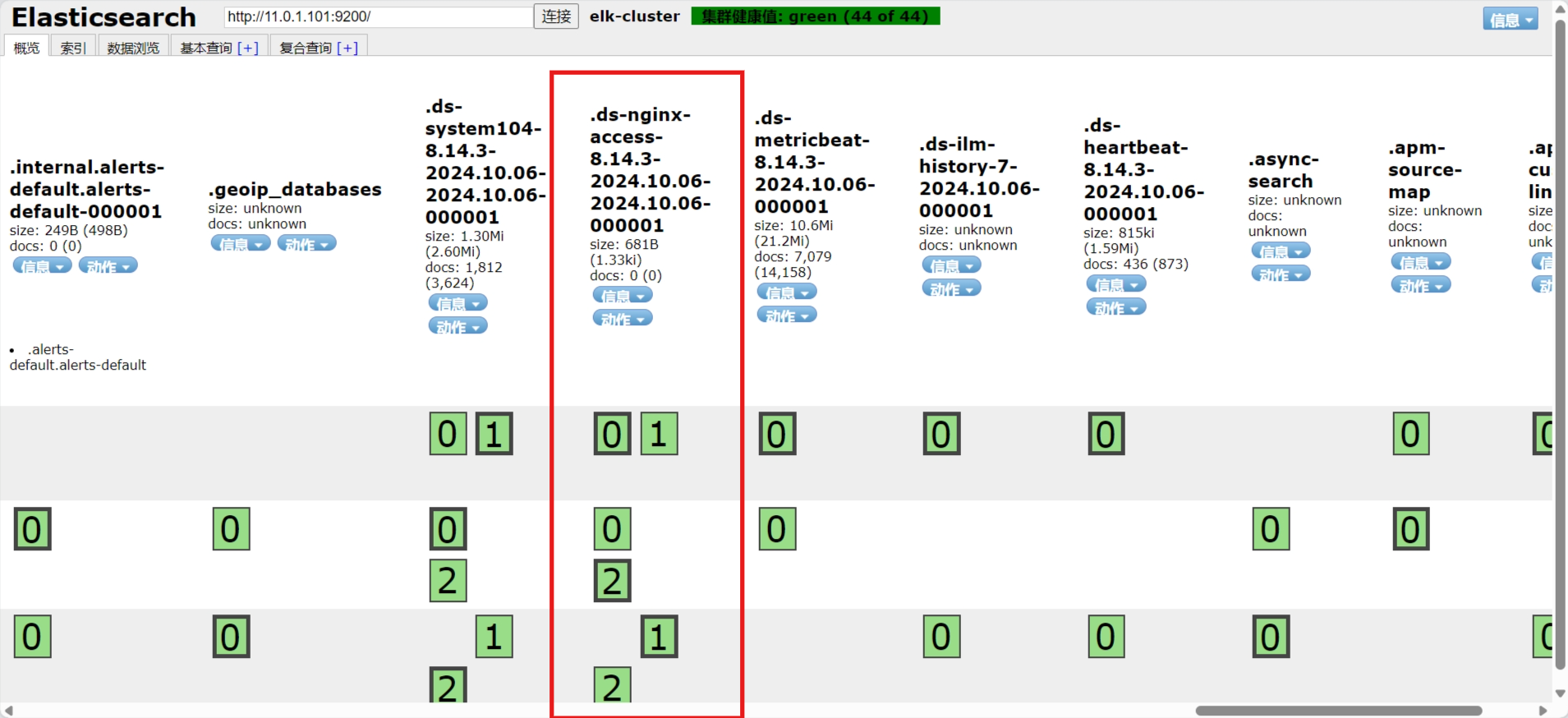

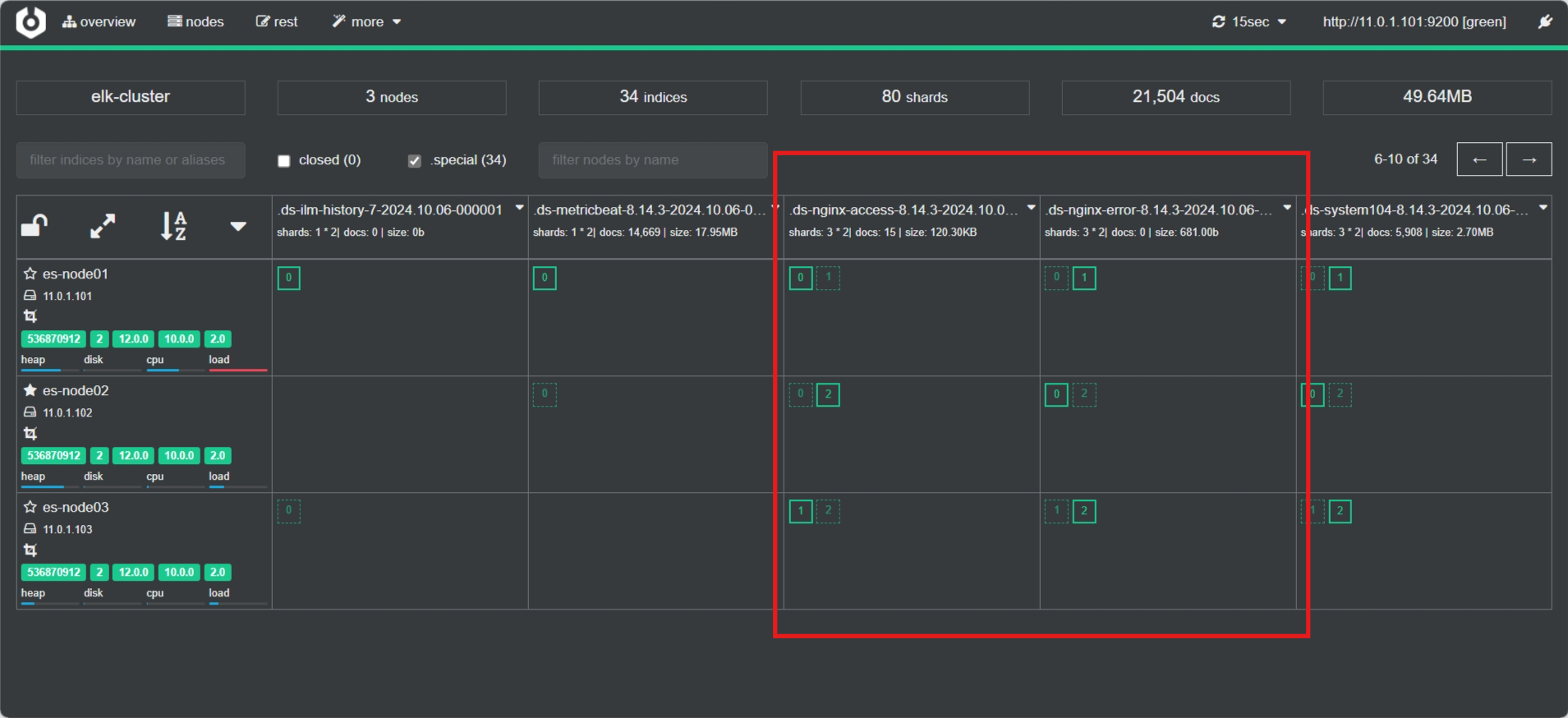

3.3.7.2 插件查看索引

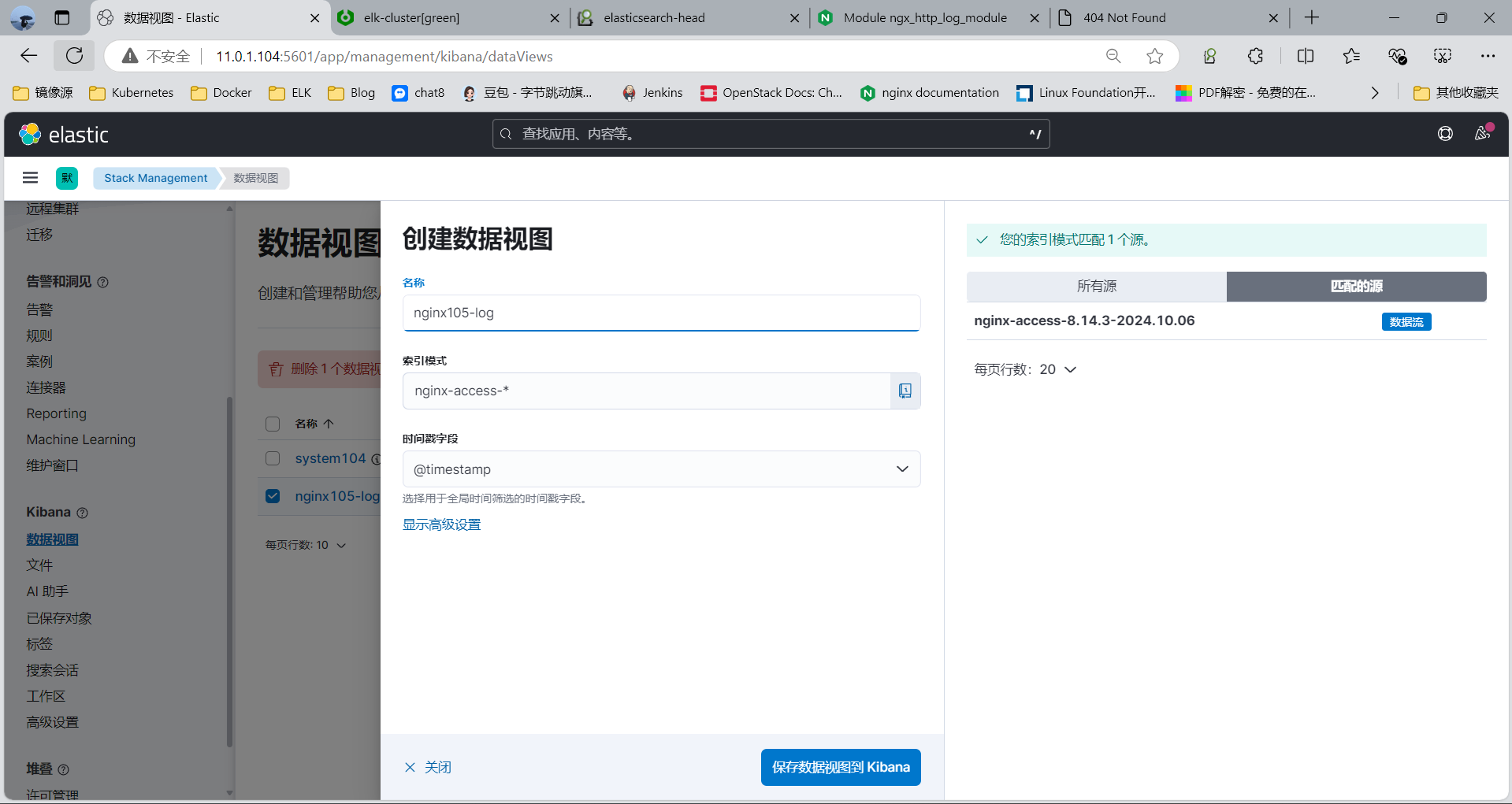

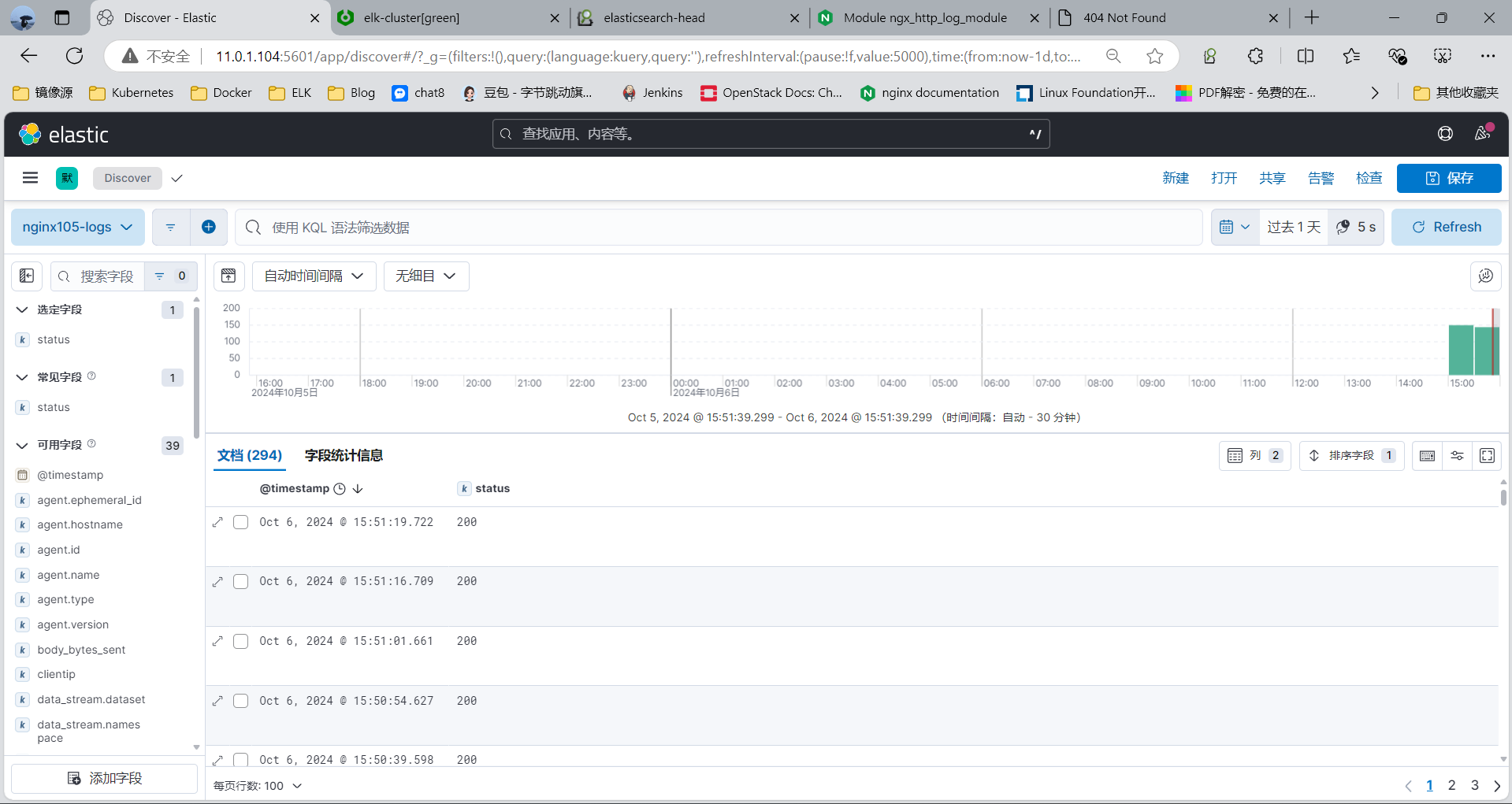

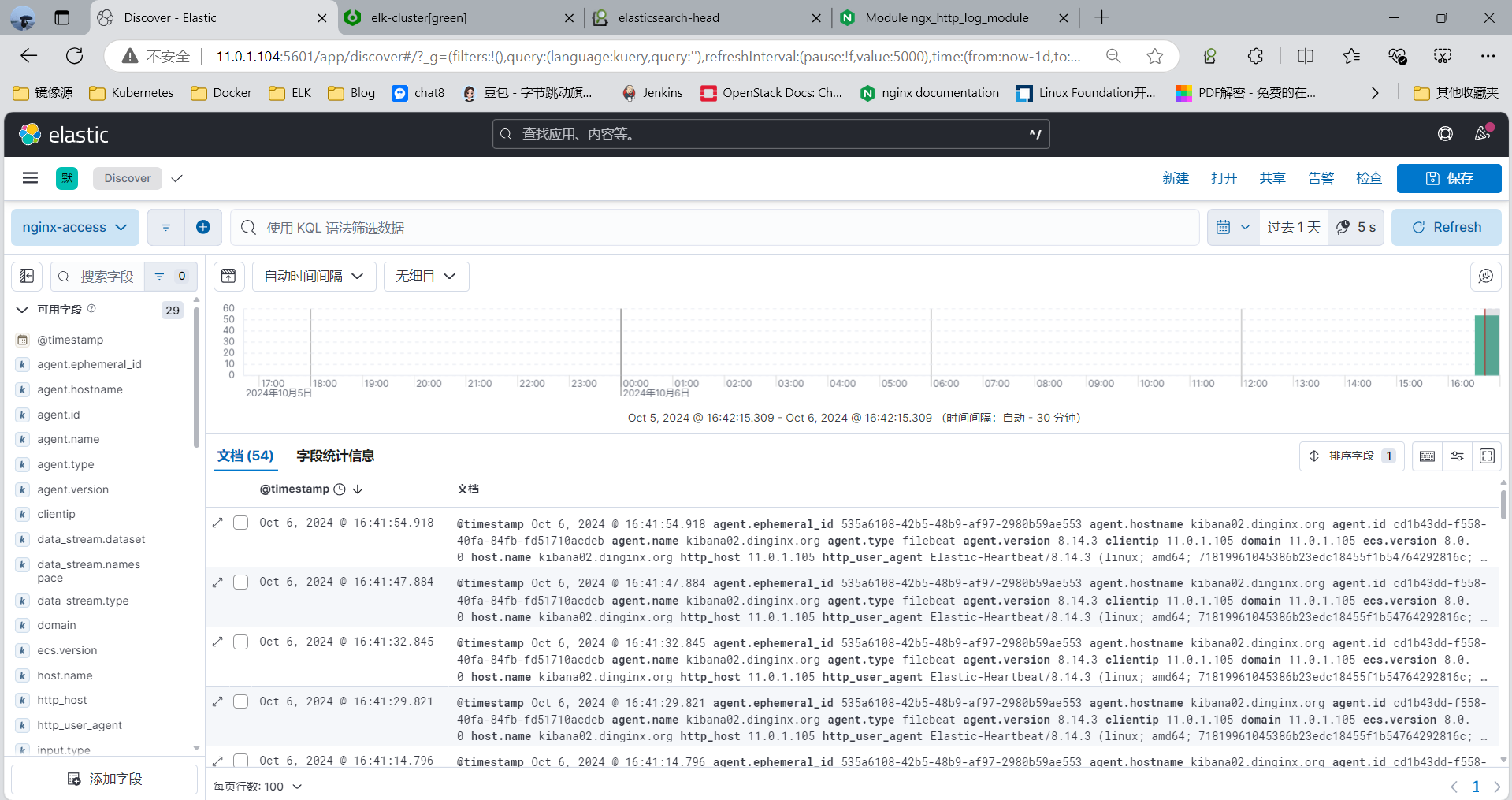

3.3.7.3 在 Kibana 验证日志数据

查看索引

3.3.8 范例: 利用 fields 实现索引的分类

3.3.8.1 filebeat配置文件

#方法一: 使用tags标签分类

[root@kibana02 /etc/filebeat]#vim filebeat.yml filebeat.inputs:

- type: logenabled: truepaths:- /var/log/nginx/access_json.logjson.keys_under_root: truetags: ["nginx-access"]- type: logenabled: truepaths:- /var/log/nginx/error_json.logjson.keys_under_root: truetags: ["nginx-error"]output.elasticsearch:hosts: ["11.0.1.101:9200", "11.0.1.102:9200", "11.0.1.103:9200"]indices:- index: "nginx-access-%{[agent.version]}-%{+yyyy.MM.dd}"when.contains:tags: "nginx-access" # 匹配单个值,而非数组- index: "nginx-error-%{[agent.version]}-%{+yyyy.MM.dd}"when.contains:tags: "nginx-error" # 匹配单个值,而非数组setup.ilm.enabled: false # 禁用 Elasticsearch 的索引生命周期管理(ILM)

setup.template.name: "nginx" # 模板名称,用于处理 NGINX 日志的字段映射

setup.template.pattern: "nginx-*" # 模板匹配模式,应用于所有以 "nginx-" 开头的索引setup.template.settings: # 定义 Elasticsearch 模板的配置选项index.number_of_shards: 3 # 设置每个索引的主分片数量为 3index.number_of_replicas: 1 # 设置每个分片的副本数量为 1注意: 路径文件下须有内容方可生成对应索引#方法二: 使用状态码分类

[root@kibana02 /etc/filebeat]#vim filebeat.yml

filebeat.inputs:

- type: logenabled: truepaths:- /var/log/nginx/access_json.logjson.keys_under_root: trueinclude_lines: ['404'] # 仅包含 404 状态码的日志行fields:status_code: "404"- type: logenabled: truepaths:- /var/log/nginx/access_json.loginclude_lines: ['200'] # 仅包含 200 状态码的日志行fields:status_code: "200"- type: logenabled: truepaths:- /var/log/nginx/access_json.loginclude_lines: ['304'] # 仅包含 304 状态码的日志行fields:status_code: "304"# 输出到 Elasticsearch 配置

output.elasticsearch:hosts: ["http://11.0.1.101:9200"] # 指定 Elasticsearch 集群地址# 指定索引的动态模板,根据状态码选择索引indices:- index: "nginx-error-404-%{+yyyy.MM.dd}" # 404 错误的日志索引when.equals:fields.status_code: "404"- index: "nginx-ok-200-%{+yyyy.MM.dd}" # 200 成功的日志索引when.equals:fields.status_code: "200"- index: "nginx-red-304-%{+yyyy.MM.dd}" # 304 重定向的日志索引when.equals:fields.status_code: "304"setup.ilm.enabled: false # 禁用 Elasticsearch 的索引生命周期管理(ILM)setup.template.name: "nginx" # 模板名称,用于处理 NGINX 日志的字段映射

setup.template.pattern: "nginx-*" # 模板匹配模式,应用于所有以 "nginx-" 开头的索引setup.template.settings: # 定义 Elasticsearch 模板的配置选项index.number_of_shards: 3 # 设置每个索引的主分片数量为 3index.number_of_replicas: 1 # 设置每个分片的副本数量为 13.3.8.2 方法一实现如下,插件查看索引

注意: 路径文件下须有内容方可生成对应索引

3.3.8.3 kibana查看数据

模拟测试

[root@kibana02 ~]#curl 127.0.0.1/xxx.html

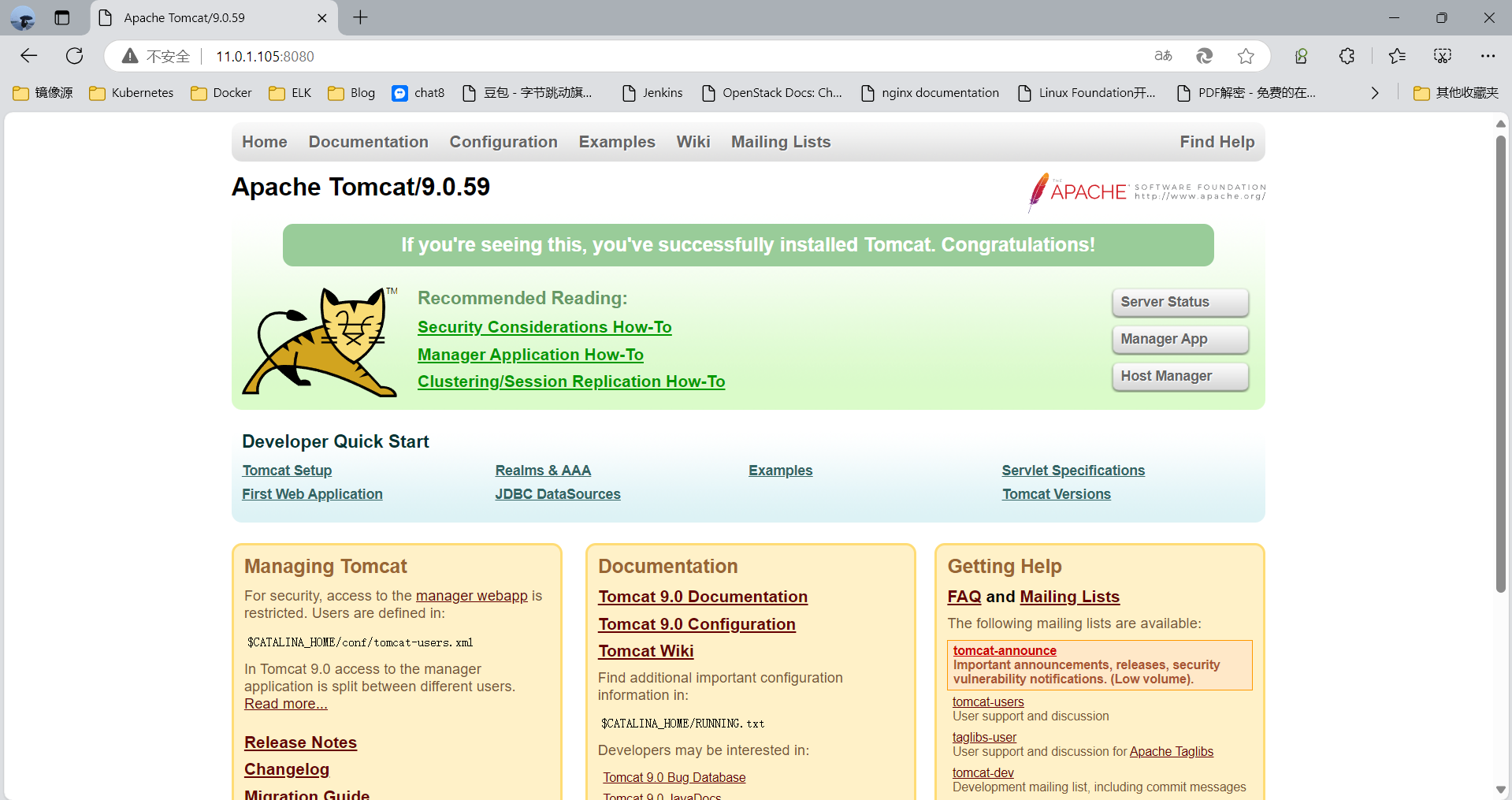

3.3.9 案例: 利用 Filebeat 收集 Tomat 的 Json 格式的访问日志和错误日志到 Elasticsearch

3.3.9.1 安装 Tomcat 并配置使用 Json 格式的访问日志

#安装Tomcat,可以包安装或者二进制安装

[root@web-server ~]#apt install -y tomcat9 tomcat9-admin#修改tomcat配置文件

[root@web-server ~]#vim /etc/tomcat9/server.xml......<!-- Access log processes all example.Documentation at: /docs/config/valve.htmlNote: The pattern used is equivalent to using pattern="common" --><Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"prefix="localhost_access_log" suffix=".txt"pattern=" {"clientip":"%h","ClientUser":"%l","authenticated":"%u","AccessTime":"%t","method":"%r","status":"%s","SendBytes":"%b","Query?string":"%q","partner":"%{Referer}i","AgentVersion":"%{User-Agent}i"}" /> #添加自定义日志格式......#日志路径

[root@web-server ~]#tail -f /var/log/tomcat9/localhost_access_log.2024-10-06.txt |jq

3.3.9.2 修改 Filebeat 配置文件

[root@web-server /etc/filebeat]#cat filebeat.yml

filebeat.inputs:

- type: logenabled: truepaths:- /var/log/tomcat9/localhost_access_log.2024-10-06.txtjson.keys_under_root: truetags: ["tomcat-access"]- type: logenabled: truepaths:- /var/log/tomcat9/localhost_access_log.2024-10-06.txtjson.keys_under_root: truetags: ["tomcat-error"]output.elasticsearch:hosts: ["11.0.1.101:9200", "11.0.1.102:9200", "11.0.1.103:9200"]indices:- index: "tomcat-access-%{[agent.version]}-%{+yyyy.MM.dd}"when.contains:tags: "tomcat-access" # 匹配单个值,而非数组- index: "tomcat-error-%{[agent.version]}-%{+yyyy.MM.dd}"when.contains:tags: "tomcat-error" # 匹配单个值,而非数组setup.ilm.enabled: false # 禁用 Elasticsearch 的索引生命周期管理(ILM)

setup.template.name: "tomcat" # 模板名称,用于处理 NGINX 日志的字段映射

setup.template.pattern: "tomcat-*" # 模板匹配模式,应用于所有以 "tomcat-" 开头的索引setup.template.settings: # 定义 Elasticsearch 模板的配置选项index.number_of_shards: 3 # 设置每个索引的主分片数量为 3index.number_of_replicas: 1 # 设置每个分片的副本数量为 1#启动服务

systemctl restart filebeat

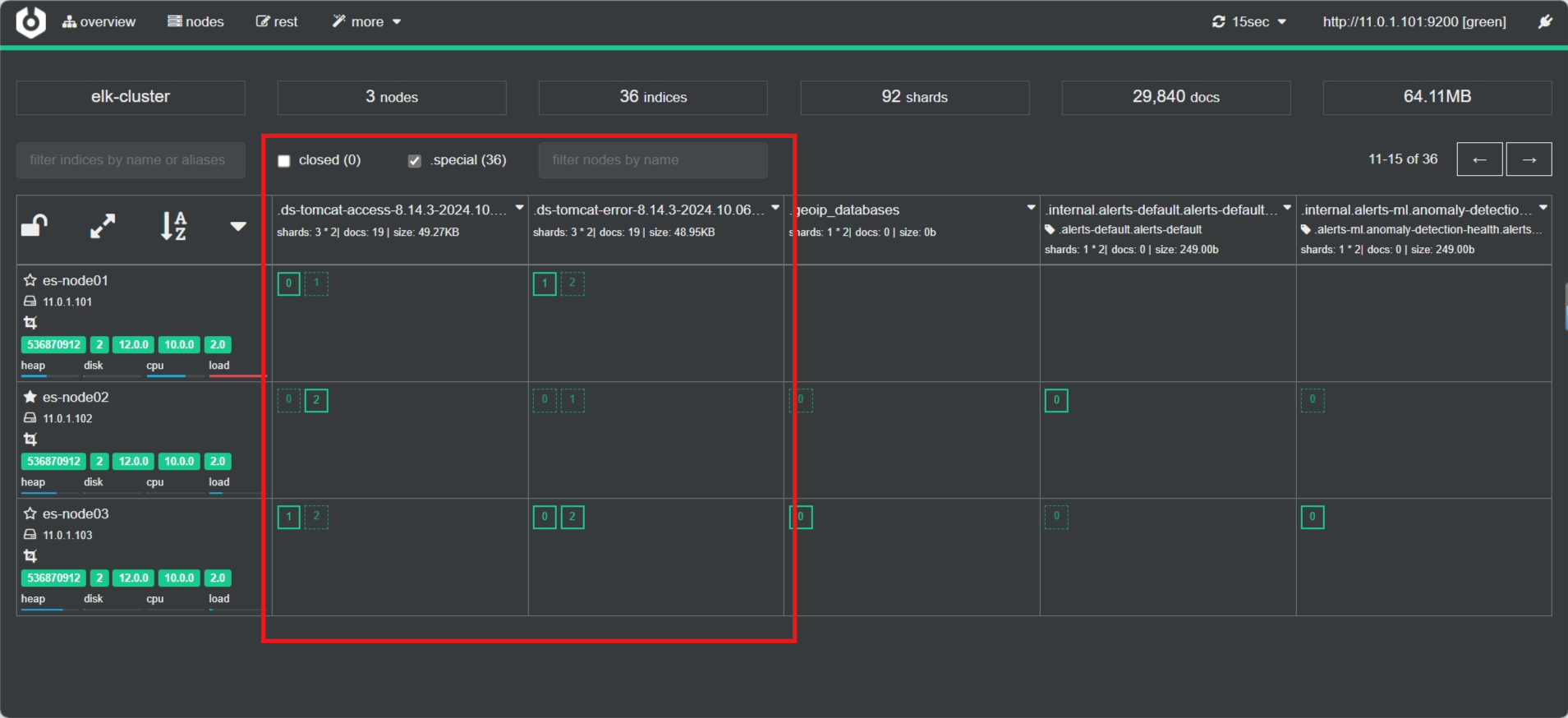

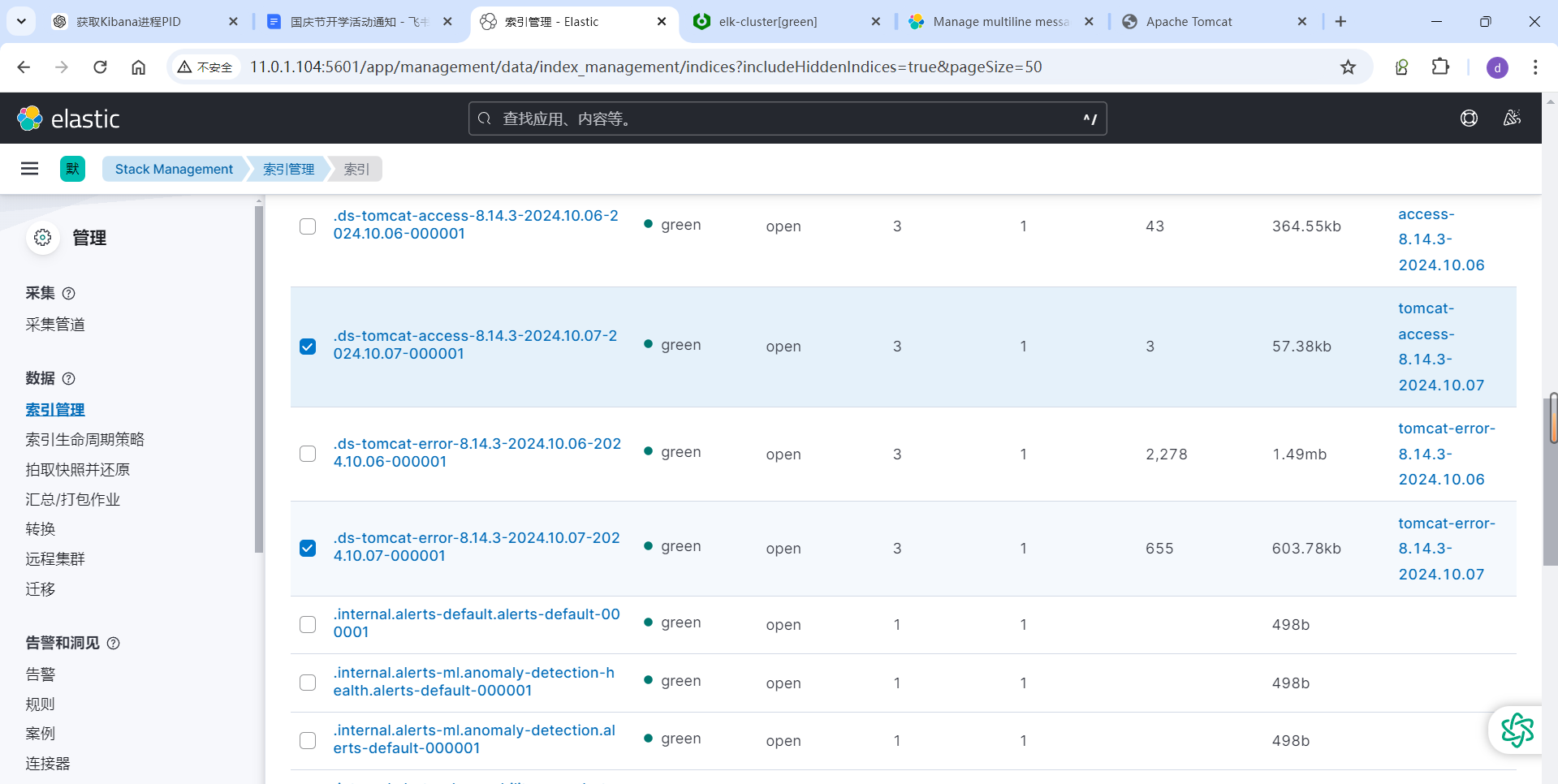

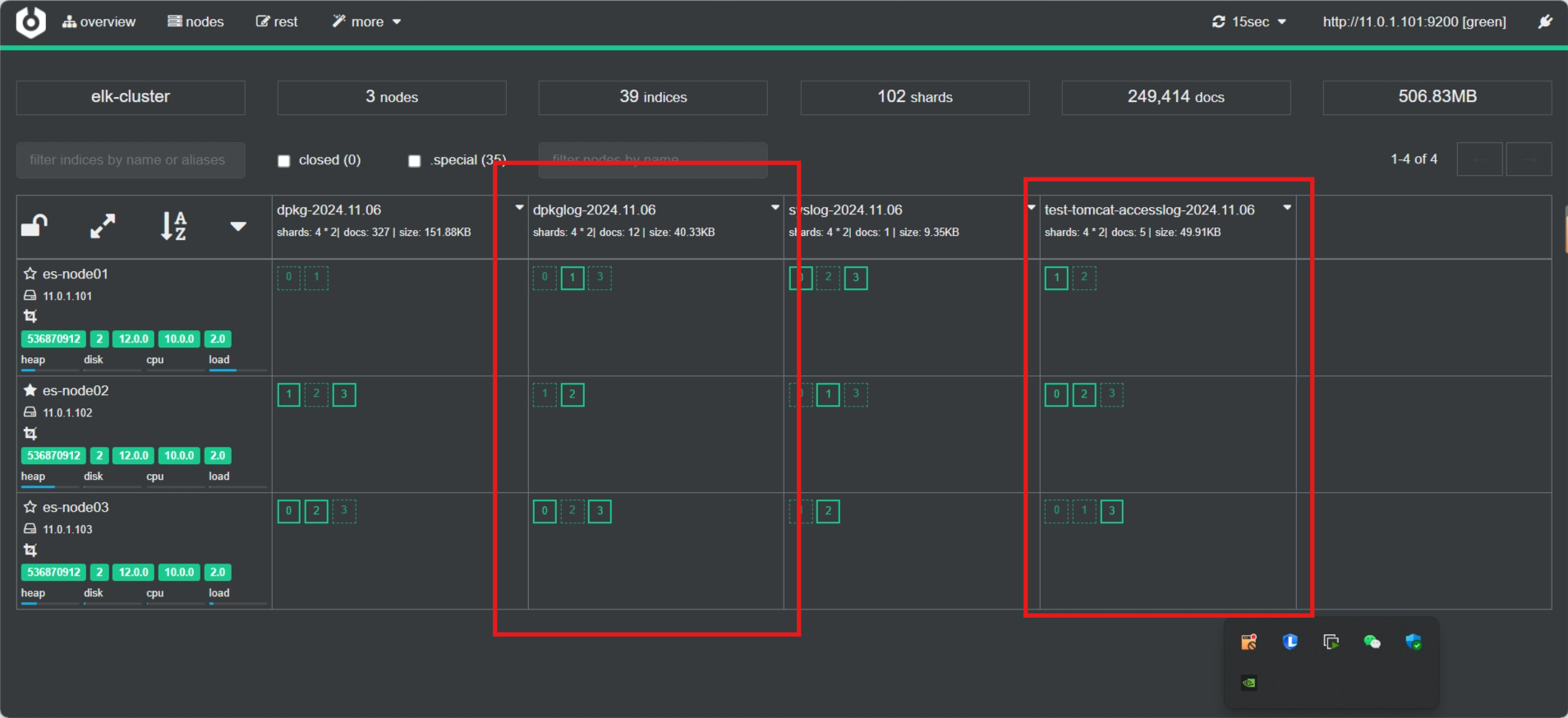

3.3.9.3 插件查看索引

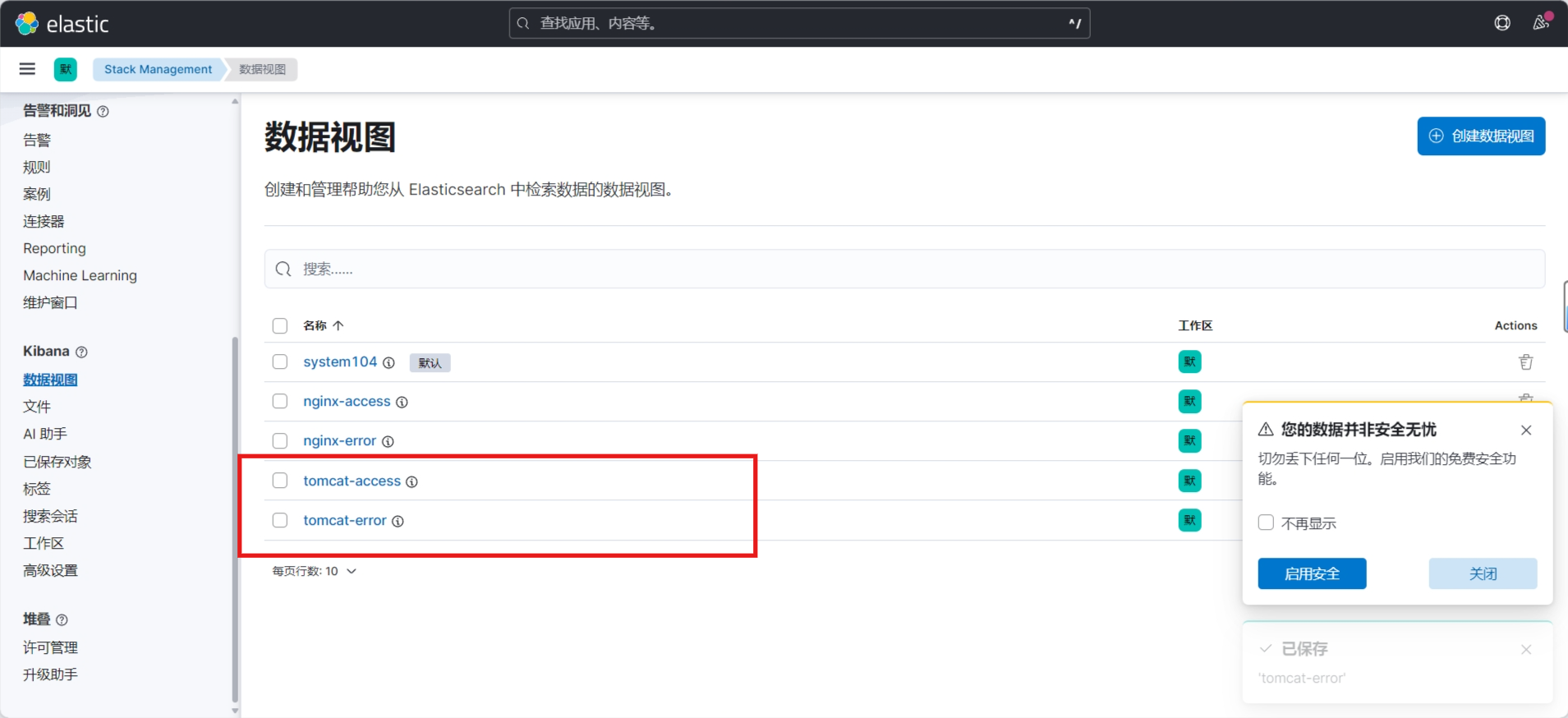

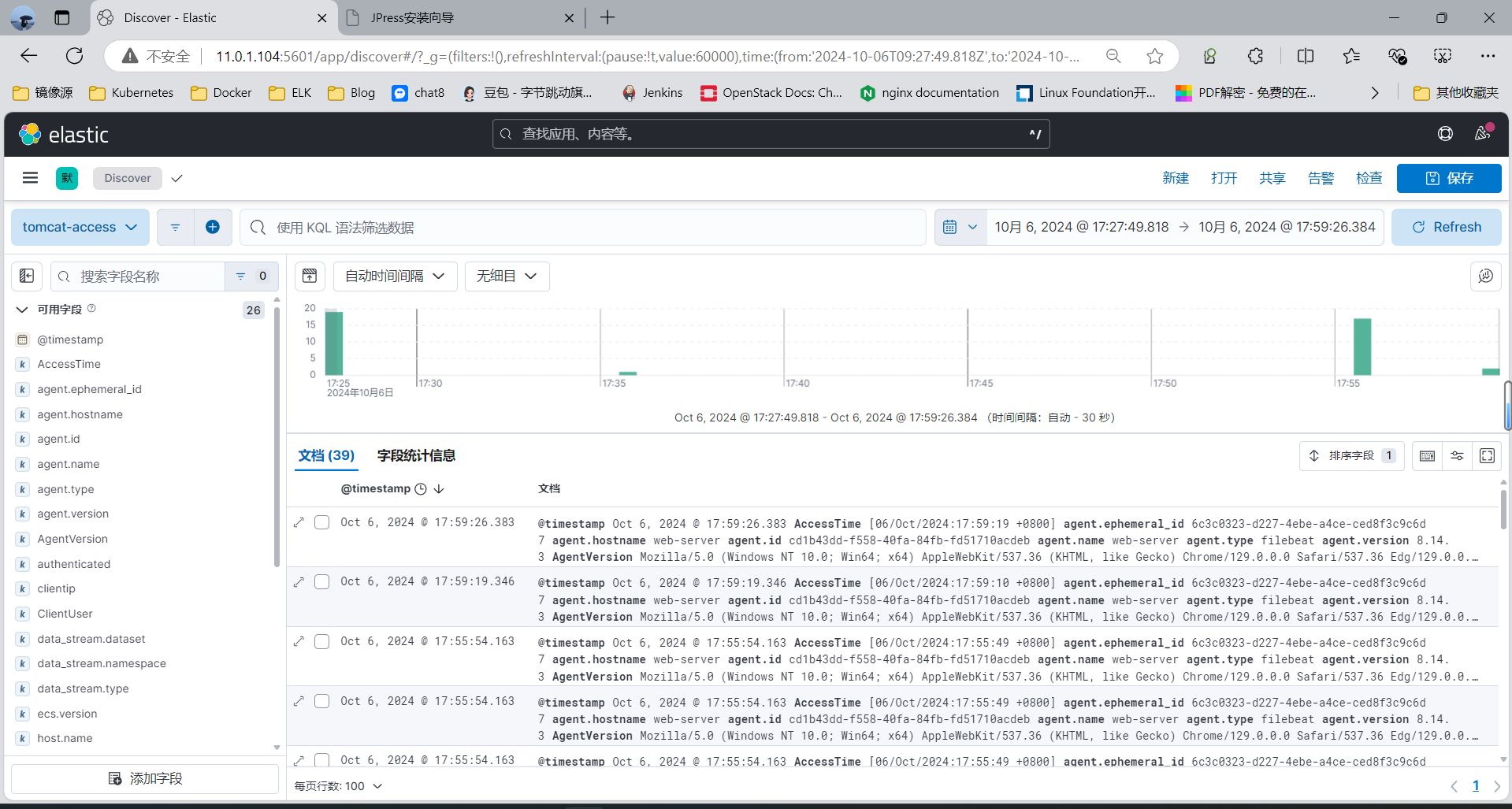

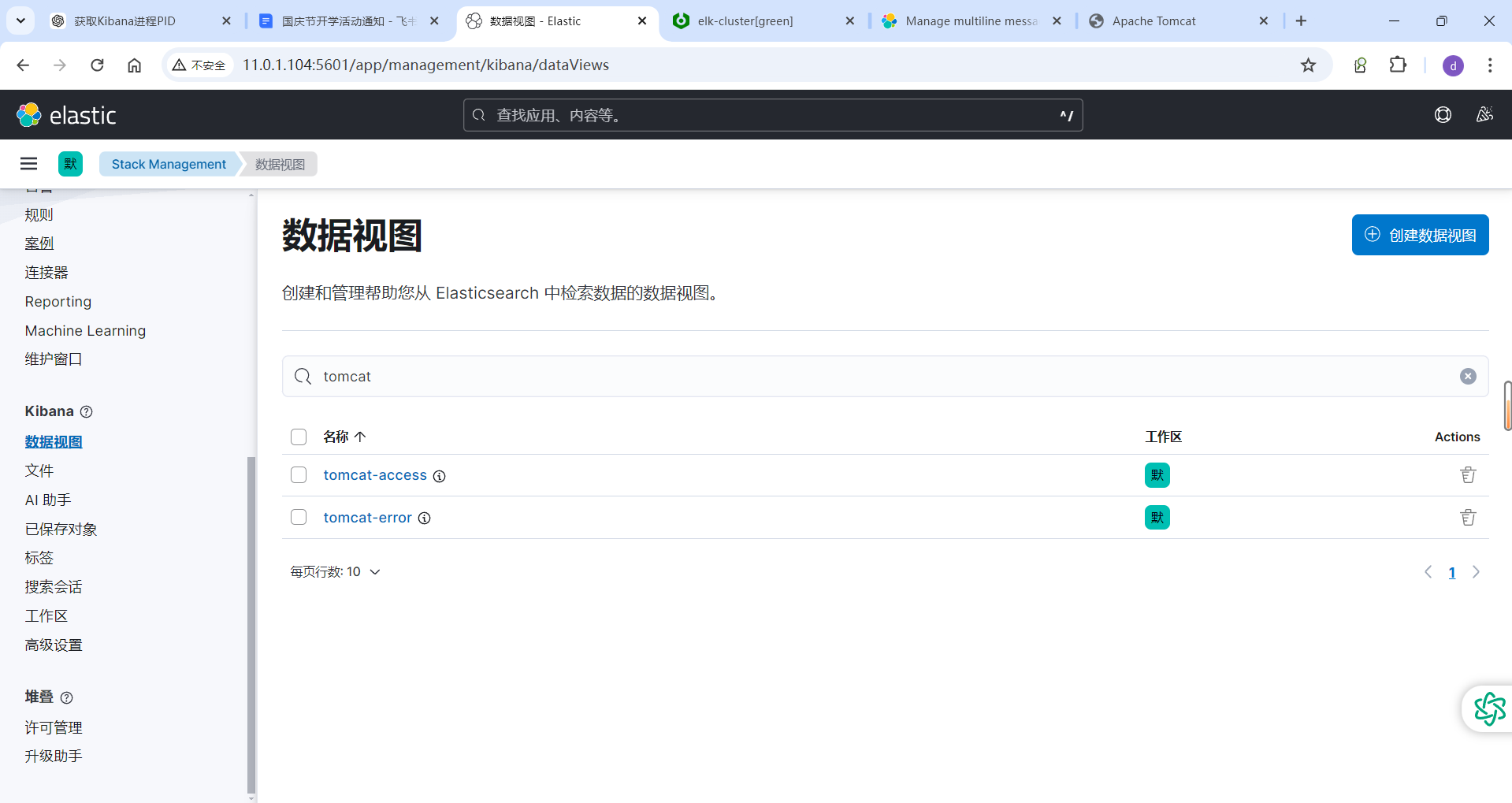

3.3.9.4 通过 Kibana 查看收集的日志信息

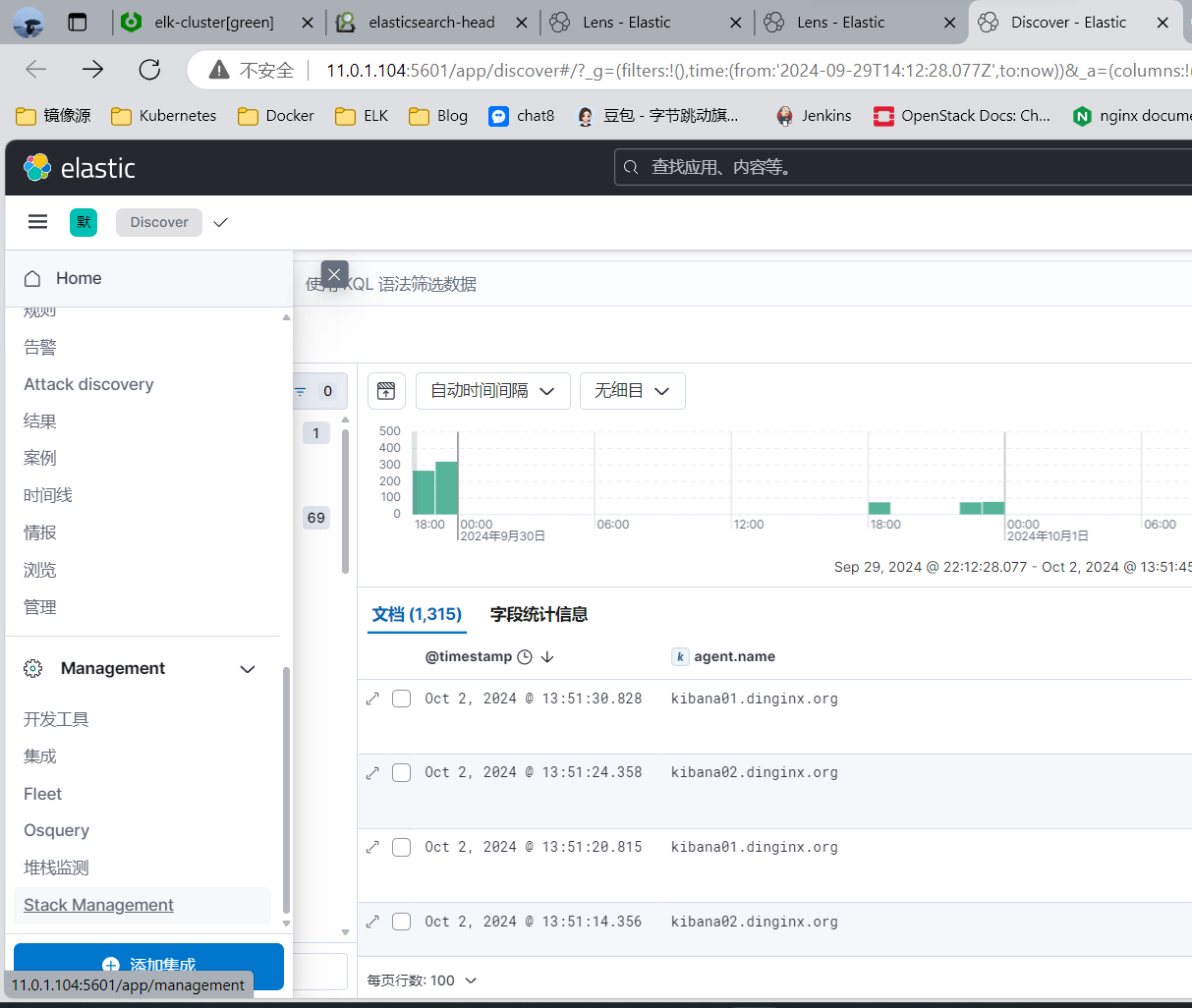

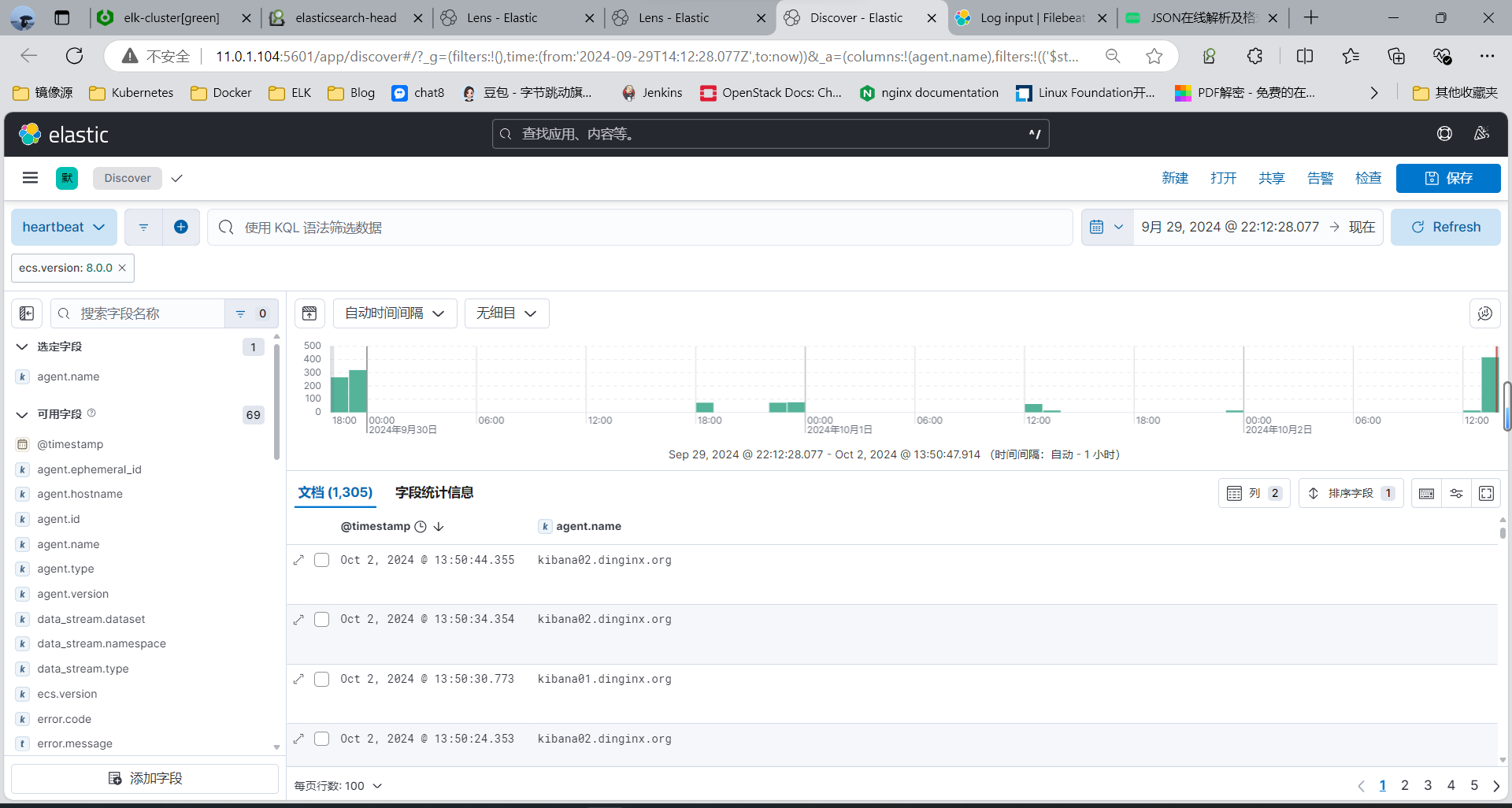

选中agent.name字段

只显示指定字段,所以可以将多个tomcat主机的日志合并成一个索引,利用,再利用此字段区别不同的主机

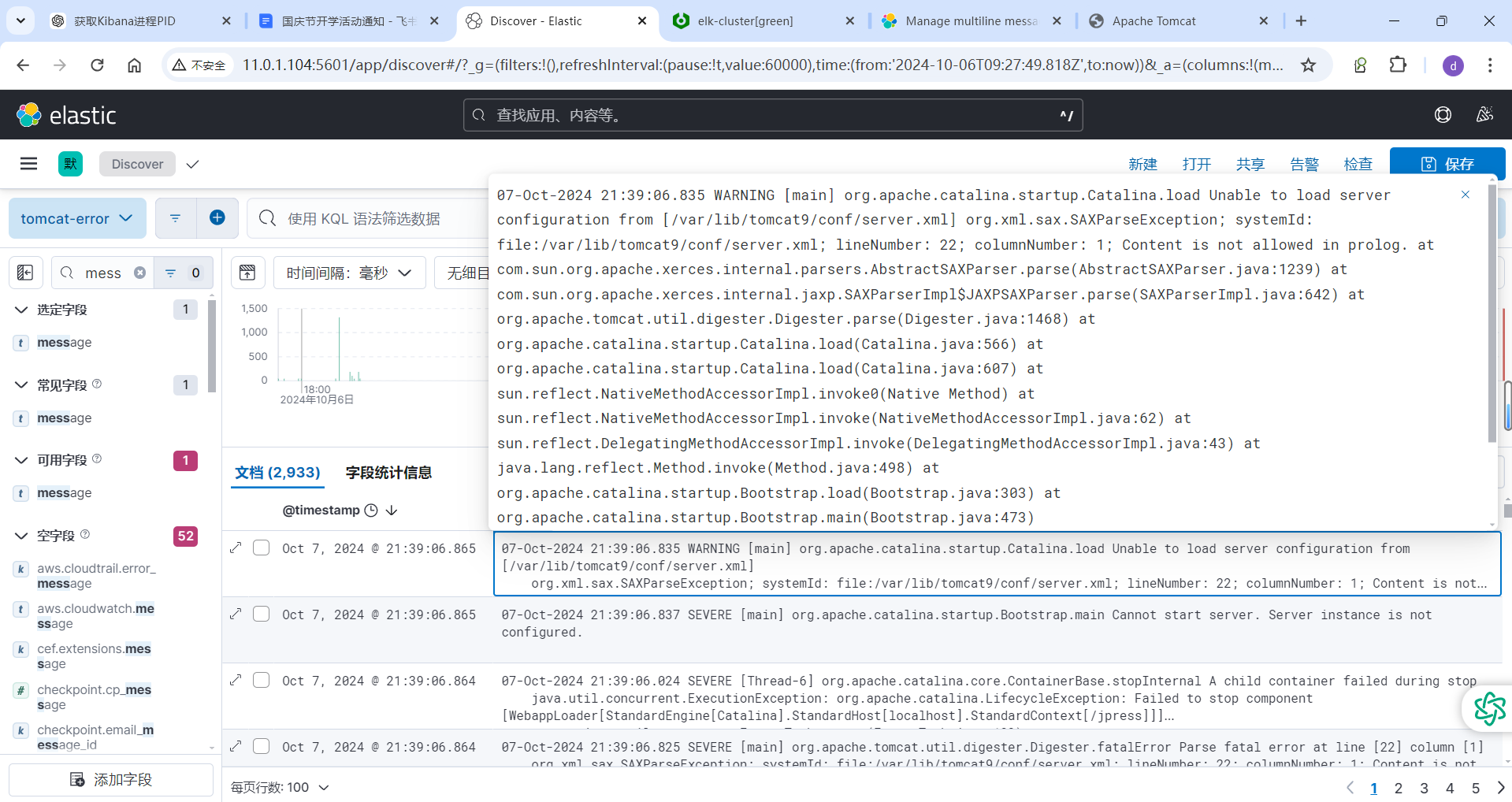

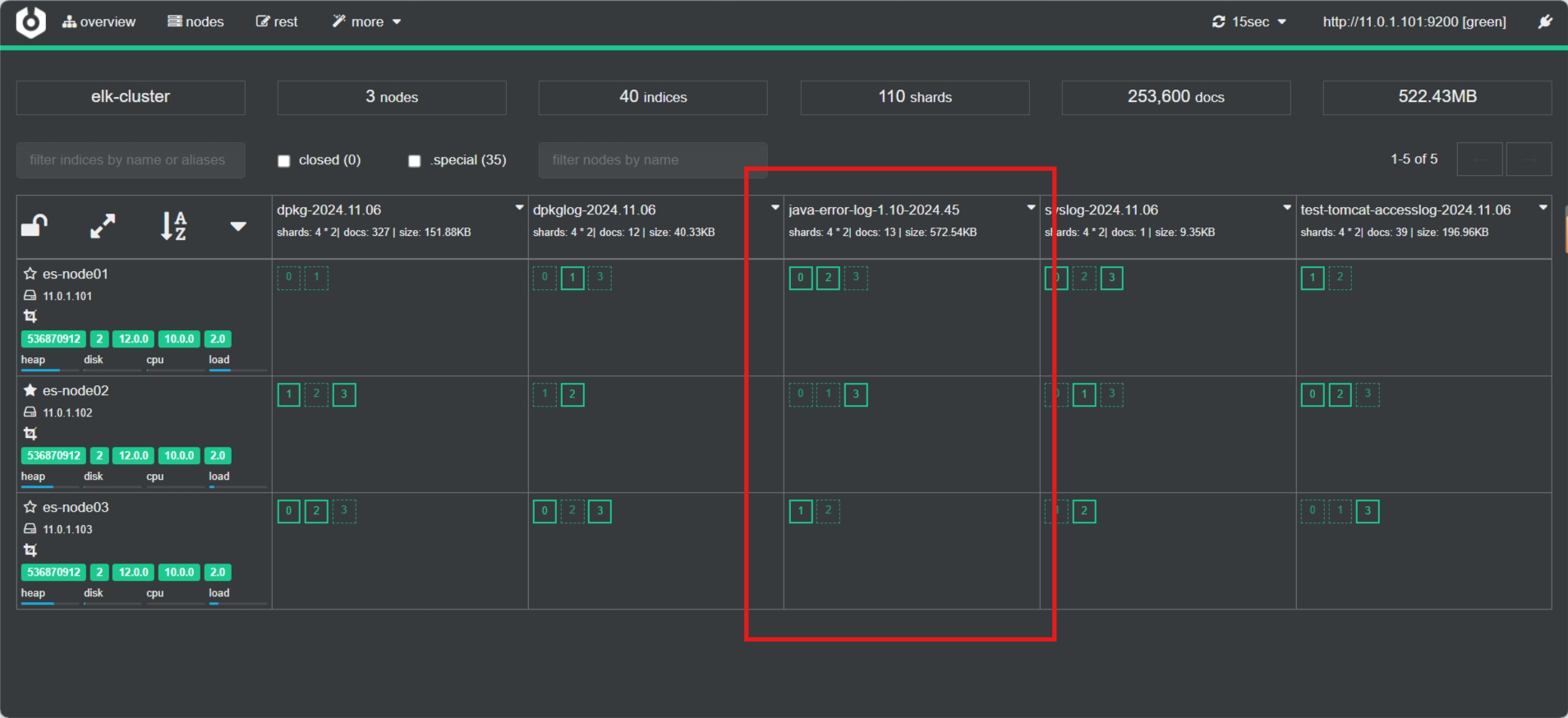

3.3.10 案例: 利用 Filebeat 收集 Tomat 的多行错误日志到Elasticsearch

3.3.10.1 Tomcat 错误日志解析

Tomcat 是 Java 应用,当只出现一个错误时,会显示很多行的错误日志,如下所示

[root@web-server ~]#cat /var/log/tomcat9/catalina.2024-10-06.log ......06-Oct-2024 19:10:46.060 SEVERE [main] org.apache.catalina.startup.ContextConfig.beforeStart Exception fixing docBase for context [/jpress]java.io.IOException: Unable to create the directory [/data/website/jpress]at org.apache.catalina.startup.ExpandWar.expand(ExpandWar.java:115)at org.apache.catalina.startup.ContextConfig.fixDocBase(ContextConfig.java:615)at org.apache.catalina.startup.ContextConfig.beforeStart(ContextConfig.java:747)at org.apache.catalina.startup.ContextConfig.lifecycleEvent(ContextConfig.java:303)at org.apache.catalina.util.LifecycleBase.fireLifecycleEvent(LifecycleBase.java:123)at org.apache.catalina.util.LifecycleBase.setStateInternal(LifecycleBase.java:423)at org.apache.catalina.util.LifecycleBase.start(LifecycleBase.java:182)at org.apache.catalina.core.ContainerBase.addChildInternal(ContainerBase.java:717)at org.apache.catalina.core.ContainerBase.addChild(ContainerBase.java:690)at org.apache.catalina.core.StandardHost.addChild(StandardHost.java:705)at org.apache.catalina.startup.HostConfig.deployWAR(HostConfig.java:978)at org.apache.catalina.startup.HostConfig$DeployWar.run(HostConfig.java:1849)at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)at java.util.concurrent.FutureTask.run(FutureTask.java:266)at org.apache.tomcat.util.threads.InlineExecutorService.execute(InlineExecutorService.java:75)at java.util.concurrent.AbstractExecutorService.submit(AbstractExecutorService.java:112)at org.apache.catalina.startup.HostConfig.deployWARs(HostConfig.java:773)at org.apache.catalina.startup.HostConfig.deployApps(HostConfig.java:427)at org.apache.catalina.startup.HostConfig.start(HostConfig.java:1576)at org.apache.catalina.startup.HostConfig.lifecycleEvent(HostConfig.java:309)at org.apache.catalina.util.LifecycleBase.fireLifecycleEvent(LifecycleBase.java:123)at org.apache.catalina.util.LifecycleBase.setStateInternal(LifecycleBase.java:423)at org.apache.catalina.util.LifecycleBase.setState(LifecycleBase.java:366)at org.apache.catalina.core.ContainerBase.startInternal(ContainerBase.java:936)at org.apache.catalina.core.StandardHost.startInternal(StandardHost.java:841)at org.apache.catalina.util.LifecycleBase.start(LifecycleBase.java:183)at org.apache.catalina.core.ContainerBase$StartChild.call(ContainerBase.java:1384)at org.apache.catalina.core.ContainerBase$StartChild.call(ContainerBase.java:1374)at java.util.concurrent.FutureTask.run(FutureTask.java:266)at org.apache.tomcat.util.threads.InlineExecutorService.execute(InlineExecutorService.java:75)at java.util.concurrent.AbstractExecutorService.submit(AbstractExecutorService.java:134)at org.apache.catalina.core.ContainerBase.startInternal(ContainerBase.java:909)at org.apache.catalina.core.StandardEngine.startInternal(StandardEngine.java:262)at org.apache.catalina.util.LifecycleBase.start(LifecycleBase.java:183)at org.apache.catalina.core.StandardService.startInternal(StandardService.java:421)at org.apache.catalina.util.LifecycleBase.start(LifecycleBase.java:183)at org.apache.catalina.core.StandardServer.startInternal(StandardServer.java:930)at org.apache.catalina.util.LifecycleBase.start(LifecycleBase.java:183)at org.apache.catalina.startup.Catalina.start(Catalina.java:633)at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)at java.lang.reflect.Method.invoke(Method.java:498)at org.apache.catalina.startup.Bootstrap.start(Bootstrap.java:343)at org.apache.catalina.startup.Bootstrap.main(Bootstrap.java:478)

Java 应用的一个错误导致生成的多行日志其实是同一个事件的日志的内容

而ES默认是根据每一行来区别不同的日志,就会导致一个错误对应多行错误信息会生成很多行的ES文档记录

可以将一个错误对应的多个行合并成一个ES的文档记录来解决此问题

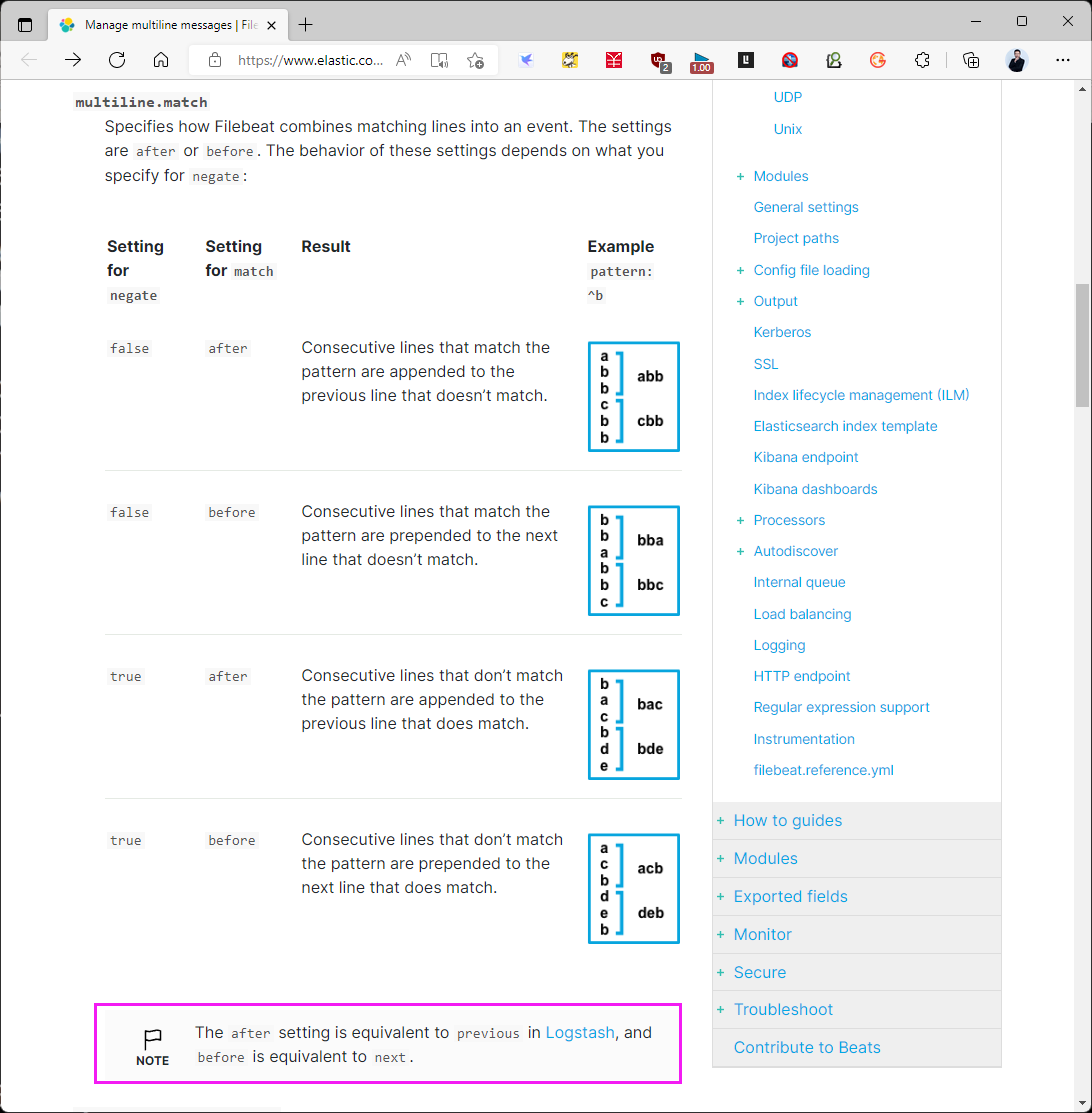

官方文档

https://www.elastic.co/guide/en/beats/filebeat/current/multiline-examples.html

https://www.elastic.co/guide/en/beats/filebeat/7.0/multiline-examples.html

3.3.10.2 安装 Tomcat 并配置

#安装配置

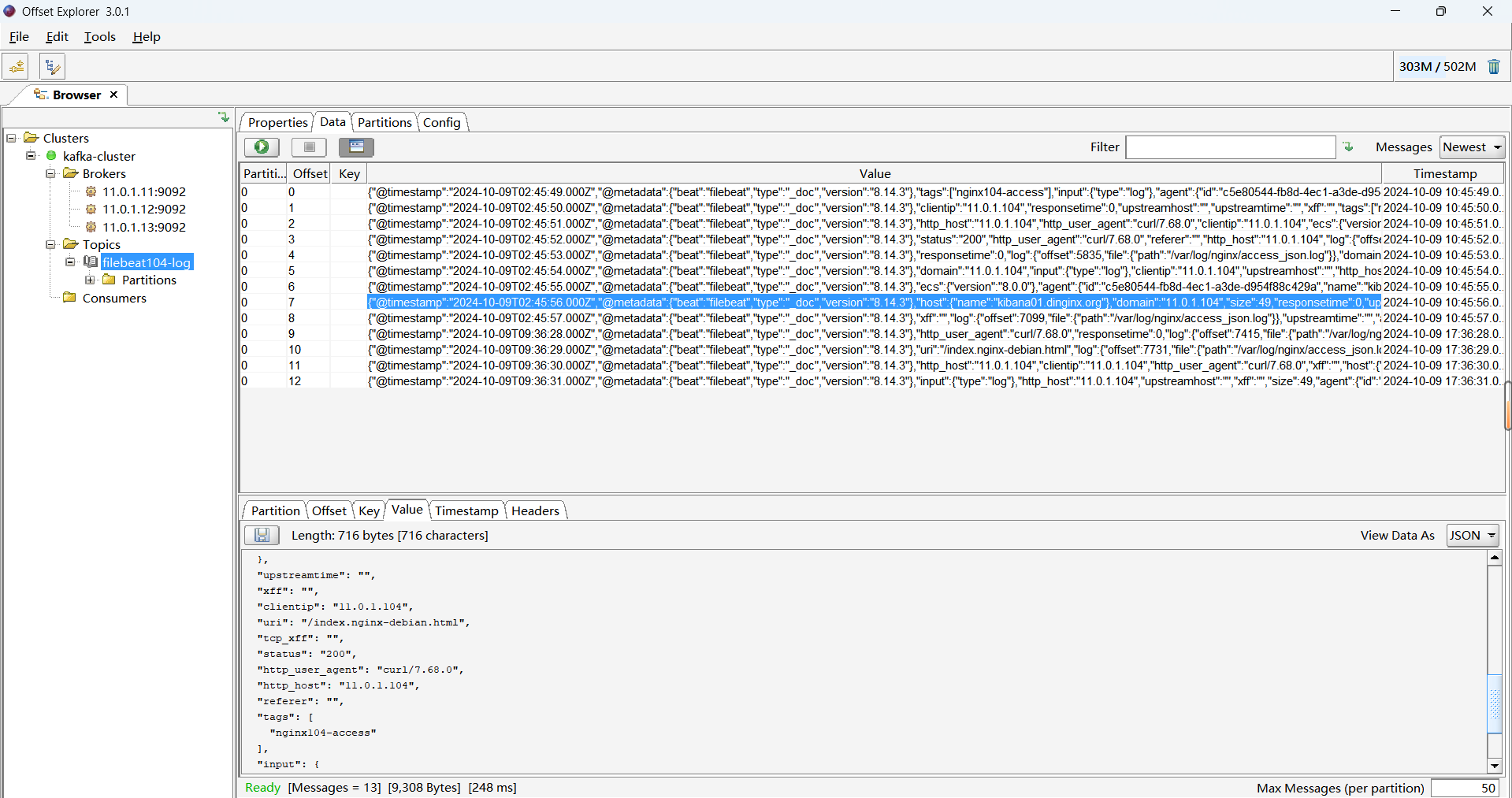

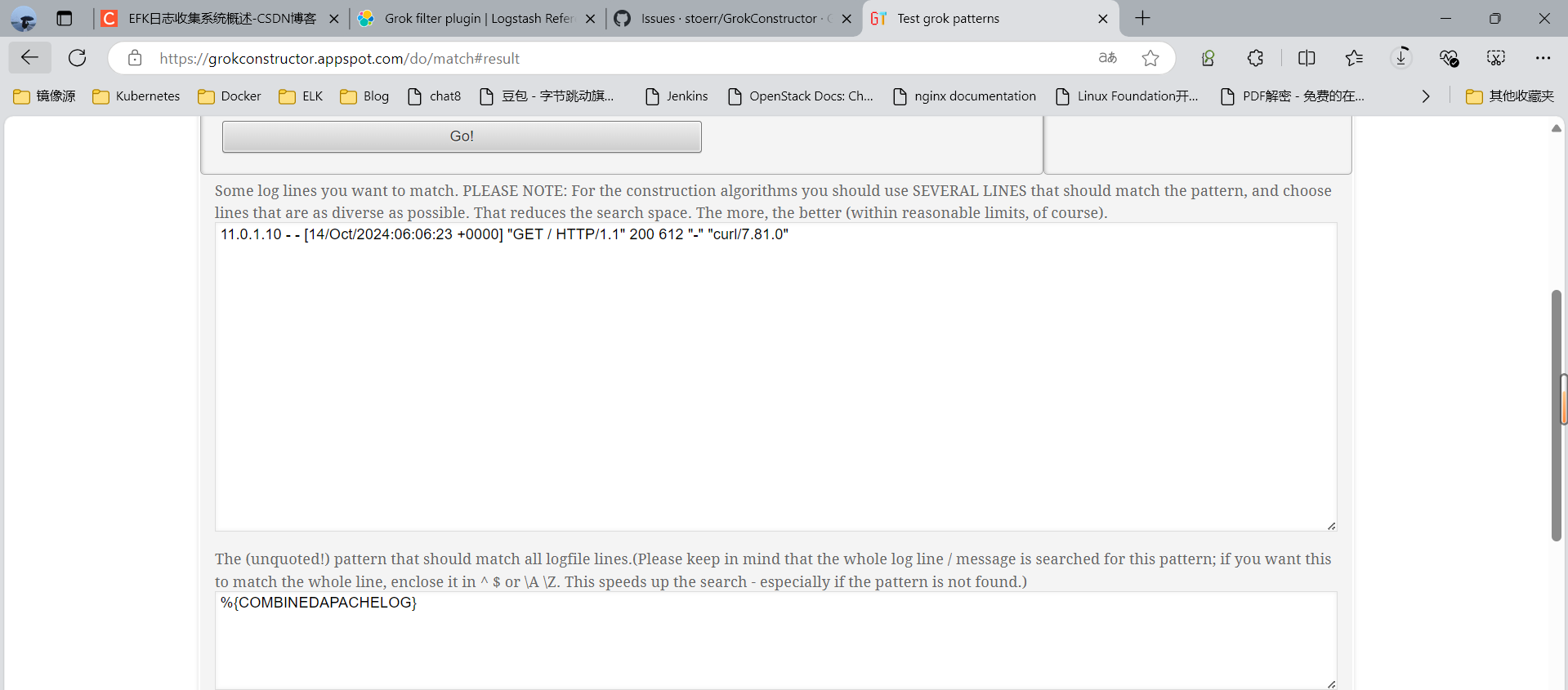

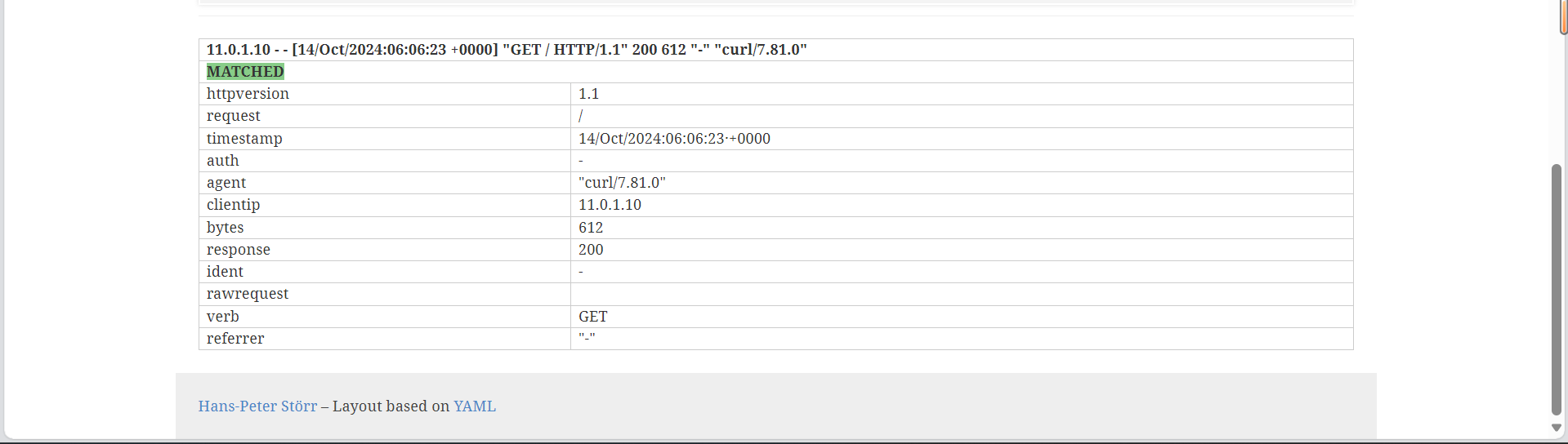

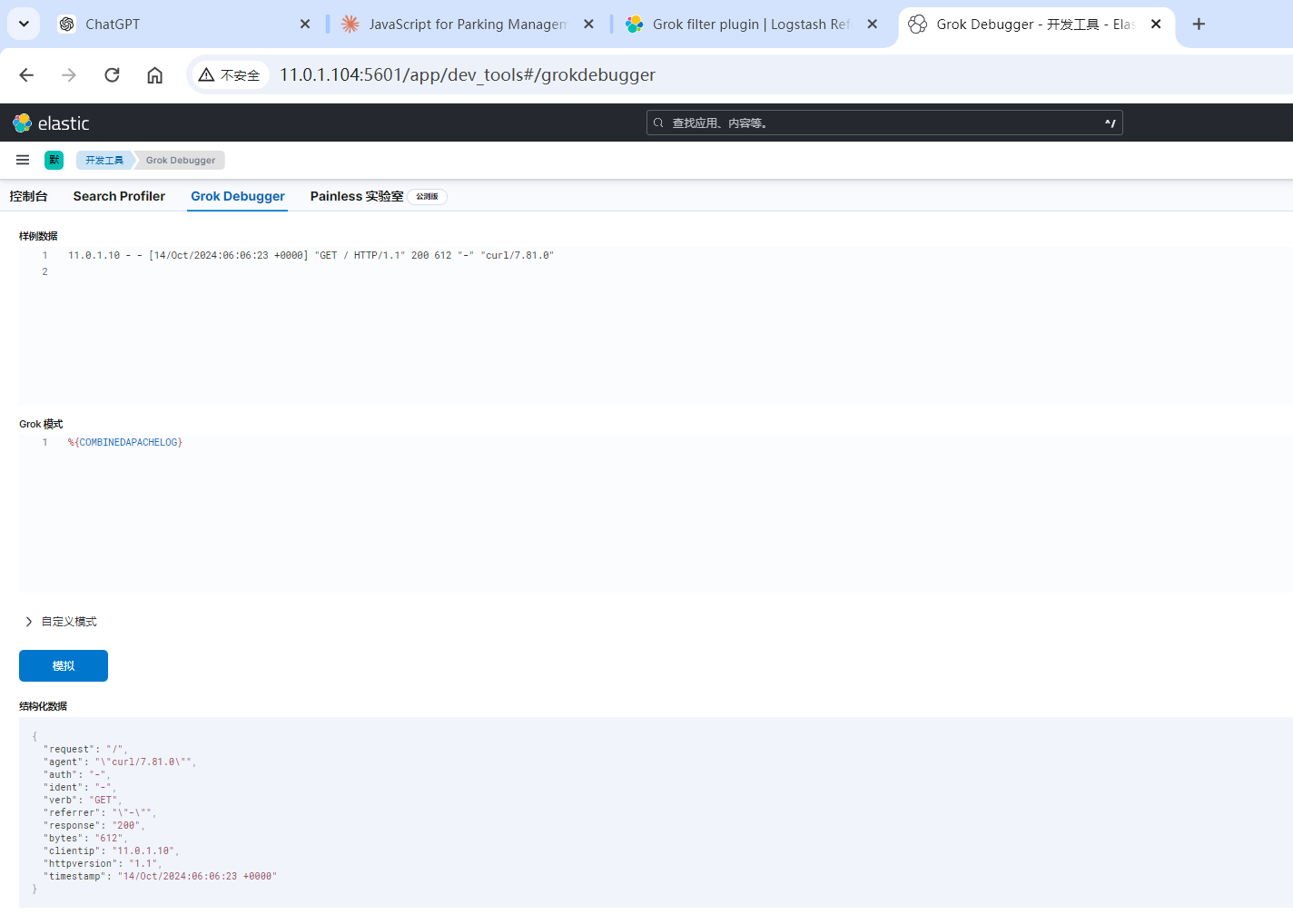

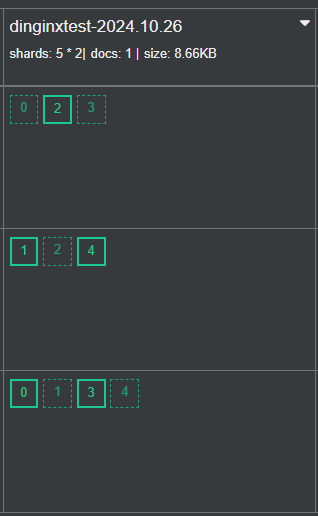

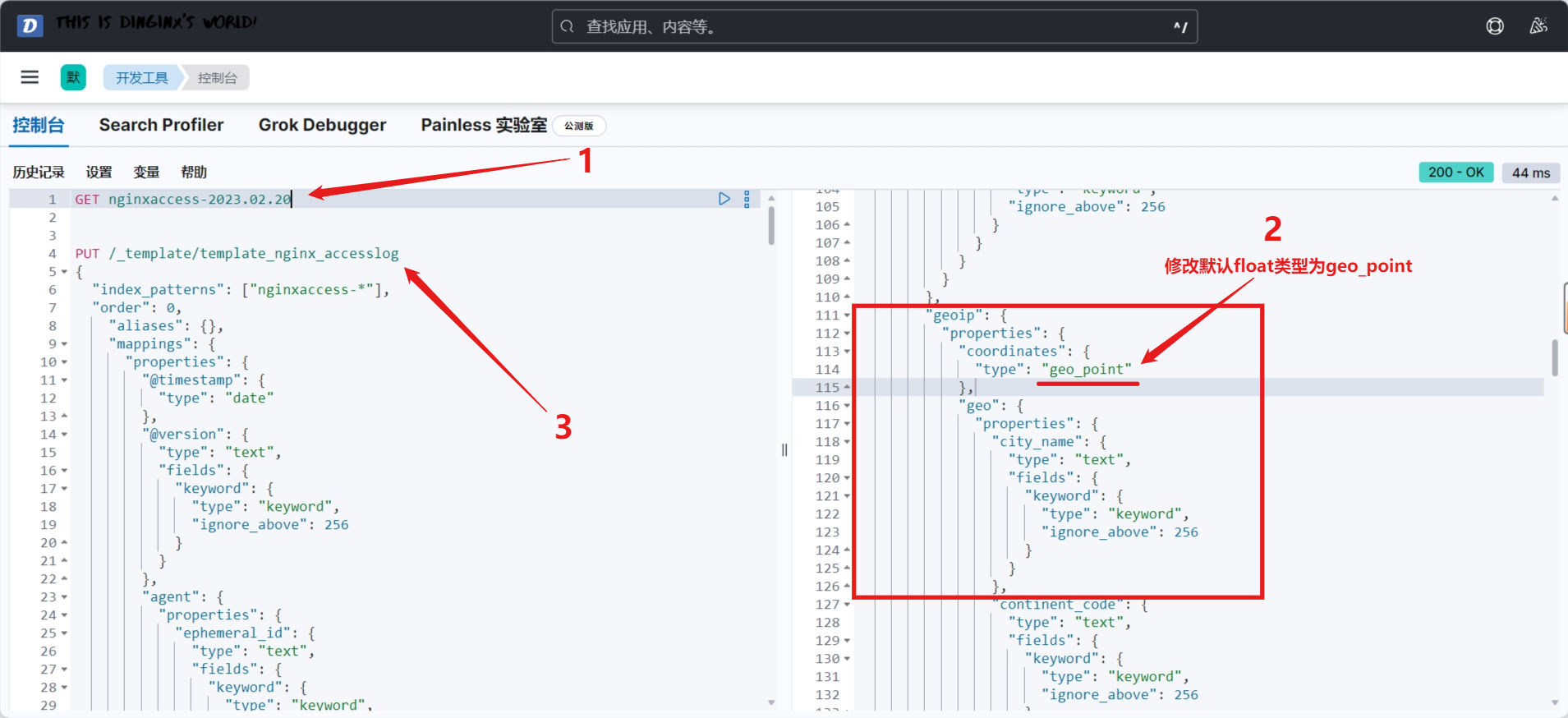

[root@web-server ~]#apt update && apt install -y tomcat9 tomcat9-admin#修改日志格式,添加pattern="......