Syncing nginx Logs to Alibaba Cloud DataHub and Writing Them to a Database

1. Project Background

nginx receives the tracking (beacon) requests. Its access logs are shipped to Alibaba Cloud DataHub, and DataHub then writes the data into a database.

Components used: JDK, nginx, Logstash with the DataHub output plugin, Alibaba Cloud DataHub, and PostgreSQL.

2. Install nginx, Logstash, and JDK 11

yum -y install nginx java-11-openjdk-devel

rpm -ivh logstash-8.14.3-x86_64.rpm

systemctl start logstash.service

3. Install the Logstash DataHub Plugin

/usr/share/logstash/bin/logstash-plugin install ./logstash-output-datahub-1.0.15.gem

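# Verify that the plugin is installed

/usr/share/logstash/bin/logstash-plugin list | grep logstash-output-datahub

# Installing the .gem this way is slow; alternatively, install directly from the tar.gz package provided by Alibaba Cloud.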

4. Configure nginx and Logstash

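Create the log directories. You will also need the AccessKey ID (ak), the AccessKey secret (sk), the DataHub endpoint, and the name of the DataHub project.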

mkdir /data/logs/nginx/ -p

mkdir /data/logstashdata/ -p

chown nginx:nginx -R /data/logs/nginx/

chown -R logstash:logstash /data/logstashdata/

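chmod -R 755 /data

The ak, sk, endpoint, and project are filled into these settings of every datahub output in the Logstash configuration:

access_id => "xxxxx"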

access_key => "xxxxx"

endpoint => "xxxxx"

project_name => "xxxxx"

The nginx configuration is as follows.

Modify nginx.conf as follows:

# For more information on configuration, see:
#   * Official English Documentation: http://nginx.org/en/docs/
#   * Official Russian Documentation: http://nginx.org/ru/docs/

user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.
include /usr/share/nginx/modules/*.conf;

events {
    worker_connections 1024;
}

http {
    # log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
    #                   '$status $body_bytes_sent "$http_referer" '
    #                   '"$http_user_agent" "$http_x_forwarded_for"';

    map $http_user_agent $log_user_agent {
        # Default: if nothing matches, use the original UA
        default $http_user_agent;
        # Regex: match the structure that starts with "extra":{ and runs up to the
        # closing braces, and replace it with an empty object.
        # The pattern also consumes the second closing brace, because the UA has
        # one more closing brace after the extra object.
        "~*(.*)\"extra\":\\s*\\{[^}]*\\}\\}(.*)" "$1\"extra\":{}}$2";
    }

    log_format main 'remote_user=$remote_user&time_zh_ms=$time_zh_ms&ip=$http_x_forwarded_for&rip=$http_x_forwarded_for&log_time=$time_local&request_time=$request_time&host=$http_host&$args&status=$status&body_bytes_sent=$body_bytes_sent&referer=$http_referer&ua=$http_user_agent&nowtime=$msec';

    access_log /var/log/nginx/access.log main;

    sendfile            on;
    tcp_nopush          on;
    tcp_nodelay         on;
    keepalive_timeout   65;
    types_hash_max_size 4096;

    include             /etc/nginx/mime.types;
    default_type        application/octet-stream;

    # Load modular configuration files from the /etc/nginx/conf.d directory.
    # See http://nginx.org/en/docs/ngx_core_module.html#include
    # for more information.
    include /etc/nginx/conf.d/*.conf;

    server {
        listen       80;
        listen       [::]:80;
        server_name  _;
        root         /usr/share/nginx/html;

        # Load configuration files for the default server block.
        include /etc/nginx/default.d/*.conf;

        error_page 404 /404.html;
        location = /40x.html {
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
        }
    }

    # Settings for a TLS enabled server.

    #
    # server {
    #     listen       443 ssl http2;
    #     listen       [::]:443 ssl http2;
    #     server_name  _;
    #     root         /usr/share/nginx/html;
    #
    #     ssl_certificate "/etc/pki/nginx/server.crt";
    #     ssl_certificate_key "/etc/pki/nginx/private/server.key";
    #     ssl_session_cache shared:SSL:1m;
    #     ssl_session_timeout  10m;
    #     ssl_ciphers PROFILE=SYSTEM;
    #     ssl_prefer_server_ciphers on;
    #
    #     # Load configuration files for the default server block.
    #     include /etc/nginx/default.d/*.conf;
    #
    #     error_page 404 /404.html;
    #     location = /40x.html {
    #     }
    #
    #     error_page 500 502 503 504 /50x.html;
    #     location = /50x.html {
    #     }
    # }
}
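The `map` block collapses a bulky `"extra":{...}` object inside the User-Agent to `{}`. A minimal Ruby sketch of the same substitution can help check the pattern against real UAs before reloading nginx; the sample UA is made up. Note that the `log_format` above writes `$http_user_agent`, so the sanitized `$log_user_agent` only reaches the log if the format references it instead.

```ruby
# Mirrors the nginx map: "~*" is a case-insensitive regex; on a match the value
# is built from the capture groups, otherwise the default (original UA) is used.
EXTRA_RE = /(.*)"extra":\s*\{[^}]*\}\}(.*)/i

def log_user_agent(ua)
  m = EXTRA_RE.match(ua)
  return ua unless m # no match: fall back to the original UA (map "default")

  "#{m[1]}\"extra\":{}}#{m[2]}"
end

# Hypothetical UA carrying an app payload with an "extra" object
ua = 'Mozilla/5.0 (Linux; Android 12; {"app":"demo","extra":{"a":1,"b":2}})'
puts log_user_agent(ua)
```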

Create log.conf in /etc/nginx/conf.d:

server {
    listen 80;
    server_name t-test.com;  # change this to your own domain

    # Split $time_iso8601 into date/time parts (used for $time_zh and the daily log file name)
    if ($time_iso8601 ~ "^(\d{4})-(\d{2})-(\d{2})T(\d{2}):(\d{2}):(\d{2})") {
        set $year    $1;
        set $month   $2;
        set $day     $3;
        set $hour    $4;
        set $minutes $5;
        set $seconds $6;
        set $time_zh "$1-$2-$3 $4:$5:$6";
    }

    # Append the millisecond part of $msec: "yyyy-mm-dd hh:mm:ss.mmm"
    if ($msec ~ "(\d+)\.(\d+)") {
        set $time_zh_ms $time_zh.$2;
    }

    location = /s.gif {
        empty_gif;

        # CORS headers
        add_header 'Access-Control-Allow-Origin' '*';
        add_header 'Access-Control-Allow-Methods' 'GET, POST, OPTIONS, PUT, DELETE';
        add_header 'Access-Control-Allow-Headers' '*';

        # Handle OPTIONS preflight requests
        if ($request_method = 'OPTIONS') {
            add_header 'Access-Control-Allow-Origin' '*';
            add_header 'Access-Control-Allow-Methods' 'GET, POST, OPTIONS, PUT, DELETE';
            add_header 'Access-Control-Allow-Headers' '*';
            add_header 'Access-Control-Max-Age' 86400;
            return 204;
        }
    }

    #location = /ss.gif {
    #    proxy_pass http://192.168.0.34:8189/hlAdLog/collect;
    #    proxy_buffer_size 64k;
    #    proxy_buffers 32 32k;
    #    proxy_busy_buffers_size 128k;
    #}

    location /nginx_status {
        stub_status on;
        allow 127.0.0.1;
        deny all;  # deny all other hosts
    }

    access_log /data/logs/nginx/log.access_${year}${month}${day}.log main;
}
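The two `if` blocks build `$time_zh_ms` and the date parts used in the `access_log` file name. A minimal Ruby sketch of the same string handling shows what ends up in the log line and which daily file it lands in. It assumes a sample `$time_iso8601` of `2024-07-30T15:04:05+08:00` and `$msec` of `1722323045.123`, both made up for illustration.

```ruby
ISO_RE  = /^(\d{4})-(\d{2})-(\d{2})T(\d{2}):(\d{2}):(\d{2})/
MSEC_RE = /(\d+)\.(\d+)/

# Returns [time_zh_ms, access-log path] the way log.conf derives them
def nginx_time_fields(time_iso8601, msec)
  m = ISO_RE.match(time_iso8601)
  raise ArgumentError, "unexpected time_iso8601: #{time_iso8601}" unless m

  year, month, day, hour, minutes, seconds = m.captures
  time_zh = "#{year}-#{month}-#{day} #{hour}:#{minutes}:#{seconds}"

  ms = MSEC_RE.match(msec)
  raise ArgumentError, "unexpected msec: #{msec}" unless ms

  time_zh_ms = "#{time_zh}.#{ms[2]}" # same as: set $time_zh_ms $time_zh.$2;
  path = "/data/logs/nginx/log.access_#{year}#{month}#{day}.log"
  [time_zh_ms, path]
end

time_zh_ms, path = nginx_time_fields("2024-07-30T15:04:05+08:00", "1722323045.123")
puts time_zh_ms
puts path
```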

The Logstash configuration is as follows:

input {
  file {
    # Use "/" as the path separator even on Windows, not "\"
    path => "/data/logs/nginx/log.access_*.log"
    type => "nginx_access_log"
    start_position => "beginning"
    sincedb_path => "/data/logstashdata/logtxt/log.txt"
    sincedb_write_interval => 15
    stat_interval => "2"
    # Optional: add a codec to debug the raw log lines
    # codec => "plain"
  }
}

filter {
  # ---------------------
  # Step 1: parse key=value&key=value parameters
  # ---------------------
  kv {
    field_split => "&"
    source => "message"
  }

  # ---------------------
  # Step 2: URL-decode all fields
  # ---------------------
  urldecode {
    all_fields => true
  }

  # ---------------------
  # Step 3: safely coerce the numeric fields (price, amount)
  # ---------------------
  # price: must be a float, otherwise set to 0.0
  ruby {
    code => '
      value = event.get("price")
      if value.is_a?(Numeric)
        event.set("price", value.to_f)
      elsif value.is_a?(String) && value.strip.match(/\A[-+]?\d*\.?\d+\Z/)
        event.set("price", value.strip.to_f)
      else
        event.set("price", 0.0)
      end
    '
  }
  # amount: same rules as price
  ruby {
    code => '
      value = event.get("amount")
      if value.is_a?(Numeric)
        event.set("amount", value.to_f)
      elsif value.is_a?(String) && value.strip.match(/\A[-+]?\d*\.?\d+\Z/)
        event.set("amount", value.strip.to_f)
      else
        event.set("amount", 0.0)
      end
    '
  }

  # ---------------------
  # Step 4: nowtime -> tts (millisecond timestamp, must be an integer)
  # ---------------------
  # nowtime comes from $msec ("seconds.milliseconds"). Convert it to float, not
  # integer: an integer conversion truncates the decimals and loses the milliseconds.
  mutate {
    convert => { "nowtime" => "float" }
  }
  ruby {
    code => '
      nowtime = event.get("nowtime")
      if nowtime.is_a?(Numeric)
        # seconds -> milliseconds; round() avoids float error such as ...122.9999 -> 122
        event.set("tts", (nowtime.to_f * 1000).round)
      else
        event.set("tts", nil)
      end
    '
  }
  # Make sure tts is an integer
  mutate {
    convert => { "tts" => "integer" }
  }

  # ---------------------
  # Step 5: normalize ts (key point: it must be an integer)
  # ---------------------
  ruby {
    code => '
      value = event.get("ts")
      if value.nil?
        # Default: 2049-01-01 00:00:00.000 UTC+8 (a future time, easy to spot as abnormal)
        event.set("ts", 2493043200000)
      elsif value.is_a?(Numeric)
        # Key point: convert straight to an integer (not to_f)
        event.set("ts", value.to_i)
      elsif value.is_a?(String)
        cleaned = value.to_s.strip.gsub(/\.0+$/, "")  # drop trailing .0, .00, etc.
        if cleaned.match(/\A\d+\Z/)  # digits only
          event.set("ts", cleaned.to_i)
        else
          event.set("ts", 2493043200000)
        end
      else
        event.set("ts", 2493043200000)
      end
    '
  }
  # Make sure ts is an integer
  mutate {
    convert => { "ts" => "integer" }
  }

  # ---------------------
  # Step 6: validate total_switch (0/1)
  # ---------------------
  # Done in one ruby block so the field stays an integer; a mutate replace would
  # write a string value instead.
  ruby {
    code => '
      value = event.get("total_switch")
      n = if value.is_a?(Numeric)
            value.to_i
          elsif value.is_a?(String) && value.strip.match(/\A\d+\Z/)
            value.strip.to_i
          else
            0
          end
      # Only 0 and 1 are valid; anything else becomes 0
      event.set("total_switch", [0, 1].include?(n) ? n : 0)
    '
  }

  # ---------------------
  # Step 7: optional debug fields (disable before going live)
  # ---------------------
  # mutate {
  #   add_field => { "debug_pipeline" => "v2" }
  #   add_field => { "debug_ts_type" => "%{[ts]}" }
  # }

}

output {
  # ---------------------
  # All DataHub outputs share the same access_id/access_key. Logstash has no global
  # variables, so the values are repeated in every block; to avoid hard-coding them,
  # reference environment variables or Logstash keystore entries with ${VAR} syntax.
  # ---------------------
  if [et] == "0" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "app_device"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "1" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "hl_ad_log"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "2" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "hl_ad_report"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "3" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "ad_report_log"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "4" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "app_ad_switch"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "5" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "app_activation"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "10" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "mid_ad_log"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "11" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "mid_activation"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "12" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "kv_ad_log"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "13" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "kv_ad_log_key"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "14" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "kv_report_log"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "15" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "user_pull_log"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "16" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "user_open_log"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "17" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "app_ad_report"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "18" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "app_report_error_log"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "19" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "mid_ad_log_kyy"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "20" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "user_match_log"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "21" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "user_interval_log"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "30" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "app_page_log"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "31" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "ad_material_log"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "90" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "cert_abnormal_data"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log-30.data"
      dirty_data_file_max_size => 100000
    }
  }
  if [et] == "91" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "file_download_log"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log-30.data"
      dirty_data_file_max_size => 100000
    }
  }
  if [et] == "92" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "wallpaper_and_pip_log"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log-30.data"
      dirty_data_file_max_size => 100000
    }
  }
  if [et] == "100" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "black_device"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "101" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "adlog_tt"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "102" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "adlog_ks"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "103" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "adlog_tx"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "104" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "adlog_oppo"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "105" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "adlog_vivo"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "106" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "adlog_huawei"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "107" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "adlog_xiaomi"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
  if [et] == "108" {
    datahub {
      access_id => "xxxxx"
      access_key => "xxxxx"
      endpoint => "xxxxx"
      project_name => "xxxxx"
      topic_name => "adlog_baidu"
      dirty_data_continue => true
      dirty_data_file => "/data/logstashdata/log.data"
      dirty_data_file_max_size => 1000
    }
  }
}

Note: adapt the Logstash configuration above to your own business fields and topics.
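Every `if [et] == ...` output block differs only in `topic_name` (plus, for 90 to 92, the dirty-data file and its size limit). A minimal Ruby sketch summarizes that routing as a lookup table. It is a quick reference transcribed from the config, not something Logstash loads. It also makes one consequence visible: an event whose `et` matches no block is not written to DataHub at all.

```ruby
# et value -> DataHub topic, transcribed from the output section above
ET_TOPICS = {
  "0" => "app_device", "1" => "hl_ad_log", "2" => "hl_ad_report",
  "3" => "ad_report_log", "4" => "app_ad_switch", "5" => "app_activation",
  "10" => "mid_ad_log", "11" => "mid_activation", "12" => "kv_ad_log",
  "13" => "kv_ad_log_key", "14" => "kv_report_log", "15" => "user_pull_log",
  "16" => "user_open_log", "17" => "app_ad_report", "18" => "app_report_error_log",
  "19" => "mid_ad_log_kyy", "20" => "user_match_log", "21" => "user_interval_log",
  "30" => "app_page_log", "31" => "ad_material_log",
  "90" => "cert_abnormal_data", "91" => "file_download_log", "92" => "wallpaper_and_pip_log",
  "100" => "black_device", "101" => "adlog_tt", "102" => "adlog_ks",
  "103" => "adlog_tx", "104" => "adlog_oppo", "105" => "adlog_vivo",
  "106" => "adlog_huawei", "107" => "adlog_xiaomi", "108" => "adlog_baidu"
}.freeze

# These et values write dirty data to log-30.data (max size 100000) instead of log.data (1000)
LARGE_DIRTY_FILE = %w[90 91 92].freeze

def route(et)
  topic = ET_TOPICS[et.to_s]
  return nil unless topic # no matching if-block: the event is never sent

  dirty = LARGE_DIRTY_FILE.include?(et.to_s) ? "/data/logstashdata/log-30.data" : "/data/logstashdata/log.data"
  { topic: topic, dirty_data_file: dirty }
end

p route("1")
p route("91")
p route("7")
```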

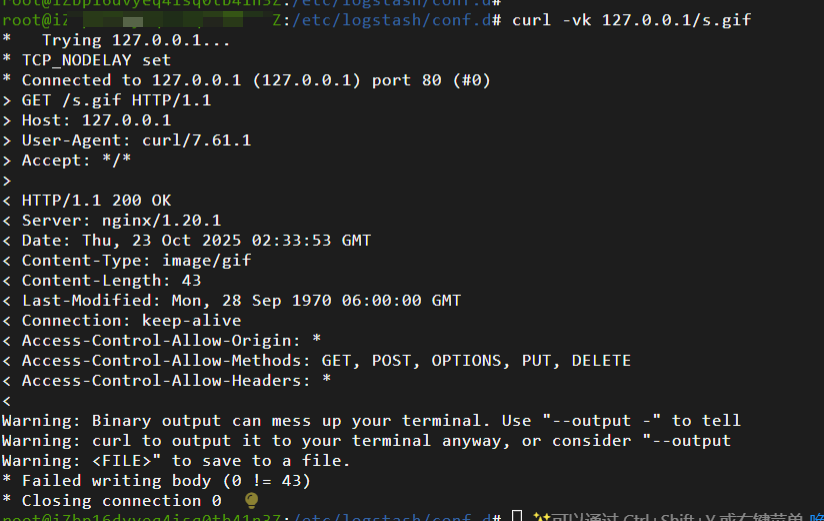
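To see what the filter section produces before wiring up DataHub, the steps can be replayed outside Logstash. A minimal plain-Ruby sketch, assuming a made-up log line with hypothetical business fields (`et`, `price`, `amount`, `ts`, `total_switch`). It uses a simple split in place of `kv` and `URI.decode_www_form_component` in place of `urldecode`, so edge cases (values containing `&` or `=`, stray `%` characters) may behave differently from the real plugins.

```ruby
require "uri"

# 2049-01-01 00:00:00.000 at UTC+8: the filter's "abnormal ts" marker
DEFAULT_TS = 2493043200000
NUMBER_RE  = /\A[-+]?\d*\.?\d+\z/

# Steps 1-2: split key=value&key=value, then URL-decode each value
def parse_kv(line)
  line.split("&").each_with_object({}) do |pair, fields|
    key, value = pair.split("=", 2)
    next if key.nil? || key.empty? || value.nil?

    fields[key] = URI.decode_www_form_component(value)
  end
end

# Step 3: price/amount become floats; anything non-numeric becomes 0.0
def to_amount(value)
  return value.to_f if value.is_a?(Numeric)

  value.is_a?(String) && value.strip.match?(NUMBER_RE) ? value.strip.to_f : 0.0
end

# Step 4: "seconds.milliseconds" -> integer milliseconds (nil if missing or invalid)
def to_tts(nowtime)
  seconds = nowtime && Float(nowtime, exception: false)
  seconds && (seconds * 1000).round
end

# Step 5: ts must be an integer; trailing ".0" is stripped, anything else -> DEFAULT_TS
def to_ts(value)
  return value.to_i if value.is_a?(Numeric)
  return DEFAULT_TS unless value.is_a?(String)

  cleaned = value.strip.sub(/\.0+\z/, "")
  cleaned.match?(/\A\d+\z/) ? cleaned.to_i : DEFAULT_TS
end

# Step 6: total_switch must be 0 or 1, everything else -> 0
def to_switch(value)
  n = if value.is_a?(Numeric) then value.to_i
      elsif value.is_a?(String) && value.strip.match?(/\A\d+\z/) then value.strip.to_i
      else 0
      end
  [0, 1].include?(n) ? n : 0
end

def process(line)
  event = parse_kv(line)
  event["price"] = to_amount(event["price"])
  event["amount"] = to_amount(event["amount"])
  event["tts"] = to_tts(event["nowtime"])
  event["ts"] = to_ts(event["ts"])
  event["total_switch"] = to_switch(event["total_switch"])
  event
end

# Made-up log line in the log_format above (business fields are hypothetical)
line = "remote_user=-&time_zh_ms=2024-07-30 15:04:05.123&et=1&price=1.50&amount=abc" \
       "&ts=1722323045000.0&total_switch=3&nowtime=1722323045.123"
p process(line)
```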

5. Start nginx and Test
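After validating the configuration with `nginx -t` and starting nginx with `systemctl start nginx`, a test hit is a GET to `/s.gif` with the business fields in the query string. nginx copies them into the log line through `$args`. A minimal Ruby sketch that builds such a URL; the host `t-test.com` is the placeholder from log.conf, and the parameters (`et`, `price`, `ts`) are hypothetical examples. The request is only sent when the environment variable `SEND_BEACON=1` is set.

```ruby
require "uri"
require "net/http"

# Build a tracking URL; encode_www_form percent-encodes values, so an "&" or "="
# inside a value cannot break the key=value&... log format.
def beacon_uri(host, params)
  URI::HTTP.build(host: host, path: "/s.gif", query: URI.encode_www_form(params))
end

uri = beacon_uri("t-test.com", { "et" => "1", "price" => "1.50", "ts" => "1722323045000" })
puts uri

if ENV["SEND_BEACON"] == "1"
  res = Net::HTTP.get_response(uri)
  puts "#{res.code} #{res["Content-Type"]}" # empty_gif should answer with a 1x1 GIF
end
```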

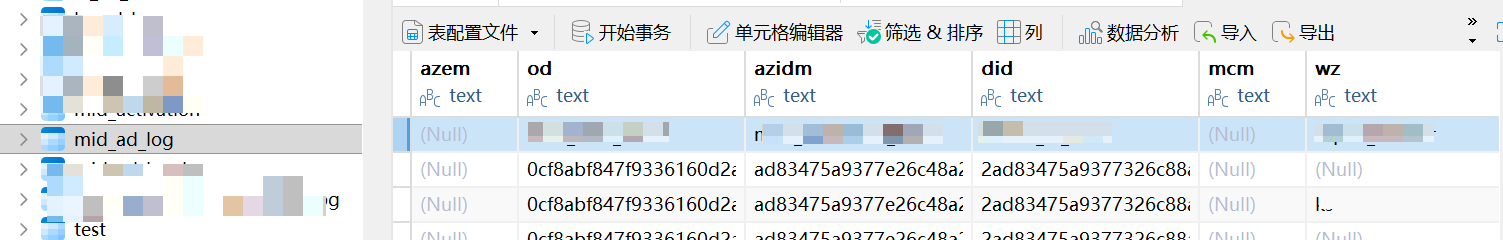

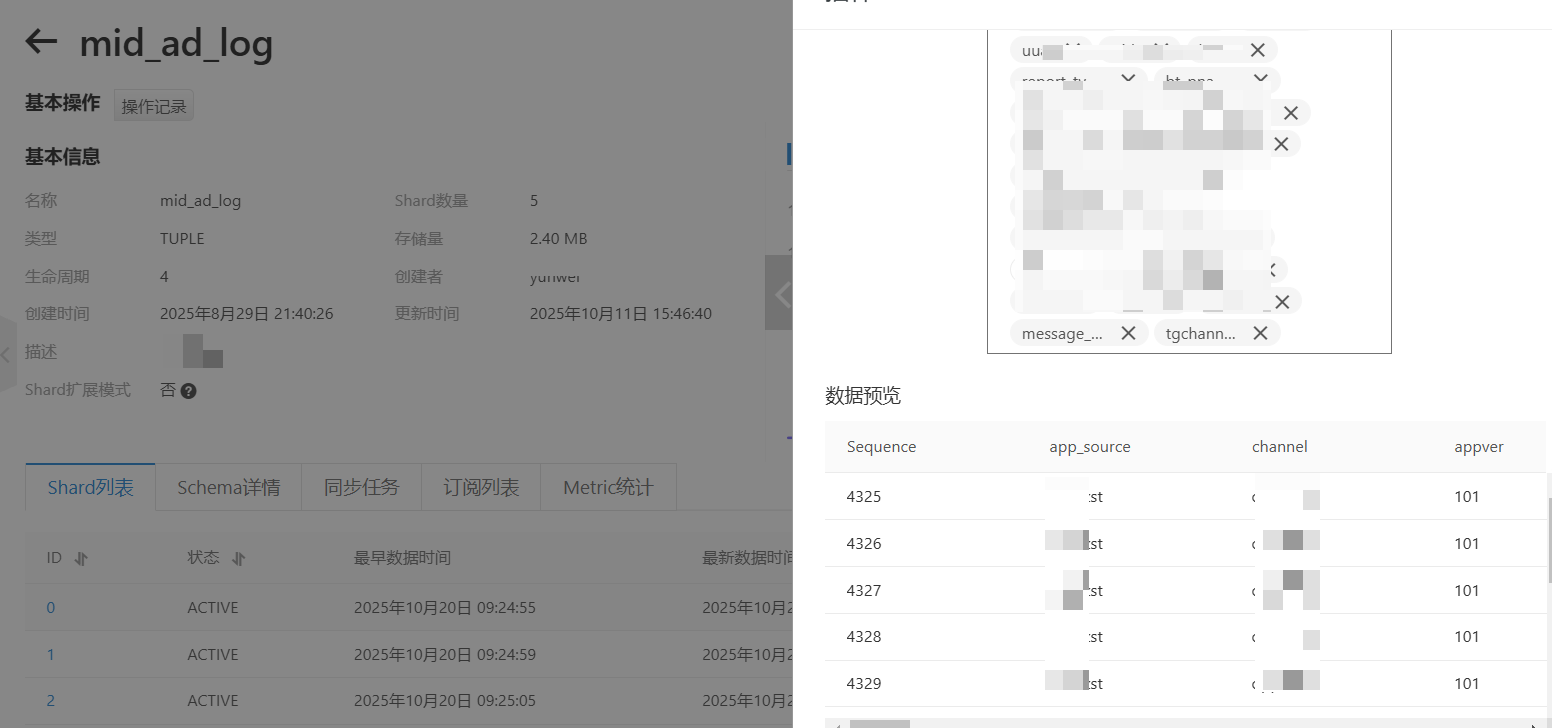

Viewing the data in DataHub

6. Configure DataHub Synchronization to PostgreSQL

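Alibaba Cloud currently offers several sync methods, and you can configure whichever fits. I use a third-party PostgreSQL instance: only the AccessKey ID, the AccessKey secret, and the database name need to be filled in to start the sync task. This is not demonstrated here, but the synced data can then be queried in the database.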
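Whichever sync method is used, the PostgreSQL target table usually has to line up with the topic schema. A minimal Ruby sketch turns a topic field list into a `CREATE TABLE` statement. It assumes a hypothetical schema built from the fields the Logstash filter normalizes (`et`, `price`, `amount`, `ts`, `tts`, `total_switch`) and an assumed mapping of DataHub field types to PostgreSQL types; check the sync documentation for the mapping it actually applies.

```ruby
# Assumed DataHub -> PostgreSQL type mapping (verify against your sync tool)
PG_TYPES = {
  "BIGINT"  => "bigint",
  "DOUBLE"  => "double precision",
  "BOOLEAN" => "boolean",
  "STRING"  => "text",
  "DECIMAL" => "numeric"
}.freeze

# Build a CREATE TABLE statement from [[name, datahub_type], ...]
def create_table_sql(table, fields)
  cols = fields.map do |name, type|
    pg = PG_TYPES.fetch(type) { raise ArgumentError, "no mapping for #{type}" }
    "  #{name} #{pg}"
  end
  "CREATE TABLE IF NOT EXISTS #{table} (\n#{cols.join(",\n")}\n);"
end

# Hypothetical schema for a topic such as hl_ad_log
fields = [["et", "STRING"], ["price", "DOUBLE"], ["amount", "DOUBLE"],
          ["ts", "BIGINT"], ["tts", "BIGINT"], ["total_switch", "BIGINT"]]
puts create_table_sql("hl_ad_log", fields)
```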