Pytorch Yolov11 Object Detection + Android Deployment: Notes

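**This is probably the first post on CSDN about building YOLOv11 object detection with Qt for Android. It was really hard.**

It took me almost two days… so I'm writing it down to share.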


This post supplements my three previous YOLO11 posts; check those out if you're interested.

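1. Prerequisite: you first need to train a model for your own task

See my earlier posts for this part; they cover it in detail.

2. Android environment setup

I don't use the mainstream Android Studio; I don't know how to use it and I'm not familiar with Java. I use Qt for Android instead. You'll need to set up that environment yourself.

3. Getting started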

3.1 First, export the model in TorchScript format

```python
from ultralytics import YOLO

# Load a model
model = YOLO("best_cow.pt")

model.export(format="torchscript", imgsz=640, batch=1)
```

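3.2 Convert the format with pnnx

pnnx usually isn't installed yet, so install it first:

```shell
pip install pnnx ncnn
```

Then run the conversion command.

For the official yolo11n:

```shell
pnnx yolo11n.torchscript
```

For your own model:

```shell
pnnx best_cow.torchscript
```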

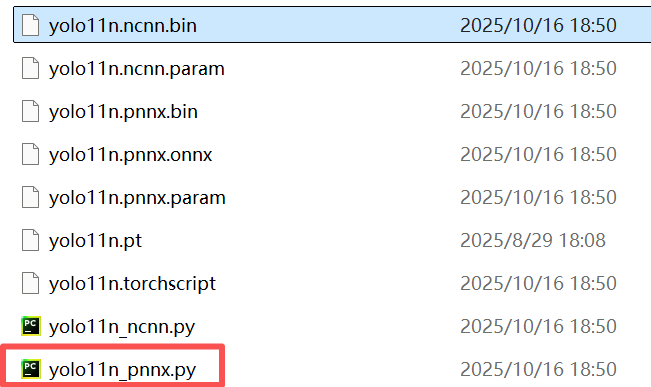

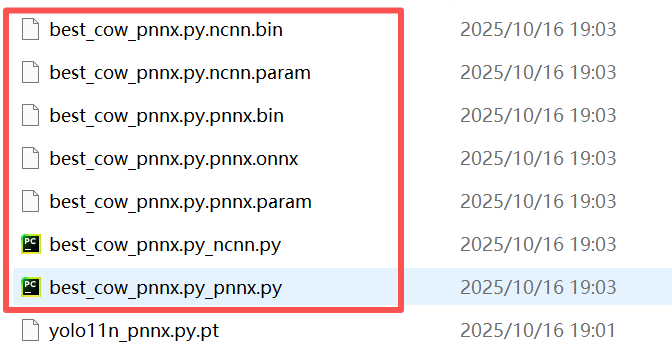

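Sometimes the pnnx command is not found. In that case, call pnnx.exe by its absolute path. When the conversion succeeds, a pile of output files appears (see the screenshots above), and this step is done.

```
*****\Python39\site-packages\pnnx\pnnx.exe yolo11n.torchscript
```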

3.3 Edit the generated script

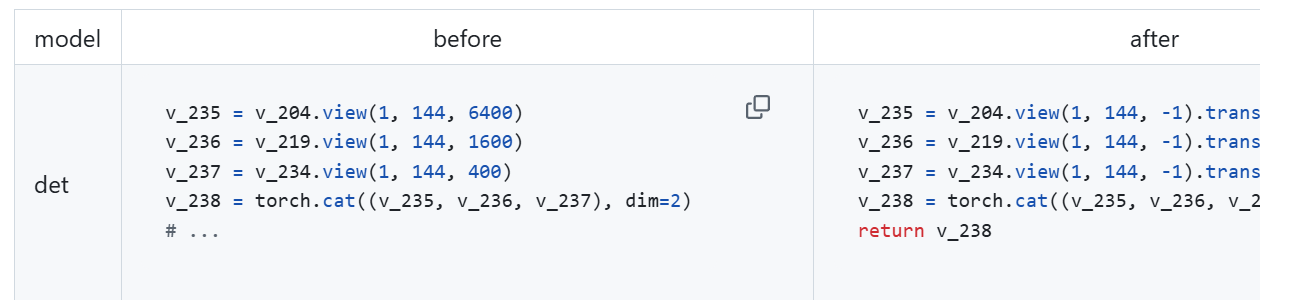

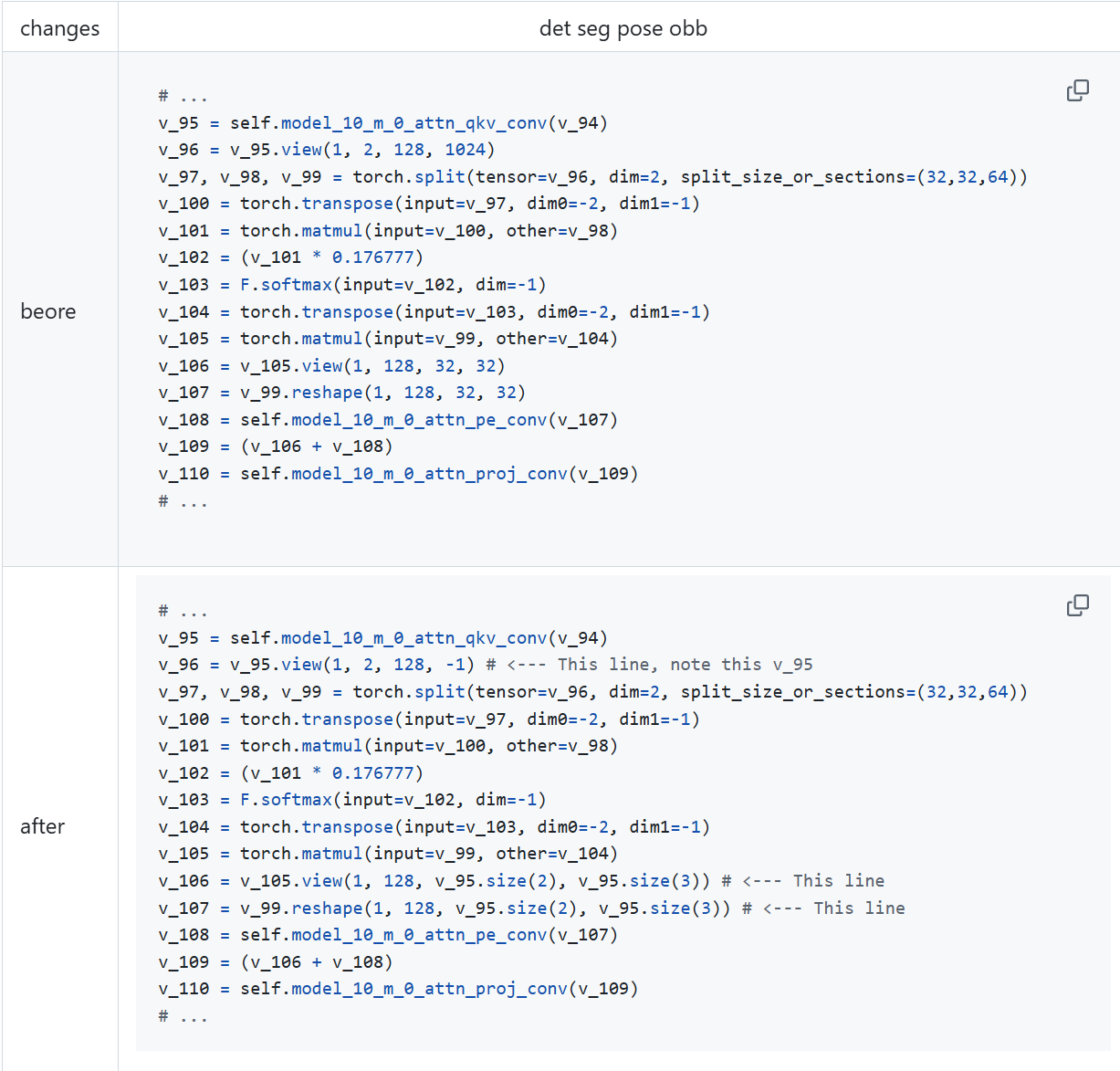

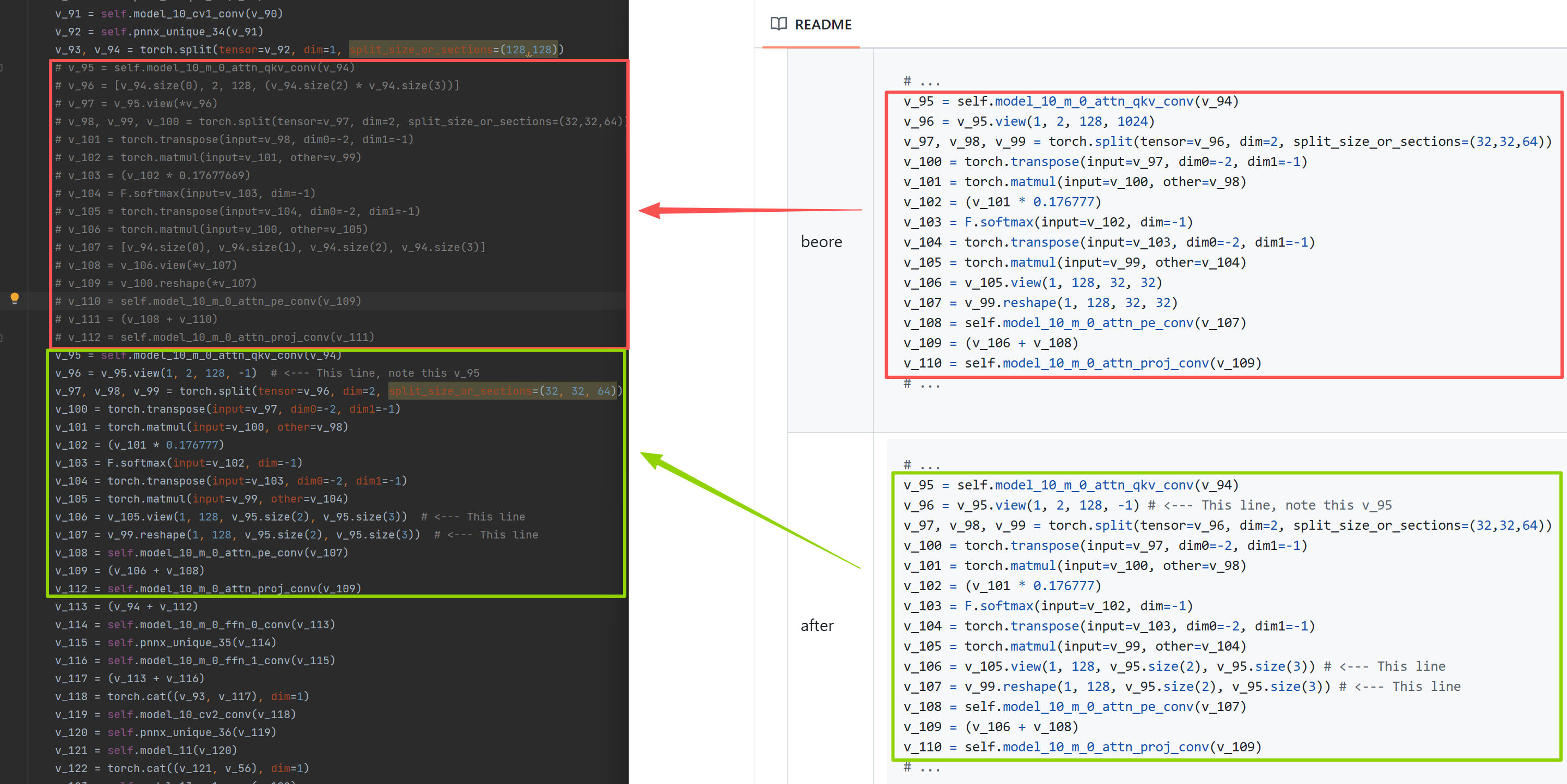

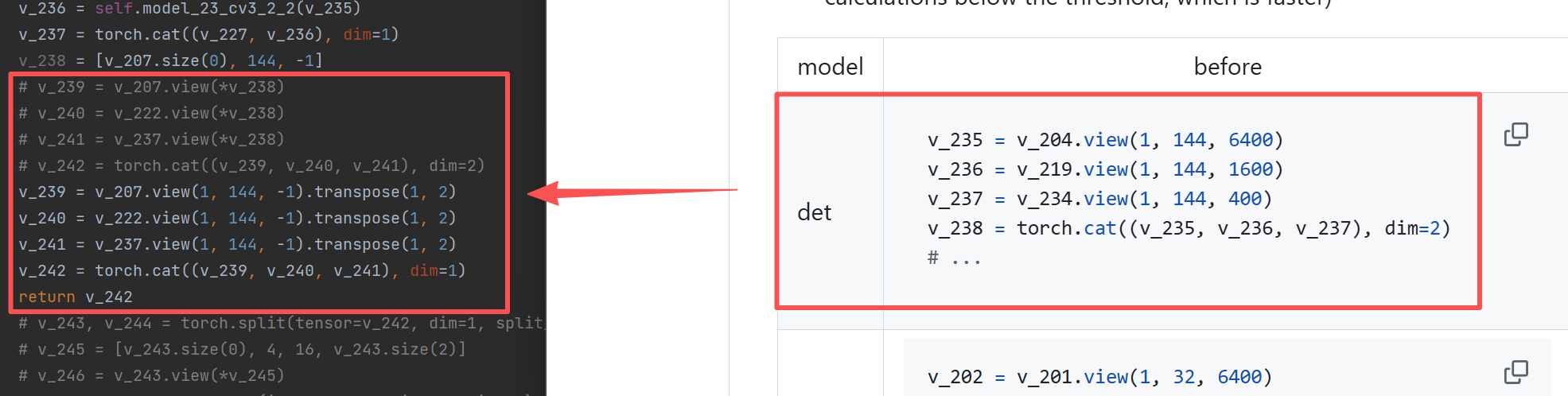

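Every solution online does essentially the same thing here: modify a few places in the generated *_pnnx.py file, as follows.

Almost every YOLO11 port is based on this project:

https://github.com/nihui/ncnn-android-yolo11

However, the file I actually got is not quite identical to the one described there, though it is very close:

First place:

This part is the same for yolo11n and for my own model.

Second place:

Modify this one; the main difference is in the layer names.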

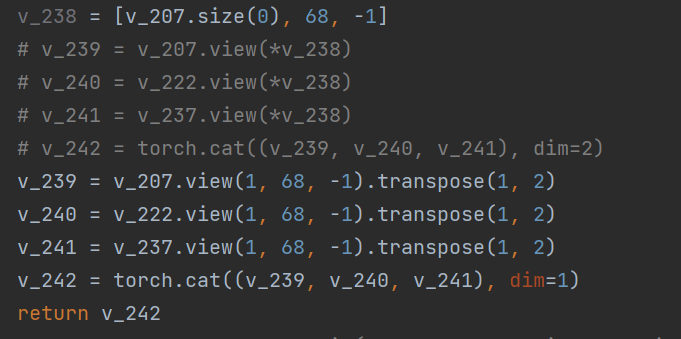

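Note:

yolo11n uses 144 here, but for your own model you need to adjust it:

144 = 4 × 16 + 80 classes

My model has 4 classes, so it is 4 × 16 + 4 = 68, which is what I entered.

In fact the preceding layer already shows 68, so you can simply copy it from there.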

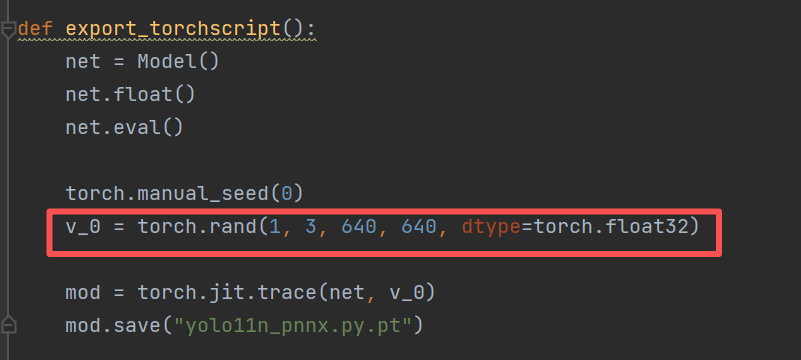
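To make the 144/68 arithmetic concrete: in the YOLO11 head, each output row holds 4 box sides × 16 distribution-focal-loss (DFL) bins, followed by one score per class. This is the same `reg_max_1 = 16` and `num_class = pred.w - reg_max_1 * 4` used in `generate_proposals()` in yolo11.cpp in section 4.1. The C++ sketch below (function names are hypothetical) computes the channel count and decodes one box side the way the DFL step does: a softmax over the 16 bins, then the expected bin index.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Number of DFL bins per box side; matches reg_max_1 = 16 in generate_proposals().
constexpr int kRegMax = 16;

// Width of one output row: 4 sides x 16 bins, then one score per class.
int head_channels(int num_classes) { return 4 * kRegMax + num_classes; }

// Inverse of head_channels(), mirroring num_class = pred.w - reg_max_1 * 4.
int classes_from_channels(int channels) { return channels - 4 * kRegMax; }

// Decode one box side from its 16 raw logits: softmax, then the expected bin index.
// The result is in grid units; multiply by the stride to get pixels.
float dfl_side(const std::vector<float>& logits) {
    // Subtract the max logit before exp() for numerical stability.
    const float max_logit = *std::max_element(logits.begin(), logits.end());

    std::vector<float> probs(logits.size());
    float sum = 0.f;
    for (size_t i = 0; i < logits.size(); ++i) {
        probs[i] = std::exp(logits[i] - max_logit);
        sum += probs[i];
    }

    float expected = 0.f;
    for (size_t i = 0; i < probs.size(); ++i)
        expected += static_cast<float>(i) * probs[i] / sum;
    return expected;
}
```

With 80 classes this gives 144 and with 4 classes 68. Logits sharply peaked at bin 5 decode to about 5, i.e. 5 × stride pixels from the anchor center.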

Third place:

Replace the original null with an arbitrary input.

3.4 重新导出一份.torchscript

用改了的这个,重新导出一下,命令如下:

import yolo11n_pnnx

yolo11n_pnnx.export_torchscript()

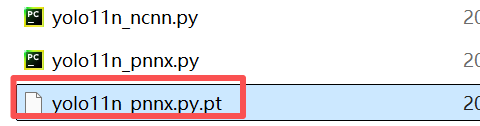

运行成功后,出来一个.pt文件

3.5 Run pnnx again

Run the following command in the conda environment:

```shell
pnnx yolo11n_pnnx.py.pt inputshape=[1,3,640,640] inputshape2=[1,3,320,320]
```

Several new files appear; *.ncnn.bin and *.ncnn.param are the ones we need.

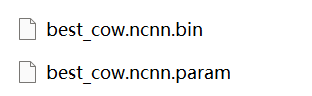
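`inputshape` and `inputshape2` give pnnx two example input sizes so the exported ncnn model can also run at sizes other than 640×640. The number of output rows then depends on the input: one row per grid cell at strides 8, 16 and 32, which is what the two `generate_proposals()` overloads in yolo11.cpp walk through. A small sketch of that count, assuming those three strides (the function name is hypothetical):

```cpp
#include <vector>

// Rows in the YOLO11 output for a (padded) input of w x h pixels:
// one row per grid cell, summed over the detection strides.
int num_anchor_rows(int w, int h, const std::vector<int>& strides = {8, 16, 32}) {
    int rows = 0;
    for (int s : strides)
        rows += (w / s) * (h / s);
    return rows;
}
```

For 640×640 this gives 8400 rows and for 320×320 it gives 2100; a 1920×1080 frame, which `detect()` letterboxes to 640×384, gives 5040.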

3.6 Rename the model files

Just delete `_pnnx.py` from the file names.
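If you rename the files from code rather than by hand, the rule is plain string surgery. A minimal C++ sketch (the helper name is hypothetical) that drops the `_pnnx.py` fragment:

```cpp
#include <string>

// Remove the "_pnnx.py" fragment that pnnx leaves in its output file names.
// Names without the fragment are returned unchanged.
std::string strip_pnnx_suffix(std::string name) {
    const std::string tag = "_pnnx.py";
    const std::string::size_type pos = name.find(tag);
    if (pos != std::string::npos)
        name.erase(pos, tag.size());
    return name;
}
```

For example, `yolo11n_pnnx.py.ncnn.param` becomes `yolo11n.ncnn.param`.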

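3.7 The model is now ready

What an ordeal…

4. Integrating with Qt

4.1 Prepare an Android project and add the following two C++ files; they work as-is.

The only change needed is to replace the class names used for drawing with your own (or, as in my case, not use that function at all).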

yolo11.h

```cpp
#ifndef YOLO11_H
#define YOLO11_H

#include <vector>

#include <opencv2/core/core.hpp>
#include <opencv2/opencv.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>

#include <android/asset_manager.h> // AAssetManager, used by the asset-based load()
#include <net.h>                   // ncnn::Net

struct KeyPoint
{
    cv::Point2f p;
    float prob;
};

struct Object
{
    cv::Rect_<float> rect;
    cv::RotatedRect rrect;
    int label;
    float prob;
    int gindex;
    cv::Mat mask;
    std::vector<KeyPoint> keypoints;
};

class YOLO11_det
{
public:
    YOLO11_det();
    ~YOLO11_det();

    // Load from regular file paths on the device
    int load(const char* parampath, const char* modelpath, bool use_gpu = false);
    // Load directly from APK assets through the NDK asset manager
    int load(AAssetManager* mgr, const char* parampath, const char* modelpath, bool use_gpu = false);

    /**
     * @brief set_det_target_size  Set the network input size (e.g. 640)
     * @param target_size
     */
    void set_det_target_size(int target_size);

    /**
     * @brief set_conf_nms  Set the confidence threshold and the NMS (non-maximum suppression) threshold
     * @param conf
     * @param nms
     */
    void set_conf_nms(float conf, float nms);

    /**
     * @brief detect  Run detection (inference)
     * @param rgb      packed RGB pixels, 3 bytes per pixel
     * @param ww       image width
     * @param hh       image height
     * @param objects  detections, in original-image coordinates
     * @return 0 on success
     */
    int detect(const unsigned char* rgb, int ww, int hh, std::vector<Object>& objects);
    int detect(const cv::Mat& rgb, std::vector<Object>& objects);

    int draw(cv::Mat& rgb, const std::vector<Object>& objects);

protected:
    ncnn::Net yolo11;
    int det_target_size;
    float m_conf;
    float m_nms;
};

#endif // YOLO11_H
```

yolo11.cpp

```cpp
#include "yolo11.h"

#include <algorithm>
#include <cfloat> // FLT_MAX
#include <cmath>  // expf
#include <cstdio> // snprintf

#include <layer.h> // ncnn::create_layer, ncnn::ParamDict

#include <QDebug>
#include <QFile>

YOLO11_det::YOLO11_det()
{
    det_target_size = 640;
    m_conf = 0.5f;
    m_nms = 0.45f;
}

YOLO11_det::~YOLO11_det()
{
    // ncnn::Net releases its resources in its own destructor
}

void YOLO11_det::set_det_target_size(int target_size)
{
    det_target_size = target_size;
}

void YOLO11_det::set_conf_nms(float conf, float nms)
{
    m_conf = conf;
    m_nms = nms;
}

int YOLO11_det::load(const char* parampath, const char* modelpath, bool use_gpu)
{
    yolo11.clear();
    yolo11.opt = ncnn::Option();
#if NCNN_VULKAN
    yolo11.opt.use_vulkan_compute = use_gpu;
#else
    (void)use_gpu;
#endif

    // Check that both files exist before handing them to ncnn
    QFile param_file(parampath);
    if (!param_file.exists()) {
        qDebug() << "Param file not found or inaccessible:" << QString::fromStdString(parampath);
        return -1;
    }
    QFile model_file(modelpath);
    if (!model_file.exists()) {
        qDebug() << "Model file not found or inaccessible:" << QString::fromStdString(modelpath);
        return -1;
    }

    // Load the param file
    int ret = yolo11.load_param(parampath);
    if (ret != 0) {
        qDebug() << "Failed to load param file:" << QString::fromStdString(parampath) << "error code:" << ret;
        return ret;
    }

    // Load the model (weights) file
    ret = yolo11.load_model(modelpath);
    if (ret != 0) {
        qDebug() << "Failed to load model file:" << QString::fromStdString(modelpath) << "error code:" << ret;
        return ret;
    }

    return 0;
}

int YOLO11_det::load(AAssetManager* mgr, const char* parampath, const char* modelpath, bool use_gpu)
{
    yolo11.clear();
    yolo11.opt = ncnn::Option();
#if NCNN_VULKAN
    yolo11.opt.use_vulkan_compute = use_gpu;
#else
    (void)use_gpu;
#endif

    // Report load failures instead of always returning success
    int ret = yolo11.load_param(mgr, parampath);
    if (ret != 0)
        return ret;
    return yolo11.load_model(mgr, modelpath);
}

static inline float intersection_area(const Object& a, const Object& b)
{
    cv::Rect_<float> inter = a.rect & b.rect;
    return inter.area();
}

static void qsort_descent_inplace(std::vector<Object>& objects, int left, int right)
{
    int i = left;
    int j = right;
    float p = objects[(left + right) / 2].prob;

    while (i <= j)
    {
        while (objects[i].prob > p)
            i++;

        while (objects[j].prob < p)
            j--;

        if (i <= j)
        {
            // swap
            std::swap(objects[i], objects[j]);

            i++;
            j--;
        }
    }

    // #pragma omp parallel sections
    {
        // #pragma omp section
        {
            if (left < j) qsort_descent_inplace(objects, left, j);
        }
        // #pragma omp section
        {
            if (i < right) qsort_descent_inplace(objects, i, right);
        }
    }
}

static void qsort_descent_inplace(std::vector<Object>& objects)
{
    if (objects.empty())
        return;

    qsort_descent_inplace(objects, 0, objects.size() - 1);
}

static void nms_sorted_bboxes(const std::vector<Object>& objects, std::vector<int>& picked, float nms_threshold, bool agnostic = false)
{
    picked.clear();

    const int n = objects.size();

    std::vector<float> areas(n);
    for (int i = 0; i < n; i++)
    {
        areas[i] = objects[i].rect.area();
    }

    for (int i = 0; i < n; i++)
    {
        const Object& a = objects[i];

        int keep = 1;
        for (int j = 0; j < (int)picked.size(); j++)
        {
            const Object& b = objects[picked[j]];

            if (!agnostic && a.label != b.label)
                continue;

            // intersection over union
            float inter_area = intersection_area(a, b);
            float union_area = areas[i] + areas[picked[j]] - inter_area;
            // float IoU = inter_area / union_area
            if (inter_area / union_area > nms_threshold)
                keep = 0;
        }

        if (keep)
            picked.push_back(i);
    }
}

static inline float sigmoid(float x)
{
    return 1.0f / (1.0f + expf(-x));
}

static void generate_proposals(const ncnn::Mat& pred, int stride, const ncnn::Mat& in_pad, float prob_threshold, std::vector<Object>& objects)
{
    const int w = in_pad.w;
    const int h = in_pad.h;

    const int num_grid_x = w / stride;
    const int num_grid_y = h / stride;

    const int reg_max_1 = 16;
    const int num_class = pred.w - reg_max_1 * 4; // number of classes, 80 for COCO

    for (int y = 0; y < num_grid_y; y++)
    {
        for (int x = 0; x < num_grid_x; x++)
        {
            const ncnn::Mat pred_grid = pred.row_range(y * num_grid_x + x, 1);

            // find label with max score
            int label = -1;
            float score = -FLT_MAX;
            {
                const ncnn::Mat pred_score = pred_grid.range(reg_max_1 * 4, num_class);

                for (int k = 0; k < num_class; k++)
                {
                    float s = pred_score[k];
                    if (s > score)
                    {
                        label = k;
                        score = s;
                    }
                }

                score = sigmoid(score);
            }

            if (score >= prob_threshold)
            {
                ncnn::Mat pred_bbox = pred_grid.range(0, reg_max_1 * 4).reshape(reg_max_1, 4);

                // softmax over the 16 DFL bins of each box side
                {
                    ncnn::Layer* softmax = ncnn::create_layer("Softmax");

                    ncnn::ParamDict pd;
                    pd.set(0, 1); // axis
                    pd.set(1, 1);
                    softmax->load_param(pd);

                    ncnn::Option opt;
                    opt.num_threads = 1;
                    opt.use_packing_layout = false;

                    softmax->create_pipeline(opt);
                    softmax->forward_inplace(pred_bbox, opt);
                    softmax->destroy_pipeline(opt);

                    delete softmax;
                }

                float pred_ltrb[4];
                for (int k = 0; k < 4; k++)
                {
                    float dis = 0.f;
                    const float* dis_after_sm = pred_bbox.row(k);
                    for (int l = 0; l < reg_max_1; l++)
                    {
                        dis += l * dis_after_sm[l];
                    }

                    pred_ltrb[k] = dis * stride;
                }

                float pb_cx = (x + 0.5f) * stride;
                float pb_cy = (y + 0.5f) * stride;

                float x0 = pb_cx - pred_ltrb[0];
                float y0 = pb_cy - pred_ltrb[1];
                float x1 = pb_cx + pred_ltrb[2];
                float y1 = pb_cy + pred_ltrb[3];

                Object obj;
                obj.rect.x = x0;
                obj.rect.y = y0;
                obj.rect.width = x1 - x0;
                obj.rect.height = y1 - y0;
                obj.label = label;
                obj.prob = score;

                objects.push_back(obj);
            }
        }
    }
}

static void generate_proposals(const ncnn::Mat& pred, const std::vector<int>& strides, const ncnn::Mat& in_pad, float prob_threshold, std::vector<Object>& objects)
{
    const int w = in_pad.w;
    const int h = in_pad.h;

    int pred_row_offset = 0;
    for (size_t i = 0; i < strides.size(); i++)
    {
        const int stride = strides[i];

        const int num_grid_x = w / stride;
        const int num_grid_y = h / stride;
        const int num_grid = num_grid_x * num_grid_y;

        generate_proposals(pred.row_range(pred_row_offset, num_grid), stride, in_pad, prob_threshold, objects);
        pred_row_offset += num_grid;
    }
}

int YOLO11_det::detect(const unsigned char* rgb, int ww, int hh, std::vector<Object>& objects)
{
    const int target_size = det_target_size; // e.g. 640
    const float prob_threshold = m_conf;
    const float nms_threshold = m_nms;

    int img_w = ww;
    int img_h = hh;

    // ultralytics/cfg/models/11/yolo11.yaml
    std::vector<int> strides(3);
    strides[0] = 8;
    strides[1] = 16;
    strides[2] = 32;
    const int max_stride = 32;

    // scale so that the longer side equals target_size, keeping the aspect ratio
    int w = img_w;
    int h = img_h;
    float scale = 1.f;
    if (w > h)
    {
        scale = (float)target_size / w;
        w = target_size;
        h = h * scale;
    }
    else
    {
        scale = (float)target_size / h;
        h = target_size;
        w = w * scale;
    }

    ncnn::Mat in = ncnn::Mat::from_pixels_resize(rgb, ncnn::Mat::PIXEL_RGB, img_w, img_h, w, h);

    // letterbox pad to a multiple of max_stride
    int wpad = (w + max_stride - 1) / max_stride * max_stride - w;
    int hpad = (h + max_stride - 1) / max_stride * max_stride - h;
    ncnn::Mat in_pad;
    ncnn::copy_make_border(in, in_pad, hpad / 2, hpad - hpad / 2, wpad / 2, wpad - wpad / 2, ncnn::BORDER_CONSTANT, 114.f);

    const float norm_vals[3] = {1 / 255.f, 1 / 255.f, 1 / 255.f};
    in_pad.substract_mean_normalize(0, norm_vals);

    ncnn::Extractor ex = yolo11.create_extractor();

    ex.input("in0", in_pad);

    ncnn::Mat out;
    ex.extract("out0", out);

    std::vector<Object> proposals;
    generate_proposals(out, strides, in_pad, prob_threshold, proposals);

    // sort all proposals by score from highest to lowest
    qsort_descent_inplace(proposals);

    // apply nms with nms_threshold
    std::vector<int> picked;
    nms_sorted_bboxes(proposals, picked, nms_threshold);

    int count = picked.size();

    objects.resize(count);
    for (int i = 0; i < count; i++)
    {
        objects[i] = proposals[picked[i]];

        // adjust offset to original unpadded
        float x0 = (objects[i].rect.x - (wpad / 2)) / scale;
        float y0 = (objects[i].rect.y - (hpad / 2)) / scale;
        float x1 = (objects[i].rect.x + objects[i].rect.width - (wpad / 2)) / scale;
        float y1 = (objects[i].rect.y + objects[i].rect.height - (hpad / 2)) / scale;

        // clip
        x0 = std::max(std::min(x0, (float)(img_w - 1)), 0.f);
        y0 = std::max(std::min(y0, (float)(img_h - 1)), 0.f);
        x1 = std::max(std::min(x1, (float)(img_w - 1)), 0.f);
        y1 = std::max(std::min(y1, (float)(img_h - 1)), 0.f);

        objects[i].rect.x = x0;
        objects[i].rect.y = y0;
        objects[i].rect.width = x1 - x0;
        objects[i].rect.height = y1 - y0;
    }

    // sort objects by area
    struct
    {
        bool operator()(const Object& a, const Object& b) const
        {
            return a.rect.area() > b.rect.area();
        }
    } objects_area_greater;
    std::sort(objects.begin(), objects.end(), objects_area_greater);

    return 0;
}

int YOLO11_det::detect(const cv::Mat& rgb, std::vector<Object>& objects)
{
    // Same pipeline as above; expects a continuous 8-bit, 3-channel RGB image
    if (rgb.empty() || rgb.type() != CV_8UC3 || !rgb.isContinuous())
        return -1;

    return detect(rgb.data, rgb.cols, rgb.rows, objects);
}

int YOLO11_det::draw(cv::Mat& rgb, const std::vector<Object>& objects)
{
    // COCO class names of the official yolo11n model:
    // static const char* class_names[] = {
    //     "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",
    //     "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",
    //     "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
    //     "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",
    //     "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",
    //     "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",
    //     "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",
    //     "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",
    //     "hair drier", "toothbrush"
    // };

    // Class names of my own 4-class model; replace these with yours
    static const char* class_names[] = {"level1", "level2", "level3", "level4"};
    static const int num_class_names = sizeof(class_names) / sizeof(class_names[0]);

    static cv::Scalar colors[] = {
        cv::Scalar( 67,  54, 244), cv::Scalar( 30,  99, 233), cv::Scalar( 39, 176, 156), cv::Scalar( 58, 183, 103),
        cv::Scalar( 81, 181,  63), cv::Scalar(150, 243,  33), cv::Scalar(169, 244,   3), cv::Scalar(188, 212,   0),
        cv::Scalar(150, 136,   0), cv::Scalar(175,  80,  76), cv::Scalar(195,  74, 139), cv::Scalar(220,  57, 205),
        cv::Scalar(235,  59, 255), cv::Scalar(193,   7, 255), cv::Scalar(152,   0, 255), cv::Scalar( 87,  34, 255),
        cv::Scalar( 85,  72, 121), cv::Scalar(158, 158, 158), cv::Scalar(125, 139,  96)
    };
    static const int num_colors = sizeof(colors) / sizeof(colors[0]);

    for (size_t i = 0; i < objects.size(); i++)
    {
        const Object& obj = objects[i];

        const cv::Scalar& color = colors[i % num_colors];

        // fprintf(stderr, "%d = %.5f at %.2f %.2f %.2f x %.2f\n", obj.label, obj.prob,
        //         obj.rect.x, obj.rect.y, obj.rect.width, obj.rect.height);

        cv::rectangle(rgb, obj.rect, color);

        // Guard against labels beyond the class_names table
        const char* name = (obj.label >= 0 && obj.label < num_class_names) ? class_names[obj.label] : "?";

        char text[256];
        snprintf(text, sizeof(text), "%s %.1f%%", name, obj.prob * 100);

        int baseLine = 0;
        cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

        int x = obj.rect.x;
        int y = obj.rect.y - label_size.height - baseLine;
        if (y < 0)
            y = 0;
        if (x + label_size.width > rgb.cols)
            x = rgb.cols - label_size.width;

        cv::rectangle(rgb, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),
                      cv::Scalar(255, 255, 255), -1);

        cv::putText(rgb, text, cv::Point(x, y + label_size.height),
                    cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));
    }

    return 0;
}
```

Usage:

Initialization:

```cpp
m_AIDetector = new YOLO11_det;

// Load the model files
const char* param_path = "/storage/emulated/0/NippleGrading/model/best_cow.ncnn.param";
const char* model_path = "/storage/emulated/0/NippleGrading/model/best_cow.ncnn.bin";
bool use_gpu = false; // use the CPU on Android here

m_AIDetector->set_det_target_size(640);
m_AIDetector->set_conf_nms(0.5, 0.45);

int ret = m_AIDetector->load(param_path, model_path, use_gpu);
if (ret != 0) {
    AddLogInfoEng("Failed to load AI model: " + QString::fromStdString(param_path), ERROR_LOG_LEVEL);
    qDebug() << "------------------------Model initialization failed--------------------";
    delete m_AIDetector;
    m_AIDetector = nullptr;
} else {
    qDebug() << "------------------------Model initialized successfully--------------------";
    UpdateAIConnect(true);
    AddLogInfoEng("AI model loaded successfully");
}
```
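`set_conf_nms(0.5, 0.45)` sets the two thresholds used after inference: proposals scoring below 0.5 are dropped in `generate_proposals()`, and `nms_sorted_bboxes()` then discards any box whose IoU with an already-kept, higher-scoring box of the same class exceeds 0.45. The self-contained sketch below reproduces that logic without ncnn or OpenCV; `Box` and `filter_and_nms()` are hypothetical names for illustration, and it uses `std::sort` instead of the hand-written quicksort.

```cpp
#include <algorithm>
#include <vector>

struct Box {
    float x0, y0, x1, y1; // corners in pixels
    float score;
    int label;
};

// Intersection over union of two axis-aligned boxes.
float iou(const Box& a, const Box& b) {
    const float iw = std::max(0.f, std::min(a.x1, b.x1) - std::max(a.x0, b.x0));
    const float ih = std::max(0.f, std::min(a.y1, b.y1) - std::max(a.y0, b.y0));
    const float inter = iw * ih;
    const float uni = (a.x1 - a.x0) * (a.y1 - a.y0) + (b.x1 - b.x0) * (b.y1 - b.y0) - inter;
    return uni > 0.f ? inter / uni : 0.f;
}

// Drop boxes below conf_threshold, sort by score, then greedily keep a box only if
// its IoU with every kept box of the same class is <= nms_threshold.
std::vector<Box> filter_and_nms(std::vector<Box> boxes, float conf_threshold, float nms_threshold) {
    boxes.erase(std::remove_if(boxes.begin(), boxes.end(),
                               [&](const Box& b) { return b.score < conf_threshold; }),
                boxes.end());
    std::sort(boxes.begin(), boxes.end(),
              [](const Box& a, const Box& b) { return a.score > b.score; });

    std::vector<Box> kept;
    for (const Box& b : boxes) {
        bool keep = true;
        for (const Box& k : kept) {
            if (k.label == b.label && iou(b, k) > nms_threshold) {
                keep = false;
                break;
            }
        }
        if (keep)
            kept.push_back(b);
    }
    return kept;
}
```

Raising the second value keeps more overlapping boxes; lowering the first lets weaker detections through.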

Prediction:

```cpp
if (m_AIDetector == nullptr) {
    AddLogInfo("AI is not initialized; cannot run inference", ERROR_LOG_LEVEL);
    AddLogInfoEng("Failed: AI not initialized, skipping inference", ERROR_LOG_LEVEL);
    return;
}
qDebug() << "------------------------Starting detection....1--------------------";
m_time_start = QDateTime::currentDateTime();

// 2. Run object detection
m_AIDetectobjects.clear();
// VaildImageBuffer[0] must hold packed RGB pixels of size m_imgw x m_imgh
int detectResult = m_AIDetector->detect(VaildImageBuffer[0], m_imgw, m_imgh, m_AIDetectobjects);
```

Parsing the results:

```cpp
// 0. If num is 0, nothing was detected; return directly
int num = m_AIDetectobjects.size();

// Start displaying the results
// 1. Display the image
std::vector<int> zspoints(4, 0); // per-class counts for the 4 classes
for (int j = 0; j < num; j++) {
    const Object& obj = m_AIDetectobjects[j];
    float x1 = obj.rect.x;
    float y1 = obj.rect.y;
    float x3 = obj.rect.x + obj.rect.width;
    float y3 = obj.rect.y + obj.rect.height;
    float score = obj.prob;
    int label = obj.label;

    // Corners: p1 top-left, p2 top-right, p3 bottom-right, p4 bottom-left
    float x2 = x3, y2 = y1;
    float x4 = x1, y4 = y3;
    //qDebug() << x1 << " " << y1 << " " << x3 << " " << y3 << " " << "score:" << score << "labelId:" << label;

    QPoint p1(x1, y1), p2(x2, y2), p3(x3, y3), p4(x4, y4);
    ui->widget_l->DrawPolygonWithPoints(p1, p2, p3, p4, colorImage, label);
    if (label >= 0 && label < (int)zspoints.size())
        zspoints[label] += 1;
}
```
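The parsing loop above turns each axis-aligned box into a four-point polygon and counts detections per class in `zspoints`, which has room for exactly 4 labels. A Qt-free sketch of the same two steps, with a bounds check so a model with more classes cannot write past the end of the count vector; the `Det`/`Pt` types and function names are hypothetical.

```cpp
#include <array>
#include <vector>

struct Det { float x, y, w, h, prob; int label; }; // mirrors Object::rect, prob, label
struct Pt { float x, y; };

// Corners in the order the loop uses: top-left, top-right, bottom-right, bottom-left.
std::array<Pt, 4> corners(const Det& d) {
    return {{{d.x, d.y}, {d.x + d.w, d.y}, {d.x + d.w, d.y + d.h}, {d.x, d.y + d.h}}};
}

// Per-class detection counts; labels outside [0, num_classes) are ignored.
std::vector<int> count_per_class(const std::vector<Det>& dets, int num_classes) {
    std::vector<int> counts(num_classes, 0);
    for (const Det& d : dets)
        if (d.label >= 0 && d.label < num_classes)
            ++counts[d.label];
    return counts;
}
```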

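4.2 Preparing the third-party libraries

A number of third-party libraries are also needed.

For beginners, here is how to bring in OpenCV and the other required libraries.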

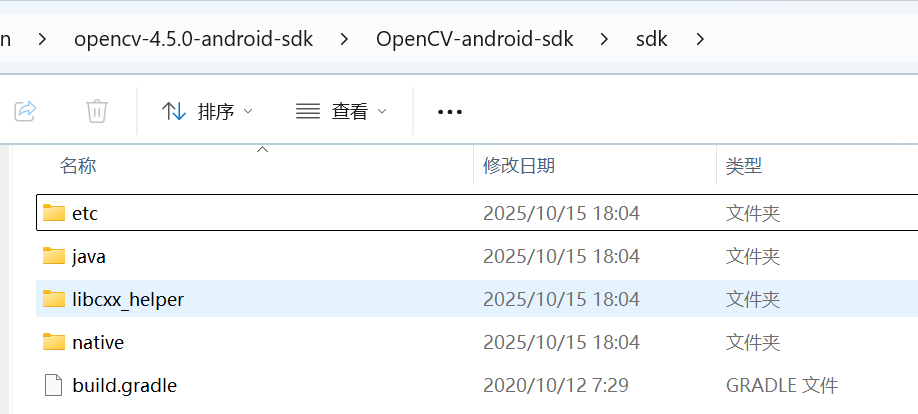

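4.2.1 Download OpenCV; the Android release is all you need

Gather all of its include files, .a files and .so files into one place.

Reference them in the .pro file. Note that .so files are shared libraries: put a copy in /android/libs/ and add them with ANDROID_EXTRA_LIBS so they are packaged into the APK. Otherwise the project compiles but the app fails at runtime because the libraries are missing.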

```qmake
QT += androidextras

#.......

android {
    # LIBS += -fopenmp
    # QMAKE_LFLAGS += -fopenmp
    # QMAKE_CXXFLAGS += -fopenmp

    INCLUDEPATH += $$PWD/ThirdLib/ncnn/arm64-v8a/include/ncnn
    # LIBS += \
    #     $$PWD/ThirdLib/ncnn/arm64-v8a/lib/libncnn.so

    # Libraries referenced from the android/libs directory
    ANDROID_EXTRA_LIBS += \
        $$PWD/android/libs/arm64-v8a/libncnn.so \
        $$PWD/android/libs/arm64-v8a/libomp.so \
        $$PWD/android/libs/arm64-v8a/libopencv_java4.so
    LIBS += \
        $$PWD/android/libs/arm64-v8a/libncnn.so \
        $$PWD/android/libs/arm64-v8a/libomp.so \
        $$PWD/android/libs/arm64-v8a/libopencv_java4.so

    INCLUDEPATH += $$PWD/ThirdLib/opencv/include
    LIBS += \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_calib3d.a \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_core.a \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_dnn.a \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_features2d.a \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_flann.a \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_gapi.a \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_highgui.a \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_imgcodecs.a \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_imgproc.a \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_ml.a \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_objdetect.a \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_photo.a \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_stitching.a \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_video.a \
        $$PWD/ThirdLib/opencv/lib/opencv/libopencv_videoio.a \
        $$PWD/ThirdLib/opencv/lib/3rdparty/libade.a \
        $$PWD/ThirdLib/opencv/lib/3rdparty/libcpufeatures.a \
        $$PWD/ThirdLib/opencv/lib/3rdparty/libIlmImf.a \
        $$PWD/ThirdLib/opencv/lib/3rdparty/libittnotify.a \
        $$PWD/ThirdLib/opencv/lib/3rdparty/liblibjpeg-turbo.a \
        $$PWD/ThirdLib/opencv/lib/3rdparty/liblibopenjp2.a \
        $$PWD/ThirdLib/opencv/lib/3rdparty/liblibpng.a \
        $$PWD/ThirdLib/opencv/lib/3rdparty/liblibprotobuf.a \
        $$PWD/ThirdLib/opencv/lib/3rdparty/liblibtiff.a \
        $$PWD/ThirdLib/opencv/lib/3rdparty/liblibwebp.a \
        $$PWD/ThirdLib/opencv/lib/3rdparty/libquirc.a \
        $$PWD/ThirdLib/opencv/lib/3rdparty/libtbb.a \
        $$PWD/ThirdLib/opencv/lib/3rdparty/libtegra_hal.a
    # $$PWD/ThirdLib/opencv/lib/libopencv_java4.so

    ANDROID_PACKAGE_SOURCE_DIR = $$PWD/android
}
```

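4.2.2 Download ncnn

I downloaded this prebuilt package directly:

ncnn-20250916-android-vulkan-shared

Most guides online link the static library. I tried that and, for reasons I never figured out, it didn't work, while the shared library did. The version also needs to be fairly recent, otherwise some headers are missing.

As with OpenCV, gather its include directory and the .so file into one place.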

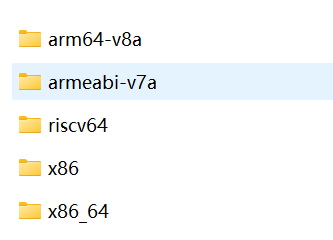

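Also pay attention to the Android compiler (kit) version. There are usually several installed, so check which one you are building with and choose accordingly. The complete .pro file is shown above.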

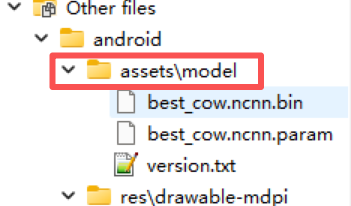
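Since every library path in the .pro file above points at arm64-v8a binaries, the selected Android kit has to target that ABI too. One way to catch a mismatch early is a compile-time check on the compiler's predefined architecture macros. This is a hypothetical helper, not something from the original project:

```cpp
// Name of the ABI this translation unit is compiled for, derived from the
// compiler's predefined architecture macros.
constexpr const char* compiled_abi() {
#if defined(__aarch64__)
    return "arm64-v8a";
#elif defined(__arm__)
    return "armeabi-v7a";
#elif defined(__x86_64__)
    return "x86_64";
#elif defined(__i386__)
    return "x86";
#else
    return "unknown";
#endif
}

// The .pro file links only arm64-v8a ncnn/OpenCV libraries, so stop the
// build early if an Android kit for another ABI is selected.
#if defined(__ANDROID__) && !defined(__aarch64__)
#error "This project links arm64-v8a libraries; select the arm64-v8a Android kit"
#endif
```

Logging `compiled_abi()` at startup is also a quick way to confirm which kit produced the APK.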

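4.2.3 Package the model files into the APK

Put the model files in the `assets/model` folder of the Android package source directory (ANDROID_PACKAGE_SOURCE_DIR = $$PWD/android in the .pro file above) so that they get packaged into the APK.

On the device, the installed files end up at:

`assets:/model/best_cow.ncnn.param`

Notes:

1. These files are read-only and live inside the APK, so loading the model from them fails: ncnn's path-based load() uses plain file I/O, which cannot open Qt's `assets:/` paths. Instead, copy them somewhere else when the app starts and point the paths there.

For example: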

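```cpp
#include <QDir>
#include <QFile>
#include <QFileInfo>

// Copy the read-only param file out of the APK assets to a writable location
QFile dbFile("assets:/model/best_cow.ncnn.param");
if (dbFile.exists())
{
    QString dbPath = "/storage/emulated/0/NippleGrading/model/best_cow.ncnn.param";
    QDir().mkpath(QFileInfo(dbPath).absolutePath()); // QFile::copy() does not create directories
    QFile::remove(dbPath);                            // QFile::copy() does not overwrite an existing file
    dbFile.copy(dbPath);
    QFile::setPermissions(dbPath, QFile::WriteOwner | QFile::ReadOwner);
}
else
{
    AddLogInfoEng("assets:/model/best_cow.ncnn.param does not exist", ERROR_LOG_LEVEL);
}
```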

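2. Tip: you don't want to copy the files on every launch, so I added a version.txt that stores a version number. Before copying, compare the version numbers and copy only when they differ.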
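The version check can be sketched as follows, assuming version.txt holds a single version string. In the app itself the bundled copy would be read from `assets:/` through QFile, since standard streams cannot open that prefix. This sketch uses standard C++ streams on ordinary paths, and all names are hypothetical.

```cpp
#include <fstream>
#include <sstream>
#include <string>

// Read a version file; returns "" if it does not exist yet (e.g. first launch).
// Trailing whitespace and newlines are trimmed.
std::string read_version(const std::string& path) {
    std::ifstream in(path);
    if (!in)
        return "";
    std::stringstream ss;
    ss << in.rdbuf();
    std::string v = ss.str();
    while (!v.empty() && (v.back() == '\n' || v.back() == '\r' || v.back() == ' ' || v.back() == '\t'))
        v.pop_back();
    return v;
}

// Record the installed version after a successful copy.
bool write_version(const std::string& path, const std::string& version) {
    std::ofstream out(path, std::ios::trunc);
    out << version << '\n';
    return static_cast<bool>(out);
}

// Copy the model files only when nothing is installed yet or the versions differ.
bool needs_model_copy(const std::string& bundled_version, const std::string& installed_version) {
    return installed_version.empty() || bundled_version != installed_version;
}
```

Copy the model files when `needs_model_copy()` returns true, then call `write_version()` so the next launch skips the copy.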

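5. That completes the deployment. Feel free to follow along and try it yourself.

Here's a screenshot to mark the occasion.

Writing this took a lot of effort, so likes and bookmarks are appreciated…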