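LazyLLM Study Notes

Reference articles:

https://blog.csdn.net/csdnstudent/article/details/151999341

https://blog.csdn.net/csdnstudent/article/details/151827710

LazyLLM GitHub:

https://github.com/LazyAGI/LazyLLM

Goal:

Give an agent some PDFs and learn the content of the books through question answering, to make studying easier.

Environment setup

Downloading Python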

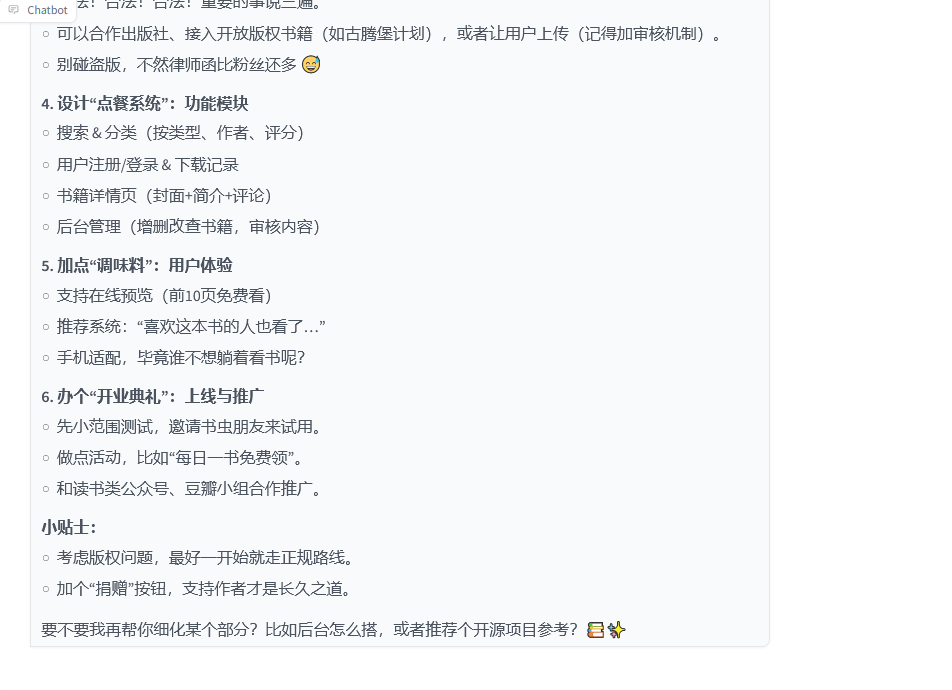

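Following the reference articles, download Python 3.10.

Official download page: https://www.python.org/downloads/windows/

Pick a suitable build, download it, and run the installer. After a successful installation, python can be run directly from cmd.

Virtual environment setup

On Windows 10, run the following in the project folder:

python -m venv lazyllm-venv

cd lazyllm-venv

Scripts\activate

pip3 install lazyllm -i https://pypi.tuna.tsinghua.edu.cn/simple

Running activate enters the virtual environment, which keeps the project's dependencies isolated, loosely comparable to working inside a Docker environment.

Install the dependencies with pip3 inside the virtual environment.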
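To confirm that pip3 will install into the virtual environment rather than into the system Python, the interpreter can be asked directly. A minimal sketch using only the standard library; the helper name in_virtualenv is made up for illustration:

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the running interpreter belongs to a venv.

    Inside a venv, sys.prefix points at the environment directory while
    sys.base_prefix still points at the base Python installation.
    """
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("python version:", sys.version.split()[0])
    print("inside venv:", in_virtualenv())
    print("environment root:", sys.prefix)
```

If "inside venv" prints False, the activate step did not take effect in the current cmd window.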

Obtain and configure the API key as described in the documentation. This test uses Tongyi Qianwen (Qwen).

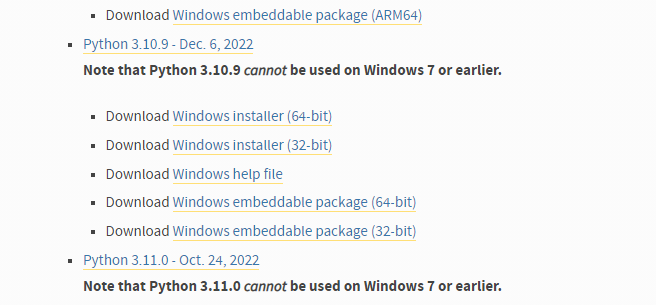

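Getting the Tongyi Qianwen key

Open the Alibaba Cloud Bailian model platform:

https://bailian.console.aliyun.com

After logging in, obtain the key. The corresponding models can be used right away without activating them separately.

How it works

Setting up the online inference model

base_url_http = "https://dashscope.aliyuncs.com/compatible-mode"

llm = OnlineChatModule(source='qwen', model="qwen-plus", stream=False, base_url=base_url_http)

Documentation path: lazyllm-venv\Lib\site-packages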

According to the documentation:

class OnlineChatModule(metaclass=_ChatModuleMeta):
    """Used to manage and create access modules for large model platforms currently available on the market. Currently, it supports openai, sensenova, glm, kimi, qwen, doubao and deepseek (since the platform does not allow recharges for the time being, access is not supported for the time being). For how to obtain the platform's API key, please visit [Getting Started](/#platform)

    Args:
        model (str): Specify the model to access (Note that you need to use Model ID or Endpoint ID when using Doubao. For details on how to obtain it, see [Getting the Inference Access Point](https://www.volcengine.com/docs/82379/1099522). Before using the model, you must first activate the corresponding service on the Doubao platform.), default is ``gpt-3.5-turbo(openai)`` / ``SenseChat-5(sensenova)`` / ``glm-4(glm)`` / ``moonshot-v1-8k(kimi)`` / ``qwen-plus(qwen)`` / ``mistral-7b-instruct-v0.2(doubao)`` .
        source (str): Specify the type of module to create. Options include ``openai`` / ``sensenova`` / ``glm`` / ``kimi`` / ``qwen`` / ``doubao`` / ``deepseek (not yet supported)`` .
        base_url (str): Specify the base link of the platform to be accessed. The default is the official link.
        system_prompt (str): Specify the requested system prompt. The default is the official system prompt.
        stream (bool): Whether to request and output in streaming mode, default is streaming.
        return_trace (bool): Whether to record the results in trace, default is False.

    Examples:
        >>> import lazyllm
        >>> from functools import partial
        >>> m = lazyllm.OnlineChatModule(source="sensenova", stream=True)
        >>> query = "Hello!"
        >>> with lazyllm.ThreadPoolExecutor(1) as executor:
        ...     future = executor.submit(partial(m, llm_chat_history=[]), query)
        ...     while True:
        ...         if value := lazyllm.FileSystemQueue().dequeue():
        ...             print(f"output: {''.join(value)}")
        ...         elif future.done():
        ...             break
        ...     print(f"ret: {future.result()}")
        ...
        output: Hello
        output: ! How can I assist you today?
        ret: Hello! How can I assist you today?
        >>> from lazyllm.components.formatter import encode_query_with_filepaths
        >>> vlm = lazyllm.OnlineChatModule(source="sensenova", model="SenseChat-Vision")
        >>> query = "what is it?"
        >>> inputs = encode_query_with_filepaths(query, ["/path/to/your/image"])
        >>> print(vlm(inputs))
    """
    MODELS = {'openai': OpenAIModule,
              'sensenova': SenseNovaModule,
              'glm': GLMModule,
              'kimi': KimiModule,
              'qwen': QwenModule,
              'doubao': DoubaoModule,
              'deepseek': DeepSeekModule}

    @staticmethod
    def _encapsulate_parameters(base_url: str, model: str, stream: bool, return_trace: bool, **kwargs) -> Dict[str, Any]:
        params = {"stream": stream, "return_trace": return_trace}
        if base_url is not None:
            params['base_url'] = base_url
        if model is not None:
            params['model'] = model
        params.update(kwargs)
        return params

    def __new__(self, model: str = None, source: str = None, base_url: str = None, stream: bool = True,
                return_trace: bool = False, skip_auth: bool = False, type: Optional[str] = None, **kwargs):
        if model in OnlineChatModule.MODELS.keys() and source is None: source, model = model, source
        params = OnlineChatModule._encapsulate_parameters(base_url, model, stream, return_trace,
                                                          skip_auth=skip_auth, type=type, **kwargs)
        if skip_auth:
            source = source or "openai"
            if not base_url:
                raise KeyError("base_url must be set for local serving.")
        if source is None:
            if "api_key" in kwargs and kwargs["api_key"]:
                raise ValueError("No source is given but an api_key is provided.")
            for source in OnlineChatModule.MODELS.keys():
                if lazyllm.config[f'{source}_api_key']: break
            else:
                raise KeyError(f"No api_key is configured for any of the models {OnlineChatModule.MODELS.keys()}.")
        assert source in OnlineChatModule.MODELS.keys(), f"Unsupported source: {source}"
        return OnlineChatModule.MODELS[source](**params)

class QwenModule(OnlineChatModuleBase, FileHandlerBase):
    """
    #TODO: The Qianwen model has been finetuned and deployed successfully,
           but it is not compatible with the OpenAI interface and can only
           be accessed through the Dashscope SDK.
    """
    TRAINABLE_MODEL_LIST = ["qwen-turbo", "qwen-7b-chat", "qwen-72b-chat"]
    MODEL_NAME = "qwen-plus"

    def __init__(self, base_url: str = "https://dashscope.aliyuncs.com/", model: str = None,
                 api_key: str = None, stream: bool = True, return_trace: bool = False, **kwargs):
        OnlineChatModuleBase.__init__(self, model_series="QWEN", api_key=api_key or lazyllm.config['qwen_api_key'],
                                      model_name=model or lazyllm.config['qwen_model_name'] or QwenModule.MODEL_NAME,
                                      base_url=base_url, stream=stream, return_trace=return_trace, **kwargs)
        FileHandlerBase.__init__(self)
        self._deploy_paramters = dict()
        if stream:
            self._model_optional_params['incremental_output'] = True
        self.default_train_data = {
            "model": "qwen-turbo",
            "training_file_ids": None,
            "validation_file_ids": None,
            "training_type": "efficient_sft",  # sft or efficient_sft
            "hyper_parameters": {
                "n_epochs": 1,
                "batch_size": 16,
                "learning_rate": "1.6e-5",
                "split": 0.9,
                "warmup_ratio": 0.0,
                "eval_steps": 1,
                "lr_scheduler_type": "linear",
                "max_length": 2048,
                "lora_rank": 8,
                "lora_alpha": 32,
                "lora_dropout": 0.1,
            }
        }
        self.fine_tuning_job_id = None

    def _set_chat_url(self):
        self._url = urljoin(self._base_url, 'compatible-mode/v1/chat/completions')

Creating an OnlineChatModule object with source='qwen' therefore returns a QwenModule.
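The dispatch in the quoted source, where constructing OnlineChatModule actually hands back a platform-specific class, relies on overriding __new__. A stripped-down sketch of that pattern follows. ChatFactory, QwenStub and OpenAIStub are hypothetical stand-ins, not LazyLLM classes, and the API-key lookup is left out.

```python
class QwenStub:
    """Hypothetical stand-in for a platform-specific module."""

    def __init__(self, model=None, stream=True, **kwargs):
        self.model = model or "qwen-plus"
        self.stream = stream


class OpenAIStub:
    """Second hypothetical backend, to show the lookup choosing a class."""

    def __init__(self, model=None, stream=True, **kwargs):
        self.model = model or "gpt-3.5-turbo"
        self.stream = stream


class ChatFactory:
    """Calling ChatFactory(...) returns an instance of the selected backend."""

    MODELS = {"qwen": QwenStub, "openai": OpenAIStub}

    def __new__(cls, model=None, source=None, stream=True, **kwargs):
        # Same convenience as in the quoted source: a lone platform name
        # passed as `model` is treated as the source.
        if model in cls.MODELS and source is None:
            source, model = model, source
        if source not in cls.MODELS:
            raise ValueError(f"Unsupported source: {source}")
        # The returned object is not a ChatFactory instance, so
        # ChatFactory.__init__ is never invoked on it.
        return cls.MODELS[source](model=model, stream=stream, **kwargs)


llm = ChatFactory(source="qwen", model="qwen-plus", stream=False)
print(type(llm).__name__, llm.model, llm.stream)
```

The caller writes one constructor call and receives the backend object directly, which is why type(llm) is the backend class and not the factory.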

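QwenModule accepts an api_key parameter, so besides setting an environment variable as the reference articles do, the key can also be written directly in the code.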
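Hard-coding the key is convenient for a quick test but easy to leak. A small sketch of reading it from the environment and failing early when it is missing. It assumes the key lives in LAZYLLM_QWEN_API_KEY, which is the variable name LazyLLM's documentation lists for Qwen as far as I know; load_qwen_api_key is a hypothetical helper.

```python
import os

# Assumption: LazyLLM reads the Qwen key from this variable; check the
# documentation of the installed version before relying on it.
ENV_VAR = "LAZYLLM_QWEN_API_KEY"


def load_qwen_api_key(env=os.environ) -> str:
    """Return the key from the environment, failing early if it is missing."""
    key = env.get(ENV_VAR, "").strip()
    if not key:
        raise RuntimeError(f"{ENV_VAR} is not set; set it before starting Python")
    return key


if __name__ == "__main__":
    try:
        api_key = load_qwen_api_key()
        # The key could then be passed explicitly, e.g.
        # OnlineChatModule(source='qwen', api_key=api_key)
        print("key loaded, length:", len(api_key))
    except RuntimeError as exc:
        print(exc)
```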

base_url is modified when the code runs. The base_url used in the test code works, so I did not look into it further.
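The base_url behavior can be checked without calling the service. The quoted _set_chat_url builds the final address with urljoin, and urljoin replaces the last path segment of a base that has no trailing slash. A quick check with the standard library (the helper name chat_url is made up here):

```python
from urllib.parse import urljoin

SUFFIX = "compatible-mode/v1/chat/completions"  # taken from _set_chat_url


def chat_url(base_url: str) -> str:
    """Mirror the urljoin call in QwenModule._set_chat_url."""
    return urljoin(base_url, SUFFIX)


for base in (
    "https://dashscope.aliyuncs.com/",                  # library default
    "https://dashscope.aliyuncs.com/compatible-mode",   # value used in these notes
    "https://dashscope.aliyuncs.com/compatible-mode/",  # trailing slash
):
    print(f"{base} -> {chat_url(base)}")
```

The first two resolve to the same address, which is why the test code works. With a trailing slash, the compatible-mode segment would appear twice.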

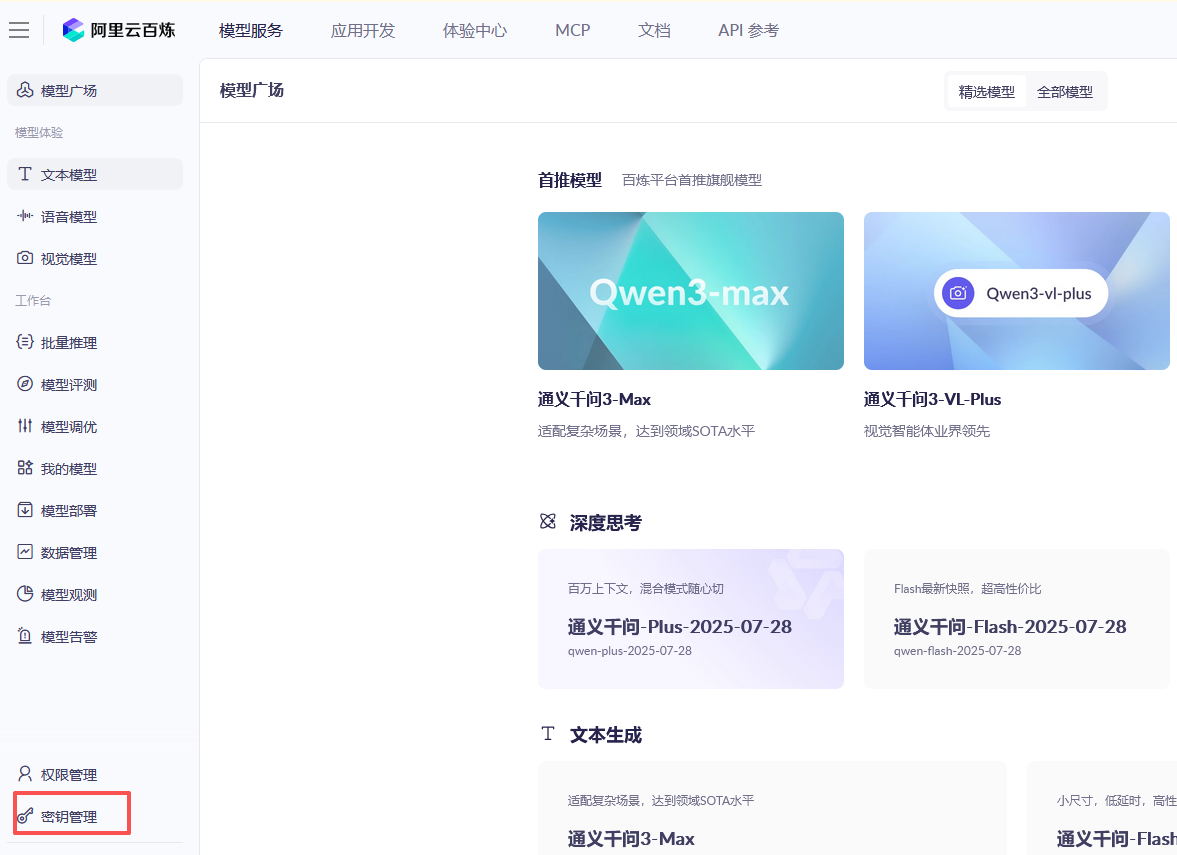

Running a conversation

while True:
    query = input("query(enter 'quit' to exit): ")
    if query == "quit":
        break
    res = llm.forward(query)
    print(f"answer: {res}")

Test code

from lazyllm import (
    OnlineChatModule,
    OnlineEmbeddingModule,
    Retriever,
    Document
)

base_url_http = "https://dashscope.aliyuncs.com/compatible-mode"

llm = OnlineChatModule(source='qwen', model="qwen-plus", stream=False)

while True:
    query = input("query(enter 'quit' to exit): ")
    if query == "quit":
        break
    res = llm.forward(query)
    print(f"answer: {res}")

Execution result

Setting up the documents

Documents are used by converting strings into vectors and obtaining results through vector operations.
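What "vector operations" means here can be illustrated with cosine similarity, a common way to compare embeddings. The three-dimensional vectors below are invented for illustration; real embedding models return vectors with hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Toy "embeddings" (invented numbers, not model output).
query_vec = [0.9, 0.1, 0.0]
doc_vecs = {
    "doc_a": [0.8, 0.2, 0.1],
    "doc_b": [0.0, 0.1, 0.9],
}

# Rank documents from most to least similar to the query.
ranked = sorted(doc_vecs, key=lambda name: cosine_similarity(query_vec, doc_vecs[name]), reverse=True)
print(ranked)
```

doc_a points in nearly the same direction as the query vector, so it is ranked first.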

doc_path="F:\\file\\my\\workplace\\agentLazyLLM\\lazyllm-venv\\docs"

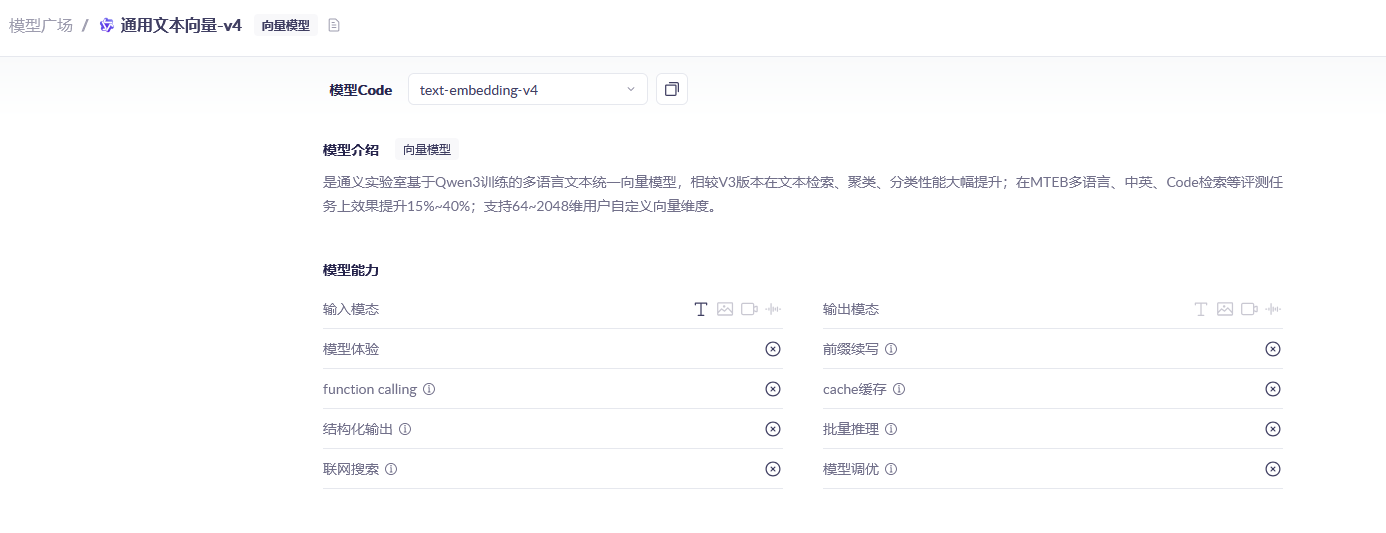

embed_model = OnlineEmbeddingModule(source='qwen', embed_model_name='text-embedding-v4', type="embed")

doc = Document(dataset_path=doc_path, embed=embed_model)

retriever = Retriever(doc, group_name='CoarseChunk', similarity="bm25_chinese", topk=3)

OnlineEmbeddingModule is an online model that converts strings into vectors.

It can be used for both document search and reranking, selected by the type parameter.

According to the documentation:

class __EmbedModuleMeta(type):

    def __instancecheck__(self, __instance: Any) -> bool:
        if isinstance(__instance, OnlineEmbeddingModuleBase):
            return True
        return super().__instancecheck__(__instance)

class OnlineEmbeddingModule(metaclass=__EmbedModuleMeta):
    """Used to manage and create online Embedding service modules currently on the market, currently supporting openai, sensenova, glm, qwen, doubao.

    Args:
        source (str): Specify the type of module to create. Options are ``openai`` / ``sensenova`` / ``glm`` / ``qwen`` / ``doubao``.
        embed_url (str): Specify the base link of the platform to be accessed. The default is the official link.
        embed_mode_name (str): Specify the model to access (Note that you need to use Model ID or Endpoint ID when using Doubao. For details on how to obtain it, see [Getting the Inference Access Point](https://www.volcengine.com/docs/82379/1099522). Before using the model, you must first activate the corresponding service on the Doubao platform.), default is ``text-embedding-ada-002(openai)`` / ``nova-embedding-stable(sensenova)`` / ``embedding-2(glm)`` / ``text-embedding-v1(qwen)`` / ``doubao-embedding-text-240715(doubao)``

    Examples:
        >>> import lazyllm
        >>> m = lazyllm.OnlineEmbeddingModule(source="sensenova")
        >>> emb = m("hello world")
        >>> print(f"emb: {emb}")
        emb: [0.0010528564, 0.0063285828, 0.0049476624, -0.012008667, ..., -0.009124756, 0.0032043457, -0.051696777]
    """
    EMBED_MODELS = {'openai': OpenAIEmbedding,
                    'sensenova': SenseNovaEmbedding,
                    'glm': GLMEmbedding,
                    'qwen': QwenEmbedding,
                    'doubao': DoubaoEmbedding}
    RERANK_MODELS = {'qwen': QwenReranking,
                     'glm': GLMReranking}

    @staticmethod
    def _encapsulate_parameters(embed_url: str,
                                embed_model_name: str,
                                **kwargs) -> Dict[str, Any]:
        params = {}
        if embed_url is not None:
            params["embed_url"] = embed_url
        if embed_model_name is not None:
            params["embed_model_name"] = embed_model_name
        params.update(kwargs)
        return params

    @staticmethod
    def _check_available_source(available_models):
        for source in available_models.keys():
            if lazyllm.config[f'{source}_api_key']: break
        else:
            raise KeyError(f"No api_key is configured for any of the models {available_models.keys()}.")
        assert source in available_models.keys(), f"Unsupported source: {source}"
        return source

    def __new__(self,
                source: str = None,
                embed_url: str = None,
                embed_model_name: str = None,
                **kwargs):
        params = OnlineEmbeddingModule._encapsulate_parameters(embed_url, embed_model_name, **kwargs)
        if source is None and "api_key" in kwargs and kwargs["api_key"]:
            raise ValueError("No source is given but an api_key is provided.")
        if "type" in params:
            params.pop("type")
        if kwargs.get("type", "embed") == "embed":
            if source is None:
                source = OnlineEmbeddingModule._check_available_source(OnlineEmbeddingModule.EMBED_MODELS)
            if source == "doubao":
                if embed_model_name.startswith("doubao-embedding-vision"):
                    return DoubaoMultimodalEmbedding(**params)
                else:
                    return DoubaoEmbedding(**params)
            return OnlineEmbeddingModule.EMBED_MODELS[source](**params)
        elif kwargs.get("type") == "rerank":
            if source is None:
                source = OnlineEmbeddingModule._check_available_source(OnlineEmbeddingModule.RERANK_MODELS)
            return OnlineEmbeddingModule.RERANK_MODELS[source](**params)
        else:
            raise ValueError("Unknown type of online embedding module.")

With source='qwen' and type="embed", OnlineEmbeddingModule returns a QwenEmbedding object.

class QwenEmbedding(OnlineEmbeddingModuleBase):

    def __init__(self,
                 embed_url: str = ("https://dashscope.aliyuncs.com/api/v1/services/"
                                   "embeddings/text-embedding/text-embedding"),
                 embed_model_name: str = "text-embedding-v1",
                 api_key: str = None):
        super().__init__("QWEN", embed_url, api_key or lazyllm.config['qwen_api_key'], embed_model_name)

With source='qwen' and type="rerank", it returns the reranking object QwenReranking.

class QwenReranking(OnlineEmbeddingModuleBase):

    def __init__(self,
                 embed_url: str = ("https://dashscope.aliyuncs.com/api/v1/services/"
                                   "rerank/text-rerank/text-rerank"),
                 embed_model_name: str = "gte-rerank",
                 api_key: str = None, **kwargs):
        super().__init__("QWEN", embed_url, api_key or lazyllm.config['qwen_api_key'], embed_model_name)

If embed_model_name is not set, an error is raised; its value depends on the platform.

Document registers the local documents and associates them with the online model in order to obtain vectors.

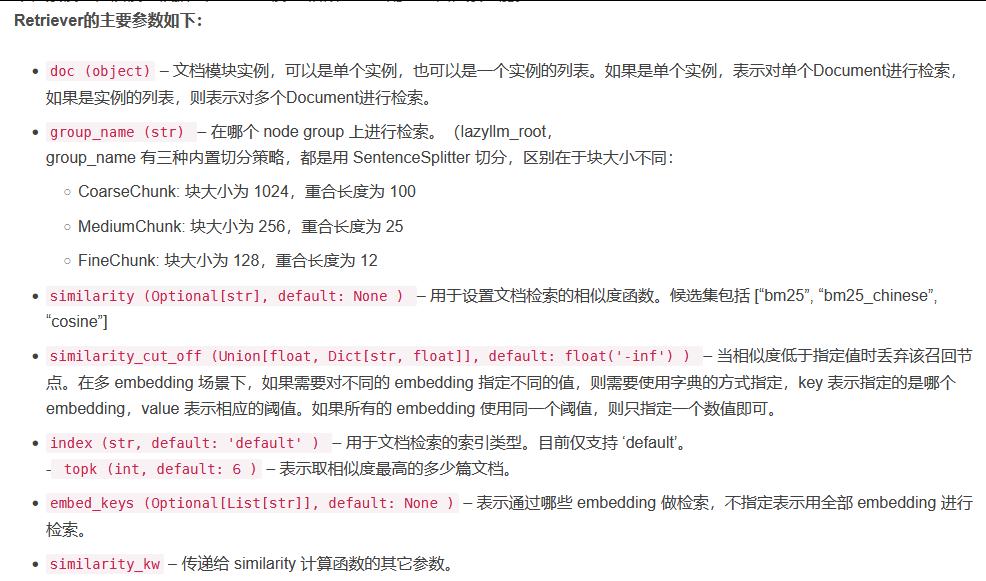

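Retriever searches the processed documents for the chunks most relevant to a query. Note that the similarity configured here, bm25_chinese, is a BM25 text-matching score and not a distance between embedding vectors.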

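Test code

用户信息.txt (user information)

管理员 名字 王幺幺 性别 男

采购员 名字 王吾 性别 女

In English: the administrator (管理员) is named 王幺幺 and is male; the purchaser (采购员) is named 王吾 and is female.

from lazyllm import (
    OnlineChatModule,
    OnlineEmbeddingModule,
    Retriever,
    Document
)

base_url_http = "https://dashscope.aliyuncs.com/compatible-mode"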

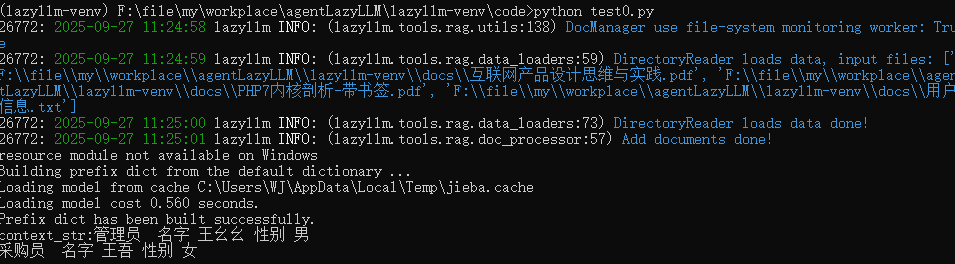

doc_path = "F:\\file\\my\\workplace\\agentLazyLLM\\lazyllm-venv\\docs"

embed_model = OnlineEmbeddingModule(source='qwen', embed_model_name='text-embedding-v4', type="embed")

doc = Document(dataset_path=doc_path, embed=embed_model)

retriever = Retriever(doc, group_name='CoarseChunk', similarity="bm25_chinese", topk=3)

# Query: "administrator gender"
retriever_result = retriever(query="管理员性别")

context_str = "|".join([node.get_content() for node in retriever_result])

print("context_str:" + context_str)

Execution result

The matching knowledge-base content is returned; it can then be passed to the online chat model to produce the answer.
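The retriever in the test code is configured with similarity="bm25_chinese". As a rough illustration of how a BM25 score ranks the two lines of 用户信息.txt for the query 管理员性别, here is a simplified sketch. It tokenizes by single character and uses the common defaults k1 = 1.5 and b = 0.75; both choices are assumptions for this example and not LazyLLM's actual implementation.

```python
import math
from collections import Counter


def tokenize(text):
    """Character-level tokens, whitespace removed (a simplification)."""
    return [ch for ch in text if not ch.isspace()]


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each document against the query with the Okapi BM25 formula."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: in how many documents each token occurs.
    df = Counter(tok for toks in tokenized for tok in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


docs = ["管理员 名字 王幺幺 性别 男", "采购员 名字 王吾 性别 女"]
scores = bm25_scores("管理员性别", docs)
best = docs[scores.index(max(scores))]
print(best)
```

The administrator line scores higher because it is the only one containing 管 and 理; the characters shared by both lines contribute little, since their inverse document frequency is low.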

Setting up tools

Registering a tool links the document search to the conversation.

Use fc_register("tool") to register a tool; the docstring is mandatory.
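One plausible reason the docstring is mandatory is that the model only learns what a tool does from its description. The sketch below is a hypothetical registry (register_tool and TOOL_REGISTRY are invented names, not LazyLLM's fc_register) that rejects functions without a docstring and records the description together with the parameter names.

```python
import inspect

TOOL_REGISTRY = {}  # hypothetical: tool name -> metadata


def register_tool(func):
    """Register func as a tool; refuse functions that lack a docstring."""
    doc = inspect.getdoc(func)
    if not doc:
        raise ValueError(f"tool {func.__name__!r} needs a docstring")
    TOOL_REGISTRY[func.__name__] = {
        "callable": func,
        "description": doc.splitlines()[0],
        "parameters": list(inspect.signature(func).parameters),
    }
    return func


@register_tool
def search_knowledge_base(query: str) -> str:
    """Search the knowledge base and return the relevant content.

    Args:
        query (str): search query string
    """
    return f"results for {query}"  # placeholder body for the sketch


print(TOOL_REGISTRY["search_knowledge_base"]["description"])
print(TOOL_REGISTRY["search_knowledge_base"]["parameters"])
```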

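@fc_register("tool")
def search_knowledge_base(query: str):
    """
    Search the knowledge base and return the relevant document content.

    Args:
        query (str): the search query string
    """
    doc_node_list = retriever(query=query)
    context_str = "".join([node.get_content() for node in doc_node_list])
    return context_str

Using the tool

agent = ReactAgent(
    llm,
    tools=['search_knowledge_base'],
    prompt=prompt,
    stream=False
)

w = WebModule(agent, stream=False)

w.start().wait()

llm is the online chat model; the tool calls the online embedding model and the retriever.

WebModule starts a web service and takes the ReactAgent object as its argument.

According to the ReactAgent source: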

class ReactAgent(ModuleBase):
    """ReactAgent follows the process of `Thought->Action->Observation->Thought...->Finish` step by step through LLM and tool calls to display the steps to solve user questions and the final answer to the user.

    Args:
        llm (ModuleBase): The LLM to be used can be either TrainableModule or OnlineChatModule.
        tools (List[str]): A list of tool names for LLM to use.
        max_retries (int): The maximum number of tool call iterations. The default value is 5.
        return_trace (bool): If True, return intermediate steps and tool calls.
        stream (bool): Whether to stream the planning and solving process.

    Examples:
        >>> import lazyllm
        >>> from lazyllm.tools import fc_register, ReactAgent
        >>> @fc_register("tool")
        >>> def multiply_tool(a: int, b: int) -> int:
        ...     '''
        ...     Multiply two integers and return the result integer
        ...
        ...     Args:
        ...         a (int): multiplier
        ...         b (int): multiplier
        ...     '''
        ...     return a * b
        ...
        >>> @fc_register("tool")
        >>> def add_tool(a: int, b: int):
        ...     '''
        ...     Add two integers and returns the result integer
        ...
        ...     Args:
        ...         a (int): addend
        ...         b (int): addend
        ...     '''
        ...     return a + b
        ...
        >>> tools = ["multiply_tool", "add_tool"]
        >>> llm = lazyllm.TrainableModule("internlm2-chat-20b").start()  # or llm = lazyllm.OnlineChatModule(source="sensenova")
        >>> agent = ReactAgent(llm, tools)
        >>> query = "What is 20+(2*4)? Calculate step by step."
        >>> res = agent(query)
        >>> print(res)
        'Answer: The result of 20+(2*4) is 28.'
    """
    def __init__(self, llm, tools: List[str], max_retries: int = 5, return_trace: bool = False,
                 prompt: str = None, stream: bool = False):
        super().__init__(return_trace=return_trace)
        self._max_retries = max_retries
        assert llm and tools, "llm and tools cannot be empty."
        if not prompt:
            prompt = INSTRUCTION.replace("{TOKENIZED_PROMPT}", WITHOUT_TOKEN_PROMPT if isinstance(llm, OnlineChatModule)
                                         else WITH_TOKEN_PROMPT)
            prompt = prompt.replace("{tool_names}", json.dumps([t.__name__ if callable(t) else t for t in tools],
                                                               ensure_ascii=False))
        self._agent = loop(FunctionCall(llm, tools, _prompt=prompt, return_trace=return_trace, stream=stream),
                           stop_condition=lambda x: isinstance(x, str), count=self._max_retries)

    def forward(self, query: str, llm_chat_history: List[Dict[str, Any]] = None):
        ret = self._agent(query, llm_chat_history) if llm_chat_history is not None else self._agent(query)
        return ret if isinstance(ret, str) else (_ for _ in ()).throw(ValueError(f"After retrying \
            {self._max_retries} times, the function call agent still failes to call successfully."))

forward can be called to run a conversation.
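The quoted constructor wraps FunctionCall in a loop that stops as soon as the result is a string and gives up after max_retries rounds. A toy version of that control flow, with a scripted fake_step standing in for the LLM and tool calls (all names below are hypothetical):

```python
from typing import Any, Callable


def run_until_answer(step: Callable[[Any], Any], query: str, max_retries: int = 5) -> str:
    """Feed each result back into step until it returns a str.

    A non-string result means "a tool was called, keep going"; a string is
    treated as the final answer, as in the stop_condition of the quoted code.
    """
    state: Any = query
    for _ in range(max_retries):
        state = step(state)
        if isinstance(state, str):
            return state
    raise ValueError(f"no final answer after {max_retries} rounds")


def make_fake_step(tool_rounds: int) -> Callable[[Any], Any]:
    """Build a scripted step: tool_rounds tool calls, then a final answer."""
    remaining = {"n": tool_rounds}

    def fake_step(state: Any) -> Any:
        if remaining["n"] > 0:
            remaining["n"] -= 1
            return {"tool": "search_knowledge_base", "input": state}
        return "final answer"

    return fake_step


print(run_until_answer(make_fake_step(2), "管理员性别"))
```

With two scripted tool rounds, the third call returns a string and the loop ends; if the step never produces a string within max_retries rounds, a ValueError is raised, mirroring the error path in forward.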

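Test code

# Imports needed by the snippet that follows
from lazyllm import (
    fc_register, Document, Retriever, OnlineEmbeddingModule,
    OnlineChatModule, ReactAgent, ChatPrompter
)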

base_url_http = "https://dashscope.aliyuncs.com/compatible-mode"

doc_path="F:\\file\\my\\workplace\\agentLazyLLM\\lazyllm-venv\\docs"

embed_model = OnlineEmbeddingModule(source='qwen', embed_model_name='text-embedding-v4',type="embed")

doc = Document(dataset_path=doc_path, embed=embed_model)

retriever = Retriever(doc, group_name='CoarseChunk', similarity="bm25_chinese", topk=3)

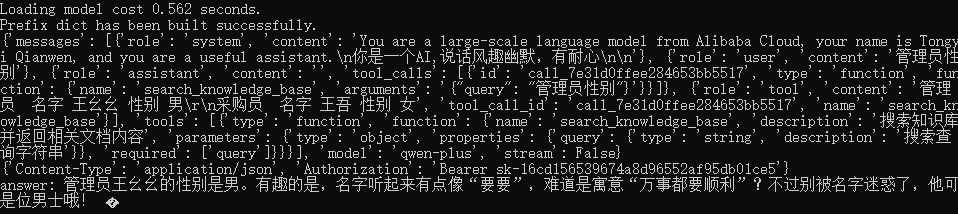

llm = OnlineChatModule(source='qwen', model="qwen-plus", stream=False, base_url=base_url_http)

# Prompt design ("You are an AI that is witty, humorous, and patient")

prompt = '你是一个AI,说话风趣幽默,有耐心'

llm.prompt(ChatPrompter(instruction=prompt, extra_keys=['context_str']))

@fc_register("tool")
def search_knowledge_base(query: str):
    """
    Search the knowledge base and return the relevant document content.

    Args:
        query (str): the search query string
    """
    print(query)
    doc_node_list = retriever(query=query)
    context_str = "".join([node.get_content() for node in doc_node_list])
    return context_str

agent = ReactAgent(
    llm,
    tools=['search_knowledge_base'],
    prompt=prompt,
    stream=False
)

# Query: "administrator gender"
res = agent.forward("管理员性别")

print(f"answer: {res}")

Execution result

Full code

import lazyllm

from lazyllm import (
    fc_register, Document, Retriever, OnlineEmbeddingModule, OnlineChatModule, WebModule,
    ReactAgent, Reranker, SentenceSplitter, pipeline
)

base_url_http = "https://dashscope.aliyuncs.com/compatible-mode"

doc_path = "F:\\file\\my\\workplace\\agentLazyLLM\\lazyllm-venv\\docs"

embed_model = OnlineEmbeddingModule(source='qwen', embed_model_name='text-embedding-v4', type="embed")

doc = Document(dataset_path=doc_path, embed=embed_model)

retriever = Retriever(doc, group_name='CoarseChunk', similarity="bm25_chinese", topk=3)

@fc_register("tool")
def search_knowledge_base(query: str):
    """
    Search the knowledge base and return the relevant document content.

    Args:
        query (str): the search query string
    """
    doc_node_list = retriever(query=query)
    context_str = "".join([node.get_content() for node in doc_node_list])
    return context_str

llm = OnlineChatModule(source='qwen', model="qwen-plus", stream=False, base_url=base_url_http)

# Prompt design ("You are an AI that is witty, humorous, and patient")

prompt = '你是一个AI,说话风趣幽默,有耐心'

llm.prompt(lazyllm.ChatPrompter(instruction=prompt, extra_keys=['context_str']))

agent = ReactAgent(
    llm,
    tools=['search_knowledge_base'],
    prompt=prompt,
    stream=False
)

w = WebModule(agent, stream=False)

w.start().wait()

Execution result