Xiaohongshu Open Platform Note Detail API in Practice: A Complete Approach to Content Parsing and Data Mining

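Xiaohongshu (Little Red Book) is a lifestyle-sharing platform built around user-generated content (UGC), and its notes are rich in consumer experiences, product reviews, and lifestyle guides. Unlike data from traditional e-commerce platforms, the note data returned by Xiaohongshu's APIs is strongly social and content-oriented. This article walks through a technical implementation of the note detail API on the Xiaohongshu Open Platform. It focuses on API authentication, rich-text parsing, multimedia resource handling, and integration of social data, and provides a complete solution that can be plugged directly into a content analysis system.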

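I. API Basics and Use Cases

Core API information

Key technical parameters of the Xiaohongshu Open Platform note detail API:

- Base domain: https://open.xiaohongshu.com
- Authentication: OAuth 2.0 (access token)
- Request format: HTTP GET with URL query parameters
- Response format: JSON
- Encoding: UTF-8
- Rate limits: 5 QPS per application, at most 5,000 calls per day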
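The two limits interact: running flat out at 5 QPS, the 5,000-call daily quota is gone after 5000 / 5 = 1000 seconds, about 17 minutes. A quick budget calculation using the figures quoted above (check them against your own app's console before relying on them):

```python
def quota_budget(qps: float, daily_limit: int) -> dict:
    """Relate a per-second limit to a daily call quota."""
    min_interval = 1.0 / qps                 # seconds between two calls at full speed
    seconds_to_exhaust = daily_limit / qps   # at full speed, the quota lasts this long
    sustainable_qps = daily_limit / 86400    # rate that spreads the quota over 24 hours
    return {
        "min_interval_s": min_interval,
        "minutes_to_exhaust": seconds_to_exhaust / 60,
        "sustainable_qps": sustainable_qps,
    }


# Limits as stated in this article (assumed, not verified against the platform)
budget = quota_budget(qps=5, daily_limit=5000)
print(budget)
```

Spreading the quota evenly over a day works out to roughly 0.058 calls per second (one call every ~17 seconds), which matters more than the QPS cap when scheduling periodic refreshes.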

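Core endpoints

| Endpoint | Path | Method | Description |
|---|---|---|---|
| Note detail | /api/v1/note/detail | GET | Get full details of a single note |
| Note list | /api/v1/note/list | GET | List notes matching given criteria |
| Note comments | /api/v1/note/comments | GET | Get a note's comments |
| User profile | /api/v1/user/profile | GET | Get the note author's profile |
| Related notes | /api/v1/note/related | GET | Get recommended related notes |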
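Since every endpoint in the table shares one base domain and is called with GET plus query parameters, request URLs can be assembled from a single mapping. A small sketch; the dictionary keys and `build_url` are hypothetical names, and the paths are copied from the table:

```python
from urllib.parse import urlencode

BASE_URL = "https://open.xiaohongshu.com"

# Paths from the endpoint table; the short keys are illustrative names only
ENDPOINTS = {
    "note_detail": "/api/v1/note/detail",
    "note_list": "/api/v1/note/list",
    "note_comments": "/api/v1/note/comments",
    "user_profile": "/api/v1/user/profile",
    "note_related": "/api/v1/note/related",
}


def build_url(endpoint: str, **params) -> str:
    """Build a GET URL for one of the documented endpoints."""
    if endpoint not in ENDPOINTS:
        raise KeyError(f"unknown endpoint: {endpoint}")
    url = BASE_URL + ENDPOINTS[endpoint]
    # Drop unset parameters and percent-encode the rest
    query = urlencode({k: v for k, v in params.items() if v is not None})
    return f"{url}?{query}" if query else url


print(build_url("note_comments", note_id="6123456789abcdef01234567", page=1, sort="hot"))
# https://open.xiaohongshu.com/api/v1/note/comments?note_id=6123456789abcdef01234567&page=1&sort=hot
```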

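Typical use cases

- Content analysis systems: uncover the creative traits and spread patterns of popular notes
- Brand monitoring tools: track user feedback and reviews in brand-related notes
- Competitor analysis platforms: analyze the content-marketing strategies of similar products on Xiaohongshu
- Content recommendation systems: build personalized recommendation models from note features
- Public-opinion monitoring: track discussion volume and sentiment around specific topics on Xiaohongshu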

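API call flow

```plaintext
Developer verification → App registration → OAuth authorization → Token acquisition → API calls →
Content parsing → Data storage → Periodic updates → Token refresh
```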

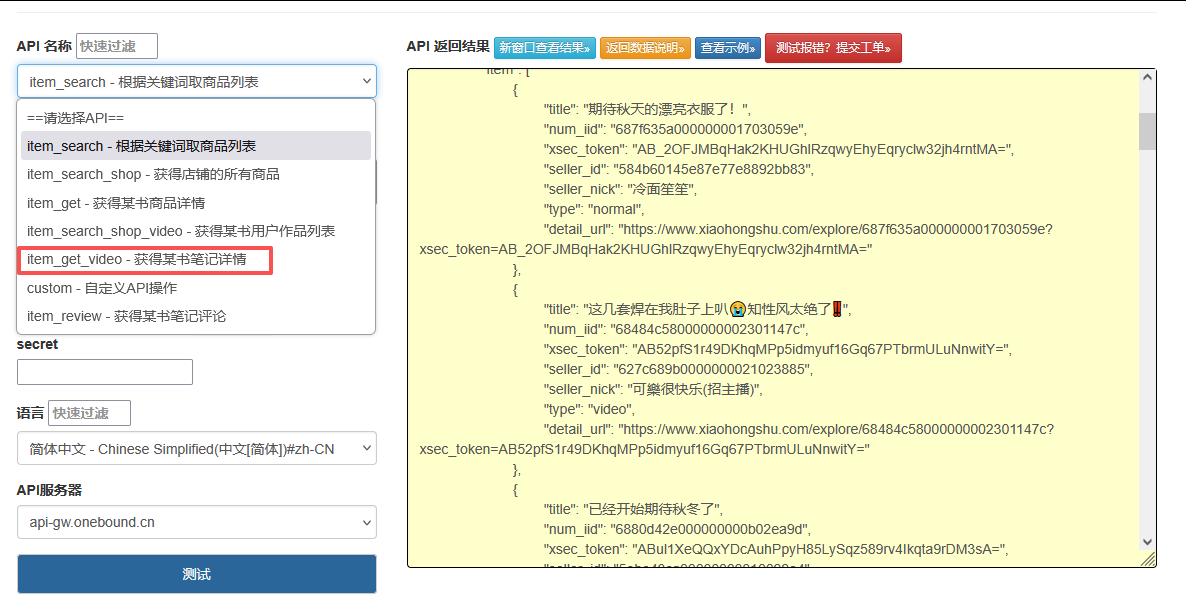

点击获取key和secret

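II. Authentication and Parameters

OAuth 2.0 authorization flow

Xiaohongshu uses the standard OAuth 2.0 authorization-code flow:

- Register an application on the open platform to obtain a client_id and client_secret.
- Redirect the user to the authorization page; after the user consents, the platform returns a code.
- Exchange the code for an access_token and a refresh_token.
- Send the access_token in the HTTP `Authorization` header (as a Bearer token) on every API call.
- When the access_token expires (after 2 hours by default), use the refresh_token to obtain a new one.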
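In the authorization step, standard OAuth 2.0 (RFC 6749) appends `code` and the `state` value you sent to the redirect_uri as query parameters, or an `error` parameter if the user declines. A minimal sketch of handling that callback, assuming Xiaohongshu uses the standard parameter names; `new_state` and `extract_code` are hypothetical helpers:

```python
import secrets
from urllib.parse import parse_qs, urlparse


def new_state() -> str:
    """Random, unguessable value to store (e.g. in the session) before redirecting."""
    return secrets.token_urlsafe(16)


def extract_code(callback_url: str, expected_state: str) -> str:
    """Return the authorization code from the redirect URL after checking state."""
    query = parse_qs(urlparse(callback_url).query)
    if "error" in query:
        raise PermissionError(f"authorization denied: {query['error'][0]}")
    state = query.get("state", [""])[0]
    # Constant-time comparison; encode so non-ASCII input cannot raise TypeError
    if not secrets.compare_digest(state.encode(), expected_state.encode()):
        raise ValueError("state mismatch: possible CSRF or stale callback")
    code = query.get("code", [""])[0]
    if not code:
        raise ValueError("callback URL carries no code")
    return code
```

A state mismatch means the callback did not come from an authorization request your application started, so the code should be discarded rather than exchanged.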

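Note detail parameters

| Parameter | Type | Description | Required |
|---|---|---|---|
| note_id | String | Note ID | Yes |
| need_related | Boolean | Whether to return related notes; default false | No |
| need_author | Boolean | Whether to return author information; default false | No |
| need_statistics | Boolean | Whether to return engagement statistics; default true | No |
| need_content | Boolean | Whether to return the full content; default true | No |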
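The boolean flags travel as query-string values. The sketch below validates a note_id and emits only the flags that differ from the documented defaults, which keeps URLs short and cache keys stable. The 24-hex-character ID pattern is an assumption based on the sample IDs used in this article, not a documented format; `detail_params` is a hypothetical helper:

```python
import re

# Defaults as documented in the parameter table
DETAIL_DEFAULTS = {
    "need_related": False,
    "need_author": False,
    "need_statistics": True,
    "need_content": True,
}

# Assumption: IDs look like the article's samples (24 lowercase hex characters)
NOTE_ID_PATTERN = re.compile(r"^[0-9a-f]{24}$")


def detail_params(note_id: str, **flags: bool) -> dict:
    """Query parameters for /api/v1/note/detail, sending only non-default flags."""
    if not NOTE_ID_PATTERN.match(note_id):
        raise ValueError(f"note_id looks malformed: {note_id!r}")
    unknown = set(flags) - set(DETAIL_DEFAULTS)
    if unknown:
        raise TypeError(f"unsupported parameters: {sorted(unknown)}")
    params = {"note_id": note_id}
    for name, value in flags.items():
        if value != DETAIL_DEFAULTS[name]:
            # Booleans are serialized as lowercase strings
            params[name] = "true" if value else "false"
    return params
```

Sending explicit values for every flag, as the client in Section III does, is equally valid; omitting defaults is only a design choice.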

Response structure

Core fields of the note detail response (the entries of related_notes are omitted):

```json
{
  "code": 0,
  "msg": "success",
  "data": {
    "note_id": "6123456789abcdef01234567",
    "title": "Reviewing 5 must-have sunscreens for summer",
    "content": "Summer is almost here, so here are a few sunscreens I use all the time...",
    "content_rich": [
      {"type": "text", "text": "Summer is almost here, so here are a few sunscreens I use all the time..."},
      {"type": "image", "url": "https://sns-img-hw.xhscdn.com/abcdef.jpg", "width": 1080, "height": 1440},
      {"type": "text", "text": "This one has a very light texture and suits oily skin..."},
      {"type": "video", "url": "https://sns-video-hw.xhscdn.com/123456.mp4", "duration": 156}
    ],
    "tags": ["sunscreen", "summer skincare", "beauty review"],
    "at_users": [{"user_id": "5123456789", "name": "BeautyExpert"}],
    "location": {"name": "Shanghai", "longitude": 121.47, "latitude": 31.23},
    "statistics": {
      "like_count": 12563,
      "collect_count": 8952,
      "comment_count": 1256,
      "share_count": 325,
      "view_count": 156890
    },
    "author": {
      "user_id": "612345678",
      "name": "SkincarePro",
      "avatar": "https://sns-avatar.xhscdn.com/avatar.jpg",
      "follower_count": 56800,
      "following_count": 320,
      "note_count": 128
    },
    "create_time": 1625097600,
    "update_time": 1625100800,
    "related_notes": []
  }
}
```
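Every response wraps its payload in a code/msg/data envelope, where code 0 means success. A minimal sketch of unwrapping it and splitting content_rich into plain text plus per-type media counts; `XhsApiError`, `unwrap`, and `split_rich_content` are illustrative names, not part of any official SDK:

```python
import json


class XhsApiError(Exception):
    """Raised when the envelope reports a non-zero code (hypothetical class)."""

    def __init__(self, code, msg):
        super().__init__(f"[{code}] {msg}")
        self.code = code
        self.msg = msg


def unwrap(body: str) -> dict:
    """Return `data` from a {code, msg, data} envelope or raise XhsApiError."""
    payload = json.loads(body)
    code = payload.get("code", -1)
    if code != 0:
        raise XhsApiError(code, payload.get("msg", "unknown error"))
    return payload.get("data") or {}


def split_rich_content(data: dict) -> tuple:
    """Join text blocks with newlines and count the remaining blocks by type."""
    texts, media = [], {}
    for block in data.get("content_rich", []):
        kind = block.get("type")
        if kind == "text":
            texts.append(block.get("text", ""))
        else:
            media[kind] = media.get(kind, 0) + 1
    return "\n".join(texts), media
```

Keeping the error code on the exception lets callers branch on it (for example, refresh the token on an authorization error) instead of parsing message strings.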

III. Core Implementation

1. OAuth authentication and token management

```python
import time
import uuid
from datetime import datetime
from urllib.parse import urlencode

import requests


class XiaohongshuAuthManager:
    """OAuth 2.0 authorization and token management for Xiaohongshu."""

    def __init__(self, client_id, client_secret, redirect_uri):
        self.client_id = client_id
        self.client_secret = client_secret
        self.redirect_uri = redirect_uri
        self.base_auth_url = "https://open.xiaohongshu.com/oauth"
        self.token_url = f"{self.base_auth_url}/token"
        self.access_token = None
        self.refresh_token = None
        self.expires_at = 0  # Unix timestamp at which the access token expires
        self.scope = "note.read user.read comment.read"
        self.state = None  # last state sent to the authorize endpoint, for CSRF checks

    def get_authorization_url(self):
        """Build the URL the user must visit to grant access."""
        self.state = self._generate_state()
        params = {
            "client_id": self.client_id,
            "response_type": "code",
            "redirect_uri": self.redirect_uri,
            "scope": self.scope,
            "state": self.state,
        }
        # urlencode escapes redirect_uri and the space-separated scope correctly
        return f"{self.base_auth_url}/authorize?{urlencode(params)}"

    def get_token_by_code(self, code):
        """Exchange an authorization code for access and refresh tokens."""
        data = {
            "client_id": self.client_id,
            "client_secret": self.client_secret,
            "grant_type": "authorization_code",
            "code": code,
            "redirect_uri": self.redirect_uri,
        }
        return self._request_token(data, "Failed to obtain token with code")

    def refresh_access_token(self):
        """Use the refresh token to obtain a new access token."""
        if not self.refresh_token:
            raise Exception("No refresh_token available; authorize first")
        data = {
            "client_id": self.client_id,
            "client_secret": self.client_secret,
            "grant_type": "refresh_token",
            "refresh_token": self.refresh_token,
        }
        return self._request_token(data, "Failed to refresh token")

    def get_authorization_header(self):
        """Return request headers, refreshing the token 60 s before it expires."""
        if not self.is_token_valid():
            if not self.refresh_access_token():
                raise Exception("Unable to obtain a valid access_token")
        return {
            "Authorization": f"Bearer {self.access_token}",
            "Content-Type": "application/json",
        }

    def is_token_valid(self):
        """True if an access token exists and will not expire within 60 s."""
        return self.access_token is not None and time.time() < self.expires_at - 60

    def _request_token(self, data, error_prefix):
        """POST to the token endpoint and store the result; return the token or None."""
        try:
            # Passing a dict as data makes requests send it form-urlencoded
            response = requests.post(self.token_url, data=data, timeout=10)
            self._process_token_result(response.json())
            return self.access_token
        except Exception as e:
            print(f"{error_prefix}: {e}")
            return None

    def _process_token_result(self, result):
        """Store tokens from a token-endpoint response."""
        if "error" in result:
            raise Exception(f"Token request failed: {result.get('error_description', 'unknown error')}")
        self.access_token = result.get("access_token")
        # Keep the old refresh_token if a refresh response does not include a new one
        self.refresh_token = result.get("refresh_token", self.refresh_token)
        expires_in = int(result.get("expires_in", 7200))  # default: 2 hours
        self.expires_at = time.time() + expires_in
        print(f"Token updated, valid until {datetime.fromtimestamp(self.expires_at)}")

    def _generate_state(self):
        """Generate a random 16-character state value."""
        return uuid.uuid4().hex[:16]
```
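XiaohongshuAuthManager keeps its tokens only in memory, so every new process has to go through user authorization again. A minimal sketch of persisting them to a local JSON file, assuming a single-user setup where a file with owner-only permissions is an acceptable secret store; `save_tokens`, `load_tokens`, and `restore_into` are hypothetical helpers that only touch the manager's existing `access_token`, `refresh_token`, and `expires_at` attributes:

```python
import json
import os
from typing import Optional

TOKEN_KEYS = ("access_token", "refresh_token", "expires_at")


def save_tokens(path: str, access_token: str, refresh_token: str, expires_at: float) -> None:
    """Write tokens atomically to a JSON file readable only by the owner (POSIX)."""
    tmp = path + ".tmp"
    with open(tmp, "w", encoding="utf-8") as f:
        json.dump({"access_token": access_token, "refresh_token": refresh_token,
                   "expires_at": expires_at}, f)
    try:
        os.chmod(tmp, 0o600)  # restrict before the file becomes visible under its real name
    except OSError:
        pass
    os.replace(tmp, path)  # atomic rename: readers never see a half-written file


def load_tokens(path: str) -> Optional[dict]:
    """Read tokens written by save_tokens; None if missing, unreadable, or incomplete."""
    try:
        with open(path, encoding="utf-8") as f:
            data = json.load(f)
    except (OSError, ValueError):
        return None
    if not isinstance(data, dict) or not all(k in data for k in TOKEN_KEYS):
        return None
    return data


def restore_into(auth_manager, path: str) -> bool:
    """Copy saved tokens onto an XiaohongshuAuthManager-like object."""
    saved = load_tokens(path)
    if not saved:
        return False
    for key in TOKEN_KEYS:
        setattr(auth_manager, key, saved[key])
    return True
```

After `get_token_by_code` succeeds, calling `save_tokens(path, auth.access_token, auth.refresh_token, auth.expires_at)` and, on the next start, `restore_into(auth, path)` would let `get_authorization_header` refresh from the stored refresh_token instead of asking the user again.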

2. Note detail API client

```python
import time
from datetime import datetime
from threading import Lock

import requests


class XiaohongshuNoteClient:
    """Client for the Xiaohongshu note APIs."""

    def __init__(self, auth_manager):
        self.auth_manager = auth_manager
        self.base_url = "https://open.xiaohongshu.com/api/v1"
        self.timeout = 15  # request timeout in seconds
        self.qps_limit = 5  # per-app QPS limit
        self.last_request_time = 0
        self.request_lock = Lock()  # serializes QPS bookkeeping across threads

    def _check_qps(self):
        """Block until at least 1/qps_limit seconds have passed since the last request."""
        with self.request_lock:
            interval = 1.0 / self.qps_limit
            elapsed = time.time() - self.last_request_time
            if elapsed < interval:
                time.sleep(interval - elapsed)
            # Record the time *after* any sleep; otherwise the next call waits too little
            self.last_request_time = time.time()

    def _get(self, path, params, label):
        """Shared GET logic: QPS control, auth header, envelope check. Returns data or None."""
        self._check_qps()
        headers = self.auth_manager.get_authorization_header()
        try:
            response = requests.get(f"{self.base_url}{path}", params=params,
                                    headers=headers, timeout=self.timeout)
            result = response.json()
        except Exception as e:
            print(f"{label} request error: {e}")
            return None
        if result.get("code") == 0:
            return result.get("data", {})
        print(f"{label} failed: {result.get('msg', 'unknown error')} (code: {result.get('code', 'unknown')})")
        return None

    def get_note_detail(self, note_id, **kwargs):
        """Fetch a note's details.

        kwargs: need_related, need_author, need_statistics, need_content (bool).
        Returns the parsed note dict, or None on failure.
        """
        params = {"note_id": note_id}
        for name in ("need_related", "need_author", "need_statistics", "need_content"):
            if kwargs.get(name) is not None:
                params[name] = "true" if kwargs[name] else "false"
        data = self._get("/note/detail", params, "Note detail")
        return self._parse_note_detail(data) if data is not None else None

    def get_note_comments(self, note_id, page=1, page_size=20, sort="latest"):
        """Fetch one page of comments; sort is "latest" or "hot"."""
        params = {
            "note_id": note_id,
            "page": page,
            "page_size": min(50, max(1, page_size)),  # clamp to 1..50
            "sort": sort if sort in ("latest", "hot") else "latest",
        }
        data = self._get("/note/comments", params, "Note comments")
        return self._parse_comments(data) if data is not None else None

    def get_related_notes(self, note_id, limit=10):
        """Return up to `limit` related notes via the detail endpoint."""
        note = self.get_note_detail(note_id, need_related=True, need_author=False,
                                    need_statistics=True, need_content=False)
        return note["related_notes"][:limit] if note else []

    def _parse_note_detail(self, raw_data):
        """Normalize the raw note payload."""
        if not raw_data or "note_id" not in raw_data:
            return None
        # Split rich content into plain text and media grouped by type
        text_parts = []
        media_resources = {"images": [], "videos": [], "other": []}
        rich = raw_data.get("content_rich")
        for item in rich if isinstance(rich, list) else []:
            kind = item.get("type")
            if kind == "text" and "text" in item:
                text_parts.append(item["text"])
            elif kind == "image" and "url" in item:
                media_resources["images"].append({
                    "url": item["url"], "width": item.get("width", 0),
                    "height": item.get("height", 0), "format": item.get("format", ""),
                })
            elif kind == "video" and "url" in item:
                media_resources["videos"].append({
                    "url": item["url"], "duration": item.get("duration", 0),
                    "width": item.get("width", 0), "height": item.get("height", 0),
                    "cover_url": item.get("cover_url", ""),
                })
            else:
                media_resources["other"].append(item)
        # Fall back to the plain `content` field when there is no rich text
        content_text = "\n".join(text_parts).strip() or (raw_data.get("content") or "").strip()

        author_info = None
        author = raw_data.get("author")
        if author:
            author_info = {
                "user_id": author.get("user_id", ""),
                "name": author.get("name", ""),
                "avatar": author.get("avatar", ""),
                "description": author.get("description", ""),
                "follower_count": int(author.get("follower_count", 0)),
                "following_count": int(author.get("following_count", 0)),
                "note_count": int(author.get("note_count", 0)),
                "level": author.get("level", 0),
                "tags": author.get("tags", []),
            }

        related = raw_data.get("related_notes")
        related_notes = [
            {
                "note_id": note.get("note_id", ""),
                "title": note.get("title", ""),
                "cover_image": note.get("cover_image", ""),
                "tags": note.get("tags", []),
                "statistics": self._parse_statistics(note.get("statistics", {})),
            }
            for note in (related if isinstance(related, list) else [])
        ]

        return {
            "note_id": raw_data.get("note_id", ""),
            "title": raw_data.get("title", ""),
            "content": raw_data.get("content", ""),
            "content_text": content_text,  # plain text
            "content_rich": raw_data.get("content_rich", []),  # original rich-text structure
            "media_resources": media_resources,  # media grouped by type
            "tags": raw_data.get("tags", []),
            "at_users": raw_data.get("at_users", []),
            "location": raw_data.get("location", {}),
            "statistics": self._parse_statistics(raw_data.get("statistics", {})),
            "author": author_info,
            "related_notes": related_notes,
            "create_time": self._format_timestamp(raw_data.get("create_time")),
            "update_time": self._format_timestamp(raw_data.get("update_time")),
            "fetch_time": datetime.now().strftime("%Y-%m-%d %H:%M:%S"),
        }

    def _parse_comments(self, raw_data):
        """Normalize a page of comments, including nested replies."""
        if not raw_data or "comments" not in raw_data:
            return None
        comments = []
        for item in raw_data["comments"]:
            subs = item.get("sub_comments")
            comments.append({
                **self._parse_single_comment(item),
                "sub_comments": [self._parse_single_comment(s) for s in subs] if isinstance(subs, list) else [],
                "sub_comment_count": int(item.get("sub_comment_count", 0)),
            })
        total = int(raw_data.get("total_count", 0))
        page_size = max(1, int(raw_data.get("page_size", 20)))  # guard against division by zero
        return {
            "note_id": raw_data.get("note_id", ""),
            "comments": comments,
            "total_count": total,
            "page": int(raw_data.get("page", 1)),
            "page_size": page_size,
            "total_page": (total + page_size - 1) // page_size,  # ceiling division
            "fetch_time": datetime.now().strftime("%Y-%m-%d %H:%M:%S"),
        }

    def _parse_single_comment(self, comment):
        """Normalize one comment."""
        return {
            "comment_id": comment.get("comment_id", ""),
            "content": comment.get("content", ""),
            "user": {
                "user_id": comment.get("user_id", ""),
                "name": comment.get("user_name", ""),
                "avatar": comment.get("user_avatar", ""),
            },
            "like_count": int(comment.get("like_count", 0)),
            "create_time": self._format_timestamp(comment.get("create_time")),
            "reply_to": comment.get("reply_to", {}),  # the comment/user being replied to
        }

    def _parse_statistics(self, stats):
        """Coerce engagement counters to int, defaulting to 0."""
        stats = stats or {}
        keys = ("like_count", "collect_count", "comment_count",
                "share_count", "view_count", "forward_count")
        return {k: int(stats.get(k, 0)) for k in keys}

    def _format_timestamp(self, timestamp):
        """Format a Unix timestamp in seconds or milliseconds as local time."""
        if not timestamp:
            return ""
        try:
            ts = int(timestamp)
            if ts > 10**10:  # more than 10 digits: milliseconds
                ts //= 1000
            return datetime.fromtimestamp(ts).strftime("%Y-%m-%d %H:%M:%S")
        except (TypeError, ValueError, OverflowError, OSError):
            return str(timestamp)
```
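The client's `_check_qps` spaces requests by 1/qps_limit seconds but does not track the 5,000-calls-per-day cap. A sketch of a limiter that enforces both, offered as an alternative design rather than anything the platform provides: it reserves the next time slot under a lock, sleeps outside the lock, and counts calls over a rolling 24-hour window (a conservative approximation if the platform resets its counter at a fixed time of day). `clock` and `sleep` are injectable so the logic can be tested without waiting:

```python
import threading
import time
from collections import deque


class RateLimiter:
    """At most `qps` calls per second and `daily_limit` calls per rolling 24 h."""

    DAY_SECONDS = 86400

    def __init__(self, qps=5, daily_limit=5000, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 1.0 / qps
        self.daily_limit = daily_limit
        self.clock = clock
        self.sleep = sleep
        self._lock = threading.Lock()
        self._next_slot = 0.0   # earliest start time for the next call
        self._starts = deque()  # start times of calls inside the window

    def acquire(self):
        """Reserve the next free slot, then sleep until it arrives."""
        with self._lock:
            now = self.clock()
            # Forget calls that left the 24-hour window
            while self._starts and now - self._starts[0] >= self.DAY_SECONDS:
                self._starts.popleft()
            if len(self._starts) >= self.daily_limit:
                raise RuntimeError("daily call quota exhausted")
            start = max(now, self._next_slot)
            self._next_slot = start + self.min_interval
            self._starts.append(start)
        # Sleep outside the lock so other threads can reserve later slots meanwhile
        wait = start - now
        if wait > 0:
            self.sleep(wait)
```

One shared instance could be called at the top of each request in place of `_check_qps`, so every worker thread draws from the same per-second and daily budget.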

3. Note data manager (caching and content analysis)

```python
import json
import os
import re
import sqlite3
from concurrent.futures import ThreadPoolExecutor
from datetime import datetime, timedelta

import jieba
from jieba.analyse import extract_tags

# Assumes XiaohongshuAuthManager and XiaohongshuNoteClient (above) are in the same module.

jieba.initialize()  # load the jieba dictionary once up front

TIME_FMT = "%Y-%m-%d %H:%M:%S"


class XiaohongshuNoteManager:
    """Xiaohongshu note data manager with SQLite caching and content analysis."""

    def __init__(self, client_id, client_secret, redirect_uri, cache_dir="./xiaohongshu_cache"):
        self.auth_manager = XiaohongshuAuthManager(client_id, client_secret, redirect_uri)
        self.note_client = XiaohongshuNoteClient(self.auth_manager)
        self.cache_dir = cache_dir
        self.db_path = os.path.join(cache_dir, "xiaohongshu_cache.db")
        self._init_cache()
        self._init_stopwords()

    def _init_cache(self):
        """Create the cache directory and tables if they do not exist."""
        os.makedirs(self.cache_dir, exist_ok=True)
        conn = sqlite3.connect(self.db_path)
        conn.execute("""CREATE TABLE IF NOT EXISTS note_cache (
            note_id TEXT PRIMARY KEY, data TEXT, fetch_time TEXT, update_time TEXT)""")
        # comment_id stores a per-page key such as "<note_id>_page1_size20_sortlatest"
        conn.execute("""CREATE TABLE IF NOT EXISTS comment_cache (
            comment_id TEXT PRIMARY KEY, note_id TEXT, data TEXT, fetch_time TEXT)""")
        conn.execute("""CREATE TABLE IF NOT EXISTS user_cache (
            user_id TEXT PRIMARY KEY, data TEXT, fetch_time TEXT)""")
        conn.commit()
        conn.close()

    def _init_stopwords(self):
        """Load stopwords from cache_dir/stopwords.txt, or fall back to a small built-in set."""
        stopwords_path = os.path.join(self.cache_dir, "stopwords.txt")
        try:
            if os.path.exists(stopwords_path):
                with open(stopwords_path, "r", encoding="utf-8") as f:
                    self.stopwords = {line.strip() for line in f if line.strip()}
            else:
                self.stopwords = {"的", "了", "在", "是", "我", "有", "和", "就", "不", "人", "都",
                                  "一", "一个", "上", "也", "很", "到", "说", "要", "去", "你",
                                  "会", "着", "没有", "看", "好", "自己", "这"}
        except OSError as e:
            print(f"Failed to load stopwords: {e}")
            self.stopwords = set()

    def get_note_detail(self, note_id, use_cache=True, cache_ttl=3600, **kwargs):
        """Fetch note details, serving a cached copy younger than cache_ttl seconds.

        The cache key is note_id only, so a cached copy is returned regardless
        of the need_* flags passed in kwargs.
        """
        if use_cache:
            cached = self._get_cached_data("note_cache", "note_id", note_id, cache_ttl)
            if cached:
                print(f"Using cached data for note {note_id}")
                return cached
        print(f"Calling API for note {note_id}")
        result = self.note_client.get_note_detail(note_id, **kwargs)
        if result:
            self._update_note_cache(note_id, result)
        return result

    def get_note_comments(self, note_id, page=1, page_size=20, sort="latest",
                          use_cache=True, cache_ttl=1800):
        """Fetch one page of comments, cached per note, page, page size and sort order."""
        cache_key = f"{note_id}_page{page}_size{page_size}_sort{sort}"
        if use_cache:
            cached = self._get_cached_data("comment_cache", "comment_id", cache_key, cache_ttl)
            if cached:
                print(f"Using cached comments for note {note_id}, page {page}")
                return cached
        print(f"Calling API for comments of note {note_id}, page {page}")
        result = self.note_client.get_note_comments(note_id, page, page_size, sort)
        if result:
            self._update_comment_cache(cache_key, note_id, result)
        return result

    def batch_get_notes(self, note_ids, max_workers=2, **kwargs):
        """Fetch several notes in parallel; the client's QPS lock still spaces the calls."""
        if not note_ids:
            return []
        results = []
        with ThreadPoolExecutor(max_workers=max_workers) as executor:
            futures = [executor.submit(self.get_note_detail, nid, **kwargs) for nid in note_ids]
            for future in futures:
                try:
                    note = future.result()
                    if note:
                        results.append(note)
                except Exception as e:
                    print(f"Error fetching note: {e}")
        return results

    def analyze_note_content(self, note_data):
        """Extract keywords, sentiment, media statistics and interaction rate."""
        if not note_data or not note_data.get("content_text"):
            return None
        content = note_data["content_text"]
        keywords = extract_tags(self._preprocess_text(content), topK=10, withWeight=True)
        # Use the raw text: stopword removal would drop sentiment words such as "好" and "不"
        sentiment = self._simple_sentiment_analysis(content)
        media = note_data.get("media_resources", {})
        image_count = len(media.get("images", []))
        video_count = len(media.get("videos", []))
        # Interaction rate: engagements as a percentage of views (0 if views are unknown)
        stats = note_data.get("statistics", {})
        view_count = stats.get("view_count") or 0
        engaged = sum(stats.get(k, 0) for k in ("like_count", "comment_count", "collect_count", "share_count"))
        interaction_rate = engaged / view_count * 100 if view_count > 0 else 0.0
        return {
            "note_id": note_data["note_id"],
            "title": note_data["title"],
            "keywords": [{"word": k, "weight": round(w, 4)} for k, w in keywords],
            "sentiment": sentiment,
            "media_stats": {"image_count": image_count, "video_count": video_count,
                            "total_media": image_count + video_count},
            "tag_count": len(note_data.get("tags", [])),
            "interaction_rate": round(interaction_rate, 2),  # percent
            "analysis_time": datetime.now().strftime(TIME_FMT),
        }

    def _preprocess_text(self, text):
        """Strip HTML tags and punctuation, tokenize with jieba, and drop stopwords."""
        text = re.sub(r"<.*?>", "", text)
        text = re.sub(r"[^\u4e00-\u9fa5a-zA-Z0-9\s]", " ", text)
        text = re.sub(r"\s+", " ", text).strip()
        return " ".join(w for w in jieba.cut(text) if w.strip() and w not in self.stopwords)

    def _simple_sentiment_analysis(self, text):
        """Lexicon-based sentiment: score = (positive - negative) / (positive + negative)."""
        positive_words = {"好", "棒", "优秀", "推荐", "喜欢", "不错", "完美", "满意", "值得", "惊喜", "舒适", "好看"}
        negative_words = {"差", "不好", "糟糕", "失望", "讨厌", "劣质", "垃圾", "难吃", "难看", "麻烦", "后悔"}
        positive_count = negative_count = 0
        for word in jieba.cut(text):
            if word in positive_words:
                positive_count += 1
            elif word in negative_words:
                negative_count += 1
        total = positive_count + negative_count
        if total == 0:
            return {"sentiment": "neutral", "score": 0, "positive": 0, "negative": 0}
        score = (positive_count - negative_count) / total
        sentiment = "positive" if score > 0 else "negative" if score < 0 else "neutral"
        return {"sentiment": sentiment, "score": round(score, 4),
                "positive": positive_count, "negative": negative_count}

    def _get_cached_data(self, table, id_field, id_value, ttl):
        """Return cached JSON younger than ttl seconds (table/field names are internal constants)."""
        conn = sqlite3.connect(self.db_path)
        row = conn.execute(f"SELECT data, fetch_time FROM {table} WHERE {id_field} = ?",
                           (id_value,)).fetchone()
        conn.close()
        if not row:
            return None
        data_str, fetch_time = row
        if (datetime.now() - datetime.strptime(fetch_time, TIME_FMT)).total_seconds() > ttl:
            return None
        try:
            return json.loads(data_str)
        except (TypeError, ValueError):
            return None

    def _update_note_cache(self, note_id, data):
        """Insert or replace a note in the cache."""
        if not data or "note_id" not in data:
            return
        fetch_time = datetime.now().strftime(TIME_FMT)
        conn = sqlite3.connect(self.db_path)
        conn.execute("INSERT OR REPLACE INTO note_cache (note_id, data, fetch_time, update_time) "
                     "VALUES (?, ?, ?, ?)",
                     (note_id, json.dumps(data, ensure_ascii=False), fetch_time,
                      data.get("update_time") or fetch_time))
        conn.commit()
        conn.close()

    def _update_comment_cache(self, cache_key, note_id, data):
        """Insert or replace one cached page of comments."""
        if not data or "comments" not in data:
            return
        conn = sqlite3.connect(self.db_path)
        conn.execute("INSERT OR REPLACE INTO comment_cache (comment_id, note_id, data, fetch_time) "
                     "VALUES (?, ?, ?, ?)",
                     (cache_key, note_id, json.dumps(data, ensure_ascii=False),
                      datetime.now().strftime(TIME_FMT)))
        conn.commit()
        conn.close()

    def clean_expired_cache(self, max_age=86400):
        """Delete note/comment entries older than max_age seconds (default 24 h) and users older than 7 days."""
        now = datetime.now()
        expire_time = (now - timedelta(seconds=max_age)).strftime(TIME_FMT)
        user_expire_time = (now - timedelta(days=7)).strftime(TIME_FMT)
        conn = sqlite3.connect(self.db_path)
        # The fixed-width time format makes string comparison equivalent to time comparison
        deleted_notes = conn.execute("DELETE FROM note_cache WHERE fetch_time < ?", (expire_time,)).rowcount
        deleted_comments = conn.execute("DELETE FROM comment_cache WHERE fetch_time < ?", (expire_time,)).rowcount
        deleted_users = conn.execute("DELETE FROM user_cache WHERE fetch_time < ?", (user_expire_time,)).rowcount
        conn.commit()
        conn.close()
        print(f"Cache cleanup done: removed {deleted_notes} notes, "
              f"{deleted_comments} comment pages, {deleted_users} users")
        return {"deleted_notes": deleted_notes, "deleted_comments": deleted_comments,
                "deleted_users": deleted_users}
```
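`analyze_note_content` defines the interaction rate as (likes + comments + collects + shares) / views × 100. Applied to the sample response in Section II, that is (12563 + 1256 + 8952 + 325) / 156890 × 100 = 23096 / 156890 × 100 ≈ 14.72%. A standalone version of the same formula, with a guard for notes whose view_count is missing or zero:

```python
def interaction_rate(stats: dict) -> float:
    """(likes + comments + collects + shares) / views * 100, rounded to 2 places."""
    views = stats.get("view_count") or 0
    if views <= 0:
        return 0.0  # no view data: report 0 rather than dividing by zero
    engaged = sum(stats.get(k, 0) for k in ("like_count", "comment_count",
                                            "collect_count", "share_count"))
    return round(engaged / views * 100, 2)


# Statistics from the sample response
sample = {"like_count": 12563, "collect_count": 8952, "comment_count": 1256,
          "share_count": 325, "view_count": 156890}
print(interaction_rate(sample))  # 14.72
```

Because the numerator counts several engagement types per view, the rate is not bounded by 100%; it is best used to rank notes against each other rather than read as a probability.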

IV. Complete Usage Examples

1. Fetching and analyzing a single note

```python
def note_detail_analysis_demo():
    # Replace with your real application credentials
    CLIENT_ID = "your_client_id"
    CLIENT_SECRET = "your_client_secret"
    REDIRECT_URI = "https://yourdomain.com/callback"

    note_manager = XiaohongshuNoteManager(CLIENT_ID, CLIENT_SECRET, REDIRECT_URI)

    # 1. Build the authorization URL (in production, redirect the user to it)
    auth_url = note_manager.auth_manager.get_authorization_url()
    print(f"Open this URL to authorize:\n{auth_url}")

    # 2. After consent, the user is redirected to REDIRECT_URI with a `code`
    #    parameter; here it is pasted in by hand
    code = input("Enter the code returned after authorization: ").strip()

    # 3. Exchange the code for an access_token
    if not note_manager.auth_manager.get_token_by_code(code):
        print("Authorization failed; cannot continue")
        return
    print("Authorized, fetching note data...")

    note_id = "6123456789abcdef01234567"  # sample note ID

    # 4. Fetch note details
    note_detail = note_manager.get_note_detail(note_id, use_cache=True, cache_ttl=3600,
                                               need_related=True, need_author=True)
    if note_detail:
        stats = note_detail["statistics"]
        author = note_detail["author"] or {}  # author may be absent
        print(f"\n===== Note: {note_detail['title']} =====")
        print(f"ID: {note_detail['note_id']}")
        print(f"Published: {note_detail['create_time']}")
        print(f"Author: {author.get('name', 'n/a')} (followers: {author.get('follower_count', 'n/a')})")
        print(f"Tags: {', '.join(note_detail['tags'])}")
        print(f"Engagement: likes {stats['like_count']}, collects {stats['collect_count']}, "
              f"comments {stats['comment_count']}")
        print(f"Preview: {note_detail['content_text'][:200]}...")

        # 5. Analyze the content
        analysis = note_manager.analyze_note_content(note_detail)
        if analysis:
            print("\n===== Content analysis =====")
            print(f"Sentiment: {analysis['sentiment']['sentiment']} (score: {analysis['sentiment']['score']})")
            print(f"Interaction rate: {analysis['interaction_rate']}%")
            print(f"Media: {analysis['media_stats']['image_count']} images, "
                  f"{analysis['media_stats']['video_count']} videos")
            print("Keywords: " + ", ".join(f"{kw['word']}({kw['weight']:.2f})" for kw in analysis["keywords"]))

    # 6. Fetch the first page of comments, sorted by popularity
    comments = note_manager.get_note_comments(note_id, page=1, page_size=10, sort="hot")
    if comments:
        print(f"\n===== Hot comments ({comments['total_count']} total) =====")
        for i, comment in enumerate(comments["comments"][:5], 1):
            print(f"\n{i}. {comment['user']['name']}: {comment['content']}")
            print(f"   Likes: {comment['like_count']}, time: {comment['create_time']}")
            if comment["sub_comments"]:  # show the first reply, if any
                sub = comment["sub_comments"][0]
                print(f"   Reply: {sub['user']['name']}: {sub['content']}")

    # 7. Remove stale cache entries
    note_manager.clean_expired_cache()


if __name__ == "__main__":
    note_detail_analysis_demo()
```

2. Batch fetching and comparative analysis

```python
def batch_note_analysis_demo():
    # Replace with your real application credentials
    CLIENT_ID = "your_client_id"
    CLIENT_SECRET = "your_client_secret"
    REDIRECT_URI = "https://yourdomain.com/callback"

    note_manager = XiaohongshuNoteManager(CLIENT_ID, CLIENT_SECRET, REDIRECT_URI)

    # Tokens are held only in memory, so a new manager starts unauthorized:
    # run the same authorization-code flow as in the previous example first
    auth = note_manager.auth_manager
    if not auth.is_token_valid():
        print(f"Open this URL to authorize:\n{auth.get_authorization_url()}")
        if not auth.get_token_by_code(input("Enter the code returned after authorization: ").strip()):
            print("Authorization failed; cannot continue")
            return

    # Note IDs to analyze (samples)
    note_ids = [
        "6123456789abcdef01234567",
        "6123456789abcdef01234568",
        "6123456789abcdef01234569",
        "6123456789abcdef01234570",
        "6123456789abcdef01234571",
    ]

    # Fetch in parallel; the client's QPS control still spaces the requests
    notes = note_manager.batch_get_notes(note_ids, max_workers=2, use_cache=True, need_author=True)
    print(f"\n===== Batch fetch complete: {len(notes)}/{len(note_ids)} notes =====")

    # Analyze each note and collect one summary row per note
    analysis_results = []
    for note in notes:
        analysis = note_manager.analyze_note_content(note)
        if not analysis:
            continue
        analysis_results.append({
            "note_id": note["note_id"],
            "title": note["title"],
            "author": (note.get("author") or {}).get("name", "n/a"),  # author may be absent
            "publish_time": note["create_time"],
            "like_count": note["statistics"]["like_count"],
            "comment_count": note["statistics"]["comment_count"],
            "interaction_rate": analysis["interaction_rate"],
            "sentiment": analysis["sentiment"]["sentiment"],
            "keywords": [kw["word"] for kw in analysis["keywords"]],
        })

    # Rank by interaction rate, highest first
    analysis_results.sort(key=lambda x: x["interaction_rate"], reverse=True)

    print("\n===== Comparative analysis =====")
    for i, item in enumerate(analysis_results, 1):
        print(f"\n{i}. {item['title']}")
        print(f"   ID: {item['note_id']}")
        print(f"   Author: {item['author']}, published: {item['publish_time']}")
        print(f"   Interaction rate: {item['interaction_rate']}%, sentiment: {item['sentiment']}")
        print(f"   Likes: {item['like_count']}, comments: {item['comment_count']}")
        print(f"   Keywords: {', '.join(item['keywords'][:5])}")


if __name__ == "__main__":
    batch_note_analysis_demo()
```

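V. FAQ and Optimization Tips

1. Common error codes and fixes

| Code | Meaning | Resolution |
|---|---|---|
| 0 | Success | - |
| 400 | Invalid parameters | Check that note_id is valid and parameters are well-formed |
| 401 | Unauthorized | Check that the access_token is valid; re-authorize if needed |
| 403 | Insufficient permissions | Check that the app has been granted access to the endpoint |
| 404 | Note not found | Verify the note_id; the note may have been deleted or made private |
| 429 | Rate limit exceeded | Reduce call frequency and stay within the QPS limit |
| 500 | Server error | Log the error details and retry later |
| 10001 | Authorization expired | Refresh the access_token with the refresh_token |
| 10002 | App not approved | Complete the app review process |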
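The codes fall into three groups: retry after a delay (429, 500), refresh the token and retry (401, 10001), or fail immediately (the rest). XiaohongshuNoteClient prints errors and returns None, so its callers never see the code; the sketch below therefore assumes a hypothetical `request` callable returning a `(code, data)` pair. It also assumes the codes arrive in the response's `code` field; if the platform reports some of them as HTTP status codes instead, they can be mapped the same way. Retries use exponential backoff with full jitter:

```python
import random
import time

RETRYABLE = {429, 500}  # transient: back off, then retry
REAUTH = {401, 10001}   # token problem: refresh once, then retry


def backoff_delay(attempt, base=0.5, cap=30.0, rng=random.random):
    """Full-jitter exponential backoff: random delay in [0, min(cap, base * 2**attempt))."""
    return rng() * min(cap, base * 2 ** attempt)


def call_with_retry(request, refresh_token, max_attempts=4, sleep=time.sleep):
    """Call request() -> (code, data) and react to the error codes in the table.

    request and refresh_token are caller-supplied callables (hypothetical hooks).
    Other non-zero codes (400, 403, 404, 10002) are not retryable and raise at once.
    """
    refreshed = False
    for attempt in range(max_attempts):
        code, data = request()
        if code == 0:
            return data
        if code in REAUTH and not refreshed:
            refresh_token()  # e.g. XiaohongshuAuthManager.refresh_access_token
            refreshed = True
            continue
        if code in RETRYABLE and attempt < max_attempts - 1:
            sleep(backoff_delay(attempt))
            continue
        raise RuntimeError(f"request failed with code {code}")
    raise RuntimeError("retry attempts exhausted")
```

Full jitter spreads retries from concurrent workers over the whole backoff interval, so threads that hit a 429 together do not all retry at the same instant.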

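2. API call optimization strategies

- Token management: refresh tokens automatically to avoid repeated user authorization.
- Caching: set cache lifetimes according to how often content changes (1-2 hours for hot notes, 6-12 hours for ordinary notes).
- Batch processing: when fetching with multiple threads, cap concurrency so that the combined request rate stays within the QPS limit.
- Field filtering: request only the fields you need (for example, list views do not need the full content).
- Incremental updates: use the update_time field to decide whether cached data needs refreshing.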
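The caching and incremental-update points can be combined into two small decisions: how long to keep a note, and whether a cached copy is stale. A sketch under stated assumptions: the like-count threshold that makes a note "hot" is purely illustrative, it picks the shorter end of each TTL range above, and it assumes a cheap source for the remote update_time exists (for example /api/v1/note/list, if that endpoint returns it, which this article does not confirm):

```python
from datetime import datetime

TIME_FMT = "%Y-%m-%d %H:%M:%S"  # format used for update_time in the cached note dicts
HOT_LIKE_THRESHOLD = 10_000     # illustrative cut-off for a "hot" note (assumption)


def cache_ttl_seconds(stats: dict) -> int:
    """Hot notes change faster: cache for 1 h; ordinary notes for 6 h."""
    likes = stats.get("like_count", 0)
    return 3600 if likes >= HOT_LIKE_THRESHOLD else 6 * 3600


def needs_refresh(cached_note, remote_update_time: str) -> bool:
    """True if there is no usable cached copy or the remote update_time is newer."""
    cached_time = cached_note.get("update_time") if cached_note else None
    if not cached_time:
        return True
    return datetime.strptime(remote_update_time, TIME_FMT) > datetime.strptime(cached_time, TIME_FMT)
```

With the manager above, a caller could pass `cache_ttl=cache_ttl_seconds(note["statistics"])` on later fetches, and skip the detail call entirely whenever `needs_refresh` returns False.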

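3. Content-analysis considerations specific to Xiaohongshu

- Rich-text parsing: separate text, images, and videos and process each type on its own.
- Keyword extraction: improve word segmentation with a dictionary of Xiaohongshu-specific vocabulary.
- Sentiment analysis: use domain-specific sentiment lexicons for verticals such as beauty and food.
- Engagement quality: look beyond raw counts and assess how relevant the comments are to the note.
- Multimedia analysis: measure how image and video counts correlate with engagement.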
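For domain-specific sentiment, one approach is to merge a general lexicon with a per-vertical one, so that words like 假白 (white cast) or 卡粉 (cakey makeup) count as negative only for beauty content. A sketch with small, purely illustrative word lists; it takes tokens such as those returned by `jieba.lcut(text)` and, like the simple analyzer in Section III, ignores negation:

```python
# Illustrative lexicons only; real ones would be curated per vertical
GENERAL = {"positive": {"推荐", "喜欢", "值得"}, "negative": {"失望", "后悔", "踩雷"}}
DOMAIN_LEXICONS = {
    "beauty": {"positive": {"服帖", "清爽", "持妆"}, "negative": {"卡粉", "闷痘", "搓泥", "假白"}},
    "food": {"positive": {"好吃", "入味"}, "negative": {"难吃"}},
}


def domain_sentiment(tokens, domain):
    """Score = (pos - neg) / (pos + neg) using general plus domain-specific words."""
    lex = DOMAIN_LEXICONS.get(domain, {"positive": set(), "negative": set()})
    pos_words = GENERAL["positive"] | lex["positive"]
    neg_words = GENERAL["negative"] | lex["negative"]
    pos = sum(t in pos_words for t in tokens)
    neg = sum(t in neg_words for t in tokens)
    total = pos + neg
    score = (pos - neg) / total if total else 0.0
    label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    return {"sentiment": label, "score": round(score, 4), "positive": pos, "negative": neg}
```

The same tokens can score differently per domain: a sunscreen note containing 清爽 and 假白 is mixed under the beauty lexicon but carries neither word's weight under the food lexicon.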

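With the approach described here, developers can efficiently retrieve and analyze note content from the Xiaohongshu platform. It is tailored to the platform's UGC characteristics and implements OAuth 2.0 authentication, rich-text parsing, content analysis, and cache management end to end. In production, follow the platform's data usage policies and tune caching and request rates so that the system runs stably and efficiently.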