Quick environment setup with Docker

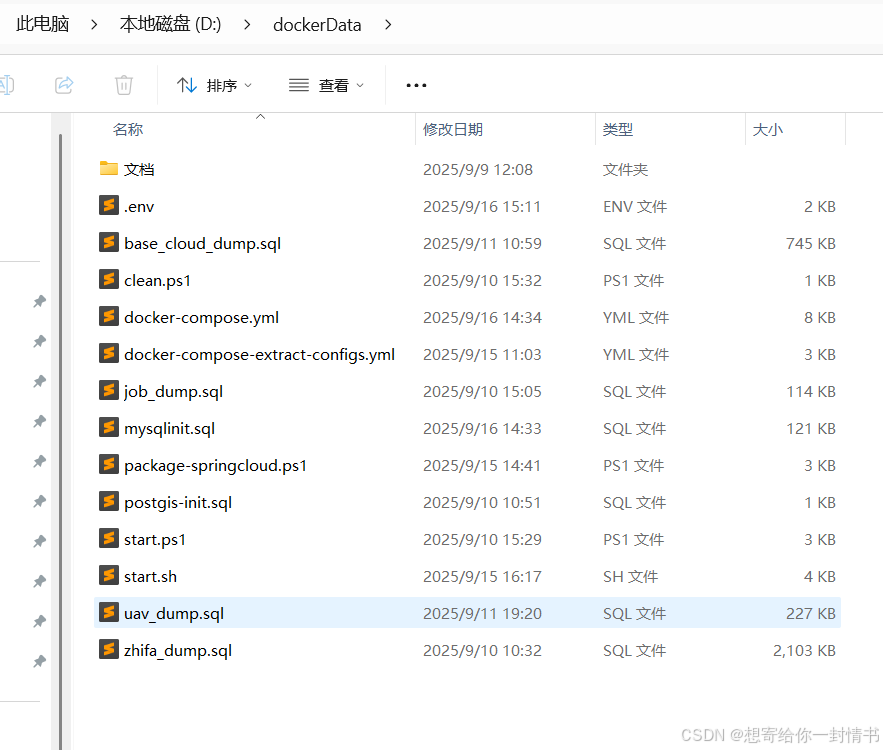

Taking the base environment of a microservice application as an example, prepare the following directory layout:

.env is the variables file:

# common
TIME_ZONE=Asia/Shanghai

# MySQL
MYSQL_IMAGE=mysql:8.0.33
MYSQL_ROOT_PASSWORD=feg#fe@fjel
MYSQL_USER=appuser
MYSQL_PASSWORD=apppass
MYSQL_DATABASE=nacos
MYSQL_PORT=3306

# pg
PG_USER=postgres
PG_PASSWORD=postgres@#fedfd
PG_DB=postgres
PG_PORT=5432
PG_IMAGE=postgis/postgis:14-3.3

# Redis
REDIS_IMAGE=redis:6.2.17
REDIS_PASSWORD=fegjfefem
REDIS_PORT=6379

# Nacos
NACOS_IMAGE=nacos/nacos-server:v2.3.2
NACOS_PORT=8848
NACOS_CLIENT_RPC_PORT=9848
NACOS_SERVER_RPC_PORT=9849
NACOS_USERNAME=nacos
NACOS_PASSWORD=m7LnWX3KgJMadr8jOhKNfelfe

# MinIO
MINIO_IMAGE=minio/minio:RELEASE.2024-05-28T17-19-04Z
MINIO_ROOT_USER=admin
MINIO_ROOT_PASSWORD=minioadmin@fefejmv
MINIO_PORT=9000
MINIO_CONSOLE_PORT=9001

# EMQX
EMQX_IMAGE=emqx/emqx:5.7.0
EMQX_PORT=1883
EMQX_DASHBOARD_PORT=18083
EMMQTT_WS_PORT=8083
EMQX_SSL_PORT=8883
EMQX_WSS_PORT=8084
EMQX_USERNAME=admin
EMQX_PASSWORD=public

# Nginx
NGINX_IMAGE=nginx:latest
NGINX_PORT=80
NGINX_HTTPS_PORT=443

# SRS
SRS_IMAGE=ossrs/srs:5.0.170
SRS_RTMP_PORT=1935
SRS_API_PORT=1985
SRS_WEBRTC_PORT=8000
SRS_HTTP_PORT=8080

# RabbitMQ
RABBITMQ_IMAGE=rabbitmq:3.12-management
RABBITMQ_PORT=5672
RABBITMQ_MANAGEMENT_PORT=15672
RABBITMQ_DEFAULT_USER=admin
RABBITMQ_DEFAULT_PASS=fejlgfel@#D
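Every *_PORT value above is published on the host, so giving two services the same number makes `docker compose up` fail when the second one tries to bind it. The sketch below is a hypothetical helper (find_duplicate_ports is not part of the original setup); it assumes one KEY=VALUE per line and that every port variable name ends in _PORT, and it prints any port number assigned more than once. To see how Compose itself resolves the variables, `docker compose config` prints docker-compose.yml with the values substituted.

```bash
#!/usr/bin/env bash
# Sketch: list host port numbers that appear more than once in a .env file.
# Assumes one KEY=VALUE per line and that every port variable name ends in _PORT.
find_duplicate_ports() {
  local env_file="$1"
  # Keep only *_PORT=<digits> lines, reduce each to its number
  # (dropping any trailing " # comment"), then print numbers seen twice or more.
  grep -E '^[A-Z0-9_]+_PORT=[0-9]+' "$env_file" \
    | sed -E 's/^[A-Z0-9_]+_PORT=([0-9]+).*/\1/' \
    | sort -n \
    | uniq -d
}
```

For the .env above, `find_duplicate_ports .env` prints nothing, since every port number is used once. The check is deliberately stricter than Docker: SRS_WEBRTC_PORT is published as UDP, and a UDP and a TCP mapping on the same number would not actually collide.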

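docker-compose-extract-configs.yml extracts the default configuration files: each one-shot service starts from its image, copies the image's configuration (and, for nginx and SRS, its static web files) into a bind-mounted host directory, and then exits. The main compose file later mounts these directories.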

version: '3.8'
services:
  nacos-extract:
    image: ${NACOS_IMAGE}
    container_name: nacos-extract
    restart: "no"
    volumes:
      - ./nacos/conf:/tmp/conf
    entrypoint: ["/bin/bash", "-c"]
    command: >
      "cp -r /home/nacos/conf/. /tmp/conf/ && echo 'Nacos config files copied to host'"

  rabbitmq-extract:
    image: ${RABBITMQ_IMAGE}
    container_name: rabbitmq-extract
    restart: "no"
    volumes:
      - ./rabbitmq/conf:/tmp/conf
    command: >
      bash -c "cp -r /etc/rabbitmq/. /tmp/conf/ && echo 'RabbitMQ config files copied to host'"

  nginx-extract:
    image: ${NGINX_IMAGE}
    container_name: nginx-extract
    restart: "no"
    user: "0:0"
    volumes:
      - ./nginx/conf:/tmp/conf
      - ./nginx/html:/tmp/html
    command: |
      bash -c "echo '=== Starting Nginx config extraction ===';
      if cp -rv /etc/nginx/. /tmp/conf/; then
        echo '/etc/nginx copied successfully';
      else
        echo '/etc/nginx copy failed (possibly empty)';
      fi;
      # Copy static files
      if cp -rv /usr/share/nginx/html/. /tmp/html/; then
        echo '/usr/share/nginx/html copied successfully';
      else
        echo '/usr/share/nginx/html copy failed';
      fi;
      echo '=== Nginx extraction completed ==='"

  emqx-extract:
    image: ${EMQX_IMAGE}
    container_name: emqx-extract
    restart: "no"
    volumes:
      - ./emqx/etc:/tmp/conf
    command: >
      bash -c "cp -r /opt/emqx/etc/. /tmp/conf/ && echo 'EMQX config files copied to host'"

  ossrs-extract:
    image: ${SRS_IMAGE}
    container_name: ossrs-extract
    restart: "no"
    volumes:
      - ./ossrs/conf:/tmp/conf
      - ./ossrs/data:/tmp/data
    command: >
      bash -c "mkdir -p /tmp/conf && cp -r /usr/local/srs/conf/. /tmp/conf/ && echo 'SRS config files copied to host ./ossrs/conf';
      mkdir -p /tmp/data && cp -r /usr/local/srs/objs/nginx/html/. /tmp/data/ && echo 'SRS web files copied to host ./ossrs/data';
      echo 'SRS config and web files extraction completed, service will exit'"
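Because docker-compose.yml bind-mounts these directories (and the single file nginx/conf/nginx.conf), it can help to confirm that the extraction actually produced files before the main services start. When a bind-mount source path does not exist, Docker creates it as an empty directory, which hides the image's defaults and, for nginx.conf, leaves a directory where nginx expects a file. A minimal sketch, assuming the list below mirrors the volumes in the extract file (check_extracted and EXTRACT_DIRS are hypothetical names):

```bash
#!/usr/bin/env bash
# Sketch: confirm the extract step left files in the host directories that
# docker-compose.yml bind-mounts. The list mirrors the volumes in
# docker-compose-extract-configs.yml (assumption: keep it in sync by hand).
EXTRACT_DIRS=(nacos/conf rabbitmq/conf nginx/conf nginx/html emqx/etc ossrs/conf ossrs/data)

# Prints each problem to stderr; returns 0 only if everything is in place.
check_extracted() {
  local base="${1:-.}" status=0 dir
  for dir in "${EXTRACT_DIRS[@]}"; do
    # ls -A includes dotfiles but not . and .., so empty output means an empty directory
    if [ ! -d "$base/$dir" ] || [ -z "$(ls -A "$base/$dir")" ]; then
      echo "missing or empty: $base/$dir" >&2
      status=1
    fi
  done
  # nginx.conf is mounted as a single file, so it must be a regular file
  if [ ! -f "$base/nginx/conf/nginx.conf" ]; then
    echo "missing file: $base/nginx/conf/nginx.conf" >&2
    status=1
  fi
  return "$status"
}
```

Calling `check_extracted . || exit 1` between Step 1 and Step 2 of start.sh would stop the script before it mounts empty directories.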

docker-compose.yml is the compose file that starts the main services:

version: '3.8'
services:
  mysql:
    image: ${MYSQL_IMAGE}
    container_name: mysql
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: ${MYSQL_ROOT_PASSWORD}
      MYSQL_DATABASE: ${MYSQL_DATABASE}
      MYSQL_USER: ${MYSQL_USER}
      MYSQL_PASSWORD: ${MYSQL_PASSWORD}
      TZ: ${TIME_ZONE}
      LANG: C.UTF-8
    ports:
      - "${MYSQL_PORT}:3306"
    volumes:
      - ./mysql/data:/var/lib/mysql
      - ./mysql/logs:/var/log/mysql
      - ./mysqlinit.sql:/docker-entrypoint-initdb.d/init.sql:ro
    networks:
      - app-network
    healthcheck:
      test: ["CMD-SHELL", "mysql -h localhost -uroot -p${MYSQL_ROOT_PASSWORD} -e 'SELECT 1'"]
      interval: 10s
      timeout: 5s
      retries: 5
      start_period: 60s
    deploy:
      resources:
        limits:
          cpus: '2'    # allocate 2 CPU cores
          memory: 5G   # must be larger than innodb_buffer_pool_size (5G > 3G)
    command:
      # --character-set-server=utf8mb4
      # --collation-server=utf8mb4_general_ci
      # --init_connect='SET NAMES utf8mb4'
      # --init_connect='SET collation_connection = utf8mb4_unicode_ci'
      # --max_connections=1000
      [
        "--character-set-server=utf8mb4",
        "--collation-server=utf8mb4_general_ci",
        # note: when an option is given twice, mysqld keeps the last value,
        # so only the second --init_connect below takes effect
        "--init_connect='SET NAMES utf8mb4'",
        "--init_connect='SET collation_connection = utf8mb4_unicode_ci'",
        "--max_connections=1000",
        # The following parameters are tuned for importing large files
        "--innodb_buffer_pool_size=3G",          # buffer pool size (about 70% of memory is commonly recommended)
        "--innodb_log_buffer_size=64M",          # log buffer size
        # "--innodb_flush_log_at_trx_commit=0",  # flush the redo log less often during imports
        # "--innodb_doublewrite=0",              # disable the doublewrite buffer (temporarily, during imports)
        "--max_allowed_packet=256M",             # larger maximum packet size
        "--bulk_insert_buffer_size=256M",        # bulk insert buffer
        "--skip-log-bin",                        # disable the binary log (temporarily)
        "--innodb_autoinc_lock_mode=2",          # better concurrency for auto-increment IDs
      ]

  pg:
    image: ${PG_IMAGE}
    container_name: pg
    restart: always
    environment:
      POSTGRES_USER: ${PG_USER}
      POSTGRES_PASSWORD: ${PG_PASSWORD}
      POSTGRES_DB: ${PG_DB}
      TZ: ${TIME_ZONE}
    ports:
      - "${PG_PORT}:5432"
    volumes:
      - ./pg/data:/var/lib/postgresql/data
      - ./postgis-init.sql:/docker-entrypoint-initdb.d/postgis-init.sql:ro
    networks:
      - app-network
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U ${PG_USER} -d ${PG_DB}"]
      interval: 10s
      timeout: 5s
      retries: 5

  redis:
    image: ${REDIS_IMAGE}
    container_name: redis
    restart: always
    environment:
      TZ: ${TIME_ZONE}
    ports:
      - "${REDIS_PORT}:6379"
    volumes:
      - ./redis/data:/data
      - ./redis/logs:/var/log/redis
    networks:
      - app-network
    command: redis-server --requirepass ${REDIS_PASSWORD} --appendonly yes
    healthcheck:
      test: ["CMD-SHELL", "redis-cli -a ${REDIS_PASSWORD} ping"]
      timeout: 5s
      retries: 3

  nacos:
    image: ${NACOS_IMAGE}
    container_name: nacos
    restart: always
    environment:
      MODE: standalone
      SPRING_DATASOURCE_PLATFORM: mysql
      MYSQL_SERVICE_HOST: mysql
      # containers talk over app-network, so use MySQL's container port,
      # not the host-mapped ${MYSQL_PORT}
      MYSQL_SERVICE_PORT: "3306"
      MYSQL_SERVICE_DB_NAME: ${MYSQL_DATABASE}
      MYSQL_SERVICE_USER: root
      MYSQL_SERVICE_PASSWORD: ${MYSQL_ROOT_PASSWORD}
      MYSQL_SERVICE_DB_PARAM: "characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true&useSSL=false&allowPublicKeyRetrieval=true"
      NACOS_AUTH_USERNAME: ${NACOS_USERNAME}
      NACOS_AUTH_PASSWORD: ${NACOS_PASSWORD}
      NACOS_AUTH_ENABLE: "true"
      NACOS_AUTH_IDENTITY_KEY: serverIdentity
      NACOS_AUTH_IDENTITY_VALUE: security
      NACOS_AUTH_TOKEN: SecretKey012345678901234567890123456789012345678901234567890123456789
    ports:
      - "${NACOS_PORT}:8848"
      - "${NACOS_CLIENT_RPC_PORT}:9848"
      - "${NACOS_SERVER_RPC_PORT}:9849"
    volumes:
      - ./nacos/conf:/home/nacos/conf
      - ./nacos/data:/home/nacos/data
      - ./nacos/logs:/home/nacos/logs
    depends_on:
      mysql:
        condition: service_healthy
    networks:
      - app-network
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:8848/nacos/actuator/health"]
      interval: 30s
      timeout: 10s
      retries: 3

  minio:
    image: ${MINIO_IMAGE}
    container_name: minio
    restart: always
    environment:
      MINIO_ROOT_USER: ${MINIO_ROOT_USER}
      MINIO_ROOT_PASSWORD: ${MINIO_ROOT_PASSWORD}
      TZ: ${TIME_ZONE}
    ports:
      - "${MINIO_PORT}:9000"
      - "${MINIO_CONSOLE_PORT}:9001"
    volumes:
      - ./minio/data:/data
      - ./minio/config:/root/.minio
    networks:
      - app-network
    command: server /data --console-address ":9001"
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:9000/minio/health/live"]
      interval: 30s
      timeout: 20s
      retries: 3

  rabbitmq:
    image: ${RABBITMQ_IMAGE}
    container_name: rabbitmq
    restart: always
    environment:
      RABBITMQ_DEFAULT_USER: ${RABBITMQ_DEFAULT_USER}
      RABBITMQ_DEFAULT_PASS: ${RABBITMQ_DEFAULT_PASS}
      RABBITMQ_DEFAULT_VHOST: /
      TZ: ${TIME_ZONE}
    ports:
      - "${RABBITMQ_PORT}:5672"
      - "${RABBITMQ_MANAGEMENT_PORT}:15672"
    volumes:
      - ./rabbitmq/data:/var/lib/rabbitmq
      - ./rabbitmq/logs:/var/log/rabbitmq
      - ./rabbitmq/conf:/etc/rabbitmq
    networks:
      - app-network
    healthcheck:
      test: ["CMD", "rabbitmq-diagnostics", "check_port_connectivity"]
      interval: 30s
      timeout: 10s
      retries: 3

  nginx:
    image: ${NGINX_IMAGE}
    container_name: nginx
    restart: always
    ports:
      - "${NGINX_PORT}:80"
    volumes:
      - ./nginx/conf/nginx.conf:/etc/nginx/nginx.conf
      - ./nginx/conf/conf.d:/etc/nginx/conf.d
      - ./nginx/html:/usr/share/nginx/html
      - ./nginx/logs:/var/log/nginx
    command: ["nginx", "-g", "daemon off;"]
    networks:
      - app-network
    depends_on:
      - nacos
      - minio

  emqx:
    image: ${EMQX_IMAGE}
    container_name: emqx
    restart: always
    environment:
      TZ: ${TIME_ZONE}
      # EMQX 5.x reads dashboard.default_username / dashboard.default_password
      EMQX_DASHBOARD__DEFAULT_USERNAME: ${EMQX_USERNAME}
      EMQX_DASHBOARD__DEFAULT_PASSWORD: ${EMQX_PASSWORD}
    ports:
      - "${EMQX_PORT}:1883"
      - "${EMQX_DASHBOARD_PORT}:18083"
      - "${EMMQTT_WS_PORT}:8083"
      - "${EMQX_SSL_PORT}:8883"
      - "${EMQX_WSS_PORT}:8084"
    volumes:
      - ./emqx/data:/opt/emqx/data
      - ./emqx/log:/opt/emqx/log
      - ./emqx/etc:/opt/emqx/etc
    networks:
      - app-network
    healthcheck:
      test: ["CMD", "/opt/emqx/bin/emqx_ctl", "status"]
      interval: 30s
      timeout: 10s
      retries: 3

  ossrs:
    image: ${SRS_IMAGE}
    container_name: ossrs
    restart: always
    environment:
      TZ: ${TIME_ZONE}
    ports:
      - "${SRS_RTMP_PORT}:1935/tcp"
      - "${SRS_API_PORT}:1985/tcp"
      - "${SRS_WEBRTC_PORT}:8000/udp"
      - "${SRS_HTTP_PORT}:8080/tcp"
    volumes:
      - ./ossrs/conf:/usr/local/srs/conf
      - ./ossrs/logs:/usr/local/srs/logs
      - ./ossrs/data:/usr/local/srs/objs/nginx/html
    networks:
      - app-network

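networks:
  app-network:
    driver: bridge
    name: app-network

start.ps1 is the PowerShell script for Windows. Run it from the directory that contains the script (the execution policy change applies only to the current PowerShell session):

Set-ExecutionPolicy Bypass -Scope Process -Force; .\start.ps1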

<#
.SYNOPSIS
Full initialization workflow: Extract configs → Start services → Initialize PostgreSQL databases (Windows PowerShell)
#>

# ==============================================
# Step 1: Extract configs (docker-compose-extract-configs.yml)
# ==============================================
Write-Host "`n=== [1/3] Extracting configuration files ===" -ForegroundColor Cyan
Write-Host "Starting config extraction container..." -ForegroundColor Gray
docker compose -f docker-compose-extract-configs.yml up -d 2>&1 | Out-Null
Write-Host "Waiting for config extraction (10s)..." -ForegroundColor Gray
Start-Sleep -Seconds 10
Write-Host "Stopping and cleaning up config container..." -ForegroundColor Gray
docker compose -f docker-compose-extract-configs.yml down -v 2>&1 | Out-Null

# ==============================================
# Step 2: Start main services (docker-compose.yml)
# ==============================================
Write-Host "`n=== [2/3] Starting main services ===" -ForegroundColor Cyan
Write-Host "Starting main containers (docker compose up -d)..." -ForegroundColor Gray
docker compose up -d 2>&1 | Out-Null
Write-Host "Waiting for services to be ready (15s)..." -ForegroundColor Gray
Start-Sleep -Seconds 15

# ==============================================
# Step 3: Initialize databases
# ==============================================
Write-Host "`n=== [3/3] Initializing databases ===" -ForegroundColor Cyan
$DB_SQL_PAIRS = @(
    "uav:uav_dump.sql",
    "base_cloud:base_cloud_dump.sql",
    "zhifa:zhifa_dump.sql",
    "job:job_dump.sql"
)

foreach ($PAIR in $DB_SQL_PAIRS) {
    $DB_NAME = $PAIR -split ':' | Select-Object -First 1
    $SQL_FILE = $PAIR -split ':' | Select-Object -Last 1
    Write-Host "`n--- Processing $DB_NAME ($SQL_FILE) ---" -ForegroundColor DarkCyan

    # Create database. A failing native command (docker) does not throw,
    # so try/catch never fires; check $LASTEXITCODE instead.
    docker exec pg createdb -U postgres -O postgres $DB_NAME 2>&1 | Out-Null
    if ($LASTEXITCODE -eq 0) {
        Write-Host "Database '$DB_NAME' created successfully" -ForegroundColor Green
    } else {
        Write-Host "Database '$DB_NAME' already exists (skipping)" -ForegroundColor Yellow
    }

    # Copy SQL file to container
    if (-not (Test-Path "./$SQL_FILE")) {
        Write-Host "Failed to copy SQL file '$SQL_FILE' (file not found)" -ForegroundColor Red
        exit 1
    }
    docker cp "./$SQL_FILE" "pg:/tmp/$SQL_FILE" 2>&1 | Out-Null
    if ($LASTEXITCODE -ne 0) {
        Write-Host "Failed to copy SQL file '$SQL_FILE' to container" -ForegroundColor Red
        exit 1
    }
    Write-Host "SQL file '$SQL_FILE' copied to container" -ForegroundColor Green

    # Import SQL file
    docker exec pg psql -U postgres -d $DB_NAME -f "/tmp/$SQL_FILE" 2>&1 | Out-Null
    if ($LASTEXITCODE -ne 0) {
        Write-Host "Failed to import SQL file to '$DB_NAME'" -ForegroundColor Red
        exit 1
    }
    Write-Host "SQL file imported to '$DB_NAME' successfully" -ForegroundColor Green
}

Write-Host "All workflows completed! Databases initialized successfully!" -ForegroundColor Green

start.sh is the equivalent script for Linux:

#!/bin/bash
set -euo pipefail  # strict mode: exit on errors, undefined variables, and pipeline failures

# ==============================================
# Step 1: Extract configs (docker-compose-extract-configs.yml)
# ==============================================
echo -e "\n=== [1/3] Extracting configuration files ==="
if [ ! -f "docker-compose-extract-configs.yml" ]; then
  echo "Error: docker-compose-extract-configs.yml not found!" >&2
  exit 1
fi
echo "Starting config extraction container..."
docker compose -f docker-compose-extract-configs.yml up -d > /dev/null 2>&1
echo "Waiting for config extraction (10s)..."
sleep 10
echo "Stopping and cleaning up config container..."
docker compose -f docker-compose-extract-configs.yml down -v > /dev/null 2>&1

# ==============================================
# Step 2: Start main services (docker-compose.yml)
# ==============================================
echo -e "\n=== [2/3] Starting main services ==="
if [ ! -f "docker-compose.yml" ]; then
  echo "Error: docker-compose.yml not found!" >&2
  exit 1
fi
echo "Starting main containers (docker compose up -d)..."
docker compose up -d > /dev/null 2>&1
echo "Waiting for services to be ready (15s)..."
sleep 15

# Check that the pg container is running. docker inspect succeeds for a stopped
# container too (it prints "false"), so compare the output instead of the exit code.
if [ "$(docker inspect -f '{{.State.Running}}' pg 2>/dev/null)" != "true" ]; then
  echo "Error: PostgreSQL container 'pg' is not running!" >&2
  exit 1
fi

# ==============================================
# Step 3: Initialize databases
# ==============================================
echo -e "\n=== [3/3] Initializing databases ==="
# Database-to-SQL-file mapping (bash array)
DB_SQL_PAIRS=(
  "uav:uav_dump.sql"
  "base_cloud:base_cloud_dump.sql"
  "zhifa:zhifa_dump.sql"
  "job:job_dump.sql"
)

for PAIR in "${DB_SQL_PAIRS[@]}"; do
  # Split into database name and SQL file name with parameter expansion
  DB_NAME="${PAIR%:*}"
  SQL_FILE="${PAIR#*:}"
  echo -e "\n--- Processing $DB_NAME ($SQL_FILE) ---"

  # 1. Create the database
  if docker exec pg createdb -U postgres -O postgres "$DB_NAME" > /dev/null 2>&1; then
    echo "✅ Database '$DB_NAME' created successfully"
  else
    echo "ℹ️ Database '$DB_NAME' already exists (skipping)"
  fi

  # 2. Copy the SQL file into the container
  if [ -f "./$SQL_FILE" ]; then
    docker cp "./$SQL_FILE" "pg:/tmp/$SQL_FILE" > /dev/null 2>&1
    echo "✅ SQL file '$SQL_FILE' copied to container"
  else
    echo "❌ Failed to copy SQL file: './$SQL_FILE' not found!" >&2
    exit 1
  fi

  # 3. Import the SQL file (on failure, show recent container logs)
  echo "Importing SQL file to '$DB_NAME'..."
  if docker exec pg psql -U postgres -d "$DB_NAME" -f "/tmp/$SQL_FILE" > /dev/null 2>&1; then
    echo "✅ SQL file imported to '$DB_NAME' successfully"
  else
    echo "❌ Failed to import SQL to '$DB_NAME'! Check container logs:" >&2
    docker logs pg --tail 20 >&2  # show the last 20 lines of the pg container log
    exit 1
  fi
done

echo -e "\n🎉 All workflows completed! Databases initialized successfully!"
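Both scripts wait a fixed 10 or 15 seconds, which may be too short on the first run while images are still being pulled and databases initialized. One alternative is to poll until a condition holds. The sketch below uses a hypothetical helper, wait_for_output, that reruns a command until its output equals an expected string or a timeout passes. For the pg service, which defines a healthcheck, `docker inspect -f '{{.State.Health.Status}}' pg` prints healthy once pg_isready succeeds. For the extraction step, running `docker compose -f docker-compose-extract-configs.yml up` without -d already blocks until the one-shot containers have exited.

```bash
#!/usr/bin/env bash
# Sketch: rerun a command until its stdout equals an expected string,
# giving up after a timeout. Intended to replace fixed sleeps such as "sleep 15".
# Usage: wait_for_output <expected> <timeout_seconds> <interval_seconds> <command> [args...]
wait_for_output() {
  local expected="$1" timeout="$2" interval="$3"
  shift 3
  # SECONDS is bash's built-in count of seconds since the shell started
  local deadline=$((SECONDS + timeout)) out
  while :; do
    # Errors (e.g. the container does not exist yet) are ignored; only stdout is compared
    out="$("$@" 2>/dev/null || true)"
    if [ "$out" = "$expected" ]; then
      return 0
    fi
    if [ "$SECONDS" -ge "$deadline" ]; then
      echo "Timed out after ${timeout}s waiting for: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
}
```

In start.sh, `sleep 15` could become `wait_for_output healthy 120 2 docker inspect -f '{{.State.Health.Status}}' pg || exit 1`; the 120-second limit and 2-second interval are arbitrary choices.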

All other files are SQL initialization scripts; configure them as needed. Below are some basic Docker commands:

# #启动指定yml文件所有服务

docker compose -f docker-compose-extract-configs.yml up # 停止指定yml文件所有服务的容器(额外删除由 Compose 管理的 命名卷(named volumes) 和 绑定挂载的匿名卷(但不会删除宿主机的挂载目录本身))

docker compose -f docker-compose-extract-configs.yml down -v #启动所有服务

docker-compose up -d#查看服务状态

docker-compose ps# 查看特定服务日志(如postgres)

docker-compose logs -f pg#停止所有服务

docker-compose down#停止并删除数据卷

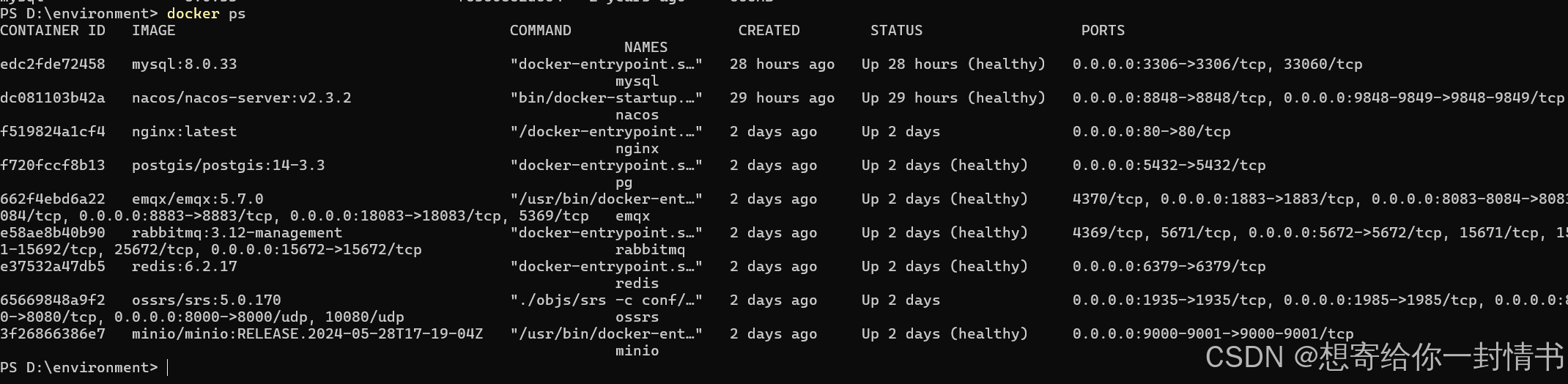

docker-compose down -v第一次执行脚本需要拉取镜像比较慢,脚本执行完毕容器都成功启动
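Once everything is up, the web consoles are reachable on the host ports configured in .env. The sketch below is a hypothetical convenience script (env_get and print_consoles are illustrative names) that reads those ports with grep instead of sourcing .env, so passwords containing characters such as # or $ are never interpreted by the shell, and prints the URLs. It assumes the consoles are opened from the Docker host itself (localhost) unless another host name is passed as the second argument.

```bash
#!/usr/bin/env bash
# Sketch: print the web console URLs using the ports configured in .env.
# Values are read with grep/sed rather than "source .env", so nothing is evaluated.
env_get() {
  local key="$1" file="$2"
  # Take the last KEY=... line, strip "KEY=" and any " # comment" suffix
  grep -E "^${key}=" "$file" | tail -n 1 | sed -E "s/^${key}=//; s/[[:space:]]+#.*//"
}

print_consoles() {
  local env_file="${1:-.env}" host="${2:-localhost}"
  echo "Nacos:    http://${host}:$(env_get NACOS_PORT "$env_file")/nacos"
  echo "MinIO:    http://${host}:$(env_get MINIO_CONSOLE_PORT "$env_file")"
  echo "RabbitMQ: http://${host}:$(env_get RABBITMQ_MANAGEMENT_PORT "$env_file")"
  echo "EMQX:     http://${host}:$(env_get EMQX_DASHBOARD_PORT "$env_file")"
}
```

For example, `print_consoles .env` run next to the .env above would list http://localhost:8848/nacos for Nacos and port 9001 for the MinIO console.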