Scrapy framework: day 02

Scrapy project files

scrapy.cfg

    # Automatically created by: scrapy startproject
    #
    # For more information about the [deploy] section see:
    # https://scrapyd.readthedocs.io/en/latest/deploy.html

    [settings]
    default = day01.settings

    [deploy]
    #url = http://localhost:6800/
    project = day01

The [settings] section names the project's settings module, which is our settings.py.

The [deploy] section covers project deployment, which will be explained in detail later.

items.py

This file describes how the scraped data is stored, that is, which fields of the scraped content are kept. In other words, it defines the data model.

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://docs.scrapy.org/en/latest/topics/items.html

    import scrapy


    class Day01Item(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        pass

middlewares.py

    # Define here the models for your spider middleware
    #
    # See documentation in:
    # https://docs.scrapy.org/en/latest/topics/spider-middleware.html

    from scrapy import signals

    # useful for handling different item types with a single interface
    from itemadapter import ItemAdapter


    # Spider middleware
    class Day01SpiderMiddleware:
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the spider middleware does not modify the
        # passed objects.

        @classmethod
        def from_crawler(cls, crawler):
            # This method is used by Scrapy to create your spiders.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            return s

        def process_spider_input(self, response, spider):
            # Called for each response that goes through the spider
            # middleware and into the spider.

            # Should return None or raise an exception.
            return None

        def process_spider_output(self, response, result, spider):
            # Called with the results returned from the Spider, after
            # it has processed the response.

            # Must return an iterable of Request, or item objects.
            for i in result:
                yield i

        def process_spider_exception(self, response, exception, spider):
            # Called when a spider or process_spider_input() method
            # (from other spider middleware) raises an exception.

            # Should return either None or an iterable of Request or item objects.
            pass

        async def process_start(self, start):
            # Called with an async iterator over the spider start() method or the
            # matching method of an earlier spider middleware.
            async for item_or_request in start:
                yield item_or_request

        def spider_opened(self, spider):
            spider.logger.info("Spider opened: %s" % spider.name)


    # Downloader middleware
    class Day01DownloaderMiddleware:
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the downloader middleware does not modify the
        # passed objects.

        @classmethod
        def from_crawler(cls, crawler):
            # This method is used by Scrapy to create your spiders.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            return s

        def process_request(self, request, spider):
            # Called for each request that goes through the downloader
            # middleware.

            # Must either:
            # - return None: continue processing this request
            # - or return a Response object
            # - or return a Request object
            # - or raise IgnoreRequest: process_exception() methods of
            #   installed downloader middleware will be called
            return None

        def process_response(self, request, response, spider):
            # Called with the response returned from the downloader.

            # Must either:
            # - return a Response object
            # - return a Request object
            # - or raise IgnoreRequest
            return response

        def process_exception(self, request, exception, spider):
            # Called when a download handler or a process_request()
            # (from other downloader middleware) raises an exception.

            # Must either:
            # - return None: continue processing this exception
            # - return a Response object: stops process_exception() chain
            # - return a Request object: stops process_exception() chain
            pass

        def spider_opened(self, spider):
            spider.logger.info("Spider opened: %s" % spider.name)

This file is where you write custom behavior when you need it. It contains two middlewares: a spider middleware and a downloader middleware. For example, a downloader middleware can replace the User-Agent in the request headers, or route the request through a proxy IP, before the request is sent.
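The User-Agent swap mentioned above can be sketched as a small downloader middleware. This is a minimal illustration, not the notes' own code: the class name `RandomUserAgentMiddleware` and the `USER_AGENTS` pool are hypothetical, and the method signature follows the generated template shown in this section.

```python
import random

# Hypothetical pool of User-Agent strings (an assumption for this sketch);
# replace these with the values you actually want to send.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/140.0.0.0 Safari/537.36",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/140.0.0.0 Safari/537.36",
]


class RandomUserAgentMiddleware:
    """Downloader middleware sketch: choose a User-Agent for each request."""

    def process_request(self, request, spider):
        # Overwrite the User-Agent header before the request is downloaded.
        request.headers["User-Agent"] = random.choice(USER_AGENTS)
        # A proxy could be set in the same place, for example:
        # request.meta["proxy"] = "http://127.0.0.1:8888"  # hypothetical address
        # Returning None tells Scrapy to continue processing this request.
        return None
```

A class like this only takes effect once it is listed in the DOWNLOADER_MIDDLEWARES setting in settings.py, in the same way the template lists Day01DownloaderMiddleware.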

pipelines.py

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

    # useful for handling different item types with a single interface
    from itemadapter import ItemAdapter


    class Day01Pipeline:
        def process_item(self, item, spider):
            return item

This file is mainly used for data cleaning, saving, and related operations.

settings.py

    # Scrapy settings for day01 project
    #
    # For simplicity, this file contains only settings considered important or
    # commonly used. You can find more settings consulting the documentation:
    #
    #     https://docs.scrapy.org/en/latest/topics/settings.html
    #     https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
    #     https://docs.scrapy.org/en/latest/topics/spider-middleware.html

    BOT_NAME = "day01"

    SPIDER_MODULES = ["day01.spiders"]
    NEWSPIDER_MODULE = "day01.spiders"

    ADDONS = {}

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/140.0.0.0 Safari/537.36"

    # Obey robots.txt rules
    # (robots.txt is the "gentlemen's agreement" between sites and crawlers)
    ROBOTSTXT_OBEY = False

    # Concurrency and throttling settings
    #CONCURRENT_REQUESTS = 16
    CONCURRENT_REQUESTS_PER_DOMAIN = 1
    # Delay between requests, in seconds
    DOWNLOAD_DELAY = 2

    # Disable cookies (enabled by default)
    #COOKIES_ENABLED = False

    # Disable Telnet Console (enabled by default)
    #TELNETCONSOLE_ENABLED = False

    # Override the default request headers:
    #DEFAULT_REQUEST_HEADERS = {
    #    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    #    "Accept-Language": "en",
    #}

    # Enable or disable spider middlewares
    # See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
    #SPIDER_MIDDLEWARES = {
    #    "day01.middlewares.Day01SpiderMiddleware": 543,
    #}

    # Enable or disable downloader middlewares
    # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
    #DOWNLOADER_MIDDLEWARES = {
    #    "day01.middlewares.Day01DownloaderMiddleware": 543,
    #}

    # Enable or disable extensions
    # See https://docs.scrapy.org/en/latest/topics/extensions.html
    #EXTENSIONS = {
    #    "scrapy.extensions.telnet.TelnetConsole": None,
    #}

    # Configure item pipelines
    # See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
    #ITEM_PIPELINES = {
    #    "day01.pipelines.Day01Pipeline": 300,
    #}

    # Enable and configure the AutoThrottle extension (disabled by default)
    # See https://docs.scrapy.org/en/latest/topics/autothrottle.html
    #AUTOTHROTTLE_ENABLED = True
    # The initial download delay
    #AUTOTHROTTLE_START_DELAY = 5
    # The maximum download delay to be set in case of high latencies
    #AUTOTHROTTLE_MAX_DELAY = 60
    # The average number of requests Scrapy should be sending in parallel to
    # each remote server
    #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
    # Enable showing throttling stats for every response received:
    #AUTOTHROTTLE_DEBUG = False

    # Enable and configure HTTP caching (disabled by default)
    # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
    #HTTPCACHE_ENABLED = True
    #HTTPCACHE_EXPIRATION_SECS = 0
    #HTTPCACHE_DIR = "httpcache"
    #HTTPCACHE_IGNORE_HTTP_CODES = []
    #HTTPCACHE_STORAGE = "scrapy.extensions.httpcache.FilesystemCacheStorage"

    # Set settings whose default value is deprecated to a future-proof value
    FEED_EXPORT_ENCODING = "utf-8"

In this file you can set the User-Agent, choose whether to obey robots.txt (the "gentlemen's agreement"), enable pipelines, and so on.

Extracting data

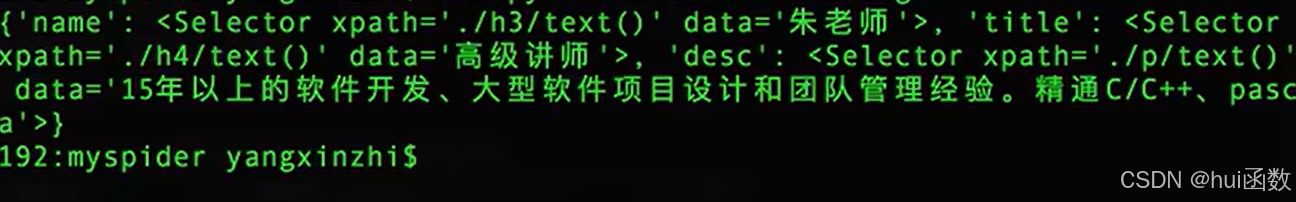

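In Scrapy, data is extracted with XPath expressions. What an XPath query returns, however, is a list of Selector objects rather than plain strings, as shown in the figure below.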

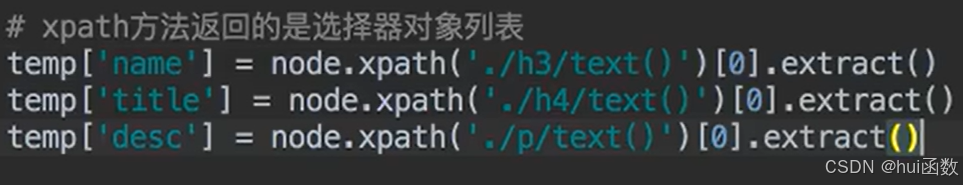

There are two ways to get the actual data out. Method 1 takes the first element of the list and then calls extract() on it, as shown below.

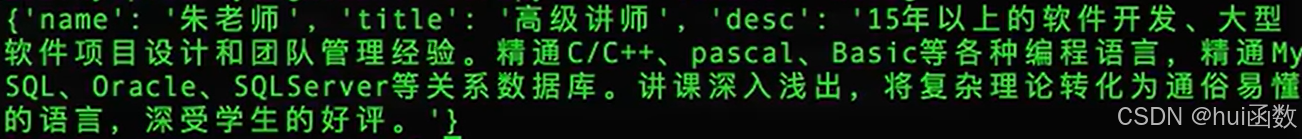

The final result is:

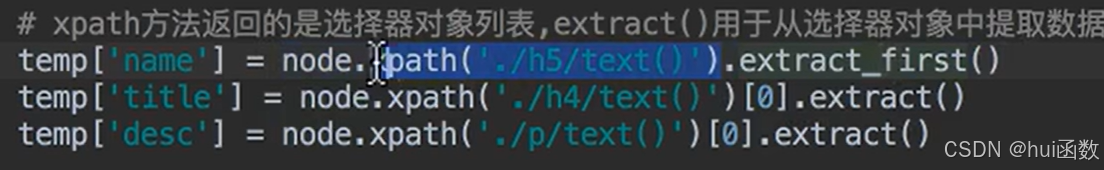

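Method 2 calls extract_first() directly on the result, so no indexing is needed.

Note: the two methods differ when the XPath expression is wrong and therefore matches nothing, which leaves the list empty. Method 1 then raises an error, because it indexes into an empty list. Method 2 does not fail: when there is nothing to extract, extract_first() returns None.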

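Notes on files in the spiders directory

A spider class that inherits from scrapy.Spider must define a parse method, which is the default callback for parsing responses.

If the site has a complex, multi-level structure, you can define additional parse functions of your own.

A URL extracted inside a parse function must fall within allowed_domains if a request is to be sent to it. The URLs in start_urls are not subject to this restriction.

Start the spider from inside the project directory.

The parse() function can hand data back with yield. Note that a parse function may only yield Request objects, item objects (for example scrapy.Item instances, called BaseItem in older Scrapy versions, or dict), or None.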

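Common attributes of the response object

response.url: the URL of the current response

response.request.url: the URL of the request that produced the current response (the request URL and the response URL may differ)

response.headers: the response headers

response.request.headers: the headers of the request that produced the current response

response.body: the response body, that is, the HTML source, as bytes

response.status: the HTTP status code of the response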

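Saving data

Working with the data in pipelines.py:

Define a pipeline class

Implement the pipeline class's process_item method

After process_item has processed an item, it must return the item to the engine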

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

    # useful for handling different item types with a single interface
    from itemadapter import ItemAdapter


    class Day01Pipeline:
        # item is the data yielded by the spider; after processing,
        # it must be returned to the engine
        # spider is the spider the item came from; spider.name gives its name
        def process_item(self, item, spider):
            # process the item here, for example save it to a file
            return item

Enable the pipeline in settings.py:

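    # line 63 of settings.py
    ITEM_PIPELINES = {
        "day01.pipelines.Day01Pipeline": 300,
    }

Note: the number 300 sets the order in which pipelines run. The lower the number, the earlier the pipeline runs.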
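As an illustration of the "save to a file" case, here is a minimal sketch of a pipeline that writes each item as one JSON line. The class name `JsonLinesPipeline` and the default file name `items.jsonl` are assumptions made for this example; `open_spider` and `close_spider` are the optional pipeline hooks that Scrapy calls when the spider starts and finishes.

```python
import json


class JsonLinesPipeline:
    """Pipeline sketch: append every item to a JSON Lines file."""

    def __init__(self, path="items.jsonl"):
        # Hypothetical output path; Scrapy creates the pipeline with no
        # arguments, so the default value is what a real run would use.
        self.path = path
        self.file = None

    def open_spider(self, spider):
        # Called once when the spider is opened.
        self.file = open(self.path, "w", encoding="utf-8")

    def close_spider(self, spider):
        # Called once when the spider is closed.
        self.file.close()

    def process_item(self, item, spider):
        # ensure_ascii=False keeps non-ASCII text readable in the file.
        self.file.write(json.dumps(dict(item), ensure_ascii=False) + "\n")
        # Return the item so that later pipelines still receive it.
        return item
```

To use it alongside Day01Pipeline, it would get its own entry in ITEM_PIPELINES, for example with the value 400 so that it runs after the pipeline registered at 300.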