实战Kaggle比赛:狗的品种识别(ImageNet Dogs)

比赛链接:Dog Breed Identification | Kaggle

在这场比赛中,我们将识别120类不同品种的狗。 这个数据集实际上是著名的ImageNet的数据集子集。

这里我们选择小批量进行训练出于学习目的

代码实现

先导入

import os

import torch

import torchvision

from torch import nn

from d2l import torch as d2l下载小数据集

d2l.DATA_HUB['dog_tiny'] = (d2l.DATA_URL + 'kaggle_dog_tiny.zip','0cb91d09b814ecdc07b50f31f8dcad3e81d6a86d')# 如果使用Kaggle比赛的完整数据集,请将下面的变量更改为False

demo = True

if demo:data_dir = d2l.download_extract('dog_tiny')

else:data_dir = os.path.join('..', 'data', 'dog-breed-identification')划分数据

def reorg_dog_data(data_dir, valid_ratio):labels = d2l.read_csv_labels(os.path.join(data_dir, 'labels.csv'))d2l.reorg_train_valid(data_dir, labels, valid_ratio)d2l.reorg_test(data_dir)batch_size = 32 if demo else 128

valid_ratio = 0.1

reorg_dog_data(data_dir, valid_ratio)数据增强

transform_train = torchvision.transforms.Compose([# 随机裁剪图像,所得图像为原始面积的0.08~1之间,高宽比在3/4和4/3之间。# 然后,缩放图像以创建224x224的新图像torchvision.transforms.RandomResizedCrop(224,scale=(0.8,1.0),ratio=(0.75,1.3)),# 随机水平翻转图像torchvision.transforms.RandomHorizontalFlip(),# 随机变化亮度、对比度和饱和度torchvision.transforms.ColorJitter(brightness=0.4,contrast=0.4,saturation=0.4),torchvision.transforms.ToTensor(),# 标准化图像torchvision.transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

])

transform_test = torchvision.transforms.Compose([torchvision.transforms.Resize(256),torchvision.transforms.CenterCrop(224),torchvision.transforms.ToTensor(),torchvision.transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

])dataset

train_ds, train_valid_ds = [torchvision.datasets.ImageFolder(os.path.join(data_dir, 'train_valid_test', folder),transform=transform_train) for folder in ['train', 'train_valid']]valid_ds, test_ds = [torchvision.datasets.ImageFolder(os.path.join(data_dir, 'train_valid_test', folder),transform=transform_test) for folder in ['valid', 'test']]dataset iter

train_iter,train_valid_iter=[torch.utils.data.DataLoader( dataset,batch_size=32,shuffle=True,num_workers=4,drop_last=True) for dataset in [train_ds,train_valid_ds]]

valid_iter = torch.utils.data.DataLoader(valid_ds, batch_size, shuffle=False,drop_last=True)test_iter = torch.utils.data.DataLoader(test_ds, batch_size, shuffle=False,drop_last=False)迁移学习

# 迁移学习,不重新训练用于特征提取的预训练模型,训练的小型自定义输出网络替换原始输出层

# 微调是迁移学习的一种具体技术,指在预训练模型的基础上,通过继续训练(调整部分或全部参数)来适应新任务,FLOPS大

# 这里我们使用迁移学习,计算量小,只需要训练一个小型自定义输出网络

def get_net(devices):model=torchvision.models.vit_b_16(pretrained=True)# 获取原始分类头的输入特征维度num_classifier_feature = model.heads.head.in_features# 定义一个新的输出网络,共有120个输出类别model.heads.head = nn.Sequential(nn.Linear(num_classifier_feature, 120))# 将模型移动到指定设备(如GPU)model = model.to(devices[0])# 冻结全部for param in model.parameters():param.requires_grad = False# 只训练新添加的分类头(解冻)for param in model.heads.head.parameters():param.requires_grad = Truereturn modelloss评估函数

loss = nn.CrossEntropyLoss(reduction='none')def evaluate_loss(data_iter, net, devices):l_sum, n = 0.0, 0for features, labels in data_iter:features, labels = features.to(devices[0]), labels.to(devices[0])outputs = net(features)l = loss(outputs, labels)l_sum += l.sum()n += labels.numel()return (l_sum / n).to('cpu')train code

def train(net, train_iter, valid_iter, num_epochs, lr, wd, devices, lr_period,lr_decay):# 只训练小型自定义输出网络net = nn.DataParallel(net, device_ids=devices).to(devices[0])trainer = torch.optim.SGD((param for param in net.parameters()if param.requires_grad), lr=lr,momentum=0.9, weight_decay=wd)scheduler = torch.optim.lr_scheduler.StepLR(trainer, lr_period, lr_decay)num_batches, timer = len(train_iter), d2l.Timer()legend = ['train loss']if valid_iter is not None:legend.append('valid loss')animator = d2l.Animator(xlabel='epoch', xlim=[1, num_epochs],legend=legend)for epoch in range(num_epochs):metric = d2l.Accumulator(2)for i, (features, labels) in enumerate(train_iter):timer.start()features, labels = features.to(devices[0]), labels.to(devices[0])trainer.zero_grad()output = net(features)l = loss(output, labels).sum()l.backward()trainer.step()metric.add(l, labels.shape[0])timer.stop()if (i + 1) % (num_batches // 5) == 0 or i == num_batches - 1:animator.add(epoch + (i + 1) / num_batches,(metric[0] / metric[1], None))measures = f'train loss {metric[0] / metric[1]:.3f}'if valid_iter is not None:valid_loss = evaluate_loss(valid_iter, net, devices)animator.add(epoch + 1, (None, valid_loss.detach().cpu()))scheduler.step()if valid_iter is not None:measures += f', valid loss {valid_loss:.3f}'print(measures + f'\n{metric[1] * num_epochs / timer.sum():.1f}'f' examples/sec on {str(devices)}')devices, num_epochs, lr, wd = d2l.try_all_gpus(), 30, 1e-4, 2e-4

lr_period, lr_decay, net = 2, 0.9, get_net(devices)

train(net, train_iter, valid_iter, num_epochs, lr, wd, devices, lr_period,lr_decay)

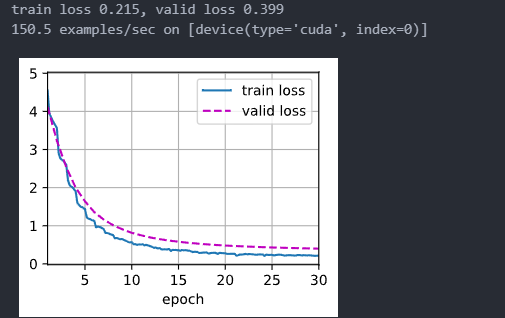

# train loss 0.215, valid loss 0.399

# 150.5 examples/sec on [device(type='cuda', index=0)]

可以看到30轮后面差不多就收敛了,当然还有很多提升空间,比如数据增强or使用vit_l_16或者更大的预训练模型做迁移学习,这里不多加概述

一个低loss的代码实现:nb.pretrained.vit - Loss : 0.09921

完整代码实现:dive-into-deep-learning/d2l/dog-breed-identification/main.ipynb at main · hllqkb/dive-into-deep-learning