Building a Local Chatbot in Java with Spring Boot + Ollama

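Contents

- What is Ollama

- Installing Ollama

- Downloading a model

- Running the model

- Java and IDEA versions

- Creating a Spring Boot project

- Creating a simple chat endpoint

- Starting Spring Boot

- Streaming chat output

- Building an interactive front end in plain HTML

- Connecting cloud models such as OpenAI and DeepSeek (optional)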

Link to the original article

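My goal is a helper tool for running LLMs locally, similar to the AI assistants that phone vendors now ship. Combined with MCP (Model Context Protocol), it could let the AI interact with the file system, generate files automatically, and more.

The original article is quite terse, though, and many details are easy to trip over, so I'm documenting them here.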

What is Ollama

Ollama is a simple, easy-to-use tool for running large models locally. It lets you deploy and run LLMs directly on your own computer.

Installing Ollama

Download the installer from the official site (Windows/Mac/Linux are supported):

https://ollama.com/download

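Running the downloaded installer installs Ollama to the C: drive in one click. I didn't expect that it gives you no way to choose the path.

Launching the client opens a chat window where you can talk to the AI.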

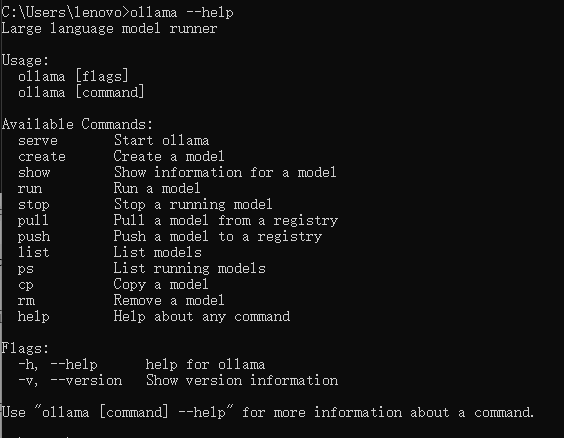

In a command prompt (cmd), run

ollama --help

If help output like the following appears, the installation succeeded.

Downloading a model

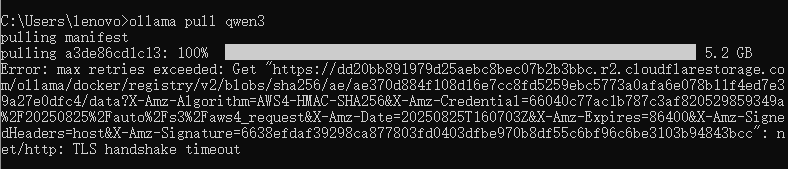

Again, run the following in cmd:

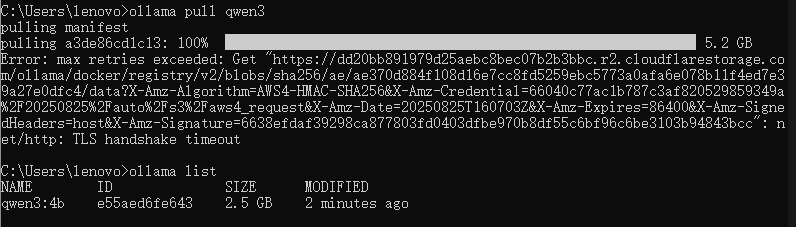

ollama pull qwen3

Here qwen3 is the model I plan to use. The original article used gemma3:1b, but I intend to add MCP later and Qwen3 supports it, so I chose Qwen3.

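If you don't have a proxy, some guides suggest pulling through an Alibaba Cloud mirror like this:

ollama pull qwen3 --registry https://hub.aliyun.com # Alibaba Cloud mirror

Be aware that `ollama pull` has no `--registry` option, so this command cannot switch to a mirror. In my case the download ended with a TLS timeout.

- Likely cause: network restrictions in mainland China (firewall/network policy) interfere with TLS connections to servers abroad

- By default Ollama pulls models from its own registry (registry.ollama.ai), not from Hugging Face. That registry is hosted abroad, so the TLS handshake from within China may not complete and the download times out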

Despite this, everything worked for me in practice. To verify the installation, run:

ollama list

If the model you want appears in the list, the installation succeeded.
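Ollama exposes the same information through its local REST API at `GET /api/tags`, which is also how programs such as Spring Boot talk to it. Below is a minimal sketch of a Java equivalent of `ollama list` that uses only the JDK's built-in `HttpClient`. It assumes Ollama is listening on its default address `http://127.0.0.1:11434`. The class name `OllamaModels` is hypothetical, and the regex-based parsing is a shortcut standing in for a real JSON library.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: a Java equivalent of `ollama list` via Ollama's REST API. */
final class OllamaModels {

    // Matches every "name":"..." field in the /api/tags JSON (naive; no JSON library).
    private static final Pattern NAME = Pattern.compile("\"name\"\\s*:\\s*\"([^\"]+)\"");

    /** Extracts model names such as "qwen3:4b" from the /api/tags response body. */
    static List<String> extractModelNames(String json) {
        List<String> names = new ArrayList<>();
        Matcher m = NAME.matcher(json);
        while (m.find()) {
            names.add(m.group(1));
        }
        return names;
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        // Assumption: Ollama runs locally on its default port.
        HttpRequest request = HttpRequest.newBuilder(URI.create("http://127.0.0.1:11434/api/tags"))
                .GET()
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println("HTTP " + response.statusCode());
        extractModelNames(response.body()).forEach(System.out::println);
    }
}
```

A connection error here usually means the Ollama service is not running, rather than a problem with the model itself.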

Running the model

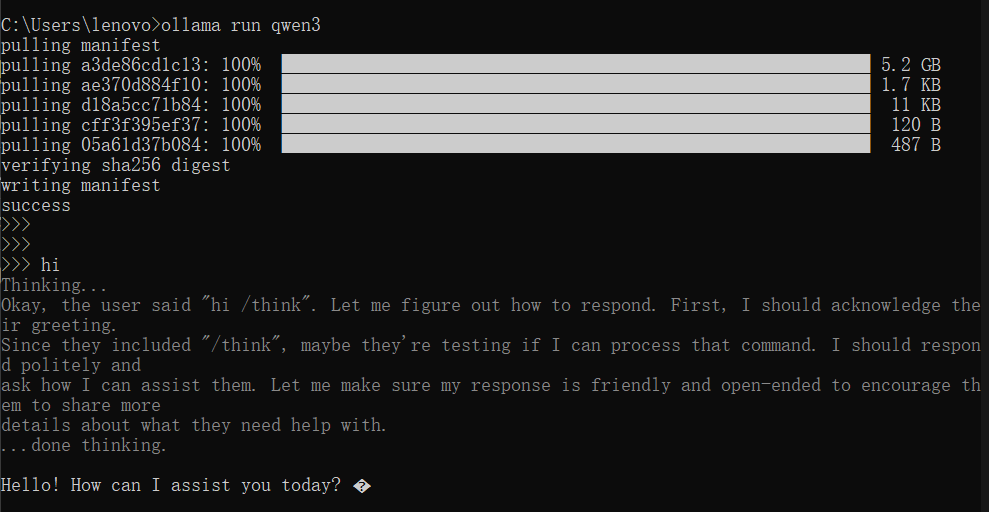

Run the following in cmd again. Ollama automatically downloads anything that is missing and then starts the model.

ollama run qwen3

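In my test it handled Chinese Q&A, showing both its thinking process and its final answer.

Type /bye to end the conversation.

The local model is now set up. Keep Ollama running: it serves an HTTP API on http://127.0.0.1:11434 by default, and the Spring Boot app will call that API.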
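Under the hood, Spring AI talks to this service through Ollama's REST API. The sketch below shows roughly what such a call looks like: a single non-streaming request to `POST /api/chat`, using only the JDK's `HttpClient`. It assumes Ollama is on its default address and that the `qwen3:4b` tag (the one used in the Spring configuration later in this article) has been pulled. The class and helper names are hypothetical, and the hand-rolled JSON escaping stands in for a real JSON library.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

/** Hypothetical sketch: one non-streaming chat request sent straight to Ollama's /api/chat. */
final class OllamaChatDemo {

    /** Escapes a string for a JSON string literal (quotes, backslashes, control characters). */
    static String jsonEscape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
                }
            }
        }
        return sb.toString();
    }

    /** Builds the body: one user message, streaming disabled, and a few sampling options. */
    static String buildChatBody(String model, String prompt) {
        return "{\"model\":\"" + jsonEscape(model) + "\","
                + "\"messages\":[{\"role\":\"user\",\"content\":\"" + jsonEscape(prompt) + "\"}],"
                + "\"stream\":false,"
                + "\"options\":{\"temperature\":0.7,\"top_p\":0.9,\"num_predict\":512}}";
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        String body = buildChatBody("qwen3:4b", "你好");
        HttpRequest request = HttpRequest.newBuilder(URI.create("http://127.0.0.1:11434/api/chat"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // The reply text is in the "message.content" field of the returned JSON.
        System.out.println(response.body());
    }
}
```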

Java and IDEA versions

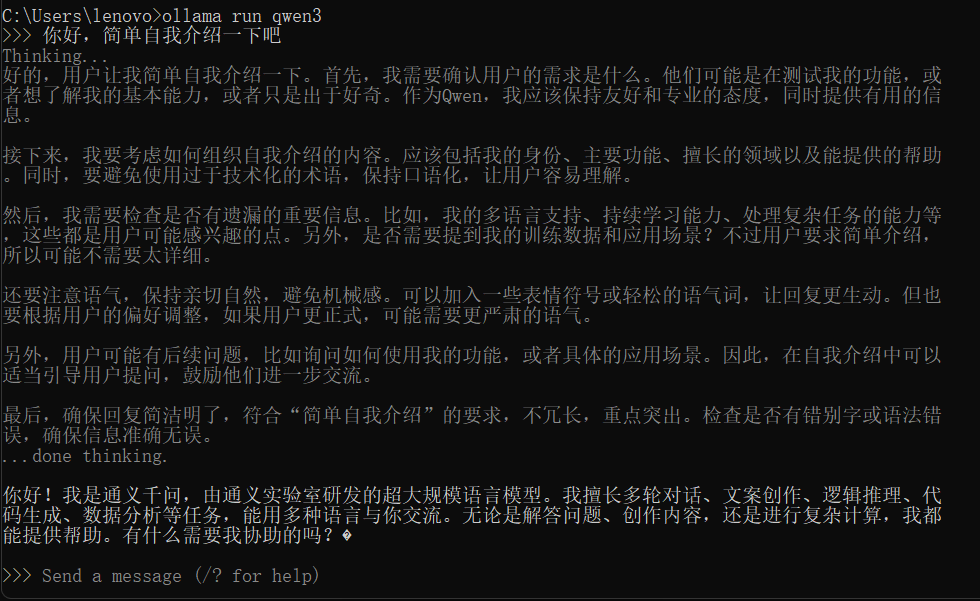

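Spring AI 1.0.1 requires Java 17, but my Java was still the ancient Java 8, so I upgraded it by following a tutorial.

It's worth mentioning that Java is already up to version 24. I debated installing the newest one so I wouldn't have to upgrade again later, so I asked an AI: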
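With several JDKs installed, it is easy to lose track of which one a build or run actually uses. One quick way to find out is to ask the runtime itself. The check below is a hypothetical helper, and the class name is made up. It needs Java 10+ to compile because it calls `Runtime.version().feature()`, so on Java 8 the compile failure is itself the answer.

```java
/** Hypothetical check: fail fast if the running JDK is older than what Spring AI 1.0.x needs. */
final class JavaVersionCheck {

    static final int REQUIRED_FEATURE = 17;

    /** Returns true if the given feature release (8, 11, 17, 21, ...) is new enough. */
    static boolean isSupported(int featureRelease) {
        return featureRelease >= REQUIRED_FEATURE;
    }

    public static void main(String[] args) {
        // Runtime.version() exists since Java 9; feature() since Java 10.
        int feature = Runtime.version().feature();
        System.out.println("Running on Java " + feature + " from " + System.getProperty("java.home"));
        if (!isSupported(feature)) {
            throw new IllegalStateException("Java " + REQUIRED_FEATURE + "+ required, found " + feature);
        }
    }
}
```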

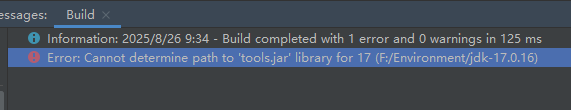

After switching to Java 17, I found that IDEA was reporting an error:

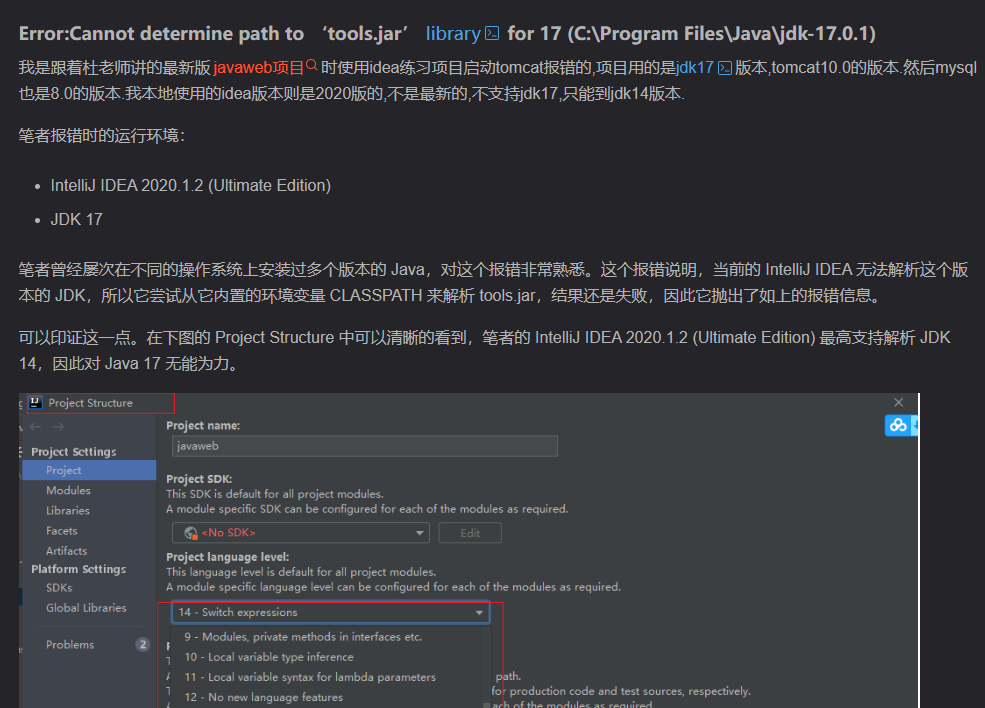

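At first I assumed Java 17 hadn't been installed correctly, but a reference article pointed to the real cause.

I was indeed using IDEA 2020, which is too old to handle Java 17, so I updated IDEA as well (reference article).

I completely uninstalled the old IDEA and installed the latest 2025 version. IDEA 2020 had been with me for five years, which is quite a run.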

Maven also needs to be reasonably recent: Spring Boot 3.2.1 requires Maven 3.6.3 or later, and I upgraded to 3.8.9.

Reference article

At the end of that article, remember to use Java 17. Also, the `<\mirror>` tag in that article looks wrong, because an XML closing tag is written `</mirror>`. Below is my settings.xml with Maven's default sample comments removed; only the active settings are kept. I also fixed a nested `<profile>` inside `<profile>`, which is not valid in settings.xml.

<?xml version="1.0" encoding="UTF-8"?>
<settings xmlns="http://maven.apache.org/SETTINGS/1.2.0"
          xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
          xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.2.0 https://maven.apache.org/xsd/settings-1.2.0.xsd">

  <!-- Local repository where Maven stores downloaded artifacts -->
  <localRepository>F:\Environment\apache-maven-3.8.9\maven_repositroy</localRepository>

  <pluginGroups/>
  <proxies/>
  <servers/>

  <mirrors>
    <!-- Alibaba Cloud mirror for Maven Central. HTTPS URL, so Maven 3.8.1+'s default
         blocking of external HTTP repositories does not need to be disabled -->
    <mirror>
      <id>nexus-aliyun</id>
      <mirrorOf>central</mirrorOf>
      <name>Nexus aliyun</name>
      <url>https://maven.aliyun.com/repository/public</url>
    </mirror>
  </mirrors>

  <profiles>
    <!-- This profile makes builds use Java 17 by default -->
    <profile>
      <id>java-17</id>
      <activation>
        <activeByDefault>true</activeByDefault>
      </activation>
      <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <maven.compiler.source>17</maven.compiler.source>
        <maven.compiler.target>17</maven.compiler.target>
      </properties>
    </profile>
  </profiles>
</settings>

My setup:

Java 17 + Maven 3.8.9 + Spring Boot 3.2.1 + Spring AI 1.0.1

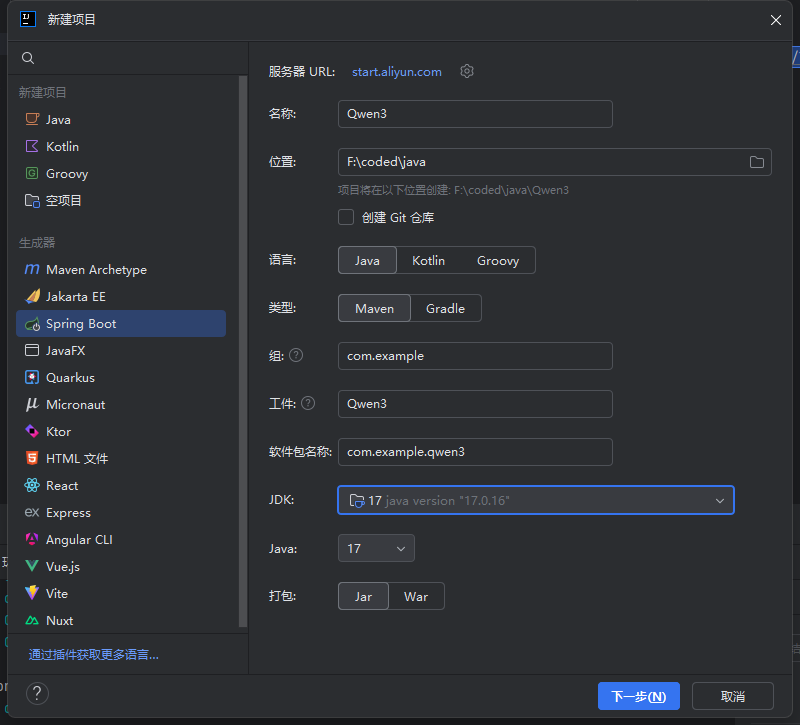

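Creating a Spring Boot project

Edit pom.xml and let Maven build the project. Mine is below for reference. Note that it includes JPA/JDBC/MySQL dependencies (spring-boot-starter-data-jpa, spring-boot-starter-jdbc, mysql-connector-j). Without `spring.datasource.*` settings, startup fails with "Failed to configure a DataSource", so either add your database settings or remove those dependencies if you only need the chatbot.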

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>com.example</groupId>
    <artifactId>Qwen3</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>Qwen3</name>
    <description>Qwen3</description>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.2.1</version>
    </parent>
    <properties>
        <java.version>17</java.version>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-data-jpa</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-jdbc</artifactId>
        </dependency>
        <dependency>
            <groupId>com.mysql</groupId>
            <artifactId>mysql-connector-j</artifactId>
            <scope>runtime</scope>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <!-- Spring AI Ollama starter (required) -->
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-starter-model-ollama</artifactId>
        </dependency>
    </dependencies>
    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.ai</groupId>
                <artifactId>spring-ai-bom</artifactId>
                <version>1.0.1</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>
    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.8.1</version>
                <configuration>
                    <source>17</source>
                    <target>17</target>
                    <encoding>UTF-8</encoding>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
                <configuration>
                    <mainClass>com.example.qwen3.Qwen3Application</mainClass>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>

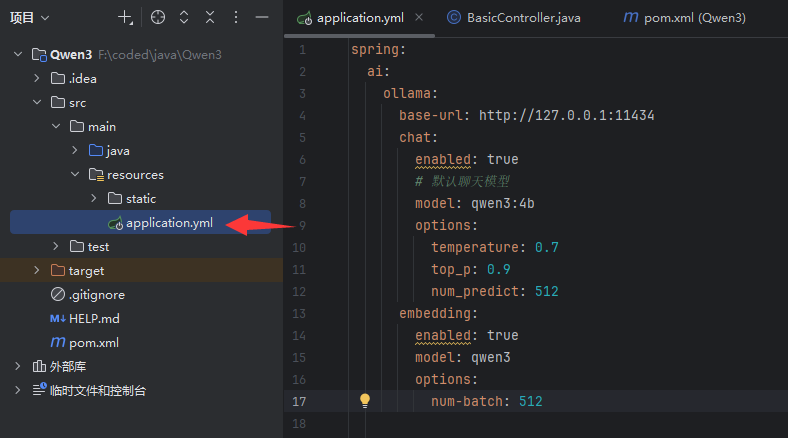

Rename application.properties under src/main/resources to application.yml and fill it in as follows:

spring:
  ai:
    ollama:
      base-url: http://127.0.0.1:11434
      chat:
        enabled: true
        # Default chat model
        model: qwen3:4b
        options:
          temperature: 0.7
          top_p: 0.9
          num_predict: 512
      embedding:
        enabled: true
        model: qwen3
        options:
          num-batch: 512
    # MCP configuration (for plugin/extension integration)
    mcp:
      client:
        stdio:
          servers-configuration: classpath:config/mcp-servers.json
server:
  port: 8181

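I added the MCP settings now to make the later MCP integration easier. They only take effect once an MCP client starter is added to the pom. Note that I use port 8181 instead of the default 8080 to avoid conflicts later on. Also make sure `qwen3:4b` matches a model shown by `ollama list`. `ollama pull qwen3` fetches the default `latest` tag, so you may need to run `ollama pull qwen3:4b` as well.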

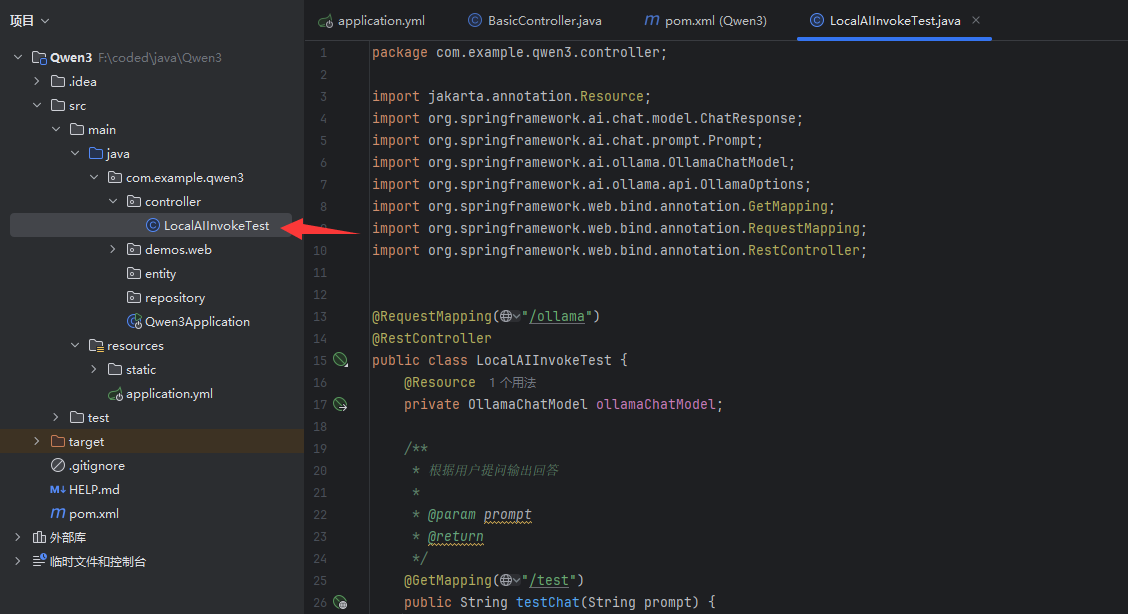

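Creating a simple chat endpoint

Create a Java class named LocalAIInvokeTest in the controller package.

The original article doesn't show the package or the imports, so I'm pasting the whole file here to avoid importing the wrong classes.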

package com.example.qwen3.controller;

import jakarta.annotation.Resource;
import org.springframework.ai.chat.model.ChatResponse;
import org.springframework.ai.chat.prompt.Prompt;
import org.springframework.ai.ollama.OllamaChatModel;
import org.springframework.ai.ollama.api.OllamaOptions;
import org.springframework.http.MediaType;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;
import reactor.core.publisher.Flux;

@RequestMapping("/ollama")
@RestController
public class LocalAIInvokeTest {

    @Resource
    private OllamaChatModel ollamaChatModel;

    /**
     * Answers the user's question in a single blocking call.
     *
     * @param prompt the user's question
     * @return the model's complete reply text
     */
    @GetMapping("/test")
    public String testChat(@RequestParam String prompt) {
        ChatResponse chatResponse = ollamaChatModel.call(
                new Prompt(prompt, OllamaOptions.builder().model("qwen3:4b").build()));
        return chatResponse.getResult().getOutput().getText();
    }

    /** Streams the reply chunk by chunk as Server-Sent Events. */
    @GetMapping(value = "/testStream", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
    public Flux<ChatResponse> testChatStream(@RequestParam String prompt) {
        return ollamaChatModel.stream(
                new Prompt(prompt, OllamaOptions.builder().model("qwen3:4b").build()));
    }
}

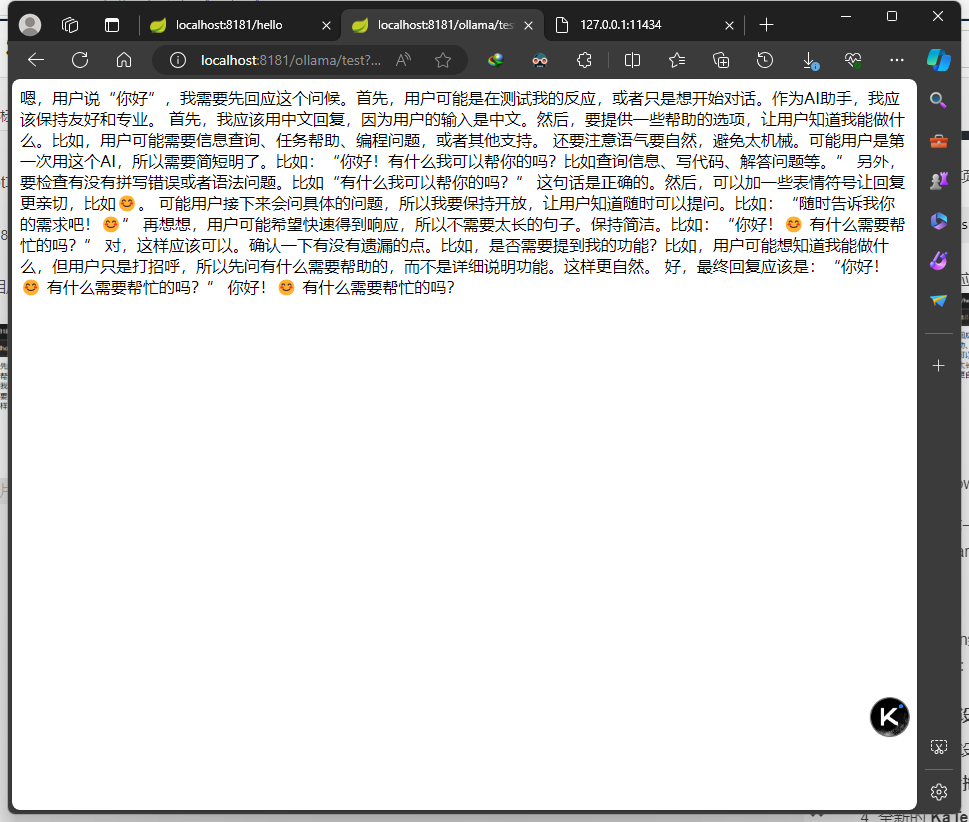

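Starting Spring Boot

Start the Spring Boot project and open this URL in a browser:

http://localhost:8181/ollama/test?prompt=你好

After a moment, a response appears.

The thinking process is shown as well, which confirms the connection works.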
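To call the same endpoint from Java instead of a browser, two details matter. First, a Chinese prompt such as 你好 must be percent-encoded as UTF-8 (the browser does this for you). Second, the reply contains the thinking section followed by the answer. The sketch below assumes the thinking arrives wrapped in `<think>...</think>` tags, which is also what the front-end code later in this article assumes. The class, record, and method names are hypothetical, and the URL assumes port 8181 and the `/ollama/test` mapping configured above.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

/** Hypothetical helpers: call /ollama/test and separate Qwen3's thinking from its answer. */
final class ThinkSplitter {

    /** A reply split into its <think>...</think> part and the visible answer. */
    record Reply(String thinking, String answer) {}

    /** Builds the request URI; non-ASCII prompts are percent-encoded as UTF-8. */
    static URI testUri(String baseUrl, String prompt) {
        return URI.create(baseUrl + "/ollama/test?prompt="
                + URLEncoder.encode(prompt, StandardCharsets.UTF_8));
    }

    /** Splits at the first <think>...</think> block; without one, everything is the answer. */
    static Reply split(String text) {
        int start = text.indexOf("<think>");
        int end = text.indexOf("</think>");
        if (start < 0 || end < start) {
            return new Reply("", text.trim());
        }
        String thinking = text.substring(start + "<think>".length(), end).trim();
        String answer = (text.substring(0, start) + text.substring(end + "</think>".length())).trim();
        return new Reply(thinking, answer);
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(testUri("http://localhost:8181", "你好")).GET().build();
        String body = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString())
                .body();
        Reply reply = split(body);
        System.out.println("Thinking: " + reply.thinking());
        System.out.println("Answer:   " + reply.answer());
    }
}
```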

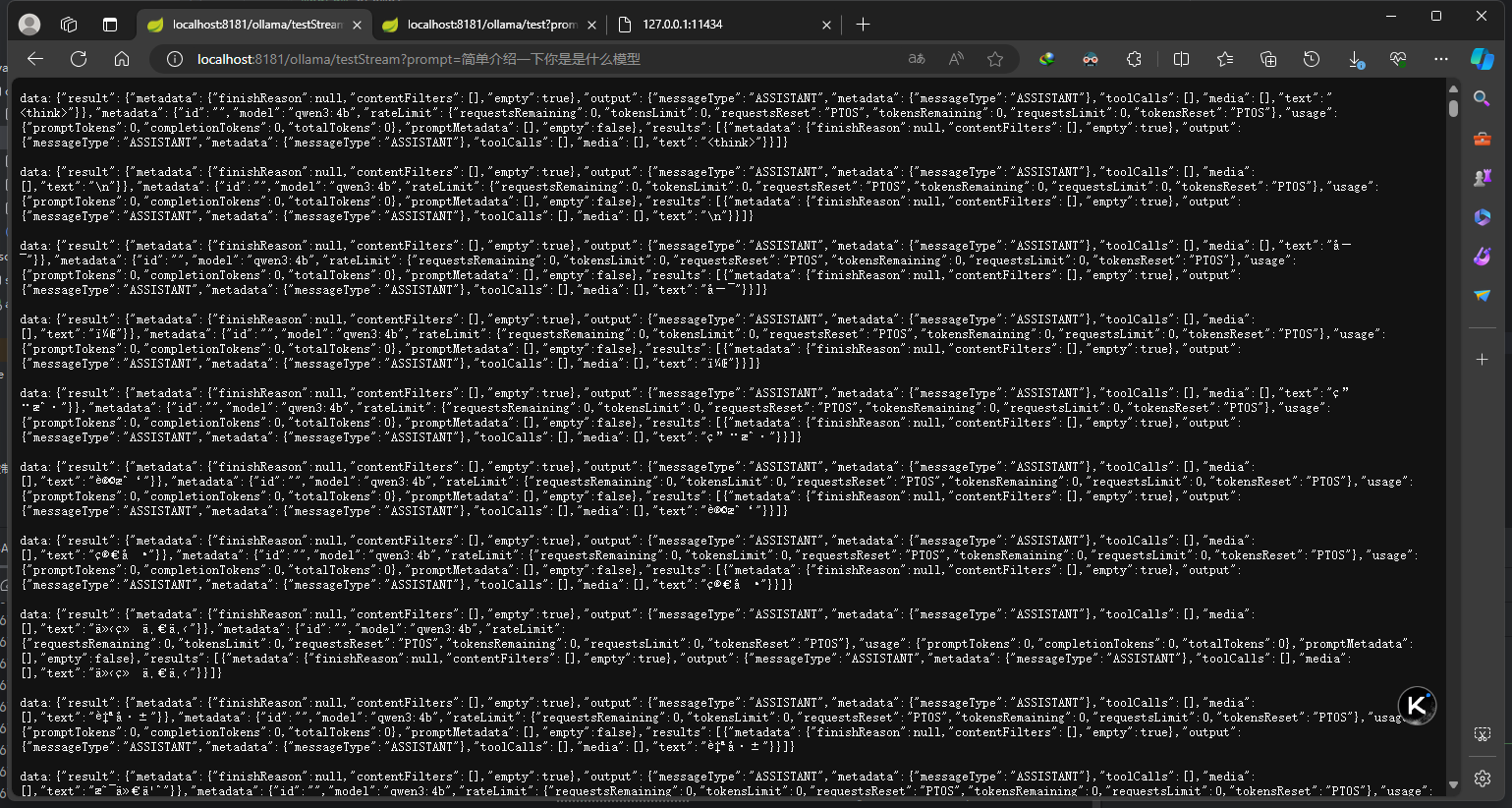

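Streaming chat output

With a normal call, you wait a long time and then get the whole answer at once. Most AI assistants today print their output token by token, which feels much more natural. In Spring Boot, you can get the same effect by returning a Flux and setting produces = MediaType.TEXT_EVENT_STREAM_VALUE. The reference article covers this too.

Add the streaming method after the original /test mapping (it's already included in my code above), and press Alt+Enter to add the imports. After restarting the Spring Boot project, test it in the browser with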

http://localhost:8181/ollama/testStream?prompt=你是什么模型

The result is indeed a long series of JSON chunks. The browser just doesn't parse them, which is as expected.
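Each of those chunks arrives as a Server-Sent Events line beginning with `data:`. A plain Java client can consume the stream line by line and print each JSON payload as it arrives. This is a minimal sketch under the same assumptions as above (port 8181, the `/ollama/testStream` mapping). The class name and the `dataPayload` helper are hypothetical, and the helper handles only single-line `data:` fields, not the full SSE grammar.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Optional;

/** Hypothetical sketch: consume the /ollama/testStream SSE endpoint line by line. */
final class StreamClient {

    /** Returns the payload of an SSE "data:" line (one leading space removed), else empty. */
    static Optional<String> dataPayload(String line) {
        if (!line.startsWith("data:")) {
            return Optional.empty();
        }
        String payload = line.substring("data:".length());
        return Optional.of(payload.startsWith(" ") ? payload.substring(1) : payload);
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        String prompt = URLEncoder.encode("你是什么模型", StandardCharsets.UTF_8);
        HttpRequest request = HttpRequest.newBuilder(
                        URI.create("http://localhost:8181/ollama/testStream?prompt=" + prompt))
                .header("Accept", "text/event-stream")
                .build();
        // ofLines() returns once headers arrive; the body is read lazily as lines come in.
        HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofLines())
                .body()
                .forEach(line -> dataPayload(line).ifPresent(System.out::println));
    }
}
```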

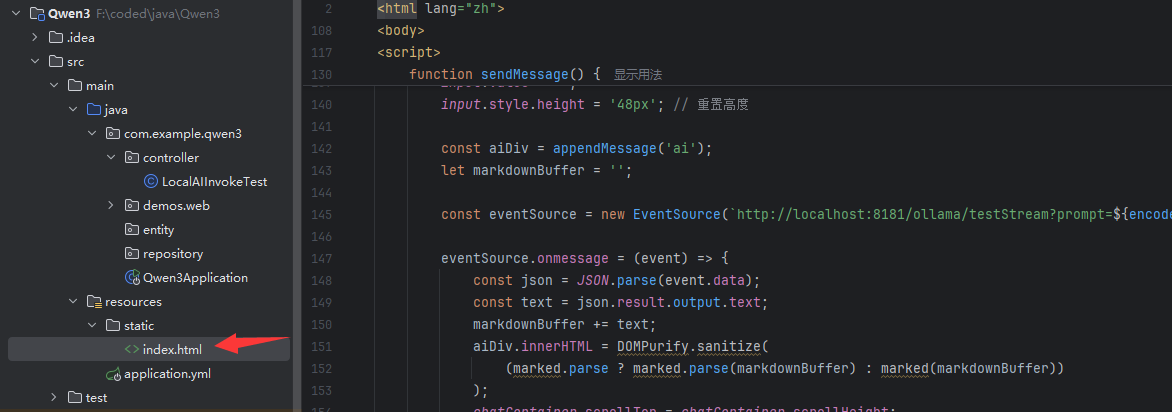

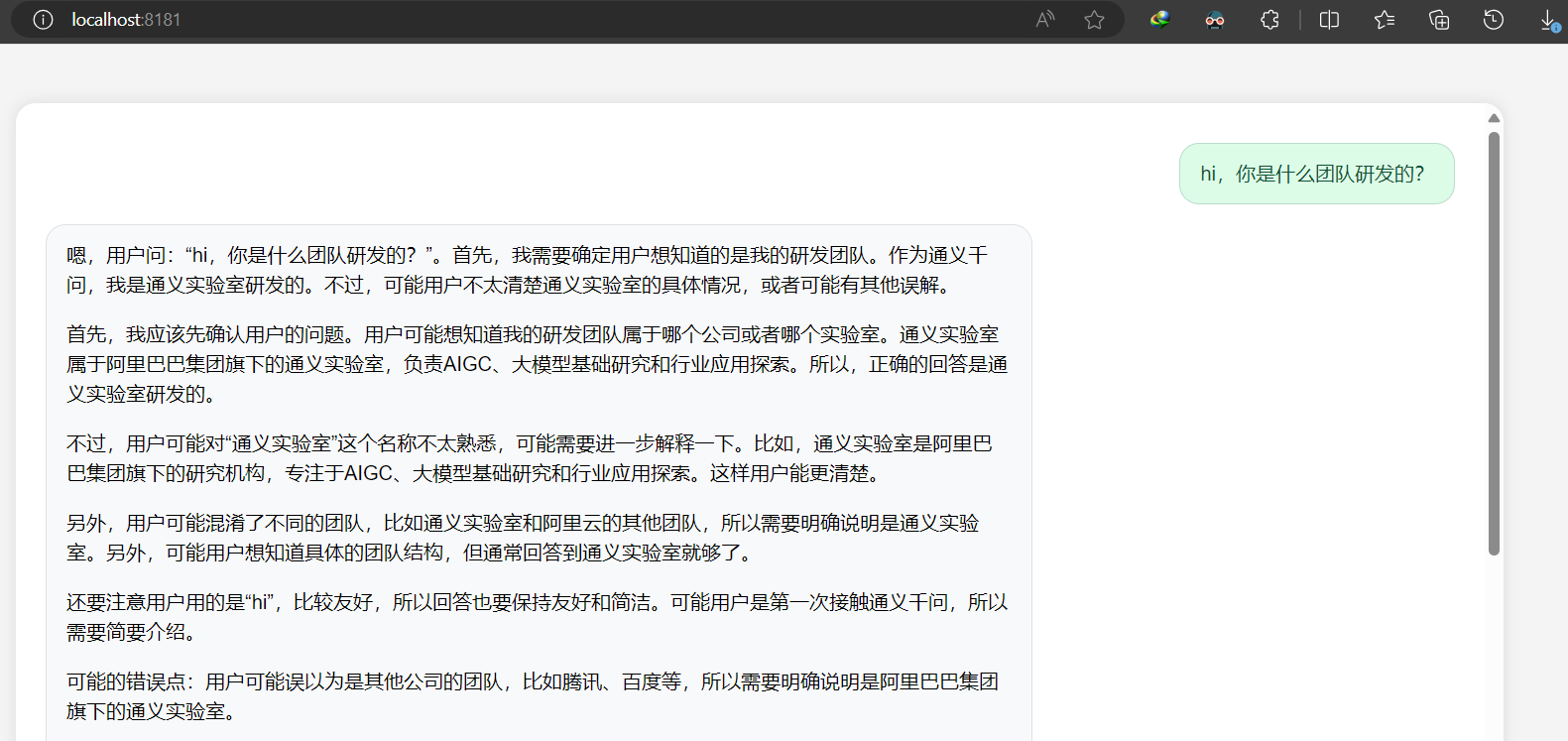

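Building an interactive front end in plain HTML

Much of the code in the original article is missing spaces, so the editor can't parse it. Here is the code I actually use instead. Put it in src/main/resources/static/index.html, which Spring Boot serves as the home page.

Compared with the original, I improved how the thinking content and the answer are displayed, and added styles for Markdown tables.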

<!DOCTYPE html>

<html lang="zh">

<head>
<meta charset="UTF-8" />
<title>Local AI Chat</title>
<script src="https://cdn.jsdelivr.net/npm/marked/marked.min.js"></script>
<script src="https://cdn.jsdelivr.net/npm/dompurify@3.0.5/dist/purify.min.js"></script>
<style>
  * { box-sizing: border-box; }
  body { font-family: "Helvetica Neue", Arial, sans-serif; background: #f4f4f4; margin: 0; padding: 48px; display: flex; justify-content: center; align-items: center; height: 100vh; }
  .chat-wrapper { display: flex; flex-direction: column; width: 100%; max-width: 1200px; height: 100%; background: #fff; border-radius: 16px; box-shadow: 0 0 12px rgba(0, 0, 0, 0.1); overflow: hidden; }
  .chat-container { flex: 1; overflow-y: auto; padding: 24px; display: flex; flex-direction: column; }
  .message { max-width: 70%; padding: 12px 16px; margin: 8px 0; border-radius: 16px; word-break: break-word; line-height: 1.5; }
  .user { align-self: flex-end; background-color: #dcfce7; color: #0f5132; border: 1px solid #badbcc; }
  .ai { align-self: flex-start; background-color: #f8f9fa; border: 1px solid #dee2e6; display: flex; flex-direction: column; }
  .ai-thought { font-size: 12px; color: #6c757d; margin-bottom: 6px; font-style: italic; animation: blink 5s infinite; }
  @keyframes blink { 0%, 50%, 100% { opacity: 0.3; } 25%, 75% { opacity: 1; } }
  .ai-reply { font-size: 16px; color: #212529; line-height: 1.6; }
  .input-bar { display: flex; padding: 16px 24px; border-top: 1px solid #ddd; background-color: #ffffff; }
  #promptInput { flex: 1; padding: 12px; font-size: 16px; border: 1px solid #ccc; border-radius: 8px; resize: none; height: 48px; line-height: 1.5; }
  #sendBtn { margin-left: 12px; padding: 12px 20px; font-size: 16px; background-color: #4caf50; color: white; border: none; border-radius: 8px; cursor: pointer; transition: background-color 0.2s ease; }
  #sendBtn:hover { background-color: #45a049; }
  pre code { background: #f6f8fa; padding: 8px; border-radius: 6px; display: block; overflow-x: auto; }
  /* Added table styles */
  .ai-reply table { border-collapse: collapse; width: 100%; margin: 8px 0; background-color: #fff; border: 1px solid #dee2e6; }
  .ai-reply th, .ai-reply td { border: 1px solid #dee2e6; padding: 8px; text-align: left; }
  .ai-reply th { background-color: #e9ecef; font-weight: bold; }
  .ai-reply tr:nth-child(even) { background-color: #f8f9fa; }
  .ai-reply tr:hover { background-color: #e9ecef; }
</style>

</head>

<body>

<div class="chat-wrapper">
  <div class="chat-container" id="chatContainer"></div>
  <div class="input-bar">
    <textarea id="promptInput" placeholder="Type your question..." rows="3"></textarea>
    <button id="sendBtn">Send</button>
  </div>
</div>
<script>
  const chatContainer = document.getElementById('chatContainer');
  const input = document.getElementById('promptInput');
  const button = document.getElementById('sendBtn');

  // Create a message container
  function appendMessage(className) {
    const div = document.createElement('div');
    div.className = `message ${className}`;
    chatContainer.appendChild(div);
    chatContainer.scrollTop = chatContainer.scrollHeight;
    return div;
  }

  // Show the user's message
  function displayUserMessage(text) {
    if (!text) return;
    const userDiv = appendMessage('user');
    userDiv.textContent = text;
    input.value = '';
    input.style.height = '48px';
  }

  // Show the AI message (thinking process and reply)
  function displayAIMessage(prompt) {
    const aiDiv = appendMessage('ai');
    const thoughtDiv = document.createElement('div');
    thoughtDiv.className = 'ai-thought';
    thoughtDiv.textContent = 'Thinking...';
    aiDiv.appendChild(thoughtDiv);
    const replyDiv = document.createElement('div');
    replyDiv.className = 'ai-reply';
    aiDiv.appendChild(replyDiv);

    let inThought = false;
    let thoughtBuffer = '';
    let answerBuffer = '';
    // Your own streaming endpoint (the URL tested above)
    const eventSource = new EventSource(`http://localhost:8181/ollama/testStream?prompt=${encodeURIComponent(prompt)}`);

    eventSource.onmessage = (event) => {
      const json = JSON.parse(event.data);
      // Fall back to '' in case a chunk carries no text
      const textChunk = json?.result?.output?.text ?? '';
      if (textChunk.includes('<think>')) {
        inThought = true;
        return;
      }
      if (textChunk.includes('</think>')) {
        inThought = false;
        thoughtDiv.textContent = thoughtBuffer.trim();
        return;
      }
      if (inThought) {
        thoughtBuffer += textChunk;
        thoughtDiv.textContent = thoughtBuffer.trim();
      } else {
        answerBuffer += textChunk;
        replyDiv.innerHTML = DOMPurify.sanitize(marked.parse ? marked.parse(answerBuffer) : marked(answerBuffer));
        // Once the answer starts, remove the thinking block
        if (thoughtDiv.textContent !== '') {
          thoughtDiv.remove();
        }
      }
      chatContainer.scrollTop = chatContainer.scrollHeight;
    };

    // Fires when the stream ends or fails; close it so the browser does not reconnect
    eventSource.onerror = () => {
      eventSource.close();
      if (thoughtDiv.textContent !== '') {
        thoughtDiv.remove();
      }
    };
  }

  // Send the user's message and trigger the AI reply
  function sendUserMessage() {
    const prompt = input.value.trim();
    if (!prompt) return;
    displayUserMessage(prompt);
    displayAIMessage(prompt);
  }

  // Show the AI's opening greeting
  function sendAIGreeting() {
    const greeting = 'Hi, how can I help you?';
    const aiDiv = appendMessage('ai');
    const replyDiv = document.createElement('div');
    replyDiv.className = 'ai-reply';
    replyDiv.textContent = greeting;
    aiDiv.appendChild(replyDiv);
  }

  // Send on button click
  button.onclick = sendUserMessage;

  // Enter sends; Shift+Enter inserts a newline
  input.addEventListener('keydown', (e) => {
    if (e.key === 'Enter' && !e.shiftKey) {
      e.preventDefault();
      sendUserMessage();
    }
  });

  // Auto-resize the textarea
  input.addEventListener('input', () => {
    input.style.height = 'auto';
    input.style.height = input.scrollHeight + 'px';
  });

  // Show the greeting when the page loads
  window.onload = () => {
    setTimeout(() => {
      sendAIGreeting();
    }, 400);
  };
</script>

</body>

</html>

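Note that the `EventSource` URL in the script must point to your own model endpoint, which is the streaming URL we tested above.

Then restart Spring Boot and open in the browser:

localhost:8181

You can now chat with the model.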

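Connecting cloud models such as OpenAI and DeepSeek (optional)

I didn't try this part. I want a locally deployed model that operates on my own files, and using an online LLM carries some security risk. If you're interested, you can explore it by following the tutorial.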