基于动捕实现Epuck2的轨迹跟踪

文章目录

- 一、Rviz中生成期望跟踪轨迹

- 二、Epuck2导航到目标点

- 三、Epuck2跟踪期望轨迹

- 1.sin曲线跟踪

- 2.bonuli曲线跟踪

- 补充资料:

一、Rviz中生成期望跟踪轨迹

之前学习了编队的纯跟踪算法Pure Pursuit,本周在此基础上实现领航者跟踪期望轨迹以使整个一致性编队朝期望位置移动。

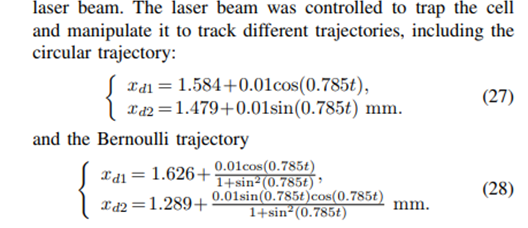

首先画出circular trajectory和Bernoulli trajectory的图形,如图所示:

为将画出的轨迹加载到Rviz中,将轨迹转化为(x,y,theta)坐标形式,取100-200个点进行连线,生成为csv格式的储存文件。阿木实验室开源的waypoint_loader坐标点加载代码( )将轨迹生成到 中,如图所示。

但由于实际场地大小的限制,并不能把轨迹平移那么远,且期望编队前进轨迹的大小应符合实际情况,故对跟踪曲线进行了调整,整体形状不变,但对其位置和大小进行了修改。改后曲线表达式为(1-1)所示,形状如图所示。

waypoint_loader.py:

#!/usr/bin/env pythonimport os

import csv

import math

import tf

import rospy

import sysfrom geometry_msgs.msg import Quaternion, PoseStampedfrom styx_msgs.msg import Lane, Waypointfrom nav_msgs.msg import PathCSV_HEADER = ['x', 'y', 'yaw']

MAX_DECEL = 1.0class WaypointLoader(object):def __init__(self):rospy.init_node('waypoint_loader', log_level=rospy.DEBUG)self.pub = rospy.Publisher('/base_waypoints', Lane, queue_size=1, latch=True)self.pub_path = rospy.Publisher('/path', Path, queue_size=1, latch=True)self.velocity = self.kmph2mps(rospy.get_param('~velocity'))self.new_waypoint_loader(rospy.get_param('~path'))rospy.spin()def new_waypoint_loader(self, path):if os.path.isfile(path):waypoints, base_path = self.load_waypoints(path)self.publish(waypoints, base_path)rospy.loginfo('Waypoint Loded')else:rospy.logerr('%s is not a file', path)def quaternion_from_yaw(self, yaw):return tf.transformations.quaternion_from_euler(0., 0., yaw)def kmph2mps(self, velocity_kmph):return (velocity_kmph * 1000.) / (60. * 60.)def load_waypoints(self, fname):waypoints = []base_path = Path()#base_path.header.frame_id = 'world'base_path.header.frame_id = 'map'with open(fname) as wfile:reader = csv.DictReader(wfile, CSV_HEADER)for wp in reader:#print(wp)p = Waypoint()p.pose.pose.position.x = float(wp['x'])p.pose.pose.position.y = float(wp['y'])q = self.quaternion_from_yaw(float(wp['yaw']))p.pose.pose.orientation = Quaternion(*q)p.twist.twist.linear.x = float(self.velocity)p.forward = Truewaypoints.append(p)path_element = PoseStamped()path_element.pose.position.x = p.pose.pose.position.xpath_element.pose.position.y = p.pose.pose.position.ybase_path.poses.append(path_element)waypoints = self.decelerate(waypoints)return waypoints,base_pathdef distance(self, p1, p2):x, y, z = p1.x - p2.x, p1.y - p2.y, p1.z - p2.zreturn math.sqrt(x*x + y*y + z*z)def decelerate(self, waypoints):last = waypoints[-1]last.twist.twist.linear.x = 0.for wp in waypoints[:-1][::-1]:print(wp)dist = self.distance(wp.pose.pose.position, last.pose.pose.position)vel = math.sqrt(2 * MAX_DECEL * dist)print(vel)if vel < 1.0:vel = 0.0wp.twist.twist.linear.x = min(vel, wp.twist.twist.linear.x)return waypointsdef publish(self, waypoints, base_path):lane = Lane()lane.header.frame_id = 'map'lane.header.stamp = rospy.Time(0)lane.waypoints = waypointsself.pub.publish(lane)self.pub_path.publish(base_path)rospy.loginfo('publishing......\n')if __name__ == '__main__':try:WaypointLoader()print("waypoint loader closed.")except rospy.ROSInterruptException:rospy.logerr('Could not start waypoint node.')

二、Epuck2导航到目标点

#include <iostream>

#include <ros/ros.h>

#include <geometry_msgs/Twist.h>

#include <geometry_msgs/PoseWithCovarianceStamped.h>

#include <geometry_msgs/PoseStamped.h>

#include "nav_msgs/Odometry.h"

#include "std_msgs/String.h"

#include <tf/tf.h>

#include <ros/time.h>

#include <queue>

#include <vector>

#include <cmath>

#include <chrono>

#include <thread>#include <tf2/LinearMath/Quaternion.h>

#include <tf2_geometry_msgs/tf2_geometry_msgs.h>#include "sensor_msgs/Range.h"using namespace std;geometry_msgs::Twist global_motion_msg;//转向控制增益

#define k_theta_ 0.05

//直线速度控制增益

#define k_linear_ 8

//转向阈值

#define threshold_heading_ 2

//到达目标点距离阈值

#define threshold_distance 0.01

//最大直线速度

#define max_linear_velocity_ 2.2

//最大角速度

#define max_angular_velocity_ 0.8//跟随者epuck1

#define EK1 30

#define EK2 0.2 //角度不受动捕单位改变的影响,看能否绕过障碍物(1、不行的话先扩大比例系数,2、定义数组把临机的位置考虑到避障中去)//机器人避障参数

#define safe_r 0.15

#define detect_R 0.2

#define k_avoid 0.2//定义2dPose

struct Pose2D{double x;double y;double angle;Pose2D(){x=0;y=0;angle=0;}Pose2D(double X, double Y, double YAW){x = X;y = Y;angle = YAW;}Pose2D operator= (const Pose2D& pose){x = pose.x;y = pose.y;angle = pose.angle;}};// std::queue<geometry_msgs::PoseWithCovarianceStamped> nokov_pose_buf_;//动捕位置

std::vector<Pose2D> nokov_pose_buf_0;

std::vector<Pose2D> nokov_pose_buf_1;

std::vector<Pose2D> nokov_pose_buf_2;//存放障碍物的位置信息

std::vector<Pose2D> nokov_pose_buf_3_0; //领航者用来避障

std::vector<Pose2D> nokov_pose_buf_3_1; //跟随机器人1用来避障

std::vector<Pose2D> nokov_pose_buf_3_2; //跟随机器人2用来避障std::vector<Pose2D> nokov_pose_buf_f1;

std::vector<Pose2D> nokov_pose_buf_f2;std::vector<Pose2D> nokov_pose_buf_1f2;//储存跟随者1的位置信息队列,f2拿着用

std::vector<Pose2D> nokov_pose_buf_2f1;//储存跟随者2的位置信息队列,f1拿着用//存放距离传感器测得的距离

std::vector<double> epuck2_dist_buf_3_0;

std::vector<double> epuck2_dist_buf_3_1;

std::vector<double> epuck2_dist_buf_3_2;//调整角度

double cropAngle(double angle){while(angle > M_PI) angle-=2*M_PI;while(angle < -M_PI) angle+=2*M_PI;return angle;

}//将领航者的动捕数据放入nokov_pose_buf_0

void NoKovCallback0(const geometry_msgs::PoseStamped::ConstPtr& novkov_msg){double x=novkov_msg->pose.position.x;double y=novkov_msg->pose.position.y;tf2::Quaternion quaternion;tf2::fromMsg(novkov_msg->pose.orientation, quaternion);tf2::Matrix3x3 mat(quaternion);double roll, pitch, yaw;mat.getRPY(roll, pitch, yaw);// double yaw=novkov_msg->pose.orientation.w;//std::cout << "novkov_msg: " << x << ", " << y << ", " << yaw * 180 / M_PI << std::endl;Pose2D novkov_pose(x, y, yaw * 180 / M_PI);nokov_pose_buf_0.push_back(novkov_pose);//领航者自身位置信息容器nokov_pose_buf_f1.push_back(novkov_pose);//跟随者1所需领航者的位置nokov_pose_buf_f2.push_back(novkov_pose);//跟随者2所需领航者的位置

}//将跟随者1的动捕数据放入nokov_pose_buf_1

void NoKovCallback1(const geometry_msgs::PoseStamped::ConstPtr& novkov_msg){double x=novkov_msg->pose.position.x;double y=novkov_msg->pose.position.y;tf2::Quaternion quaternion;tf2::fromMsg(novkov_msg->pose.orientation, quaternion);tf2::Matrix3x3 mat(quaternion);double roll, pitch, yaw;mat.getRPY(roll, pitch, yaw);// double yaw=novkov_msg->pose.orientation.w;//std::cout << "novkov_msg: " << x << ", " << y << ", " << yaw * 180 / M_PI << std::endl;Pose2D novkov_pose(x, y, yaw * 180 / M_PI);nokov_pose_buf_1.push_back(novkov_pose);//跟随者1自身位置信息队列nokov_pose_buf_1f2.push_back(novkov_pose);//跟随者2所需跟随者1位置信息队列}//将跟随者2动捕数据放入nokov_pose_buf_2

void NoKovCallback2(const geometry_msgs::PoseStamped::ConstPtr& novkov_msg){double x=novkov_msg->pose.position.x;double y=novkov_msg->pose.position.y;tf2::Quaternion quaternion;tf2::fromMsg(novkov_msg->pose.orientation, quaternion);tf2::Matrix3x3 mat(quaternion);double roll, pitch, yaw;mat.getRPY(roll, pitch, yaw);// double yaw=novkov_msg->pose.orientation.w;//std::cout << "novkov_msg: " << x << ", " << y << ", " << yaw * 180 / M_PI << std::endl;Pose2D novkov_pose(x, y, yaw * 180 / M_PI);nokov_pose_buf_2.push_back(novkov_pose);//跟随者2自身位置信息队列nokov_pose_buf_2f1.push_back(novkov_pose);//跟随者1所需跟随者2位置信息队列

}//将 epuck2_robot_0 距离传感器测得距障碍物的距离信息放入epuck2_dist_buf_3_0

void DistCallback0(const sensor_msgs::Range::ConstPtr& dist_msg){double r0 = dist_msg->range;//std::cout<<"r0: "<< r0 <<std::endl;epuck2_dist_buf_3_0.push_back(r0); //epuck2_robot_0测得的距障碍物距离

}//将 epuck2_robot_1 距离传感器测得距障碍物的距离信息放入epuck2_dist_buf_3_1

void DistCallback1(const sensor_msgs::Range::ConstPtr& dist_msg){double r1 = dist_msg->range;//std::cout<<"r1: "<< r1<<std::endl;epuck2_dist_buf_3_1.push_back(r1); //epuck2_robot_1测得的距障碍物距离

}//将 epuck2_robot_2 距离传感器测得距障碍物的距离信息放入epuck2_dist_buf_3_2

void DistCallback2(const sensor_msgs::Range::ConstPtr& dist_msg){double r2 = dist_msg->range;//std::cout<<"r2: "<< r2 <<std::endl;epuck2_dist_buf_3_2.push_back(r2); //epuck2_robot_1测得的距障碍物距离

}double compute_theta(double theta, double rotation1) {double w;if (theta * rotation1 < 0) {if (theta > 0) {if (std::abs(rotation1) + theta <= M_PI) {w = std::abs(rotation1) + theta;} else {w = -(2 * M_PI + rotation1 - theta);}} else {if (rotation1 + std::abs(theta) <= M_PI) {w = -(std::abs(theta) + rotation1);} else {w = (2 * M_PI - rotation1 + theta);}}} else {w = theta - rotation1;}return w;

}geometry_msgs::Twist motionControl(const Pose2D& current_position, const Pose2D& target_position,const double& obstacle_distance){//Step 1: 计算车辆当前位置与目标位置之间的距离和方向double delta_x = target_position.x - current_position.x;double delta_y = target_position.y - current_position.y;double distance_to_target = sqrt(delta_x * delta_x + delta_y * delta_y);double heading_to_target = atan2(delta_y, delta_x);std::cout << "————————————————————————————————————————————————————————————" << std::endl;std::cout << "target_position: " << target_position.x << ", " << target_position.y << ", " << target_position.angle << std::endl;std::cout << "current_position: " << current_position.x << ", " << current_position.y << ", " << current_position.angle << std::endl;std::cout << "epuck0_delta_x: " << delta_x << ", " << "epuck0_delta_y: " << delta_y << std::endl;// std::cout << "heading_to_target: " << heading_to_target * 180 / M_PI << std::endl; //Step 2: 计算当前车辆的航向角double current_heading = current_position.angle / 180 * M_PI;//Step 3: 计算目标航向角与当前航向角之间的差异 double heading_difference = cropAngle(heading_to_target - current_heading) * 180 / M_PI;std::cout << "heading_difference: " << heading_difference << std::endl;//计算领航者与障碍物之间的距离double r = 0;double avoid_delta = 0;r = obstacle_distance;std::cout<<"r0: "<< r <<std::endl;if(r<0.5){double temp_x = (1/r-1/detect_R)*(current_position.x-(current_position.x+r*cos(current_position.angle)));double temp_y = (1/r-1/detect_R)*(current_position.y-(current_position.x+r*sin(current_position.angle)));double temp_fenmu = pow(r,3);double temp_x1 = temp_x/temp_fenmu;double temp_y1 = temp_y/temp_fenmu;double temp_x_sum = temp_x1;double temp_y_sum = temp_y1;double avoid_delta = temp_x_sum*cos(current_position.angle)+temp_y_sum*sin(current_position.angle);std::cout << "avoid_delta0: " << avoid_delta;}else{double avoid_delta = 0;}//Step 4计算转向角速度,直线速度double angular_velocity0 = 0.0;if(fabs(heading_difference) > (threshold_heading_)) {angular_velocity0 = k_theta_ * heading_difference ;//考虑如果前面遇到障碍物是否给角度加上avoid_delta使机器人避开障碍物}double linear_velocity0 = 0.0;if(distance_to_target > threshold_distance){linear_velocity0 = k_linear_ * distance_to_target + k_avoid * avoid_delta;}//Step 5: 限制速度if(linear_velocity0 > max_linear_velocity_){linear_velocity0 = max_linear_velocity_;}if(fabs(angular_velocity0) > max_angular_velocity_){angular_velocity0 = (angular_velocity0 < 0.0) ? (-max_angular_velocity_) : max_angular_velocity_; }// //限制转的时候直线速度为0if(fabs(angular_velocity0) > 0.0){linear_velocity0 = 0;}//Step 6: 将速度下发/cmd_velgeometry_msgs::Twist msg0;msg0.linear.x = linear_velocity0; msg0.angular.z = angular_velocity0; // msg0.linear.x = 1.2; // msg0.angular.z = 0.3; std::cout << "linear_velocity0: " << msg0.linear.x << ", angular_velocity0: " << msg0.angular.z << std::endl;// 设置全局变量的值global_motion_msg = msg0;return msg0;}geometry_msgs::Twist formationControl1(const Pose2D& epuck1_position, const Pose2D& epuck0_position,const Pose2D& epuck2_position,const double& obstacle_distance){//Step 1: 计算跟随者1小车,与领航者0和临机2之间的相对位置关系double epuck1_delta_x = ((epuck0_position.x - 0.2) - epuck1_position.x)+(epuck2_position.x - epuck1_position.x);double epuck1_delta_y = ((epuck0_position.y - 0.2) - epuck1_position.y)+(epuck2_position.y - epuck1_position.y-(0.2-(-0.2)));double epuck1_target_heading = atan2(epuck1_delta_y, epuck1_delta_x);double epuck0_linear_velocity = global_motion_msg.linear.x;double epuck0_angular_velocity = global_motion_msg.angular.z;std::cout << "————————————————————————————————————————————————————————————" << std::endl;std::cout << "leader: " << epuck0_position.x << ", " << epuck0_position.y << std::endl;std::cout << "epuck1_position: " << epuck1_position.x << ", " << epuck1_position.y << std::endl;// std::cout << "epuck2_position: " << epuck2_position.x << ", " << epuck2_position.y << std::endl;std::cout << "epuck1_delta_x: " << epuck1_delta_x << ", " << "epuck1_delta_y: " << epuck1_delta_y << std::endl;std::cout << "epuck1_current_heading: " << epuck1_position.angle << std::endl;std::cout << "epuck1_target_heading: " << epuck1_target_heading * 180 / M_PI << std::endl;// std::cout << "epuck0_linear_velocity: " << epuck0_linear_velocity << std::endl;// std::cout << "epuck0_angular_velocity: " << epuck0_angular_velocity << std::endl; //Step 2: 计算当跟随者1小车当前实时的航向角double epuck1_current_heading = epuck1_position.angle / 180 * M_PI; //rand//Step 3: 计算目标航向角与当前航向角之间的差异 double epuck1_delta_theta = compute_theta(epuck1_target_heading,epuck1_current_heading);std::cout << "epuck1_delta_theta: " << epuck1_delta_theta * 180 / M_PI << std::endl;//计算跟随者1与障碍物之间的距离double r = 0;double avoid_delta = 0;r = obstacle_distance;std::cout<<"r1: "<< r <<std::endl;if(r<0.5){double temp_x = (1/r-1/detect_R)*(epuck1_position.x-(epuck1_position.x+r*cos(epuck1_position.angle)));double temp_y = (1/r-1/detect_R)*(epuck1_position.y-(epuck1_position.y+r*sin(epuck1_position.angle)));double temp_fenmu = pow(r,3);double temp_x1 = temp_x/temp_fenmu;double temp_y1 = temp_y/temp_fenmu;double temp_x_sum = temp_x1;double temp_y_sum = temp_y1;double avoid_delta = temp_x_sum*cos(epuck1_position.angle)+temp_y_sum*sin(epuck1_position.angle);std::cout << "avoid_delta1: " << avoid_delta;}else{double avoid_delta = 0;}//Step 4计算转向角速度,直线速度double angular_velocity1 = 0.0;if(fabs(epuck1_delta_theta * 180 / M_PI) > (threshold_heading_)) {angular_velocity1 = EK2 * epuck1_delta_theta + epuck0_angular_velocity;std::cout << "11111angular_velocity1: " << angular_velocity1;}double linear_velocity1 = 0.0;if(fabs(epuck1_delta_x) > threshold_distance || fabs(epuck1_delta_y) > threshold_distance){linear_velocity1 = EK1*(epuck1_delta_x*cos(epuck1_current_heading)+epuck1_delta_y*sin(epuck1_current_heading))+ epuck0_linear_velocity + k_avoid*avoid_delta;std::cout << "linear_velocity1: " << linear_velocity1<< std::endl;}//Step 5: 限制速度if(fabs(linear_velocity1) > max_linear_velocity_){linear_velocity1 =(linear_velocity1 < 0.0) ? (-max_linear_velocity_) : max_linear_velocity_;}if(fabs(angular_velocity1) > max_angular_velocity_){angular_velocity1 = (angular_velocity1 < 0.0) ? (-max_angular_velocity_) : max_angular_velocity_;}//限制转的时候直线速度为0// if(fabs(angular_velocity) > 0.0){// linear_velocity = 0;// }//Step 6: 将速度下发/cmd_velgeometry_msgs::Twist msg1;msg1.linear.x = linear_velocity1; msg1.angular.z = angular_velocity1; std::cout << "linear_velocity1: " << msg1.linear.x << ", angular_velocity1: " << msg1.angular.z << std::endl;return msg1;}geometry_msgs::Twist formationControl2(const Pose2D& epuck2_position, const Pose2D& epuck0_position,const Pose2D& epuck1_position,const double& obstacle_distance){//Step 1: 计算跟随者2小车,与领航者0和临机1之间的相对位置关系double epuck2_delta_x = ((epuck0_position.x - epuck2_position.x)-(0-(-0.2))) + (epuck1_position.x - epuck2_position.x-(-0.2-(-0.2)));double epuck2_delta_y = ((epuck0_position.y - epuck2_position.y)-(0-(0.2))) + (epuck1_position.y - epuck2_position.y-(-0.2-0.2));double epuck2_target_heading = atan2(epuck2_delta_y, epuck2_delta_x);double epuck0_linear_velocity = global_motion_msg.linear.x;double epuck0_angular_velocity = global_motion_msg.angular.z;std::cout << "————————————————————————————————————————————————————————————" << std::endl;std::cout << "leader: " << epuck0_position.x << ", " << epuck0_position.y << std::endl;std::cout << "epuck2_position: " << epuck2_position.x << ", " << epuck2_position.y << std::endl;std::cout << "epuck2_delta_x: " << epuck2_delta_x << ", " << "epuck2_delta_y: " << epuck2_delta_y << std::endl;std::cout << "epuck2_current_heading: " << epuck2_position.angle << std::endl;std::cout << "epuck2_target_heading: " << epuck2_target_heading * 180 / M_PI << std::endl;// std::cout << "epuck2_delta_x: " << epuck2_delta_x << ", " << "epuck2_delta_y: " << epuck2_delta_y << std::endl;// std::cout << "epuck2_current_heading: " << epuck2_position.angle << std::endl;// std::cout << "epuck2_target_heading: " << epuck2_target_heading * 180 / M_PI << std::endl;// std::cout << "epuck0_linear_velocity: " << epuck0_linear_velocity << std::endl;// std::cout << "epuck0_angular_velocity: " << epuck0_angular_velocity << std::endl;//Step 2: 计算当跟随者1小车当前实时的航向角double epuck2_current_heading = epuck2_position.angle / 180 * M_PI;//弧度//Step 3: 计算目标航向角与当前航向角之间的差异 double epuck2_delta_theta=compute_theta(epuck2_target_heading,epuck2_current_heading);std::cout << "epuck2_delta_theta: " << epuck2_delta_theta * 180 / M_PI << std::endl;//计算跟随者1与障碍物之间的距离double r = 0;double avoid_delta = 0;r = obstacle_distance;std::cout<<"r2: "<< r <<std::endl;if(r<0.5){double temp_x = (1/r-1/detect_R)*(epuck2_position.x-(epuck2_position.x+cos(epuck2_position.angle)));double temp_y = (1/r-1/detect_R)*(epuck2_position.y-(epuck2_position.y+sin(epuck2_position.angle)));double temp_fenmu = pow(r,3);double temp_x1 = temp_x/temp_fenmu;double temp_y1 = temp_y/temp_fenmu;double temp_x_sum = temp_x1;double temp_y_sum = temp_y1;double avoid_delta = temp_x_sum*cos(epuck2_position.angle)+temp_y_sum*sin(epuck2_position.angle);std::cout << "avoid_delta2: " << avoid_delta;}else{double avoid_delta = 0;}//Step 4计算转向角速度,直线速度double angular_velocity2 = 0.0;if(fabs(epuck2_delta_theta* 180 / M_PI) > (threshold_heading_)) {angular_velocity2 = EK2 * epuck2_delta_theta + epuck0_angular_velocity;std::cout << "22222angular_velocity2: " << angular_velocity2<<" , ";}double linear_velocity2 = 0.0;if(fabs(epuck2_delta_x) > threshold_distance || fabs(epuck2_delta_y) > threshold_distance){linear_velocity2 = EK1*(epuck2_delta_x*cos(epuck2_current_heading)+epuck2_delta_y*sin(epuck2_current_heading))+epuck0_linear_velocity+ k_avoid*avoid_delta;std::cout << "22222linear_velocity2: " << linear_velocity2 << std::endl;}// std::cout << "linear_velocity2: " << linear_velocity2 << std::endl;//Step 5: 限制速度if(fabs(linear_velocity2) > max_linear_velocity_){linear_velocity2 =(linear_velocity2 < 0.0) ? (-max_linear_velocity_): max_linear_velocity_;}if(fabs(angular_velocity2) > max_angular_velocity_){angular_velocity2 = (angular_velocity2 < 0.0) ? (-max_angular_velocity_) : max_angular_velocity_;}//限制转的时候直线速度为0// if(fabs(angular_velocity) > 0.0){// linear_velocity = 0;// }//Step 6: 将速度下发/cmd_velgeometry_msgs::Twist msg2;msg2.linear.x = linear_velocity2; msg2.angular.z = angular_velocity2; std::cout << "linear_velocity2: " << linear_velocity2 << ", angular_velocity2: " << angular_velocity2 << std::endl;return msg2;}// while循环函数

void whileLoop0() {ros::NodeHandle loop_nh;ros::Publisher velocity_publisher0 = loop_nh.advertise<geometry_msgs::Twist>("epuck_robot_0/mobile_base/cmd_vel", 10);// 目标点// Pose2D target_position_(100.0, 100.0, M_PI/4);Pose2D target_position_(1.28, 0.29, 60); //单位mstd::chrono::milliseconds duration_time(3);while (ros::ok()) {std::this_thread::sleep_for(duration_time);if(!nokov_pose_buf_0.empty() && !epuck2_dist_buf_3_0.empty()){geometry_msgs::Twist pub_msg0 = motionControl(nokov_pose_buf_0.back(), target_position_, epuck2_dist_buf_3_0.back());velocity_publisher0.publish(pub_msg0);nokov_pose_buf_0.clear();epuck2_dist_buf_3_0.clear();}else{// std::cout << "whileLoop0, "// << "nokov_pose_buf_0.size: " << nokov_pose_buf_0.size() << std::endl;}}}void whileLoop1() {ros::NodeHandle loop_nh;ros::Publisher velocity_publisher1 = loop_nh.advertise<geometry_msgs::Twist>("epuck_robot_1/mobile_base/cmd_vel", 10);std::chrono::milliseconds duration_time(3);while (ros::ok()) {std::this_thread::sleep_for(duration_time);if(!nokov_pose_buf_1.empty() && !nokov_pose_buf_f1.empty() && !nokov_pose_buf_2f1.empty() && !epuck2_dist_buf_3_1.empty()){std::cout << "nokov_pose_buf_1.size: " << nokov_pose_buf_1.size() << std::endl;geometry_msgs::Twist pub_msg1 = formationControl1(nokov_pose_buf_1.back(), nokov_pose_buf_f1.back(),nokov_pose_buf_2f1.back(),epuck2_dist_buf_3_1.back());velocity_publisher1.publish(pub_msg1);nokov_pose_buf_1.clear();nokov_pose_buf_f1.clear();nokov_pose_buf_2f1.clear();epuck2_dist_buf_3_1.clear();}else{// std::cout << "whileLoop1, "// << "nokov_pose_buf_1.size: " << nokov_pose_buf_1.size() // << ", nokov_pose_buf_f1.size: " << nokov_pose_buf_f1.size() // << ", nokov_pose_buf_2f1.size: " << nokov_pose_buf_2f1.size() << std::endl;}}

}void whileLoop2() {ros::NodeHandle loop_nh;ros::Publisher velocity_publisher2 = loop_nh.advertise<geometry_msgs::Twist>("epuck_robot_2/mobile_base/cmd_vel", 10);std::chrono::milliseconds duration_time(3);while (ros::ok()) {std::this_thread::sleep_for(duration_time);if(!nokov_pose_buf_2.empty() && !nokov_pose_buf_f2.empty() && !nokov_pose_buf_1f2.empty() && !epuck2_dist_buf_3_2.empty()){std::cout << "nokov_pose_buf_2.size: " << nokov_pose_buf_2.size() << std::endl;geometry_msgs::Twist pub_msg2 = formationControl2(nokov_pose_buf_2.back(), nokov_pose_buf_f2.back(),nokov_pose_buf_1f2.back(),epuck2_dist_buf_3_2.back());velocity_publisher2.publish(pub_msg2);nokov_pose_buf_2.clear();nokov_pose_buf_f2.clear();nokov_pose_buf_1f2.clear();epuck2_dist_buf_3_2.clear();}else{// std::cout << "whileLoop2, "// << "nokov_pose_buf_2.size: " << nokov_pose_buf_2.size() // << ", nokov_pose_buf_f2.size: " << nokov_pose_buf_f2.size() // << ", nokov_pose_buf_1f2.size: " << nokov_pose_buf_1f2.size() << std::endl;}}

}int main(int argc, char *argv[]){//初始化ros节点ros::init(argc, argv, "epuck_2");ros::NodeHandle nh;std::cout << "1111111111" << std::endl;// 创建订阅者数组,用于接收动捕位置std::vector<ros::Subscriber> subs;subs.push_back(nh.subscribe("/vrpn_client_node/Tracker0/pose", 10, NoKovCallback0));subs.push_back(nh.subscribe("/vrpn_client_node/Tracker1/pose", 10, NoKovCallback1));subs.push_back(nh.subscribe("/vrpn_client_node/Tracker2/pose", 10, NoKovCallback2));//订阅epuck2距离传感器的信息subs.push_back(nh.subscribe("/epuck_robot_0/dist_sens", 10, DistCallback0));//后面再起一台机器人看消息类型subs.push_back(nh.subscribe("/epuck_robot_1/dist_sens", 10, DistCallback1));subs.push_back(nh.subscribe("/epuck_robot_2/dist_sens", 10, DistCallback2));std::thread while_thread0(whileLoop0); // 创建一个独立线程执行while循环->用于发送领航者的运动控制指令std::thread while_thread1(whileLoop1); // 发送跟随者1的运动控制指令std::thread while_thread2(whileLoop2); // 发送跟随者2的运动控制指令ros::spin(); // 进入ROS事件循环while_thread0.join(); // 等待while线程结束while_thread1.join(); // 等待while线程结束while_thread2.join(); // 等待while线程结束return 0;}

Epuck2导航跟踪1

三、Epuck2跟踪期望轨迹

验证之前写的纯跟踪算法(pure_pursuit),发现Epuck2机器人能够较好的跟踪预设轨迹。

1.sin曲线跟踪

sin曲线跟踪

接着加入一致性编队控制,使编队中的其他成员跟随领航者朝期望位置移动,可以看到编队能够按照预设的轨迹朝期望位置移动如图1.6所示。

2.bonuli曲线跟踪

多bonuli曲线跟踪

补充资料:

epuck2的drive驱动代码:

#include <sstream>

#include <math.h>

#include <time.h>

#include <sys/time.h>

#include <sys/socket.h>

#include <netinet/in.h>

#include <arpa/inet.h>

#include <stdlib.h>

#include <stdio.h>

#include <unistd.h>

#include <fcntl.h>

#include <ros/ros.h>

#include <sensor_msgs/Range.h>

#include <sensor_msgs/Image.h>

#include <sensor_msgs/Imu.h>

#include <nav_msgs/Odometry.h>

#include <geometry_msgs/Twist.h>

#include <std_msgs/UInt8MultiArray.h>

#include <tf/transform_broadcaster.h>

#include <visualization_msgs/Marker.h>

#include <cv_bridge/cv_bridge.h>

#include <sensor_msgs/image_encodings.h>

#include <opencv/cv.h>

//#include <opencv2/opencv.hpp>

#include <sensor_msgs/LaserScan.h>

#include <sensor_msgs/MagneticField.h>

#include <sensor_msgs/BatteryState.h>//新添加

#include <iostream>

#include <geometry_msgs/PoseWithCovarianceStamped.h>

#include <geometry_msgs/PoseStamped.h>

#include "std_msgs/String.h"

#include <tf/tf.h>

#include <ros/time.h>

#include <vector>

#include <cmath>#include <tf2/LinearMath/Quaternion.h>

#include <tf2_geometry_msgs/tf2_geometry_msgs.h>#include <iomanip> // 包含 setprecision#define DEBUG_CONNECTION_INIT 1

#define DEBUG_ROS_PARAMS 1

#define DEBUG_UPDATE_SENSORS_DATA 0

#define DEBUG_ODOMETRY 0

#define DEBUG_IMU 0

#define DEBUG_SPEED_RECEIVED 0

#define DEBUG_LED_RECEIVED 0

#define DEBUG_RGB_RECEIVED 0

#define DEBUG_MAG_FIELD 0

#define DEBUG_BATTERY 0#define READ_TIMEOUT_SEC 10

#define READ_TIMEOUT_USEC 0

#define MAX_CONNECTION_TRIALS 3#define WHEEL_DIAMETER 4 // cm.

#define WHEEL_SEPARATION 5.3 // Separation between wheels (cm).

#define WHEEL_DISTANCE 0.053 // Distance between wheels in meters (axis length); it's the same value as "WHEEL_SEPARATION" but expressed in meters.

#define WHEEL_CIRCUMFERENCE ((WHEEL_DIAMETER*M_PI)/100.0) // Wheel circumference (meters).

#define MOT_STEP_DIST (WHEEL_CIRCUMFERENCE/1000.0) // Distance for each motor step (meters); a complete turn is 1000 steps (0.000125 meters per step (m/steps)).

#define ROBOT_RADIUS 0.035 // meters.#define LED_NUMBER 6 // total number of LEDs on the robot (0,2,4,6=leds, 8=body, 9=front)

#define RGB_LED_NUMBER 4#define DEG2RAD(deg) (deg / 180 * M_PI)

#define GYRO_RAW2DPS (250.0/32768.0f) //250DPS (degrees per second) scale for int16 raw value

#define STANDARD_GRAVITY 9.80665f#define RESISTOR_R1 220 //kohm

#define RESISTOR_R2 330 //kohm

#define VOLTAGE_DIVIDER (1.0f * RESISTOR_R2 / (RESISTOR_R1 + RESISTOR_R2))

#define VREF 3.0f //volt correspond to the voltage on the VREF+ pin

#define ADC_RESOLUTION 4096

#define COEFF_ADC_TO_VOLT ((1.0f * ADC_RESOLUTION * VOLTAGE_DIVIDER) / VREF) //convertion from adc value to voltage

#define MAX_VOLTAGE 4.2f //volt

#define MIN_VOLTAGE 3.4f //volt// Communication variables

struct sockaddr_in robot_addr;

int fd;

unsigned char command[21];

unsigned char header, sensor[104];

int bytes_sent = 0, bytes_recv = 0;

bool camera_enabled, ground_sensors_enabled;

uint8_t expected_recv_packets = 0;

bool newImageReceived = false;

std::string epuckAddress("");// Sensors data variables

unsigned char image[160*120*2];

float acceleration, orientation, inclination; /**< acceleration data*/

int16_t accData[3];

int16_t gyroRaw[3];

float magneticField[3];

uint8_t temperature;

int proxData[8]; /**< proximity sensors data*/

int lightAvg; /**< light sensor data*/

uint16_t distanceMm;

uint16_t micVolume[4]; /**< microphone data*/

int16_t motorSteps[2];

uint16_t batteryRaw;

uint8_t microSdState;

uint8_t irCheck, irAddress, irData;

uint8_t selector;

int16_t groundProx[3], groundAmbient[3];

uint8_t buttonState;// ROS variables

ros::Publisher proxPublisher[8];

sensor_msgs::Range proxMsg[8];

ros::Publisher laserPublisher;

sensor_msgs::LaserScan laserMsg;

ros::Publisher odomPublisher;

nav_msgs::Odometry odomMsg;

ros::Publisher imagePublisher;

ros::Publisher imuPublisher;

sensor_msgs::Imu imuMsg;

ros::Publisher microphonePublisher;

visualization_msgs::Marker microphoneMsg;

ros::Publisher floorPublisher;

visualization_msgs::Marker floorMsg;

ros::Publisher distSensPublisher;

sensor_msgs::Range distSensMsg;

ros::Publisher magFieldPublisher;

sensor_msgs::MagneticField magFieldMsg;

ros::Publisher magFieldVectorPublisher;

visualization_msgs::Marker magFieldVectorMsg;

ros::Publisher battPublisher;

sensor_msgs::BatteryState battMsg;ros::Subscriber cmdVelSubscriber, cmdLedSubscriber, cmdRgbLedsSubscriber, cmdNokovSubscriber;double leftStepsDiff = 0, rightStepsDiff = 0;

double leftStepsPrev = 0, rightStepsPrev = 0;

signed long int leftStepsRawPrev = 0, rightStepsRawPrev = 0;

signed long int motorPositionDataCorrect[2];

double xPos, yPos, theta;

double deltaSteps, deltaTheta;

ros::Time currentTime, lastTime, currentTimeMap, lastTimeMap;

int overflowCountLeft = 0, overflowCountRight = 0;

int16_t gyroOffset[3] = {0, 0, 0}; // Used if making an initial calibration of the gyro.

int speedLeft = 0, speedRight = 0;//接收动捕的位置

//定义2dPose

struct Pose2D{double x;double y;double angle;Pose2D(){x=0;y=0;angle=0;}Pose2D(double X, double Y, double YAW){x = X;y = Y;angle = YAW;}Pose2D operator= (const Pose2D& pose){x = pose.x;y = pose.y;angle = pose.angle;}};std::vector<Pose2D> nokov_pose_buf_00;//将领航者的动捕数据放入nokov_pose_buf_00

void NoKovCallback00(const geometry_msgs::PoseStamped::ConstPtr& novkov_msg){double x=novkov_msg->pose.position.x;double y=novkov_msg->pose.position.y;tf2::Quaternion quaternion;tf2::fromMsg(novkov_msg->pose.orientation, quaternion);tf2::Matrix3x3 mat(quaternion);double roll, pitch, yaw;mat.getRPY(roll, pitch, yaw);// double yaw=novkov_msg->pose.orientation.w;//std::cout << "novkov_msg: " << x << ", " << y << ", " << yaw * 180 / M_PI << std::endl;Pose2D novkov_pose(x, y, yaw * 180 / M_PI);nokov_pose_buf_00.push_back(novkov_pose);//领航者自身位置信息容器}// General variables

std::string epuckname;int initConnectionWithRobot() {int ret_value;std::stringstream ss;struct timeval tv;socklen_t len = sizeof(tv);uint8_t trials = 0;robot_addr.sin_family = AF_INET;robot_addr.sin_addr.s_addr = inet_addr(epuckAddress.c_str());robot_addr.sin_port = htons(1000);if(DEBUG_CONNECTION_INIT)fprintf(stderr, "Try to connect to %s:%d (TCP)\n", inet_ntoa(robot_addr.sin_addr), htons(robot_addr.sin_port));fd = socket(AF_INET, SOCK_STREAM, 0);if(fd < 0) {perror("TCP cannot create socket: ");return -1;}// Set to non-blocking mode during connection otherwise it will block for too much time if the robot isn't ready to accept connectionsif( (ret_value = fcntl(fd, F_GETFL, 0)) < 0) {perror("Cannot get flag status: ");return -1;}// else {// if((ret_value & O_NONBLOCK) > 0) {// std::cout << "Non-blocking socket" << std::endl;// } else {// std::cout << "Blocking socket" << std::endl;// }//}ret_value |= O_NONBLOCK;if(fcntl(fd, F_SETFL, ret_value) < 0) {perror("Cannot set non-blocking mode: ");return -1;}while(trials < MAX_CONNECTION_TRIALS) {// Connection to the robot (server).ret_value = connect(fd, (struct sockaddr *) &robot_addr, sizeof(robot_addr)); if (ret_value == 0) {break;} else {trials++;if(DEBUG_CONNECTION_INIT)fprintf(stderr, "Connection trial %d\n", trials);sleep(3); }}if(trials == MAX_CONNECTION_TRIALS) {ss.str("");ss << "[" << epuckname << "] " << "Error, can't connect to tcp socket";//if(DEBUG_CONNECTION_INIT)perror(ss.str().c_str());return -1;}// Set to blocking mode.if( (ret_value = fcntl(fd, F_GETFL, 0)) < 0) {perror("Cannot get flag status: ");return -1;}// else {// if((ret_value & O_NONBLOCK) > 0) {// std::cout << "Non-blocking socket" << std::endl;// } else {// std::cout << "Blocking socket" << std::endl;// }//}ret_value &= (~O_NONBLOCK);if(fcntl(fd, F_SETFL, ret_value) < 0) {perror("Cannot set blocking mode: ");return -1;} // Set the reception timeout. This is used when blocking mode is activated after connection.tv.tv_sec = READ_TIMEOUT_SEC;tv.tv_usec = READ_TIMEOUT_USEC;ret_value = setsockopt(fd, SOL_SOCKET, SO_RCVTIMEO, &tv, sizeof(tv));if(ret_value < 0) {perror("Cannot set rx timeout: ");return -1;} //ret_value = getsockopt(fd, SOL_SOCKET, SO_RCVTIMEO, &tv, &len);//if(ret_value < 0) {// perror("Cannot read rx timeout: ");//} else {// std::cout << "rx timeout: " << tv.tv_sec << " s and " << tv.tv_usec << " us" << std::endl;//} return 0;

}void closeConnection() {std::stringstream ss;if(close(fd) < 0) {ss.str("");ss << "[" << epuckname << "] " << "Can't close tcp socket";if(DEBUG_CONNECTION_INIT)perror(ss.str().c_str());}

}void updateSensorsAndActuators() {int bytes_sent = 0, bytes_recv = 0, ret_value;long mantis = 0;short exp = 0;float flt = 0.0;bytes_sent = 0;while(bytes_sent < sizeof(command)) {bytes_sent += send(fd, (char *)&command[bytes_sent], sizeof(command)-bytes_sent, 0);}command[2] = 0; // Stop proximity calibration.while(expected_recv_packets > 0) {bytes_recv = recv(fd, (char *)&header, 1, 0);if (bytes_recv <= 0) {closeConnection();if(initConnectionWithRobot() < 0) {std::cerr << "Lost connection with the robot" << std::endl;exit(1);} else {return; // Wait for the next sensor request}}switch(header) {case 0x01: // Camera. bytes_recv = 0;while(bytes_recv < sizeof(image)) {ret_value = recv(fd, (char *)&image[bytes_recv], sizeof(image)-bytes_recv, 0);if(ret_value <= 0) {closeConnection();if(initConnectionWithRobot() < 0) {std::cerr << "Lost connection with the robot" << std::endl;exit(1);} else {return; // Wait for the next sensor request}} else {bytes_recv += ret_value;//std::cout << "image read = " << bytes_recv << std::endl;}}if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "camera read correctly" << std::endl;newImageReceived = true;//red = image[0] & 0xf8;//green = image[0] << 5;//green += (image[1] & 0xf8) >> 3;//blue = image[1] << 3;//printf("1st pixel = %d, %d, %d\r\n", red, green, blue);break;case 0x02: // Sensors.bytes_recv = 0;while(bytes_recv < sizeof(sensor)) {ret_value = recv(fd, (char *)&sensor[bytes_recv], sizeof(sensor)-bytes_recv, 0);if(ret_value <= 0) {closeConnection();if(initConnectionWithRobot() < 0) {std::cerr << "Lost connection with the robot" << std::endl;exit(1);} else {return; // Wait for the next sensor request}} else {bytes_recv += ret_value;//std::cout << "sensors read = " << bytes_recv << std::endl;}}accData[0] = sensor[0] + sensor[1]*256;accData[1] = sensor[2] + sensor[3]*256;accData[2] = sensor[4] + sensor[5]*256; if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "acc: " << accData[0] << "," << accData[1] << "," << accData[2] << std::endl;// Compute accelerationmantis = (sensor[6] & 0xff) + ((sensor[7] & 0xffl) << 8) + (((sensor[8] &0x7fl) | 0x80) << 16);exp = (sensor[9] & 0x7f) * 2 + ((sensor[8] & 0x80) ? 1 : 0);if (sensor[9] & 0x80) {mantis = -mantis;}flt = (mantis || exp) ? ((float) ldexp (mantis, (exp - 127 - 23))): 0;acceleration=flt;if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "acceleration: " << acceleration << std::endl;// Compute orientation.mantis = (sensor[10] & 0xff) + ((sensor[11] & 0xffl) << 8) + (((sensor[12] &0x7fl) | 0x80) << 16);exp = (sensor[13] & 0x7f) * 2 + ((sensor[12] & 0x80) ? 1 : 0);if (sensor[13] & 0x80)mantis = -mantis;flt = (mantis || exp) ? ((float) ldexp (mantis, (exp - 127 - 23))): 0;orientation=flt;if (orientation < 0.0 )orientation=0.0;if (orientation > 360.0 )orientation=360.0;if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "orientation: " << orientation << std::endl;// Compute inclination.mantis = (sensor[14] & 0xff) + ((sensor[15] & 0xffl) << 8) + (((sensor[16] &0x7fl) | 0x80) << 16);exp = (sensor[17] & 0x7f) * 2 + ((sensor[16] & 0x80) ? 1 : 0);if (sensor[17] & 0x80)mantis = -mantis;flt = (mantis || exp) ? ((float) ldexp (mantis, (exp - 127 - 23))): 0;inclination=flt;if (inclination < 0.0 )inclination=0.0;if (inclination > 180.0 )inclination=180.0;if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "inclination: " << inclination << std::endl;// GyrogyroRaw[0] = sensor[18]+sensor[19]*256;gyroRaw[1] = sensor[20]+sensor[21]*256;gyroRaw[2] = sensor[22]+sensor[23]*256;if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "gyro: " << gyroRaw[0] << "," << gyroRaw[1] << "," << gyroRaw[2] << std::endl; // MagnetometermagneticField[0] = *((float*)&sensor[24]);magneticField[1] = *((float*)&sensor[28]);magneticField[2] = *((float*)&sensor[32]);if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "mag: " << magneticField[0] << "," << magneticField[1] << "," << magneticField[2] << std::endl; // Temperature.temperature = sensor[36];if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "temperature: " << (int)temperature << std::endl;// Proximity sensors data.proxData[0] = sensor[37]+sensor[38]*256;proxData[1] = sensor[39]+sensor[40]*256;proxData[2] = sensor[41]+sensor[42]*256;proxData[3] = sensor[43]+sensor[44]*256;proxData[4] = sensor[45]+sensor[46]*256;proxData[5] = sensor[47]+sensor[48]*256;proxData[6] = sensor[49]+sensor[50]*256;proxData[7] = sensor[51]+sensor[52]*256;if(proxData[0]<0) {proxData[0]=0;}if(proxData[1]<0) {proxData[1]=0;}if(proxData[2]<0) {proxData[2]=0;}if(proxData[3]<0) {proxData[3]=0;}if(proxData[4]<0) {proxData[4]=0;}if(proxData[5]<0) {proxData[5]=0;}if(proxData[6]<0) {proxData[6]=0;}if(proxData[7]<0) {proxData[7]=0;}if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "prox: " << proxData[0] << "," << proxData[1] << "," << proxData[2] << "," << proxData[3] << "," << proxData[4] << "," << proxData[5] << "," << proxData[6] << "," << proxData[7] << std::endl; // Compute abmient light.lightAvg += (sensor[53]+sensor[54]*256);lightAvg += (sensor[55]+sensor[56]*256);lightAvg += (sensor[57]+sensor[58]*256);lightAvg += (sensor[59]+sensor[60]*256);lightAvg += (sensor[61]+sensor[62]*256);lightAvg += (sensor[63]+sensor[64]*256);lightAvg += (sensor[65]+sensor[66]*256);lightAvg += (sensor[67]+sensor[68]*256);lightAvg = (int) (lightAvg/8);lightAvg = (lightAvg>4000)?4000:lightAvg;if(lightAvg<0) {lightAvg=0;}if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "lightAvg: " << lightAvg << std::endl;// ToFdistanceMm = (uint16_t)((uint8_t)sensor[70]<<8)|((uint8_t)sensor[69]);if(distanceMm > 2000) {distanceMm = 2000;}if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "distanceMm: " << distanceMm << "(" << (int)sensor[69] << "," << (int)sensor[70] << ")" << std::endl;// MicrophonemicVolume[0] = ((uint8_t)sensor[71]+(uint8_t)sensor[72]*256);micVolume[1] = ((uint8_t)sensor[73]+(uint8_t)sensor[74]*256);micVolume[2] = ((uint8_t)sensor[75]+(uint8_t)sensor[76]*256);micVolume[3] = ((uint8_t)sensor[77]+(uint8_t)sensor[78]*256);if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "mic: " << micVolume[0] << "," << micVolume[1] << "," << micVolume[2] << "," << micVolume[3] << std::endl;// Left stepsmotorSteps[0] = (sensor[79]+sensor[80]*256);// Right stepsmotorSteps[1] = (sensor[81]+sensor[82]*256);if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "steps: " << motorSteps[0] << "," << motorSteps[1] << std::endl;// BatterybatteryRaw = (uint8_t)sensor[83]+(uint8_t)sensor[84]*256;if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "batteryRaw: " << batteryRaw << std::endl;// Micro sd state.microSdState = sensor[85];if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "microSdState: " << (int)microSdState << std::endl;// Tv remote.irCheck = sensor[86];irAddress = sensor[87];irData = sensor[88];if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "tv remote: " << (int)irCheck << "," << (int)irAddress << "," << (int)irData << std::endl;// Selector.selector = sensor[89];if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "selector: " << (int)selector << std::endl;// Ground sensor proximity.groundProx[0] = sensor[90]+sensor[91]*256;groundProx[1] = sensor[92]+sensor[93]*256;groundProx[2] = sensor[94]+sensor[95]*256;if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "groundProx: " << groundProx[0] << "," << groundProx[1] << "," << groundProx[2] << std::endl;// Ground sensor ambient light.groundAmbient[0] = sensor[96]+sensor[97]*256;groundAmbient[1] = sensor[98]+sensor[99]*256;groundAmbient[2] = sensor[100]+sensor[101]*256;if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "groundAmbient: " << groundAmbient[0] << "," << groundAmbient[1] << "," << groundAmbient[2] << std::endl;// Button state.buttonState = sensor[102]; if(DEBUG_UPDATE_SENSORS_DATA)std::cout << "[" << epuckname << "] " << "buttonState: " << (int)buttonState << std::endl;break;case 0x03:printf("empty packet\r\n");break;default:printf("unexpected packet\r\n");break;}expected_recv_packets--;}if(camera_enabled) {expected_recv_packets = 2;} else {expected_recv_packets = 1;}}void RGB565toRGB888(int width, int height, unsigned char *src, unsigned char *dst) {int line, column;int index_src=0, index_dst=0;for (line = 0; line < height; ++line) {for (column = 0; column < width; ++column) {dst[index_dst++] = (unsigned char)(src[index_src] & 0xF8);dst[index_dst++] = (unsigned char)((src[index_src]&0x07)<<5) | (unsigned char)((src[index_src+1]&0xE0)>>3);dst[index_dst++] = (unsigned char)((src[index_src+1]&0x1F)<<3);index_src+=2;}}

}void updateRosInfo() {static tf::TransformBroadcaster br;std::stringstream ss;geometry_msgs::Quaternion orientQuat;std::stringstream parent;std::stringstream child;tf::Transform transform;tf::Quaternion q;int i = 0;//#############################################################################################################################################// Proximity topicsfor(i=0; i<8; i++) {if(proxData[i] > 0) {proxMsg[i].range = 0.5/sqrt(proxData[i]); // Transform the analog value to a distance value in meters (given from field tests).} else {proxMsg[i].range = proxMsg[i].max_range;}if(proxMsg[i].range > proxMsg[i].max_range) {proxMsg[i].range = proxMsg[i].max_range;}if(proxMsg[i].range < proxMsg[i].min_range) {proxMsg[i].range = proxMsg[i].min_range;}proxMsg[i].header.stamp = ros::Time::now();proxPublisher[i].publish(proxMsg[i]);}// e-puck proximity positions (cm), x pointing forward, y pointing left// P7(3.5, 1.0) P0(3.5, -1.0)// P6(2.5, 2.5) P1(2.5, -2.5)// P5(0.0, 3.0) P2(0.0, -3.0)// P4(-3.5, 2.0) P3(-3.5, -2.0)//// e-puck proximity orentations (degrees)// P7(10) P0(350)// P6(40) P1(320)// P5(90) P2(270)// P4(160) P3(200)transform.setOrigin( tf::Vector3(0.035, -0.010, 0.034) ); q.setRPY(0, 0, 6.11);transform.setRotation(q); parent << epuckname << "/base_prox0";child << epuckname << "/base_link";br.sendTransform(tf::StampedTransform(transform, ros::Time::now(), child.str(), parent.str()));transform.setOrigin( tf::Vector3(0.025, -0.025, 0.034) ); q.setRPY(0, 0, 5.59);transform.setRotation(q);parent.str("");parent << epuckname << "/base_prox1";br.sendTransform(tf::StampedTransform(transform, ros::Time::now(), child.str(), parent.str()));transform.setOrigin( tf::Vector3(0.000, -0.030, 0.034) ); q.setRPY(0, 0, 4.71);transform.setRotation(q);parent.str("");parent << epuckname << "/base_prox2";br.sendTransform(tf::StampedTransform(transform, ros::Time::now(), child.str(), parent.str()));transform.setOrigin( tf::Vector3(-0.035, -0.020, 0.034) ); q.setRPY(0, 0, 3.49);transform.setRotation(q);parent.str("");parent << epuckname << "/base_prox3";br.sendTransform(tf::StampedTransform(transform, ros::Time::now(), child.str(), parent.str()));transform.setOrigin( tf::Vector3(-0.035, 0.020, 0.034) ); q.setRPY(0, 0, 2.8);transform.setRotation(q);parent.str("");parent << epuckname << "/base_prox4";br.sendTransform(tf::StampedTransform(transform, ros::Time::now(), child.str(), parent.str()));transform.setOrigin( tf::Vector3(0.000, 0.030, 0.034) ); q.setRPY(0, 0, 1.57);transform.setRotation(q);parent.str("");parent << epuckname << "/base_prox5";br.sendTransform(tf::StampedTransform(transform, ros::Time::now(), child.str(), parent.str()));transform.setOrigin( tf::Vector3(0.025, 0.025, 0.034) ); q.setRPY(0, 0, 0.70);transform.setRotation(q);parent.str("");parent << epuckname << "/base_prox6";br.sendTransform(tf::StampedTransform(transform, ros::Time::now(), child.str(), parent.str()));transform.setOrigin( tf::Vector3(0.035, 0.010, 0.034) ); q.setRPY(0, 0, 0.17);transform.setRotation(q);parent.str("");parent << epuckname << "/base_prox7";br.sendTransform(tf::StampedTransform(transform, ros::Time::now(), child.str(), parent.str()));//############################################################################################################################################# //############################################################################################################################################# // Laserscan topiccurrentTimeMap = ros::Time::now();parent.str("");parent << epuckname << "/base_laser";//populate the LaserScan messagelaserMsg.header.stamp = ros::Time::now();laserMsg.header.frame_id = parent.str();laserMsg.angle_min = -M_PI/2.0;laserMsg.angle_max = M_PI/2.0;laserMsg.angle_increment = M_PI/18.0; // 10 degrees.//laserMsg.time_increment = (currentTimeMap-lastTimeMap).toSec()/180; //0.003; //(1 / laser_frequency) / (num_readings);//laserMsg.scan_time = (currentTimeMap-lastTimeMap).toSec();// The laser is placed in the center of the robot, but the proximity sensors are placed around the robot thus add "ROBOT_RADIUS" to get correct values.laserMsg.range_min = 0.005+ROBOT_RADIUS; // 0.5 cm + ROBOT_RADIUS.laserMsg.range_max = 0.05+ROBOT_RADIUS; // 5 cm + ROBOT_RADIUS. laserMsg.ranges.resize(19);laserMsg.intensities.resize(19);lastTimeMap = ros::Time::now();// We use the information from the 6 proximity sensors on the front side of the robot to get 19 laser scan points. The interpolation used is the following:// -90 degrees: P2// -80 degrees: 4/5*P2 + 1/5*P1// -70 degrees: 3/5*P2 + 2/5*P1// -60 degrees: 2/5*P2 + 3/5*P1// -50 degrees: 1/5*P2 + 4/5*P1// -40 degrees: P1// -30 degrees: 2/3*P1 + 1/3*P0// -20 degrees: 1/3*P1 + 2/3*P0// -10 degrees: P0// 0 degrees: 1/2*P0 + 1/2*P7// 10 degrees: P7// 20 degrees: 1/3*P6 + 2/3*P7// 30 degrees: 2/3*P6 + 1/3*P7// 40 degrees: P6// 50 degrees: 1/5*P5 + 4/5*P6// 60 degrees: 2/5*P5 + 3/5*P6// 70 degrees: 3/5*P5 + 2/5*P6// 80 degrees: 4/5*P5 + 1/5*P6// 90 degrees: P5float tempProx;tempProx = proxData[2];if(tempProx > 0) { laserMsg.ranges[0] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[0] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[0] = laserMsg.range_max;laserMsg.intensities[0] = 0;}tempProx = proxData[2]*4/5 + proxData[1]*1/5;if(tempProx > 0) { laserMsg.ranges[1] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[1] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[1] = laserMsg.range_max;laserMsg.intensities[1] = 0;}tempProx = proxData[2]*3/5 + proxData[1]*2/5;if(tempProx > 0) { laserMsg.ranges[2] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[2] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[2] = laserMsg.range_max;laserMsg.intensities[2] = 0;}tempProx = proxData[2]*2/5 + proxData[1]*3/5;if(tempProx > 0) { laserMsg.ranges[3] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[3] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[3] = laserMsg.range_max;laserMsg.intensities[3] = 0;} tempProx = proxData[2]*1/5 + proxData[1]*4/5;if(tempProx > 0) { laserMsg.ranges[4] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[4] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[4] = laserMsg.range_max;laserMsg.intensities[4] = 0;} tempProx = proxData[1];if(tempProx > 0) { laserMsg.ranges[5] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[5] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[5] = laserMsg.range_max;laserMsg.intensities[5] = 0;} tempProx = proxData[1]*2/3 + proxData[0]*1/3;if(tempProx > 0) { laserMsg.ranges[6] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[6] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[6] = laserMsg.range_max;laserMsg.intensities[6] = 0;} tempProx = proxData[1]*1/3 + proxData[0]*2/3;if(tempProx > 0) { laserMsg.ranges[7] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[7] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[7] = laserMsg.range_max;laserMsg.intensities[7] = 0;} tempProx = proxData[0];if(tempProx > 0) { laserMsg.ranges[8] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[8] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[8] = laserMsg.range_max;laserMsg.intensities[8] = 0;} tempProx = (proxData[0]+proxData[7])>>1;if(tempProx > 0) { laserMsg.ranges[9] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[9] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[9] = laserMsg.range_max;laserMsg.intensities[9] = 0;} tempProx = proxData[7];if(tempProx > 0) { laserMsg.ranges[10] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[10] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[10] = laserMsg.range_max;laserMsg.intensities[10] = 0;} tempProx = proxData[7]*2/3 + proxData[6]*1/3;if(tempProx > 0) { laserMsg.ranges[11] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[11] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[11] = laserMsg.range_max;laserMsg.intensities[11] = 0;} tempProx = proxData[7]*1/3 + proxData[6]*2/3;if(tempProx > 0) { laserMsg.ranges[12] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[12] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[12] = laserMsg.range_max;laserMsg.intensities[12] = 0;} tempProx = proxData[6];if(tempProx > 0) { laserMsg.ranges[13] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[13] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[13] = laserMsg.range_max;laserMsg.intensities[13] = 0;} tempProx = proxData[6]*4/5 + proxData[5]*1/5;if(tempProx > 0) { laserMsg.ranges[14] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[14] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[14] = laserMsg.range_max;laserMsg.intensities[14] = 0;} tempProx = proxData[6]*3/5 + proxData[5]*2/5;if(tempProx > 0) { laserMsg.ranges[15] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[15] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[15] = laserMsg.range_max;laserMsg.intensities[15] = 0;} tempProx = proxData[6]*2/5 + proxData[5]*3/5;if(tempProx > 0) { laserMsg.ranges[16] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[16] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[16] = laserMsg.range_max;laserMsg.intensities[16] = 0;} tempProx = proxData[6]*1/5 + proxData[5]*4/5;if(tempProx > 0) { laserMsg.ranges[17] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[17] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[17] = laserMsg.range_max;laserMsg.intensities[17] = 0;} tempProx = proxData[5];if(tempProx > 0) { laserMsg.ranges[18] = (0.5/sqrt(tempProx))+ROBOT_RADIUS; // Transform the analog value to a distance value in meters (given from field tests).laserMsg.intensities[18] = tempProx; } else { // Sometimes the values could be negative due to the calibration, it means there is no obstacles.laserMsg.ranges[18] = laserMsg.range_max;laserMsg.intensities[18] = 0;} for(i=0; i<19; i++) {if(laserMsg.ranges[i] > laserMsg.range_max) {laserMsg.ranges[i] = laserMsg.range_max;}if(laserMsg.ranges[i] < laserMsg.range_min) {laserMsg.ranges[i] = laserMsg.range_min;}}transform.setOrigin( tf::Vector3(0.0, 0.0, 0.034) ); q.setRPY(0, 0, 0);transform.setRotation(q); parent.str("");child.str("");parent << epuckname << "/base_laser";child << epuckname << "/base_link";br.sendTransform(tf::StampedTransform(transform, ros::Time::now(), child.str(), parent.str()));laserPublisher.publish(laserMsg);//#############################################################################################################################################//############################################################################################################################################# // Odometry topic// The encoders values coming from the e-puck are 2 bytes signed int thus we need to handle the overflows otherwise the odometry will be wrong after a while (about 4 meters).if((leftStepsRawPrev>0) && (motorSteps[0]<0) && (abs(motorSteps[0]-leftStepsRawPrev)>30000)) { // Overflow detected (positive).overflowCountLeft++;}if((leftStepsRawPrev<0) && (motorSteps[0]>0) && (abs(motorSteps[0]-leftStepsRawPrev)>30000)) { // Overflow detected (negative).overflowCountLeft--;}motorPositionDataCorrect[0] = (overflowCountLeft*65536) + motorSteps[0];if((rightStepsRawPrev>0) && (motorSteps[1]<0) && (abs(motorSteps[1]-rightStepsRawPrev)>30000)) { // Overflow detected (positive).overflowCountRight++;}if((rightStepsRawPrev<0) && (motorSteps[1]>0) && (abs(motorSteps[1]-rightStepsRawPrev)>30000)) { // Overflow detected (negative).overflowCountRight--;}motorPositionDataCorrect[1] = (overflowCountRight*65536) + motorSteps[1]; leftStepsRawPrev = motorSteps[0];rightStepsRawPrev = motorSteps[1];if(DEBUG_ODOMETRY)std::cout << "[" << epuckname << "] " << "left, right raw: " << motorSteps[0] << ", " << motorSteps[1] << std::endl;if(DEBUG_ODOMETRY)std::cout << "[" << epuckname << "] " << "left, right raw corrected: " << motorPositionDataCorrect[0] << ", " << motorPositionDataCorrect[1] << std::endl;// Compute odometry.leftStepsDiff = motorPositionDataCorrect[0]*MOT_STEP_DIST - leftStepsPrev; // Expressed in meters.rightStepsDiff = motorPositionDataCorrect[1]*MOT_STEP_DIST - rightStepsPrev; // Expressed in meters.if(DEBUG_ODOMETRY)std::cout << "[" << epuckname << "] " << "left, right steps diff: " << leftStepsDiff << ", " << rightStepsDiff << std::endl;deltaTheta = (rightStepsDiff - leftStepsDiff)/WHEEL_DISTANCE; // Expressed in radiant.deltaSteps = (rightStepsDiff + leftStepsDiff)/2; // Expressed in meters.if(DEBUG_ODOMETRY)std::cout << "[" << epuckname << "] " << "delta theta, steps: " << deltaTheta << ", " << deltaSteps << std::endl;xPos += deltaSteps*cos(theta + deltaTheta/2); // Expressed in meters.yPos += deltaSteps*sin(theta + deltaTheta/2); // Expressed in meters.theta += deltaTheta; // Expressed in radiant.if(DEBUG_ODOMETRY)std::cout << "[" << epuckname << "] " << "x, y, theta: " << xPos << ", " << yPos << ", " << theta << std::endl;leftStepsPrev = motorPositionDataCorrect[0]*MOT_STEP_DIST; // Expressed in meters.rightStepsPrev = motorPositionDataCorrect[1]*MOT_STEP_DIST; // Expressed in meters.if(!nokov_pose_buf_00.empty()){// Publish the odometry message over ROS.odomMsg.header.stamp = ros::Time::now();odomMsg.header.frame_id = "odom";ss << epuckname << "/base_link";odomMsg.child_frame_id = ss.str();odomMsg.pose.pose.position.x = nokov_pose_buf_00.back().x; odomMsg.pose.pose.position.y = nokov_pose_buf_00.back().y;std::cout << "nokov_pose_buf_00, x: " << nokov_pose_buf_00.back().x << ", y: " << nokov_pose_buf_00.back().y;// odomMsg.pose.pose.position.x = xPos; // odomMsg.pose.pose.position.y = yPos;odomMsg.pose.pose.position.z = 0;odomMsg.pose.covariance[0] = 0.001;odomMsg.pose.covariance[7] = 0.001;odomMsg.pose.covariance[14] = 1000.0;odomMsg.pose.covariance[21] = 1000.0;odomMsg.pose.covariance[28] = 1000.0;odomMsg.pose.covariance[35] = 0.001;// Since all odometry is 6DOF we'll need a quaternion created from yaw.orientQuat = tf::createQuaternionMsgFromYaw(nokov_pose_buf_00.back().angle / 180 * M_PI);odomMsg.pose.pose.orientation = orientQuat;currentTime = ros::Time::now();// odomMsg.twist.twist.linear.x = deltaSteps / ((currentTime-lastTime).toSec()); // "deltaSteps" is the linear distance covered in meters from the last update (delta distance);// // the time from the last update is measured in seconds thus to get m/s we multiply them.// odomMsg.twist.twist.angular.z = deltaTheta / ((currentTime-lastTime).toSec()); // "deltaTheta" is the angular distance covered in radiant from the last update (delta angle);// the time from the last update is measured in seconds thus to get rad/s we multiply them.if(DEBUG_ODOMETRY)std::cout << "[" << epuckname << "] " << "time elapsed = " << (currentTime-lastTime).toSec() << " seconds" << std::endl;lastTime = ros::Time::now();odomPublisher.publish(odomMsg);// Publish the transform over tf.geometry_msgs::TransformStamped odomTrans;odomTrans.header.stamp = odomMsg.header.stamp;odomTrans.header.frame_id = odomMsg.header.frame_id;odomTrans.child_frame_id = odomMsg.child_frame_id;odomTrans.transform.translation.x = odomMsg.pose.pose.position.x;odomTrans.transform.translation.y = odomMsg.pose.pose.position.y;odomTrans.transform.translation.z = 0.0;odomTrans.transform.rotation = orientQuat;br.sendTransform(odomTrans);nokov_pose_buf_00.clear();}//#############################################################################################################################################//############################################################################################################################################# // IMU topicss.str(std::string());ss << epuckname << "/base_link";imuMsg.header.frame_id = ss.str();imuMsg.header.stamp = ros::Time::now();// Exchange x and y to be compatible with e-puck 1.ximuMsg.linear_acceleration.x = (accData[1])/800.0*STANDARD_GRAVITY; // 1 g = about 800, then transforms in m/s^2.imuMsg.linear_acceleration.y = (accData[0])/800.0*STANDARD_GRAVITY;imuMsg.linear_acceleration.z = (accData[2])/800.0*STANDARD_GRAVITY;imuMsg.linear_acceleration_covariance[0] = 0.01;imuMsg.linear_acceleration_covariance[1] = 0.0;imuMsg.linear_acceleration_covariance[2] = 0.0;imuMsg.linear_acceleration_covariance[3] = 0.0;imuMsg.linear_acceleration_covariance[4] = 0.01;imuMsg.linear_acceleration_covariance[5] = 0.0;imuMsg.linear_acceleration_covariance[6] = 0.0;imuMsg.linear_acceleration_covariance[7] = 0.0;imuMsg.linear_acceleration_covariance[8] = 0.01;if(DEBUG_IMU)std::cout << "[" << epuckname << "] " << "accel raw: " << accData[0] << ", " << accData[1] << ", " << accData[2] << std::endl;if(DEBUG_IMU)std::cout << "[" << epuckname << "] " << "accel (m/s2): " << imuMsg.linear_acceleration.x << ", " << imuMsg.linear_acceleration.y << ", " << imuMsg.linear_acceleration.z << std::endl;imuMsg.angular_velocity.x = (gyroRaw[0] - gyroOffset[0]) * DEG2RAD(GYRO_RAW2DPS); // rad/simuMsg.angular_velocity.y = (gyroRaw[1] - gyroOffset[1]) * DEG2RAD(GYRO_RAW2DPS);imuMsg.angular_velocity.z = (gyroRaw[2] - gyroOffset[2]) * DEG2RAD(GYRO_RAW2DPS);imuMsg.angular_velocity_covariance[0] = 0.01;imuMsg.angular_velocity_covariance[1] = 0.0;imuMsg.angular_velocity_covariance[2] = 0.0;imuMsg.angular_velocity_covariance[3] = 0.0;imuMsg.angular_velocity_covariance[4] = 0.01;imuMsg.angular_velocity_covariance[5] = 0.0;imuMsg.angular_velocity_covariance[6] = 0.0;imuMsg.angular_velocity_covariance[7] = 0.0;imuMsg.angular_velocity_covariance[8] = 0.01;if(DEBUG_IMU)std::cout << "[" << epuckname << "] " << "gyro raw: " << gyroRaw[0] << ", " << gyroRaw[1] << ", " << gyroRaw[2] << std::endl;if(DEBUG_IMU)std::cout << "[" << epuckname << "] " << "gyro (rad/s): " << imuMsg.angular_velocity.x << ", " << imuMsg.angular_velocity.y << ", " << imuMsg.angular_velocity.z << std::endl; // Pitch and roll computed assuming the aerospace rotation sequence Rxyzdouble roll = atan2(imuMsg.linear_acceleration.y, imuMsg.linear_acceleration.z);double pitch = atan2(-imuMsg.linear_acceleration.x, sqrt(imuMsg.linear_acceleration.y*imuMsg.linear_acceleration.y + imuMsg.linear_acceleration.z*imuMsg.linear_acceleration.z));double yaw = 0.0;orientQuat = tf::createQuaternionMsgFromRollPitchYaw(roll, pitch, yaw);imuMsg.orientation = orientQuat;imuMsg.orientation_covariance[0] = 0.01;imuMsg.orientation_covariance[1] = 0.0;imuMsg.orientation_covariance[2] = 0.0;imuMsg.orientation_covariance[3] = 0.0;imuMsg.orientation_covariance[4] = 0.01;imuMsg.orientation_covariance[5] = 0.0;imuMsg.orientation_covariance[6] = 0.0;imuMsg.orientation_covariance[7] = 0.0;imuMsg.orientation_covariance[8] = 0.01;if(DEBUG_IMU)std::cout << "[" << epuckname << "] " << "roll=" << roll << ", pitch=" << pitch << std::endl; imuPublisher.publish(imuMsg); //############################################################################################################################################# //############################################################################################################################################# // Magnetic field topicss.str(std::string());ss << epuckname << "/base_link";magFieldMsg.header.frame_id = ss.str();magFieldMsg.header.stamp = ros::Time::now();magFieldMsg.magnetic_field_covariance[0] = 0.01;magFieldMsg.magnetic_field_covariance[4] = 0.01;magFieldMsg.magnetic_field_covariance[8] = 0.01;magFieldMsg.magnetic_field.x = magneticField[0]/1000000.0; // given in TeslamagFieldMsg.magnetic_field.y = magneticField[1]/1000000.0; // given in TeslamagFieldMsg.magnetic_field.z = magneticField[2]/1000000.0; // given in Teslaif(DEBUG_MAG_FIELD)std::cout << "[" << epuckname << "] " << "mag field (Tesla): " << magFieldMsg.magnetic_field.x << ", " << magFieldMsg.magnetic_field.y << ", " << magFieldMsg.magnetic_field.z << std::endl;magFieldPublisher.publish(magFieldMsg); // Magnetic field vector (normalized) topic// The resulting vector will have a length of 1 meterss.str(std::string());ss << epuckname << "/base_link";magFieldVectorMsg.header.frame_id = ss.str();magFieldVectorMsg.header.stamp = ros::Time::now();magFieldVectorMsg.type = visualization_msgs::Marker::ARROW;geometry_msgs::Point p;// Start pointp.x = 0.0;p.y = 0.0;p.z = 0.0;magFieldVectorMsg.points.clear();magFieldVectorMsg.points.push_back(p);double mod = sqrt(magFieldMsg.magnetic_field.x*magFieldMsg.magnetic_field.x + magFieldMsg.magnetic_field.y*magFieldMsg.magnetic_field.y);// End pointp.x = magFieldMsg.magnetic_field.x/mod;p.y = magFieldMsg.magnetic_field.y/mod;magFieldVectorMsg.points.push_back(p);magFieldVectorMsg.scale.x = 0.002;magFieldVectorMsg.scale.y = 0.003;magFieldVectorMsg.scale.z = 0.005;magFieldVectorMsg.color.a = 1.0;magFieldVectorMsg.color.r = 1.0;magFieldVectorMsg.color.g = 1.0;magFieldVectorMsg.color.b = 0.0;magFieldVectorPublisher.publish(magFieldVectorMsg); //############################################################################################################################################# //############################################################################################################################################# // Microphone topicss.str(std::string());ss << epuckname << "/base_link";microphoneMsg.header.frame_id = ss.str();microphoneMsg.header.stamp = ros::Time::now();microphoneMsg.type = visualization_msgs::Marker::TEXT_VIEW_FACING;microphoneMsg.pose.position.x = 0.15;microphoneMsg.pose.position.y = 0;microphoneMsg.pose.position.z = 0.11;orientQuat = tf::createQuaternionMsgFromYaw(0);microphoneMsg.pose.orientation = orientQuat;microphoneMsg.scale.z = 0.01;microphoneMsg.color.a = 1.0;microphoneMsg.color.r = 1.0;microphoneMsg.color.g = 1.0;microphoneMsg.color.b = 1.0;ss.str(std::string());;ss << "mic: [" << micVolume[0] << ", " << micVolume[1] << ", " << micVolume[2] << ", " << micVolume[3] << "]";microphoneMsg.text = ss.str();microphonePublisher.publish(microphoneMsg);//############################################################################################################################################# //############################################################################################################################################# // Time of flight topicdistSensMsg.range = (float)distanceMm/1000.0;if(distSensMsg.range > distSensMsg.max_range) {distSensMsg.range = distSensMsg.max_range;}if(distSensMsg.range < distSensMsg.min_range) {distSensMsg.range = distSensMsg.min_range;}distSensMsg.header.stamp = ros::Time::now();distSensPublisher.publish(distSensMsg);transform.setOrigin( tf::Vector3(0.035, 0.0, 0.034) ); q.setRPY(0, -0.21, 0.0);transform.setRotation(q); parent.str(""); parent << epuckname << "/base_dist_sens";child.str("");child << epuckname << "/base_link";br.sendTransform(tf::StampedTransform(transform, ros::Time::now(), child.str(), parent.str()));//#############################################################################################################################################//############################################################################################################################################# // Battery topicss.str(std::string());ss << epuckname << "/base_link";battMsg.header.frame_id = ss.str();battMsg.header.stamp = ros::Time::now();battMsg.voltage = (float)batteryRaw / COEFF_ADC_TO_VOLT;battMsg.percentage = (battMsg.voltage - MIN_VOLTAGE) / (MAX_VOLTAGE - MIN_VOLTAGE);battMsg.present = 1;if(DEBUG_BATTERY)std::cout << "[" << epuckname << "] " << "battery V: " << battMsg.voltage << ", " << battMsg.percentage*100.0 << " %" << std::endl;battPublisher.publish(battMsg); //############################################################################################################################################# //############################################################################################################################################# // Camera image topicif(camera_enabled) {if(newImageReceived) {newImageReceived = false;cv::Mat rgb888;cv_bridge::CvImage out_msg;out_msg.header.stamp = ros::Time::now();; // Same timestamp and tf frame as input imagergb888 = cv::Mat(120, 160, CV_8UC3);RGB565toRGB888(160, 120, &image[0], rgb888.data); out_msg.encoding = sensor_msgs::image_encodings::RGB8;out_msg.image = rgb888;imagePublisher.publish(out_msg.toImageMsg());}}//############################################################################################################################################# //############################################################################################################################################# // Ground sensor topicif(ground_sensors_enabled) {ss.str(std::string());ss << epuckname << "/base_link";floorMsg.header.frame_id = ss.str();floorMsg.header.stamp = ros::Time::now();floorMsg.type = visualization_msgs::Marker::TEXT_VIEW_FACING;floorMsg.pose.position.x = 0.15;floorMsg.pose.position.y = 0;floorMsg.pose.position.z = 0.13;orientQuat = tf::createQuaternionMsgFromYaw(0);floorMsg.pose.orientation = orientQuat;floorMsg.scale.z = 0.01;floorMsg.color.a = 1.0;floorMsg.color.r = 1.0;floorMsg.color.g = 1.0;floorMsg.color.b = 1.0;ss.str(std::string());ss << "floor: [" << groundProx[0] << ", " << groundProx[1] << ", " << groundProx[2] << ", " << groundProx[3] << ", " << groundProx[4] << "]";floorMsg.text = ss.str();floorPublisher.publish(floorMsg);}//############################################################################################################################################# }void handlerVelocity(const geometry_msgs::Twist::ConstPtr& msg) {// Controls the velocity of each wheel based on linear and angular velocities.double linear = msg->linear.x/3; // Divide by 3 to adapt the values received from the rviz "teleop" module that are too high.double angular = msg->angular.z/3;// Kinematic model for differential robot.double wl = (linear - (WHEEL_SEPARATION / 2.0) * angular) / WHEEL_DIAMETER;double wr = (linear + (WHEEL_SEPARATION / 2.0) * angular) / WHEEL_DIAMETER;// At input 1000, angular velocity is 1 cycle / s or 2*pi/s.speedLeft = int(wl * 1000.0);speedRight = int(wr * 1000.0);command[3] = speedLeft & 0xFF; // left motor LSBcommand[4] = speedLeft >> 8; // left motor MSBcommand[5] = speedRight & 0xFF; // right motor LSBcommand[6] = speedRight >> 8; // right motor MSB if(DEBUG_SPEED_RECEIVED)std::cout << "[" << epuckname << "] " << "new speed: " << speedLeft << ", " << speedRight << std::endl;}void handlerLED(const std_msgs::UInt8MultiArray::ConstPtr& msg) {// Controls the state of each LED on the standard robotfor (int i = 0; i < LED_NUMBER; i++) {if(msg->data[i] == 0) {command[7] &= ~(1<<i);} else {command[7] |= (1<<i);}}if(DEBUG_LED_RECEIVED) {std::cout << "[" << epuckname << "] " << "new LED status: " << std::endl;for (int i = 0; i < LED_NUMBER; i++)std::cout << msg->data[i] << ", ";}

}void handlerRgbLeds(const std_msgs::UInt8MultiArray::ConstPtr& msg) {command[8] = msg->data[0]; // LED2 redcommand[9] = msg->data[1]; // LED2 greencommand[10] = msg->data[2]; // LED2 bluecommand[11] = msg->data[3]; // LED4 redcommand[12] = msg->data[4]; // LED4 greencommand[13] = msg->data[5]; // LED4 bluecommand[14] = msg->data[6]; // LED6 redcommand[15] = msg->data[7]; // LED6 greencommand[16] = msg->data[8]; // LED6 bluecommand[17] = msg->data[9]; // LED8 redcommand[18] = msg->data[10]; // LED8 greencommand[19] = msg->data[11]; // LED8 blue if(DEBUG_RGB_RECEIVED) {std::cout << "[" << epuckname << "] " << "new RGB status: " << std::endl;for (int i = 0; i < RGB_LED_NUMBER; i++) {std::cout << i << ": " << msg->data[i*3] << ", " << msg->data[i*3+1] << ", " << msg->data[i*3+2];}}

}int main(int argc,char *argv[]) {int robotId = 0; double init_xpos, init_ypos, init_theta;int rosRate = 0;unsigned int bufIndex = 0;int i = 0;command[0] = 0x80;command[1] = 2; // Sensors enabled.command[2] = 1; // Calibrate proximity sensors.command[3] = 0; // left motor LSBcommand[4] = 0; // left motor MSBcommand[5] = 0; // right motor LSBcommand[6] = 0; // right motor MSBcommand[7] = 0; // lEDscommand[8] = 0; // LED2 redcommand[9] = 0; // LED2 greencommand[10] = 0; // LED2 bluecommand[11] = 0; // LED4 redcommand[12] = 0; // LED4 greencommand[13] = 0; // LED4 bluecommand[14] = 0; // LED6 redcommand[15] = 0; // LED6 greencommand[16] = 0; // LED6 bluecommand[17] = 0; // LED8 redcommand[18] = 0; // LED8 greencommand[19] = 0; // LED8 bluecommand[20] = 0; // speaker expected_recv_packets = 1;/*** The ros::init() function needs to see argc and argv so that it can perform* any ROS arguments and name remapping that were provided at the command line.* For programmatic remappings you can use a different version of init() which takes* remappings directly, but for most command-line programs, passing argc and argv is* the easiest way to do it. The third argument to init() is the name of the node.** You must call one of the versions of ros::init() before using any other* part of the ROS system.*/ros::init(argc, argv, "epuck_driver_cpp");/*** NodeHandle is the main access point to communications with the ROS system.* The first NodeHandle constructed will fully initialize this node, and the last* NodeHandle destructed will close down the node.*/ros::NodeHandle np("~"); // Private.ros::NodeHandle n; // Public.np.getParam("epuck2_id", robotId);np.param<std::string>("epuck2_address", epuckAddress, "");np.param<std::string>("epuck2_name", epuckname, "epuck2");np.param("xpos", init_xpos, 0.0);np.param("ypos", init_ypos, 0.0);np.param("theta", init_theta, 0.0);np.param("camera", camera_enabled, false);np.param("floor", ground_sensors_enabled, false);//np.param("ros_rate", rosRate, 7); if(DEBUG_ROS_PARAMS) {std::cout << "[" << epuckname << "] " << "epuck id: " << robotId << std::endl;std::cout << "[" << epuckname << "] " << "epuck address: " << epuckAddress << std::endl;std::cout << "[" << epuckname << "] " << "epuck name: " << epuckname << std::endl;std::cout << "[" << epuckname << "] " << "init pose: " << init_xpos << ", " << init_ypos << ", " << theta << std::endl;std::cout << "[" << epuckname << "] " << "camera enabled: " << camera_enabled << std::endl;std::cout << "[" << epuckname << "] " << "ground sensors enabled: " << ground_sensors_enabled << std::endl;//std::cout << "[" << epuckname << "] " << "ros rate: " << rosRate << std::endl;}if(epuckAddress.compare("")==0) {std::cerr << "Robot address cannot be empty" << std::endl;return -1;}if(initConnectionWithRobot()<0) {std::cerr << "Can't connect to the robot" << std::endl;exit(1);} imuPublisher = n.advertise<sensor_msgs::Imu>("imu", 10);for(i=0; i<8; i++) {/*** The advertise() function is how you tell ROS that you want to* publish on a given topic name. This invokes a call to the ROS* master node, which keeps a registry of who is publishing and who* is subscribing. After this advertise() call is made, the master* node will notify anyone who is trying to subscribe to this topic name,* and they will in turn negotiate a peer-to-peer connection with this* node. advertise() returns a Publisher object which allows you to* publish messages on that topic through a call to publish(). Once* all copies of the returned Publisher object are destroyed, the topic* will be automatically unadvertised.** The second parameter to advertise() is the size of the message queue* used for publishing messages. If messages are published more quickly* than we can send them, the number here specifies how many messages to* buffer up before throwing some away.*/std::stringstream ss;ss.str("");ss << "proximity" << i;proxPublisher[i] = n.advertise<sensor_msgs::Range>(ss.str(), 10);//proxMsg[i] = new sensor_msgs::Range();proxMsg[i].radiation_type = sensor_msgs::Range::INFRARED;ss.str("");ss << epuckname << "/base_prox" << i;proxMsg[i].header.frame_id = ss.str();proxMsg[i].field_of_view = 0.26; // About 15 degrees...to be checked!proxMsg[i].min_range = 0.005; // 0.5 cm.proxMsg[i].max_range = 0.05; // 5 cm. } laserPublisher = n.advertise<sensor_msgs::LaserScan>("scan", 10);odomPublisher = n.advertise<nav_msgs::Odometry>("odom", 10);currentTime = ros::Time::now();lastTime = ros::Time::now(); microphonePublisher = n.advertise<visualization_msgs::Marker>("microphone", 10); distSensPublisher = n.advertise<sensor_msgs::Range>("dist_sens", 10);distSensMsg.radiation_type = sensor_msgs::Range::INFRARED;std::stringstream ss;ss.str("");ss << epuckname << "/base_dist_sens";distSensMsg.header.frame_id = ss.str();distSensMsg.field_of_view = 0.43; // About 25 degrees (+/- 12.5)distSensMsg.min_range = 0.005; // 5 mm.distSensMsg.max_range = 2; // 2 m. magFieldPublisher = n.advertise<sensor_msgs::MagneticField>("mag_field", 10);magFieldVectorPublisher = n.advertise<visualization_msgs::Marker>("mag_field_vector", 10);battPublisher = n.advertise<sensor_msgs::BatteryState>("battery", 10);if(camera_enabled) {imagePublisher = n.advertise<sensor_msgs::Image>("camera", 1);command[1] = 3; // Camera and sensors enabled.expected_recv_packets = 2;}if(ground_sensors_enabled) {floorPublisher = n.advertise<visualization_msgs::Marker>("floor", 10);}/*** The subscribe() call is how you tell ROS that you want to receive messages* on a given topic. This invokes a call to the ROS* master node, which keeps a registry of who is publishing and who* is subscribing. Messages are passed to a callback function, here* called handlerVelocity. subscribe() returns a Subscriber object that you* must hold on to until you want to unsubscribe. When all copies of the Subscriber* object go out of scope, this callback will automatically be unsubscribed from* this topic.** The second parameter to the subscribe() function is the size of the message* queue. If messages are arriving faster than they are being processed, this* is the number of messages that will be buffered up before beginning to throw* away the oldest ones.*/cmdVelSubscriber = n.subscribe("mobile_base/cmd_vel", 10, handlerVelocity);cmdLedSubscriber = n.subscribe("mobile_base/cmd_led", 10, handlerLED);cmdRgbLedsSubscriber = n.subscribe("mobile_base/rgb_leds", 10, handlerRgbLeds);cmdNokovSubscriber = n.subscribe("/vrpn_client_node/Tracker0/pose", 1000, NoKovCallback00);theta = init_theta;xPos = init_xpos;yPos = init_ypos;//ros::Rate loop_rate(rosRate);while (ros::ok()) {updateSensorsAndActuators();updateRosInfo();ros::spinOnce();//loop_rate.sleep(); // Do not call "sleep" otherwise the bluetooth communication will hang.// We communicate as fast as possible, this shouldn't be a problem...}closeConnection();}