DINOv3 论文精读(逐段解析)

DINOv3 论文精读(逐段解析)

论文地址:https://arxiv.org/abs/2508.10104

工程地址:https://github.com/facebookresearch/dinov3

2025

Meta AI 研究院

======================================================================

【论文总结】:DINOv3是一个突破性的自监督视觉基础模型,其核心技术创新围绕三个关键方面:大规模数据与模型协同扩展、Gram锚定技术解决密集特征退化、多阶段训练策略。首先,通过精心设计的三重数据策略(聚类策划+检索策划+标准数据集混合)和70亿参数ViT架构,实现了数据与模型的协同扩展,解决了传统自监督学习在规模化时的稳定性问题。其次,最具创新性的Gram锚定技术通过约束patch特征间的Gram矩阵相似性结构,有效解决了长期训练中密集特征质量退化的问题,使模型在保持全局语义理解能力的同时维持精确的空间定位能力。最后,采用多阶段训练流程:基础自监督训练→Gram锚定细化→高分辨率适应→知识蒸馏,每个阶段都针对特定目标进行优化,最终产生了一个真正通用的视觉编码器,在无需微调的情况下就能在目标检测、语义分割、深度估计等多种任务上达到最优性能,为计算机视觉领域树立了新的技术标杆。

======================================================================

Abstract

Self-supervised learning holds the promise of eliminating the need for manual data annotation, enabling models to scale effortlessly to massive datasets and larger architectures. By not being tailored to specific tasks or domains, this training paradigm has the potential to learn visual representations from diverse sources, ranging from natural to aerial images— using a single algorithm. This technical report introduces DINOv3, a major milestone toward realizing this vision by leveraging simple yet effective strategies. First, we leverage the benefit of scaling both dataset and model size by careful data preparation, design, and optimization. Second, we introduce a new method called Gram anchoring, which effectively addresses the known yet unsolved issue of dense feature maps degrading during long training schedules. Finally, we apply post-hoc strategies that further enhance our models’ flexibility with respect to resolution, model size, and alignment with text. As a result, we present a versatile vision foundation model that outperforms the specialized state of the art across a broad range of settings, without fine-tuning. DINOv3 produces high-quality dense features that achieve outstanding performance on various vision tasks, significantly surpassing previous self- and weakly-supervised foundation models. We also share the DINOv3 suite of vision models, designed to advance the state of the art on a wide spectrum of tasks and data by providing scalable solutions for diverse resource constraints and deployment scenarios.

【翻译】自监督学习有望消除手动数据标注的需求,使模型能够毫不费力地扩展到大规模数据集和更大的架构。通过不针对特定任务或领域进行定制,这种训练范式有潜力从多样化的来源学习视觉表示,从自然图像到航拍图像——使用单一算法。这份技术报告介绍了DINOv3,这是通过利用简单而有效的策略来实现这一愿景的重要里程碑。首先,我们通过仔细的数据准备、设计和优化来利用扩展数据集和模型大小的好处。其次,我们引入了一种称为Gram锚定的新方法,它有效地解决了密集特征图在长期训练计划中退化的已知但未解决的问题。最后,我们应用后处理策略,进一步增强我们模型在分辨率、模型大小和与文本对齐方面的灵活性。因此,我们提出了一个多功能的视觉基础模型,在不进行微调的情况下,在广泛的设置范围内超越了专业的最先进技术。DINOv3产生高质量的密集特征,在各种视觉任务上取得出色性能,显著超越了之前的自监督和弱监督基础模型。我们还分享了DINOv3视觉模型套件,旨在通过为不同资源约束和部署场景提供可扩展解决方案,在广泛的任务和数据范围内推进最先进技术。

【解析】自监督学习的本质是让模型从未标注的数据中自主学习有用的表示,这种方法的优势在于它不依赖人工标注,因此可以充分利用互联网上海量的图像数据。传统的监督学习需要为每张图像提供标签,这不仅成本高昂,而且限制了数据规模的扩展。而自监督学习通过设计巧妙的学习任务,让模型从数据本身的结构和模式中学习,从而绕过了标注瓶颈。DINOv3的第一个重要贡献是数据和模型的协同扩展。深度学习中,数据量和模型规模通常需要同步增长才能达到最佳效果。数据准备不仅包括收集更多图像,还涉及数据质量控制、多样性保证和分布平衡。模型设计则需要考虑如何有效利用增加的参数量,避免过拟合和训练不稳定等问题。第二个核心创新是Gram锚定技术。在长期训练过程中,密集特征图会逐渐失去细节信息,这是一个困扰自监督学习的关键问题。密集特征图包含图像中每个位置的特征信息,对于语义分割、目标检测等需要精确空间定位的任务至关重要。Gram锚定通过特定的正则化策略来保持这些密集特征的质量,确保模型在获得全局理解能力的同时,不会牺牲局部细节的表达能力。后处理策略的引入进一步提升了模型的实用性。最终的成果是一个真正通用的视觉基础模型,它在无需微调的情况下就能在多种任务上达到最优性能。"冻结骨干网络"的使用方式大大降低了模型部署的复杂性和计算成本,同时保证了在不同任务间的一致性表现。

1 Introduction

Foundation models have become a central building block in modern computer vision, enabling broad generalization across tasks and domains through a single, reusable model. Self-supervised learning (SSL) is a powerful approach for training such models, by learning directly from raw pixel data and leveraging the natural co-occurrences of patterns in images. Unlike weakly and fully supervised pretraining methods ( Radford et al. , 2021 ; Dehghani et al. , 2023 ; Bolya et al. , 2025 ) which require images paired with high-quality metadata, SSL unlocks training on massive, raw image collections. This is particularly effective for training large-scale visual encoders thanks to the availability of virtually unlimited training data. DINOv2 ( Oquab et al. , 2024 ) exemplifies these strengths, achieving impressive results in image understanding tasks ( Wang et al. , 2025 ) and enabling pre-training for complex domains such as histopathology ( Chen et al. , 2024 ). Models trained with SSL exhibit additional desirable properties: they are robust to input distribution shifts, provide strong global and local features, and generate rich embeddings that facilitate physical scene understanding. Since SSL models are not trained for any specific downstream task, they produce versatile and robust generalist features. For instance, DINOv2 models deliver strong performance across diverse tasks and domains without requiring task-specific finetuning, allowing a single frozen backbone to serve multiple purposes. Importantly, self-supervised learning is especially suitable to train on the vast amount of available observational data in domains like histopathology ( Vorontsov et al. , 2024 ), biology ( Kim et al. , 2025 ), medical imaging ( PérezGarcía et al. , 2025 ), remote sensing ( Cong et al. , 2022 ; Tolan et al. , 2024 ), astronomy ( Parker et al. , 2024 ), or high-energy particle physics ( Dillon et al. , 2022 ). These domain often lack metadata and have already been shown to benefit from foundation models like DINOv2. Finally, SSL, requiring no human intervention, is well-suited for lifelong learning amid the growing volume of web data.

【翻译】基础模型已成为现代计算机视觉的核心构建块,通过单个可重用模型实现跨任务和领域的广泛泛化。自监督学习(SSL)是训练此类模型的强大方法,通过直接从原始像素数据学习并利用图像中模式的自然共现性。与需要图像配对高质量元数据的弱监督和全监督预训练方法(Radford et al., 2021; Dehghani et al., 2023; Bolya et al., 2025)不同,SSL解锁了在大规模原始图像集合上的训练。由于几乎无限的训练数据的可用性,这对于训练大规模视觉编码器特别有效。DINOv2(Oquab et al., 2024)体现了这些优势,在图像理解任务中取得了令人印象深刻的结果(Wang et al., 2025),并为病理学等复杂领域的预训练提供了支持(Chen et al., 2024)。用SSL训练的模型表现出额外的理想特性:它们对输入分布偏移具有鲁棒性,提供强大的全局和局部特征,并生成有助于物理场景理解的丰富嵌入。由于SSL模型不是为任何特定的下游任务训练的,它们产生多功能且鲁棒的通用特征。例如,DINOv2模型在不需要任务特定微调的情况下,在各种任务和领域中提供强大的性能,允许单个冻结的骨干网络服务于多种目的。重要的是,自监督学习特别适合在病理学(Vorontsov et al., 2024)、生物学(Kim et al., 2025)、医学成像(PérezGarcía et al., 2025)、遥感(Cong et al., 2022; Tolan et al., 2024)、天文学(Parker et al., 2024)或高能粒子物理学(Dillon et al., 2022)等领域的大量可用观测数据上进行训练。这些领域通常缺乏元数据,并且已经被证明受益于像DINOv2这样的基础模型。最后,不需要人工干预的SSL非常适合在不断增长的网络数据量中进行终身学习。

【解析】基础模型的核心价值在于其"一次训练,处处使用"的特性。随着互联网数据的持续增长,模型需要能够不断适应新的数据分布和视觉概念,而自监督学习的无监督特性使这种持续学习成为可能。

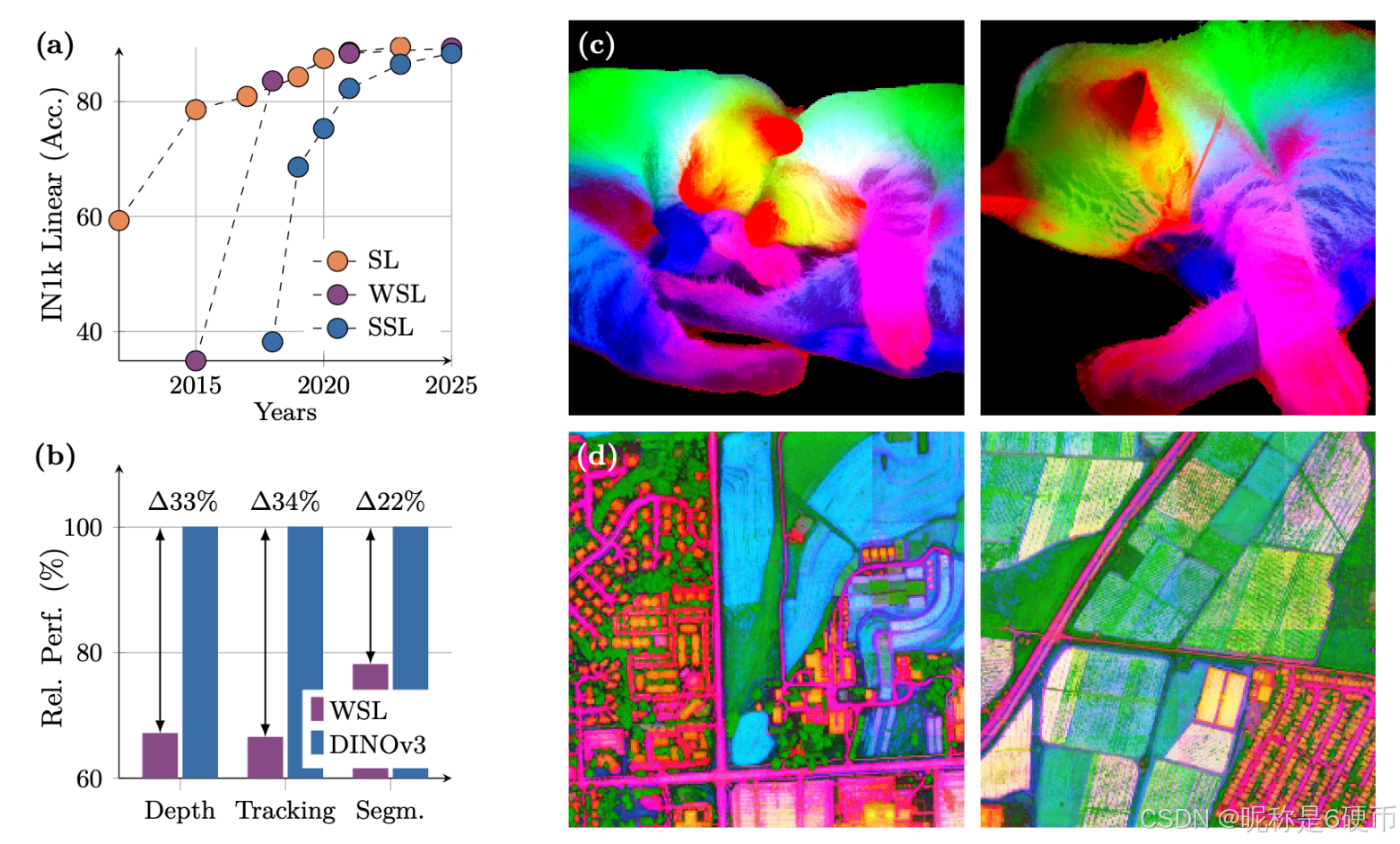

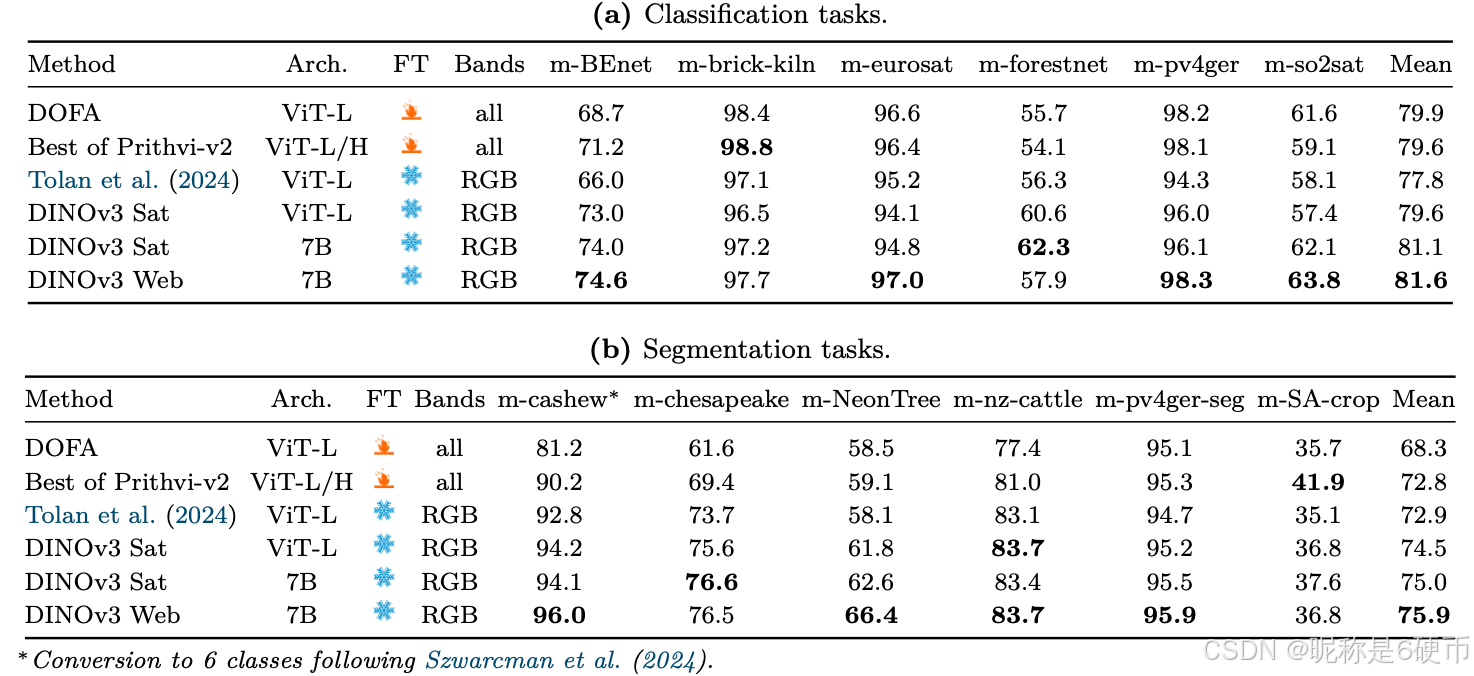

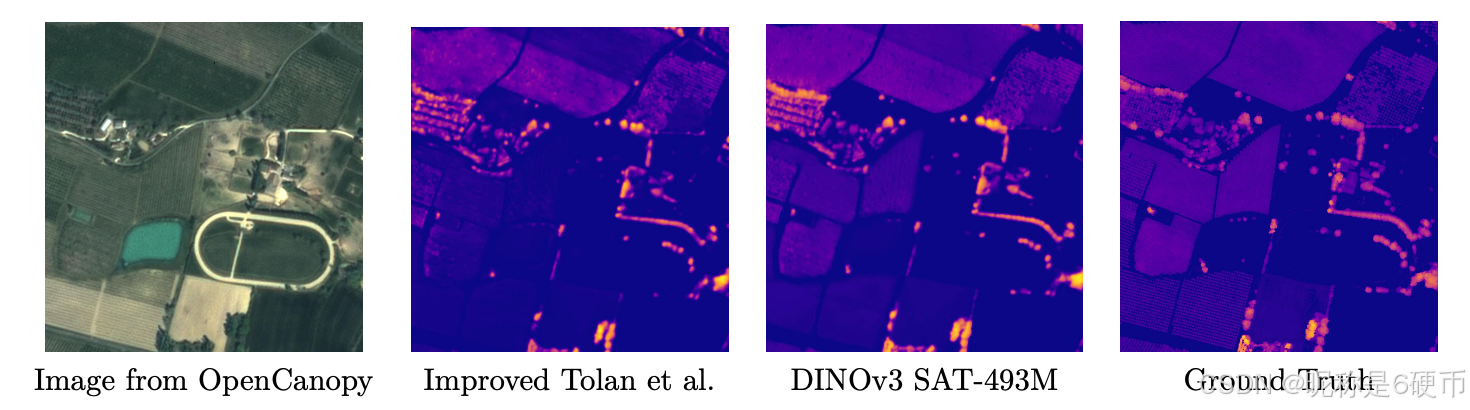

Figure 1: (a) Evolution of linear probing results on ImageNet1k (IN1k) over the years, comparing fully(SL), weakly- (WSL) and self-supervised learning (SSL) methods. Despite coming into the picture later, SSL has quickly progressed and now reached the Imagenet accuracy plateau of recent years. On the other hand, we demonstrate that SSL offers the unique promise of high-quality dense features. With DINOv3, we markedly improve over weakly-supervised models on dense tasks, as shown by the relative performance of the best-in-class WSL models to DINOv3 (b). We also produce PCA maps of features obtained from high resolution images with DINOv3 trained on natural © and aerial images (d).

【翻译】图1:(a) 多年来ImageNet1k (IN1k)上线性探测结果的演变,比较了全监督(SL)、弱监督(WSL)和自监督学习(SSL)方法。尽管SSL出现较晚,但它快速发展,现在已经达到了近年来ImageNet准确率的平台期。另一方面,我们证明SSL提供了高质量密集特征的独特优势。通过DINOv3,我们在密集任务上显著超越了弱监督模型,如最佳WSL模型与DINOv3的相对性能所示(b)。我们还生成了从DINOv3在自然图像©和航拍图像(d)上训练得到的高分辨率图像特征的PCA图。

【解析】这个图表展示了自监督学习发展的技术突破。线性探测是评估预训练模型质量的标准方法,它将预训练的特征提取器冻结,只训练一个简单的线性分类器来完成下游任务。能够直接反映预训练模型学到的表示质量,而不受下游任务特定优化的影响。图中显示的演变过程反映了深度学习发展的三个主要阶段:全监督学习依赖大量人工标注数据,在早期取得了显著成功;弱监督学习利用图像与文本的配对关系,通过CLIP等模型实现了新的突破;自监督学习虽然起步较晚,但通过巧妙的任务设计避免了对标注数据的依赖,最终在性能上追平甚至超越了其他方法。密集特征质量的提升是DINOv3的核心优势。传统的视觉模型往往专注于全局图像理解,在提取局部细节特征时表现不佳。密集特征指的是模型对图像中每个位置都能产生有意义的特征表示,这对于语义分割、目标检测、深度估计等需要精确空间定位的任务至关重要。PCA可视化展示了模型学到的特征在语义上的连贯性和空间上的精确性,不同颜色区域对应不同的语义概念,颜色边界与实际物体边界的吻合程度反映了特征质量的高低。

In practice, the promise of SSL, namely producing arbitrarily large and powerful models by leveraging large amounts of unconstrained data, remains challenging at scale. While model instabilities and collapse are mitigated by the heuristics proposed by Oquab et al. ( 2024 ), more problems emerge from scaling further. First, it is unclear how to collect useful data from unlabeled collections. Second, in usual training practice, employing cosine schedules implies knowing the optimization horizon a priori, which is difficult when training on large image corpora. Third, the performance of the features gradually decreases after early training, confirmed by visual inspection of the patch similarity maps. This phenomenon appears in longer training runs with models above ViT-Large size (300M parameters), reducing the usefulness of scaling DINOv2.

【翻译】在实践中,SSL的潜力,即通过利用大量无约束数据来产生任意大型和强大的模型,在规模化时仍然具有挑战性。虽然Oquab等人(2024)提出的启发式方法缓解了模型不稳定性和崩溃问题,但进一步扩展时会出现更多问题。首先,如何从未标记的集合中收集有用数据尚不清楚。其次,在通常的训练实践中,采用余弦调度需要先验地知道优化视界,这在大型图像语料库上训练时很困难。第三,特征的性能在早期训练后逐渐下降,这通过补丁相似性图的视觉检查得到了证实。这种现象出现在ViT-Large规模以上(300M参数)模型的长期训练运行中,降低了扩展DINOv2的有用性。

【解析】这段话表明了自监督学习规模化过程中面临的三个技术挑战。数据收集的困难在于无标注数据的质量参差不齐,需要设计有效的数据筛选和预处理策略来确保训练数据的多样性和代表性,同时避免噪声数据对模型性能的负面影响。优化调度的问题源于深度学习训练的复杂性。余弦学习率调度是一种常用的学习率衰减策略,它要求预先设定总的训练步数,然后按照余弦函数的形状逐渐降低学习率。但在大规模数据集上,很难准确估计达到收敛所需的训练时间,这使得制定有效的学习率调度变得困难。最关键的问题是特征质量的退化现象。在长期训练过程中,模型虽然在全局任务上表现持续改善,但局部特征的质量却开始下降。这种现象可以通过补丁相似性图观察到:高质量的特征应该让语义相似的图像区域在特征空间中距离较近,而语义不同的区域距离较远。当这种相似性模式变得模糊或混乱时,说明模型的密集特征表示能力在退化。这个问题在大模型中更加严重,因为更多的参数和更长的训练时间放大了这种退化效应,这也是为什么简单地扩大DINOv2模型规模无法带来预期收益的根本原因。

Addressing the problems above leads to this work, DINOv3 , which advances SSL training at scale. We demonstrate that a single frozen SSL backbone can serve as a universal visual encoder that achieves stateof-the-art performance on challenging downstream tasks, outperforming supervised and metadata-reliant pre-training strategies. Our research is guided by the following objectives: (1) training a foundational model versatile across tasks and domains, (2) improving the shortcomings of existing SSL models on dense features, (3) disseminating a family of models that can be used off-the-shelf. We discuss the three aims in the following.

【翻译】解决上述问题导致了这项工作,DINOv3,它推进了SSL的大规模训练。我们证明了单个冻结的SSL骨干网络可以作为通用视觉编码器,在具有挑战性的下游任务上达到最先进的性能,超越了监督学习和依赖元数据的预训练策略。我们的研究遵循以下目标:(1) 训练一个跨任务和领域的通用基础模型,(2) 改善现有SSL模型在密集特征方面的不足,(3) 传播一系列可以开箱即用的模型。我们在下文中讨论这三个目标。

【解析】通用视觉编码器的实现需要模型具备强大的特征抽象能力,能够从原始像素数据中提取出既包含全局语义信息又保留局部细节的多层次表示。这种表示需要具备足够的普适性,使得同一套特征能够同时支持图像分类、目标检测、语义分割、深度估计等多种视觉任务。第一个目标强调了基础模型的跨域泛化能力,这要求模型不仅能在自然图像上表现优异,还能适应医学影像、卫星图像、显微镜图像等专业领域的数据分布。第二个目标针对密集特征质量的提升,密集特征指模型对图像中每个空间位置都能产生有意义的特征表示,这对于需要精确空间定位的任务至关重要。现有SSL模型往往在长期训练过程中出现密集特征退化现象,导致局部细节信息丢失。第三个目标通过提供不同规模的预训练模型来满足不同计算资源约束下的应用需求,降低先进视觉技术的使用门槛。

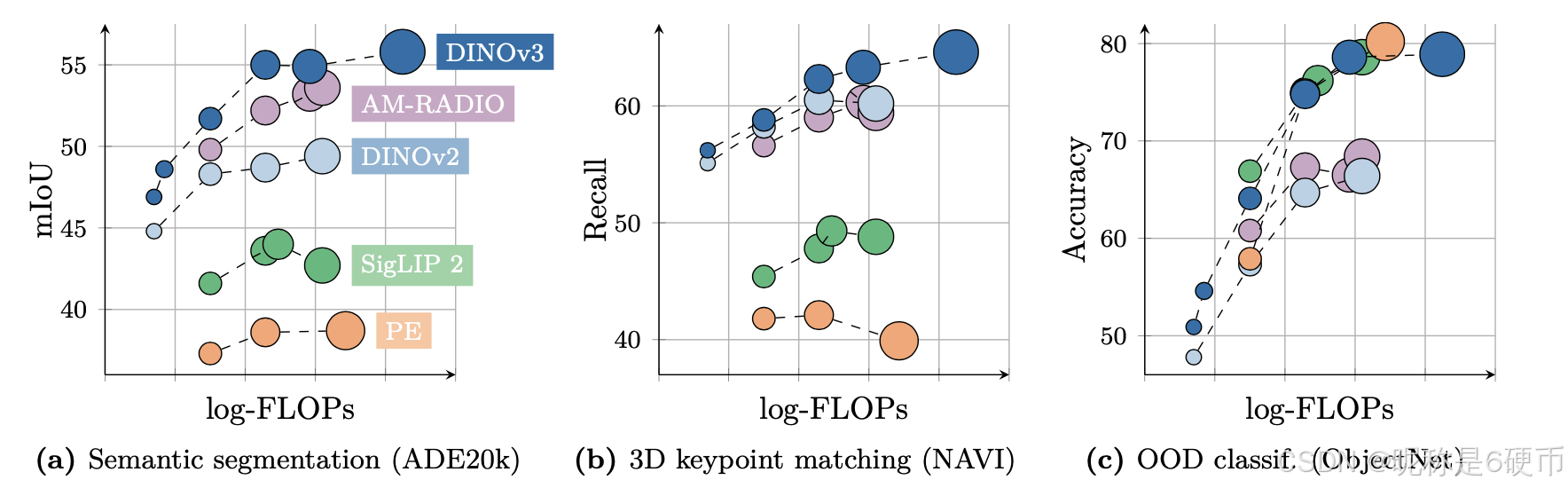

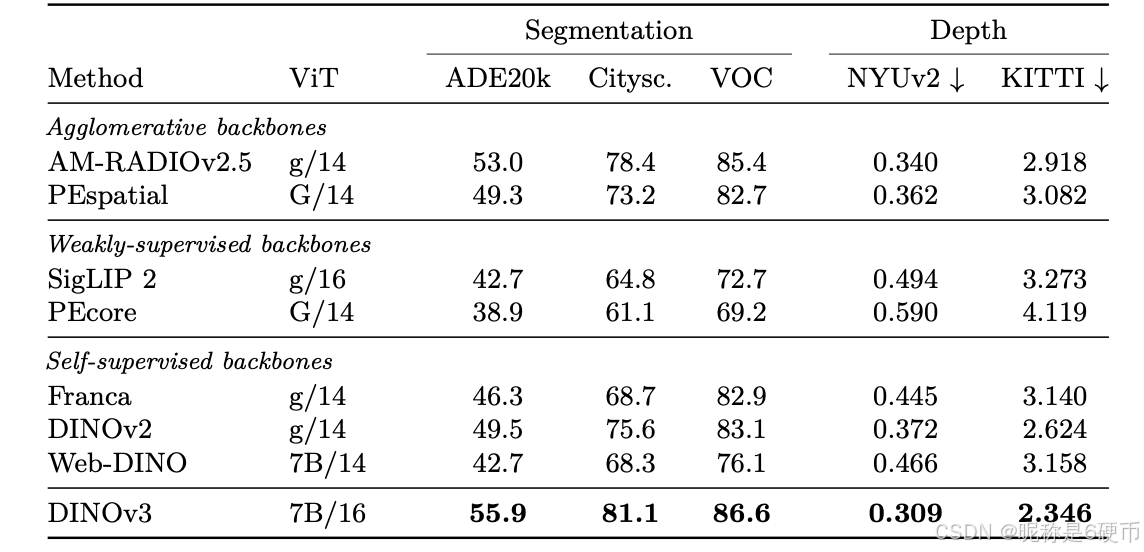

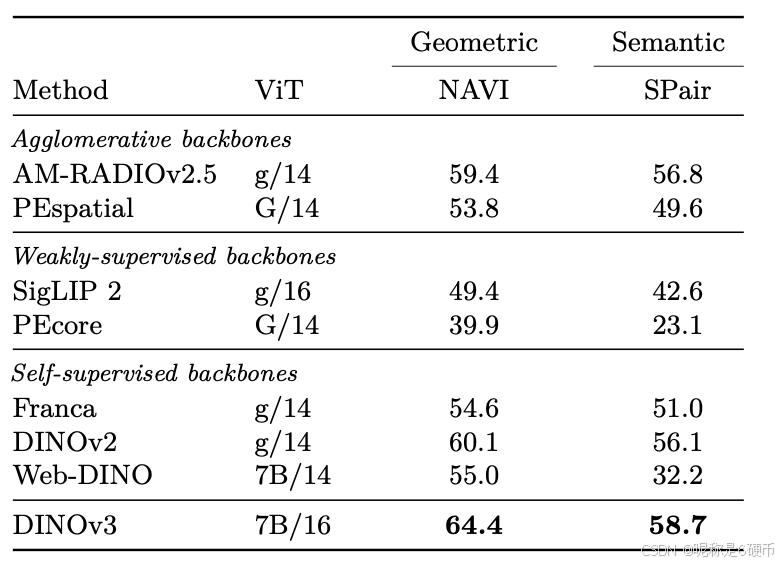

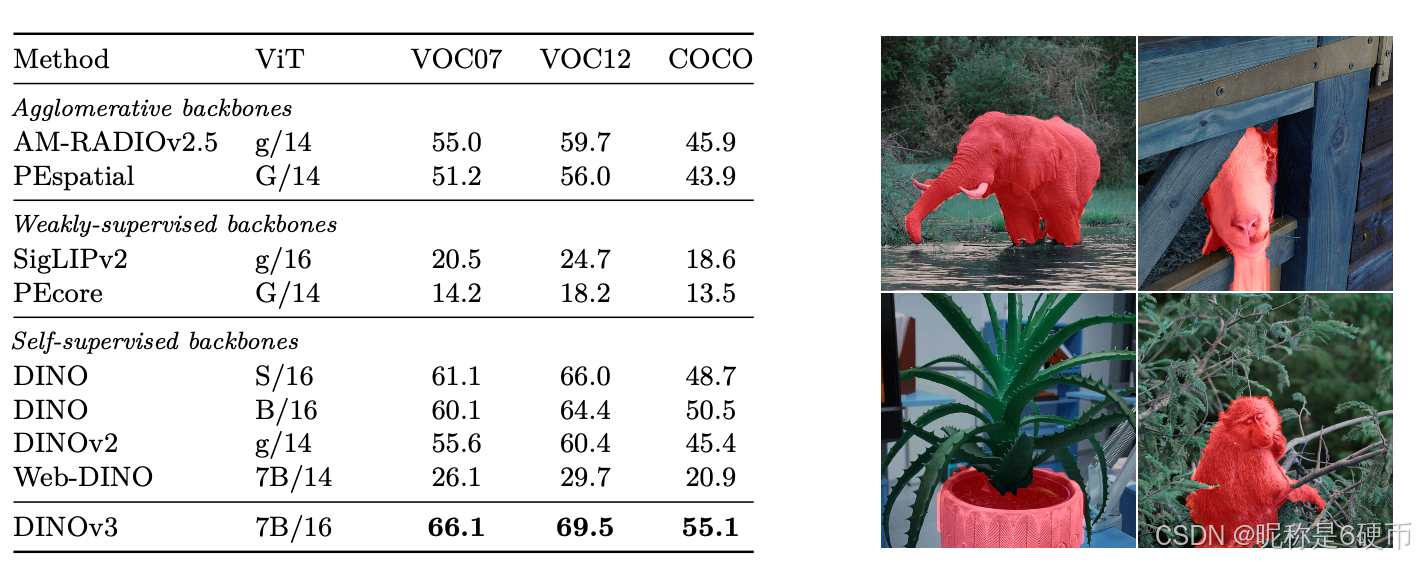

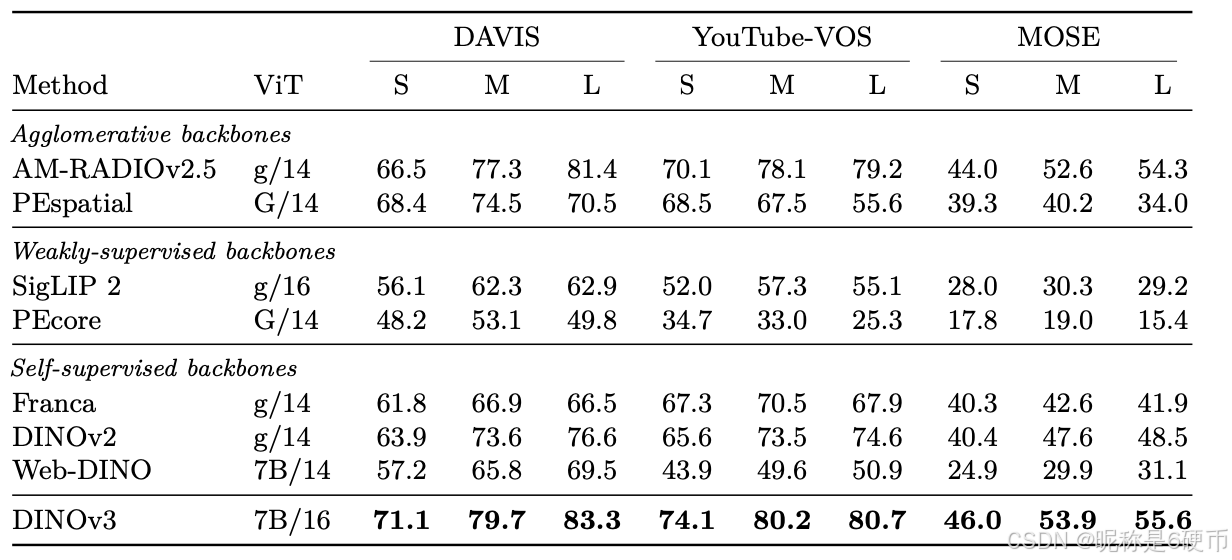

Figure 2: Performance of the DINOv3 family of models, compared to other families of self- or weaklysupervised models, on different benchmarks. DINOv3 significantly surpasses others on dense benchmarks, including models that leverage mask annotation priors such as AM-RADIO ( Heinrich et al. , 2025 ).

【翻译】图2:DINOv3模型家族与其他自监督或弱监督模型家族在不同基准测试上的性能比较。DINOv3在密集基准测试上显著超越其他模型,包括利用掩码标注先验的模型,如AM-RADIO (Heinrich et al., 2025)。

【解析】性能对比图展示了DINOv3在密集预测任务上的显著优势。密集基准测试任务对模型的局部特征表示能力要求极高,需要模型能够准确捕捉物体边界、纹理细节和空间关系。AM-RADIO等模型利用掩码标注先验,即通过人工标注的分割掩码来指导训练过程,虽然能够提供精确的空间定位信息,但需要大量的人工标注成本。DINOv3能够在完全无监督的情况下超越这些利用额外监督信号的方法,说明其自监督学习算法在特征学习上的有效性。

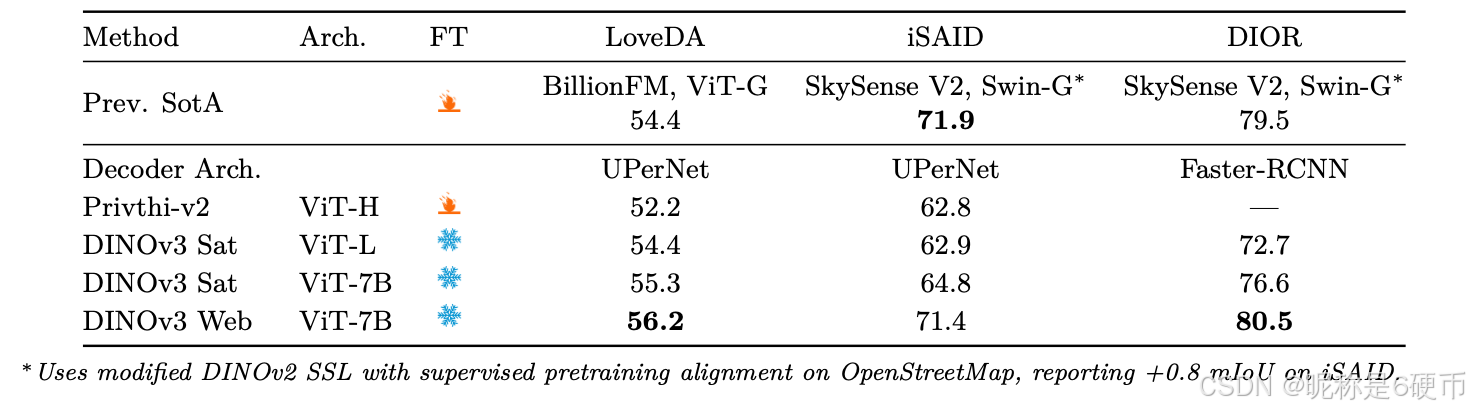

Strong & Versatile Foundational Models DINOv3 aims to offer a high level of versatility along two axes, which is enabled by the scaling of the model size and training data. First, a key desirable property for SSL models is to achieve excellent performance while being kept frozen, ideally reaching similar stateof-the-art results as specialized models. In that case, a single forward pass can deliver cutting-edge results across multiple tasks, leading to substantial computational savings—an essential advantage for practical applications, particularly on edge devices. We show the wide breadth of tasks that DINOv3 can successfully be applied to in Sec. 6 . Second, a scalable SSL training pipeline that does not depend on metadata unlocks numerous scientific applications. By pre-training on a diverse set of images, whether web images or observational data, SSL models generalize across a large set of domains and tasks. As illustrated in Fig. 1 (d), the PCA of DINOv3 features extracted from a high-resolution aerial image clearly allows to separates roads, houses, and greenery, highlighting the model’s feature quality.

【翻译】强大且多功能的基础模型 DINOv3旨在沿着两个轴提供高水平的多功能性,这通过模型大小和训练数据的扩展得以实现。首先,SSL模型的一个关键理想特性是在保持冻结状态下实现出色性能,理想情况下达到与专业模型相似的最先进结果。在这种情况下,单次前向传播可以在多个任务中提供前沿结果,从而带来大量的计算节省——这对于实际应用来说是一个重要优势,特别是在边缘设备上。我们在第6节中展示了DINOv3可以成功应用的任务的广泛范围。其次,不依赖元数据的可扩展SSL训练管道解锁了众多科学应用。通过在多样化的图像集合上进行预训练,无论是网络图像还是观测数据,SSL模型都能在大量领域和任务中泛化。如图1(d)所示,从高分辨率航拍图像中提取的DINOv3特征的PCA清楚地允许分离道路、房屋和绿地,突出了模型的特征质量。

【解析】DINOv3的多功能性设计体现在两个维度上。第一个维度是"冻结骨干网络"的通用性。第二个维度是跨领域的泛化能力。

Superior Feature Maps Through Gram Anchoring Another key feature of DINOv3 is a significant improvement of its dense feature maps. The DINOv3 SSL training strategy aims at producing models excelling at high-level semantic tasks while producing excellent feature maps amenable to solving geometric tasks such as depth estimation, or 3D matching. In particular, the models should produce dense features that can be used off-the-shelf or with little post-processing. The compromise between dense and global representation is especially difficult to optimize when training with vast amounts of images, since the objective of high-level understanding can conflict with the quality of the dense feature maps. These contradictory objectives lead to a collapse of dense features with large models and long training schedules. Our new Gram anchoring strategy effectively mitigates this collapse (see Sec. 4 ). As a result, DINOv3 obtains significantly better dense feature maps than DINOv2, staying clean even at high resolutions (see Fig. 3 ).

【翻译】通过Gram锚定实现优质特征图 DINOv3的另一个关键特性是其密集特征图的显著改进。DINOv3 SSL训练策略旨在产生在高级语义任务中表现出色的模型,同时产生适合解决诸如深度估计或3D匹配等几何任务的优秀特征图。特别是,模型应该产生可以开箱即用或只需很少后处理的密集特征。当使用大量图像进行训练时,密集表示和全局表示之间的妥协特别难以优化,因为高级理解的目标可能与密集特征图的质量冲突。这些矛盾的目标导致大型模型和长期训练计划中密集特征的崩溃。我们新的Gram锚定策略有效地缓解了这种崩溃(见第4节)。因此,DINOv3获得了比DINOv2显著更好的密集特征图,即使在高分辨率下也保持清晰(见图3)。

【解析】密集特征图质量的提升是DINOv3的核心技术突破之一。在计算机视觉中,模型需要同时具备两种不同层次的理解能力:全局语义理解和局部几何理解。全局语义理解关注的是"这是什么"的问题,比如识别图像中包含的物体类别、场景类型等高层语义信息;而局部几何理解则关注"在哪里"和"什么形状"的问题,需要对图像中每个像素位置都能产生有意义的特征表示,这对于深度估计、三维重建、精确分割等任务至关重要。传统上,这两个目标存在天然的冲突:为了获得更好的全局语义理解,模型倾向于学习更加抽象和概括的特征表示,这个过程往往会丢失局部的细节信息;而保持局部细节信息则可能影响模型对全局语义的抽象能力。在大规模长期训练过程中,这种冲突会导致"密集特征崩溃"现象——模型的密集特征图逐渐失去空间精确性和语义一致性,变得模糊和不可用。Gram锚定技术通过引入特定的正则化机制来解决这个问题。Gram矩阵原本是用来描述特征之间相关性的数学工具,在这里被用作锚定点,确保训练过程中密集特征的质量不会随着训练的进行而退化。这种方法使得DINOv3能够在保持强大全局理解能力的同时,产生高质量的密集特征图,这些特征图即使在高分辨率输入下也能保持清晰和准确。

The DINOv3 Family of Models Solving the degradation of dense feature map with Gram anchoring unlocks the power of scaling. As a consequence, training a much larger model with SSL leads to significant performance improvements. In this work, we successfully train a DINO model with 7B parameters. Since such a large model requires significant resources to run, we apply distillation to compress its knowledge into smaller variants. As a result, we present the DINOv3 family of vision models , a comprehensive suite designed to address a wide spectrum of computer vision challenges. This model family aims to advance the state of the art by offering scalable solutions adaptable to diverse resource constraints and deployment scenarios. The distillation process produces model variants at multiple scales, including Vision Transformer (ViT) Small, Base, and Large, as well as ConvNeXt-based architectures. Notably, the efficient and widely adopted ViT-L model achieves performance close to that of the original 7B teacher across a variety of tasks. Overall, the DINOv3 family demonstrates strong performance on a broad range of benchmarks, matching or exceeding the accuracy of competing models on global tasks, while significantly outperforming them on dense prediction tasks, as visible in Fig. 2 .

【翻译】DINOv3模型系列:通过Gram锚定解决密集特征图退化问题释放了缩放的力量。因此,使用SSL训练更大的模型带来了显著的性能改进。在这项工作中,我们成功训练了一个具有70亿参数的DINO模型。由于如此大的模型需要大量资源来运行,我们应用蒸馏将其知识压缩到较小的变体中。因此,我们提出了DINOv3视觉模型系列,这是一个旨在解决广泛的计算机视觉挑战的综合套件。该模型系列旨在通过提供适应不同资源约束和部署场景的可扩展解决方案来推进最先进技术。蒸馏过程产生了多个规模的模型变体,包括Vision Transformer (ViT) Small、Base和Large,以及基于ConvNeXt的架构。值得注意的是,高效且广泛采用的ViT-L模型在各种任务上实现了接近原始70亿参数教师模型的性能。总体而言,DINOv3系列在广泛的基准测试中表现出强劲的性能,在全局任务上匹配或超越竞争模型的准确性,同时在密集预测任务上显著超越它们,如图2所示。

【解析】自监督学习模型中,当模型规模超过一定阈值并进行长期训练时,密集特征图会出现质量退化现象,限制模型扩展的收益。Gram锚定通过特定的正则化机制保持特征图的内在结构稳定性,使得更大规模的模型训练成为可能。70亿参数模型在实际部署中面临巨大的计算资源挑战,知识蒸馏成为解决这一矛盾的关键手段,通过让小模型学习大模型的预测行为和内部表示,在保持性能的同时大幅降低计算成本。蒸馏过程不仅仅是简单的参数压缩,而是一种知识传递过程,大模型作为教师网络指导小模型的训练,使小模型能够获得超越其自身容量的表达能力。DINOv3模型通过提供从Small到Large的多个规模变体,用户可以根据具体的资源约束和性能需求选择最适合的模型。

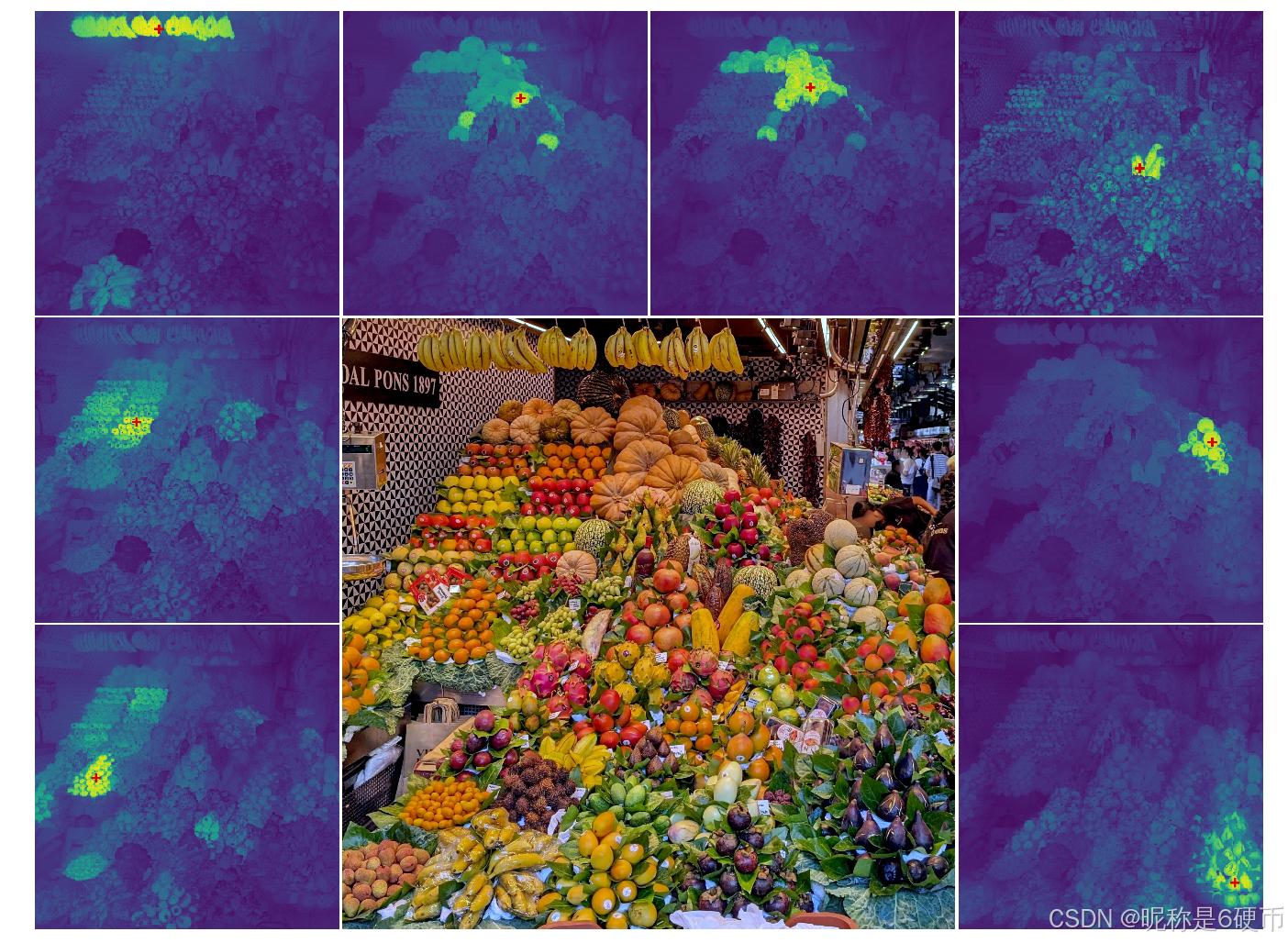

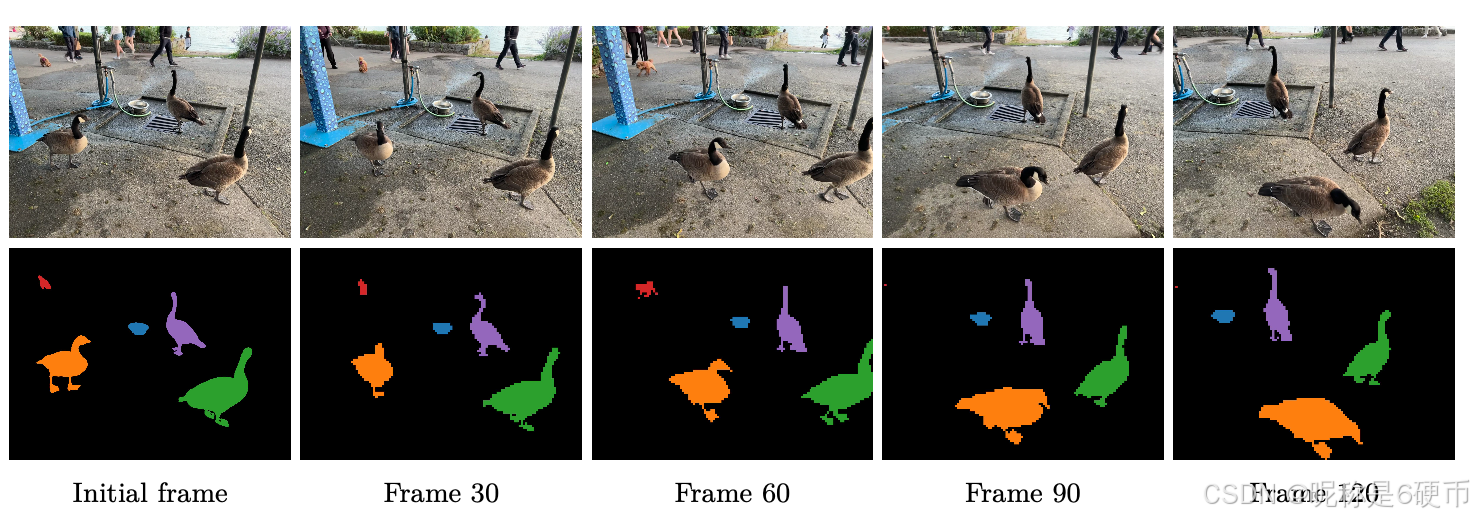

Figure 3: High-resolution dense features. We visualize the cosine similarity maps obtained with DINOv3 output features between the patches marked with a red cross and all other patches. Input image at 4096×40964096\times40964096×4096 . Please zoom in , do you agree with DINOv3?

【翻译】图3:高分辨率密集特征。我们可视化了使用DINOv3输出特征在标有红十字的补丁与所有其他补丁之间获得的余弦相似性图。输入图像为4096×40964096\times40964096×4096。请放大查看,你同意DINOv3的结果吗?

【解析】这个可视化展示了DINOv3在超高分辨率图像上的密集特征提取能力。4096×40964096\times40964096×4096属于极高分辨率,余弦相似性度量用于评估特征质量,计算两个特征向量之间的夹角余弦值,值越接近1说明特征越相似,越接近0说明差异越大。通过选择图像中的特定位置(红十字标记)作为查询点,然后计算该位置的特征与图像中所有其他位置特征的相似性,可以直观地观察模型对语义概念的理解程度。高质量的视觉特征应该能够识别出语义相似的区域,比如同一个物体的不同部分、相似的纹理或材质等。可视化结果中相似性的空间分布模式能够反映模型是否真正理解了图像的语义结构,而不是仅仅基于低级的颜色或纹理特征进行匹配。高分辨率下的特征一致性对于需要精确空间定位的任务极其重要,它能够支持精细的图像分析和编辑应用。

Overview of Contributions In this work, we introduce multiple contributions to address the challenge of scaling SSL towards a large frontier model. We build upon recent advances in automatic data curation ( Vo et al. , 2024 ) to obtain a large “background” training dataset that we carefully mix with a bit of specialized data (ImageNet-1k). This allows leveraging large amounts of unconstrained data to improve the model performance. This contribution (i) around data scaling will be described in Sec. 3.1 .

We increase our main model size to 7B parameters by defining a custom variant of the ViT architecture. We include modern position embeddings (axial RoPE) and develop a regularization technique to avoid positional artifacts. Departing from the multiple cosine schedules in DINOv2, we train with constant hyperparameter schedules for 1M iterations. This allows producing models with stronger performance. This contribution (ii) on model architecture and training will be described in Sec. 3.2 .

With the above techniques, we are able to train a model following the DINOv2 algorithm at scale. However, as mentioned previously, scale leads to a degradation of dense features. To address this, we propose a core improvement of the pipeline with a Gram anchoring training phase. This cleans the noise in the feature maps, leading to impressive similarity maps, and drastically improving the performance on both parametric and non-parametric dense tasks. This contribution (iii) on Gram training will be described in Sec. 4 .

【翻译】贡献概述 在这项工作中,我们引入了多项贡献来解决将SSL扩展到大型前沿模型的挑战。我们基于自动数据策划的最新进展(Vo等人,2024)来获得一个大型"背景"训练数据集,我们将其与少量专业数据(ImageNet-1k)仔细混合。这允许利用大量无约束数据来改善模型性能。关于数据扩展的贡献(i)将在第3.1节中描述。

我们通过定义ViT架构的自定义变体将主模型大小增加到70亿参数。我们包括现代位置嵌入(轴向RoPE)并开发了一种正则化技术来避免位置伪影。与DINOv2中的多个余弦调度不同,我们使用恒定超参数调度训练100万次迭代。这允许产生性能更强的模型。关于模型架构和训练的贡献(ii)将在第3.2节中描述。

通过上述技术,我们能够大规模地训练遵循DINOv2算法的模型。然而,如前所述,规模导致密集特征的退化。为了解决这个问题,我们提出了使用Gram锚定训练阶段对管道的核心改进。这清理了特征图中的噪声,产生了令人印象深刻的相似性图,并大幅改善了参数化和非参数化密集任务的性能。关于Gram训练的贡献(iii)将在第4节中描述。

【解析】总结了DINOv3的三个技术贡献,每个贡献都针对自监督学习规模化过程中的难题。第一个贡献解决的是数据质量与数量的平衡问题。在大规模训练中,简单地增加数据量并不能保证模型性能的提升,因为网络数据的质量参差不齐,包含大量噪声和冗余信息。自动数据策划技术通过算法自动筛选和组织训练数据,确保数据的多样性和代表性。将大规模"背景"数据与精心策划的ImageNet-1k数据混合,既保证了数据的广度又确保了质量,混合策略能够让模型在保持泛化能力的同时获得更好的性能。第二个贡献涉及模型架构的创新设计。将模型规模扩展到70亿参数是一个巨大的技术挑战,需要解决计算效率、训练稳定性和位置编码等多个问题。轴向RoPE(Rotary Position Embedding)是一种先进的位置编码方法,能够更好地处理不同长度和分辨率的输入,同时保持计算效率。位置伪影是指由于位置编码不当导致的模型对图像中特定位置产生偏见的现象,正则化技术的引入有效缓解了这个问题。恒定超参数调度相比于复杂的余弦调度更加稳定,避免了学习率变化过于复杂导致的训练不稳定性。第三个贡献是最为关键的Gram锚定技术。在大规模训练过程中,虽然全局特征质量持续改善,但密集特征往往会出现退化现象,表现为特征图中出现噪声、空间一致性下降等问题。Gram矩阵能够捕捉特征之间的二阶统计信息,通过锚定机制确保特征图的空间一致性和语义连贯性,从而解决了规模化训练中密集特征退化的核心问题。

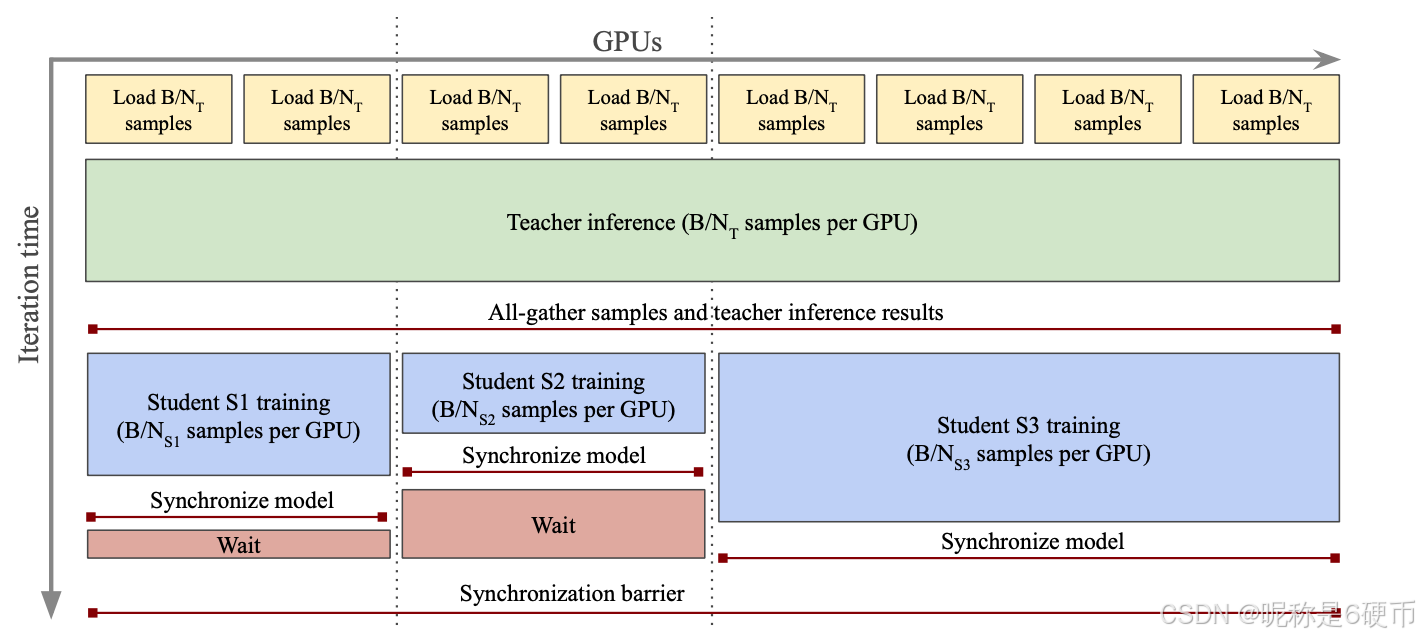

Following previous practice, the last steps of our pipeline consist of a high-resolution post-training phase and distillation into a series of high-performance models of various sizes. For the latter, we develop a novel and efficient single-teacher multiple-students distillation procedure. This contribution (iv) transfers the power of our 7B frontier model to a family of smaller practical models for common usage, that we describe in Sec. 5.2 .

【翻译】按照以往的做法,我们管道的最后步骤包括高分辨率后训练阶段和蒸馏成一系列不同大小的高性能模型。对于后者,我们开发了一种新颖且高效的单教师多学生蒸馏程序。这一贡献(iv)将我们70亿参数前沿模型的能力转移到一系列较小的实用模型中,供常见使用,我们在第5.2节中描述。

【解析】描述了DINOv3训练流程的最终阶段。高分辨率后训练是在主要训练完成后进行的额外优化步骤,专门针对高分辨率输入进行模型调优,确保模型在处理高分辨率图像时仍能保持优秀的特征提取能力。通过单教师多学生的蒸馏策略,可以同时训练多个不同规模的学生模型,这些学生模型在保持相对较小计算量的同时,能够学习到教师模型的核心能力。

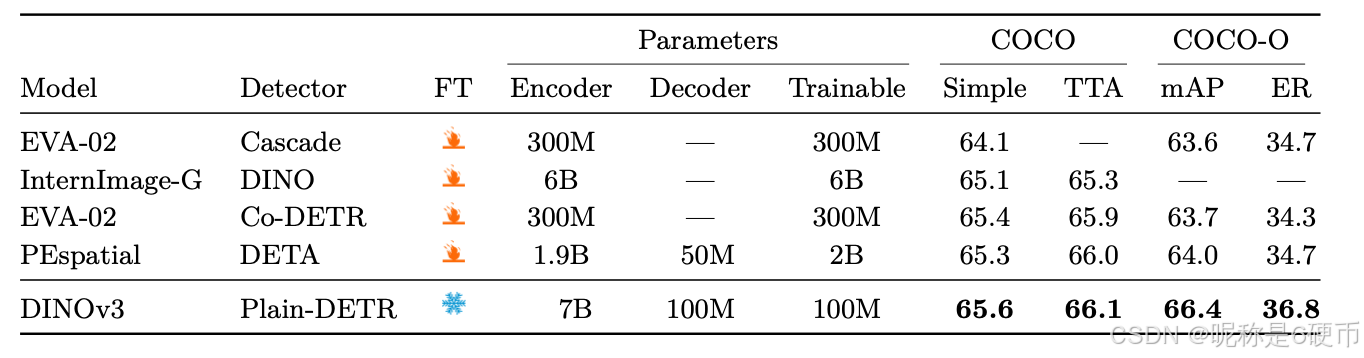

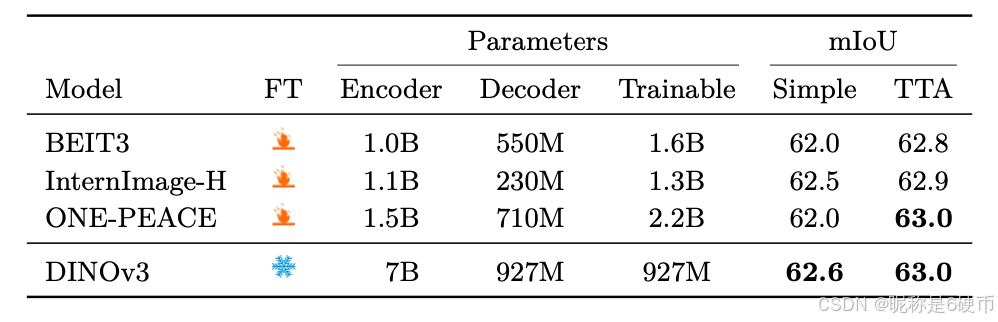

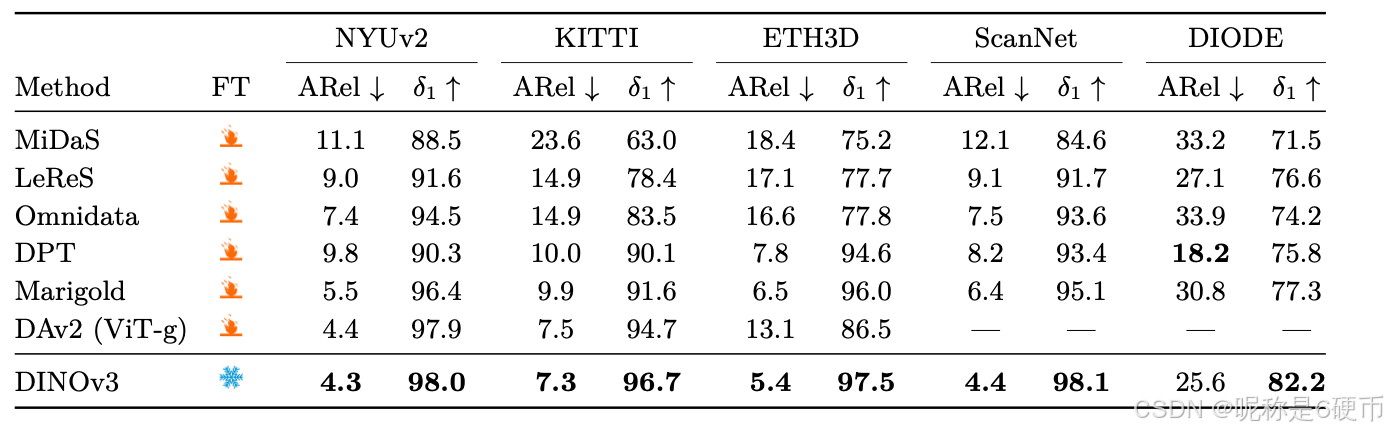

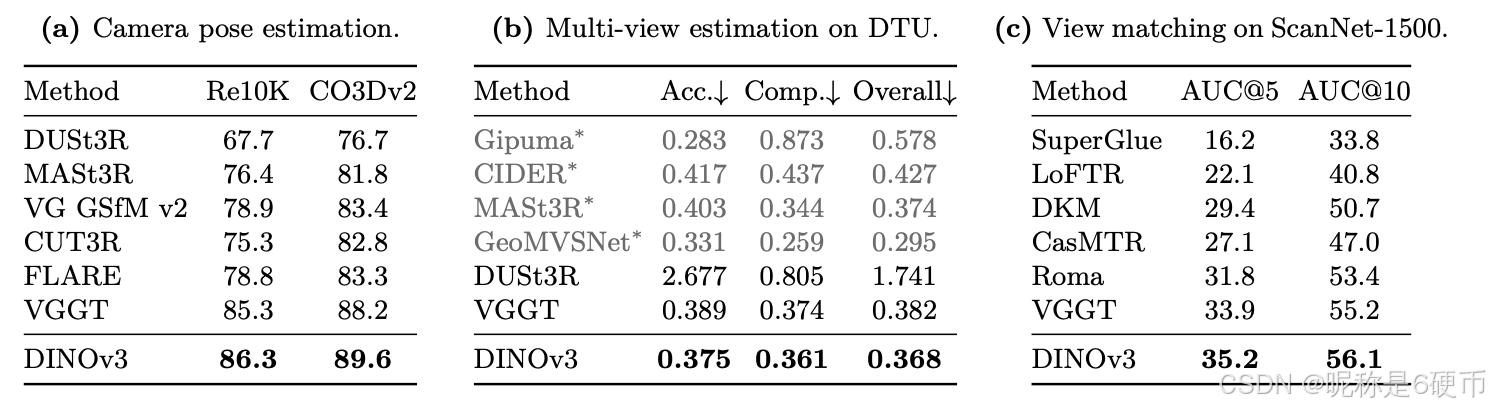

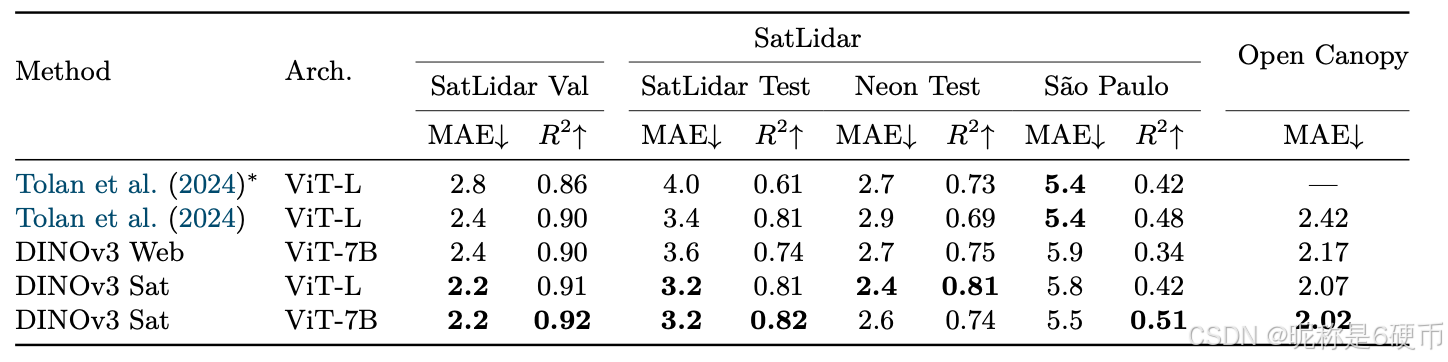

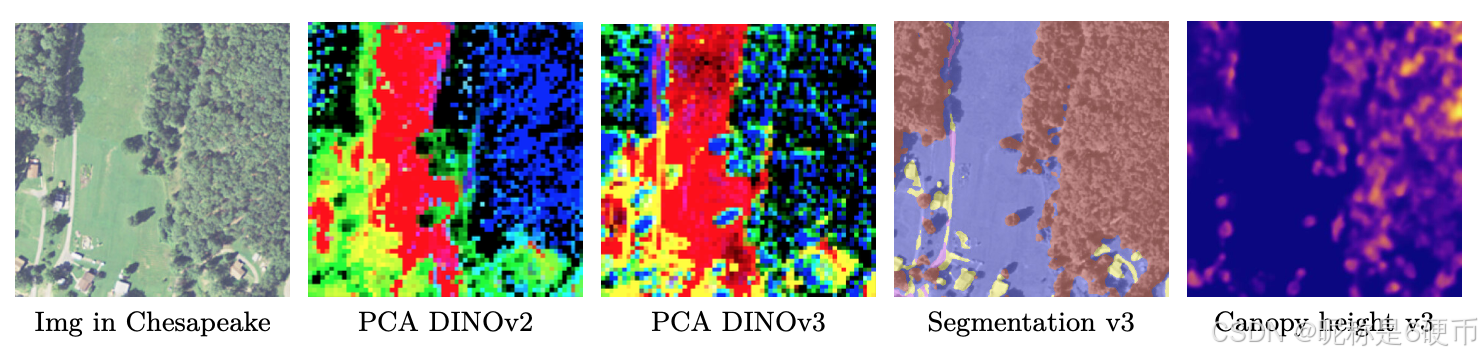

As measured in our thorough benchmarking, results in Sec. 6 show that our approach defines a new standard in dense tasks and performs comparably to CLIP derivatives on global tasks. In particular, with a frozen vision backbone , we achieve state-of-the-art performance on longstanding computer vision problems such as object detection (COCO detection, mAP 66.1) and image segmentation (ADE20k, mIoU 63.0), outperforming specialized fine-tuned pipelines. Moreover, we provide evidence of the generality of our approach across domains by applying the DINOv3 algorithm to satellite imagery, in Sec. 8 , surpassing all prior approaches.

【翻译】通过我们全面的基准测试,第6节的结果表明我们的方法在密集任务上定义了新标准,并在全局任务上与CLIP衍生模型表现相当。特别是,使用冻结的视觉骨干网络,我们在长期存在的计算机视觉问题上实现了最先进的性能,如目标检测(COCO检测,mAP 66.1)和图像分割(ADE20k,mIoU 63.0),超越了专门的微调管道。此外,我们通过在第8节将DINOv3算法应用于卫星图像,提供了我们方法跨领域通用性的证据,超越了所有先前的方法。

2 Related Work

Self-Supervised Learning Learning without annotations requires an artificial learning task that provides supervision in lieu for training. The art and challenge of SSL lies in carefully designing these so-called pre-text tasks in order to learn powerful representations for downstream tasks. The language domain, by its discrete nature, offers straightforward ways to set up such tasks, which led to many successful unsupervised pre-training approaches for text data. Examples include word embeddings ( Mikolov et al. , 2013 ; Bojanowski et al. , 2017 ), sentence representations ( Devlin et al. , 2018 ; Liu et al. , 2019 ), and plain language models ( Mikolov et al. , 2010 ; Zaremba et al. , 2014 ). In contrast, computer vision presents greater challenges due to the continuous nature of the signal. Early attempts mimicking language approaches extracted supervisory signals from parts of an image to predict other parts, e.g . by predicting relative patch position ( Doersch et al. , 2015 ), patch re-ordering ( Noroozi and Favaro , 2016 ; Misra and Maaten , 2020 ), or inpainting ( Pathak et al. , 2016 ). Other tasks involve re-colorizing images ( Zhang et al. , 2016 ) or predicting image transformations ( Gidaris et al. , 2018 ).

【翻译】自监督学习:无标注学习需要一个人工学习任务来代替训练中的监督信号。SSL的艺术和挑战在于精心设计这些所谓的代理任务,以便为下游任务学习强大的表示。语言领域由于其离散性质,提供了设置此类任务的直接方式,这导致了许多成功的文本数据无监督预训练方法。示例包括词嵌入(Mikolov等人,2013;Bojanowski等人,2017)、句子表示(Devlin等人,2018;Liu等人,2019)和普通语言模型(Mikolov等人,2010;Zaremba等人,2014)。相比之下,计算机视觉由于信号的连续性质而面临更大的挑战。早期模仿语言方法的尝试从图像的一部分提取监督信号来预测其他部分,例如通过预测相对补丁位置(Doersch等人,2015)、补丁重新排序(Noroozi和Favaro,2016;Misra和Maaten,2020)或修复(Pathak等人,2016)。其他任务涉及重新着色图像(Zhang等人,2016)或预测图像变换(Gidaris等人,2018)。

Among these tasks, inpainting-based approaches have gathered significant interest thanks to the flexibility of the patch-based ViT architecture ( He et al. , 2021 ; Bao et al. , 2021 ; El-Nouby et al. , 2021 ). The objective is to reconstruct corrupted regions of an image, which can be viewed as a form of denoising auto-encoding and is conceptually related to the masked token prediction task in BERT pretraining ( Devlin et al. , 2018 ). Notably, He et al. ( 2021 ) demonstrated that pixel-based masked auto-encoders (MAE) can be used as strong initializations for finetuning on downstream tasks. In the following, Baevski et al. ( 2022 ; 2023 ); Assran et al. ( 2023 ) showed that predicting a learned latent space instead of the pixel space leads to more powerful, higher-level features—a learning paradigm called JEPA: “Joint-Embedding Predictive Architecture” ( LeCun , 2022 ). Recently, JEPAs have also been extended to video training ( Bardes et al. , 2024 ; Assran et al. , 2025 ).

【翻译】在这些任务中,基于修复的方法由于基于patch的ViT架构的灵活性而获得了显著关注(He等人,2021;Bao等人,2021;El-Nouby等人,2021)。目标是重建图像的损坏区域,这可以被视为一种去噪自编码形式,在概念上与BERT预训练中的掩码token预测任务相关(Devlin等人,2018)。值得注意的是,He等人(2021)证明了基于像素的掩码自编码器(MAE)可以用作下游任务微调的强初始化。接下来,Baevski等人(2022;2023);Assran等人(2023)表明,预测学习的潜在空间而不是像素空间会产生更强大、更高级的特征——这种学习范式称为JEPA:“联合嵌入预测架构”(LeCun,2022)。最近,JEPA也已扩展到视频训练(Bardes等人,2024;Assran等人,2025)。

A second line of work, closer to ours, leverages discriminative signals between images to learn visual representations. This family of methods traces its origins to early deep learning research ( Hadsell et al. , 2006 ), but gained popularity with the introduction of instance classification techniques ( Dosovitskiy et al. , 2016 ; Bojanowski and Joulin , 2017 ; Wu et al. , 2018 ). Subsequent advancements introduced contrastive objectives and information-theoretic criteria ( Hénaff et al. , 2019 ; He et al. , 2020 ; Chen and He , 2020 ; Chen et al. , 2020a ; Grill et al. , 2020 ; Bardes et al. , 2021 ), as well as self clustering-based strategies ( Caron et al. , 2018 ; Asano et al. , 2020 ; Caron et al. , 2020 ; 2021 ). More recent approaches, such as iBOT ( Zhou et al. , 2021 ), combine these discriminative losses with masked reconstruction objectives. All of these methods show the ability to learn strong features and achieve high performance on standard benchmarks like ImageNet ( Russakovsky et al. , 2015 ). However, most face challenges scaling to larger model sizes ( Chen et al. , 2021 ).

【翻译】第二类与我们工作更接近的研究利用图像间的判别信号来学习视觉表示。这一方法系列源于早期深度学习研究(Hadsell等人,2006),但随着实例分类技术的引入(Dosovitskiy等人,2016;Bojanowski和Joulin,2017;Wu等人,2018)而获得了普及。后续的进展引入了对比目标和信息论准则(Hénaff等人,2019;He等人,2020;Chen和He,2020;Chen等人,2020a;Grill等人,2020;Bardes等人,2021),以及基于自聚类的策略(Caron等人,2018;Asano等人,2020;Caron等人,2020;2021)。更近期的方法,如iBOT(Zhou等人,2021),将这些判别损失与掩码重建目标相结合。所有这些方法都显示出学习强特征并在ImageNet(Russakovsky等人,2015)等标准基准上实现高性能的能力。然而,大多数方法在扩展到更大模型规模时面临挑战(Chen等人,2021)。

Vision Foundation Models The deep learning revolution began with the AlexNet breakthrough ( Krizhevsky et al. , 2012 ), a deep convolutional neural network that outperformed all previous methods on the ImageNet challenge ( Deng et al. , 2009 ; Russakovsky et al. , 2015 ). Already early on, features learned end-to-end on the large manually-labeled ImageNet dataset were found to be highly effective for a wide range of transfer learning tasks ( Oquab et al. , 2014 ). Early work on vision foundation models then focused on architecture development, including VGG ( Simonyan and Zisserman , 2015 ), GoogleNet ( Szegedy et al. , 2015 ), and ResNets ( He et al. , 2016 ).

【翻译】视觉基础模型 深度学习革命始于AlexNet的突破(Krizhevsky等人,2012),这是一个深度卷积神经网络,在ImageNet挑战赛上超越了所有先前的方法(Deng等人,2009;Russakovsky等人,2015)。早期就发现,在大型手动标注的ImageNet数据集上端到端学习的特征对于广泛的迁移学习任务非常有效(Oquab等人,2014)。早期的视觉基础模型工作随后专注于架构开发,包括VGG(Simonyan和Zisserman,2015)、GoogleNet(Szegedy等人,2015)和ResNets(He等人,2016)。

Given the effectiveness of scaling , subsequent works explored training larger models on big datasets. Sun et al. ( 2017 ) expanded supervised training data with the proprietary JFT dataset containing 300 million labeled images, showing impressive results. JFT also enabled significant performance gains for Kolesnikov et al. ( 2020 ). In parallel, scaling was explored using a combination of supervised and unsupervised data. For instance, an ImageNet-supervised model can be used to produce pseudo-labels for unsupervised data, which then serve to train larger networks ( Yalniz et al. , 2019 ). Subsequently, the availability of large supervised datasets such as JFT also facilitated the adaptation of the transformer architecture to computer vision ( Dosovitskiy et al. , 2020 ). In particular, achieving performance comparable to that of the original vision transformer (ViT) without access to JFT requires substantial effort ( Touvron et al. , 2020 ; 2022 ). Due to the learning capacity of ViTs, scaling efforts were further extended by Zhai et al. ( 2022a ), culminating in the very large ViT-22B encoder ( Dehghani et al. , 2023 ).

【翻译】鉴于扩展的有效性,后续工作探索了在大数据集上训练更大的模型。Sun等人(2017)使用包含3亿标注图像的专有JFT数据集扩展了监督训练数据,显示出令人印象深刻的结果。JFT也为Kolesnikov等人(2020)带来了显著的性能提升。与此同时,使用监督和无监督数据组合的扩展方法也被探索。例如,ImageNet监督模型可以用于为无监督数据生成伪标签,然后用于训练更大的网络(Yalniz等人,2019)。随后,大型监督数据集如JFT的可用性也促进了transformer架构在计算机视觉中的适应(Dosovitskiy等人,2020)。特别是,在无法访问JFT的情况下实现与原始视觉transformer(ViT)相当的性能需要大量努力(Touvron等人,2020;2022)。由于ViT的学习能力,扩展努力进一步扩展(Zhai等人,2022a),最终产生了非常大的ViT-22B编码器(Dehghani等人,2023)。

Given the complexity of manually labeling large datasets, weakly-supervised training —where annotations are derived from metadata associated with images—provides an effective alternative to supervised training. Early on, Joulin et al. ( 2016 ) demonstrated that a network can be pre-trained by simply predicting all words in the image caption as targets. This initial approach was further refined by leveraging sentence structures ( Li et al. , 2017 ), incorporating other types of metadata and involve curation ( Mahajan et al. , 2018 ), and scaling ( Singh et al. , 2022 ). However, weakly-supervised algorithms only reached their full potential with the introduction of contrastive losses and the joint-training of caption representations, as exemplified by Align ( Jia et al. , 2021 ) and CLIP ( Radford et al. , 2021 ).

【翻译】鉴于手动标注大数据集的复杂性,弱监督训练——其中标注源自与图像相关的元数据——为监督训练提供了有效的替代方案。早期,Joulin等人(2016)证明了网络可以通过简单地预测图像标题中的所有单词作为目标来进行预训练。这种初始方法通过利用句子结构(Li等人,2017)、纳入其他类型的元数据并涉及策划(Mahajan等人,2018)以及扩展(Singh等人,2022)得到进一步完善。然而,弱监督算法只有在引入对比损失和标题表示的联合训练后才达到其全部潜力,如Align(Jia等人,2021)和CLIP(Radford等人,2021)所示。

This highly successful approach inspired numerous open-source reproductions and scaling efforts . OpenCLIP ( Cherti et al. , 2023 ) was the first open-source effort to replicate CLIP by training on the LAION dataset ( Schuhmann et al. , 2021 ); following works leverage pre-trained backbones by fine-tuning them in a CLIP-style manner ( Sun et al. , 2023 ; 2024 ). Recognizing that data collection is a critical factor in the success of CLIP training, MetaCLIP ( Xu et al. , 2024 ) precisely follows the original CLIP procedure to reproduce its results, whereas Fang et al. ( 2024a ) use supervised datasets to curate pretraining data. Other works focus on improving the training loss, e.g . using a sigmoid loss in SigLIP ( Zhai et al. , 2023 ), or leveraging a pre-trained image encoder ( Zhai et al. , 2022b ). Ultimately though, the most critical components for obtaining cutting-edge foundation models are abundant high-quality data and substantial compute resources. In this vein, SigLIP 2 ( Tschannen et al. , 2025 ) and Perception Encoder (PE) ( Bolya et al. , 2025 ) achieve impressive results after training on more than 40B image-text pairs. The largest PE model is trained on 86B billion samples with a global batch size of 131K. Finally, a range of more complex and natively multimodal approaches have been proposed; these include contrastive captioning ( Yu et al. , 2022 ), masked modeling in the latent space ( Bao et al. , 2021 ; Wang et al. , 2022b ; Fang et al. , 2023 ; Wang et al. , 2023a ), and auto-regressive training ( Fini et al. , 2024 ).

【翻译】这种高度成功的方法启发了众多开源复现和扩展工作。OpenCLIP(Cherti等人,2023)是第一个通过在LAION数据集(Schuhmann等人,2021)上训练来复制CLIP的开源努力;后续工作通过以CLIP风格的方式微调预训练骨干网络来利用它们(Sun等人,2023;2024)。认识到数据收集是CLIP训练成功的关键因素,MetaCLIP(Xu等人,2024)精确遵循原始CLIP程序来复现其结果,而Fang等人(2024a)使用监督数据集来策划预训练数据。其他工作专注于改进训练损失,例如在SigLIP中使用sigmoid损失(Zhai等人,2023),或利用预训练图像编码器(Zhai等人,2022b)。然而,获得尖端基础模型的最关键组件最终是丰富的高质量数据和大量计算资源。在这方面,SigLIP 2(Tschannen等人,2025)和感知编码器(PE)(Bolya等人,2025)在超过400亿图像-文本对上训练后取得了令人印象深刻的结果。最大的PE模型在860亿样本上训练,全局批量大小为131K。最后,已经提出了一系列更复杂和本质上多模态的方法;这些包括对比标题(Yu等人,2022)、潜在空间中的掩码建模(Bao等人,2021;Wang等人,2022b;Fang等人,2023;Wang等人,2023a)和自回归训练(Fini等人,2024)。

In contrast, relatively little work has focused on scaling unsupervised image pretraining . Early efforts include Caron et al. ( 2019 ) and Goyal et al. ( 2019 ) utilizing the YFCC dataset ( Thomee et al. , 2016 ). Further progress has been achieved by focusing on larger datasets and models ( Goyal et al. , 2021 ; 2022a ), as well as initial attempts at data curation for SSL ( Tian et al. , 2021 ). Careful tuning of the training algorithms, larger architectures, and more extensive training data lead to the impressive results of DINOv2 ( Oquab et al. , 2024 ); for the first time, an SSL model matched or surpassed open-source CLIP variants on a range of tasks. This direction has recently been further pushed by Fan et al. ( 2025 ) by scaling to larger models without data curation, or by Venkataramanan et al. ( 2025 ) using open datasets and improved training recipes.

【翻译】相比之下,专注于扩展无监督图像预训练的工作相对较少。早期的努力包括Caron等人(2019)和Goyal等人(2019)利用YFCC数据集(Thomee等人,2016)。通过关注更大的数据集和模型(Goyal等人,2021;2022a),以及SSL数据策划的初步尝试(Tian等人,2021),取得了进一步的进展。训练算法的仔细调优、更大的架构和更广泛的训练数据导致了DINOv2的令人印象深刻的结果(Oquab等人,2024);这是SSL模型首次在一系列任务上匹配或超越开源CLIP变体。这一方向最近进一步被Fan等人(2025)通过扩展到更大的模型而不进行数据策划,或被Venkataramanan等人(2025)使用开放数据集和改进的训练配方所推动。

Dense Transformer Features A broad range of modern vision applications consume dense features of pre-trained transformers, including multi-modal models ( Liu et al. , 2023 ; Beyer et al. , 2024 ), generative models ( Yu et al. , 2025 ; Yao et al. , 2025 ), 3D understanding ( Wang et al. , 2025 ), video understanding ( Lin et al. , 2023a ; Wang et al. , 2024b ), and robotics ( Driess et al. , 2023 ; Kim et al. , 2024 ). On top of that, traditional vision tasks such as detection, segmentation, or depth estimation require accurate local descriptors. To enhance the quality of SSL-trained local descriptors, a substantial body of work focuses on developing local SSL losses . Examples include leveraging spatio-temporal consistency in videos, e.g . using point track loops as training signal ( Jabri et al. , 2020 ), exploiting the spatial alignment between different crops of the same image ( Pinheiro et al. , 2020 ; Bardes et al. , 2022 ), or enforcing consistency between neighboring patches ( Yun et al. , 2022 ). Darcet et al. ( 2025 ) show that predicting clustered local patches leads to improved dense representations. DetCon ( Hénaff et al. , 2021 ) and ORL ( Xie et al. , 2021 ) perform contrastive learning on region proposals but assume that such proposals exist a priori ; this assumption is relaxed by approaches such as ODIN ( Hénaff et al. , 2022 ) and SlotCon ( Wen et al. , 2022 ). Without changing the training objective, Darcet et al. ( 2024 ) show that adding register tokens to the input sequence greatly improves dense feature maps, and recent works find this can be done without model training ( Jiang et al. , 2025 ; Chen et al. , 2025 ).

【翻译】密集Transformer特征:广泛的现代视觉应用消费预训练transformer的密集特征,包括多模态模型(Liu等人,2023;Beyer等人,2024)、生成模型(Yu等人,2025;Yao等人,2025)、3D理解(Wang等人,2025)、视频理解(Lin等人,2023a;Wang等人,2024b)和机器人技术(Driess等人,2023;Kim等人,2024)。除此之外,传统的视觉任务如检测、分割或深度估计都需要准确的局部描述符。为了提高SSL训练的局部描述符质量,大量工作专注于开发局部SSL损失。例子包括利用视频中的时空一致性,例如使用点轨迹循环作为训练信号(Jabri等人,2020),利用同一图像不同裁剪之间的空间对齐(Pinheiro等人,2020;Bardes等人,2022),或强制相邻patch之间的一致性(Yun等人,2022)。Darcet等人(2025)表明预测聚类的局部patch可以改善密集表示。DetCon(Hénaff等人,2021)和ORL(Xie等人,2021)对区域提议执行对比学习,但假设这些提议先验存在;这一假设被ODIN(Hénaff等人,2022)和SlotCon(Wen等人,2022)等方法放松了。在不改变训练目标的情况下,Darcet等人(2024)表明向输入序列添加寄存器token可以大大改善密集特征图,最近的工作发现这可以在不进行模型训练的情况下完成(Jiang等人,2025;Chen等人,2025)。

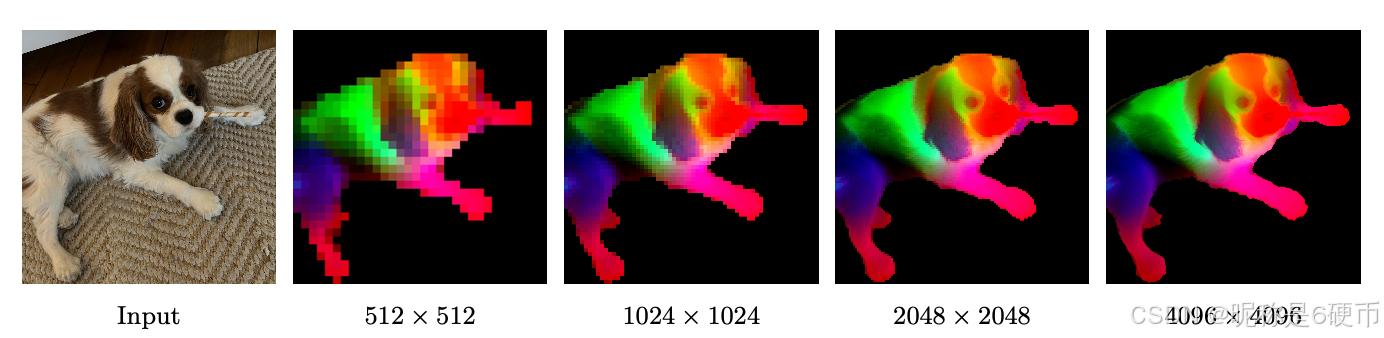

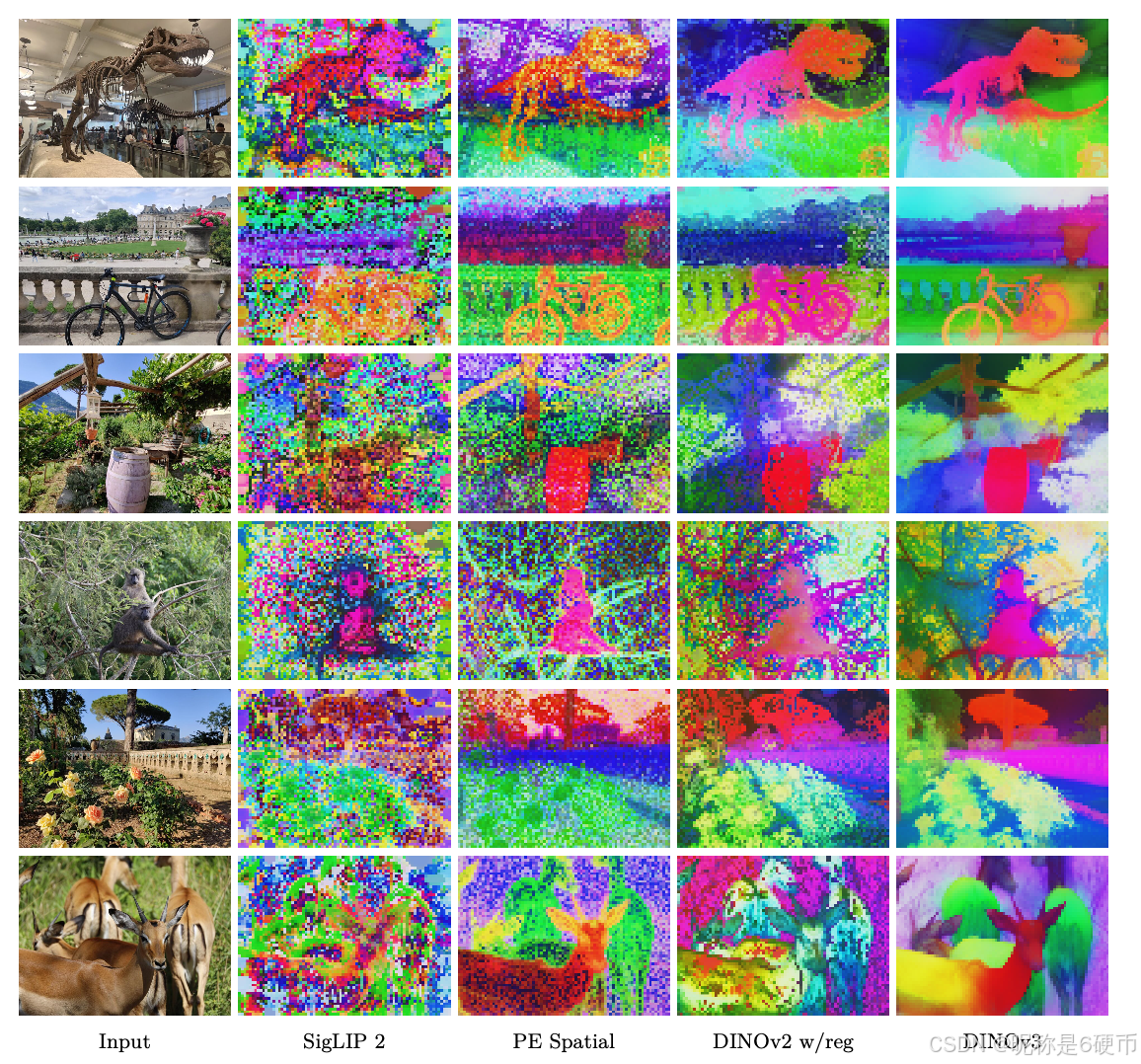

Figure 4: DINOv3 at very high resolution. We visualize dense features of DINOv3 by mapping the first three components of a PCA computed over the feature space to RGB. To focus the PCA on the subject, we mask the feature maps via background subtraction. With increasing resolution, DINOv3 produces crisp features that stay semantically meaningful. We visualize more PCAs in Sec. 6.1.1 .

【翻译】图4:超高分辨率下的DINOv3。我们通过将在特征空间上计算的PCA的前三个主成分映射到RGB来可视化DINOv3的密集特征。为了将PCA聚焦在主体上,我们通过背景减法来掩蔽特征图。随着分辨率的增加,DINOv3产生清晰的特征,保持语义的意义。我们在第6.1.1节中可视化更多的PCA。

【解析】这里展示了DINOv3在处理高分辨率图像时的能力。PCA(主成分分析)是一种降维技术,用于提取数据中最重要的信息。在这个可视化中,研究者将DINOv3提取的高维特征向量通过PCA降维到3维,然后将这3个维度分别映射到RGB颜色的红、绿、蓝通道。这样做的目的是用颜色来表示特征的不同方面,让我们能够直观地看到模型在不同空间位置提取到的特征差异。背景减法通过移除背景像素的干扰,让PCA专注于分析前景物体的特征。图4展示了一个重要现象:当输入图像分辨率越来越高时,DINOv3能够提取出更加精细和清晰的特征表示,而且这些特征在语义上仍然是有意义的。这说明模型具有良好的多尺度特征提取能力,能够在保持语义理解的同时捕获细节信息。

A recent trend are distillation-based, " agglomerative " methods that combine information from multiple image encoders with varying in global and local feature quality, trained using different levels of supervision ( Ranzinger et al. , 2024 ; Bolya et al. , 2025 ): AM-RADIO ( Ranzinger et al. , 2024 ) combines the strengths of the fully-supervised SAM ( Kirillov et al. , 2023 ), the weakly-supervised CLIP, and the self-supervised DINOv2 into a unified backbone. The Perception Encoder ( Bolya et al. , 2025 ) similarly distills SAM(v2) into a specialized dense variant called PEspatial. They use an objective enforcing cosine similarity between student and teacher patches to be high, where their teacher is trained with mask annotations. Similar losses were shown to be effective in the context of style transfer, by reducing the inconsistency between the Gram matrices of feature dimensions ( Gatys et al. , 2016 ; Johnson et al. , 2016 ; Yoo et al. , 2024 ). In this work, we adopt a Gram objective to regularize cosine similarity between student and teacher patches, favoring them being close. In our case, we use earlier iterations of the SSL model itself as the teacher, demonstrating that early-stage SSL models effectively guides SSL training for both global and dense tasks.

【翻译】最近的趋势是基于蒸馏的"聚合"方法,这些方法结合来自多个图像编码器的信息,这些编码器在全局和局部特征质量上有所不同,使用不同级别的监督进行训练(Ranzinger等人,2024;Bolya等人,2025):AM-RADIO(Ranzinger等人,2024)将完全监督的SAM(Kirillov等人,2023)、弱监督的CLIP和自监督的DINOv2的优势结合到一个统一的骨干网络中。感知编码器(Bolya等人,2025)类似地将SAM(v2)蒸馏为一个专门的密集变体,称为PEspatial。它们使用一个目标来强制学生和教师补丁之间的余弦相似度很高,其中教师使用掩码标注进行训练。类似的损失在风格迁移的背景下被证明是有效的,通过减少特征维度的Gram矩阵之间的不一致性(Gatys等人,2016;Johnson等人,2016;Yoo等人,2024)。在这项工作中,我们采用Gram目标来正则化学生和教师补丁之间的余弦相似度,偏向于使它们接近。在我们的情况下,我们使用SSL模型本身的早期迭代作为教师,证明早期阶段的SSL模型有效地指导SSL训练,用于全局和密集任务。

【解析】这段话介绍了一种新兴的模型训练策略——聚合式蒸馏方法。知识蒸馏是一种模型压缩和知识传递技术,通常让小模型(学生)学习大模型(教师)的知识。但这里提到的"聚合"方法有所不同,它不是简单的模型压缩,而是将多个具有不同特长的预训练模型的知识整合到一个新的统一模型中。AM-RADIO方法很有代表性:它整合了三个不同训练方式的模型——SAM专长于分割任务(完全监督),CLIP擅长理解图文关系(弱监督),DINOv2在自监督学习方面表现出色。通过整合这些模型的优势,可以得到一个在多种任务上都表现良好的统一骨干网络。余弦相似度衡量两个向量夹角的指标,值越接近1说明两个向量越相似。在蒸馏过程中,通过最大化学生和教师网络对应补丁特征的余弦相似度,可以让学生网络学到教师网络的特征表示能力。Gram矩阵捕获特征之间的相关性模式,特别是在风格迁移任务中用于保持纹理和风格信息。本工作创新性地将早期训练阶段的同一个SSL模型作为教师,指导后续训练阶段,这种自我指导的策略既保持了全局任务的性能,又改善了密集预测任务的效果。

Other works focus on post-hoc improvements to the local features of SSL-trained models. For example, Ziegler and Asano ( 2022 ) fine-tune a pre-trained model with a dense clustering objective; similarly, Salehi et al. ( 2023 ) fine-tune by aligning patch features temporally, in both cases enhance the quality of local features. Closer to us, Pariza et al. ( 2025 ) propose a patch-sorting based objective to encourage the student and teacher to produce features with consistent neighbor ordering. Without finetuning, STEGO ( Hamilton et al. , 2022 ) learns a non-linear projection on top of frozen SSL features to form compact clusters and amplify correlation patterns. Alternatively, Simoncini et al. ( 2024 ) augment self-supervised features by concatenating gradients from different self-supervised objectives to frozen SSL features. Recently, Wysoczańska et al. ( 2024 ) show that noisy feature maps are significantly improved through a weighted average of patches.

【翻译】其他工作专注于对SSL训练模型的局部特征进行事后改进。例如,Ziegler和Asano(2022)使用密集聚类目标对预训练模型进行微调;类似地,Salehi等人(2023)通过在时间上对齐patch特征来进行微调,这两种情况都增强了局部特征的质量。与我们更接近的是,Pariza等人(2025)提出了基于patch排序的目标,以鼓励学生和教师产生具有一致邻居排序的特征。在不进行微调的情况下,STEGO(Hamilton等人,2022)在冻结的SSL特征之上学习非线性投影,以形成紧密的聚类并放大相关模式。另外,Simoncini等人(2024)通过将来自不同自监督目标的梯度连接到冻结的SSL特征来增强自监督特征。最近,Wysoczańska等人(2024)表明通过patch的加权平均可以显著改善噪声特征图。

【解析】这段话讨论的是改善自监督学习(SSL)模型局部特征质量的后处理方法。传统的SSL方法在训练完成后,其提取的局部特征(即patch级别的特征)可能存在质量不够理想的问题,因此研究者们开发了各种后处理技术来解决这一问题。密集聚类目标是指在每个空间位置都进行聚类操作,而不仅仅是全局特征聚类,这样可以让相似的局部区域在特征空间中更加紧密。时间对齐是利用视频序列中相同物体在不同帧中的对应关系来约束特征学习,确保同一物体的特征在时间维度上保持一致性。patch排序方法通过确保教师网络和学生网络对相同图像区域的相邻关系判断保持一致,来提高特征的空间连贯性。STEGO方法采用了一种无需重新训练的策略,它在已经训练好的SSL特征基础上添加一个可学习的非线性映射层,这个映射层专门用于增强特征的局部聚类性质和空间相关性。梯度连接技术通过融合不同自监督学习目标产生的梯度信息来丰富特征表示,这种方法能够综合多种自监督信号的优势。加权平均方法则是通过对邻近patch的特征进行加权融合来减少特征噪声,提高特征图的平滑性和一致性。

Related, but not specific to SSL, some recent works generate high-resolution feature maps from ViT feature maps ( Fu et al. , 2024 ), which are often low-resolution due to patchification of images. In contrast with this body of work, our models natively deliver high-quality dense feature maps that remain stable and consistent across resolutions, as shown in Fig. 4 .

【翻译】相关但不特定于SSL的一些最近工作从ViT特征图生成高分辨率特征图(Fu等人,2024),由于图像的patch化,这些特征图通常是低分辨率的。与这些工作相比,我们的模型原生地提供高质量的密集特征图,这些特征图在不同分辨率下保持稳定和一致,如图4所示。

【解析】这段话强调了DINOv3相对于其他方法的优势。Vision Transformer (ViT)由于其patch化的处理方式,即将输入图像分割成固定大小的patch块,导致输出的特征图分辨率相对较低。例如,如果输入图像是224×224像素,patch大小是16×16,那么得到的特征图只有14×14的空间分辨率。为了解决这个问题,一些研究工作开发了上采样或插值技术来从低分辨率的ViT特征图重建高分辨率特征图。然而,这类方法存在几个问题:首先,它们需要额外的计算开销来进行特征图重建;其次,重建过程可能引入伪影或失真;最重要的是,这些方法在处理不同分辨率输入时可能表现不一致。相比之下,DINOv3通过改进的架构设计和训练策略,能够直接产生高质量的密集特征图,无需后处理步骤。这些特征图不仅在空间上具有丰富的细节,而且在面对不同输入分辨率时能够保持特征质量的稳定性和一致性。

3 无监督大规模训练

DINOv3 is a next-generation model designed to produce the most robust and flexible visual representations to date by pushing the boundaries of self-supervised learning. We draw inspiration from the success of large language models (LLMs), for which scaling-up the model capacity leads to outstanding emerging properties . By leveraging models and training datasets that are an order of magnitude larger, we seek to unlock the full potential of SSL and drive a similar paradigm shift for computer vision, unencumbered by the limitations inherent to traditional supervised or task-specific approaches. In particular, SSL produces rich, high-quality visual features that are not biased toward any specific supervision or task, thereby providing a versatile foundation for a wide range of downstream applications. While previous attempts at scaling SSL models have been hindered by issues of instability, this section describes how we harness the benefits of scaling with careful data preparation, design, and optimization. We first describe the dataset creation procedure ( Sec. 3.1 ), then present the self-supervised SSL recipe used for this first training phase of DINOv3 ( Sec. 3.2 ). This includes the choice of architecture, loss functions, and optimization techniques. The second training phase, focusing on dense features, will be described in Sec. 4 .

【翻译】DINOv3是一个下一代模型,旨在通过推动自监督学习的边界来产生迄今为止最稳健和灵活的视觉表示。我们从大型语言模型(LLM)的成功中汲取灵感,对于这些模型,扩大模型容量会导致出色的新兴属性。通过利用比以往大一个数量级的模型和训练数据集,我们寻求释放SSL的全部潜力,并推动计算机视觉领域的类似范式转变,不受传统监督或任务特定方法固有限制的阻碍。特别是,SSL产生丰富、高质量的视觉特征,这些特征不偏向于任何特定的监督或任务,从而为广泛的下游应用提供了多功能的基础。虽然之前扩展SSL模型的尝试受到不稳定性问题的阻碍,但本节描述了我们如何通过仔细的数据准备、设计和优化来利用扩展的好处。我们首先描述数据集创建程序(第3.1节),然后介绍用于DINOv3第一个训练阶段的自监督SSL配方(第3.2节)。这包括架构、损失函数和优化技术的选择。专注于密集特征的第二个训练阶段将在第4节中描述。

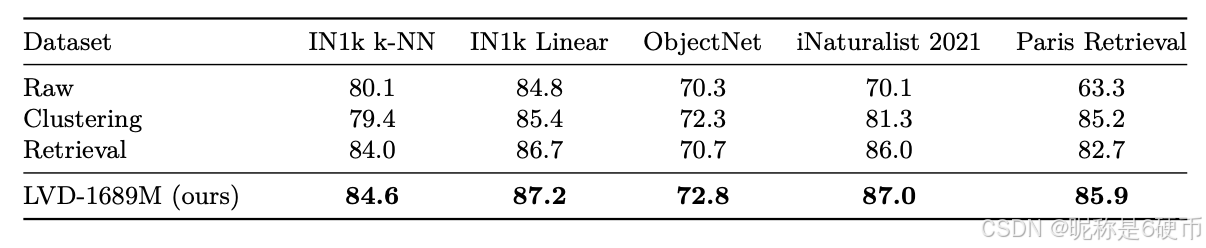

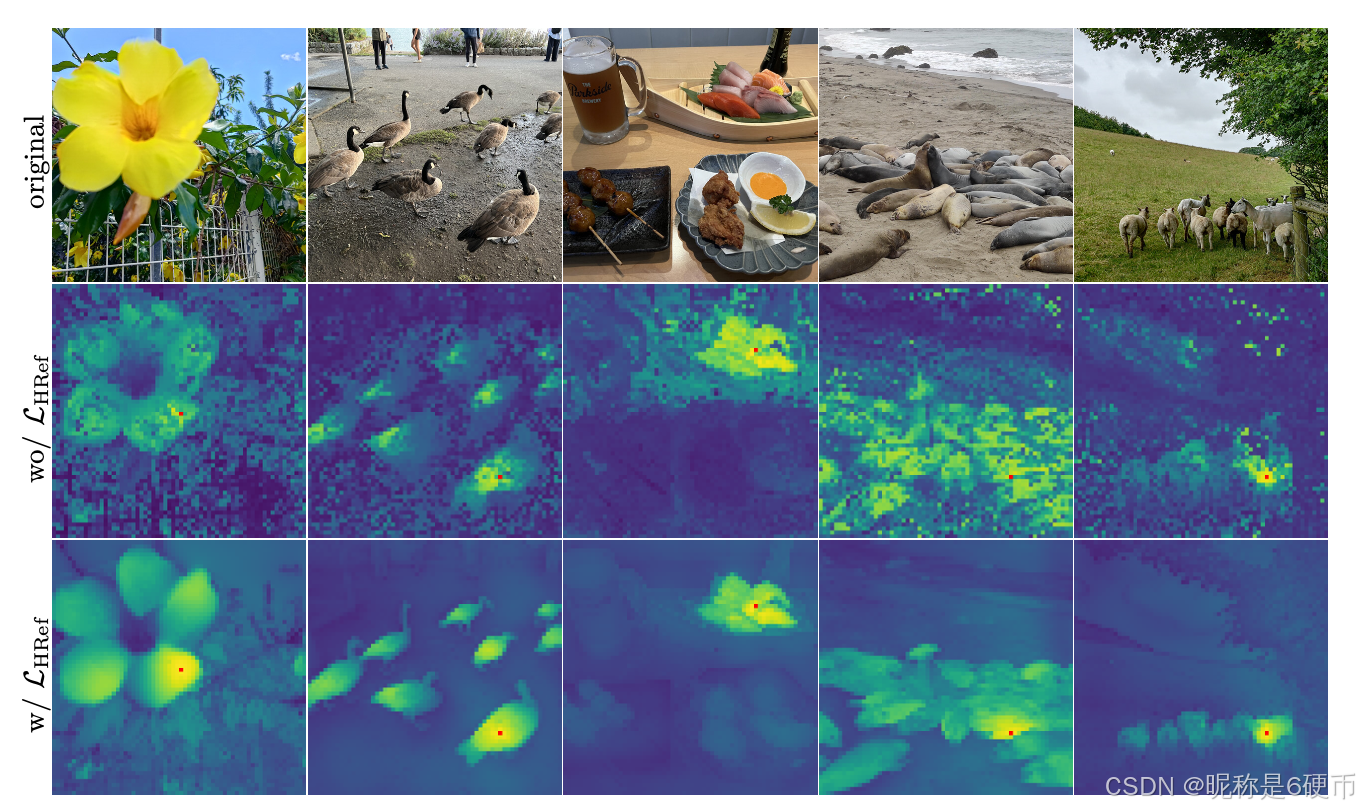

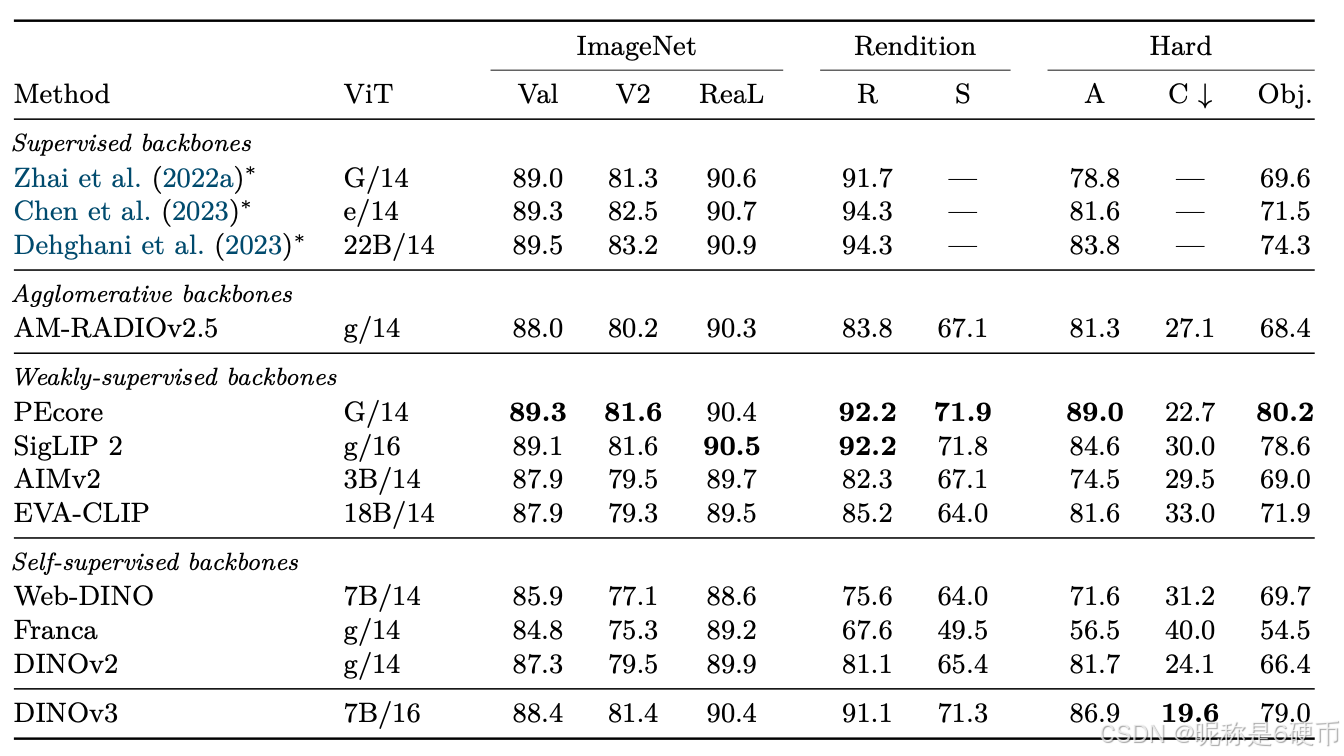

Table 1: Influence of training data on features quality shown via performance on downstream tasks. We compare datasets curated with clustering ( Vo et al. , 2024 ) and retrieval ( Oquab et al. , 2024 ) to raw data and to our data mixture. This ablation study is run for a shorter schedule of 200k iterations.

【翻译】表1:通过下游任务性能显示的训练数据对特征质量的影响。我们将使用聚类方法(Vo等人,2024)和检索方法(Oquab等人,2024)策划的数据集与原始数据和我们的数据混合进行比较。这项消融研究在200k次迭代的较短计划下运行。

3.1 数据准备

Data scaling is one of the driving factors behind the success of large foundation models ( Touvron et al. , 2023 ; Radford et al. , 2021 ; Xu et al. , 2024 ; Oquab et al. , 2024 ). However, increasing naively the size of the training data does not necessarily translate into higher model quality and better performance on downstream benchmarks ( Goyal et al. , 2021 ; Oquab et al. , 2024 ; Vo et al. , 2024 ): Successful data scaling efforts typically involve careful data curation pipelines. These algorithms may have different objectives: either focusing on improving data diversity and balance , or data usefulness —its relevance to common practical applications. For the development of DINOv3, we combine two complementary approaches to improve both the generalizability and performance of the model, striking a balance between the two objectives.

【翻译】数据扩展是大型基础模型成功背后的驱动因素之一(Touvron等人,2023;Radford等人,2021;Xu等人,2024;Oquab等人,2024)。然而,简单地增加训练数据的规模并不一定能转化为更高的模型质量和在下游基准测试上的更好性能(Goyal等人,2021;Oquab等人,2024;Vo等人,2024):成功的数据扩展工作通常涉及仔细的数据策划管道。这些算法可能有不同的目标:要么专注于改善数据的多样性和平衡性,要么专注于数据的有用性——即其与常见实际应用的相关性。在DINOv3的开发中,我们结合了两种互补的方法来改善模型的泛化性和性能,在两个目标之间取得平衡。

【解析】这段话阐述了在训练大型AI模型时数据处理的复杂性。数据扩展不仅仅是简单地收集更多数据,大量的低质量或不相关数据可能会引入噪声,影响模型的学习效果,甚至可能导致模型过拟合到无关的模式上。DINOv3一方面要确保模型具有良好的泛化能力,能够处理各种未见过的情况;另一方面要确保模型在实际应用中表现出色,能够解决真实世界的问题。

Data Collection and Curation We build our large-scale pre-training dataset by leveraging a large data pool of web images collected from public posts on Instagram. These images already went through platformlevel content moderation to help prevent harmful contents and we obtain an initial data pool of approximately 17 billions of images. Using this raw data pool, we create three dataset parts . We construct the first part by applying the automatic curation method based on hierarchical kkk -means from Vo et al. ( 2024 ). We employ DINOv2 as image embeddings, and use 5 levels of clustering with the number of clusters from the lowest to highest levels being 200M, 8M, 800k, 100k, and 25k respectively. After building the hierarchy of clusters, we apply the balanced sampling algorithm proposed in Vo et al. ( 2024 ). This results in a curated subset of 1,689 million images (named LVD-1689M) that guarantees a balanced coverage of all visual concepts appearing on the web. For the second part, we adopt a retrieval-based curation system similar to the procedure proposed by Oquab et al. ( 2024 ). We retrieve images from the data pool that are similar to those from selected seed datasets, creating a dataset that covers visual concepts relevant for downstream tasks. For the third part, we use raw publicly available computer vision datasets including ImageNet1k ( Deng et al. , 2009 ), ImageNet22k ( Russakovsky et al. , 2015 ), and Mapillary Street-level Sequences ( Warburg et al. , 2020 ). This final part allows us to optimize our model’s performance, following Oquab et al. ( 2024 ).

【翻译】数据收集和策划:我们通过利用从Instagram公开帖子收集的网络图像大数据池来构建我们的大规模预训练数据集。这些图像已经经过平台级内容审核,以帮助防止有害内容,我们获得了大约170亿张图像的初始数据池。使用这个原始数据池,我们创建了三个数据集部分。我们通过应用Vo等人(2024)基于分层kkk-means的自动策划方法来构建第一部分。我们使用DINOv2作为图像嵌入,并使用5个聚类级别,从最低到最高级别的聚类数量分别为200M、8M、800k、100k和25k。在构建聚类层次结构后,我们应用Vo等人(2024)提出的平衡采样算法。这产生了一个包含16.89亿张图像的策划子集(命名为LVD-1689M),保证对网络上出现的所有视觉概念的平衡覆盖。对于第二部分,我们采用类似于Oquab等人(2024)提出的程序的基于检索的策划系统。我们从数据池中检索与来自选定种子数据集的图像相似的图像,创建一个涵盖与下游任务相关的视觉概念的数据集。对于第三部分,我们使用原始的公开可用的计算机视觉数据集,包括ImageNet1k(Deng等人,2009)、ImageNet22k(Russakovsky等人,2015)和Mapillary街道级序列(Warburg等人,2020)。这最后一部分使我们能够优化模型的性能,遵循Oquab等人(2024)的做法。

【解析】DINOv3的整个数据准备流程采用了三重策略来确保数据质量和多样性。第一部分采用分层聚类方法,这是一种无监督的数据组织技术。分层k-means聚类通过多个层次逐步细化数据分组,从最粗糙的200M个聚类开始,逐步细化到25k个聚类。层次化设计,在不同的抽象层次上捕获视觉概念的相似性。在最高层,聚类可能按照基本的视觉属性(如颜色、纹理)分组;在较低层,聚类则更加精细,可能按照具体的对象类别或场景类型分组。平衡采样算法确保每个聚类在最终数据集中都有适当的代表性,避免某些视觉概念被过度或不足采样。第二部分的检索基础策划方法采用了不同的策略,它不是依赖数据的内在结构,而是基于与特定种子数据集的相似性来选择数据。有目的地收集与特定应用领域相关的数据,确保模型在实际应用中的有效性。种子数据集通常是精心策划的高质量数据集,通过检索与这些数据相似的图像,可以扩展高质量数据的规模。第三部分直接使用已有的标准计算机视觉数据集,这些数据集经过了广泛的验证和使用,能够为模型提供稳定的性能基准。这种三重策略的组合确保了数据集既具有广泛的覆盖性(通过聚类方法),又具有任务相关性(通过检索方法),同时保持了与现有基准的兼容性(通过标准数据集)。

Data Sampling During pre-training, we use a sampler to mix different data parts together. There are several different options for mixing the above data components. One is to train with homogeneous batches of data that come from a single, randomly selected component in each iteration. Alternatively, we can optimize the model on heterogeneous batches that are assembled by data from all components, selected using certain ratios. Inspired by Charton and Kempe ( 2024 ), who observed that it is beneficial to have homogeneous batches consisting of very high quality data from a small dataset, we randomly sample in each iteration either a homogeneous batch from ImageNet1k alone or a heterogeneous batch mixing data from all other components. In our training, homogeneous batches from ImageNet1k account for 10% of training.

【翻译】数据采样 在预训练期间,我们使用采样器将不同的数据部分混合在一起。有几种不同的选项来混合上述数据组件。一种是使用同质批次进行训练,这些批次来自每次迭代中随机选择的单个组件。另一种方法是,我们可以在异质批次上优化模型,这些批次由来自所有组件的数据组装而成,使用特定比例进行选择。受到Charton和Kempe(2024)的启发,他们观察到拥有由小数据集中非常高质量数据组成的同质批次是有益的,我们在每次迭代中随机采样要么是仅来自ImageNet1k的同质批次,要么是混合来自所有其他组件数据的异质批次。在我们的训练中,来自ImageNet1k的同质批次占训练的10%。

【解析】同质批次(homogeneous batches)指的是每个训练批次中的所有样本都来自同一个数据源或具有相似的特征分布,优势在于能够让模型在每次更新时专注学习特定类型数据的模式,避免不同数据源之间的干扰。异质批次(heterogeneous batches)则是将来自不同数据源的样本混合在一个批次中,这种方式能够让模型同时接触到多样化的数据模式,促进模型的泛化能力。DINOv3采用的混合策略是一种平衡方案:大部分时间(90%)使用异质批次来确保模型能够学习到丰富多样的视觉模式,同时保留10%的时间使用来自ImageNet1k的同质批次。ImageNet1k作为计算机视觉领域的经典数据集,具有高质量的标注和良好的数据分布,专门使用这些高质量数据的同质批次可以为模型提供稳定可靠的学习信号,有助于模型收敛到更好的局部最优解。这种策略的设计思路来源于Charton和Kempe的研究发现,他们证明了高质量小数据集的同质批次训练能够显著提升模型性能,因为高质量数据能够提供更清晰、更一致的学习目标,减少噪声数据对模型训练的负面影响。

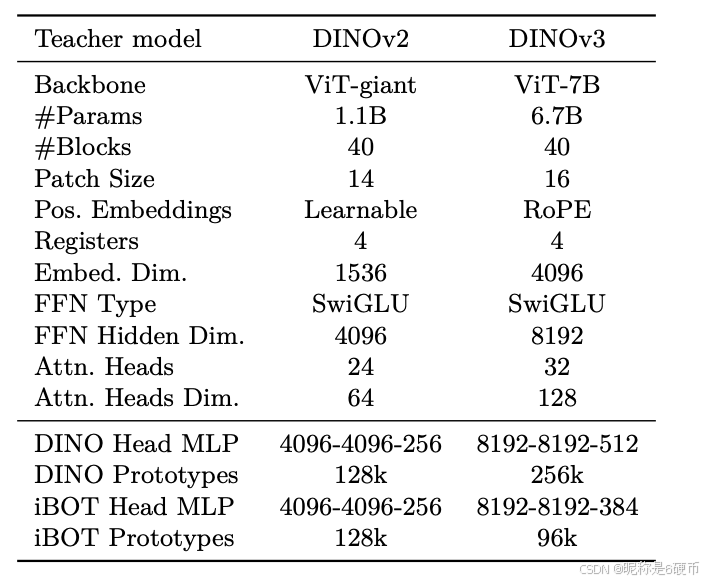

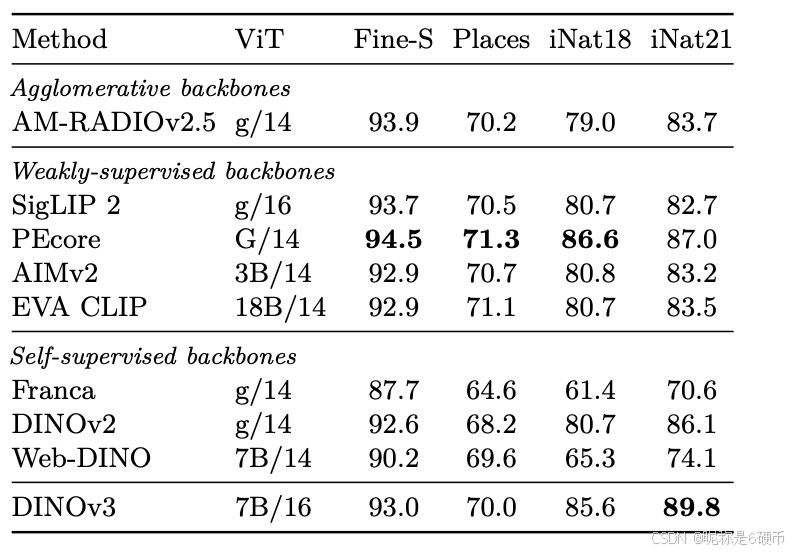

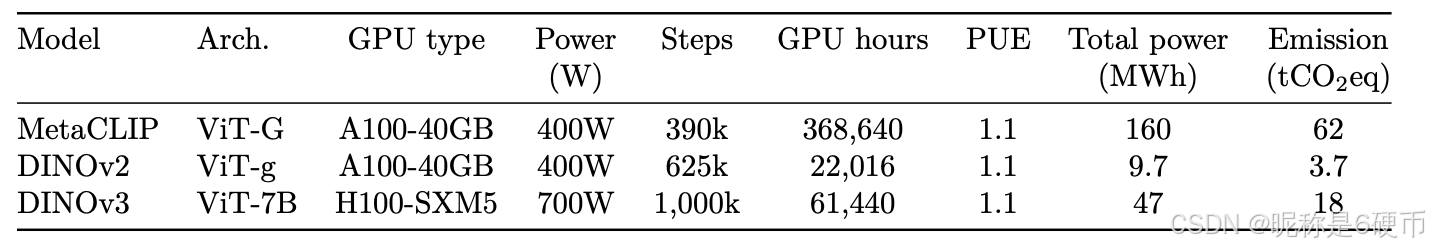

Table 2: Comparison of the teacher architectures used in DINOv2 and DINOv3 models. We keep the model 40 blocks deep, and increase the embedding dimension to 4096. Importantly, we use a patch size of 16 pixels, changing the effective sequence length for a given resolution.

【翻译】表2:DINOv2和DINOv3模型中使用的教师架构比较。我们保持模型40个块的深度,并将嵌入维度增加到4096。重要的是,我们使用16像素的patch大小,这改变了给定分辨率下的有效序列长度。

Data Ablation To assess the impact of our data curation technique, we perform an ablation study to compare our data mix against datasets curated with clustering or retrieval-based methods alone, and the raw data pool. To this end, we train a model on each dataset and compare their performance on standard downstream tasks. For efficiency, we use a shorter schedule of 200k iterations instead of 1M iterations. In Tab. 1 , it can be seen that no single curation technique works best across all benchmarks, and that our full pipeline allows us to obtain the best of both worlds.

【翻译】数据消融 为了评估我们数据策划技术的影响,我们进行了一项消融研究,将我们的数据混合与仅使用聚类或基于检索方法策划的数据集以及原始数据池进行比较。为此,我们在每个数据集上训练一个模型,并比较它们在标准下游任务上的性能。为了提高效率,我们使用200k次迭代的较短计划,而不是1M次迭代。从表1中可以看出,没有单一的策划技术在所有基准测试中都表现最佳,我们的完整流程使我们能够获得两全其美的效果。

3.2 自监督大规模训练

While models trained with SSL have demonstrated interesting properties ( Chen et al. , 2020b ; Caron et al. , 2021 ), most SSL algorithms have not been scaled-up to larger models sizes. This is either due to issues with training stability ( Darcet et al. , 2025 ), or overly simplistic solutions that fail to capture the full complexity of the visual world. When trained at scale ( Goyal et al. , 2022a ), models trained with SSL do not necessarily show impressive performance. One notable exception is DINOv2, a model with 1.1 billion parameters trained on curated data, matching the performance of weakly-supervised models like CLIP ( Radford et al. , 2021 ). A recent effort to scale DINOv2 to 7 billion parameters ( Fan et al. , 2025 ) demonstrates promising results on global tasks, but with disappointing results on dense prediction. Here, we aim to scale up the model and data, and obtain even more powerful visual representations with both improved global and local properties.

【翻译】虽然使用SSL训练的模型已经展现出有趣的特性(Chen等人,2020b;Caron等人,2021),但大多数SSL算法还没有扩展到更大的模型规模。这要么是由于训练稳定性问题(Darcet等人,2025),要么是过于简单的解决方案无法捕获视觉世界的全部复杂性。当大规模训练时(Goyal等人,2022a),使用SSL训练的模型不一定表现出令人印象深刻的性能。一个值得注意的例外是DINOv2,这是一个在策划数据上训练的11亿参数模型,与像CLIP这样的弱监督模型的性能相匹配(Radford等人,2021)。最近将DINOv2扩展到70亿参数的努力(Fan等人,2025)在全局任务上显示出有前景的结果,但在密集预测上结果令人失望。在这里,我们的目标是扩大模型和数据规模,获得更强大的视觉表示,同时改进全局和局部特性。

【解析】自监督学习(SSL)扩展到大规模模型时面临多重挑战。首先是训练稳定性问题,大型模型在自监督训练过程中容易出现梯度爆炸、梯度消失或收敛困难等问题,这些问题在有监督学习中通过标签信息的指导相对容易解决,但在自监督学习中需要更精巧的技术手段。其次是表示学习的复杂性,现实世界的视觉信息具有极高的复杂性和多样性,简单的自监督目标函数可能无法捕获这种复杂性,导致学到的表示过于简化。DINOv2作为成功案例的重要性在于它证明了自监督学习在大规模数据和模型上的可行性,其11亿参数的规模和与CLIP相匹配的性能打破了人们对自监督学习局限性的认知。然而,将模型进一步扩展到70亿参数时遇到的问题揭示了一个重要现象:全局任务和密集预测任务对模型表示的要求不同。全局任务(如图像分类)主要关注整体语义信息,而密集预测任务(如语义分割、目标检测)需要精细的局部空间信息。这种差异说明在扩展模型规模时,需要特别关注如何平衡全局表示和局部表示的学习,确保模型在获得更强全局理解能力的同时不丢失空间细节信息。

Learning Objective We train the model with a discriminative self-supervised strategy which is a mix of several self-supervised objectives with both global and local loss terms. Following DINOv2 ( Oquab et al. , 2024 ), we use an image-level objective ( Caron et al. , 2021 ) LDINO\mathcal{L}_{\mathrm{DINO}}LDINO , and balance it with a patch-level latent reconstruction objective ( Zhou et al. , 2021 ) LiBOT\mathcal{L}_{\mathrm{iBOT}}LiBOT . We also replace the centering from DINO with the Sinkhorn-Knopp from SwAV ( Caron et al. , 2020 ) in both objectives. Each objective is computed using the output of a dedicated head on top of the backbone network, allowing for some specialization of features before the computation of the losses. Additionally, we use a dedicated layer normalization applied to the backbone outputs of the local and global crops. Empirically, we found this change to stabilize ImageNet kNNclassification late in training (+0.2 accuracy) and improve dense performance ( e.g . +1+1+1 mIoU on ADE20k segmentation, -0.02 RMSE on NYUv2 depth estimation). In addition, a Koleo regularizer LKoleo\scriptstyle{\mathcal{L}}_{\mathrm{Koleo}}LKoleo is added to encourage the features within a batch to spread uniformly in the space ( Sablayrolles et al. , 2018 ). We use a distributed implementation of Koleo in which the loss is applied in small batches of 16 samples—possibly across GPUs. Our initial training phase is carried by optimizing the following loss:

【翻译】学习目标 我们使用一种判别式自监督策略来训练模型,该策略混合了几个包含全局和局部损失项的自监督目标。遵循DINOv2(Oquab等人,2024),我们使用图像级目标(Caron等人,2021)LDINO\mathcal{L}_{\mathrm{DINO}}LDINO,并将其与patch级潜在重构目标(Zhou等人,2021)LiBOT\mathcal{L}_{\mathrm{iBOT}}LiBOT平衡。我们还将DINO中的centering替换为SwAV(Caron等人,2020)中的Sinkhorn-Knopp方法,应用于两个目标。每个目标都使用骨干网络顶部专用头的输出来计算,允许在计算损失之前对特征进行一些专门化。此外,我们使用专用的层归一化应用于局部和全局裁剪的骨干输出。根据经验,我们发现这种变化能够稳定训练后期的ImageNet kNN分类(+0.2准确率)并改善密集性能(例如,ADE20k分割+1 mIoU,NYUv2深度估计-0.02 RMSE)。另外,添加了Koleo正则化器LKoleo\scriptstyle{\mathcal{L}}_{\mathrm{Koleo}}LKoleo来鼓励批次内的特征在空间中均匀分布(Sablayrolles等人,2018)。我们使用Koleo的分布式实现,其中损失应用于16个样本的小批次中——可能跨GPU。我们的初始训练阶段通过优化以下损失进行:

【解析】DINOv3的学习目标设计采用了多目标融合的策略,同时优化全局语义理解和局部空间细节保持。DINO损失(LDINO\mathcal{L}_{\mathrm{DINO}}LDINO)作为图像级目标,主要负责学习整体语义表示,它通过教师-学生网络的知识蒸馏机制,让学生网络学习教师网络对整张图像的全局理解。iBOT损失(LiBOT\mathcal{L}_{\mathrm{iBOT}}LiBOT)则专注于patch级的潜在重构,这个目标确保模型能够从局部patch的特征中重构出丢失的信息,从而保持对空间细节的敏感性。Sinkhorn-Knopp算法的引入替代了原始DINO中的centering操作,这是一个重要的技术改进。Centering操作的目的是防止模型输出坍塌到同一点,但简单的centering可能会影响特征的表达能力。Sinkhorn-Knopp算法通过迭代优化的方式实现更加平衡的特征分布,它将特征分布问题建模为最优传输问题,能够更好地保持特征的多样性和区分性。专用头的设计允许不同的损失函数在特征空间的不同子空间中进行优化,这种架构设计避免了不同目标之间的直接冲突,让每个目标都能在适合自己的特征表示空间中进行优化。层归一化的应用进一步稳定了训练过程,特别是在处理不同尺度的全局和局部裁剪时,层归一化确保了特征的数值稳定性。Koleo正则化器的作用是防止特征坍塌和促进特征多样性,它通过惩罚批次内特征之间的过度相似性,鼓励模型学习更加丰富和均匀分布的特征表示,这对于自监督学习尤为重要,因为缺乏显式标签指导容易导致特征退化。

LPre=LDINO+LiBOT+0.1∗LDKoleo.\mathcal{L}_{\mathrm{Pre}}=\mathcal{L}_{\mathrm{DINO}}+\mathcal{L}_{\mathrm{iBOT}}+0.1*\mathcal{L}_{\mathrm{DKoleo.}} LPre=LDINO+LiBOT+0.1∗LDKoleo.

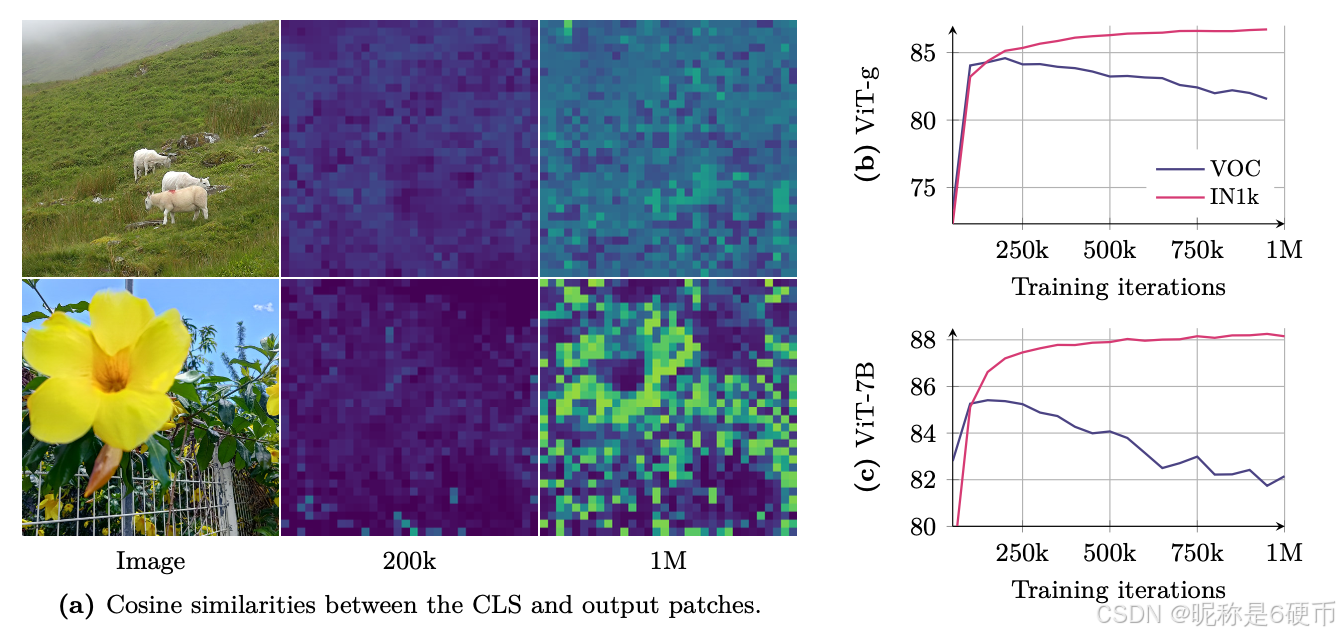

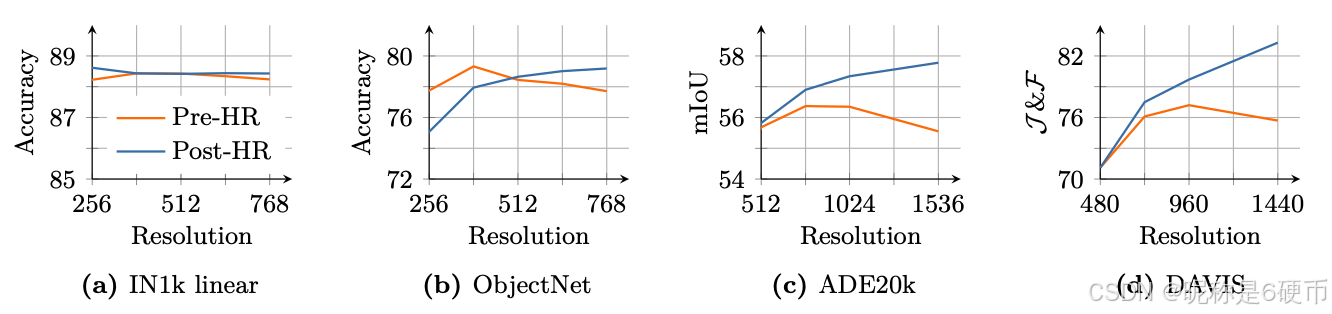

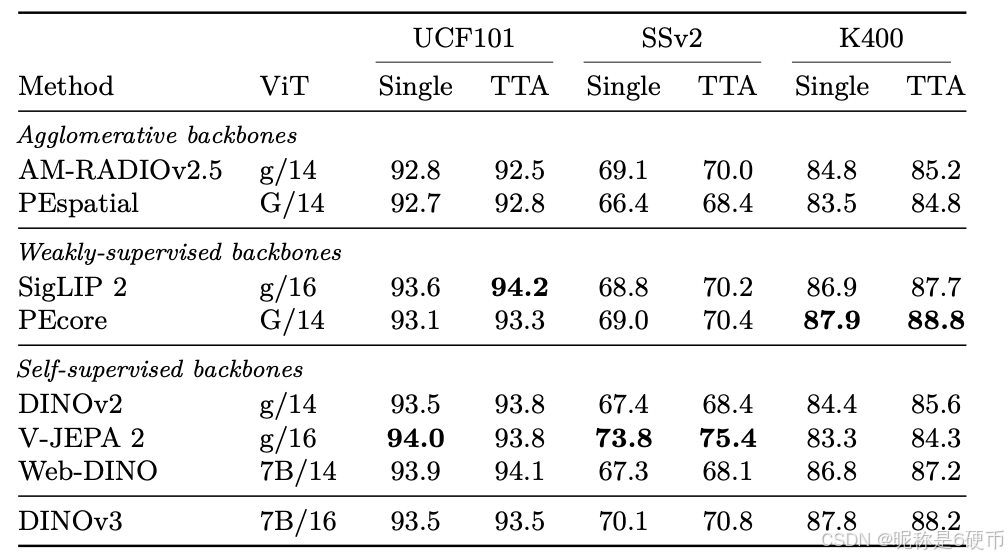

Figure 5: Evolution of the cosine similarities (a) and of the accuracy on ImageNet1k linear (IN1k) and segmentation on VOC for ViT-g (b) and ViT-7B ©. We observe that the segmentation performance is maximal when the cosine similarities between the patch tokens and the class tokens are low. As training progresses, these similarities increase and the performance on dense tasks decreases.

【翻译】图5:余弦相似度的演化(a)以及ViT-g(b)和ViT-7B(c)在ImageNet1k线性(IN1k)和VOC分割上的准确率。我们观察到当patch token和class token之间的余弦相似度较低时,分割性能达到最大值。随着训练的进行,这些相似度增加,密集任务的性能下降。

【解析】这个现象揭示了Vision Transformer在长期训练过程中的一个问题:全局表示和局部表示之间的竞争关系。在Vision Transformer中,class token(分类token)负责聚合整个图像的全局信息,而patch token则保留局部空间信息。当这两种token之间的余弦相似度较低时,说明它们保持着相对独立的表示空间,patch token能够保留丰富的局部细节信息,这对于需要精确空间定位的密集预测任务(如语义分割)是至关重要的。随着训练的深入,模型逐渐倾向于将更多信息集中到class token中以优化全局任务的性能,这导致patch token与class token的表示越来越相似。这种相似度的增加实际上说明patch token正在失去其独特的局部表示能力,变得更像全局特征的副本。这种现象在大规模模型(如7B参数的ViT)中尤为明显,因为更大的模型容量使得这种特征同质化现象更容易发生。这个发现对理解自监督学习中的表示塌陷问题具有重要意义,也为后续提出的Gram Anchoring方法提供了理论基础。

Updated Model Architecture For the model scaling aspect of this work, we increase the size of the model to 7B parameters, and provide in Tab. 2 a comparison of the corresponding hyperparameters with the 1.1B parameter model trained in the DINOv2 work. We also employ a custom variant of RoPE: our base implementation assigns coordinates in a normalized [−1,1][-1,1][−1,1] box to each patch, then applies a bias in the multi-head attention operation depending on the relative position of two patches. In order to improve the robustness of the model to resolutions, scales and aspect ratios, we employ RoPE-box jittering . The coordinate box [−1,1][-1,1][−1,1] is randomly scaled to [−s,s][-s,s][−s,s] , where s∈[0.5,2]s\in[0.5,2]s∈[0.5,2] . Together, these changes enable DINOv3 to better learn detailed and robust visual features, improving its performance and scalability.

【翻译】更新的模型架构 对于这项工作的模型扩展方面,我们将模型大小增加到70亿参数,并在表2中提供了与DINOv2工作中训练的11亿参数模型对应超参数的比较。我们还采用了RoPE的自定义变体:我们的基础实现为每个patch分配归一化[−1,1][-1,1][−1,1]框中的坐标,然后根据两个patch的相对位置在多头注意力操作中应用偏置。为了提高模型对分辨率、尺度和纵横比的鲁棒性,我们采用RoPE-box抖动。坐标框[−1,1][-1,1][−1,1]被随机缩放到[−s,s][-s,s][−s,s],其中s∈[0.5,2]s\in[0.5,2]s∈[0.5,2]。这些变化共同使DINOv3能够更好地学习详细和鲁棒的视觉特征,提高其性能和可扩展性。

【解析】模型架构的更新主要围绕两个核心方面:规模扩展和位置编码优化。将参数量从11亿扩展到70亿代表了一个重大的规模跃升,这不仅仅是简单的参数数量增加,而是模型表示能力的质的提升。这种规模的增长使模型能够捕获更复杂的视觉模式和更细粒度的特征关系。RoPE(Rotary Position Embedding)的自定义实现是一个重要的技术创新。传统的位置编码方法在处理可变分辨率图像时存在局限性,而RoPE通过为每个patch分配归一化坐标系统[−1,1][-1,1][−1,1]中的位置,能够更灵活地处理不同尺寸的输入。多头注意力机制中的位置相关偏置使模型能够显式地利用空间关系信息,这对于理解图像中对象之间的空间布局至关重要。RoPE-box抖动技术是一个数据增强策略,通过随机缩放坐标框到[−s,s][-s,s][−s,s]范围(其中sss在0.5到2之间变化),模型在训练过程中接触到不同的空间尺度信息。这种抖动策略强制模型学习尺度不变的特征表示,提高了对不同分辨率、尺度和纵横比图像的泛化能力。这种设计特别重要,因为在实际应用中,输入图像的尺寸和比例往往是多变的,模型需要具备处理这种变化的鲁棒性。

Optimization Training large models on very large datasets represents a complicated experimental workflow. Because the interplay between model capacity and training data complexity is hard to assess a priori , it is impossible to guess the right optimization horizon. To overcome this, we get rid of all parameter scheduling, and train with constant learning rate, weight decay, and teacher EMA momentum. This has two main benefits. First, we can continue training as long as downstream performance continues to improve. Second, the number of optimization hyperparameters is reduced, making it easier to choose them properly. For the training to start properly, we still use a linear warmup for learning rate and teacher temperature. Following common practices, we use AdamW ( Loshchilov and Hutter , 2017 ), and set the total batch size to 4096 images split across 256 GPUs. We train our models using the multi-crop strategy ( Caron et al. , 2020 ), taking 2 global crops and 8 local crops per image. We use square images with a side length of 256/112 pixels for global/local crops, which, along with the change in patch size, results in the same effective sequence length per image as in DINOv2 and a total sequence length of 3 . 7M tokens per batch. Additional hyperparameters can be found in App. C and in the code release.

【翻译】优化 在超大数据集上训练大型模型代表了一个复杂的实验工作流程。由于模型容量和训练数据复杂性之间的相互作用很难先验评估,因此不可能猜测正确的优化时间范围。为了克服这个问题,我们去除所有参数调度,使用恒定的学习率、权重衰减和教师EMA动量进行训练。这有两个主要好处。首先,只要下游性能继续提高,我们就可以继续训练。其次,优化超参数的数量减少了,使得正确选择它们变得更容易。为了让训练正确开始,我们仍然对学习率和教师温度使用线性预热。遵循常见做法,我们使用AdamW(Loshchilov和Hutter,2017),并将总批次大小设置为4096张图像,分布在256个GPU上。我们使用多裁剪策略(Caron等人,2020)训练模型,每张图像采用2个全局裁剪和8个局部裁剪。我们使用边长为256/112像素的正方形图像进行全局/局部裁剪,结合patch大小的变化,这导致每张图像的有效序列长度与DINOv2相同,每批次总序列长度为3.7M个token。额外的超参数可以在附录C和代码发布中找到。

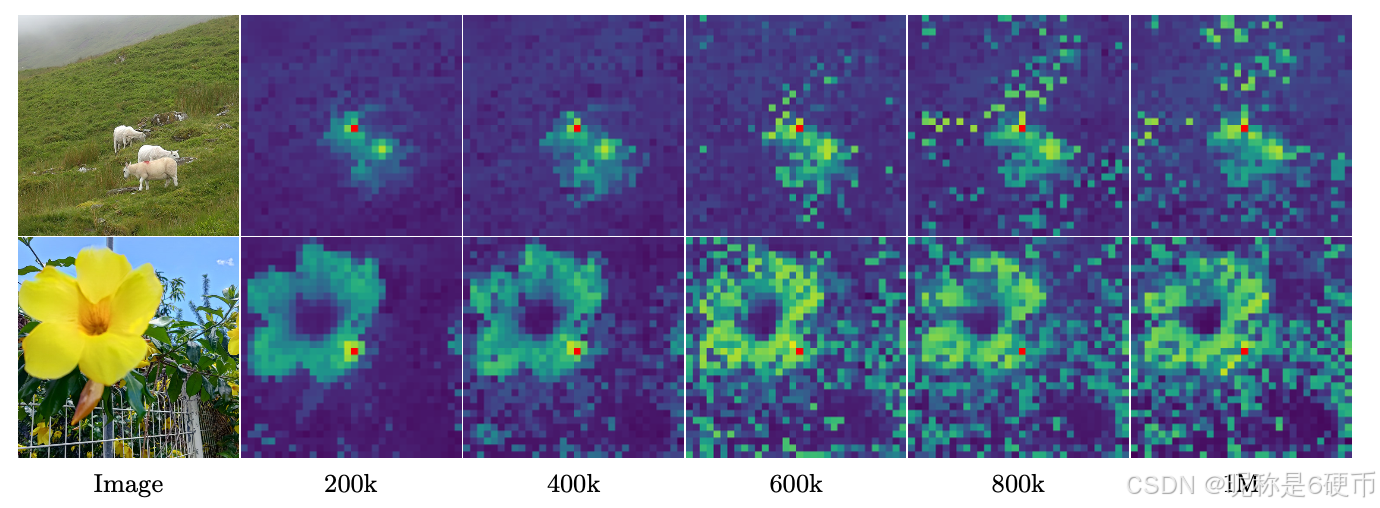

Figure 6: Evolution of the cosine similarity between the patch noted in red and all other patches. As training progresses, the features produced by the model become less localized and the similarity maps become noisier.

【翻译】图6:标记为红色的patch与所有其他patch之间余弦相似度的演化。随着训练的进行,模型产生的特征变得不那么局部化,相似度图变得更加嘈杂。

【解析】这个可视化清晰地展示了长期训练过程中patch级特征质量的退化现象。在训练初期,相似度图显示出良好的空间局部性,即相邻的patch具有较高的相似度,而距离较远的patch相似度较低,这说明模型能够很好地保持空间邻近性的语义一致性。随着训练迭代次数的增加,这种空间局部性逐渐丧失,原本应该与参考patch相似度较低的远距离patch开始显示出不合理的高相似度。这种现象的根本原因在于模型在优化全局表示性能的过程中,逐渐将更多的语义信息集中到class token中,导致patch token失去了独特的局部表示能力。相似度图的噪声增加说明模型无法稳定地区分不同空间位置的特征,这直接影响了需要精确空间定位的密集预测任务的性能。

4 Gram锚定:密集特征的正则化方法

To fully leverage the benefits of large-scale training, we aim to train the 7B model for an extended duration, with the notion that it could potentially train indefinitely. As expected, prolonged training leads to improvements on global benchmarks. However, as training progresses, the performance degrades on dense tasks ( Figs. 5b and 5c ). This phenomenon, which is due to the emergence of patch-level inconsistencies in feature representations, undermines the interest behind extended training. In this section, we first analyze the loss of patch-level consistency, then propose a new objective to mitigate it, called Gram anchoring . We finally discuss the impact of our approach on both training stability and model performance.

【翻译】为了充分利用大规模训练的好处,我们的目标是对70亿参数模型进行长时间训练,认为它可能可以无限期地训练下去。正如预期的那样,长时间训练导致全局基准测试的改进。然而,随着训练的进行,密集任务的性能下降(图5b和5c)。这种现象是由于特征表示中出现patch级不一致性,破坏了长时间训练的意义。在本节中,我们首先分析patch级一致性的丢失,然后提出一个新的目标来缓解它,称为Gram锚定。我们最后讨论我们方法对训练稳定性和模型性能的影响。

【解析】大规模模型的长期训练呈现出一个矛盾现象:全局性能和局部性能的分化发展趋势。在理想情况下,随着训练时间的延长和参数规模的增大,模型应该在所有任务上都表现出持续的性能提升。然而,实际观察到的现象却表明,70亿参数的大模型在长期训练过程中会出现性能分化。全局任务(如图像分类)的性能确实随着训练时间延长而稳步改善,这说明模型在学习整体语义表示方面确实受益于更多的训练迭代。但密集预测任务(如语义分割、目标检测)的性能却逐渐恶化,这暴露了一个深层次的问题。这种性能分化的根源在于patch级特征表示的一致性逐渐丧失。在Vision Transformer架构中,图像被分割成多个patch,每个patch都有对应的特征表示。在训练初期,相邻或语义相关的patch往往具有相似的特征表示,这种空间连续性对于密集预测任务至关重要。然而,随着训练的深入,这种patch级的一致性开始退化,相邻patch的特征表示可能变得截然不同,导致特征图中出现不规则的跳跃和不连续性。这种不一致性虽然可能不影响全局语义理解(因为全局任务主要依赖于整体特征聚合),但对需要精确空间定位和边界判断的密集任务造成严重损害。因此,需要设计专门的机制来在长期训练过程中维持patch级特征的空间一致性,这就是Gram锚定方法提出的动机。

4.1 训练过程中Patch级一致性的丢失

During extended training, we observe consistent improvements in global metrics but a notable decline in performance on dense prediction tasks. This behavior was previously observed, to a lesser extent, during the training of DINOv2, and also discussed in the scaling effort of Fan et al. ( 2025 ). However, to the best of our knowledge, it remains unresolved to date. We illustrate the phenomenon in Figs. 5b and 5c , which present the performance of the model across iterations on both image classification and segmentation tasks. For classification, we train a linear classifier on ImageNet-1k using the CLS token and report top-1 accuracy. For segmentation, we train a linear layer on patch features extracted from Pascal VOC and report mean Intersection over Union (mIoU). We observe that both for the ViT-g and the ViT-7B, the classification accuracy monotonically improves throughout training. However, segmentation performance declines in both cases after approximately 200k iterations, falling below its early levels in the case of the ViT-7B.

【翻译】在长期训练过程中,我们观察到全局指标的持续改善,但密集预测任务的性能显著下降。这种行为之前在DINOv2的训练中也观察到过(程度较轻),Fan等人(2025)的扩展工作中也讨论过。然而,据我们所知,这个问题至今仍未解决。我们在图5b和5c中展示了这种现象,呈现了模型在图像分类和分割任务上随迭代次数变化的性能。对于分类,我们使用CLS token在ImageNet-1k上训练线性分类器并报告top-1准确率。对于分割,我们在从Pascal VOC提取的patch特征上训练线性层并报告平均交并比(mIoU)。我们观察到,对于ViT-g和ViT-7B,分类准确率在整个训练过程中单调提高。然而,在大约200k迭代后,两种情况下的分割性能都出现下降,在ViT-7B的情况下甚至低于早期水平。

To better understand this degradation, we analyze the quality of patch features by visualizing cosine similarities between patches. Fig. 6 shows the cosine similarity maps between the backbone’s output patch features and a reference patch (highlighted in red). At 200k iterations, the similarity maps are smooth and well-localized, indicating consistent patch-level representations. However, by 600k iterations and beyond, the maps degrade substantially, with an increasing number of irrelevant patches with high similarity to the reference patch. This loss of patch-level consistency correlates with the drop in dense task performance.

【翻译】为了更好地理解这种退化,我们通过可视化patch之间的余弦相似度来分析patch特征的质量。图6显示了主干网络输出的patch特征与参考patch(用红色突出显示)之间的余弦相似度图。在20万次迭代时,相似度图平滑且定位良好,表明patch级表示的一致性。然而,到了60万次迭代及以后,相似度图显著退化,越来越多不相关的patch与参考patch表现出高相似度。这种patch级一致性的丢失与密集任务性能的下降相关。

【解析】可视化分析Vision Transformer训练过程中patch级特征质量变化的。在20万次迭代的早期阶段,余弦相似度图呈现出理想的空间连续性特征:与参考patch在空间上相邻的patch显示出较高的相似度,而距离较远的patch则表现出较低的相似度。这种空间局部性说明模型成功学习到了空间邻近性与语义相似性之间的对应关系,这是进行精确空间定位任务的基础。然而,随着训练进行到60万次迭代,这种理想的空间结构开始瓦解。原本应该与参考patch语义差异较大的远距离patch开始异常地显示出高相似度,这打破了空间连续性假设。标志着patch级特征表示失去了空间定位能力,模型无法再准确区分不同空间位置的语义差异。这种patch级一致性的丧失直接导致了密集预测任务性能的下降,因为这些任务需要模型在像素级或区域级进行精确的语义判断,而混乱的空间相似度关系会产生错误的预测边界和不连续的分割结果。

These patch-level irregularities differ from the high-norm patch outliers described in Darcet et al. ( 2024 ). Specifically, with the integration of register tokens, patch norms remain stable throughout training. However, we notice that the cosine similarity between the CLS token and the patch outputs gradually increases during training. This is expected, yet it means that the locality of the patch features diminishes. We visualize this phenomenon in Fig. 5a , which depicts the cosine maps at 200k and 1M iterations. In order to mitigate the drop on dense tasks, we propose a new objective specifically designed to regularize the patch features and ensure a good patch-level consistency, while preserving high global performance.

【翻译】这些patch级的不规律性与Darcet等人(2024)描述的高范数patch异常值不同。具体来说,通过集成寄存器token,patch范数在整个训练过程中保持稳定。然而,我们注意到CLS token和patch输出之间的余弦相似度在训练过程中逐渐增加。这是预期的,但这说明patch特征的局部性在减弱。我们在图5a中可视化了这种现象,描绘了20万次和100万次迭代时的余弦图。为了缓解密集任务上的性能下降,我们提出了一个新的目标,专门设计用于正则化patch特征并确保良好的patch级一致性,同时保持高全局性能。

【解析】这里提到的patch级不规律性与之前文献中描述的高范数异常值问题在本质上是不同的。Darcet等人观察到的高范数patch异常值主要是指某些patch的特征向量范数异常大,这会影响整个网络的数值稳定性。而register token的引入有效解决了这个范数异常问题,使得所有patch的特征范数在训练过程中保持相对稳定。然而,DINOv3面临的是一个更深层的结构性问题:CLS token与patch token之间的余弦相似度逐渐增加。在理想情况下,CLS token应该聚合全局信息,而patch token应该保持局部空间信息,两者应该在功能上有所区分。相似度的增加说明patch token正在失去其独特的局部表示能力,变得越来越像全局特征的副本。这种现象在图5a的对比中清晰可见:20万次迭代时patch特征还能保持良好的空间局部性,但到100万次迭代时这种局部性基本消失。这促使研究者提出Gram锚定方法,该方法通过显式的正则化来维持patch级特征的空间一致性,既保证了全局表示的学习效果,又防止了局部特征质量的退化。

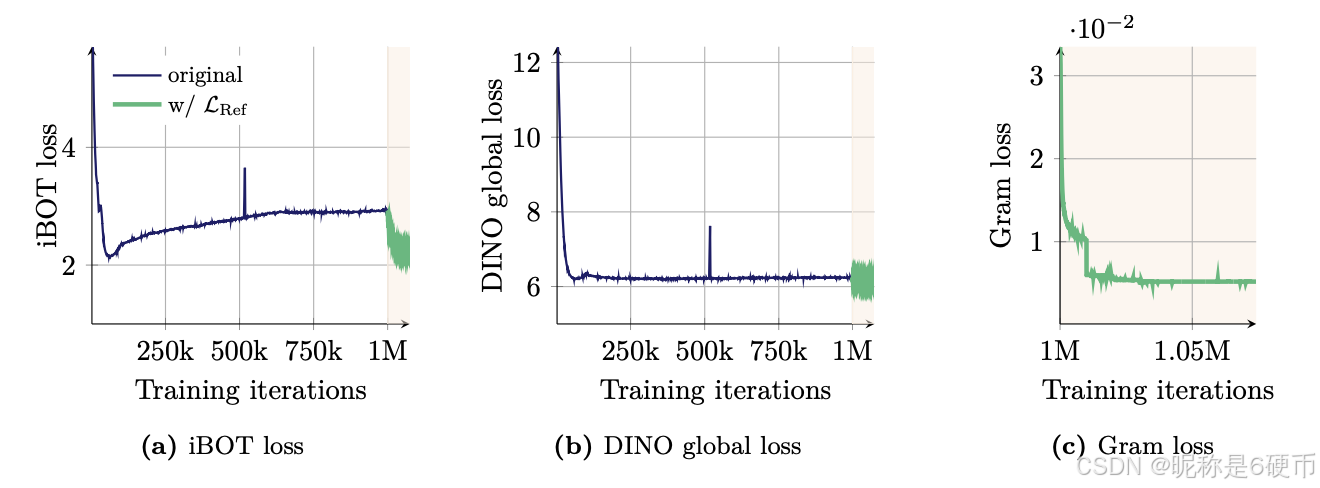

Figure 7: Evolution trough the training iterations of the patch-level iBOT loss, the global loss DINO (applied to the global crops) and the newly introduced Gram loss. We highlight the iterations of the refinement step LRef\mathcal{L}_{\mathrm{Ref}}LRef which uses the Gram objective.

【翻译】图7:patch级iBOT损失、全局DINO损失(应用于全局裁剪)和新引入的Gram损失在训练迭代过程中的演化。我们突出显示了使用Gram目标的细化步骤LRef\mathcal{L}_{\mathrm{Ref}}LRef的迭代次数。

4.2 Gram锚定目标

Throughout our experiments, we have identified a relative independence between learning strong discriminative features and maintaining local consistency, as observed in the lack of correlation between global and dense performance. While combining the global DINO loss with the local iBOT loss has begun to address this issue, we observe that the balance is unstable, with global representation dominating as training progresses. Building on this insight, we propose a novel solution that explicitly leverages this independence.

【翻译】在我们的实验中,我们发现学习强判别特征和维持局部一致性之间存在相对独立性,这从全局性能和密集性能之间缺乏相关性中可以观察到。虽然将全局DINO损失与局部iBOT损失结合已经开始解决这个问题,但我们观察到这种平衡是不稳定的,随着训练的进行,全局表示占主导地位。基于这一洞察,我们提出了一个明确利用这种独立性的新解决方案。

We introduce a new objective which mitigates the degradation of patch-level consistency by enforcing the quality of the patch-level consistency, without impacting the features themselves. This new loss function operates on the Gram matrix: the matrix of all pairwise dot products of patch features in an image. We want to push the Gram matrix of the student towards that of an earlier model, referred to as the Gram teacher . We select the Gram teacher by taking an early iteration of the teacher network, which exhibits superior dense properties. By operating on the Gram matrix rather than the feature themselves, the local features are free to move, provided the structure of similarities remains the same. Suppose we have an image composed of PPP patches, and a network that operates in dimension ddd . Let us denote by XS\mathbf{X}_{S}XS (respectively XG\mathbf{X}_{G}XG ) the P×dP\times dP×d matrix of L2{\bf L}_{2}L2 -normalized local features of the student (respectively the Gram teacher). We define the loss LGram\mathcal{L}_{\mathrm{Gram}}LGram as follows:

【翻译】我们引入了一个新的目标,通过强化patch级一致性的质量来缓解patch级一致性的退化,而不影响特征本身。这个新的损失函数作用于Gram矩阵:图像中patch特征所有成对点积的矩阵。我们希望将学生模型的Gram矩阵推向早期模型的Gram矩阵,我们称之为Gram教师。我们通过选择教师网络的早期迭代来选择Gram教师,该迭代表现出优越的密集特性。通过操作Gram矩阵而不是特征本身,局部特征可以自由移动,只要相似性结构保持相同。假设我们有一个由PPP个patch组成的图像,以及一个在维度ddd上操作的网络。让我们用XS\mathbf{X}_{S}XS(分别用XG\mathbf{X}_{G}XG)来表示学生模型(分别是Gram教师)的L2{\bf L}_{2}L2归一化局部特征的P×dP\times dP×d矩阵。我们定义损失LGram\mathcal{L}_{\mathrm{Gram}}LGram如下:

【解析】Gram锚定方法的创新在于通过操作Gram矩阵来间接约束patch特征的空间结构,而不是直接对特征向量本身进行约束。Gram矩阵是一个P×PP \times PP×P的对称矩阵,其中每个元素Gij=xiTxjG_{ij} = \mathbf{x}_i^T \mathbf{x}_jGij=xiTxj表示第iii个patch和第jjj个patch特征向量之间的点积,本质上捕获了patch之间的相似性关系。这种设计的巧妙之处在于,它保持了特征学习的灵活性:patch特征向量仍然可以在高维空间中自由演化和优化,只要它们之间的相对相似性结构与参考模型保持一致。Gram教师的选择策略也很重要,研究团队选择训练早期阶段的模型作为参考,因为早期模型在局部一致性方面表现更好,能够提供理想的空间结构模板。通过让当前训练中的学生模型的Gram矩阵向早期Gram教师的Gram矩阵靠拢,可以有效地"修复"在长期训练过程中丢失的空间局部性。L2{\bf L}_{2}L2归一化确保了不同patch特征向量具有相同的范数,使得Gram矩阵中的值主要反映角度相似性而不是幅度差异,这有助于稳定训练过程并确保相似性度量的准确性。这种方法的另一个优势是它不会直接干预全局特征学习过程,因为它主要作用于局部patch之间的相互关系,而全局表示主要由class token承载,从而实现了局部一致性维持和全局性能优化的解耦。

LGram=∥XS⋅XS⊤−XG⋅XG⊤∥F2.\mathcal{L}_{\mathrm{Gram}}=\left\|\mathbf{X}_{S}\cdot\mathbf{X}_{S}^{\top}-\mathbf{X}_{G}\cdot\mathbf{X}_{G}^{\top}\right\|_{\mathrm{F}}^{2}. LGram=XS⋅XS⊤−XG⋅XG⊤F2.

We only compute this loss on the global crops. Even though it can be applied early on during the training, for efficiency, we start only after 1M iterations. Interestingly, we observe that the late application of LGram\mathcal{L}_{\mathrm{Gram}}LGram still manages to “repair” very degraded local features. In order to further improve performance, we update the Gram teacher every 10k iterations at which the Gram teacher becomes identical to the main EMA teacher. We call this second step of training the refinement step , which optimizes the objective LRef\mathcal{L}_{\mathrm{Ref}}LRef , with

LRef=wDLDINO+LiBOT+wDKLDKoleo+wGramLGram.\mathcal{L}_{\mathrm{Ref}}=w_{\mathrm{D}}\mathcal{L}_{\mathrm{DINO}}+\mathcal{L}_{\mathrm{iBOT}}+w_{\mathrm{DK}}\mathcal{L}_{\mathrm{DKoleo}}+w_{\mathrm{Gram}}\mathcal{L}_{\mathrm{Gram}}. LRef=wDLDINO+LiBOT+wDKLDKoleo+wGramLGram.

【翻译】我们只在全局裁剪上计算这个损失。尽管它可以在训练早期应用,但为了效率,我们只在100万次迭代后开始。有趣的是,我们观察到延迟应用LGram\mathcal{L}_{\mathrm{Gram}}LGram仍然能够"修复"严重退化的局部特征。为了进一步提高性能,我们每10k次迭代更新一次Gram教师,此时Gram教师变得与主EMA教师相同。我们称训练的第二步为细化步骤,它优化目标LRef\mathcal{L}_{\mathrm{Ref}}LRef。

【解析】Gram锚定损失的是效率与效果的平衡考量。首先,损失计算只作用于全局裁剪而非局部裁剪,这是因为全局裁剪包含了完整图像的所有patch信息,能够提供完整的空间相似性结构,而局部裁剪只是图像的部分区域,无法反映完整的空间关系。其次,选择在100万次迭代后才引入LGram\mathcal{L}_{\mathrm{Gram}}LGram损失是一个关键的时序决策。在训练初期,模型仍在学习基础的视觉表示,patch级一致性问题尚未显现,过早引入额外的约束可能会干扰基础特征的学习。而在100万次迭代后,模型已经学到了丰富的语义表示,但开始出现patch级一致性退化,此时引入Gram锚定能够精确地解决目标问题。即使在特征已经严重退化的后期阶段引入Gram损失,仍然能够有效"修复"局部特征质量,这说明Vision Transformer的特征表示具有良好的可塑性。Gram教师的动态更新策略也很关键:每10k次迭代将Gram教师更新为当前的EMA教师,确保参考模板始终保持相对新鲜的状态,避免使用过时的特征结构作为约束目标。细化阶段的损失函数LRef\mathcal{L}_{\mathrm{Ref}}LRef是多个损失项的加权组合,包括全局DINO损失、局部iBOT损失、DKoleo损失和新的Gram损失,各项权重wDw_{\mathrm{D}}wD、wDKw_{\mathrm{DK}}wDK、wGramw_{\mathrm{Gram}}wGram的设置需要精心调节,以确保在修复局部特征一致性的同时不损害已经学到的全局表示能力。

We visualize the evolution of different losses in Fig. 7 and observe that applying the Gram objective significantly influences the iBOT loss, causing it to decrease more rapidly. This suggests that the stability introduced by the stable Gram teacher positively impacts the iBOT objective. In contrast, the Gram objective does not have a significant effect on the DINO losses. This observation implies that the Gram and iBOT objectives impact the features in a similar way, whereas the DINO losses affect them differently.

【翻译】我们在图7中可视化了不同损失的演化,观察到应用Gram目标显著影响iBOT损失,使其下降得更快。这说明稳定的Gram教师引入的稳定性对iBOT目标产生积极影响。相比之下,Gram目标对DINO损失没有显著效果。这一观察说明Gram和iBOT目标以相似的方式影响特征,而DINO损失则以不同的方式影响它们。

【解析】这段分析揭示了不同损失函数之间的相互作用机制。图7的损失演化曲线显示,当引入Gram锚定目标后,iBOT损失的下降速度明显加快,这种现象背后反映了深层的特征学习机制。iBOT损失主要关注patch级别的掩码重建任务,需要模型在局部patch之间建立准确的空间相关性,而Gram矩阵恰好编码了patch之间的相似性结构。因此,稳定的Gram教师提供的空间结构约束直接有助于iBOT目标的优化,两者在feature space中存在互补的约束作用。具体来说,Gram约束确保了patch特征之间的相似性关系保持空间连续性,而这种连续性正是iBOT掩码重建任务所需要的先验知识,使得模型能够更容易地从周围未被掩码的patch中推断出被掩码patch的内容。相反,DINO损失主要作用于全局class token,关注整体图像的语义表示学习,它的优化路径相对独立于patch之间的局部相似性结构。因此Gram目标对DINO损失的影响较小,这种差异性说明了Vision Transformer中全局特征学习和局部特征学习在某种程度上是解耦的。这种观察为理解多目标训练中不同损失函数的相互作用提供了重要洞察,也验证了Gram锚定方法能够选择性地改善局部特征质量而不损害全局表示能力。

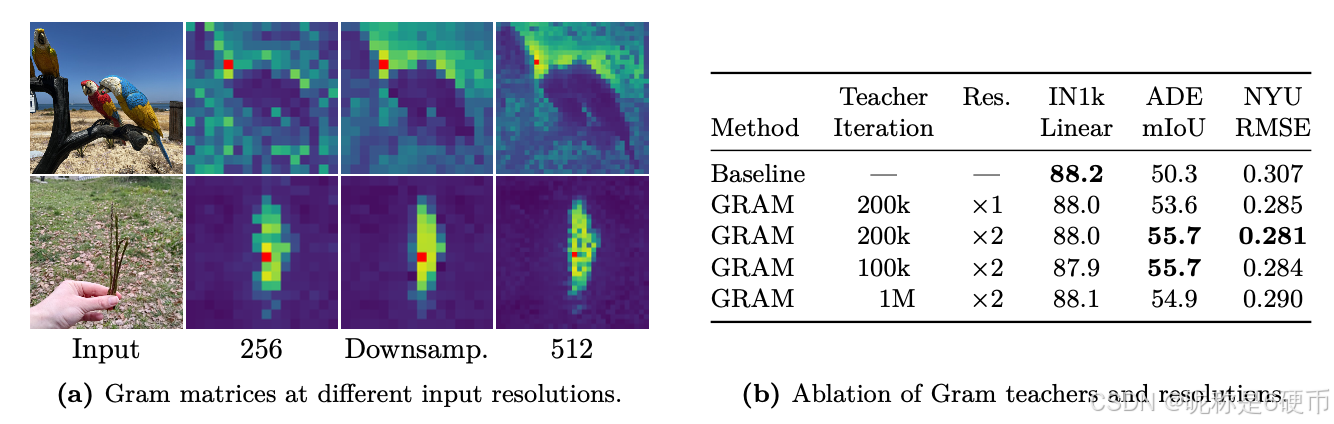

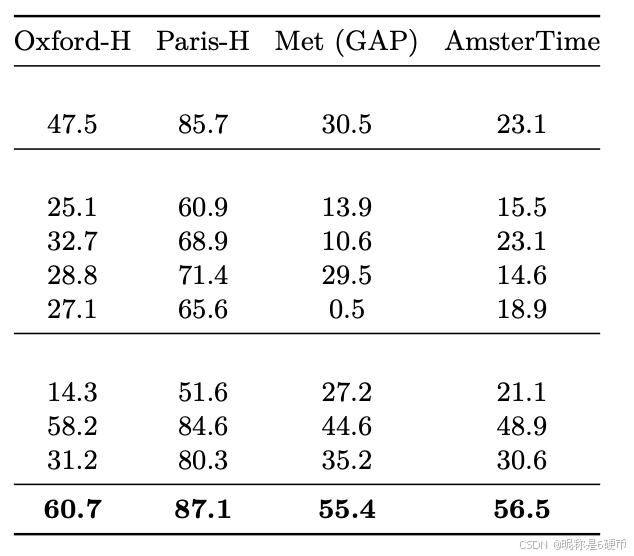

Figure 8: Evolution of the results on different benchmarks after applying our proposed Gram anchoring method. We visualize results when continuing the original training with our refinement step, noted ’ LRef\mathcal{L}_{\mathrm{Ref}}{}^{}LRef '. We also plot results obtained when using higher-resolution features for the Gram objective as introduced in following Sec. 4.3 and noted ’ LHRef\boldsymbol{\mathcal{L}}_{\mathrm{HRef}}LHRef '. We highlight the iterations which use the Gram objective.

【翻译】图8:应用我们提出的Gram锚定方法后不同基准测试结果的演化。我们可视化了使用细化步骤继续原始训练的结果,记为’LRef\mathcal{L}_{\mathrm{Ref}}{}^{}LRef‘。我们还绘制了使用高分辨率特征进行Gram目标的结果,如4.3节中介绍的,记为’LHRef\boldsymbol{\mathcal{L}}_{\mathrm{HRef}}LHRef'。我们突出显示了使用Gram目标的迭代次数。

【解析】图8展示了Gram锚定方法在多个benchmark上的效果演化,图中包含两条主要曲线:LRef\mathcal{L}_{\mathrm{Ref}}LRef代表基础的Gram锚定细化过程,而LHRef\boldsymbol{\mathcal{L}}_{\mathrm{HRef}}LHRef则代表结合高分辨率特征的进阶版本。图中突出显示的Gram目标应用迭代区间对于理解方法的时序效应至关重要,因为它显示了Gram锚定不是在整个训练过程中持续应用,而是在特定的refinement阶段引入。这种阶段性应用策略避免了早期训练中不必要的约束,让模型首先学习基础的视觉表示,然后在后期通过Gram约束来修复局部特征质量。不同benchmark的表现变化趋势:密集预测任务(如语义分割)通常显示出更显著的改善,因为这些任务对patch级特征的空间一致性更为敏感,而全局分类任务的改善相对较小但依然稳定,这验证了方法对全局表示学习的无害性。高分辨率版本LHRef\boldsymbol{\mathcal{L}}_{\mathrm{HRef}}LHRef的进一步性能提升说明了高质量Gram教师的重要性,通过使用更细致的空间结构作为约束目标,能够指导学生模型学习到更精确的局部特征表示。

Regarding performance, we observe the impact of the new loss is almost immediate. As shown in Fig. 8 , incorporating Gram anchoring leads to significant improvements on dense tasks within the first 10k iterations. We also see notable gains on the ADE20k benchmark following the Gram teacher updates. Additionally, longer training further benefits performance on the ObjectNet benchmark and other global benchmarks show mild impact from the new loss.

【翻译】关于性能,我们观察到新损失的影响几乎是立即的。如图8所示,引入Gram锚定在前10k次迭代内就在密集任务上带来了显著改善。我们还看到在Gram教师更新后ADE20k基准测试中的显著收益。此外,更长时间的训练进一步提升了ObjectNet基准测试的性能,其他全局基准测试显示出新损失的轻微影响。

4.3 利用高分辨率特征

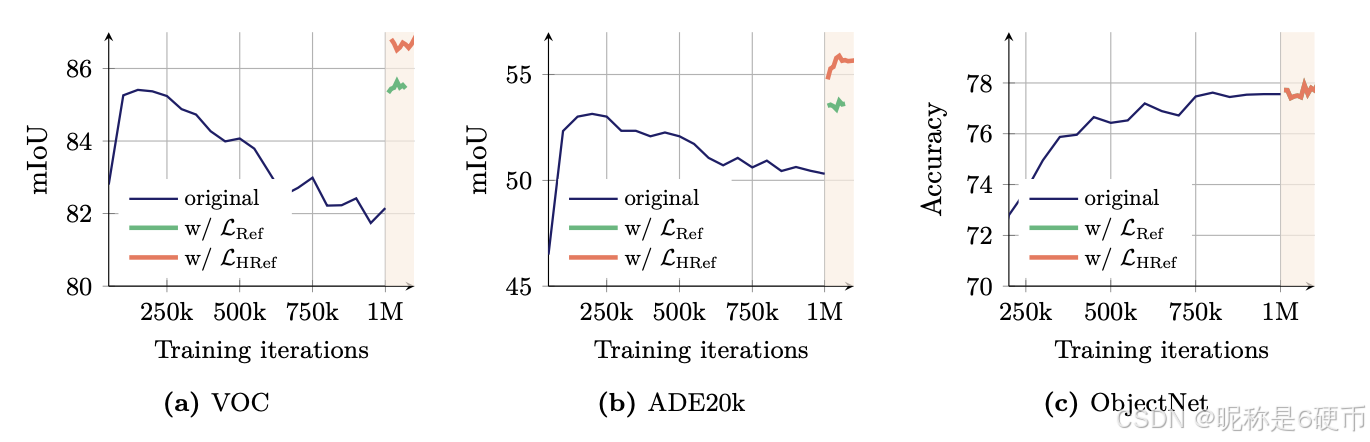

Recent work shows that a weighted average of patch features can yield stronger local representations by smoothing outlier patches and enhancing patch-level consistency ( Wysoczańska et al. , 2024 ). On the other hand, feeding higher-resolution images into the backbone produces finer and more detailed feature maps. We leverage the benefits of both observations to compute high-quality features for Gram teacher. Specifically, we first input images at twice the normal resolution into the Gram teacher, then 2×2\times2× down-sample the resulting feature maps with the bicubic interpolation to achieve the desired smooth feature maps that match the size of the student output. Fig. 9a visualizes the Gram matrices of patch features obtained with images at resolutions 256 and 512, as well as those obtained after 2 ×\times× down-sampling features from the 512-resolution (denoted as ‘downsamp.’). We observe that the superior patch-level consistency in the higher-resolution features is preserved through down-sampling, resulting in smoother and more coherent patch-level representations. As a side note, our model can seamlessly process images at varying resolutions without requiring adaptation, thanks to the adoption of Rotary Positional Embeddings (RoPE) introduced by Su et al. ( 2024 ).

【翻译】最近的工作表明,patch特征的加权平均可以通过平滑异常patch并增强patch级一致性来产生更强的局部表示(Wysoczańska et al.,2024)。另一方面,将更高分辨率的图像输入主干网络会产生更精细和更详细的特征图。我们利用这两个观察的优势来计算Gram教师的高质量特征。具体来说,我们首先将两倍正常分辨率的图像输入Gram教师,然后使用双三次插值对生成的特征图进行2×2\times2×下采样,以获得与学生输出大小匹配的所需平滑特征图。图9a可视化了在分辨率256和512下获得的patch特征的Gram矩阵,以及从512分辨率特征进行2×\times×下采样后获得的Gram矩阵(标记为’downsamp.')。我们观察到高分辨率特征中优越的patch级一致性通过下采样得以保持,从而产生更平滑、更连贯的patch级表示。顺便提一下,由于采用了Su等人(2024)引入的旋转位置嵌入(RoPE),我们的模型可以无缝处理不同分辨率的图像而无需适应。

【解析】这里介绍的高分辨率Gram锚定技术是对基础Gram锚定方法的改进。核心思想是通过结合两个独立的研究发现来构建更优质的Gram教师模型。第一个发现是patch特征的加权平均能够平滑异常patch并增强空间一致性,这种平滑操作有助于消除由于数据噪声或训练不稳定导致的局部特征不连续性。第二个发现是高分辨率输入能够产生更精细的特征图,包含更丰富的空间细节信息。研究团队巧妙地将这两个优势结合:首先使用2×2\times2×的高分辨率图像作为Gram教师的输入,这样可以获得包含更多空间细节的特征表示,然后通过双三次插值进行2×2\times2×下采样,将特征图尺寸调整到与学生模型输出相匹配。双三次插值是一种高质量的图像缩放算法,它通过考虑周围16个像素的加权平均来计算新的像素值,这种插值方法不仅能够保持图像的细节信息,还能产生平滑的过渡效果,从而实现了前述两个优势的完美结合。图9a的可视化结果验证了这种方法的有效性:高分辨率特征确实具有更好的patch级一致性,而且这种一致性在下采样过程中得以保持,最终产生的特征表示既包含丰富的空间细节又具有良好的空间连续性。值得注意的是,模型能够处理不同分辨率输入的能力来源于旋转位置嵌入(RoPE)的使用,RoPE通过将位置信息编码为旋转矩阵的形式,使得模型能够自然地泛化到训练时未见过的序列长度,这对于处理不同分辨率图像(对应不同数量的patch)至关重要。

We compute the Gram matrix of the down-sampled features and use it to replace XG\mathbf{X}_{G}XG in the objective LGram\mathcal{L}_{\mathrm{Gram}}LGram . We note the new resulting refinement objective as LHRef\mathcal{L}_{\mathrm{HRef}}LHRef . This approach enables the Gram objective to effectively distill the improved patch consistency of smoothed high-resolution features into the student model. As shown in Fig. 8 and Fig. 9b , this distillation translates into better predictions on dense tasks, yielding additional gains on top of the benefit brought by LRef\mathcal{L}_{\mathrm{Ref}}LRef ( +2+2+2 mIoU on ADE20k). We also ablate the choice of Gram teacher in Fig. 9b . Interestingly, choosing the Gram teacher from 100k or 200k does not significantly impact the results, but using a much later Gram teacher (1M iterations) is detrimental because the patch-level consistency of such a teacher is inferior.

【翻译】我们计算下采样特征的Gram矩阵,并用它来替换目标LGram\mathcal{L}_{\mathrm{Gram}}LGram中的XG\mathbf{X}_{G}XG。我们将新的细化目标记为LHRef\mathcal{L}_{\mathrm{HRef}}LHRef。这种方法使Gram目标能够有效地将平滑高分辨率特征的改进patch一致性蒸馏到学生模型中。如图8和图9b所示,这种蒸馏转化为密集任务上更好的预测,在LRef\mathcal{L}_{\mathrm{Ref}}LRef带来的好处基础上产生额外收益(在ADE20k上+2 mIoU)。我们还在图9b中消融了Gram教师的选择。有趣的是,从100k或200k选择Gram教师对结果没有显著影响,但使用更晚的Gram教师(1M次迭代)是有害的,因为这样的教师的patch级一致性较差。

【解析】高分辨率Gram锚定方法的具体实现涉及将高质量的下采样特征矩阵XG\mathbf{X}_{G}XG替换到原始的Gram损失函数中,形成新的高分辨率细化目标LHRef\mathcal{L}_{\mathrm{HRef}}LHRef。这种替换的本质是将Gram锚定的参考标准从普通分辨率的早期模型特征提升到高分辨率平滑处理后的特征,从而为学生模型提供更高质量的空间结构约束。蒸馏过程的工作机制是:高分辨率Gram教师产生的Gram矩阵包含更精确的patch间相似性关系,当学生模型的Gram矩阵被约束向这个高质量参考靠拢时,学生模型被迫学习到更准确的空间特征表示。实验结果显示,这种改进在密集预测任务上带来了额外的性能提升,特别是在ADE20k语义分割任务上获得了2个mIoU的额外收益,这说明改进的空间特征质量直接转化为了实际应用性能的提升。关于Gram教师选择时机的消融研究揭示了一个重要的训练动态:100k和200k迭代的教师模型在性能上相当,说明在这个时间段内模型的patch级一致性相对稳定,而1M迭代的教师模型性能反而下降,这验证了前面提到的长期训练导致patch级一致性退化的现象。这个观察进一步支持了选择早期训练阶段模型作为Gram教师的策略,因为早期模型确实保持了更好的局部空间结构,能够为后续的特征学习提供更有效的指导。这种时机选择的重要性说明,在自监督学习中,不同训练阶段的模型具有不同的特征学习特性,合理利用这些特性能够显著改善最终的模型质量。

Finally, we qualitatively illustrate the effect of Gram anchoring to patch-level consistency in Fig. 10 which visualizes the Gram matrices patch features obtained with the initial training and high-resolution Gram anchoring refinement. We observe great improvements in feature correlations that our high-resolution refinement procedure brings about.

【翻译】最后,我们在图10中定性地说明了Gram锚定对patch级一致性的影响,该图可视化了通过初始训练和高分辨率Gram锚定细化获得的patch特征的Gram矩阵。我们观察到我们的高分辨率细化过程带来的特征相关性的巨大改善。

Figure 9: Quantitative and qualitative study of the impact of high-resolution Gram. We show (a) the improved cosine maps after down-sampling the high-resolution maps into smaller ones, and (b) the quantitative improvements brought by varying the training iteration and the resolution of the Gram teacher.

【翻译】图9:高分辨率Gram影响的定量和定性研究。我们展示了(a)将高分辨率图下采样为较小图后改进的余弦图,以及(b)通过改变训练迭代次数和Gram教师分辨率带来的定量改进。